Cross-view and Cross-domain Underwater Localization based on Optical Aerial and Acoustic Underwater Images

Abstract

Cross-view image matches have been widely explored on terrestrial image localization using aerial images from drones or satellites. This study expands the cross-view image match idea and proposes a cross-domain and cross-view localization framework. The method identifies the correlation between color aerial images and underwater acoustic images to improve the localization of underwater vehicles that travel in partially structured environments such as harbors and marinas. The approach is validated on a real dataset acquired by an underwater vehicle in a marina. The results show an improvement in the localization when compared to the dead reckoning of the vehicle.

I INTRODUCTION

In autonomous vehicles, some localization methods go beyond a single view perception [1]. Cross-view localization methods combine data from different perspectives such as aerial and terrestrial images to estimate the terrestrial localization [2, 3]. Typically, these methods localize street view images by matching georeferenced aerial images from satellites or drones.

In this study, we localize an underwater vehicle by matching its underwater acoustic images with aerial georeferenced satellite images. It configures a Cross-View localization problem because the underwater acoustic images provide a frontal view and the aerial images provide a top view of the scene. In addition, we have the Cross-Domain problem because of the acoustic and the optical domains of the images. Fig. 1 represents the cross-view and cross-domain localization problem addressed in this work. While the gray-scale underwater acoustic images only provide distances and shapes of the observed objects the aerial optical images provide rich texture and color.

Our method is designed to operate in partially structured environments such as marinas and harbors. These places provide stable features such as piers, stones, and the shoreline that can be observed in both aerial and underwater images.

The problem of matching of underwater acoustic and optical aerial images was previously addressed on [4, 5, 6]. Santos et al. [4, 5] proposed a Deep Neural Network (DNN) based on a Siamese architecture [7] that handles the cross-domain problem by training two independent networks. Giacomo et al. [6] presented an approach inspired by Generative Adversarial Network (GAN) [8] and Triplets Network [9]. They trained two models using a quadruplet strategy, an adaptation of the triplet strategy with an additional anchor image. The paper showed the latter approach achieved better results and performance. However, none of the previous work addressed the underwater localization problem, only the matching problem.

The main contribution of this work is a new underwater localization framework based on a map built from georeferenced aerial image and data association with acoustic images on the Adaptive Monte Carlo Localization (AMCL) algorithm [10]. The method allows the robot localization in a GPS-denied environment such as the water.

The Monte Carlo Localization, also known as Particle Filter, is a well-known localization method that can model a multi-modal non-Gaussian probabilistic distribution function by spreading hypotheses in the map known as particles. The particles allow us to select the most likely regions on satellite images (map) that match the acoustic underwater image (perception).

The method is suitable for data association because we crop the aerial image such as both images, acoustic and aerial, have the same size and shape. Aerial images cover a large area and have a larger size than underwater acoustic images. Therefore, the aerial image must be cropped before its use on the image matching system.

The proposed localization framework can be applied to unmanned underwater vehicles or hybrid aerial-underwater vehicles [11, 12, 13, 14] to perform tasks, such as inspection and surveillance on harbors and marinas. This work is validated on real data collected by an underwater vehicle in a marina and optical aerial images acquired from a satellite.

The results showed the method can localize the underwater vehicle using satellite images and achieving better results than dead reckoning.

II RELATED WORK

Methods to fuse cross-view and cross-domain data on aerial and underwater domains to estimate underwater localization are not widely explored in the literature, being the first to the best of our knowledge. One of the main issues of this kind of method is the cross-view and cross-domain matching. Some work propose a cross-view matching of terrestrial and aerial images to geolocalize terrestrial images. Xiang Gao et al. [15] presents a review of these methods and classifies them as image-based and structure-based methods. Most of the aerial and terrestrial image matching methods explore self-similar features, semantic features, or Deep Neural Networks approaches.

Self-similar feature methods [16, 2] look for features present on structures such as skyscrapers facades that can be detected in both views. For example, street view and aerial images are matched using shared features such as texture and color from the same skyscrapers facade.

Methods based on structures [17, 18, 19] look for the same structures in both aerial and terrestrial view. Typically, this approach is applied when the environment does not provide enough features to compare the images. Semantic-based methods combine external information about the observed scene to perform matching [20, 21].

The deep learning methods perform matching based on learned features from the image datasets. Lin et al. proposed the first deep learning network called Where-CNN [22] to learn feature embedding for image matching. Workman et al. [23, 24] obtained state-of-the-art results on wide-area image geolocation with a new cross-view training strategy for learning a joint semantic feature representation for aerial images. Tian et al. [25] proposed a matching approach of terrestrial and aerial images based on urban buildings.

Karkus et al. [26] propose an end-to-end Recurrent Neural Network (RNN) that encodes a full differential particle filter algorithm. The method is designed to localize an indoor vehicle with a single camera. The simulated dataset House3D is adopted to train and validate the method. It is an interesting work because it allows training the network without labeled data. However, the authors do not test the system on a real-world environment, and it places in check if an end-to-end approach would be able to localize on real environment data.

Localization of underwater acoustic images using aerial satellite images has similar aspects with the terrestrial image localization with aerial images. Both problems involve cross-view matching. However, only the acoustic data is obtained in a diverse domain. Furthermore, the GPS system is usually available in ground robots while the underwater environment is GPS-denied.

Giacomo et al. proposed in [27] a neural network that translates a gray-scale acoustic image into a colored aerial satellite image. The proposed network is based on the U-Net architecture and employs techniques such as dilated convolutions, guided filters, and the Deep Convolutional Generative Adversarial Network (DCGAN).

A few work study the underwater acoustic and aerial image match. Santos et al. [5] proposed a Deep Neural Network (DNN) based on a Siamese architecture [7] that handles the cross-domain problem by training two independent networks.

Giacomo et al. [6] proposed a cooperative approach for training multiple networks to reduce the image dimensionality belonging to the same domain of the present work. Their method consists of cooperatively optimizing two neural networks that share the same architecture but not the same weights. Then, these networks update their weights following the triplet objective function. In the end, it is possible to use the trained networks to extract vectors that encode the images fed into them. Afterward, a distance between the extracted vectors can be calculated, such as the Euclidean distance. This network makes possible tasks such as matching and ranking, which are of primordial importance to the present work.

Santos et al. [5] and Giacomo et al. [6] research provide image matching methods but none of them estimate localization. In this work we adopt the quadruplet neural network from Giacomo et al. [6] in a complete framework that estimates the vehicle localization.

Structure-based approaches inspired our method because the structures can be easily identified on sonar images. Our approach uses the structure of sonar images for the localization in a map generated by the aerial image, in a probabilistic framework based on Adaptive Monte Carlo Localization (AMCL) [10].

III METHODOLOGY

The underwater localization method is shown in Fig. 2. A particle filter algorithm [10] estimates the vehicle localization based on the current observation , vehicle control , and the map of the scene . Where the observation is an underwater acoustic image and is the aerial satellite image.

The system has the following four main processes: A - Aerial Image Processing, B - Underwater Acoustic Image Processing, C - Cross-View and Cross-Domain Image Match, and D - Particle Filter. Step A runs once and offline. The remaining process runs in parallel in the computation graph of the Robot Operating System (ROS) [28]. Each process is described in the following sections.

III-A Aerial Image Processing

The Aerial Image Processing employs the neural network U-Net to semantic segment the aerial image in three classes: stationary structures, movable objects, and the water highlighted in green, red, and blue on Fig. 2-A. The process is better explored and better described in [29]. Movable objects such as boats cannot be trusted on the localization problem. However, stationary structures such as piers and stones are relevant information to incorporate into the system. This process runs once and offline before the mission starts. Then, the segmented images are loaded into the vehicle memory as a map . Manual segmentation also can be employed since the process runs offline with no significant time restrictions.

III-B Underwater Acoustic Image Processing

The Acoustic Image Processing smooths the current acoustic image referenced as observation by aligning a batch of previous images . The alignment process starts by transforming the acoustic images into a 2D point cloud. First, a border detection based on image gradient segments the image. Each 2D point is defined as the centroid of the segments. The Iterative Closest Point (ICP) algorithm finds the affine transform between the 2D point clouds. Then, all images are transformed into the current image view point and smoothed by averaging the pixels.

III-C Cross-View and Cross-Domain Image Match

The Image Match process uses the Quadruplet neural network proposed by Giacomo et al. [6] running in a Graphics Processing Unit (GPU). The process evaluates the similarity between a batch of semantically segmented satellite images and the current acoustic image as represented on Fig. 2-C.

Two neural networks are used to encode acoustic and segmentation images. Each network is used for one image domain, i.e., one for acoustic, and another for a segmented and cropped aerial image. A cooperative approach is used to train the encoding networks.

It is worth noting that both the acoustic and the segmented image encoder follow the same architecture. However, the weights are not shared, leading to different networks, as described in [6]. Due to the cooperative approach, the networks are trained to perform Metric Learning, by way of a triplet loss function. As a result, the networks produce vectors of reduced dimensionality from the original images. By taking the Euclidean distance between the produced vectors, a meaningful metric can be obtained. This metric is closer to zero when the images are similar and grows as they diverge. Therefore, the Euclidean distance between the vectors can be used to generate a rank of matching images.

This process receives a batch of satellite images and one acoustic image. The images are re-scaled to the lower resolution of to fit on the Quadruplet Neural Network input. This process output is a normalized matching score of each satellite image where is the timestamp of the acoustic image.

A inversion on the Euclidean distance between the vectors are performed to generate a matching score considering all images of the batch. Defining as the distance between the satellite image and current acoustic image, we find and and applying . The final scores are normalize such as where the denotes the number of particles in the current set, and is the timestamp.

III-D Particle Filter

The particle filter algorithm estimate the vehicle state based on belief that is approximated by a set of particles, where , is the particle weight, and each particle represents an hypothesis of the vehicle pose in the world at time . The belief is built on the map , i.e the semantic segmented satellite image. The transition model and the observation model update the particle set.

III-D1 Transition Model

The transition model estimates the particle state based on previous state and the vehicle control signal such as . A constant velocity model estimates the vehicle motion based on its control signal. The body-centered forward motion is converted to a motion on the world frame using current vehicle orientation measured by a compass. The transition model updates all particles every new control message .

III-D2 Observation Model

The observation model incorporates an enhanced acoustic image on the particle filter set. It computes the likelihood of given the particle state and the map such as . This is a key point of the method because the particle state allows us to crop an aerial satellite image of any size in such a way that it has the same shape and scale of the acoustic images, as shown in Fig. 3. Each particle generates an aerial satellite image crop that is compared with the current acoustic image using the matching process described on Sec. III-C. As result, the normalized score of each particle updates the particle weight .

This process runs for each new underwater acoustic image. Initially, a information test is made, i.e. when less than 2% of the image pixels are non-zero, we consider the image as non-informative and discard it due to lack of information. Otherwise, the image feeds the observation model that updates the particle’s weight. This verification is useful because the vehicle can be in open waters, where there is no structure to match the satellite image. In this case, the vehicle relies on the odometry. When it returns to a region with structures, the algorithm corrects the localization error using the satellite image.

III-D3 Initialization

The particle set is randomly initialized following a Gaussian distribution with mean on the last vehicle position before dive and a standard deviation .

III-D4 Re-sampling

A re-sampling process is performed on the particle set using the Survival of the fittest principle [10], where the unlikely particles are replaced by the more likely ones. We adopted the roulette wheel approach which uses binary search and re-sample all particles in . After the re-sampling, we check for bad particles with the following tests:

-

•

Is the particle on open water? We check if the cropped image views stationary structures or not, i.e green pixels on the semantic segmented aerial image.

-

•

Is the particle laying on the floor? We check if the particle position is on a stationary structure, i.e a green pixel on the semantically segmented satellite image.

-

•

Is the particle out of the map borders? We check if the particle position is out of the satellite image boundaries.

In any positive case, the particle is re-sampled again using a higher standard deviation. As a last chance, when a particle is re-sampled more than ten times in the same iteration, a uniform random pose in the map is set.

IV RESULTS

Our method is evaluated on real underwater scenario of the dataset ARACATI 2017 using Robot Operating System (ROS) [30].

IV-A Dataset ARACATI 2017

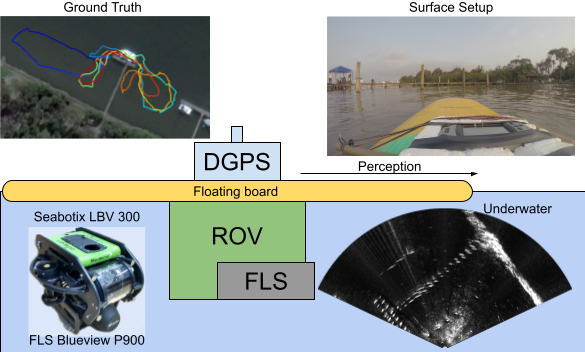

The dataset ARACATI 2017 was recorded on the marina of Yacht Club of Rio Grande Brazil with a Seabotix Little Benthic Vehicle LBV 300-5 and a Forward-Looking Sonar (FLS) BlueView P900 [31]. The vehicle was attached below a floating board such as it stays underwater while a Differential Global Position System DGPS stays in the top of the board outside water as shown in Fig. 4.

We evaluate the performance of the system by collecting acoustic images, compass, odometry and DGPS as ground truth at a maximum speed of 0.6 m/s. The marina has a minimum depth of 1 meter and a maximum depth of 5 meters. The coastline is covered by stones that have a strong acoustic signature. Pontoon objects, moving boats, fish, and acoustical signatures from the seafloor and surface are present in the sonar data111Dataset ARACATI 2017 is available at https://github.com/matheusbg8/aracati2017 .

IV-B Experimental results

The Localization and Matching process are evaluated on dataset ARACATI 2017 using a fixed number of 120 particles and the parameters of Table I. Where is the standard deviation and is the mean of a Gaussian distribution function used to estimate the state of each particle . The initial position guess is the last GPS message before dive.

| Particle Re-sampling | On Particle Selected with Roulette | |

| Particle Initialization | Initial Position Guess | |

| Bad Particle Re-sampling | On Particle Selected with Roulette |

The experiment resulted on the path shown in Fig. 5. The green line indicates the DGPS data adopted as ground truth, the blue line indicates the path from our localization method, and the red line indicates the dead reckoning. The localization error in meters of the dead reckoning and our method relative to the DGPS is shown in Fig. 6.

The vehicle path crosses the six marks represented by the circles in figures 5 and 6. It starts on the green circle, moves to yellow, blue, purple, orange, and ends on the red circle after 41 minutes. The results show that most of the time our method had a smaller localization error than the vehicle’s dead reckoning. However, some aspects must be considered. Our system is based on images and depends on structures to perform cross-view and cross-domain matching. When features are not available, our method relies most on odometry.

In the first seconds of the experiment, the particles were initializing while the vehicle was moving. It led the particles to lose part of the initial control signals and transition model updates, resulting in a higher localization error. In the next ten minutes, the localization remains high because of the lack of features on the acoustic images. After the yellow mark, the sonar detected the central pier, and the localization error dropped while the vehicle was getting closer to the structures. Then the vehicle traveled to the left side of the pier and moved in an open area between blue and purple marks. At this point, the method relied most on odometry again because of the leak of acoustic features. After the purple mark, the vehicle returned to the central pier. Its localization got corrected thanks to our observation model and the particle filter. After this point, the method had a better localization estimation than the dead reckoning222The experimental results are available in video at https://youtu.be/UOAcSODbaIw.

The experiment ran on a computer with a Processor Ryzen 7 2700x and a GPU NVIDIA RTX 3070. The image match evaluated 120 particles in 0.72 secs (1.39 Hz) and used 3.3 GB of RAM and 6 GB of VRAM. The particle filter process used 71 MB of RAM and the average execution time was 1.207 secs (0.83 Hz). The process runs in parallel, and their implementation is not optimized. The top speed of the underwater vehicle in the experiment was 0.63 m/s, and the results were achieved by processing one acoustic image every 4 seconds. We believe the system can run on an embedded platform such as NVIDIA Jetson AGX Xavier which has 32 GB of shared memory.

The current method does not estimate roll and pitch angles. We adopted an open frame vehicle calibrated to passively stabilize these angles. However, we believe that our method can be adapted to operate in parallel with the attitude control of a typical AUV.

V CONCLUSIONS

We proposed a new underwater vehicle localization method based on cross-view and cross-domain image match and validated it in a real experiment. The system uses a Deep Neural Network for image matching and an adapted Particle Filter for state estimation. The method can localize an underwater vehicle in a partially structured environment with acoustic images from a Forward-Looking Sonar and optical aerial images of the environment from satellites.

As future work, we want to compare different underwater localization approaches in a new experiment where we can improve the odometry model of the vehicle and test different observation models on the particle filter algorithm. We are also planning to perform real-time tests on embedded platforms such as NVIDIA Jetson Xavier running together with the robot using high-resolution images collected by Unmanned Aerial Vehicles (UAV).

References

- [1] Y. Wu, “Coordinated path planning for an unmanned aerial-aquatic vehicle (UAAV) and an autonomous underwater vehicle (AUV) in an underwater target strike mission,” Ocean Engineering, vol. 182, pp. 162 – 173, 2019.

- [2] M. Wolff, R. T. Collins, and Y. Liu, “Regularity-driven building facade matching between aerial and street views,” in IEEE CVPR, June 2016, pp. 1591–1600.

- [3] S. Hu, M. Feng, R. M. H. Nguyen, and G. Hee Lee, “CVM-Net: Cross-view matching network for image-based ground-to-aerial geo-localization,” in IEEE CVPR, 2018, pp. 7258–7267.

- [4] M. M. Dos Santos, G. G. De Giacomo, P. L. J. Drews-Jr, and S. S. C. Botelho, “Satellite and underwater sonar image matching using deep learning,” in 2019 Latin American Robotics Symposium (LARS), 2019 Brazilian Symposium on Robotics (SBR) and 2019 Workshop on Robotics in Education (WRE), 2019, pp. 109–114.

- [5] M. Machado Dos Santos, G. G. De Giacomo, P. L. J. Drews-Jr, and S. S. C. Botelho, “Matching color aerial images and underwater sonar images using deep learning for underwater localization,” IEEE Robotics and Automation Letters, vol. 5, no. 4, pp. 6365–6370, Oct 2020.

- [6] G. G. De Giacomo, M. M. dos Santos, P. L. Drews-Jr, and S. S. Botelho, “Cooperative training of triplet networks for cross-domain matching,” in 2020 Latin American Robotics Symposium (LARS), 2020 Brazilian Symposium on Robotics (SBR) and 2020 Workshop on Robotics in Education (WRE). IEEE, 2020, pp. 1–6.

- [7] S. Chopra, R. Hadsell, and Y. LeCun, “Learning a similarity metric discriminatively, with application to face verification,” in IEEE CVPR, vol. 1, 2005, pp. 539–546.

- [8] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, “Generative adversarial nets,” in Advances in neural information processing systems, 2014, pp. 2672–2680.

- [9] E. Hoffer and N. Ailon, “Deep metric learning using triplet network,” in International Workshop on Similarity-Based Pattern Recognition. Springer, 2015, pp. 84–92.

- [10] S. Thrun, “Probabilistic robotics,” Communications of the ACM, vol. 45, no. 3, pp. 52–57, 2002.

- [11] P. L. J. Drews-Jr, A. A. Neto, and M. F. M. Campos, “Hybrid unmanned aerial underwater vehicle: Modeling and simulation,” in IEEE/RSJ IROS, Sep. 2014, pp. 4637–4642.

- [12] A. A. Neto, L. A. Mozelli, P. L. J. Drews-Jr, and M. F. M. Campos, “Attitude control for an hybrid unmanned aerial underwater vehicle: A robust switched strategy with global stability,” in IEEE ICRA, 2015, pp. 395–400.

- [13] D. Mercado, M. Maia, and F. J. Diez, “Aerial-underwater systems, a new paradigm in unmanned vehicles,” JINT, vol. 95, no. 1, pp. 229–238, 2019.

- [14] A. C. Horn, P. M. Pinheiro, R. B. Grando, C. B. da Silva, A. A. Neto, and P. L. J. Drews-Jr, “A novel concept for hybrid unmanned aerial underwater vehicles focused on aquatic performance,” in 2020 Latin American Robotics Symposium (LARS), 2020 Brazilian Symposium on Robotics (SBR) and 2020 Workshop on Robotics in Education (WRE), 2020, pp. 1–6.

- [15] X. Gao, S. Shen, Z. Hu, and Z. Wang, “Ground and aerial meta-data integration for localization and reconstruction: A review,” Pattern Recognition Letters, vol. 127, pp. 202–214, 2018.

- [16] M. Bansal, K. Daniilidis, and H. Sawhney, “Ultrawide baseline facade matching for geo-localization,” in Large-Scale Visual Geo-Localization. Springer, 2016, pp. 77–98.

- [17] A. Li, V. I. Morariu, and L. S. Davis, “Planar structure matching under projective uncertainty for geolocation,” in ECCV, 2014, pp. 265–280.

- [18] A. L. Majdik, Y. Albers-Schoenberg, and D. Scaramuzza, “MAV urban localization from google street view data,” in IEEE/RSJ IROS, 2013, pp. 3979–3986.

- [19] A. Viswanathan, B. R. Pires, and D. Huber, “Vision based robot localization by ground to satellite matching in gps-denied situations,” in 2014 IEEE/RSJ IROS, Sep. 2014, pp. 192–198.

- [20] T.-Y. Lin, S. Belongie, and J. Hays, “Cross-view image geolocalization,” in IEEE CVPR, 2013, pp. 891–898.

- [21] F. Castaldo, A. Zamir, R. Angst, F. Palmieri, and S. Savarese, “Semantic cross-view matching,” in IEEE ICCVw, 2015, pp. 9–17.

- [22] T.-Y. Lin, Y. Cui, S. Belongie, and J. Hays, “Learning deep representations for ground-to-aerial geolocalization,” in The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), June 2015.

- [23] S. Workman and N. Jacobs, “On the location dependence of convolutional neural network features,” in IEEE CVPRw, 2015, pp. 70–78.

- [24] S. Workman, R. Souvenir, and N. Jacobs, “Wide-area image geolocalization with aerial reference imagery,” in The IEEE International Conference on Computer Vision (ICCV), December 2015.

- [25] Y. Tian, C. Chen, and M. Shah, “Cross-view image matching for geo-localization in urban environments,” in IEEE CVPR, 2017, pp. 3608–3616.

- [26] P. Karkus, D. Hsu, and W. S. Lee, “Particle filter networks with application to visual localization,” in Conference on robot learning. PMLR, 2018, pp. 169–178.

- [27] G. G. De Giacomo, M. M. dos Santos, P. L. Drews-Jr, and S. S. Botelho, “Guided sonar-to-satellite translation.” J. Intell. Robotic Syst., vol. 101, no. 3, p. 46, 2021.

- [28] Stanford Artificial Intelligence Laboratory et al., “Robotic operating system.” [Online]. Available: https://www.ros.org

- [29] M. M. dos Santos, G. G. De Giacomo, P. L. J. Drews, and S. S. Botelho, “Semantic segmentation of static and dynamic structures of marina satellite images using deep learning,” in 2019 8th Brazilian Conference on Intelligent Systems (BRACIS), 2019, pp. 711–716.

- [30] M. Quigley, K. Conley, B. Gerkey, J. Faust, T. Foote, J. Leibs, R. Wheeler, and A. Y. Ng, “Ros: an open-source robot operating system,” in ICRA workshop on open source software, vol. 3, no. 3.2. Kobe, Japan, 2009, p. 5.

- [31] M. M. Santos, G. B. Zaffari, P. O. C. S. Ribeiro, P. L. J. Drews-Jr, and S. S. C. Botelho, “Underwater place recognition using forward-looking sonar images: A topological approach,” Journal of Field Robotics, vol. 36, no. 2, pp. 355–369, 2019. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/rob.21822