Critical behaviour at the onset of synchronization in a neuronal model

Abstract

The coexistence of critical behaviour and oscillating patterns in brain dynamics is a very interesting issue. In this paper, we consider a model of a neuronal population in which each neuron is modeled by an over-damped rotator. We find that in the space of external parameters there exist regions in which the system shows synchronization. Interestingly, right at the transition point, the avalanche statistics show power-law behaviour. Also, in small systems, (partial) synchronization and power-law behaviour can occur at the same time.

I Introduction

Scale-free and critical behavior has long been observed in animal and human brain experiments, both in vitro and in vivo Beggs ; Peterman ; Gireesh ; Hahn ; Priesemann ; Pasq . Neuronal avalanches, bursts of activity and spiking that spread over large areas of the brain network, exhibit power-law size and duration distributions. Other data, such as human electroencephalography (EEG) recordings, show similar patterns in the background activity of the brain Freeman ; Shriki .

The criticality of neuronal systems is supported by the existence of power-law behavior and data collapse. Being at criticality is thought to play a crucial role in some of the brain's fundamental properties, such as optimal response and the storage and transfer of information Shew ; Kinouchi ; ShewPlenz ; Gautam ; Legenstein . Because the brain does not seem to need any parameter tuning to reach criticality, it was suggested that it is an example of a Self-Organized Critical (SOC) system Hesse . The idea of self-organized criticality was introduced by Bak, Tang, and Wiesenfeld BTW , who used a simple sandpile model to show that some complex systems can organize themselves towards their critical point, so that there is no need to regulate external parameters. A range of models has been introduced to show that self-organized criticality is plausible in neural systems Bornholdt ; De Archangelis ; Levina ; Millmann ; Meisel ; Tetzlaff ; Kossio . However, being at criticality does not necessarily imply that the system arrives there through a self-organizing mechanism; indeed, it has been argued that neural systems may not be an example of SOC systems Bonachela . Moreover, for the brain network, which has a highly hierarchical and modular structure, critical points are generalized to critical regions, similar to Griffiths phases Moretti ; Moosavi , so there may be no need to fine-tune a parameter. It seems plausible that a long-term evolutionary mechanism would be enough to explain why the brain works at criticality.

Therefore, some efforts have been made to find how power laws can arise in models of neuronal systems even when some external parameters need to be tuned Zare . It remains to be understood which phase transition determines the critical state. One of the most promising candidates is the synchronization transition. It has been found that the brain is more or less maintained at the transition point to synchronization Gautam ; Poil ; Dalla , and that criticality in avalanche statistics can occur at this transition point Fontenele ; Montakhab . Also, a general framework based on Landau-Ginzburg theory has been proposed to describe the dynamics of the cortex, and it was found that scale-free avalanches appear at the edge of synchronization Munoz . In similar studies, it has been found that the bifurcation leading to successive firings of a neuron might affect the overall dynamics.

In this paper, we study a network of neurons modeled by over-damped rotators that mimic type I neurons, rather than the usual integrate-and-fire model, which more or less mimics the behaviour of type II neurons. Our setup is therefore similar to that of the Kuramoto model Kuramoto , and each oscillator is identified by a single phase variable, which makes the synchronization of the system easier to study. Of course, the dynamics, especially the way two neighbouring neurons affect each other, is very different from that of the Kuramoto model, and therefore the model has quite different features. We explore the space of external parameters and find the transition lines of synchronization. The main parameters we explore are the external stimulation strength, the relative strength of inhibitory neurons to excitatory ones, and the axonal time delay. We analyze the avalanche statistics of the system at different points of this parameter space and, interestingly, observe that right at the transition the distributions of avalanche size and period obey power laws.

II Models and Methods: The single neuron model

There are many models of single-neuron dynamics. However, to simulate relatively large networks, simple models should be chosen. A simple model keeps only the main characteristics of the system and discards other details. For a neuron, this main characteristic may be stated as follows: if a relatively small stimulation is applied to a neuron, it does not react considerably; however, a neuron that receives a slowly varying current emits a fairly regular train of spikes, and in this situation it may be regarded as an oscillator. In the language of dynamical systems, when a neuron is sufficiently stimulated, the stable fixed point is lost and its trajectory in phase space can be regarded as a limit cycle, at least on short time scales. Therefore, a good single-neuron model is a simple model that develops such a cycle when stimulated. The simplest choice might be the well-known leaky integrate-and-fire (LIF) model; however, as we discuss later, the LIF model more or less mimics the behavior of Type II neurons, that is, there is a "discontinuity" in the frequency of successive firings of the modeled neuron, i.e., its activity, at the onset of activity. In fact, the activity is continuous, but it has a logarithmic singularity, which in some sense is equivalent to a discontinuity. In contrast, Type I neurons are characterized mainly by the appearance of oscillations with arbitrarily low frequency as the injected current is increased. In our study, we prefer a simple model that behaves more like a type I neuron.

A suitable model for type I neurons is given by the equation

$$\frac{d\theta}{dt} = I - \sin\theta \tag{1}$$

where $I$ is the external stimulation and $\theta$ is the dynamical variable. This model has been considered before as a simple model of neuronal dynamics and is closely related to the quadratic (nonlinear) integrate-and-fire model Ermentrout-1 ; Ermentrout-2 ; Ermen .

Equation (1) can be interpreted as an over-damped rotator in the following way. Consider a rod pendulum of mass $m$ and length $L$ driven by a constant torque $\Gamma$ in a viscous medium. The equation of motion of such a pendulum is

$$mL^2\frac{d^2\theta}{dt^2} + b\frac{d\theta}{dt} + mgL\sin\theta = \Gamma \tag{2}$$

where $\theta$ is the angle of the rotating rod with the downward vertical, $b$ is the viscous damping constant, $g$ is the acceleration due to gravity, and $\Gamma$ is the external torque.

Equation (2) is a second-order system; however, in the limit of very large $b$, where the rotator is over-damped, it may be approximated by a first-order system. Neglecting the inertia term and non-dimensionalizing the resulting equation (measuring time in units of $b/(mgL)$ and setting $I = \Gamma/(mgL)$), one arrives at equation (1) Strogatz .

Although equation (2) has very rich dynamics Strogatz , the over-damped limit is sufficient for modeling single-neuron dynamics. It shows a saddle-node bifurcation at $I = 1$: for $I < 1$ there exist two distinct fixed points, one stable and the other unstable, whereas for $I > 1$ there are no fixed points and the rotator overturns continually. The rest state of the neuron corresponds to the stable fixed point, and when $I > 1$ the rotator overturns continually and the neuron fires repeatedly.

It is more convenient to take and rewrite Eq. 1 as

| (3) |

Consider the case in which the stimulation is below threshold, so that the two equilibrium points shown in figure 1 exist.

When the system is at rest, it sits at the stable fixed point. If the rotator is stimulated slightly, it returns to the stable fixed point. However, if the stimulation is large enough to push the rotator beyond the unstable fixed point, i.e., the threshold, it rotates through a complete circle and then returns to the stable fixed point. We call this complete rotation of the rotator a spike. Usually, the "neuron" is considered to spike when its phase passes a fixed spike angle, that is, it produces an action potential at that moment. This model can be mapped onto the quadratic integrate-and-fire neuron by a change of variables Ermen . It is easy to show that Eq. 3 models type I neurons: if the current applied to the neuron exceeds the threshold value, the neuron spikes repeatedly. The inter-spike interval (ISI) is determined via

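$$T_{\mathrm{ISI}} = \int_{t_s}^{t_s+T_{\mathrm{ISI}}} dt = \int_{\theta_s}^{\theta_s+2\pi}\frac{d\theta}{d\theta/dt} = \frac{2\pi}{\sqrt{I^2-1}} \tag{4}$$

where $t_s$ is the spike time and $\theta_s$ is the spike angle; the closed form holds for $I > 1$. Therefore, the gain function is

$$f(I) = \frac{1}{T_{\mathrm{ISI}}} = \frac{\sqrt{I^2-1}}{2\pi}, \qquad I > 1 \tag{5}$$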

and hence it grows as $(I - I_c)^{1/2}$ for currents slightly above the threshold current $I_c = 1$. Note that the gain function behaves as $(I - I_c)^{\beta}$ with $\beta = 1/2$, and therefore the function is continuous. For $\beta > 0$ the function is clearly continuous, whereas for $\beta = 0$ it is usually regarded as having a jump, i.e., as discontinuous. For the leaky integrate-and-fire model with dynamics $dV/dt = I - V$, threshold potential $V_{\mathrm{th}} = 1$ and reset to $V = 0$, the gain function is proportional to $1/\ln[I/(I-1)]$, which vanishes only logarithmically as $I \to 1^+$; this resembles a discontinuity, although the function is actually continuous. Therefore, to mimic type I neurons, we choose the over-damped rotator as our single-neuron model.
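As a numerical check of the ISI and gain function, the sketch below integrates a single rotator above threshold and compares the measured period with $2\pi/\sqrt{I^2-1}$, then contrasts the near-threshold gain with that of the LIF model. It assumes that Eq. (1) has the form $d\theta/dt = I - \sin\theta$; a constant phase shift of $\theta$ does not change the period. The function names are illustrative, not taken from the paper.

```python
import math


def rotator_isi(I, dt=1e-3):
    """Time for dtheta/dt = I - sin(theta) to advance the phase by 2*pi.

    Fixed-step 4th-order Runge-Kutta; the final crossing time is linearly
    interpolated within the last step. Requires I > 1 (no fixed points).
    """
    if I <= 1.0:
        raise ValueError("I must exceed the threshold I_c = 1")

    def rhs(th):
        return I - math.sin(th)

    theta, t, target = 0.0, 0.0, 2.0 * math.pi
    while True:
        k1 = rhs(theta)
        k2 = rhs(theta + 0.5 * dt * k1)
        k3 = rhs(theta + 0.5 * dt * k2)
        k4 = rhs(theta + dt * k3)
        new_theta = theta + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
        if new_theta >= target:
            # Interpolate the crossing time inside the last step.
            return t + dt * (target - theta) / (new_theta - theta)
        theta, t = new_theta, t + dt


def rotator_rate(I):
    """Analytic gain function of the rotator, sqrt(I^2 - 1) / (2*pi)."""
    return math.sqrt(I * I - 1.0) / (2.0 * math.pi)


def lif_rate(I):
    """LIF gain for dV/dt = I - V, threshold 1, reset to 0 (requires I > 1)."""
    return 1.0 / math.log(I / (I - 1.0))


if __name__ == "__main__":
    I = 1.5
    print("numerical ISI:", rotator_isi(I))
    print("analytic  ISI:", 2.0 * math.pi / math.sqrt(I * I - 1.0))
    for eps in (1e-2, 1e-4, 1e-6):
        print(f"I - 1 = {eps:g}: rotator {rotator_rate(1 + eps):.2e}, "
              f"LIF {lif_rate(1 + eps):.2e}")
```

Close to threshold the rotator's rate falls off as the square root of $I - 1$, while the LIF rate decays only like $1/|\ln(I-1)|$, which is why the latter looks discontinuous on any practical scale.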

III Models and Methods: the network of neurons

We consider a network of neurons modeled by over-damped rotators, a fixed fraction of which are randomly chosen to be excitatory, the rest being inhibitory. Each neuron, regardless of being excitatory or inhibitory, receives a constant number of internal connections, that is, connections from a fixed number of neurons within the network. This number is the sum of the numbers of connections from excitatory and from inhibitory neurons, and we take the ratio of inhibitory to excitatory inputs to be the same as the population ratio. The neurons also receive connections from external neurons; the number of external connections is taken to be equal to the number of excitatory connections from within the network. The external neurons fire randomly as Poisson processes, so that the intervals between two successive firings are exponentially distributed, with a mean set by the external firing rate. These external neurons effectively play the role of an external (noisy) current; therefore, we fix the constant stimulation term in the dynamics of the over-damped rotators (Eq. 3) and control the external drive by tuning the external firing rate.
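A minimal sketch of this connectivity, assuming hypothetical values (an 80/20 excitatory/inhibitory split, in-degree `k`, and no self-connections; none of these numbers are taken from the paper). Each neuron draws its excitatory and inhibitory presynaptic partners separately, so the inhibitory-to-excitatory input ratio matches the population ratio. External input is modeled as `k_ext` independent Poisson sources of rate `nu_ext`; their superposition is again a Poisson process, so the number of external spikes per time step is Poisson-distributed.

```python
import numpy as np


def build_network(n, k, frac_exc=0.8, seed=0):
    """Random network with fixed in-degree k (hypothetical parameters).

    Returns:
        exc: boolean array of length n, True for excitatory neurons.
        pre: (n, k) integer array; row i lists the presynaptic partners of
             neuron i, the first k_e columns excitatory, the rest inhibitory.
        k_e: number of excitatory inputs per neuron.
    """
    rng = np.random.default_rng(seed)
    n_exc = int(round(frac_exc * n))
    exc = np.zeros(n, dtype=bool)
    exc[rng.permutation(n)[:n_exc]] = True
    exc_ids, inh_ids = np.flatnonzero(exc), np.flatnonzero(~exc)

    # Same inhibitory/excitatory ratio for inputs as for the population.
    k_e = int(round(frac_exc * k))
    k_i = k - k_e
    pre = np.empty((n, k), dtype=np.int64)
    for i in range(n):
        # Distinct partners, excluding self-connections.
        pre[i, :k_e] = rng.choice(exc_ids[exc_ids != i], size=k_e, replace=False)
        pre[i, k_e:] = rng.choice(inh_ids[inh_ids != i], size=k_i, replace=False)
    return exc, pre, k_e


def external_kicks(n, k_ext, nu_ext, dt, rng):
    """External spikes received by each neuron during one step of length dt."""
    return rng.poisson(k_ext * nu_ext * dt, size=n)


if __name__ == "__main__":
    exc, pre, k_e = build_network(n=1000, k=100)
    kicks = external_kicks(1000, k_ext=k_e, nu_ext=2.0, dt=0.01,
                           rng=np.random.default_rng(1))
    print(exc.sum(), pre.shape, k_e, kicks.mean())
```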

When a rotator reaches the spike angle, it fires and changes the phase of each of its postsynaptic neighbours by a fixed amount: positive for excitatory presynaptic neurons and negative for inhibitory ones, with the inhibitory kick scaled by the relative strength of inhibition to excitation. External (excitatory) neurons deliver the same kick as excitatory neurons of the network. We also assume that if a neuron fires at time $t$, the spike arrives at its postsynaptic neurons at time $t + D$, which introduces an axonal time delay $D$ into the system.

Now we can write the dynamics of the neuron in the following form

| (6) |

where the first summation runs over the presynaptic neurons $j$ connected to neuron $i$, and the second summation runs over the spikes of neuron $j$; the delta function associated with the $k$th spike of neuron $j$ represents the arrival of that spike at neuron $i$.
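To make the delayed, pulse-coupled dynamics of Eq. 6 concrete, the sketch below integrates the network with a forward-Euler scheme and delivers each spike after `round(delay / dt)` steps through a ring buffer. The right-hand side of Eq. 3 is an assumption here: the code uses the hypothetical drift `I0 - cos(theta)`, counting a spike when the phase passes `theta_spike = pi`. The kick sizes `J` and `-g*J`, the rate `nu_ext`, and all defaults are illustrative, not the paper's values. The array `pre` lists, for each neuron, its presynaptic partners, the first `k_e` of them excitatory.

```python
import numpy as np


def simulate(pre, k_e, t_max, dt=0.01, J=0.2, g=4.0, delay=1.5,
             nu_ext=1.0, I0=0.0, theta_spike=np.pi, theta0=None, seed=0):
    """Forward-Euler sketch of delayed pulse-coupled rotators.

    HYPOTHETICAL choices: drift dtheta/dt = I0 - cos(theta); excitatory
    kicks +J, inhibitory kicks -g*J; k_e external Poisson sources of rate
    nu_ext per neuron, each delivering +J. Returns the number of spikes
    emitted in each time step.
    """
    rng = np.random.default_rng(seed)
    n = pre.shape[0]
    d = max(1, int(round(delay / dt)))          # delay in steps (>= 1)
    history = np.zeros((d, n), dtype=bool)      # ring buffer of past spikes
    if theta0 is None:
        theta = rng.uniform(0.0, 2.0 * np.pi, n)
    else:
        theta = np.array(theta0, dtype=float)
    # Highest spike-angle crossing reached by each rotator; counting only new
    # maxima avoids double counting when inhibition pushes a rotator back.
    cycle = np.floor((theta - theta_spike) / (2.0 * np.pi))
    n_steps = int(round(t_max / dt))
    activity = np.zeros(n_steps, dtype=np.int64)
    for step in range(n_steps):
        arriving = history[step % d]            # spikes emitted at step - d
        hits = arriving[pre]                    # (n, k) presynaptic spikes
        syn = J * (hits[:, :k_e].sum(axis=1) - g * hits[:, k_e:].sum(axis=1))
        ext = J * rng.poisson(k_e * nu_ext * dt, size=n)
        theta = theta + dt * (I0 - np.cos(theta)) + syn + ext
        new_cycle = np.floor((theta - theta_spike) / (2.0 * np.pi))
        spiked = new_cycle > cycle
        cycle = np.maximum(cycle, new_cycle)
        history[step % d] = spiked              # overwrite only after reading
        activity[step] = spiked.sum()
    return activity
```

Reading a ring-buffer slot before overwriting it gives an exact delay of `d` steps without storing the full spike history; the resulting per-step spike counts form the activity series used for the avalanche analysis.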

Models describing networks of neurons usually have a handful of parameters and are difficult to analyze completely. Our model has five adjustable parameters, whose roles are straightforward: the strength of the external drive, the relative strength of inhibition to excitation within the network, the axonal time delay, the strength of the synapses, and the total number of neurons. To reduce the size of the parameter space, we fix the synaptic strength and the number of neurons, and we investigate the dynamical characteristics of the system by exploring a considerable part of the remaining three-dimensional space.

IV Synchronization of the neurons

We have simulated populations of these neurons to find out whether synchronization happens throughout the system. It has been observed that synchronization may occur in such neuronal populations Brunell ; Montakhab ; Shahbazi ; Luccioli . For example, a network similar to ours is studied in Brunell , where it was observed that, in the presence of delay, there are regions in which the system shows synchronization. In that work, the network was studied for different values of the external drive and of the relative strength of inhibition to excitation, and the regions of this space in which synchronization happens were identified. Also, in Valizade17 , using a Kuramoto-like model, it has been shown that in the presence of delay a transition from asynchrony to synchrony happens when the level of input to the network is changed. Note that although our single-neuron model is a rotator, it differs from the Kuramoto model both in the single-agent dynamics and in the interaction between two adjacent neurons: it has a preferred angle (corresponding to the rest state of the neuron), and when a neuron fires, it kicks the postsynaptic neuron. In the Kuramoto model, there is no preferred angle, and the interaction comes from a term proportional to the sine of the phase difference of the two oscillators. Quite recently, a model has been proposed in which all of the above terms are present: the intrinsic angular velocity of the rotator and the sine coupling of the Kuramoto model, as well as the preferred rest point and the kicks of our model Monuz20 . Within this model both synchronous and asynchronous phases have been observed and a rich phase diagram has been obtained; at some transition points, scaling laws in avalanche statistics are also found.

To distinguish the synchronous phase from the asynchronous one, we need a suitable order parameter that vanishes when the system is not synchronous and takes a non-zero value otherwise. In the context of Kuramoto-type rotators Kuramoto , the order parameter is defined as $r = \left|\frac{1}{N}\sum_{j=1}^{N} e^{i\theta_j}\right|$, where $\theta_j$ is the phase of the $j$th rotator and $N$ is the number of rotators. If all the rotators are in phase, $r$ is unity, and if the rotators are all independent, it is of order $1/\sqrt{N}$, which becomes negligible for sufficiently large systems. Since our dynamical variables are also phases, at first sight one might use the same order parameter. However, as we discuss below, this definition is inappropriate for our model. Our single-neuron model is given by Eq. 3. To gain better insight, let us interpret the same equation as a Langevin-type equation:

$$\frac{d\theta}{dt} = -\frac{dU(\theta)}{d\theta} + \eta(t) \tag{7}$$

where we have collected the effect of network spikes and external spikes in the noise term $\eta(t)$ and have defined the potential $U(\theta)$ as

| (8) |

This potential function is sketched schematically in figure 2 for .

The rotator tends to reach the local minimum of the potential, which is identified as the rest state of a neuron. However, because of the noise term, which results from the stimulations received by the neuron, it may climb uphill and then fall into the next minimum (and, as a result, fire). Therefore, the rotator is very likely to be found near the minimum, i.e., the neuron is very likely to be at rest. In such a situation, even if the firings of the neurons are irregular and asynchronous, the order parameter can take a non-vanishing value. In fact, if the fraction of neurons at rest is $p$, the order parameter will be approximately $p$, since the resting rotators share (nearly) the same phase while the contributions of the others largely cancel. Therefore, especially for small external stimulation, this order parameter is useless, as it does not reveal synchronous "activity".

To see whether the "activity" is synchronous, it is better to consider only the neurons that are firing. In the case of rotators, we may consider only those with phase in the interval $(\pi/2, 3\pi/2)$; we call these the spiking rotators. Within this interval, the real part of $e^{i\theta}$ is always negative, so its average never vanishes. On the other hand, within the same interval, the imaginary part of $e^{i\theta}$, i.e., $\sin\theta$, ranges from $-1$ to $1$. Consider the quantity $\Psi(t) = \frac{1}{M}\sum_{j \in \mathcal{S}} \sin\theta_j$, where $M$ is the number of spiking rotators and the summation runs only over the set $\mathcal{S}$ of spiking rotators. If the network activity is irregular, $\Psi$ is of order $1/\sqrt{M}$; however, if all the rotators spike synchronously, that is, $\theta_j = \theta$ for all spiking rotators, then $\Psi = \sin\theta$. This function fluctuates in the interval $[-1, 1]$, but we need a single number to distinguish the synchronous phase from the asynchronous one. This leads us to the last step, the definition of the proper order parameter:

| (9) |

where $\langle\cdot\rangle$ denotes time averaging over a period comparable with the spike period. In this way, the order parameter is close to zero if the network activity is irregular and takes a finite value in the case of synchronous activity. We only have to check that the number of spiking rotators is not very small; as we will see, in all the regions of our model that we are interested in, the activity is large enough.
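The contrast between the Kuramoto order parameter and the spiking-rotator quantity can be checked on synthetic snapshots. This sketch is illustrative only: it takes the rest phase to be 0 (a hypothetical choice), defines spiking rotators as those with $\cos\theta < 0$, i.e. phase in $(\pi/2, 3\pi/2)$, and, as one plausible single-number summary, uses the time average of $|\Psi(t)|$; `sync_order_parameter` is a hypothetical name, not necessarily the exact form of Eq. 9.

```python
import numpy as np


def kuramoto_r(theta):
    """Kuramoto order parameter r = |<exp(i*theta)>| over all rotators."""
    return float(np.abs(np.exp(1j * np.asarray(theta)).mean()))


def spiking_psi(theta):
    """Mean of sin(theta) over spiking rotators (cos(theta) < 0).

    Returns nan when no rotator is in the spiking interval.
    """
    th = np.asarray(theta)
    spiking = np.cos(th) < 0.0
    if not spiking.any():
        return float("nan")
    return float(np.sin(th[spiking]).mean())


def sync_order_parameter(snapshots):
    """Time average of |Psi(t)| over phase snapshots (hypothetical choice)."""
    return float(np.nanmean([abs(spiking_psi(th)) for th in snapshots]))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Asynchronous state: 70% of rotators at rest (phase 0), the rest random.
    theta = np.concatenate([np.zeros(7000), rng.uniform(0, 2 * np.pi, 3000)])
    print("Kuramoto r:", kuramoto_r(theta))        # close to 0.7
    print("|Psi|     :", abs(spiking_psi(theta)))  # close to 0
```

In this asynchronous example the Kuramoto parameter is dominated by the resting fraction, while the spiking-rotator quantity stays near zero, which is the behaviour the text argues for.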


In the simulations, we have considered samples of different sizes, ranging from 1000 to 16000 neurons, and several random configurations of links with the above-mentioned statistics. As the initial condition, we assign a random phase to each rotator and numerically integrate the differential equations with a fixed time step. After the system reaches the steady state, which typically happens within no more than 20000 time steps, we begin the actual measurements.

A central quantity of a neuron population is its activity. The activity time series $A(t)$ is defined as the number of spikes in the network between $t$ and $t + \Delta t$. The bin width $\Delta t$ should be much smaller than the average interval between successive firings of a single neuron, but not so small that only single firings are found within each interval. We have chosen a value of $\Delta t$ that seems appropriate for our systems; we keep it fixed and simply denote the activity by $A(t)$.
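Binning the spike times of all neurons gives the activity series. A minimal sketch, assuming the spike times of the whole network are collected in one array; the function and argument names are illustrative.

```python
import numpy as np


def activity_series(spike_times, t_start, t_end, dt):
    """A(t): number of spikes in consecutive half-open bins [t, t + dt)."""
    n_bins = int(np.floor((t_end - t_start) / dt))
    idx = np.floor((np.asarray(spike_times, dtype=float) - t_start) / dt)
    idx = idx.astype(np.int64)
    idx = idx[(idx >= 0) & (idx < n_bins)]  # drop spikes outside the window
    return np.bincount(idx, minlength=n_bins)


if __name__ == "__main__":
    print(activity_series([0.0, 0.1, 0.3, 0.9, 1.0], 0.0, 1.0, 0.25))
```

Using `np.bincount` on integer bin indices keeps the bins half-open and avoids the floating-point edge issues of building bin edges with `np.arange`.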

Within the same networks, we have calculated the order parameter (Eq. 9) for different values of the external stimulation strength, the relative strength of inhibition to excitation, and the delay parameter. Figure 3 shows the order parameter in the plane of external stimulation and relative inhibition strength for four different values of the delay. We have considered only the range of external stimulation in which the network is active. In these simulations, the synaptic strength and the network size are fixed, and the delay takes the values 0.5, 1, 1.5 and 2.5 in sub-figures (a) to (d), respectively. For all values of the delay, there are two distinct regions in which the order parameter takes non-zero values. These regions are pushed towards lower external stimulation as the delay is increased; for the smallest delay, 0.5, only one of the two regions is seen in the graph, as the other lies at much higher values of the external stimulation. We have also checked the case without delay within a larger area and observed no synchronous phase; in other words, the delay plays a crucial role in this collective behaviour. For a larger delay, a smaller external stimulation is needed to reach synchronization. We denote the left region by L-Synch. and the right one by R-Synch. In the L-Synch. region the excitatory neurons dominate, and in the R-Synch. region the inhibitory neurons dominate.

To see how the order parameter distinguishes synchronous from asynchronous activity, we have plotted the activity pattern of the system at a few points of the phase diagram of Fig. 3(c), that is, for a delay of 1.5. The selected points lie in the L-Synch. region, in the R-Synch. region, and in the region where the order parameter vanishes, respectively. Fig. 4 illustrates the activity patterns at these points together with the corresponding raster plots. The order parameter is clearly sensitive to synchronization. We have checked similar graphs for many other points and found the order parameter consistent with the activity patterns.

The next point to explore is whether the synchronous regions change when the system size is altered. We have performed simulations for several system sizes. Fig. 5 shows cross-sections of the phase diagram. The order parameter shows little dependence on the size of the system; the only difference is that the fluctuations are stronger in smaller systems. Also, as clearly seen in Fig. 5(c), larger networks have sharper transitions, and in the limit of infinite system size the derivative of the order parameter is expected to become discontinuous, which is a hallmark of a second-order phase transition. However, as we are dealing with systems of up to 16000 neurons, we have to be careful about finite-size effects.

One last point worth mentioning is that the order parameter does depend on the number of synapses per neuron; since we have kept this quantity constant in all the simulations shown here, this effect is not visible. This dependence is to be expected: for example, if we reduce this number to zero there will be no synchronization, whereas if all the neurons are connected to each other, synchronization happens much more easily.

V Results: Synchronization and power-law behaviour

The phase diagram of the system (Fig. 3) suggests a continuous phase transition between the synchronous and asynchronous regions. To assess this transition and identify possible critical behaviour, we investigate neural avalanches in the network. In the literature, the existence of scale-free neural avalanches is the most important indicator of criticality in neural systems Beggs . Generally, the network displays successive bursts of activity of various sizes $S$ and durations $T$, which are called avalanches. If the system is critical, the distributions of avalanche size and duration show power-law behaviour, i.e., $P(S) \propto S^{-\tau_S}$ and $P(T) \propto T^{-\tau_T}$ Beggs .

Identifying successive avalanches is a challenging task. One may define an avalanche as an uninterrupted period of activity, so that at least one quiescent time step separates two avalanches. However, this definition depends on the bin width $\Delta t$; additionally, in very large systems with many neurons, such a quiescent period may happen rarely. A better way to identify avalanches is to consider the activity of the network and take each period during which the activity is higher than its time average as an avalanche. This definition has been used before in similar systems Delpapa ; Montakhab . To avoid incorrect statistics, we take the activity above the threshold as the avalanche size VeligasSanto . Probability distributions of avalanche size and avalanche period for networks of 4000 neurons and various values of the external parameters are shown in Fig. 6. Asynchronous systems, whose order parameter is nearly zero, show sub-critical behaviour with a Poisson-like distribution, whereas synchronous systems lying within the L-Synch. or R-Synch. regions display super-critical distributions. However, right at the transition curve, the probability distribution clearly has a power-law form, though with a bump at the end, which is usually regarded as a sign of super-critical behavior. We will come back to this issue later.
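The threshold-based avalanche definition can be sketched as follows. This is one reading of the text: the size is taken as the summed excess activity $A(t)$ minus the threshold over each excursion (summing $A(t)$ itself would be an alternative), the duration is the number of bins, and an excursion still open at the end of the series is discarded. The function name is illustrative.

```python
import numpy as np


def avalanches(activity, threshold=None):
    """Excursions of A(t) above a threshold (default: its time average).

    Returns (sizes, durations). The size is the summed excess activity
    A(t) - threshold over the excursion; the duration is the number of bins.
    An excursion still open at the end of the series is discarded.
    """
    a = np.asarray(activity, dtype=float)
    thr = a.mean() if threshold is None else float(threshold)
    sizes, durations = [], []
    start = None
    for t, value in enumerate(a):
        if value > thr and start is None:
            start = t                          # avalanche begins
        elif value <= thr and start is not None:
            sizes.append(float(np.sum(a[start:t] - thr)))
            durations.append(t - start)        # avalanche ends
            start = None
    return np.array(sizes), np.array(durations, dtype=np.int64)


if __name__ == "__main__":
    s, d = avalanches([0, 0, 5, 5, 0, 0, 3, 0, 4], threshold=1)
    print(s, d)
```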


Consider a cross-section like the one shown in Fig. 5(a). As the external stimulation is increased from unity, the shape of the probability distribution function changes from a Poisson-like curve to a power law, and then again to a Poisson-like curve, but with a bump at very large avalanche sizes. We call the point at which the system exhibits power-law behaviour "the scaling point". This scaling point happens to be near the transition point of the system from the asynchronous region to the synchronous one. Identifying the exact location of the scaling point along such a cross-section is hard, because the probability distribution function changes gradually as the stimulation is increased. Moving from the asynchronous region towards the synchronous ones, a power-law behaviour is first found without a bump at the end. Very quickly, with a small change in the external parameters, the bump forms; the probability distribution function keeps this form over a small interval of the external parameters, and then the scaling behavior fades away. Although in the neural-systems literature the presence of a bump is usually considered a sign of super-criticality, this is not the case in the literature of critical systems and self-organized criticality: a bump can be a general feature of probability distribution functions that keeps the total probability equal to unity. In addition, in our case, when the bump is present, the larger number of large events gives a better-resolved tail with smaller statistical error, which allows us to check criticality through data collapse. Therefore, we use the bumpy probability distributions to investigate the critical properties of the scaling point. We have also calculated other indicators Pruessner to identify the scaling point; however, such indicators also show a relatively broad peak at the transition, except for our largest system sizes.
For a given cross-section and systems of different sizes, the approximate location of the scaling point is given in Table 1. For smaller systems, the scaling point is not very close to the transition point, but for large systems it turns out to be right at the transition point.

Table 1: Approximate location of the scaling point for different system sizes.

| System size | 1000 | 2000 | 4000 | 8000 | 16000 |
|---|---|---|---|---|---|

This can be understood as a finite-size effect. When standard statistical-mechanics models are simulated, a size-dependent (pseudo)critical point is found. Denoting the (pseudo)critical point of a system of $N$ neurons by $p_c(N)$ and the actual critical point by $p_c$, we have

$$p_c(N) - p_c \propto N^{-\lambda} \tag{10}$$

where $\lambda$ is the shifting exponent. Fig. 7 shows a log-log plot of $p_c(N) - p_c$ as a function of the system size $N$; the parameters $p_c$ and $\lambda$ are obtained by the best fit, and a very good linear fit is observed. The value obtained for $p_c$ coincides, within our precision, with the value at which the transition to synchronization occurs along this cross-section. We have checked the same phenomenon for a few other cross-sections, some horizontal and some in the R-Synch. region, and in all cases the scaling point coincides with the transition point. Also, the scaling exponents obtained below are the same within error bars.
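Fitting the finite-size shift requires estimating $p_c$ together with $\lambda$. A minimal numpy-only sketch, assuming $p_c(N)$ approaches $p_c$ from above and that at least three distinct sizes are available: it scans candidate values of $p_c$, fits a straight line to $\log(p_c(N) - p_c)$ versus $\log N$ for each, and keeps the candidate with the best linear fit (smallest $1 - R^2$). The synthetic numbers in the test are hypothetical, not the values of Table 1; a nonlinear least-squares routine would be an equally valid choice.

```python
import numpy as np


def fit_pseudocritical_shift(sizes, pc_of_n, pc_range=None, n_grid=4001):
    """Fit p_c(N) = p_c + a * N**(-lam), assuming p_c(N) > p_c.

    Scans p_c on a grid and keeps the value for which
    log(p_c(N) - p_c) is most nearly linear in log N (smallest 1 - R^2).
    Returns (p_c, lam, a).
    """
    n = np.asarray(sizes, dtype=float)
    y = np.asarray(pc_of_n, dtype=float)
    if pc_range is None:
        span = y.max() - y.min()
        # Stay strictly below min(y) so that every logarithm is defined.
        pc_range = (y.min() - 10.0 * span, y.min() - 1e-9 * span)
    log_n = np.log(n)
    best = None
    for pc in np.linspace(pc_range[0], pc_range[1], n_grid):
        log_shift = np.log(y - pc)
        slope, intercept = np.polyfit(log_n, log_shift, 1)
        resid = log_shift - (slope * log_n + intercept)
        ss_tot = np.sum((log_shift - log_shift.mean()) ** 2)
        score = np.sum(resid ** 2) / ss_tot        # 1 - R^2
        if best is None or score < best[0]:
            best = (score, pc, -slope, np.exp(intercept))
    _, pc, lam, a = best
    return pc, lam, a
```

Ranking candidates by $1 - R^2$ rather than by the raw residual matters: for very negative trial values of $p_c$ the logarithms become nearly constant, so the raw residual would shrink spuriously.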

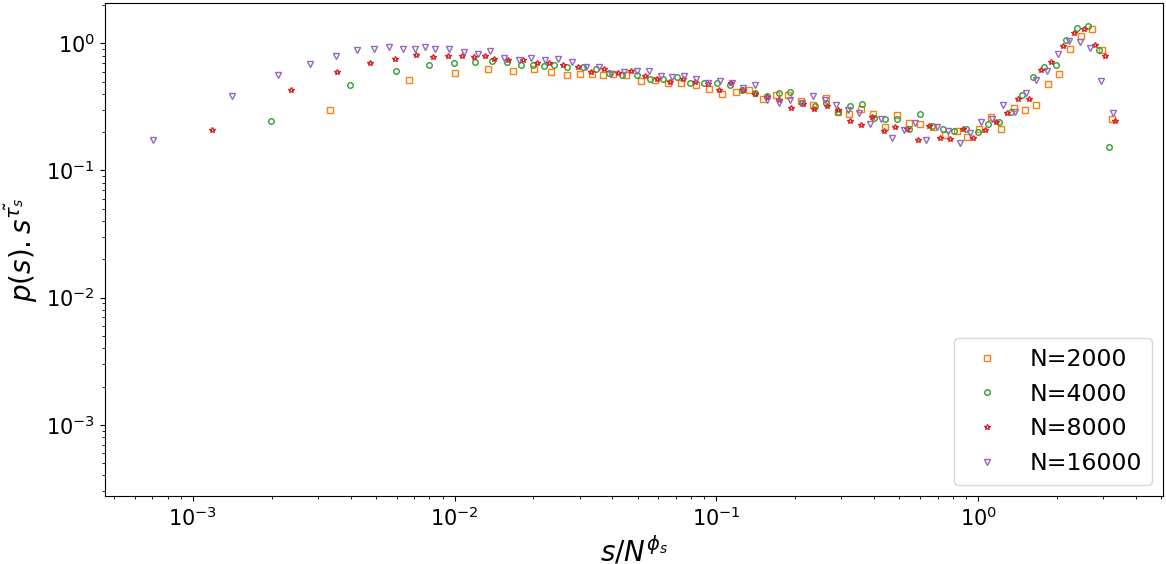

Let us focus on the probability distribution function at the scaling point. Fig. 8 shows log-log plots of the probability distribution functions of avalanche sizes and avalanche periods for different system sizes. Both plots show a linear part, which is the scaling region, and a clearly size-dependent cut-off, which is a hallmark of criticality. As said before, we have chosen the external parameters at which the probability distributions have a bump at the end. This choice has no effect on the scaling exponents but gives a smaller statistical error because of better-resolved data in the tail of the distribution. Note that the probability of avalanches in the bump is extremely small and does not play an important role in the avalanche-size statistics. The next interesting point is that, in the avalanche-size distribution, the linear parts of the plots for systems of different sizes do not fall on a single line: for a larger system, the line lies slightly above that of a smaller system. This does not happen for the period of avalanches. We discuss this later and show that this size dependence has some interesting consequences.


Despite this size dependence, the slope of the linear part of the size distribution, i.e., the apparent scaling exponent, does not depend on the system size. We have obtained the exponents of both distributions using the maximum-likelihood technique described in Clauset . The values obtained from brain activity data are 1.5 and 2 for avalanche size and duration, respectively Beggs . Considering the error bars of the two exponents obtained here, the exponent associated with the period of avalanches is not far from the experimental value; however, the avalanche-size exponent is completely different. As we show in the following, the latter is only an apparent exponent, and finite-size scaling reveals another (real) exponent for the system.
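The continuous maximum-likelihood estimator of Clauset can be written in a few lines for a fixed lower cut-off $x_{\min}$; the full method also selects $x_{\min}$ by minimizing the Kolmogorov-Smirnov distance, which this sketch omits. The `discrete=True` branch uses the common $x_{\min} - 1/2$ approximation for integer data, and the inverse-transform sampler is included only to test the estimator on synthetic data.

```python
import numpy as np


def powerlaw_mle(x, x_min, discrete=False):
    """MLE of tau in P(x) ~ x**(-tau) for x >= x_min.

    Continuous estimator: tau = 1 + n / sum(ln(x / x_min)).
    With discrete=True, uses the approximation x_min -> x_min - 1/2.
    Returns (tau, standard_error).
    """
    x = np.asarray(x, dtype=float)
    x = x[x >= x_min]
    ref = x_min - 0.5 if discrete else x_min
    n = x.size
    tau = 1.0 + n / np.sum(np.log(x / ref))
    return tau, (tau - 1.0) / np.sqrt(n)


def sample_powerlaw(tau, x_min, size, rng):
    """Inverse-transform samples from a continuous power law (for testing)."""
    u = rng.uniform(size=size)
    return x_min * (1.0 - u) ** (-1.0 / (tau - 1.0))


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    data = sample_powerlaw(1.5, 1.0, 200_000, rng)
    print(powerlaw_mle(data, 1.0))
```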

To study the scaling behavior of the system more thoroughly, we have performed a finite-size scaling (FSS) analysis for both avalanche sizes and periods. If a system is critical and hence scale-invariant, the probability distribution function should be of the form

$$P(x) = x^{-\tau}\,\mathcal{G}\!\left(\frac{x}{N^{D}}\right) \tag{11}$$

where $x$ can be the avalanche size or its period, $N$ is the system size, and $\tau$ and $D$ are the scaling exponents. Of course, the above equation should be valid only for $x > x_0$, where $x_0$ is a lower cut-off. The function $\mathcal{G}$ is usually called the scaling function and depends only on the quantity $x/N^{D}$.

To check whether a system obeys the above equation, we perform a data collapse: we plot $x^{\tau}P(x)$ against $x/N^{D}$ for different system sizes. If, for all $x$ greater than a lower cut-off, the data for different system sizes collapse onto a single curve, the probability distribution function manifestly has the scaling property. Fig. 9 shows such a data collapse for avalanche size and avalanche period, from which the scaling exponents $\tau$ and $D$ are obtained for both quantities. Interestingly, the value obtained for $\tau$ differs from the apparent exponent found by direct fitting. Such a phenomenon has been observed before Chris-Prus . Usually, it is assumed that the scaling function has a finite value in the limit $x/N^{D} \to 0$. However, in some cases, the scaling function behaves as $(x/N^{D})^{-\alpha}$ in this limit. If this is the case, we can write $\mathcal{G}(u) = u^{-\alpha}\tilde{\mathcal{G}}(u)$, where $\tilde{\mathcal{G}}(0)$ is finite. Therefore, we have

$$P(x) = N^{\alpha D}\, x^{-(\tau+\alpha)}\,\tilde{\mathcal{G}}\!\left(\frac{x}{N^{D}}\right) \tag{12}$$


In the limit of large system sizes, for avalanche sizes much smaller than $N^{D}$, the quantity $x/N^{D}$ becomes very small, so we can effectively set the argument of $\tilde{\mathcal{G}}$ to zero. The probability distribution function is then proportional to $x^{-(\tau+\alpha)}$, and hence the apparent scaling exponent $\tau + \alpha$ is observed. The prefactor $N^{\alpha D}$ also explains why, for larger systems, the linear part of the size distribution lies slightly above that of smaller systems. As seen in Fig. 9, the scaling function does not have a finite value when its argument tends to zero; rather, it behaves as $u^{-\alpha}$ with $\alpha > 0$, i.e., it becomes very large as $u \to 0$. Note that for each system size there is a lower cut-off, equal to $x_0/N^{D}$, below which the scaling function deviates from this power law. This analysis reproduces the apparent scaling exponent obtained before by fitting the data in Fig. 8.
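A synthetic check of the collapse procedure and of the apparent exponent: the sketch builds distributions whose scaling function diverges as $u^{-\alpha}$ at small argument (the specific form $\mathcal{G}(u) = u^{-\alpha}e^{-u}$ and all exponent values are hypothetical), verifies that $x^{\tau}P(x)$ plotted against $x/N^{D}$ is the same curve for different $N$, and shows that a direct log-log fit in the range $x \ll N^{D}$ returns approximately $\tau + \alpha$ rather than $\tau$.

```python
import numpy as np


def scaling_form(x, n, tau, d, alpha):
    """P(x) = x**(-tau) * G(x / n**d) with G(u) = u**(-alpha) * exp(-u)."""
    u = x / n ** d
    return x ** (-tau) * u ** (-alpha) * np.exp(-u)


def collapse(x, p, n, tau, d):
    """Rescaled coordinates (x / N**D, x**tau * P) for a data collapse."""
    return x / n ** d, x ** tau * p


def loglog_slope(x, p):
    """Slope of a straight-line fit of log P versus log x."""
    slope, _ = np.polyfit(np.log(x), np.log(p), 1)
    return slope


if __name__ == "__main__":
    tau, d, alpha = 1.2, 1.0, 0.3          # hypothetical exponents
    x = np.logspace(0, 2, 50)              # x << N**D for both sizes
    for n in (4000.0, 16000.0):
        p = scaling_form(x, n, tau, d, alpha)
        u, y = collapse(x, p, n, tau, d)
        # On the collapsed axes every size follows u**(-alpha) * exp(-u).
        print(n, np.allclose(y, u ** (-alpha) * np.exp(-u)),
              "apparent exponent:", -loglog_slope(x, p))
```

In this example the curve for the larger system also lies above the smaller one by the factor $N^{\alpha D}$, mirroring the size dependence seen in the avalanche-size distributions.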

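This size dependence of the probability distribution function has other consequences. As an example, consider the relation between mean avalanche size and mean avalanche period. Assuming narrow joint distributions, $S$ and $T$ are functions of each other, and we may write

$$\langle S\rangle(T) \propto T^{\gamma} \tag{13}$$

where $\langle S\rangle(T)$ is the average of $S$ conditioned on $T$. Eq. 13 rests on two assumptions: first, a narrow joint distribution, and second, that the probability distribution functions of both $S$ and $T$ are given by Eq. 11. One may then also find a relation among the scaling exponents $\tau_S$, $\tau_T$ and $\gamma$, which reads

$$\gamma = \frac{\tau_T - 1}{\tau_S - 1} \tag{14}$$

However, this derivation assumes a finite value of the scaling function at zero argument. With simple algebra, it can be shown that if the scaling functions of avalanche size and period behave as $u^{-\alpha_S}$ and $u^{-\alpha_T}$, respectively, with $D_S$ and $D_T$ the corresponding exponents $D$ of Eq. 11, the relation between the mean values of avalanche size and avalanche period can be written as

$$\langle S\rangle(T) \propto N^{\kappa}\,T^{\gamma'} \tag{15}$$

with

$$\gamma' = \frac{\tau_T + \alpha_T - 1}{\tau_S + \alpha_S - 1} \tag{16}$$

and

$$\kappa = \frac{\alpha_S D_S - \alpha_T D_T}{\tau_S + \alpha_S - 1} \tag{17}$$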

Using the values obtained for the scaling exponents, we compute the exponents of Eqs. 16 and 17. Fig. 10 shows a log-log plot of the mean avalanche size conditioned on the avalanche period for different system sizes, as a function of the period rescaled by a power of the system size. The best fit to the collapsed curves gives an exponent consistent with previously obtained values and with experimental data Friedman 2012 , although in our case it originates from different scaling exponents for avalanche size and period.
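Eqs. 13 and 14 suggest a simple consistency check on avalanche data. The sketch below, assuming arrays of avalanche sizes and periods such as those produced by a threshold-based detection, computes the conditional mean size for each period, fits $\gamma$ on a log-log scale, and evaluates the scaling relation of Eq. 14 (for example, $\tau_S = 1.5$ and $\tau_T = 2$ give $\gamma = 2$). It does not implement the size-dependent corrections of Eqs. 15 to 17, and the function names are illustrative.

```python
import numpy as np


def conditional_mean_size(sizes, durations):
    """<S>(T): mean avalanche size for each distinct duration T."""
    sizes = np.asarray(sizes, dtype=float)
    durations = np.asarray(durations)
    t_values = np.unique(durations)
    means = np.array([sizes[durations == t].mean() for t in t_values])
    return t_values, means


def fit_gamma(t_values, mean_sizes):
    """Exponent gamma from a log-log fit of <S>(T) ~ T**gamma."""
    slope, _ = np.polyfit(np.log(t_values), np.log(mean_sizes), 1)
    return slope


def gamma_from_exponents(tau_s, tau_t):
    """Scaling relation gamma = (tau_T - 1) / (tau_S - 1), as in Eq. 14."""
    return (tau_t - 1.0) / (tau_s - 1.0)


if __name__ == "__main__":
    T = np.repeat(np.arange(1, 51), 3)
    S = 2.0 * T ** 1.3 * np.tile([0.9, 1.0, 1.1], 50)  # synthetic data
    t, m = conditional_mean_size(S, T)
    print(fit_gamma(t, m), gamma_from_exponents(1.5, 2.0))
```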

VI Summary and conclusion

In this paper, we studied the behavior of neural networks consisting of over-damped rotators as a model for the dynamics of type I single neurons. By tuning the external stimulation, the inhibitory strength, and the axonal delay time, we identified both synchronous and asynchronous regions in the phase diagram of our system. Through finite-size analysis, we have argued that the transition between the synchronous and asynchronous states is a second-order phase transition. Interestingly, we observed that the probability distribution functions of both the size and the period of avalanches show power-law behaviour in the vicinity of the critical lines, where they also show a very good data collapse. For smaller systems, the point where the power-law behavior emerges falls inside the synchronous region; therefore, in these systems it is possible to observe both criticality and synchronization. For very large systems, however, the power-law behaviour appears right at the synchronization transition point.

Some questions remain open. First, the transition line barely touches the line on which excitation and inhibition are balanced. Second, we have not proposed a mechanism that drives the system towards the critical line. One possible answer is the evolution of synapses, which is absent from our model. For example, if one devised a mechanism that strengthens the excitatory neurons in the synchronous phase and the inhibitory ones in the asynchronous phase, the transition line to the r-synch region would become stable. At present, however, no synaptic dynamics is implemented in the model.

References

- (1) Beggs, John M., and Dietmar Plenz. "Neuronal avalanches in neocortical circuits." Journal of Neuroscience 23, no. 35 (2003): 11167-11177.

- (2) Petermann, Thomas, Tara C. Thiagarajan, Mikhail A. Lebedev, Miguel A. L. Nicolelis, Dante R. Chialvo, and Dietmar Plenz. "Spontaneous cortical activity in awake monkeys composed of neuronal avalanches." Proceedings of the National Academy of Sciences 106, no. 37 (2009): 15921-15926.

- (3) Gireesh, Elakkat D., and Dietmar Plenz. "Neuronal avalanches organize as nested theta- and beta/gamma-oscillations during development of cortical layer 2/3." Proceedings of the National Academy of Sciences 105, no. 21 (2008): 7576-7581.

- (4) Pasquale, V., P. Massobrio, L. L. Bologna, M. Chiappalone, and S. Martinoia. "Self-organization and neuronal avalanches in networks of dissociated cortical neurons." Neuroscience 153, no. 4 (2008): 1354-1369.

- (5) Hahn, Gerald, Thomas Petermann, Martha N. Havenith, Shan Yu, Wolf Singer, Dietmar Plenz, and Danko Nikolić. "Neuronal avalanches in spontaneous activity in vivo." Journal of Neurophysiology 104, no. 6 (2010): 3312-3322.

- (6) Priesemann, V., M. Wibral, M. Valderrama, R. Pröpper, M. Le Van Quyen, T. Geisel, J. Triesch, D. Nikolić, and M. H. J. Munk. "Spike avalanches in vivo suggest a driven, slightly subcritical brain state." Frontiers in Systems Neuroscience 8 (2014): 108.

- (7) Freeman, Walter J., Linda J. Rogers, Mark D. Holmes, and Daniel L. Silbergeld. "Spatial spectral analysis of human electrocorticograms including the alpha and gamma bands." Journal of Neuroscience Methods 95, no. 2 (2000): 111-121.

- (8) Shriki, Oren, Jeff Alstott, Frederick Carver, Tom Holroyd, Richard N. A. Henson, Marie L. Smith, Richard Coppola, Edward Bullmore, and Dietmar Plenz. "Neuronal avalanches in the resting MEG of the human brain." Journal of Neuroscience 33, no. 16 (2013): 7079-7090.

- (9) Shew, Woodrow L., Hongdian Yang, Thomas Petermann, Rajarshi Roy, and Dietmar Plenz. "Neuronal avalanches imply maximum dynamic range in cortical networks at criticality." Journal of Neuroscience 29, no. 49 (2009): 15595-15600.

- (10) Shew, Woodrow L., and Dietmar Plenz. "The functional benefits of criticality in the cortex." The Neuroscientist 19, no. 1 (2013): 88-100.

- (11) Kinouchi, Osame, and Mauro Copelli. "Optimal dynamical range of excitable networks at criticality." Nature Physics 2, no. 5 (2006): 348-351.

- (12) Gautam, Shree Hari, Thanh T. Hoang, Kylie McClanahan, Stephen K. Grady, and Woodrow L. Shew. "Maximizing sensory dynamic range by tuning the cortical state to criticality." PLoS Computational Biology 11, no. 12 (2015): e1004576.

- (13) Legenstein, Robert, and Wolfgang Maass. "What makes a dynamical system computationally powerful." New Directions in Statistical Signal Processing: From Systems to Brain (2007): 127-154.

- (14) Hesse, Janina, and Thilo Gross. "Self-organized criticality as a fundamental property of neural systems." Frontiers in Systems Neuroscience 8 (2014): 166.

- (15) Bak, Per, Chao Tang, and Kurt Wiesenfeld. "Self-organized criticality: An explanation of the 1/f noise." Physical Review Letters 59, no. 4 (1987): 381.

- (16) Bornholdt, Stefan, and Thimo Rohlf. "Topological evolution of dynamical networks: Global criticality from local dynamics." Physical Review Letters 84, no. 26 (2000): 6114.

- (17) Levina, Anna, J. Michael Herrmann, and Theo Geisel. "Dynamical synapses causing self-organized criticality in neural networks." Nature Physics 3, no. 12 (2007): 857-860.

- (18) Meisel, Christian, and Thilo Gross. "Adaptive self-organization in a realistic neural network model." Physical Review E 80, no. 6 (2009): 061917.

- (19) Tetzlaff, Christian, Samora Okujeni, Ulrich Egert, Florentin Wörgötter, and Markus Butz. "Self-organized criticality in developing neuronal networks." PLoS Computational Biology 6, no. 12 (2010): e1001013.

- (20) de Arcangelis, Lucilla, Carla Perrone-Capano, and Hans J. Herrmann. "Self-organized criticality model for brain plasticity." Physical Review Letters 96, no. 2 (2006): 028107.

- (21) Millman, Daniel, Stefan Mihalas, Alfredo Kirkwood, and Ernst Niebur. "Self-organized criticality occurs in non-conservative neuronal networks during 'up' states." Nature Physics 6, no. 10 (2010): 801-805.

- (22) Kossio, Felipe Yaroslav Kalle, Sven Goedeke, Benjamin van den Akker, Borja Ibarz, and Raoul-Martin Memmesheimer. "Growing critical: self-organized criticality in a developing neural system." Physical Review Letters 121, no. 5 (2018): 058301.

- (23) Bonachela, Juan A., Sebastiano De Franciscis, Joaquín J. Torres, and Miguel A. Muñoz. "Self-organization without conservation: are neuronal avalanches generically critical?" Journal of Statistical Mechanics: Theory and Experiment 2010, no. 02 (2010): P02015.

- (24) Moretti, Paolo, and Miguel A. Muñoz. "Griffiths phases and the stretching of criticality in brain networks." Nature Communications 4, no. 1 (2013): 1-10.

- (25) Moosavi, S. Amin, Afshin Montakhab, and Alireza Valizadeh. "Refractory period in network models of excitable nodes: self-sustaining stable dynamics, extended scaling region and oscillatory behavior." Scientific Reports 7, no. 1 (2017): 1-10.

- (26) Zare, Marzieh, and Paolo Grigolini. "Cooperation in neural systems: bridging complexity and periodicity." Physical Review E 86, no. 5 (2012): 051918.

- (27) Poil, Simon-Shlomo, Richard Hardstone, Huibert D. Mansvelder, and Klaus Linkenkaer-Hansen. "Critical-state dynamics of avalanches and oscillations jointly emerge from balanced excitation/inhibition in neuronal networks." Journal of Neuroscience 32, no. 29 (2012): 9817-9823.

- (28) Dalla Porta, Leonardo, and Mauro Copelli. "Modeling neuronal avalanches and long-range temporal correlations at the emergence of collective oscillations: Continuously varying exponents mimic M/EEG results." PLoS Computational Biology 15, no. 4 (2019): e1006924.

- (29) Fontenele, Antonio J., Nivaldo A. P. de Vasconcelos, Thaís Feliciano, Leandro A. A. Aguiar, Carina Soares-Cunha, Barbara Coimbra, Leonardo Dalla Porta et al. "Criticality between cortical states." Physical Review Letters 122, no. 20 (2019): 208101.

- (30) Khoshkhou, Mahsa, and Afshin Montakhab. "Spike-timing-dependent plasticity with axonal delay tunes networks of Izhikevich neurons to the edge of synchronization transition with scale-free avalanches." Frontiers in Systems Neuroscience 13 (2019).

- (31) Di Santo, Serena, Pablo Villegas, Raffaella Burioni, and Miguel A. Muñoz. "Landau–Ginzburg theory of cortex dynamics: Scale-free avalanches emerge at the edge of synchronization." Proceedings of the National Academy of Sciences 115, no. 7 (2018): E1356-E1365.

- (32) Kuramoto, Yoshiki. Chemical Oscillations, Waves, and Turbulence. Courier Corporation, 2003.

- (33) Ermentrout, G. Bard, and David H. Terman. Mathematical Foundations of Neuroscience. Vol. 35. Springer Science and Business Media, 2010.

- (34) Ermentrout, Bard. "Type I membranes, phase resetting curves, and synchrony." Neural Computation 8, no. 5 (1996): 979-1001.

- (35) Ermentrout, G. Bard, and Nancy Kopell. "Parabolic bursting in an excitable system coupled with a slow oscillation." SIAM Journal on Applied Mathematics 46, no. 2 (1986): 233-253.

- (36) Strogatz, Steven H. Nonlinear Dynamics and Chaos with Student Solutions Manual: With Applications to Physics, Biology, Chemistry, and Engineering. CRC Press, 2018.

- (37) Brunel, Nicolas. "Dynamics of sparsely connected networks of excitatory and inhibitory spiking neurons." Journal of Computational Neuroscience 8, no. 3 (2000): 183-208.

- (38) Esfahani, Zahra G., Leonardo L. Gollo, and Alireza Valizadeh. "Stimulus-dependent synchronization in delayed-coupled neuronal networks." Scientific Reports 6, no. 1 (2016): 1-10.

- (39) Buendía, Victor, Pablo Villegas, Raffaella Burioni, and Miguel A. Muñoz. "Hybrid-type synchronization transitions: where marginal coherence, scale-free avalanches, and bistability live together." arXiv preprint arXiv:2011.03263 (2020).

- (40) Luccioli, Stefano, and Antonio Politi. "Irregular collective behavior of heterogeneous neural networks." Physical Review Letters 105, no. 15 (2010): 158104.

- (41) Safari, Nahid, Farhad Shahbazi, Mohammad Dehghani-Habibabadi, Moein Esghaei, and Marzieh Zare. "Spike-Phase Coupling As an Order Parameter in a Leaky Integrate-and-Fire Model." arXiv preprint arXiv:1903.00998 (2019).

- (42) Del Papa, Bruno, Viola Priesemann, and Jochen Triesch. "Criticality meets learning: Criticality signatures in a self-organizing recurrent neural network." PLoS ONE 12, no. 5 (2017): e0178683.

- (43) Villegas, Pablo, Serena di Santo, Raffaella Burioni, and Miguel A. Muñoz. "Time-series thresholding and the definition of avalanche size." Physical Review E 100, no. 1 (2019): 012133.

- (44) Clauset, Aaron, Cosma Rohilla Shalizi, and Mark E. J. Newman. "Power-law distributions in empirical data." SIAM Review 51, no. 4 (2009): 661-703.

- (45) Pruessner, Gunnar. Self-Organised Criticality: Theory, Models and Characterisation. Cambridge University Press, 2012.

- (46) Christensen, Kim, Nadia Farid, Gunnar Pruessner, and Matthew Stapleton. "On the scaling of probability density functions with apparent power-law exponents less than unity." The European Physical Journal B 62, no. 3 (2008): 331-336.

- (47) Friedman, Nir, Shinya Ito, Braden A. W. Brinkman, Masanori Shimono, R. E. Lee DeVille, Karin A. Dahmen, John M. Beggs, and Thomas C. Butler. "Universal critical dynamics in high resolution neuronal avalanche data." Physical Review Letters 108, no. 20 (2012): 208102.