Covariate Adjusted Functional Mixed Membership Models

Abstract

Mixed membership models are a flexible class of probabilistic data representations used for unsupervised and semi-supervised learning, allowing each observation to partially belong to multiple clusters or features. In this manuscript, we extend the framework of functional mixed membership models to allow for covariate-dependent adjustments. The proposed model utilizes a multivariate Karhunen-Loève decomposition, which allows for a scalable and flexible model. Within this framework, we establish a set of sufficient conditions ensuring the identifiability of the mean, covariance, and allocation structure up to a permutation of the labels. This manuscript is primarily motivated by studies on functional brain imaging through electroencephalography (EEG) of children with autism spectrum disorder (ASD). Specifically, we are interested in characterizing the heterogeneity of alpha oscillations for typically developing (TD) children and children with ASD. Since alpha oscillations are known to change as children develop, we aim to characterize the heterogeneity of alpha oscillations conditionally on the age of the child. Using the proposed framework, we were able to gain novel information on the developmental trajectories of alpha oscillations for children with ASD and how the developmental trajectories differ between TD children and children with ASD.

Keywords: Bayesian Methods, EEG, Functional Data, Mixed Membership Models, Neuroimaging

1 Introduction

Cluster analysis is an unsupervised exploratory task that aims to group similar observations into “clusters” to explain the heterogeneity found in a particular dataset. When an outcome of interest is indexed by covariate information, it is often informative to adjust clustering procedures to account for covariate-dependent patterns of variation. Covariate-dependent clustering has found applications in post hock analyses of clinical trial data (Müller et al., 2011), where conditioning on factors such as dose response, tumor grade, and other clinical factors is often of interest when defining patients response subgroups. In addition to clinical trial settings, covariate-dependent clustering has also become popular in fields such as genetics (Qin and Self, 2006), flow cytometry (Hyun et al., 2023), neuroimaging (Guha and Guhaniyogi, 2022; Binkiewicz et al., 2017), and spatial statistics (Page and Quintana, 2016).

In statistics and machine learning, covariate-dependent clustering procedures have known a number of related nomenclatures, including finite mixture of regressions, mixture of experts, and covariate adjusted product partition models, which tend to refer to specific ways in which covariate information is used in data grouping decisions. The term finite mixture of regressions (McLachlan et al., 2019; Faria and Soromenho, 2010; Grün et al., 2007; Khalili and Chen, 2007; Hyun et al., 2023; Devijver, 2015) refers to the fitting of a mixture model, where the mean structure depends on the covariates of interest through a regression framework. The mixture of experts model (Jordan and Jacobs, 1994; Bishop and Svenskn, 2002) is similar to a finite mixture of regressions model in that it assumes that the likelihood is a weighted combination of probability distribution functions. However, in the mixture of experts model, the weights are dependent on the covariates of interest, providing an additional layer of flexibility beyond that of traditional finite mixture of regressions models. Although the mixture of experts model was originally designed for supervised learning settings, recent developments have expanded its application to unsupervised settings (Kopf et al., 2021; Tsai et al., 2020). Lastly, covariate adjusted product partition models (Müller et al., 2011; Park and Dunson, 2010) are a Bayesian non-parametric version of covariate adjusted clustering. Analogous to mixture of experts models, covariate adjusted product partition models allow the cluster partitions to depend on the covariates of interest.

In this manuscript, we extend the class of functional mixed membership models proposed by Marco et al. (2024a) to allow latent features to depend on covariate information. Mixed membership models (Erosheva et al., 2004; Heller et al., 2008; Gruhl and Erosheva, 2014; Marco et al., 2024a, b), sometimes referred to as partial membership models, can be thought of as a generalization of traditional clustering, where each observation is allowed to partially belong to multiple clusters or features. Although there have been many advancements in covariate adjusted clustering models for multivariate data, to the best of our knowledge, little work has been done on incorporating covariate information in functional mixed membership models or functional clustering models. Two important exceptions are Yao et al. (2011), who specified a finite mixture of regressions model where the covariates are functional data and the clustered data are scalar, and Gaffney and Smyth (1999) who proposed a functional mixture of regressions model where the function is modeled by a deterministic linear function of the covariates. Here, we consider the case where we have a covariate vector in and the outcome we cluster is functional.

Our work is primarily motivated by functional brain imaging studies on children with autism spectrum disorder (ASD) through electroencephalography (EEG). Specifically, Marco et al. (2024a) analyzed how alpha oscillations differ between children with ASD and typically developing (TD) children. Since alpha oscillations are known to change as children develop, the need for an age-dependent mixed membership model is crucial to ensure that shifts in the power spectrum of alpha oscillations do not confound measurements of alpha power (Haegens et al., 2014). Unlike mixture of experts models and covariate adjusted product partition models, we aim to specify a mixed membership model in which the allocation structure does not depend on the covariates of interest. Although previous studies have shown that the alpha peak shifts to a higher frequency and becomes more attenuated (Scheffler et al., 2019; Rodríguez-Martínez et al., 2017), the results can be confounded if this effect is not observed in all individuals. In our model, since we assume that each individual’s allocation parameters do not change with age, we can infer how alpha oscillations change as children age at an individual level, making a covariate adjusted mixed membership model ideal for this case study.

This manuscript starts with a brief review of functional clustering and covariate-dependent clustering frameworks, such as the mixture of experts model and the finite mixture of regressions model. Using these previous frameworks as a reference, we derive the general form of our covariate adjusted mixed membership model. In Section 2.2 we review the multivariate Karhunen-Loève (KL) theorem, which allows us to have a concise representation of the latent functional features. In Section 2.3, we leverage the KL decomposition to fully specify the covariate adjusted functional mixed membership model. A review of the identifiability issues that occur in mixed membership models, as well as a set of sufficient conditions to ensure identifiability in a covariate adjusted mixed membership model can be found in Section 2.4. Section 3 covers two simulation studies which explore the empirical convergence properties of the proposed model and evaluate the performance of various information criteria in choosing the number of features in a covariate adjusted functional mixed membership model. Section 4 illustrates the usefulness of the covariate adjusted functional mixed membership model by analyzing EEG data from children with ASD and TD children. Lastly, we conclude this manuscript with discussion on some of the challenges of fitting these models, as well as possible theoretical challenges when working with covariate adjusted mixed membership models.

2 Covariate Adjusted Functional Mixed Membership Model

Functional data analysis (FDA) focuses on the statistical analysis of sample paths from a continuous stochastic process , where is a compact subset of (Wang et al., 2016). In FDA, one commonly assumes that the random functions are elements of a Hilbert space, or more specifically that the random functions are square-integrable functions . In this manuscript, we assume that the observed sample paths are generated from a Gaussian process (GP), fully characterized by a mean function, , and a covariance function, , for . Since mixed membership models can be considered a generalization of finite mixture models, we will show how the finite mixture of regressions and mixture of experts models relate to our proposed mixed membership models. We relate our contribution to covariate adjusted product partition models only superficially, due to the significant differences in both theory and implementation for this model class (Müller et al., 2011; Park and Dunson, 2010). For the theoretical developments discussed in this section, we will assume that the number of clusters or features, , is known a-priori. Although the number of clusters or features is often unknown, Section 3.1 shows that the use of information criteria or simple heuristic methods such as the “elbow” method can be informative in choosing the number of features.

2.1 Mixture of Regressions, Mixture of Experts and Covariate Adjusted Mixed Membership

Functional clustering generally assumes that each sample path is drawn from one of underlying cluster-specific sub-processes (James and Sugar, 2003; Chiou and Li, 2007; Jacques and Preda, 2014). In the GP framework, assuming that are the underlying cluster-specific sub-processes with corresponding mean and covariance functions , the general form of a GP finite mixture model is typically expressed as:

| (1) |

where () are the mixing proportions and are the sample paths for . Let be the covariates of interest associated with the observation. Extending the multivariate finite mixture of regressions model (McLachlan et al., 2019; Faria and Soromenho, 2010; Grün et al., 2007; Khalili and Chen, 2007; Hyun et al., 2023; Devijver, 2015) to a functional setting, the covariate adjustments would be encoded through the mean by defining a set of cluster-specific regression functions . The mixture of GPs in (1) would then be generalized to include covariate information as follows:

| (2) |

where cluster specific GPs are now assumed to depend on mean functions , which are allowed to vary over the covariate space. Alternative representations of covariate adjusted mixtures have been proposed to allow the mixing proportions to depend on covariate information through generalized regression functions (Jordan and Jacobs, 1994) or implicitly defining covariate adjusted partitions through a cohesion prior (Park and Dunson, 2010).

In both the finite mixture of regressions and mixture of experts settings, covariate adjustment does not change the overall interpretation of the finite mixture modeling framework, and the underlying assumption is that a function exhibits uncertain membership to only one of well-defined subprocesses . Following Marco et al. (2024a), we contrast this representation of functional data with one where a sample path is allowed mixed membership to one or more well-defined sub-processes . In the mixed membership framework, the underlying subprocesses are best interpreted as latent functional features, which may partially manifest in a specific path .

Our representation of a functional mixed membership model hinges on the introduction of a new set of latent variables , where and . Given the latent mixing variables , each sample path is assumed to follow the following sampling structure:

| (3) |

Thus we can see that under the functional mixed membership model, each sample path is assumed to come from a convex combination of the underlying GPs, . To avoid unwarranted restrictions on the implied covariance structure, the functional mixed membership model does not assume that the underlying GPs are mutually independent. Thus, we will let represent the cross-covariance function between the GP and the GP, for . Letting be the collection of covariance and cross-covariance functions, we can specify the sampling model of the functional mixed membership model as

| (4) |

Leveraging the mixture of regressions framework in (2), the proposed covariate adjusted functional mixed membership model becomes:

| (5) |

In Section 2.2, we review the multivariate Karhunen-Loève (KL) construction (Ramsay and Silverman, 2005a; Happ and Greven, 2018), which allows us to have a joint approximation of the covariance structure of the underlying GPs. Using the joint approximation, we are able to concisely represent the GPs, facilitating inference on higher-dimensional functions such as surfaces. Using the KL decomposition, we are able to fully specify our proposed covariate adjusted functional mixed membership model (Equation 5) in Section 2.3.

2.2 Multivariate Karhunen-Loève Characterization

The proposed modeling framework in Equation (5) depends on a set of mean and covariance functions, which are naturally represented infinite-dimensional parameters. Thus, in order to work in finite dimensions, we assume that the underlying GPs are smooth and lie in the -dimensional subspace, , spanned by a set of linearly independent square-integrable basis functions, (). Although the choice of basis functions is user-defined, the basis functions must be uniformly continuous in order to satisfy Lemma 2.2 of Marco et al. (2024a). In this manuscript, we will utilize B-splines for all case studies and simulation studies.

The assumption that allows us to turn an infinite-dimensional problem into a finite-dimensional problem, making traditional inference tractable. Although tractable, accounting for covariance and cross-covariance functions separately leads to a model that needs parameters to represent the overall covariance structure. While the number of clusters, , supported in applications is typically small, the number of basis functions, , tends to follow the observational grid resolution, and may therefore be quite large. This is particularly true when one considers higher-dimensional functional data, such as surfaces, which necessitate a substantial number of basis functions, making it computationally expensive to fit models. Thus, we will use the multivariate KL decomposition (Ramsay and Silverman, 2005a; Happ and Greven, 2018) to reduce the number of parameters needed to estimate the covariance surface to , where is the number of eigenfunctions used to approximate the covariance structure. A more detailed derivation of a KL decomposition for functions in can be found in Marco et al. (2024a).

To achieve a concise joint representation of the latent GPs, we define a multivariate GP, which we denote by , such that

where and . Since is a Hilbert space, with the inner product defined as the inner product, we can see that , where is defined as the direct sum of the Hilbert spaces , making also a Hilbert space. Since is a Hilbert space and the covariance operator of , denoted , is a positive, bounded, self-adjoint, and compact operator, we know that there exists a complete set of eigenfunctions and the corresponding eigenvalues such that for . Using the eigenpairs of the covariance operator, we can rewrite as

where and is the element of . Since , we know that there exists such that , where . Similarly, since , we can introduce a mapping , such that . Therefore, we arrive at the general form of our decomposition:

| (6) |

Using this decomposition, our covariance and mean structures can be recovered such that and , for and . To reduce the dimensionality of the problem, we will only use the first eigenpairs to approximate the stochastic processes. Although traditional functional principal component analysis (FPCA) will choose the number of eigenfunctions based on the proportion of variance explained, the same strategy cannot be employed in functional mixed membership models because the allocation parameters of the model are typically not known. Therefore, we suggest selecting a large value of , and then proceeding with stable estimation through prior regularization.

2.3 Model and Prior Specification

In this section, we fully specify the covariate adjusted functional mixed membership model utilizing a truncated version of the KL decomposition, specified in Equation 6. We start by first specifying how the covariates of interest influence the mean function. Under the standard function-on-scalar regression framework (Faraway, 1997; Brumback and Rice, 1998), we have for . Since we assumed that for , we know that . Therefore, there exist and such that

| (7) |

Under a standardized set of covariates, specifies the population-level mean of the feature and encodes the covariate dependence of the feature. Thus, assuming the mean structure specified in Equation 7 and using a truncated version of the KL decomposition specified in Equation 6, we can specify the sampling model of our covariate adjusted functional mixed membership model.

Let be the observed sample paths that we want to model to the features, , conditionally on the covariates of interest, . Since the observed sample paths are observed at only a finite number of points, we will let denote the time points at which the function was observed. Without loss of generality, we will define the sampling distribution over the finite-dimensional marginals of . Using the general form of our proposed model defined in Equation 5, we have

| (8) |

where and is the collection of the model parameters. As defined in Section 2, are variables that lie on the unit simplex, such that and . From this characterization, we can see that each observation is modeled as a convex combination of realizations from the features with additional Gaussian noise, represented by . If we integrate out the variables, for and , we arrive at the following likelihood:

| (9) |

where is the collection of the model parameters excluding the parameters ( and ) and . Equation 9 illustrates that the proposed covariate adjusted functional mixed membership model can be expressed as an additive model; the mean structure is a convex combination of the feature-specific means, while the covariance can be written as a weighted sum of covariance functions and cross-covariance functions.

To have an adequately expressive and scalable model, we approximate the covariance surface of the features using scaled pseudo-eigenfunctions. In this framework, orthogonality will not be imposed on the parameters, making them pseudo-eigenfunctions instead of the eigenfunctions described in Section 2.2. From a modeling prospective, this allows us to sample on an unconstrained space, facilitating better Markov chain mixing and easier sampling schemes. Although direct inference on the eigenfunctions is no longer available, a formal analysis can still be conducted by reconstructing the posterior samples of the covariance surface and calculating eigenfunctions from the posterior samples. To avoid overfitting of the covariance functions, we follow Marco et al. (2024a) by using the multiplicative gamma process shrinkage prior proposed by Bhattacharya and Dunson (2011) to achieve adaptive regularized estimation of the covariance structure. Therefore, letting be the element of , we have the following:

where , , , and . By letting , we can show that for , leading to the prior on having stochastically decreasing variance as increases. This will have a regularizing effect on the posterior draws of , making more likely to be close to zero as increases.

In functional data analysis, we often desire smooth mean functions to protect against overfit models. Therefore, we use a first-order random walk penalty proposed by Lang and Brezger (2004) on the and parameters to promote adaptive smoothing of the mean function of the features in our model. Therefore, we have that for , where and is the element of . Similarly, we have that for and , where and is the row and column of . Following previous mixed membership models (Heller et al., 2008; Marco et al., 2024a, b), we assume that , , and . Lastly, we assume that . The posterior distributions of the parameters in our model, as well as a sampling scheme with tempered transitions, can be found in Section 2 of the Supplementary Materials. A covariate adjusted model where the covariance is also dependent on the covariates of interest can be found in Section 4 of the Supplementary Materials.

2.4 Model Identifiability

Mixed membership models face multiple identifiability problems due to their increased flexibility over traditional clustering models (Chen et al., 2022; Marco et al., 2024a, b). Like traditional clustering models, mixed membership models also face the common label switching problem, where an equivalent model can be formulated by permuting the labels or allocation parameters. Although this is one source of non-identifiability, relabelling algorithms can be formulated from the work of Stephens (2000). More complex identifiability problems arise since the allocation parameters are now continuous variables on the unit simplex, rather than binary variables like in clustering. Specifically, an equivalent model can be constructed by rotating and/or changing the volume of the convex polytope constructed by the allocation parameters through linear transformations of the allocation parameters (Chen et al., 2022). Therefore, geometric assumptions must be made on the allocation parameters to ensure that the mixed membership models are identifiable.

One way to ensure that we have an identifiable model up to a permutation of the labels is to assume that the separability condition holds (Pettit, 1990; Donoho and Stodden, 2003; Arora et al., 2012; Azar et al., 2001; Chen et al., 2022). The separability condition assumes that at least one observation belongs completely to each of the features. These observations that belong only to one feature act as “anchors”, ensuring that the allocation parameters are identifiable up to a permutation of the labels. Although the separability condition is conceptually simple and relatively easy to implement, it makes strong geometric assumptions on the data-generating process for mixed membership models with three or more features. Weaker geometric assumptions, known as the sufficiently scattered condition (Chen et al., 2022), that ensure an identifiable model in mixed membership models with 3 or more features are discussed in Chen et al. (2022). Although the geometric assumptions are relatively weak, implementing these constraints is nontrivial. When extending multivariate mixed membership models to functional data and introducing a covariate-dependent mean structure, ensuring an identifiable mean and covariance structure requires further assumptions.

Lemma 2.1

Consider a -feature covariate adjusted functional mixed membership model as specified in Equation 9. The parameters , , , , and are identifiable up to a permutation of the labels (i.e. label switching), for , , and , given the following assumptions:

-

1.

is full column rank with not in the column space of .

-

2.

The separability condition or the sufficiently scattered condition holds on the allocation matrix. Moreover, there exist at least observations , with allocation parameters such that the matrix is full column rank, where the row of is specified as .

-

3.

The sample paths are regularly sampled so that . Moreover, we will assume that the basis functions are B-splines with equidistant knots.

Lemma 2.1 states a set of sufficient conditions that lead to an identifiable mean and covariance structure up to a permutation of the labels. The proof of Lemma 2.1 can be found in Section 1 of the Supporting Materials. The first assumption is similar to the assumption needed in the linear regression setting to ensure identifiability; however, the intercept term is not included in the design matrix, as the parameters already account for the intercept. From the second assumption we can see that we assume geometric assumptions on the allocation parameters, provide a lower bound for the number of functional observations, and we assume that the matrix is full column rank. The matrix is constructed using the allocation parameters, and although the condition on may seem restrictive, it often holds in practice. We note that this condition may not hold if there are not enough unique allocation parameter values or if most of your allocation parameters lie on a lower-dimensional subspace of the unit -simplex. Although the individual parameters are not identifiable in our model, an eigen analysis can still be performed by constructing posterior draws of the covariance structure and calculating the eigenvalues and eigenfunctions of the posterior draws. Section 3 provides empirical evidence that the mean and covariance structure converge to the truth as more observations are accrued.

2.5 Relationship to Function-on-Scalar Regression

Function-on-scalar regression is a common method in FDA that allows the mean structure of a continuous stochastic process to be dependent on scalar covariates. In function-on-scalar regression, we often assume that the response is a GP, and that the covariates of interest are vector-valued. A comprehensive review of the broader area of functional regression can be found in Ramsay and Silverman (2005b) and Morris (2015). While there have been many advancements and generalizations (Krafty et al., 2008; Reiss et al., 2010; Goldsmith et al., 2015; Kowal and Bourgeois, 2020) of function-on-scalar regression since the initial papers of Faraway (1997) and Brumback and Rice (1998), the general form of function-on-scalar regression can be expressed as follows:

| (10) |

where is the response function evaluated at , is the functional coefficient representing the effect that the covariate () has on the mean structure, and is a mean-zero Gaussian process with covariance function . The function in Equation (10) represents the mean of the GP when all of the covariates, are set to zero. Unlike the traditional setting for multiple linear regression in finite-dimensional vector spaces, function-on-scalar regression requires the estimation of the infinite-dimensional functions and from a finite number of observed sample paths at a finite number of points ( for and ).

To make inference tractable, we assume that the data lie in the span of a finite set of basis functions, which will allow us to expand and as a finite sum of the basis functions. The set of basis functions can be specified using data-driven basis functions or by specifying the basis functions a-priori. If the basis functions are specified a-priori, common choices of basis functions are B-splines and wavelets due to their flexibility, as well as Fourier series for periodic functions. Alternatively, if the use of data-driven basis functions is desired, a common choice is to use the eigenfunctions of the covariance operator as basis functions. In order to estimate the eigenfunctions, functional principal component analysis (Shang, 2014) is often performed and the obtained estimates of the eigenfunctions are used. Functional principal component analysis faces a similar problem in that the objective is to estimate eigenfunctions using only a finite number of sample paths observed at a finite number of points. To solve this problem, Rice and Silverman (1991) proposes using a splines basis to estimate smooth eigenfunctions, while Yao et al. (2005) proposes using local linear smoothers to estimate the smooth eigenfunctions. Therefore, even using data-driven basis functions require smoothing assumptions, suggesting similar results between data-driven basis functions and a reasonable set of a-priori specified basis functions paired with a penalty to prevent overfitting.

Specifying the basis functions a-priori (), and letting be the observed sample paths at points (), we can simplify Equation (10) to get

| (11) |

where , , and are the set of basis function evaluated at the time points of interest. As specified in the previous sections, . Equation (11) shows that the function evaluated at the points can be represented by , and similarly the functional coefficients can be represented by . Therefore, we are left to estimate , , and the parameters associated with the covariance function of , denoted .

The covariance function represents the within-function covariance structure of the data. In the simplest case, we often assume that , which means that we only need to specify . In more complex models (Faraway, 1997; Krafty et al., 2008), one may make less restrictive assumptions and assume that the covariance , where is a low-dimensional approximation of a smooth covariance surface using a truncated eigendecomposition. Although functional regression usually assumes that the functions are independent, functional regression models have been proposed to model between-function variation, or cases where observations can be correlated (Morris and Carroll, 2006; Staicu et al., 2010).

Assuming a relatively general covariance structure as in Krafty et al. (2008), the function-on-scalar model assumes the following distributional assumptions on our sample paths:

| (12) |

From Equation (12) it is apparent that the proposed covariate adjusted mixed membership model specified in Equation (9) is closely related to function-on-scalar regression. The key difference between the two models is that the covariate adjusted mixed membership model does not assume a common mean and covariance structure across all observations conditionally on the covariates of interest. Instead, the covariate adjusted mixed membership model allows each observation to be modeled as a convex combination of underlying features. Each feature is allowed to have different mean and covariance structures, meaning that the covariates are not assumed to have the same affect on all observations. By allowing this type of heterogeneity in our model, we can conduct a more granular analysis and identify subgroups that interact differently with the covariates of interest. Alternatively, if there are subgroups of the population that interact differently with the covariates of interest, then the results from a function-on-scalar regression model will be confounded, as the effects will likely be averaged out in the analysis. The finite mixture of experts model and the mixture of regressions model offer a reasonably granular level of insight, permitting inference at the sub-population level. However, they do not provide the individual-level inference achievable with the proposed covariate adjusted functional mixed membership model.

3 Simulation Studies

In this section, we will discuss the results of two simulation studies. The first simulation study explores the empirical convergence properties of the mean, covariance, and allocation structures of the proposed covariate adjusted functional mixed membership model. Along with correctly specified models, this simulation study also studies the convergence properties of over-specified and under-specified models. The second simulation study explores the use of information criteria in choosing the number of features in a covariate adjusted functional mixed membership model.

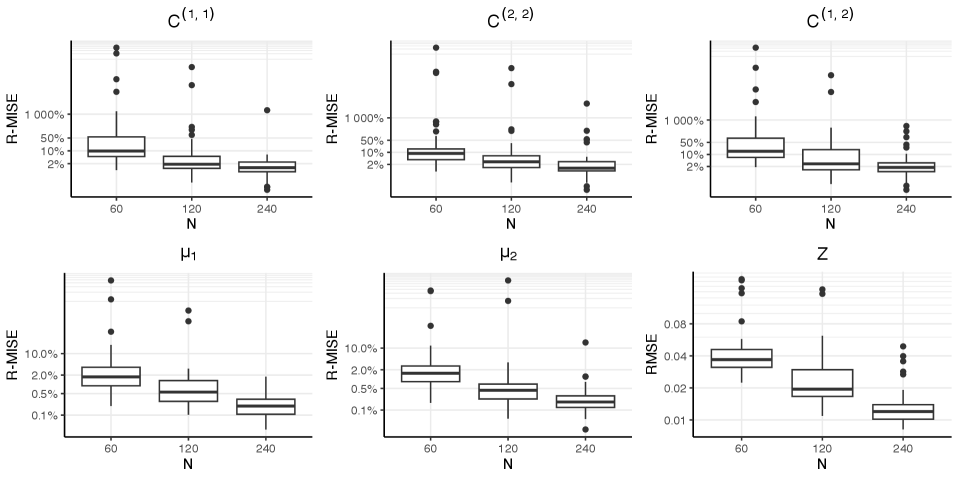

3.1 Simulation Study 1: Structure Recovery

In the first simulation study, we explore the empirical convergence properties of the proposed covariate adjusted functional mixed membership models. Specifically, we generate data from a covariate adjusted functional mixed membership model and see how well the proposed framework can recover the true mean, covariance, and allocation structures. To evaluate how well we can recover the mean, covariance, and cross-covariance functions, we calculate the relative mean integrated square error (R-MISE), which is defined as or in the case of a covariate adjusted model. In this simulation study, will be the posterior median obtained from our posterior samples. To measure how well we recover the allocation structure, , we calculated the root-mean-square error (RMSE). In addition to studying the empirical convergence properties of correctly specified models, we also included a scenario where we fit a covariate adjusted functional mixed membership model, when the generating truth had no covariate dependence. Conversely, we also studied the scenario where we fit a functional mixed membership model with no covariates, when the generating truth was generated from a covariate adjusted functional mixed membership model with one covariate.

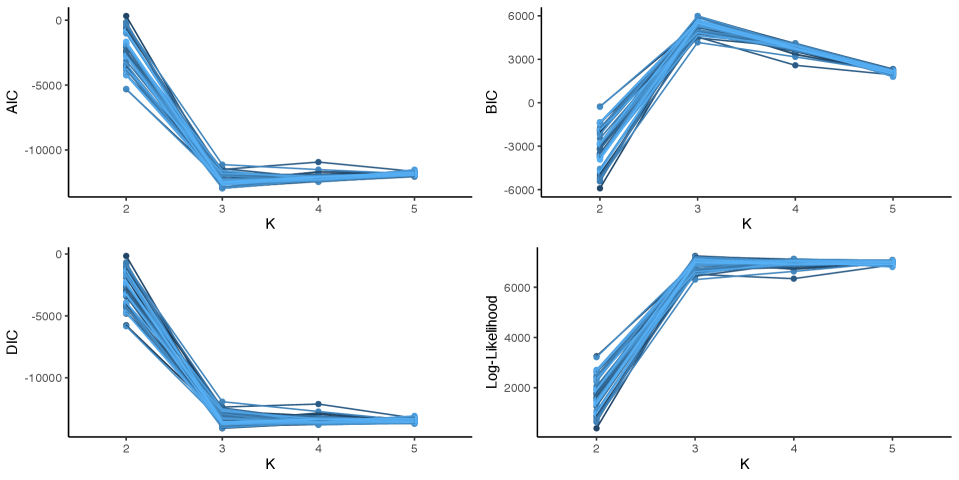

Figure 1 contains a visualization of the performance metrics from each of the five scenarios considered in this simulation study. We can see that when the model is specified correctly, it does a good job in recovering the mean structure, even with relatively few observations. On the other hand, a relatively large number of observations are needed to recover the covariance structure when we have a correctly specified model. This simulation study also shows that we pay a penalty in terms of statistical efficiency when we over-specify a model; however, the over-specified model still shows signs of convergence to the true mean, covariance, and allocation structures. Conversely, an under-specified model shows no signs of converging to the true mean and covariance structure as we get more observations. Additional details on how the simulation was conducted, as well as more detailed visualizations of the simulation results, can be found in Section 3.1 of the Supplementary Materials.

3.2 Simulation Study 2: Information Criteria

When considering the use of a mixed membership model to gain insight into the heterogeneity found in a dataset, a practitioner must specify the number of features to use in the mixed membership model. Correctly specifying the number of features is crucial, as an over-specification will lead to challenges in interpretability of the results, and an under-specification will lead to a model that is not flexible enough to model the heterogeneity in the model. Although information criteria such as BIC and heuristics such as the elbow-method have been shown to be informative in choosing the number of parameters in mixed membership models (Marco et al., 2024a, b), it is unclear whether these results extend to covariate adjusted models. In this case, we consider the performance of AIC (Akaike, 1974), BIC (Schwarz, 1978), DIC (Spiegelhalter et al., 2002; Celeux et al., 2006), and the elbow-method in choosing the number of features in a covariate adjusted functional mixed membership model with one covariate. The information criteria used in this section are specified in Section 3.2 of the Supplementary Materials. As specified, the optimal model should have the smallest AIC, the largest BIC, and the smallest DIC.

To evaluate the performance of the information criteria and the elbow method, 50 datasets were generated from a covariate adjusted functional mixed membership model with three features and one covariate. Each dataset was analyzed using four covariate adjusted functional mixed membership models; each with a single covariate and a varying number of features (). Figure 2 shows the performance of the three information criteria. Similarly to Marco et al. (2024a) and Marco et al. (2024b), we can see that BIC had the best performance, picking the three-feature model all 50 times. From the simulation study, we found that AIC and DIC tended to choose more complex models, with AIC and DIC choosing the correctly specified model 68% and 26% of the time, respectively. Looking at the log-likelihood plot, we can see that there is a distinct elbow at , meaning that using heuristic methods such as the elbow method tends to be informative in choosing the number of features in covariate adjusted functional mixed membership models. Thus, we recommend using BIC and the elbow-method in conjunction to choose the number of features, as even though BIC correctly identified the model every time in the simulation, there were times when the BIC between the three-feature model and the four-feature model were similar. Additional details on how this simulation study was conducted can be found in Section 3.2 of the Supplementary Materials.

4 A Case Study in EEG Imaging for Autism Spectrum Disorder

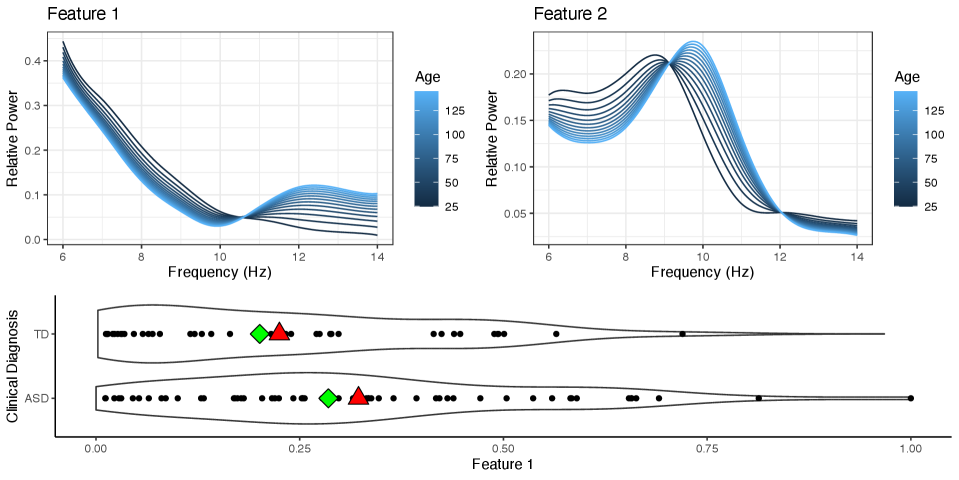

Autism spectrum disorder (ASD) is a developmental disorder characterized by social communication deficits and restrictive and/or repetitive behaviors (Edition, 2013). Although once more narrowly defined, autism is now seen as a spectrum, with some individuals having very mild symptoms, to others that require lifelong support (Lord et al., 2018). In this case study, we analyze electroencephalogram (EEG) data that were obtained in a resting-state EEG study conducted by Dickinson et al. (2018). The study consisted of 58 children who have been diagnosed with ASD between the ages of 2 and 12 years of age, and 39 age-matched typically developing (TD) children, or children who have never been diagnosed with ASD. The children were instructed to view bubbles on a monitor in a dark, sound-attenuated room for 2 minutes, while EEG recordings were taken. The EEG recordings were obtained using a 128-channel HydroCel Geodesic Sensory net, and then interpolated to match the international 10-20 system 25-channel montage. The data were filtered using a band pass of 0.1 to 100 Hz, and then transformed into the frequency domain using a fast Fourier transform. To obtain the relative power, the functions were scaled so that they integrate to 1. Lastly, the relative power was averaged across the 25 channels to obtain a measure of the average relative power. Visualizations of the functional data that characterize the average relative power in the alpha band of frequencies can be seen in Figure 3.

In this case study, we will specifically analyze the alpha band of frequencies (neural activity between 6Hz and 14Hz) and compare how alpha oscillations differ between children with ASD and TD children. The alpha band of frequencies has been shown to play a role in neural coordination and communication between distributed brain regions (Fries, 2005; Klimesch et al., 2007). Alpha oscillations are composed of periodic and aperiodic neural activity patterns that coexist in the EEG spectra. Neuroscientists are primarily interested in periodic signals, specifically in the location of a single prominent peak in the spectral density located in the alpha band of frequencies, called the peak alpha frequency (PAF). Peak alpha frequency has been shown to be a biomarker of neural development in typically developing children (Rodríguez-Martínez et al., 2017). Studies have shown that the alpha peak becomes more prominent and shifts to a higher frequency within the first years of life for TD children (Rodríguez-Martínez et al., 2017; Scheffler et al., 2019). Compared to TD children, the emergence of a distinct alpha peak and developmental shifts have been shown to be atypical in children with ASD (Dickinson et al., 2018; Scheffler et al., 2019; Marco et al., 2024a, b).

In Section 4.1, we analyze how age affects alpha oscillations by fitting a covariate adjusted functional mixed membership model with the log transformation of age as the only covariate. This model provides insight into how alpha frequencies change as children age, regardless of diagnostic group. Additionally, the use of a covariate adjusted functional mixed membership model allows us to directly compare the age-adjusted relative strength of the PAF biomarker between individuals in the study, including between diagnostic groups. This analysis is extended in Section 4.2 where an analysis on how developmental shifts differ based on diagnostic group. To conduct this analysis, a covariate adjusted functional mixed membership mode was fit with the log transformation of age, diagnostic group, and an interaction between the log transformation of age and diagnostic group as covariates. This analysis provides novel insight into the developmental shifts for children with ASD and TD children. Although these two models may be considered nested models, each model provides unique insight into the data.

4.1 Modeling Alpha Oscillations Conditionally on Age

In the first part of this case study, we are primarily interested in modeling the developmental changes in alpha frequencies. As stated in Haegens et al. (2014), shifts in PAF can confound the alpha power measures, demonstrating the need for a model that controls for the age of the child. In this subsection, we fit a covariate adjusted functional mixed membership model using the log transformation of age as the covariate. Using conditional predictive ordinates and comparing the pseudomarginal likelihoods, we found that a log transformation of age provided a better fitting model than using a model with age untransformed. Although this model does not use information on the diagnostic group, we are also interested in characterizing the differences in the observed alpha oscillations between individuals across diagnostic groups.

From Figure 4, we can see that the first feature consists mainly of aperiodic neural activity patterns, which are commonly referred to as a trend or pink noise. On the other hand, the second feature can be interpreted as a distinct alpha peak, which is considered periodic neural activity. For children that heavily load on the second feature, we can see that as they age, the alpha peak increases in magnitude and the PAF shifts to a higher frequency, which has been observed in many other studies (Haegens et al., 2014; Rodríguez-Martínez et al., 2017; Scheffler et al., 2019). From a clinical perspective, it is also valuable to compare children’s alpha power conditional on age, since we know that there are developmental changes in alpha oscillations as children age. From Figure 4, we can also see that on average children with ASD have less attenuated alpha peaks compared to their age-adjusted TD counterpart.

One key feature of this model is that it allows us to directly compare individuals in the TD group and ASD group on the same spectrum. However, to do this, we had to assume that the alpha oscillations of children with ASD and TD children can be represented as a continuous mixture of the same two features shown in Figure 4. Just as developmental shifts in PAF can confound the measures of alpha power (Haegens et al., 2014), the assumption that the alpha oscillations of children with ASD and TD children can be represented by the same two features can also confound the results found in this section if the assumption is shown to be incorrect. In Section 4.2, we relax this assumption and allow the functional features to differ according to the diagnostic group.

4.2 Modeling Alpha Oscillations Conditionally on Age and Diagnostic Group

Previous studies have shown that the emergence of alpha peaks and the developmental shifts in frequency are atypical in children with ASD (Dickinson et al., 2018; Scheffler et al., 2019; Marco et al., 2024a, b). Therefore, it may not be realistic to assume that the alpha oscillations of children with ASD and TD children can be represented by the same two features. In this section, we will fit a covariate adjusted functional mixed membership model using the log transformation of age, clinical diagnosis, and an interaction between the log transformation of age and clinical diagnosis as covariates of interest. By including the interaction between the log transformation of age and diagnostic group, we allow for differences in the developmental changes of alpha oscillations between diagnostic groups.

Compared to the model used in Section 4.1, this model is more flexible; it allows different features for each diagnostic group and different developmental trajectories for each diagnostic group. Although this model is more expressive, the comparison of individuals across diagnostic groups is no longer straightforward. The model in Section 4.1 did not use any diagnostic group information, which allowed us to easily compare TD children with ASD children as the distribution of the developmental trajectory depended only on the allocation parameters. On the other hand, comparing individuals across diagnostic groups is not as simple as looking at the inferred allocation parameters, as the underlying features are group-specific. Therefore, two individuals with the same allocation parameters that are in diagnostic groups will likely have completely different developmental trajectories. Moreover, in the model used in Section 4.1, we knew that at least one observation belonged to each feature, making the features interpretable as the extreme cases observed. However, for the more flexible model used in this subsection, there will not be at least one observation belonging to each feature for each diagnostic group. We can see that in the TD group, no individual has a loading of more than 50% on the first feature, meaning that the first TD-specific feature cannot be interpreted as the most extreme case observed in the TD group. Therefore, direct interpretation of the group-specific features is less useful, and instead we will focus on looking at the estimated trajectories of individuals conditional on empirical percentiles of the estimated allocation structure. Visualizations of the mean structure for the two features can be found in the Section 3.3 Supplementary Materials.

From Figure 5, we can see the estimated developmental trajectories of TD children and children with ASD, conditional on different empirical percentiles of the estimated allocation structure. Looking at the estimated developmental trajectories of children with a group-specific median allocation structure, we can see that both TD children and children diagnosed with ASD have a discernible alpha peak and that the expected PAF shifts to higher frequencies as the child develops. However, compared to TD children, ASD children have more of a trend, and the shift in PAF is not as large in magnitude. Similar differences between TD children and children diagnosed with ASD can be seen when we look at the percentile of observations that belong to the first group-specific feature. However, when looking at the group-specific percentile, we can see that children with ASD do not have a discernible alpha peak at 25 months old, and a clear alpha peak never really appears as they develop. Alternatively, TD children in the percentile still have a discernible alpha peak that becomes more prominent and shifts to higher frequencies as they develop. However, compared to TD individuals in the or percentile, there is more aperiodic signal ( trend) present in the alpha power of TD children in the percentile.

As discussed in Section 2.5, the proposed covariate adjusted mixed membership model can be thought of as a generalization of function-on-scalar regression. Figure 6 contains the estimated developmental trajectories obtained by fitting a function-on-scalar regression model using the package “refund” package in R (Goldsmith et al., 2016). The results of the function-on-scalar regression coincide with the estimated developmental trajectory of the alpha oscillations obtained from our covariate adjusted mixed membership model, conditional on the group-specific mean allocation (group-specific mean allocations are represented by triangles in the bottom panel of Figure 5). As discussed in Section 2.5, function-on-scalar regression assumes a common mean for all individuals, controlling for age and diagnostic group. This is a relatively strong assumption in this case, as ASD is relatively broadly defined; with diagnosed individuals having varying degrees of symptoms. Indeed, from the function-on-scalar results, we would only be able to make population-level inference; giving no insight into the heterogeneity of alpha oscillation developmental trajectories for children with ASD (Figure 5). Overall, the added flexibility of covariate adjusted mixed membership models allows scientists to have greater insight into the developmental changes of alpha oscillations. Technical details on how the models for Section 4.1 and Section 4.2 were fit can be found in Section 3.3 of the Supplementary Materials. Section 3.3 of the Supplementary Materials also discusses model comparison using conditional predictive ordinates (Pettit, 1990; Chen et al., 2012; Lewis et al., 2014) and pseudomarginal likelihood.

5 Discussion

In this manuscript, we extend the framework of the functional mixed membership model proposed by Marco et al. (2024a) to allow for covariate dependence. This work was primarily motivated by a neurodevelopmental EEG study on alpha oscillations, where alpha oscillations are known to change as children age. Although mixed membership models provided a novel way to quantify the emergence and presence of a developmental biomarker known as an alpha peak (Marco et al., 2024a, b), it has been shown that not accounting for developmental shifts in the alpha peak can confound measures of the peak alpha frequency (Haegens et al., 2014), leading to the need for a covariate adjusted functional mixed membership model. In Section 2, we derive the covariate adjusted functional mixed membership models and compare the framework with common covariate-dependent clustering frameworks, such as the mix of experts model and the mixture of regressions model. After specifying the proposed Bayesian model, we provide a set of sufficient conditions in Lemma 2.1 to ensure that the proposed covariate adjusted mixed membership model has identifiable mean, covariance, and allocation structures. Section 3 contains a simulation study exploring the empirical convergence properties of our model. We conclude by revisiting the neurodevelopmental case study on alpha oscillations in Section 4, where we fit two covariate adjusted functional mixed membership models to gain novel information on the developmental differences of alpha oscillations between children with ASD and TD children. In Section 4.1, we fit a covariate adjusted functional mixed membership model adjusting for age, which allowed us to directly compare children with ASD to TD children using the same spectrum while controlling for age. In Section 4.1, we fit a covariate adjusted functional mixed membership model adjusting for age and diagnostic group, which no longer allowed us to directly compare children with ASD to TD children; however, we were able to gain novel insight into the developmental trajectory of alpha oscillations for children with ASD.

Although the proposed model was introduced for an arbitrary number of features, and Lemma 2.1 holds for an arbitrary number of features, there are computational challenges to working with models with more than three features. As discussed in Section 2.4, we typically have to assume the separability condition or the sufficiently scattered condition. In a two-feature model, these two assumptions are equivalent and can be enforced by post-processing the Markov chain constructed through MCMC. When considering a model with three or more features, the separability condition becomes a relatively strong geometric assumption on the generating process, making the sufficiently scattered condition more theoretically appealing. For a three-feature model, we can similarly post-process the Markov chain utilizing the work of Pârvu and Gilbert (2016) to arrive at posterior samples that satisfy the sufficiently scattered assumption. However, ensuring that the sufficiently scattered assumption holds for models with four or more features is still an open problem. Similarly, more work is needed to derive a sampler that obeys the geometric constraints set forth by the sufficiently scattered condition.

As discussed in Section 2.5, covariate adjusted functional mixed membership models can be thought of as a more granular version of function-on-scalar regression. Although functional-on-scalar models can typically handle a large number of covariates, with regularized function-on-scalar models (Fan and Reimherr, 2017; Kowal and Bourgeois, 2020) becoming more popular, the model proposed in this manuscript can handle only a few covariates. Although fitting a covariate adjusted functional mixed membership model with more covariates may be computationally feasible, from the simulation study in Section 3.1, we can see that we need significantly more observations as we add covariates and lose a lot of statistical efficiency when over-specifying the model. Although one may be tempted to use information criteria to perform variable selection, we urge caution, as the addition of additional covariates can greatly impact the interpretation of the allocation parameters, as seen in Section 4.2. Thus, the incorporation of a covariate in the model should be decided based on the modeling goals, prior knowledge of how the covariates affect the observed functions, and computational/statistical efficiency considerations.

As observed in unadjusted mixed membership models (Marco et al., 2024a, b), the posterior distribution often has multiple modes, leading to poor posterior exploration using traditional sampling methods. Thus, we use an algorithm similar to Algorithm 1 described in the supplement of Marco et al. (2024a) to pick a good starting point for our Markov chain. In addition to finding good initial starting points, we also outline a tempered-transition sampling scheme (Pritchard et al., 2000; Behrens et al., 2012) in Section 2.2 of the Supplementary Materials, which allows us to traverse areas of low posterior probability. An R package for the proposed covariate adjusted functional mixed membership model is available for download at https://github.com/ndmarco/BayesFMMM.

6 Funding Acknowledgments

The authors gratefully acknowledge funding from the NIH/NIMH R01MH122428-01 (DS,DT).

7 Disclosure

The authors report that there are no competing interests to declare.

References

- Müller et al. [2011] Peter Müller, Fernando Quintana, and Gary L Rosner. A product partition model with regression on covariates. Journal of Computational and Graphical Statistics, 20(1):260–278, 2011.

- Qin and Self [2006] Li-Xuan Qin and Steven G Self. The clustering of regression models method with applications in gene expression data. Biometrics, 62(2):526–533, 2006.

- Hyun et al. [2023] Sangwon Hyun, Mattias Rolf Cape, Francois Ribalet, and Jacob Bien. Modeling cell populations measured by flow cytometry with covariates using sparse mixture of regressions. The Annals of Applied Statistics, 17(1):357–377, 2023.

- Guha and Guhaniyogi [2022] Sharmistha Guha and Rajarshi Guhaniyogi. Bayesian covariate-dependent clustering of undirected networks with brain-imaging data. 2022.

- Binkiewicz et al. [2017] Norbert Binkiewicz, Joshua T Vogelstein, and Karl Rohe. Covariate-assisted spectral clustering. Biometrika, 104(2):361–377, 2017.

- Page and Quintana [2016] Garritt L Page and Fernando A Quintana. Spatial product partition models. 2016.

- McLachlan et al. [2019] Geoffrey J McLachlan, Sharon X Lee, and Suren I Rathnayake. Finite mixture models. Annual review of statistics and its application, 6:355–378, 2019.

- Faria and Soromenho [2010] Susana Faria and Gilda Soromenho. Fitting mixtures of linear regressions. Journal of Statistical Computation and Simulation, 80(2):201–225, 2010.

- Grün et al. [2007] Bettina Grün, Friedrich Leisch, et al. Applications of finite mixtures of regression models. URL: http://cran. r-project. org/web/packages/flexmix/vignettes/regression-examples. pdf, 2007.

- Khalili and Chen [2007] Abbas Khalili and Jiahua Chen. Variable selection in finite mixture of regression models. Journal of the american Statistical association, 102(479):1025–1038, 2007.

- Devijver [2015] Emilie Devijver. Finite mixture regression: a sparse variable selection by model selection for clustering. 2015.

- Jordan and Jacobs [1994] Michael I Jordan and Robert A Jacobs. Hierarchical mixtures of experts and the em algorithm. Neural computation, 6(2):181–214, 1994.

- Bishop and Svenskn [2002] Christopher M Bishop and Markus Svenskn. Bayesian hierarchical mixtures of experts. In Proceedings of the Nineteenth conference on Uncertainty in Artificial Intelligence, pages 57–64, 2002.

- Kopf et al. [2021] Andreas Kopf, Vincent Fortuin, Vignesh Ram Somnath, and Manfred Claassen. Mixture-of-experts variational autoencoder for clustering and generating from similarity-based representations on single cell data. PLoS computational biology, 17(6):e1009086, 2021.

- Tsai et al. [2020] Tsung Wei Tsai, Chongxuan Li, and Jun Zhu. Mice: Mixture of contrastive experts for unsupervised image clustering. In International conference on learning representations, 2020.

- Park and Dunson [2010] Ju-Hyun Park and David B Dunson. Bayesian generalized product partition model. Statistica Sinica, pages 1203–1226, 2010.

- Marco et al. [2024a] Nicholas Marco, Damla Şentürk, Shafali Jeste, Charlotte DiStefano, Abigail Dickinson, and Donatello Telesca. Functional mixed membership models. Journal of Computational and Graphical Statistics, pages 1–11, 2024a.

- Erosheva et al. [2004] E Erosheva, S Fienberg, and J Lafferty. Mixed membership models of scientific publications. PNAS, 2004.

- Heller et al. [2008] Katherine A Heller, Sinead Williamson, and Zoubin Ghahramani. Statistical models for partial membership. In Proceedings of the 25th International Conference on Machine learning, pages 392–399, 2008.

- Gruhl and Erosheva [2014] Jonathan Gruhl and Elena Erosheva. A Tale of Two (Types of) Memberships: Comparing Mixed and Partial Membership with a Continuous Data Example, chapter 2. CRC Press, 2014.

- Marco et al. [2024b] Nicholas Marco, Damla Şentürk, Shafali Jeste, Charlotte C DiStefano, Abigail Dickinson, and Donatello Telesca. Flexible regularized estimation in high-dimensional mixed membership models. Computational Statistics & Data Analysis, page 107931, 2024b.

- Yao et al. [2011] Fang Yao, Yuejiao Fu, and Thomas CM Lee. Functional mixture regression. Biostatistics, 12(2):341–353, 2011.

- Gaffney and Smyth [1999] Scott Gaffney and Padhraic Smyth. Trajectory clustering with mixtures of regression models. In Proceedings of the fifth ACM SIGKDD international conference on Knowledge discovery and data mining, pages 63–72, 1999.

- Haegens et al. [2014] Saskia Haegens, Helena Cousijn, George Wallis, Paul J Harrison, and Anna C Nobre. Inter-and intra-individual variability in alpha peak frequency. Neuroimage, 92:46–55, 2014.

- Scheffler et al. [2019] Aaron W Scheffler, Donatello Telesca, Catherine A Sugar, Shafali Jeste, Abigail Dickinson, Charlotte DiStefano, and Damla Şentürk. Covariate-adjusted region-referenced generalized functional linear model for eeg data. Statistics in medicine, 38(30):5587–5602, 2019.

- Rodríguez-Martínez et al. [2017] EI Rodríguez-Martínez, FJ Ruiz-Martínez, CI Barriga Paulino, and Carlos M Gómez. Frequency shift in topography of spontaneous brain rhythms from childhood to adulthood. Cognitive neurodynamics, 11(1):23–33, 2017.

- Wang et al. [2016] Jane-Ling Wang, Jeng-Min Chiou, and Hans-Georg Müller. Functional data analysis. Annual Review of Statistics and its application, 3(1):257–295, 2016.

- James and Sugar [2003] Gareth M James and Catherine A Sugar. Clustering for sparsely sampled functional data. Journal of the American Statistical Association, 98(462):397–408, 2003.

- Chiou and Li [2007] Jeng-Min Chiou and Pai-Ling Li. Functional clustering and identifying substructures of longitudinal data. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 69(4):679–699, 2007.

- Jacques and Preda [2014] Julien Jacques and Cristian Preda. Model-based clustering for multivariate functional data. Computational Statistics & Data Analysis, 71:92–106, 2014.

- Ramsay and Silverman [2005a] JO Ramsay and BW Silverman. Principal components analysis for functional data. Functional data analysis, pages 147–172, 2005a.

- Happ and Greven [2018] Clara Happ and Sonja Greven. Multivariate functional principal component analysis for data observed on different (dimensional) domains. Journal of the American Statistical Association, 113(522):649–659, 2018.

- Faraway [1997] Julian J Faraway. Regression analysis for a functional response. Technometrics, 39(3):254–261, 1997.

- Brumback and Rice [1998] Babette A Brumback and John A Rice. Smoothing spline models for the analysis of nested and crossed samples of curves. Journal of the American Statistical Association, 93(443):961–976, 1998.

- Bhattacharya and Dunson [2011] Anirban Bhattacharya and David B Dunson. Sparse bayesian infinite factor models. Biometrika, pages 291–306, 2011.

- Lang and Brezger [2004] Stefan Lang and Andreas Brezger. Bayesian p-splines. Journal of computational and graphical statistics, 13(1):183–212, 2004.

- Chen et al. [2022] Yinyin Chen, Shishuang He, Yun Yang, and Feng Liang. Learning topic models: Identifiability and finite-sample analysis. Journal of the American Statistical Association, pages 1–16, 2022.

- Stephens [2000] Matthew Stephens. Dealing with label switching in mixture models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 62(4):795–809, 2000.

- Pettit [1990] LI Pettit. The conditional predictive ordinate for the normal distribution. Journal of the Royal Statistical Society: Series B (Methodological), 52(1):175–184, 1990.

- Donoho and Stodden [2003] David Donoho and Victoria Stodden. When does non-negative matrix factorization give a correct decomposition into parts? Advances in neural information processing systems, 16, 2003.

- Arora et al. [2012] Sanjeev Arora, Rong Ge, and Ankur Moitra. Learning topic models–going beyond svd. In 2012 IEEE 53rd annual symposium on foundations of computer science, pages 1–10. IEEE, 2012.

- Azar et al. [2001] Yossi Azar, Amos Fiat, Anna Karlin, Frank McSherry, and Jared Saia. Spectral analysis of data. In Proceedings of the thirty-third annual ACM symposium on Theory of computing, pages 619–626, 2001.

- Ramsay and Silverman [2005b] J. Ramsay and B.W. Silverman. Functional Data Analysis. Springer Series in Statistics. Springer, 2005b. ISBN 9780387400808. URL https://books.google.com/books?id=mU3dop5wY_4C.

- Morris [2015] Jeffrey S Morris. Functional regression. Annual Review of Statistics and Its Application, 2:321–359, 2015.

- Krafty et al. [2008] Robert T Krafty, Phyllis A Gimotty, David Holtz, George Coukos, and Wensheng Guo. Varying coefficient model with unknown within-subject covariance for analysis of tumor growth curves. Biometrics, 64(4):1023–1031, 2008.

- Reiss et al. [2010] Philip T Reiss, Lei Huang, and Maarten Mennes. Fast function-on-scalar regression with penalized basis expansions. The international journal of biostatistics, 6(1), 2010.

- Goldsmith et al. [2015] Jeff Goldsmith, Vadim Zipunnikov, and Jennifer Schrack. Generalized multilevel function-on-scalar regression and principal component analysis. Biometrics, 71(2):344–353, 2015.

- Kowal and Bourgeois [2020] Daniel R Kowal and Daniel C Bourgeois. Bayesian function-on-scalars regression for high-dimensional data. Journal of Computational and Graphical Statistics, 29(3):629–638, 2020.

- Shang [2014] Han Lin Shang. A survey of functional principal component analysis. AStA Advances in Statistical Analysis, 98:121–142, 2014.

- Rice and Silverman [1991] John A Rice and Bernard W Silverman. Estimating the mean and covariance structure nonparametrically when the data are curves. Journal of the Royal Statistical Society: Series B (Methodological), 53(1):233–243, 1991.

- Yao et al. [2005] Fang Yao, Hans-Georg Müller, and Jane-Ling Wang. Functional data analysis for sparse longitudinal data. Journal of the American statistical association, 100(470):577–590, 2005.

- Morris and Carroll [2006] Jeffrey S Morris and Raymond J Carroll. Wavelet-based functional mixed models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 68(2):179–199, 2006.

- Staicu et al. [2010] Ana-Maria Staicu, Ciprian M Crainiceanu, and Raymond J Carroll. Fast methods for spatially correlated multilevel functional data. Biostatistics, 11(2):177–194, 2010.

- Akaike [1974] Hirotugu Akaike. A new look at the statistical model identification. IEEE transactions on automatic control, 19(6):716–723, 1974.

- Schwarz [1978] Gideon Schwarz. Estimating the dimension of a model. The annals of statistics, pages 461–464, 1978.

- Spiegelhalter et al. [2002] David J Spiegelhalter, Nicola G Best, Bradley P Carlin, and Angelika Van Der Linde. Bayesian measures of model complexity and fit. Journal of the royal statistical society: Series B (statistical methodology), 64(4):583–639, 2002.

- Celeux et al. [2006] Gilles Celeux, Florence Forbes, Christian P Robert, and D Mike Titterington. Deviance information criteria for missing data models. Bayesian analysis, 1(4):651–673, 2006.

- Edition [2013] Fifth Edition. Diagnostic and statistical manual of mental disorders. Am Psychiatric Assoc, 21(21):591–643, 2013.

- Lord et al. [2018] Catherine Lord, Mayada Elsabbagh, Gillian Baird, and Jeremy Veenstra-Vanderweele. Autism spectrum disorder. The Lancet, 392(10146):508–520, 2018.

- Dickinson et al. [2018] Abigail Dickinson, Charlotte DiStefano, Damla Senturk, and Shafali Spurling Jeste. Peak alpha frequency is a neural marker of cognitive function across the autism spectrum. European Journal of Neuroscience, 47(6):643–651, 2018.

- Fries [2005] Pascal Fries. A mechanism for cognitive dynamics: neuronal communication through neuronal coherence. Trends in cognitive sciences, 9(10):474–480, 2005.

- Klimesch et al. [2007] Wolfgang Klimesch, Paul Sauseng, and Simon Hanslmayr. Eeg alpha oscillations: the inhibition–timing hypothesis. Brain research reviews, 53(1):63–88, 2007.

- Goldsmith et al. [2016] Jeff Goldsmith, Fabian Scheipl, Lei Huang, Julia Wrobel, J Gellar, J Harezlak, MW McLean, B Swihart, L Xiao, C Crainiceanu, et al. Refund: Regression with functional data. R package version 0.1-16, 572, 2016.

- Chen et al. [2012] Ming-Hui Chen, Qi-Man Shao, and Joseph G Ibrahim. Monte Carlo methods in Bayesian computation. Springer Science & Business Media, 2012.

- Lewis et al. [2014] Paul O Lewis, Wangang Xie, Ming-Hui Chen, Yu Fan, and Lynn Kuo. Posterior predictive bayesian phylogenetic model selection. Systematic biology, 63(3):309–321, 2014.

- Pârvu and Gilbert [2016] Ovidiu Pârvu and David Gilbert. Implementation of linear minimum area enclosing triangle algorithm: Application note. Computational and Applied Mathematics, 35:423–438, 2016.

- Fan and Reimherr [2017] Zhaohu Fan and Matthew Reimherr. High-dimensional adaptive function-on-scalar regression. Econometrics and statistics, 1:167–183, 2017.

- Pritchard et al. [2000] Jonathan K Pritchard, Matthew Stephens, and Peter Donnelly. Inference of population structure using multilocus genotype data. Genetics, 155(2):945–959, 2000.

- Behrens et al. [2012] Gundula Behrens, Nial Friel, and Merrilee Hurn. Tuning tempered transitions. Statistics and computing, 22(1):65–78, 2012.

- Roeder and Wasserman [1997] Kathryn Roeder and Larry Wasserman. Practical bayesian density estimation using mixtures of normals. Journal of the American Statistical Association, 92(439):894–902, 1997.

Supplemental Materials: Covariate Adjusted Functional Mixed Membership Models

1 Proof of Lemma 2.1

We will start by defining identifiability and defining some of the notation used in this section. Let , where . We will say that the parameters are unidentifiable if there exists at least one such that for all sets of observations following Assumptions (1)-(3). Otherwise, the parameters are called identifiable. In this case, is the likelihood specified in Equation 12 in the main text.

From equation 12 in the main text, we have that

|

|

(1) |

where

and

Assume that for all sets of observations that follow Assumptions (1)-(3) . Thus we would like to prove that must necessarily be true. Since is written as a quadratic form in and is full rank, we see that the following must necessarily be true:

-

1.

,

-

2.

,

for . By (1), we have that

Letting and ( is the design matrix with the row as ), we have

| (2) |

since by Assumption (3). Since is full column rank from Assumption (1), we have that

| (3) |

It is important to note that if Assumption (1) does not hold and is not full column rank, we could add any vector in the nullspace of to any row of () and equation 2 would still hold.

We can rewrite Equation 3 in matrix form such that

| (4) |

where and is the matrix of allocation parameters with as the row of . From this we can directly apply the results of Chen et al. [2022], to show that and up to a permutation of the labels. Specifically, if the seperability condition holds, then Proposition 1 of Chen et al. [2022] shows that and up to a permutation of the labels. If the sufficiently scattered condition holds, then Theorem 2 of Chen et al. [2022] shows that and up to a permutation of the labels. Therefore, assuming that Assumptions (1) - (3) hold, we have , , and up to the permutation of the labels, for and .

From (2), we have that

Suppose that , then we have that

by Assumption (3) () . Writing such that

we can see that rank, which implies that , leading to a contradiction. Therefore, we have and up to a permutation of the labels. Assuming no permutation of the labels (), we have that

since for all . Therefore, we have the following system of equations

for . Equality can be proved if we have linearly independent equations in our system of equations. Thus, if the coefficient matrix has rank , then we have linearly independent equations in our system of equations. We can see that Assumption (2) gives us that the coefficient matrix, denoted , has full column rank, meaning that we have linearly independent equations in our system of equations. Therefore, we have that for , up to a permutation of the labels. Therefore, we have that for and , the parameters , , , , and are identifiable up to a permutation of the labels given Assumptions (1)-(3).

2 Computation

2.1 Posterior Distributions

In this subsection, we will specify the posterior distributions specifically for the functional covariate adjusted mixed membership model proposed in the main manuscript. We will first start with the parameters, for and . Let . By letting

and

we have that

The posterior distribution of , for , is

The posterior distribution for , for and , is

The posterior distribution for () is not a commonly known distribution, however we have that

Since this is not a known kernel of a distribution, we will have to use Metropolis-Hastings algorithm. Consider the proposal distribution (Truncated Normal) for some small . Thus the probability of accepting any step is

Similarly for (), we have

We will use a similar proposal distribution, such that for some small . Thus the probability of accepting any step is

The posterior distribution for the parameters are not a commonly known distribution, so we will use the Metropolis-Hastings algorithm. We know that

We will use for some large as the proposal distribution. Thus the probability of accepting a proposed step is

Similarly, a Gibbs update is not available for an update of the parameters. We have that

Letting out proposal distribution be such that , for some large , we have that our probability of accepting any proposal is

The posterior distribution of is also not a commonly known distribution, so we will use the Metropolis-Hastings algorithm to sample from the posterior distribution. We have that

Using a proposal distribution such that (Truncated Normal), we are left with the probability of accepting a proposed state as

Let be the following tridiagonal matrix:

Thus, letting

and

we have that

Let denote the column of the matrix . Thus, letting

and

we have that

Thus we can see that we can draw samples from the posterior of the parameters controlling the mean structure using a Gibbs sampler. Similarly, we can use a Gibbs sampler to draw samples from the posterior distribution of and . We have that the posterior distributions are

and

for and . The parameter can be updated by using a Gibbs update. If we let

then we have

Lastly, we can update the parameters, for and , using a Gibbs update. If we let

and

then we have that

2.2 Tempered Transitions

One of the main computational problems we face in these flexible, unsupervised models is a multi-modal posterior distribution. In order to help the Markov chain move across modes, or traverse areas of low posterior probability, we can utilize tempered transitions.

In this paper, we will be following the works of Behrens et al. [2012] and Pritchard et al. [2000] and only temper the likelihood. The target distribution that we want to temper is usually assumed to be written as

where controls how much the distribution is tempered (). In this setting, we will assume that the hyperparameters and are user specified, and will depend on the complexity of the model. For more complex or larger models, we will need to set relatively high. In this implementation, we assume the parameters to follow a geometric scheme, but in more complex models, may need to be relatively small.

We can rewrite our likelihood for the functional covariate adjusted model to fit the above form:

Let be the set of parameters generated from the model using the tempered likelihood associated with . The tempered transition algorithm can be summarized by the following steps:

-

1.

Start with initial state .

-

2.

Transition from to using the tempered likelihood associated with .

-

3.

Continue in this manner until we transition from to using the tempered likelihood associated with .

-

4.

Transition from to using the tempered likelihood associated with .

-

5.

Continue in this manner until we transition from to using .

-

6.

Accept transition from to with probability

in the functional case, or

in the multivariate case.

Since we only temper the likelihood, many of the posterior distributions derived in section 2.1 can be utilized. Thus the following posteriors are the only ones that change due to the tempering of the likelihood. Starting with the parameters, we have

and

we have that

As in the untempered case, we have that the posterior distribution parameters under the tempered likelihood is not a commonly known distribution. Therefore, we will use the Metropolis-Hastings algorithm. We have that

We will use for some large as the proposal distribution. Thus the probability of accepting a proposed step is

Letting

and

we have that

Let denote the column of the matrix . Thus, letting

and

we have that

If we let

then we have

Lastly, we can update the parameters, for and , using a Gibbs update. If we let

and

then we have that

3 Simulation Study and Case Studies

3.1 Simulation Study 1

This subsection contains detailed information on how the simulation study in Section 3 of the main text was conducted. This simulation study primarily looked at how well we could recover the true mean structure, the covariance structure, and the allocation structure. In this simulation study, we simulated datasets from 3 scenarios at 3 different sample sizes for each scenario. Once the datasets were generated, we fit a variety of covariate adjusted functional mixed membership models, as well as unadjusted functional mixed membership models, on the datasets to see how well we could recover the mean, covariance, and allocation structures.

The first scenario we considered was a covariate adjusted functional mixed membership model with 2 true covariates. To generate all of the datasets, we assumed that the observations were in the span of B-spline basis with 8 basis functions. For this scenario, we generated 3 datasets with sample sizes of 60, 120, and 240 functional observations, all observed on a grid of 25 time points. The data was generated by first generating the model parameters (as discussed below) and then generating data from the likelihood specified in Equation 11 of the main text. The model parameters for this dataset were generated as follows:

The parameters were drawn according to the following distributions:

where , , . The parameters were drawn from a standard normal distribution. The parameters were drawn from a mixture of Dirichlet distributions. Roughly 30% of the parameters were drawn from a Dirichlet distribution with and . Another roughly 30% of the parameters were drawn from a Dirichlet distribution where and . The rest of the parameters were drawn from a Dirichlet distribution with . The covariates, , were drawn from a standard normal distribution. Models in this scenario were run for 500,000 MCMC iterations.

For the second scenario, we considered data drawn from a covariate adjusted functional mixed membership model with one covariate. We considered three sample sizes of 50, 100, and 200 functional samples observed on a grid of 25 time points. The model parameters for this dataset were generated as follows:

The parameters were drawn according to the following distributions:

where , , . The parameters were drawn from a standard normal distribution. The parameters were drawn from a mixture of Dirichlet distributions. Roughly 30% of the parameters were drawn from a Dirichlet distribution with and . Another roughly 30% of the parameters were drawn from a Dirichlet distribution where and . The rest of the parameters were drawn from a Dirichlet distribution with . The covariates, , were drawn from a normal distribution with variance of nine and mean of zero. Models in this scenario were run for 500,000 MCMC iterations.

For the third scenario, we generated data from an unadjusted functional mixed membership model. We considered three sample sizes of 40, 80, and 160 functional samples observed on a grid of 25 time points. The model parameters for this dataset were generated as follows:

The parameters were drawn according to the following distributions: