Counting Phases and Faces Using

Bayesian Thermodynamic Integration

Abstract

We introduce a new approach to reconstruction of the thermodynamic functions and phase boundaries in two-parametric statistical mechanics systems. Our method is based on expressing the Fisher metric in terms of the posterior distributions over a space of external parameters and approximating the metric field by a Hessian of a convex function. We use the proposed approach to accurately reconstruct the partition functions and phase diagrams of the Ising model and the exactly solvable non-equilibrium TASEP without any a priori knowledge about microscopic rules of the models. We also demonstrate how our approach can be used to visualize the latent space of StyleGAN models and evaluate the variability of the generated images.

∗ e-mail: [email protected]

While machine learning methods are effective at analyzing statistical physics systems their application has mainly been limited to determining the boundaries of phase transitions and extracting the learned order parameters. However, in many cases it remains unclear how to relate these learned order parameters to known physical quantities that are commonly used to determine phase transitions.

We address here a general problem of reconstructing both phase boundaries and thermodynamic functions of a given statistical physics model. A model in this context is thought to be a stochastic mapping from a low-dimensional space of external parameters t to a multi-dimensional space of microstates . We don’t assume any prior knowledge of the number of phases and their location in the space of external parameters. The only label which is available to us is the values of external parameters for which a given microstate was generated.

Our contributions. The contributions of this paper are as follows:

-

•

We propose a new approach which we call Bayesian Thermodynamic Integration to approximate the Fisher information metric for the distributions over a high-dimensional microstate space depending on several external parameters. The approach is based on approximating the probability distribution with a function from the exponential family

where the normalization factor (partition function in the terminology of statistical physics) is a convex function of parameters. For the statistical physics problems this approach gives access to the partition function and other thermodynamic functions without a priori knowledge of the system Hamiltonian;

-

•

We apply the suggested approach to several two-parametric systems and demonstrate that it allows a satisfactory reproduction of the thermodynamic functions and phase transition lines. In the case of a two-parameter model of statistical mechanics based on the StyleGAN v3 generator, we observe signs of a second-order phase transition corresponding to the sharp changes of identity of a generated human face.

I Bayesian Thermodynamic Integration

The main problem of interest to statistical physics is the study of phase transitions. They correspond to sharp changes in typical microstates with a gradual change in external parameters. We use Jensen-Shannon divergence to measure the distance between probability distributions corresponding to different values of external parameters

| (1) |

where JSD is Jensen-Shannon divergence which is a proper metric on the manifold of probability distributions parameterized by t and represents entropy of the corresponding distribution. For small variations of t

| (2) |

where G(t) is a Riemannian metric on the manifold of probability distributions called Fisher information metric amari2000methods .

Fisher information metric is defined as the second order Taylor expansion term of KL divergence between close probability distributions

| (3) |

At phase transition points the Fisher metric is supposed to have singularities in the limit of large system size (i.e., for dimensionality of the space of approaching infinity). Apply Bayes’ formula to to find posterior distribution on the space of external parameters given observed microstate

| (4) |

Thus, if prior distribution is uniform then

| (5) |

This implies that, if one knows the posterior distribution (for example, via some sort of Monte-Carlo approximation), then the Fisher information metric can be expressed as

| (6) |

I.1 Approximation of the Posterior

Posterior distribution works as a sort of “probabilistic thermometer”: it looks at a microstate and gives a probabilistic forecast of external parameters (for example temperature) at which this microstate was generated.

By definition of the problem, it is possible to sample from , giving pairs

sampled from joint distribution, where is chosen to be uniform in some compact domain. These samples can be used to fit parametric family of distributions to approximate posterior . Here are the parameters of the family of distribution, which could be obtained by maximizing likelihood of true external parameters given over all our samples or equivalently by minimizing negative log-likelihood

| (7) |

| (8) |

Minimization of the log-likelihood (7) is equivalent to minimization of the KL divergence between the distribution of target labels and the predicted distribution

| (9) |

where is a microstate of the physical model sampled from the conditional distribution . If labels are considered hard, the target distribution is . In order to avoid divergences, it is convenient to use a smoothened distribution

| (10) |

instead. Here is the normalizing constant, it is obtained by integrating over the domain of interest in the space of macroscopic parameters. The standard deviation is defined to be equal to the mean distance from a point in the training dataset to its K-th nearest neighbor.

The resulting parametric distribution can be used as input of formula (6) in order to get an approximation of the Fisher metric. However, this approach has two important drawbacks.

First, in order to obtain a decent approximation the parametric family should be flexible enough, implying a large number of parameters . However, if , i.e. if the number of parameters is much larger than the number of examples in the training set, the problem of overfitting arises.

Second, the Fisher metric depends on the derivative of the approximated function, and derivatives of approximated functions are typically much noisier than the functions themselves. As a result, without additional efforts to smooth the posterior distribution, approximation (6) turns out to be too noisy to be useful in practice.

In order to resolve the first problem we artificially augment the training set. Instead of using a single microstate as an input for the prediction of external parameters t we use a randomly shuffled tuple of microstates generated at external parameters close to t. That is to say, start with a set of training pairs , for each choose values with the smallest distance , form a randomly shuffled tuple out of the corresponding microstates, and use the set as a training set, so that instead of we are now training the model to predict . This trick allows to increase the size of the training set by a factor without generating any new microstates. The drawback is that the resolution with respect to t decreases with growing . However, this decrease is much slower, then the factorial growth of the size of the training set.

The resulting target distribution is obtained by averaging target distributions (10) over the nearest neighbours

| (11) |

In order to resolve the second issue outlined above we approximate the posterior distribution with a distribution from the exponential family parametrized by convex function, as described in the next section.

I.2 Free Energy Approximation via Convex Neural Network

We know that Fisher metric is a positive-definite matrix . The main idea of our approach is to approximate this positive-definite matrix by the Hessian of a convex function. Note that this means that if , i.e. if there is a single scalar external macroscopic parameter, then this approximation is exact.

More precisely, we approximate the posterior distribution as a function of external parameters by a distribution from the so-called exponential family of distributions. It is instructive to introduce this family in a following way. Suppose for each value of t we fix average values of some macroscopic observables . We are interested in a probability distribution , which maximizes entropy

| (12) |

while respecting this constraint. Maximizing the functional

| (13) |

where t, and are Lagrange multipliers, gives the exponential family distributions of the form

| (14) |

where the normalization factor

| (15) |

is known as “the partition function” in equilibrium statistical mechanics.

For distributions from exponential family Fisher metric reduces to Hessian of the logarithm of the partition function

| (16) |

while its first derivatives are the mean values of the function and are known as “thermodynamic forces”

| (17) |

Fisher metric tensor is known to be positive-definite, and thus the function is convex.

Now, in order to solve the problem of approximating the derivatives of the posterior distribution , we want to find a function , depending on some set of parameters which is, on the one hand, convex, and on the other hand, such that the Jensen-Shannon divergence between the approximate posterior and the posterior distribution generated from the exponential family with partition function is minimized.

If our prior is uniform then exponential-family posterior distribution takes the form

| (18) |

Function is unknown, but since we use a tuple of microstates to estimate a single posterior, we replace by its expectation (17),

To find parameters we minimize Jensen-Shannon divergence between posterior predicted using maximum likelihood and posterior with replaced by the gradient of the partition function

| (19) |

| (20) |

| (21) |

The resulting approximation for the Fisher metric is

We summarize the procedure described in this section by two algorithms outlined below, the first one generates the training dataset (1), the second one finds the approximations for the partition function and the Fisher metric (2).

II Numerical experiments

II.1 Ising model

In this section we test whether our approach is capable of reconstructing thermodynamic functions for an equilibrium statistical mechanics model in which the distribution of microstates belongs to the exponential family. We consider a 2D Ising model ising1925beitrag , which is an archetypal model of phase transitions in statistical mechanics. A microstate of this model is a set of spin variables defined on each vertex of a square lattice of size . At equilibrium probability distribution over the space of microstates is

| (22) |

where and are external parameters called magnetic field and temperature, respectively, the first sum is over all edges of the lattice, and is a normalization parameter known as a partition function:

| (23) |

This model is exactly solvable for onsager1944crystal ; kac1952combinatorial ; baxter1978399th . In particular, it is known that at a transition occurs between the high-temperature disordered state, where spin variables are on average equal zero, and the low-temperature ordered state in which average value of spin becomes distinct from zero. For general values of the likelihood function (22) is intractable.

Our dataset consists of samples of spin configurations on the square lattice of size with periodic boundary conditions. We consider the parameter ranges similar to the ranges used in (Walker, 2019). Point is sampled uniformly from this rectangle, and then a sample spin configuration is created for these values of temperature and external field by starting with a random initial condition and equilibrating is with Glauber (one-spin Metropolis) dynamics for iterations. We represent spin configuration as a single-channel image with values and . When constructing target probability distributions we choose and set the discretization of the square to be a uniform grid with grid cells.

Image-to-image network with U2-Net architecture qin2020u2 is used to approximate posterior . The network takes as input a bundle of images concatenated across channel dimension and outputs a categorical distribution representing density values in discrete grid points. For simplicity we choose the discretization to be of the same spatial dimensions as the input image. For all our numerical experiments the training was performed on a single Nvidia-HGX compute node with 8 A100 GPUs. We trained U2-Net using Adam optimizer with learning rate and batch size of for gradient update steps. In all our experiments the training set consists of 80% of samples and the other 20% are used for testing.

Bundle augmentation. To explore how test loss depends on the bundle size , which determines the number of microstates used to evaluate a single posterior distribution, we performed four different training runs with . The results are shown in 2. For , we observed overfitting after about the 12000th training iteration. However, even with , overfitting is significantly reduced and continues to decrease at large values of . The downside of this approach is that the effective grid resolution of the posterior distribution decreases with growing as

where is the dataset size. In what follows, we set . Also, since we are using a fully convolutional architecture, increasing the number of input channels by times has almost no effect on the overall GPU memory consumption during training.

Free energy approximation. We use Input Convex Neural Network amos2017input architecture to approximate the free energy. The fully connected ICNN has 5 layers with hidden dimension 512. We train network using Adam optimizer with learning rate 0.001.

Reconstructed free energy is shown in 3. The model was able to correctly determine 1st order phase transition line and .

II.2 Totally asymmetric simple exclusion process

Totally asymmetric simple exclusion process (TASEP) is a simple model of 1-dimensional transport phenomena. A microscopic configuration is a set of particles on a 1d lattice respecting the condition that there can be no more than one particle in each lattice cell. Each particle can move to the site to the right of it with probability per time provided that it is empty (we put without loss of generality). When complemented with boundary conditions, the TASEP attains a stationary state as time goes to infinity.

One particular case is open boundary conditions, when a particle is added with probability per time to the leftmost site provided that it is empty and removed with probability per time from the rightmost site provided that it is occupied. For this boundary condition the probability distribution is known exactly dehp ; evans_review ; krapivsky_book and it once again takes the form

| (24) |

where microstate is a concrete sequence of filled and empty cells, and is some function of and external parameters . Importantly, however, the function , which is known exactly for all system sizes and all values of does not take the form (14). TASEP with free boundaries exhibits a rich phase behavior: for large system sizes three distinct phases - the low-density phase, the high-density phase and the maximal current phase are possible depending on the values of , and the asymptotic ”free energy” which is defined as

| (25) |

and coincides with average flow per unit time, equals

| (26) |

where the first, second and third cases correspond to maximal current, high density and low density cases, respectively.

We generate a dataset of stationary TASEP configurations on a 1d lattice with sites. The rates of adding (removing) particles at the left(right) boundary are sampled from the uniform prior distribution over a square . To reach the stationary state we start from a random initial condition and perform move attempts, which is known to be enough to achieve the stationary state except for the narrow vicinity of the transition line between high-density and low-density phases (in this case the stationary state has a slowly diffusing front of a shock wave in it, one needs of order move attempts to form the shock but of order move attempts for it to diffusively explore all possible positions of the shock).

We reshape 1d lattice with sites into an image of size using raster scan ordering. To construct target probability distributions we set and define the discretization as a uniform grid on with grid cells.

The comparison of the reconstructed free energy and the exact analytical solution is shown in 5.

II.3 Image space of the StyleGAN

Consider a synthetic two-parametric statistical mechanics model where microstates are sampled using the StyleGAN v3 generator karras2021alias . Let and be vectors from the latent space of dimension 512. Consider a 2d section of the latent space parameterized by and as follows

| (27) |

where is a normally distributed random vector with zero mean and small enough standard deviation (we it to be 1/5 of the standard deviation of the prior standard normal distribution). External parameters and lie in the rectangle , so that interpolates between three base latent vectors at the corners , , of the rectangle. By putting latent code into the generator of StyleGAN we can sample images as functions of two external parameters.

| (28) |

For FFHQ dataset we generate dataset of human faces using StyleGAN at resolution of and resize it to the resolution before feeding it to the U2Net model which approximates posterior distribution on the space of external parameters. Experiment setup was similar to the 2D Ising model.

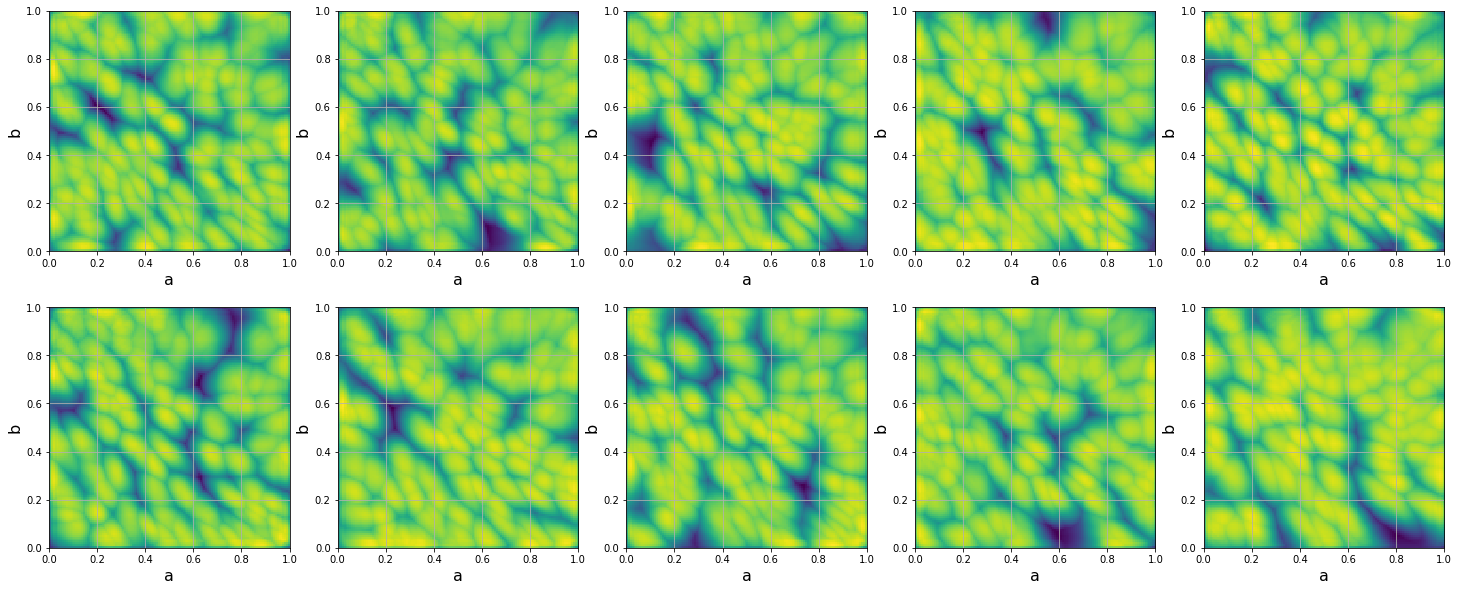

Reconstructed Fisher metric is shown in 7. Unexpectedly, we found two diagonal lines in the components of the Fisher metric, which, from the point of view of statistical mechanics, are signs of second-order phase transitions. If we compare it with the faces presented in 6 and 13 we can observe that phase transition lines correspond to the sharp identity change of generated faces. In the next section we investigate whether this behaviour is determined by the mapping network part of StyleGAN or it depends only on the synthesis network.

II.4 Intermediate latent space of the StyleGAN

Given a latent vector the StyleGAN v3 generates images in two steps. First, the mapping network is applied to generate a vector (code) in the intermediate latent space . This latent code in case of the StyleGAN v3 model pretrained on FFHQ dataset is a matrix with shape karras2021alias ; karras2020analyzing . Second, this vector is used by the weight demodulation layers of the synthesis network to generate the image itself.

A lot of attention was devoted to the improvement of the synthesis network. The effect of the mapping network on the generated images attracted less attention. To study which network, mapping or synthesis, is responsible for the typical shape of the Fisher metric field of the StyleGAN we introduce another synthetic two-parametric statistical mechanics model where microstates are generated by the mapping network

| (29) |

Similarly to the previous section we generate intermediate latent code of the shape for the same latent vectors and resize it into an image of size .

As it can be seen from the 9 and 7 Fisher metric fields for image space and for the space almost coincide which means that mostly the mapping network defines variability of the generated faces and the synthesis network doesn’t change the distribution of output images. In other words the synthesis network performs almost a bijective transformation of vectors from the intermediate latent space .

III Previous work

Machine learning methods have been actively used in the study of models of classical and quantum statistical physics van2017learning ; carrasquilla2017machine ; rem2019identifying ; wang2021unsupervised ; canabarro2019unveiling . The main problem of concern was to determine boundaries of phase transitions and to extract learned ”order parameters” which can be used to distinguish one phase from another. Since equilibrium distribution of microstates of a statistical mechanics system belongs to the exponential family (usually with intractable normalization constant) the problem of determining ”order parameters” is equivalent to the search of the sufficient statistics jiang2017learning ; chen2020neural . In walker2020deep it was observed that principal components of the mean and standard deviation vectors learned by a variational autoencoder trained on the configurations of a 2D Ising model were highly correlated with the known physical quantities, indicating that variational autoencoder implicitly extract sufficient statistics from the data.

In contrast to chen2020neural and walker2020deep where neural networks were used to extract sufficient statistics from the raw microstates our approach is based on approximating the partition function directly and expressing mean values of the sufficient statistics through the derivatives of the logarithm of the partition function.

Our approach to the visualization of the StyleGAN latent space in terms of the Fisher metric could be thought as an unsupervised alternative to the approach used in tran2018dist to study the GANs mode collapse problem, where the authors applied a classification network trained on MNIST to classify the generated images and construct a ”phase diagrams” of predicted classes in the space of two-dimensional latent parameters. Similar approach was used in carrasquilla2017machine to extract phase diagram of the Ising model by training network to classify low-temperature and high-temperature phases and drawing prediction on the space of external parameters. In our method, the singularities of the Fisher metric automatically highlight the boundaries of second-order phase transitions without an additional classification network or any other a priori knowledge about the number of phases and their location on the parameter space. However, we assume that all integrals over the space of external parameters are tractable, which is not the case for the general -dimensional hidden space of StyleGAN. Since -dimensional integrals cannot be computed efficiently using domain discretization, other approaches are needed to parameterize the predicted posterior distributions on the parameter space, such as tensor-train density estimation method novikov2021tensor , which admits a tractable partition function.

IV Discussion

We propose a new approach to the reconstruction of the thermodynamic functions: partition function, free energy and their derivatives as functions of the external parameters, and apply it to several two-parametric statistical mechanics models. Our method is based on expressing the Fisher metric on the manifold of probability distributions over a high-dimensional space of microstates through the posterior distributions over a space of external parameters. Log-partition function is obtained by approximating the metric field by a Hessian of a convex function parametrized by an Input Convex Neural Network (ICNN).

The proposed approach is used to reconstruct the partition functions and phase diagrams of the equilibrium Ising model and the exactly solvable non-equilibrium TASEP without any a priori knowledge about microscopic rules of the models. The only information we need is some algorithm allowing to sample microstates for given values of the external parameters.

We also demonstrate how our approach can be used to visualize the latent space of a StyleGAN v3 model and evaluate the variability of the generated images. The singularities of the Fisher metric in the two-dimensional section of the latent space are signs of a second-order phase transition, corresponding to a sharp change in the identity of the generated faces with a gradual change in the latent code. It is shown that the phase diagram of the generated images is mostly determined by the mapping network, so the synthesis network does not change the position of the phase boundaries. Potentially, this means that it is possible to extract semantically meaningful features by studying only the mapping network, since the most significant changes occur in directions orthogonal to the boundaries of the phase transition. In general, the reasons for the existence of the observed phase boundaries in StyleGAN models remain largely unclear, and this phenomenon requires further research.

Acknowledgements

The authors acknowledge the use of Zhores HPC zacharov2019zhores for obtaining the results presented in this paper. We are grateful to P. Krapivsky, V. Palyulin, A. Iakovlev and D. Egorov for interesting discussions. This work is supported by the RSF Grant No. 21-73-00176.

References

- (1) Amari, S.-I., Nagaoka, H. Methods of information geometry, American Mathematical Soc., Providence, RI, 2000.

- (2) Ising, E. Beitrag zur theorie des ferromagnetismus. Zeitschrift für Physik, 31, 253 (1925).

- (3) Onsager, L. Crystal statistics. I. a two-dimensional model with an order-disorder transition. Physical Review, 65, 117 (1944).

- (4) Kac, M. and Ward, J. C. A combinatorial solution of the two-dimensional Ising model. Physical Review, 88, 1332 (1952).

- (5) Baxter, R. J. and Enting, I. G. 399th solution of the Ising model. J. Phys. A: Math. and Gen., 11, 2463 (1978).

- (6) Qin, X., Zhang, Z., Huang, C., Dehghan, M., Zaiane, O. R., and Jagersand, M. U2-net: Going deeper with nested u-structure for salient object detection. Pattern Recognition, 106, 107404 (2020).

- (7) Amos, B., Xu, L., and Kolter, J. Z. Input convex neural networks. In International Conference on Machine Learning, p. 146. (2017).

- (8) Derrida, B., Evans, M. R., Hakim, V., and Pasquier, V. Exact solution of a 1d asymmetric exclusion model using a matrix formulation. J. Phys. A: Math. and Gen., 26, 1493 (1993).

- (9) Blythe, R. A. and Evans, M. R. Nonequilibrium steady states of matrix-product form: a solver’s guide. J. Phys. A: Math. and Gen., 40, R333 (2007).

- (10) Krapivsky, P. L., Redner, S., and Ben-Naim, E. A kinetic view of statistical physics. Cambridge University Press, 2010.

- (11) Karras, T., Aittala, M., Laine, S., Härkönen, E., Hellsten, J., Lehtinen, J., and Aila, T. Alias-free generative adversarial networks. Advances in Neural Information Processing Systems, 34 (2021).

- (12) Karras, T., Laine, S., Aittala, M., Hellsten, J., Lehtinen, J., and Aila, T. Analyzing and improving the image quality of stylegan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8110 (2020).

- (13) Van Nieuwenburg, E. P., Liu, Y.-H., and Huber, S. D. Learning phase transitions by confusion. Nature Physics, 13, 435 (2017).

- (14) Carrasquilla, J. and Melko, R. G. Machine learning phases of matter. Nature Physics, 13, 431 (2017).

- (15) Rem, B. S., Käming, N., Tarnowski, M., Asteria, L., Fläschner, N., Becker, C., Sengstock, K., and Weitenberg, C. Identifying quantum phase transitions using artificial neural networks on experimental data. Nature Physics, 15, 917 (2019).

- (16) Wang, J., Zhang, W., Hua, T., and Wei, T.-C. Unsupervised learning of topological phase transitions using the Calinski-Harabaz index. Physical Review Research, 3, 013074 (2021).

- (17) Canabarro, A., Fanchini, F. F., Malvezzi, A. L., Pereira, R., and Chaves, R. Unveiling phase transitions with machine learning. Physical Review B, 100, 045129 (2019).

- (18) Jiang, B., Wu, T.-Y., Zheng, C., and Wong, W. H. Learning summary statistic for approximate bayesian computation via deep neural network. Statistica Sinica, p. 1595 (2017).

- (19) Chen, Y., Zhang, D., Gutmann, M., Courville, A., and Zhu, Z. Neural approximate sufficient statistics for implicit models, arXiv:2010.10079.

- (20) Walker, N., Tam, K.-M., and Jarrell, M. Deep learning on the 2-dimensional Ising model to extract the crossover region with a variational autoencoder. Scientific reports, 10, 1 (2020).

- (21) Tran, N.-T., Bui, T.-A., and Cheung, N.-M. Dist-gan: An improved GAN using distance constraints. In Proceedings of the European Conference on Computer Vision (ECCV), p. 370 (2018).

- (22) Novikov, G. S., Panov, M. E., and Oseledets, I. V. Tensor-train density estimation. In Uncertainty in Artificial Intelligence, pp. 1321, PMLR, 2021.

- (23) Zacharov, I., Arslanov, R., Gunin, M., Stefonishin, D., Bykov, A., Pavlov, S., Panarin, O., Maliutin, A., Rykovanov, S., and Fedorov, M. “Zhores”—petaflops supercomputer for data-driven modeling, machine learning and artificial intelligence installed in Skolkovo Institute of Science and Technology. Open Engineering, 9, 512 (2019).

Appendix A Learned posterior distributions for 2D Ising model

Appendix B StyleGAN v3 linear interpolation between three base faces

Appendix C Reconstructed Fisher metrics

Appendix D StyleGAN v3 latent space visualization

D.1 Density-based visualization of the posterior distribution on the space of macroscopic parameters

D.2 Log-density-based visualization of the posterior distribution on the space of macroscopic parameters