Counterfactual Explanations via Latent Space Projection and Interpolation

Abstract

Counterfactual explanations represent the minimal change to a data sample that alters its predicted classification, typically from an unfavorable initial class to a desired target class. Counterfactuals help answer questions such as "what needs to change for this application to get accepted for a loan?". A number of recently proposed approaches to counterfactual generation give varying definitions of "plausible" counterfactuals and methods to generate them. However, many of these methods are computationally intensive and provide unconvincing explanations. Here we introduce SharpShooter, a method for binary classification that starts by creating a projected version of the input that classifies as the target class. Counterfactual candidates are then generated in latent space on the interpolation line between the input and its projection. We then demonstrate that our framework translates core characteristics of a sample to its counterfactual through the use of learned representations. Furthermore, we show that SharpShooter is competitive across common quality metrics on tabular and image datasets while being three orders of magnitude faster than two comparable methods and excels at measures of realism, making it well-suited for high velocity machine learning applications which require timely explanations.

1 Introduction

Machine learning models, leveraging ever larger datasets, have had notable recent successes in domains like healthcare, finance and industry. These models exhibit well-known interpretability challenges. As a result, researchers have developed a variety of techniques to explain the model’s behavior by providing feature importances for each data sample. Some well known methods that provide post-hoc local explanations include those that observe deviations relative to a reference input (DeepLift Shrikumar et al. [2017] and Integrated Gradients) Sundararajan et al. [2017], those that perturb the inputs and measure the effects (LIME) Ribeiro et al. [2016], and those that take a game theoretic approach (SHAP) Lundberg and Lee [2017]. While each has its particular benefits and observed drawbacks they share a common goal: given a prediction, estimate the effect of each feature on that prediction. In a binary classification setting, these approaches seek to answer a similar question. For a model with two classes A and B and an input sample x, they attempt to answer the question "what evidence did the model rely on to make its prediction of class A for input x?" Answering this question, in this format, can be useful for an audience that is familiar with machine learning.

For other audiences, it may be useful to answer a slightly modified question "why didn’t the model choose class B for this input?" and to get to that answer one might ask "what would have had to be different about x for it to result in B?" The exploration of outcomes in alternative but very similar worlds is known as counterfactual analysis. Here the alternative world is one in which the model had predicted something different. Given a trained model, the only way for the model to predict something different is for the inputs to change. The counterfactual explanation is in the form of which features of the data have to change by what amount to alter the predicted class. There are many benefits to this form of explanation. Counterfactual reasoning is a common logical approach for humans and therefore is easy to interpret Fernandez et al. [2020].

However, counterfactual explanations are not without their limitations. Depending on the properties of the data, the classifier and the counterfactual method, it is possible to generate an out-of-sample counterfactual. Out-of-sample counterfactuals can result in the explanations not being feasible (i.e. unlikely to be attained since it is not seen in the training data). An example of this is adversarial samples Goodfellow et al. [2015] which resemble the original class but have changed in imperceptible ways to fool the classifier. We note that for our purpose, valid counterfactual explanations are not adversarial in nature; they are a consequence of making meaningful changes to an input. In addition to challenges of remaining in-sample, counterfactuals are susceptible to a curse of dimensionality. High dimensional input spaces can produce high dimensional explanations with limited utility for those seeking to interpret the model (i.e they may not be sparse) Braun et al. [1956]. Additionally, searching over the high dimensional space of possible counterfactuals is computationally costly.

The majority of the current methods focus on providing explanations to people impacted by the decisions made by these models. Our work focuses on providing explanations to a neglected audience - the intermediaries ultimately responsible for their use, situated between model developers and the individuals that are subjected to the models decisions. We focus on filling this gap with SharpShooter. It is essential to provide those audiences with methods to guide building, understanding, and implementing models. We emphasize their use as explanations for the model a necessary step before trying to afford recourse.

We require that a counterfactual explanation be high quality, feasible (i.e. in sample), sparse, and computationally efficient to generate. To achieve these properties we propose SharpShooter a method for generating counterfactual explanations using latent representations. We demonstrate this approach on three datasets: MNIST Lecun et al. [1998] (for its visual appeal) and two tabular datasets: the UCI credit dataset Yeh and hui Lien [2009] (important categoricals), and Lending Club George [2018] (less important categoricals).

Our contributions are: (i) a model agnostic framework for finding actionable and realizable counterfactual explanations that is scalable in data with low computational cost (ii) a novel visualization of the decision boundary and the trajectories of the counterfactual search (iii) a comparison of methods across image and tabular datasets

2 Background & Previous Work

This work sits at the intersection of generative modeling and explainable artificial intelligence (XAI). We will detail some relevant work in the subsections below.

Generative modeling

For SharpShooter, crafting counterfactuals requires creating new samples from a learned data distribution. Generative models, which attempt to learn are well suited for the task. Broadly speaking these approaches fall into two categories generative adversarial networks (GANs) Goodfellow et al. [2014] and normalizing flows Kobyzev et al. [2020], Rezende and Mohamed [2015]. Besides when used for improving the latent capacity of VAEs, both GANs and normalizing flows focus largely on generation (or , and less on the latent space of the hidden variables themselves. For instance, GANs learn to generate synthetic samples from noise while normalizing flows use a series of transforms and inverse transforms to learn relationships between hidden variables and the input.

To gain greater control over the latent space we use variational autoencoders (VAE) Kingma and Welling [2013]. Variational autoencoders have a similar encoder-decoder structure to autoencoders, however VAEs additionally learn a posterior distribution over latent codes through the use of variational inference Blei et al. [2017]. In practice VAEs are learned through maximizing a lower bound on frequently known as the evidence lower bound (ELBO) Doersch [2021]. This formulation as a lower bound helps decompose the objective function into two parts: a reconstruction component related to the decoding network and a Kullback–Leibler divergence () term that measures the asymmetric distance from the latent codes to the standard normal distribution of the prior in the standard VAE. The normalization of latent space in VAEs through the divergence helps provide structure over the latent codes . In SharpShooter, we use the -VAE to encourage continuous latent code spaces Higgins et al. [2016].

Explainable artificial intelligence

Much of the work on local explanations for machine learned models has focused on local attributions, per sample feature importances and their aggregation Shrikumar et al. [2017], Ribeiro et al. [2016], Lundberg and Lee [2017], Datta et al. [2016], Ibrahim et al. [2019]. While useful for model developers, feature importances are less helpful in other contexts. The output and processes used to create these explanations are often difficult to understand for those not well versed with the background such as end-users.

A second branch of explainability is focused on counterfactual explanations; explanations that represent plausible changes for a user or observation to change their predicted classification. Counterfactual explanations are useful for multiple audiences, with most of the work centering on explanations for the people subject to a disadvantageous decision. Wachter et al Wachter et al. [2017] popularized the notion of using counterfactuals as way to provide recourse to data subjects in response to the EU General Data Protection Regulation (“GDPR”). They leverage the principle of "the closest possible world" in motivating their work. The notions of recourse Ustun et al. [2019] and fairness Joshi et al. [2019] are still major themes of research. This leaves a gap of providing counterfactual explanations for the audience that facilitates, mitigates and deliberates before a model gets deployed.

A good survey of available methods is available in Verma et al Verma et al. [2020]. Here we will frame much of that work through the lens of our use case, and highlight selected relevant work. We broadly separate the literature into two relevant branches, methods that involve input feature perturbations and those that use perturbations of hidden variables of generative or probabilistic models. We include comparisons to a method from each branch in our experiments in our experiments in Section 4.

Input feature perturbations

One large branch of the literature creates counterfactuals by perturbing the input feature space. For instance, early work Lash et al. [2017] under the name of inverse classification maintained sparsity by partitioning the features into immutable and mutable features, and imposing budgetary constraints on allowable changes to the mutable features. Likewise Laugel et al Laugel et al. [2018] advocated a sampling approach with their growing spheres method to traverse the input space where Gomez et al Gomez et al. [2020] submitted a heuristic search method supported with visualisations to the FICO data challenge FICO [2018]. Their considerations of data distribution and feature importance, along with counterfactual explanations are in line with our current work.

Dhurandhar et al Dhurandhar et al. [2018] utilize gradient descent in input space to find contrastive explanations, which decompose into pertinent positives and pertinent negatives. They included an autoencoder loss to keep the explanations in sample. Van Looveren et al Looveren and Klaise [2019] accelerate previous gradient descent methods with the introduction of prototypes, guiding the gradient descent towards the average value of the target class as determined by averaging the training sets representation in the latent space. Russell Russell [2019] improves on the stability of the optimization problem posed in Wachter et al. [2017] and extends beyond considering only categorical features Ustun et al. [2019] in posing a mixed integer programming formulation. Where Poyiadzi et al Poyiadzi et al. [2020] create counterfactuals by forming a network of feasible paths following high density regions of input space. GRACE Le et al. [2020] was designed for neural networks on tabular data that combines the concepts of contrastive explanations with interventions by performing constrained gradient descent adding an additional loss that is a measure of information gain to keep the resulting explanations sparse.

Latent space perturbations

While intuitive in idea, input feature perturbation methods, without regularization, can generate counterfactuals unconvincing to the human eye that resemble traditional adversarial attacks Szegedy et al. [2013]. To address this shortcoming, latent space perturbations methods utilize the machinery of generative and probabilistic models to insure counterfactuals have high probability under the data distribution .

For instance ExplainGAN Samangouei et al. [2018], a method for finding counterfactual explanations for images, jointly trains multiple autoencoders using the signal from the classifier and discriminators to inform the learned representations. Their approach is focused on deep learning and computer vision tasks, while our approach is domain and model agnostic. Pawelczyk et al Pawelczyk et al. [2020] focus on tabular datasets by perform growing sphere perturbation in the latent space of a conditional variational autoencoder to generate counterfactuals. Where Balasubramanian et al Balasubramanian et al. [2020] and Joshi et al Joshi et al. [2019] directly perform gradient descent in the latent space of a variational autoencoder with various regularization terms. While other work focuses on topics of causality and counterfactuals within the context of generative models Mahajan et al. [2020].

Both latent space and input space methods differ substantially in the types of models and the datasets they can be used for, as well as the amount of model access they require (see Verma et al Verma et al. [2020] for a full list). By contrast SharpShooter is a model and data agnostic (although our primary focus is on tabular data).

3 SharpShooter

Our method relies on having access to the dataset and a previously trained classifier that the user wants to explain with counterfactual explanations. We will use the dataset to learn two distinct autoencoders with the algorithm flow shown in Figure 1.

Target variational autoencoder

The starting point of the SharpShooter algorithm is the target variational autoencoder (TVAE). We train the TVAE on the subset of the data that is labeled as the preferred target class. A sample from the base class () is passed through the TVAE to give a reconstruction . This process creates a projection of the base observation to the target class.

This raises an interesting question: how does an autoencoder make sense of data from a class it has never seen before? If the model has only ever seen the target class, how does it reconstruct a base class sample? Does it place these latent codes in an area of very low data distribution and the sample becomes unrecognizable as the target class? or does the model generate valid reconstructions?

We find that with the use of the proper prior, the TVAE will correctly produce an in-sample projection. While too strong of a prior will collapse the latent distribution, a prior with wider variance prevents the creation of viable counterfactuals. This weighting is closely determined by the coefficient . A higher biases the model away from purely optimizing reconstruction loss and ensures that codes still have a roughly normally distributed shape. This term ensures that even points with low mass in have codes close to the prior distribution .

The limited capacity of amortized inference models (such as a VAE) to both reconstruct and perform inference is known as the amortization gap Cremer et al. [2018]. In prior contexts, the amortization gap has been seen as an obstacle to overcome, but the SharpShooter algorithm takes advantage of the lossy gap in inference when translating bases to target counterfactuals. If reconstruction and latent code inference becomes too direct then predictions on the unseen class stop classifying as the target class. We show this trade-off and investigate it in the appendix and in Figures 11-17.

Unified variational autoencoder

We train the unified variational autoencoder (UVAE) on both classes. The encoder of the UVAE is used to embed both a base class sample, , and its target class projection, , in a common learned representation. We then use linear interpolation to sample along the line between a base sample and its TVAE-generated projection in UVAE space. We use the UVAE to estimate the joint data distribution and its latent space so that we can ensure counterfactuals both lie on the data manifold, and that they are close to their original sample in latent distance. While a powerful method that seems to succeed in our experiments on MNIST and the Lending Club datasets, there are additional difficulties building non-degenerate -VAEs on the highly imbalanced UCI Credit dataset with its higher tabular dimensionality.

3.1 Algorithm

Algorithm 1 shows pseudo code as a companion to Figure 1 which is used to generate the counterfactuals in Section 4.

The dataset is composed where we can split into two classes where has and has under the classifier . For training we separate the dataset according to the class and train the target VAE and the unified VAE . We denote their encoders and , and their decoders and respectively.

We first pass a base sample through the TVAE to obtain the sample’s target projection . Next, we take both and and pass them through the encoder of the UVAE to obtain and respectively. Once we have both "endpoints" in joint latent space, an encoded counterfactual candidate, , is generated using linear interpolation with in . The candidate counterfactual is decoded and the classifier assesses its fitness. The counterfactual is then accepted if it both crosses the decision boundary and is within a user specified tolerance which is the desired maximum distance from for the generated counterfactual’s classification score. For the experiments in Section 4, the decision boundary is set to be .

The line search is then executed by sampling a finite number of . An alternative formulation of this search can also be done using a one-dimensional gradient descent which is demonstrated in Algorithm 2 in the appendix. Gradient descent can be advantageous over a simple line search depending on the user criteria (tighter tolerance can be hard to hit with sampling) and the use case (the presence of categoricals can make the probabilities along the line either discontinuous and/or non-monotonic).

3.2 Visualization of the latent space, and decision boundary

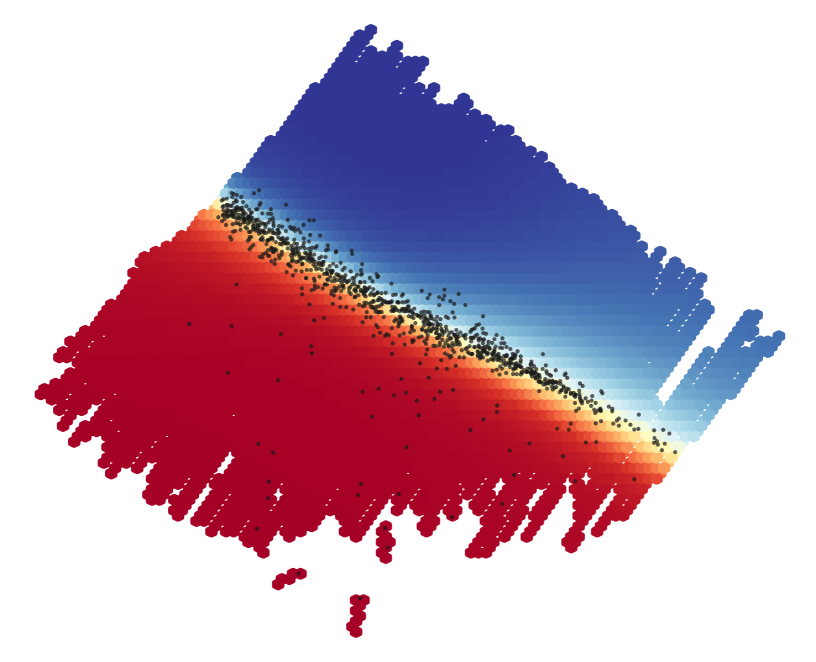

We visualize the decision boundary in Figure 2 using the low dimensional projections of the latent codes in Unified VAE space for the base class , the target class , and the generated counterfactuals . The colors denote prediction score (with blue denoting the base class) and the black dots represent the final SharpShooter counterfactuals.

The visualization of the decision boundary in Figure 2 was generated by sampling many points along the candidate counterfactual line . The interpolated points are then decoded by the UVAE and passed to the classifier to obtain a classification score: i.e. . Plotting is then done using a weighted hexbin over the UVAE space to plot in two dimensions. Figure 7 shows the underlying codes for all classes and their projections, and Figure 8 visualizes the candidate counterfactual lines pairing with their corresponding with more accompanying description found in Appendix A.2.

Figure 2 offers valuable insight into both the topological structure of the classifier’s decision boundary and the Unified VAE’s latent space. In the case of the Lending Club dataset, we see two clearly defined regions of classification scores. Without labels the UVAE is managing to create an approximately linear separation between the two classes with a highly nonlinear transformation mapping from code to covariate space. Even in Figure 2(a), where we only plot the first two PCA components of a 20-dimensional latent space, we see a boundary between the two classes with large regions of classifier probability close to 0 or 1. While the boundary for MNIST is fuzzier than in Figure 2(b), this effect is largely due to greater dimensionality of MNIST’s latent code space than in the UVAE for Lending Club. With a few notable exceptions, the two classes are separable by a single hyperplane. These results indicate an exciting correspondence between the classifier and the UVAE, even though the two models have disparate objective functions and differ in their architectures.

Sampling along the interpolation line from a base to its transformed counterfactual provides an attractive path to visualize the qualities of the classifier to provide simplified views of an abstract space. By comparison, performing a similar exercise, but instead sampling an evenly spaced cloud of points in space will place too much weight on low-probability points. Likewise, as we are travelling along the same trajectory that the algorithm itself traverses when selecting the chosen counterfactual this visualization method can provide further intuition into the workings of the SharpShooter process.

4 Results

We compare SharpShooter (SS) against two other standard counterfactual methods: gradient descent in input space (GDI) and gradient descent in latent space (GDL). In both cases gradients are taken of the loss function of the distance from the classifier score to the chosen cutoff . GDI is then solved by making updates to the input until the distance is less than the tolerance , that is, , whereas GDL is given by making updates to the latent representation , that is, . Note that while a few other counterfactual methods exist that are model agnostic and work on both tabular and image datasets, GDI and GDL broadly categorize many of the alternative approaches taken in the literature.

Metrics for comparison

We examine the quality of counterfactuals by tabulating these metrics surveyed from literature: (i) time required to find a CF for a given sample (ii) validity (val), the percentage success in generating counterfactuals that cross the decision boundary (iii) proximity (prox), distance from input sample to counterfactual in latent space (iv) sparsity (spars), percentage of features changed (v) classifier shift - pass CF sample through UAE and check the change in classification score (vi) reconstruction loss - a measure of the CF being in sample. Further details about metrics and the classifiers used in the experiments can be found in the appendix.

MNIST

For MNIST, we formulate the problem as a binary classification problem of predicting fours (our base class) and nines (our target class). While multiclass image counterfactuals are not the primary focus of SharpShooter, MNIST provides a naturally intuitive visualization of the impact of projecting from the base class to the target class. The counterfactual examples from SharpShooter shown in Figure 3 are exhibit a blend of features from the input (e.g. angle of strokes) and from the target class (e.g. rounder and more closed tops). Interestingly, all methods show struggles in interpreting the last out-of-sample image of a four.

The quality of counterfactual explanations as measured by the metrics discussed in the appendix are summarized in Table 1. These metrics show SharpShooter outperforming the other methods in validity, classifier shift, reconstruction score, and time, but lagging in proximity and sparsity.

Note that the thin layer of changed pixels in the counterfactuals for GDI in Figure 3 is reflected by the method’s poor performance in classifier shift and reconstruction score. The noisy transformations seen in those counterfactuals is not reproduced in UVAE reconstructions - leading to the lower performance in CS and RECON. This is why we introduce these two measures as metrics of realism of counterfactuals: the UVAE smooths out samples far from the underlying data distribution. Meanwhile, SharpShooter counterfactuals lie closer to the data distribution, and appear more like the target class in Figure 3.

| Method | val | prox | spars | CS | recon | time |

|---|---|---|---|---|---|---|

| SS | 0.97 | 1.36 | 0.32 | 0.13 | 1.5e-3 | 3e-3 |

| GDL | 0.79 | 1.13 | 0.30 | 0.17 | 3.3e-3 | 1.82 |

| GDI | 0.67 | 0.84 | 0.42 | 0.41 | 6.4e-3 | 1.81 |

UCI Credit Card Default

Following new banking regulation in 1990 Wang [2021], Taiwan faced a credit card debt crisis. The UCI credit default dataset Yeh and hui Lien [2009], contains demographic, billing and payment information and labels of default from the period of April to September of 2005 while the crisis is deepening. The UCI credit dataset contains a high number of categorical variables. Complicating things further, the labels have a large class imbalance on the scale of 20 to 1 in favor of the non-defaulted class. A classifier is built to predict default with an AUC of 0.76 - one of the drawbacks of modeling an unsteady temporal process as steady, there is noise on the labels in the form of samples that have yet to default.

Due to these complications, training a standard VAE for either part of the SharpShooter process proves more difficult than training for the other datasets. The nature of divergence attempts to form spherical latent distributions and is zero-avoidingDieng et al. [2019], while at the same time the reconstruction loss on the categorical variables encourages latent codes to be further apart. The tension caused by these competing forces can cause for either collapsed latent spaces or areas of low probability mass. This push and pull can be seen in the TSNE of UCI credit’s latent codes (see Figure 7) which show islands of mass, even within the same class type hinting at clusters in the latent space. The shape of the latent space for the TVAE and UVAE thus requires careful tuning parameters such as and categorical reconstruction weights.

The UCI credit dataset serves as a good counterexample necessitating the use of more expressive generational models with fundamentally different code distributions for tabular datasets with higher categorical dimensionality.

| Method | val | prox | spars | CS | recon | time |

|---|---|---|---|---|---|---|

| SS | 0.505 | 3.99 | 0.309 | 0.136 | 1.58 | 1e-3 |

| GDL | 0.317 | 2.25 | 0.113 | 0.154 | 0.74 | 8.14 |

| GDI | 0.752 | 0.83 | 0.000 | 0.170 | 1.28 | 8.02 |

Changes made by the three methods on the continuous variables of the dataset - presented in a scaled log value - can be seen in Figure 4. The SharpShooter changes in bill amount are in striking contrast to the gradient descent methods. While similar for April, a structural change is seen in May, and the later months suggest drastic reductions in bill amounts. The reductions suggested in payment amount correlate with lower bill amounts.

Likewise Figure 5 shows values for education, marital status, and pay timing. For categorical variables, SharpShooter shows a tendency to create counterfactuals where the payment is on time, and the marital status changes to married. On the other hand, GDI and GDL tend not to make many changes to categorical variables. This could be due to a relative inability of gradient descent methods to change categorical variables, a property not shared by the VAE-based SharpShooter.

Lending Club

Lending Club is a peer-to-peer lending company that would periodically release data on its loan portfolio. This tabular dataset included information on whether a borrower defaulted on their loan, the size of the loan, their annual income, debt to income ratio, FICO score, and the loan length. We train a classifier to predict default using five continuous and one categorical feature and obtains an AUC of 0.95. A summary of quality metrics for the methods is seen in Table 3.

| Method | val | prox | spars | CS | recon | time |

|---|---|---|---|---|---|---|

| SS | 0.98 | 1.66 | 0.49 | 0.33 | 0.019 | 1.6e-3 |

| GDL | 0.91 | 1.35 | 0.74 | 0.313 | 0.015 | 0.71 |

| GDI | 0.93 | 0.71 | 0.50 | 0.19 | 0.037 | 1.21 |

For this experiment, the latent dimension of both autoencoders is two. In low dimensional space, the reconstruction loss performance of SharpShooter becomes very close to GDL. Otherwise Sharpshooter shows high validity and generates counterfactuals that are more sparse. Even though SharpShooter sees a larger classifier shift and reconstruction loss on this dataset, time is still much lower than the other two methods.

To illustrate these differences between methods the distributions of changes made for counterfactual explanations are shown in Figure 6. Given the latent search space was only 2, it is unsurprising that SharpShooter and GDL were not very large. The most notable difference between the two sets of counterfactuals was with respect to loan size, which was mostly unchanged in SharpShooter counterfactuals. Meanwhile, gradient descent in the input space makes the largest changes for variable like debt to income ratio (dti) and annual income.

5 Conclusion

In this paper we present SharpShooter: a model-agnostic algorithm for finding counterfactuals via linear interpolation in latent space. SharpShooter implements a framework that uses two variational autoencoders and introduces the concept of a target VAE that projects a sample to a counterfactual class. We demonstrate SharpShooter’s advantages and disadvantages when compared against two general methods on three datasets (MNIST, UCI credit card default, and Lending Club loan default). We show that while not always the most sparse or proximal, our method is orders of magnitude faster on all datasets and excels at realism, as measured by two novel metrics. We provide a thorough examination of the created counterfactuals and their attributes, meanwhile suggesting that future work could revolve around improving latent space structure. Lastly we introduce a straightforward method of visualization of the decision boundary that helps provide insight into the classifier, the joint representation space, and the SharpShooter process.

References

- Balasubramanian et al. [2020] Rachana Balasubramanian, Samuel Sharpe, Brian Barr, Jason Wittenbach, and C Bayan Bruss. Latent-cf: A simple baseline for reverse counterfactual explanations. arXiv preprint arXiv:2012.09301, 2020.

- Blei et al. [2017] David M Blei, Alp Kucukelbir, and Jon D McAuliffe. Variational inference: A review for statisticians. Journal of the American statistical Association, 112(518):859–877, 2017.

- Braun et al. [1956] Jochen Braun, Christof Koch, Joel L. Davis, and George A. Miller. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review, 63:81–97, 1956.

- Cremer et al. [2018] Chris Cremer, Xuechen Li, and David Duvenaud. Inference suboptimality in variational autoencoders, 2018.

- Datta et al. [2016] A. Datta, S. Sen, and Y. Zick. Algorithmic transparency via quantitative input influence: Theory and experiments with learning systems. In 2016 IEEE Symposium on Security and Privacy (SP), pages 598–617, May 2016. doi: 10.1109/SP.2016.42.

- Dhurandhar et al. [2018] Amit Dhurandhar, Pin-Yu Chen, Ronny Luss, Chun-Chen Tu, Paishun Ting, Karthikeyan Shanmugam, and Payel Das. Explanations based on the missing: Towards contrastive explanations with pertinent negatives. In Proceedings of the 32nd International Conference on Neural Information Processing Systems, NIPS’18, page 590–601, Red Hook, NY, USA, 2018. Curran Associates Inc.

- Dieng et al. [2019] Adji B Dieng, Yoon Kim, Alexander M Rush, and David M Blei. Avoiding latent variable collapse with generative skip models. In The 22nd International Conference on Artificial Intelligence and Statistics, pages 2397–2405. PMLR, 2019.

- Doersch [2021] Carl Doersch. Tutorial on variational autoencoders, 2021.

- Fernandez et al. [2020] Carlos Fernandez, Foster J. Provost, and Xintian Han. Explaining data-driven decisions made by AI systems: The counterfactual approach. CoRR, abs/2001.07417, 2020. URL https://arxiv.org/abs/2001.07417.

- FICO [2018] FICO. Explainable machine learning challenge. https://community.fico.com/s/explainable-machine-learning-challenge, 2018.

- George [2018] Nathan George. All lending club loan data. https://www.kaggle.com/wordsforthewise/lending-club, 2018. Accessed: 2021-01-30.

- Gomez et al. [2020] Oscar Gomez, Steffen Holter, Jun Yuan, and Enrico Bertini. Vice: Visual counterfactual explanations for machine learning models, 2020.

- Goodfellow et al. [2014] Ian J Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial networks. arXiv preprint arXiv:1406.2661, 2014.

- Goodfellow et al. [2015] Ian J. Goodfellow, Jonathon Shlens, and Christian Szegedy. Explaining and harnessing adversarial examples, 2015.

- Higgins et al. [2016] Irina Higgins, Loic Matthey, Arka Pal, Christopher Burgess, Xavier Glorot, Matthew Botvinick, Shakir Mohamed, and Alexander Lerchner. beta-vae: Learning basic visual concepts with a constrained variational framework. 2016.

- Ibrahim et al. [2019] Mark Ibrahim, Melissa Louie, Ceena Modarres, and John Paisley. Global explanations of neural networks mapping the landscape of predictions. In AAAI. AAAI, 2 2019.

- Joshi et al. [2019] Shalmali Joshi, Oluwasanmi Koyejo, Warut Vijitbenjaronk, Been Kim, and Joydeep Ghosh. Towards realistic individual recourse and actionable explanations in black-box decision making systems, 2019.

- Kingma and Welling [2013] Diederik P Kingma and Max Welling. Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114, 2013.

- Kobyzev et al. [2020] Ivan Kobyzev, Simon Prince, and Marcus Brubaker. Normalizing flows: An introduction and review of current methods. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2020.

- Lash et al. [2017] Michael T. Lash, Qihang Lin, W. Nick Street, and Jennifer G. Robinson. A budget-constrained inverse classification framework for smooth classifiers. In Proceedings of The IEEE International Conference on Data Mining (ICDM), ICDM’17, pages 1184–1193, New Orleans, Louisiana, 2017. IEEE.

- Laugel et al. [2018] Thibault Laugel, Marie-Jeanne Lesot, Christophe Marsala, Xavier Renard, and Marcin Detyniecki. Comparison-based inverse classification for interpretability in machine learning. In Jesus Medina, Verdegay Ojeda-Aciegoand Manuel, Jose Luis, David A. Pelta, Inma P. Cabrera, Bernadette Bouchon-Meunier, and Ronald R. Yager, editors, Information Processing and Management of Uncertainty in Knowledge-Based Systems. Theory and Foundations, pages 100–111, Cham, 2018. Springer International Publishing. ISBN 978-3-319-91473-2.

- Le et al. [2020] Thai Le, Suhang Wang, and Dongwon Lee. Grace: Generating concise and informative contrastive sample to explain neural network model’s prediction, 2020.

- Lecun et al. [1998] Yann Lecun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. In Proceedings of the IEEE, pages 2278–2324, 1998.

- Looveren and Klaise [2019] Arnaud Van Looveren and Janis Klaise. Interpretable counterfactual explanations guided by prototypes. CoRR, abs/1907.02584, 2019. URL http://arxiv.org/abs/1907.02584.

- Lundberg and Lee [2017] Scott M. Lundberg and Su-In Lee. A unified approach to interpreting model predictions. In Isabelle Guyon, Ulrike von Luxburg, Samy Bengio, Hanna M. Wallach, Rob Fergus, S. V. N. Vishwanathan, and Roman Garnett, editors, Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, 4-9 December 2017, Long Beach, CA, USA, pages 4765–4774, 57 Morehouse LaneRed HookNYUnited States, 2017. Curran Associates Inc. URL http://papers.nips.cc/paper/7062-a-unified-approach-to-interpreting-model-predictions.

- Mahajan et al. [2020] Divyat Mahajan, Chenhao Tan, and Amit Sharma. Preserving causal constraints in counterfactual explanations for machine learning classifiers, 2020.

- Pawelczyk et al. [2020] Martin Pawelczyk, Klaus Broelemann, and Gjergji Kasneci. Learning model-agnostic counterfactual explanations for tabular data. Proceedings of The Web Conference 2020, Apr 2020. doi: 10.1145/3366423.3380087. URL http://dx.doi.org/10.1145/3366423.3380087.

- Poyiadzi et al. [2020] Rafael Poyiadzi, Kacper Sokol, Raul Santos-Rodriguez, Tijl De Bie, and Peter Flach. Face. Proceedings of the AAAI/ACM Conference on AI, Ethics, and Society, Feb 2020. doi: 10.1145/3375627.3375850. URL http://dx.doi.org/10.1145/3375627.3375850.

- Rezende and Mohamed [2015] Danilo Rezende and Shakir Mohamed. Variational inference with normalizing flows. In International conference on machine learning, pages 1530–1538. PMLR, 2015.

- Ribeiro et al. [2016] Marco Tulio Ribeiro, Sameer Singh, and Carlos Guestrin. Why should i trust you?: Explaining the predictions of any classifier. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pages 1135–1144, New York, NY, USA, 2016. ACM, Association for Computing Machinery.

- Russell [2019] Chris Russell. Efficient search for diverse coherent explanations, 2019.

- Samangouei et al. [2018] Pouya Samangouei, Ardavan Saeedi, Liam Nakagawa, and Nathan Silberman. Explaingan: Model explanation via decision boundary crossing transformations. In The European Conference on Computer Vision (ECCV), Cham, September 2018. Springer International Publishing.

- Shrikumar et al. [2017] Avanti Shrikumar, Peyton Greenside, and Anshul Kundaje. Learning important features through propagating activation differences. In Proceedings of the 34th International Conference on Machine Learning - Volume 70, ICML’17, page 3145–3153. JMLR.org, 2017.

- Sundararajan et al. [2017] Mukund Sundararajan, Ankur Taly, and Qiqi Yan. Axiomatic attribution for deep networks. arXiv preprint arXiv:1703.01365, 2017.

- Szegedy et al. [2013] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian Goodfellow, and Rob Fergus. Intriguing properties of neural networks. arXiv preprint arXiv:1312.6199, 2013.

- Thomas [2018] Rachel Thomas. An introduction to deep learning for tabular data. https://www.fast.ai/2018/04/29/categorical-embeddings/, Apr 2018. URL https://www.fast.ai/2018/04/29/categorical-embeddings/.

- Ustun et al. [2019] Berk Ustun, Alexander Spangher, and Yang Liu. Actionable recourse in linear classification. Proceedings of the Conference on Fairness, Accountability, and Transparency, Jan 2019. doi: 10.1145/3287560.3287566. URL http://dx.doi.org/10.1145/3287560.3287566.

- Verma et al. [2020] Sahil Verma, John Dickerson, and Keegan Hines. Counterfactual explanations for machine learning: A review, 2020.

- Wachter et al. [2017] Sandra Wachter, Brent D. Mittelstadt, and Chris Russell. Counterfactual explanations without opening the black box: Automated decisions and the GDPR. CoRR, abs/1711.00399, 2017. URL http://arxiv.org/abs/1711.00399.

- Wang [2021] Eric Wang. Taiwan’s credit card crisis. https://sevenpillarsinstitute.org/case-studies/taiwans-credit-card-crisis/, 2021.

- Yeh and hui Lien [2009] I-Cheng Yeh and Che hui Lien. The comparisons of data mining techniques for the predictive accuracy of probability of default of credit card clients. Expert Systems with Applications, 36(2, Part 1):2473–2480, 2009. ISSN 0957-4174. doi: https://doi.org/10.1016/j.eswa.2007.12.020. URL https://www.sciencedirect.com/science/article/pii/S0957417407006719.

Appendix A Appendix

A.1 Description of Comparison Metrics

Comparison metrics used are a combination of commonly used metrics from the literature and two novel comparison methods, explained below. Except in the case of validity, all metrics are only calculated for the subset of counterfactual samples that classify in the correct target class.

Validity

Validity is the percentage of generated counterfactuals that correctly cross the decision boundary. The main goal for generating counterfactuals is that they are, indeed, counterfactuals, which requires them to classify as the target class. We take validity with respect to the classifier, noting this makes our practice distinct from analyzing counterfactuals in the causality literature.

Proximity

Proximity is discussed in many papers that promote algorithms for counterfactual generation Verma et al. [2020]. This metric measures how close a counterfactual is to to the base sample it is generated from. Typically this measure is taken as the norm in input space. Here we use the L2 norm in representation space, although we note similar (unreported) patterns for L2 in input space. We do note that proximity is limited in that it does not necessarily tell us much about whether the generated counterfactuals are actually on the data manifold (as seen in Figure 3). Hence we also report two novel measures classifier shift and reconstruction loss, explained below.

Sparsity

Sparsity is another commonly used metric in the counterfactual literature Verma et al. [2020]. Sparsity is a measure of how sparse the change vector is. In our usage, we calculate the change vector and then we count the percentage of dimensions that see changes greater than a half pixel in MNIST, and that see non-zero changes in the categorical and continuous variables in the two tabular datasets.

Classifier Shift

Classifier shift and reconstruction loss are two novel measures of whether a generated counterfactual is realistic. These measures bear resemblance to the VAE-specific measures of and promoted by Van Looveren and Klaise Looveren and Klaise [2019]. Classifier shift is given by where is the classifier and is the UVAE. In other words, we check how much passing the given counterfactual through the UVAE changes its original classification score.

The classifier shift metric relies on the strength of the prior to pull samples that lie farther from the data manifold closer to a higher probability reconstruction. If a sample does not have high probability, the resulting reconstruction from the UVAE will see a large amount of change in terms of its classification score, which is reported in the tables above.

Reconstruction Loss

Similarly to classifier shift, another way in which we can measure the stability of a given counterfactual is to measure its reconstruction loss. The reconstruction loss measures the change in the evidence lower bound (ELBO) for the log likelihood of a sample . Hence we can measure the effect of passing a counterfactual through the UVAE also by measuring its loss with respect to the UVAEs reconstruction (i.e. ). Having a smaller loss is desirable here.

Time

Time, one of the largest benefits of the SharpShooter process, here is reported as the time it takes to generate a single counterfactual sample, on average. SharpShooter is particularly fast in this dimension compared to other methods because generating a sample only requires the predictions of two variational autoencoders for a limited number of points for a fixed number of interpolated points. By comparison, iterative solutions based on gradients or perturbing the input space can be quite expensive if the search distance is large. Notably, we here exclude the overhead time of training the VAEs because, while this time is not trivial, it is exclusively a fixed cost and is not related to the scalability of generation of counterfactuals for a sample of size .

A.2 Visualizations of SharpShooter Latent Spaces

Figure 7 and Figure 8 give insights into how SharpShooter generates counterfactuals and how visualizations were created in Figure 2.

In Figure 7 we first plot the projections of all classes (base , target , and translated base ) in Unified VAE (UVAE) space. This plot shows distinct separation of and in the cases of MNIST and Lending Club with some minor exceptions. Likewise for these datasets, seem well mixed in with the target class as desired. This is the intended outcome of the TVAE’s projection for SharpShooter to produce meaningful counterfactuals because we need a base’s projection to be classified as a target for linear interpolation to cross the decision boundary. By comparison, the higher dimensional, more heavily imbalanced UCI dataset shows less clear separation of and , with jointly mixed amongst both base and target codes.

Figure 8 now examines exactly which point in is paired with which point in . I.e. now we plot a line from a base point to its TVAE transformation. The most immediate observation from this figure is the similar angle and length of many of the lines. This observation is not unfamiliar to the representation learning literature of similar difference vectors between two points implying similar changes. For instance, the classic example is taking the difference vector between "Italy" and "Rome" and adding it to "France" to produce "Paris". This observation raises an interesting question of to what extent can counterfactual be interpreted as a common vector in some representation space.

Lastly, Figure 2 is produced by taking a large number of to produce many , decoding those interpolated codes, and calculating each point’s corresponding classification score. Finally, we plot this large cloud of points as a weighted 2d histogram, and in this case we use a weighted hexbin plot to avoid visual biases. We here note that while the Lending Club dataset is an actual plot, the MNIST plot is much higher dimension. Because of this, we only see the PCA projection of the lines plotted in Figure 8 (a), and hence do not see such a sharp decision boundary as we do in 2 (b).

A.3 Additional views from the SharpShooter process

The TVAE is responsible for projecting samples from the base class to the target class. Philosophically, every base class sample has some innate ’goodness’ to it. The projection to the target class helps reveal those good qualities, and transforms the bad. Figure 9 shows input samples (top) projected to the target class (bottom). These projections on their own might be sufficient for a counterfactual explanation - however they are typically not situated near the decision boundary.

We demonstrate counterfactual candidate generation between an input base class sample and its projection. The candidates are generated by varying in the range . A candidate, , is chosen as the counterfactual sample, , if it classifies as the target class and is within a user specified tolerance of the decision boundary.

A.4 VAE hyperparameter tuning

For each dataset, two separate variational autoencoders were trained: one trained only on the target class (TVAE) and one that was trained on both classes (UVAE). The TVAE and the UVAE had the same architecture and only differed in hyperparameters which will be discussed for each data set below.

For the tabular datasets, UCI credit and Lending Club, we applied a weight to influence the fidelity of reconstruction of the categorical and continuous variables. This weight was applied in the loss function, a combination of mean squared error and categorical or binary cross entropy for UCI credit and Lending Club respectively. The categorical weight was subject to a hyperparameter search over the range of zero to one.

MNIST

For MNIST, the TVAE and UVAE differed on weight and latent dimension. The objective is to have good reconstruction, while maintaining generative capability of the autoencoders (i.e. low mean squared error and low KL divergence). A tertiary consideration for the TVAE was the average probability of the projected base class samples - but was not used in the decision process. Contours of these objectives can be seen in Figure 11 for the TVAE, and in Figure 12 for the UVAE.

The decision is brought into sharper focus by looking at the Pareto plot of the two primary objectives. The typical elbow shape is seen - the optimal points are lower and left most, where you cannot improve one objective without hurting the other.

The final choices of parameters for the TVAE were: weight of 0.085961 with a latent dimension of fourteen. The final choice of hyperparameters for the UVAE were: weight of 0.013037 and a latent dimension of twenty.

UCI credit

For the UCI credit dataset we used an architecture that joined together categorical and continuous variables after passing both sets through separate branches of dense or embedding layers (for the categorical and continuous variables respectively). The two branches were then combined again with a few dense layers before the bottleneck layer, see Figure 21. This process was then reversed in the decoder and is motivated by recent work on embeddings Thomas [2018].

Lending Club

For Lending Club, with only 6 input features, the latent dimension was fixed to be two. The difference in hyperparameters occurred for weight and categorical weight used to combine the means squared error of the continuous variables with the binary cross entropy of the categorical. These hyperparameters were put to a grid search and the final set were chosen in the same manner as the MNIST hyperparameters. The contours of reconstruction error and KL divergence are shown in Figure 16.

Each objective has implications on the resulting counterfactuals. For instance, a high categorical weight tended to pull apart the latent space and would make linear interpolation less feasible, such as in the UCI dataset. Candidates being accepted in areas of low probability space in could imply resulting counterfactuals with low mass in and could be considered less feasible. Similarly, a higher weights help encourage convexity through a "regularization" of the codes towards their standard normal prior. Convexity could be advantageous to SharpShooter by again preventing traversing latent space with low probability mass, but also empirically it seemed to provide a more defined separation of the classes. Finally, reconstruction quality was important due to the desire for base observations to be close to their projections and their interpolated candidates.

The algorithm would look like this:

A.5 Classifier architecture

SharpShooter is agnostic to the architecture of the classifier used. We include the architecture of the classifiers for repeatability. The classifiers used for this study were all built in the Keras API of Tensorflow. Model architecture diagrams are show for each data set below.

For the Lending Club dataset, we down selected to six features; the classifier achieved an AUC of .

A.6 UCI VAE architecture

The combination of continuous and categorical features for the UCI credit dataset required an interesting architecture, that we show below.

For the decoder, the categorical features are split off into distinct outputs, shown on the right in Figure 22, from the single continuous output, labeled ’cont_out’ in the figure.