COTReg: Coupled Optimal Transport based Point Cloud Registration

Abstract

Generating a set of high-quality correspondences or matches is one of the most critical steps in point cloud registration. This paper proposes a learning framework COTReg by simultaneously considering point-wise and structural matchings to estimate correspondences in a coarse-to-fine manner. Specifically, we transform the two matchings into a Wasserstein distance-based and a Gromov-Wasserstein distance-based optimizations, respectively. The task of establishing the correspondences thus can be naturally reshaped to a coupled optimal transport problem. Furthermore, we design an overlap attention module consisting primarily of transformer layers and train it to predict the probability (overlap score) that each point lies in the overlapping region. The overlap score then guides the procedure of correspondences prediction. We conducted comprehensive experiments on 3DMatch, KITTI, and 3DCSR benchmarks showing the state-of-art performance of the proposed method.

Index Terms:

Point cloud, Registration, Point-wise matching, Structural matching, Optimal transport, Coarse-to-fine.1 Introduction

Point cloud registration is to align two or more 3D point clouds acquired from various views, platforms, or at different times into a unified coordinate system [1]. This technique is the cornerstone of many 3D computer vision applications, such as 3D reconstruction [2], augmenting reality [3], autonomous driving [4, 5, 6], cancer radiotherapy [7, 8] and robotics [9, 10].

Recently, various types of deep learning-based point cloud registration approaches have achieved outstanding performance. Some of these methods show that combining conventional optimization approaches and current deep neural networks obtains better accuracies than traditional optimization methods [11]. Algorithms based on correspondences occupy a significant proportion of deep learning-based point cloud registration methods. State-of-the-art correspondence-based pipelines commonly consist of the following stages [12]: feature extraction, correspondence prediction, outlier rejection, and transformation estimation. Such correspondence-based approaches mainly focus on improving registration performance by extracting highly discriminative features [13, 14, 15, 16] or removing outlier correspondences [11, 12, 17]. The correspondence prediction is also a critical stage since the estimation of the rigid motion parameters depends on the correct correspondences. However, very few works have been devoted to improving correspondence prediction algorithms [11]. Therefore, this paper focuses on developing a correspondence prediction algorithm to obtain more accurate correspondences for pairwise point cloud registration.

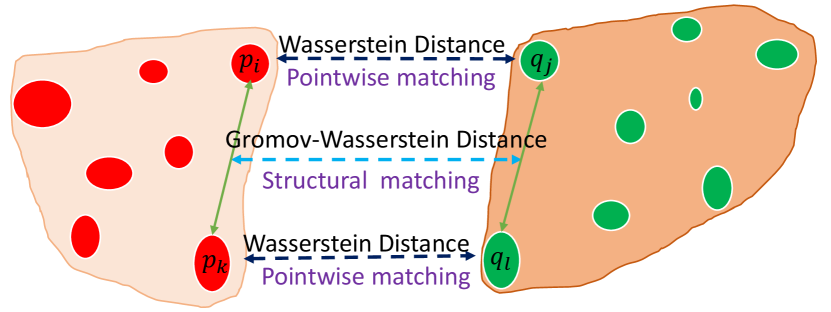

In learning-based 3D point registration, pointwise matching is often used to establish matches between two point clouds [18]. The core idea of pointwise matching is that a pair of points between the source and target point clouds having the most similar feature representations are identified as the corresponding points. However, the putative correspondences produced by the pointwise matching contain many false matches and outliers [19]. For example, the feature matching recall of recent FCGF [20] drops to 80% when the inlier ratio is set to 0.2, meaning at least 20% correspondences contain more than 80% outliers [20]. We observe that the following two factors will cause false matches and outliers: first of all, some inliers are assigned to outliers because we treat the inliers and outliers equally in the correspondence prediction stage [15]; second, only considering pointwise matching is insufficient to find the correct correspondences due to the ambiguous and repeated patterns in the 3D point clouds [16]. To solve the above problems, we introduce the overlap scores, which act as auxiliary information to assist in correspondence prediction. When combining overlap scores with pointwise matching, it induces an optimal transport problem [21], i.e., Wasserstein distance (WD) [21]. Furthermore, inspired by the success of structural information (edge length) in graph matching [22, 23], we also consider it as an additional constraint to produce correspondences for registration. In our case, the structure is defined as the distance between two points within a point cloud in both the Euclidean and feature spaces, so the structural matching is formulated by comparing the geometric difference based on the Gromov-Wasserstein distance (GWD) [21]. The pointwise and structural matchings are illustrated in Figure 1. By integrating the pointwise and structural matchings into a unified learning framework, we formulate the problem of establishing the correspondences of point cloud registration as a coupled optimal transport problem. It can be easily integrated into deep neural networks for end-to-end training. Our method is based on the intuition that incorporating both pointwise and structural matchings into producing correspondences can resolve the ambiguity issue, achieving better performance than using either one of the two matchings. We also design a positional encoding scheme that assigns intrinsic geometric properties to per-point features to enhance distinctions among point features in indistinctive regions. Specifically, the coupled optimal transport can incorporate both the pointwise and the structural matchings with the overlap scores acting as empirical distributions to effectively improve the accuracy of the generated correspondences in 3D point cloud registration. To reduce the complexity of the matching process, our model adopts the coarse-to-fine mechanism, a hierarchical matching strategy to generate the correspondences.

To the best of our knowledge, we are the first to apply coupled optimal transport to predict correspondences for point cloud registration. The contribution of this paper is summarized as follows:

-

•

We incorporate overlap scores, pointwise and structural matchings in a joint model based on coupled optimal transport to produce correspondences in a coarse-to-fine manner.

-

•

We transform the pointwise matching into Wasserstein distance-based optimization to generate correspondences.

-

•

We cast the structural matching into Gromov-Wasserstein distance-based optimization to predict matches. The structural matching is formulated by considering the structural difference in both Euclidean and feature spaces.

-

•

We introduce an overlap attention module to learn point-wise features and overlap scores.

-

•

Comprehensive experiments on indoor and outdoor, synthetic, and cross-source point clouds demonstrate that the proposed method can effectively improve the accuracy of 3D point cloud registration.

2 Related Work

We review both traditional and deep learning-based point cloud registration methods. Since optimal transport is a major component in the 3D correspondence prediction of the proposed learning framework, we also review the relevant works.

2.1 Traditional Point Cloud Registration

The best-known traditional registration algorithm is Iterative Closest Point (ICP) [24], which alternates between rigid motion estimation and the correspondences searching [25, 26] by solving a -optimization. However, ICP converges to spurious local minima due to the non-convexity. Based on this algorithm, many variants have been proposed. For example, the Levenberg-Marquardt ICP [27] uses a Levenberg-Marquardt algorithm to produce a transformation by replacing the singular value decomposition with gradient descent and Gaussian-Newton approaches, accelerating convergence while ensuring high accuracy. Go-ICP [28] solves the point cloud alignment problem globally by using a Branch-and-Bound (BnB) optimization framework without prior information on correspondence or transformation. RANSAC-like algorithms are widely used for robust finding of the correct correspondences for registration [29]. FGR [30] optimizes a Geman-McClure cost-induced correspondence-based objective function in a graduated non-convex strategy and achieves high performance. TEASER [31] reformulates the registration problem as an intractable optimization and provides readily checkable conditions to verify the optimal solution. However, most existing methods still face challenges when point clouds contain a mixture of noise, outlier, and partial overlap [32]. In contrast, the learning-based methods tend to have strong robustness [33].

2.2 Deep Learning-Based Point Cloud Registration

Deep point cloud registration methods can be commonly classified into correspondences-free methods and correspondences-based methods [32]. The core idea of correspondence-free registration approaches [34, 35] is to estimate the transformation by minimizing the difference between global features extracted from two input point clouds. However, the global features rely on the whole points of a point cloud, leading to a major limitation that correspondences-free approaches are inapplicable to real scenes with partial overlaps [11, 32]. On the contrary, correspondence-based methods extract the per-point or per-patch embeddings of the two input point clouds to generate a mapping and estimate a rigid transformation [32]. For instance, DCP [25] employs DGCNN [36] for feature extraction and an attention module to generate soft matching pairs. FCGF [20] uses kernel to extract compact metric features for geometric correspondence. RGM [16] considers information exchange between two point clouds to extract discriminative features for point-wise matching. Predator [15] and PRNet [1] pay attention to the detection of points in the overlap region and use these features of the detected points to generate matches. DGR [11] proposes a 6-dimensional convolutional network architecture for inlier likelihood prediction and estimates the transformation via a weighted Procrustes module. 3DRegNet [17] uses an inlier prediction model to estimate the inliers. PointDSC [12] integrates pairwise similarity as a constraint to improve correspondence accuracy. RPMNet [37] and [38] propose methods to solve the point cloud partial visibility by integrating the Sinkhorn algorithm into a network to get soft correspondences from local features. Soft correspondences can increase the robustness of registration accuracy. IDAM [39] incorporates both geometric and distance features into the iterative matching process. Some works [13, 14, 40, 41] also focus on alleviating the dependence on the manual annotation in the process of extracting features. Most of these methods generate correspondences by applying the point feature similarities then directly match the highest response [39]. This strategy has two obvious limitations. First of all, some inliers are assigned to outliers, since inliers and outliers are treated equally in the correspondence prediction stage [15]. Second, the one-shot matching may fail because there are multiple possible correspondences due to randomness [39], while the per-point feature itself is not powerful enough to distinguish the geometric properties in point clouds [16]. Based on such observations, some additional constraints should be imposed to obtain high-quality correspondences. Inspired by edge similarity in graph matching [22, 23], the matched pairs between two point clouds hold an equal length constraint in both Euclidean and feature spaces, we propose a coupled optimal transport-based framework that incorporates overlap scores into pointwise matching as well as structural matching to generate more accurate correspondences. We also design a positional encoding scheme that assigns intrinsic geometric properties to per-point features to enhance distinctions among point features in indistinctive regions.

2.3 Optimal Transport

Optimal transport (OT) [21] is a method to exploit the best assignment between two general objects, which is widely applied in many machine learning applications [42]. OT provides a way to predict the correspondences and measure the similarity between two distributions. The distance based on OT is called the Wasserstein distances (WD), which has been used in various computer vision tasks [43]. For instance, Su et al. [44] employed the Wasserstein distance to deal with the 3D shape matching and surface registration problem. Dang et al. [38] applied Wasserstein distances to handle 3D point cloud registration. Gromov-Wasserstein (GWD) [21] extends WD by computing couplings between metric measure spaces. Unlike calculating the distance between two entity sets as in WD, GWD can be utilized to calculate the structure distance within each domain. The GWD has been used for shape analysis [45], graph matching [46], etc. The properties of WD and GWD that focus, respectively, on features and structure information motivate us to utilize WD to build pointwise matching and GWD to build structural matching. Contrasting with previous correspondence prediction algorithms for point-cloud registration, we treat point clouds as probability distributions, i.e., overlap scores are embedded in a specific metric space based on the similarities of features and structures. The correspondence prediction is then used to compute a coupled optimal transport distance between two probability distributions that can endow pointwise and structural matchings with overlap scores to generate more accurate correspondences.

3 Problem Formulation

Before introducing our method, we explain the formulation and notation of the problem. We consider two point clouds and , with their associated features and , respectively. The registration problem is to recover a rigid transformation with rotation and translation that aligns the source set to the target set . Obviously can only be determined from the data in overlapping areas of and , if both sets have sufficient overlaps. We define an assignment matrix with elements to represent the matching confidence between the point and , where each element satisfies

| (1) |

Let us first consider the case where the overlapping region is given, and we use the overlap scores to depict the overlapping region. The overlap score of the point is defined as

| (2) |

where is defined similarly. Let and . Usually, it is unlikely to determine whether a point is in overlap regions. Thus we relax the overlap scores to and use a neural network to estimate overlap scores. The details of the overlap score prediction module are illustrated in Sec. 4. When considering the overlap scores, the registration task can be cast to solve the following problem:

| (3) | ||||

The three constraints enforce to be a permutation matrix. If we know the optimal rigid transformation , then the assignment matrix can be recovered from Eq. (3). By contrary, given the assignment matrix , we generate correspondences . can be directly leveraged by RANSAC [47] or SVD to estimate the transformation.

The following notation will be used throughout the paper. indicates a correspondence of and . represents a pair. is an inner product operator. The Kullback-Leibler () divergence between two non-negative vectors and is defined as

| (4) |

with the convention .

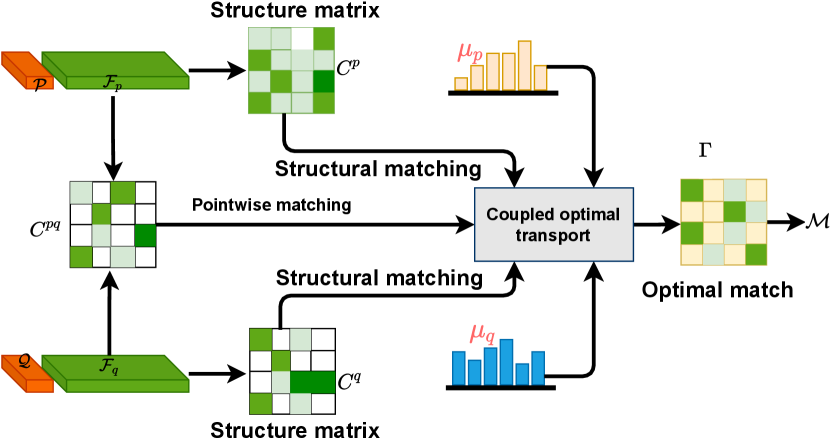

4 Proposed optimal transport-based point cloud registration

In this paper, we propose a new method to generate correspondences by jointly considering pointwise and structural matchings, as illustrated in Figure 2. We first use and the coordinates of to calculate . Similarly, and the coordinates of are used to calculate the . After that, , , the overlap scores and are used for structural matching based on the Gromov-Wasserstein distance. and are used to calculate . Then and overlap scores and are used for pointwise matching based on the Wasserstein distance. Finally, we couple Wasserstein distance and Gromov-Wasserstein distance in a mutually-beneficial way by sharing the assignment matrix , which is estimated via the coupled optimal transport algorithm. and have and points, respectively. The correspondences are finally obtained based on . Next, we introduce how to use the coupled optimal transport to predict the correspondences.

4.1 Coupled Optimal Transport-Based Correspondence Prediction

As is mentioned above, only considering the pointwise matching is not sufficient to find the accurate correspondences due to the ambiguous and repeated patterns in the 3D acquisition point clouds. Therefore, we exploit both pointwise and structural matchings to indicate the correspondence between source and target point clouds.

For point-wise matching, the cross-distance matrix is derived based on the feature space between point cloud and with each element satisfying

| (5) |

where represents the distance between and .

To construct the structural matching, we first calculate the discrepancy (structure) matrix of the point pairs within in both Euclidean and feature spaces with the elements related to satisfying

| (6) |

where is a function related to distances between two points in Euclidean space satisfying . is a hyperparameter controlling the contribution of feature and coordinate information. Similarly, the elements of related to for satisfy

| (7) |

We jointly exploit the pointwise and structural matchings equipped with overlap scores to indicate the correspondences between source and target point clouds. This leads to an optimization problem that can be solved by a coupled optimal transport method (called coupled optimal transport since it contains two types of distance, i.e., WD and GWD).

| (8) | ||||

where denotes an -dimensional all-one vector. and are two non-negative hyperparameters controlling the pointwise and structural matchings, respectively. If and , then it only depends on the pointwise matching, and if and , it only considers the structural matching. When and , it allows the framework to effectively consider both pointwise and structural matchings for better correspondence predictions by sharing the assignment matrix . After obtaining , we can apply some methods, such as SVD and RANSAC, to estimate the transformation.

Next, we introduce the Wasserstein distance-based pointwise matching and the Gromov-Wasserstein distance-based structural matching, respectively.

4.2 Wasserstein Distance-Based Pointwise Matching

Our Wasserstein distance-based pointwise matching method can be regarded as a variant of the nearest neighbor search with an additional bijectivity constraint that enforces global matching consistency. Our model is appropriate for partial registration, since the overlap scores have been introduced. Given two point clouds and , with their associated features and , overlap scores and , the assignment matrix can be estimated based on the following Theorem.

Theorem 1.

Given features and , overlap scores and , if are invariant to rigid transformations, and are the optimal solutions of problem in Eq. (3). Then is an optimal solution to the following optimization problem:

| (9) | ||||

where , represent the features of points and , respectively. . The constraint of the assignment matrix is relaxed to a doubly stochastic state, that is, .

Please refer to the proof of this theorem in Appendix A.

4.3 Gromov-Wasserstein Distance-Based Structural Matching

Our method is based on the observations as follows: for and with their associated features and , if and are correct correspondences, then the distance between and should be similar to the distance between and . Thus, the structural difference in both Euclidean and feature spaces should be small, i.e., and should be small. Gromov-Wasserstein distance is often applied to find the correspondences between two sets of samples based on their pairwise intra-domain similarity (or distance) matrices. Thus, we can approximately transform the correspondence prediction to a structural matching problem based on the Gromov-Wasserstein distance as the following Theorem.

Theorem 2.

Given two point clouds and , with their associated features and , overlap scores and , if are invariant to rigid transformation, and are the optimal solutions of problem (3), then is an optimal solution of the following Gromov-Wasserstein distance-based optimization:

| (10) | ||||

The proof is available in Appendix B.

Remark 2.

Assignment matrix can be obtained from by problem in Eq. (10) by solving an entropy regularized optimization. The structural matching is formulated by jointly considering the structural differences in both Euclidean and feature space.

4.4 Model Optimization

Now, we introduce how to solve the problem in (8). For simplicity, we denote a matrix with each element . We get . The problem in Eq. (8) can be rewritten as

| (11a) | |||

| (11b) | |||

Standard optimal transport only allows a meaningful comparison of measures with the same total mass, i.e., , which does not always satisfy the registration requirement due to multiple correspondences. Following [49], we replace the constraints in Eq. (11b) with soft-marginals ( divergence). Optimization in Eq. (11) is then translated into an unconstrained approximate transport problem

| (12) | ||||

where is a regularization parameter to adjust the strength of penalization of the soft margins. We use the generalized proximal point method [50] and projected gradient descent to solve the problem in Eq. (12) based on the metric. Following [45, 50], we fix at iteration for , acts as a regularization centered on the previous solution . The update rule for Eq. (12) at iteration can be written as

| (13) | ||||

with initialization . is a regularization parameter. can be interpreted as a damping term that encourages not to be very far from . For small enough, in Eq. (13) converges to the optimal solution of problem in Eq. (11) as increases. Choosing trades off convergence speed with closeness to the original transport problem [48]. The solution of the problem in Eq. (13) is based on the following theorem.

Theorem 3.

Denote and with elements that satisfy . The optimal solution for the objective in Eq. (29) can be obtained by solving the following dual entropic regularized objective,

| (14) | ||||

where are dual variables.

Please refer to the proof of the theorem in Appendix C. The problem in Eq. (14) can be solved using the Sinkhorn algorithm [48, 49]. Specifically,

Let be the solution returned at the -th iteration of the algorithm. Here and with . Suppose we are at iteration for with a fixed , i.e.,

Multiplying both sides by , we get

Similarly, with fixed, we get

We use the pseudocode in Algorithm 1 to illustrate the solution. The inner iterations can be determined by and and the proof is similar to Theorem 2 in [49]. In our experiments, we found that when setting , , , and , it can obtain satisfactory results.

Input: Distance matrices , and , overlap scores and , and hyparameters , , and the number of outer/inner iterations .

4.5 Combined with Learning Network

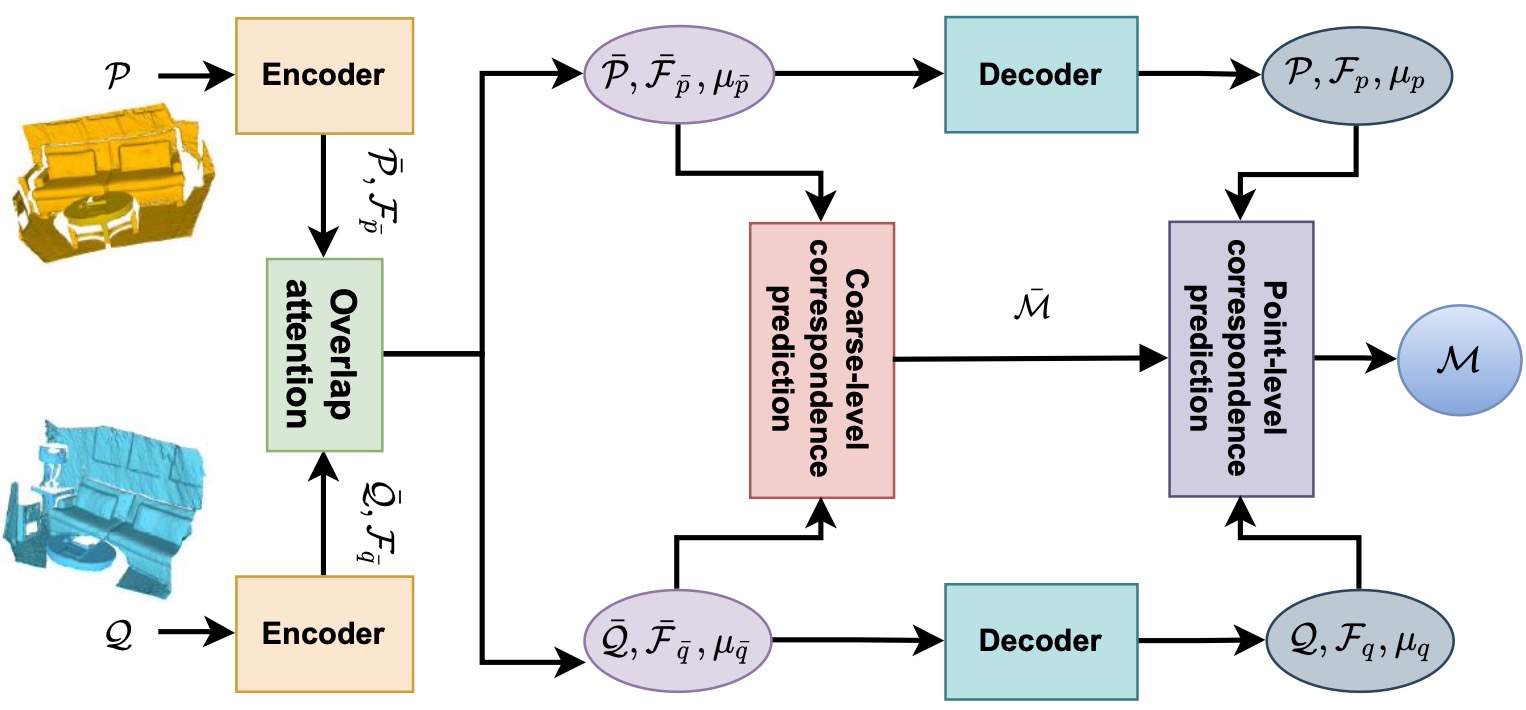

The solution of the optimization problem in Eq. (8) is sought over the space of permutation matrices. Because of memory constraints and speed limitations, it is not suitable to solve large scale registration problem. To this end, COTReg adopts a hierarchical matching strategy that first establishes superpoint-level correspondences and then predicts point-level correspondences according to superpoint-level matches. The pipeline of our COTReg is illustrated in Figure 3, which is a shared weighted two-stream encoder-decoder network. Given a pair of point cloud and , the encoder aggregates the raw points into superpoints and , while jointly learning the associated features and . The overlap attention block updates the features as and , and projects them to coarse level overlap score vectors . The updated features and overlap scores are then used to calculate the coarse-level correspondences. Finally, the decoder transforms the superpoint level features and overlap scores to per-point feature descriptors and , and overlap scores and , which are used to estimate fine-level correspondences.

4.5.1 Encoder

4.5.2 Overlap Attention Module

The overlap attention module, which estimates the probability (overlap score) of whether a point is in the overlapping area, consists of positional encoding, self-attention, cross-attention, and overlap score prediction. The positional encoding assigns intrinsic geometric properties to a per-point feature, thus enhancing distinctions among point features in indistinctive regions. The extracted local features have a limited receptive field which may not distinguish indistinctive regions. Instead, humans find correspondences in these indistinctive regions not only based on the local neighborhood but with a larger global context. Self-attention is thus introduced to model the long-range dependencies. And cross attention module exploits the intra-relationship within the source and target point clouds, which models the potential overlap regions. Here, we detail the individual parts hereafter.

Positional Encoding. Positional encoding scheme which assigns intrinsic geometric properties to the per-point feature by adding unique positional information enhances distinctions among point features in indistinctive regions. Given two superpoints and of , we first select the nearest neighbors of and compute the centroid of . For each , we denote the angle between two vectors and is . The position encoding of is defined as follows:

| (15) |

where and are two MLPs, and each MLP consists of a linear layer and one ReLU nonlinearity function.

Self-Attention and Cross-Attention. Let be the intermediate representation for at layer and let . We use a multi-attention layer consisting of four parallel attention head to update the via

| (16) | ||||

Here, if represents self-attention, and if indicates cross-attention. denotes concatenation, and is a three-layer fully connected network consisting of a linear layer, instance normalization, and a LeakyReLU activation. The same attention module is also simultaneously performed for all points in point cloud . A fixed number of layers with different parameters are chained and alternatively aggregate along the self- and cross-attention. As such, start from if is even and if is odd. The final outputs of attention module are for and for . By doing this, each point can incorporate non-local information that intuitively strengthens their long-range correlation dependencies. The latent features have the knowledge of and vice versa.

Overlap Score Prediction. To deal with those points in non-overlapping regions, we separately predict the overlap scores and using the conditioned features and . Here and can be computed using a single fully layer followed by a sigmoid activation function.

| (17) | ||||

The overlap scores are used to mask out the influence of points outside the overlap region.

4.5.3 Coarse-Level Correspondence Prediction

, , and are used to calculate an assignment matrix , at superpoint level, by solving a coupled optimal transport problem

| (18) | ||||

where , and are the distance matrices with elements satisfying and , respectively. is a hyperparameter. Eq. (18) is an instance of the optimal transport [48] problem, which can be solved efficiently using Sinkhorn-Knopp algorithm [48]. After reaching , we select correspondences with maximum confidence score of in each row and column, and further enforce the mutual nearest neighbor (MNN) criterion, which filters possible outlier coarse matches. The coarse-level correspondences are defined as follows:

| (19) |

4.5.4 Decoder

Our decoder starts with conditioned features , concatenates them with the overlap score , and outputs the per-point feature descriptor and refined per-point overlap scores . The decoder architecture combines NN-upsampling with linear layers and includes skip connections from the corresponding encoder layers. The same operator is applied to generate and .

4.5.5 Fine-Level Prediction

On the finer stage, we refine coarse correspondences to point-level correspondences. Those refined matches are then utilized for point cloud registration.

Point-Level Correspondence Prediction. We first group the points into clusters by assigning points to their nearest superpoints in geometry space. After grouping, points with their associated overlap scores and descriptors form patches, on which we can extract point correspondences. For a superpoint , its associated point set , feature set , and the overlap score set are denoted as

| (20) |

The coarse-level correspondence set is expanded to its corresponding patch correspondence sets, both in geometry space , feature space , and overlap scores . For computational efficiency, every patch samples number of points based on the overlap scores. Given a pair of overlapped patches and , we first calculate the cross distance matrix , and structural matrices and with elements satisfying

where is a hyperparameter. Extracting point correspondences is analogous to matching two smaller-scale point clouds by solving a coupled optimal transport problem to calculate a matrix as

For correspondences, we choose the maximum confidence score of in every row and column to guarantee a higher precision. The final point correspondence set is represented as the union of all the correspondence sets obtained. After obtaining the correspondences , following [52, 53], a variant of RANSAC [47] that is specialized to 3D correspondence-based registration [54] is utilized to estimate the transformation.

4.6 Loss Function and Training

Our model is an end-to-end learning framework, using the ground truth correspondences as supervision. The loss function is composed of an coarse-level loss for superpoint matching, a point matching loss for point matching, a binary classification loss for coarse-level overlap scores, and a classification loss for fine-level overlap scores.

4.6.1 Coarse-Level Loss

Superpoint Matching Loss. Existing methods [53, 16] usually formulate superpoint matching as a multilabel classification problem and adopt a cross-entropy loss with optimal transport. Doing this requires unfolding the Sinkhorn layer to compute gradients in the training stage. To address this issue, we adopt a circle loss [55] to optimize the superpoint-wise feature descriptors. As there is not direct supervision for superpoint matching, we leverage the overlap ratio of points in that have correspondences in to depict the matching probability between superpoints and . is defined as:

where is the ground-truth transformation and is a set threshold. For circle loss, a pair of superpoints are positive if their corresponded patches share at least overlap, and negative if they do not overlap. All other pairs are omitted. We select the superpoints in which have at least one positive superpoint in to form a set of anchor superpoints, . For each anchor , we denote the set of its positive superpoints in as , and the set of its negative patches as . The superpoint matching loss (circle loss) on is then defined as:

| (21) | ||||

where is the distance in the feature space. The weights and are determined individually for each positive and negative example, using the empirical margins and with a learned scale factor . The circle loss reweights the loss values on based on the overlap ratio so that the patch pairs with higher overlap are given more importance. The same goes for the loss on . The overall superpoint matching loss is

| (22) |

Coarse-Level Overlap Loss. We use the ratio of points in that are visible in to depict the ground-truth overlap scores of the superpoint . It is calculated by

| (23) |

with overlap threshold. If is close to 1, tends to locate in the overlap regions. is calculated in the same way. The predicted overlap scores for are thus supervised using the binary cross entropy loss, i.e.,

| (24) |

The loss for is calculated in the same way. The loss for coarse-level overlap scores is

4.6.2 Fine-Level Loss

Point Matching Loss. We apply circle loss again to supervise the point matching. Consider a pair of matched superpoints and with associated patches and , we first extract a set of anchor points satisfying that each has at least one (possibly multiple) correspondence in , i.e.,

For each anchor , we denote the set of its positive points in as . All points of outside a (larger) radios form the set of its negative patches as . The fine-level matching loss on is calculated as:

| (25) | ||||

where is the distance in the feature space. The weights and are determined individually for each positive and negative example with a learned scale factor . and . The same goes for the loss on . The overall superpoint matching loss writes as

| (26) |

Fine-Level Overlap Loss. The overlap score loss is with

The ground-truth label of the point is defined as

| (27) |

where is calculated in the same way.

4.6.3 Implementation Details

The proposed method is implemented in PyTorch and can be trained on a single Quadro GV100 GPU (32G) and two Intel(R) Xeon(R) Gold 6226 CPUs. The hyperparameters are set as follows: , , , , and . We train our model 120 epochs with a batch size of 1 in all experiments using the ADAM optimizer with an initial learning rate of and an exponentially decaying factor of 0.99. We adopt the same encoder and decoder architectures used in [53]. For training the network, we sample 128 coarse correspondences, with truncated patch size on 3DMatch (3DLoMatch). On KITTI, the numbers are 128 and 32, respectively.

5 Experiments

We conduct extensive experiments to evaluate the performance of our method on indoor 3DMatch [18] and 3DLoMatch [15] benchmarks, outdoor KITTI [56] benchmark, and cross-source 3DCSR [57] benchmark.

Baselines. COTReg was compared with the recent state-of-the-art methods: FCGF [20], D3Feat [58], SpinNet [33], Predator [15], YOHO [59], CoFiNet [53], and GeoTransformer[52].

5.1 Evaluation on 3DMatch and 3DLoMatch.

Datasets. 3DMatch [18] and 3DLoMatch [15] are two widely used indoor datasets that contain more than and partial overlapping scene pairs, respectively. 3DMatch contains 62 scenes, from which we use 46 scenes for training, 8 scenes for validation, and 8 scenes for testing, respectively. The test set contains 1,623 partially overlapped point cloud fragments and their corresponding transformation matrices. We use training data preprocessed by [15] and evaluate on both the 3DMatch and 3DLoMatch [15] protocols. We first voxel downsample the point clouds with a voxel size, then extract different feature descriptors. Following [15], we set , , and , respectively.

Metrics. Following Predator[15] and CoFiNet [53], we evaluate performance with three metrics: (1) Inlier Ratio (IR), the fraction of putative correspondences whose residuals are below a certain threshold (i.e., 0.1m) under the ground-truth transformation, (2) Feature Matching Recall (FMR), the fraction of point cloud pairs whose inlier ratio is above a certain threshold (i.e., 5%), and (3) Registration Recall (RR), the fraction of point cloud pairs whose transformation error is smaller than a certain threshold (i.e., ).

| 3DMatch | 3DLoMatch | |||||||||

| # Samples | 5000 | 2500 | 1000 | 500 | 250 | 5000 | 2500 | 1000 | 500 | 250 |

| Method | Inlier Ratio | |||||||||

| FCGF[20] | 56.8 | 54.1 | 48.7 | 42.5 | 34.1 | 21.4 | 20.0 | 17.2 | 14.8 | 11.6 |

| D3Feat[58] | 39.0 | 38.8 | 40.4 | 41.5 | 41.8 | 13.2 | 13.1 | 14.0 | 14.6 | 15.0 |

| SpinNet [33] | 47.5 | 44.7 | 39.4 | 33.9 | 27.6 | 20.5 | 19.0 | 16.3 | 13.8 | 11.1 |

| Predator [15] | 58.0 | 58.4 | 57.1 | 54.1 | 49.3 | 26.7 | 28.1 | 28.3 | 27.5 | 25.8 |

| CoFiNet[53] | 49.8 | 51.2 | 51.9 | 52.2 | 52.2 | 24.4 | 25.9 | 26.7 | 26.8 | 26.9 |

| YOHO [59] | 64.4 | 60.7 | 55.7 | 46.4 | 41.2 | 25.9 | 23.3 | 22.6 | 18.2 | 15.0 |

| GeoTransformer[52] | 71.9 | 75.2 | 76.0 | 82.2 | 85.1 | 43.5 | 45.3 | 46.2 | 52.9 | 57.7 |

| COTReg (Ours) | 85.4 | 85.7 | 86.1 | 86.4 | 86.9 | 54.2 | 55.1 | 56.3 | 57.7 | 59.3 |

| Feature Matching Recall | ||||||||||

| FCGF[20] | 97.4 | 97.3 | 97.0 | 96.7 | 96.6 | 76.6 | 75.4 | 74.2 | 71.7 | 67.3 |

| D3Feat [58] | 95.6 | 95.4 | 94.5 | 94.1 | 93.1 | 67.3 | 66.7 | 67.0 | 66.7 | 66.5 |

| SpinNet [33] | 97.6 | 97.2 | 96.8 | 95.5 | 94.3 | 75.3 | 74.9 | 72.5 | 70.0 | 63.6 |

| Predator[15] | 96.6 | 96.6 | 96.5 | 96.3 | 96.5 | 78.6 | 77.4 | 76.3 | 75.7 | 75.3 |

| CoFiNet[53] | 98.1 | 98.3 | 98.1 | 98.2 | 98.3 | 83.1 | 83.5 | 83.3 | 83.1 | 82.6 |

| YOHO[59] | 98.2 | 97.6 | 97.5 | 97.7 | 96.0 | 79.4 | 78.1 | 76.3 | 73.8 | 69.1 |

| GeoTransformer[52] | 97.9 | 97.9 | 97.9 | 97.9 | 97.6 | 88.3 | 88.6 | 88.8 | 88.6 | 88.3 |

| COTReg (Ours) | 98.5 | 98.6 | 98.5 | 98.6 | 98.6 | 89.5 | 89.7 | 89.7 | 89.6 | 89.4 |

| Registration Recall | ||||||||||

| FCGF[20] | 85.1 | 84.7 | 83.3 | 81.6 | 71.4 | 40.1 | 41.7 | 38.2 | 35.4 | 26.8 |

| D3Feat[58] | 81.6 | 84.5 | 83.4 | 82.4 | 77.9 | 37.2 | 42.7 | 46.9 | 43.8 | 39.1 |

| SpinNet[33] | 88.6 | 86.6 | 85.5 | 83.5 | 70.2 | 59.8 | 54.9 | 48.3 | 39.8 | 26.8 |

| Predator[15] | 89.0 | 89.9 | 90.6 | 88.5 | 86.6 | 59.8 | 61.2 | 62.4 | 60.8 | 58.1 |

| CoFiNet[53] | 89.3 | 88.9 | 88.4 | 87.4 | 87.0 | 67.5 | 66.2 | 64.2 | 63.1 | 61.0 |

| YOHO [59] | 90.8 | 90.3 | 89.1 | 88.6 | 84.5 | 65.2 | 65.5 | 63.2 | 56.5 | 48.0 |

| GeoTransformer[52] | 92.0 | 91.8 | 91.8 | 91.4 | 91.2 | 75.0 | 74.8 | 74.2 | 74.1 | 73.5 |

| COTReg (Ours) | 93.1 | 92.8 | 92.9 | 92.5 | 92.4 | 80.9 | 80.4 | 79.7 | 76.0 | 74.6 |

5.1.1 Inlier Ratio and Feature Matching Recall

As the main contribution of COTReg is that we jointly adopt the pointwise and structural matchings to estimate the more correct correspondences, we first check the Inlier Ratio of COTReg, which is directly related to the quality of extracted correspondences. Following [15, 53, 52], we report the results with different numbers of correspondences. As shown in Table I (Top), on Inlier Ratio, COTReg outperforms all the previous methods on both benchmarks, showing an outstanding accuracy improvement. In particular, our COTReg surpasses GeoTransformer, the second best baseline, consistently by on 3DMatch and 3DLoMatch when the sample number ranges from 250 to 5000, respectively. Furthermore, the fact that sampling fewer correspondences leads to a higher Inlier Ratio indicates that our learned scores are well-calibrated, i.e., higher confidence scores indicate more reliable correspondences. For Feature Matching Recall, in Table I (Middle), our method obtains the best results. Especially on 3DLoMatch, which is more challenging due to low overlap scenarios, our proposed method achieves improvements of at least 0.9%, demonstrating its effectiveness in low overlap cases. The main difference between our method and other baselines is the correspondence prediction strategy, which jointly considers point-wise and structural matchings equipped with overlap scores. On the contrary, these baseline methods only consider pointwise matching based on feature similarity.

5.1.2 Registration Recall

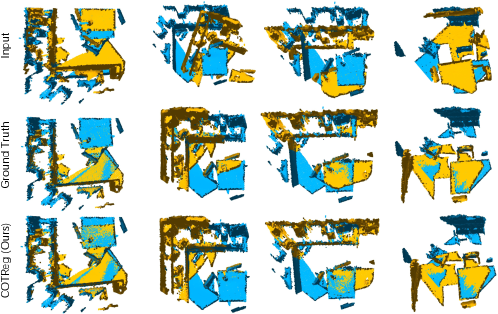

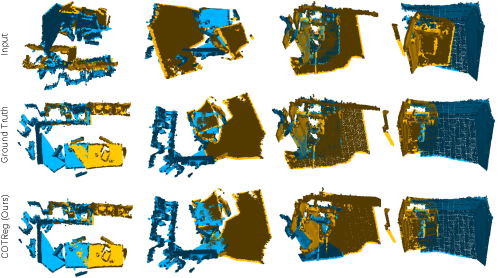

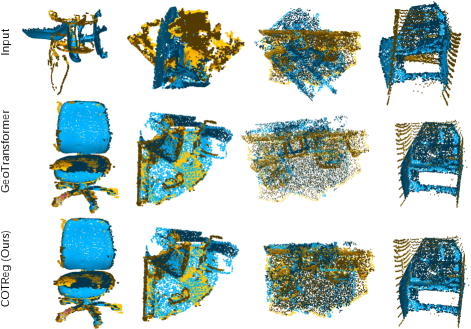

Registration Recall reflects the final performance on point cloud registration. To evaluate the registration performance, we compare the RR obtained by RANSAC in Table I (bottom), and our method outperforms all the other models with various number of sampling points on both two datasets. Specifically, COTReg achieved and Registration Recall, exceeding the previous best, GeoTransformer,( RR on 3DMatch) by and ( RR on 3DLoMatch) by , showing its efficacy in both high- and low-overlap scenarios. It demonstrates that incorporating both pointwise and structural matchings with overlap scores into the correspondence prediction process can alleviate the ambiguity issue and thus obtains better performance than the counterparts that only consider pointwise matching. Figures 4 and 5 show visual comparison examples on 3DMatch and 3DLoMatch, respectively. We can easily see that our method can achieve better results in challenging indoor scenes with a low overlap ratio.

We also compare the registration results weighted SVD over correspondences in Table II. Some baselines either fail to achieve reasonable results or suffer from severe performance degradation. In contrast, COTReg with weighted SVD achieves the registration recall of 87.2% on 3DMatch and 60.7% on 3DLoMatch. Without outlier filtering by RANSAC, high inlier ratio is necessary for successful registration. However, a high inlier ratio does not necessarily lead to a high registration recall, since the correspondences could cluster together as noted in [15].

We further count the average inference time of COTReg and compare it with that of the baselines. Notably, all methods consist of two stages that first extract dense features or the correspondences and then recovers the transformation using RANSAC or SVD. We report inference times of the two stages, respectively. Although in the correspondence prediction stage, our method is slightly slower than some baselines, it performs well by extracting reliable correspondences.

| RR | Time (s) | ||||||

| Method | Estimator | Samples | 3DM | 3DLM | Model | Pose | Total |

| FCGF[20] | RANSAC-50k | 5000 | 85.1 | 40.1 | 0.052 | 3.326 | 3.378 |

| D3Feat[58] | RANSAC-50k | 5000 | 81.6 | 37.2 | 0.024 | 3.088 | 3.112 |

| SpinNet [33] | RANSAC-50k | 5000 | 88.6 | 59.8 | 60.248 | 0.388 | 60.636 |

| Predator [15] | RANSAC-50k | 5000 | 89.0 | 59.8 | 0.032 | 5.120 | 5.152 |

| CoFiNet[53] | RANSAC-50k | 5000 | 89.3 | 67.5 | 0.115 | 1.807 | 1.922 |

| GeoTrans[52] | RANSAC-50k | 5000 | 92.0 | 75.0 | 0.075 | 1.558 | 1.633 |

| COTReg (Ours) | RANSAC-50k | 5000 | 93.1 | 80.9 | 0.652 | 1.463 | 2.115 |

| FCGF[20] | weighted SVD | 250 | 42.1 | 3.9 | 0.052 | 0.008 | 0.056 |

| D3Feat[58] | weighted SVD | 250 | 37.4 | 2.8 | 0.024 | 0.008 | 0.032 |

| SpinNet [33] | weighted SVD | 250 | 34.0 | 2.5 | 60.248 | 0.006 | 60.254 |

| Predator [15] | weighted SVD | 250 | 50.0 | 6.4 | 0.032 | 0.009 | 0.041 |

| CoFiNet [53] | weighted SVD | 250 | 64.6 | 21.6 | 0.115 | 0.003 | 0.118 |

| GeoTrans[52] | weighted SVD | 250 | 86.5 | 59.9 | 0.075 | 0.003 | 0.078 |

| COTReg (Ours) | weighted SVD | 250 | 87.2 | 60.7 | 0.652 | 0.002 | 0.654 |

5.2 Evaluation on KITTI

5.2.1 Datasets

KITTI contains 11 sequences of LiDAR scanned outdoor driving scenarios. For fair comparisons, we follow the same data splitting as [20, 11] and use sequences 0-5 for training, 6-7 for validation, and 8-10 for testing, respectively. Following [11], we further refine the ground truth poses provided using ICP and only use point cloud pairs that are at most away from each other for evaluation. Following [15], we use a voxel size for downsampling point clouds and set thresholds to , to , and to , respectively.

5.2.2 Metrics

Following Predator [15] and CoFiNet [53], we use three metrics, Registration Recall (), Rotation Error (), and Translation Error (), to evaluate the performance of the proposed registration algorithm. is the percentage of successful alignment whose rotation error and translation error are below set thresholds (i.e., RRE and RTE ). RRE and RTE are defined as , respectively. and denote the ground-truth rotation matrix and the translation vector, respectively.

5.2.3 Registration results

We compare to the state-of-the-art RANSAC-based methods: FCGF [20], D3Feat [58], SpinNet [33], Predator [15], CoFiNet [53], and GeoTransformer [52]. As shown in Table III, our model still achieves the best performance in terms of registration recall as well as the lowest average and . The results verify the effectiveness of considering both pointwise and structural matchings to produce correspondences.

| Method | Estimator | RTE (cm) | RRE | RR(%) |

| FCGF [20] | RANSAC | 9.5 | 0.30 | 96.6 |

| D3Feat [58] | RANSAC | 7.2 | 0.30 | 99.8 |

| SpinNet [33] | RANSAC | 9.9 | 0.47 | 99.1 |

| Predator [15] | RANSAC | 6.8 | 0.27 | 99.8 |

| CoFiNet [53] | RANSAC | 8.5 | 0.41 | 99.8 |

| GeoTrans [52] | RANSAC | 7.4 | 0.27 | 99.8 |

| COTReg (Ours) | RANSAC | 4.9 | 0.22 | 99.8 |

5.3 Generalization on Cross-source Dataset

The generalization ability of learning-based registration algorithms is highly required when the point cloud is acquired from different sensors. To validate the generalizability of our model, we experiment on our own Cross Source Dataset (3DCSR) [57]. 3DCSR is a challenging dataset for registration due to a mixture of noise, outliers, density difference, partial overlap, and scale variation.

5.3.1 3DCSR

This dataset contains two folders: Kinect Lidar and Kinect SFM. Kinect lidar contains 19 scenes from both the Kinect and Lidar sensors, where each scene is cropped into different parts. Kinect SFM consists of 2 scenes from both Kinect and RGB sensors. The RGB images have already been constructed into a point cloud by using the software VSFM. We use the model trained on 3DMatch since the cross-source dataset is captured in an indoor environment. is the percentage of successful alignment whose rotation error and translation error are below set thresholds (i.e., RRE and RTE ).

| Method | Estimator | RRE | RTE (cm) | RR(%) |

| FCGF [20] | RANSAC | 7.47 | 0.21 | 49.6 |

| D3Feat [58] | RANSAC | 6.41 | 0.26 | 52.0 |

| SpinNet [33] | RANSAC | 6.56 | 0.24 | 53.5 |

| Predator [15] | RANSAC | 6.26 | 0.27 | 54.6 |

| CoFiNet [53] | RANSAC | 5.76 | 0.26 | 57.3 |

| GeoTrans [52] | RANSAC | 5.60 | 0.24 | 60.2 |

| COTReg (Ours) | RANSAC | 5.49 | 0.21 | 63.4 |

5.3.2 Registration Results

We use FCGF [20], D3Feat [58], SpinNet [33], Predator [15], CoFiNet [53], and GeoTransformer [52], as the baselines. Table IV shows that our method obtains the highest accuracies in generalizing the registration ability to real-world cross-source dataset. Specifically, it outperforms the second-best, GeoTransformer, by more than 3.2% in terms of registration recall (63.4% vs 60.2%). However, the recall is not high enough, showing that registration challenges on 3DCSR remain.

5.4 Ablation Study

To fully understand COTReg, we conduct an ablation study on 3DMatch and 3DLoMatch to investigate the contribution of each part. First, we replace the overlap scores with a uniform distribution, i.e., treating the points in overlap and non-overlap regions equally, to evaluate the effectiveness of overlap scores. As shown in Table V, on 3DMatch, the learned overlap scores improve the performance by nearly 2.0% (92.9% vs. 90.9%) RR, 0.7% (98.5% vs. 97.8%) FMR, and 7.8% (86.1% vs. 68.3%) IR, respectively. Structure matching can boost RR by 1.1% (92.9% vs. 91.8%), FMR by 0.5% (98.5% vs. 98.0%) and IR by 10.2% (86.1% vs. 75.9%), respectively. It also indicates that COTReg benefits from the overlap scores and structure matching. Table V also shows that the positional encoding can improve the performance in terms of RR, FMR and IR. On 3DLoMatch, the same results can be concluded.

| 3DMatch | 3DLoMatch | ||||||||

| PE | OS | PM | SM | RR | FMR | IR | RR | FMR | IR |

| 92.9 | 98.5 | 86.1 | 79.7 | 89.7 | 55.1 | ||||

| 91.8 | 98.0 | 75.9 | 74.6 | 88.9 | 46.4 | ||||

| 90.9 | 97.8 | 68.3 | 67.2 | 85.6 | 35.4 | ||||

| 90.2 | 97.6 | 63.4 | 66.1 | 84.7 | 33.5 | ||||

| 89.6 | 97.6 | 62.9 | 65.0 | 84.3 | 32.1 | ||||

| 88.9 | 97.5 | 59.8 | 64.8 | 84.0 | 30.8 | ||||

6 Conclusion

We propose a method to improve the accuracy of putative correspondences of point cloud registration. Specifically, we design a coupled optimal transport-based approach to generate correspondences by jointly considering overlap scores, pointwise and structural matchings. Comprehensive experiments show that our approach achieves a new state-of-the-art. We believe our techniques have the potential in the applications that require more accurate of 3D point cloud registration.

References

- [1] Y. Wang and J. M. Solomon, “Prnet: Self-supervised learning for partial-to-partial registration,” in NeurIPS, 2019.

- [2] C. Wang and X. Guo, “Feature-based rgb-d camera pose optimization for real-time 3d reconstruction,” Computational Visual Media, vol. 3, no. 2, pp. 95–106, 2017.

- [3] D. Borrmann, A. Nuechter, and T. Wiemann, “Large-scale 3d point cloud processing for mixed and augmented reality,” in ISMAR-Adjunct, 2018.

- [4] S. Chen, B. Liu, C. Feng, C. Vallespi-Gonzalez, and C. Wellington, “3d point cloud processing and learning for autonomous driving: Impacting map creation, localization, and perception,” IEEE Signal Processing Magazine, vol. 38, no. 1, pp. 68–86, 2020.

- [5] B. Nagy and C. Benedek, “Real-time point cloud alignment for vehicle localization in a high resolution 3d map,” in ECCV Workshops, 2018.

- [6] R. Wang, Y. Xu, M. A. Sotelo, Y. Ma, T. Sarkodie-Gyan, Z. Li, and W. Li, “A robust registration method for autonomous driving pose estimation in urban dynamic environment using lidar,” Electronics, vol. 8, no. 1, p. 43, 2019.

- [7] Z. Ma et al., “Point reg net: Invariant features for point cloud registration using in image-guided radiation therapy,” Journal of Computer and Communications, vol. 6, no. 11, p. 116, 2018.

- [8] W. Li, Z. Jiang, K. Chu, J. Jin, Y. Ge, and J. Cai, “A noninvasive method to reduce radiotherapy positioning error caused by respiration for patients with abdominal or pelvic cancers,” Technology in Cancer Research & Treatment, vol. 18, 2019.

- [9] R. P. Saputra and P. Kormushev, “Casualty detection from 3d point cloud data for autonomous ground mobile rescue robots,” in SSRR. IEEE, 2018, pp. 1–7.

- [10] F. Pomerleau, F. Colas, and R. Siegwart, “A review of point cloud registration algorithms for mobile robotics,” 2015.

- [11] C. Choy, W. Dong, and V. Koltun, “Deep global registration,” in CVPR, 2020, pp. 2514–2523.

- [12] X. Bai, Z. Luo, L. Zhou, H. Chen, L. Li, Z. Hu, H. Fu, and C.-L. Tai, “Pointdsc: Robust point cloud registration using deep spatial consistency,” in CVPR, 2021, pp. 15 859–15 869.

- [13] Z. J. Yew and G. H. Lee, “3dfeat-net: Weakly supervised local 3d features for point cloud registration,” in ECCV, 2018, pp. 607–623.

- [14] Z. Gojcic, C. Zhou, J. D. Wegner, and A. Wieser, “The perfect match: 3d point cloud matching with smoothed densities,” in CVPR, 2019, pp. 5545–5554.

- [15] S. Huang, Z. Gojcic, M. Usvyatsov, A. Wieser, and K. Schindler, “Predator: Registration of 3d point clouds with low overlap,” in CVPR, 2021, pp. 4267–4276.

- [16] K. Fu, S. Liu, X. Luo, and M. Wang, “Robust point cloud registration framework based on deep graph matching,” in CVPR, 2021, pp. 8893–8902.

- [17] G. D. Pais, S. Ramalingam, V. M. Govindu, J. C. Nascimento, R. Chellappa, and P. Miraldo, “3dregnet: A deep neural network for 3d point registration,” in CVPR, 2020, pp. 7193–7203.

- [18] A. Zeng, S. Song, M. Nießner, M. Fisher, J. Xiao, and T. Funkhouser, “3dmatch: Learning local geometric descriptors from rgb-d reconstructions,” in CVPR, 2017, pp. 1802–1811.

- [19] J. Li, P. Zhao, Q. Hu, and M. Ai, “Robust point cloud registration based on topological and cauchy weighted lq-norm,” ISPRS Journal of Photogrammetry and Remote Sensing, vol. 160, pp. 244–259, 2020.

- [20] C. Choy, J. Park, and V. Koltun, “Fully convolutional geometric features,” in ICCV, 2019, pp. 8958–8966.

- [21] G. Peyré, M. Cuturi et al., “Computational optimal transport: With applications to data science,” Foundations and Trends® in Machine Learning, vol. 11, no. 5-6, pp. 355–607, 2019.

- [22] M. Fey, J. E. Lenssen, C. Morris, J. Masci, and N. M. Kriege, “Deep graph matching consensus,” in ICLR, 2020.

- [23] A. Zanfir and C. Sminchisescu, “Deep learning of graph matching,” in CVPR, 2018, pp. 2684–2693.

- [24] P. J. Besl and N. D. McKay, “Method for registration of 3-d shapes,” in Sensor fusion IV: control paradigms and data structures, vol. 1611. International Society for Optics and Photonics, 1992, pp. 586–606.

- [25] Y. Wang and M. Solomon, J, “Deep closest point: Learning representations for point cloud registration,” in ICCV, 2019, pp. 3523–3532.

- [26] X. Huang, J. Zhang, Q. Wu, L. Fan, and C. Yuan, “A coarse-to-fine algorithm for matching and registration in 3d cross-source point clouds,” TCSVT, vol. 28, no. 10, pp. 2965–2977, 2017.

- [27] A. W. Fitzgibbon, “Robust registration of 2d and 3d point sets,” Image and vision computing, vol. 21, no. 13-14, pp. 1145–1153, 2003.

- [28] J. Yang, H. Li, D. Campbell, and Y. Jia, “Go-icp: A globally optimal solution to 3d icp point-set registration,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 38, no. 11, pp. 2241–2254, 2015.

- [29] J. Li, Q. Hu, and M. Ai, “Point cloud registration based on one-point ransac and scale-annealing biweight estimation,” TGRS, 2021.

- [30] Q.-Y. Zhou, J. Park, and V. Koltun, “Fast global registration,” in ECCV. Springer, 2016, pp. 766–782.

- [31] H. Yang, J. Shi, and L. Carlone, “Teaser: Fast and certifiable point cloud registration,” IEEE Transactions on Robotics, 2020.

- [32] Z. Zhang, Y. Dai, and J. Sun, “Deep learning based point cloud registration: an overview,” Virtual Reality & Intelligent Hardware, vol. 2, no. 3, pp. 222–246, 2020.

- [33] S. Ao, Q. Hu, B. Yang, A. Markham, and Y. Guo, “Spinnet: Learning a general surface descriptor for 3d point cloud registration,” in CVPR, 2021, pp. 11 753–11 762.

- [34] Y. Aoki, H. Goforth, R. A. Srivatsan, and S. Lucey, “Pointnetlk: Robust & efficient point cloud registration using pointnet,” in CVPR, 2019, pp. 7163–7172.

- [35] X. Huang, G. Mei, and J. Zhang, “Feature-metric registration: A fast semi-supervised approach for robust point cloud registration without correspondences,” in CVPR, 2020, pp. 11 366–11 374.

- [36] Y. Wang, Y. Sun, Z. Liu, S. E. Sarma, M. M. Bronstein, and J. M. Solomon, “Dynamic graph cnn for learning on point clouds,” ACM TOG, vol. 38, no. 5, pp. 1–12, 2019.

- [37] Z. J. Yew and G. H. Lee, “Rpm-net: Robust point matching using learned features,” in CVPR, 2020, pp. 11 824–11 833.

- [38] Z. Dang, F. Wang, and M. Salzmann, “Learning 3d-3d correspondences for one-shot partial-to-partial registration,” arXiv preprint arXiv:2006.04523, 2020.

- [39] J. Li, C. Zhang, Z. Xu, H. Zhou, and C. Zhang, “Iterative distance-aware similarity matrix convolution with mutual-supervised point elimination for efficient point cloud registration,” in ECCV, 2019.

- [40] M. El Banani, L. Gao, and J. Johnson, “Unsupervisedr&r: Unsupervised point cloud registration via differentiable rendering,” in CVPR, 2021, pp. 7129–7139.

- [41] L. Ding and C. Feng, “Deepmapping: Unsupervised map estimation from multiple point clouds,” in CVPR, 2019, pp. 8650–8659.

- [42] L. Chapel, M. Z. Alaya, and G. Gasso, “Partial optimal tranport with applications on positive-unlabeled learning,” NeurIPS, vol. 33, pp. 2903–2913, 2020.

- [43] J. Solomon, F. De Goes, G. Peyré, M. Cuturi, A. Butscher, A. Nguyen, T. Du, and L. Guibas, “Convolutional wasserstein distances: Efficient optimal transportation on geometric domains,” ACM TOG, vol. 34, no. 4, pp. 1–11, 2015.

- [44] Z. Su, Y. Wang, R. Shi, W. Zeng, J. Sun, F. Luo, and X. Gu, “Optimal mass transport for shape matching and comparison,” IEEE Trans. Pattern Anal. Mach. Intell, vol. 37, no. 11, pp. 2246–2259, 2015.

- [45] J. Solomon, G. Peyré, V. G. Kim, and S. Sra, “Entropic metric alignment for correspondence problems,” ACM TOG, vol. 35, no. 4, pp. 1–13, 2016.

- [46] H. Xu, D. Luo, H. Zha, and L. C. Duke, “Gromov-wasserstein learning for graph matching and node embedding,” in ICML. PMLR, 2019, pp. 6932–6941.

- [47] M. A. Fischler and R. C. Bolles, “Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography,” Communications of the ACM, vol. 24, no. 6, pp. 381–395, 1981.

- [48] M. Cuturi, “Sinkhorn distances: Lightspeed computation of optimal transport,” NeurIPS, vol. 26, pp. 2292–2300, 2013.

- [49] K. Pham, K. Le, N. Ho, T. Pham, and H. Bui, “On unbalanced optimal transport: An analysis of sinkhorn algorithm,” in ICML. PMLR, 2020, pp. 7673–7682.

- [50] A. Iusem and R. D. Monteiro, “On dual convergence of the generalized proximal point method with bregman distances,” Mathematics of Operations Research, vol. 25, no. 4, pp. 606–624, 2000.

- [51] H. Thomas, C. R. Qi, J.-E. Deschaud, B. Marcotegui, F. Goulette, and L. J. Guibas, “Kpconv: Flexible and deformable convolution for point clouds,” in ICCV, 2019, pp. 6411–6420.

- [52] Z. Qin, H. Yu, C. Wang, Y. Guo, Y. Peng, and K. Xu, “Geometric transformer for fast and robust point cloud registration,” in CVPR, 2022.

- [53] H. Yu, F. Li, M. Saleh, B. Busam, and S. Ilic, “Cofinet: Reliable coarse-to-fine correspondences for robust pointcloud registration,” NeurIPS, vol. 34, 2021.

- [54] Q.-Y. Zhou, J. Park, and V. Koltun, “Open3d: A modern library for 3d data processing,” arXiv preprint arXiv:1801.09847, 2018.

- [55] Y. Sun, C. Cheng, Y. Zhang, C. Zhang, L. Zheng, Z. Wang, and Y. Wei, “Circle loss: A unified perspective of pair similarity optimization,” in CVPR, 2020, pp. 6398–6407.

- [56] A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in CVPR. IEEE, 2012, pp. 3354–3361.

- [57] X. Huang, G. Mei, J. Zhang, and R. Abbas, “A comprehensive survey on point cloud registration,” 2021.

- [58] X. Bai, Z. Luo, L. Zhou, H. Fu, L. Quan, and C.-L. Tai, “D3feat: Joint learning of dense detection and description of 3d local features,” in CVPR, 2020, pp. 6359–6367.

- [59] H. Wang, Y. Liu, Z. Dong, W. Wang, and B. Yang, “You only hypothesize once: Point cloud registration with rotation-equivariant descriptors,” in ICCV, 2021.

- [60] L. Chizat, G. Peyré, B. Schmitzer, and F.-X. Vialard, “Scaling algorithms for unbalanced optimal transport problems,” Mathematics of Computation, vol. 87, no. 314, pp. 2563–2609, 2018.

Appendix A Proof of the Theorem 1

Proof.

We assume the correct matches in correspondence set are free of noise. We denote .

Appendix B Proof of the Theorem 2

Proof.

Denote . The minimum values of is non-negative, so if we can prove that , then is a optimal solution of (10). If and , then and . Meantime, we can get that and . Then we have

If or , thus . ∎

Appendix C Proof of the Theorem 3

Proof.

We denote

| (28) | ||||

And then we have

After algebraic simplification, Eq. (13) can be rewritten as

| (29) | ||||

with initialization . We solve it iteratively with the help of the Sinkhorn-Knopp algorithm [48, 60]. For , we can check that the problem (29) is strongly convex and lower semi-continuous. Meanwhile, and are given non-negative vectors, strong duality and the existence of a minimizer for (29) is thus given by the Fenchel-Legendre dual form, which states that

where , and the function and take the following forms:

| (30) | ||||

Thus, it is proved by denoting . ∎