Cosine-Pruned Medial Axis: A new method for isometric equivariant and noise-free medial axis extraction

Abstract

We present the CPMA, a new method for medial axis pruning with noise robustness and equivariance to isometric transformations. Our method leverages the discrete cosine transform to create smooth versions of a shape . We use the smooth shapes to compute a score function that filters out spurious branches from the medial axis. We extensively compare the CPMA with state-of-the-art pruning methods and highlight our method’s noise robustness and isometric equivariance. We found that our pruning approach achieves competitive results and yields stable medial axes even in scenarios with significant contour perturbations.

keywords:

Medial Axis Pruning , Discrete Cosine Transform , Equivariance , Isometric transformation , Morphological skeleton1 Introduction

Shape analysis arises naturally in computer vision applications where geometric information plays an essential role. The shape of an object is a useful tool in fields such as: non-destructive reconstruction of archaeology and cultural heritage [49, 51]; object classification and retrieval from large collections of images [23, 39]; human action and pose recognition for gaming and entertainment [12, 24]; environment sensing in robot navigation and planning [34, 25]; and industry for automatic visual quality inspection of product defects [37, 5].

We visually perceive shape as the collections of all the features that constitute an object. However, to perform computer-based shape analysis, one must rely on an accurate discrete mathematical representation of an object’s shape. This representation should exhibit the same geometric and topological properties inherent to the shape itself. Accordingly, we can think of shape representation as a way to store the shape’s information in a different format, which benefits speed, compactness, and efficiency.

Many authors have proposed a variety of shape representations such as voxel/pixel grids, point clouds, triangular meshes, medial axes, or signed distance functions [45, 50, 28, 18, 54]. These representations differ greatly in their formulation, and aim to provide a method for extracting descriptive features from objects, while also preserving their appearance and geometric properties [9, 1, 48, 17, 29]. However, these methods also have disadvantages that limit their application. For example, medial axis representations are highly sensitive to contour noise; voxel/pixel grids are inaccurate after isometric transformations; signed distance functions and triangular meshes are memory-consuming representations when high-frequency details of the shape want to be stored.

We focus this study on the medial axis, also called the topological skeleton. The medial axis represents shapes as a collection of one-dimensional curves that define the central axis (or backbone) of an object. It provides dimensionality reduction of the amount of data needed to represent an entire shape while preserving its topological structure. Moreover, the medial axis is a rotation equivariant shape representation because the medial axis of a rotated object should ideally be the rotated medial axis of the same object. The medial axis is also robust to small deformation, such as articulation, because of its graph-like structure. For instance, a human-like shape moving only its arm will not affect all of the points in the medial axis, only the connections between the arm’s nodes.

Despite its advantages, the medial representation is extremely sensitive to noise on the object’s contour [40, 36]. Even small amounts of noise can cause erroneous sections of the skeleton called spurious branches. Consequently, many medial axis extraction algorithms are equipped with a mechanism to avoid or remove these spurious branches. There are two main strategies reported in the state-of-the-art to deal with this problem: prior smoothing of the curve representing the object’s boundary, and pruning the spurious branches after the medial axis’ computation. In the former, the smoothed boundary is obtained by removing small structures along the curve or surface. It is interesting to note that smoothing curves does not always result in a simplified skeleton [53, 6]. Effective pruning techniques focus instead on criteria to evaluate the significance of individual medial axis branches. However, pruning often requires user-defined parameters that depend on the size and complexity of the object [43, 7, 44], making the pruning method domain-dependent. Moreover, some pruning strategies result in a violation of the equivariant property [36, 40]. As a result, medial axis pruning is still an open problem in computer vision, and this problem is in need of noise-robust methods that concurrently preserve the isometric equivariance of the medial axis.

This paper presents a new method for medial axis pruning that employs mechanisms from the two aforementioned branch-removal strategies. Our method works by computing a controlled set of smoothed versions of the original shape via the discrete cosine transform. We combine these smoothed shapes’ medial axis to create a score function that rates points and branches by their degree of importance. We use our score function to prune spurious branches while preserving the medial axis’ ability to reconstruct the original object. Our method is robust to boundary noise and exhibits isometric equivariance.

We benchmark our approach on three datasets of 2D and 3D segmented objects. We use the Kimia216 [42] and the Animal dataset [8] to evaluate 2D medial axis extraction. These two datasets provide a method to assess 2D medial axis extraction in the presence of intra-class variation. We also use the University of Groningen Benchmark [46, 47, 13] to evaluate our approach on 3D objects. Our results show that our approach achieves competitive results on isometric equivariance and noise robustness compared to the state-of-the-art.

The main contributions of this paper are summarized as follows:

-

1.

We define a novel method to compute medial axes that are robust to several degrees of boundary noise without losing the capacity to reconstruct the original object.

-

2.

Our computation pipeline guarantees that the isometric equivariance of the medial axis is preserved.

-

3.

The definition of our score function allows for a medial axis pruning that is efficiently computed in parallel.

2 Related work

Many algorithms and strategies exist to extract the medial axis and simplify it when affected by contour noise. This section briefly reviews the most representative algorithms for medial axis computation and discusses their key advantages and disadvantages.

2.1 The Medial Axis

Blum [11] first introduced the medial axis as an analogy of a fire propagating with uniform velocity on a grass field. The field is assumed to have the form of a given shape. If one “sets fire” at all boundary points, the medial axis is the set of quench points. There are other equivalent definitions of the medial axis. In this work we use a geometric definition as follows:

Definition 1.

Medial Axis. Let be a connected bounded domain in , and two points such that . The medial axis of is defined as all the points where is the center of a maximal ball of radius that is inscribed inside . Formally,

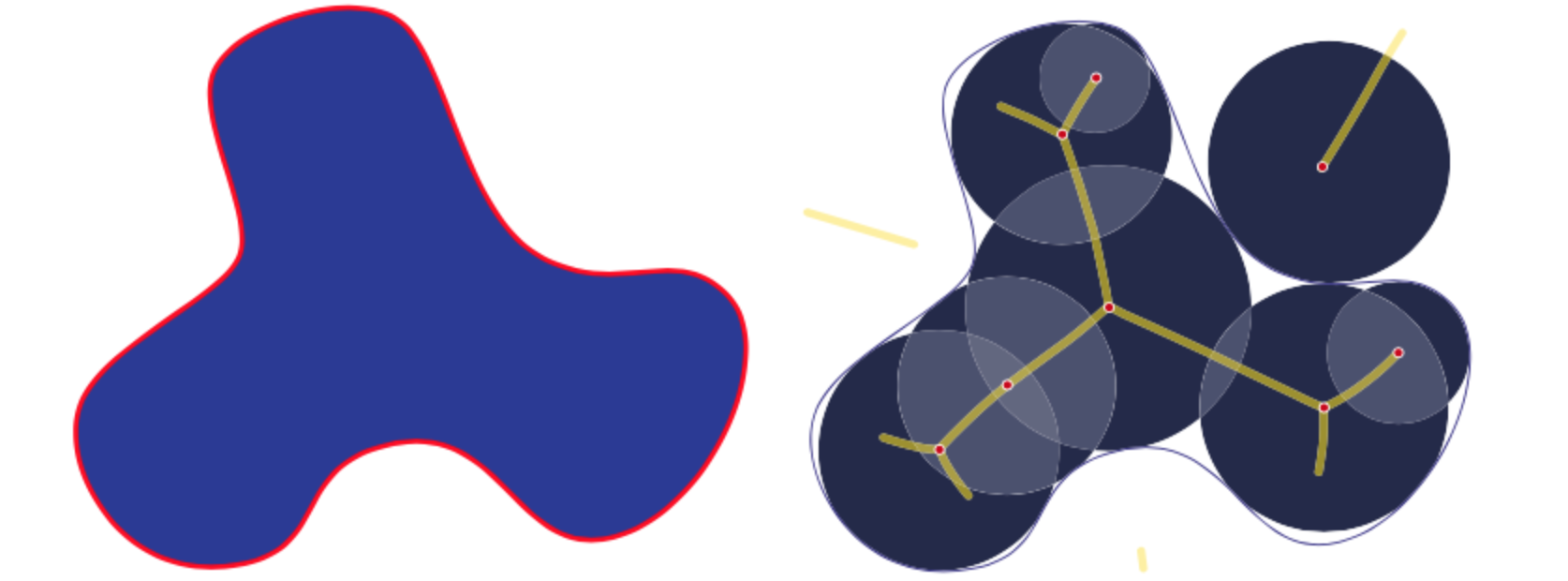

The medial axis, together with the associated radius of the maximally inscribed ball, is called the medial axis transform (). The medial axis transform is a complete shape descriptor, meaning that it can be used to reconstruct the shape of the original domain. In some work, and are also referred to as shape skeletonization. Figure 1 shows an example of a 2D shape and its medial axis as the center of maximal discs. In definition 1 may result in a 2-dimensional medial axis sometimes called the medial surface. We will restrict our examples and analysis to only one-dimensional medial axes.

2.2 Medial Axis Computation

There are three primary mechanisms to compute the : 1) layer by layer morphological erosion also called thinning methods, 2) extraction of the medial axis from the edges of the Voronoi diagram generated by the boundary points, and 3) detection of ridges in distance map of the boundary points. In digital spaces, only an approximation to the “true medial axis” can be extracted.

When using thinning methods [16, 27, 26, 32], points which belong to are deleted from the outer boundary first. Later, the deletion proceeds iteratively inside until it results in a single-pixel wide medial axis. The medial axis by thinning can be approximated in terms of erosion and opening morphological operations [55]. Thinning methods are easy to implement in a discrete setting, but they are not robust to isometric transformations.

The most well-known algorithm for thinning skeletonization is perhaps the Zhang Suen [55] algorithm. However, other approaches have been developed using similar principles [52, 26, 32], mainly focused on parallel computation.

Another way to estimate the medial axis works by computing the Voronoi Diagram (VD) of a polygonal approximation of the object’s contour. The contour is expressed as line segments in 2D or a polygonal mesh in 3D. The seed points for the VD are the vertices of the polygonal representation. The medial axis is then computed as the union of all of the edges of the VD, such that the points , and are not neighbors in the polygonal approximation [33].

Voronoi skeletonization methods preserve the topology of . However, a suitable polygonal approximation of an object is crucial to guarantee the medial axis’ accuracy. Noise in the boundary forms convex areas in the contour, which induce spurious branches on the medial axis. In general, the better the polygonal approximation, the closer the Voronoi skeleton will be to the real medial axis. Nevertheless, this is an expensive process, particularly for large and complex objects [36].

The most common methods to extract the medial axis are those based on the distance transform. Within these methods, the medial axis is computed as the ridges of the distance transform inside the object [3, 40, 2, 22, 14, 35, 41]. This interpretation of the medial axis follows definition 1, because the centers of the maximal balls are located on points along the ridgeline of the distance transform, and the radius of the balls correspond to the distance value at .

When computed in a discrete framework, distance-based approaches suffer from the same isometric robustness limitations as thinning and Voronoi methods [36]. Moreover, noise in the contour produced by a low discretization resolution directly affects the medial axis’ computation by introducing artificial ridges in the distance transform and, consequently, spurious branches.

2.3 Medial Axis Simplification

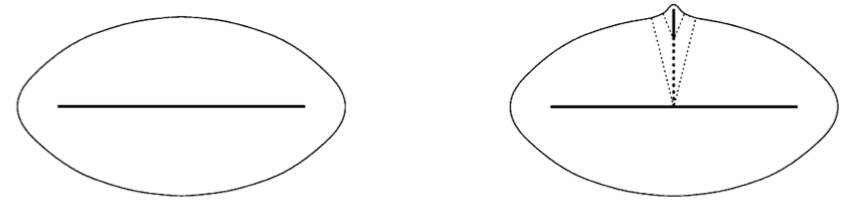

The medial axis’ sensitivity to boundary noise limits its applications to real-life problems [10]. Even negligible boundary noise can cause spurious branches, as shown in Figure 2.

One strategy for removal of spurious branches consists of computing as the medial axis of a smoother version of the shape, [38, 31]. The main disadvantage of this approach is that, in most cases, the resulting medial axis is not a good approximation. Additionally, the smoothing of can potentially change its topology. Miklos et al. [30] introduced a slightly different approach they call Scaled Axis Transform (SAT). The SAT involves scaling the distance transform and computing the medial axis of the original un-scaled shape as the medial axis of the scaled one. However, in [35], the authors show that the SAT is not necessarily a subset of the medial axis of the original shape. Instead, they propose a solution that guarantees a better approximation by including additional constraints on the scaled distance transform.

Another method to overcome the noise sensitivity limitation of the medial axis is spurious branch pruning. Pruning methods are a family of regularization processes incorporated in most medial axis extraction algorithms [43]. Effective pruning techniques focus on different criteria to evaluate the significance of medial axis branches. Thus, the decision is whether to remove the branch (and its points) or not. We can say that pruning methods are adequate if the resulting is noticeably simplified while preserving the topology and its ability to reconstruct the original object. Most pruning methods rely on ad hoc heuristic rules, which are invented and often reinvented in a variety of equivalent application-driven formulations [43]. Some authors apply these rules while computing all medial axis points. Others do so by removing branches that are considered useless after the computation [36, 19, 22].

One of the most popular pruning methods is Couprie et al. [14]. They consider the angle formed by a point , and its two closest boundary points denoted by the set . This solution removes points from for which is lower than a constant threshold. This criterion allows different scales within a shape but generally leads to an unconnected medial axis. Another pruning method found in the state-of-the-art is the work of Hesselink et al. [22]. They introduce the Gamma Integer Medial Axis (GIMA), where a point belongs to the medial axis if the distance between its two closest boundary points is at least equal to .

Many pruning methods rely on the distance transform . The distance transform acts as a generator function for the medial axis, such that points if and only if they satisfy some constraint involving their distance to the boundary. However, other authors have proposed alternative generator functions in their pruning strategies [21, 4].

For example, [21] and [4] introduce what they call Poisson skeletons by approximating as the solution of the Poisson equation with constant source function. Poisson skeletons rely on a solid mathematical formulation. Among other concepts, they use the local minimums and maximums of the curvature of . However, when such methodology is applied in a discrete environment, many spurious branches appear due to the need to define the length of a kernel size to estimate these local extreme points.

3 Method

We propose a new pruning approach to remove spurious branches from the medial axis of a -dimensional closed shape . We call our method the Cosine-Pruned Medial Axis (CPMA). The CPMA works by filtering out points from the Euclidean Medial Axis with a score function . We define the function by aggregating the medial axis of controlled smoothed versions of . Our formulation of must yield high values at points that belong to the real medial axis and low values at points that belong to spurious branches. Additionally, we require to be equivariant to isometric transformations.

3.1 The Cosine-Pruned Medial Axis

Let us represent as a square binary image with a resolution of pixels. We start the computation of the CPMA by estimating a set of smoothed versions of the via the Discrete Cosine Transform (DCT) and its inverse (IDCT):

| (1) | |||

| (2) |

where are coordinates in the frequency domain, and are the spatial coordinates of the Euclidean space where is defined. The values of and are determined by:

The DCT is closely related to the discrete Fourier transform of real valued-functions. However, it has better energy compaction properties with only a few of the transform coefficients representing the majority of the energy. Multidimensional variants of the various DCT types follow directly from the one-dimensional definition. They are simply a separable product along each dimension.

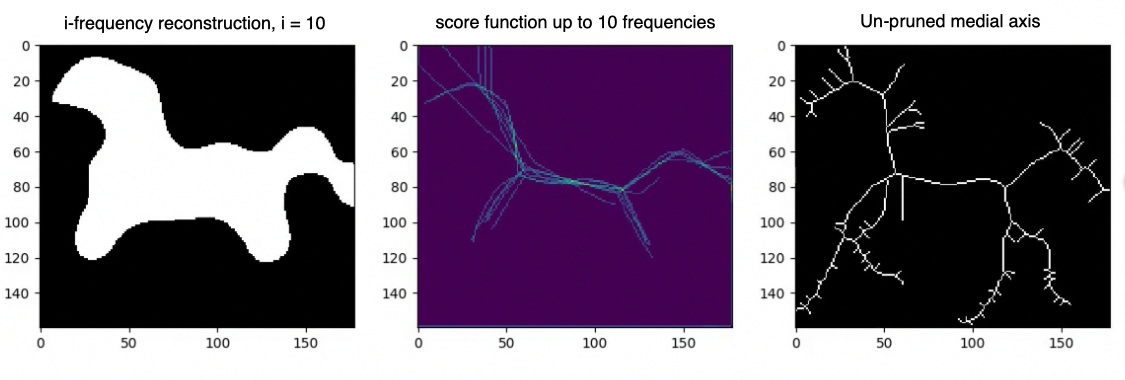

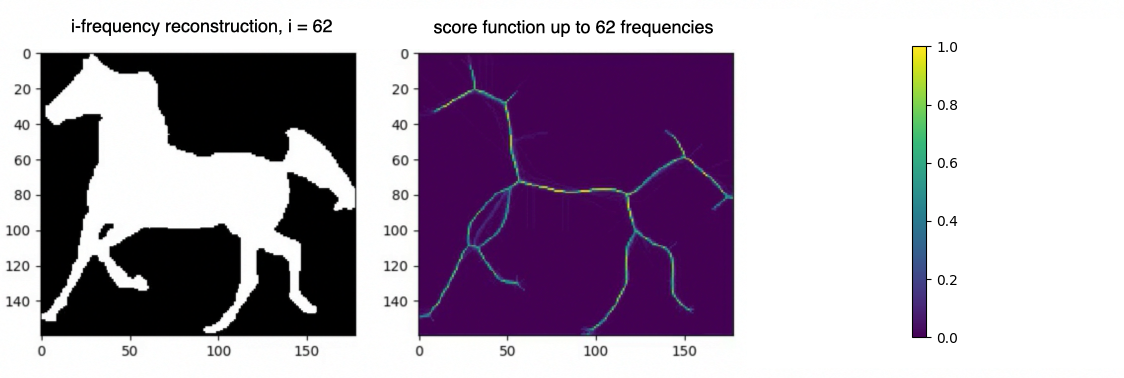

Let us now denote by with the reconstructions of using only the first frequencies as per equation 2. We seek to obtain a acting as a sort of probability indicating how likely it is for a point to be in the medial axis of . Points on relevant branches will appear regularly in the smoothed shapes’ medial axis, resulting in high score function values. In contrast, spurious branches will only appear occasionally, resulting in low values.

Definition 2.

Score function. Let be a square binary image such that . Let also be the -frequency reconstruction of via the IDCT. We define as the per pixel average over a set of estimations of the on the smoothed shapes .

| (3) |

The score function is defined for all in the domain of . Notice that we represent as another binary image where only when belongs to the medial axis. Finally, with , we have all the elements to present our definition of the CPMA.

Definition 3.

Cosine-Pruned Medial Axis Given a binary image representing a shape , the consist of all the pairs such that is greater than a threshold :

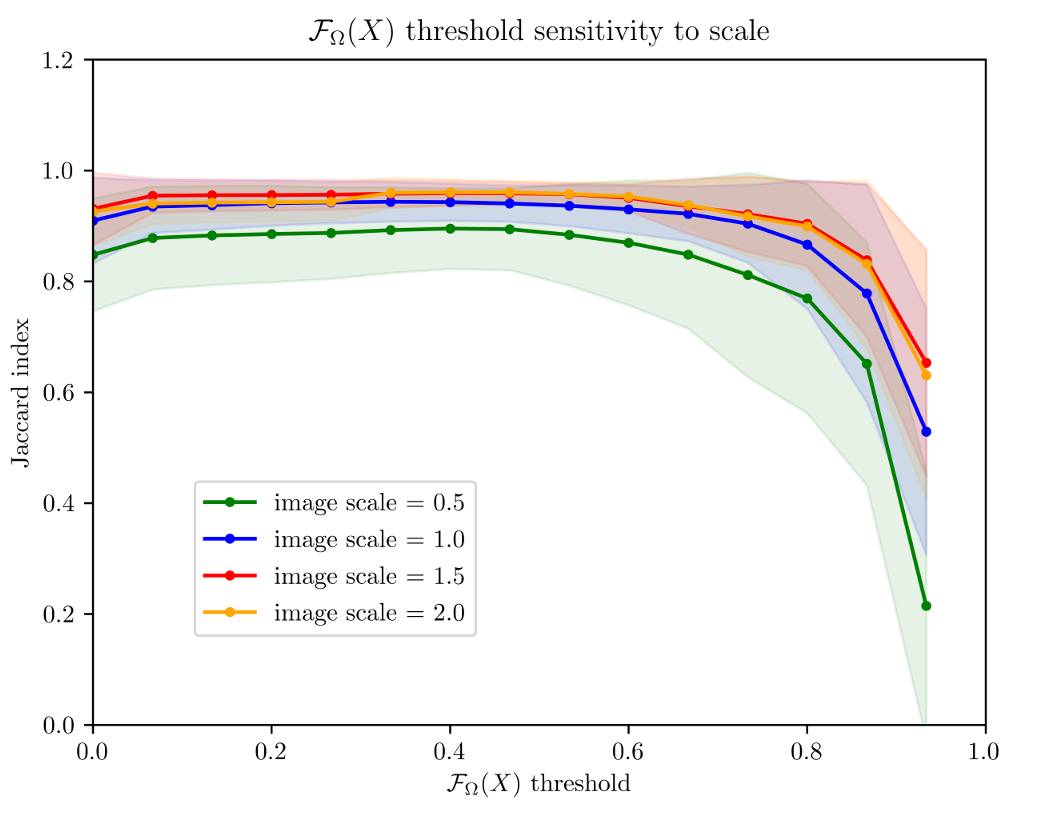

We empirically set the value of the threshold to . However, we conducted an additional experiment to show that this value is consistent across different shapes.

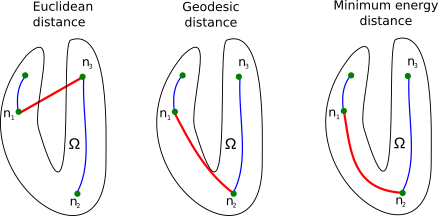

Although the CPMA results in a noise-free , there is no restriction in its formulation to force the CPMA to create a connected medial axis. We solve this by finding all the disconnected pieces of the CPMA. Later, we connect them using a minimum distance criterion , where and are endpoints of two distinct pieces. However, neither the Euclidean distance nor the geodesic distance are suitable criteria because they lead to connections between nodes that do not follow the medial axis (See figure 4). We instead connect and with a minimum energy path using an energy function . We must guarantee that has high values when is close to and low values when is close to the medial axis. This way, we enforce the paths to be close to the . We call the result of this strategy the Connected CPMA (C-CPMA). In section 3.3, we provide details of computation.

3.2 Isometric Equivariance of the CPMA

The distance transform-based medial axis depends only on the shape , not on the position or size in the embedding Euclidean space. Therefore the medial axis should be equivariant under isometric transformations.

Proposition 1.

Let be the medial axis transform of a connected bounded domain embedded in , and let be an isometric transformation in . The square matrix is a composition of any number or rotations and reflection matrices, and is an -dimensional vector. We say that is equivariant to any such that .

Proof.

Recall that is an isometric transformation and thus it is invertible and preserves the Euclidean distance. The previous statement implies that is an isomorphic map between an open ball and . Consequently, if is a maximal ball in then from definition 1 we have that .

Let us now define . Applying to every element of , we have:

∎

Moreover, the CPMA depends primarily on , which also holds the isometric equivariant property.

Corollary 1.

Let be the score function of as per definition 3, and let be an isometric transformation. We say that is equivariant to any such that .

Proof.

Using the results from proposition 1 and recalling that is a linear transformation, we have that:

∎

However, in a discrete domain, this equivariance is only an approximation because points on both and are constrained to be on a fixed, regular grid. In a continuous domain, it is easy to demonstrate that the cosine transform has exact isometric equivariance.

3.3 Implementation Details

To compute the CPMA enforcing the connectivity, we create a lattice graph . A point in the domain of is a node of , if and only if . The node shares an edge with all its neighbors in the lattice only if the neighbors are also inside . We used an -connectivity neighborhood in 2D and a -connectivity neighborhood in 3D.

In order to determine the minimum energy path between pairs of pixels/voxels, we compute the minimum distance path inside using Dijkstra’s algorithm. We assign weights to every edge with values extracted from . Given , we compute the energy of every edge as follows:

This method guarantees connectivity, but it is inefficient because of the minimum energy path’s iterative computation. We sacrifice performance in favor of connectivity. We include the pseudo-code to compute the CPMA and the C-CPMA in algorithms 1 and 2.

The CPMA only relies on one parameter, . The value of is the threshold that determines whether a point of is a medial axis point. We empirically set the value of . However, in section 5, we present the result of an additional experiment to show how sensitive the CPMA is to different threshold values.

Another essential consideration when computing the CPMA is the maximum frequency used to reconstruct the original shape through the IDCT. Using less than frequencies enables a faster computation of the CPMA without losing accuracy. We found that using frequencies greater than does not yield significant improvement for the CPMA.

4 Experiments

In this section, we describe experiments used to evaluate our approach compared to state-of-the-art medial axis pruning methods.

4.1 Datasets

We chose three extensively used datasets of 2D and 3D segmented objects to evaluate our methodology on medial axis extraction robustness. These datasets are part of the accepted benchmarks in literature, enabling us to compare our results.

Kimia216 dataset [42]

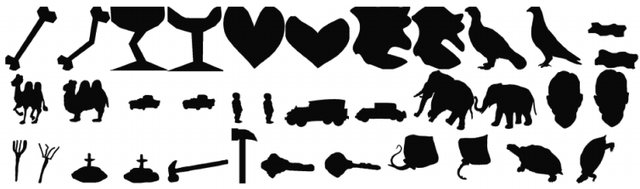

It consists of 18 classes of different shapes with 12 samples in each class. The dataset’s images are a collection of slightly different views of a set of shapes with varying topology. Figure 5(a) shows two samples from each class. Contour noise and random rotations are also present in some images in the dataset. Kimia216 has been largely used to test a wide range of medial axis extraction algorithms. Because of the large variety of shapes, we assume that this benchmark ensured a fair comparison with the state-of-the-art.

Animal2000 dataset [8]

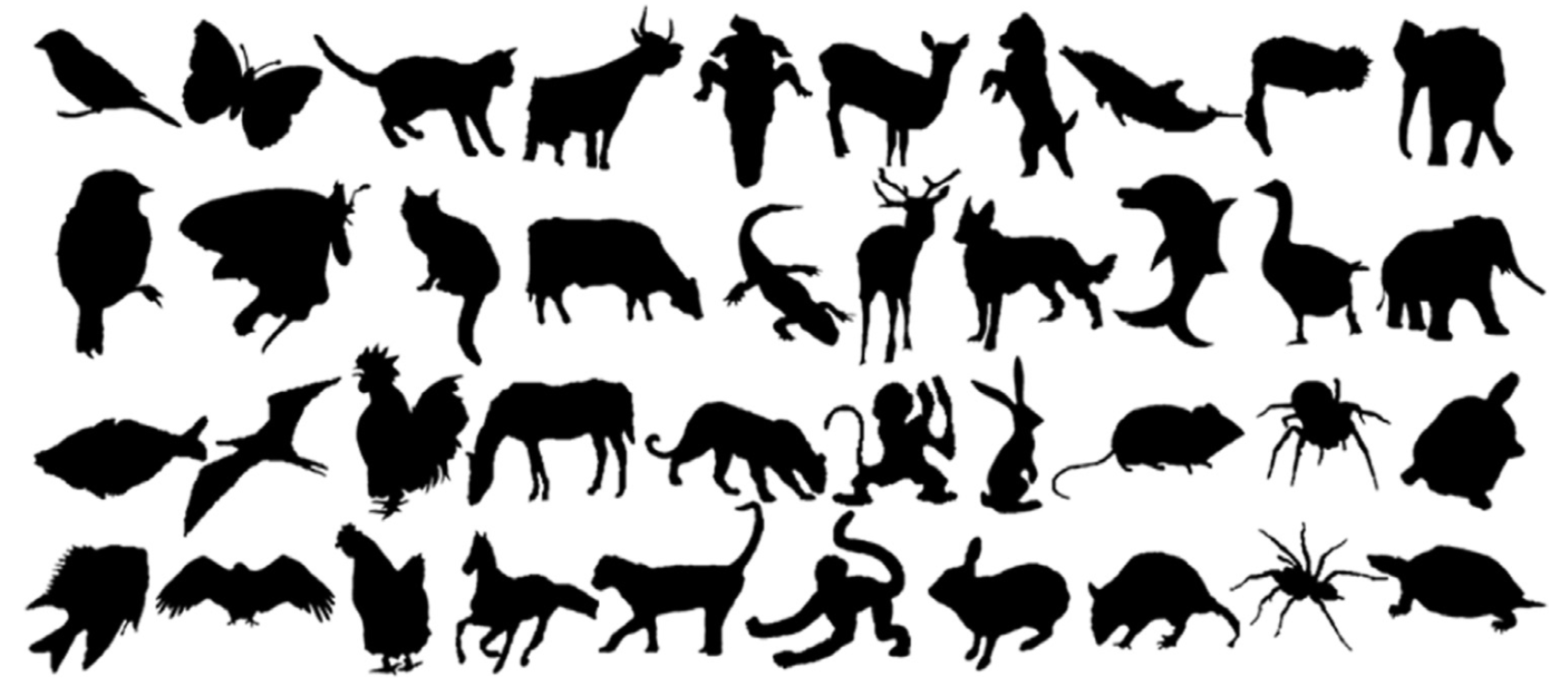

The Animal2000 dataset helps us to evaluate the properties of our approach in the presence of non-rigid transformations. It contains 2000 silhouettes of animals in 20 categories. Each category consists of 100 images of the same type of animal in different poses (Figure 5(b)). Because silhouettes in Animal2000 come from real images, each class is characterized by a large intra-class variation.

University of Groningen’s Benchmark

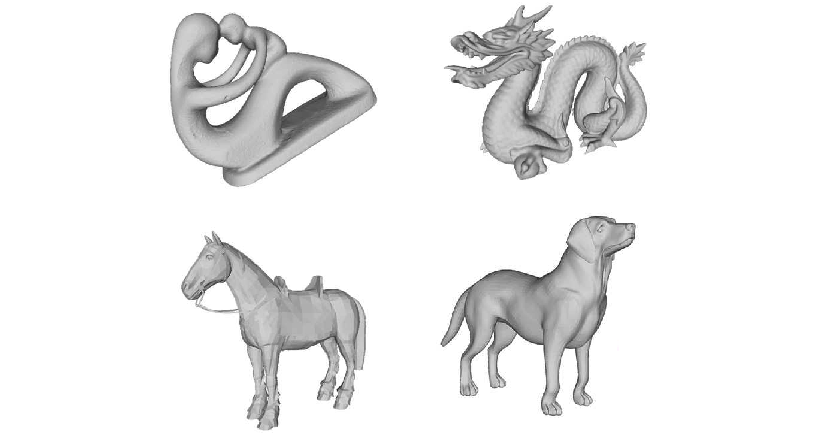

This set of 3D meshes is commonly found in the literature to evaluate medial axis extraction methods in 3D [46, 47, 13]. It includes scanned and synthetic shapes taken from other popular datasets. It contains shapes with and without holes, shapes of varying thickness, and shapes with smooth and noisy boundaries. See Figure 5(c). All meshes are pre-processed, ensuring a consistent orientation, closeness, non-duplicated vertices, and no degenerate faces.

4.2 Sensitivity to Noise and Equivariance Analysis

To compare the robustness of a medial axis extraction method, we adopt an evaluation strategy similar to [13]. Consequently, we measure the similarity between the medial axis of a shape and . The shape derives from a “perturbation” of . We are interested in evaluating how well our methodology responds to induced noise on the contour/surface. We are also interested in assessing how stable the CPMA responds in the presence of rotations of to test its isometric equivariance.

We employ the Hausdorff distance (), and Dubuisson-Jain dissimilarity () as metrics between shapes. The Dubuisson-Jain similarity is a normalization of the Hausdorff distance [15], which aims to overcome sensitivity to outliers. The Dubuisson-Jain similarity between point sets and is defined as:

| (4) |

with

| (5) |

We must first choose a strategy to induce noise to the boundary to evaluate the noise sensitivity. We use a stochastic approach denoted by , where a random number of points are deformed by a vector in the direction orthogonal to the boundary, with a deformation magnitude that is normally distributed, . This noise model is recursively applied times to every shape in our datasets. We denote as the medial axis of a shape after applying the noise model times. In our experiments, we used noise levels.

For every object in each dataset, we compared the medial axis with the noisy versions to determine how sensitive a method is to boundary noise.

The medial axis is ideally an isometric equivariant shape representation so that . Due to sampling factors, this relationship is only an approximation. However, we can measure the equivariance by comparing with . The more similar they are, the more equivariant the method.

Because the translation equivariance is trivial, we evaluate isometric equivariance only with rotations of . We do not use reflections because the properties of rotation matrices we use in this study can be extended to reflection matrices. In our experiments, 2D rotations are counterclockwise in the range degrees around the origin. We use rotations at regular intervals. In 3D, we use a combination of azimuthal () and elevation () rotations around the origin. The angles and take values each degrees.

4.3 Comparative Studies

We chose seven of the most relevant methods in the scientific literature to compare with CPMA extraction results. Each method was selected based on a careful review of the state-of-the-art. These methods illustrate the variety of approaches that authors employ to prune the medial axis. Notice that the first method we used in our study is the un-pruned .

Table 1 summarizes all of the methods in our comparative study. In many cases, the performance of a pruning method depended on its parametrization. We selected parameters for all of the methods following the best performance parametrization reported in the state-of-the-art.

| Dim. | Method | Full name | Parameter description |

|---|---|---|---|

| 2D | MAT [11] | Medial Axis Transform | N/A |

| Thinning [55] | Zhang-Suen Algorithm | N/A | |

| GIMA [22] | Gamma Integer Medial Axis | : minimum distance between and , , the neighborhood of . | |

| BEMA [14] | Bisector Euclidean Medial Axis | : angle formed by the point and the two projections and , . | |

| SAT [20] | Scale Axis Transform | : scale factor to resize . | |

| SFEMA [35] | Scale Filtered Euclidean Medial Axis | : scale factor to all balls in the . | |

| Poisson skel. [4] | Poisson Skeleton | : window size to find the local maximum of contour curvature. | |

| 3D | Thinning [55] | Zhang-Suen Algorithm | N/A |

| TEASAR [41] | Tree-structure skeleton extraction | N/A |

5 Results

In this section, we present and discuss the results of our approach on medial axis pruning. We focus on two properties: 1) robustness to noise of the contour and 2) isometric equivariance.

5.1 Stability Under Boundary Noise

We compared the stability of the CPMA under boundary noise against other approaches in Table 1. We conducted our experiments on Kimia216 and the Animal2000 Dataset for 2D images. Additionally, we used a set of three-dimensional triangular meshes from the Groningen Benchmark for 3D experimentation.

For our noise sensitivity experiments, we applied times the noise model to every object of each dataset. We then computed their using every method in Table 1 with different parameters. Finally, each was compared with using both the Hausdorff distance and Dubuisson-Jain dissimilarity. We report the per method average of each metric over all the elements of each dataset.

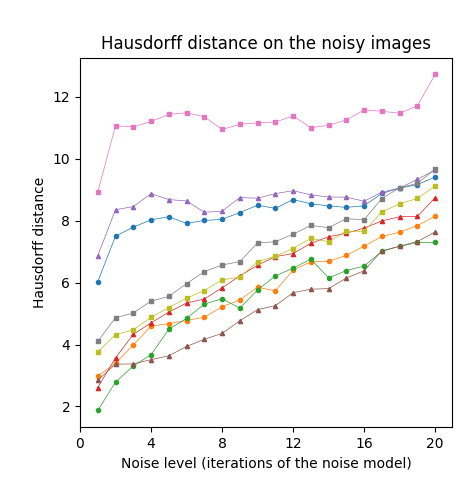

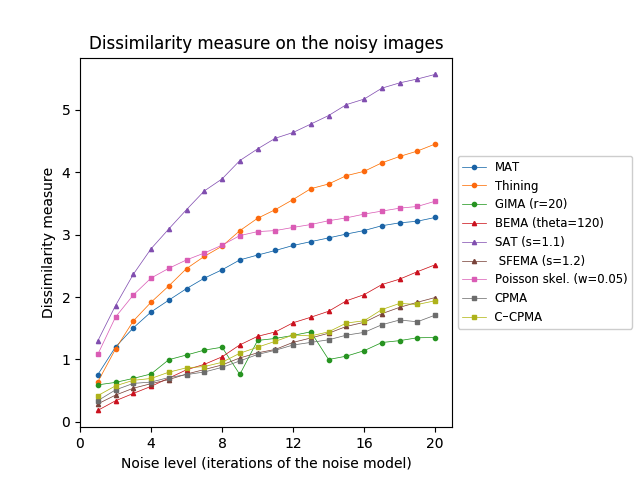

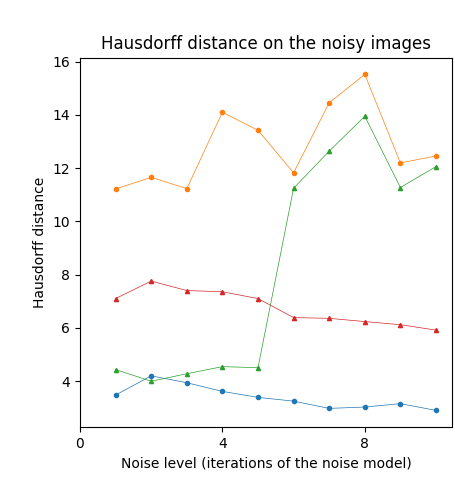

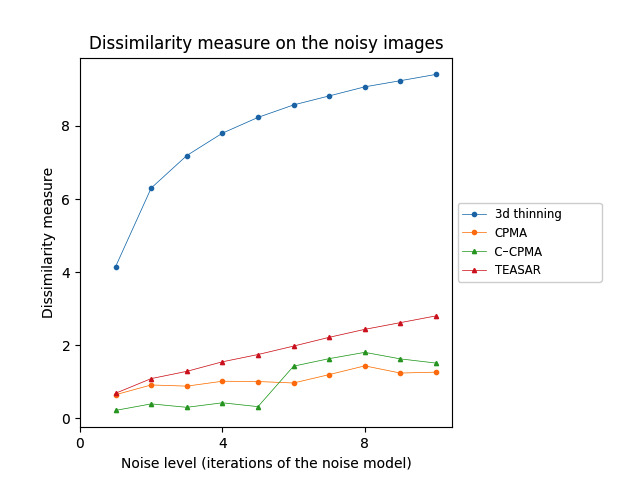

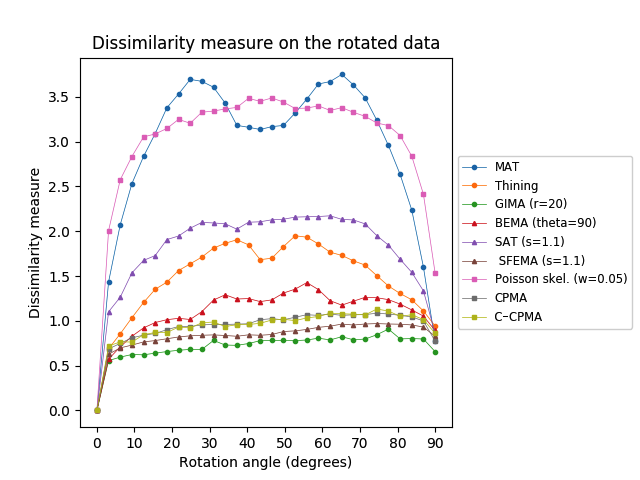

First, we tested our medial axis pruning method on the Kimia216 dataset and present the results in Table 2. The table shows that the CPMA and the C-CPMA are competitive against state-of-the-art methods for medial axis extraction. Our results show similar performance to two state-of-the-art methods: the GIMA and SFEMA. The CPMA and C-CPMA also performed better than Poisson Skeletons, SAT, topological thinning, and the MAT itself. For visual comparison, Figure 6 (top row) shows both Hausdorff distance and Dubuisson-Jain dissimilarity against noise level. The figure only displays the best parametrization of every method to improve visualization. It is clear that the CPMA and the C-CPMA are among the three best results when we use the Dubuisson-Jain dissimilarity metric. However, we observe a decrease in performance when we the Hausdorff distance metric. We believe this occurs because of Hausdorff’s distance sensitivity to outliers.

| Method | Hausdorff | Dissimilarity | ||||||

|---|---|---|---|---|---|---|---|---|

| 5 | 10 | 15 | 20 | 5 | 10 | 15 | 20 | |

| MAT | 8.13 | 8.50 | 8.43 | 9.41 | 1.95 | 2.67 | 3.01 | 3.27 |

| Thinning | 4.68 | 5.85 | 6.88 | 8.15 | 2.18 | 3.26 | 3.94 | 4.45 |

| GIMA (r=5) | 5.46 | 6.50 | 7.37 | 8.84 | 0.87 | 1.31 | 1.60 | 1.88 |

| GIMA (r=10) | 5.40 | 7.12 | 8.35 | 9.18 | 0.68 | 1.08 | 1.35 | 1.58 |

| GIMA (r=20) | 4.49 | 5.76 | 6.39 | 7.30 | 1.00 | 1.30 | 1.05 | 1.35 |

| BEMA (theta=90) | 5.22 | 6.55 | 7.11 | 8.30 | 0.99 | 1.60 | 2.07 | 2.53 |

| BEMA (theta=120) | 5.05 | 6.56 | 7.60 | 8.74 | 0.70 | 1.37 | 1.94 | 2.52 |

| BEMA (theta=150) | 6.68 | 7.69 | 7.89 | 9.40 | 0.99 | 1.80 | 2.50 | 3.37 |

| SAT (s=1.1) | 8.68 | 8.73 | 8.76 | 9.64 | 3.09 | 4.37 | 5.08 | 5.57 |

| SAT (s=1.2) | 9.61 | 10.05 | 9.79 | 10.20 | 2.50 | 3.22 | 3.89 | 4.47 |

| SFEMA (s=1.1) | 4.15 | 5.35 | 6.18 | 7.53 | 0.84 | 1.37 | 1.92 | 2.50 |

| SFEMA (s=1.2) | 3.64 | 5.13 | 6.15 | 7.64 | 0.68 | 1.11 | 1.53 | 1.99 |

| Poisson skel. (w=0.05) | 11.43 | 11.16 | 11.26 | 12.73 | 2.46 | 3.05 | 3.27 | 3.53 |

| Poisson skel. (w=0.10) | 15.60 | 15.48 | 16.07 | 17.35 | 3.62 | 4.07 | 4.19 | 4.56 |

| Poisson skel. (w=0.20) | 17.71 | 18.02 | 19.54 | 21.35 | 5.00 | 5.38 | 5.63 | 6.20 |

| CPMA | 5.55 | 7.28 | 8.07 | 9.66 | 0.71 | 1.07 | 1.39 | 1.71 |

| C-CPMA | 5.19 | 6.68 | 7.66 | 9.12 | 0.80 | 1.20 | 1.58 | 1.94 |

The Animal2000 dataset contains nearly ten times more shapes than Kimia216. This leads to more variation between shapes, and therefore a more challenging setting. Table 3 shows similar results compared to Kimia216, confirming that the noise invariant properties of the CPMA still hold in a more robust dataset. The GIMA and the SFEMA are still the best methods measured with the Dubuisson-Jain dissimilarity, closely followed by both the CPMA and the C-CPMA. Results of using the Dubuisson-Jain dissimilarity as a metric show that the CPMA is close to methods such as BEMA and SFEMA. However, the results are not as good as when using the Hausdorff distance metric. Figure 6 (middle row) depicts the best performance for every method in comparison to ours. Our experiment’s results suggest that the CPMA noise invariant properties generalize across different datasets.

| Method | Hausdorff | Dissimilarity | ||||||

|---|---|---|---|---|---|---|---|---|

| 5 | 10 | 15 | 20 | 5 | 10 | 15 | 20 | |

| MAT | 7.47 | 10.39 | 12.47 | 14.64 | 3.56 | 4.50 | 5.00 | 5.29 |

| Thinning | 7.51 | 10.79 | 12.94 | 15.03 | 2.30 | 3.71 | 4.52 | 4.99 |

| GIMA (r=5) | 7.96 | 11.00 | 13.23 | 15.27 | 1.24 | 1.88 | 2.35 | 2.68 |

| GIMA (r=10) | 6.78 | 8.56 | 10.21 | 11.54 | 0.89 | 1.29 | 1.62 | 1.89 |

| GIMA (r=20) | 5.02 | 6.86 | 8.29 | 9.77 | 0.85 | 1.16 | 1.52 | 1.89 |

| BEMA (theta=90) | 7.84 | 10.61 | 12.76 | 14.78 | 1.45 | 2.37 | 3.06 | 3.57 |

| BEMA (theta=120) | 7.86 | 11.76 | 13.93 | 15.74 | 1.22 | 2.25 | 3.04 | 3.69 |

| BEMA (theta=150) | 8.88 | 12.38 | 14.39 | 16.51 | 1.68 | 3.00 | 3.95 | 4.72 |

| SAT (s=1.1) | 9.44 | 11.80 | 13.47 | 15.21 | 2.80 | 4.13 | 5.02 | 5.49 |

| SAT (s=1.2) | 11.28 | 13.57 | 15.21 | 16.40 | 2.13 | 3.13 | 3.85 | 4.55 |

| SFEMA (s=1.1) | 7.44 | 11.00 | 13.30 | 15.35 | 1.33 | 2.27 | 3.03 | 3.69 |

| SFEMA (s=1.2) | 7.64 | 11.43 | 13.83 | 15.90 | 1.26 | 2.13 | 2.78 | 3.36 |

| Poisson skel. (w=0.05) | 11.94 | 13.68 | 15.35 | 17.03 | 3.08 | 3.63 | 4.01 | 4.20 |

| Poisson skel. (w=0.10) | 14.55 | 17.11 | 18.64 | 20.10 | 3.55 | 4.08 | 4.68 | 4.94 |

| Poisson skel. (w=0.20) | 17.37 | 20.35 | 21.67 | 23.69 | 3.94 | 4.69 | 5.33 | 5.73 |

| CPMA | 9.20 | 12.88 | 14.96 | 17.22 | 1.18 | 1.96 | 2.55 | 3.09 |

| C-CPMA | 8.67 | 12.39 | 14.45 | 16.88 | 1.17 | 1.96 | 2.58 | 3.13 |

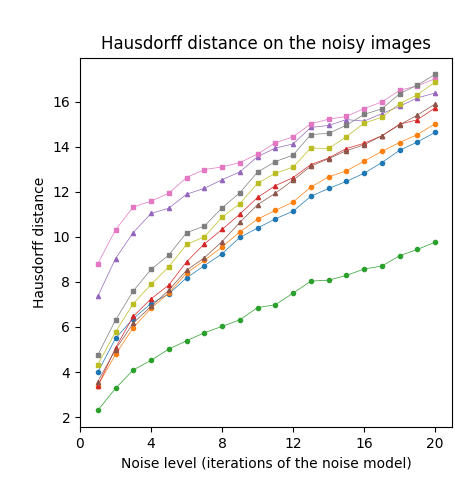

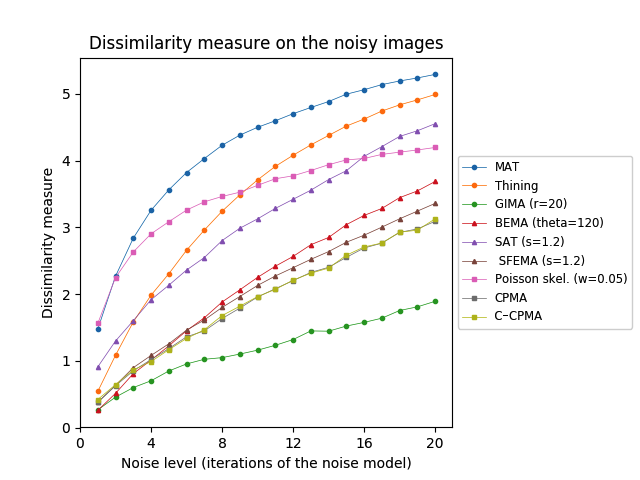

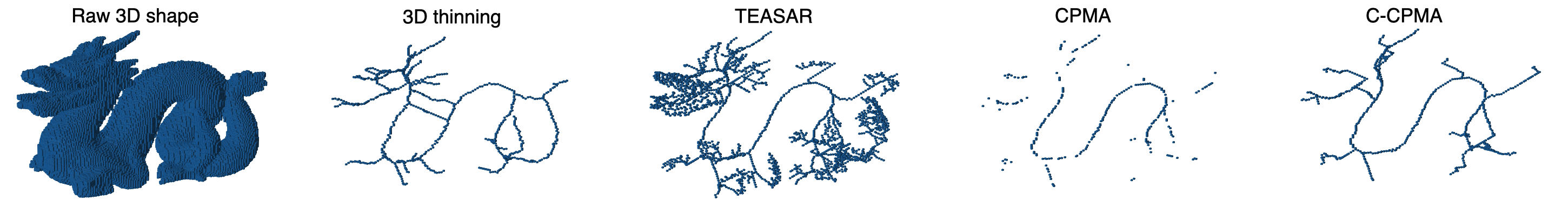

For our 3D experiments, we selected objects from the Groningen Benchmark, reflecting the most common shapes used in the literature. Each object was voxelized to a binary voxel grid with resolution . This resolution offered sufficient details as well as a sufficiently low computational cost. In contrast to the 2D case, we applied only times to the 3D object. We did this for two reasons: 1) to reduce computational complexity and 2) noise tends to be more extreme in 3D at the chosen resolution. The results on the Groningen dataset are shown in Table 4 and Figure 6 (bottom row). Notice that both the CPMA and C-CPMA achieved the best results among the other methods when compared with the dissimilarity measure. These results show that our methodology has noise-invariance properties, and it is stable in the presence of small surface deformation. However, the results show unusual patterns when compared with the Hausdorff distance. In fact, for some methods, the metric decreases when the noise level increases. We attribute this behavior to the outlier sensibility of the Hausdorff distance.

| Method | Hausdorff | Dissimilarity | ||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| 2 | 4 | 6 | 8 | 10 | 2 | 4 | 6 | 8 | 10 | |

| 3D thinning | 4.20 | 3.61 | 3.25 | 3.03 | 2.90 | 6.30 | 7.80 | 8.58 | 9.07 | 9.41 |

| TEASAR | 7.76 | 7.36 | 6.39 | 6.24 | 5.92 | 1.09 | 1.55 | 1.98 | 2.44 | 2.80 |

| CPMA | 11.66 | 14.10 | 11.83 | 15.52 | 12.46 | 0.91 | 1.02 | 0.97 | 1.44 | 1.27 |

| C-CPMA | 3.99 | 4.54 | 11.25 | 13.95 | 12.06 | 0.40 | 0.43 | 1.43 | 1.81 | 1.51 |

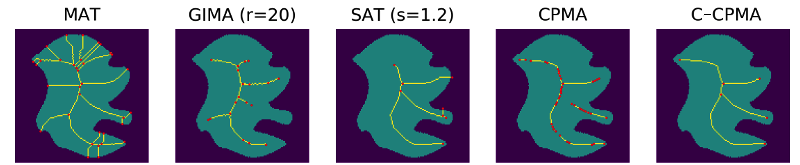

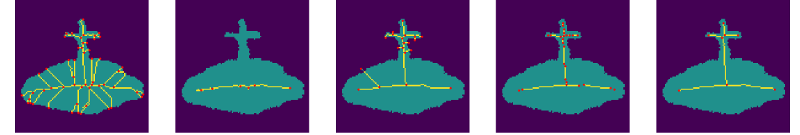

We complete the noise stability analysis showing examples of the computed with our methodology, and compared to the other methods in this study, Figure 7.

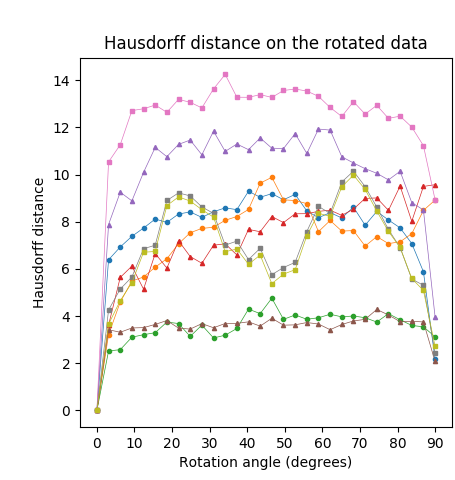

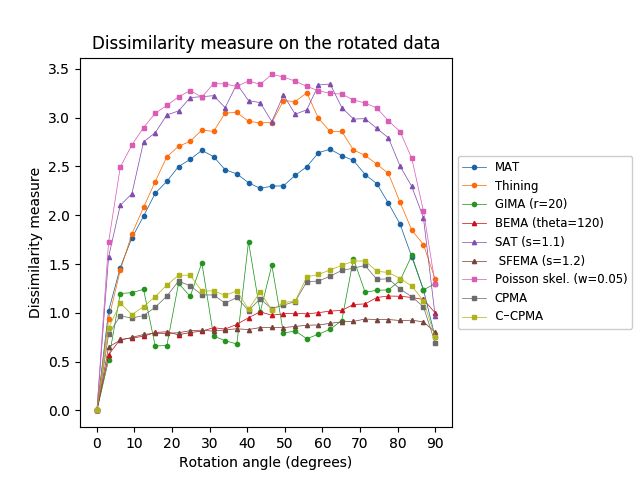

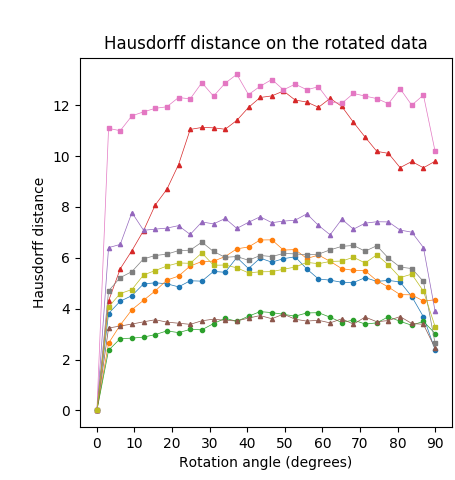

5.2 Sensitivity to Rotations

We measured the dissimilarity between and for different instances, and different definitions of the medial axis transform. The lower this dissimilarity, the more stable the method is under rotation. The rotation sensitivity analysis on the Kimia216 dataset is summarized in Table 5 and Figure 8 (top row). The results show that the curves of the CPMA and the C-CPMA fall near the average of the rest of the methods achieving state-of-the-art performance. The results also surpassed several methods, such as Poisson skeleton, SAT, and thinning methods. Notice that when using the dissimilarity metric the CPMA, the GIMA, the SFEMA, and the BEMA form a subgroup that performs significantly better compared to the others. Moreover, the performance of these methods oscillates around a value of dissimilarity of around pixel on average. The intuition for this result is that regardless of the rotation, skeletons computed with these methods vary only at one pixel. Consequently, we can claim that they exhibit rotation equivariance.

| Method | Hausdorff | Dissimilarity | ||||

|---|---|---|---|---|---|---|

| 30º | 60º | 90º | 30º | 60º | 90º | |

| MAT | 8.18 | 8.17 | 2.17 | 2.67 | 2.64 | 0.75 |

| Thinning | 7.72 | 7.58 | 8.92 | 2.87 | 2.99 | 1.35 |

| GIMA (r=5) | 6.16 | 6.03 | 5.54 | 1.02 | 1.11 | 0.85 |

| GIMA (r=10) | 5.54 | 6.25 | 5.04 | 0.83 | 1.02 | 0.72 |

| GIMA (r=20) | 3.62 | 3.93 | 3.12 | 1.51 | 0.78 | 1.30 |

| BEMA (theta=90) | 12.35 | 12.76 | 11.72 | 1.31 | 1.60 | 1.06 |

| BEMA (theta=120) | 6.24 | 8.44 | 9.57 | 0.81 | 1.00 | 1.00 |

| BEMA (theta=150) | 9.14 | 10.09 | 10.27 | 1.11 | 1.35 | 1.36 |

| SAT (s=1.1) | 10.84 | 11.93 | 3.96 | 3.22 | 3.34 | 0.97 |

| SAT (s=1.2) | 11.44 | 12.40 | 4.45 | 2.64 | 2.87 | 0.97 |

| SFEMA (s=1.1) | 3.86 | 3.98 | 2.52 | 0.92 | 1.01 | 0.83 |

| SFEMA (s=1.2) | 3.68 | 3.66 | 2.08 | 0.82 | 0.88 | 0.80 |

| Poisson skel. (w=0.05) | 12.83 | 13.32 | 8.93 | 3.21 | 3.28 | 1.30 |

| Poisson skel. (w=0.10) | 16.36 | 17.03 | 10.17 | 4.24 | 4.30 | 1.78 |

| Poisson skel. (w=0.20) | 18.94 | 19.90 | 9.65 | 5.61 | 5.66 | 2.69 |

| CPMA | 8.63 | 8.65 | 2.42 | 1.18 | 1.33 | 0.70 |

| C-CPMA | 8.51 | 8.36 | 2.72 | 1.22 | 1.39 | 0.75 |

We applied the same analysis to the Animal2000 dataset achieving similar results. In this case, the CPMA and the C-CPMA ranked third and fourth, respectively, among all methods when we used the dissimilarity metric. The results for all methods and parameters are presented in Table 6. As before, we also present a summary with the best parametrization for each method in Figure 8 (middle row) to facilitate the interpretation. Notice that due to the larger number of objects in the Animal2000 dataset, the curves for every method appear to be smoother, highlighting stability across different rotation angles and shapes.

| Method | Hausdorff | Dissimilarity | ||||

|---|---|---|---|---|---|---|

| 30º | 60º | 90º | 30º | 60º | 90º | |

| MAT | 5.08 | 5.17 | 2.38 | 3.67 | 3.64 | 0.77 |

| Thinning | 5.85 | 6.11 | 4.35 | 1.71 | 1.86 | 0.94 |

| GIMA (r=5) | 5.58 | 5.54 | 5.62 | 0.96 | 1.08 | 0.83 |

| GIMA (r=10) | 5.42 | 5.50 | 4.29 | 0.78 | 0.88 | 0.74 |

| GIMA (r=20) | 3.17 | 3.84 | 3.02 | 0.68 | 0.81 | 0.66 |

| BEMA (theta=90) | 11.12 | 11.92 | 9.80 | 1.10 | 1.35 | 0.89 |

| BEMA (theta=120) | 5.45 | 6.10 | 6.80 | 0.77 | 0.90 | 0.86 |

| BEMA (theta=150) | 7.60 | 8.88 | 9.91 | 1.13 | 1.36 | 1.40 |

| SAT (s=1.1) | 7.41 | 7.27 | 3.90 | 2.10 | 2.16 | 0.86 |

| SAT (s=1.2) | 9.82 | 9.62 | 4.85 | 1.77 | 1.90 | 0.95 |

| SFEMA (s=1.1) | 3.52 | 3.54 | 2.45 | 0.84 | 0.93 | 0.83 |

| SFEMA (s=1.2) | 3.54 | 3.63 | 2.26 | 0.77 | 0.87 | 0.82 |

| Poisson skel. (w=0.05) | 12.88 | 12.72 | 10.18 | 3.33 | 3.40 | 1.54 |

| Poisson skel. (w=0.10) | 15.02 | 15.41 | 10.58 | 3.67 | 3.89 | 1.89 |

| Poisson skel. (w=0.20) | 16.83 | 17.20 | 8.67 | 4.07 | 4.33 | 1.85 |

| CPMA | 6.62 | 6.14 | 2.64 | 0.96 | 1.06 | 0.77 |

| C-CPMA | 6.19 | 5.77 | 3.30 | 0.98 | 1.05 | 0.86 |

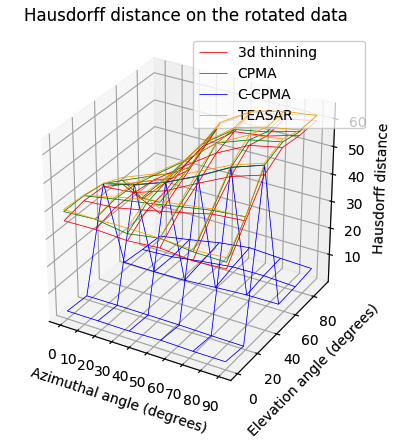

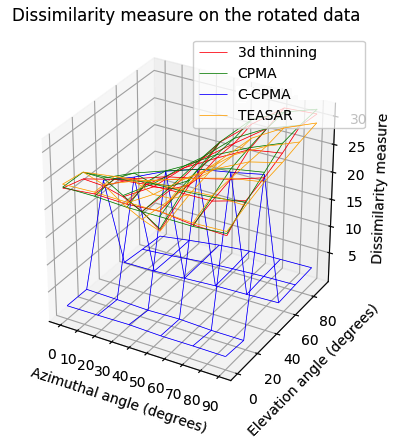

Finally, we conducted the rotation sensitivity analysis on the 3D dataset. the results are summarized in Figure 8 (bottom row). The image shows the four 3D medial axis extraction methods we compared in our study for combinations of azimuthal and elevation angles. This figure shows how both the Hausdorff distance and the Dubuisson-Jain dissimilarity become higher when the rotation becomes more extreme, except in the case of C-CPMA. We believe this behavior is due to the connectivity enforcement mitigating the gaps in the medial axis, and reducing the metrics.

5.3 Hyper-parameter Selection

We tested our medial axis pruning method on the Kimia216 dataset. Many medial axis pruning methods depend on hyper-parameters to accurately estimate the medial axis [22, 14, 20, 35]. These parameters usually have a physical meaning in the context of the object whose medial axis they seek to estimate. Often, the parameters are distances or angles formed between points inside the object. In other work, some authors create score functions like ours, intending to use its values as a filter parameter to remove points on spurious branches of the . In most cases, however, such parameters are subject to factors like resolution or scale. Thus, we conducted another experiment to test the sensitivity of the CPMA to the pruning parameter at different scale factors of the input object.

Figure 9 shows the average of the Jaccard index as the reconstruction metric vs the values of . We compared an object against its reconstruction over all images in Kimia216 dataset. The figure shows how high values of deteriorate the reconstruction, while lower values do not prune enough spurious branches. From the figure, we can infer that values around offer a good trade-off between reconstruction and branch pruning. Moreover, around this value, the standard deviation reaches its minimum value suggesting optimal performance regardless of the object. Because the value of is stable for different scale factors, we conclude that scale does not affect the selection of the threshold.

6 Conclusions and Future Work

We presented the CPMA, a new method for medial axis pruning with noise robustness and equivariance to isometric transformations. Our method leverages the discrete cosine transform to compute a score function that rates the importance of individual points and branches within the medial axis of a shape.

Our pruning approach delivers competitive results compared to the state-of-the-art. The results of our experiments show that our method is robust to boundary noise. Additionally, it is also equivariant to isometric transformations, and it is capable of producing a stable and connected medial axis even in scenarios with significant perturbations of the contour.

The CPMA can be efficiently computed in parallel because it depends on an aggregation of reconstructions of the original shape. Each reconstruction is independent of the others, which allows the parallelism.

We believe our work leaves room for improvement. Thus we identified the following potential for future work.

All of the 3D objects we used come as 3D triangular meshes. To compute the CPMA, we discretize the meshes to fix a resolution of voxels. The discretization introduces two issues: 1) the objects lose small details in their structure, affecting the overall performance, and 2) the isometric equivariance decreases because rotated voxels will not usually align perfectly with non-rotated voxels.

Our algorithm for connectivity enforcement relies on iterative computations of Dijkstra’s algorithm for finding the minimum energy path between two pieces of the unconnected medial axis. Better strategies to compute the paths could increase the efficiency.

7 Acknowledgments

We want to thank the GRASP Lab at the University of Pennsylvania for providing the computational resources to conduct this investigation.

References

- [1] Sadegh Abbasi, Farzin Mokhtarian, and Josef Kittler. Curvature scale space image in shape similarity retrieval. Multimedia Systems, 7(6):467–476, November 1999.

- [2] Carlo Arcelli and Gabriella Sanniti di Baja. Finding local maxima in a pseudo-euclidian distance transform. Computer Vision, Graphics, and Image Processing, 43(3):361 – 367, 1988.

- [3] Carlo Arcelli, Gabriella Sanniti di Baja, and Luca Serino. Distance-Driven Skeletonization in Voxel Images. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(4):709–720, apr 2011.

- [4] Gilles Aubert and Jean Franois Aujol. Poisson skeleton revisited: A new mathematical perspective. Journal of Mathematical Imaging and Vision, 48(1):149–159, 2014.

- [5] G. Aufort, R. Jennane, R. Harba, and C. L. Benhamou. A new 3d shape-dependant skeletonization method. application to porous media. In 2006 14th European Signal Processing Conference, pages 1–5, 2006.

- [6] J. August, A. Tannembaum, and S. W. Zucker. On the evolution of the skeleton. In Proceedings of the ICCV, pages 315–322, 1999.

- [7] X. Bai, L. J. Latecki, and W. Liu. Skeleton pruning by contour partitioning with discrete curve evolution. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29(3):449–462, 2007.

- [8] X. Bai, W. Liu, and Z. Tu. Integrating contour and skeleton for shape classification. In 2009 IEEE 12th International Conference on Computer Vision Workshops, ICCV Workshops, pages 360–367, 2009.

- [9] Serge Belongie, Jitendra Malik, and Jan Puzicha. Shape matching and object recognition using shape contexts. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24(4):509–522, 2002.

- [10] A. Beristain and M. Grana. Pruning algorithm for voronoi skeletons. Electronics Letters, 46(1):39–41, January 2010.

- [11] Harry Blum. A Transformation for Extracting New Descriptors of Shape. In Weiant Wathen-Dunn, editor, Models for the Perception of Speech and Visual Form, pages 362–380. MIT Press, Cambridge, 1967.

- [12] Rizwan Chaudhry, Ferda Ofli, Gregorij Kurillo, Ruzena Bajcsy, and Rene Vidal. Bio-inspired dynamic 3d discriminative skeletal features for human action recognition. In 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, pages 471–478. IEEE, June 2013.

- [13] John Chaussard, Michel Couprie, and Hugues Talbot. Robust skeletonization using the discrete -medial axis. Pattern Recognition Letters, 32(9):1384–1394, jul 2011.

- [14] Michel Couprie, David Coeurjolly, and Rita Zrour. Discrete bisector function and Euclidean skeleton in 2D and 3D. Image and Vision Computing, 25(10):1543–1556, 2007.

- [15] M.-P. Dubuisson and A.K. Jain. A modified Hausdorff distance for object matching. In Proceedings of 12th International Conference on Pattern Recognition, volume 1, pages 566–568. IEEE Comput. Soc. Press, 2002.

- [16] P. Dłotko and R. Specogna. Topology preserving thinning of cell complexes. IEEE Transactions on Image Processing, 23(10):4486–4495, 2014.

- [17] Carlos Esteves, Christine Allen-Blanchette, Ameesh Makadia, and Kostas Daniilidis. Learning so(3) equivariant representations with spherical cnns. In Vittorio Ferrari, Martial Hebert, Cristian Sminchisescu, and Yair Weiss, editors, Computer Vision – ECCV 2018, pages 54–70, Cham, 2018. Springer International Publishing.

- [18] Oren Freifeld and Michael J Black. Lie Bodies: A Manifold Representation of 3D Human Shape. In Bastian Leibe, Jiri Matas, Nicu Sebe, and Max Welling, editors, European Conference on Computer Vision, number October 2012 in Lecture Notes in Computer Science, pages 1–14. Springer International Publishing, Cham, 2012.

- [19] Fengyi Gao, Guangshun Wei, Shiqing Xin, Shanshan Gao, and Yuanfeng Zhou. 2D skeleton extraction based on heat equation. Computers and Graphics (Pergamon), 74:99–108, 2018.

- [20] Joachim Giesen, Balint Miklos, Mark Pauly, and Camille Wormser. The scale axis transform. In Proceedings of the 25th annual symposium on Computational geometry - SCG ’09, page 106, New York, New York, USA, 2009. ACM Press.

- [21] Lena Gorelick, Meirav Galun, and Eitan Sharon. Shape Representation and Classification Using the Poisson Equation. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28(12):1991–2005, 2006.

- [22] Wim H. Hesselink and Jos B.T.M. Roerdink. Euclidean skeletons of digital image and volume data in linear time by the integer medial axis transform. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(12):2204–2217, 2008.

- [23] Haisheng Li, Li Sun, Xiaoqun Wu, and Qiang Cai. Scale-invariant wave kernel signature for non-rigid 3d shape retrieval. In 2018 IEEE International Conference on Big Data and Smart Computing (BigComp), pages 448–454. IEEE, January 2018.

- [24] Maosen Li, Siheng Chen, Xu Chen, Ya Zhang, Yanfeng Wang, and Qi Tian. Actional-structural graph convolutional networks for skeleton-based action recognition. ArXiv, abs/1904.12659, 2019.

- [25] Ronghao Li, Guochao Bu, and Pei Wang. An automatic tree skeleton extracting method based on point cloud of terrestrial laser scanner. International Journal of Optics, 2017:1–11, 2017.

- [26] C. Lohou and J. Dehos. Automatic correction of ma and sonka’s thinning algorithm using p-simple points. IEEE Transactions on Pattern Analysis and Machine Intelligence, 32(6):1148–1152, 2010.

- [27] Christophe Lohou and Gilles Bertrand. A 3d 12-subiteration thinning algorithm based on p-simple points. Discrete Applied Mathematics, 139(1):171 – 195, 2004. The 2001 International Workshop on Combinatorial Image Analysis.

- [28] Romain Marie, Ouiddad Labbani-Igbida, and El Mustapha Mouaddib. The Delta Medial Axis: A fast and robust algorithm for filtered skeleton extraction. Pattern Recognition, 56:26–39, 2016.

- [29] D. Maturana and S. Scherer. Voxnet: A 3d convolutional neural network for real-time object recognition. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 922–928, Sep. 2015.

- [30] Balint Miklos, Joachim Giesen, and Mark Pauly. Discrete scale axis representations for 3d geometry. ACM Trans. Graph., 29(4):101:1–101:10, July 2010.

- [31] F. Mokhtarian and A.K. Mackworth. A theory of multiscale, curvature-based shape representation for planar curves. IEEE Transactions on Pattern Analysis and Machine Intelligence, 14(8):789–805, 1992. cited By 722.

- [32] Gábor Németh, Péter Kardos, and Kálmán Palágyi. Thinning combined with iteration-by-iteration smoothing for 3D binary images. Graphical Models, 73(6):335–345, 2011.

- [33] R. Ogniewicz and M. Ilg. Voronoi skeletons: theory and applications. In Proceedings 1992 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pages 63–69, June 1992.

- [34] Ravi Peters and Hugo Ledoux. Robust approximation of the medial axis transform of LiDAR point clouds as a tool for visualisation. Computers & Geosciences, 90:123–133, May 2016.

- [35] Michał Postolski, Michel Couprie, and Marcin Janaszewski. Scale filtered Euclidean medial axis and its hierarchy. Computer Vision and Image Understanding, 129:89–102, 2014.

- [36] Gunilla Borgefors Punam K. Saha and Gabriella Sanniti de Baja (Eds.). Skeletonization. Theory, Methods and Applications. Elsevier Science, 1st edition edition, 2017.

- [37] T. Qiu, Y. Yan, and G. Lu. A medial axis extraction algorithm for the processing of combustion flame images. In 2011 Sixth International Conference on Image and Graphics, pages 182–186, Aug 2011.

- [38] Martin Rumpf and Tobias Preusser. A level set method for anisotropic geometric diffusion in 3d image processing. SIAM Journal on Applied Mathematics, 62(5):1772–1793, January 2002.

- [39] Maytham H. Safar and Cyrus Shahabi. Shape Analysis and Retrieval of Multimedia Objects. Springer US, 2003.

- [40] Punam K. Saha, Gunilla Borgefors, and Gabriella Sanniti di Baja. A survey on skeletonization algorithms and their applications. Pattern Recognition Letters, 76:3–12, 2016.

- [41] M. Sato, I. Bitter, M. A. Bender, A. E. Kaufman, and M. Nakajima. Teasar: tree-structure extraction algorithm for accurate and robust skeletons. In Proceedings the Eighth Pacific Conference on Computer Graphics and Applications, pages 281–449, Oct 2000.

- [42] Thomas B. Sebastian, Philip N. Klein, and Benjamin B. Kimia. Recognition of shapes by editing shock graphs. IEEE TRANSACTIONS ON PATTERN ANALYSIS AND MACHINE INTELLIGENCE, 00(528):755–762, 2004.

- [43] Doron Shaked and Alfred M. Bruckstein. Pruning medial axes. Computer Vision and Image Understanding, 69(2):156 – 169, 1998.

- [44] Wei Shen, Xiang Bai, Rong Hu, Hongyuan Wang, and Longin Jan Latecki. Skeleton growing and pruning with bending potential ratio. Pattern Recogn., 44(2):196–209, February 2011.

- [45] Kaleem Siddiqi, Ali Shokoufandeh, Sven J. Dickinson, and Steven W. Zucker. Shock graphs and shape matching. International Journal of Computer Vision, 35(1):13–32, 1999.

- [46] André Sobiecki, Andrei Jalba, and Alexandru Telea. Comparison of curve and surface skeletonization methods for voxel shapes. Pattern Recognition Letters, 47:147–156, oct 2014.

- [47] André Sobiecki, Haluk C. Yasan, Andrei C. Jalba, and Alexandru C. Telea. Qualitative Comparison of Contraction-Based Curve Skeletonization Methods. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), volume 7883 LNCS, pages 425–439. Springer, 2013.

- [48] Jian Sun, Maks Ovsjanikov, and Leonidas Guibas. A Concise and Provably Informative Multi-Scale Signature Based on Heat Diffusion. Computer Graphics Forum, 28(5):1383–1392, jul 2009.

- [49] Ayellet Tal. 3d shape analysis for archaeology. In 3D Research Challenges in Cultural Heritage, pages 50–63. Springer Berlin Heidelberg, 2014.

- [50] Alexander Toshev, Ben Taskar, and Kostas Daniilidis. Shape-based object detection via boundary structure segmentation. International Journal of Computer Vision, 99(2):123–146, March 2012.

- [51] Laurens J. P. van der Maaten, Paul J. Boon, Guus Lange, Hans Paijmans, and Eric O. Postma. Computer vision and machine learning for archaeology. In Proceedings of the Computer Applications in Archaeology, CAA 2006, page in press. Dr. H. Kamermans, Faculty of Archeology, Leiden University, 2006.

- [52] G. K. Viswanathan, A. Murugesan, and K. Nallaperumal. A parallel thinning algorithm for contour extraction and medial axis transform. In 2013 IEEE International Conference ON Emerging Trends in Computing, Communication and Nanotechnology (ICECCN), pages 606–610, March 2013.

- [53] L. Younes. Shapes and Diffeomorphisms. Applied Mathematical Sciences. Springer Berlin Heidelberg, 2 edition, 2019.

- [54] Dengsheng Zhang and Guojun Lu. Review of shape representation and description techniques. Pattern Recognition, 37(1):1 – 19, 2004.

- [55] T. Y. Zhang and C. Y. Suen. A fast parallel algorithm for thinning digital patterns. Commun. ACM, 27(3):236–239, March 1984.