Correlation networks: Interdisciplinary approaches beyond thresholding

Abstract

Many empirical networks originate from correlational data, arising in domains as diverse as psychology, neuroscience, genomics, microbiology, finance, and climate science. Specialized algorithms and theory have been developed in different application domains for working with such networks, as well as in statistics, network science, and computer science, often with limited communication between practitioners in different fields. This leaves significant room for cross-pollination across disciplines. A central challenge is that it is not always clear how to best transform correlation matrix data into networks for the application at hand, and probably the most widespread method, i.e., thresholding on the correlation value to create either unweighted or weighted networks, suffers from multiple problems. In this article, we review various methods of constructing and analyzing correlation networks, ranging from thresholding and its improvements to weighted networks, regularization, dynamic correlation networks, threshold-free approaches, comparison with null models, and more. Finally, we propose and discuss recommended practices and a variety of key open questions currently confronting this field.

1 Introduction

Correlation matrices capture pairwise similarity of multiple, often temporally evolving signals, and are used to describe system interactions in various diverse disciplines of science and society, from financial economics to psychology, bioinformatics, neuroscience, and climate science, to name a few. Correlation analysis is often a first step in trying to understand complex systems data [1]. Existing methods for analyzing correlation matrix data are abundant. Very well established methods include principal component analysis (PCA) [2] and factor analysis (FA) [3, 4], which can yield a small number of interpretable components from correlation matrices, such as a global market trend when applied to stock market data, or spatio-temporal patterns of air pressure when applied to atmospheric data. Another major method for analyzing correlation matrix data is the Markowitz’s portfolio theory in mathematical finance, which aims to minimize the variance of financial returns while keeping the expected return above a given threshold [5, 6]. The model takes as input a correlation matrix among different assets that an investor can invest in. In a related vein, random matrix theory (RMT) [7, 8, 9] has been a key theoretical tool for estimating and analyzing economic and other correlation matrix data for a couple of decades [6]. Various new methods for analyzing correlation matrix data have also been proposed. Examples include detrended cross-correlation analysis [10, 11, 12], correlation dependency, defined as the difference between the partial correlation coefficient and the Pearson correlation coefficient given three nodes [13, 14], determination of optimal paths between distant locations in correlation matrix data [15], early warning signals for anticipating abrupt changes in multidimensional dynamical systems including the case of networked systems [16, 17, 18], and energy landscape analysis for multivariate time series data particularly employed in neuroscience [19, 20].

The last two decades have also seen successful applications of tools from network science and graph theory to correlational data. A correlation matrix can be mapped onto a network, which we refer to here as a correlation network, where nodes represent elements and edges are informed by the strength of the correlation between pairs of elements. Correlation network analysis generally intends to extract useful information from data, such as the patterns of interactions among nodes or a ranking of nodes. We show a typical workflow of correlation network analysis in Fig. 1. With multivariate data with multiple (and not too few) samples as input, the analysis entails calculation of correlation matrices, construction of correlation networks from the correlation matrices, and downstream network analysis on the resulting correlation networks. The network analysis often includes discussion of the implication of the network analysis results in application domains in question. An ideal correlation network analysis appropriately adapts concepts and methods developed in network science to the case of correlation networks, generating knowledge that standard methods for correlation matrices (such as PCA) do not produce. Although correlation does not necessarily reflect a physical connection or direct interaction between two nodes, correlation matrices are conventionally used as a relatively inexpensive substitute of such direct connections, whose data are often less available than correlation matrix data. Correlation networks are also useful for visualization [21]. Correlation network analysis has been used in various research disciplines, typically not much behind wherever correlation matrix analysis is used, as we will review in section 2. In our survey here, we focus on correlation networks, with an emphasis on identifying different methods used to transform correlation matrices into correlation networks. See [22, 23, 24, 25, 21] for shorter reviews.

The validity of correlation network analysis remains an outstanding question, especially because the decisions about how to best construct network representations from correlation matrices is far from straightforward. One of the simplest methods is to threshold on the correlation value measured for each pair of nodes (see section 3.2). However, while such a simple thresholding is widely used, it introduces various problems. These problems have led to proposals of alternative methods for generating correlation networks, which we will cover in sections 3.4–3.7.

Before proceeding, we raise some important clarifications. First, correlation networks as we consider here are different from network architectures that exploit correlation in data [27, 28, 29]111For example, the Progressive Spatio-Temporal Correlation Network (PSCC-net) is an algorithm to detect and localize manipulations in the input image data by taking advantage of spatial correlation structure in images [27]. The superpixel group-correlation network (SGCN) [28] and the deep correlation network (DCNet) [29] are encoder-decoder and deep-learning network architectures, respectively, for salient object detection in images.. These “networks” are in the sense of neural network architecture in artificial intelligence and machine learning, whereas here we consider “networks” to denote graphs in network science.

Second, we focus on correlation networks based on the Pearson correlation coefficient or its close variants such as the partial correlation coefficient. In fact, there are numerous other definitions for quantifying the similarity between data obtained from node pairs [30, 31, 23, 32, 33, 24, 34]. Examples include similarity networks whose edges are determined using the rank correlation coefficient [35, 36], the mutual information [37, 38, 39], and partial mutual information [40, 41]. Co-occurrence of two nodes over samples, such as two authors co-authoring a paper (a paper is a sample in this example), also gives us an unnormalized variant of correlation. See sections 2.5 and 2.8 for examples of co-occurrence networks. However, a majority of concepts and techniques explained in our main technical sections 3 and 4, such as the detrimental effect of thresholding and dichotomizing the edge weight, use of weighted networks, graphical lasso, and importance of null models, also hold true when one constructs correlation networks using these or other alternative methods.

Third, we do not discuss causal inference in the present paper. A plethora of methods are available for inferring causality between nodes and associated directed networks. For example, a Bayesian network is a directed acyclic graph that fully represents the joint probability distribution of the variables. The edge of the directed acyclic graph represents directed and pairwise conditional dependency of one random variable (corresponding to a node) on another variable. The absence of the edge represents conditional independence between the two nodes. For Bayesian networks, see, e.g., [42, 43]. For other techniques, see, e.g., [34, 44, 21]. While these methods reveal potential causal links, even from cross-sectional data, we do not consider them further here in our discussion of correlation matrices and related general similarity matrices, whose inherently symmetric natures mean that these matrices or networks do not in principle inform us of causality or directionality between nodes (at least not in a straightforward manner [45, 46, 47]). In a related vein, we do not discuss time-lagged correlation of multivariate time series in this paper, since these are also asymmetric in general, although many of the same considerations we raise here also apply to lagged correlations.

2 Data types leading to correlation networks

Correlation network analysis is common in many research fields. In this section, we survey typical correlation networks and their analysis in representative research fields.

2.1 Psychological networks

There are various multivariate psychological data, from which one can construct networks [25, 21]. For example, in personality research, researchers construct personality networks in which each node can be a personal trait or goal such as being organized, being lazy, and wanting to stay safe. Edges between a pair of nodes typically represent a type of conditional association between the two nodes. Samples are frequently participants in the research responding to various questionnaires on a numeric scale (e.g., 5-point scale ranging from 1: strongly disagree to 5: strongly agree) corresponding to nodes. From a cross-sectional data set, one can calculate (conditional) correlation between pairs of nodes. Researchers are also increasingly combining surveys with alternative data collection modalities, for example, sensor data for daily movement or neural markers of stress [48, 49]. It is reasonable to use correlation between signals from different modalities (e.g., smartphones and brain scanners) to construct a correlation network [49].

Another major type of psychological network is symptom networks employed in mental health research. Symptoms of a psychological, including psychopathological, condition, such as major depression and schizophrenia, are interrelated. Furthermore, causality between symptoms such as fatigue, headaches, concentration problems, and insomnia, and a psychopathological disorder, is often unclear. It has been suggested that a disorder does not originate from a single root cause, which motivates the study of symptom networks [50, 51, 52, 21]. Nodes in a symptom network are symptoms, and one can use association between pairs of symptoms calculated from the participants’ responses to define edges. Analysis of symptom networks may help us to predict how an individual develops psychopathology in the future, understand comorbidity as strong connection between symptoms of two mental disorders, and propose central nodes as possible targets of intervention [52]. Health-promoting behaviors can also be treated as nodes in these networks to suggest key behavioral intervention points [53].

Panel data, i.e., longitudinal measurements of variables from samples, are increasingly common for network approaches [21]. In this case, one obtains correlation networks at multiple time points. Then, one can construct time-varying correlation networks (see section 3.9) or within-person correlation networks [54] that reflect temporal symptom patterns and ideally expose individual differences and possible causal pathways in mental health patterns related to disorders [55, 56]. However, the validity of psychological network approach should be further studied. Research has shown that symptom networks have poor reproducibility across samples, likely due to measurement error in assessing symptoms among other reasons [51, 57].

2.2 Brain networks

Various notions of brain connectivity have been essential to better understanding different neural functions. Studies of such brain networks constitute a major part of a research field that is often referred to as network neuroscience [58, 59]. (See also the related material about network representations in [60].) Multivariate time series of neuronal signals recorded from the brain are a major source of data used in network neuroscience research. Such data may be recorded in a resting state or when participants are performing some task. Functional networks or functional connectivity refer to correlation-based networks constructed from multivariate neuronal time series data, obtained through, e.g., neuroimaging or electroencephalography, where the term “functional” in this setting effectively means correlational. A typical node in the brain networks is either a voxel (i.e., cube of side length of, e.g., 1 mm) or a spherical region of interest (ROI), which is a sphere in the brain. In the case of multivariate time series data, there are various other methods to infer directed brain networks, which is referred to as effective connectivity, but we do not cover directed networks in this article. Brain networks based on anatomical connectivity between brain regions are referred to as structural networks. Functional connectivity, or a correlation-based edge between two nodes in the brain does not imply the presence of an edge between the same pair of nodes in the structural network. Indeed, one does not expect a one-to-one correspondence between functional and structural brain networks because several brain states and functions continuously arise from the same anatomical structure [61]. Still, the possibility of studying structural networks in combination with functional networks on the same set of nodes is a distinguishing feature of brain networks, which can be used for an additional comparison when validating the outcome of correlation-based networks [62]. See [58, 32, 63, 64, 24, 62, 65] for reviews and comparisons of techniques for the estimation and validation of brain networks from the (partial) correlations. Examples of the use of these methods for (functional) network analysis are discussed later in this review.

The most typical functional neuronal networks come from neuroimaging data, in particular functional magnetic resonance imaging (fMRI) data, which are measured using blood-oxygeneration-level-dependent (BOLD) imaging techniques [66]. Functional connectivity between voxels or between spherical ROIs, or other types of nodes, is calculated by a correlation between fMRI time signals at the two nodes after one has bandpassed the fMRI time series at each node to remove artifacts, with a frequency band of, e.g., 0.01-0.1 Hz. Functional MRI improves on electroencephalogram (EEG) and magnetoencephalography (MEG) in spatial resolution at the expense of temporal resolution, but functional EEG and MEG networks are not uncommon. We also note that EEG and MEG signals are oscillatory, so one has to calculate the functional connectivity between each pair of nodes using methods that are aware of the oscillatory nature of the signal, such as using phase lag index or amplitude envelope correlation [67] rather than conventional correlation coefficients or mutual information.

Structural covariance networks are another type of correlation brain network where the edges are defined as the correlation/covariance of the corrected gray matter volume or cortical thickness between brain regions and , where the samples are participants [68, 69]. Morphometric similarity networks are a variant of structural covariance networks. In morphometric similarity networks, one uses various morphometric variables, not just a single one such as cortical thickness, for each node (i.e., ROI) [70]. One calculates the correlation between two nodes by regarding each morphometric variable as a sample. Therefore, differently from structural covariance networks based on cortical thickness, one can calculate a correlation network for each individual.

In neuroreceptor similarity networks, an edge between two nodes, or ROIs, is the correlation in terms of receptor density [71]. Specifically, one first calculates a vector of neurotransmitter density for each ROI, with each entry of the vector corresponding to one type of receptor. Then, one computes the correlation between each pair of ROIs, called receptor similarity.

2.3 Gene co-expression networks

Genes do not work in isolation. Gene co-expression networks have been useful for figuring out webs of interaction among genes using network analysis methods [72, 73, 74, 75, 76, 34, 77, 78, 79]. They are a type of data in a subfield of network science often referred to as network biology or network medicine. Gene co-expression networks are correlation networks in the generalized sense considered here, including the case of other measures of similarity. A typical measurement is the amount of gene expression for different genes and samples, where a sample most commonly corresponds to a human or animal individual. If one measures the expression of various genes for the same set of samples, we can calculate the co-expression between each pair of genes by calculating the sample correlation, yielding a correlation matrix. Depending on the questions being asked in the study, it may be important to calculate the underlying correlations with different factors to account for the effects of heterogeneous gene frequencies [80, 81]. It is common to transform a correlation matrix into a network and then apply various network analysis methods, for example community detection with the aim of estimating the group of genes that are associated with the same phenotype222A phenotype is a set of observable traits of an organism and is usually contrasted with the underlying genotype that causes (or influences) the phenotype. such as a disease. In this manner, correlation network analysis has been a useful tool for gene screening, which can lead to identification of biomarkers and therapeutic targets. In addition to community detection, identifying hub genes in co-expression networks helps finding key genes, for example, for cancer [82].

Different ways of defining co-expression matrices and networks from gene expression data include tissue-to-tissue co-expression (TTC) networks [83] (also see [84, 85]). A TTC network proposed in [83] is a bipartite network, and its node is a gene-tissue pair. An edge between two nodes, denoted by and , where and are genes, and and are tissues, represents the sample correlation as in conventional co-expression networks. However, by definition, the correlation is calculated only between node pairs belonging to different tissues, i.e., only for . Therefore, TTC networks characterize co-expression of genes across different tissues.

Co-expression of genes and implies that and are both expressed at a high level in some samples (usually individuals) and both expressed at a low level in other individuals. Co-expressed genes tend to be involved in common biological functions. There are in fact multiple biophysical and non-biophysical reasons for gene co-expression [76]. For example, a transcription factor, a protein that binds to DNA, may regulate different genes and that are physically close on a chromosome. If this is the case, differential levels of regulation by the transcription factor across individuals can create co-expression of and . Another mechanism of co-expression is that the expression of genes and , which may be located far from each other on the chromosome or on different chromosomes, may depend on the temperature. Then, and would be co-expressed if different individuals are sampled from living environments with different temperatures. Variation in ages of the individuals can similarly create co-expression among age-related genes. Alternatively, co-expression may originate from non-biological sources, such as technical or laboratory ones, whose exact origins are often unknown.

One is often interested in looking for differential co-expression, which refers to the different levels of gene co-expression between two phenotypically different sets of samples, such as a disease set versus a control set, or in two types of tissues [76, 78]. Differential co-expression often reveals information that one cannot obtain by examining differential expression (as opposed to co-expression), i.e., different levels of gene expression between the two sets of samples [86].

2.4 Metabolite networks

Metabolites are small molecules (e.g., amino acids, lipids, vitamins) that are intermediates or end products of metabolic reactions. One can also construct correlation networks from metabolomics data, or data of metabolites and their reactions [87, 88].To inform the edge, one measures pairwise correlation between the amounts of two metabolites given the samples. Like gene co-expression, correlation between metabolites can occur for multiple reasons, including knock-out of a gene coding an enzyme that is involved in a chemical reaction consuming or producing two metabolites, different temperatures or other environmental conditions under which different samples are obtained, or intrinsic variability owing to cellular metabolism [87]. Note that mass conservation within a moiety-conserved cycle produces negative correlation between at least one pair of metabolites involved in the reaction [89]. That said, in some cases one may consider correlation or other similarity between only a subset of metabolites that are not necessarily associated to one another by direct chemical processes but instead draw from a set of alternative biochemical processes (see, e.g., [90]).

2.5 Microbiome networks

Microbes interact with other microbe species as well as with their environments. Understanding of microbial composition and interaction in the human gut is expected to inform multiple diseases. Similarly, understanding soil microbial communities may contribute to enhancing plant productivity. Network analysis is adept at revealing, e.g., ecological community assembly and keystone taxa, and has been increasingly contributing to these fields.

In microbiome network analysis, one collects samples from, e.g., soil, at various time points or locations. Each sample from an environment (e.g., soil, gut, animal corpus, or water) contains various microorganisms with different quantities. Co-occurrence network analysis is increasingly common in this field, aided by an increasing amount and accuracy of data [33, 91, 92]. In a microbiome co-occurrence network, nodes are microorganisms (e.g., bacteria, archaea, or viruses), specified at the taxa level, for example, and an edge is defined to exist if two nodes co-occur across the samples. Therefore, microbiome co-occurrence networks are essentially microbiome correlation networks, and the usual correlation measures, such as Pearson correlation, can be used to determine edge data, but more sophisticated methods to define edges are more often used. (See [33, 91] for various co-occurrence network construction methods.) Positively weighted edges result because of, e.g., cooperation between two taxa, sharing of niche requirements, or co-colonization. Negatively edges result because of, e.g., competition for space or resources, prey-predator relationships, or niche partitioning. A historically famous example of negative co-occurrence in ecological community assembly study is the checkerboard-like presence-absence patterns of two bird species inhabiting an island, discussed by Jared Diamond [93]. (Also see [33] for a historical account.) Regardless, one should keep in mind that correlation, or co-occurrence, does not immediately imply physical interaction between two taxa.

2.6 Disease networks

A node in a disease network is a disease phenotype. Correlation between two diseases defines an edge, and there are various definitions of edges as we introduce in this section. Each definition of edge creates a different type of disease network.

Comorbidity, also called multimorbidity [94], is the simultaneous occurrence of multiple diseases within an individual. One cause of comorbidity is that the same gene or disease-associated protein can trigger multiple diseases. Other causes, such as environmental factors or behaviors, such as smoking, can also result in comorbidity. A collection of potentially comorbid diseases can be modeled as the nodes of a network, and the edges, which are based on comorbidity or other similarity index between diseases [88], are correlational in nature.

The authors of [95] constructed phenotypic disease networks (PDNs) in which nodes are disease phenotypes. The edges are sample correlation coefficients or a variant, and the samples are patients in a hospital claim record (i.e., Medicare claims in the US). Note that here one uses correlation for binary variables because each sample (i.e., patient) is either affected or not affected by any disease . The authors found that, for example, patients tend to develop illness along the edges of the PDN [95].

Similarly, prior work constructed a human disease network when two diseases share at least one associated gene, which is similar in principle to the phenotypic disease network despite that the edge of the human disease network is not a conventional correlation coefficient [96] (also see [97]). Similarly, an edge in a metabolic disease network is defined to exist when two diseases are either associated with the same metabolic reaction or their metabolic reactions are adjacent to each other in the sense that they share a compound that is not too common [98]. (H2O and ATP, for example, are excluded because they are too common.) Alternatively, in a human symptom disease network [99], the edge between a pair of diseases is a correlation measure in which each sample is a symptom. In other words, roughly speaking, the edge weight is large when two diseases share many symptoms.

2.7 Financial correlation networks

Stocks of different companies are interrelated, and the prices of some of them tend to change similarly over time. A common transformation of such financial time series before constructing correlation matrices and networks is into the time series of logarithmic return, i.e., the successive differences of the logarithm of the price, given by

| (1) |

where is the price of the th financial asset at time , such as the closure price of the th stock on day , and . An advantage of this method is that is not susceptible to changes in the scale of over time [100]. Then, one constructs the correlation matrix for time series , where .

Financial correlation matrices have been analyzed for decades. For example, Markowitz’s portfolio theory provides an optimal investment strategy as vector , where represents the fraction of investment in the th financial asset, and ⊤ represents the transposition [5, 6]. The theory formulates the minimizer of the variance of the return, , where is the covariance matrix, as the solution of a quadratic optimization problem with the constraint that the expected return, , where , and is the expected return for the th asset, is larger than a prescribed threshold.

Financial correlation matrices have also been extensively studied in econophysics research since the 1990s, with successful uses of RMT [100, 101, 102, 6, 103, 104, 105, 106] and network methods such as maximum spanning trees [107, 108], community detection [109, 104, 110, 105, 106], and more advanced methods (see [23, 22] for reviews). One usually employs RMT in this context to verify that most eigenvalues of the empirical financial correlation matrices lie in the bulk part of the distribution of eigenvalues for random matrices. Such results imply that most eigenvalues of the empirical correlation matrices can be regarded as noise, and one is primarily interested in other dominant eigenvalues of the empirical correlation matrices whose values are not explained by random matrix theory [101, 102, 103, 106]. The largest eigenvalue is usually not explained by RMT and is often called the market mode because it represents the movement of the market as a whole; moreover, other deviating eigenvalues are also present, encoding for the presence of groups of stocks that move coherently, as we discuss in section 4.3.

Other types of financial data are possible. For example, correlation networks were constructed from pairwise correlation between the daily time series of the investor’s behavior (i.e., the net volume of Nokia stock traded or its normalized version) for two investors [111, 112]. One can also renormalize the covariance matrix using other indices, such as momentum [113].333Momentum in finance generally refers to the rate of change in price. If the prices of two assets are correlated, the momentum of one asset can be informative of the future price of the correlated asset [114]. In [113], momentum and price correlation are mixed in various ways to construct correlation-type networks that reflect collective price dynamics, and, for example, network centrality is predictive of large upcoming swings in asset prices.

2.8 Bibliometric networks

Apart from microbiome studies, bibliometric and scientometric studies are another research field in which co-occurrence networks are often used [115, 116]. For example, in an academic co-authorship network, a node represents an author, and an edge represents co-occurrence (i.e., collaboration) of two authors in at least one paper. One can weigh the edge according to the number of coauthored papers or its normalized variant [117]. While keeping authors as nodes, one can also create other types of co-occurrence networks, such as co-citation networks in which an edge connects two authors whose papers are cited together by a later paper, and keyword-based co-occurrence networks in which an edge connects two authors sharing keywords associated with their papers. Nodes of co-occurrence bibliometric networks can also be journals, institutions, research areas, and so forth. These co-occurrence networks are mathematically close to correlation networks and have been useful for understanding research communities and specialities, communication among researchers, interdisciplinarities, and the structure and evolution of science, for example.

Various other web-based information has also been analyzed as co-occurrence networks. For example, tags annotating posts in social bookmarking systems can be used as nodes of co-occurrence networks [118]. Two tags are defined to form an edge if both tags appear on the same post at least once. One can also use the number of the posts in which the two tags co-appear as the edge weight. Another example is co-purchase networks in online marketplaces, in which a node represents an item, and an edge represents that customers frequently purchase the two items together [119].

2.9 Climate networks

Climate can be analyzed as a network of interconnected dynamical systems [120, 121, 122, 123]. In most analyses, the nodes of the network are equal-angle latitude-longitude grid points on the globe. However, such angular partitions lead to grid cells with geometric areas that vary with latitude, which in particular might lead to spurious correlations in the measured quantities, especially near the poles; such biases might be addressed either by a node splitting scheme that aims to obtain consistent weights for the network parameters [124], or by choosing instead to work on a grid with (possibly only approximately) equal grid cell areas [125]. Each node has, for example, a time series measurement of the pressure level, which represents wind circulation of the atmosphere. The edge between a pair of nodes is based on the correlation between the two time series. An early study showed that all nodes in equatorial regions have large degree (i.e., the number of edges that a node has) regardless of the longitude, whereas only a small fraction of nodes in the mid-latitude regions had large degrees [120]. Climate networks have been further used for understanding mechanisms of climate dynamics and predicting extreme events. For example, early warning signals were constructed from the degree of the nodes and clustering coefficient for climate networks of the Atlantic temperature field [126]. The proposed early warning signals were effective at anticipating the collapse of Atlantic Meridional Overturning Circulation. See section 2.1 of [123] for more examples.

3 Methods for creating networks from correlation matrices

To apply network analysis to correlation matrix data, we need to generate a network from correlation data (usually in the form of a correlation matrix). We call such a network a correlation network. Whether correlation network analysis works or is justified depends on this process. Although there are various methods for constructing correlation networks from data, they have pros and cons. Furthermore, there are various unjustified practices around correlation network generation, which may yield serious limitations on the power of correlation network analysis. In this section, we review several major methods.

3.1 Estimation of covariance and correlation matrices

How to estimate covariance matrices from observed data when the matrix size is not small is a long-standing question in statistics and surrounding research fields. In particular, the sample covariance matrix, a most natural candidate, is known to be an unreliable estimate of the covariance matrix. See [127, 128, 129, 6] for surveys on estimation of covariance matrices. Although the primary focus of this paper is estimation of correlation networks, not covariance or correlation matrices, it is of course important to realize that correlation networks created from unreliably estimated correlation matrices are themselves unreliable. Therefore, we briefly survey a few techniques of covariance and correlation matrix estimation in this section, including providing the notations and preliminaries used in the remainder of this paper. This exposition is important in particular because correlation network analysis in non-statistical research fields such as network science and also various applications often ignores statistical perspectives examined in the previous studies.

We denote by an data matrix, where is the number of nodes to observe the signal from, and is the number of samples, which is typically the length of the time series or the number of participants in an experiment or questionnaire. The sample covariance matrix, , is given by

| (2) |

where

| (3) |

is the sample mean of the signal from the th node.444Note the in the denominator of Eq. (2) is necessary to obtain an unbiased estimator. Because Eq. (2) is a sum of outer products, the rank of is at most . One can understand this fact more easily by rewriting Eq. (2) as

| (4) |

where Therefore, is singular if , while the converse does not hold true in general.

Although covariance matrices are mathematically convenient, they are not normalized. In particular, if we multiply the data from the th node by (), then , where , changes by a factor of , and changes by a factor of , whereas all the other entries of remain the same. In practice, the data from different nodes may have different baseline fluctuation levels. For example, the price of the th stock may fluctuate much more than that of the th stock because the former has a larger average or the industry to which the th company belongs may be subject to higher temporal variability. The correlation matrix, denoted by , normalizes the covariance matrix such that is not subject to the effect of different overall amounts of fluctuations across different nodes. The sample Pearson correlation matrix, denoted by , is defined by

| (5) |

Note that . Also note that every sample correlation matrix is a sample covariance matrix of some data but not vice versa. A correlation matrix is characterized by positive semidefiniteness, symmetry, range of the entries only being , and the diagonal being equal to [130]. The set of full-rank correlation matrices for a fixed is called the elliptope, which has its own geometric structure [131, 132]. For standardized samples , the Euclidean distance between vectors and is given by

| (6) |

Therefore, given the sample correlation matrix, defines a Euclidean distance [107, 100].

In the following, we refer to covariance matrices in some cases and correlation matrices in others, often interchangeably. This is because the correlation matrix, which is a normalized quantity, should be used in most data analyses, while the covariance matrix allows better mathematical analysis in most cases. This convention is not problematic because, if we standardize the given data first and then calculate the covariance matrix for the standardized data (), then the obtained covariance matrix is also a correlation matrix. Therefore, the mathematical results for covariance matrices also hold true for correlation matrices as long as we feed the pre-standardized data to the analysis pipeline.

With the above consideration in mind, we now stress that it is important to distinguish the sample covariance matrix, which is calculated from empirical data, from the theoretical or ‘true’ (also called population) covariance matrix. One may use the true covariance matrix to model the observed data mathematically in terms of a random process described by a (stationary) probability distribution. Let denote a random variable for . The true covariance matrix is given by

| (7) |

where represents the expectation, and is the ensemble mean of . Equation (7) implies that a covariance matrix is a symmetric matrix. It is also a positive semidefinite matrix. Conversely, a symmetric positive semidefinite matrix is always a covariance matrix for the following reason. Any positive semidefinite matrix allows a positive semidefinite matrix, denoted by , as square root. We set

| (8) |

where , , are independent random variables, with each having mean and variance . Then, it is straightforward to see that has mean and covariance matrix .

Having distinguished population and sample covariance matrices, we now look for a relationship between the two. If we regard as a random variable, and the observed data as a possible realization of that variable, then we must also regard the sample covariance matrix as a random variable, and the empirical sample correlation matrix as a single realization of that variable. Then, the relevant question is the characterization of the probability distribution governing the sample covariance matrix, given the true covariance matrix which acts a set of fixed parameters (to be estimated from the data) for the distribution. Clearly, the number of observations, regarded as the number of independent draws for each random variable , is another parameter (not to be estimated). For any finite , the sample covariance matrix obeys the so-called Wishart distribution with degrees of freedom, denoted by , under the assumption that the samples are i.i.d. and obey the multivariate normal distribution whose covariance matrix is [133, 134, 3, 6]. We obtain . In other words, the sample covariance matrix is an unbiased estimator of the true covariance matrix, called the Pearson estimator in statistics. The variance of is equal to . In fact, is a problematic substitute of , and the use of in applications in place of tends to fail; see [6] for an example in portfolio optimization. An intuitive reason why is problematic is that, if is not much larger than , which is often the case in practice, one would need to estimate many parameters from a relatively few observations. Specifically, the covariance and correlation matrices have and unknowns to infer, respectively, whereas there are samples of vector available [135]. If is not vanishingly small (called the large dimension limit or the Kolmogorov regime [6]), then the estimation would fail. As an extreme example, if , then is singular, but the true may be nonsingular. Even if , matrix may be ill-conditioned if is not sufficiently greater than , whereas may be well-conditioned.

Therefore, covariance selection to reduce the number of parameters to be estimated is a recommended practice when is not large relative to [135]. One also says that we impose some structure on the estimator of the covariance matrix, with the mere use of the sample covariance matrix as an estimate of the true covariance matrix corresponding to no assumed structure.

A major method of covariance selection is to impose sparsity on the covariance matrix or the so-called precision matrix (also called the concentration matrix), which is the inverse of the covariance matrix (with entries representing un-normalized partial correlation coefficients; see section 3.6). Note that a sparse precision matrix does not imply that the corresponding covariance matrix (i.e., its inverse) is sparse. Graphical lasso (see section 3.7) is a popular method to estimate a sparse precision matrix. Another major method to estimate a sparse correlation matrix is to threshold on the value of the correlation to discard node pairs with correlation values close to (see section 3.2). Another common method of covariance selection, apart from estimating a sparse covariance matrix, is covariance shrinkage (see [136] for a review). With covariance shrinkage, the estimated covariance matrix is a linear weighted sum of the sample covariance matrix, , and a much simpler matrix, called the shrinkage target, such as the identity matrix [137, 138, 139] or the so-called single-index model (which is a one-factor model in factor analysis terminology and is an approximation of by a rank-one matrix plus residuals) [137]. Note that the shrinkage target is a biased estimator of . These and other covariance selection methods balance between the estimation biases and variances.

An advanced estimation method for the entire correlation matrix, based on RMT, is the so-called optimal rotationally invariant estimator (RIE) [6, 65]. Roughly speaking, the RIE is the closest (in some spectral sense) matrix, among all those having the same eigenvectors as the sample correlation matrix, to the ‘true’ correlation matrix. It uses a certain self-averaging property to infer the spectrum of the true matrix from that of the sample matrix, and then to compute the spectral distance from the true matrix to be minimized [6]. A specific cross-validated version of the RIE has been recently shown to outperform several other estimators [65]. Since the RIE requires some notion of RMT to be properly defined, we discuss it in section 4.3.

3.2 Dichotomization

In this and the following subsections, we present several methods to generate undirected networks from correlation matrices.

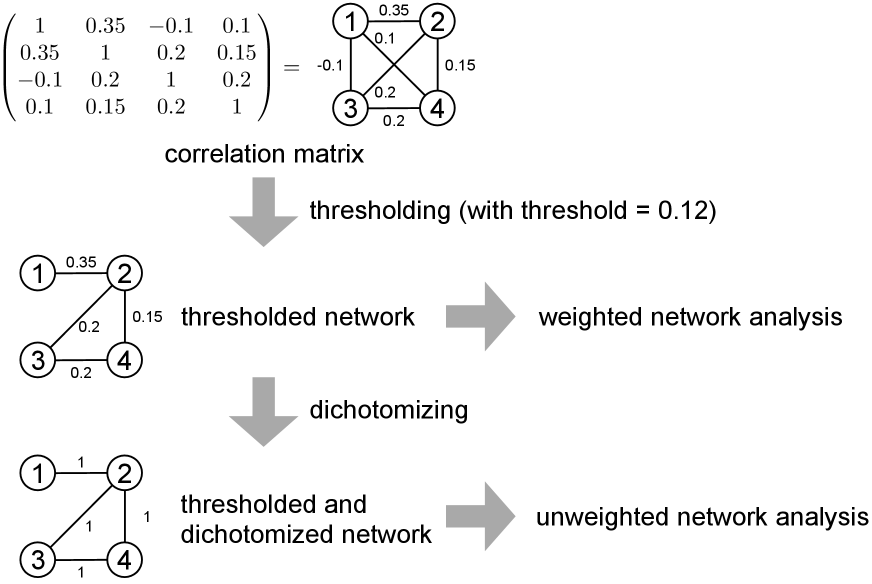

A simple method to generate a network from the given correlation matrix is thresholding, which in its simplest form entails setting a threshold , and placing an unweighted edge if and only if the Pearson correlation ; otherwise, we do not include an edge . It is also often the case that one thresholds on . There are mainly two choices after the thresholding. First, we may discard the weight of the surviving edges to force it to , creating an unweighted network. Second, we may keep the weight of the surviving edge to create a weighted network. See Fig. 2 for these two cases. The literature often use the term thresholding in one of the two meanings without clarification. In the remainder of this paper, we call the first case dichotomizing (which can be also called binarizing), which is, precisely speaking, a shorthand for “thresholding followed by dichotomizing”. We discuss dichotomized networks in this section and threshold networks without dichotomization (yielding weighted networks) in sections 3.4 and 3.7.

Dichotomizing has commonly been used across research areas. However, researchers have repeatedly pointed out that dichotomizing is not recommended for multiple reasons.

First, no consensus exists regarding the method for choosing the threshold value [140, 141, 24, 142, 143, 144] despite that results of correlation network analysis are often sensitive to the threshold value [145, 141, 146, 142, 143, 144, 147]. In a related vein, if a single threshold value is applied to the correlation matrix obtained from different participants in an experiment, which is typical in neuroimaging data analysis and referred to as an absolute threshold [142, 148], the edge density can vary greatly across participants. Since edge density is heavily correlated with many network measures, this can be seen as introducing a confound into subsequent analyses and casts doubt on consequent conclusions, e.g., that sick participants tend to have less small-world brain networks than healthy controls. (In this example, a network with a large edge density would in general yield a small average path length and large clustering coefficient, leading to the small-world property, so that density differences alone could have driven the observed effect.) An alternative method for setting the threshold is the so-called proportional thresholding, with which one keeps a fixed fraction of the strongest (i.e., most correlated) edges to create a network, separately for each participant [142, 148]; also see [149, 150, 151] for an early study. In this manner, the thresholded networks for different participants have the same density of edges. However, while the proportional thresholding may sound reasonable, it has its own problems [148]. First, because different participants have different magnitudes of overall correlation coefficient values, the proportional threshold implies that one includes relatively weakly correlated node pairs as edges for participants with an overall low correlation coefficients. This procedure increases the probability of including relatively spurious node pairs, which can be regarded as type I errors (i.e., false positives), increasing noise in the resulting network. (Also see [152, 153] for discussion on this matter.) Second, the overall correlation strength is often predictive of, for example, a disease in question. The proportional threshold enforces the same edge density for the different participants’ networks. Therefore, it gives up the possibility of using the edge density, which is a simplest network index, to account for the group difference. If one uses the absolute threshold, the edge density is different among participants, and one can use it to characterize participants. The edge density in the proportional thresholding is also an arbitrary parameter.

Second, apart from false positives due to keeping small-correlation node pairs as edges, correlation networks at least in its original form suffer from false positives because pairwise correlation does not differentiate between direct effects (i.e., nodes and are correlated because they directly interact) and indirect effects (i.e., nodes and are correlated because and interact and and interact). In other words, correlations are transitive. The correlation coefficient is lower-bounded by [154]

| (9) |

Equation (9) implies that if and are large, i.e., sufficiently close to , then is positive. Furthermore, this lower bound of is usually not tight, suggesting that tends to be more positive than what Eq. (9) suggests when [155, 156]. This false positive problem is the main motivation behind the definition of the partial correlation networks and related methods with which to remove such a third-party effect, i.e., influence of node in Eq. (9). (See section 3.6.) Instead, one may want to suppress false positives by carefully choosing a threshold value. Let us consider the absolute thresholding. For example, if and do not directly interact, and do, and also do, yielding , and , then setting enables us to remove the indirect effect by . However, it may be the case that and do not directly interact, and do, and also do, yielding , , and . Then, thresholding with dismisses direct as well as indirect interactions (that is, it introduces false negatives). A related artifact introduced by the combination of thresholding and indirect effects is that thresholding tends to inflate the abundance of triangles, as measured by the clustering coefficient for dichotomized (and therefore unweighted) networks, and other short cycles [87, 156]; even correlation networks generated by dichotomizing randomly and independently generated data have high clustering coefficients [156]. This phenomenon resembles the fact that spatial networks tend to have high clustering just because the network is spatially embedded [157, 158].

Third, whereas thresholding has been suggested to be able to mitigate uncertainty on weak links (including the case of the proportional thresholding to some extent) and enhance interpretability of the graph-theoretical results (e.g., [24]), thresholding in fact discards the information contained in the values of the correlation coefficient. For example, in Fig. 2, thresholding turns a correlation of and into the absence of an edge. Furthermore, if we dichotomize the edges that have survived thresholding, a correlation of and are both turned into the presence of an edge.

There are various methods to try to mitigate some of these problems. In the remainder of this section, we cover methods related to dichotomizing.

One family of solutions is to integrate network analysis results obtained with different threshold values [24] (but see [159] for a critical discussion). For example, one can calculate a network index, such as the node’s degree, denoted by , as a function of the threshold value, , and fit a functional form to the obtained function to characterize the node [146]. Similarly, one can calculate for a range of values and take an average of [26, 160]. In the case of group-to-group comparison, an option along this line of idea is the functional data analysis (FDA), with which one looks at as a function of across a range of values and statistically test the difference between the obtained function for different groups by a nonparametric permutation test [161, 162]. In these methods, how to choose the range of is a nontrivial question.

A different strategy is to determine the threshold value according to an optimization criterion. For example, a method was proposed [143] for determining the threshold value as a solution of the optimization of the trade-off between the efficiency of the network [163] and the density of edges. Another method to set is to use the highest possible threshold that guarantees all or most (e.g., 99%) of nodes are connected [164].

The so-called maximal spanning tree is an easy and classical method to automatically set the threshold by guaranteeing that the network is connected [107, 100], while at the same time avoiding the creation of edges that would form loops (and are therefore unnecessary for connectedness). One adds the largest correlation node pairs as edges one by one under the condition that the generated network is the tree. In the end, the maximal spanning tree contains all the nodes, and the number of edges is . Thanks to the mapping from (large) sample correlation coefficients to (small) Euclidean distances established by Eq. (6), the maximal (in the sense of correlation) spanning tree is sometimes called the minimum (in the sense of distance) spanning tree [100, 107] (MST). The MST can be viewed as the graph achieving the overall minimum length among all graphs that make the nodes reachable from one another. Here, the minimum length is defined as the sum of the lengths of its realized edges, and the length of an edge is the metric distance between its endpoints. The maximal spanning tree also allows a hierarchical tree representation, which facilitates interpretation [107, 165, 166]. However, the generated network is extreme in the sense that it is a most sparse network among all the connected networks on nodes, without any triangles. A variant of the maximum spanning tree is to sequentially add edges with the largest correlation value under the constraint that the generated network can be embedded on a surface of a prescribed genus value (roughly speaking, the given number of holes) without edge intersection [167]. If the genus is constrained to be zero, the resulting network is a planar graph, called the planar maximally filtered graph (PMFG). The PMFG contains edges. The PMFG contains more information than the maximum spanning tree (which is in any case contained in the PMFG), such as some cycles and their statistics. Note that these methods effectively produce a threshold for the correlation value to be retained, but on the other hand preserve only some of the edges that exceed the threshold. Indeed, they are (designed to be) irreducible to the mere identification of an overall threshold value, with their merit residing in the introduction of higher-order geometric constraints guiding the dichotomization procedure.

Another related method is to use the nearest neighbor graph of the correlation matrix, with which each th node is connected at least to the nodes with the highest correlation with the th node [153]. Yet another choice, which is designed for general weighted networks, is the disparity filter, with which one preserves only statistically significant edges to generate the network backbone [168, 169]. Note that, with these methods as well, some lower-correlation node pairs are retained as edges and some higher-correlation edges are discarded.

Application example: Wang et al. compared functional networks of the brain between children with attention-deficit hyperactivity disorder (ADHD) and healthy controls using fMRI data [170]. The brain scan lasted for eight minutes in the resting state, and the brain image was acquired every two seconds. The authors used a previously published popular brain atlas defined by three-dimensional coordinates of 90 anatomical regions of interest (45 per hemisphere), each of which defines a node of the network. After various steps of preprocessing the original data, they computed the Pearson correlation coefficient between the time series at each pair of nodes, separately for each child. Aware of the problem inherent in choosing a threshold value, which we discussed earlier in this section, the authors examined dichotomized networks for a range of threshold values . As downstream analysis, they measured the small-world-ness of networks in terms of the so-called global efficiency and local efficiency [163]. A large global efficiency value and a small local efficiency value suggest that the network has a small-world property. They found that, while the networks of both ADHD and healthy control children were small-world, those of children with ADHD were somewhat less small-world than those of the controls across a wide range of ; the difference was statistically significant for the local efficiency in some range of .

3.3 Persistent homology

In the previous section, we discussed the idea of integrating analysis of dichotomized networks over different threshold values to mitigate the effect of selecting a single threshold value. Topological data analysis, or more specifically, persistent homology, provides a systematic and mathematically founded suite of methods to do such analyses. In topological analysis in general, one focuses on properties of shapes that do not change under continuous deformations of them, such as stretching and bending. Such topologically invariant properties include the numbers of connected components and of holes, which can be calculated through the so-called homology group. Persistent homology captures the changes in the network structure over multiple scales, or over a range of threshold values, clarifying topological features that are robust with respect to the threshold choice. In this broader perspective, networks are only a particular instance of the type of topological object under major consideration in topological data analysis, called simplicial complexes, because networks only consider pairwise interactions. One may want to consider the clique complex, in which each -clique (i.e., complete subgraph with nodes) in the network is defined to be a higher-order object called a -simplex and belongs to the simplicial complex of the given data. Note that the clique complex contains all the edges of the original network as well because an edge is a 2-clique by definition. For reviews of topological data analysis including persistent homology, see [171, 172, 173, 174, 175].

To analyze correlation matrix data using persistent homology, we start with a point cloud, with each point corresponding to a node in a correlation network. Then, we introduce a distance between each pair of nodes, , where . By setting a threshold value , we obtain a dichotomized network, or, e.g., a clique complex, depending on the choice, denoted by . The simplicial complexes with varying values forms a filtration, i.e., with the nestedness property

| (10) |

with the inclusion relationship in Eq. (10) referring to that of the edge set and that of any higher-order simplex object. The collection of clique complexes in Eq. (10) is called the Vietoris-Rips filtration. Simply put, with a larger threshold on the distance between nodes, the generated simplicial complex has more edges (and higher-order simplexes in the case of the clique complex). In practice, it suffices to consider the sequence of threshold values , such that , contains just one more edge (and some higher-order simplexes containing that edge) than ; if there are multiple node pairs with exactly the same distance value, the corresponding multiple edges not in simultaneously appear in . If is taken to vary over all possible topologies, the simplicial complex is composed of isolated nodes, while the last one in the nested sequence is the complete graph (that is, precisely, the corresponding clique complex) of nodes.

A large correlation should correspond to a small . One can realize this by setting , where is a monotonically decreasing function. Then, the network interpretation of Eq. (10) simply states the nested relationship in which the edges existing in a dichotomized network are included in dichotomized networks with smaller thresholds. However, while this practice is common, is not mathematically guaranteed to be a distance metric, typically violating the triangle inequality if we use an arbitrary monotonically decreasing function . Therefore, one often uses that makes a distance metric, such as Eq. (6) or variants thereof. Then, the ensuing topological data analysis of correlation networks is underpinned by stronger mathematical foundations.

The next step is to calculate for each the homology groups or associated quantities, such as the th Betti number. The zeroth and first Betti numbers are the numbers of connected components and essentially different cycles, respectively. These and other topological features of depend on . For example, each connected component and cycle may appear (through closing loops) and disappear (through mergers with others) as one gradually increases the distance threshold from , at which all the nodes are isolated. One can precisely visualize the occurrence of the birth and death events of each component by the persistence barcodes or persistence diagrams. For example, the persistence diagram represents each connected component, cycles, two-dimensional voids, etc. as a point in the two-dimensional space in which represents the value at which, e.g., a cycle appears and () represents the value at which the same cycle disappears. If is large, that particular feature of the data is robust over changing scales because it is independent of the specific value of within a relatively large range.

We are often motivated to compare different correlation matrices or networks, such as in the comparison of functional brain networks between the patient group and control group. Quantitative comparison between two persistent diagrams provides a threshold-free method to effectively compare dichotomized networks. A persistence diagram consists of a set of two-dimensional points . The bottleneck and Wasserstein distance, between persistence diagrams and , which are commonly used, first consider the best matching between in and that in . For the obtained best matching, both distance metrics measure the distance between in and that in in the Euclidean space and tally up the distance over all the points in different manners. For instance, the bottleneck distance is given by , where is a matching between and .

Persistent homology has been applied to correlation networks in neuronal activity data [141, 176, 177, 144], gene co-expression data [173, 178, 179], financial data [180, 181], and to co-occurrence networks of characters in literature work and in academic collaboration [182]. Scalability of persistent homology algorithms remains a concern. However, it may be of less concern for correlation network analysis because the number of nodes allowed for correlation networks is typically limited by the length of the data, not by the speed of algorithms (see section 3.1).

3.4 Weighted networks

A strategy for avoiding the arbitrariness in the choice of the threshold value and loss of information in dichotomizing is to use weighted networks, retaining the pairwise correlation value as the edge weight [73, 140]. Although there are numerous settings in network science where negative edge weights are considered, they are generally more difficult to treat. (See section 3.5.) As such, two common methods to create positively weighted networks are (1) using the absolute value of the correlation coefficient as the edge weight and (2) ignoring negatively weighted edges and only using the positively weighted edges. Both methods dismiss some information contained in the original correlation matrix, i.e., the sign of the correlation or the magnitude of the negative pairwise correlation. Nonetheless, these transformations are widely used because many methods are available for analyzing general positively weighted networks, many of which are extensions of the corresponding methods for unweighted networks. One can also use methods that are specifically designed for weighted networks [183].

It should be noted that weighted networks share the problem of false positives due to indirect interaction between nodes with the unweighted networks created by dichotomization. We also note that, in contrast to thresholding (which may be followed by dichotomization), node pairs with any small correlation (i.e., correlation coefficient close to ) are kept as edges in the case of the weighted network. This may increase the uncertainty of the generated network and hence of the subsequent network analysis results.

Thresholding operations in statistics literature to increase the sparsity of the estimated covariance matrix often produce weighted networks. This is in contrast to the dichotomization, which produces unweighted networks. Hard thresholding in statistics literature refers to coercing , with , to if and keep the original if [184, 127, 185, 186]. Soft thresholding [184, 187, 186] transforms by a continuous non-decreasing function of , denoted by , such that

| (11) |

This assumption implies that, in contrast to hard thresholding, there is no discontinuous jump in the transformed edge weight at . Both hard and soft thresholding, as well as a more generalized class of thresholding function [186], do not imply dichotomization and therefore generate weighted networks. In numerical simulations, all these thresholding methods to generate weighted networks outperformed the sample covariance matrix in estimating true sparse covariance matrices [186]. The same study also found that there was no clear winner between hard or soft thresholding, while combination of them tended to perform somewhat better than other types of thresholding.

Adaptive thresholding refers to using threshold values that depend on . An example is to use Eq. (11), but with an -dependent threshold value, denoted by , in place of , with , where is a constant, and is an estimate of the variance of [188]. This adaptive thresholding theoretically converges faster to the real covariance matrix and performs better numerically than universal thresholding schemes (i.e., using a threshold value independent of ).

Tapering estimators also threshold and suppress in an -dependent manner. A tapering estimator for the entry of the covariance matrix is given by , where if , if , and if . Here, tapering parameter is an even integer, and is the maximum likelihood estimator of . The tapering estimator more strongly suppresses the entries that are farther from the diagonal respecting the sparsity of the estimated covariance matrix. This estimator is optimal in terms of the rate of convergence to the true covariance matrix, and the value of realizing the optimal rate differs between the two major matrix norms with which the estimation error is measured [189].

Application example: Chen et al. compared gene co-expression networks between people with schizophrenia and non-schizophrenic controls [190]. They used the Weighted Correlation Network Analysis (WGCNA) software (see section 6), which is frequently used in gene co-expression network analysis, to construct weighted networks of genes. In short, it uses as the weight of edge , where is a parameter. Then, they compared gene modules, or communities of the network, between the schizophrenia and control groups. The module detection was carried out by an algorithm implemented in WGCNA. There was no significant difference between the schizophrenia and control groups in terms of the module structure (i.e., which genes are in each module). However, the eigengene — that is, the first principal component of the co-expression matrix within a module — of each of two modules was significantly associated with schizophrenia compared to the control. The hub genes of each of these two modules, NOTCH2 and MT1X, were the largest contributor to the respective eigengenes. The authors further carried out biological analyses of these two genes to clarify where their expressions are upregulated and the functions in which these genes may be involved. The results were similar for a biopolar disorder data set.

3.5 Negative weights

Correlation matrices have negative entries in general. In the case of both unweighted and weighted correlation networks, we often prohibit negative edges either by coercing negative entries of the correlation matrix to zero or by taking the absolute value of the pairwise correlation before transforming the correlation matrix into a network. We prohibit negative edges for two main reasons. First, in some research areas, it is often difficult to interpret negative edges. In the case of multivariate financial time series, a negative edge implies that the price of the two assets tend to move in the opposite manner, which is not difficult to interpret. In contrast, when fMRI time series from two brain regions are negatively correlated, it does not necessarily imply that these regions are connected by inhibitory synapses, and it is not straightforward to interpret negative edges in brain dynamics data [191, 24]. Second, compared to weighted networks and directed networks, we do not have many established tools for analyzing networks in which positive and negative edges are mixed, i.e., signed networks. Signed network analysis is still emerging [192]

In fact, negative edges may provide useful information. For example, they benefit community detection because, while many positive edges should be within a community, negative edges might ideally connect different communities rather than lie within a community. Some community detection algorithms for signed networks exploit this idea [192, 193]. Another strategy for analyzing signed network data is to separately analyze the network composed of positive edges and that composed of negative edges and then combine the information obtained from the two analyses. For example, the modularity, an objective function to be maximized for community detection, can be separately defined for the positive network and the negative network originating from a single signed network and then combined to define a composite modularity to be maximized [194, 140]. While these methods are designed for general signed networks, they have been applied to brain correlation networks [140, 193].

Another type of approach to signed weighted networks is nonparametric weighted stochastic block models [195, 196], which are useful for modeling correlation matrix data. Crucially, this method separately estimates the unweighted network structure and the weight of each edge but in a unified Bayesian framework. By imposing a maximum-entropy principle with a fixed mean and variance on the edge weight, they assumed a normal distribution for the signed edge weight. Because the edge weight in the case of correlation matrices, i.e., the correlation coefficient, is confined between and , an ad-hoc transformation to map to such as is applied before fitting the model. One can assess the goodness of such an ad-hoc transformation by a posteriori comparison with different forms of functions to transform to using Bayesian model selection [196]. In this way, this stochastic block model can handle negative correlation values. With this method, one can determine community structure (i.e., blocks) including its number and hierarchical structure.

3.6 Partial correlation

A natural method with which to avoid false positives due to indirect interaction effects in the Pearson correlation matrix is to use the partial correlation coefficient (as in, e.g., [197, 198, 199]). This entails measuring the linear correlation between nodes and after partialing out the effect of the other nodes. Specifically, to calculate the partial correlation between nodes and , we first compute the linear regression of on , which we write as , where is the coefficient of linear regression. Similarly, we regress on , which we write as . The residuals for samples are given by and , where . The partial correlation coefficient, denoted by , is the Pearson correlation coefficient between and .

In fact, the partial correlation coefficient between and () is given by

| (12) |

where is the precision matrix [133, 134]. Equation (12) implies that is equivalent to the lack of partial correlation, i.e., . This conditional independence property gives an interpretation of the precision matrix, . Equation (12) also implies that the partial correlation can be calculated only when is of full rank, whose necessary but not sufficient condition is . If is rank-deficient, a natural estimator of the partial correlation matrix is a pseudoinverse of . However, the standard Moore-Penrose pseudoinverse is known to be a suboptimal estimator in terms of approximation error [200, 201], while the pseudoinverse is useful for screening for hubs in partial correlation networks [201]. If is of full rank, as well as is positive definite. Therefore, although Eq. (12) only holds true for , if we denote the matrix defined by the right-hand side of Eq. (12) including the diagonal entries by , then the diagonal entries of are equal to , and is negative definite. We can verify this by rewriting Eq. (12) as , where is the diagonal matrix whose diagonal entries are , , . If we consider matrix , where is the identity matrix, as a partial correlation matrix to force its diagonal entries to instead of , the eigenvalues of are upper-bounded by [202].

By thresholding the partial correlation matrix, or using an alternative method, one obtains an unweighted or weighted partial correlation network, depending on whether we further dichotomize the thresholded matrix. Because the partial correlation avoids the indirect interaction affect, the network created from random partial correlation matrices yields, for example, smaller clustering coefficients [156] than if we had used the Pearson correlation matrix.

While it apparently sounds reasonable to partial out the effect of the other nodes to determine a pairwise correlation between two nodes, it is not straightforward to determine when the partial correlation matrix is better than the Pearson correlation one. First, Eq. (12) implies that extreme eigenvalues of are those of a normalized precision matrix. Because the precision matrix is the inverse of the covariance matrix , extreme eigenvalues of are derived from eigenvalues of with small magnitudes. It is empirically known for, e.g., financial time series, that small-magnitude eigenvalues of the covariance matrices are buried in noise, i.e., not distinguishable from eigenvalues of random matrices [101, 102], as we discuss later in section 4. Therefore, the dominant eigenvalue of the precision matrix is strongly affected by noise [203].

Second, the entries of are more variable than those of Pearson correlation matrices. Specifically, if obeys a multivariate normal distribution, the Fisher-transformed partial correlation of a sample partial correlation, i.e.,

| (13) |

where is the sample partial correlation calculated through Eq. (12) with , approximately obeys the normal distribution with mean and standard deviation . This result dates back to Fisher (see e.g., [204]). In contrast, the corresponding result for the Fisher transformation of the Pearson correlation coefficient is that the transformed variable approximately obeys the normal distribution with mean and standard deviation [204]. Therefore, the partial correlation has more sampling variance than the Pearson correlation unless .

Third, partial correlation matrices typically have more negative entries and smaller-magnitude entries than Pearson correlation matrices [205, 204]. Combined with the larger variation of the sample partial correlation than the sample Pearson correlation discussed just above, the tendency that has a smaller magnitude than poses a challenge of statistically validating the estimated partial correlation networks [204].

Studies in neuroscience have compared partial correlation networks with simple correlation networks and/or with the corresponding underlying structural networks [198, 206, 32, 207, 208, 209, 62] (see section 2.2). One study found that the similarity between partial correlation networks and structural networks is higher than that between correlation networks and structural networks [62].

Application example: Wang, Xie, and Stanley analyzed correlation networks composed of stock market indices from 2005 to 2014 from 57 countries [210], widely covering the continents of the world, with each country corresponding to a node. They computed Pearson and partial correlation coefficients for the time series of the logarithmic returns, given by Eq. (1), between each pair of countries, converted the correlation coefficient value into a distance (see Eq. (6)), and constructed the minimal spanning trees, called the MST-Pearson and MST-Partial networks. These networks appeared to be scale-free (i.e., with a power-law-like degree distribution) trees. Among other things, they compared clusters and top centrality nodes (i.e., countries) between the MST-Pearson and MST-Partial. They observed that the results from the MST-Partial networks are more reasonable than those from the MST-Pearson construction in light of our general understanding of world economics.

3.7 Graphical lasso and variants

Estimating a true correlation matrix, which contains unknowns, is an ill-founded problem unless the number of samples is sufficiently larger than . A strategy to overcome this problem is to impose sparsity of the estimated correlation network. A sparsity constraint enforces zeros on a majority of matrix entries to suppress the number of unknowns to be estimated relative to the number of samples. Imposing sparsity on estimated correlation networks is a major form of covariance selection. Structural learning refers to estimation of an unknown network from data and usually assumes that the given data obey a multivariate normal distribution and that the estimated network is sparse. For reviews with tutorials and examples on this topic, see [211, 25, 212].

The Gaussian graphical model assumes that the precision matrix from the data obeys a multivariate normal distribution and usually imposes sparsity of the precision matrix [134]. In addition to reducing the number of unknowns to be estimated, a motivation behind estimating a sparse precision matrix is that is equivalent to the absence of conditional linear dependence of the signals at the th and th nodes given all the other variables, which is easy to interpret. The graphical lasso is an algorithm for learning the structure of a Gaussian graphical model [213, 214, 215, 216, 217, 218]. The graphical lasso maximizes the likelihood of the multivariate normal distribution under a lasso penalty (i.e., penalty), whose simplest version is of the form , where we recall that is the entry of the precision matrix, and is a positive constant. This penalty term is added to the negative log likelihood to be minimized. If is large, it strongly penalizes positive , and the minimization of the objective function yields many zeros of the estimated . The pairs for which the estimated is nonzero form edges of the network; if there is no edge between and , they are conditionally independent, i.e., conditioned on the other variables. This conditional independence is also referred to as the pairwise Markov property because the distribution of only depends on . The pairwise Markov property is a special case of the global Markov property. The global Markov property dictates that and are conditionally independent given if is a cutset of and , that is, any path in the graph connecting a node in and a node in passes through . The pairwise and global Markov properties are major cases of the Markov random field, which is defined by a set of random variables having Markov property specified by an undirected graph [219, 134, 220, 221, 222]. The network is sparse by design. One can extend the lasso penalty function in multiple ways, for example, by allowing to depend on and automatically determining using an information criterion. Other ways to regularize the number of nonzero elements in the precision matrix than lasso penalty are also possible. (See e.g., [223, 224, 225].)