Copyright Protection in Generative AI: A Technical Perspective

Abstract.

Generative AI has witnessed rapid advancement in recent years, expanding its capabilities to create synthesized content such as text, images, audio, and code. The high fidelity and authenticity of content generated by these Deep Generative Models (DGMs) have sparked significant copyright concerns. There have been various legal debates on how to effectively safeguard copyrights in DGMs. This work delves into this issue by providing a comprehensive overview of copyright protection from a technical perspective. We examine the issue from two distinct viewpoints: the copyrights pertaining to the source data held by the data owners and those of the generative models maintained by the model builders. For data copyright, we delve into methods by which data owners can protect their content and by which DGMs can be utilized without infringing upon these rights. For model copyright, our discussion extends to strategies for preventing model theft and identifying outputs generated by specific models. Finally, we highlight the limitations of existing techniques and identify areas that remain unexplored. Furthermore, we discuss prospective directions for the future of copyright protection, underscoring its importance for the sustainable and ethical development of Generative AI.

1. Introduction

Recently, generative AI models have been extensively developed to produce a wide range of synthesized content, including text, images, audio, and code, among others. For example, advanced image generative models, such as Diffusion Models (DMs) (Ho et al., 2020), can produce highly realistic and detailed photographs and paintings. Similarly, large language models (LLMs) like ChatGPT (Achiam et al., 2023) can be leveraged to compose coherent and creative text articles with arbitrary genres and storylines. We refer to these advanced models as “Deep Generative Models” (DGMs). However, because of the remarkable fidelity and authenticity of the content generated by DGMs, concerns have been raised regarding the associated copyright issues. For example, the New York Times sued OpenAI and Microsoft for using copyrighted work to train ChatGPT (https://www.nytimes.com/2023/12/27/business/media/new-york-times-open-ai-microsoft-lawsuit.html, https://www.nytimes.com/2024/01/08/technology/openai-new-york-times-lawsuit.html). Midjourney was accused of outputting images copied from commercial films (https://spectrum.ieee.org/midjourney-copyright). These copyright issues may pertain to various parties involved in the generation process. Specifically:

(1) Source Data Owners. To generate high-quality content, DGMs require training on a large amount of data, collected from various sources such as the Internet, sometimes without the permission of the original data owner. As demonstrated in recent studies (Carlini et al., 2022, 2023), both DMs and LLMs are likely to produce content that closely coincides with parts of their training samples. Besides, DGMs can also be used to directly edit the content or imitate the artistic style of source images and texts. These facts raise concerns for data owners, as DGMs can generate data that closely resembles or replicates their original data without authorization.

(2) DGM Users. DGMs are also frequently used by DGM users to assist creative composition. However, whether DGM users should receive copyright for their generated content is still a complex and evolving legal and ethical issue. For example, in 2023, the US Copyright Office refused to register a graphic novel (registration of “Zarya of the Dawn”) that an artist created with the help of Midjourney (https://www.midjourney.com/), a popular AI image generation model. However, another giant generative-AI company, OpenAI, states that users own the content they create with OpenAI's models, including the rights to reprint, sell, and merchandise it (https://openai.com/policies/terms-of-use).

(3) DGM Providers. DGM providers invest great effort in training a DGM. This includes collecting and processing large amounts of data, as well as engineering the training and tuning for optimized model performance. Therefore, DGM providers also have reason to claim copyright over the generated content.

Who should hold the copyright, and how to protect it, is under active discussion among government officials, lawmakers, and the general public. For instance, in early 2023, the U.S. Copyright Office initiated a process to gather feedback on copyright concerns related to generative AI. This included discussions on the scope of copyright for works created using AI tools and the use of copyrighted materials in AI training. Additionally, legal perspectives on these matters have been explored in various opinions (Zirpoli, 2023; Franceschelli et al., 2022; Samuelson, 2023). In this article, however, we approach the topic from a different angle: we provide an overview of existing computational methodologies that have been proposed for copyright protection from a technical perspective. These are potentially viable solutions for AI model creators or model users. In general, these computational techniques can be categorized according to the holder of the copyright:

• For source data owners, the protection of their original works can be achieved by: (a) “Crafting unrecognizable examples”, in which data owners manipulate their data to hinder DGMs from extracting information; (b) “Watermark techniques”, which data owners can use to trace and determine whether a generated work is produced based on their original creation; (c) “Machine Unlearning”, in which data owners can request the deletion of their data from the model or its output once they identify copyright infringement; (d) “Dataset De-duplication”, which removes duplicated data to mitigate the memorization effect and prevent the training data from being reproduced; (e) “Alignment”, which uses a reward signal to reduce memorization in LLMs; and (f) other approaches, including improved training and generation algorithms for better behavior of LLMs.

• For DGM users who create new works assisted by DGMs, the protected object is the content generated by DGMs. The techniques for this type of copyright are therefore barely related to the generation process of DGMs, as traditional copyright protection strategies can also be applied to DGM-generated content.

• For DGM providers, there are representative “watermarking strategies” that inject watermarks into the generated content or the model parameters, such that the ownership of the model can be tracked.

Given the diversity in protection objectives, as well as in DGM applications, we are motivated to provide an overview of existing computational methods in this direction. In Section 2, we mainly discuss copyright protection techniques for DGMs in the image domain. In Section 3, we discuss the strategies for text generation. Finally, in Section 4, we discuss the related problems in other domains, such as graph, code, and audio generation. In each section, we introduce the background knowledge of existing DGMs, as well as the existing methodologies for data protection under different scenarios.

2. Copyright in Image Generation

In this section, we first introduce background knowledge on existing popular image generation models. Then, we define the problems related to the copyright issues for these image generation models, and introduce different strategies which can be utilized for data and model copyright protection.

2.1. Background: DGMs for Image Generation

In the era of deep learning, various types of image generation models have been extensively studied. Most of them follow a pipeline that first collects a set of training images $X$, whose samples' distribution can be denoted as $p_{\text{data}}$, and then trains a model to explicitly or implicitly estimate this data distribution. We denote the learned distribution as $p_\theta$. During the image generation stage, new images are generated by sampling from the learned distribution $p_\theta$. For example, some popular models (Goodfellow et al., 2014a; Ho et al., 2020) can be written in the form $x = G(z)$, which takes random noise $z$ (following a distribution such as a Gaussian) as input and generates samples $x$. Besides, there are more advanced generative models that can generate new samples based on a user's particular demands. For example, conditional generative models (Mirza et al., 2014) generate samples that belong to a specific sub-distribution $p_\theta(x \mid c)$, where $c$ denotes the given condition. In text-to-image models (Rombach et al., 2022), the condition $c$ can also be formatted as a language prompt describing the desired samples. Furthermore, there are also advanced models that directly take a few source samples $x$ as input and then edit or modify them to obtain new samples $x'$. In the following, we provide a brief overview of the mechanisms of several popular image generative models.

• Autoencoders (Vincent et al., 2010; Kingma et al., 2013) are generative models that consist of an encoder module and a decoder module for image reconstruction. In detail, the encoder projects a real image $x$ into a latent vector $z$ in the latent space. Then, the decoder takes the latent vector $z$ as input to generate a new sample $\hat{x}$. The decoder is trained to reconstruct the original sample from the latent vector produced by the encoder. In the work of Kingma et al. (2013), the latent vectors are further regularized to follow a standard Gaussian distribution. During the generation process, new latent vectors are sampled directly from the regularized distribution, without the encoder, and then decoded to obtain the generated images.

• Generative Adversarial Networks (GANs) (Goodfellow et al., 2014a) synthesize images by solving a min-max game. In GANs, there are two adversarial players, a generator $G$ and a discriminator $D$. The generator $G$ aims to generate images that are highly realistic, while the discriminator $D$ aims to determine whether a sample is real or synthesized by $G$. By solving the min-max problem, $G$ and $D$ eventually converge to a point at which $D$ is well trained to distinguish real and fake images, but $G$ can generate images convincing enough to still confuse $D$. The adversarial min-max problem can be formulated as:

$$\min_G \max_D \; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big], \tag{1}$$

where $G(z)$ is the generated image given the latent vector $z \sim p_z$ ($p_z$ is a predefined distribution such as an isotropic Gaussian). In Eq. (1), the goal of $G$ is to generate more authentic and realistic samples to mislead $D$.

• Diffusion Models, like DDPM (Ho et al., 2020), are a type of generative model that generates images via two $T$-step Markov processes: a forward process and a reverse process. The forward process gradually adds noise to transform an input image $x_0$ into an isotropic Gaussian sample $x_T$. In the reverse process, a denoising neural network is trained to transform a sample from the Gaussian distribution into the image distribution. The forward process can be formulated as:

$$q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\big),$$

where $t \in \{1, \dots, T\}$ and $\beta_t$ controls the variance of the injected noise. The reverse process then learns to denoise from the noisy variable $x_t$ to the less noisy variable $x_{t-1}$. In practice, the denoising step is approximately equivalent to estimating the injected noise $\epsilon$ with a parametric neural network $\epsilon_\theta$. The network is trained to minimize the distance between the estimated noise and the true noise:

$$\mathcal{L}(\theta) = \mathbb{E}_{x_0,\, t,\, \epsilon \sim \mathcal{N}(0, I)}\Big[\big\|\epsilon - \epsilon_\theta(x_t, t)\big\|_2^2\Big]. \tag{2}$$

Once the training of the denoising network is complete, a new image can be generated starting from random noise by solely employing the reverse process.

Based on DDPM, the Latent Diffusion Model (LDM) (Rombach et al., 2022) is a specific class of diffusion models that applies the forward process in the latent space instead of the pixel (image) space. In detail, to train an LDM, an input image $x_0$ is first mapped to its latent representation $z_0 = \mathcal{E}(x_0)$, where $\mathcal{E}$ is a given image encoder. Then the forward process repeatedly adds noise to the latent representation for $T$ steps, while the reverse process generates data with the denoising network $\epsilon_\theta$. Once the latent representation $\hat{z}_0$ is generated by the reverse process, it is decoded by a decoder model $\mathcal{D}$ to obtain the final generated image $\hat{x}_0 = \mathcal{D}(\hat{z}_0)$.

• Text-to-image Diffusion Models, such as Stable Diffusion (SD) (Rombach et al., 2022), Midjourney, and DALL-E 2, allow model users to generate images based on language descriptions. Stable Diffusion is achieved by combining LDMs with CLIP (Radford et al., 2021), a powerful model that learns the connection between the concepts of human language and images. Briefly speaking, given one or a few sentences as a prompt, a new image is produced following the basic pipeline of LDM, but conditioned on the embedding of the given prompt. As a result, the generated content can contain the semantic features and patterns specified by the prompt.

Recently, more advanced techniques such as Textual Inversion (Gal et al., 2022) and DreamBooth (Ruiz et al., 2023) have further extended text-to-image diffusion models for customized image editing and modification. For example, one can fine-tune SD on a few “input images” via the DreamBooth algorithm to make the model learn new objects from the input images, and then generate new images of the targeted object in different scenes.
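The DDPM forward process and the noise-prediction loss of Eq. (2) can be illustrated with a small sketch. It assumes a linear variance schedule and uses the standard closed form $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$ with $\bar{\alpha}_t = \prod_{s \le t}(1-\beta_s)$, where $\beta_s$ is the per-step noise variance. The function names, the schedule endpoints, and the four-value "image" are illustrative assumptions, not part of any surveyed implementation.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Linearly spaced noise variances beta_1..beta_T (an assumed schedule)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def alpha_bar(betas, t):
    """Cumulative product of (1 - beta_s) for s = 1..t (t is 1-indexed)."""
    prod = 1.0
    for beta in betas[:t]:
        prod *= 1.0 - beta
    return prod

def forward_sample(x0, betas, t, rng):
    """Draw x_t from q(x_t | x_0) in closed form; returns (x_t, eps)."""
    a_bar = alpha_bar(betas, t)
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a_bar) * x + math.sqrt(1.0 - a_bar) * e
           for x, e in zip(x0, eps)]
    return x_t, eps

def noise_prediction_loss(eps_true, eps_pred):
    """Mean squared error between true and predicted noise (Eq. (2) up to scale)."""
    return sum((a - b) ** 2 for a, b in zip(eps_true, eps_pred)) / len(eps_true)

rng = random.Random(0)
betas = linear_beta_schedule(1000)
x0 = [0.5, -0.25, 1.0, 0.0]  # toy "image" with four pixel values
x_t, eps = forward_sample(x0, betas, t=1000, rng=rng)
# A perfect denoiser would predict eps exactly and reach zero loss.
print(noise_prediction_loss(eps, eps))
```

At the final step the cumulative factor $\bar{\alpha}_T$ is close to zero under this schedule, so $x_T$ is almost pure Gaussian noise, which is why generation can start from a random Gaussian sample.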

2.2. Copyright Issues in Image Generation

The development of Deep Generative Models (DGMs) marks a noteworthy advancement in image generation. Nevertheless, the impressive quality and authenticity of the generated images, as well as the efficiency in producing new ones, give rise to legitimate concerns regarding copyright matters within the realm of DGMs.

Data copyright protection. The source data owner is the party or individual who owns the original image works. Their data can be intentionally or unintentionally collected by model trainers as training samples to construct the DGMs introduced above. For example, recent studies (Carlini et al., 2023; Vyas et al., 2023) have demonstrated that popular DGMs are capable of completely replicating their training data samples, which is called memorization. The possibility of data replication may severely infringe the ownership of the original data samples. Moreover, the development of fine-tuning strategies such as DreamBooth makes it far easier for unauthorized parties to directly edit or modify the source data to obtain new samples, which also severely infringes the copyright of the original works.

Model copyright protection. To obtain DGMs with advanced generation performance, model trainers usually need to invest a significant amount of funds and labor. This investment gives them intellectual property rights over the trained model. However, recent works also identify the possibility of stealing others' models (Tramèr et al., 2016).

2.3. Data Copyright Protection

In this subsection, we review the techniques for the source data owners to protect their data copyrights. In general, we can categorize these techniques into four major types.

• Unrecognizable Examples, which aim to prevent models from learning important features of protected images. This often results in the generation of either low-quality images or completely incorrect ones;

• Watermark, which involves inserting unnoticeable watermarks into protected images. Data owners can detect these watermarks if their data is used for training;

• Machine Unlearning, which aims to ablate the contribution of copyrighted data to the DGM, preventing the model from generating content based on the information of the protected images;

• Dataset De-duplication, which mitigates memorization by removing duplicated data from the training set.

Among the four categories, unrecognizable examples and watermarks are employed by the source data owners, who modify their data before releasing it to the public in order to protect the copyright. Machine unlearning and dataset de-duplication, in contrast, are proposed for model builders who aim to provide DGM services legally, without infringing on copyrighted data. Next, we introduce these strategies in detail.
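As a minimal illustration of the de-duplication idea listed above, the sketch below removes byte-identical samples from a training set by hashing. It is only an assumption-laden toy: the `deduplicate` helper and the byte-string "images" are hypothetical, and detecting near-duplicates (resized or re-encoded copies) would need a similarity measure that this sketch does not attempt.

```python
import hashlib

def deduplicate(samples):
    """Keep the first occurrence of each byte-identical sample, preserving order."""
    seen = set()
    unique = []
    for sample in samples:
        digest = hashlib.sha256(sample).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(sample)
    return unique

# Toy training set: each bytes object stands in for an encoded image file.
images = [b"img-A", b"img-B", b"img-A", b"img-C", b"img-B"]
unique_images = deduplicate(images)
print(len(images), len(unique_images))
```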

2.3.1. Unrecognizable Examples

From the perspective of the source data owner, one major strategy for data protection is to make their data “unrecognizable” to potential DGMs. In general, a data owner can consider injecting imperceptible perturbations into the protected images, such that DGMs cannot effectively exploit useful features from them and hence can hardly generate high-quality new samples. These works on “unrecognizable examples” can be categorized based on how the DGM is used to extract information from the source image: (1) “inference-stage protection” counteracts DGMs that operate without fine-tuning on the source image $x$. These models are capable of extracting image features directly from the source image in inference mode; (2) “training-stage protection” counteracts DGMs that are fine-tuned on the source images to extract the desired information and generate new samples based on it. A variety of data copyright protection strategies have been devised to target these two types of image editing.

1) Inference stage protection. Well-trained DGMs, such as GAN models and diffusion models, can be directly used for various image generation tasks, including image-to-image synthesis and image editing. Thus, it is crucial to address inference-stage protection which aims at preventing models from extracting important information.

GAN-based methods. UnGANable (Li et al., 2022b) is the first defense system against GAN Inversion (Zhu et al., 2016, 2020; Abdal et al., 2019), a method commonly used for altering photographs or artistic creations. It uses adversarial examples (Goodfellow et al., 2014b) to mislead the GAN in latent space. Ruiz et al. (2020) aimed at data protection against Image-Translation GAN (Liu et al., 2017), a variant of conditional GAN that directly generates new images from a source image instead of a random latent vector. It can generate an image $x'$ that is manipulated from the source image $x$. They use adversarial examples to maximize the distortion in the generated image. Yeh et al. (2020) aimed to protect users’ images from DeepNude (Sigal Samuel, 2019), a deep generative software based on the image-to-image translation algorithm. This method also borrows the idea of adversarial examples. It defines an adversarial loss for the Nullifying Attack, which maximizes the distance between the features extracted by the generator from perturbed images and from the original image, as well as an adversarial loss for the Distorting Attack. Besides, Huang et al. (2021) extended adversarial examples to more practical “grey-box” and “black-box” settings, in which the data owner is unaware of the specific model that might be used for potential copyright infringement. This method adopts a surrogate model to approximate the manipulation model and updates the surrogate parameters and the adversarial examples in an alternating manner. These examples demonstrate that the framework for generating adversarial examples can be adapted to different image manipulation models by properly designing the loss function for each specific task.

Diffusion models. Beyond GAN-based models, diffusion models have recently also been exploited for various types of modifying or editing tasks, which exposes a significant risk of copyright infringement. For example, Textual Inversion (Gal et al., 2022) is an image modification technique built on Stable Diffusion that does not fine-tune the model weights. As illustrated in Figure 1, given a few source samples $x$, Textual Inversion aims to extract the knowledge from $x$ by linking the samples to a specific text string such as $S_*$. This is achieved by adjusting the text embedding of $S_*$ in Stable Diffusion so that it embeds the information of $x$. The model user can then use $S_*$ to compose new prompts and generate new images with the information embedded in $S_*$, such as the original object or style from the source image, which might infringe the copyright of the source images. Targeting Textual Inversion, the work of Liang et al. (2023a) aims to find an adversarial example $x + \delta$ to protect the source image $x$, such that $x + \delta$ lies outside the distribution of the samples generated by the diffusion model. Thus, the inversion process cannot find proper language tokens corresponding to the adversarial image. In detail, following the general definition of the diffusion loss in Eq. (2), the loss on a specific sample $x$ is defined as $\mathcal{L}(x; \theta) = \mathbb{E}_{t,\, \epsilon}\big[\|\epsilon - \epsilon_\theta(x_t, t)\|_2^2\big]$, where $x_t$ is the noised version of $x$ at step $t$. Liang et al. (2023a) then search for a bounded perturbation $\delta$ that maximizes the loss of the diffusion model on $x + \delta$:

$$\delta^* = \arg\max_{\|\delta\|_p \le \eta} \mathcal{L}(x + \delta; \theta).$$

Because the diffusion model has a maximized loss on the perturbed image $x + \delta^*$, the sample can be seen as a natural outlier from the distribution of the samples generated by the model. As a result, the Textual Inversion process cannot effectively find a reasonable token $S_*$, and the original information is protected.
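A bounded perturbation that maximizes a loss, as described above, is commonly approximated with projected sign-gradient ascent. The sketch below shows that generic procedure on a toy quadratic loss with an analytic gradient; the toy loss stands in for the diffusion loss, and the $L_\infty$ budget, step size, and all names are assumptions of this illustration rather than the settings of Liang et al. (2023a).

```python
def toy_loss(x, mode):
    """Stand-in for the diffusion loss: squared distance to a fixed reference point."""
    return sum((xi - mi) ** 2 for xi, mi in zip(x, mode))

def toy_loss_grad(x, mode):
    """Analytic gradient of toy_loss with respect to x."""
    return [2.0 * (xi - mi) for xi, mi in zip(x, mode)]

def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)

def maximize_loss(x, mode, budget, step, num_steps):
    """Projected sign-gradient ascent on toy_loss within an L-infinity ball."""
    delta = [0.0] * len(x)
    for _ in range(num_steps):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        grad = toy_loss_grad(x_adv, mode)
        # Ascent step along the gradient sign, then clip back into the budget.
        delta = [di + step * sign(gi) for di, gi in zip(delta, grad)]
        delta = [max(-budget, min(budget, di)) for di in delta]
    return delta

x = [0.2, 0.8, 0.5]      # toy "image"
mode = [0.0, 1.0, 0.5]   # point where the toy model's loss is lowest
delta = maximize_loss(x, mode, budget=0.05, step=0.01, num_steps=10)
x_protected = [xi + di for xi, di in zip(x, delta)]
print(toy_loss(x, mode), toy_loss(x_protected, mode))
```

With a real diffusion model, the gradient would come from automatic differentiation through the noise-prediction loss, and the expectation over timesteps and noise would be estimated by sampling.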

Similar to the idea of Liang et al. (2023a), the work of Salman et al. (2023) directly leverages adversarial examples for data protection against an image editing model based on LDM. Two attack strategies are proposed. (a) Encoder Attack: when a Latent Diffusion Model is employed for image editing, with an encoder $\mathcal{E}$ that maps a source image $x$ to the representation $\mathcal{E}(x)$, the encoder attack searches for a perturbation

$$\delta_{\text{enc}} = \arg\min_{\|\delta\|_\infty \le \eta} \big\|\mathcal{E}(x + \delta) - z_{\text{targ}}\big\|_2^2,$$

where $z_{\text{targ}}$ is a target latent representation. In this way, the latent representation of $x + \delta_{\text{enc}}$ is close to the target representation $z_{\text{targ}}$, which is pre-specified to be distinct from the original latent representation $\mathcal{E}(x)$; this severely disrupts the LDM process. (b) Diffusion Attack: the full image editing process can be denoted as a model $f$, whose output $f(x)$ on the clean image fulfills the generation goal of the model user. The diffusion attack directly finds a perturbation that drives the generated sample $f(x + \delta)$ toward a target image $x_{\text{targ}}$, and thereby away from $f(x)$:

$$\delta_{\text{diff}} = \arg\min_{\|\delta\|_\infty \le \eta} \big\|f(x + \delta) - x_{\text{targ}}\big\|_2^2.$$

As a result, the newly generated sample $f(x + \delta_{\text{diff}})$ is close to the target image $x_{\text{targ}}$ and thus very different from $f(x)$, which breaks the original image editing goal. However, solving this optimization problem is computationally costly due to the large number of parameters involved and the multiple steps of the diffusion process. Consequently, the authors suggest calculating the loss based on only a limited number of steps instead of the entire diffusion process.
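The encoder attack described above can be sketched as projected gradient descent that pulls the latent of the perturbed image toward a chosen target latent. The example uses a toy linear encoder so that the gradient is available in closed form; the matrix `W`, the budget, and the step size are hypothetical choices for illustration and do not reproduce the configuration of Salman et al. (2023).

```python
def encode(W, x):
    """Toy linear encoder E(x) = W x (a stand-in for the LDM image encoder)."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def latent_distance(W, x, z_target):
    """Squared L2 distance between E(x) and the target latent."""
    return sum((z - zt) ** 2 for z, zt in zip(encode(W, x), z_target))

def latent_grad(W, x, z_target):
    """Gradient of ||W x - z_target||^2 with respect to x: 2 W^T (W x - z_target)."""
    residual = [z - zt for z, zt in zip(encode(W, x), z_target)]
    return [2.0 * sum(W[i][j] * residual[i] for i in range(len(W)))
            for j in range(len(x))]

def encoder_attack(W, x, z_target, budget, step, num_steps):
    """Projected gradient descent on the latent distance within an L-infinity ball."""
    delta = [0.0] * len(x)
    for _ in range(num_steps):
        x_adv = [xi + di for xi, di in zip(x, delta)]
        grad = latent_grad(W, x_adv, z_target)
        # Descent step toward the target latent, then clip into the budget.
        delta = [max(-budget, min(budget, di - step * gi))
                 for di, gi in zip(delta, grad)]
    return delta

W = [[1.0, 0.0, 0.5],
     [0.0, 1.0, -0.5]]
x = [0.4, 0.6, 0.2]
z_target = [0.0, 0.0]
delta = encoder_attack(W, x, z_target, budget=0.1, step=0.05, num_steps=50)
x_immunized = [xi + di for xi, di in zip(x, delta)]
print(latent_distance(W, x, z_target), latent_distance(W, x_immunized, z_target))
```

The diffusion attack follows the same loop but differentiates through the editing model instead of the encoder alone, which is what makes it far more expensive.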

According to the discussion in Salman et al. (2023), existing data protection strategies based on adversarial examples still face major drawbacks that can hinder their feasibility and reliability in practice:

• Lack of robustness to transformations. The protected images may be subject to image transformations and noise purification techniques, such as cropping the image, adding filters to it, or applying a rotation. However, the authors also mention that this problem can be addressed by leveraging “robust” adversarial perturbations, as discussed in (Athalye et al., 2018; Kurakin et al., 2018).

• Generalization to different models. Protection techniques that are designed for one generative model are not guaranteed to be effective against future versions of that model or against other types of generative models. The authors mention that one could hope to improve the “transferability” of adversarial perturbations (Ren et al., 2022; He et al., 2023; Li et al., 2024). However, these “transferable” perturbations will not transfer in all circumstances.

2) Training stage protection. Rather than being employed directly to generate new images, DGMs are also often trained or fine-tuned on some source images $x$ to effectively learn useful information from $x$ for future generations. Therefore, “training-stage protection” aims to add imperceptible noise to the copyrighted images in order to break the training process of potential DGMs.

This type of method was first explored on GAN-based models. For example, GAN-based Deepfake models (Lu., 2018) are representative tools that can be leveraged to swap the faces in source images with the face of a target person, which severely abuses the copyrights of both the source image holders and the target people. Similar tools like EditGAN (Ling et al., 2021) and Introspective Adversarial Network (Brock et al., 2016) have been developed to edit images, posing further threats to the copyrights of creative and artistic works. To handle this problem, Yang et al. (2021) proposed to use the idea of adversarial examples (Goodfellow et al., 2014b) to break the balance of the min-max game in GAN-based Deepfake models. Specifically, they focus on Deepfake models that are trained on the target person’s face images to generate other face images belonging to the target person. To protect the targeted face from being exploited by Deepfake, they directly adopt the fast gradient sign method (FGSM) to generate adversarial examples for GAN-based models, with an update of the form

$$x^{\text{adv}} = x + \epsilon \cdot \mathrm{sign}\big(\nabla_x L_D(T(x))\big), \tag{3}$$

where $L_D$ refers to the loss of the discriminator in the GAN. During training, the discriminator then has a large loss value on the protected target samples $x^{\text{adv}}$, which breaks the balance of the min-max game. As a result, the images generated from the protected target images have degraded quality (see Figure 2). Notably, in Eq. (3), a transformation operator $T$ is introduced to improve the robustness of the perturbation under various image transformations, including resizing, affine transformation, and image remapping.
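The following sketch illustrates a single FGSM step against a discriminator loss, with the gradient averaged over a few input transformations so that the perturbation is less tied to one particular view of the image. Everything here is an assumption made for illustration: the logistic "discriminator", the brightness scalings used as transformations, and the averaging itself are not taken from Yang et al. (2021).

```python
import math

def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))

def disc_loss(w, b, x):
    """-log D(x) for a toy logistic discriminator D(x) = sigmoid(w.x + b)."""
    return -math.log(sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b))

def disc_loss_grad(w, b, x):
    """Gradient of -log D(x) with respect to x, which equals -(1 - D(x)) * w."""
    d = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [-(1.0 - d) * wi for wi in w]

def fgsm_over_transforms(w, b, x, epsilon, scales):
    """One FGSM step on the loss averaged over brightness scalings T_s(x) = s * x."""
    avg = [0.0] * len(x)
    for s in scales:
        grad = disc_loss_grad(w, b, [s * xi for xi in x])
        # Chain rule: d/dx L(s * x) = s * (grad of L evaluated at s * x).
        avg = [a + s * gi / len(scales) for a, gi in zip(avg, grad)]
    # Move each pixel by epsilon in the direction that increases the loss.
    return [xi + epsilon * ((gi > 0) - (gi < 0)) for xi, gi in zip(x, avg)]

w, b = [1.0, -2.0, 0.5], 0.1   # toy discriminator parameters
x = [0.3, 0.7, 0.5]            # toy protected image
x_adv = fgsm_over_transforms(w, b, x, epsilon=0.03, scales=[0.9, 1.0, 1.1])
print(disc_loss(w, b, x), disc_loss(w, b, x_adv))
```

Because FGSM takes a single signed step, every pixel changes by at most `epsilon`, which is what keeps the perturbation visually small.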

Wang et al. (2022) pointed out that previous adversarial perturbations obtained via gradient-based strategies (e.g., FGSM) could be easily removed or destroyed by image reconstruction methods such as MagDR (Chen et al., 2021b), and proposed Anti-Forgery, which targets GAN-based Deepfake attacks. Anti-Forgery generates perturbations that are robust to input transformation, natural to human eyes, and applicable to black-box settings. The authors observed that adversarial perturbations in the LAB color space are robust to input reconstruction. Therefore, they converted the input from RGB space to the LAB color space and added perceptually aware adversarial perturbations to the color channels to maintain robustness against input transformations, including image reconstruction (Chen et al., 2021b) and image compression (Dziugaite et al., 2016).

Diffusion models (Rombach et al., 2022; Ruiz et al., 2023) can be easily deployed to mimic the style of specific artists via advanced fine-tuning techniques. Their special structure (see Section 2.1) poses unique challenges for protection; in particular, the denoising procedure in the reverse process can eliminate noise added to the original image (Nie et al., 2022). GLAZE (Shan et al., 2023) is a representative copyright protection method that focuses on text-to-image LDMs and aims to protect artists from style mimicry. As shown in Figure 3, the core idea of GLAZE is to guide the diffusion model to learn an alternative target style that is totally different from the style of the protected images. In detail, the method consists of three steps: target style selection, style transfer, and cloak perturbation computation. GLAZE first chooses a target style $T$ that is sufficiently different from the protected style. A pre-trained style-transfer model $\Omega$ is then used to transfer the protected artwork $x$ into the target style, yielding $\Omega(x, T)$ as the optimization target. Then, GLAZE computes the cloak perturbation $\delta_x$ by:

$$\min_{\delta_x} \big\|\Phi(\Omega(x, T)) - \Phi(x + \delta_x)\big\|_2^2 \quad \text{subject to} \quad \mathrm{LPIPS}(x, x + \delta_x) \le p, \tag{4}$$

where $\Phi$ is the feature extractor of the LDM and $p$ is the perceptual perturbation budget. This objective minimizes the distance between the features of the perturbed images and those of the target-style transferred images, while LPIPS (Zhang et al., 2018) constrains the perturbation to be imperceptible. When a model is fine-tuned on the protected artworks, the generated images will mimic the target style rather than the artworks’ true style.

MIST (Liang et al., 2023b) emphasizes that existing methods generate perturbations under strong assumptions about a specific model, which are hard to generalize to other scenarios. For example, perturbations generated for image-to-image DGMs usually fail for Textual Inversion (Salman et al., 2023). Therefore, the authors propose to generate perturbations that work for various DGMs simultaneously, including DreamBooth (training-stage) (Ruiz et al., 2023), Textual Inversion (inference-stage) (Gal et al., 2022), and image-to-image generation (Rombach et al., 2022). To achieve this goal, they combine the semantic loss from (Liang et al., 2023a) and the textual loss from (Salman et al., 2023). They empirically show that maximizing the semantic loss leads to chaotic content in the generated image, while maximizing the textual loss leads to an imitation of the pre-specified target image. Their empirical results reveal that perturbations from the combination of the two losses can protect images under different scenarios.

Anti-DreamBooth (Van Le et al., 2023) specifically targets a powerful fine-tuning technique, DreamBooth (Ruiz et al., 2023), which personalizes text-to-image diffusion models on given source images. DreamBooth has a similar goal to Textual Inversion but requires fine-tuning the diffusion model. To prevent malicious use of DreamBooth on users’ own images, Anti-DreamBooth attacks the training process of DreamBooth, following the idea of data poisoning attacks. In detail, it formulates the protection problem as a bi-level optimization problem that searches for a perturbation $\delta$ satisfying:

$$\delta^* = \arg\max_{\|\delta\|_p \le \eta} L_{\text{cond}}(\theta^*, x) \quad \text{subject to} \quad \theta^* = \arg\min_{\theta} L_{\text{db}}(\theta, x + \delta),$$

where $L_{\text{db}}$ denotes the training loss of DreamBooth and $L_{\text{cond}}$ refers to the conditional loss of sample $x$ in prompt-guided diffusion models. By solving this problem, it searches for a perturbation such that the fine-tuned diffusion model disconnects the image from its corresponding language concept because of the high conditional loss. In this way, during fine-tuning, DreamBooth overfits the adversarial images and performs worse at synthesizing high-quality images. Later, ADAF (Wu et al., 2023) also focuses on text-to-image models but pays more attention to the text part. It points out two drawbacks of existing methods: they ignore the combination of the text encoder and the image encoder, and they are not robust to perturbations of the prompts. Consequently, ADAF implements multi-level text-related augmentations to enhance defense stability.

2.3.2. Watermarks

Another approach to protecting source data copyright is to track or detect whether a suspect piece of artwork is generated by a model trained on the copyrighted data. Various AI-generated image detection methods (Epstein et al., 2023; Dogoulis et al., 2023) can be applied to determine whether a sample is generated by certain models, which partially fulfills the objective. However, these methods still cannot identify the source of the generated content. Therefore, the “watermarking” strategy is studied as an alternative. This technique encodes “identifiable information” into the copyrighted source data, such that this information also exists in the samples generated by models trained on the watermarked images. Subsequently, a detector is used to assess whether a suspect image contains this encoded information, in order to trace and verify copyright ownership.

Before DGMs, there existed various watermarking methods (Baluja, 2017; Hayes et al., 2017; Vukotić et al., 2018; Zhu et al., 2018; Zhang et al., 2019; Tancik et al., 2020; Luo et al., 2020) that hide data, such as a message or even an image, behind imperceptible perturbations. These techniques primarily concentrate on hiding information in specific images, without being specifically applied to DGMs. The objective of copyright protection against malicious DGMs, however, is to identify hidden messages within generated images. Focusing on DDPM (Nichol et al., 2021), Cui et al. (2023a) evaluated whether watermarks injected via previous methods for traditional image watermarking (Navas et al., 2008; Zhu et al., 2018; Yu et al., 2021) can still be preserved in the generated samples. The empirical results show that these watermarks are either only partially preserved in generated images or require large perturbation budgets. Therefore, they proposed DiffusionShield (Cui et al., 2023a), a watermarking method designed for diffusion models. Specifically, blockwise watermarks are engineered to convey a greater amount of information, allowing distinct copyright information to be decoded more readily. Then, a joint optimization strategy is used to optimize both the pixel values of the watermark patches and a decoding model, which detects and decodes the encoded information from the generated images.
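To make the notion of a blockwise watermark concrete, the sketch below embeds a bit string by adding a fixed pseudo-random pattern to consecutive pixel blocks, with the sign carrying the bit, and decodes by correlating each block with the same pattern. This is a generic spread-spectrum-style toy on a flat list of pixels; the pattern, strength, and helper names are assumptions, and the sketch neither reproduces DiffusionShield's joint optimization nor tests whether the watermark survives training a generative model.

```python
import random

def make_pattern(block_size, seed):
    """Fixed pseudo-random +1/-1 pattern shared by the embedder and the decoder."""
    rng = random.Random(seed)
    return [rng.choice((-1.0, 1.0)) for _ in range(block_size)]

def embed(pixels, bits, pattern, strength):
    """Add +pattern (bit 1) or -pattern (bit 0) to consecutive blocks of pixels."""
    out = list(pixels)
    n = len(pattern)
    for k, bit in enumerate(bits):
        s = strength if bit else -strength
        for j in range(n):
            out[k * n + j] += s * pattern[j]
    return out

def decode(pixels, num_bits, pattern):
    """Recover each bit from the sign of the block's correlation with the pattern."""
    n = len(pattern)
    bits = []
    for k in range(num_bits):
        block = pixels[k * n:(k + 1) * n]
        mean = sum(block) / n
        corr = sum((p - mean) * q for p, q in zip(block, pattern))
        bits.append(1 if corr > 0 else 0)
    return bits

bits = [1, 0, 1, 1, 0, 0, 1, 0]           # toy copyright message
pattern = make_pattern(block_size=64, seed=7)
# Toy host image: a flat list of pixel values with a mild repeating texture.
pixels = [0.5 + 0.001 * (i % 7) for i in range(len(bits) * 64)]
watermarked = embed(pixels, bits, pattern, strength=0.02)
print(decode(watermarked, len(bits), pattern) == bits)
```

Using one block per bit is what lets a longer message be carried by a single image, at the cost of requiring the decoder to know the block layout.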

Fine-tuning text-to-image diffusion models, such as Stable Diffusion, shows significant potential for personalized image synthesis and editing. Consequently, watermarking techniques are increasingly applied as a means of copyright protection during the fine-tuning phase. GenWatermark (Ma et al., 2023b) was the first to propose a watermark system based on the joint learning of a watermark generator and a detector. In particular, it adopts a GAN-like structure, where a GAN generator serves as the watermark generator and a detector is trained to distinguish between clean and watermarked images. Wang et al. (2023) aimed for a method that is independent of the choice of text-to-image diffusion model, so that the perturbation can protect images against various models. In detail, they apply specific stealthy transformations to the protected images and inject a corresponding trigger into the captions of those images. Since they use an image warping function as the watermark generator, the method works without a surrogate model and can therefore be applied to different diffusion models. Cui et al. (2023b) considered a practical scenario where protectors cannot control the fine-tuning process, and emphasized that previous methods require many fine-tuning steps for the model to learn the embedded watermarks. To make the watermark easily recognized by the model, they proposed FT-Shield, which adds imperceptible perturbations that the text-to-image diffusion model learns before the original image features, such as styles and objects. In the detection stage, a binary classifier is trained to distinguish watermarked images from clean images. In particular, the perturbations minimize the loss of a diffusion model trained on these perturbed samples, as shown in the training objective:

$$\min_{\delta}\ \min_{\theta_1}\ \sum_{(x, c)} \mathcal{L}_{dm}\big(\theta_1, \theta_2;\ x + \delta,\ c\big), \tag{5}$$

where $\theta_1$ denotes the parameters of the UNet (Ronneberger et al., 2015), which is the denoising network within the text-to-image model structure, while $\theta_2$ denotes the parameters of the other parts; $x$ and $c$ denote the protected image and the corresponding caption, respectively; and $\mathcal{L}_{dm}$ is the diffusion training loss. In other words, the perturbation $\delta$ in Eq. (5) leads to a rapid decrease in the training loss and thus serves as a “shortcut” feature that can be quickly learned and emphasized by the diffusion model.

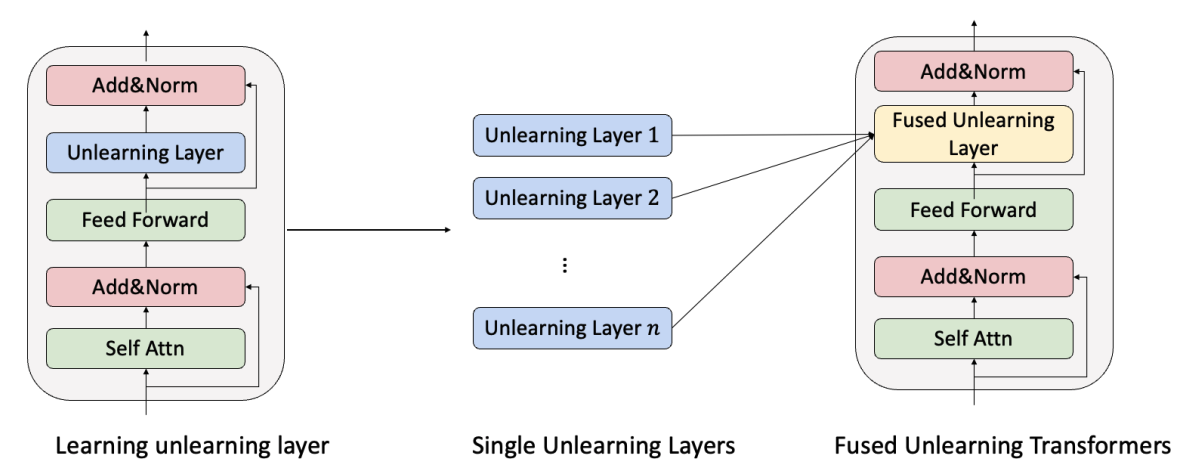

2.3.3. Machine Unlearning

In addition to data owners, model builders also consider the protection of data copyright in order to provide DGM services legally. Companies try to filter copyrighted data out of the training data. For example, Stability AI cooperated with the AI startup Spawning to build tools that let data owners claim their copyright and remove their data from the training set of Stable Diffusion; 80 million images have been removed from the training data of Stable Diffusion 3 (https://the-decoder.com/artists-remove-80-million-images-from-stable-diffusion-3-training-data/). OpenAI also provides a way for data owners to report violations of data copyright (https://adguard.com/en/blog/ai-personal-data-privacy.html). Besides data filtering, model builders also adopt other strategies such as machine unlearning and dataset de-duplication (Section 2.3.4). Depending on whether the intent behind their implementation is the model builders’ need to legitimize the generation process or the source data owners’ requirements for the model builders, we categorize machine unlearning (Bourtoule et al., 2021; Nguyen et al., 2022; Zhang et al., 2023a; Kumari et al., 2023) as a passive method and dataset de-duplication (Webster et al., 2023; Somepalli et al., 2023b) as an active method. For the passive method, after the DGM is trained, the model builder provides an interface for data owners to claim their copyright and ablates the influence of the copyrighted data from the DGM. The passive method is executed when the source data owners request it. In contrast, for the active method, the model builder considers copyright at the stage of model training, usually without any request from the source data owners. In this subsection, we focus on machine unlearning for data copyright protection.

Specifically, “Machine Unlearning” (Bourtoule et al., 2021) refers to a protocol that makes a trained model forget a specific subset of its training data, by editing the model parameters so that they follow a distribution identical to that of a model trained without the forgotten subset. With different subsets of undesirable concepts, machine unlearning can achieve not only copyright protection, but also privacy preservation against membership inference attacks and the removal of biased, NSFW, and harmful concepts.

Following Definition III.1 in Bourtoule et al. (2021) and Section 3.1 in Nguyen et al. (2022), let the collected training dataset be $D$, and denote the model obtained by training on $D$ with the vanilla learning algorithm $A$ as $A(D)$. Let the undesirable (copyrighted) subset be $D_f \subset D$ and the unlearning mechanism be $U$; the model obtained from $A(D)$ by unlearning $D_f$ can then be represented as $U(D, D_f, A(D))$. Perfect unlearning requires the distribution of the parameters of the unlearned model, $U(D, D_f, A(D))$, to be identical to the distribution of the parameters of a model trained on the dataset stripped of the undesirable $D_f$, i.e.,

$$\Pr\big(U(D, D_f, A(D))\big) = \Pr\big(A(D \setminus D_f)\big), \tag{6}$$

where $\Pr(\cdot)$ denotes the distribution of a random variable. For DGMs, we instead consider the distribution of generated samples and adapt Eq. (6) into:

$$\Pr\big(x_{U(D, D_f, A(D))}\big) = \Pr\big(x_{A(D \setminus D_f)}\big), \tag{7}$$

where $x_{M}$ denotes the samples generated from model $M$. By choosing different sub-datasets as $D_f$, machine unlearning can fulfill various objectives. When $D_f$ is set as the data owned and copyrighted by individuals, the DGM builder uses machine unlearning to safeguard the copyright of this data. Zhang et al. (2023a) pointed out four goals of unlearning for DGMs: performance (successfully removing the target data from the model), integrity (preserving the other data of the model as much as possible), generality (being applicable to a wide range of data that covers all aspects of human perception) and flexibility (being applicable to various models across different tasks and domains). In the following, we discuss different unlearning methods for GANs and Diffusion Models in the image domain.

Fine-tuning with a modified objective is usually used to achieve Eq. (7) for DGMs efficiently. Compared with data filtering and re-training from scratch (Nichol et al., 2022; Ramesh et al., 2022), it is more efficient in time and energy. For example, a representative method (Kong et al., 2023b) modifies the min-max adversarial objective of the GAN by mixing the undesirable data with generated data as negative (fake) samples for the discriminator. Thus, the fine-tuned discriminator will not consider the undesirable data as true samples, and the generator will be fine-tuned to avoid generating it. Another unlearning method considers how to change the guidance of conditions in conditional DGMs, such as text-to-image models. The copyrighted images can be expressed as concepts like styles, branches, person names, and so on. By re-directing the conditional representations of these concepts to conditions unrelated to the copyrights, the generated images can avoid infringing on the copyrighted images. Kong et al. (2023a) represent the undesirable concepts as a set $\Omega$. They fine-tune the DGM to push the condition representation of concepts belonging to $\Omega$ towards that of a concept $\hat{c}$ which is not in the undesirable set. Meanwhile, to keep the other benign concepts unchanged, the fine-tuning objective also includes a term maintaining their representation, as follows:

$$\min_{f'}\ \mathbb{E}_{c \notin \Omega}\,\big\|f'(c) - f(c)\big\|_2^2 + \mathbb{E}_{c \in \Omega}\,\big\|f'(c) - f(\hat{c})\big\|_2^2, \tag{8}$$

where $f'$ is the fine-tuned condition representation function, while $f$ is the original condition function. The condition representation of an undesired $c \in \Omega$ is re-directed towards the benign concept $\hat{c}$ and thus no longer guides the model to produce samples of concepts from $\Omega$. Kong et al. (2023a) applied this general framework to different DGMs such as class-conditional GANs (Mirza et al., 2014) and GAN-based text-to-image models (Zhu et al., 2019). Besides, Kong et al. (2021) also extended this method to diffusion-based text-to-speech models.

More fine-tuning-based unlearning methods are tailored for generative diffusion models, especially the text-to-image Stable Diffusion (SD) (Rombach et al., 2022) (refer to Section 2.1). Specifically, Kumari et al. (2023) proposed to unlearn SD by matching the conditional distribution of undesirable concepts to that of anchor concepts in every diffusion step, which means the output of the denoising network is redirected to anchor concepts that are unrelated to the undesirable ones. The anchor concepts can be random noise, $\epsilon$, or the conditional distribution of benign concepts, $p(x \mid c)$ with $c \notin \Omega$. With the anchor concept of random noise, the fine-tuning objective is

$$\min_{\theta'}\ \mathbb{E}_{x_t,\, c^{*} \in \Omega,\, \epsilon,\, t}\ \big\|\epsilon - \epsilon_{\theta'}(x_t, c^{*}, t)\big\|_2^2. \tag{9}$$

This objective tries to induce the new diffusion network $\epsilon_{\theta'}$ to generate random noise if the input condition $c^{*}$ comes from the undesirable concepts in $\Omega$. If the model is unlearned with benign concepts, the fine-tuning objective is

$$\min_{\theta'}\ \mathbb{E}_{x_t,\, c^{*} \in \Omega,\, c \notin \Omega,\, t}\ \big\|\epsilon_{\theta}(x_t, c, t) - \epsilon_{\theta'}(x_t, c^{*}, t)\big\|_2^2, \tag{10}$$

where $\epsilon_{\theta}(x_t, c, t)$ is the output of the original network under the benign condition $c$. By optimizing this objective, the output under undesirable concepts is re-directed towards the benign concepts. This method has a similar intuition to the second term in Eq. (8), but Eq. (8) fine-tunes the component for conditional representation, while Eq. (9) and Eq. (10) fine-tune the whole diffusion network of SD. In addition, a similar regularization term that keeps the other desirable concepts unchanged is combined with Eq. (9) and Eq. (10).

Zhang et al. (2023a) proposed a method called Forget-Me-Not, which re-steers the cross-attention layers in SD by minimizing the values of the attention maps, thereby disrupting the guidance of the text condition in the diffusion process. In Stable Diffusion (Rombach et al., 2022; Zhang et al., 2023a), the cross-attention of the UNet transfers information from the conditional text to the hidden features through a dot product and softmax. The attention maps are calculated from the hidden features and the embeddings of the condition text, and they reveal the information of the undesirable concepts. Thus, disrupting the attention maps breaks the diffusion process for these concepts. The fine-tuning objective of Forget-Me-Not minimizes the values in the attention maps to disrupt the guidance of the condition text. In this way, Forget-Me-Not can mislead the generation and remove the undesirable concepts from the final generated data.

Different from the above two unlearning methods for diffusion models, Gandikota et al. (2023) targeted classifier-free diffusion guidance (Ho et al., 2022). Classifier-free guidance does not rely on a classifier for conditional generation, in contrast to classifier-based guidance. Classifier-based guidance modifies the denoising step to follow the features captured by an auxiliary classifier $p_\phi(c \mid x_t)$:

$$\hat{\epsilon}_\theta(x_t, c, t) = \epsilon_\theta(x_t, t) - s\,\sigma_t\,\nabla_{x_t} \log p_\phi(c \mid x_t),$$

where $\sigma_t$ is the noise level at step $t$ and $\nabla_{x_t} \log p_\phi(c \mid x_t)$ provides the information of what features $x_t$ should have in order to be classified as $c$. This guidance shapes $x_t$ towards class $c$ step by step, and $s$ is the parameter that controls the strength of the classifier guidance (Dhariwal et al., 2021). In contrast, classifier-free guidance uses the difference between the outputs of the conditional and the unconditional denoising diffusion model as the guidance to boost the influence of the condition:

$$\tilde{\epsilon}_\theta(x_t, c, t) = \epsilon_\theta(x_t, t) + s\,\big(\epsilon_\theta(x_t, c, t) - \epsilon_\theta(x_t, t)\big),$$

where $\epsilon_\theta(x_t, c, t) - \epsilon_\theta(x_t, t)$ is the difference between the conditional and the unconditional model, i.e., the influence of the condition. It also provides the information of the features that $x_t$ should possess in order to be generated as class $c$. The classifier-free diffusion model makes use of this information to guide the generation process. Gandikota et al. (2023) proposed ESD, which uses the opposite of this guidance as the unlearning objective to fine-tune SD:

$$\epsilon_{\theta}(x_t, c, t) \leftarrow \epsilon_{\theta^{*}}(x_t, t) - \eta\,\big[\epsilon_{\theta^{*}}(x_t, c, t) - \epsilon_{\theta^{*}}(x_t, t)\big].$$

The formulation uses two instances of the diffusion model, $\epsilon_{\theta^{*}}$ and $\epsilon_{\theta}$. $\epsilon_{\theta^{*}}$ is fixed and quantifies the opposite of the guidance, while $\epsilon_{\theta}$ is the unlearned network to be solved for, and $\eta$ controls the strength of the negative guidance. ESD has two variants, ESD-x and ESD-u. They share the same fine-tuning objective but fine-tune different subsets of parameters. ESD-x fine-tunes the cross-attention layers, while ESD-u fine-tunes the non-cross-attention modules. Gandikota et al. (2023) found that ESD-x can control the unlearned data according to a specific prompt, such as a named artistic style, and has little influence on other concepts. ESD-u can unlearn concepts from SD regardless of whether a specific prompt is used, which is more suitable for global unlearning such as NSFW content.
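As a minimal numerical sketch of the two guidance rules discussed above, the following Python snippet assumes the standard classifier-free guidance form (the unconditional prediction plus a scaled conditional-minus-unconditional difference) and an ESD-style target that subtracts the same difference instead. The function names, the list-based noise vectors, and the mean-squared-error loss are illustrative assumptions, not the implementation of Gandikota et al. (2023).

```python
from typing import List

Vector = List[float]


def cfg_noise(eps_uncond: Vector, eps_cond: Vector, scale: float) -> Vector:
    """Classifier-free guidance: move the unconditional prediction towards the condition."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def esd_target(eps_uncond: Vector, eps_cond: Vector, eta: float) -> Vector:
    """ESD-style target: negate the guidance term to move away from the condition.

    Both inputs are assumed to come from the frozen (original) network.
    """
    return [u - eta * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def esd_loss(eps_finetuned_cond: Vector, target: Vector) -> float:
    """Mean squared error between the fine-tuned conditional output and the target."""
    return sum((s - t) ** 2 for s, t in zip(eps_finetuned_cond, target)) / len(target)


# Toy predictions of the frozen network for one noisy sample.
eps_u = [0.0, 1.0]   # unconditional prediction
eps_c = [1.0, 3.0]   # prediction conditioned on the concept to erase

guided = cfg_noise(eps_u, eps_c, scale=2.0)
target = esd_target(eps_u, eps_c, eta=1.0)
loss_at_target = esd_loss(target, target)
```

In this toy setting the guided prediction moves further along the conditional direction, whereas the ESD target moves in the opposite direction; the loss vanishes once the fine-tuned network reproduces the target.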

2.3.4. Dataset De-duplication

Memorization is another problem that threatens the copyright of source data. It refers to the problem that a generative model might produce images that are the same as its training data (van den Burg et al., 2021). Many works have found that duplicated examples in the training data are more likely to be generated (Webster, 2023; Somepalli et al., 2023a; Carlini et al., 2023). Somepalli et al. (2023a) also argued that memorization is associated with the frequency at which data is replicated. Carlini et al. (2023) pointed out that the samples that are easily memorized are usually duplicated many times.

Therefore, dataset de-duplication is necessary to mitigate memorization and prevent the model from reproducing its training data. It is categorized as an active method because de-duplication is conducted by the model builder for the purpose of providing legal services. Webster et al. (2023) proposed a method to search for duplicate training images using CLIP (Radford et al., 2021). First, it trains auto-encoders to compress the images and texts into a latent space. It proposes Subset Nearest Neighbor CLIP (SNIP) to fine-tune the encoder for compression while preserving the distances between neighbors. After compression, it uses an inverted file system (IVF) to approximately search for duplicated images: the whole dataset is divided into small groups by $k$-means, and duplicates are searched for within the closest centroids. The duplicates found are then removed to reduce memorization. OpenAI used a similar strategy to train DALL·E 2 (OpenAI, [n. d.]): it first divides the training data into clusters, and then searches for similar data within the union of a small number of clusters.
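The cluster-then-search idea can be sketched as follows. This is a simplified illustration, not the pipeline of Webster et al. (2023) or OpenAI: it assumes precomputed embeddings and centroids, searches only within the single nearest cluster rather than several close ones, and uses a hypothetical cosine-similarity threshold to declare two items duplicates.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def nearest_centroid(v: Vector, centroids: List[Vector]) -> int:
    """Index of the centroid closest to v in Euclidean distance."""
    return min(range(len(centroids)), key=lambda i: math.dist(v, centroids[i]))


def find_duplicates(embeddings: List[Vector], centroids: List[Vector],
                    threshold: float = 0.95) -> List[Tuple[int, int]]:
    """Compare items only within the same cluster instead of across the whole dataset."""
    buckets: Dict[int, List[int]] = {}
    for idx, v in enumerate(embeddings):
        buckets.setdefault(nearest_centroid(v, centroids), []).append(idx)
    pairs = []
    for members in buckets.values():
        for a in range(len(members)):
            for b in range(a + 1, len(members)):
                i, j = members[a], members[b]
                if cosine(embeddings[i], embeddings[j]) >= threshold:
                    pairs.append((i, j))
    return sorted(pairs)


def deduplicate(n_items: int, pairs: List[Tuple[int, int]]) -> List[int]:
    """Keep the first item of every duplicate pair and drop the second."""
    drop = {j for _, j in pairs}
    return [i for i in range(n_items) if i not in drop]


# Four toy embeddings forming two near-duplicate pairs.
embeddings = [[1.0, 0.0], [0.999, 0.01], [0.0, 1.0], [0.01, 1.0]]
centroids = [[1.0, 0.0], [0.0, 1.0]]
pairs = find_duplicates(embeddings, centroids)
kept = deduplicate(len(embeddings), pairs)
```

Restricting the pairwise comparison to items that share a cluster is what makes the search approximate but tractable on very large datasets; duplicates that fall into different clusters are missed by this simplified version.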

Somepalli et al. (2023b) pointed out that the conditioning of a diffusion model, i.e., the text caption, also influences memorization. Their experiments demonstrated that, during the fine-tuning of Stable Diffusion, memorization is more likely to happen if the captions (or text prompts) of different images are more diverse. This does not mean that totally random captions exacerbate memorization; rather, captions that are correlated with the image content but more diverse lead to more severe memorization. In contrast, diverse captions for duplicated images can reduce memorization. They also found that training for more epochs has a similar effect to duplication, increasing memorization; that is, more epochs may mimic duplication. Based on these findings, they concluded that, for both a single image and duplicated images, diverse captions can help slow down memorization. They proposed several methods to process the captions during fine-tuning to avoid memorization, including multiple captions (randomly sampling from 20 captions generated by BLIP (Li et al., 2022a) for each image during training) and random token replacement and addition (randomly replacing tokens/words in the caption with a random word, or adding a random word to the caption at a random location), among others.
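The caption-processing strategies listed above can be illustrated with a short sketch. The function names, the whitespace tokenization, the replacement probability, and the toy vocabulary are assumptions made for illustration and are not taken from Somepalli et al. (2023b).

```python
import random
from typing import List


def sample_caption(captions: List[str], rng: random.Random) -> str:
    """Multiple captions: pick one of several alternative captions for an image."""
    return rng.choice(captions)


def random_token_replacement(caption: str, vocab: List[str], prob: float,
                             rng: random.Random) -> str:
    """Replace each whitespace-separated token with a random word with probability prob."""
    return " ".join(
        rng.choice(vocab) if rng.random() < prob else tok
        for tok in caption.split()
    )


def random_token_addition(caption: str, vocab: List[str], rng: random.Random) -> str:
    """Insert one random word at a random position in the caption."""
    tokens = caption.split()
    tokens.insert(rng.randint(0, len(tokens)), rng.choice(vocab))
    return " ".join(tokens)


rng = random.Random(0)           # fixed seed so the sketch is reproducible
vocab = ["lamp", "river", "seven"]
caption = "a painting of a dog"

augmented = random_token_addition(
    random_token_replacement(caption, vocab, prob=0.1, rng=rng), vocab, rng
)
```

Applying a fresh augmentation each time an image is visited means the model rarely sees the same image paired with the same caption twice.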

2.4. Model Copyright Protection

This subsection summarizes the strategies for protecting the copyright of DGMs for image generation. This protection is important for two major reasons. First, a powerful DGM, which necessitates extensive computational resources and well-annotated data for its creation, needs to be safeguarded from copyright infringements (such as being stolen by malicious users to offer unauthorized paid services). Second, the unregulated distribution of those models may lead to ethical concerns, including their potential misuse for generating misinformation, which necessitates techniques to identify the origin of an image.

Deep Generative Model Watermarking is a common solution for model copyright protection. It involves incorporating distinct information, known as a watermark, into the models before their deployment. The embedded watermark can be retrieved from a potentially infringing model or its generated data to confirm any suspected copyright violations. To achieve good performance in protecting the model while preserving the original generation performance, the watermarking technique should incorporate the following key properties: (a) Fidelity: the ability of the watermarking method to not significantly impact the general performance of the model (the diversity and visual quality of the generated images); (b) Integrity: the accuracy with which the watermark can be extracted; (c) Capacity: the length of message that can be effectively encoded to and extracted from the watermark; (d) Robustness: the ability of the watermark to withstand alterations to the model or perturbation on the watermarked generated images; and (e) Efficiency: the computational cost of the watermark embedding and extraction process.

The proposed DGM watermarking methods can be summarized into three categories according to the specific way they embed the watermark:

• Parameter-based watermarking encodes the watermark message into the model’s parameters or structural configurations;

• Image-based watermarking embeds the watermark message into every image generated by the model;

• Trigger-based watermarking secretly incorporates a trigger into the protected model such that an image with copyright information will be generated once the trigger is activated.

2.4.1. Parameter-based watermarking

This type of watermarking technique aims to subtly incorporate watermark information into the network’s internal weights or structural configurations. It is known as a “white-box” method because full access to these elements is required to embed and extract the watermark. Parameter-based watermarking has been widely applied for verifying the ownership of classification models (Uchida et al., 2017; Darvish Rouhani et al., 2019; Zhao et al., 2021) and has also been extended to protect DGMs in recent years.

Ong et al. (2021) proposed to embed the signature of a GAN model into the scaling factors, $\gamma$, of the normalization layers of the generator. The loss for watermark embedding is:

$$\mathcal{L}_s(\gamma, B) = \sum_{i=1}^{C} \max\big(\gamma_0 - \gamma_i b_i,\ 0\big), \tag{11}$$

where $B = \{b_1, \dots, b_C\}$ with $b_i \in \{-1, +1\}$ refers to the predefined binary watermark message and $C$ denotes the number of channels. This objective stipulates that the scaling factor of the $i$-th channel, denoted as $\gamma_i$, adopts either a positive or negative polarity (+/-) as determined by $b_i$. The term $\gamma_0$ is a constant employed to regulate the minimum magnitude of $\gamma_i$. The sign loss is added to the original training loss of the GAN model (e.g., a DCGAN (Radford et al., 2015), SRGAN (Ledig et al., 2017) or CycleGAN (Zhu et al., 2017)) to form the overall training objective of the generator. With this embedding strategy, the capacity of the watermark is determined by the total number of channels in the normalization layers. In the verification stage, the embedded message can be easily extracted by looking at the signs of the specific scaling factors of the model.
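To make the sign-based embedding and extraction concrete, the following sketch assumes a hinge-style sign loss, i.e., the sum over channels of max(gamma0 - gamma_i * b_i, 0) with message bits in {-1, +1}. The function names, the default margin of 0.1, and the bit-accuracy helper are illustrative assumptions rather than the code of Ong et al. (2021).

```python
from typing import List


def sign_loss(gammas: List[float], bits: List[int], gamma0: float = 0.1) -> float:
    """Penalize scaling factors whose sign disagrees with the message bit,
    or whose magnitude is below the margin gamma0."""
    return sum(max(gamma0 - g * b, 0.0) for g, b in zip(gammas, bits))


def extract_signature(gammas: List[float]) -> List[int]:
    """Read the message back from the signs of the scaling factors."""
    return [1 if g >= 0 else -1 for g in gammas]


def bit_accuracy(extracted: List[int], expected: List[int]) -> float:
    """Fraction of message bits that were recovered correctly."""
    return sum(e == t for e, t in zip(extracted, expected)) / len(expected)


# Toy normalization layer with four channels and a 4-bit message.
gammas = [0.5, -0.3, 0.05, -0.2]
message = [1, -1, 1, 1]

loss = sign_loss(gammas, message)          # only channels 3 and 4 contribute
recovered = extract_signature(gammas)
accuracy = bit_accuracy(recovered, message)
```

Here the third channel has the correct sign but falls inside the margin, and the fourth has the wrong sign, so only these two channels are penalized; verification needs nothing more than reading the signs of the stored scaling factors.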

2.4.2. Image-based watermarking

This group of watermarking approaches embeds watermark messages into all the images generated by the model to be protected. To achieve this goal, various studies suggested using an additional deep-learning-based network to embed watermarks after the image generation process. In detail, once the DGM creates an image, this extra watermark embedding network applies a watermark to the image before it is released to the public. For example, Zhang et al. (2022a) proposed two frameworks for watermark embedding based on the Human Visual System (HVS) to reduce the impact of the watermark on the visual quality of the generated images. Leveraging the fact that humans are more sensitive to changes in green color and brightness, they recommended embedding watermarks into the Red (R) and Blue (B) channels in the RGB color system or the U/V channels in the Discrete Cosine Transform (DCT) domain. In either framework, each channel carries a unique watermark, allowing for the extraction of two distinct watermarks from a single image.

As a follow-up work, Zhang et al. (2023b) noted that images marked using prior watermark embedding networks display significant high-frequency artifacts in the frequency domain. As shown in Figure 4, slight spatial artifacts are detectable in the generated image marked by the method described in Wu et al. (2020), and obvious grid-like high-frequency artifacts can be found in the DCT heat map of the marked image. These artifacts, mainly due to the up-sampling and down-sampling convolution operations of the GAN-like embedding network, could compromise the watermark’s imperceptibility. To tackle the problem, they designed a new watermark embedding network that suppresses high-frequency artifacts through anti-aliasing. The anti-aliasing is primarily implemented by introducing a low-pass filter before the down-sampling process and appending a convolutional layer after the nearest-neighbor up-sampling process, adopting strategies shown to be effective in previous research. In addition to using a separate watermark embedder, other strategies directly modify the host generative network so that the host network itself embeds the watermark while performing the original generation task. At the same time, an external watermark decoder is trained to correctly extract the watermark information from the generated images. To achieve this goal in GANs, Fei et al. (2022) proposed to update the training objective of the generator by:

where and refer to the discriminator and generator of GAN respectively, refers to the watermark decoder, means the prior distribution of the latent space, is the ground truth watermark message, is a regularization parameter and represents the binary cross entropy. The first term in the equation is the standard generator loss, while the second term is for ensuring the correctness of the decoded binary watermark message. It is noted that the watermark decoder they applied here is retrieved from a well-developed standard image-watermarking framework and its parameters are fixed in the training process of . With the above training objective, the model can be either trained from scratch or fine-tuned from a non-watermarked pre-trained GAN model.

Yu et al. (2020) also proposed a fingerprinting technique to trace the outputs of GANs back to their source, which can help identify misuse. The core difference between it and the work of Fei et al. (2022) is that the trained generator takes both the latent code and the embedding of the fingerprint (a sequence of bits) as its input, which increases the efficiency and scalability of the fingerprinting mechanism. Once the model is trained, multiple instances of fingerprinted generators which focus on embedding different fingerprint messages can be directly obtained. In their algorithm, the watermark encoder, , which maps fingerprint to its embedding, the decoder, , which obtains the latent code and fingerprint from a watermarked image, the discriminator and the generator of GAN are optimized together with the following loss:

In this objective, the first term refers to the original training loss of a GAN model and the second term is the regularization term first proposed by Srivastava et al. (2017) for mitigating the mode collapse issue of GANs. The third term is the cross-entropy loss of fingerprint decoding, which ensures the correct reconstruction of the fingerprint; $\sigma$ here refers to the sigmoid function. The last term is designed to ensure the perceptual similarity between images generated with the same latent code but different fingerprints, which guarantees that the latent code exclusively controls the content of the generated images, irrespective of the fingerprint variations.

A similar idea of weight modulation has also been applied to the protection of LDMs. Kim et al. (2023) proposed to incorporate the watermark message into generated images by modulating the parameters of each layer of the LDM decoder. Specifically, after the watermark message is obtained, a mapping network is applied to derive the feature representation of the message. Then an affine transformation layer is developed for each layer of the decoder. The affine transformation layer matches the dimension of the message representation to the dimension of the model’s weights. The weight modulation is then conducted following the formulation:

$$W^{w}_{i,j,k} = U_j \cdot W_{i,j,k}, \tag{12}$$

where $W$ and $W^{w}$ refer to the pre-trained and fingerprinted parameters of the decoder, $i$, $j$, and $k$ index the input, output, and kernel dimensions of each layer, and $U_j$ denotes the scale of the modulation applied to the $j$-th output channel. Once the watermark message is encoded, a watermark decoder is also required to retrieve the watermark from the generated images. The watermark decoder and the mapping network are jointly trained with the following loss:

where denotes the binary cross entropy of watermark decoding:

in which refers to the latent feature of an image , denotes the output of the decoder whose weights being modulated by Eq.(12), and refers to the sigmoid activation function. refers to a Bernoulli distribution. is the regularization term inhibiting the influence of the watermark to the quality to the generated image.
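The weight modulation step described above can be illustrated with a small sketch. It assumes convolution weights stored as nested lists indexed by input channel, output channel, and kernel position, and it takes the per-output-channel scales as given (in the method above they would be produced by the affine transformation of the message representation). All names are hypothetical, and the sketch is not the implementation of Kim et al. (2023).

```python
from typing import List

Weights = List[List[List[float]]]  # weights[i][j][k]: input i, output j, kernel position k


def modulate_weights(weights: Weights, scales: List[float]) -> Weights:
    """Scale every weight feeding output channel j by scales[j]."""
    return [
        [[scales[j] * w for w in kernel] for j, kernel in enumerate(per_input)]
        for per_input in weights
    ]


# One input channel, two output channels, kernel of size two.
pretrained = [[[1.0, 2.0], [3.0, 4.0]]]
scales = [2.0, 0.5]  # assumed output of the affine layer for one message

fingerprinted = modulate_weights(pretrained, scales)
```

Because the pre-trained weights are only rescaled, a different message yields a different fingerprinted decoder without retraining the decoder itself.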

Another work (Xiong et al., 2023), which also aims to protect the copyright of LDMs, has a similar pipeline to Kim et al. (2023). The major difference is that the feature representation of the watermark message is not used to modulate the weights of the decoder, but is instead combined with the intermediate outputs of the fine-tuned decoder such that the generated image is watermarked. Under this pipeline, a watermark decoder is also required to extract the watermark from the generated image.

Additionally, the work of Fernandez et al. (2023), which also focuses on the protection of Latent Diffusion Models (LDMs), suggested directly applying a classical neural network-based watermarking method, HiDDeN (Zhu et al., 2018), to jointly optimize the parameters of a watermark encoder and extractor. The decoder of the LDM is then fine-tuned such that all images it generates contain a given watermark that can be extracted by the pre-trained watermark extractor.

Unlike the previously mentioned methods that require modifying the generative networks to include watermarks in their generated images, Yu et al. (2021) achieved this by solely watermarking the training images of the generative models. To ensure that the watermark can be successfully transferred from the training images to the generated images of GANs, they developed a deep-learning-based structure for watermark (or “fingerprint” in their paper) embedding and extraction. Similar to other watermark embedding-extraction frameworks, the training objective of the watermark embedder and decoder consists of a loss that guides the decoder to decode the fingerprint correctly and a loss that penalizes any deviation of the watermarked image from the original image. Although the framework proposed by Yu et al. (2021) was originally designed for DeepFake detection and misinformation prevention, its functionality can be extended to verifying the copyright of GANs, as the watermark on the generated images can indicate their source. Additionally, Zhao et al. (2023b) experimentally verified that this technique can be extended to safeguard the copyright of unconditional and class-conditional diffusion models.

While the previous methods incorporate the watermark embedding procedure into the training process of the generative models, Wen et al. (2023) proposed Tree-Ring Watermarks, a watermarking framework designed for diffusion models that performs the watermark encoding in the sampling process. As shown in Figure 5, the watermark is embedded into the initial noise vector used for sampling. To make the watermark more robust against image modifications such as cropping, dilation, flipping, and rotation, they suggest encoding the watermark pattern in the Fourier space of the initial noise vector. After the image is generated, watermark detection is done by inverting the diffusion process to reconstruct the noise vector, using the DDIM (Song et al., 2020) inversion process. In detail, the initial noise vector $x_T$ is described in Fourier space as:

$$\mathcal{F}(x_T)_i \sim \begin{cases} k^{*}_i, & \text{if } i \in M, \\ \mathcal{N}(0, 1), & \text{otherwise}, \end{cases}$$

where $M$ refers to a binary mask and $k^{*}$ is the key chosen for the watermark. The key is designed to be ring-shaped in the Fourier space to ensure that the watermark is invariant to certain common image transformations. In the detection stage, the watermark is detected if the difference between the inverted noise vector $x'_T$ and the pre-defined key $k^{*}$ in the Fourier domain of the watermarked area $M$ is below a tuned threshold $\tau$.

Another work that adds the watermark in the image sampling process is Nie et al. (2023). This approach, which targets the copyright protection of LDM or StyleGAN2 (Karras et al., 2020), does not require a network to conduct the watermark embedding and decoding, but instead suggests directly modifying the latent features (either generated by the multi-layer perceptron network of StyleGAN2 or sampled by the diffusion process of LDM) by matrix operations. In detail, let denotes an orthonormal subspace of the space of the latent feature and denotes its complementary subspace, the watermarked latent feature is

The notation refers to the watermark message, represents the pseudo-inverse of , denotes the projection of to , and denotes a hyperparameter to control the strength of the watermark. In the detection stage, in order to retrieve the watermark from the image, an optimization problem is solved:

where refers to the LPIPS metric (Zhang et al., 2018) which evaluates the visual similarity of two images. The lower and upper bound of , and , are chosen based on empirical observation.

2.4.3. Trigger-based watermarking

The trigger-based watermarking follows the basic technical scheme of backdoor attack (Wang et al., 2019; Saha et al., 2020; Chen et al., 2017), which embeds a trigger into a neural network to cause a failed classification by activating the trigger. In the protection of DGM copyright, once the trigger is integrated into the protected model, the activation of this trigger in the input will cause the model to generate a watermarked output. By examining the presence or absence of the watermark in this output, the model owner can determine whether a suspect model has been illicitly derived from the protected, watermarked model. Consequently, if the watermark is detected in the example of triggered generation, it substantiates the claim of copyright infringement regarding the model.

Ong et al. (2021) also proposed a trigger-based watermarking framework to protect the copyright of GANs. It first applies the trigger to $z$, the input of the generator $G$, to obtain the triggered input $x_w$. The protected GAN is trained so that, once the triggered input is fed to $G$, the output is generated with a watermark. The model owner can feed a triggered input into the suspect model and verify whether it was stolen from the copyrighted model by checking for the watermark in the output. For different types of GAN, the triggered input is obtained by different manually defined rules. For example, in DCGAN, the trigger is encoded with a binary representation $b \in \{0,1\}^n$:

$$x_w = z \odot b + c\,(1 - b),$$

where $\odot$ is the elementwise product, $c$ is a constant, and $n$ is the dimension of $z$. That is, each dimension of $z$ is encoded with the corresponding dimension of $b$: it is kept where $b$ is 1 and overwritten by $c$ where $b$ is 0. The triggered input $x_w$ is expected to cause $G$ to generate a watermarked target $y_w$. During the training of the GAN, the trigger pair $(x_w, y_w)$ is included in a regularization term for this purpose. The term is formulated as

$$\mathcal{L}_w = 1 - \mathrm{SSIM}\big(G(x_w),\, y_w\big),$$

where SSIM refers to the structural similarity. The regularization term is combined with the training loss of $G$, denoted $\mathcal{L}_G$, by a coefficient $\lambda$:

$$\mathcal{L}_{G_w} = \mathcal{L}_G + \lambda\, \mathcal{L}_w.$$
However, the trigger-based method is not implemented in isolation. Instead, it is integrated with the parameter-based watermarking approach, as detailed in Equation (11), following the practice outlined in (Ong et al., 2021).
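The trigger construction and the SSIM-based regularizer can be sketched in a few lines. This is a minimal illustration, not the implementation of Ong et al. (2021): it assumes the triggered input takes the masked form "keep the latent dimensions where the binary mask is 1, overwrite the others with a constant", and it replaces the usual windowed SSIM with a simplified single-window version. The function names, the constant `c=-10.0`, and the weight `lam` are hypothetical choices made for the example.

```python
import numpy as np

def make_triggered_input(z, b, c=-10.0):
    """Keep latent dims where b == 1; overwrite dims where b == 0 with c (assumed form)."""
    z = np.asarray(z, dtype=float)
    b = np.asarray(b, dtype=float)
    return z * b + c * (1.0 - b)

def global_ssim(x, y, data_range=1.0):
    """Simplified SSIM computed over the whole image as one window (an assumption;
    standard SSIM averages over local Gaussian-weighted windows)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    vx, vy = x.var(), y.var()
    cov = ((x - mx) * (y - my)).mean()
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx**2 + my**2 + c1) * (vx + vy + c2))

def watermark_loss(generated, target):
    """Regularizer: small when the triggered output resembles the watermarked target."""
    return 1.0 - global_ssim(generated, target)

def total_generator_loss(gan_loss, generated, target, lam=1.0):
    """Ordinary generator loss plus the weighted watermark regularizer."""
    return gan_loss + lam * watermark_loss(generated, target)

# Usage: a 4-dimensional latent vector with a mask that overwrites dims 1 and 3.
z = np.array([0.5, -1.2, 0.3, 2.0])
b = np.array([1, 0, 1, 0])
x_w = make_triggered_input(z, b)
```

In a real training loop, `generated` would be the generator output for the triggered input and `target` the watermarked image; here both are plain arrays so the sketch stays framework-free.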

Besides the protection of GANs, Liu et al. (2023c) proposed to watermark Stable Diffusion by injecting triggers into the prompt. According to the method, when specific triggers are present in the prompt, Stable Diffusion will produce an image with a watermark. If the resulting image closely resembles the watermarked prototype, it confirms that the model is safeguarded by the designated watermark. Liu et al. (2023c) developed two different approaches for integrating triggers, NaiveWM and FixedWM. NaiveWM embeds a specific word at a random location within the prompt, while FixedWM places a specific word at a predetermined position in the prompt. The chosen trigger word should be nonsensical to avoid accidental activation. For embedding the trigger-based watermark into Stable Diffusion, a triggered dataset is employed to fine-tune the model. Additionally, a clean dataset is also used in the fine-tuning process to preserve the quality of generation when the trigger is not activated. Zhao et al. (2023b) proposed a similar idea, but did not consider the stealthiness of the trigger. Their method directly uses “[V]” as the whole triggered prompt.
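The difference between the two trigger placements can be made concrete with a short sketch. It only illustrates the prompt manipulation described above; the function names, the trigger word `qzxv`, and the way the fine-tuning set is assembled (every clean prompt paired once with the watermark image) are assumptions for illustration, not details taken from Liu et al. (2023c).

```python
import random

def naive_wm(prompt, trigger, rng=None):
    """NaiveWM-style placement: insert the trigger word at a random position."""
    rng = rng or random.Random()
    words = prompt.split()
    pos = rng.randint(0, len(words))  # inclusive bounds, so the end is allowed
    return " ".join(words[:pos] + [trigger] + words[pos:])

def fixed_wm(prompt, trigger, position=0):
    """FixedWM-style placement: insert the trigger word at a predetermined position."""
    words = prompt.split()
    pos = min(position, len(words))
    return " ".join(words[:pos] + [trigger] + words[pos:])

def build_finetune_set(clean_pairs, trigger, watermark_image, trigger_fn):
    """Combine clean (prompt, image) pairs with triggered prompts that map to the
    watermark image (hypothetical construction of the mixed fine-tuning set)."""
    triggered = [(trigger_fn(p, trigger), watermark_image) for p, _ in clean_pairs]
    return list(clean_pairs) + triggered

# Usage with a nonsensical trigger word, as the text recommends.
clean = [("a photo of a cat", "cat.png"), ("a painting of a lake", "lake.png")]
mixed = build_finetune_set(clean, "qzxv", "watermark.png", fixed_wm)
```

Keeping the clean pairs in the mix reflects the role of the clean dataset in preserving generation quality when the trigger is absent.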

Different from Liu et al. (2023c) and Zhao et al. (2023b), who embedded the watermark through the prompt guidance, Peng et al. (2023a) watermarked the model by injecting triggers into the diffusion process. In the reverse process of generative diffusion models, when the trigger is added onto the current step, the subsequent state is generated towards the watermarked image. By repeatedly injecting the trigger into the reverse process, the final generated image becomes a watermarked image that can be used to verify the copyright of the model. To embed the watermark, the model is trained on both a triggered dataset and a clean dataset. During training, when the data comes from the triggered dataset, the triggered noise is input into the denoising network of the diffusion model, and the network is optimized to denoise towards the watermarked image. During both the forward and reverse processes, the trigger is added into step by

where is the trigger, and is the state of step . For reverse process, is the image denoised from the last step, while for forward process, it is the diffused image accumulated by the Gaussian noise in the forward steps. This protection method can be used for both training from scratch and fine-tuning. Although this method can also protect the copyright by verifying whether the final output is watermarked, extracting the watermark requires modifying every diffusion step, which is a stricter requirement than that of the previous methods, which only need a trigger in the prompt.

3. Copyright in Text Generation

In this section, we first define the problem of copyright protection in text generation and the unique properties of the text domain. We then introduce data copyright and model copyright protection through a taxonomy of existing methods.

3.1. Background: DGMs for text generation

In the realm of text generation, our focus is primarily on Large Language Models (LLMs), due to their outstanding capabilities and the potential risks they entail. As key components of DGMs, LLMs have notably propelled advancements in text generation. They exhibit remarkable emergent abilities, significantly boosting performance in a range of NLP tasks. However, alongside their exceptional efficacy, LLMs also present substantial concerns, particularly regarding copyright. Their considerable commercial value and the high cost of training them further underscore the importance of addressing these issues. In this section, which focuses on the text domain, we explore LLMs in detail, acknowledging their efficiency and effectiveness in text generation while also considering the associated concerns in copyright protection.

Training and Inference of LLMs. Generally, Large Language Models (LLMs) are constructed to comprehend human language and produce coherent, contextually relevant text. Most existing LLMs can be categorized into “pre-trained LLMs” and “fine-tuned LLMs”. Pre-trained LLMs are trained on a massive amount of internet text data so that they can predict the probable next token based on its preceding tokens. Through this process, the models obtain an understanding of the patterns and structures inherent in human language. Many early LLMs, such as GPT-2 (Radford et al., 2019), GPT-3 (Brown et al., 2020), OPT (Zhang et al., 2022b), GPT-Neo (Black et al., 2021), and others, were developed with this procedure, which equips them to complete sentences by generating the likely subsequent tokens.

Through various fine-tuning techniques, LLMs have been made increasingly flexible and adept at various downstream tasks. Specifically, the Instruction Tuning (Zhang et al., 2023c) strategy is employed to enable LLMs to handle diverse language tasks based on users’ requests. This versatility allows LLMs to be applied as general assistants for tasks such as question answering (Lu et al., 2022). Furthermore, Reinforcement Learning from Human Feedback (RLHF) is utilized to help LLMs better align with human values, enhance the reliability of generated outputs, and improve ethical decision-making (Ouyang et al., 2022). Based on these advancements, advanced LLMs like ChatGPT (https://chat.openai.com/), Claude (https://www.anthropic.com/index/claude-2), Bard (Manyika et al., 2023), and LLaMA 2 (Touvron et al., 2023) have been developed.

Notations. Next, we introduce the key notations used in this paper. We denote an arbitrary text as $x = (x_1, x_2, \dots, x_n)$, which is composed of a sequence of tokens with length $n$. Many popular LLMs follow an “auto-regressive” manner: given a sequence of prior tokens $x_{<i} = (x_1, \dots, x_{i-1})$, a language model $f$ calculates the probability of the next token conditioned on the preceding tokens. We denote the probability that model $f$ predicts the $i$-th token as $v$ by $f(v \mid x_{<i})$, where $v \in \mathcal{V}$ and $\mathcal{V}$ is the vocabulary. Based on this design, the likelihood of each token $x_i$ in the original sentence given by the model can be defined as:

$$p_f(x_i \mid x_{<i}) = f(x_i \mid x_{<i}), \tag{13}$$

which is the probability that the model outputs the same token as $x_i$ in the text. Similarly, we can also define the likelihood of the whole sentence $x$ given by the model $f$, which is the overall likelihood of all tokens in the sentence:

$$p_f(x) = \prod_{i=1}^{n} p_f(x_i \mid x_{<i}). \tag{14}$$

For most existing LLMs, during the (pre-)training process, the model is trained to maximize this likelihood, so that it learns to generate texts following the distribution of the training texts. During inference, the model generates text by sampling from the tokens with high likelihood.
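The per-token likelihood, the sentence likelihood, and likelihood-based sampling can be illustrated with a toy auto-regressive model. The sketch below is an assumption-laden stand-in for an LLM: the hypothetical `TOY_MODEL` conditions only on the previous token (a bigram table) and its probabilities are made up for the example, whereas a real LLM conditions on the whole prefix.

```python
import math
import random

VOCAB = ["the", "cat", "sat", "<eos>"]

# Hypothetical next-token distributions, keyed by the previous token
# (None stands for the empty prefix). Each row sums to 1.
TOY_MODEL = {
    None:    {"the": 0.7,  "cat": 0.1,  "sat": 0.1,  "<eos>": 0.1},
    "the":   {"the": 0.05, "cat": 0.8,  "sat": 0.1,  "<eos>": 0.05},
    "cat":   {"the": 0.1,  "cat": 0.05, "sat": 0.7,  "<eos>": 0.15},
    "sat":   {"the": 0.2,  "cat": 0.05, "sat": 0.05, "<eos>": 0.7},
    "<eos>": {"the": 0.0,  "cat": 0.0,  "sat": 0.0,  "<eos>": 1.0},
}

def next_token_probs(prefix):
    """Distribution over the vocabulary for the token that follows `prefix`."""
    last = prefix[-1] if prefix else None
    return TOY_MODEL[last]

def token_likelihoods(tokens):
    """Probability the model assigns to each token given the tokens before it."""
    return [next_token_probs(tokens[:i])[tok] for i, tok in enumerate(tokens)]

def sequence_log_likelihood(tokens):
    """Log of the product of per-token likelihoods (summed in log space)."""
    return sum(math.log(p) for p in token_likelihoods(tokens))

def sample(max_len=10, rng=None):
    """Generate text by repeatedly sampling the next token from the model."""
    rng = rng or random.Random(0)
    out = []
    for _ in range(max_len):
        probs = next_token_probs(out)
        tok = rng.choices(list(probs), weights=list(probs.values()))[0]
        if tok == "<eos>":
            break
        out.append(tok)
    return out

sentence = ["the", "cat", "sat"]
per_token = token_likelihoods(sentence)          # [0.7, 0.8, 0.7]
log_lik = sequence_log_likelihood(sentence)      # log(0.7 * 0.8 * 0.7)
```

Summing logarithms instead of multiplying probabilities is the usual choice in practice, since the product of many small probabilities underflows quickly for long texts.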

3.2. Copyright Issues in Text Generation

Data copyright protection. The data copyright problem regarding the use of LLMs has attracted extensive attention and debate. The definition of copyright violation varies across countries and laws. Generally, it means the use of works protected by copyright without permission for a usage where such permission is required, thereby infringing certain exclusive rights granted to the copyright holder, such as the right to reproduce, distribute, display or perform the protected work, or to make derivative works (https://en.wikipedia.org/wiki/Copyright_infringement). Similarly, Lee et al. (2023a) note that “plagiarism occurs when any content including text, source code, or audio-visual content is reused without permission or citation from an author of the original work.” As evident from the following laboratory and court cases, current LLMs demonstrate various plagiarism behaviors, which can be divided into three types: verbatim plagiarism, paraphrase plagiarism, and idea plagiarism:

• Verbatim Plagiarism, which refers to directly copying the original data completely or partially.

• Paraphrase Plagiarism, which refers to composing new works from copyrighted works through synonym replacement, statement rearrangement, or even translating sentences back and forth.

• Idea Plagiarism, which refers to copying the core idea of the copyrighted material.
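Of the three types, verbatim plagiarism is the simplest to check mechanically. The sketch below is one possible check, not a method from the cited works: it finds the longest contiguous run of tokens shared by a generated text and a reference text and flags the output when that run reaches a threshold. The whitespace tokenization and the `min_tokens` threshold are arbitrary assumptions. Paraphrase and idea plagiarism would need semantic comparison, which this does not attempt.

```python
def longest_common_span(generated, reference):
    """Longest contiguous run of tokens present in both token lists
    (dynamic programming over matching suffix lengths)."""
    best_len, best_end = 0, 0
    prev = [0] * (len(reference) + 1)
    for i in range(1, len(generated) + 1):
        cur = [0] * (len(reference) + 1)
        for j in range(1, len(reference) + 1):
            if generated[i - 1] == reference[j - 1]:
                cur[j] = prev[j - 1] + 1
                if cur[j] > best_len:
                    best_len, best_end = cur[j], i
        prev = cur
    return generated[best_end - best_len:best_end]

def is_verbatim_copy(generated_text, reference_text, min_tokens=8):
    """Flag the output if it shares a run of at least `min_tokens` tokens
    with the reference; also return the shared run for inspection."""
    span = longest_common_span(generated_text.split(), reference_text.split())
    return len(span) >= min_tokens, " ".join(span)

# Usage with a low threshold so the short example triggers the flag.
flag, span = is_verbatim_copy(
    "he said the quick brown fox jumps today",
    "the quick brown fox jumps over the lazy dog",
    min_tokens=4,
)
```

The quadratic cost is acceptable for comparing one output against one document; scanning a whole training corpus would call for an index such as a suffix array instead.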

Cases in Laboratory. Several lines of work show that Large Language Models (LLMs) tend to memorize and emit parts of their training data (which may include copyrighted materials), referred to as the “memorization effect of LLMs”. Although this effect is widely believed to be essential for a language model’s performance and generalization, it also raises serious risks for data copyright protection. Carlini et al. (2021) first found that LLMs can memorize and leak specific training examples by devising a data extraction attack that can effectively extract training data verbatim from GPT-2. Carlini et al. (2022) further quantified this phenomenon in detail and found that the memorization effect grows with the model scale, the number of times a sample is duplicated, and the prompt length. Similarly, Tirumala et al. (2022) suggested that larger LMs generally memorize faster and are less likely to forget information. They also found that nouns and numbers are more likely to be memorized.

Zhang et al. (2021) defined “counterfactual memorization”, which quantifies the performance difference between models trained on a specific data sample and models not trained on it. Biderman et al. (2023) investigated correlations of memorization between large and small models and between partially trained and fully trained models. They suggested that a partially trained model can be used to efficiently predict whether data is memorized by the fully trained model. Furthermore, Zeng et al. (2023) explored the memorization behavior of fine-tuned LLMs, identified that some feature-dense tasks such as dialog and summarization present high memorization, and demonstrated the correlation between attention scores and task-specific memorization.

Cases in Court. Recently, The New York Times initiated a lawsuit against OpenAI and Microsoft, alleging copyright infringement (https://www.nytimes.com/2023/12/27/business/media/new-york-times-open-ai-microsoft-lawsuit.html). The lawsuit asserts that OpenAI and Microsoft used millions of articles from The Times to train their automated chatbots. These chatbots, the suit contends, now rival The Times in providing reliable information. The complaint seeks restitution for what The Times describes as “billions of dollars in statutory and actual damages” arising from the “unauthorized replication and exploitation of The Times’s distinct and valuable works.” A specific allegation made in the lawsuit is that ChatGPT, when queried about current events, occasionally generates responses containing “verbatim excerpts” from articles published by The New York Times. These articles are typically behind a paywall, accessible only to subscribers. Moreover, the lawsuit highlights instances where the Bing search engine, incorporated with ChatGPT, reportedly displayed content sourced from a New York Times-owned website. This usage was done without providing direct links to the articles or including the referral links that The Times employs for revenue generation.

Model copyright protection. For LLMs, the infringement on model copyright is in line with the model copyright protection in the image domain. The model builder invests a significant amount of funds and labor into the construction of LLMs, which naturally grants them intellectual property rights over the trained model.

3.3. Data Copyright Protection

According to the previously discussed cases, a majority of research works study how to improve the training scheme of LLMs to avoid replicating their training data samples. These methods are generally proposed from the perspective of model builders, to prevent copyright infringement and to provide their services lawfully. In the text domain, they can be categorized according to the major reasons that cause the memorization and plagiarism behaviors of LLMs:

• Data de-duplication: the model builder removes duplicated samples from the training data, since memorization grows with the number of times a sample is duplicated.

• Improved training & generation algorithms: the model builder can also modify the training objective and text generation procedure to avoid potential reproduction behavior of LLMs.

• Alignment strategies: the model builder devises new alignment strategies to reduce memorization in LLMs.

• Machine unlearning, which serves to delete the copyrighted materials from LLMs once the owners of the copyrighted materials identify the infringement.

Similar to the image domain, machine unlearning can be seen as a passive method requested by the data owner, while the others are active approaches conducted directly by the model builder during the model construction stage.

3.3.1. Data De-duplication