Copula-based measures of asymmetry between the lower and upper tail probabilities

Abstract

We propose a copula-based measure of asymmetry between the lower and upper tail probabilities of bivariate distributions. The proposed measure has a simple form and possesses some desirable properties as a measure of asymmetry. The limit of the proposed measure as the index goes to the boundary of its domain can be expressed in a simple form under certain conditions on copulas. A sample analogue of the proposed measure for a sample from a copula is presented and its weak convergence to a Gaussian process is shown. Another sample analogue of the proposed measure, which is based on a sample from a distribution on $\mathbb{R}^2$, is given. Simple methods for interval estimation and nonparametric testing based on the two sample analogues are presented. As an example, the proposed measure is applied to daily returns of S&P500 and Nikkei225.

Keywords: Asymptotic theory; Bootstrap; Extreme value theory; Gaussian process; Stock daily return.

1 Introduction

In statistical analysis of multivariate data, it is often the case that data have a complex dependence structure among variables. As a statistical tool for analyzing such data, copulas have gained popularity in various academic fields, especially finance, actuarial science and survival analysis (see, e.g., Joe, 1997, 2014; Nelsen, 2006; McNeil et al., 2015).

A copula is a multivariate cumulative distribution function with uniform margins. The bivariate case of Sklar’s theorem states that, for a bivariate cumulative distribution function $H$ with margins $F$ and $G$, there exists a copula $C$ such that $H(x,y) = C(F(x), G(y))$. Hence a copula can be used as a model for the dependence structure and is applicable for flexible modeling. Another important advantage of copulas is that they are useful for constructing measures of dependence. For example, the tail dependence coefficient is a well-known measure of dependence in a tail (see, e.g., Section 2.13 of Joe, 2014).

One important problem in copula-based modeling is to decide which should be fitted to the data of interest: a copula with symmetric tails or a copula with asymmetric tails. A related problem is that, if a copula with asymmetric tails is appropriate for the data, what degree of tail asymmetry the copula should have. These problems are important because a lack of fit in the tails of copulas leads to erroneous results in statistical analysis. For example, it has been argued that widespread application of the Gaussian copula, which has symmetric light tails, to financial products contributed to the global financial crisis of 2008–2009 (see Donnelly and Embrechts, 2010). Therefore, in order to carry out sound statistical analysis, it is essential to evaluate the degree of tail asymmetry of the copula appropriately. Given the stock market turmoil triggered by the outbreak of COVID-19, these problems are arguably even more important.

Some copula-based measures of tail asymmetry have been proposed in the literature. Nikoloulopoulos et al. (2012) and Dobrić et al. (2013) discussed a measure of tail asymmetry based on the difference between the conditional Spearman’s rhos for truncated data. Krupskii (2017) proposed an extension of their measure which can regulate the weights of the tails. Rosco and Joe (2013) proposed three measures of tail asymmetry; two of them are based on moments or quantiles of a transformed univariate random variable, and one is based on the difference between a copula and its reflected copula. As related work, measures of radial symmetry for the entire domain, rather than for the tails only, have been proposed, for example, by Dehgani et al. (2013) and Genest and Nešlehová (2014). See Joe (2014, Section 2.14) for a textbook treatment of this topic.

In this paper we propose a new copula-based measure of asymmetry between the lower and upper tails of bivariate distributions. The proposed measure and its sample analogues have various tractable properties: the proposed measure has a simple form and is fast to compute; it possesses properties that are desirable for a measure of tail asymmetry; its limits as the index goes to the boundary of its domain can be easily evaluated under mild conditions on copulas; its sample analogues converge weakly to a Gaussian process or its mixture; simple methods for interval estimation and hypothesis testing based on the sample analogues are available; and a multivariate extension of the proposed measure is straightforward.

The paper is organized as follows. In Section 2 we propose a new copula-based measure of tail asymmetry and present its basic properties. Section 3 considers the limits of our measure as the index goes to the boundary of its domain. Values of the proposed measure for some well-known copulas are discussed in Section 4. In Section 5 two sample analogues of the proposed measure are presented and their asymptotic properties are investigated. Also statistical inference for our measure such as interval estimation and hypothesis tests is discussed and a simulation study is carried out to demonstrate the results. In Section 6 the proposed measure is compared with other copula-based measures of tail asymmetry. In Section 7 the proposed measure is applied to daily returns of S&P500 and Nikkei225. Finally, a multivariate extension of the proposed measure is briefly considered in Section 8.

Throughout this paper, a ‘copula’ refers to the bivariate case of a copula, namely, a bivariate cumulative distribution function with uniform margins. Let $\mathcal{C}$ be the set of all bivariate copulas. Let $\widehat{C}$ denote the survival copula associated with $C \in \mathcal{C}$, which is defined by $\widehat{C}(u,v) = u + v - 1 + C(1-u, 1-v)$. Define by .

2 Definition and basic properties

In this section we propose a measure for comparing the probabilities of the lower and upper tails of bivariate distributions. The proposed measure is defined as follows.

Definition 1.

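Let $(X,Y)$ be an $\mathbb{R}^2$-valued random vector. Assume $X$ and $Y$ have continuous margins $F$ and $G$, respectively. Then a measure of comparison between the lower-left and upper-right tail probabilities of $(X,Y)$ is defined by

$$\varsigma_{X,Y}(p) = \log\left\{ \frac{P\big(F(X) > 1-p,\ G(Y) > 1-p\big)}{P\big(F(X) \le p,\ G(Y) \le p\big)} \right\}, \qquad 0 < p < \tfrac{1}{2}.$$

Here the definition of the logarithm is extended so that $\log(x/y) = -\infty$ if $x = 0$ and $y > 0$, $\log(x/y) = \infty$ if $x > 0$ and $y = 0$, and $\log(x/y) = 0$ if $x = y = 0$.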

Similarly it is possible to define a measure to compare the lower-right and upper-left tail probabilities of bivariate distributions. Properties of this measure immediately follow from those of , which will be given hereafter, by replacing by .

The calculation of $\varsigma_{X,Y}$ can be simplified if the distribution of $(X,Y)$ is represented in terms of its copula. The proof is straightforward and therefore omitted.

Proposition 1.

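Let $C$ denote the copula of $(X,Y)$ given by

$$C(u,v) = P\big(F(X) \le u,\ G(Y) \le v\big), \qquad (u,v) \in [0,1]^2.$$

Then $\varsigma_{X,Y}$ defined in Definition 1 can be expressed as

$$\varsigma_C(p) = \log\left\{ \frac{2p - 1 + C(1-p,\,1-p)}{C(p,p)} \right\}, \qquad 0 < p < \tfrac{1}{2}. \tag{1}$$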

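Note that, using the survival copula $\widehat{C}$ associated with $C$, with $\widehat{C}(u,v) = u + v - 1 + C(1-u,1-v)$, the proposed measure (1) has the simpler expression

$$\varsigma_C(p) = \log\left\{ \frac{\widehat{C}(p,p)}{C(p,p)} \right\}.$$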

Throughout this paper, the lower tail and the upper tail of the copula $C$ are said to be symmetric if $\varsigma_C(p) = 0$, that is, if $C(p,p) = \widehat{C}(p,p)$.
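The expression (1) is straightforward to evaluate numerically. The following Python sketch is illustrative only: it assumes copulas are passed as plain Python callables, and the names `ext_log_ratio`, `survival` and `varsigma` are hypothetical. The extended logarithm of Definition 1 is implemented explicitly.

```python
import math
from typing import Callable

# Hypothetical convention: a copula is a callable C(u, v) -> float.
Copula = Callable[[float, float], float]


def ext_log_ratio(num: float, den: float) -> float:
    """log(num / den) with the extended conventions of Definition 1."""
    # Clip tiny negative values that can arise from floating-point cancellation.
    num, den = max(num, 0.0), max(den, 0.0)
    if num == 0.0 and den == 0.0:
        return 0.0
    if num == 0.0:
        return -math.inf
    if den == 0.0:
        return math.inf
    return math.log(num / den)


def survival(C: Copula) -> Copula:
    """Survival copula C_hat(u, v) = u + v - 1 + C(1 - u, 1 - v)."""
    return lambda u, v: u + v - 1.0 + C(1.0 - u, 1.0 - v)


def varsigma(C: Copula, p: float) -> float:
    """Measure (1): log{C_hat(p, p) / C(p, p)} for 0 < p < 1/2."""
    if not 0.0 < p < 0.5:
        raise ValueError("p must lie in (0, 1/2)")
    return ext_log_ratio(survival(C)(p, p), C(p, p))


# The independence copula is radially symmetric, so the measure is 0 up to rounding.
independence: Copula = lambda u, v: u * v
print(varsigma(independence, 0.1))
```

Working on the diagonal only is what keeps the computation cheap: two copula evaluations per value of $p$.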

Unlike many existing measures, the proposed measure (1) is not a global measure but a local one, in the sense that it focuses on the probability of a subdomain of the copula regulated by the index $p$. By setting a particular value of $p$, or by looking at the behavior of $\varsigma_C(p)$ for multiple choices of $p$, the proposed measure (1) provides insight different from that of global measures. For more details on the comparison between the proposed measure and existing ones, see Section 6.

It is straightforward to see that the following basic properties hold for $\varsigma_C$.

Proposition 2.

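Let $\mathcal{C}$ be the set of all bivariate copulas. Denote the measure (1) for the copula $C$ by $\varsigma_C(p)$. Assume that $C \in \mathcal{C}$, $\widehat{C}$ is the survival copula of $C$, and $C^{\top}(u,v) = C(v,u)$ is the permuted copula of $C$. Then, for $0 < p < 1/2$, we have that:

(i) $-\infty \le \varsigma_C(p) \le \infty$ for every $C \in \mathcal{C}$; the equality holds only when either $C(p,p) = 0$ or $\widehat{C}(p,p) = 0$;

(ii) $\varsigma_C(p) = 0$ if and only if $C(p,p) = \widehat{C}(p,p)$;

(iii) for fixed $\widehat{C}(p,p)$, $\varsigma_C(p)$ is monotonically non-increasing with respect to $C(p,p)$; similarly, for fixed $C(p,p)$, $\varsigma_C(p)$ is monotonically non-decreasing with respect to $\widehat{C}(p,p)$;

(iv) $\varsigma_{\widehat{C}}(p) = -\varsigma_C(p)$ for every $C \in \mathcal{C}$;

(v) $\varsigma_{C^{\top}}(p) = \varsigma_C(p)$ for every $C \in \mathcal{C}$;

(vi) if and $\{C_n\}$ is a sequence of copulas such that $C_n \to C$ uniformly, then $\varsigma_{C_n}(p) \to \varsigma_C(p)$.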

Property (i) implies that the proposed measure is potentially unbounded, although it is bounded except in the unusual case $C(p,p) = 0$ or $\widehat{C}(p,p) = 0$. Compared with a similar measure based on the difference between $C(p,p)$ and $\widehat{C}(p,p)$, our measure is more sensitive in detecting the asymmetry of tail probabilities for small $p$; see Section 6.2 for details. Property (ii) implies that $\varsigma_C(p) = 0$ for any $p$ if the copula is radially symmetric, namely, $C = \widehat{C}$. Property (ii) is the same as an axiom of Dehgani et al. (2013) and extends a property of Rosco and Joe (2013). Properties (iv)–(vi) are the same as the axioms of tail asymmetry presented in Section 2 of Rosco and Joe (2013). It is possible to use any function of $\widehat{C}(p,p)/C(p,p)$ other than the logarithm as a measure of tail asymmetry. However, one nice property of the proposed measure is property (iv), which other functions of $\widehat{C}(p,p)/C(p,p)$ do not have in general.
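A short calculation shows why property (iv) holds for the logarithm but not for an arbitrary function of the ratio. Applying the survival transformation twice returns the original copula, so replacing $C$ by $\widehat{C}$ inverts the ratio in (1):

$$\widehat{\widehat{C}}(u,v) = u + v - 1 + \widehat{C}(1-u,1-v) = u + v - 1 + \{(1-u) + (1-v) - 1 + C(u,v)\} = C(u,v),$$

$$\varsigma_{\widehat{C}}(p) = \log\left\{\frac{\widehat{\widehat{C}}(p,p)}{\widehat{C}(p,p)}\right\} = \log\left\{\frac{C(p,p)}{\widehat{C}(p,p)}\right\} = -\varsigma_C(p).$$

For a general function $g$ of the ratio $r = \widehat{C}(p,p)/C(p,p)$, property (iv) requires $g(1/r) = -g(r)$. For instance, $g(r) = r - 1$ fails this, since at $r = 2$ we get $1/2 - 1 \ne -(2 - 1)$.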

3 Limits of the proposed measure

We consider the limits of the proposed measure (1) as the index $p$ goes to the boundary of its domain. It follows from the expression (1) that $\widehat{C}(1/2,1/2) = C(1/2,1/2)$ for any copula $C$. Therefore we have

$$\lim_{p \to 1/2} \varsigma_C(p) = 0. \tag{2}$$
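The equality behind (2) can be written out. Setting $p = 1/2$ in the numerator of (1) gives

$$2 \cdot \tfrac{1}{2} - 1 + C\left(1 - \tfrac{1}{2},\, 1 - \tfrac{1}{2}\right) = C\left(\tfrac{1}{2}, \tfrac{1}{2}\right),$$

so the numerator and the denominator of (1) coincide at $p = 1/2$. If $C(1/2,1/2) > 0$, continuity of $C$ gives $\widehat{C}(p,p)/C(p,p) \to 1$ and hence (2). If $C(1/2,1/2) = 0$, monotonicity of the copulas $C$ and $\widehat{C}$ forces $C(p,p) = \widehat{C}(p,p) = 0$ for all $p < 1/2$, and the convention $\log(0/0) = 0$ again gives the limit 0.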

The limiting behavior of $\varsigma_C(p)$ as $p \to 0$ is much more intricate. To consider this problem, define

$$\varsigma_C(0) = \lim_{p \to 0+} \varsigma_C(p), \tag{3}$$

given that the limit exists. Here we present three expressions for the limit (3).

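The first expression is based on the tail dependence coefficients. Tail dependence coefficients are often used as local dependence measures of bivariate distributions. The lower-left and upper-right tail dependence coefficients of the random variables $X$ and $Y$ are defined by

$$\lambda_L = \lim_{p \to 0+} P\big(G(Y) \le p \mid F(X) \le p\big), \qquad \lambda_U = \lim_{p \to 0+} P\big(G(Y) > 1-p \mid F(X) > 1-p\big),$$

respectively, given the limits exist. If $(X,Y)$ has the copula $C$, the expressions for $\lambda_L$ and $\lambda_U$ simplify to

$$\lambda_L = \lim_{p \to 0+} \frac{C(p,p)}{p}, \qquad \lambda_U = \lim_{p \to 0+} \frac{\widehat{C}(p,p)}{p}, \tag{4}$$

respectively (see, e.g., Joe, 2014, Section 2.13).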
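As a numerical illustration of (4), the ratios $C(p,p)/p$ and $\widehat{C}(p,p)/p$ can be evaluated at a small $p$. The sketch below assumes the Clayton copula with $\theta > 0$, whose standard values are $\lambda_L = 2^{-1/\theta}$ and $\lambda_U = 0$. The helper names are hypothetical, and a single small $p$ only approximates the limits.

```python
import math


def clayton_diag(p: float, theta: float) -> float:
    """Diagonal C(p, p) of the Clayton copula for theta > 0."""
    return (2.0 * p ** (-theta) - 1.0) ** (-1.0 / theta)


def tail_ratios(p: float, theta: float):
    """Finite-p approximations C(p,p)/p and C_hat(p,p)/p of the limits in (4)."""
    lower = clayton_diag(p, theta) / p
    upper = (2.0 * p - 1.0 + clayton_diag(1.0 - p, theta)) / p
    return lower, upper


theta = 2.0
lam_l, lam_u = tail_ratios(1e-5, theta)
print(lam_l, 2.0 ** (-1.0 / theta))  # lower ratio vs. the closed form 2^(-1/theta)
print(lam_u)                          # upper ratio, close to 0
```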

Theorem 1.

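Let $(X,Y)$ be an $\mathbb{R}^2$-valued random vector with the copula $C$. Assume that the lower-left and upper-right tail dependence coefficients of $X$ and $Y$ exist and are given by $\lambda_L$ and $\lambda_U$, respectively. Suppose that either $\lambda_L$ or $\lambda_U$ is not equal to zero. Then

$$\varsigma_C(0) = \log\left( \frac{\lambda_U}{\lambda_L} \right).$$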

See Supplementary Material for the proof. Theorem 1 can be generalized by utilizing the concepts of tail orders and tail order parameters. If there exist a constant $\kappa_L > 0$ and a slowly varying function $\ell_L$ such that $C(u,u) \sim u^{\kappa_L} \ell_L(u)$ as $u \to 0+$, then $\kappa_L$ is called the lower tail order of $C$ and $\Upsilon_L = \lim_{u \to 0+} \ell_L(u)$ is called the lower tail order parameter of $C$, where $a(u) \sim b(u)$ is defined by $\lim_{u \to 0+} a(u)/b(u) = 1$. Similarly, the upper tail order $\kappa_U$ and the upper tail order parameter $\Upsilon_U$ of $C$ are defined as the lower tail order and the lower tail order parameter of the survival copula $\widehat{C}$, respectively. See Joe (2014, Section 2.16) for more details on tail orders and tail order parameters. Using the tail orders and tail order parameters, we have the following result. The proof is given in Supplementary Material.
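A tail order can be approximated numerically by the slope of $\log C(u,u)$ against $\log u$ near 0, since $\log C(u,u) \approx \kappa_L \log u + \log \ell_L(u)$ and $\log \ell_L$ varies slowly. The sketch below is a rough check based on that heuristic, not an estimator from the paper. It uses the Ali-Mikhail-Haq copula with $\theta = 0.5$, whose diagonal behaves like a multiple of $u^2$ in both tails, so both slopes should be close to 2. The helper names are hypothetical.

```python
import math


def amh(theta: float):
    """Ali-Mikhail-Haq copula C(u, v) = uv / {1 - theta (1 - u)(1 - v)}."""
    return lambda u, v: u * v / (1.0 - theta * (1.0 - u) * (1.0 - v))


def loglog_slope(diag, u1: float = 1e-3, u2: float = 1e-4) -> float:
    """Slope of log diag(u) against log u between u2 and u1 (heuristic tail order)."""
    return (math.log(diag(u1)) - math.log(diag(u2))) / (math.log(u1) - math.log(u2))


C = amh(0.5)
lower_diag = lambda u: C(u, u)                               # C(u, u)
upper_diag = lambda u: 2.0 * u - 1.0 + C(1.0 - u, 1.0 - u)   # C_hat(u, u)
kappa_l = loglog_slope(lower_diag)
kappa_u = loglog_slope(upper_diag)
print(kappa_l, kappa_u)
```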

Theorem 2.

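Let $\kappa_L$ and $\kappa_U$ be the lower and upper tail orders of the copula $C$, respectively. Then $\varsigma_C(0) = \infty$ if $\kappa_L > \kappa_U$ and $\varsigma_C(0) = -\infty$ if $\kappa_L < \kappa_U$. If $\kappa_L = \kappa_U$ and either the lower tail order parameter $\Upsilon_L$ or the upper tail order parameter $\Upsilon_U$ of $C$ is not equal to zero, then $\varsigma_C(0) = \log(\Upsilon_U / \Upsilon_L)$.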
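A heuristic calculation, not the proof given in Supplementary Material, shows where the three cases come from. Writing both diagonals in the form used in the definition of tail orders,

$$\frac{\widehat{C}(p,p)}{C(p,p)} \sim \frac{p^{\kappa_U}\, \ell_U(p)}{p^{\kappa_L}\, \ell_L(p)} = p^{\kappa_U - \kappa_L}\, \frac{\ell_U(p)}{\ell_L(p)}, \qquad p \to 0+,$$

where $\ell_L$ and $\ell_U$ are the slowly varying functions for the lower tails of $C$ and $\widehat{C}$. A ratio of slowly varying functions is slowly varying and cannot offset a nonzero power of $p$. The ratio therefore tends to $\infty$ if $\kappa_L > \kappa_U$ and to $0$ if $\kappa_L < \kappa_U$. If $\kappa_L = \kappa_U$, the ratio tends to $\Upsilon_U / \Upsilon_L$, and taking logarithms gives Theorem 2.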

Note that Theorem 2 with $\kappa_L = \kappa_U = 1$ reduces to Theorem 1. Theorems 1 and 2 are useful for evaluating $\varsigma_C(0)$ if the tail dependence coefficients, or the tail orders and tail order parameters, of a copula are already known. If those values are not known, the following third expression for $\varsigma_C(0)$ could be useful.

Theorem 3.

Let be an -valued random vector with the copula . Suppose that there exists such that exists in . Assume that . Then

given the limit exists.

See Supplementary Material for the proof. As will be seen in the next section, Theorem 3 can be utilized to calculate $\varsigma_C(0)$ for the Clayton copula and the Ali-Mikhail-Haq copula.

4 Values of the proposed measure for some existing copulas

In this section we discuss the values of the proposed measure for some existing copulas. Plotting $\varsigma_C(p)$ against $p$ is useful for comparing the probabilities of the lower and upper tails over the whole range of $p$. See, e.g., Joe (2014) for the definitions of the existing copulas discussed in this section.

4.1 Copulas with symmetric tails

Proposition 2 implies that $\varsigma_C(p) = 0$ for any $p$ if $C(u,v) = \widehat{C}(u,v)$ for any $(u,v)$. Such copulas include the independence copula, the Gaussian copula, the $t$-copula, the Plackett copula and the FGM copula. Among well-known Archimedean copulas, the Frank copula is radially symmetric, and therefore $\varsigma_C(p) = 0$ for any $p$.

4.2 Copulas with asymmetric tails

There exist various copulas for which $\varsigma_C(p)$ is not equal to zero in general. Many Archimedean copulas have asymmetric tails, including the Clayton copula, the Gumbel copula, the Ali-Mikhail-Haq copula and two-parameter BB copulas. In addition, some asymmetric extensions of the Gaussian copula and the $t$-copula have been proposed recently. Such extensions include the skew-normal copulas and skew-$t$ copulas discussed in Joe (2006) and Yoshiba (2018), for which $\varsigma_C(p)$ is not equal to zero in general. As examples of copulas with asymmetric tails, here we discuss the values of $\varsigma_C(p)$ for three well-known copulas, namely, the Clayton copula, the Ali-Mikhail-Haq copula and the BB7 copula.

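Clayton copula: The Clayton copula is defined by

$$C(u,v) = \left\{ \max\left(u^{-\theta} + v^{-\theta} - 1,\ 0\right) \right\}^{-1/\theta}, \tag{5}$$

where $\theta \in [-1, \infty) \setminus \{0\}$.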

Figure 1: panels (a), (b) and (c).

Figure 1(a) plots the values of $\varsigma_C(p)$ as a function of $p$ for four positive values of $\theta$. (For an intuitive understanding of the distributions of the Clayton copula, see Figure S1(a) and (b) of Supplementary Material, which plot random variates from the Clayton copula with the two values of the parameters used in Figure 1.) As is clear from equation (2), $\varsigma_C(p) \to 0$ as $p \to 1/2$ for any $\theta$. The smaller the value of $p$, the smaller the value of $\varsigma_C(p)$. The figure also suggests that, for a fixed value of , as increases, the value of approaches zero. The upper tail dependence coefficient of the Clayton copula is 0, and the lower tail dependence coefficient is $2^{-1/\theta}$ for $\theta > 0$ and 0 for $\theta < 0$. Therefore, for $\theta > 0$, Theorem 1 implies that $\varsigma_C(0) = -\infty$, meaning that the lower tail dependence is considerably stronger than the upper one. If , it follows from Theorem 3 that .
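The qualitative behaviour described above can be reproduced by evaluating (1) directly. The sketch below tabulates $\varsigma_C(p)$ for the Clayton copula in the form (5). The grid of $p$ and $\theta$ is an arbitrary illustrative choice, not the values used in Figure 1, and the helper names are hypothetical.

```python
import math


def clayton(theta: float):
    """Clayton copula (5), including the max(., 0) needed when theta < 0."""
    if theta < -1.0 or theta == 0.0:
        raise ValueError("theta must lie in [-1, inf) without 0")

    def C(u: float, v: float) -> float:
        if u == 0.0 or v == 0.0:
            return 0.0
        base = u ** (-theta) + v ** (-theta) - 1.0
        return max(base, 0.0) ** (-1.0 / theta)

    return C


def varsigma(C, p: float) -> float:
    """Measure (1) with the extended logarithm of Definition 1."""
    upper = max(2.0 * p - 1.0 + C(1.0 - p, 1.0 - p), 0.0)  # C_hat(p, p)
    lower = max(C(p, p), 0.0)                              # C(p, p)
    if upper == 0.0 and lower == 0.0:
        return 0.0
    if upper == 0.0:
        return -math.inf
    if lower == 0.0:
        return math.inf
    return math.log(upper / lower)


for theta in (1.0, 2.0, 4.0):
    row = [varsigma(clayton(theta), p) for p in (0.01, 0.05, 0.1, 0.2, 0.3, 0.4)]
    print(theta, [round(x, 3) for x in row])
```

For negative $\theta$, (5) gives $C(p,p) = 0$ for all sufficiently small $p$, while $\widehat{C}(p,p) > 0$, so the measure equals $+\infty$ there; for example, `varsigma(clayton(-0.5), 0.1)` is infinite.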

Ali-Mikhail-Haq copula: The Ali-Mikhail-Haq copula is of the form

$$C(u,v) = \frac{uv}{1 - \theta(1-u)(1-v)}, \tag{6}$$

where $-1 \le \theta < 1$. The values of $\varsigma_C(p)$ as a function of $p$ and $\theta$ are exhibited in Figure 1(b) and (c), respectively. (See Figure S1(d) and (e) of Supplementary Material for plots of random variates generated from the Ali-Mikhail-Haq copula with the two values of the parameters used in Figure 1(b).) Figure 1(b) suggests that decreases with . Also it appears that, for a fixed value of , the greater the value of , the smaller the value of . This observation can be seen more clearly in Figure 1(c), which plots the values of $\varsigma_C(p)$ as a function of $\theta$. Since both the lower and upper tail dependence coefficients of this copula are equal to zero, one cannot apply Theorem 1 for the calculation of $\varsigma_C(0)$. However, Theorems 2 and 3 are applicable in this case, and we have the simple form $\varsigma_C(0) = \log(1 - \theta^2)$.
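The closed form $\varsigma_C(0) = \log(1-\theta^2)$ can be checked by evaluating (1) at a small $p$. The sketch below uses $\theta = 0.5$ and $p = 10^{-3}$, both arbitrary choices. Because $\widehat{C}(p,p)$ is computed as $2p - 1 + C(1-p,1-p)$, a much smaller $p$ would lose accuracy to floating-point cancellation, so a moderate value is used. The helper names are hypothetical.

```python
import math


def amh(theta: float):
    """Ali-Mikhail-Haq copula (6), -1 <= theta < 1."""
    return lambda u, v: u * v / (1.0 - theta * (1.0 - u) * (1.0 - v))


def varsigma(C, p: float) -> float:
    """Measure (1); both AMH diagonals are positive for 0 < p < 1/2."""
    upper = 2.0 * p - 1.0 + C(1.0 - p, 1.0 - p)  # C_hat(p, p)
    return math.log(upper / C(p, p))


theta = 0.5
approx = varsigma(amh(theta), 1e-3)
limit = math.log(1.0 - theta ** 2)
print(approx, limit)
```

Both tails of this copula have tail order 2, with $C(p,p) \approx p^2/(1-\theta)$ and $\widehat{C}(p,p) \approx (1+\theta)p^2$. This is why the ratio approaches $(1+\theta)(1-\theta) = 1-\theta^2$.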

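BB7 copula: Finally, consider the BB7 copula of Joe and Hu (1996) defined by

$$C(u,v) = 1 - \left( 1 - \left[ \{1 - (1-u)^{\theta}\}^{-\delta} + \{1 - (1-v)^{\theta}\}^{-\delta} - 1 \right]^{-1/\delta} \right)^{1/\theta}, \tag{7}$$

where $\theta \ge 1$ and $\delta > 0$. Unlike the last two copulas, this model has two parameters. The parameter $\delta$ controls the lower tail dependence coefficient, while $\theta$ regulates the upper one. Indeed, the lower and upper tail dependence coefficients are known to be $2^{-1/\delta}$ and $2 - 2^{1/\theta}$, respectively.

It follows from Theorem 1 that $\varsigma_C(0) = \log\{(2 - 2^{1/\theta})\, 2^{1/\delta}\}$.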
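For the BB7 copula, Theorem 1 gives a closed-form limit that can be compared with $\varsigma_C(p)$ at a small $p$. The parameter values $\theta = 1.71$ and $\delta = 6.58$ used below are chosen only because they give $\lambda_U \approx 0.5$ and $\lambda_L \approx 0.9$. The helper names are hypothetical.

```python
import math


def bb7(theta: float, delta: float):
    """BB7 copula (7) with theta >= 1 and delta > 0, for 0 < u, v < 1."""
    def C(u: float, v: float) -> float:
        a = (1.0 - (1.0 - u) ** theta) ** (-delta)
        b = (1.0 - (1.0 - v) ** theta) ** (-delta)
        return 1.0 - (1.0 - (a + b - 1.0) ** (-1.0 / delta)) ** (1.0 / theta)
    return C


def tail_dependence_bb7(theta: float, delta: float):
    """Known closed forms: lambda_L = 2^(-1/delta), lambda_U = 2 - 2^(1/theta)."""
    return 2.0 ** (-1.0 / delta), 2.0 - 2.0 ** (1.0 / theta)


def varsigma(C, p: float) -> float:
    """Measure (1); both diagonals are positive for these parameters."""
    return math.log((2.0 * p - 1.0 + C(1.0 - p, 1.0 - p)) / C(p, p))


theta, delta = 1.71, 6.58
lam_l, lam_u = tail_dependence_bb7(theta, delta)
limit = math.log(lam_u / lam_l)  # Theorem 1
print(round(lam_l, 3), round(lam_u, 3), limit, varsigma(bb7(theta, delta), 1e-3))
```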

Figure 2: panels (a) and (b).

Figure 2 displays a plot of $\varsigma_C(p)$ with respect to $p$ for four selected values of $(\theta, \delta)$ and that of with respect to for . Note that, in Figure 2(a), and imply that the lower tail dependence coefficients are around 0.5 and 0.9, respectively, while and suggest that the upper tail dependence coefficients are about 0.5 and 0.7, respectively. (See also Figure S1(g)–(h) of Supplementary Material for plots of random variates from the BB7 copula (7) with the three combinations of the parameters in Figure 2(a).) Figure 2(a) suggests that, when both the lower and upper tail dependence coefficients are around 0.5, the values of $\varsigma_C(p)$ are close to zero for any $p$. When the difference between the lower and upper tail dependence coefficients is large, $\varsigma_C(p)$ appears to be monotonic with respect to $p$. It can be seen from Figure 2(b) that the values of decrease monotonically as increases. Also, increases monotonically with . The two contours and show somewhat similar shapes, implying that is a reasonable approximation to .

5 Two sample analogues of $\varsigma_C$

In practice, the form of the copula underlying the data is often unknown. In such a case, we need to estimate $\varsigma_C(p)$ from the data. In Sections 5.1–5.3 we propose a sample analogue of $\varsigma_C(p)$ based on a sample from the copula. Section 5.4 presents a sample analogue of $\varsigma_C(p)$ based on a sample from a distribution on $\mathbb{R}^2$. A comparison between the two proposed sample analogues is discussed via a simulation study in Section 5.5.

5.1 A sample analogue of $\varsigma_C$ based on a sample from a copula

A sample analogue of $\varsigma_C$ based on a sample from a copula is defined as follows.

Definition 2.

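Let $(U_1,V_1), \ldots, (U_n,V_n)$ be a random sample from a copula. Then we define a sample analogue of $\varsigma_C(p)$ by

$$\hat{\varsigma}_n(p) = \log\left\{ \frac{\widehat{C}_n(p,p)}{C_n(p,p)} \right\},$$

where

$$C_n(p,p) = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}(U_i \le p,\ V_i \le p), \qquad \widehat{C}_n(p,p) = \frac{1}{n} \sum_{i=1}^{n} \mathbf{1}(U_i > 1-p,\ V_i > 1-p),$$

and $\mathbf{1}(\cdot)$ is an indicator function, i.e., $\mathbf{1}(A) = 1$ if $A$ is true and $\mathbf{1}(A) = 0$ otherwise.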
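The sample analogue from a copula sample amounts to counting points in the two corner squares $[0,p]^2$ and $(1-p,1]^2$. A minimal NumPy sketch, assuming the data are already on the copula scale; the function name `varsigma_hat` is hypothetical.

```python
import math
import numpy as np


def varsigma_hat(u, v, p: float) -> float:
    """Sample analogue of varsigma_C(p) from a copula sample (u_i, v_i)."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    lower = float(np.mean((u <= p) & (v <= p)))            # C_n(p, p)
    upper = float(np.mean((u > 1.0 - p) & (v > 1.0 - p)))  # C_hat_n(p, p)
    if lower == 0.0 and upper == 0.0:
        return 0.0  # extended convention log(0/0) = 0
    if upper == 0.0:
        return -math.inf
    if lower == 0.0:
        return math.inf
    return math.log(upper / lower)


# Toy sample: one point in the lower-left square, two in the upper-right square.
u = [0.05, 0.10, 0.95, 0.97]
v = [0.08, 0.20, 0.96, 0.99]
print(varsigma_hat(u, v, 0.1))  # log(2)
```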

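In Sections 5.1–5.3, we assume that $(U_1,V_1), \ldots, (U_n,V_n)$ is an iid sample from the copula $C$. For iid $\mathbb{R}^2$-valued random vectors $(X_1,Y_1), \ldots, (X_n,Y_n)$, if the margins of $X_i$ and $Y_i$ are known to be $F$ and $G$, respectively, then $\hat{\varsigma}_n(p)$ can be obtained by replacing $(U_i,V_i)$ by $(F(X_i), G(Y_i))$.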

The goal of this subsection is to investigate some properties of $\hat{\varsigma}_n$. To this end, we first show the following lemma. See Supplementary Material for the proof.

Lemma 1.

For we have the following:

where , , and .

This lemma implies that $\hat{\varsigma}_n(p)$ is a consistent estimator of $\varsigma_C(p)$. Applying this lemma, we obtain the following asymptotic result. The proof is given in Supplementary Material.

Theorem 4.

Define

Then, as , converges weakly to a centered Gaussian process with covariance function

| (8) |

5.2 Interval estimation based on

An asymptotic interval estimator of $\varsigma_C(p)$ can be obtained by applying the asymptotic results of the previous subsection. Theorem 4 implies that, for fixed $p$,

where and is defined as in equation (8). Since includes the copula which is usually not known in practice, we use an estimator of defined by

It follows from Lemma 1 that as $n \to \infty$. Then we have as $n \to \infty$. Hence a $100(1-\alpha)$% nonparametric asymptotic confidence interval for $\varsigma_C(p)$ is

| (9) |

where satisfies , where and .

Notice that the asymptotic confidence interval (9) is a pointwise one for a fixed value of $p$. If the interest of statistical analysis is to construct an asymptotic confidence band for $\varsigma_C(p)$ over a range of $p$, one can adopt the Bonferroni correction. This can be done by replacing by in equation (9) for , where . Therefore the asymptotic confidence band for $\varsigma_C(p)$ with the Bonferroni correction is

| (10) |

where and . Since and are step functions, it suffices to evaluate the bounds of confidence intervals only for .
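A sketch of the pointwise interval and its Bonferroni version. It uses a plug-in variance derived separately by the delta method and not copied from (8), so it should be checked against that display. For $p < 1/2$ the events $\{U \le p, V \le p\}$ and $\{U > 1-p, V > 1-p\}$ are disjoint, and the asymptotic variance of $\sqrt{n}\{\hat{\varsigma}_n(p) - \varsigma_C(p)\}$ then reduces to $1/C(p,p) + 1/\widehat{C}(p,p)$. The function name and the parameter `k` (number of grid points for the Bonferroni correction) are hypothetical.

```python
import math
import numpy as np
from scipy.stats import norm


def varsigma_ci(u, v, p: float, alpha: float = 0.1, k: int = 1):
    """Estimate and Wald-type interval for varsigma_C(p) from a copula sample.

    k > 1 applies a Bonferroni correction for k grid points (alpha / k per point).
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    n = u.size
    c_low = float(np.mean((u <= p) & (v <= p)))            # C_n(p, p)
    c_up = float(np.mean((u > 1.0 - p) & (v > 1.0 - p)))   # C_hat_n(p, p)
    if c_low == 0.0 or c_up == 0.0:
        raise ValueError("a tail square is empty; the interval is undefined at this p")
    est = math.log(c_up / c_low)
    # Delta method: the covariance of the two disjoint indicators is -C * C_hat,
    # which cancels the constant terms and leaves 1/C(p,p) + 1/C_hat(p,p).
    se = math.sqrt((1.0 / c_low + 1.0 / c_up) / n)
    z = norm.ppf(1.0 - alpha / (2.0 * k))
    return est, est - z * se, est + z * se


# Toy sample of size 1000: 50 points in the lower square, 20 in the upper square.
u = np.r_[np.full(50, 0.01), np.full(20, 0.99), np.full(930, 0.5)]
v = u.copy()
print(varsigma_ci(u, v, 0.1))        # pointwise 90% interval
print(varsigma_ci(u, v, 0.1, k=11))  # Bonferroni-adjusted for 11 grid points
```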

5.3 Hypothesis testing based on

Some hypothesis tests can be established based on $\hat{\varsigma}_n$. For a given value of $p$, one can test $H_0: \varsigma_C(p) = 0$ against $H_1: \varsigma_C(p) \ne 0$ by using the asymptotic confidence interval (9). Similarly, a one-sided test for the alternative hypothesis $\varsigma_C(p) > 0$ or $\varsigma_C(p) < 0$ can be derived by modifying the asymptotic confidence interval (9).

If the interest of the analysis is to evaluate $\varsigma_C(p)$ at multiple values of $p$, one can consider the test of $H_0: \varsigma_C(p_1) = \cdots = \varsigma_C(p_k) = 0$ against $H_1: \varsigma_C(p_j) \ne 0$ for some $j \in \{1, \ldots, k\}$. One example of such tests is based on the asymptotic confidence band with the Bonferroni correction (10). However, since the Bonferroni correction is known to be conservative, especially for dependent hypotheses, the test based on (10) is not powerful in general. Alternatively, the following result can be used to construct a test for multiple values of $p$. See Supplementary Material for the proof.

Theorem 5.

Let and . Suppose

where and . Assume that is invertible. Then

where $\chi^2_k$ denotes the chi-squared distribution with $k$ degrees of freedom.

Substituting into in the test statistic , the null hypothesis is rejected for a large value of . In order that becomes invertible, the values of need to be selected such that and/or hold for any .
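The statistic of Theorem 5 is a Wald-type quadratic form. The sketch below assumes a delta-method covariance derived separately for $p_i, p_j < 1/2$, namely $\Sigma_{ij} = C(m,m)/\{C(p_i,p_i)C(p_j,p_j)\} + \widehat{C}(m,m)/\{\widehat{C}(p_i,p_i)\widehat{C}(p_j,p_j)\}$ with $m = \min(p_i,p_j)$, and plugs in empirical counterparts. It should be compared with (8) and Theorem 5 rather than taken as the authors' exact statistic. The function name is hypothetical.

```python
import numpy as np
from scipy.stats import chi2


def wald_tail_symmetry(u, v, ps):
    """Wald-type test of H0: varsigma_C(p_j) = 0 for all j, from a copula sample."""
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    ps = np.asarray(ps, dtype=float)
    n, k = u.size, ps.size

    def c_low(p):  # C_n(p, p)
        return np.mean((u <= p) & (v <= p))

    def c_up(p):  # C_hat_n(p, p)
        return np.mean((u > 1.0 - p) & (v > 1.0 - p))

    low = np.array([c_low(p) for p in ps])
    up = np.array([c_up(p) for p in ps])
    if np.any(low == 0.0) or np.any(up == 0.0):
        raise ValueError("empty tail square at some p_j")
    est = np.log(up / low)

    # Plug-in delta-method covariance (assumed form, see the lead-in).
    sigma = np.empty((k, k))
    for i in range(k):
        for j in range(k):
            m = min(ps[i], ps[j])
            sigma[i, j] = c_low(m) / (low[i] * low[j]) + c_up(m) / (up[i] * up[j])

    stat = float(n * est @ np.linalg.solve(sigma, est))
    return stat, float(chi2.sf(stat, df=k))


rng = np.random.default_rng(0)
u0, v0 = rng.uniform(size=1000), rng.uniform(size=1000)
u_sym, v_sym = np.r_[u0, 1.0 - u0], np.r_[v0, 1.0 - v0]  # radially symmetric by construction
print(wald_tail_symmetry(u_sym, v_sym, [0.1, 0.2, 0.3]))
```

Adding the reflections $(1-U_i, 1-V_i)$ makes the corner counts equal, so the statistic is 0. A sample with strong lower-tail dependence, such as the Clayton copula with $\theta = 2$, yields a very small p-value.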

5.4 A sample analogue of $\varsigma_C$ based on a sample from a distribution on $\mathbb{R}^2$

The sample analogue of $\varsigma_C$ given in Definition 2 can be calculated under the assumption that the margins of the $\mathbb{R}^2$-valued random vector $(X,Y)$ are known. Here we discuss the case in which the margins are unknown and empirical distribution functions are used in their place.

Definition 3.

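Let $(X_1,Y_1), \ldots, (X_n,Y_n)$ be $\mathbb{R}^2$-valued random vectors. Then we define a sample analogue of $\varsigma_C(p)$ by

$$\tilde{\varsigma}_n(p) = \log\left\{ \frac{\sum_{i=1}^{n} \mathbf{1}\big(F_n(X_i) > 1-p,\ G_n(Y_i) > 1-p\big)}{\sum_{i=1}^{n} \mathbf{1}\big(F_n(X_i) \le p,\ G_n(Y_i) \le p\big)} \right\},$$

where

$$F_n(x) = \frac{1}{n+1} \sum_{j=1}^{n} \mathbf{1}(X_j \le x), \qquad G_n(y) = \frac{1}{n+1} \sum_{j=1}^{n} \mathbf{1}(Y_j \le y). \tag{11}$$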

Note that the denominator of the empirical distribution functions (11) is $n+1$ rather than $n$ in order to avoid positive bias of .
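A sketch of the rank-based analogue, assuming that it applies the corner counts of Definition 2 to the pseudo-observations $(F_n(X_i), G_n(Y_i))$ with the denominator $n+1$ of (11). At the sample points, $F_n(X_i)$ equals the rank of $X_i$ divided by $n+1$, with ties given the maximum rank to match $\mathbf{1}(X_j \le x)$. `scipy.stats.rankdata` is a real SciPy function; the other names are hypothetical.

```python
import math
import numpy as np
from scipy.stats import rankdata


def pseudo_observations(x) -> np.ndarray:
    """F_n(X_i) with denominator n + 1: rank of X_i (ties -> max rank) over n + 1."""
    x = np.asarray(x, dtype=float)
    return rankdata(x, method="max") / (x.size + 1.0)


def varsigma_tilde(x, y, p: float) -> float:
    """Rank-based sample analogue of varsigma_C(p) with unknown margins."""
    u, v = pseudo_observations(x), pseudo_observations(y)
    lower = float(np.mean((u <= p) & (v <= p)))
    upper = float(np.mean((u > 1.0 - p) & (v > 1.0 - p)))
    if lower == 0.0 and upper == 0.0:
        return 0.0
    if upper == 0.0:
        return -math.inf
    if lower == 0.0:
        return math.inf
    return math.log(upper / lower)


rng = np.random.default_rng(0)
z = rng.standard_normal((2000, 2))
x, y = z[:, 0], 0.5 * z[:, 0] + math.sqrt(0.75) * z[:, 1]  # Gaussian dependence
print(varsigma_tilde(x, y, 0.1))
# Strictly increasing transforms of the margins leave the ranks, hence the estimate, unchanged.
print(varsigma_tilde(np.exp(x), y ** 3, 0.1))
```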

The authors have not yet obtained the asymptotic distribution of $\tilde{\varsigma}_n$. However, the following results are available regarding and . The proof follows straightforwardly from Fermanian et al. (2004), Tsukahara (2005) and Segers (2012) and is therefore omitted.

Proposition 3.

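Let $(X_1,Y_1), \ldots, (X_n,Y_n)$ be iid random vectors with the copula $C$ and continuous margins. Assume that $C$ is differentiable with continuous $j$-th partial derivatives $\dot{C}_j = \partial C / \partial u_j$, $j = 1, 2$. Then, as $n \to \infty$,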

where

is a centered Gaussian process with covariance function

and is defined as in Lemma 1.

Confidence intervals for $\varsigma_C(p)$ can be constructed numerically using the bootstrap method. Hypothesis tests can also be established based on the bootstrap confidence intervals. It should be noted that, in order to calculate $\tilde{\varsigma}_n$ from bootstrap samples, $F_n$ and $G_n$ in (11) should be recalculated from each bootstrap sample. If $F_n$ and $G_n$ are calculated from the original data, the bootstrap confidence intervals become similar to the asymptotic confidence intervals (9) for large $n$.
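The bootstrap recipe above, with the empirical margins recomputed inside every resample, can be sketched as follows. The basic (reverse-percentile) interval $[2\tilde{\varsigma}_n - q_{1-\alpha/2},\ 2\tilde{\varsigma}_n - q_{\alpha/2}]$ is used, where $q$ denotes quantiles of the bootstrap replicates. The number of resamples, the seed and the function names are illustrative assumptions.

```python
import math
import numpy as np
from scipy.stats import rankdata


def varsigma_tilde(x, y, p: float) -> float:
    """Rank-based analogue of varsigma_C(p); pseudo-observations use ranks / (n + 1)."""
    n = len(x)
    u = rankdata(x, method="max") / (n + 1.0)
    v = rankdata(y, method="max") / (n + 1.0)
    lower = float(np.mean((u <= p) & (v <= p)))
    upper = float(np.mean((u > 1.0 - p) & (v > 1.0 - p)))
    if lower == 0.0 and upper == 0.0:
        return 0.0
    if upper == 0.0:
        return -math.inf
    if lower == 0.0:
        return math.inf
    return math.log(upper / lower)


def basic_bootstrap_ci(x, y, p: float, alpha: float = 0.1, n_boot: int = 1000, seed=None):
    """Basic bootstrap interval; ranks are recomputed inside every resample."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    rng = np.random.default_rng(seed)
    est = varsigma_tilde(x, y, p)
    reps = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, x.size, size=x.size)   # resample (X_i, Y_i) pairs
        reps[b] = varsigma_tilde(x[idx], y[idx], p)  # F_n, G_n from the resample
    if not np.all(np.isfinite(reps)):
        raise ValueError("a resample has an empty tail square; use a larger p or n")
    q_lo, q_hi = np.quantile(reps, [alpha / 2.0, 1.0 - alpha / 2.0])
    return est, 2.0 * est - q_hi, 2.0 * est - q_lo


rng = np.random.default_rng(3)
z = rng.standard_normal((1000, 2))
x, y = z[:, 0], 0.5 * z[:, 0] + math.sqrt(0.75) * z[:, 1]
print(basic_bootstrap_ci(x, y, 0.2, n_boot=200, seed=1))
```

Raising an error on an empty tail square is a deliberate choice in this sketch: silently dropping such resamples would bias the interval.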

5.5 Simulation study

In order to compare the performance of the two proposed sample analogues of $\varsigma_C$ for a large sample size, we consider the following cumulative distribution function

$$H(x,y) = C\big(F(x), F(y)\big), \tag{12}$$

where $F$ is the cumulative distribution function of the standard Cauchy distribution, i.e., $F(x) = 1/2 + \arctan(x)/\pi$, and $C$ denotes the Clayton copula (5).
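A data-generating sketch for (12). Clayton pairs are drawn by conditional inversion, $V = \{U^{-\theta}(W^{-\theta/(1+\theta)} - 1) + 1\}^{-1/\theta}$ with $U, W$ independent uniforms and $\theta > 0$. They are then mapped to standard Cauchy margins with $F^{-1}(q) = \tan\{\pi(q - 1/2)\}$. The value $\theta = 2$, the sample size and the seed are arbitrary choices, not those used for Figure 3, and the function names are hypothetical.

```python
import math
import numpy as np
from scipy.stats import rankdata


def sample_model_12(n: int, theta: float, rng: np.random.Generator):
    """Draw (X, Y) from (12): Clayton copula (theta > 0) with standard Cauchy margins."""
    u = rng.uniform(size=n)
    w = rng.uniform(size=n)
    v = (u ** (-theta) * (w ** (-theta / (1.0 + theta)) - 1.0) + 1.0) ** (-1.0 / theta)
    return np.tan(np.pi * (u - 0.5)), np.tan(np.pi * (v - 0.5))


def cauchy_cdf(x):
    return 0.5 + np.arctan(x) / np.pi


def log_tail_ratio(u, v, p: float) -> float:
    """log{C_hat_n(p,p) / C_n(p,p)} from copula-scale data (assumes both counts > 0)."""
    lower = np.mean((u <= p) & (v <= p))
    upper = np.mean((u > 1.0 - p) & (v > 1.0 - p))
    return math.log(upper / lower)


def clayton_true(p: float, theta: float) -> float:
    """Exact varsigma_C(p) for the Clayton copula with theta > 0."""
    diag = lambda t: (2.0 * t ** (-theta) - 1.0) ** (-1.0 / theta)
    return math.log((2.0 * p - 1.0 + diag(1.0 - p)) / diag(p))


rng = np.random.default_rng(2024)
theta, n, p = 2.0, 20000, 0.2
x, y = sample_model_12(n, theta, rng)
hat = log_tail_ratio(cauchy_cdf(x), cauchy_cdf(y), p)             # known margins
tilde = log_tail_ratio(rankdata(x, method="max") / (n + 1.0),
                       rankdata(y, method="max") / (n + 1.0), p)  # empirical margins
print(clayton_true(p, theta), hat, tilde)
```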

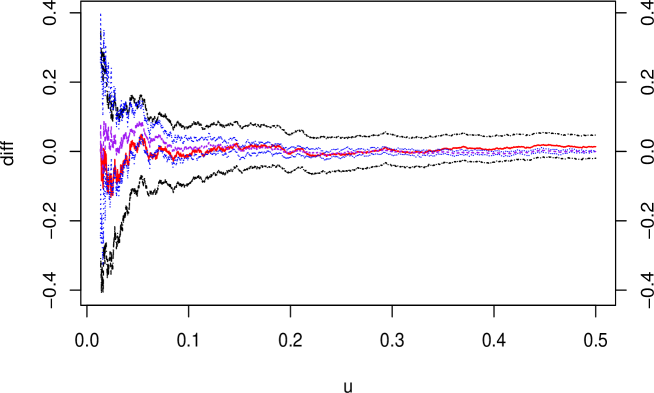

Figure 3 plots the values of $\hat{\varsigma}_n(p)$, $\tilde{\varsigma}_n(p)$ and the bounds of their 90% confidence intervals for a sample of size from the distribution (12). See also Figure 1(a) for the plot of $\varsigma_C(p)$. For the calculations of $\hat{\varsigma}_n$ and its confidence intervals (9), the sample is transformed into the copula sample via $(U_i,V_i) = (F(X_i), F(Y_i))$, where $F$ is the true standard Cauchy margin. The confidence intervals of $\tilde{\varsigma}_n$ are calculated using the basic bootstrap method based on resamples of size ; see the last paragraph of Section 5.4 for details. The minimum value of $p$ in the plot is defined as in order that the asymptotic theory is applicable.

The figure suggests that, when $p$ is around 0.2 or greater, the performance of both $\hat{\varsigma}_n$ and $\tilde{\varsigma}_n$ seems satisfactory. For , the difference between the sample analogues and the true value increases with in general. It appears that the 90% confidence intervals of both $\hat{\varsigma}_n$ and $\tilde{\varsigma}_n$ are generally narrow if $p$ is around 0.2 or more. For , the smaller the value of $p$, the wider the confidence intervals of both $\hat{\varsigma}_n$ and $\tilde{\varsigma}_n$. Interestingly, the confidence intervals of are narrower than those of in most of the plotted range of $p$. This tendency is particularly obvious in the range , where the confidence intervals of are much narrower than those of .

6 Comparison with other measures

6.1 Comparison with existing measures

In this section we compare our measure with other copula-based measures of tail asymmetry. Rosco and Joe (2013) proposed three measures of tail asymmetry. One of their measures, based on the distance between a copula and its survival copula, is defined by

$$\sup_{(u,v) \in [0,1]^2} \big| C(u,v) - \widehat{C}(u,v) \big|. \tag{13}$$

This measure has also been proposed by Dehgani et al. (2013) as a limiting case of a measure of radial asymmetry for bivariate random variables.
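The supremum in (13) is taken over the whole unit square and in general has to be approximated. A simple sketch evaluates $|C - \widehat{C}|$ on a regular interior grid; the boundary can be skipped because $C$ and $\widehat{C}$ agree there. The grid size is an arbitrary choice, and the result is a lower bound on (13) that improves as the grid is refined. The helper names are hypothetical.

```python
import numpy as np


def rosco_joe_grid(C, m: int = 201) -> float:
    """Grid approximation (from below) of sup |C(u,v) - C_hat(u,v)| in (13)."""
    g = np.linspace(0.0, 1.0, m)[1:-1]  # interior points only
    uu, vv = np.meshgrid(g, g, indexing="ij")
    c_hat = uu + vv - 1.0 + C(1.0 - uu, 1.0 - vv)
    return float(np.max(np.abs(C(uu, vv) - c_hat)))


def clayton_vec(theta: float):
    """Vectorised Clayton copula (5) for theta > 0 on the open unit square."""
    return lambda u, v: (u ** (-theta) + v ** (-theta) - 1.0) ** (-1.0 / theta)


print(rosco_joe_grid(lambda u, v: u * v))  # independence: radially symmetric
print(rosco_joe_grid(clayton_vec(2.0)))    # Clayton: positive
```

Unlike (1), which needs only two diagonal evaluations per $p$, the global measure requires a search over the square, which is one concrete sense in which the local measure is cheaper to compute.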

Our measure (1) has some similarities to and differences from the measure (13). One similarity is that both are functions of a copula and its survival copula. Also, both measures satisfy Properties (ii), (v) and (vi) of Proposition 2.

However, there are considerable differences between the two measures (1) and (13). First, the domains of the copula that the two measures evaluate are different. The measure (13) is a global measure in the sense that the whole domain of the copula is taken into account to evaluate the value of the measure, while our measure (1) is a local measure which focuses on square subdomains of the copula. By choosing the value of the index $p$, our measure (1) enables us to choose the subdomain of a copula in which analysts are interested. However, the prescription for selecting the value of $p$ is not always straightforward, and the choice of the index could influence the results of the analysis. The index-free measure (13) does not have such a problem. However, the supremum in this measure is not necessarily attained in the tails of the distribution, and the value of the measure might not reflect the tail probabilities if or . Also, because of its locality, computations associated with our measure (1) are very fast.

Also there are differences between the two measures (1) and (13) in terms of properties. Our measure (1) satisfies all the properties of (i)–(vi) of Proposition 2 which include four (out of five) axioms of Rosco and Joe (2013). However this measure does not satisfy one of the axioms, i.e., axiom (i), of Rosco and Joe (2013) and therefore the value of the measure could be unbounded for special cases. The measure (13) also satisfies four axioms of Rosco and Joe (2013) including the axiom (i). On the other hand, the measure (13) does not satisfy their axiom (iii) which is equivalent to Property (iv) of Proposition 2, implying that the measure (13) does not distinguish which tail probability is greater than the other one.

The other two measures of Rosco and Joe (2013) are derived through different approaches. For a bivariate random vector $(U,V)$ from a copula, the two measures are based on the moments or the quantile function of the univariate random variable $U + V - 1$. Therefore these measures are essentially different from ours, which is based on the joint distribution of the bivariate random vector $(U,V)$.

Another copula-based measure for tail asymmetry has been proposed by Krupskii (2017). It is defined by

| (14) |

where , is a weighting function,

If , the measure (14) reduces to the measure discussed by Nikoloulopoulos et al. (2012) and Dobrić et al. (2013). Properties of each term of the measure (14) have been investigated by Krupskii and Joe (2015).

The measure (14) is related to ours in the sense that the values of their measures are calculated from the subdomain of a copula indexed by the truncation parameter. However the measure (14) is based on Spearman’s rhos or correlation coefficients of a truncated copula, and therefore the interpretation of the values of the measure (14) is essentially different from ours. A nice property of the measure (14) is that the weights of tails can be controlled through the weight function . Therefore this measure can be a useful measure of tail asymmetry if the weight function is appropriately defined.

6.2 An alternative measure based on tail probabilities

The proposed measure is a function of the lower and upper tail probabilities. Here we briefly consider another measure of comparison between the two tail probabilities.

Definition 4.

Let $(X,Y)$, $F$ and $G$ be defined as in Definition 1. Then we define a measure of comparison between the lower-left and upper-right tail probabilities of $(X,Y)$ by

where the index is given by .

If $(X,Y)$ has the copula $C$, the expression of this measure can be simplified to

Hence this measure is based on the difference between the lower and upper tail probabilities of the copula as well as the value of which could be decided based on the lower and upper tail orders.

This measure is another simple measure for comparing the lower-left and upper-right tail probabilities. Properties (ii)–(vi) of Proposition 2 hold for this measure. As for the range of the measure related to Property (i), it can be seen if and otherwise.

One big difference between and is that, when considering the values of the measures for , and possibly lead to different conclusions. As an example of this, consider the Clayton copula (5) with , which is said to have asymmetric tails (see Figure S1 of Supplementary Material for a plot of random variates from Clayton copula with ). For this model, the measure for takes large positive values because . However, the values of with for are close to zero since . This fact about is reasonable in one sense, but one might argue that does not capture the asymmetry of tail probabilities appropriately. Although this problem can be solved by selecting a different value of , the selection of , which influences the conclusion of analysis, appears difficult in practical situations where is unknown.

7 Example

As an application of the proposed measure, we consider a dataset of daily returns of two stock indices. The dataset is taken from historical data in Yahoo Finance, available at https://finance.yahoo.com/quote/%5EGSPC/history/ and https://finance.yahoo.com/quote/%5EN225/history/. We consider stock daily returns of S&P500 and Nikkei225 observed from the 1st of April, 2008 until the 31st of March, 2019, inclusive.

We fit the autoregressive-generalized autoregressive conditional heteroscedastic model AR(1)-GARCH(1,1) to each of the stock daily returns using the function ugarchfit in the R package ‘rugarch’ (R Core Team, 2020; Ghalanos, 2020). The Student $t$-distribution is used as the conditional density for the innovations. We consider the residuals $(x_t, y_t)$ of the fitted AR(1)-GARCH(1,1) models, where $x_t$ and $y_t$ are the residuals of S&P500 and Nikkei225, respectively. The residuals represent unexpected changes in daily returns which are not explained by the model; if the joint plunging probability is higher than the joint soaring probability, then the proposed measure is expected to be negative.

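We discuss $\hat{\varsigma}_n$ defined in Definition 2 and $\tilde{\varsigma}_n$ defined in Definition 3. In order to obtain the copula sample for $\hat{\varsigma}_n$, we transform the residuals via $(u_t, v_t) = (\hat{F}(x_t), \hat{G}(y_t))$, where $\hat{F}$ and $\hat{G}$ are the cumulative distribution functions of the Student $t$-distributions estimated by the maximum likelihood method. We assume, although this is not strictly justified mathematically, that $\hat{F}$ and $\hat{G}$ are known.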
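The transformation to the copula scale with fitted Student $t$ margins can be sketched with SciPy. This covers only the last step. It assumes the residuals of the AR(1)-GARCH(1,1) fits are already available (the analysis above obtains them with rugarch in R). Note that `scipy.stats.t.fit` fits a location-scale $t$ by maximum likelihood, whose parameterisation may differ from the standardized $t$ used inside rugarch. The data below are simulated placeholders, not the S&P500 or Nikkei225 residuals, and the function name is hypothetical.

```python
import numpy as np
from scipy import stats


def t_probability_integral_transform(resid) -> np.ndarray:
    """Map residuals to (0, 1) through a Student t CDF fitted by maximum likelihood."""
    resid = np.asarray(resid, dtype=float)
    df, loc, scale = stats.t.fit(resid)
    return stats.t.cdf(resid, df, loc=loc, scale=scale)


# Placeholder residuals (hypothetical), standing in for x_t and y_t.
rng = np.random.default_rng(7)
x_res = rng.standard_t(5, size=2000)
y_res = 0.4 * x_res + rng.standard_t(5, size=2000)
u_t = t_probability_integral_transform(x_res)
v_t = t_probability_integral_transform(y_res)
print(u_t[:5], v_t[:5])
```

The resulting pairs $(u_t, v_t)$ can then be passed to the corner-count estimator of Definition 2.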

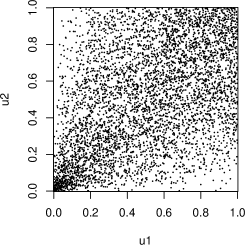

Figure 4: panels (a) to (e).

Figure 4(a) plots the sample obtained by transforming the residuals via the fitted Student $t$ cumulative distribution functions. The values of $\hat{\varsigma}_n(p)$ calculated from the sample and their 90% asymptotic confidence intervals (9) are displayed in Figure 4(d). In Figure 4(d), the minimum value of $p$ is defined as in order that the asymptotic theory is applicable.

For the calculation of $\tilde{\varsigma}_n$, we use the empirical distribution functions (11) to transform the residuals into the copula sample. The transformed sample is displayed in Figure 4(b). The values of $\tilde{\varsigma}_n(p)$ calculated from the sample are plotted in Figure 4(e). The same frame also plots the 90% confidence intervals based on resamples of size using the basic bootstrap method. The minimum value of $p$ in the plot is .

Figure 4(a) and (b) suggest that there are more observations in the lower-left tail than in the upper-right one for . However, it is not immediately clear from these data plots whether there is a significant difference between the two tail probabilities for as well as for general $p$. To address this question, Figure 4(d) and (e), which show the values of $\hat{\varsigma}_n(p)$ and $\tilde{\varsigma}_n(p)$, respectively, are helpful. Indeed, Figure 4(d) and (e) suggest that $\hat{\varsigma}_n(p)$ and $\tilde{\varsigma}_n(p)$ are negative in most areas of the domain of $p$, suggesting that the lower tail probability is greater than the upper one for most values of $p$. In particular, the two frames imply the general tendency that, for , and decrease with . The asymptotic and bootstrap 90% confidence intervals do not include 0 for in Figure 4(d) and for in Figure 4(e). Hence, when considering the tests of $H_0: \varsigma_C(p) = 0$ against $H_1: \varsigma_C(p) \ne 0$ for a fixed value of $p$ based on the two 90% confidence intervals, both tests reject the null hypothesis at a significance level of 0.1. This implies that the lower tail probability is significantly greater than the upper one for . On the other hand, when $p$ is greater than 0.21, both 90% confidence intervals include 0, and therefore neither test rejects the null hypothesis at the nominal size of 0.1. The tests based on the two 90% confidence intervals disagree in some areas of $p$ in .

As seen in the discussion above, $\hat{\varsigma}_n$ and $\tilde{\varsigma}_n$ show similar tendencies in general. Indeed, the two data plots given in Figure 4(a) and (b) look similar at first glance. However, Figure 4(d) and (e) reveal that there are some differences between $\hat{\varsigma}_n$ and $\tilde{\varsigma}_n$. For example, the values of are generally smaller than those of for . Also, the bootstrap confidence intervals of $\tilde{\varsigma}_n$ are narrower than the asymptotic confidence intervals of $\hat{\varsigma}_n$ for large .

In addition to the tests based on the pointwise confidence intervals in Figure 4(d) and (e), we carry out a test with nominal size 0.1 based on the test statistic in Theorem 5. We test against for . The test statistic is with . Therefore we reject the null hypothesis that the lower and upper tails are symmetric at the 11 equally spaced points of in .
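A test that combines several levels at once can be sketched as a quadratic form. The sketch assumes the log-ratio form of the estimator. It also assumes the covariance function σ(u, v) = 1/p_U(max(u, v)) + 1/p_L(max(u, v)), which the delta method yields for a copula sample under that form. The statistic T = n a′Σ̂⁻¹a below is compared with a χ² quantile with m degrees of freedom, where m is the number of levels. For m = 11 and size 0.1, the critical value is about 17.275. Whether this coincides with the statistic of Theorem 5 is an assumption, and the helper names are hypothetical.

```python
import math


def tail_counts(u1, u2, u):
    """Numbers of points in the joint lower and joint upper tails at level u."""
    lower = sum(a <= u and b <= u for a, b in zip(u1, u2))
    upper = sum(a > 1 - u and b > 1 - u for a, b in zip(u1, u2))
    return lower, upper


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting (small dense systems)."""
    m = len(b)
    aug = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, m):
            f = aug[r][col] / aug[col][col]
            for c in range(col, m + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        acc = sum(aug[r][c] * x[c] for c in range(r + 1, m))
        x[r] = (aug[r][m] - acc) / aug[r][r]
    return x


def wald_statistic(u1, u2, grid):
    """Hypothetical quadratic-form statistic T = n * a' S^{-1} a over levels in (0, 1/2]."""
    grid = sorted(grid)
    n = len(u1)
    counts = [tail_counts(u1, u2, u) for u in grid]
    if any(lo == 0 or up == 0 for lo, up in counts):
        raise ValueError("every level needs points in both tails")
    a = [math.log(up / lo) for lo, up in counts]
    # Assumed covariance sigma(u, v) = 1/p_U(max(u, v)) + 1/p_L(max(u, v)), p_hat = count / n.
    c = [n / up + n / lo for lo, up in counts]
    m = len(grid)
    s = [[c[max(i, j)] for j in range(m)] for i in range(m)]  # grid is sorted ascending
    w = solve(s, a)
    return n * sum(ai * wi for ai, wi in zip(a, w))


CHI2_DF11_Q090 = 17.275  # 0.90 quantile of the chi-square distribution with 11 degrees of freedom
```

If two grid levels produce identical tail counts, the plug-in matrix becomes singular. In that case a coarser grid is needed.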

We also apply other measures of tail asymmetry to the copula sample displayed in Figure 4(a). The measure (13) of Rosco and Joe (2013) is calculated as . Another measure we consider here is a modified version of Krupskii’s (2017) measure (14), namely, . This modification makes the sign of the measure interpretable in the same way as that of our measure. Figure 4(c) displays the estimates of with respect to for the three specific functions of . The three curves of the modified measure agree that there is stronger correlation in the lower tail than in the upper one for . This is somewhat similar to the result based on our measure. For , the correlation coefficient in the lower tail is greater than that in the upper tail for any .

Finally, we summarize the results of the analysis of the daily stock return data. The results based on the proposed measures suggest that the lower tail probability is greater than the upper one for most values of , where . In particular, there is a significant difference between the lower and upper tail probabilities for . From an economic perspective, this result implies that the probability of a joint plunge is higher than that of a joint surge for the threshold . Therefore a copula with asymmetric tails is recommended for modeling the residuals of the daily return data. The three cases of the measure of Krupskii (2017) agree that, for , there is stronger correlation in the lower tail than in the upper one.

8 Discussion

In this paper we have proposed a copula-based measure of asymmetry between the lower and upper tail probabilities. We have shown that the proposed measure has several properties that are desirable for a measure of tail asymmetry. Sample analogues of the proposed measure have been presented, and statistical inference based on them, including point estimation, interval estimation and hypothesis testing, has been shown to be simple. The practical importance of the proposed measure has been demonstrated through a statistical analysis of stock return data.

This paper discusses a measure for bivariate data. However, it is straightforward to extend the proposed bivariate measure to a multivariate one in a manner similar to Embrechts et al. (2016) and Hofert and Koike (2019). Let be an -valued random vector with continuous univariate margins. Then an extended measure of tail asymmetry for is defined by

where is the proposed measure (1) of the random vector . The properties of each element of the measure follow directly from the results of this paper. A possible topic for future work is to investigate the properties of this extended measure as a matrix and to evaluate it for multivariate copulas, such as vine copulas (Aas et al., 2009; Czado, 2010).
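For a d-dimensional sample, the matrix can be estimated entry by entry. The sketch assumes that each off-diagonal entry is the rank-based log-ratio estimate for the pair (X_i, X_j) at a common level u. It also sets the diagonal to 0, the value of the log-ratio for a comonotone pair. Both choices and the function names are illustrative assumptions. The joint tail events do not change when the two coordinates are swapped, so the matrix is symmetric.

```python
import bisect
import math


def ecdf_transform(xs):
    """Rescaled empirical distribution function #{j : x_j <= x_i} / (n + 1) (assumed form)."""
    s = sorted(xs)
    n1 = len(xs) + 1
    return [bisect.bisect_right(s, x) / n1 for x in xs]


def pairwise_asymmetry(columns, u):
    """Hypothetical d x d matrix of pairwise log-ratio tail-asymmetry estimates at level u."""
    us = [ecdf_transform(col) for col in columns]
    d = len(columns)
    mat = [[0.0] * d for _ in range(d)]  # diagonal left at 0, the value for a comonotone pair
    for i in range(d):
        for j in range(i + 1, d):
            pairs = list(zip(us[i], us[j]))
            lower = sum(a <= u and b <= u for a, b in pairs)
            upper = sum(a > 1 - u and b > 1 - u for a, b in pairs)
            val = math.log(upper / lower) if lower and upper else float("nan")
            mat[i][j] = mat[j][i] = val  # swapping X_i and X_j leaves both tail events unchanged
    return mat
```

Only d(d − 1)/2 bivariate estimates are needed, so the cost grows quadratically in the dimension and linearly in the sample size.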

Supplementary material

Acknowledgements

The authors are grateful to Hideatsu Tsukahara for his valuable comments on the work. Kato’s research was supported by JSPS KAKENHI Grant Numbers JP17K05379 and JP20K03759.

References

- Aas K, Czado C, Frigessi A, Bakken H (2009) Pair-copula constructions of multiple dependence. Insur Math Econ 44(2):182–198

- Czado C (2010) Pair-copula constructions of multivariate copulas. In: Copula Theory and its Applications. Springer, Dordrecht, pp 93–109

- Dehgani A, Dolati A, Úbeda-Flores M (2013) Measures of radial asymmetry for bivariate random vectors. Stat Pap 54:271–286

- Dobrić J, Frahm G, Schmid F (2013) Dependence of stock returns in bull and bear markets. Dependence Model 1:94–110

- Donnelly C, Embrechts P (2010) The devil is in the tails: actuarial mathematics and the subprime mortgage crisis. ASTIN Bulletin 40(1):1–33

- Embrechts P, Hofert M, Wang R (2016) Bernoulli and tail-dependence compatibility. Ann Appl Probab 26(3):1636–1658

- Fermanian JD, Radulovic D, Wegkamp M (2004) Weak convergence of empirical copula processes. Bernoulli 10(5):847–860

- Genest C, Nešlehová JG (2014) On tests of radial symmetry for bivariate copulas. Stat Pap 55:1107–1119

- Ghalanos A (2020) rugarch: univariate GARCH models. R package version 1.4-2

- Hofert M, Koike T (2019) Compatibility and attainability of matrices of correlation-based measures of concordance. ASTIN Bulletin 49:885–918

- Joe H (1997) Multivariate models and dependence concepts. Chapman & Hall, London

- Joe H (2006) Discussion of “copulas: tales and facts” by Thomas Mikosch. Extremes 9(1):37–41

- Joe H (2014) Dependence modeling with copulas. Chapman & Hall/CRC, Boca Raton, FL

- Joe H, Hu T (1996) Multivariate distributions from mixtures of max-infinitely divisible distributions. J Multivar Anal 57:240–265

- Krupskii P (2017) Copula-based measures of reflection and permutation asymmetry and statistical tests. Stat Pap 58:1165–1187

- Krupskii P, Joe H (2015) Tail-weighted measures of dependence. J Appl Stat 42:614–629

- Lee D, Joe H, Krupskii P (2018) Tail-weighted dependence measures with limit being the tail dependence coefficient. J Nonparametr Stat 30:262–290

- McNeil AJ, Frey R, Embrechts P (2015) Quantitative risk management: concepts, techniques, and tools (revised ed.). Princeton University Press, Princeton, NJ

- Nelsen RB (2006) An introduction to copulas (2nd ed.). Springer, New York

- Nikoloulopoulos AK, Joe H, Li H (2012) Vine copulas with asymmetric tail dependence and applications to financial return data. Comput Stat Data Anal 56:3659–3673

- R Core Team (2020) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

- Rosco JF, Joe H (2013) Measures of tail asymmetry for bivariate copulas. Stat Pap 54:709–726

- Segers J (2012) Asymptotics of empirical copula processes under non-restrictive smoothness assumptions. Bernoulli 18(3):764–782

- Tsukahara H (2005) Semiparametric estimation in copula models. Can J Stat 33:357–375

- Yoshiba T (2018) Maximum likelihood estimation of skew-t copulas with its applications to stock returns. J Stat Comput Simul 88(13):2489–2506

Supplementary Material for “Copula-based measures of asymmetry between the lower and upper tail probabilities”

Shogo Kato (∗, a), Toshinao Yoshiba (b, c) and Shinto Eguchi (a)

a Institute of Statistical Mathematics

b Tokyo Metropolitan University

c Bank of Japan

August 4, 2020

The Supplementary Material is organized as follows. Section S1 presents the proofs of Lemma 1 and Theorems 1–5 of the article. Section S2 displays plots of random variates from the Clayton, Ali-Mikhail-Haq and BB7 copulas discussed in Sections 4.2 and 6.2 of the article.

S1 Proofs

S1.1 Proof of Theorem 1

S1.2 Proof of Theorem 2

Proof.

It follows from the assumption that there exists a slowly varying function such that as . Similarly, there exists a slowly varying function such that as . Therefore

The last equality holds because is slowly varying. If and either or , then

∎

S1.3 Proof of Theorem 3

Proof.

Proposition 1 implies that can be expressed as

Since , l’Hôpital’s rule is applicable to the last expression of the equation above. Hence we have

as required. ∎
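As a concrete instance of a boundary limit of this 0/0 type, assume the log-ratio form of the measure. Consider the tail dependence coefficients λ_L = lim C(u, u)/u and λ_U = lim Ĉ(u, u)/u as u → 0, where Ĉ denotes the survival copula. When both are positive, the ratio of the two vanishing tail probabilities tends to λ_U/λ_L. The sketch checks this numerically for the BB7 copula of Joe (1997), which has λ_L = 2^(−1/δ) and λ_U = 2 − 2^(1/θ). It illustrates the type of limit only and does not restate Theorem 3.

```python
import math


def bb7(u, v, theta, delta):
    """BB7 (Joe-Clayton) copula C(u, v), theta >= 1, delta > 0 (Joe, 1997)."""
    def g(t):
        return (1 - (1 - t) ** theta) ** (-delta)

    inner = (g(u) + g(v) - 1) ** (-1 / delta)
    return 1 - (1 - inner) ** (1 / theta)


def alpha_pop(u, theta, delta):
    """Assumed measure: log of joint upper- over joint lower-tail probability at level u."""
    lower = bb7(u, u, theta, delta)
    upper = 2 * u - 1 + bb7(1 - u, 1 - u, theta, delta)  # survival copula on the diagonal
    return math.log(upper / lower)


theta, delta = 2.0, 1.5
lam_lower = 2 ** (-1 / delta)     # lower tail dependence coefficient of BB7
lam_upper = 2 - 2 ** (1 / theta)  # upper tail dependence coefficient of BB7
for u in (1e-1, 1e-2, 1e-3, 1e-4):
    print(u, alpha_pop(u, theta, delta))
print("limit", math.log(lam_upper / lam_lower))
```

For very small u, the upper-tail probability is obtained by cancelling two numbers close to 1, so u should not be pushed much below about 1e-5 in double precision.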

S1.4 Proof of Lemma 1

Proof.

It is straightforward to see that and can be calculated as

Noting that , the other expectation and variance, namely, and , can be calculated in a similar manner.

Consider

The first term of the left-hand side of the equation above is

Therefore we have

Similarly, can be calculated. The other covariance can also be obtained via a similar approach, but notice that

The second equality holds because . Thus

∎
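Moments of this kind can be sanity-checked by simulation. The sketch assumes that the relevant quantities are the joint lower-tail indicator 1{U1 ≤ u, U2 ≤ u} and the joint upper-tail indicator 1{U1 > 1 − u, U2 > 1 − u}. It uses the independence copula, for which both tail probabilities equal u². For u ≤ 1/2 the two events are disjoint, so their product is always zero and their covariance is −p_L p_U = −u⁴. The function name is hypothetical.

```python
import random


def indicator_moments(n, u, seed=0):
    """Monte Carlo estimates of p_L, p_U and Cov(1_L, 1_U) under the independence copula."""
    rng = random.Random(seed)
    n_low = n_up = n_both = 0
    for _ in range(n):
        a, b = rng.random(), rng.random()  # independent uniforms: a draw from the independence copula
        in_low = a <= u and b <= u
        in_up = a > 1 - u and b > 1 - u
        n_low += in_low
        n_up += in_up
        n_both += in_low and in_up  # stays 0 for u <= 1/2: the two events are disjoint
    p_low, p_up = n_low / n, n_up / n
    return p_low, p_up, n_both / n - p_low * p_up


if __name__ == "__main__":
    p_low, p_up, cov = indicator_moments(100_000, 0.3)
    print(p_low, p_up, cov, -(0.3 ** 4))
```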

S1.5 Proof of Theorem 4

Proof.

Without loss of generality, assume . Let

Then it follows from Lemma 1 and the central limit theorem that

Define

Applying the delta method, we have

where

The asymptotic variance can be calculated as

[Display equations (S3), (S6) and (S9)]

The case can be calculated in the same manner. Then, for any , it follows that, as , converges weakly to the two-dimensional Gaussian distribution with mean 0 and the covariance matrix (S9). Weak convergence of to an -dimensional centered Gaussian distribution for can be shown in a similar manner. Therefore converges weakly to a centered Gaussian process with covariance function as . ∎
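As a worked instance of the delta-method step, suppose (our assumption) that the estimator is log{p̂_U(u)/p̂_L(u)}. Here p̂_L(u) and p̂_U(u) are sample means of the indicators I_L(u) = 1{U1 ≤ u, U2 ≤ u} and I_U(u) = 1{U1 > 1 − u, U2 > 1 − u}, and we take u ≤ v ≤ 1/2. Under these conditions the lower events are nested and the upper events are nested. In addition, every lower event is disjoint from every upper event. The covariances and the resulting covariance function are then:

```latex
\begin{aligned}
&\nabla \log\frac{p_U(u)}{p_L(u)} = \Bigl(\frac{1}{p_U(u)},\, -\frac{1}{p_L(u)}\Bigr), \\
&\operatorname{Cov}\{I_U(u), I_U(v)\} = p_U(u)\{1 - p_U(v)\}, \qquad
 \operatorname{Cov}\{I_L(u), I_L(v)\} = p_L(u)\{1 - p_L(v)\}, \\
&\operatorname{Cov}\{I_U(u), I_L(v)\} = -p_U(u)\,p_L(v), \qquad
 \operatorname{Cov}\{I_L(u), I_U(v)\} = -p_L(u)\,p_U(v), \\
&\sigma(u, v) = \frac{1 - p_U(v)}{p_U(v)} + 1 + 1 + \frac{1 - p_L(v)}{p_L(v)}
 = \frac{1}{p_U(u \vee v)} + \frac{1}{p_L(u \vee v)}.
\end{aligned}
```

Setting u = v gives the pointwise variance 1/p_U(u) + 1/p_L(u). This sketch should be compared with (S9) rather than read as a restatement of it.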

S1.6 Proof of Theorem 5

Proof.

Theorem 4 implies that converges weakly to an -dimensional normal distribution as tends to infinity, where

, and is defined as in Theorem 4. Then we have as . Since and are consistent estimators of and , respectively, it holds that, for any , converges in probability to as . It then follows from Slutsky’s theorem that as . ∎