Cooperative Sentiment Agents for Multimodal Sentiment Analysis

Abstract

In this paper, we propose a new Multimodal Representation Learning (MRL) method for Multimodal Sentiment Analysis (MSA), which facilitates the adaptive interaction between modalities through Cooperative Sentiment Agents, named Co-SA. Co-SA comprises two critical components: the Sentiment Agents Establishment (SAE) phase and the Sentiment Agents Cooperation (SAC) phase. During the SAE phase, each sentiment agent deals with an unimodal signal and highlights explicit dynamic sentiment variations within the modality via the Modality-Sentiment Disentanglement (MSD) and Deep Phase Space Reconstruction (DPSR) modules. Subsequently, in the SAC phase, Co-SA meticulously designs task-specific interaction mechanisms for sentiment agents so that coordinating multimodal signals to learn the joint representation. Specifically, Co-SA equips an independent policy model for each sentiment agent that captures significant properties within the modality. These policies are optimized mutually through the unified reward adaptive to downstream tasks. Benefitting from the rewarding mechanism, Co-SA transcends the limitation of pre-defined fusion modes and adaptively captures unimodal properties for MRL in the multimodal interaction setting. To demonstrate the effectiveness of Co-SA, we apply it to address Multimodal Sentiment Analysis (MSA) and Multimodal Emotion Recognition (MER) tasks. Our comprehensive experimental results demonstrate that Co-SA excels at discovering diverse cross-modal features, encompassing both common and complementary aspects. The code can be available at https://github.com/smwanghhh/Co-SA.

Index Terms:

Multimodal Sentiment Analysis, Multimodal Representation Learning, Cooperative Sentiment Agents.1 Introduction

The rapid development of deep learning [22, 20] and reinforcement learning [60, 59] has propelled the field of human-computer interaction remarkably. However, human-machine interactions are predominantly task-oriented yet lack some spontaneous and personalized service due to machines’ inability to gain individuals’ inner states [25, 46, 56]. Consequently, a refined sentiment analysis algorithm is urgently required for the new-generation intelligent human-machine interaction [52, 55, 57, 58]. In daily life, people unconsciously emit their inner states through a mixture of spoken language, visual clues, and acoustic signals. Analyzing sentiment states or emotion labels in such multimodal contexts is known as multimodal sentiment analysis (MSA) [23] or multimodal emotion recognition (MER) [24].

Multimodal Representation Learning (MRL) integrates multiple heterogeneous signals into a joint representation and plays a crucial role in the MSA task [51]. It has derived diverse modality-fusion strategies, including tensor-based operations [1, 2], Canonical Correlation Analysis (CCA) [53, 54], graph-based fusion [17, 4], attention mechanisms [5, 6], etc. In recent years, self-attention and transformers have become mainstream technologies for modality fusion due to their distinctive structures in integrating multiple representations [61]. Nevertheless, within the self-attention mechanism, the inner product between the query and key, which are constructed by different modalities, highlights inter-modal similarities [7, 12]. It indicates that self-attention-based models extract more modality-shared properties for constructing the joint multimodal representation. However, parts of modality-unique features also complement each other. Hence, there is an urgent need to transcend the modality-shared bias of predefined fusion modes, coordinate adaptive interactions among multiple signals, and achieve precise predictions in individual sentiment states. Besides, capturing sentiment variation over time from heterogeneous signals and representing them in a unified form is an important premise for modality fusion.

In this paper, we coordinate the adaptive interaction between multiple signals to learn the joint representation through Cooperative Sentiment Agents (Co-SA). Co-SA comprises the Sentiment Agent Establishment (SAE) phase and the Sentiment Agent Cooperation (SAC) phase, which capture sentiment variations within unimodal signals and foster interaction between multiple unimodal representations, respectively. Specifically, in the SAE phase, each sentiment agent contains a Modality-Sentiment Disentanglement (MSD) module and a Deep Phase Space Reconstruction (DPSR) module to express sentiment dynamics in a unified form. The MSD module disentangles sentiment features from raw input, thereby mitigating the impact of diverse modal properties. Inspired by Phase Space Reconstruction [27], the DPSR module establishes relationships between short- and long-time observations, emphasizing sentiment variations over time. In the SAC phase, each sentiment agent takes actions based on the respective policy model to determine those unimodal properties that contribute to the joint representation. To further facilitate the interaction between multiple signals, Co-SA defines the task-tailored cooperation mechanism between sentiment agents in policy models and their joint optimization strategies. It also rewards all sentiment agents jointly for their actions, so as to adjust policies mutually. Through the rewarding mechanism, Co-SA will reach the optimal multimodal representation learning strategy. To validate the effectiveness of Co-SA, we conduct comprehensive experiments on multimodal sentiment analysis (MSA) and multimodal emotion recognition (MER) tasks.

-

•

We propose the concept of Cooperative Sentiment Agents for the MSA task, which coordinates adaptive interactions across multiple modalities to learn the joint representation.

-

•

Each sentiment agent deals with heterogeneous unimodal signals with the Modality-Sentiment Disentanglement (MSD) and the Deep Space Reconstruction (DPSR) modules to highlight sentiment variations over time in a unified form, which lays a solid foundation for modality interactions.

-

•

To facilitate the interaction across multiple modalities in the MRL task, we design a task-tailored collaboration mechanism for sentiment agents through policy models. These policies are further mutually optimized via a unified reward, in conjunction with the optimal joint representation achieved.

2 Related Work

2.1 Multimodal Sentiment Analysis

Multimodal sentiment analysis (MSA) aims to infer individuals’ sentiment states through the cues they inadvertently emitted. These cues encompass behavioral aspects (e.g., facial expressions) and physiological indicators (e.g., electroencephalogram). With the recent surge in the popularity of smart devices, a multitude of users create and upload videos to platforms like YouTube to share their immediate moods. This exponential growth in video data has laid a robust foundation for MSA, predominantly focusing on behavioral cues. An intrinsic difficulty for MSA is to fuse heterogeneous data collected by disparate sensors for a more reliable prediction. Based on the data integration stage, existing MSA works can be categorized into feature-level fusion, decision-level fusion, and hybrid fusion.

Feature-level fusion consolidates several independent representations into a joint representation and subsequently makes decisions [1]. Decision-level fusion independently processes the features and classifiers of each modality [16]. It combines the decision vectors from all classifiers to produce the ultimate sentiment prediction. Hybrid fusion represents a combination of both feature-level and decision-level fusion techniques [9, 15]. Among the above paradigms, decision-level fusion is the most straightforward due to the consistent formats of decision vectors. However, achieving precise predictions through decision-level fusion remains challenging. First, it is hard to determine the reliabilities of modalities when fusing their decision vectors. Secondly, the independent prediction process for each modality fails to capture the relevance between multimodal signals, such as shared and complementary properties.

Unlike decision-level fusion, feature-level fusion makes the most of interacted properties across multimodal signals and combines multiple representations into a powerful joint one. It has become the most popular modality-fusion mode in the MSA task. Tensor-based, graph-based, transferred-based, and attention-based techniques are popular for feature-level fusion. Tensor-based works employ common tensor operations to integrate multimodal representations, like concatenation, outer product, etc. TFN (Tensor Fusion Network) uses a three-fold Cartesian product to establish trimodal dynamic explicitly [1]. LMF (Low-rank Multimodal Fusion) analyzes that the TFN, performing outer product on high-dimension embedding, has resulted in the computational burden. To optimize TFN, it exploits the parallel decomposition of low-rank weight tensor and input tensor to compute tensor-based fusion [2]. Analogously, MRRF uses Tuckers’ tensor decomposition to disentangle unimodal representations and remove redundant parts to reduce computation. It gets joint multimodal representations by performing the outer product on disentangled modal embeddings [3]. Some works also construct graphs to learn the joint embedding, where nodes and edges represent modalities and corresponding relationships [17]. HHMPN successively models the feature-to-label, label-to-label, and modality-to-label dependencies via passing graph messages [4]. Transfer-based technology is another popular way of learning the joint representation by transferring one modal representation into another with the encoder-decoder structure. The immediate features output by the encoder are regarded as the joint representation of the two modalities [10]. The joint representation for all modalities will be obtained by transferring signals in succession. Some works avoid the sequential transfer among modalities. They specify the dominant modality in advance and then transfer other modalities to the specified one to strengthen it. The attention mechanism is widely used for feature-level fusion since it inspires models to focus on some vital features when learning the joint representation. MFN proposes the Delta-memory Attention Network (DMAN) to learn fusion weights for disparate multimodal elements through time. It also designs the Multi-view Gated Memory module to filter noisy features [5]. MARN applies the attention mechanism to the hidden state of LSTM and aggregates multimodal representations [6]. MulT applies the intra-modal transformer and cross-modal transformers to alleviate the issue of (1) unaligned multimodal data; (2) long-time dependencies between elements across modalities [7]. GME-LSTM(A) [8] is devoted to addressing unimodal temporal dynamics and multimodal importance issues. It applies temporal attention to the output of the LSTM module to attend to the vital time steps and modal attention to emphasize the significance of each modality.

Although the above feature-level techniques have successfully merged multiple signals into a joint representation in form, they care more about modality-fusion strategies yet lack deliberation about specific features suitable for fusion. Recently, there has been a growing awareness that diverse inter-modal properties may significantly contribute to the joint multimodal representation. Several studies have divided raw signals into modality-shared and modality-unique representations prior to the fusion process [11, 12, 13, 14]. However, these methods still struggle with the challenge of capturing appropriate features from shared and unique components to accurately predict sentiment states. A novel technique that can overcome the limitations of existing fusion modes and adaptively capture significant properties from unimodal signals for the joint representation is still needed. In this paper, we propose to collaboratively deal with multiple signals to learn the joint representation through cooperative sentiment agents.

Except for the modality-fusion technique, the quality of unimodal representations also significantly impacts the MSA task. Firstly, multimodal data are collected by disparate sensors and represented using distinct digital signal formats. Video, audio, and text data, for instance, are recorded via frames, waveforms of amplitude values over time, and sets of characters, respectively. Meanwhile, each modality carries different levels of modal and sentiment properties. It is essential for MRL to disentangle sentiment features from heterogenous data and represent them in a unified format. Secondly, features from adjacent frames often exhibit severe overlap and minor value fluctuations, making them weak at reflecting temporal dynamics. Moreover, prevalent approaches typically aggregate multimodal representations initially and subsequently extract temporal features, inadvertently neglecting the exploration of unimodal sentiment variations over time. The heterogeneous multimodal data and implicit temporal dynamics have increased the difficulty for sentiment agents in learning significant cross-modal features. To alleviate the above issues, we propose the Modality-Sentiment Disentanglement (MSD) and Deep Phase Space Recognition (DPSR) modules when establishing sentiment agents.

2.2 Multi-Agent Cooperative Reinforcement Learning

Reinforcement Learning (RL) [21] is about an agent spontaneously interacting with the environment, taking actions according to policies, and rewarding them. The environmental state , agent’s policy , state transition , and reward function are essential components of RL. Supposing at time , the agent perceives the state from the environment and takes action based on the policy . Upon taking the action, the agent receives a reward , and the environmental state is updated to . The agent repeats the above process until the terminal state , with a sequence of rewards accrued. The key to RL is to learn the optimal policy that maximizes the cumulative reward. The Actor-Critic model is a popular algorithm for RL, in which the actor and critic models are used to learn and evaluate policies, respectively [18]. Cooperative multi-agent reinforcement learning involves multiple agents working together to achieve a coincident goal [50, 40]. Centralized learning with fully decentralized execution is one of the prominent approaches for cooperative agents [39, 38]. It entails a central control unit that observes agents’ states and regulates their actions during training. After the training phase, the central unit is removed, and each agent independently makes decisions based on the trained policies.

3 Multimodal Representation Learning Based on Cooperative Sentiment Agents

In this paper, we propose an exclusive sentiment agent for each modality and further collaboratively control multiple sentiment agents to promote multimodal interactions and learn the joint multimodal representation. As shown in Fig. 1, the proposed framework comprises two essential components: the Sentiment Agents Establishment (SAE) Phase and the Sentiment Agents Cooperative (SAC) Phase. In the SAE Phase, sentiment agents disentangle sentimental properties from heterogeneous signals to the same form through the MSD module. They further contain the DPSR module that ferrets out sentiment sequential variations to obtain high-quality unimodal representations. In the SAC Phase, Co-SA collaboratively controls sentiment agents to learn the joint representation, where each sentiment agent determines effective features within its modality to contribute to the joint representation. Co-SA further applies the joint representation to predict downstream tasks and subsequently calculates unified rewards to guide sentiment agents in adjusting their policies. In the following section, we will provide a detailed introduction to the SAE and SAC Phases, respectively.

3.1 Sentiment Agents Establishment

Individuals express their sentiment states through multiple behavioral signals, which are collected and recorded by various devices. The elements comprising each modal signal vary across modalities. For instance, text signals consist of sets of characters, while visual data is a collection of frames. Additionally, different modalities exhibit substantial variations in modal properties, noise, and even task relevance. These significant differences pose challenges for sentiment agents when uniformly dealing with multimodal signals during the modality-fusion process. Meanwhile, diverse modal properties also hinder sentiment agents from precisely extracting sentiment-related features. To provide precise sentiment signals for each sentiment agent, we propose the Modality-Sentiment Disentanglement (MSD) module to extract sentiment-related properties during the representation fission process.

3.1.1 Modality-Sentiment Disentanglement (MSD)

Each modality carries both sentiment-related and modality-specific properties in different forms. Consequently, we design a sentiment encoder and a modality encoder to extract sentiment-related and modality-specific features from raw input , respectively, where can be selected text , visual , and acoustic data.

| (1) |

| (2) |

where and are parameters of sentiment and modality encoders, respectively. Features extracted by the modality encoder vary across modalities. To facilitate the modality encoder in extracting as many precise modal properties as possible, we constrain as follows.

| (3) |

where indicates the function that maps to predicted probabilities. Without the loss of generality, , , and are set as 0, 1, 2, respectively. Moreover, and represent two distinct properties within the same modality. Therefore, to enable to express appropriate sentiment features and prevent the inclusion of modal properties, we impose a significant difference between and .

| (4) |

Where is the euclidean distance. prevents from conveying any modality-related properties. Additionally, we decouple each modal signal into modality-related and sentiment-related information. To ensure the completeness of disentangled features, we combine the two parts and reconstruct the input , preventing the loss of information.

| (5) |

where

| (6) |

where and are the decoders and their parameters, respectively. indicates the concatenation operation. is reconstructed unimodal signal. is the euclidean distance.

To sum up, the MSD module has three objective functions as follows.

| (7) |

The modality loss allows each modality encoder to precisely extract modality-related properties. The reconstructed loss ensures the completeness of information during the disentangled process. The modality-sentiment constraint loss prevents the extracted sentiment embedding from carrying any modality-related properties. The stringent constraints imposed by the above objective functions ensure that sentiment agents perceive precise sentiment properties.

In addition to heterogeneous multimodal data, sentiment variation over time is also an easily overlooked issue. Most MRL models fuse unimodal signals before extracting temporal features. The assumption behind this operation is that sentiment changes in unimodal representation are well presented. However, adjacent frames often exhibit severe redundancy in semantic context, and sentiment variations only manifest in a subtle number of numerical changes, failing to explicitly capture sentiment variations. To alleviate this issue, we draw inspiration from Phase Space Reconstruction (PSR) and propose the Deep Phase Space Reconstruction (DPSR) module to recover sentiment dynamics from existing observations.

3.1.2 Deep Phase Space Reconstruction (DPSR)

The phase space encompasses all feasible states of a dynamical system. In this space, each point, also termed an observation, can be seen as a sampling from the motion trajectory [27]. These observations exhibit strong correlations, allowing us to recover the system’s motions from existing observations. Simultaneously, sentiment changes in each modality can be treated as a distinct dynamic system, with each frame’s representation serving as a sampled observation of the motion process [26]. Therefore, we can apply the PSR theory to recover explicit sentiment dynamics from existing representations. Traditional PSR technology assesses the correlation between successive frames to determine time delay and embedded dimension parameters [28, 29]. By adjusting observations with these parameters, the phase space significantly reduces redundancy while maintaining relevance at a certain level. Building upon conventional PSR techniques but tailored to the task, the proposed DPSR module reconstructs each observation by incorporating deep learning principles to establish local and global motion information. More precisely, the DPSR module initiates its exploration of motion information of observations by utilizing a cross-correlation matrix first.

| (8) |

where is a matrix, represents the length of sequence, and each element in indicates the correlation between and observations. Subsequently, the DPSR module reconstructs each observation with the cross-correlation matrix to recover the system motion trajectory.

| (9) |

The aforementioned operations have provided a viable solution for recovering sentiment dynamics by capturing both local and global motion information. To further emphasize temporal dynamics while mitigating redundancy between frames, the DPSR module imposes constraints on the reconstructed observations as follows.

| (10) |

The objective function mentioned above aims to reduce redundancy among arbitrary pairs of frames to highlight sentiment changes. However, in some scenarios, specific events may repeat at intervals. To enhance precision in constructing sentiment motion, the DPSR module further refines the loss function as follows.

| (11) |

where indicates the time interval between and frames, which is calculated as follows.

| (12) |

Clearly, will be small if the frame is far from the frame, and vice versa.

3.2 Cooperative Sentiment Agents for Multimodal Representation Learning

Benefitting from the SAE phase, each agent perceives unimodal representations that carry sequential sentiment variations in a unified form. These sentiment properties can either be homogeneous or complementary across modalities, often referred to as modality-shared and modality-unique features. Solely extracting shared sentiment properties from each modality can be redundant, while constructing the joint representation with only complementary parts may lead to the loss of common characteristics. Thus, extracting appropriate homogeneous and complementary sentiment properties from each unimodal representation is crucial for constituting the joint representation. Yet, it also poses great challenges to related technologies in feature learning simultaneously. Cooperative control pre-defines the communication mode between agents according to the task and adjusts to the optimal policies through rewards. This working mechanism aligns well with our goal of coordinating multiple signals to achieve the optimal joint representation. Inspired by cooperative control, we propose the concept of sentiment agents and realize the interaction between multimodal signals through agents’ collaborations to finish the MRL task.

We assign sentiment agents for each modality, where unimodal representation is the input for each agent. Each sentiment agent has an independent policy model, also referred to as the actor model. It takes actions according to the current policy to determine valuable properties within the modality. Then, Co-SA combines selected features from all modalities into and further applies it to downstream tasks, where indicates the integrated mode. The key to the SAC phase is exactly hitting features that enhance or complement across modalities through , avoiding the loss or redundancy of sentiment properties.

To this end, we carefully designed policy models and their joint optimization strategy to facilitate efficient interactions across modalities. On the one hand, we allow each sentiment agent to perceive its dominant modality as well as its differential features with other modalities. On the other hand, we jointly evaluate all policy models to coordinate captured sentiment properties across modalities. Specifically, in each stage, policy models take their dominant modal representations and different features with other modalities as input for learning common and complementary components, respectively. Taking the visual modality as an example, the visual policy model takes visual representation as well as its differential features with the other two modalities , as input and output the weight .

| (13) |

Where and are differential features. Co-SA concatenates , , and and then map to get action . Policy models are required to be efficient on all samples. Thereby, upon outputting weights for the current batch of samples, sentiment agents transfer to the next batch of representations and exploit cumulative rewards to evaluate policy models. During the representation transition, is determined by the sampling order of the training samples. Meanwhile, Co-SA evaluates policy models according to the succession of weights they learned. It designs transformer-based critic models that learn cumulative rewards from the concatenation between current representations and weights , avoiding the massive computation from the current to terminal training batches. Notably, all agents struggle to adjust policies and reach the consistent prediction. Thereby, Co-SA designs a joint critic model to output a unified cumulative reward for all policy models . The joint critic model works as the central control unit that coordinates sentiment agents in continuously adjusting policies and learning appropriate cross-modal features for the joint representation. It will be removed after training, leaving policy models to decide valuable properties independently.

Clearly, policy models wish to output weights that cause a larger cumulative reward . Thereby, the objective function of policy models is optimizing their parameters that maximize the output of the critic model. As for the optimization of the critic model, the Temporal-Difference (TD) Error algorithm [19] is employed: , where . is the cumulative reward in the next stage, and is the discounted factor. is the immediate reward, and it varies with tasks. For the MSA task, , where and are predicted and true sentiment state. For the MER task, , where is the predicted probability for the true class, and is the number of emotion categories. Besides, the calculation of the reward relies on both the actor-critic and prediction models. To train the model stably, Co-SA iteratively optimizes the prediction and actor-critic model parameters. Thus, Co-SA contains loss functions about the policy, critic, and prediction models in the SAC phase. Co-SA has the total objective function after incorporating losses in the SAE phase.

| (14) |

is the predictive loss. It is the mean absolute loss for the MSA task and cross-entropy loss for the MER task. The weights of the four objective functions will be further discussed in the experimental part.

4 Experiments

In this section, we evaluate Co-SA on multiple MRL-related tasks, i.e., multimodal sentiment analysis (MSA) and multimodal emotion recognition (MER).

4.1 Experimental Setting

4.1.1 Experimental Target

We focus on the following questions in the experimental part: (1) whether all proposed modules are effective as expected; (2) after learning significant representation for each modality, whether it is applicable for some classic modality fusion methods; (3) If the combination of Co-SA and classic fusion methods can outperform other MSA and MER works; (4) how much of a role each modality play during the representations learning or prediction process;

4.1.2 Databases

We apply the widely used CMU-MOSI [47] and CMU-MOSEI [48] databases for MSA task, and IEMOCAP [49] for the MER task. CMU-MOSI, a popular database with three modalities, is widely used in MSA tasks. It is a collection of 93 videos from online sharing websites. Each video can be split into 62 utterance-level segments at most. These segments are annotated with the sentiment from [-3, 3], where -3 indicates the strongest negative sentiment while +3 represents the strongest positive attitude. Following popular works, we train Co-SA with 1281 utterances, valid, and test it with 229 and 685 utterances, respectively. CMU-MOSEI contains 2928 videos and is annotated with two types of labels, including continuous sentiment from -3 to 3 and emotions with six values. As popular MSA works, we use 16265 utterances as training data, 1869 as validation data, and 4643 as test data. IEMOCAP is the database collected in the lab, conveying three modalities of signals. It has 151 sessions of dialogues, of which there are two speakers per session and 302 videos in total. These videos are segmented into about 10 K utterances and labeled with nine emotions, including angry, happy, sad, neutral, surprised, fearful, excited, frustrated, and other. Following popular works, we take the first four emotions for experiments.

4.1.3 Features Extraction

We use the following tools to extract features for three modalities.

Visual modality. Facet is used to extract some visual features, including action units, facial landmarks, head pose, etc., for both MSA and MER tasks.

Acoustic modality. COVAREP [44] is used to extract some acoustic features, such as 12-Mel frequency cepstral coefficients, pitch tracking, spectral envelope, speech polarity, etc.

Text modality. Following the state-of-the-art works [33, 41, 35, 7, 37], we use BERT [43] to extract text features for the MSA task and extract GloVe word embedding [42] from the original text for the MER task.

To sum up, for the CMU-MOSI database, we get the dimensions for the text, acoustic, and visual features to be 768, 74, and 47, respectively. For the CMU-MOSEI database, the feature dimensions for the three modalities are 768, 74, and 35, respectively. For the IEMOCAP database, the three modalities have respective feature dimensions of 300, 74, and 35.

4.1.4 Evaluations metrics

For the MSA task, we evaluate Co-SA with (1) MAE: mean absolute error (the lower, the better); (2) Corr: correlation between predictions and ground truth (the higher, the better); (3) Acc2: binary accuracy, samples to be positive if its sentiment value is greater than 0, and vice versa; (4) F1 score; (5) Acc7: 7-class accuracy, we round up the predicted sentiment value for each sample as the class. For the MER task, we report the accuracy and F1 score for each category.

4.1.5 Compared Methods

(1) Tensor Fusion Network (TFN), which learns unimodal embeddings and then leverages the outer product to fuse unimodal, bimodal, and trimodal representations [1]. (2) Low-Rank Modality Fusion (LMF), which reduces computation complexity during fusing modalities with low-rank weight tensors [2]. (3) Memory Fusion Network (MFN), which designs the delta-memory attention network for learning fusion weights and multi-view gated memory to filter noisy features [5]. (4) Multimodal Transformer (MulT), which learns multimodal representations by transferring source modality to target modalities through transformers [7]. (5) Quantum-Inspired Multimodal Fusion (QMF), which draws inspiration from the quantum principle to address the issue of multimodal combination [30]. (6) Graph Fusion Network (GFN), which designs graph neural networks to learn unimodal, bimodal, and trimodal dynamics [10]. (7) Interaction Canonical Correlation Network (ICCN), which first fuses text embeddings with visual and acoustic representations, respectively, and then uses a canonical correlation network to get unified multimodal representations [31]. (8) Recurrent Attend Variation Embedding Network (RAVEN), which obtains multimodal representations by learning textual shift embeddings from visual and acoustic modalities [32]. (9) Multimodal Adaptation Gate (MAG), which finetunes transformer model to learn efficient textural embeddings and designs multimodal gate adaptation to get multimodal representations [33]. (10) Transformer-Based Feature Reconstruction Network (TFR), which proposes a feature reconstruction network to improve the robustness of models when missing some modal signals [34]. (11) Hybrid Contrastive Learning (HyCon), which establishes complex inter-class and intra-/inter-modal relationships with contrastive and semi-contrastive learning to explore discriminative representations [45]. (12) Multimodal Correlation Learning (MCL), which learns complex correlations between modalities using prior information across samples [35]. (13) Hierarchical Feature Fusion Network (HFFN), which applies the mechanism of ”Divide, Conquer, and Combine” to fuse local and global multimodal representations [36]. (14) Temporal Convolutional Multimodal LSTM (TCM-LSTM), which leverages temporal convolutions to explore sufficient unimodal embeddings and applies the LSTM module to get multimodal representations [37].

| Settings | Dataset: MOSI | Dataset: IEMOCAP | |||||||||||||

| Acc7 () | Acc2 () | F1 () | MAE () | Corr () | Happy | Sad | Angry | Neutral | Average | ||||||

| Acc | F1 | Acc | F1 | Acc | F1 | Acc | F1 | Acc | F1 | ||||||

| baseline | 47.30 | 84.88 | 84.87 | 0.711 | 0.8014 | 86.67 | 85.45 | 84.65 | 84.10 | 87.21 | 86.98 | 70.26 | 69.96 | 82.20 | 81.62 |

| only MSD | 48.47 | 85.50 | 85.44 | 0.711 | 0.8011 | 87.53 | 86.11 | 86.25 | 84.87 | 87.53 | 87.38 | 70.15 | 69.40 | 82.86 | 81.94 |

| MSD w/o | 47.30 | 84.88 | 84.91 | 0.711 | 0.8033 | 86.78 | 85.00 | 83.90 | 83.51 | 87.21 | 87.21 | 69.83 | 69.27 | 81.93 | 81.25 |

| MSD w/o | 47.59 | 85.19 | 84.98 | 0.706 | 0.8038 | 86.89 | 85.34 | 83.16 | 82.21 | 86.67 | 86.49 | 69.51 | 69.04 | 81.56 | 80.77 |

| MSD w/o | 47.74 | 85.19 | 85.06 | 0.702 | 0.8038 | 86.78 | 85.61 | 84.65 | 86.84 | 86.99 | 87.03 | 70.04 | 69.80 | 82.12 | 81.57 |

| only DPSR | 48.90 | 85.80 | 85.73 | 0.698 | 0.8055 | 87.53 | 85.72 | 86.14 | 85.82 | 88.17 | 88.45 | 72.60 | 71.82 | 83.61 | 82.95 |

| DPSR w/o | 47.44 | 85.80 | 85.73 | 0.718 | 0.7947 | 86.78 | 85.67 | 85.82 | 85.45 | 87.31 | 87.20 | 70.47 | 70.10 | 82.60 | 82.11 |

| only SAC | 48.76 | 86.26 | 86.25 | 0.698 | 0.8006 | 87.95 | 86.24 | 86.25 | 85.63 | 87.53 | 87.60 | 71.75 | 70.34 | 83.37 | 82.45 |

-

SAE and SAC represent the establishment and cooperation phases of sentiment agents, respectively. The SAE phase includes the MSD and DPSR modules. The MSD module contains the reconstructed , modality , and modality-sentiment constraint losses. The DPSR module has the weight in adjusting the loss function. The results from the baseline model, models incorporating only the MSD and DPSR modules, and models utilizing only the SAC phase are underlined to facilitate intuitive comparisons.

4.1.6 Implementation Details

Following some state-of-the-art works [7, 35], Co-SA takes 50 and 20 frames of features as input for MSA and MER tasks, respectively. In the MSD module, Co-SA encodes all modal signals into 128-dimension representations for both tasks. Co-SA applies popular modality fusion modes to get the joint representation to validate its generality, including addition and concatenation. The discounted factor is set as 0.5. For the weights , , and of loss functions, we will conduct further ablation studies on both MER and MSA tasks. Before training Co-SA, we initialize a replay memory to store a batch of features, weights, updated features, and rewards in each stage. They are sampled to optimize the actor and critic models during each training process. After optimizing the actor and critic models, the parameters for the target actor and critic models are updated for stable convergence: , , where is set as 0.01. We update Co-SA parameters with the Adam optimizer and adjust the learning rate based on valid loss, multiplying it by 0.95 with the patience of 50 epochs. Co-SA has trained 500 epochs for each task on 2080Ti devices.

4.2 Ablation Study

In this section, we evaluate each component of the Co-SA framework using the MOSI and IEMOCAP databases, which are representative datasets for the MSA and MER tasks, respectively. Beyond presenting quantitative results, we also provide and compare various visualized outcomes to offer a comprehensive analysis of Co-SA. In the subsequent sections, we delve into the investigation of the MSD and DPSR modules within the SAE phase and the SAC phase, respectively.

4.2.1 Evaluation on the SAE Phase

The MSD module separates each modal signal into sentiment-related and modality-related properties. It involves three core operations corresponding with the reconstruction loss , the modality loss , and the sentiment-modality constraint loss , respectively. Table. I presents comprehensive results for the MSD module, exploring the effectiveness of each loss. Notably, we define the model that excludes all proposed modules as the baseline method in Table. I. Compared with the baseline model, the MSD module efficiently improves model performance quantitatively on two tasks. Results, eliminating three loss functions by turns, indicate that the MSD module discounts to different extents when lacking one of the three loss functions. The compared results have demonstrated that Co-SA significantly benefits from the disentangled and homogenous sentiment properties in the MSD module.

Fig. 2 also presents intuitive disentangled features for three modal signals. In Fig. 2, each sample is divided into modality and sentiment features. Sentiment features from the same modality are clustered together, as are modality features. However, sentiment clusters maintain a certain distance from their corresponding modality clusters. These visualized results suggest that Co-SA has successfully separated sentiment features from raw signals, thereby reducing the disturbances of diverse modal properties in subsequent steps. Moreover, sentiment features are not clustered across modalities, as their sequential variations need to be processed prior to fusion.

The ’only DPSR’ configuration in Table. I presents the ablation study results for the DPSR module. This module mitigates redundancy across representations and emphasizes sentiment variations over time. Compared to the baseline model, the DPSR module generally enhances all metrics on both tasks. Furthermore, the DPSR module utilizes the parameter to ease the constraint on frame representations as the time interval between frames expands. The performance of the DPSR module sees a slight decline when is removed, suggesting that repeated events may occur in certain sequences.

| Dataset: MOSI | Dataset: MOSEI | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Acc7 () | Acc2 () | F1 () | MAE () | Corr () | Acc7 () | Acc2 () | F1 () | MAE () | Corr () | |

| TFN-Glove [1] | 32.2 | 76.4 | 76.3 | 1.017 | 0.604 | 49.8 | 79.4 | 79.7 | 0.610 | 0.671 |

| TFN-BERT [1] | 44.7 | 82.6 | 82.6 | 0.761 | 0.789 | 51.8 | 84.5 | 84.5 | 0.622 | 0.781 |

| LMF-Glove [2] | 30.6 | 73.8 | 73.7 | 1.026 | 0.602 | 50.0 | 80.6 | 81.0 | 0.608 | 0.677 |

| LMF-BERT [2] | 45.1 | 84.0 | 84.0 | 0.742 | 0.785 | 51.2 | 84.2 | 84.3 | 0.612 | 0.779 |

| MFN-Glove [5] | 32.1 | 78.0 | 76.0 | 1.010 | 0.635 | 49.1 | 79.6 | 80.6 | 0.618 | 0.670 |

| MFN-BERT [5] | 44.1 | 83.5 | 83.5 | 0.759 | 0.786 | 52.6 | 84.8 | 84.8 | 0.607 | 0.771 |

| Mult-Glove [7] | 33.6 | 79.3 | 78.3 | 1.009 | 0.667 | 48.2 | 80.2 | 80.5 | 0.638 | 0.659 |

| Mult-BERT [7] | 41.5 | 83.7 | 83.7 | 0.767 | 0.799 | 50.7 | 84.7 | 84.6 | 0.625 | 0.775 |

| QMF-Glove [30] | 35.5 | 79.7 | 79.6 | 0.915 | 0.696 | 47.9 | 80.7 | 79.8 | 0.640 | 0.658 |

| GFN-BERT [10] | 47.0 | 84.3 | 84.3 | 0.736 | 0.790 | 51.8 | 85.0 | 85.0 | 0.611 | 0.774 |

| ICCN-BERT [31] | 39.0 | 83.0 | 83.0 | 0.860 | 0.710 | 51.6 | 84.2 | 84.2 | 0.565 | 0.713 |

| RAVEN-Glove [32] | 33.8 | 78.8 | 76.9 | 0.968 | 0.667 | 50.2 | 79.0 | 79.4 | 0.605 | 0.680 |

| MAG-BERT [33] | 42.9 | 83.5 | 83.5 | 0.790 | 0.769 | 51.9 | 85.0 | 85.0 | 0.602 | 0.778 |

| TFR-Net [34] | 42.6 | 84.0 | 83.9 | 0.787 | 0.788 | 51.7 | 85.2 | 85.1 | 0.606 | 0.781 |

| HyCon [45] | 46.6 | 85.2 | 85.1 | 0.713 | 0.790 | 52.8 | 85.4 | 85.6 | 0.601 | 0.776 |

| MCL [35] | 49.2 | 86.1 | 86.1 | 0.713 | 0.793 | 53.3 | 86.2 | 86.2 | 0.581 | 0.791 |

| Co-SA(add) | 49.8 | 87.2 | 87.1 | 0.685 | 0.813 | 54.5 | 86.8 | 86.7 | 0.575 | 0.791 |

| Co-SA(concatenate) | 49.5 | 86.6 | 86.5 | 0.689 | 0.814 | 54.0 | 86.4 | 86.4 | 0.577 | 0.790 |

-

The optimal results are bolded and underlined, and the sub-optimal results are underlined.

To further scrutinize the DPSR module, we compute the average similarity between features across various time intervals. Fig. 3 illustrates the similarity comparisons between representations without (w/o) and with (w/) the DPSR module. Representations, when not modulated by the DPSR module, show considerable similarities across different times since they are defined by objective characteristics, such as visual AUs and acoustic MFCCs. These objective characteristics exhibit minimal variation within the same sequence and struggle to reflect sentiment fluctuations. However, when the DPSR module is employed, similarities among frames significantly decrease as frame intervals increase. Interestingly, the similarities of representations tend to increase again when their time interval surpasses a certain threshold. This phenomenon fortuitously confirms our assumption in the quantitative ablation study concerning the DPSR module.

4.2.2 Evaluation on the SAC Phase

Table. I also presents a comparison between models without (w/o) and with (w/) the SAC phase. In the baseline model, we eliminate the cooperative sentiment agents and learn weights for the fused features directly. Compared to the baseline model, the SAC module consistently enhances the model’s performance on both tasks, owed largely to its superior weight-learning mechanism. However, due to the constraints observed in the representation of sentiment agents, the model that exclusively employs the SAC module does not achieve optimal results. To provide a more intuitive understanding of the SAC module, we visualize the learned weights in Fig. 4. Each value in ranges from 0 to 1, signifying the importance of the corresponding features. Notably, the data in the MOSI and IEMOCAP databases are aligned across modalities. Co-SA captures modality-shared features when it learns larger weights at the same frame for all three modalities. Conversely, it captures complementary features when larger weights are distributed among different frames. Initially, models that directly learn fused properties tend to rely on one or two modalities, thereby failing to capture diverse cross-modal features. For instance, the model heavily depends on acoustic signals in the MSA task and emphasizes features in the acoustic and visual modalities in the MER task. This over-reliance on specific modalities overlooks the diversity of multimodal features, rendering the model less robust. In contrast, the proposed SAC phase, benefiting from the feedback reward, repeatedly learns the fused representations and is adept at mining valuable properties across all modalities. Furthermore, compared to conventional methods, Co-SA has unearthed diverse inter-modal features, encompassing both common and complementary aspects.

4.2.3 Evaluation on Hyper-parameters

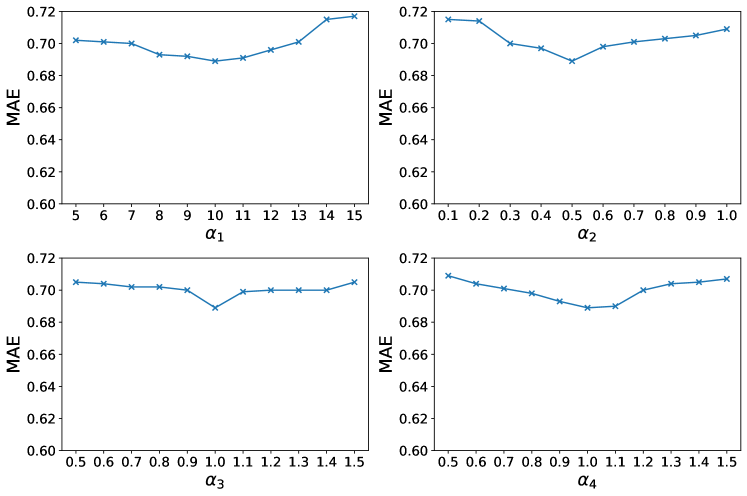

The objective function of Co-SA is composed of the prediction loss , the loss for the MSD module, the loss for the DPSR module, and the loss for the SAC phase, with weights of , , , and . To establish a more rigorous experimental setup, we conduct extensive experiments over a wide range of values for these four weights. Fig. 5 illustrates the weights and corresponding evaluation metrics for the MSA and MER tasks. Co-SA achieves the optimal MAE value for the MSA task when setting , , , and as 10, 0.5, 1.0, and 1.0, respectively. For the MER task, it performs best at the setting of , , , and . Furthermore, the performance of Co-SA exhibits minor fluctuations when exploring the optimal weights within a certain range, demonstrating its robustness.

| Happy | Sad | Angry | Neutral | Average | ||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Acc | F1 | Acc | F1 | Acc | F1 | Acc | F1 | Acc | F1 | |

| MFN [5] | 86.5 | 84.0 | 83.5 | 82.1 | 85.0 | 83.7 | 69.6 | 69.2 | 81.2 | 79.8 |

| Graph-MFN [5] | 86.8 | 84.2 | 83.8 | 83.0 | 85.8 | 85.5 | 69.4 | 68.9 | 81.5 | 80.4 |

| RAVEN [32] | 87.3 | 85.8 | 83.4 | 83.1 | 87.3 | 86.7 | 69.7 | 69.3 | 81.9 | 81.2 |

| LMF [2] | 86.9 | 82.3 | 85.4 | 84.7 | 87.1 | 86.8 | 71.6 | 71.4 | 82.8 | 81.3 |

| MulT [7] | 87.4 | 84.1 | 84.2 | 83.1 | 88.0 | 87.5 | 69.9 | 68.4 | 82.4 | 80.8 |

| HFFN [36] | 86.8 | 82.1 | 84.4 | 84.5 | 86.6 | 85.8 | 69.6 | 69.3 | 81.9 | 80.4 |

| TCM-LSTM [37] | 87.2 | 84.8 | 84.4 | 84.9 | 89.0 | 88.6 | 71.3 | 71.2 | 83.0 | 82.4 |

| HyCon [45] | 88.0 | 85.5 | 86.2 | 85.9 | 89.4 | 89.2 | 70.4 | 70.5 | 83.5 | 82.8 |

| MCL [35] | 88.8 | 86.8 | 86.6 | 86.6 | 90.3 | 90.3 | 71.6 | 71.4 | 84.3 | 83.8 |

| Co-SA(add) | 88.2 | 86.8 | 87.2 | 87.1 | 90.3 | 90.1 | 73.4 | 73.1 | 84.8 | 84.3 |

| Co-SA(concatenate) | 88.1 | 86.3 | 87.6 | 87.0 | 89.1 | 89.0 | 74.0 | 73.5 | 84.7 | 83.9 |

-

The optimal results are bolded and underlined, and the sub-optimal results are underlined.

4.3 Comparison With State-of-the-Art Works

In this section, we compare Co-SA with several state-of-the-art methods. Co-SA produces unimodal weights that dictate the features used for fusion. Without loss of generality, we employ two classic fusion modes to obtain a joint multimodal representation: addition and concatenation operations, denoted as Co-SA(add) and Co-SA(concatenate), respectively. Comparative results on the MOSI and MOSEI databases are presented in Table II. Among the compared works, MCL has so far achieved the best results on both databases. MCL is also an approach that aims to learn high-quality multimodal representations by establishing correlations between modalities. Compared with MCL, Co-SA(add) enhances performance by 0.6%, 1.1%, 1.0%, 0.028, and 0.02 on Acc7, Acc2, F1 score, MAE, and Corr, respectively, on the MOSI database. On the MOSEI database, it improves performance by 1.2%, 0.6%, and 0.5% on Acc7, Acc2, and F1 score, respectively. The correlation (Corr) predicted by Co-SA(add) for the MOSEI database remains on par with MCL. Meanwhile, the MAE of Co-SA for the MOSEI database is slightly worse than ICCN-BERT. This generalized improvement on the MSA task is attributed to the well-designed joint representation learning process. Regarding the concatenation fusion mode, Co-SA enhances Acc7, Acc2, F1 score, MAE, and Corr by 0.3%, 0.5%, 0.4%, 0.24, and 0.21, respectively, on the MOSI database, and boosts 0.7%, 0.2%, and 0.2% on Acc7, Acc2, and F1 score, respectively, on the MOSEI database. The correlation between Co-SA’s predictions and the ground truth experiences a 0.01 drop compared with MCL. However, the overall results of both fusion modes demonstrate a stable improvement compared with state-of-the-art works.

Table III presents a comparison with several state-of-the-art (SOTA) works on the IEMOCAP database. Similar to the MSA task, MCL has achieved the best results for the MER task. Compared with MCL, Co-SA exhibits slightly inferior performance in the happy class but significantly improves the recognition rates in the neutral class. Specifically, Co-SA enhances the accuracy of the neutral emotion by 1.8% and 2.4%, respectively, when using the two fusion modes. Moreover, Co-SA also significantly improves the mean accuracy across the four emotions, outperforming all existing works.

4.4 Evaluation on Modalities

| Acc7 () | Acc2 () | F1 () | MAE () | Corr () | |

|---|---|---|---|---|---|

| V | 21.0 | 57.7 | 55.7 | 1.447 | 0.093 |

| A | 20.2 | 53.3 | 52.7 | 1.448 | 0.132 |

| T | 45.4 | 84.1 | 84.0 | 0.736 | 0.792 |

| V+A | 22.9 | 57.4 | 57.2 | 1.442 | 0.135 |

| V+T | 49.3 | 85.3 | 85.3 | 0.703 | 0.804 |

| A+T | 47.7 | 85.8 | 85.7 | 0.714 | 0.798 |

| V+A+T | 49.8 | 87.2 | 87.1 | 0.685 | 0.813 |

-

Significant results are underlined.

In this section, we delve into the significance of the three modalities. Taking the MOSI database as an example, we conduct experiments with various combinations of modalities and present the results in Table IV. In Table IV, ”V”, ”A”, and ”T” denote the visual, acoustic, and text modalities, respectively. From the comparison among individual modalities, it is apparent that text data plays a pivotal role among the three modalities. This phenomenon is largely due to the fact that both the visual and acoustic modalities are constrained to use extracted features (such as AUs and MFCCs) as input because of privacy concerns, whereas raw text data can be directly incorporated into Co-SA. Raw data offer a far richer source of information than extracted features. The amalgamation of text with both visual and acoustic modalities yields a noticeable improvement across all metrics, indicating that visual and acoustic signals provide effective supplementary or complementary information. Ultimately, Co-SA achieves optimal performance when all modal signals are engaged.

5 Conclusion

In this paper, we aim to harness effective unimodal properties to construct a comprehensive joint multimodal representation. We analyze that existing works devote more to modality-fusion strategies. However, some pre-defined modality-fusion mechanisms limit models in perceiving diverse cross-modal features. Inspired by cooperative control, we establish distinct sentiment agents for multimodal signals, each comprising the MSD and DSPR modules that capture sentiment variations. Subsequently, these sentiment agents interact via a policy-learning and policy-optimization mechanism to capture significant cross-modal features. Quantitative results from two tasks have validated the effectiveness of our proposed modules. Moreover, the visualization of intermediate features provides a clear and intuitive understanding of each component of Co-SA. The introduction of multiple sentiment agents opens up a new avenue for research in multimodal data analysis.

References

- [1] A. Zadeh, M. Chen, S. Poria, E. Cambria, and L.-P. Morency, “Tensor fusion network for multimodal sentiment analysis,” arXiv preprint arXiv:1707.07250, 2017.

- [2] Z. Liu, Y. Shen, V. B. Lakshminarasimhan, P. P. Liang, A. Zadeh, and L.-P. Morency, “Efficient low-rank multimodal fusion with modality-specific factors,” arXiv preprint arXiv:1806.00064, 2018.

- [3] P. Fung and E. Jebalbarezi Sarbijan, “Modality-based factorization for multimodal fusion,” in ACL 2019: The 4th Workshop on Representation Learning for NLP (RepL4NLP-2019): Proceedings of the Workshop, 2019.

- [4] D. Zhang, X. Ju, W. Zhang, J. Li, S. Li, Q. Zhu, and G. Zhou, “Multi-modal multi-label emotion recognition with heterogeneous hierarchical message passing,” in Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 16, 2021, pp. 14 338–14 346.

- [5] A. Zadeh, P. P. Liang, N. Mazumder, S. Poria, E. Cambria, and L.-P. Morency, “Memory fusion network for multi-view sequential learning,” in Proceedings of the AAAI conference on artificial intelligence, vol. 32, no. 1, 2018.

- [6] A. Zadeh, P. P. Liang, S. Poria, P. Vij, E. Cambria, and L.-P. Morency, “Multi-attention recurrent network for human communication comprehension,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 32, no. 1, 2018.

- [7] Y.-H. H. Tsai, S. Bai, P. P. Liang, J. Z. Kolter, L.-P. Morency, and R. Salakhutdinov, “Multimodal transformer for unaligned multimodal language sequences,” in Proceedings of the conference. Association for Computational Linguistics. Meeting, vol. 2019. NIH Public Access, 2019, p. 6558.

- [8] M. Chen, S. Wang, P. P. Liang, T. Baltrušaitis, A. Zadeh, and L.-P. Morency, “Multimodal sentiment analysis with word-level fusion and reinforcement learning,” in Proceedings of the 19th ACM international conference on multimodal interaction, 2017, pp. 163–171.

- [9] Y. Li, K. Zhang, J. Wang, and X. Gao, “A cognitive brain model for multimodal sentiment analysis based on attention neural networks,” Neurocomputing, vol. 430, pp. 159–173, 2021.

- [10] S. Mai, H. Hu, and S. Xing, “Modality to modality translation: An adversarial representation learning and graph fusion network for multimodal fusion,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 01, 2020, pp. 164–172.

- [11] Y. Li, Y. Wang, and Z. Cui, “Decoupled multimodal distilling for emotion recognition,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2023, pp. 6631–6640.

- [12] D. Yang, S. Huang, H. Kuang, Y. Du, and L. Zhang, “Disentangled representation learning for multimodal emotion recognition,” in Proceedings of the 30th ACM International Conference on Multimedia, 2022, pp. 1642–1651.

- [13] T. Harada, K. Saito, Y. Mukuta, and Y. Ushiku, “Deep modality invariant adversarial network for shared representation learning,” in 2017 IEEE International Conference on Computer Vision Workshops (ICCVW). IEEE, 2017, pp. 2623–2629.

- [14] J. He, H. Yanga, C. Zhang, H. Chen, Y. Xua et al., “Dynamic invariant-specific representation fusion network for multimodal sentiment analysis,” Computational Intelligence and Neuroscience, vol. 2022, 2022.

- [15] J. Sun, H. Yin, Y. Tian, J. Wu, L. Shen, and L. Chen, “Two-level multimodal fusion for sentiment analysis in public security,” Security and Communication Networks, vol. 2021, pp. 1–10, 2021.

- [16] V. Lopes, A. Gaspar, L. A. Alexandre, and J. Cordeiro, “An automl-based approach to multimodal image sentiment analysis,” in 2021 International Joint Conference on Neural Networks (IJCNN). IEEE, 2021, pp. 1–9.

- [17] Z. Jin, M. Tao, X. Zhao, and Y. Hu, “Social media sentiment analysis based on dependency graph and co-occurrence graph,” Cognitive Computation, vol. 14, no. 3, pp. 1039–1054, 2022.

- [18] J. Kober, J. A. Bagnell, and J. Peters, “Reinforcement learning in robotics: A survey,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1238–1274, 2013.

- [19] S. S. Mousavi, M. Schukat, and E. Howley, “Deep reinforcement learning: an overview,” in Proceedings of SAI Intelligent Systems Conference (IntelliSys) 2016: Volume 2. Springer, 2018, pp. 426–440.

- [20] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2016, pp. 770–778.

- [21] L. P. Kaelbling, M. L. Littman, and A. W. Moore, “Reinforcement learning: A survey,” Journal of artificial intelligence research, vol. 4, pp. 237–285, 1996.

- [22] Y. LeCun, Y. Bengio, and G. Hinton, “Deep learning,” nature, vol. 521, no. 7553, pp. 436–444, 2015.

- [23] M. Soleymani, D. Garcia, B. Jou, B. Schuller, S.-F. Chang, and M. Pantic, “A survey of multimodal sentiment analysis,” Image and Vision Computing, vol. 65, pp. 3–14, 2017.

- [24] N. Sebe, I. Cohen, and T. S. Huang, “Multimodal emotion recognition,” in Handbook of pattern recognition and computer vision. World Scientific, 2005, pp. 387–409.

- [25] M. Spezialetti, G. Placidi, and S. Rossi, “Emotion recognition for human-robot interaction: Recent advances and future perspectives,” Frontiers in Robotics and AI, p. 145, 2020.

- [26] S. Wang, H. Shuai, and Q. Liu, “Phase space reconstruction driven spatio-temporal feature learning for dynamic facial expression recognition,” IEEE Transactions on Affective Computing, vol. 13, no. 3, pp. 1466–1476, 2020.

- [27] F. Takens, “Detecting strange attractors in turbulence,” in Dynamical systems and turbulence, Warwick 1980. Springer, 1981, pp. 366–381.

- [28] H. D. Abarbanel, R. Brown, J. J. Sidorowich, and L. S. Tsimring, “The analysis of observed chaotic data in physical systems,” Reviews of modern physics, vol. 65, no. 4, p. 1331, 1993.

- [29] M. Casdagli, S. Eubank, J. D. Farmer, and J. Gibson, “State space reconstruction in the presence of noise,” Physica D: Nonlinear Phenomena, vol. 51, no. 1-3, pp. 52–98, 1991.

- [30] Q. Li, D. Gkoumas, C. Lioma, and M. Melucci, “Quantum-inspired multimodal fusion for video sentiment analysis,” Information Fusion, vol. 65, pp. 58–71, 2021.

- [31] Z. Sun, P. Sarma, W. Sethares, and Y. Liang, “Learning relationships between text, audio, and video via deep canonical correlation for multimodal language analysis,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 34, no. 05, 2020, pp. 8992–8999.

- [32] Y. Wang, Y. Shen, Z. Liu, P. P. Liang, A. Zadeh, and L.-P. Morency, “Words can shift: Dynamically adjusting word representations using nonverbal behaviors,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 01, 2019, pp. 7216–7223.

- [33] W. Rahman, M. K. Hasan, S. Lee, A. Zadeh, C. Mao, L.-P. Morency, and E. Hoque, “Integrating multimodal information in large pretrained transformers,” in Proceedings of the conference. Association for Computational Linguistics. Meeting, vol. 2020. NIH Public Access, 2020, p. 2359.

- [34] Z. Yuan, W. Li, H. Xu, and W. Yu, “Transformer-based feature reconstruction network for robust multimodal sentiment analysis,” in Proceedings of the 29th ACM International Conference on Multimedia, 2021, pp. 4400–4407.

- [35] S. Mai, Y. Sun, Y. Zeng, and H. Hu, “Excavating multimodal correlation for representation learning,” Information Fusion, vol. 91, pp. 542–555, 2023.

- [36] S. Mai, H. Hu, and S. Xing, “Divide, conquer and combine: Hierarchical feature fusion network with local and global perspectives for multimodal affective computing,” in Proceedings of the 57th annual meeting of the association for computational linguistics, 2019, pp. 481–492.

- [37] S. Mai, S. Xing, and H. Hu, “Analyzing multimodal sentiment via acoustic-and visual-lstm with channel-aware temporal convolution network,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 29, pp. 1424–1437, 2021.

- [38] T. Rashid, M. Samvelyan, C. S. De Witt, G. Farquhar, J. Foerster, and S. Whiteson, “Monotonic value function factorisation for deep multi-agent reinforcement learning,” The Journal of Machine Learning Research, vol. 21, no. 1, pp. 7234–7284, 2020.

- [39] J. Foerster, G. Farquhar, T. Afouras, N. Nardelli, and S. Whiteson, “Counterfactual multi-agent policy gradients,” in Proceedings of the AAAI conference on artificial intelligence, vol. 32, no. 1, 2018.

- [40] J. R. Kok and N. Vlassis, “Collaborative multiagent reinforcement learning by payoff propagation,” Journal of Machine Learning Research, vol. 7, pp. 1789–1828, 2006.

- [41] M. K. Hasan, S. Lee, W. Rahman, A. Zadeh, R. Mihalcea, L.-P. Morency, and E. Hoque, “Humor knowledge enriched transformer for understanding multimodal humor,” in Proceedings of the AAAI conference on artificial intelligence, vol. 35, no. 14, 2021, pp. 12 972–12 980.

- [42] J. Pennington, R. Socher, and C. D. Manning, “Glove: Global vectors for word representation,” in Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 2014, pp. 1532–1543.

- [43] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “Bert: Pre-training of deep bidirectional transformers for language understanding,” arXiv preprint arXiv:1810.04805, 2018.

- [44] G. Degottex, J. Kane, T. Drugman, T. Raitio, and S. Scherer, “Covarep—a collaborative voice analysis repository for speech technologies,” in 2014 ieee international conference on acoustics, speech and signal processing (icassp). IEEE, 2014, pp. 960–964.

- [45] S. Mai, Y. Zeng, S. Zheng, and H. Hu, “Hybrid contrastive learning of tri-modal representation for multimodal sentiment analysis,” IEEE Transactions on Affective Computing, 2022.

- [46] X. Chen, M. Cao, H. Wei, Z. Shang, and L. Zhang, “Patient emotion recognition in human computer interaction system based on machine learning method and interactive design theory,” Journal of Medical Imaging and Health Informatics, vol. 11, no. 2, pp. 307–312, 2021.

- [47] A. Zadeh, R. Zellers, E. Pincus, and L.-P. Morency, “Multimodal sentiment intensity analysis in videos: Facial gestures and verbal messages,” IEEE Intelligent Systems, vol. 31, no. 6, pp. 82–88, 2016.

- [48] A. B. Zadeh, P. P. Liang, S. Poria, E. Cambria, and L.-P. Morency, “Multimodal language analysis in the wild: Cmu-mosei dataset and interpretable dynamic fusion graph,” in Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), 2018, pp. 2236–2246.

- [49] C. Busso, M. Bulut, C.-C. Lee, A. Kazemzadeh, E. Mower, S. Kim, J. N. Chang, S. Lee, and S. S. Narayanan, “Iemocap: Interactive emotional dyadic motion capture database,” Language resources and evaluation, vol. 42, pp. 335–359, 2008.

- [50] A. Tampuu, T. Matiisen, D. Kodelja, I. Kuzovkin, K. Korjus, J. Aru, J. Aru, and R. Vicente, “Multiagent cooperation and competition with deep reinforcement learning,” PloS one, vol. 12, no. 4, p. e0172395, 2017.

- [51] T. Baltrušaitis, C. Ahuja, and L.-P. Morency, “Multimodal machine learning: A survey and taxonomy,” IEEE transactions on pattern analysis and machine intelligence, vol. 41, no. 2, pp. 423–443, 2018.

- [52] M. Minsky, The emotion machine: Commonsense thinking, artificial intelligence, and the future of the human mind. Simon and Schuster, 2007.

- [53] L. Gao, L. Qi, E. Chen, and L. Guan, “Discriminative multiple canonical correlation analysis for information fusion,” IEEE Transactions on Image Processing, vol. 27, no. 4, pp. 1951–1965, 2017.

- [54] Q.-S. Sun, S.-G. Zeng, Y. Liu, P.-A. Heng, and D.-S. Xia, “A new method of feature fusion and its application in image recognition,” Pattern Recognition, vol. 38, no. 12, pp. 2437–2448, 2005.

- [55] Z. Lian, L. Chen, L. Sun, B. Liu, and J. Tao, “Gcnet: graph completion network for incomplete multimodal learning in conversation,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [56] B. Xing and I. W. Tsang, “Relational temporal graph reasoning for dual-task dialogue language understanding,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [57] S. Fan, Z. Shen, M. Jiang, B. L. Koenig, M. S. Kankanhalli, and Q. Zhao, “Emotional attention: From eye tracking to computational modeling,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 2, pp. 1682–1699, 2022.

- [58] Z. Zeng, M. Pantic, G. I. Roisman, and T. S. Huang, “A survey of affect recognition methods: audio, visual and spontaneous expressions,” in Proceedings of the 9th international conference on Multimodal interfaces, 2007, pp. 126–133.

- [59] Z. Deng, Z. Fu, L. Wang, Z. Yang, C. Bai, T. Zhou, Z. Wang, and J. Jiang, “False correlation reduction for offline reinforcement learning,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [60] Y. Jiang, C. Li, W. Dai, J. Zou, and H. Xiong, “Variance reduced domain randomization for reinforcement learning with policy gradient,” IEEE Transactions on Pattern Analysis and Machine Intelligence, 2023.

- [61] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.