ifaamas \acmConference[AAMAS ’21]Proc. of the 20th International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2021)May 3–7, 2021London, UKU. Endriss, A. Nowé, F. Dignum, A. Lomuscio (eds.) \copyrightyear2021 \acmYear2021 \acmDOI \acmPrice \acmISBN \acmSubmissionID361 \affiliation \institutionMicrosoft Research \cityBeijing \countryChina \affiliation \institutionBeijing University of Posts and Telecommunications \cityBeijing \countryChina \affiliation \institutionMicrosoft Research \cityBeijing \countryChina \affiliation \institutionHong Kong University of Science and Technology \cityHong Kong \countryChina \affiliation \institutionMicrosoft ARD Incubation Team \cityBeijing \countryChina \affiliation \institutionMicrosoft Research \cityBeijing \countryChina \affiliation \institutionMicrosoft Research \cityBeijing \countryChina

Cooperative Policy Learning with Pre-trained Heterogeneous Observation Representations

Abstract.

Multi-agent reinforcement learning (MARL) has been increasingly explored to learn the cooperative policy towards maximizing a certain global reward. Many existing studies take advantage of graph neural networks (GNN) in MARL to propagate critical collaborative information over the interaction graph, built upon inter-connected agents. Nevertheless, the vanilla GNN approach yields substantial defects in dealing with complex real-world scenarios since the generic message passing mechanism is ineffective between heterogeneous vertices and, moreover, simple message aggregation functions are incapable of accurately modeling the combinational interactions from multiple neighbors. While adopting complex GNN models with more informative message passing and aggregation mechanisms can obviously benefit heterogeneous vertex representations and cooperative policy learning, it could, on the other hand, increase the training difficulty of MARL and demand more intense and direct reward signals compared to the original global reward. To address these challenges, we propose a new cooperative learning framework with pre-trained heterogeneous observation representations. Particularly, we employ an encoder-decoder based graph attention to learn the intricate interactions and heterogeneous representations that can be more easily leveraged by MARL. Moreover, we design a pre-training with local actor-critic algorithm to ease the difficulty in cooperative policy learning. Extensive experiments over real-world scenarios demonstrate that our new approach can significantly outperform existing MARL baselines as well as operational research solutions that are widely-used in industry.

Key words and phrases:

Multi-agent Reinforcement Learning, cooperative policy learning, graph attention network for state representation, pre-training method1. Introduction

Cooperative multi-agent systems (MAS) (Panait and Luke, 2005) have been employed to deal with a wide range of real-world applications, such as large scale fleet management (Lin et al., 2018), empty container repositioning (Li et al., 2019b), and traffic signal control (Wei et al., 2019). Instead of following classical single-agent planning methods, cooperative MAS stands essentially as a more efficient paradigm to resolve the global optimization task through decentralized agents with a relatively-simplified agent-wise problem-solving scheme. Essentially, the success of such cooperative MAS highly relies on its capability to model the cooperation between agents with the purpose of pursuing a maximized global utility. In reality, to bolster cooperation among complex relationships between agents and environmental entities, it is indispensable for each agent to fully perceive the state information about other inter-correlated agents and environmental entities.

To this end, previous studies (Jiang et al., 2020; Malysheva et al., 2019) proposed to model the entire environment with an interaction graph where vertices represent the agents and environmental entities and edges exist when two vertices can interact with each other. And, they leveraged Graph Neural Networks (GNN) (Kipf and Welling, 2017; Veličković et al., 2018; Hamilton et al., 2017) to propagate critical cooperation signals between inter-connected agents within the Multi-Agent Reinforcement Learning (MARL) framework (Hu et al., 1998). However, while the vanilla GNN model can perform well in relatively simple games, two major weaknesses limit its representability to capture the state information through the interaction graph in many complex real-world scenarios.

First, the generic procedure of message propagation in vanilla GNN assumes that the vertices in the interaction graph are homogeneous. In practice, however, the vertices can be heterogeneous, which impedes the efficient message passing due to the incompatible feature spaces between heterogeneous vertices. Furthermore, another weakness lies in simply using pooling function, such as average and maximization, as message aggregation, which may cause significant information loss or the over-smoothing problem. Although more expressive pooling functions such as LSTM have been employed to enhance GNN structures Hamilton et al. (2017), none of them, to our best knowledge, has been applied in MARL scenarios.

Henceforth, it becomes necessary to design a more expressive GNN with more informative message passing and aggregation mechanisms to increase the individual agent’s ability to grasp the complex representations of neighbors’ messages. Such enhancement over GNN, on the other hand, is likely to encounter a dilemma. Specifically, it may lead to drastically-soaring training difficulties, in terms of requiring either stronger training signals or much more experience, for each agent to converge into an effective cooperative policy. However, the signals measuring the accomplishment of the global optimization goals, e.g. the total value of served orders in a ride-sharing platformLin et al. (2018) or total shortage of empty containers in ocean transportation Li et al. (2019b), are influenced by the entangled actions from multiple agents and one agent could hardly evaluate its own actions with limited experiences especially when other agents’ policy is also non-stationary. Merely leveraging such global signals and letting agents learn from scratch will lead to poor sample efficiency and make the training process unbearably slow.

To obtain effective information representation from the heterogeneous interaction graph and learn the cooperative policy for decentralized agents, in this paper, we propose a new multi-agent reinforcement learning framework. Particularly, this framework leverages the graph attention with a delicately designed encoder-decoder structure for efficient message passing and aggregation. Moreover, we introduce a pre-training technology to alleviate the difficulty of learning the cooperative policy with global rewards.

More concretely, to magnify the GNN’s representability in the process of information passing and aggregation, we employ an encoder-decoder based graph attention network (EncGAT). Similar to its previous success in the NLP domain, this approach can consecutively embed a sequence of neighboring vertices into a high-level representation space with a self-attention network and then generate the aggregated feature through a decoder attention network with the feature of the vertex itself as the query, the details of which are demonstrated in Figure 2.

To ease the difficulty in learning cooperative policy for decentralized agents with global rewards, we propose a pre-training with a local actor-critic (PreLAC) algorithm. Specifically, a pre-training phase is designed to learn a selfish policy for each agent by using local rewards. Since local rewards, which are naturally existing in most global optimization scenarios (e.g. regional revenue of ride-sharing Lin et al. (2018) and port-level container shortage Li et al. (2019b)), are mainly affected by the corresponding individual agent and its neighbors, it is much easier to train a selfish policy, specified by a local actor and a local critic with the shared EncGAT layer, for each agent. Following this pre-training, a formal training phase will be carried out, in which decentralized agents with cooperative local actors and a shared global critic will be continuously trained, starting from using the pre-trained EncGAT layer, towards maximizing the global rewards. To avoid parameter interference, local critics are still working in the formal training phase for the parameters updating in EncGAT. Compared to the straightforward approach with a simple combination of global and local rewards, the PreLAC algorithm does not need to manually trade off between convergence and optimality, which can thus result in a more effective cooperative policy with less manual intervention.

To evaluate the effectiveness of our framework, we conduct experiments over a real-world problem, i.e., empty container repositioning, in the scenario of ocean transportation. Extensive experiments have shown that our new framework can significantly outperform either the operations research solution, a widely used industry standard, or existing GNN-powered MARL baselines. Further investigations have also been conducted to demonstrate the advantages of the proposed encoder-decoder attention structure and the introduction of the pre-training task in terms of their effectiveness in accelerating the convergence and improving the ultimate global performance.

Our main contribution can be summarized as follows:

-

•

We propose an encoder-decoder based graph attention (EncGAT) layer for representation learning of the complicate interactions between heterogeneous entities in the MAS with global optimization goal.

-

•

We introduce the pre-training with local actor-critic (PreLAC) algorithm for cooperative policy learning, in which the selfish policy learning with the purpose of local rewards maximization is taken as a pre-trained task and the pre-trained EncGAT parameters will be used for further cooperative policy learning.

-

•

Extensive experiments are conducted on the practical empty container repositioning (ECR) problem and the results demonstrate the advantages of the proposed framework.

In addition to the ECR problem, the proposed EncGAT-PreLAC framework in fact can be applied to a series of global optimization scenarios, where local rewards can be defined. These scenarios include but are not limited to vehicle re-balancing in ride-sharing, traffic control, and even football match.

2. Related Work

Recent years have witnessed the increasing research attention on applying cooperative multi-agent systems into many application scenarios. Previously, traditional operational research (OR) methods were widely used to achieve global optimization in a centralized way (Epstein et al., 2012; Song and Carter, 2009; Song and Dong, 2012). However, the prerequisite of both the forecasting module and artificial constructions of mathematical objectives and constraints gave rise to unsatisfactory performance in practice since OR methods are difficult to be adapted to a dynamic environment. With the recent rapid development of reinforcement learning, a rising number of efforts have turned to formulate MAS into stochastic games and then solve them using the multi-agent reinforcement learning (MARL) approach. There have been a couple of corresponding practices, such as empty container repositioning (Li et al., 2019b), shared bike repositioning (Li et al., 2018; Pan et al., 2019; Ghosh et al., 2017), ride-sharing fleet management (Fagnant and Kockelman, 2018; Lin et al., 2018), traffic light control (Wei et al., 2019), and cloud resource allocation (Wu et al., 2011; Liu et al., 2017).

Yet, in many real-world MAS with complex networks, one critical challenge, particularly in terms of how to feed each agent with the informative representation of a comprehensive observation scope for its decision-maker, has not been fully addressed. Some previous studies simply produced such representation using pre-defined contextual statistics, which has to be coupled with specific scenarios and apparently limits the generality. Most recently, GNN has been increasingly used to learn and enhance the representation of observation scope in the non-Euclidean space. For instance, MAGnet (Malysheva et al., 2019) learns relevance information from the observation in the form of a relevance graph, where relation weights are learned by pre-defined loss function based on heuristic rules. The relation weights in DGN (Jiang et al., 2020) are learned with a temporal regularization under the assumption that the attention of one agent does not change much in two adjacent time steps, which is not always true in practical problems yet. Another work (Agarwal et al., 2020) leverages the inductiveness of graph attention to learn a transferable policy. There have also been recent efforts to use GNN to enhance collaboration in a practical problem called traffic signal control (Wei et al., 2019; Devailly et al., 2020).

However, all the studies above treat the observation as an isomorphic graph, ignoring the heterogeneity of agents and environmental entities commonly existed in real-world scenarios. A most recent work (Sun et al., 2020) exploits the sparsity of interactions between agents and tackles the problem of heterogeneity of agents from different teams. Other than (Sun et al., 2020), in this paper, we focus on the gap of feature space between both agents and environmental entities and design a new graph attention based aggregation approach.

There are also some existing studies paying attention to enhancing the training signal. Counterfactual advantage (Foerster et al., 2018) is used for credit assignment to filtering out contributions of other agents. Cooperative reward mechanism design (Li et al., 2019b) is used to compute the cooperative local reward. Value decomposition (Sunehag et al., 2018; Rashid et al., 2018) is also a hot topic to solve the problem of cooperative MARL with a single joint reward signal. In this paper, we leverage the direct local reward to accelerate the training, but their methods can be integrated into our framework.

In the field of GNN, our work can be viewed as a graph-level training task with vertex-level pre-training. Recently, pre-training GNNs in supervised or semi-supervised tasks have also been studied in several works(Hu* et al., 2020; Navarin et al., 2018), but our pre-training pipeline is quite different from theirs. In addition, there are several studies(Wang et al., 2019) about the heterogeneous graph. However, our proposed networks introduce a different encoder-decoder structured aggregation function. Besides, our work aims to solve the sequential decision-making task, which basically yields a different problem domain other than theirs.

3. Problem Formulation

Heterogeneous interaction graph.

In many practical MAS, agents need to cooperate to achieve a maximized global utility. An interaction graph can be constructed to characterize the interaction between agents, e.g. fleets (Lin et al., 2018) and ports (Li et al., 2019b), and environment entities, e.g. wooden walls (Malysheva et al., 2019). Specifically, the vertices, in the interaction graph, are the agents and environmental entities, while edges stand for their corresponding interactions. Because agents and environmental entities usually yield different types, the interaction graph is essentially a heterogeneous graph. We follow the previous work (Sun and Han, 2013) to formally define the heterogeneous graph.

Definition \thetheorem.

A heterogeneous interaction graph, denoted as , is a graph including a set of vertices and a set of directed edges , where is the set of agents. In , we denote the set of the types of vertices and edges as and , and denote the type of vertex and edge as and . The set of the types of edges that are connected to vertex are denoted as . Then we define the mapping function from the vertex to the set of neighbors that connected by the edges of type as .

MAS with heterogeneous interaction graph.

We formally model the MAS with heterogeneous interaction graph as a Semi-POMDP . More concretely, denotes the aforementioned agent set; denotes the action set specified by the joint action space of all agents, i.e., ; represents the global state that can be defined by a heterogeneous interaction graph comprised of the agent vertices, environmental entity vertices and interaction edges ; represents the set of each agent’s observations, which is the joint observation space, i.e., , where is the observation space of agent and contains the local vertices and interaction edges near the agent; is the global reward function, which is defined as ; note that, in most of MAS, there exists local rewards for each agent111For examples, scores of a football player, orders completed by a driver and shortage of containers at a port., and we can similarly define the local reward function ; is the transition probability function , where is the probability simplex over the next global state and the time interval is non-constant time interval from current state to the next state; is the discount factor on the unit time interval The policy of agent is defined as a function . The joint policy is defined as .

4. Proposed Framework

We introduce our framework based on the multi-agent A2C algorithm for convenience. In fact, our framework can be easily extended to other actor-critic based algorithms. As is shown in Figure 1, the proposed EncGAT-PreLAC framework is composed of two parts: an encoder-decoder graph attention (EncGAT) model and a pre-training procedure with local actor-critic (PreLAC). The EncGAT model is designed to learn the informative representation of the interaction graph, which will be fed into both the actor and critic networks to facilitate learning the cooperative policy. However, introducing the delicate-designed EncGAT model into the A2C algorithm makes the learning process more difficult in MAS. To improve both the learning efficiency and the effectiveness of the policy, we first leverage the local reward to train multiple selfish local actors and critics with the EncGAT model. Then, we initialize the final multiple actors and the global critic with the pre-trained EncGAT and conduct the fine-tuning with the global reward. More details are introduced in the following sub-sections.

4.1. Encoder-Decoder Based Graph Attention for State Representation

As aforementioned, the vertices heterogeneity together with the intricate interactions between them prevents vanilla GNN to effectively learn the informative representations from the complex interaction graph. The EncGAT model is designed to solve these problems.

The encoder-decoder based attention procedure is shown in the figure 2. For vertex (agent) with feature vector , its neighbor set with type is denoted as and the corresponding feature matrix is . The calculation procedure of the encoder is based on self-attention, which is similar to the transformer block and shown below:

| (1) | ||||

where Att is the scaled dot product attention which is the same as that in Transformer Vaswani et al. (2017), , and are the projection matrices of query, key and value in the attention function, and are the square matrices of the linear layers, and are the bias parameters, LN is the layer norm and ReLU is the ReLU activation function. The final embedding is already transformed into the same vector space with in case that is not of type . We the whole encoder process as the function Enc. Through the function Enc, the feature of each neighboring vertex of is represented by considering other neighbors’ information in the Att function.

The aggregation among the same type of neighbors is conducted through the decoder attention as is shown in the left part of the figure 2. In decoder attention, another scaled dot product attention is applied, which uses the feature vector of , i.e. , as the query, and the encoded neighbors’ features matrix as the keys and values. To align the dimension, the feature vector is first expanded as a matrix . The mathematical description is shown as follows:

| (2) | ||||

where , and are the projection matrices of query, key and value in the attention function, and are the square matrices of linear layers and and are the bias parameters. Then we squeeze the expanded dimension of into the vector

Finally, as the neighbors of vertex may have different types, we simply concatenate all the output as the final feature of vertex . The expression is as

4.1.1. Discussion on EncGAT

Indeed, we provide a new perspective on the cooperative information aggregation within a heterogeneous graph through EncGAT. To cooperate with other agents, an agent must perceive the heterogeneous information from neighbors. The success of applying the self-attention architecture in the natural language processing tasks inspires us to leverage the same idea to enforce cooperative information aggregation. For one thing, it enables the agent to parse the contextualized interactions with each individual neighbor given all other neighbors. Moreover, the encoder-decoder attention can eliminate the feature gap between two heterogeneous vertices by mapping them into a unified representation space and reduce the difficulty in cooperative information extraction. The vanilla graph attention in fact can be seen as a simplified case without the encoder, which considers the interaction with each neighbor independently and then aggregates with a simple summation. This can only model the simplest linear combinational interactions of neighbors and will fail to model complex combinational interactions such as the relative order of consumer and producer in the inventory management, as is stated in Section 1. The self-attention we use in the encoder can be seen as weaving a complete graph between the neighbors of the same type and conducting the graph attention in these ‘sub-graphs’. With the aid of self-attention in the encoder, the feature of each neighbor is calculated based on contextualized information from other neighbors such that it can capture the complex reactions in the interactions.

In addition, the encoder-decoder structure we state here differs from the Transformer (Vaswani et al., 2017) in that the decoder in our model only gets a single vector as query, rather than a sequence. Therefore, no self-attention on the query is used in the decoder. The recent work GTR (Li et al., 2019a) uses transformer blocks in graph attention, which directly adds up features of the vertex and the weighted neighborhood in inter-graph message passing. It can be seen as the decoder-only version of EncGAT.

4.2. Pre-Training with Local Actor-Critic

A crucial challenge of training the EncGAT lies in that a direct optimization towards the global reward may lead to insufficient observation understanding, which will slow down the convergence and even erode the performance of the learned cooperative policy. In this paper, we manage to address this problem by proposing the pre-training with the local actor-critic (PreLAC) algorithm.

In the local actor-critic task, each agent is trained to maximize its own interest, that is the discounted sum of its local reward , where is the time-step. It encourages each agent to learn the concrete business logic about how the local reward is generated and how the neighborhood interactions affect the reward generation.

As is shown in figure 1, the EncGAT network is used as a shared embedding module for the actor and critic networks in the pre-training task. It receives the vertex-level training signals from the local actor and local critic headers. The Formula (3) shows the training loss in this stage.

| (3) | ||||

where denotes the local reward of agent at time step , while refers to the interval from current state to the next state, following the Semi-POMDP setting in Section 3; is the local value function under the local policy of ; is the action of agent ; and the hyper-parameter is the weight of the critic loss. As we can see, the policy loss and the critic loss are computed based on the advantage function derived from the local critic. The training follows the vanilla Actor-Critic algorithm, thus the in the third formula are detached in back-propagation and hence will not affect the update of the critic headers.

In the fine-tuning stage, we reinitialize the local actor headers for each agent and create a new global critic header, while the parameters of EncGAT and local critic headers are inherited from the pre-trained part. Different from the pre-training, the global reward is used as a training signal for the local actors’ network (including the shared EncGAT and actor header of each agent) and the global critic network, which encourages cooperation towards the global goal. Meanwhile, to prevent the training collapse and avoid the interference of random initialed parameters, we keep using the local critic loss in this stage to supervising the update of the shared EncGAT part with the agent-level local loss derived from local critics. Therefore, the loss function can be formally defined as the linear combination of three parts: the local critic loss , the global critic loss and the actor loss . That is

| (4) | ||||

where denotes the global reward, represents the global state advantage, is the global value function under the joint policy and the hyper-parameter and are the weights of the local critic loss and the global critic loss. Similar to the pre-training stage, the gradient of the advantage is detached in the training and will not affect the update of the global critic header.

4.3. Algorithm of EncGAT-PreLAC Framework

The whole EncGAT-PreLAC framework can be summarized as Algorithm 1. For simplicity of description, we assume all agents belong to the same type while other vertices in the interaction graph can be heterogeneous in the algorithm. Meanwhile, the actor header and critic header are shared among all agents, which can be overwritten according to actual demand. In addition, our framework supports both the synchronous and asynchronous decision of agents, thus we denote as the set of actionable agents at the time step in both the pre-training and the training procedure.

5. Experiments

We evaluate our framework on the empty container repositioning problem (ECR) in ocean transportation, which cost billions of dollars per year for shipping companies.

5.1. Task Description

Empty containers are the core resource used to load goods in ocean transportation. The serious imbalance of import and export among countries and regions all around the world results in extreme imbalance of supply and demand (SnD) for empty containers. With reposition operation, some ports will stack large amount of containers with high storage cost while some other ports have no containers to satisfy customers’ shipping requirements. Obviously, both cases will cause serious losses for the shipping companies and hinder the global trade. Empty container repositioning is to leverage the remaining capacity in vessels to explicitly transfer empty containers between deficit ports and surplus ports. Each vessel sails around a route and the travel time is influenced by unpredictable factors such as weather and ocean current. When a vessel arrives at a port, it first loads and discharges cargoes, and then triggers the repositioning action of the port to load to or discharge from the vessel a certain amount of empty containers.

We model the ECR problem as a MAS as follows. We assign an agent to each port to manage the containers. When a vessel arrives at the port, the corresponding agent is triggered to make a repositioning decision, which is in fact an event-driven reinforcement learning Menda et al. (2019); Li et al. (2019b). The repositioning action space is where action 0 means discharging all empty containers on the vessel and action 21 means loading all empty containers of the port on board. Other actions are evenly distributed with a step of 10 percent. The observation of an agent is its own information and that of related ports and vessels. The optimization goal is to minimize the total shortage of empty containers to fulfill the transportation needs because of the SnD imbalance among ports, which is, on the contrary, to maximize the amount of the fulfilled demand. Therefore, we define the global reward as a homogeneous linear function of the number of empty containers used to fulfill transportation orders at a certain time period, that is , where is a positive scale factor, is the number of ports and is the quantity of consumed containers in port at the time period . At the same time, the most straightforward definition of the local reward for the port is the value .

To realize the efficient repositioning, the agents should have the ability to extract useful information from the observation on the heterogeneous interaction graph. As the interactions happen when a vessel arrives at a port, the arrival time of the vessels from different routes plays an important role in decision making. For example, suppose there are two routes, the first one is in lack of containers and the second is rich in containers, namely the demand route and supply route. Two vessels from these routes will arrive at a port in particular time steps. Obviously, whether the vessel in the demand route arrives first or second will substantially affect the optimal repositioning decision of this port, e.g, reserving containers for the incoming vessel or using them out. Such a relative order of two vessels actually poses the requirements for our model to learn the complicate interactions among neighbors, which the vanilla pooling functions like averaging and maximizing will fail to capture as we state in Section 1. Another necessary feature for the agents is the cooperation between agents based on agents’ comprehensive understanding of the observations. A common phenomenon is that the demand routes and supply routes are not necessarily connected and agents should learn to play as brokers to conduct the multi-hop repositioning.

5.2. Data and Simulator

To build the simulator for the ECR problem, we employ the public routes of an international transportation company, including 22 ports located in main trading countries, 13 routes connecting these ports, and 46 vessels sailing on the routes. The concrete settings of the route topology are attached in the supplementary material. The shipment data between any two ports is generated based on the inter-country trading statistics released by WTO in 2019. Because only the yearly statistics are available while the temporal resolution of the simulation is daily, we distribute the total transportation amount into each time-step by two alternative rules. The first is the simple average, the game setting of which is called ‘normal’ and the second is to distribute the amount by a trend function, which is the multiplication of two trigonometric functions with different periods, i.e., 112 time-steps and 28 time-steps, the game setting of which is called ‘hard’. The transportation time between two ports is computed by their sea distance. At last, noises are added in both transportation time and trading amount to include the environmental uncertainty in each episode.

5.3. Input and EncGAT implementation

In the ECR problem, there are two kinds of vertices in the interaction graph, ports and vessels denoted as and respectively. We establish edges from one port to other ports and vessels in the same route, which represents the transportation interactions. For the port, the input features include the quantities of the empty containers, the reserved empty containers, the laden, the laden on board, transportation orders, the capacity, and the remaining space. The statistical information is also included such as the quantities of the total orders, the fulfilled orders, the failed orders, and their accumulated values in the past 5 time-steps. The input features of the vessel are 7 variables which are the quantities of the empty containers, the laden, the capacity, the remaining space, the accumulated quantities of empty containers, laden, and their difference. As each agent has to learn to predict trend of the inventory and plan for the future, it can hardly determine the strategy with only the input of the current information. Therefore, we let each agent look back a period. We denote the input features of each vertex over the time period as .

The network structure is shown in Figure 6. It follows the general design of the proposed framework, but we add three features based on the characteristics of the ECR problem. The first one is the temporal attention, which is used to capture the temporal information of the temporally sequential input, is added before the EncGAT module. For example for vertex , the temporal embedding is computed as follows:

| (5) |

where , and are the projection matrices for the query, key and value respectively. In the experiment, the lookback period is set to 20. The second one is that to consider the edge feature like the arriving time and the distance, we concatenate it with the features of the corresponding neighbor before feeding it to EncGAT. The third one is that the representations of both the port and the current vessel are fed into the actor header since the vessel situation is important for decision making.

Finally, we stack two encoder-decoder attentions in the EncGAT and residual connections are used to prevent over-smoothing. The actor and critic headers consist of two fully-connected layers also with residual connections. We share the headers of actor and local critic between agents, which makes the overall framework inductive (Hamilton et al., 2017). Empirical studies show the generality of policy with the parameter sharing, which is illustrated in the supplemental file.

5.4. Comparison with Baselines

| Section | Implementation Details | Simulation Mode | ||

| Normal | Hard | |||

| Baseline | No repositioning | |||

| Online LP | ||||

| DGN in A2C | ||||

| Normal GC | ||||

| PreLAC | Separate actors normal GC | |||

| Ablation | Separate actors PreLAC | |||

| Study | EncGAT | Single decoder attention | ||

| GAT | ||||

| LSTM | ||||

| Our Method (EncGAT-PreLAC) | ||||

In this section, we compare our framework with existing baseline methods with the total fulfillment ratio, that is the ratio of fulfilled demands to all demands, as the evaluation metric. To keep fairness, we search the learning rate for all deep learning algorithms and fix other hyper-parameters. We run 5 times for each one with the best learning rate. The results are shown in Table 1. It should be pointed out that since most of the ports play the roles of exporter and importer at the same time, the total ratio is around 80 percent if no repositioning is done.

Online linear programming (LP). In this method, the ECR problem is modeled as an integer linear programming (ILP) problem with mathematical definitions. Here, we use the baseline method in (Li et al., 2019b), which applies the rolling horizon policy to do periodic planning. It solves the ILP problem for a long period and only takes the first few plans into action, which prevents the deviation of planning from reality. The planning results are largely influenced by the unpredictable noise in the environment, which is the reason why it underperforms our method. Besides, the online computational cost of searching ILP solutions is much larger than our method.

DGN in A2C version. We chose one of the recent GNN based MARL frameworks called DGN as another baseline and adapt their key contributions into the multi-agent A2C framework, i.e., the network structure and temporal relation regularization. DGN directly uses the local reward to train, under the latent assumption that the local reward encourages cooperation, which is not always held in many real-world problems such as ECR. Therefore, we train it with artificially designed local reward, which is a combination of the global and the local reward. As you can see, we also outperform them in both normal and hard mode.

5.5. Ablation Study

The ablation studies are used to answer two questions: (1). Does the encoder-decoder attention in EncGAT help heterogeneous information aggregation and intricate interaction understanding? (2). Does PreLAC improve learning performance of cooperative policy?

Encoder-Decoder Attention. We compare our encoder-decoder attention with alternative aggregation functions, i.e. single decoder attention, GAT (Veličković et al., 2018) and LSTM (Hamilton et al., 2017). The single decoder attention directly puts the neighborhood sequence to the attention function in the decoder network introduced in Section 4.1. In the task of the normal mode, its performance is close to our method, but in the task of hard mode, the performance drops by 2%. The reason is that in the hard mode, the increased noise in the simulation data like the vessels’ sailing time and the quantity of orders requires agents to cooperate against uncertainty, making the interactions more complicated. GAT is different from the single decoder attention in the computation of the attention coefficients. In the original implementation of GAT, the attention coefficients are calculated with a single-layer feedforward network. The result underperforms the dot-product based single decoder attention, which indicates that the way to calculate the attention coefficients may significantly influence the result. We also try the LSTM aggregator introduced in (Hamilton et al., 2017). Although LSTM also has the ability to consider the inter-correlated influence from neighborhood sequence on an agent, the result is relatively poor. We think the reason is that the recurrent structure makes it difficult to learn with weak signals.

Pre-training with local actor-critic. To analyze how the pre-training algorithm improves learning performance, we first train the model directly with the global reward (Normal GC). It yields a poor performance especially in the hard mode, which is nearly the same as no repositioning at all. To further understand the result, we visualize the learned embedding of each port by mapping the output vector after EncGAT to the two-dimension space with principal component analysis(PCA). As is shown in Figure 4 where the data points of the same port are painted in the same color, we see that with the enhancement of pre-training, the data points regularly gather to several clusters compared to the mass without pre-training in the left picture. This indicates that without the local actor-critic task, the graph layers fail to learn distinguishable vertex embeddings, which will puzzle the succeeding actor and critic headers. An interesting experiment is also conducted to see the performance if we do not share the actor header between agents (separate actors normal GC), which leads to a little improvement. The reason is that the separate actor headers learn simple but different policies for agents regardless of the poor embedding input. Finally, we also try not to share actor headers in our framework (separate actors PreLAC). The result shows about a 2% drop in performance, which indicates that the parameter sharing, as a space to share experience between agents, helps train a better policy.

Inductiveness and generality. The inductiveness in the field of GNNs means the ability to generalize to unseen graph structures. Because the EncGAT itself is inductive and we share the actor headers among agents, the policy should be inductive too. Henceforth, we further conduct experiments to analyze the generality of the learned policy when the interaction graph changes, e.g., the changes of the topology and the trading distribution in the ECR problem. In particular, we first merge two ports into one port that takes the roles of the original two ports in their own route. Then, we randomly generate the trading distribution, which is different from the distribution generated from the WTO’s data. We plot the heat-map based on the trading quantity in the right of Figure 5 and compare it with the original one in the left of Figure 5. As the distribution completely differs from the original one, the role each port plays and the interactions between agents are different. The normal and hard modes are also defined in this setting. We test the performance of the model trained on the original setting on this new topology directly. The result in Table 2 shows significant improvements in the fulfillment ratio compared to that with no repositioning.

| Mode | Trained model | No repositioning |

|---|---|---|

| Normal | ||

| Hard |

6. Conclusion and Future Work

We have proposed a GNN based MARL framework, EncGAT-PreLAC, to solve the emerging challenges in practical cooperative MAS. To extract cooperative information from the heterogeneous interaction graph, we leverage the encoder-decoder structure in graph attention to embed heterogeneous features as well as model intricate interactions. To alleviate the training difficulty of GNN under the weak global reward signal, we introduce the pre-training task empowered by the vertex-level local reward. Extensive experiments are conducted to solve a practical problem called ECR and the results show the advantages of our method. Nevertheless, some other challenges remain untouched. Firstly, the credit assignment problem becomes serious in larger-scaled cooperative tasks. It is interesting to combine techniques like different rewards with our EncGAT for a better assignment. Secondly, the interaction information is sometimes unreliable because of either noise or deliberate interference like hacking in real-world problems. How to make cooperation robust to dirty information is practical and promising. We will conduct our work in these two aspects in the future.

7. Appendix

In this section, we will introduce additional experiments and analysis.

7.1. Source code

We attach the source code under the folder ‘code/’. The simulator we use is open-sourced, whose link is ‘github.com/microsoft/maro’. All the configurations of experiments are listed in the YAML file ‘config.yml’ and all the configurations of the ECR simulation are listed in four YAML files, which are ‘normal.yml’, ‘hard.yml’, ‘shuffle_normal.yml’ and ‘shuffle_hard.yml’ corresponding to two modes in the original topology and two modes in the derived topology. To reproduce the result, you should first download the simulator project from the github, subsitute the configuration file of the ECR simulation, then set the ‘model:setting’ field in ‘config.yml’ with the experiment name. For example, if you want to reproduce result of the EncGAT-PreLAC framework in the hard setting of the original topology, you should choose the file ‘hard.yml’ and change the field ‘model:setting’ to ‘encgat’.

7.2. Settings of the Topology

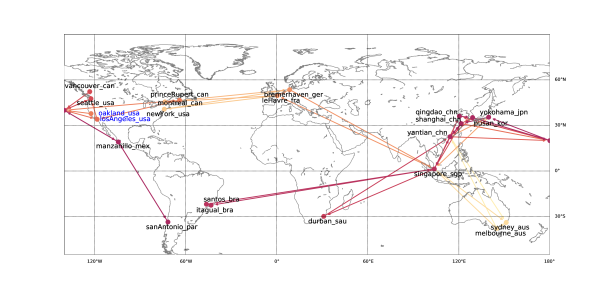

There are ports located in main trading countries, routes and vessels in the transportation topology. The overall topology is shown in Figure 6, where each node is a port and the edges of the same color form a route, whose directions are the sailing order of each vessel in the route. In addition, the two ports painted in blue are the merged ports in the derived topology we introduce in the main text.

As we stated in the main text, the yearly trading amount are distributed into daily resolution by two rules, e.g., the simple average and the trend function. We plot the trading distribution as well as the total amount of transportation orders at each time-step in Figure 7 and Figure 8. Suppose the total amount at the time-step is , transportation amount between port and port are calculated as a random proportion of , as is shown in Formula:

| (6) | ||||

where is the Gaussian distribution and and are the constant defined in the configuration file.

7.3. Experiment Results

The fulfillment curves of each configuration listed in the result table of the main text are shown in Figure 9.

References

- (1)

- Agarwal et al. (2020) Akshat Agarwal, Sumit Kumar, and Katia Sycara. 2020. Learning transferable cooperative behavior in multi-agent team. In Proceedings of the 19th International Conference on Antonomous Agents and Multiagent Sytems.

- Devailly et al. (2020) François-Xavier Devailly, Denis Larocque, and Laurent Charlin. 2020. IG-RL: Inductive Graph Reinforcement Learning for Massive-Scale Traffic Signal Control. arXiv:2003.05738 [cs.LG]

- Epstein et al. (2012) Rafael Epstein, Andres Neely, Andres Weintraub, Fernando Valenzuela, Sergio Hurtado, Guillermo Gonzalez, Alex Beiza, Mauricio Naveas, Florencio Infante, Fernando Alarcon, et al. 2012. A strategic empty container logistics optimization in a major shipping company. Interfaces 42, 1 (2012), 5–16.

- Fagnant and Kockelman (2018) Daniel J Fagnant and Kara M Kockelman. 2018. Dynamic ride-sharing and fleet sizing for a system of shared autonomous vehicles in Austin, Texas. Transportation 45, 1 (2018), 143–158.

- Foerster et al. (2018) Jakob Foerster, Gregory Farquhar, Triantafyllos Afouras, Nantas Nardelli, and Shimon Whiteson. 2018. Counterfactual Multi-Agent Policy Gradients. In Proceedings of the AAAI Conference on Artificial Intelligence.

- Ghosh et al. (2017) Supriyo Ghosh, Pradeep Varakantham, Yossiri Adulyasak, and Patrick Jaillet. 2017. Dynamic repositioning to reduce lost demand in bike sharing systems. Journal of Artificial Intelligence Research 58 (2017), 387–430.

- Hamilton et al. (2017) Will Hamilton, Zhitao Ying, and Jure Leskovec. 2017. Inductive Representation Learning on Large Graphs. In Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.). Curran Associates, Inc., 1024–1034.

- Hu et al. (1998) Junling Hu, Michael P Wellman, et al. 1998. Multiagent reinforcement learning: theoretical framework and an algorithm.. In Proceedings of the 25th International Conference on Machine Learning, Vol. 98. Citeseer, 242–250.

- Hu* et al. (2020) Weihua Hu*, Bowen Liu*, Joseph Gomes, Marinka Zitnik, Percy Liang, Vijay Pande, and Jure Leskovec. 2020. Strategies for Pre-training Graph Neural Networks. In Proceedings of the International Conference on Learning Representations.

- Jiang et al. (2020) Jiechuan Jiang, Chen Dun, and Zongqing Lu. 2020. Graph convolutional reinforcement learning for multi-agent cooperation. In Proceedings of the International Conference on Learning Representations.

- Kipf and Welling (2017) Thomas N Kipf and Max Welling. 2017. Semi-Supervised Classification with Graph Convolutional Networks. In Proceedings of the International Conference on Learning Representations.

- Li et al. (2019b) Xihan Li, Jia Zhang, Jiang Bian, Yunhai Tong, and Tie-Yan Liu. 2019b. A cooperative multi-agent reinforcement learning framework for resource balancing in complex logistics network. In Proceedings of the 18th International Conference on Autonomous Agents and MultiAgent Systems. International Foundation for Autonomous Agents and Multiagent Systems, 980–988.

- Li et al. (2019a) Yuan Li, Xiaodan Liang, Zhiting Hu, Yinbo Chen, and Eric P. Xing. 2019a. Graph Transformer. https://openreview.net/forum?id=HJei-2RcK7

- Li et al. (2018) Yexin Li, Yu Zheng, and Qiang Yang. 2018. Dynamic bike reposition: A spatio-temporal reinforcement learning approach. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 1724–1733.

- Lin et al. (2018) Kaixiang Lin, Renyu Zhao, Zhe Xu, and Jiayu Zhou. 2018. Efficient large-scale fleet management via multi-agent deep reinforcement learning. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 1774–1783.

- Liu et al. (2017) Ning Liu, Zhe Li, Jielong Xu, Zhiyuan Xu, Sheng Lin, Qinru Qiu, Jian Tang, and Yanzhi Wang. 2017. A hierarchical framework of cloud resource allocation and power management using deep reinforcement learning. In 2017 IEEE 37th International Conference on Distributed Computing Systems (ICDCS). IEEE, 372–382.

- Malysheva et al. (2019) Aleksandra Malysheva, Daniel Kudenko, and Aleksei Shpilman. 2019. MAGNet: Multi-agent Graph Network for Deep Multi-agent Reinforcement Learning. In 2019 XVI International Symposium” Problems of Redundancy in Information and Control Systems”(REDUNDANCY). IEEE, 171–176.

- Menda et al. (2019) Kunal Menda, Yi-Chun Chen, Justin Grana, James W. Bono, Brendan D. Tracey, Mykel J. Kochenderfer, and David Wolpert. 2019. Deep Reinforcement Learning for Event-Driven Multi-Agent Decision Processes. IEEE Transactions on Intelligent Transportation Systems 20, 4 (Apr 2019), 1259–1268. https://doi.org/10.1109/tits.2018.2848264

- Navarin et al. (2018) Nicolò Navarin, Dinh V. Tran, and Alessandro Sperduti. 2018. Pre-training Graph Neural Networks with Kernels. arXiv:1811.06930 [cs.LG]

- Pan et al. (2019) Ling Pan, Qingpeng Cai, Zhixuan Fang, Pingzhong Tang, and Longbo Huang. 2019. A deep reinforcement learning framework for rebalancing dockless bike sharing systems. In Proceedings of the AAAI conference on Artificial Intelligence, Vol. 33. 1393–1400.

- Panait and Luke (2005) Liviu Panait and Sean Luke. 2005. Cooperative Multi-Agent Learning: The State of the Art. Autonomous Agents and Multi-Agent Systems 11, 3 (2005), 387–434. https://doi.org/10.1007/s10458-005-2631-2

- Rashid et al. (2018) Tabish Rashid, Mikayel Samvelyan, Christian Schroeder, Gregory Farquhar, Jakob Foerster, and Shimon Whiteson. 2018. QMIX: Monotonic Value Function Factorisation for Deep Multi-Agent Reinforcement Learning. In Proceedings of the 35th International Conference on Machine Learning. 4295–4304.

- Song and Carter (2009) Dong-Ping Song and Jonathan Carter. 2009. Empty container repositioning in liner shipping. Maritime Policy & Management 36, 4 (2009), 291–307.

- Song and Dong (2012) Dong-Ping Song and Jing-Xin Dong. 2012. Cargo routing and empty container repositioning in multiple shipping service routes. Transportation Research Part B: Methodological 46, 10 (2012), 1556 – 1575. https://doi.org/10.1016/j.trb.2012.08.003

- Sun et al. (2020) Chuangchuang Sun, Macheng Shen, and Jonathan P How. 2020. Scaling Up Multiagent Reinforcement Learning for Robotic Systems: Learn an Adaptive Sparse Communication Graph. arXiv preprint arXiv:2003.01040 (2020).

- Sun and Han (2013) Yizhou Sun and Jiawei Han. 2013. Mining Heterogeneous Information Networks: A Structural Analysis Approach. SIGKDD Explor. Newsl. 14, 2 (April 2013), 20–28. https://doi.org/10.1145/2481244.2481248

- Sunehag et al. (2018) Peter Sunehag, Guy Lever, Audrunas Gruslys, Wojciech Marian Czarnecki, Vinicius Zambaldi, Max Jaderberg, Marc Lanctot, Nicolas Sonnerat, Joel Z Leibo, Karl Tuyls, et al. 2018. Value-decomposition networks for cooperative multi-agent learning based on team reward. In Proceedings of the 17th international conference on autonomous agents and multiagent systems. International Foundation for Autonomous Agents and Multiagent Systems, 2085–2087.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Ł ukasz Kaiser, and Illia Polosukhin. 2017. Attention is All you Need. In Advances in Neural Information Processing Systems, I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett (Eds.). Curran Associates, Inc., 5998–6008.

- Veličković et al. (2018) Petar Veličković, Guillem Cucurull, Arantxa Casanova, Adriana Romero, Pietro Lio, and Yoshua Bengio. 2018. Graph attention networks. In Proceedings of the International Conference on Learning Representations.

- Wang et al. (2019) Xiao Wang, Houye Ji, Chuan Shi, Bai Wang, Yanfang Ye, Peng Cui, and Philip S Yu. 2019. Heterogeneous graph attention network. In The World Wide Web Conference. 2022–2032.

- Wei et al. (2019) Hua Wei, Nan Xu, Huichu Zhang, Guanjie Zheng, Xinshi Zang, Chacha Chen, Weinan Zhang, Yanmin Zhu, Kai Xu, and Zhenhui Li. 2019. Colight: Learning network-level cooperation for traffic signal control. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management. 1913–1922.

- Wu et al. (2011) Jun Wu, Xin Xu, Pengcheng Zhang, and Chunming Liu. 2011. A novel multi-agent reinforcement learning approach for job scheduling in grid computing. Future Generation Computer Systems 27, 5 (2011), 430–439.