remarkRemark \newsiamremarkhypothesisHypothesis \newsiamthmclaimClaim \headersStructure-preserving Nonlinear Filtering: CG & DGVidhi Zala, Robert M. Kirby, and Akil Narayan \externaldocumentSupplementDocs

Convex Optimization-Based Structure-Preserving Filter for Multidimensional Finite Element Simulations]Structure-preserving convex optimization for multidimensional finite element simulations††thanks: Accepted by editors (JCP) 07/08/2023.

Abstract

In simulation sciences, capturing the real-world problem features as accurately as possible is desirable. Methods popular for scientific simulations such as the finite element method (FEM) and finite volume method (FVM) use piecewise polynomials to approximate various characteristics of a problem, such as the concentration profile and the temperature distribution across the domain. Polynomials are prone to creating artifacts such as Gibbs oscillations while capturing a complex profile. An efficient and accurate approach must be applied to deal with such inconsistencies to obtain accurate simulations. This often entails dealing with negative values for the concentration of chemicals, exceeding a percentage value over 100, and other such problems. We consider these inconsistencies in the context of partial differential equations (PDEs). We propose an innovative filter based on convex optimization to deal with the inconsistencies observed in polynomial-based simulations. In two or three spatial dimensions, additional complexities are involved in solving the problems related to structure preservation. We present the construction and application of a structure-preserving filter with a focus on multidimensional PDEs. Methods used such as the Barycentric interpolation for polynomial evaluation at arbitrary points in the domain and an optimized root-finder to identify points of interest, improve the filter efficiency, usability, and robustness. Lastly, we present numerical experiments in 2D and 3D using discontinuous Galerkin formulation and demonstrate the filter’s efficacy to preserve the desired structure. As a real-world application, implementation of the mathematical biology model involving platelet aggregation and blood coagulation has been reviewed and the issues around FEM implementation of the model are resolved by applying the proposed structure-preserving filter.

1 Introduction

A widely used application of mathematical modeling is creating simulations used to predict and analyze different processes. Simulations obtained from numerical solutions provide physically meaningful results if the values of simulation variables at each timestep follow the structure of the exact solution. Structure in this context refers to properties such as non-negative values for a chemical concentration or simulating a quantity requiring an upper bound, e.g., [0,1], and other such aspects of the numerical solution. If the solution fails to comply with these structural restrictions, the resulting solution is not meaningful. Therefore, designing an approach to regulating the solution is crucial for simulations in many applications. Examples include thermodynamics, numerical weather predictions, bio-chemical processes such as platelet aggregation and blood coagulation, and fluid flow problems.

Computing solutions to mathematical models described in multiple dimensions further adds to the complexity of solving a structure-preservation problem. To implement multidimensional models using numerical methods, we require approximations of the quantities of interest in the domain. Popular choices for numerical methods such as the finite element method (FEM) and the finite volume method (FVM) employ piecewise polynomial-based approximations. These approximations are prone to artifacts such as the Gibbs phenomenon, leading to a violation of structure. We focus on the FEM implementation for the advection-diffusion-reaction (ADR) problem and design an algorithm that computes a solution at each timestep adhering to a particular structure (e.g., positivity and monotonicity). The nonphysical values from such simulations may result in a propagation of nonphysical values, which often cause a blow up, resulting in the failure of the entire simulation. For example, when the concentration values are expected to be bound within but the numerical solution produces a value greater than one, even a small change in the next timestep causes a rapid compounding of the solution.

The problem of structure preservation has been well studied in different fields for applications to various partial differential equations (PDEs) and using different numerical approaches. The next section provides a brief overview of some existing methods proposed to solve the structure-preservation problem.

1.1 Review

Many sophisticated approaches have been considered in the literature to tackle different aspects of the structure-preservation problem. We make a broad classification into ‘intrusive’ and ‘nonintrusive’ classes of solutions. Many popular approaches generally involve changing either the solution or the PDE and require the imposition of stringent conditions on solver parameters, such as the step-size of the numerical method or the domain shape and granularity. Such methods can be classified as intrusive. On the other hand, some methods constrain the solution obtained from the solver with minimal, often superficial, change to the numerical scheme, i.e., in a nonintrusive way.

Prominent among the intrusive class of approaches is the transformation of structure preservation into a PDE-constrained optimization problem, methods that modify the spatial discretization [30], and limiters derived from Karush-Kahan-Tucker (KKT) optimality conditions [29]. Another example of the intrusive approach [21] proposes an operator-splitting positivity-preservation method based on the energy dissipation law.

The strategies for constraint satisfaction in [3] consider a version of the problem that is a specialization of the one solved by the solution proposed in this manuscript, which falls under the nonintrusive class. In [2, 1] and extensions [3, 23], the authors explore approaches for positivity preservation on the basis functions derived from Bernstein polynomials such that they are non-negative and possess a partition of unity property. Therefore, the interpolated solution respects the original bounds at any point in the domain, nonintrusively. Other nonintrusive methods prescribe solutions such as limiters or truncation and modification of the domain (e.g., curvilinear mesh adaptation) to adhere to the desired solution structures. One such method is established in [4] where the authors present a new approach for multi-material arbitrary Lagrangian–Eulerian (ALE) hydrodynamics simulations based on high-order finite elements posed on high-order curvilinear meshes in which conservative fields are remapped and additional synchronization steps are introduced for structural preservation. Additionally, many nonintrusive methods perform pre- or postprocessing of intermediate solutions without modifying the underlying problem to incorporate the constraints or making changes to the domain. Some nonintrusive approaches to structure preservation can be found in [20, 34, 25, 22, 27]. In [33], the authors present a positivity-preserving limiter by maintaining cell averages within a certain tolerance. The work in [16] imposes positivity on a discrete grid for kinetic equations via a nonlinear filtering procedure. Satisfying constraints up to a numerical tolerance is sufficient for many applications. However some applications require the solution to adhere to a strict non-negative structure. For example, in [13], the authors enforce strict, global non-negativity of the diffusion propagator by formulating constraints specific to the propagator model. The algorithm in [28] employs alternating least squares procedures to implement general linear equality and inequality constraints by reducing it to a non-negativity-constrained least squares (NNLS) problem. The non-negativity constraints based on square root representations have also been of interest in many other works [14, 10]. We propose a nonintrusive method based on a general filtering approach in [31, 32].

Many of the existing techniques prescribe approaches to preserve the structure that work only for low-order problems. Additionally, an increase in the dimensionality of the problem further exacerbates the issue of accuracy and convergence. Many approaches struggle to maintain high-order accuracy and convergence robustly, including the filter’s ability to preserve structure without any additional dependence or restrictions imposed on a per-case basis. This includes problem-specific modifications as well as varying solutions based on other aspects of the problem. For example, [15, 26] presents redistribution-based local and global repair methods depending on a sweep through cells to locate and rectify the violating structures. The final solution depends on the sweep order, producing different solutions for different sweep orders. In addition, this repair procedure preserves element-averaged bound/positivity constraints at particular finite difference nodes. It does not preserve them pointwise, e.g., at every spatial location. The proposed method tries to preserve the constraints at every spatial location. An ideal approach would achieve a balance between robust structure preservation, maintaining accuracy as well as convergence, and providing usability by deterministic termination within an acceptable time.

1.2 Contribution

We pose the problem of preserving the structure in polynomial-based numerical methods as a convex optimization problem. Our previous works discuss a filtering algorithm to solve this problem and demonstrate the solution for function approximations [31] and PDE solutions in 1D [32]. The robust design of the problem enables the preservation of different properties of the solution simultaneously (e.g., positivity, monotonicity, and boundedness). It transforms the problem into a composite constraint satisfaction problem that can be solved by convex minimization. To address this problem, we propose a filter that can be applied as a postprocessing step to deal with structural inconsistencies. The novel idea in the design of the filter is the efficiency achieved by applying the filter only to the violating regions in a subdomain and structure-preservation continuously throughout the domain, and not just a subset of points within the domain. This guarantee of structure-preservation depends on the granularity of the global minimization procedure in 2D and 3D, which is expensive and less reliable compared to its 1D counterpart. In 1D, the minimum can be found accurately using the confederate matrix approach to find zeros of the derivative of a polynomial. For finding the critical points in higher dimensions, an efficient gradient-based method is required which solves the nonconvex problem of finding the global minimum. In the case of the proposed filter, the global minimum represents a structure violation. Gradient-based methods are prone to get stuck at a local minimum, causing it to miss the point of larger structural inconsistency, thus failing to provide strong guarantees of preservation. Another important aspect that affects the outcome of the gradient-based method is the choice of a starting point. To address this aspect, we propose an investigation of structural inconsistencies on a lattice of points as the initial step in the gradient-based method. The point with the most inconsistency is chosen as a starting point. The accuracy of the minimum found depends on the pattern and granularity of the initial lattice used, which is detailed in Section 2.1.

To streamline the minima-finding stage and reduce the time and space complexity, we adopt the Barycentric interpolation approach from [6], expanded upon by [17]. Using these techniques, a considerable speed-up in time taken by minimization and overall filter computations is achieved.

This paper focuses on designing a structure-preserving filter using a convex optimization approach for 2D and 3D problems on different element types. We will present the formulation derived from [31] in Section 2 and extend the concepts to more complex problems tackled in this paper. The remainder of the paper is organized as follows: Section 2 discusses the setup for applications of the filter to time-dependent PDEs in 2D and 3D. Section 3 introduces the notations, details the design of different building blocks of the filter, and summarizes the 1D problem formulation from [31, 32]. A procedure for the filtering process developed for multidimensional applications is presented in Section 4. Section 5 describes the numerical results to demonstrate the filter’s efficacy in preserving the desired structures in different application scenarios. This section is divided into subsections describing the process of choosing the parameters to run the experiments, the 2D and 3D canonical experiments, and an advection problem on different homogeneous meshes using the discontinuous Galerkin (dG) formulation. We conclude with a demonstration of the use of the proposed filter on a real-world application: the model of platelet aggregation and blood coagulation problem.

2 Setup

In this section, we discuss the setup for filter applications to function approximations and time-dependent PDEs to solve an advection problem using the FEM with method-of-lines discretizations with a focus on the dG formulation. The choice of advection problem is for illustration purposes only. The setup remains the same for any time-dependent PDE solution using a polynomial-based method. The filter behaves as a postprocessing step that preserves the desired structure at each timestep within a defined tolerance and is agnostic to the numerical method used to obtain the solutions. In all our experiments, we consider the numerical zero to be . The idea behind this choice is that in all the positivity preserving experiments the gradient-based method stagnates when the difference in minimum found by two consecutive iterations dip below . In such a situation, there are no significant changes to the interpolation coefficients between iterations of the filter, and therefore, the convergence is considerably slow. Certain variations of the filter as investigated in [31, Section 5.1.2] can improve the rate of convergence below a particular tolerance. Section 5 shows numerical examples in higher dimensions using the same setup on element types such as triangle, quadrilateral, hexahedron, tetrahedron, prism, and meshes comprised of these element types.

2.1 Detecting and resolving structural inconsistency in 2D and 3D

If the polynomial projections lose structural conformity, they are likely to do so at or between the quadrature points. We hope to capture the structural inconsistencies by checking the values on a lattice of quadrature points and the centroids formed by adjacent quadrature points. Here, the arbitrary choice of the centroids is one of the many possible options to choose the lattice. A quadrilateral is used as a sample element to describe the setup; however, the same applies to any canonical element type in 2D and 3D. Let us call such a lattice of points . For a quadrilateral, let represent the number of quadrature points in one dimension. is defined by a combination of the quadrature grid on the quadrilateral (total points and centroids formed by the midpoints of the quadrature grid) as defined in Equation 2.1.1.

| (2.1.1) |

For a triangle, we can follow the same idea of combining the quadrature points and the centroids to construct the lattice. An example lattice on a mesh with quadrilaterals and triangles is shown in Figure 2.1.1.

Consider a function in 2D defined by Equation 2.1.2.

| (2.1.2) |

where

Projecting Equation 2.1.2 using polynomial order on a quadrilateral in the domain , we get the coefficients . The projection is shown in the left subfigure of Figure 2.1.2. In this case, , where is dimension. Since , . The original function is non-negative, so the projection should preserve positivity. To this end, we employ the optimization outlined in Section 4 as a postprocessing filter. The process behaves as a spectral filter based on the empirical evidence presented in [31, Section 5.2] and further theoretical explanation provided in [31, Proof 5.1]. We will hereafter refer to this optimization as a nonlinear filter (). Let be the filtered version of . The filtered projection is shown in the right subfigure of Figure 2.1.2.

2.2 Solution to advection problem in dG

The advection equation for a quantity described by a scalar field is expressed mathematically by a continuity equation Equation 2.2.1 in the domain .

| (2.2.1) |

where represents the velocity of advection. Assume proper initial and boundary conditions are specified.

Galerkin-type methods assume an ansatz for as a time-varying element of a fixed -dimensional linear subspace that contains the polynomial functions for a fixed degree , where frequently .

| (2.2.2) |

where , and represents the traditional FEM basis, which is not necessarily orthogonal. Let represent a collection of orthonormal basis functions We can transform the vector of coefficients into its orthonormal form .

Consider a partition of consisting of subdomains defined by . The discontinuous Galerkin formulation assumes that is comprised of functions that are polynomials of a fixed degree on each element on ; where discontinuities in derivatives are allowed only at partition boundaries. The semidiscrete form for Equation 2.2.1 is derived in the standard Galerkin way by using the ansatz Equation 2.2.2 and forcing the residual to be -orthogonal to . Usually, integration by parts is performed in the residual orthogonalization step, and often depending on the equation and spatial discretization, numerical flux and/or stabilization terms are included in the weak formulation.

The result is a system of ordinary differential equations prescribing time-evolution of the discrete degrees of freedom represented by vector

| (2.2.3) |

where are the mass and advection matrices, respectively, defined as

and F is a general term to compensate for any numerical fluxes or stabilization terms. Finally, Equation 2.2.3 is transformed into a fully discrete system that can be solved using an appropriate numerical integration scheme.

Consider the case when the solution defined by on a particular partition at a timestep does not satisfy the desired structural properties. To preserve the structure, we employ the optimization defined in Equation 3.1.2 as a postprocessing filter. Let represent the filtered solution that satisfies the structure. The process can be illustrated in Equation 2.2.4. The proposed filter works by simply augmenting this scheme nonintrusively.

| (2.2.4) |

Since DG discretizations have degrees of freedom that are decoupled across the subdomains, the total degrees of freedom in the optimization step need to be the only ones over the subdomain. In addition, the optimization needs to be applied only in the subdomains that have structural violations discovered at the lattice points, which leads to a parallelizable set of independent optimizations each in dimensions. We can filter the selected subdomains simultaneously, which further adds to the procedure’s efficiency.

For a mesh with the number of elements , the process of enforcing positivity per element leads to changes in solution properties; in particular, elementwise boundary values and the mean of the discrete solution are not preserved. Assume that numerical fluxes are computed as explicit functions of element boundary values. A shift in element boundary values by the filter causes changes in the corresponding fluxes, thus adding errors to the simulation. We can resolve this issue by imposing additional equality constraints (i.e., function values at element boundaries) in the filter. However, for the flux conservation, we cannot make an assertion that the resulting filtered solutions should satisfy the original flux/jump conditions or some updated conditions, based on the new filtered basis coefficients. In the case of mass (integral) conservation, additional equality constraints can be imposed. The values of fluxes are often used to ensure the properties of numerical schemes therefore by preserving fluxes one can attest that the properties of the original scheme have been retained. Preserving fluxes in this context corresponds to corrections in one element need not have an impact on neighboring elements, and one way to promote isolation of the optimization effect to the element under consideration is to ensure that interelement communication through fluxes is undisturbed. The formulation for flux conservation in [32, Section 3.2 and 3.3] is unique to one-dimensional scenarios. Generalization to higher dimensions is not immediate. Additional analysis is needed to incorporate different elements and, therefore, beyond the scope of this paper. The robust design of the filter allows for the incorporation of an arbitrary number of structural constraints into one optimization problem. However, increasing the number of constraints reduces the degrees of freedom for the filter accordingly. In particular, if the filter has degrees of freedom and we have linear equality constraints, then the dimension of the optimization problem can be reduced to using the procedure described in [32, Section 3.2].

3 Formulation of structure-preservation problem and solution design

This section establishes the notations and summarizes formulation, filter design, and implementation ideas from [31, 32].

3.1 The problem

Let be a function, where is a finite-dimensional subspace of a Hilbert space of real-valued functions on . Let V contain a collection of orthonormal basis functions for some .

Therefore, we have

where is the inner product on , and , the Kronecker delta function.

The value of is set to 1 for simplicity. The formulation follows the same steps for higher dimensions. A comprehensive constraint-satisfaction problem can be constructed as detailed in this section.

Let be the collection of that satisfies the constraints. Therefore, is the feasible subset of . An example of such a family of linear constraints is {positivity, boundedness}. Consider a family of linear constraints such as the one in Equation 3.1.1, where is a linear operator bounded on , and is a function on the domain .

| (3.1.1) |

Note that the framework in [31] allows a finite number of such constraints to be considered simultaneously.

According to the Riesz representation theorem, if is a dual of , then a functional can be associated with a unique V-representor satisfying

Furthermore, this identification is an isometry. We will use these facts in what follows. Given that identifies , we consider the coordinates of in a -orthonormal basis,

Then we have the following relations:

where is the Euclidean norm on vectors in .

The problem is essentially that of developing a map from a function , to a unique function . Initially, may not satisfy the structural constraints but its mapped version does. In [31] we introduce a strategy to solve the following well-posed convex feasibility problem.

| (3.1.2) |

Under certain conditions, such as if , is norm-contractive; therefore, the operation can be called a filter. For a brief discussion of the norm-contractive nature of Equation 3.1.2, see [31, Proposition 5.1].

3.2 Toward construction of a convex optimization-based solution to Equation 3.1.2

We first transform the continuous problem Equation 3.1.2 to a discrete version to construct a feasible solution. Let be a collection of orthonormal basis functions in , different from the standard FEM basis (), which are not orthonormal.

Consider as the affine conic region in corresponding to . From previously established notations, is a vector of expansion coefficients of that does not satisfy that desired constraints, and is a vector of filtered coefficients of that satisfies the desired constraints. Representing the Euclidean 2-norm on vectors as , and the fact that , we get the equivalent of the optimization problem Equation 3.1.2 as follows:

| (3.2.1) |

For a fixed , let be a -dimensional planar surface defined by an equality constraint corresponding to Equation 3.1.1 for a fixed . Therefore, . Writing as an expansion in degrees of freedom that corresponds to a single linear equality constraint for the , i.e., a -dimensional plane. In a geometric sense, is a surface representing the constraint boundary in the space , dividing it into two hyperspaces as shown in Figure 3.2.1. One hyperspace represents the region that satisfies a linear inequality constraint and the other that does not. For a single linear constraint, can be defined in terms of hyperspaces as Equation 3.1.1.

To obtain the full feasible set , the filter iteratively projects onto the intersection of feasible halfspaces defined by individual hyperplanes . To describe the feasible regions further, let us consider a geometric interpretation. Let the current state vector of the projection defined by be a point shown by the blue dot in Figure 3.2.1. The filter works by applying correction Equation 3.2.7 to by computationally inspecting the signed distance function Equation 3.2.4.

| (3.2.4) |

For the positivity-preservation example, the process of “inspection” means the ability to compute the global minimum of to determine a region where is negative. Based on this inspection, the algorithm projects the state vector of the current iterate onto for some . This projection can cause a particular with a positive to go negative. Therefore, we need to repeat the projection step until the solution lies completely in (or on the boundary of) . [31, Algorithm 1] summarizes all the steps of the filter.

At each iteration, the spatial point is found that minimizes the signed distance function given by Equation 3.2.5. Note that for more than one constraint we need to keep track of and the constraint specific to the minimum signed distance function.

| (3.2.5) |

This greedy procedure is an infinite-constraint generalization of Motzkin’s relaxation method [24]. The major computational work in the filtering procedure is to minimize the objective defined by Equation 3.2.6 at each iteration.

| (3.2.6) |

Next, update by projecting it onto the hyperplane corresponding to :

| (3.2.7) |

where is the normal vector corresponding to hyperplane of a single constraint family that points toward . This vector is readily computable from the orthonormal basis, see [31] for details. This procedure is repeated until vanishes to within a numerical tolerance.

Finding the violating hyperplane that is farthest away corresponds to finding the global minimum of on . Unlike the 1D procedure described in [31], multidimensional global minimization requires a more expensive approach such as gradient descent (GD). In this paper, we implement 2D and 3D global minimization using line-search-based GD with backtracking. We further provide a strategy to investigate the times taken for convergence and the accuracy of this GD approach by varying the values of the parameters. Then, we choose the best values for the parameters for the accuracy of the method. Section 4.1 provides details of the GD variant chosen for filter implementation and corresponding parameter selection to efficiently solve the minimization problem.

4 Algorithm

The structure of the solution is preserved by applying the filter as a postprocessing step that corrects obtained from the timestepping algorithm using iterative optimization. Each iteration of the filter involves the following crucial computational processes:

-

(1)

A GD-based minimization to solve Equation 3.2.5, which is a part of the global search for structural violations.

-

(2)

A calculation of the correction to using Equation 3.2.7

We briefly discuss the algorithmic details and the choices made to streamline the processes and their applications to the PDE timestepping.

4.1 Finding the global minimum

An important and the most expensive part of the optimization process is the global minimization Equation 3.2.5. For efficiency and usability of the filter, we need to fine-tune the most expensive step, i.e., minimizing the scaled distance function Equation 3.2.6, which quantifies the difference between the current state of the coefficients and the coefficients that satisfy the constraints at a critical point of the current iteration. The success of the proposed filter depends on accurately finding the points in the domain that violate the structure. Since the problem formulation uses dG, it can be solved element by element on a domain with elements. Applying the filter at each timestep is essentially solving optimization problems at every timestep. Each optimization problem is over a single element. We can reduce the number of optimization problems by testing the solution for structural inconsistencies at a lattice of points defined by Equation 2.1.1. For example, in the case of positivity preservation, if the minimum value of an element is nonpositive, the filter is applied to that element.

In the 1D case, the filter finds the minimum value by evaluating the solution at the critical points on the element. If the subspace restricted to an element is a space of polynomials, then the critical points of Equation 3.2.6 are defined by the roots of the derivative of Equation 3.2.6. For the root-finding problem, we employ the confederate matrix-generation approach. Relative to 1D, minimization in higher dimensions is more complex and expensive. Many variants of the gradient descent-based (GD-based) methods have been proposed to perform this operation efficiently and accurately. These methods operate only up to a certain tolerance and, like all the GD-based methods, have the potential for stagnation.

Since there is no multidimensional version of the confederate linearization approach that exactly solves the first-order optimality conditions, the problem of finding the critical points on the multidimensional domain is nonconvex. Therefore, we cannot ensure that all such points will be flagged for correction by any particular minimization algorithm. From our analysis, one suitable GD approach for this job is to use adaptive descent size and the ability to retrace the steps. For this reason, we choose the method of steepest descent with a backtracking line search strategy. The backtracking line search starts with a relatively large step size and repeatedly shrinks it by a factor until the Armijo–Goldstein condition Equation 4.1.1 is fulfilled. This widely used algorithm makes an informed choice about step size and direction at each iteration to efficiently arrive at the optimum value. It avoids stagnation by tracing back its steps if the difference between descent values falls below a fixed tolerance. An essential part of this algorithm is choosing the parameters denoted by and that determine the step size and backtracking performed by the algorithm. For an objective function , with a starting position , given parameters and the Armijo–Goldstein condition is defined as Equation 4.1.1.

| (4.1.1) |

where is the slope in the direction of descent and is calculated as

and play a vital role in testing the condition [5], which determines whether a step-wise movement from a current position to a modified position achieves an adequately corresponding decrease in the objective function. Although many recommendations exist [5, 11, 12, 7, 8] for an optimal way to choose and , these parameters must be tuned for maximum efficiency.

4.2 Applying the structure-preserving filter to obtained from the timestepper

From the discussion in Section 2, the optimization that enforces a constrained solution is applied as a postprocessing part inside the timestepper, independent of the choice of the timestepping routine. As predicted by the ‘curse of dimensionality’, structure preservation and optimization for higher dimensional problems are more complex than their 1D counterparts. Since the central idea of the filter is agnostic to the dimensions of the problem, the Algorithm 1 remains the same as described in [31]. Similarly, the applications to PDE solutions follow the procedures established in [32] for 1D problems.

Conversion from an input coefficient vector to corresponding coefficients in an orthonormal basis is the first stage of the filtering procedure. Algorithm 1 presents a summary of steps taken by the PDE solver for the filter application.

A significant chunk of work in the iterative correction stage is done by evaluating the solution at the lattice points using basis interpolation, an expensive operation that involves processing and storage of large interpolation matrices. To make the processing computationally efficient, we use the Barycentric interpolation method from [6], expanded upon by [17]. This method reduces the number of calculations and storage required to evaluate the basis functions at arbitrary points in the domain, thereby reducing the cost of the iterative correction stage. Although the implementation details of Barycentric interpolation method are beyond the scope of this paper, for the minimization part of the numerical experiments in Section 5, we observe a significant speed-up using the Barycentric approach.

5 Main results

This section presents four-part numerical results of the filter’s application to different types of problems in 2D and 3D. Since applying multiple constraints together can be reduced to one optimization problem on a smaller solution space, the numerical results presented here focus on positivity preservation. For 1D examples that preserve multiple structural constraints simultaneously, refer to the results presented in [32].

Section 5.1 details the process to choose the parameter values for the GD line search algorithm described in Section 4.1. Section 5.2 shows the results of applying the filter to a single-element function projection in multiple dimensions. Section 5.3 presents the results and analysis of the application of the filter using the dG formulation of the advection problems defined on composite and homogeneous domains, respectively. Finally, in Section 5.6 we present the results of the FEM solution to the mathematical-biology problem of platelet aggregation and blood coagulation (PAC) with and without the application of the filter. The PAC model is posed as an advection-diffusion-reaction (ADR) system of PDEs.

5.1 Parameter selection for backtracking line search used in GD-based minimization

To fix the values for and Equation 4.1.1 for the numerical results in Section 5, we first choose a set of functions. The choice of the functions depends on factors such as the constraint type and the domain geometry. For example, for an application where positivity is the primary structural concern, a list of functions with values close to zero is a good choice. For applications involving discontinuities in the function or the domain, discontinuous functions would be a good choice. The feasible space for and is . A non-comprehensive sampling on is performed for both parameters, and the GD is called with the parameters set to permutations of the sampled values. GD_linesearch denotes the call to the GD with line search. As its output, the GD_linesearch routine provides the number of iterations (denoted by ) taken by the algorithm. The error refers to the difference between the minimum value found and the known minimum value.

As a large number of GD iterations per filter iteration makes the filtering process more expensive, choosing the parameter values for which GD converges in the least number of iterations is desirable. Another important quantity to consider here is the error. It is desirable to have GD return with a minimum value as close to the known (or golden) minimum value as possible. With these quantities ( and ) as the metrics, we prescribe a procedure to select the ideal value of and in Algorithm A.1 [Appendix Appendix A]. The selection criteria narrow the samples to a range of and with the minimal number of iterations and then pick from that subset the ones with the smallest error. In the case of a tie, all the and values are included in the return variables. The procedure can be summarized as taking a list of functions that fairly represent the complexity of space and returning two ranges of ideal values: one for each of the parameters and , respectively. Since the quadrilateral and hexahedron represent richer space than their other canonical 2D and 3D counterparts, Algorithm A.1 [Appendix Appendix A] is applied and analyzed only on a quadrilateral for 2D and a hexahedron for 3D.

Future developments in the approaches for finding optima in multiple dimensions may improve the efficacy and efficiency of the filter. To this end and to provide robustness, we employ the object-oriented principle by modularizing the minimization part of the filter.

We consider different example functions Section 5.1 on the 2D domain and Section 5.1 on the 3D domain , which have varying complexities and projection orders to set the GD parameters comprehensively and fairly.

| (5.1.1) |

| (5.1.2) |

Using the orthogonal basis for Equation 3.2.7, is obtained, which is then used by Algorithm A.1 to find the values of parameters and . For functions that have an exact analytical minimum, calculating the error between the exact minimum and the optimum returned by the GD is straightforward. For the discontinuous functions such as and , an approximate golden minimum is calculated by projecting the function using polynomial order on a dense grid of points in and finding the least value of the projected polynomial. In all our test cases, GD converges to the exact (or golden) minimum within an acceptable tolerance (numerical 0 set to ). Therefore, the selection of the parameters is made based on the fewest iterations taken by GD () to converge.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/3d78a816-7649-4c24-a1e0-472b41bda109/Table_fig.png)

Consider discrete equispaced samples of . For , by applying Algorithm A.1, we get the results shown in Table 5.1.1.

Based on the results of these tests, we infer that for the numerical experiments, the appropriate choices for and in 2D are 0.7 and 0.7, respectively. Following a similar procedure, Algorithm A.1 on a hexahedron for functions defined in Section 5.1, the choices of and for 3D are 0.2 and 0.7, respectively. These values are used for all the numerical experiments in the numerical results.

The C++ implementation of the filter in Nektar++ [9] supports change in and as parameters in the configuration file.

5.2 Projection examples on 2D and 3D elements and application of the structure-preserving filter

Consider a 2D function Equation 5.2.1 and a 3D function Equation 5.2.2 that are both discontinuous clamped versions of a smooth sinusoidal function. The initial projections of on a quadrilateral and on a hexahedron are shown in Figure 5.2.1. The discontinuity produces oscillations similar to Gibb’s phenomenon upon projection, which is interesting for the application and analysis of the filter.

| (5.2.1) |

where

| (5.2.2) |

where

It is evident from the left subfigures in Figure 5.2.1 that the projection of discontinuous non-negative functions leads to the negative values as shown in the highlighted regions. As seen in the right subfigures of Figure 5.2.1, the application of the structure-preserving filter restores the non-negative structures of Equation 5.2.1 and Equation 5.2.2, respectively. To analyze the p-convergence, the experiment is repeated for different polynomial orders, as shown in Figure 5.2.2.

Figure 5.2.2 presents error comparison between the filtered and unfiltered version of projections of nonsmooth functions in 2D and 3D. The choice of a nonsmooth function is to demonstrate the effect of filter application in worst-case scenarios.

5.3 Structure preservation in 2D and 3D advection problems

Consider a dG solution to the advection problem Equation 5.3.1 on a 2D domain as shown in the first row of Figure 5.3.1.

| (5.3.1) |

Consider a function defined on as Equation 5.3.2.

| (5.3.2) |

For a given initial condition to be , , and the periodic boundary conditions, we formulate the problem in the discontinuous Galerkin framework. The first step is projecting on a set of elements using the typical nonorthogonal (hats and bubbles) basis . Locally, for an element , we have

where is the mesh shown in Figure 5.3.1 consisting of a mix of quadrilaterals and triangles. The filter steps do not change for different element types as emphasized by the choice of composite mesh.

The solution for the advection problem using the timestep , polynomial order 4, RK-4 integration scheme, and upwind flux calculations is shown in Figure 5.3.1. Row 2 of the figure shows the simulation state at a particular timestep and highlights the negative values in the domain. In row 3 the non-negative structure is restored after the application of the filter,

The filter is applied at each timestep to preserve the structure (positivity) of the solution on a lattice of points of interest. The lattice is a set of points defined in Equation 2.1.1. If a structure violation is found at any point in the lattice, the parent element is flagged for filtering. Since the boundaries are periodic, the final state of the simulation looks similar to the initial state.

For a similar analysis of the 3D advection problem, consider the initial state as a smooth continuous function Equation 5.3.3 on a cube mesh of 64 hexahedron elements defined in the domain . The advection velocity is defined by a , and all the boundary conditions are periodic. The integration method used is RK-4 with a timestep of . The timestepping is performed for a total of 2000 timesteps and the flux calculation is upwind.

Following a similar selection procedure as in 2D, we discover the elements that violate the structure at each timestep and apply the structure-preserving filter to those elements.

| (5.3.3) |

Figure 5.3.2 shows an instance during the advection process where a loss of structure is encountered, i.e., negative intermediate values are found, as shown by the highlighted region in the left and middle subfigures of Figure 5.3.2.

The process of discovering the structurally nonconformal elements and application of the filter to those elements adds to the total time cost of the advection solution, which is an important aspect to consider when deciding on the criteria for applying the filter.

The percentage increase in total time taken by the experiment by the application of structure-preserving filter is shown in Figure 5.3.3. Note that for 2D, at low orders (N=2,3,4), the cost of filtering is between 50-70 of the total time. This is due, in part, to the efficiency at these orders of the unfiltered solver such as caching effects, as well as up-front costs associated with the optimization. We find that for 2D the ratio of filtered to unfiltered is lowest at N=5, and then proceeds to climb linearly as shown in the left subfigure of Figure 5.3.3. The time taken by the filter in 3D is notably higher than the time taken by the filter in 2D because of the higher complexity of finding the global minimum in 3D. The cost of overall filtering per timestep depends on the number of elements that the filter operates upon. Therefore, the procedure of selective application of the filter becomes increasingly important.

5.4 Structure preservation of the canonical rotating solid body test

We now investigate the application of the filter on the solid body rotation experiment using a discontinuous initial condition defined as a combination of a notched cylinder, a cone, and a smooth hump. Consider the initial data shown in Figure 5.4.1. This example is extensively used in advection and structure preservation literature [19]. The parameters to reproduce the initial state: the cylinder, cone, and hump have a radius of 0.3 each. The centers of the cylinder, cone, and hump are (0, 0.5), (0,-0.5), and (-0.6,0) respectively. Without changing the domain and boundary conditions used for the 2D advection experiment shown in Figure 5.3.1, the advection velocity is changed to circular such that in one time period, the solution returns to its original state. For all tests in this section, the domain has 144 quadrilateral elements and the timestep is .

Since the initial condition for the experiment is defined by a complicated discontinuous function, the solution suffers from a larger loss of structure as compared to the case with a smooth initial condition.

Figure 5.4.2 shows the initial and final states during rotation of the body shown in Figure 5.4.1, with and without the filter application.

Figures 5.4.3 and 5.4.4 show different cross sections of the solution with and without filtering at the initial and final time. The total time taken by the filter as a function of the entire simulation time is shown in Figure 5.4.5.

As evident from the top left panel in Figure 5.4.5, the filter takes proportionally longer to restore the structure as the loss of structure increases. In many practical applications, such as the one presented in Section 5.6, we observe that paying the extra filtering cost results in the successful termination of the simulation, which otherwise fails due to the presence of structural inconsistencies. In such cases, the tradeoff between the time cost vs guarantee of structure preservation leans towards the latter. The filtered and unfiltered errors after one rotation of the solid body are shown in the top right panel of Figure 5.4.5. Whereas filtering changes the final state of the solution, thus contributing to the errors, the difference between filtered and unfiltered errors is still comparable. The filtered version of the tests using different polynomial order follows the same p-convergence as the unfiltered counterpart. A comparison between the number of times the filter is invoked and the number of iterations taken per invocation is shown in the bottom left panel of Figure 5.4.5. We notice that the number of iterations taken per call to the filter increases with the increase in polynomial order because of the larger magnitude of structural inconsistencies observed as a result of the oscillations in the solution. The largest contributor to the cost of applying the filter occurs from the GD line-search to find the global minimum. At each iteration of the filter, we find the minimum. Each call to find the minimum, in turn, takes a few iterations of GD. On the bottom right panel of Figure 5.4.5 is a comparison between the average number of GD iterations per iteration of the filter. Also shown is the average number of filter iterations per call to the filter.

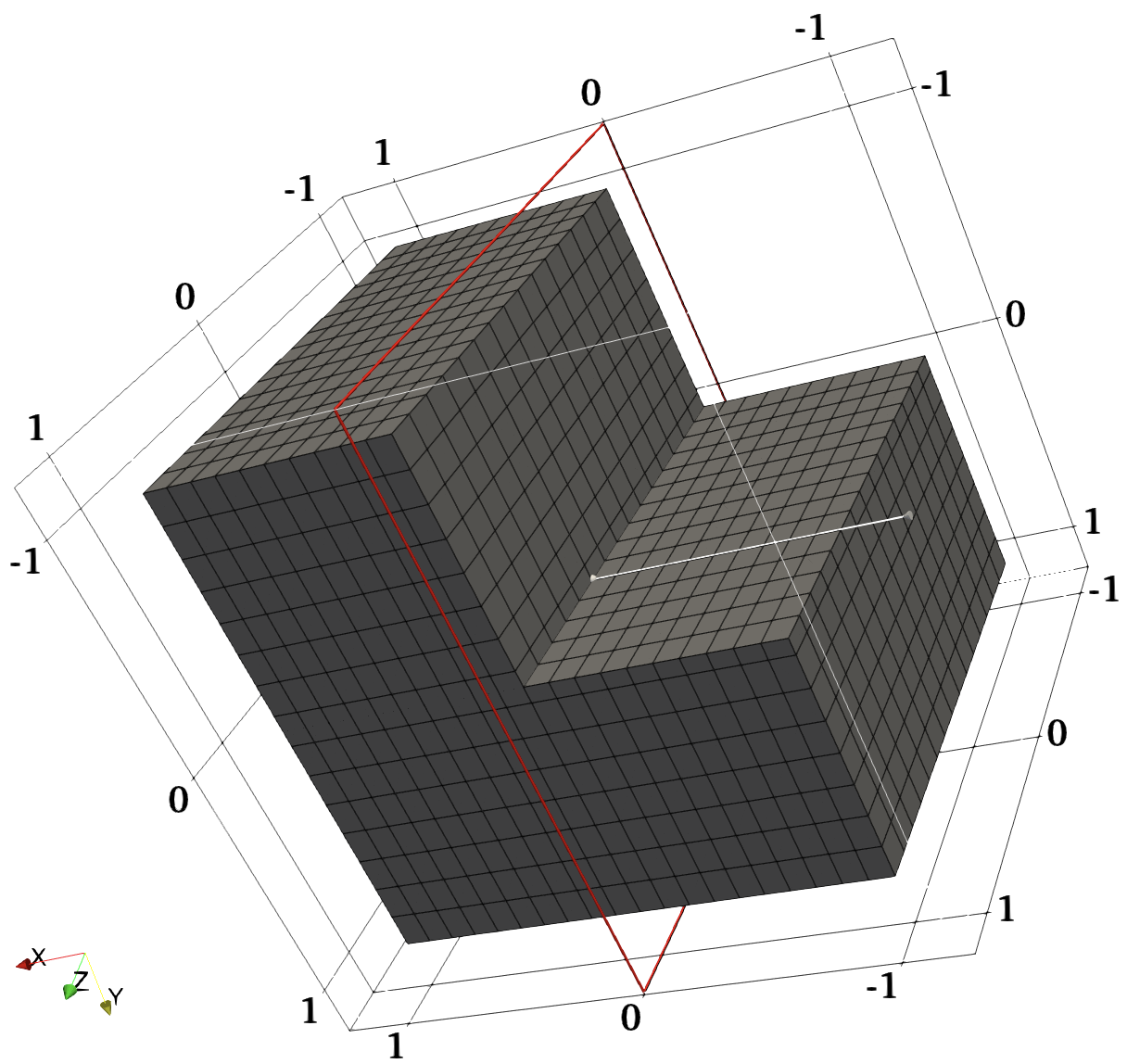

5.5 Structure preservation in advection tests on various 3D domains

The procedure to filter the elements that exhibit loss of structure remains agnostic to the type of element. To demonstrate this, consider different tesselations of the domain and solve an advection problem with the nontrivial initial state Equation 5.5.1. The setup remains the same as the previous 3D example, i.e., a in all directions, periodic boundaries, RK-4 integration scheme using timestep , and for a total of 2000 timesteps.

| (5.5.1) |

The initial state looks like a torus inside a cube. The advection experiment is repeated on different homogeneous meshes with element types hexahedra, tetrahedra, and prisms individually. Figure 5.5.1 shows the results before and after the filter application at a particular timestep for all these meshes.

The analysis of the filtering effects on the accuracy and convergence of the advection process, especially on the meshes with hexahedron and prism elements, reveals that the difference between the errors is too small to warrant a convergence comparison. However, for the mesh with tetrahedral elements, the errors perceptibly vary at each timestep, as shown by a longer run of the same experiment (total 4000 timesteps) in Figure 5.5.2.

5.6 Filter application to dG FEM implementation of the PAC model

A prominent scenario in which the problem of structure-preservation is encountered is in the mathematical-biology domain. A detailed mathematical model for platelet aggregation and blood coagulation (PAC) by [18] is considered for this experiment. The model describes the process of evolution, interaction, and decay of the chemical species involved in the process of thrombosis.

Although the details of the individual species in the PAC process are beyond the scope of this paper, [18] has a detailed explanation of the nature and the evolution of the chemical species. The model can be summarized as a comprehensive collection of ODEs and PDEs that track all the chemical species in various phases during the process. The results in [18] use a finite-difference method with a specified limiter to truncate nonpositive concentration values to zero. In order to implement this model using the FEM without loss of structure, an alternative approach to truncation, such as the one presented in this paper, is needed. For our experiments, we use the structure-preserving filter instead of a truncation limiter. The focus is limited to the system of equations to track the chemical species that advect, diffuse, and react. One such species is fluid-phase thrombin (FP ), an essential component of the thrombosis process. For this section, we track the evolution of thrombin by advection, diffusion, and decay in the fluid-phase. For the detailed results of the evolution of all the chemical species, including thrombin, under various circumstances in the original model, refer to [18].

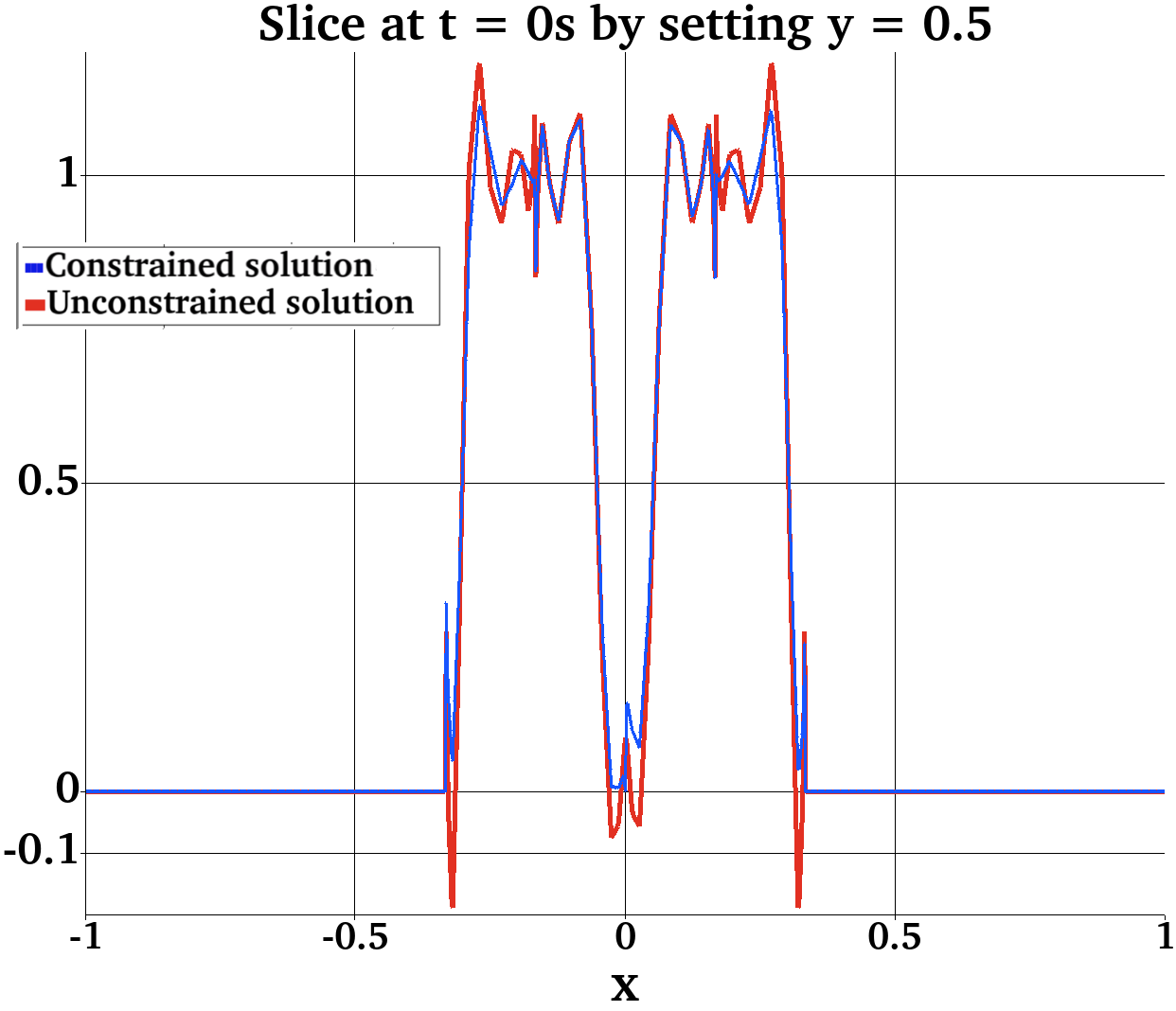

We set up a version of the PAC model that solves the species evolution problem by a combination of advection, diffusion, and reaction (ADR) PDEs. Unlike the original model, the velocity of the fluid medium is not reported by a modified Navier-Stokes solver. Instead, the velocity is constant . To solve this system of PDEs on a sample blood vessel represented by a rectangular domain , we employ the dG FEM. The domain is tessellated using 2048 quadrilaterals. The bottom wall has an injury site spanning from to . We use polynomial order and timestep of to solve the problem.

The total number of species tracked in our implementation of the model is 56. Since the focus is on the behavior of thrombin, we present the PDE describing the evolution of fluid-phase thrombin (FP ) Equation 5.6.1. For details on the other chemical species involved in Equation 5.6.1, refer to [18]. The boundary conditions vary depending on the chemical being tracked. For FP , we have a Dirichlet zero boundary on the left, and a no-flux boundary on the right and top. The bottom boundary has two kinds of conditions: a robin boundary condition on the injury site and Dirichlet zero everywhere else.

| (5.6.1) |

Figure 5.6.1 shows filtered and unfiltered runs of our implementation of this model and the resulting concentration profile of FP . At timestep = 2, we get the invalid (negative) values around the injury site because of the sharp concentration changes (refer to the slice at in the bottom left part of Figure 5.6.1). Therefore, the fluid phase thrombin concentration does not adhere to the desired structure (positivity), and the simulation does not succeed. If this experiment is allowed to continue, the effect of invalid values will compound over time, rendering the simulation results nonphysical and useless. At timestep 38, the simulation starts becoming unstable because of structural discrepancies. If it is allowed to run further, the accumulation and propagation of negative values results in the simulation blow-up at timestep 87, at which point the code reports invalid values such as NaN (datatype: Not a Number).

When the filter is applied, it runs for significantly more timesteps (total 674) and terminates naturally. The right side of Figure 5.6.1 shows the state of FP at the depletion point of the chemicals involved.

6 Conclusions

We present a formalism that solves the problem of structure-preservation for PDE solutions in 2D and 3D. The construction and design of a postprocessing structure-preserving filter are detailed and applied to multidimensional dG FEM solutions to different PDEs. A geometric interpretation of the mathematical foundation behind the filter is presented, followed by an algorithm to apply the proposed filter in a timestepping PDE framework. At the core of the filter lies the expensive requirement for global minimization on a weighted distance function that corresponds to the objective we optimize. We employ gradient descent with backtracking line search for the minimization to reduce the cost of the filtering routine. To this end, we detail an investigative procedure to reduce the minimization cost by precomputing specific parameter values for the gradient descent approach. Using numerical examples, we compare the convergence rates with and without the application of the filter for different problem sizes and domain structures. The percentage increase in time taken by the simulation is computed to understand the cost of the filter and it is observed that the cost scales with the order and size of the problem. In the end, using a filtered solution to a mathematical-biology problem of platelet aggregation and blood coagulation, we provide evidence of the proposed method’s efficacy and utility. One future direction for investigation could attempt to understand when inter-element flux preservation is beneficial in these types of numerical simulations. For example, comparing two filtered solutions, one with and one without flux preservation, could form the basis for more experiments.

Acknowledgments

V. Zala and R.M. Kirby acknowledge that their part of this research was sponsored by ARL under cooperative agreement number W911NF-12-2-0023. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of ARL or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for Government purposes notwithstanding any copyright notation herein. A. Narayan was partially supported by NSF DMS-1848508. This material is based upon work supported by both the National Science Foundation under Grant No. DMS-1439786 and the Simons Foundation Institute Grant Award ID 507536 while A. Narayan was in residence at the Institute for Computational and Experimental Research in Mathematics in Providence, RI, during the Spring 2020 semester.

References

- [1] L. Allen and R. C. Kirby, Bounds-constrained polynomial approximation using the bernstein basis, Numerische Mathematik, 152 (2022), pp. 101–126.

- [2] R. Anderson, V. Dobrev, T. Kolev, D. Kuzmin, M. Q. de Luna, R. Rieben, and V. Tomov, High-order local maximum principle preserving (mpp) discontinuous galerkin finite element method for the transport equation, Journal of Computational Physics, 334 (2017), pp. 102–124.

- [3] R. W. Anderson, V. A. Dobrev, T. V. Kolev, and R. N. Rieben, Monotonicity in high-order curvilinear finite element arbitrary lagrangian–eulerian remap, International Journal for Numerical Methods in Fluids, 77 (2015), pp. 249–273.

- [4] R. W. Anderson, V. A. Dobrev, T. V. Kolev, R. N. Rieben, and V. Z. Tomov, High-order multi-material ale hydrodynamics, SIAM Journal on Scientific Computing, 40 (2018), pp. B32–B58.

- [5] L. Armijo, Minimization of functions having Lipschitz continuous first partial derivatives., Pacific Journal of Mathematics, 16 (1966), pp. 1 – 3.

- [6] J.-P. Berrut and L. N. Trefethen, Barycentric lagrange interpolation, SIAM Review, 46 (2004), pp. 501–517.

- [7] D. P. Bertsekas, Nonlinear programming, Journal of the Operational Research Society, 48 (1997), pp. 334–334.

- [8] S. Boyd, S. P. Boyd, and L. Vandenberghe, Convex optimization, Cambridge university press, 2004.

- [9] C. D. Cantwell, D. Moxey, A. Comerford, A. Bolis, G. Rocco, G. Mengaldo, D. De Grazia, S. Yakovlev, J.-E. Lombard, D. Ekelschot, et al., Nektar++: An open-source spectral/hp element framework, Computer physics communications, 192 (2015), pp. 205–219.

- [10] J. Cheng, R. Deriche, T. Jiang, D. Shen, and P.-T. Yap, Non-negative spherical deconvolution (nnsd) for estimation of fiber orientation distribution function in single-/multi-shell diffusion mri, NeuroImage, 101 (2014), pp. 750–764.

- [11] J. B. Crockett, H. Chernoff, et al., Gradient methods of maximization., Pacific Journal of Mathematics, 5 (1955), pp. 33–50.

- [12] H. B. Curry, The method of steepest descent for non-linear minimization problems, Quarterly of Applied Mathematics, 2 (1944), pp. 258–261.

- [13] T. Dela Haije, E. Özarslan, and A. Feragen, Enforcing necessary non-negativity constraints for common diffusion mri models using sum of squares programming, NeuroImage, 209 (2020), p. 116405.

- [14] A. Goh, C. Lenglet, P. M. Thompson, and R. Vidal, A nonparametric riemannian framework for processing high angular resolution diffusion images and its applications to odf-based morphometry, NeuroImage, 56 (2011), pp. 1181–1201.

- [15] M. Kucharik, M. Shashkov, and B. Wendroff, An efficient linearity-and-bound-preserving remapping method, Journal of computational physics, 188 (2003), pp. 462–471.

- [16] M. P. Laiu, C. D. Hauck, R. G. McClarren, D. P. O’Leary, and A. L. Tits, Positive Filtered P$_n$ Moment Closures for Linear Kinetic Equations, SIAM Journal on Numerical Analysis, 54 (2016), pp. 3214–3238.

- [17] E. Laughton, V. Zala, A. Narayan, R. M. Kirby, and D. Moxey, Fast barycentric-based evaluation over spectral/hp elements, Journal of Scientific Computing, 90 (2022), p. 78.

- [18] K. Leiderman and A. L. Fogelson, Grow with the flow: a spatial–temporal model of platelet deposition and blood coagulation under flow, Mathematical Medicine and Biology: a journal of the IMA, 28 (2011), pp. 47–84.

- [19] R. J. Leveque, High-resolution conservative algorithms for advection in incompressible flow, SIAM Journal on Numerical Analysis, 33 (1996), pp. 627–665.

- [20] D. Light and D. Durran, Preserving nonnegativity in discontinuous galerkin approximations to scalar transport via truncation and mass aware rescaling (tmar), Monthly Weather Review, 144 (2016), pp. 4771–4786.

- [21] C. Liu, C. Wang, and Y. Wang, A structure-preserving, operator splitting scheme for reaction-diffusion equations with detailed balance, Journal of Computational Physics, 436 (2021), p. 110253.

- [22] X.-D. Liu and S. Osher, Nonoscillatory high order accurate self-similar maximum principle satisfying shock capturing schemes i, SIAM Journal on Numerical Analysis, 33 (1996), pp. 760–779.

- [23] C. Lohmann, D. Kuzmin, J. N. Shadid, and S. Mabuza, Flux-corrected transport algorithms for continuous galerkin methods based on high order bernstein finite elements, Journal of Computational Physics, 344 (2017), pp. 151–186.

- [24] T. S. Motzkin and I. J. Schoenberg, The Relaxation Method for Linear Inequalities, Canadian Journal of Mathematics, 6 (1954), pp. 393–404. Publisher: Cambridge University Press.

- [25] R. Sanders, A third-order accurate variation nonexpansive difference scheme for single nonlinear conservation laws, Mathematics of Computation, 51 (1988), pp. 535–558.

- [26] M. Shashkov and B. Wendroff, The repair paradigm and application to conservation laws, Journal of Computational Physics, 198 (2004), pp. 265–277.

- [27] C.-W. Shu and S. Osher, Efficient implementation of essentially non-oscillatory shock-capturing schemes, Journal of computational physics, 77 (1988), pp. 439–471.

- [28] M. H. Van Benthem and M. R. Keenan, Fast algorithm for the solution of large-scale non-negativity-constrained least squares problems, Journal of Chemometrics: A Journal of the Chemometrics Society, 18 (2004), pp. 441–450.

- [29] J. J. van der Vegt, Y. Xia, and Y. Xu, Positivity preserving limiters for time-implicit higher order accurate discontinuous galerkin discretizations, SIAM journal on scientific computing, 41 (2019), pp. A2037–A2063.

- [30] M. J. Zahr and P.-O. Persson, An optimization based discontinuous galerkin approach for high-order accurate shock tracking, in 2018 AIAA Aerospace Sciences Meeting, 2018, p. 0063.

- [31] V. Zala, R. M. Kirby, and A. Narayan, Structure-preserving function approximation via convex optimization, SIAM Journal on Scientific Computing, 42 (2020), pp. A3006–A3029.

- [32] V. Zala, R. M. Kirby, and A. Narayan, Structure-preserving nonlinear filtering for continuous and discontinuous galerkin spectral/hp element methods, SIAM Journal on Scientific Computing (Under review), (2020).

- [33] X. Zhang, On positivity-preserving high order discontinuous galerkin schemes for compressible navier–stokes equations, Journal of Computational Physics, 328 (2017), pp. 301–343.

- [34] X. Zhang and C.-W. Shu, On maximum-principle-satisfying high order schemes for scalar conservation laws, Journal of Computational Physics, 229 (2010), pp. 3091 – 3120.

Appendix A

The parameter selection algorithm for backtracking line search used in GD-based minimization. Section 5.1 and Section 5.1 define the set of functions used in the selection process.

Appendix B

Additional results for experiment described in Section 5.4 for polynomial orders 3 and 7 are attached below: