Convergence rates for the joint solution of inverse problems with compressed sensing data

Abstract

Compressed sensing (CS) is a powerful tool for reducing the amount of data to be collected while maintaining high spatial resolution. Such techniques work well in practice and at the same time are supported by solid theory. Standard CS results assume measurements to be made directly on the targeted signal. In many practical applications, however, CS information can only be taken from indirect data related to the original signal by an additional forward operator. If inverting the forward operator is ill-posed, then existing CS theory is not applicable. In this paper, we address this issue and present two joint reconstruction approaches, namely relaxed co-regularization and strict co-regularization, for CS from indirect data. As main results, we derive error estimates for recovering and . In particular, we derive a linear convergence rate in the norm for the latter. To obtain these results, solutions are required to satisfy a source condition and the CS measurement operator is required to satisfy a restricted injectivity condition. We further show that these conditions are not only sufficient but even necessary to obtain linear convergence.

Keywords: Compressed sensing from indirect data, joint recovery, inverse problems, regularization, convergence rate, sparse recovery

1 Introduction

Compressed sensing (CS) allows to significantly reduce the amount of measurements while keeping high spatial resolution [4, 7, 9]. In mathematical terms, CS requires recovering a targeted signal from data . Here is the CS measurement operator, , are Hilbert spaces and is the unknown data perturbation with . CS theory shows that even when the measurement operator is severely under-determinated one can derive linear error estimates for the CS reconstruction . Such results can be derived uniformly for all sparse assuming the restricted isometry property (RIP) requiring that for sufficiently sparse elements [5]. The RIP is known to be satisfied with high probability for a wide range of random matrices [1]. Under a restricted injecticity condition, related results for elements satisfying a range condition are derived in [11, 12]. In [10] a strong form of the source condition has been shown to be sufficient and necessary for the uniqueness of minimization. In [12] it is shown that the RIP implies the source condition and the restricted injectivity for all sufficiently sparse elements.

1.1 Problem formulation

In many applications, CS measurements can only be made on indirect data instead of the targeted signal , where is the forward operator coming from a specific application at hand. For example, in computed tomography, the forward operator is the Radon transform, and in microscopy, the forward operator is a convolution operator. The problem of recovering from CS measurements of indirect data becomes

| (1.1) |

where is the CS measurement operator. In this paper we study the stable solution of (1.1).

The naive reconstruction approach is a single-step approach to consider (1.1) as standard CS problem with the composite measurement operator . However, CS recovery conditions (such as the RIP) are not expected to hold for the composite operator due to the ill-posedness of the operator . As an alternative one may use a two-step approach where one first solves the CS problem of recovering and afterwards inverts the operator equation of the inverse problem. Apart from the additional effort, both recovery problems need to be regularized and the risk of error propagation is high. Moreover, recovering from sparsity alone suffers from increased non-uniqueness if .

1.2 Proposed co-regularization

In order to overcome the drawbacks of the single-step and the two-step approach, we introduce two joint reconstruction methods for solving (1.1) using a weighted norm (defined in (2.1)) addressing the CS part and variational regularization with an additional penalty for addressing the inverse problem part. More precisely, we study the following two regularization approaches.

-

(a)

Strict co-regularization: Here we construct a regularized solution pair with by minimizing

(1.2) where is a regularization parameter. This is equivalent to minimizing under the strict constraint .

-

(b)

Relaxed co-regularization: Here we relax the constraint by adding a penalty and construct a regularized solution by minimizing

(1.3) The relaxed version in particular allows some defect between and .

Under standard assumptions, both the strict and the relaxed version provide convergent regularization methods [18].

1.3 Main results

As main results of this paper, under the parameter choice , we derive the linear convergence rates (see Theorems 2.8, 2.7)

where is the Bregman distance with respect to and (see Definition 2.1) for strict as well as for relaxed co-regularization. In order to archive these results, we assume a restricted injectivity condition for and source conditions for and . These above error estimates are optimal in the sense that they cannot be improved even in the cases where , which corresponds to an inverse problem only, or the case where (1.1) is a standard CS problem on direct data.

As further main result we derive converse results, showing that the source condition and the restricted injectivity condition are also necessary to obtain linear convergence rates (see Theorem 3.4).

We note that our results and analysis closely follow [11, 12], where the source condition and restricted injectivity are shown to be necessary and sufficient for linear convergence of -regularization for CS on direct data. In that context, one considers CS as a particular instance of an inverse problem under a sparsity prior using variational regularization with an -penalty (that is, -regularization). Error estimates in the norm distance for -regularization based on the source condition have been first derived in [14] and strengthened in [11]. In the finite dimensional setting, the source condition (under a different name) for -regularization has been used previously in [10]. For some more recent development of -regularization and source conditions see [8].

Further note that for the direct CS problem where is the identity operator and if we take the regularizer , then the strict co-regularization reduces to the well known elastic net regression model [19]. Closely following the work [11], error estimates for elastic net regularization have been derived in [13]. Finally, we note that another interesting line of research in the context of co-regularization would be the derivation of error estimates under the RIP. While we expect this to be possible, such an analysis is beyond the scope of this work.

2 Linear convergence rates

Throughout this paper and denote separable Hilbert spaces with inner product and norm . Moreover we make the following assumptions.

Assumption A.

-

(A.1)

is linear and bounded.

-

(A.2)

is linear and bounded.

-

(A.3)

is proper, convex and wlsc.

-

(A.4)

is a countable index set.

-

(A.5)

is an orthonormal basis (ONB) for .

-

(A.6)

for some .

-

(A.7)

.

Recall that is wlsc (weakly lower semi-continuous) if for all weakly converging to . We write for the range of and

for the support of with respect to . A signal is sparse if . The weighted -norm with weights is defined by

| (2.1) |

We have . For a finite subset of indices , we write

| (2.2) | |||

| (2.3) | |||

| (2.4) | |||

| (2.5) |

Because is an ONB, every has the basis representation . Finally, denotes the standard operator norm.

2.1 Auxiliary estimates

One main ingredient for our results are error estimates for general variational regularization in terms of the Bregman distance. Recall that is called subgradient of a functional at if

The set of all subgradients is called the subdifferential of at and denoted by .

Definition 2.1 (Bregman distance).

Given and , the Bregman distance between with respect to and is defined by

| (2.6) |

The Bregman distance is a valuable tool for deriving error estimates for variational regularization. Specifically, for our purpose we use the following convergence rates result.

Lemma 2.2 (Variational regularization).

Let be bounded and linear, let be proper, convex and wlsc and let satisfy and for some . Then for all , with and we have

| (2.7) | ||||

| (2.8) |

Proof.

For our purpose we will apply Lemma 2.2 where is a combination formed by and . We will use that the subdifferential of at consists of all with

Since the family is square summable, can be obtained for only finitely many and therefore is nonempty if and only if is sparse.

Remark 2.3 (Weighted -norm).

For define

| (2.9) | ||||

| (2.10) |

Then is finite and as converges to zero, is well-defined with . Because is positively homogeneous it holds . Thus, for ,

| (2.11) |

Estimate (2.11) implies that if linearly converge to , then so does .

Lemma 2.4.

Let be finite, injective and . Then, for all ,

| (2.12) |

Proof.

Because is finite dimensional and is injective, the inverse is well defined and bounded. Consequently,

Bounding the -norm by the -norm yields (2.12). ∎

Lemma 2.5.

Let be sparse, and assume that is injective. Then, for ,

| (2.13) |

2.2 Relaxed co-regularization

First we derive linear rates for the relaxed model . These results will be derived under the following condition.

Condition 1.

-

(1.1)

with , .

-

(1.2)

-

(1.3)

-

(1.4)

is injective.

Conditions (1.2), (1.3) are source conditions very commonly assumed in regularization theory. From (1.3) it follows that is sparse and contained in . Condition (1.4) is the restricted injectivity condition.

Remark 2.6 (Product formulation).

We introduce the operator and the functional ,

| (2.14) | ||||

| (2.15) |

Using these notions, one can rewrite the relaxed co-regularization functional as

Because and are linear and bounded, is linear and bounded, too. Moreover, since and are proper, convex and wlsc, has these properties, too. The subdifferential is given by . The Bregman distance with respect to is given by

| (2.16) |

Here comes our main estimate for the relaxed model.

Theorem 2.7 (Relaxed co-regularization).

Let Condition 1 hold and consider the parameter choice for . Then for all with and all we have

| (2.17) | ||||

| (2.18) |

where

Proof.

According to (1.3), , which implies that is finite and . With (1.4) and Lemma 2.5 we therefore get

| (2.19) |

Using the product formulation as in Remark 2.6, according to (1.2), (1.3) the source condition holds. By Lemma 2.2 and the choice we obtain

Using (2.14), (2.15), (2.16) we obtain

Combining this with (2.19) completes the proof. ∎

2.3 Strict co-regularization

Next we analyze the strict approach (1.2). We derive linear convergence rates under the following condition.

Condition 2.

-

(2.1)

satisfies .

-

(2.2)

-

(2.3)

-

(2.4)

is injective.

Condition (2.2) is a source condition for the forward operator and the regularization functional . Condition (2.3) assumes the splitting of the subgradient into subgradients and . The assumption (2.4) is the restricted injectivity.

Theorem 2.8 (Strict co-regularization).

Let Condition 2 hold and consider the parameter choice for . Then for and we have

| (2.20) | ||||

| (2.21) |

with the constants

3 Necessary Conditions

In this section we show that the source condition and restricted injectivity are not only sufficient but also necessary for linear convergence of relaxed co-regularization. In the following we restrict ourselves to the -norm

We denote by and the product operator and regularizer defined in (2.14), (2.15). We call a -minimizing solution of if . In this section we fix the following list of assumptions which is slightly stronger than Assumption A.

Assumption B.

-

(B.1)

is linear and bounded with dense range.

-

(B.2)

is linear and bounded.

-

(B.3)

is proper, strictly convex and wlsc.

-

(B.4)

is countable index set.

-

(B.5)

is an ONB of .

-

(B.6)

.

-

(B.7)

.

-

(B.8)

is Gateaux differentiable at if is the unique -minimizing solution of .

Under Assumption B, the equation has a unique -minimizing solution.

Condition 3.

-

(3.1)

with , .

-

(3.2)

-

(3.3)

-

(3.4)

-

(3.5)

is injective.

3.1 Auxiliary results

Condition 3 is clearly stronger than Condition 1. Below we will show that these conditions are actually equivalent. For that purpose we start with several lemmas. These results are in the spirit of [12] where necessary conditions for standard minimization have been derived.

Lemma 3.1.

Assume that is the unique -minimizing solution of , let satisfy and assume that is sparse. Then:

-

(a)

The restricted mapping is injective.

-

(b)

For every finite set with there exists such that

Proof.

(a): Denote . After possibly replacing some basis vectors by , we may assume without loss of generality that for . Since is the unique -minimizing solution of , it follows that

for all and all with . Because is finite, the mapping

is piecewise linear. Taking the one-sided directional derivative of with respect to , we have

| (3.1) |

For the last equality we used that for all , that is Gateaux differentiable and that . Inserting instead of in (3.1) we deduce

| (3.2) |

for all . In particular,

| (3.3) |

and consequentially . Because for all and is finite, we have . Therefore which verifies that is injective.

(b): Let be finite with . Inequality (3.2) and the finiteness of imply the existence of a constant such that, for ,

| (3.4) |

Assume for the moment . Then for some . Due to (B.5), is an orthogonal projection and the adjoint of the embedding . The identity implies that

By assumption, is finite dimensional and therefore , where denotes the orthogonal complement in . Consequently we have to show the existence of with

| (3.5) |

where .

Define the element by for and for . If , then we choose and (3.5) is fulfilled. If, on the other hand, , then and there exists a basis of such that

| (3.6) |

Consider now the constrained minimization problem on

| (3.7) |

Because of the equality , the admissible vectors in (3.7) are precisely those for which . Thus, the task of finding satisfying (3.5) reduces to showing that the value of (3.7) is strictly smaller that . Note that the dual of the convex function is the function

| (3.8) |

Recalling that , it follows that the dual problem to (3.7) is the following constrained problem on :

| (3.9) |

From (3.6) we obtain that

for every . Taking , inequality (3.4) therefore implies that for every there exist such that

From (3.9) it follows that for every admissible vector for (3.9). Thus the value of in (3.9) is greater than or equal to . Since the value of the primal problem (3.7) is the negative of the dual problem (3.9), this shows that the value of (3.7) is at most . As , this proves that the value of (3.7) is strictly smaller than and, as we have shown above, this proves assertion (3.5). ∎

Lemma 3.2.

Assume that is the unique -minimizing solution of and suppose , for some . Then satisfies Condition 3.

Proof.

Lemma 3.3.

Let converge to , satisfy and with for . Then and as imply .

Proof.

See [12, Lemma 4.1]. The proof given there also applies to our situation. ∎

3.2 Main result

The following theorem in the main results of this section and shows that the source condition and restricted injectivity are in fact necessary for linear convergence.

Theorem 3.4 (Converse results).

Proof.

Item (i) obviously implies Item (ii). The implication (ii) (iii) has been shown in Theorem 2.7. The rate in (iii) implies that is the second component of every -minimizing solution of . As is strictly convex, (3.10) implies that is the unique -minimizing solution of . The rate in (3.12) follows trivially from (3.11), since is linear and bounded. To prove (3.13) we choose with and proceed similar as in the proof of Lemma 2.2. Because ,

and therefore

By the definition of the Bregman distance

Since the Bregman distance is nonnegative, it follows

and hence .

3.3 Numerical example

We consider recovering a function from CS measurements of its primitive. The aim of this elementary example is to point out possible implementation of the two proposed models and supporting the linear error estimates. Detailed comparison with other methods and figuring out limitations of each method is subject of future research.

The discrete operator is taken as a discretization of the integration operator . The CS measurement matrix is taken as random Bernoulli matrix with entries . We apply strict and relaxed co-regularization with , as Daubechies wavelet ONB with two vanishing moments and . For minimizing the relaxed co-regularization functional we apply the Douglas-Rachford algorithm [6, Algorithm 4.2] and for strict co-regularization we apply the ADMM algorithm [6, Algorithm 6.4] applied to the constraint formulation .

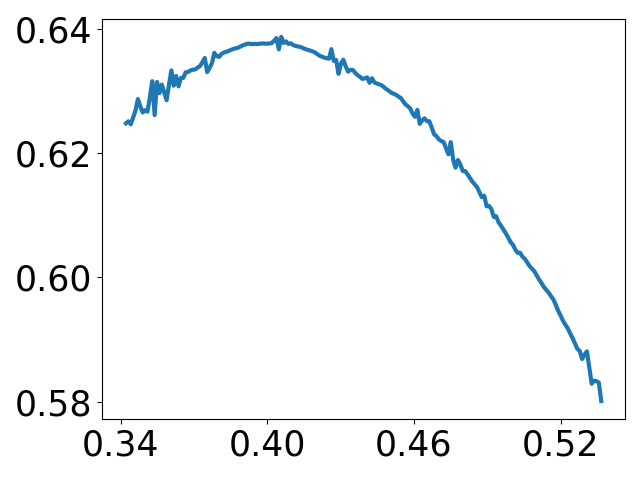

Results are shown in Figure 1. The top row depicts the targeted signal (left) for which is sparsely represented by (right). The middle row shows reconstructions using the strict and the relaxed co-sparse regularization from noisy data . The bottom row plots and as functions of the noise level. Note that for the Bregman distance is given by . Both error plots show a linear convergence rate supporting Theorems 2.7, 2.8, 3.4.

4 Conclusion

While the theory of CS on direct data is well developed, this by far not the case when compressed measurements are made on indirect data. For that purpose, in this paper we study CS from indirect data written as composite problem where models the CS measurement operator and the forward model generating indirect data and depending on the application at hand. For signal reconstruction we have proposed two novel reconstruction methods, named relaxed and strict co-regularization, for jointly estimating and . Note that the main conceptual difference between the proposed method over standard CS is that we use the penalty for indirect data instead of together with another penalty for accounting for the inversion of , and jointly recovering both unknowns.

As main results for both reconstruction models we derive linear error estimates under source conditions and restricted injectivity (see Theorems 2.8, 2.7). Moreover, conditions have been shown to be even necessary to obtain such results (see Theorem 3.4). Our results have been illustrated on a simple numerical example for combined CS and numerical differentiation. In future work further detailed numerical investigations are in order comparing our models with standard CS approaches in practical important applications demonstrating strengths and limitations of different methods. Potential applications include magnetic resonance imaging [2, 15] or photoacoustic tomography [17, 16].

Acknowledgment

The presented work of A.E. and M.H. has been supported of the Austrian Science Fund (FWF), project P 30747-N32.

References

- [1] R. Baraniuk, M. Davenport, R. DeVore, and M. Wakin. A simple proof of the restricted isometry property for random matrices. Constructive Approximation, 28(3):253–263, 2008.

- [2] K. T. Block, M. Uecker, and J. Frahm. Undersampled radial MRI with multiple coils. iterative image reconstruction using a total variation constraint. Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 57(6):1086–1098, 2007.

- [3] M. Burger and S. Osher. Convergence rates of convex variational regularization. Inverse problems, 20(5):1411, 2004.

- [4] E. J. Candès, J. Romberg, and T. Tao. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE Transactions on information theory, 52(2):489–509, 2006.

- [5] E. J. Candes, J. K. Romberg, and T. Tao. Stable signal recovery from incomplete and inaccurate measurements. Communications on Pure and Applied Mathematics: A Journal Issued by the Courant Institute of Mathematical Sciences, 59(8):1207–1223, 2006.

- [6] P. L. Combettes and J. C. Pesquet. Proximal splitting methods in signal processing. Springer Optimization and Its Applications, 49:185–212, 2011.

- [7] D. L. Donoho. Compressed sensing. IEEE Transactions on information theory, 52(4):1289–1306, 2006.

- [8] J. Flemming. Variational Source Conditions, Quadratic Inverse Problems, Sparsity Promoting Regularization: New Results in Modern Theory of Inverse Problems and an Application in Laser Optics. Springer, 2018.

- [9] S. Foucart and H. Rauhut. A mathematical introduction to compressive sensing. Applied and Numerical Harmonic Analysis. Birkhäuser/Springer, New York, 2013.

- [10] J.-J. Fuchs. On sparse representations in arbitrary redundant bases. IEEE transactions on Information theory, 50(6):1341–1344, 2004.

- [11] M. Grasmair, M. Haltmeier, and O. Scherzer. Sparse regularization with penalty term. Inverse Problems, 24(5), 2008.

- [12] M. Grasmair, O. Scherzer, and M. Haltmeier. Necessary and sufficient conditions for linear convergence of -regularization. Communications on Pure and Applied Mathematics, 64(2):161–182, 2011.

- [13] B. Jin, D. A. Lorenz, and S. Schiffler. Elastic-net regularization: error estimates and active set methods. Inverse Problems, 25(11):115022, 2009.

- [14] D. Lorenz. Convergence rates and source conditions for tikhonov regularization with sparsity constraints. Journal of Inverse & Ill-Posed Problems, 16(5), 2008.

- [15] M. Lustig, D. Donoho, and J. M. Pauly. Sparse MRI: The application of compressed sensing for rapid mr imaging. Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 58(6):1182–1195, 2007.

- [16] J. Provost and F. Lesage. The application of compressed sensing for photo-acoustic tomography. IEEE transactions on medical imaging, 28(4):585–594, 2008.

- [17] M. Sandbichler, F. Krahmer, T. Berer, P. Burgholzer, and M. Haltmeier. A novel compressed sensing scheme for photoacoustic tomography. SIAM Journal on Applied Mathematics, 75(6):2475–2494, 2015.

- [18] O. Scherzer, M. Grasmair, H. Grossauer, M. Haltmeier, and F. Lenzen. Variational methods in imaging. Springer, 2009.

- [19] H. Zou and T. Hastie. Regularization and variable selection via the elastic net. Journal of the Royal Statistical Society. Series B: Statistical Methodology, 67(2):301–320, 2005.