Controlling the False Discovery Rate in Complex Multi-Way Classified Hypotheses

Abstract

In this article, we propose a generalized weighted version of the well-known Benjamini-Hochberg (BH) procedure. The rigorous weighting scheme used by our method enables it to encode structural information from simultaneous multi-way classification as well as hierarchical partitioning of hypotheses into groups, with provisions to accommodate overlapping groups. The method is proven to control the False Discovery Rate (FDR) when the p-values involved are Positively Regression Dependent on the Subset (PRDS) of null p-values. A data-adaptive version of the method is proposed. Simulations show that our proposed methods control FDR at desired level and are more powerful than existing comparable multiple testing procedures, when the p-values are independent or satisfy certain dependence conditions. We apply this data-adaptive method to analyze a neuro-imaging dataset and understand the impact of alcoholism on human brain. Neuro-imaging data typically have complex classification structure, which have not been fully utilized in subsequent inference by previously proposed multiple testing procedures. With a flexible weighting scheme, our method is poised to extract more information from the data and use it to perform a more informed and efficient test of the hypotheses.

Keywords: Hierarchical Grouped BH; S-Way Grouped BH; Generalized Grouped BH; Data-adaptive Generalized Grouped BH; Multi-way classified hypotheses

1 Introduction

In modern inferential problems arising from large datasets, hypotheses often occur in complex structures of groups. Compelling examples can be found in neuro-imaging studies like functional magnetic resonance imaging (fMRI) or electroencephalography (EEG). Large sets of hypotheses obtained from such studies can be classified according to (a) locations of interest (voxels in an fMRI study and electrodes in an EEG study) and (b) their broader classifications (clusters of voxels known as Regions of Interest (ROIs) and regions of the cerebral cortex of the brain). The groups formed by the locations of interest can be nested within the groups formed by their broader classifications. In a different scenario, a set of hypotheses can be classified by more than one independent criteria that unlike the previous case, cannot be ordered among themselves. For example, Stein et al. (2010) conducted a study to detect voxelwise genomewide association in the human brain. The study involved the analysis of the relationship between single-nucleotide polymorphisms(SNPs) and volume change in voxels. The hypotheses obtained in this study can be classified according to groups formed by their SNPs and/or groups formed by a family for each voxel. Furthermore, in such studies, when the grouping criteria are defined by the nature of the scientific experiment and statisticians have little or no control on their demarcation, it is quite likely the groups overlap with one or more hypotheses becoming members of more than one group. For example, in an EEG experiment, some electrodes are placed at the boundaries of two adjacent brain regions, thereupon, hypotheses that are associated to these electrodes can be classified as members of either brain regions.

Though multiple hypotheses testing is a highly active research area, besides the p-filter algorithm proposed by Ramdas et al. (2019), not much development has been done to adequately justify overlapping groups, simultaneous or nested group structures in newly designed testing procedures. All the same, the grouping information provide valuable insight into the nature of the hypotheses which can be profitably used to create sharper distinction of signals from noise. The hypotheses classified into a group share common characteristics with other member hypotheses of the group. Consequently, a grouping structure levies a generic effect on its members, and a multiple testing method that utilizes all such valuable information in its algorithm would be naturally poised to make sharper distinction between the significant and non-significant state of an individual hypothesis. Some researchers have addressed the task of developing new multiple testing procedures adjusted to simple classification structure where hypotheses are classified into distinct groups by one norm of classification (Hu et al. (2010), Benjamini and Bogomolov (2014), Ignatiadis et al. (2016), Li and Barber (2019), etc.). However, to the best of our knowledge, there does not exist a well-defined multiple testing procedure that controls the overall False Discovery Rate, by utilizing structural information gleaned from multiple classifications, that are either simultaneous, or hierarchical or both, and make provisions for overlapping groups. In the absence of such a procedure, an investigator has to prioritize a classification over the rest and choose a multiple testing procedure that accommodates non-overlapping groups formed by a single classification norm. In a situation where each of the multiple classifications contain equally important information, the investigator is obligated to compromise the accuracy of the analysis.

In this article we propose the theory of a generalized testing procedure, and its data-adaptive version, in an effort to alleviate this problem. We systematically develop a weighted Benjamini-Hochberg (BH)-type procedure, the Generalized Grouped BH (Gen-GBH) procedure from combining two weighted BH-type procedures, the Heir-GBH and the -way GBH, designed for hierarchical classification and simultaneous classification structures respectively. Our proposed methods control the overall False Discovery Rate (FDR) in their respective classification settings. The theoretical formulations of the methods control FDR when the p-values satisfy some positive dependence criteria, while their data adaptive analogs control FDR under assumptions of independence. Due to the detailed classification information used in our approach, our proposed methods are poised to control FDR more accurately and possess more power than existing procedures that utilize partial or no classification information.

The paper is arranged as follows. In Section 2, we present a brief review of multiple testing research closely related to ours and some preliminary results at the base of our proposed methods. In Section 3, we present the p-value weighting schemes for hypotheses structured according to three different types of classification, hierarchical, multi-way simultaneous, and generalized classifications. The corresponding weighted BH methods in their oracle forms with proven FDR control under positive dependence and our proposals of their data-adaptive versions are also given in this section. The generalized classification integrates hierarchical classification into a simultaneous setting. Simulation studies in Section 4 investigate the performances of the oracle and data-adaptive weighted BH for hierarchically grouped hypotheses relative to their BH counterparts that ignore the underlying structure. Though the p-filter algorithm (Ramdas et al. (2019)) considers a general classification setup such as ours, it serves FDR controlling objectives that are quite different from the objectives in this paper. Nevertheless, we include it in our comparative studies, noting the caveats caused by the differences in our goals. The results of these studies indicate that the p-value weighting scheme in our proposed weighted BH under hierarchical classification setting can capture the underlying structure quite effectively. This carries over to our proposed data-adaptive method under the generalized classification, as seen from its application to an EEG dataset, illustrated in Section 5. The paper concludes with Section 6 where some remarks are made on the effectiveness of multiple testing as an important statistical tool for analyzing complexly structured datasets. Proofs of some results associated with the FDR control of our proposed methods are given in the Appendix.

2 Existing research and our contributions

2.0.1 Introduction of group structures in multiple hypotheses testing

At the outset, multiple testing methods were designed to correct and secure multiplicity errors like the family-wise error rate and the false discovery rate, when testing a single set of hypotheses simultaneously. With their growing popularity as an inferential tool, their limitations in handling large datasets with low signal rates became evident (Pacifico et al. (2004), Meinshausen et al. (2009)). It was realized that clustering such hypotheses into local groups, and updating existing practices to incorporate the specific characteristics of the clusters formed leads to improved performance of these methods. The groups can be pre-specified, owing to the nature of the underlying scientific experiment, or can be formed based on domain specific knowledge, economic considerations, etc. The target is to utilize structural information about the groups formed and sharpen the contrast between the significant hypotheses and those that are not, leading to powerful and accurate decisions.

Grouping of hypotheses due to one criterion has become a common practice in multiple testing literature. We describe such classification due to one criterion as ‘one-way classification’. An early work by Benjamini and Heller (2007) discussed the importance of grouping hypotheses according to locations arising from a spatial data analysis. They argued that aggregation of hypotheses into local clusters tend to group similar hypotheses (nulls or non-nulls) into groups, thus increasing signal-to noise ratio. Hu et al. (2010) proposed an idea of using weighted p-values in the BH procedure where the weights capture the one-way classification structure. The weight assigned to each p-value depends on the composition of the group to which the p-value belongs to, and inflates or deflates the p-value conforming to the proportion of nulls in its parent group. The theoretical formulation of their Grouped BH (GBH) method controls the FDR at desired level of significance in finite samples. Two data-adaptive versions of the GBH method use p-value weights that are estimated from the data and control the FDR asymptotically when the p-values are independent or weakly dependent. In more recent work, Li and Barber (2019) put forward the idea of structure adaptive BH algorithm (SABHA) that can incorporate structural information from one-way classified hypotheses in the form of weights. The weight assigned to each p-value is an estimate of the proportion of true nulls in its parent group, provided , for some arbitrary . Such weights depend upon an estimate of the proportion of true nulls in the parent group of a p-value. The SABHA method controls FDR more liberally, and the excess over the level of significance is characterized by a term dependent on the Rademacher complexity of the estimated weights. They successfully apply the SABHA method to analyze an fMRI dataset, where hypotheses from measured voxels are grouped according to regions of interest. Substantial amount of research has been pursued to find powerful multiple testing procedures that are based on thresholding the Local False Discovery Rate (Lfdr, introduced by Efron et al. (2001)), applicable to one-way classified hypotheses. Few notable articles include Cai and Sun (2009), Liu et al. (2016), etc. The proposed methods in this paper do not utilize Lfdrs in their processes and are fundamentally different from the methods that depend on Lfdr thresholding.

Next we describe the development of our proposed Gen-GBH procedure in three stages, by combining the proposals of the Heir-GBH and the -Way GBH, designed for hierarchical and simultaneous classification structures respectively. In its simple form, the oracle (theoretical) form of the Gen-GBH method conforms to the one-way classification structure as considered by all the testing procedures mentioned above. However, unlike any of the above methods, the Gen-GBH can accommodate overlapping groups in the one-way classification as well as in higher order classification structures. Hence it is capable of handling more complex group structures than one-way classified hypotheses. We show in section 3 that the oracle GBH procedure as proposed by Hu et al. (2010) is a specific case of the oracle Gen-GBH. The data-adaptive version of the proposed procedure estimates the unknown parameters from the data and is theoretically guaranteed to control the FDR in finite samples, under the assumption that the p-values are independent. The SABHA method proposed by Li and Barber (2019) is apt in handling one-way classified hypotheses in distinct groups. However, their choice of weights is quite different from our proposal and hence the control on FDR by the SABHA method, even in its oracle form is more liberal than our method, which, in its oracle form, attains exact control on FDR.

2.0.2 Our contribution

Beyond one-way classification, there might be multiple classification norms that can be imposed concurrently on a set of hypotheses. A ‘hierarchical classification’ is obtained when there is an ordering among the classification norms, implying that the groups formed by a certain norm can be split by a lower ordered norm to form smaller groups. A naturally occurring example is a phylogenetic tree, that classifies biological species into higher order groups, i.e., genera, which are further classified into higher order groups determined by the taxonomic hierarchy.

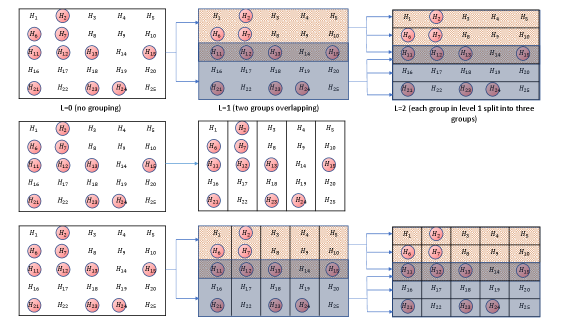

To further illustrate the hierarchical structure, consider the following simple example. A set of hypotheses is hierarchically classified in two levels. The top row in Figure 1 shows the layout of the hypotheses and the classification structure in three stages. The significant hypotheses are circled. Initially, at level L=0, there is no classification. At level , the set of hypotheses is split into two groups (shown in two different shades), each consisting of member hypotheses. There are five hypotheses at the intersection of the two groups. At level L=2, each of the two groups in level one are further split into three groups of five hypotheses each, each group being represented by a row. At any level, each hypothesis can be a member of an arbitrary number of groups, not exceeding the total number of groups at that level. The hierarchical procedures we propose in this paper are designed to account for the effect of all parent groups on each individual hypothesis. Theoretically, the hierarchical structure can be as detailed as desired, with groups at the ultimate level comprising of single hypotheses.

In a different situation, there may exist two or more classification criteria that may be imposed simultaneously, rather than hierarchically on a set of hypotheses. The simplest of such a case, involving two simultaneous criteria giving rise to ‘two-way classified’ hypotheses was discussed in a recent article by Nandi et al. (2021). A common example of simultaneously classified hypotheses can arise from any spatio-temporal data, where the spatial classification norm like geographical regions, brain voxels, etc. is unrelated to the temporal norm like seconds, hours, days, etc., but can be simultaneously imposed on the same set of hypotheses. We seek to combine the idea of hierarchical and simultaneous classifications to suggest a generalized classification setup, that also allows for overlapping groups formed by one or multiple criteria. In Figure 1, the middle row shows that the same set of hypotheses can be classified according to a different norm along the columns. The hierarchical classification along rows and the classification along the columns can be combined, as shown in the bottom row of Figure 1, to form the generalized classification setup. Rest of this paper is devoted to the development and discussion of the generalized weighted multiple testing procedures to account for hierarchical and overlapping groups that may occur along the simultaneous classifications, and control the False Discovery Rate.

2.0.3 Critical appraisal of our method in comparison to other recent methods

P-value weighting is a well-established concept to boost power of the multiple testing procedures. The weights can incorporate structural information (earliest example of which can be found in Storey et al. (2004), Benjamini et al. (2006), Blanchard and Roquain (2009), Sarkar (2008),etc.), penalties for multiple decisions (Benjamini and Hochberg (1997), etc.). Some articles like Genovese et al. (2006) and Ignatiadis et al. (2016) propose formulation of weights that do not depend directly on the p-values, but use prior information about the experiment and external covariates to reflect upon the nature of the p-values. Various formulations of complex structures of p-values, have been discussed in the literature, along with the novel designs of multiple testing procedures to address such structures. For example, in a ‘tree structure’ of hypotheses, a p-value is tested only if its parent is rejected. Several methods including Yekutieli et al. (2006), Yekutieli (2008), Lei et al. (2020), etc. address such structures of hypotheses. A different setup of ‘ordered hypotheses’ and corresponding testing procedures have been discussed in Lei and Fithian (2016), Li and Barber (2019), etc. The ordered structure of hypotheses assumes that hypotheses early in a lineup are more likely to contain true signals than their successors. Though our proposed methods address hierarchically classified hypotheses, clearly such classification is not to be confused with tree structures or ordered hypotheses. Hence much as our proposed methods are not suited for the structures mentioned above, to the best of our knowledge, the methods that address tree structures and ordered structures are not suited to the setup we discuss here.

To the best of our knowledge, the p-filter algorithm developed in Foygel Barber and Ramdas (2015) and Ramdas et al. (2019) is the only algorithm, besides ours, able to address hierarchical and/or simultaneous classifications with presence of overlapping groups. However, a basic difference between the p-filter and our algorithms is that the former controls FDR in each group and level of every classification, consequently leading to quite a conservative control on the overall FDR. While controlling FDR at each type of classification is desirable in some scenarios, our goal is to control the overall FDR and enhance the accuracy of the BH procedure using the classification information. Consequently, our methods are less conservative, and more accurately control the FDR than the p-filter process.

2.1 Preliminaries

2.1.1 The Weighted BH procedure.

We begin the discussion from some fundamental results on multiple testing in the framework of controlling the False Discovery Rate (FDR), as defined by Benjamini and Hochberg (1995). Some results from Nandi et al. (2021) are re-stated and generalized to form the background of the results obtained in this article. The goal of a multiple testing procedure is to discover the false null hypotheses with a control on the number of false discoveries. Consider a set of hypotheses that are to be tested simultaneously. The False Discovery Rate (FDR) is the expected proportion of false discoveries and is defined as

with and being the number of false and total discoveries made, respectively. The following assumptions are made on the nature of the p-values.

Assumption 1. Let be the set of indices of the true null hypotheses. For each , .

Assumption 2. The set of p-values , corresponding to , are Positively Regression Dependent on the Subset (PRDS) of null p-values.

A set of random variables is said to be positively regression dependent on a subset of these random variables if is non-decreasing in , for each and for any (coordinatewise) non-decreasing function of . A weaker form of positive dependence property, with replaced by , is often assumed in the literature in the context of BH-type FDR controlling procedures (Finner et al., 2009; Sarkar, 2008). Our proposed methods provably control the FDR under either of these conditions defining the PRDS property.

A general form of the Benjamini-Hochberg (BH) procedure forms the building block for the multiple testing procedures we develop in this article.

Definition 1

[Weighted BH] Given non-stochastic weight assigned to , for , the weighted BH at level is a level BH method applied to the weighted p-values , i.e., it orders the weighted p-values as , and rejects the hypothesis corresponding to , for all , provided the maximum exists; otherwise, it rejects none.

Result 1. For p-values satisfying the PRDS property, the FDR of the weighted BH is bounded above by .

The following condition ensures that the weighted BH controls FDR at level .

Condition 1

The weights , assigned to the p-values , are such that .

Result 1 is re-stated from Nandi et al. (2021) and interested readers are referred to it for the proof. Condition 1 permits researchers to conveniently incorporate a wide range of structural information about the hypotheses in the weights. The weighted BH thus encompasses several multiple testing procedures that employ any formulation of weights that satisfy Condition 1. Notably, the classical BH corresponds to the case when , the proportion of true nulls, for all .

2.1.2 Moulding the Weighted BH procedure for group structures with overlapping groups

Prior to applying the Weighted BH method to complex group structures, it is important to note that the weights can be designed to allow overlapping groups formed by a classification norm. Consider a situation where a set of hypotheses are classified into groups, and each group can overlap with one or more groups. The following result shows how the weights can be formulated to capture this structure.

Result 2. Given any , , quantifying the underlying structural information from the th group, let the th p-value be assigned the weight given below:

| (2.1) |

where is number of hypotheses and is the proportion of true hypotheses in group . These weights satisfy Condition 1.

Result 2.1.2 is proved in the Appendix. This scheme of weighting generalizes the one-way classification structure of hypotheses with non-intersecting groups and lays the foundation to design suitable multiple testing procedures for overlapping groups in more complicated structures of hypotheses.

2.1.3 Data-Adaptive Version of the Weighted BH procedure.

Storey (2002) and Storey et al. (2004) proposed a point estimate of the number of true nulls in a given set of independent p-values. This estimate, based on a tuning parameter was advantageously used in Storey (2002) and Storey et al. (2004) and in subsequent literature (Blanchard and Roquain (2009), Sarkar (2008), etc.) to propose new estimates of the proportion of true nulls. These estimates, when substituted for weights in the Weighted BH procedure, helped design data-adaptive versions of the method that controls FDR when p-values are independent. We extend this idea to estimate the weights in our proposed hierarchical and/or simultaneous classification of hypotheses, such that they satisfy the following result.

Result 3. For a data-adaptive weighted BH procedure with (coordinatewise) non-decreasing estimated weight functions , , FDR under independence if

| (2.2) |

where represents as a function of with .

Interested readers are referred to Sarkar (2008) for a proof of this result.

3 Proposed multiple testing methodologies

3.1 Hierarchical Classification

Suppose there are hypotheses that can be partitioned into groups according to each of different classification criteria. Assuming that these criteria are intrinsically ordered from to , we consider imposing all these classifications hierarchically. At level , i.e., upon successive application of the first classifications, each of the groups can be further partitioned into at least one subgroup at level , where . When , we have the unclassified group of all hypotheses, and as increases, we have non-decreasing number of groups with non-increasing number of member hypotheses in each group. At any level , there might be two or more groups that can overlap, i.e., they have one or more hypotheses in common.

Let , , be the subgroups of hypotheses formed at level from the th subgroup , for , with referring to the original unclassified set of null hypotheses. For notational convenience, henceforth, we refer to as . Thus, the hierarchical classification scheme partitions the hypotheses into the subgroups , .

Let and , respectively, be the sets of indices of the nulls and true nulls in , with , , and , being the number of nulls (which is when ), number of true nulls, and the proportion of true nulls,

respectively, in this group. The proportion of true nulls in the entire set of null hypotheses, at any level , is , where and , which reduce, respectively, to

and

when no overlapping occurs at any level of classification.

3.1.1 Oracle Hierarchically Grouped BH (Heir-GBH)

Assuming to be known for all and , we will now construct the desired weights satisfying Condition 1, i.e.,

| (3.1) |

Our proposed procedure assigns the weight to each , and applies the BH method to these weighted -values.

Lemma 1. Let be such that

| (3.2) |

where is recursively defined below:

| (3.3) |

with . The ’s in (3.2) satisfy Condition 1.

Note 1. There is a variety of choice for to define satisfying Condition 1, as suggested by Result 2.1.2. For instance, one can simply choose . Such a choice, however, does not capture the underlying hierarchical nature of the successive groupings, unlike our chosen in Lemma 3.1.1. The in Lemma 3.1.1 defined through this accounts for the effects of all the parent partitions that contain the hypothesis corresponding to . It sequentially compares the significance of composition of each group containing at a particular level with all other groups originating from the same parent, thus recording the impact of the entire hierarchical setup on the individual hypotheses.

It is important to note that if overlapping does not occur at any of the levels, then , for each , where .

The following alternative expression for is worth noting.

| (3.4) |

with and . This will be used to show that satisfies Condition 1 while proving Lemma 3.1.1 in the Appendix.

Definition 2 (Oracle Hierarchically Grouped BH (Heir-GBH))

Theorem 1. The Heir-GBH controls the FDR under Assumptions 2.1.1 and 2.1.1.

This theorem follows from Result 1 and Lemma 1.

Note 2. When , i.e., when no classification is applied, the Heir-GBH assigns the weight to each p-value, which is the same weight assigned by the Oracle BH. to all p-values.

3.1.2 Data-Adaptive Procedure

The data-adaptive version of Heir-GBH relies on the data to estimate the weights assigned to the p-values. We generalize the novel idea of estimating such weights for one-way classified hypotheses proposed in Nandi et al. (2021), and formulate the estimated weights in the current setting of more complex hierarchically grouped structure of hypotheses.

Given a specific , the number of p-values below is determined at the last level as and the estimate of as

| (3.5) |

On the basis of , for a generic group at level , we successively define the estimate of as

| (3.6) |

and the proportion of true nulls , as

If , i.e., for a single group of hypotheses, the estimated weight assigned to the th p-value is , where and that at level is

| (3.7) |

where estimates the grouping effect of , and is given by the recurring formula

| (3.10) |

where . This leads us to propose the data-adaptive multiple testing procedure for hierarchically classified hypotheses as given below:

Definition 3 (Data Adaptive Hierarchically Grouped BH (DAHeir-GBH))

Note 4. Like Heir-GBH, DAHeir-GBH reduces to a method that uses for each , if no groups overlap at any of the levels.

Theorem 2.

The DAHeir-GBH controls FDR at level , under the assumption that the p-values are independent.

The proof of this theorem is provided in the Appendix.

3.2 Simultaneous Multi-Way Classification

Suppose there exist ( different classification criteria, each of which can simultaneously be used to split the set of hypotheses into groups, thus resulting in an -way classification structure for the hypotheses. In the simple case of , i.e., for two-way classification (considered in Nandi et al. (2021), with non-overlapping groups for each classification), the hypotheses can be arranged in the form of an matrix. The rows and columns of the matrix represent two sets of non-overlapping groups created out of the hypotheses by the two classifications. For a general , this structure resembles an -dimensional array, with the th array representing a set of groups, created out of the hypotheses by classification .

Although the groups formed by each classification can be allowed to overlap, we defer discussion of this case to the next sub-section where a generalized classification structure is considered by integrating the ideas of hierarchical and simultaneous groupings.

Let be the th group containing hypotheses for classification , for . Then, with denoting the subset of indices of true nulls in group , is the proportion of true nulls in group for classification , , and

| (3.11) |

is the proportion of true nulls in the entire set of hypotheses for any of these classifications.

The simultaneous classification schemes are assumed to act independently of each other, so the groups of hypotheses do not overlap between classifications, except at the elementary level where each hypothesis is classified concurrently in different ways. In other words, each can be re-indexed as , i.e., can be identified as the p-value appearing at the intersection of the groups , where . With this in mind, we proceed to define the weight to be attached to each and propose our multiple testing procedure in its oracle form under -way classified setting in the following sub-section.

3.2.1 Oracle Procedure

Assuming to be known, for all , , we will construct the desired weights satisfying Condition 1, i.e.,

| (3.12) |

The weight will be attached to , and the BH method applied to these weighted -values will be our proposed method in its oracle form under -way classified setting of the hypotheses.

The following lemma gives the desired weights.

This lemma follows from the fact that the left-hand side of (3.12) with the in the lemma equals , which is , since , for each .

Note 5. This weight assigned to is the simple mean of the effects imposed on it by all its parent groups. It accounts for the significant effect of each group that this p-value belongs to. In case any of the classification norms is ineffective or does not provide information substantial to distinguish between the states (significant or not) of the member p-values, the term related to its effect can be easily removed from the formula (3.13), and the weight can be recalculated to consider the effects of the remaining grouping norms.

We are now ready to define our proposed multiple testing procedure in its oracle form for simultaneously -way classified hypotheses.

Definition 4

Oracle -Way Grouped BH (-Way GBH) is defined as the level BH procedure applied to , for , where is defined as in (3.13).

3.2.2 Data-Adaptive Procedure

Similar to the way data-adaptive weights are chosen to define DAHeir-GBH, we consider estimating the weight for in developing the data-adaptive form of Oracle -Way GBH as follows: Let

| (3.14) |

| (3.15) |

with representing the number of p-values not exceeding in group . Based on these weights, we propose our data-adaptive procedure under simultaneous classification setting as follows:

Definition 5

Theorem 4.

The DA--Way GBH controls FDR at level , under the assumption that the p-values are independent.

This theorem is proved in the Appendix.

3.3 Generalized Classification

The generalized setup allows the grouping structures to be more detailed. It accommodates hierarchical and simultaneous classifications concurrently, combining the ideas from Sections 3.1 and 3.2. More specifically, the set of hypotheses can be subjected to simultaneous (one-way) classifications according to different criteria, with the th criterion allowing hierarchical groupings, possibly overlapping, using () ordered grouping norms, as described in Section 3.1.

Integrating the notations used for hierarchical classifications into the simultaneous setting, let us define as the th group created out of the hypotheses by applying the hierarchical classification scheme up to the first levels at the th simultaneous classification, where , .

3.3.1 Oracle Procedure

Assuming the proportion of true nulls, , in , to be known for all , , we want to construct the weight to be attached to . This would pave the way toward defining our proposed procedure under the generalized -way classification. The following lemma gives the desired .

Lemma 3. Let be such that

| (3.16) |

where, for each , is defined as in Lemma 2 in terms of , . Such ’s, for all satisfy Condition 1.

The lemma can be easily proved by noting from Lemma 3.1.1 that , for each .

Our proposed procedure in its oracle form, under generalized multi-way classification setting, is formally defined in the following:

Definition 6

The Oracle Generalized Grouped BH (Gen-GBH) is a level BH procedure applied to , for , where is defined as in (3.16).

3.3.2 Data-Adaptive Procedure

Just like the Gen-GBH, we integrate the DAHeir-GBH into the simultaneous classification setting to propose our data-adaptive version of the Gen-GBH. More specifically, let

| (3.17) |

where, for each , is defined as in (3.7) and (3.10) using , for , we formally define our proposed data-adaptive procedure for generalized classification of hypotheses as follows:

Definition 7 (Data-Adaptive Generalized Grouped BH (DA-Gen-GBH))

The level BH procedure applied to , for , where is defined as in (3.17).

4 Simulations

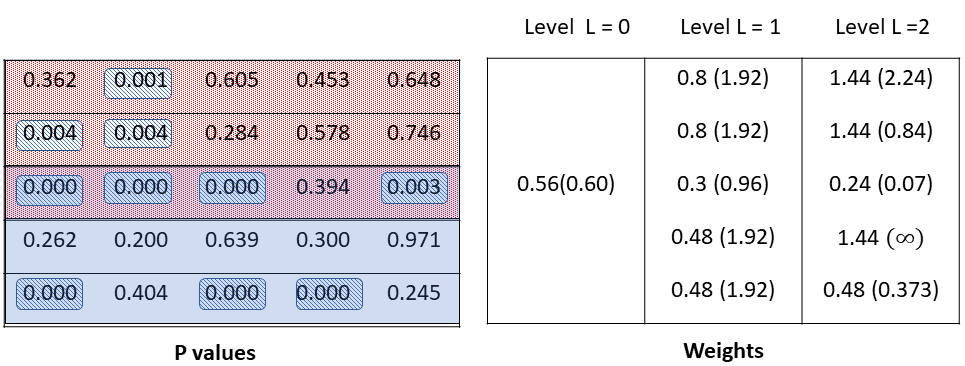

An example demonstrating the advantage of the Heir-GBH over the BH and respectively, their data-adaptive versions, in a hierarchical classification setup. Through a short example, we illustrate the superior distinguishing power of the Heir-GBH and the DAHeir-GBH over existing comparable testing procedures, the oracle BH and its data-adaptive version. We consider the setup similar to that in the first row of Figure 1, consisting of hypotheses that are hierarchically classified in two levels, with the p-values corresponding to significant hypotheses highlighted. The Adaptive BH disregards classification structure and assigns to each p-value , the constant estimate of weight (see Storey et al. (2004)):

| (4.1) |

where , with being set at , for the Adaptive BH as well as for the DAHeir-GBH. In Figure 2, the layout of the p-values is shown, alongwith a table showing the weights assigned by the four methods in three stages. At level , since there is no grouping, the hierarchical methods (with choice of weights as in (3.2), (3.3), and (3.7), (3.10)) are equivalent to the BH in its oracle and data-adaptive forms, respectively. As seen from the table of weights, the Adaptive BH, equivalent to the DAHeir-GBH at , assigns constant weight , while the oracle BH, equivalent to the Heir-GBH at assigns the weight to all the p-values. At level , the weights assigned by the Heir-GBH and DAHeir-GBH are more sensitive to the significant state of the hypotheses than the oracle BH or the Adaptive BH. The five hypotheses at the intersection of the two groups at level one are assigned the smallest weights, appropriately accounting for the effects of both overlapping parent groups. At level , the hierarchical procedures are more sensitive to the significant states of the p-values; the highest weights are assigned to the members of group 4 at level two, that does not contain any non-null p-values, and the smallest weights are assigned to the members of group 3 at level two, that are in the intersection of the overlapping groups at level one and contain the largest number of non-null p-values. The weights are sensitive to the effect of grouping on the significance of the individual p-value, and accuracy of the weights increase with the details of the hierarchical structure.

We further investigate the performance of the Heir-GBH in comparison with the oracle BH and the p-filter algorithm. The DAHeir-GBH is compared to the Adaptive BH and the pfilter algorithm with adaptive weights. The comparisons are made, respectively, in terms of (i) control on FDR and (ii) average power at varying densities of signals. We consider hypotheses in a hierarchical setup of two levels. The layout resembles a section of the hierarchical setup of the EEG dataset we analyzed in the following secion. At the first level, there are two groups, i.e., , the first group comprising of hypotheses and the second group consisting of member hypotheses. The groups overlap and there are hypotheses at the intersection of and . At level 2, each of these two groups are further classified into smaller groups each containing members, i.e., , and , . For the sake of simplification, the hypotheses at the intersection of the two groups at level one are classified into groups, each of size at level two. Thus at level , there are groups, each of size .

The simulation setting was designed using the following steps:

-

1.

Generate the following arrays to simulate the impact of classification at each level of grouping.

-

(a)

as an random matrix of i.i.d. Ber, where . The elements of the matrix represent the state of the individual hypotheses at level .

-

(b)

as defined below demonstrates the influence of second level of grouping on the set of hypotheses.

and are random observations obtained from Ber to simulate the effect of the groups at level . In order to reflect the overlapping significance of and we draw a sample Ber, where . Each of these effects are imposed on the respective hypotheses by .

-

(c)

as a random vector of i.i.d. Ber, reflects the grouping effect at level .

-

(a)

-

2.

Obtain

(4.2) where denotes the Hadamard product between matrices and , and representing the -dimensional vector of ’s.

-

3.

Given , generate a random matrix as follows:

(4.3) having generated as random matrix, as -dimensional random vector, and as -dimensional random vector, where , each comprising i.i.d. samples, and as an additional random variable. The quantities and measure the strength of correlation among the p-values in each group at levels and respectively. For simplicity, we assume same and in all groups at respective levels of grouping.

-

4.

Apply each of the DAHeir-GBH and BH procedures at FDR level , and the p-filter process with at each level of grouping, for testing against , simultaneously for all , in terms of the corresponding weighted p-values. Note the proportions of false rejections among all rejections and correct rejections among all false nulls.

-

5.

Repeat Steps 1-4 500 times to simulate the values of FDR and power for each procedure by averaging out the corresponding proportions obtained in Step 4.

Remark 1. Note that

where , and Thus, the test statistics are allowed to have different types of dependence structure by appropriately setting the value of and/or at or some non-zero value.

The formulation in equation (4.2) allows us to determine the significant state of each hypothesis, subject to the state of significance of its parent groups at all levels. This helps to demonstrate the true effect of the underlying hierarchical structure in the simulation studies. Since the groups at level overlap, we can regulate the density of signals in intersecting region using the following

| (4.4) |

and in the non-intersecting regions by the following

| (4.5) |

Here represents the proportion of true nulls in the entire set of hypotheses in terms of the proportions of groups at level 2 i.e., (), and at level , i.e, () (or in the overlapping region) containing signals, and the proportion of individual hypotheses that are significant , when no classification is imposed, i.e., at level .

We set for true null hypotheses and for true signals. We also set , , and increase from through in order to simulate different densities of signals using (4.4) and (4.5).

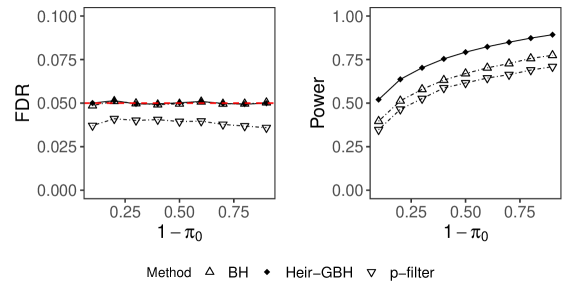

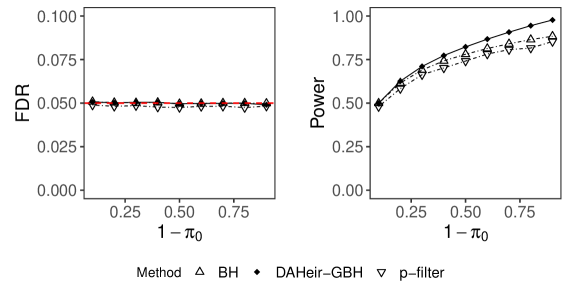

Comparison of the Heir-GBH with the Oracle BH and the p-filter algorithm. Figure 3 compares the performance of the Heir-GBH (as defined in Definition 2) and the oracle BH and the p-filter process under the assumption that the p-values are independent. Figure 4 shows the comparison under the assumption that the p-values are positively dependent. For this purpose, we set and in (3.). In either case, the comparisons show that the pfilter algorithm is more conservative than the BH and the Heir-GBH in terms of controlling the FDR, and incorporating hierarchical weights makes the Heir-GBH significantly more powerful than the other two methods at all levels of densities of signals.

Comparison of the DAHeir-GBH with the Adaptive BH and the p-filter algorithm. In order to compare with the DAHeir-GBH (as in Definition 3), we choose the set of weights proposed by Storey et al. (2004) for the Adaptive BH, which coincides with the weight used by DAHeir-GBH at level . That is, each p-value is assigned the estimated weight

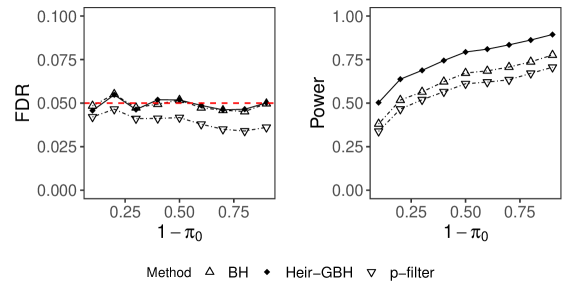

where . Under the assumption that the p-values are independent, all three methods control FDR, but the DAHeir-GBH is more powerful than the others, as shown in figure 5. Besides the simulation settings used for the comparison of the oracle counterparts, we set for the DAHeir-GBH and adaptive BH procedures. For the pfilter algorithm, we set for all (see section 2 of Ramdas et al. (2019)).

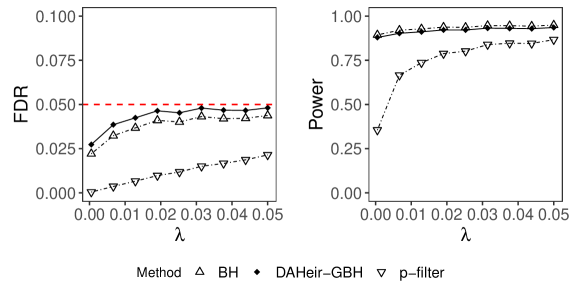

When the p-values satisfy the PRDS condition, the DAHeir-GBH still controls FDR under certain conditions. We set so that the signals are uniformly distributed in all groups at levels one and two, and the variability comes from . We choose for the DAHeir-GBH and the adaptive BH procedures. For the p-filter algorithm, we set , for . The within group dependence at levels one and two are characterized by and respectively. The comparative performances of the three procedures are shown in figure 6. All procedures conservatively control the FDR at all levels of , and the proposed DAHeir-GBH has comparable power with the adaptive BH.

Interested readers are referred to Nandi et al. (2021) for numerical results on the simultaneous classification setup.

The simulation results here and in Nandi et al. (2021) assert that our proposed methods in the hierarchically as well as simultaneously grouped setting are able to control FDR, at least under independence, and are more powerful than existing procedures. Since the Gen-GBH and DAGen-GBH combine the hierarchical and simultaneous procedures, their performances would evidently be better than existing competing methods.

5 Analysis of the EEG patterns through DAGen-GBH

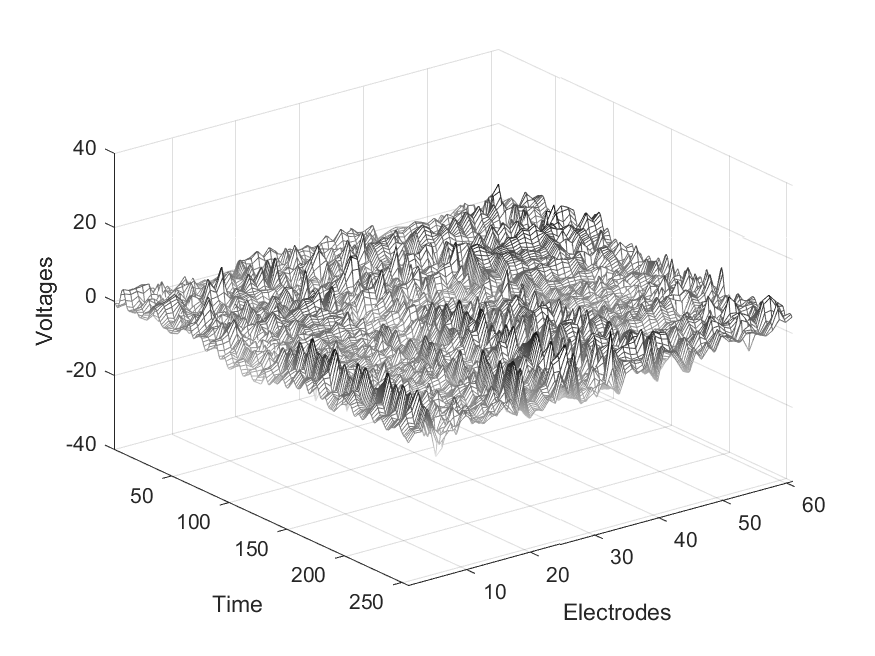

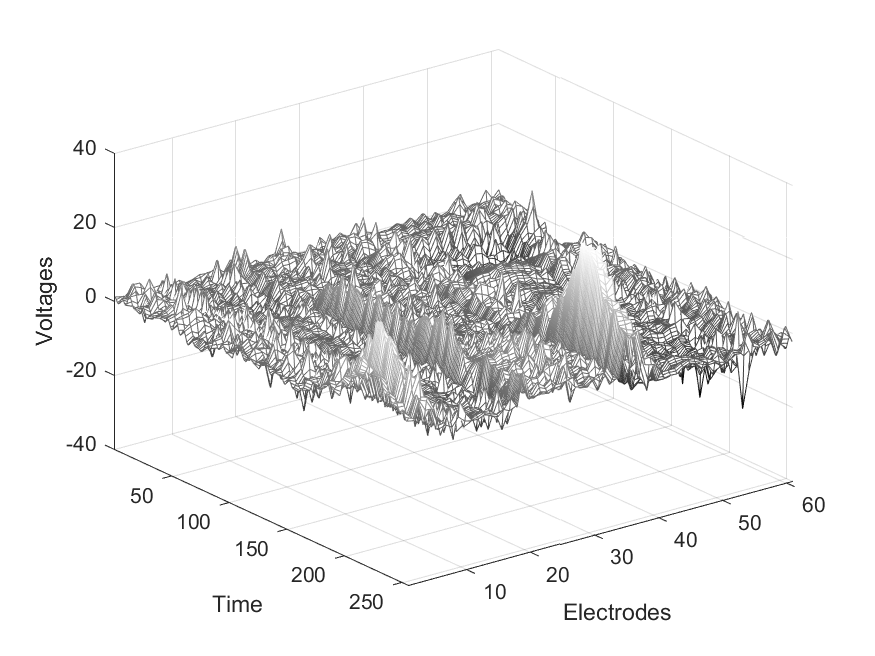

We apply our DAGen-GBH procedure to analyze the data in http://kdd.ics.uci.edu/databases/eeg/eeg.data.html, which arises from a large study examining EEG correlates of genetic predisposition to alcoholism. The experiment was conducted on a control group and a treatment group comprising of alcoholic subjects. For our analysis, we consider subjects each in the control group and alcoholic group. On each subject, ten trials were performed. During each trial, a picture from Snodgrass and Vanderwart (1980) was presented to the subject, while EEG activity in the form of voltage fluctuations (in microvolts) were recorded at time points (each time point being th of a second) from electrodes placed on the subject’s scalp. Figure 7 shows an example of the EEG pattern, averaged over measurements obtained from ten trials, for two subjects, one each from the control and alcoholic groups. The and axes correspond to time and scalp electrodes, respectively, and the -axis corresponds to the EEG measurements. It illustrates a clear difference in the voltage fluctuation patterns for the two subjects over time and electrodes, which leads us to the following driving question underlying this study:

At which pairs of electrodes, are the EEG measurements significantly different between the two groups of subjects?

Answering this question can shed further insight into brain dysfunction and regions impacted by alcoholism. In the literature, case-control studies on EEG measurements, such as this, has often been used to diagnose brain dysfunction and evaluate the performance of relevant treatment procedures (for examples, see Centorrino et al. (2002), Oscar-Berman and Marinkovic (2007), etc.). Specifically, we consider simultaneous testing of the following null hypotheses.

| Between the alcoholic and control groups, there is no difference in the mean | ||||

for , . We have such hypotheses.

The hypotheses in (5) are laid out in a generalized classification setup as described in Section 3.3. The placement of electrodes and anatomy of the brain provide valuable information about the spatial arrangement of the hypotheses. All electrode positions are described by the International 10-20 system, which is based on the location of an electrode and the underlying area of the cerebral cortex. Subsequently, an electrode can be classified according to the region of the brain it is reading from. Letter codes are used to describe the electrode positions in the six regions of the brain, i.e., pre-frontal (Fp), frontal (F), central (C), parietal (P), occipital (O) and temporal (T). The positions of some electrodes are not related to particular regions of the brain. These are placed on intermediate positions halfway between the electrodes placed according to the 10-20 system. These are named using two letter codes, for example, the electrodes on the margin of frontal and temporal regions are denoted by FT, those between temporal and parietal regions are denoted by TP, etc. It is logical to expect that these electrodes reflect the neuronal activities of brain regions between which they lie, which lead us to consider classification of such electrodes as not mutually exclusive, and these are considered to be members of either regions. Each hypothesis is associated to a pair of electrodes, one corresponding to the control and the other corresponding to the alcoholic group. In either group, at level , without any classification, there is a single group of hypotheses. At level , these hypotheses are clustered according to the six brain regions. In the ultimate level , a hypothesis is classified by its corresponding electrode. There are such groups in level , each consisting of member hypotheses. Two brain regions are considered to be overlapping if there is an electrode placed at their margins, with this electrode representing a common group of hypotheses. Each brain region overlaps with at least one and at most two other brain regions.

The classifications mandated by the control and treatment groups are simultaneously imposed on the set of hypotheses. Consequently, there are two simultaneously imposed hierarchical classification structures, according to electrodes and brain regions on the hypotheses.

Prior to computing the test statistics, the time series observations are detrended and cyclic/phase patterns are removed. The p-values corresponding to the hypotheses in (5) are obtained from two-sample -test statistics calculated from the de-trended sets of observations.

We apply the DAGen-GBH, with the choice of weights derived from (3.17), and attach the following weight to the p-value , for each :

| (5.2) |

where refers to the classification due to alcoholic group, and refers to the classification of the p-values due to the control group. From (3.7), we get

for all , where which is given by (3.10). Here , is the size of the th brain region, , the number of brain regions and is the number of electrodes in the th brain region, each electrode containing member p-values. is similarly defined for the control group.

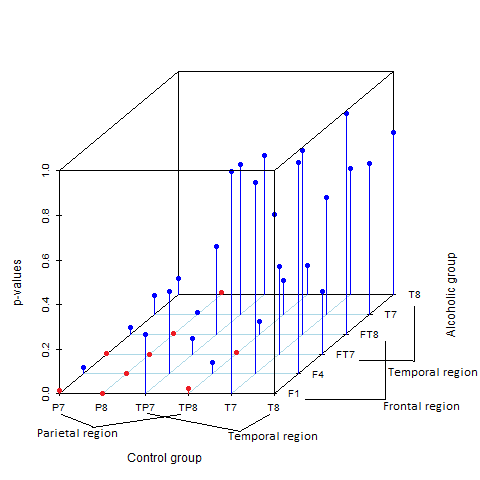

Using and we obtain rejections. In comparison, the Adaptive BH, with the choice of weights as in (4.1), yields a set of discoveries, approximately of which is a subset of those made by the DAGen-GBH. Figure 8 graphically represents a section of the classification structure of the p-values and visualizes the signals identified by the DAGen-GBH.

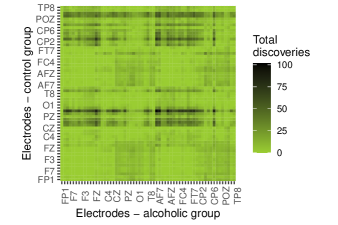

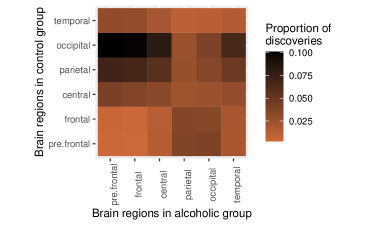

It is interesting to see the distribution of discoveries made at each pair of electrodes, and each pair of brain regions. Figure 10 shows the number of discoveries made at each pair of electrodes from the control and alcoholic groups. Figure 10 shows the proportion of discoveries made at each pair of brain regions from the control and alcoholic groups. The largest proportion of differences in EEG signals are noted between the pre-frontal and frontal regions in the alcoholic groups and parietal and occipital regions in the control group. Further insights into the discoveries would entail expert knowledge on such studies of neurological disorders.

6 Conclusion

Multiple testing has a long history of being applied to a variety of neuroimaging data (Nichols and Hayasaka (2003), Heller et al. (2006), Foygel Barber and Ramdas (2015), etc), and has been able to successfully draw valid inference from such complex structures, notwithstanding under-utilization of structural information in many cases. BH-type multiple testing procedures are capable to control FDR and identify true signals when the hypotheses are positively dependent. Additionally, tapping structural information in such procedures, though necessary to analyze datasets complex as neuroimaging data, is clearly not straightforward as shown by this article. The classification of hypotheses in the EEG dataset considered here is an assortment of different types of classifications, that need to be systematically studied to extract the effects of grouping on the individual hypotheses. We apply our idea of generalized classification that combines hierarchical and simultaneous groupings, and permits overlapping groups, to obtain interesting results pertaining to abnormal effect of alcohol on the functioning of the different regions of the brain, as captured by EEG signals.

This article makes significant contribution to show that there is substantial scope of modernizing the current framework of multiple hypotheses testing to address complex structures of hypotheses. We have broadened the idea of using weighted BH type procedure to address more general types of classification structure of hypotheses, hierarchical and/or simultaneous, and accommodate overlapping groups which is a plausible situation in a hierarchical setup. Our proposed scheme of weighting p-values in weighted BH type procedure utilizes the structural information from the classification setup to define the weights, that provably control the FDR when the p-values involved satisfy the PRDS condition. The corresponding data-adaptive methods estimate the weights from the data, and also control FDR under independence of the p-values involved. Our simulation studies highlight the fact that utilizing elaborate grouping information indeed enhances the power of the weighted BH procedures, over existing similar testing procedures. The simulated scenarios also show that given certain conditions of high density of signals and appropriate choices of the tuning parameter, the proposed data-adaptive procedures also control FDR and are satisfactorily powerful when the p-values involved are positively dependent.

There can be further possibilities to improve upon our idea of weighted multiple testing procedure, by incorporating additional structural information, or extraneous knowledge to devise new weights. Though we successfully applied our method to analyze a very complex dataset to draw interesting and valid inference, its scope extends beyond and can be adapted to datasets with similar or increased structural complexities.

Acknowledgements

We are thankful to Edo Airoldi and Debashis Ghosh for their valuable comments, which helped us shape this paper, and to Aaditya Ramdas for very helpful discussion on the p-filter algorithm.

Appendix

Appendix A Proofs

A.1 Proof of Result 2.1.2

The result follows by noting that

A.2 Proof of Lemma 3.1.1

The lemma follows from Result 2.1.2 and the following:

In the hierarchical setup with overlapping groups, Condition 1 is given as follows

| (A.2) |

With groups not overlapping, we have , for each . In such a case too, satisfies Condition 1. This can be seen by substituting the expression of from (3.4), to the left-hand side of (A.2) which in this case equals to

which ultimately equals or , depending on whether is even or odd, each of which equals .

A.3 Proof of Theorem 2

The proof of the theorem follows from Result 2.1.3, using the following arguments.

First we need to prove as defined in (3.10), is increasing in each . In group , is decreasing in each . Subsequently,

-

•

is non-decreasing in each ,

-

•

is also non-decreasing in for all .

Hence, by induction, as defined in (3.10), is increasing in . Note that the term

due to definitions in (3.5), (3.6) and (3.10). Hence the weight , as defined in (3.7) is non-decreasing in each . For each , if , then

and for any , let us denote in terms of , by , and let us denote in terms of by .

In order to prove

For ,

| (A.3) |

A.4 Proof of Theorem 4

In the simultaneous multi-way classification, , the estimate of grouping effect in a hierarchical setup with one level of classification. Following arguments from the proof of theorem 2, we can show that is non-decreasing in each p-value. Hence the weights as defined in equations (3.14) and (3.15) is non-decreasing in each p-value .

Note that

where and are respectively and as functions of with , . The last equality follows from the fact that the simulatenous classifications are assumed to be independent of each other. Following arguments from proof of theorem 2, it can be shown that

The proof of the theorem then follows from result 2.1.3.

References

- Benjamini and Bogomolov (2014) Benjamini, Y. and M. Bogomolov (2014). Selective inference on multiple families of hypotheses. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 76(1), 297–318.

- Benjamini and Heller (2007) Benjamini, Y. and R. Heller (2007). False discovery rates for spatial signals. Journal of the American Statistical Association 102(480), 1272–1281.

- Benjamini and Hochberg (1995) Benjamini, Y. and Y. Hochberg (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society. Series B (Methodological) 57(1), 289–300.

- Benjamini and Hochberg (1997) Benjamini, Y. and Y. Hochberg (1997). Multiple hypotheses testing with weights. Scandinavian Journal of Statistics 24(3), 407–418.

- Benjamini et al. (2006) Benjamini, Y., A. M. Krieger, and D. Yekutieli (2006). Adaptive linear step-up procedures that control the false discovery rate. Biometrika 93(3), 491–507.

- Blanchard and Roquain (2009) Blanchard, G. and E. Roquain (2009). Adaptive false discovery rate control under independence and dependence. Journal of Machine Learning Research 10, 2837–2871.

- Cai and Sun (2009) Cai, T. T. and W. Sun (2009). Simultaneous testing of grouped hypotheses: Finding needles in multiple haystacks. Journal of the American Statistical Association 104(488), 1467–1481.

- Centorrino et al. (2002) Centorrino, F., B. H. Price, M. Tuttle, W. M. Bahk, J. Hennen, M. J. Albert, and R. J. Baldessarini (2002, Jan). EEG abnormalities during treatment with typical and atypical antipsychotics. Am J Psychiatry 159(1), 109–115.

- Efron et al. (2001) Efron, B., R. Tibshirani, J. D. Storey, and V. Tusher (2001). Empirical bayes analysis of a microarray experiment. Journal of the American Statistical Association 96(456), 1151–1160.

- Finner et al. (2009) Finner, H., T. Dickhaus, and M. Roters (2009, 04). On the false discovery rate and an asymptotically optimal rejection curve. The Annals of Statistics 37(2), 596–618.

- Foygel Barber and Ramdas (2015) Foygel Barber, R. and A. Ramdas (2015, December). The p-filter: multi-layer FDR control for grouped hypotheses. arXiv:1512.03397v3.

- Genovese et al. (2006) Genovese, C. R., K. Roeder, and L. Wasserman (2006). False discovery control with p-value weighting. Biometrika 93(3), 509–524.

- Heller et al. (2006) Heller, R., D. Stanley, D. Yekutieli, N. Rubin, and Y. Benjamini (2006). Cluster-based analysis of fmri data. NeuroImage 33(2), 599 – 608.

- Hu et al. (2010) Hu, J. X., H. Zhao, and H. H. Zhou (2010). False discovery rate control with groups. Journal of the American Statistical Association 105(491), 1215–1227.

- Ignatiadis et al. (2016) Ignatiadis, N., B. Klaus, J. B. Zaugg, and W. Huber (2016). Data-driven hypothesis weighting increases detection power in genome-scale multiple testing. Nature Methods 13, 577 EP.

- Lei and Fithian (2016) Lei, L. and W. Fithian (2016, 20–22 Jun). Power of ordered hypothesis testing. In M. F. Balcan and K. Q. Weinberger (Eds.), Proceedings of The 33rd International Conference on Machine Learning, Volume 48 of Proceedings of Machine Learning Research, New York, New York, USA, pp. 2924–2932. PMLR.

- Lei et al. (2020) Lei, L., A. Ramdas, and W. Fithian (2020). A general interactive framework for false discovery rate control under structural constraints. Biometrika.

- Li and Barber (2019) Li, A. and R. F. Barber (2019). Multiple testing with the structure-adaptive benjamini–hochberg algorithm. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 81(1), 45–74.

- Liu et al. (2016) Liu, Y., S. K. Sarkar, and Z. Zhao (2016). A new approach to multiple testing of grouped hypotheses. Journal of Statistical Planning and Inference 179, 1 – 14.

- Meinshausen et al. (2009) Meinshausen, N., P. Bickel, and J. Rice (2009, 03). Efficient blind search: Optimal power of detection under computational cost constraints. Ann. Appl. Stat. 3(1), 38–60.

- Nandi et al. (2021) Nandi, S., S. K. Sarkar, and X. Chen (2021). Adapting to one- and two-way classified structures of hypotheses while controlling the false discovery rate. Journal of Statistical Planning and Inference 215, 95–108.

- Nichols and Hayasaka (2003) Nichols, T. and S. Hayasaka (2003, Oct). Controlling the familywise error rate in functional neuroimaging: a comparative review. Stat Methods Med Res 12(5), 419–446.

- Oscar-Berman and Marinkovic (2007) Oscar-Berman, M. and K. Marinkovic (2007, Sep). Alcohol: effects on neurobehavioral functions and the brain. Neuropsychology review 17(3), 239–257. 17874302[pmid].

- Pacifico et al. (2004) Pacifico, M. P., C. Genovese, I. Verdinelli, and L. Wasserman (2004). False discovery control for random fields. Journal of the American Statistical Association 99(468), 1002–1014.

- Ramdas et al. (2019) Ramdas, A. K., R. F. Barber, M. J. Wainwright, and M. I. Jordan (2019, 10). A unified treatment of multiple testing with prior knowledge using the p-filter. Ann. Statist. 47(5), 2790–2821.

- Sarkar (2008) Sarkar, S. K. (2008). On methods controlling the false discovery rate. Sankhyā: The Indian Journal of Statistics, Series A 70(2), 135–168.

- Snodgrass and Vanderwart (1980) Snodgrass, J. G. and M. Vanderwart (1980). A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. Journal of Experimental Psychology: Human Learning and Memory 6(2), 174–215.

- Stein et al. (2010) Stein, J. L., X. Hua, S. Lee, A. J. Ho, A. D. Leow, A. W. Toga, A. J. Saykin, L. Shen, T. Foroud, N. Pankratz, M. J. Huentelman, D. W. Craig, J. D. Gerber, A. N. Allen, J. J. Corneveaux, B. M. DeChairo, S. G. Potkin, M. W. Weiner, and P. M. Thompson (2010). Voxelwise genome-wide association study (vgwas). NeuroImage 53(3), 1160 – 1174.

- Storey (2002) Storey, J. D. (2002). A direct approach to false discovery rates. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 64(3), 479–498.

- Storey et al. (2004) Storey, J. D., J. E. Taylor, and D. Siegmund (2004). Strong control, conservative point estimation and simultaneous conservative consistency of false discovery rates: A unified approach. Journal of the Royal Statistical Society. Series B (Statistical Methodology) 66(1), 187–205.

- Yekutieli (2008) Yekutieli, D. (2008). Hierarchical false discovery rate–controlling methodology. Journal of the American Statistical Association 103(481), 309–316.

- Yekutieli et al. (2006) Yekutieli, D., A. Reiner-Benaim, Y. Benjamini, G. I. Elmer, N. Kafkafi, N. E. Letwin, and N. H. Lee (2006). Approaches to multiplicity issues in complex research in microarray analysis. Statistica Neerlandica 60(4), 414–437.