Controlling identical linear multi-agent systems over directed graphs

Abstract

We consider the problem of synchronizing a multi-agent system (MAS) composed of several identical linear systems connected through a directed graph. To design a suitable controller, we construct conditions based on Bilinear Matrix Inequalities (BMIs) that ensure state synchronization. Since these conditions are non-convex, we propose an iterative algorithm based on a suitable relaxation that allows us to formulate Linear Matrix Inequality (LMI) conditions. As a result, the algorithm yields a common static state-feedback matrix for the controller that satisfies general linear performance constraints. Our results are achieved under the mild assumption that the graph is time-invariant and connected.

I Introduction

In the last decades, the study of networks, and in particular the distributed control of networked MAS, has attracted a lot of interest in systems and control, due to the broad range of applications in many different areas [1], including: power systems, biological systems, sensors network, cooperative control of unmanned aerial vehicles, quality-fair delivery of media content, formation control of mobile robots, and synchronization of oscillators. In networked MAS, the general objective is to reach an agreement on a variable of interest.

We focus our attention on the synchronization problem, where the goal is to reach a common trajectory for all agents. In the literature, we can find several studies on scalar agents, but recent works also address networks of agents with finite-dimensional linear input-output dynamics [2]. In the case of identical agents, a common static control law inducing state synchronization can be designed by exploiting the information exchange among the agents, which modifies the system dynamics. This exchange is modeled by a (directed or undirected) graph. The spectrum of the Laplacian matrix of this graph plays an important role in the evolution of the associated networked system [3].

Necessary and sufficient conditions ensuring synchronization have been given under several different forms depending on the context [4, 2, 5, 6, 7]. A set of necessary and sufficient conditions for identical SISO agents over arbitrary time-invariant graphs is summarized in [8].

Different approaches for control design can be found in the literature depending on the desired objective. Most of the results are based on the solution of an algebraic Riccati equation, under the assumption that the static control law has a given infinite gain margin structure [9, 10, 11, 12]: the state-feedback matrix has the form , where is the input matrix and is the solution of an algebraic Riccati equation. However, imposing an infinite gain margin potentially limits the achievable performance. As shown in [9], by choosing a small enough constant, a feedback law can be designed without knowing the network topology; in practice, this constant depends on the non-zero eigenvalue of the Laplacian matrix having the smallest real part. While [10] studies the output feedback case, we consider the dual case, which is also discussed in [11]. The design procedure in [13] allows achieving synchronization under bounded disturbances, thanks to an observer-based dynamic controller, expressed in terms of suitable algebraic Riccati equations, which guarantees disturbance rejection properties. A different approach based on LMIs is presented in [14], where synchronization conditions are imposed by relying on strong assumptions on the structure of the Lyapunov matrices, while the problem size is independent of the number of agents.

In this work, we study the design of a static state-feedback control law ensuring MAS synchronization. The agents are modeled as identical LTI subsystems and their interconnections are described by time-invariant, directed, and connected graphs. We introduce a design strategy based on LMIs, similar to the one in [14], but without imposing any assumption on the controller structure or constraints on the Lyapunov matrices, thus ensuring higher degrees of freedom in the design, and potentially improved optimized stabilizers. Through a relaxation of the conditions in [8], we formulate an iterative LMI-based procedure to design a static state-feedback control law. Our LMI formulation allows us to easily embed additional linear constraints in order to reach a desired performance [15].

Notation. and denote the sets of real and complex numbers, respectively. We denote with the imaginary unit. Given , and are its real and imaginary parts, respectively; is its complex conjugate. is the identity matrix of size , while denotes the dimensional (column) vector with all entries. For any matrix , denotes the transpose of . Given two matrices and , indicates their Kronecker product. Given a complex matrix , denotes its conjugate transpose and . Matrix is Hermitian if , namely is symmetric and is skew-symmetric . We denote the Euclidean distance of a point from a set as .

II Problem Statement

Consider identical dynamical systems

| (1a) | |||

| with state vector , input vector , state matrix and input matrix . Assume that the pair is controllable. The directed graph with weight matrix captures the communication topology among the agents; its Laplacian matrix is . Denote by the eigenvalues of , ordered with non-decreasing real part (complex conjugate pairs and repeated eigenvalues are only counted once). The control input affecting agent is expressed as | |||

| (1b) | |||

where and are the entries of the weight and Laplacian matrices, respectively, and is the state-feedback matrix. Each agent uses only relative information with respect to the others, as typically desired in applications.

By defining the aggregate state vector and input vector , we can write the interconnection (1) as

| (2a) | ||||

| (2b) | ||||

The overall closed-loop expression is

| (3) |

Our goal is to synthesize a common static control law that enforces synchronization among systems (1a). To this aim, we introduce the synchronization set:

| (4) |

We recall the definition of “–synchronization” from [8].

Definition 1 (–Synchronization)

Some of the necessary and sufficient conditions for –synchronization in [8, Theorem 1] are here adapted to deal with a synthesis problem: matrix in [8] is replaced by the state-feedback matrix in the closed-loop dynamics (3). Moreover, we can exploit parameter in iterative approaches for optimization-based selections of .

Proposition 1

Consider the system in (1) and the attractor in (4). The synchronization set is –UGES if and only if any of the following conditions holds:

-

1.

[Complex condition] The complex-valued matrices

(5a) have spectral abscissa smaller than .

-

2.

[Real condition] The real-valued matrices

(5b) , have spectral abscissa smaller than .

-

3.

[Lyapunov inequality] For each , there exist real-valued matrices and such that matrix satisfies

(5c)

III Feedback Design

We aim at designing a common state-feedback matrix so as to ensure synchronization to , i.e., so as to satisfy the conditions in Proposition 1. We choose an LMI-based approach to design , which allows us to easily embed additional linear constraints in the design process. Relevant linear constraints may be related, e.g., to the gain, saturation handling, gain norm, and convergence rate [15].

III-A Revisited synchronization conditions

We can distinguish two main cases: either the Laplacian eigenvalues are all real or at least one of them is complex. In the former case, conditions (5a) can be framed within an LMI formulation, through a procedure similar to the one we describe next. In the latter case, we refer to expression (5c), where the problem is lifted to a higher space, considering instead of , so as to work with real-valued matrices. We focus on the latter case, which is more general and includes the former. Let us define the inverse of in (5c)

with symmetric positive definite and skew-symmetric. In fact, (which is invertible, since applying the Schur complement to yields ) and (where the equality holds because ). Then, we can left- and right- multiply inequality (5c) by , obtaining

| (6) |

To look for a common state-feedback matrix , even when the matrices are different, we take advantage of the results in [16]. We can rewrite (6) as

| (7) |

where describes the stability region, which in our case is the complex half-plane with real part smaller than . Then, according to [16, Section 3.1], (7) is equivalent to the existence of matrices satisfying

| (8) |

where and are multipliers that add degrees of freedom by decoupling matrices and . Conditions (8) are still necessary and sufficient for –synchronization, because they are equivalent to (7).

According to the derivation in [16, Section 3.3], imposing , with , does not add conservatism as far as there are independent matrices . We are now going to relax this condition by assuming that matrices and have the specific structure

where , with , is a block-diagonal matrix common to all inequalities, while are different multipliers for every inequality. This assumption introduces conservativeness; therefore, the conditions are now only sufficient. However, we can now expand (8) and obtain the bilinear formulation

| (9) |

with , where is related to the -th eigenvalue and is a suitable change of variables. An expanded version of (9) with the variables highlighted is provided in equation (12) at the top of the next page.

Constraints (9) alone, might lead to badly conditioned optimized selections of , due to the fact that the joint spectral abscissa of for all may potentially grow unbounded for arbitrarily large values of . Thus, as a possible sample formulation of a multi-objective optimization, we fix a maximum desired norm for and enforce the constraint through the following LMI formulation:

| (10) |

stemming from the expression after applying a Schur complement and exploiting .

We then suggest to synthesize a state-feedback matrix satisfying and maximizing by solving the bilinear optimization problem

| subject to: | (11) | |||

where alternative performance-oriented linear constraints can be straightforwardly included, and then selecting . An iterative approach can be used to make the problem quasi-convex and solve it iteratively with standard LMI techniques.

| (12) |

Remark 1

The most natural way to include the coefficient in the equations is that inspired by the techniques in [16], leading to the formulation in (9), where defines the stability region in the complex plane and the problem results in a generalized eigenvalue problem (GEVP). As an alternative, could be introduced as a destabilizing effect acting on the matrices (shifting their eigenvalues to the right in the complex plane), which are still required to be Hurwitz:

However, with this formulation, the problem is no longer a GEVP. This complicates the implementation, since the feasibility domain with respect to could be bounded (while in a GEVP it is right or left unbounded) and bisection is not appropriate. We tested this alternative approach in simulation and we obtained similar results to those achieved with Algorithm 1, with the advantage of typically reaching convergence after a significantly lower number of iterations.

III-B Iterative algorithm

In order to solve the BMI optimization problem (11) with convex techniques,

we focus our attention on BMI (9), since the other constraints are linear, and we propose

an iterative approach for the problem solution, described in Algorithm 1.

The algorithm is composed of two steps:

1)

Synthesis step: for given fixed multipliers and , , optimization (11) is solved in the decision variables , which corresponds to a generalized eigenvalue problem (easily solvable by a bisection algorithm) due to the fact that matrices are all positive definite;

2)

Analysis step: for given fixed matrices and ,

optimization (11) is solved in the decision variables , which corresponds again to a generalized eigenvalue problem due to positive definiteness of .

Algorithm 1 essentially comprises iterations of the two steps above, until parameter increases less than a specified tolerance over two steps. To the end of establishing a useful means of comparison in the simulations reported in Section IV, we naively initialize the algorithm by fixing the initial multipliers as scaled identity matrices (with properly tuned). More generally, we emphasize that using the Riccati construction of [10], stabilizability of is sufficient for ensuring the existence of a Riccati-based solution of (11) and it is immediate to design an infinite gain margin solution where all the matrices share a common quadratic Lyapunov function. We do not pursue this initialization here, so as to perform a fair comparison between our algorithm (initialized in a somewhat naive way) and the construction resulting from [10].

The following proposition establishes useful properties of the algorithm.

Proposition 2

For any selection of the tolerance, if the initial condition is feasible, then all the iterations of Algorithm 1 are feasible. Moreover, never decreases across two successive iterations. Finally, the algorithm terminates in a finite number of steps.

Proof.

About recursive feasibility, note that once the first step is feasible, for any pair of successive steps, the optimal solution to the previous step is structurally a feasible solution to the subsequent step. Indeed, the variables that are frozen at each iteration are selected by using their optimal values from the previous step. Since the cost maximized at each step is always , then can never decrease across subsequent steps and then recursive feasibility is guaranteed.

About the algorithm terminating in a finite number of steps, note that the optimal value of is necessarily upper bounded by a maximum achievable depending on the norm of matrices , , on the eigenvalue of having minimum norm, and on the bound imposed on the norm of the state-feedback matrix . Since monotonically increases across iterations and it is upper bounded, then it must converge to a value and eventually reach any tolerance limit across pairs of consecutive iterations. ∎

Remark 2

Computationally speaking, each iteration of Algorithm 1 amounts to solving a GEVP because is multiplying a sign definite matrix , and hence the conditions are monotonic with respect to . Therefore, we can find the optimal via a bisection algorithm: if the problem is feasible for , then the problem is feasible for all ; on the other hand, if the problem is infeasible for , then the problem is infeasible for all . Our objective is to find the maximum for which the problem is feasible (so that no other larger leads to feasibility).

IV Comparison and Simulations

To test the effectiveness of Algorithm 1, we compare it with other design procedures that solve the simultaneous stabilization problem. The benchmark problem is the maximization of the rate with the norm of upper bounded by , as induced by constraint (10).

& 0.657 0.654 0.684 0.686 1.197 14 1.922 3.853 4.005 4.016 4.684 5

20.00 16.23 17.95 18.05 20.00 20.00 16.72 15.93 15.93 19.99

(s) 0.125 1.562 1.109 2.703 78.81 0.047 0.516 0.531 0.719 9.156

0.107 0.171 0.178 0.178 0.374 20 1.466 1.968 1.987 1.990 2.209 8

20.00 16.37 16.47 16.58 20.00 20.00 17.43 17.28 17.28 19.99

(s) 0.047 0.984 1.109 3.594 168.9 0.031 0.375 0.562 2.359 22.17

0.577 0.654 0.654 0.654 1.096 12 1.972 3.853 3.853 3.853 4.254 6

20.00 16.23 16.23 16.24 20.00 20.00 16.72 16.72 16.73 20.00

(s) 0.031 0.953 0.812 3.000 56.25 0.000 0.219 0.359 0.703 8.062

0.093 0.075 0.075 0.075 0.368 94 1.177 1.403 1.403 1.402 1.517 18

19.97 16.84 16.85 16.85 19.95 20.00 17.94 17.94 17.95 19.95

(s) 0.062 1.266 1.828 3.859 614.8 0.000 0.391 0.438 0.781 34.39

0.657 0.715 0.715 0.715 1.201 12 2.401 4.147 4.147 4.148 5.818 66

20.00 19.93 19.93 19.93 19.99 20.00 14.00 14.00 14.00 20.00

(s) 0.062 0.922 0.828 1.344 36.09 0.000 0.438 0.188 0.531 61.02

| “a” | “b” | “c” | “d” | “e” | #iter | “a” | “b” | “c” | “d” | “e” | #iter | ||

| graph | Riccati | Listmann | Direct | Alg. 1 | Alg. 1 | Riccati | Listmann | Direct | Alg. 1 | Alg. 1 | |||

IV-A Dynamical system and network

In our simulations we consider two types of agent dynamics: one is oscillatory,

while the second one is the unstable lateral dynamics of a forward-swept wing, the Grumman X-29A, as in [14],

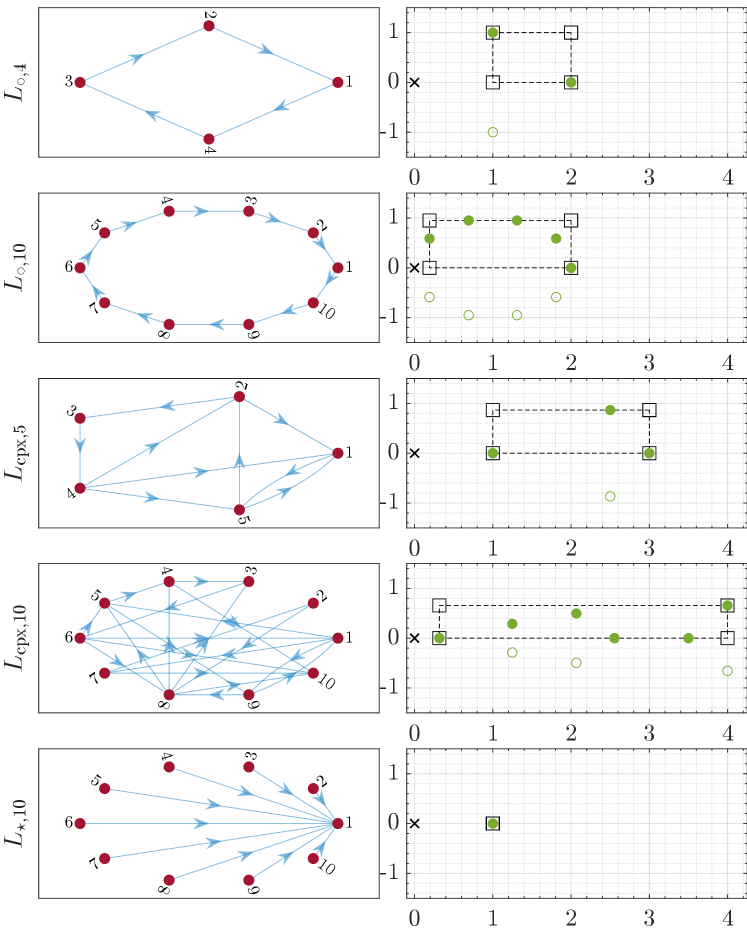

We consider five communication networks: two circular graphs with and agents, two generic directed graphs with and agents characterized by complex eigenvalues, and a star graph with agents. The graph topologies and the eigenvalues of the associated Laplacian matrices are visualized in Fig. 1.

IV-B Compared approaches

The approaches that we compare are the following ones:

- a.

-

b.

[Listmann] from [14]: LMI conditions (6) with and are imposed for the corresponding to the corners of a rectangular box in the complex plane that includes all non-zero eigenvalues of (considering the eigenvalues in the first quadrant is enough, since conjugate eigenvalues lead to the same condition), while incorporating in the LMI-based design the maximum norm condition (10). A fixed number of LMIs need to be solved regardless of the network size.

-

c.

[]: the method resembles that in “b”, but now conditions (6) are imposed for each , .

- d.

- e.

In the simulations, the convergence rate of the solutions is estimated from the spectral abscissa of matrices in (5a), namely, from the largest-real-part eigenvalue:

IV-C Results

We implement the different procedures in MATLAB, using the toolbox YALMIP [17] with MOSEK as an LMI solver. For the algorithm, we consider a tolerance of and as the bound on the norm of .

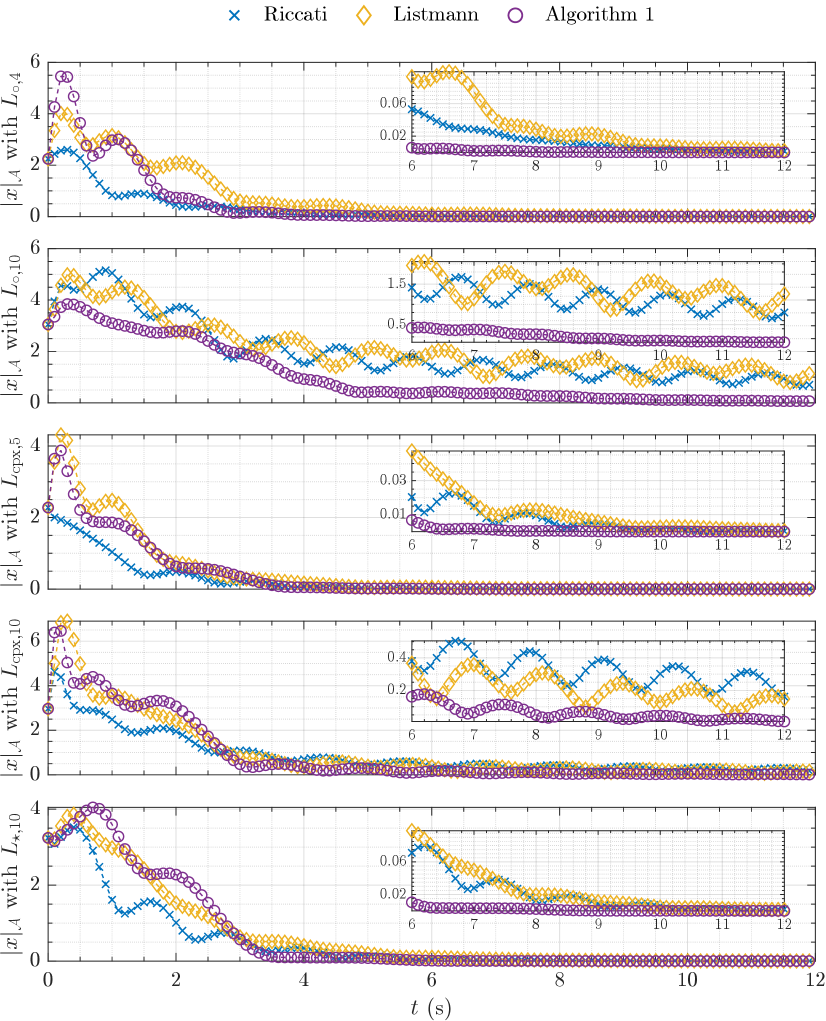

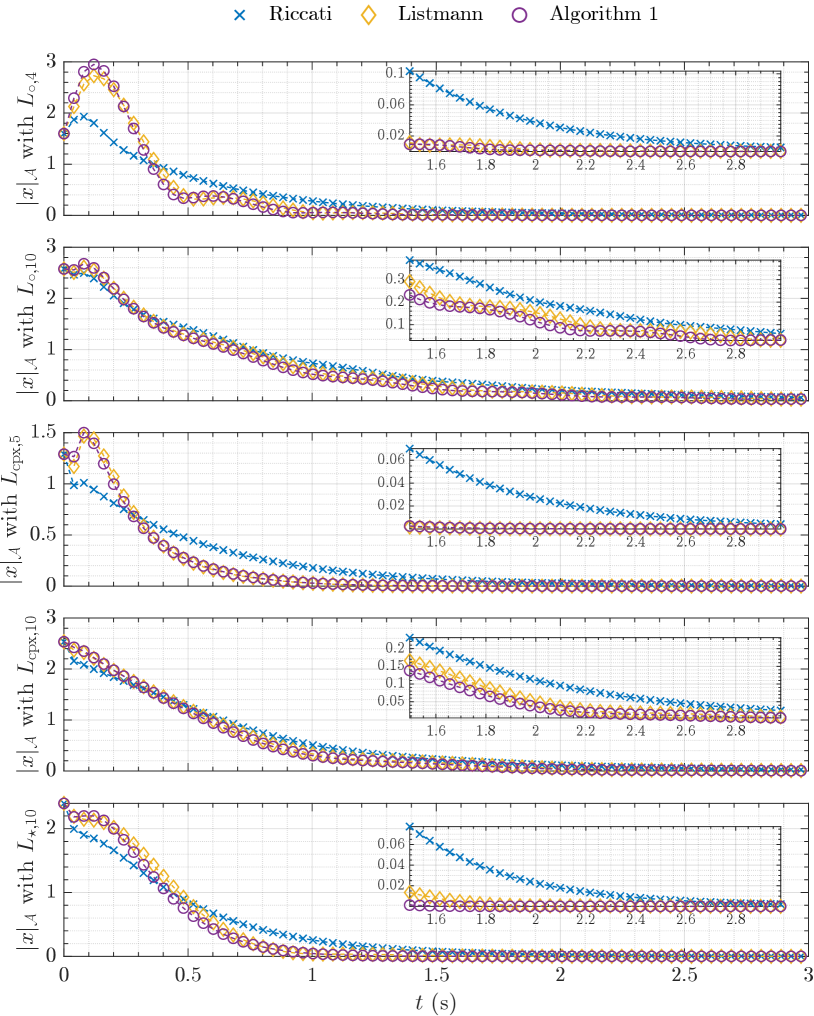

For the different combinations of dynamics and graph topologies, Table I reports a summary of all our results, along with estimated converge rate , norm of and execution time. The time evolutions of the distances from the synchronization set are shown in Figs. 2 and 3 for the approaches “a”, “b” and “e”.

Generally, method “a” has a worse performance than the considered LMI-based methods. The gain bound is reached, but the convergence rate is the slowest, most likely because the approach is forcing an infinite gain margin for . Locating the eigenvalues of the matrices in the complex plane shows that method “a” tends to move to the left a few eigenvalues (faster modes) and penalize others, so that the convergence speed is limited.

Method “b” performs similarly to “c”, as expected, since the two methods simply consider different (eigen)values. In general, method “b” is more conservative than “c”, but is faster in larger networks. With and , method “b” is slightly more conservative, as is reasonable, since it considers values that are not in the spectrum of the Laplacian.

Method “d” generally yields better results than “b” and “c”, provided that proper values of the parameter are chosen. This improvement is due to the decoupling between matrices and .

The best results are obtained using our proposed Algorithm 1, which gives the highest convergence rate and gets close to the system specifications. However, the computational load is higher, since several iterations are needed. With dynamics , the states are always converging faster; with dynamics , the performance is similar to that obtained with other non-iterative LMI-based techniques. Algorithm 1 outperforms the other procedures in the case with dynamics and graph : even though it requires quite some iterations to converge, it provides a controller that leads to almost one-order-of-magnitude faster convergence than the others, as shown in Fig. 2.

V Conclusions

We focused on the synchronization of identical linear systems in the case of full-state feedback. First we provided some necessary and sufficient conditions for the synchronization. Then, we relaxed them in order to have a new formulation that can be iteratively solved through LMIs. This new procedure to solve the simultaneous stabilization problem, although requiring relatively large computational times, turns out to give better results in our benchmark problem where the convergence rate is maximized under given constraints on the performance.

Our results pave the way for further developments, such as the use of alternative methods (e.g., convex-concave decomposition) to deal with BMIs and the extension to the case of static output feedback control laws.

Acknowledgment: The authors would like to thank Domenico Fiore for his work in the initial stages of this research activity.

References

- [1] R. Olfati-Saber, J. A. Fax, and R. M. Murray, “Consensus and Cooperation in Networked Multi-Agent Systems,” Proceedings of the IEEE, vol. 95, no. 1, pp. 215–233, 2007.

- [2] L. Scardovi and R. Sepulchre, “Synchronization in networks of identical linear systems,” Automatica, vol. 45, no. 11, pp. 2557–2562, 2009.

- [3] M. Mesbahi and M. Egerstedt, Graph Theoretic Methods in Multiagent Networks. Princeton University Press, 2010.

- [4] F. Xiao and L. Wang, “Consensus problems for high-dimensional multi-agent systems,” IET Control Theory & Applications, vol. 1, no. 3, pp. 830–837, 2007.

- [5] C.-Q. Ma and J.-F. Zhang, “Necessary and Sufficient Conditions for Consensusability of Linear Multi-Agent Systems,” IEEE Transactions on Automatic Control, vol. 55, no. 5, pp. 1263–1268, 2010.

- [6] Z. Li, Z. Duan, G. Chen, and L. Huang, “Consensus of Multiagent Systems and Synchronization of Complex Networks: A Unified Viewpoint,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 57, no. 1, pp. 213–224, 2010.

- [7] L. Dal Col, S. Tarbouriech, L. Zaccarian, and M. Kieffer, “A consensus approach to PI gains tuning for quality-fair video delivery,” International Journal of Robust and Nonlinear Control, vol. 27, no. 9, pp. 1547–1565, 2017.

- [8] G. Giordano, I. Queinnec, S. Tarbouriech, L. Zaccarian, and N. Zaupa, “Equivalent conditions for the synchronization of identical linear systems over arbitrary interconnections,” 2023. [Online]. Available: https://arxiv.org/abs/2303.17321

- [9] J. Qin, H. Gao, and W. X. Zheng, “Exponential Synchronization of Complex Networks of Linear Systems and Nonlinear Oscillators: A Unified Analysis,” IEEE Transactions on Neural Networks and Learning Systems, vol. 26, no. 3, pp. 510–521, 2015.

- [10] A. Isidori, Lectures in Feedback Design for Multivariable Systems. Springer International Publishing, 2017, ch. 5.

- [11] F. Bullo, Lectures on Network Systems. Kindle Publishing, 2022, ch. 8.

- [12] A. Saberi, A. A. Stoorvogel, M. Zhang, and P. Sannuti, Synchronization of Multi-Agent Systems in the Presence of Disturbances and Delays. Springer International Publishing, 2022.

- [13] H. L. Trentelman, K. Takaba, and N. Monshizadeh, “Robust Synchronization of Uncertain Linear Multi-Agent Systems,” IEEE Transactions on Automatic Control, vol. 58, no. 6, pp. 1511–1523, 2013.

- [14] K. D. Listmann, “Novel conditions for the synchronization of linear systems,” in 54th IEEE Conference on Decision and Control, 2015.

- [15] S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan, Linear Matrix Inequalities in System and Control Theory. SIAM, 1994.

- [16] G. Pipeleers, B. Demeulenaere, J. Swevers, and L. Vandenberghe, “Extended LMI characterizations for stability and performance of linear systems,” Systems & Control Letters, vol. 58, no. 7, pp. 510–518, 2009.

- [17] J. Löfberg, “Yalmip: A toolbox for modeling and optimization in matlab,” in Proceedings of the CACSD Conference, 2004.