274556 \course[]Computer and Systems Engineering \courseorganizerDepartment of Computer and Systems Engineering \submitdate2022 \copyyear2022 \advisorProf. Stefano Di Gennaro, \coadvisorProf. Maarouf Saad \authoremail[email protected]

Control of Automated Driving in Motion Planning

Abstract

Autonomous driving vehicles aim to free the hands of vehicle operators, helping them to drive easier and faster, meanwhile, improving the safety of driving on the highway or in complex scenarios. Automated driving systems (ADS) are developed and designed in the last several decades to realize fully autonomous driving vehicles (L4 or L5 level).

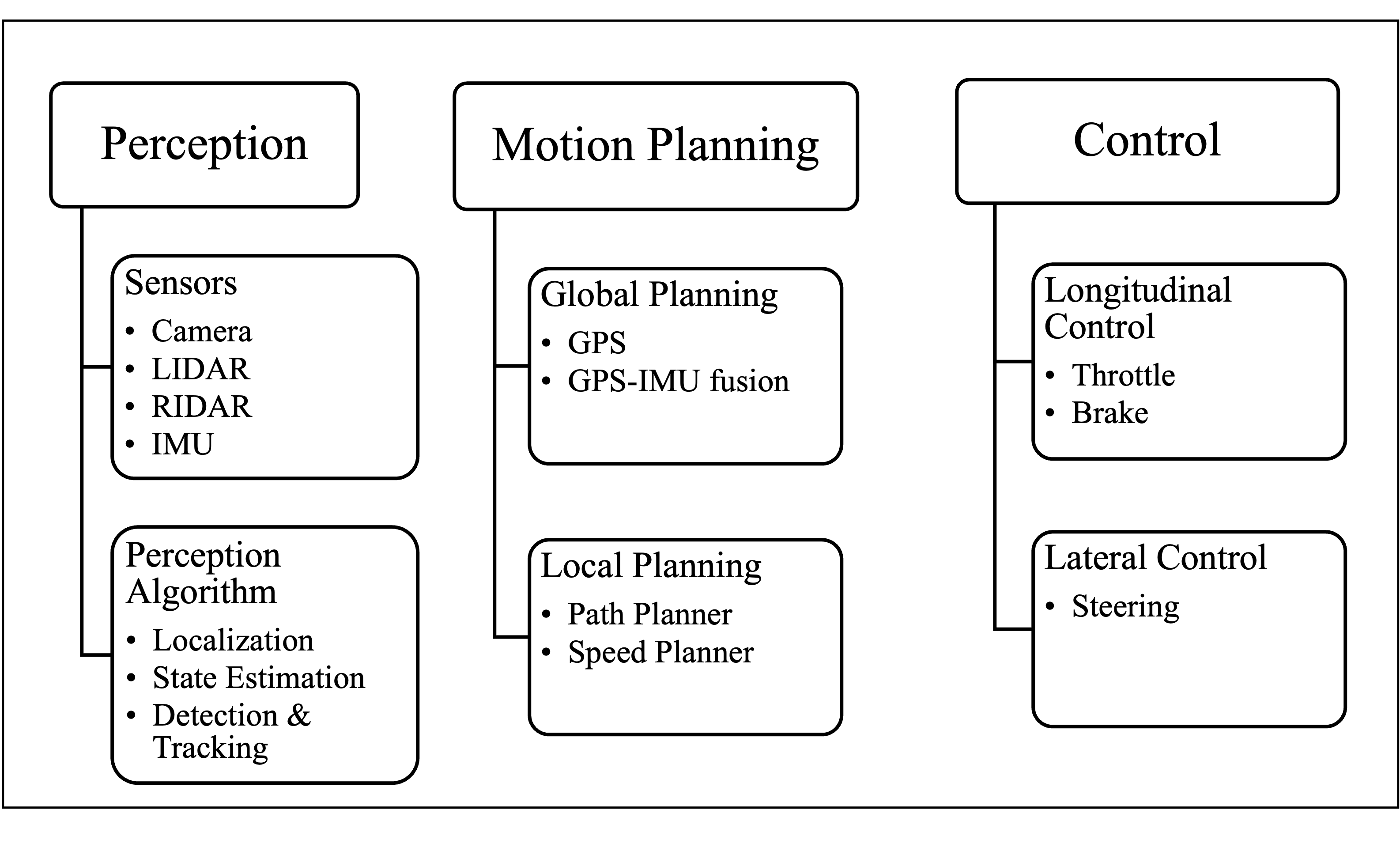

Usually, ADS can be divided into three main processes: Perception, Motion Planning and Control. Although many prototypes of ADSs were already implemented, both in scientific research centres and industries, a lot of work has still to be done, due to the complexity of different driving scenarios and the need for real-time computation. In particular, motion planning still remains one of the hardest problems to solve, since it deals with huge data from perception, and with the problem of finding (in the fastest possible manner) an optimal trajectory, to be used as a reference for the controller. Therefore, the high computational request is demanded of the CPUs onboard the autonomous vehicles, and planning algorithms with high performance have to be designed, to realize the real-time computation. Failing to find a solution in real-time could lead to a non-optimality of the reference trajectory, and to potential traffic accidents.

The scale of sampling space leads to the main computational complexity. Therefore, by adjusting the sampling method, the difficulty to solve the real-time motion planning problem could be incrementally reduced. Usually, the Average Sampling Method is taken in Lattice Planner, and Random Sampling Method is chosen for RRT algorithms. However, both of them don’t take into consideration the prior information, and focus the sampling space on areas where the optimal trajectory is previously obtained. Therefore, in this thesis it is proposed an adaptive sampling method to reduce the computation complexity, and achieve faster solutions while keeping the quality of optimal solution unchanged.

The main contribution of this thesis is the significant decrease in the complexity of the optimization problem for motion planning, without sacrificing the quality of the final trajectory output, with the implementation of an Adaptive Sampling method based on Artificial Potential Field (ASAPF). In addition, also the quality and the stability of the trajectory is improved due to the appropriate sampling of the appropriate region to be analyzed.

To prove the feasibility of the proposed ASAPF, the whole processes of Automated Driving including Perception, Motion Planning and Control have been simulated in MATLAB/Simulink, used in combination with CARSim and Prescan, which are popular simulation and visualization software for Automated Driving. The simulation results prove that the proposed ASAPF is feasible and efficient, compared with conventional Average Sampling Methods for the discretization of the continuous space, used as input to the dynamic programming analysis. Comparing the proposed ASAPF with classical Average Sampling Methods, the computational time necessary to solve the same optimization problem in real-time reduces by around 2/3, which could significantly improve the efficiency of the motion planning algorithm, and the safety of automated driving.

Keywords

Automated Driving, Motion Planning, Optimization Function, Adaptive Sampling, Artificial Potential Field, Dynamic Programming, Quadratic Programming, Nonlinear Control System, Double PID control, LQR control, MATLAB/Simulink, CARSim.

1 Introduction

Autonomous driving has promised to improve drastically the convenience of driving by releasing the burden of drivers and reducing traffic accidents with more precise control. According to a past technical report by the National Highway Traffic Safety Administration(NHTSA), 94 per cent of traffic accidents are mainly caused by human manipulating errors [27]. Nowadays, with the fast development of artificial intelligence and significant advancements in the Internet of Things (IoT) technologies, we have witnessed the steady progress of autonomous driving over the recent years. In [21], it is mentioned that annual social benefits of ADSs are projected to reach almost $800 billion by 2050 through road casualty reduction, decreased energy consumption and increased productivity caused by the reallocation of driving time.

The earliest major automated driving study is Eureka Project PROMETHEUS which was carried out in Europe from 1987-1995. In this project, VITA II by Daimler-Benz succeeded in automatically driving on highways [30]. DARPA Grand Challenge, organized by the US Department of Defense in 2004, was the first major automated driving competition. However, at this time, all the attendees failed to finish the 150-mile off-road parkour because it is difficult for the cars to drive automatically without any human intervention at any level. Urban scenes were seen as the biggest challenge of the field for ADSs, therefore, DARPA Urban Challenge [2] held in 2017 is significant for this field. In this competition, many different groups from all over the world implemented their ADSs in a test urban environment. Finally, six teams completed the event successfully. Even if this competition was the biggest and most significant event up to that time, the test environment was still not real because of the lack of certain aspects of a real-world urban driving scene such as pedestrians and cyclists. After DARPA Urban Challenge, several more automated driving competitions such as [1]-[4] were held in different countries.

Automated driving is the latest trend in the automobile industry with the enhancement of computational ability and improvement of sensor accuracy. It has been one of the most popular topics in academic institutes and high technology companies all over the world, especially in the United States of America, China and the United Kingdom. The concept of vehicle automation was envisioned in 1918 [23]. In [26], the system structure of automated driving is explicitly analysed and autonomous vehicles realize the process of autonomous driving by three steps: perception, planning and control, as shown in Fig 1.

The main focus of our work is related to the planning and control components of the architecture, specifically local planning techniques in automated vehicles, for the reason that it is one of the most difficult problems to be solved in automated driving techniques due to the unpredictable manoeuvres of other dynamic vehicles, pedestrians and other traffic participants. A review of the different approaches and concept definitions (as global, local or reactive motion planning) can be found in [10], [7], [3]. Normally, these planning techniques can be classified into four main groups depending on their techniques and implementation in automated driving: Graph Search Based Planners, Sampling Based Planners, Interpolating Curve Planners and Numerical Optimization. In the beginning, as for the automated driving problem, the basic idea is to transfer it into a graph problem. To be specific, the state space is traversed into a graph or an occupancy grid. Then, graph-searching algorithms can be implemented so as to find a possible solution. Some of these algorithms, for example, Dijkstra Algorithm in [20], [13], A-Star Algorithm (A*) [18], Dynamic A* Algorithm [29] and State Lattice Algorithm [25] have been successfully applied to the automated vehicles development. The easiest and most common graph-searching algorithms are Depth-First Search (DFS) and Breadth-First Search(BFS). BFS uses stacks to save the visited nodes, therefore it needs less execution time compared with DFS which doesn’t use stacks, but scarifying more storage space. Dijkstra is another popular and simple graph-searching algorithm, which tries to find the single-source shortest path in the graph. The configuration space is approximated as a discrete cell-grid space, lattices. A-Star Algorithm is a type of improvement of the Dijkstra Algorithm that can realize a very fast node-searching due to the design of heuristics. The most important design aspect is the determination of the cost function, which will directly decide the quality of performance of path planning. Rapidly-Exploring Random Tree (RRT) is the most commonly used method in robotics for motion planning [14], so many researchers also tested this algorithm for automated vehicles. For example, the MIT team used it for automated driving at DARPA Urban Challenge in [12]. However, the fatal drawback of this algorithm is that the resulting path is not optimal, jerky, and even not curvature continuous. The passengers will feel uncomfortable if we implement this algorithm for motion planning. RRT* in [12] got improved based on basic RRT and developed by the same team in MIT to make sure it can converge to an optimal solution. Nevertheless, the drawback we mentioned above in traditional RRT still exists in this improved RRT* algorithm. Interpolation is defined as the process of constructing and inserting a new set of data within the range of a previously known set, so it is mainly used to do smoothing tasks with a given reference line so as to satisfy vehicle constraints, and trajectory continuity and improve the performance of planning trajectory. In conclusion, the classification and their main representative algorithms are listed in the table1. In addition, the pros and cons of them are also given in the same table so as to have a better comparison.

| Methodologies | Examples | Advantage | Disadvantage |

|---|---|---|---|

| Graph Search Based | Dijkstra, A*, D*, State Lattices | Transform to a graph problem and efficiently use classic graph search algorithms | Slow due to vast areas and works only with a full-known map which is impossible in real-time implementation |

| Sampling-Based | RRT, RRT*, RRT-connect | Provide a fast solution in multi-dimensional systems. Complete algorithm and always converges to a solution (if existed) | The resulting trajectory is not continuous and therefore jerky |

| Interpolating Curves | Polynomial Curves, Bezier Curves, Spline Curves | Optimize the curvature and smoothness of the path with CAGD techniques | Depends on global planning or waypoints. Time-consuming to deal with obstacles in real time |

| Numerical Optimization | Function Optimization | Road and ego-vehicle constraints as well as other road users can be easily taken into account | Time-consuming, therefore, the optimization should be stopped at a given time horizon. |

From the table 1, we could see that all these algorithms for motion planning have their advantages and disadvantages. That means, until now, there is no one algorithm outperforming others, so we need to choose one from them or combine them together to implement our motion planning task depending on the demands of our real implementation problem. After considering the pros and cons of different motion planning technologies and their ability to implement in the virtual or real-time environment, Baidu Apollo EM Planner decided to take an optimization function combined with spline curves to generate a trajectory, [5], which could satisfy the curvature request that is important for the control module to do path following and meet the mission of low computational task, that means there is not too much demand for the high ability CUP onboard autonomous vehicles. On the one hand, spline curves, specifically polynomial curves need low computational cost compared with graph searching algorithms, especially the RRT family because of their random sampling. On the other hand, the optimization function can make sure the trajectory is smoothing enough to satisfy the request of comfort, vehicle dynamic constraints and so on.

Specifically speaking, firstly continuous construction space needs some methods of discretization, for example, random sampling, average sampling, etc. In this thesis, to solve this problem and improve the efficiency of the motion planning algorithm, we proposed our Adaptive Sampling Method based on Artificial Potential Field (ASAPF). Then based on the discrete space, Dynamic Programming (DP) is used to obtain a rough solution. After achieving the rough solution, a convex space is built around it. Then Quadratic Programming (QP) is chosen to explore an optimal solution inside the convex workspace. The solution will converge to local optima if we iteratively implement the process of path planning and speed planning. This whole motion planning part can be finished within 100 ms, therefore, it can satisfy the real-time requirement. However, with our proposed ASAPF, the complexity of the optimization problem will be reduced by adjusting the density of sampling with the help of prior information (like optimal trajectory in the last cycle), obstacles information and road boundaries and some unnecessary sampled points will be deleted before QP. Therefore, the computational time will be further shortened, meanwhile, the efficiency of our motion planning algorithm in automated driving and the safety of autonomous vehicles will be incrementally improved.

The structure of this thesis is shown below: Section 2 is the basement of our motion planning. We take Baidu Apollo EM Planner as our reference methodology, analysing the advantages and drawbacks of their algorithm. Then, our methodology (ASAPF) is proposed to solve their problem and reduce the computational complexity so as to improve the real-time implementation ability of motion planning. Section 3 introduces the details of our ASAPF and the formulation of DP and QP in the motion planning algorithm. Section 4 gives the formulation of simplified dynamic vehicle models (bicycle model) which are commonly used for automated driving and important for the analysis of control algorithm. Section 5 shows the control algorithm we implemented in the control module of the automated driving system. Linear Quadratic Regulator (LQR) and PID are chosen for the lateral and longitudinal control separately because of their stability and simplicity in the control area. Section 6 firstly explains our simulation environment (Matlab/Simulink + CARSim + Prescan) and lists the hyperparameters we used in our Simulink model. Then, in this section, the results of the simulation are shown and the comparison of the running time of DP with the original Average Sampling and our proposed ASAPF is analyzed so as to prove the efficiency and feasibility of our method. Section 7 concludes what we did in this master thesis and analyses some aspects that we didn’t consider here due to the limitation of time. Then I propose some possible directions on which I can choose to research in my PhD. period in the future.

2 Baidu Apollo and our whole methodology

Baidu which starts as a search engine company in China (like Google Searching Engine) has already been a leading AI company with a strong Internet foundation now. Since 2013, they have already been working on automated driving. After four years of researching this area, they officially published their automated driving open-source platform named Apollo in Baidu Apollo Github. Apollo is an open-source Automated Driving Platform, so everyone who is interested in automated driving could use it to study automated driving technologies and even implement it in Linux to visualize it in a virtual environment or in real vehicles. As for the motion planning module, after doing research on the existing methodologies and technologies, they decided to use the Optimization function combined Polynomial Curve. In other words, Dynamic Programming and Quadratic Programming are realized in their motion planning separately for path planning and speed planning. Then the path profile and speed profile are merged together to generate the optimal trajectory avoiding static and dynamic obstacles at the same time in the real traffic road. Their motion planning method is called EM Planner, because Expectation and Maximization are iterative used inside, which is published out in [5]. Compared with graph searching methodologies, the trajectory is less jerky and easier to realize the real-time path planning. In this chapter, the main structure of Apollo EM Motion Planner will be introduced and some problems existing in the real-time implementation will be mentioned. In the end, we will propose our method (ASAPF) and how it could solve the existing problems.

2.1 Frenet Coordinate

Nowadays, most autonomous driving motion planning tasks are developed in Frenet frames with time (SLT) so as to reduce the planning dimension with the help of a reference line, like in [31], [6] and [24]. The reason that Frenet frames are very popular in Automated Driving is the expression of equations will become simpler in Frenet Coordinate System and it will be easier to decouple the lateral and longitudinal motion planning and control.

As depicted in Figure 2, the same road in the real world looks totally different in the Cartesian frame and in Frenet Coordinate System [15]. It seems like a straight road in the latter frame after converting the road with an irregular shape from a Cartesian to a Frenet frame. It is obvious that any road could be standardized as a straight tunnel using a Frenet frame. Then, the nonlinear collision-avoidance constraints will be transformed into linear within-tunnel constraints. In addition, the originally coupled kinematic constraints are decoupled as independent polynomials in the longitudinal and lateral dimensions [16]. Then, we could easily solve our Dynamic Programming separately in the SL graph and ST graph within the Frenet frame, which seems impossible in the Cartesian coordinate system. To be specific, it is still difficult to solve a 3-Dimension SLT problem, therefore first of all, the SLT problem can be easily decoupled into two 2-Dimension problems (SL and ST). SL is the relation between the station and lateral distance. ST represents station and time. By solving the SL problem, we could get the path profile which can make sure the static and low-speed obstacles avoidance. Then, the next step is to find the relation between station and time which will create the speed profile and it can offer us a solution to avoid dynamic obstacles. Because both of path profile and speed profile have the same dimension S (station), they can be merged together by matching the station coordinate system introduced in [5] and until now, we obtain the final trajectory which can be used as input for control module to do the path following.

2.2 EM planner Iteration

As we introduced above, the 3-Dimension problem (SLT) should be divided into two 2-Dimension problems (SL and ST), and then these two problems can be optimized separately and iteratively, as shown in 3. Let us consider the SL graph first. In E-step, observed obstacles are projected in the SL graph and we need to find the optimal solution considering obstacles, dynamic vehicle constraints and traffic rules. The easiest way to solve this problem is quadratic programming which is similar to Newton’s gradient descent. However, as we all know, quadratic programming can give us a fast and good solution only in convex space. However, obviously, motion planning is not a convex problem. Thus, in the Apollo EM planner, dynamic programming is used firstly to obtain a rough solution and this solution will provide decisions or manoeuvres like nudge, yield or overtake. Then the next step is to build a convex hull based on that rough decision. Until now, convex space is already obtained and it can be used for quadratic programming to find a fast and optimal solution. Secondly, the ST graph is built to consider dynamic obstacles. The dynamic obstacles are mapped with the help of the last cycle trajectory of the ego car. The main idea is to project the last cycle’s moving trajectory on the Frenet frame so as to extract the station direction speed profile. That means we could more easily obtain an estimate of the ego car’s station coordinates given a specific time, which will be used to evaluate the interactions with dynamic obstacles. Once an ego car’s station coordinates have interacted with an obstacle trajectory point at the same time, a shaded area on the ST map will be marked as the estimated iteration with a dynamic obstacle. ST projection helps us generate the ego car’s speed profile so as to realize dynamic obstacle avoidance. Then, the final optimal trajectory is generated by the combination of the path profile and speed profile.

2.3 Existed problems and our improvement

As analyzed above, Quadratic Programming is a good method to obtain a fast and optimal solution. However, it only works on the convex space. Therefore the non-convex space needs to be transformed into convex space. In EM Planner, Dynamic Programming is implemented to search for a rough solution first and build a convex hull around it. In theory, this method seems feasible. However, in real implementation, due to the complexity of computation of Dynamic Programming, this method hugely increases the running time of programming and sometimes it is hard to obtain the solution in real-time which will lead to the failure of motion planning. Autonomous vehicles always work at high speed with other dynamic vehicles, pedestrians and bicycles in complex scenarios like urban and highways where the failure of real-time motion planning will lead to serious traffic accidents, even threatening the life of human beings. After analyzing the problem and the methodologies, we find the problem which makes the computation of Dynamic Programming heavy is Average Sampling and the computational cost of some points that the optimal trajectory will never pass through. To solve this problem, what we consider is whether we could delete some unnecessary points before Dynamic Programming optimization, so as to simplify the computational task. How to distinguish these unnecessary points from useful points becomes a difficult point that we need to consider, because we can not scarify the performance of our final trajectory to save the running time. Then, we have the idea of taking advantage of prior information (prior optimal trajectory), because the optimal trajectory in the next cycle and that in the last cycle should not change a lot because of the constraints of dynamic vehicles and the request for the comfort of passengers. Another reason is the time interval between two motion planning is only 0.1 second which is too small to have big vibration of trajectory in the normal driving condition. Therefore, the optimal trajectory in the next cycle should be close to the trajectory in the last cycle. In addition, the optimal trajectory should not be too close to road boundaries and obstacles to make sure the requested safety. Inspired by Artificial Potential Field (APF), the obstacles and road boundaries are considered as repulsive forces and the optimal path in the last cycle can create an attractive force field. Then, we could rank the points in the same station by their value given by repulsive and attractive fields. The next step is to choose the minimal several points (for example, 5 points in our experiment) to create an adaptive sampling space for dynamic programming to find the rough solution. If the row and column of Average Sampling in motion planning are 11 and 4 for example, then the complexity of computation is . After adopting our ASAPF, the complexity becomes while keeping the performance with the same quality. Our experiment in Simulink shows the running time of Dynamic Programming in ASAPF is normally reduced by 2/3 in comparison with the original Average Sampling Method and proves the feasibility of our proposed method. With the help of our adaptive sampling method, the computational request is largely reduced, so it will be faster to obtain the solution and the request for a high-performance CPU is decreased because the computational task is simplified before dynamic programming. The most important thing is, the performance of the final trajectory is not sacrificed and will even get improved if we increase the density of longitudinal sampling with our proposed ASAPF.

3 Motion Planning

Motion planning can be divided into two components: path planning and speed planning (i.e., speed profile generation). Then, the path and speed profile are merged together to generate the final trajectory. There are many conventional sampling-based algorithms for path planning in motion planning that is proven feasible and useful, for example, Rapidly-Random exploring Tree (RRT) in [11] and RRT* in [22] (an improved method by the same team), Probabilistic Roadmap (PRM) in [28], etc. Although they are in general computationally efficient to find a feasible path, these random sampling-based methods tend to fail to find the optimal path in most of the scenarios mentioned in [17]. Nowadays, the popular methods to implement motion planning are based on the Frenet coordinate system, which means we need to transform the traditional Cartesian coordinate system to the Frenet coordinate system first, and then do the motion planning in the Frenet coordinate system. After receiving the final trajectory from the motion planning part, the last step is to transform the trajectory back from Frenet to Cartesian so as to follow the path easier for the control part. According to Lindemann and LaValle (2005) [19], motion planning is an NP-hard problem following the standard of computer and algorithm, but randomization could make it easier to solve this type of finding a feasible path in even non-convex space. Due to the limitation of computational ability in the onboard CPU, there should be a trade-off between the time efficiency of the algorithm and the optimality of the solutions. Definitely, higher sampling resolution will help to find better solutions to motion planning problems, however, it will also increase the computational complexity to solve the problem at the same time, leading to dangerous manoeuvres for autonomous vehicles in real-time implementation. In [8], Ichter et al. (2018) proposed a method which is to uses a non-uniform resolution to replace the uniform resolution sampling to solve this problem in RRT-based motion planning. In [9], Kumar and Chakravorty (2012) introduced an adaptive-resolution-based sampling strategy in Probabilistic Roadmap (PRM) by explicitly utilizing the information encoded in the connectivity graph. More recently, in 2020 IFAC [17], Zhaoting Li from HIT, China implemented an adaptive sampling method to determine the trajectory by dynamic programming. However, they only used reference line and obstacles information to adjust the density of sampling, disregarding the information from history information, therefore, the performance and the running time didn’t get too much improved. In their future work, they also suggested that history information should be used to dynamically adjust sampling density [17]. Based on their drawback and experience, we proposed our adaptive sampling method based on historical information to save the running time of dynamic programming in real-time.

Here is the structure of my method in Figure 4. Initially, we admitted that the global path has already been provided by the upper layer, so we could focus on the local planning part to avoid obstacles. The global path only roughly tells us which way we could choose to go, like the information offered by GPS navigation, but not how to go exactly and how to avoid obstacles and follow traffic regulations. Therefore, we need the local planner to achieve the final trajectory. The global path is always too long and too jerky to guild autonomous vehicles to drive in real time. That’s why the first step is to cut the global path into the local path and smooth it into a reference line, which will be discussed more specifically later. The reference line is represented in a Cartesian coordinate system, which is not easy to decouple and solve our motion planning mission. To solve this problem, the common method is to transfer the reference line from the Cartesian to the Frenet coordinate system. Then, the 3-Dimension problem (SLT) in the Frenet frame could be easily decoupled into two 2-Dimension problems (SL and ST) so as to simplify the problem because it is much easier to solve two 2-Dimension problems than solving a 3-Dimension optimization problem. In the next, adaptive sampling methods based on historical experience are implemented both in the SL graph and ST graph, and the computational task is incrementally simplified without scarifying the performance of the planning trajectory. Path planning modules including Dynamic Programming (DP) and Quadratic Programming (QP) will build path profiles, which will be used as the initialization for the ST graph, and then speed planning will offer us a speed profile after DP and QP. The last step is to merge path and speed profiles to generate the final planned trajectory and transform it back from Frenet to the Cartesian coordinate system because it is easier for the control module to do path following in the Cartesian coordinate system.

3.1 Reference line

The reference line is the link between global planning and local planning. Global planning offers guidance to the latter and local planning specifies how to avoid collision with obstacles on the road in real-time. There are mainly three reasons why a reference line is necessary and the global route is impossible to be directly used for local planning. The first reason is that the global route is too long to follow (for example, our GPS could offer us a way around 100 km from L’Aquila to Rome) so the computational task will become too heavy for the CPU with the global route. The easiest way to solve this problem is to prune the global route and generate a reference line. Secondly, our ego vehicle and obstacles need to be projected in the same line together so as to compare their positions and avoid collision between them. However, if we consider the global route as our reference line, the projection of ego vehicle and obstacles may not be unique which will make the failure of collision avoidance, like the example in Figure 6. The last reason is that the global route is too jerky (smoothing enough), so the passengers will not feel comfortable in autonomous vehicles with that kind of jerky trajectory. The generated reference line will offer us a smoother line as input for local planners. The normal way to create a smoothing reference line is Quadratic Programming (QP). The cost function (QP) includes three parts: reference cost, smooth cost and length cost, so we have the cost function equation:

| (1) |

This cost function above is used only for three neighbour points starting from the first point in the global route every time. It will be iteratively implemented until the length of the reference line is enough. Normally, in our experiment, the length we choose to use is 180 meters. The length before the projection point of the ego vehicle is 150 meters, and the length after is 30 meters, as shown in Figure 5.

The reference line should be smoother than the original global route, but the deviation between them should not be too much, therefore reference cost is important here and the formulation is given below:

| (2) |

Here and are the coordinates of discrete points on the reference line.

The smoothing function means the second point should try to keep not too far from the middle of the first and third points, therefore the equation of smoothing cost is given below:

| (3) |

The last cost is length cost, which means the points in the reference line should be as close as possible to neighbour points:

| (4) |

3.2 Cartesian and Frenet frame Transformation

In this section, the Coordinate system transformation problem is mainly discussed. As shown in Figure 7, the curve road boundaries in the Cartesian frame will be transformed into straight in the Frenet frame and the nonlinear constraints will become linear which is easier to solve our optimization function in motion planning task. In addition, the longitudinal distance will be presented as S (Station) and the lateral distance with reference to S will be shown as L (Lateral), so the problem will be decoupled into two separate directions. Assume we have , , , in the Cartesian frame, the objective of transformation is to obtain the coordinates based on the reference line in Frenet coordinate system. Specifically speaking, we need to obtain , , , , ,, , . The definition of these variables is given below:

| (5) |

The process of how to transform is a little complex, so we put the proof of Cartesian to Frenet frame using a vector method in Appendix 9.2 and the Frenet to Cartesian is ignored here. Here the result is directly given in this section.

-

•

Cartesian to Frenet

(6) -

•

Frenet to Cartesian

(7)

3.3 Path Planning

The objective of path planning is to offer a path with coordinates SL (Station and Lateral) so that our ego vehicle following this path will avoid collision with static and low-speed dynamic obstacles. As for the high-speed dynamic obstacles, the problem will be solved in the speed planning section. By merging the path profile from path planning and speed profile from speed planning, the final trajectory will be generated, which will satisfy our goals - avoid multiple dynamic and static obstacles.

Adaptive Sampling in SL graph

In sampling-based motion planning algorithms, the number of samples determines the complexity of the computation required to solve the problem. Conventionally, the uniform sampling method will require a large number of samples and more computational resources as well. A uniform sampling pattern can be found in Gu et al. (2016), which is shown in the first picture of Fig 8. The quality of the path generated by this uniform pattern is highly dependent on the resolution. However, more running time during dynamic programming in the next step is needed if we increased the resolution in uniform sampling to achieve a trajectory with higher performance. To solve the problem that existed in the uniform sampling method, an adaptive sampling method is proposed by us so as to dynamically adjust uniform sampling patterns based on the history information. Adaptive sampling will automatically adjust the density of sampling based on the partially-observed information and the historical experience in the real-time implementation. In our motion planning experiment, roads are always bounded by two edges, and it is easy to obtain the optimal trajectory from the last cycle and the obstacles information in the real-time implementation. Therefore, we proposed our adaptive sampling method, which will take into consideration the historical trajectory, obstacles information and road boundaries.

In the next, the details of the implementation of our Adaptive Sampling Method will be presented. First of all, uniform sampling is realized as initialization, the same as shown in Figure 8. Suppose the number of layers is , and a layer is denoted by (i = 1,2,…, N), and in every layer, the number of nodes is M, so the location of a node on is denoted by (i = 1,2,…, M). Then, inspired by Artificial Potential Field (APF), the repulsive field and attractive field are defined so as to predefine and compare the possibility of being nodes of optimal trajectory in the next cycle.

Let us define the total APF force first, which equals the sum of repulsive force and attractive force. In our problem, repulsive force is given by obstacles and road boundaries and the optimal path in the last cycle offers us the attractive field in the theory:

| (8) |

-

1)

Obstacle Repulsive Field

The repulsive field given by static obstacles depends on the distance between sampling points and obstacles boundaries. Because the obstacle may not be symmetry with respect to the reference line, here we defined the obstacle field function based on the obstacle upper-bound and obstacle lower-bound :(9) Here is the coefficient of obstacle repulsive field. For the consideration of safety, it is defined as 1000 in our Simulink implementation.

-

2)

Boundary Repulsive Field

Then, the boundary repulsive field is given here. Similar to the form of the obstacle field, the form of the equation is defined depending on the distance between lateral deviation and lateral road upper boundary , the distance between that and lateral road lower boundary as well:(10) is the coefficient of the boundary repulsive field. By testing in our experiment, if the value is chosen as 20, our adaptive sampling method performs well. In addition, it is important to mention that, the objective of forming the equation when like that is to make sure the continuity of equations.

-

3)

Trajectory Attractive Field

In a real implementation, it is not difficult to obtain the optimal trajectory in the last cycle of motion planning. This information can be fully used to converge the unique sampling area into a more feasible and smaller space for the reason that the optimal trajectory will not vibrate too much in 100 milliseconds time intervals in a normal situation.Here is the coefficient of the trajectory attractive field. In our experiment, the value is chosen as 20. In the first cycle, there is no optimal solution from the last cycle, which will lead to the deadlock problem in the Simulink simulation. To solve this problem, a delay with initial values is added to this signal. The initial value is given from the reference line.

In this Figure 9, we see the visualization of the effect of our ASAPF. Depending on the distance with obstacles, road boundaries and history optimal trajectory, the sampling points in each column are adaptively adjusted so as to focus more on the area where the next optimal trajectory is easier to appear.

Dynamic Programming in SL graph

Until now, we have already transformed the reference line generated based on the global route from Cartesian into Frenet coordinate system, and our proposed adaptive sampling method has already been implemented to decrease the scale of sampling meanwhile maintaining the possibility to obtain optimal path. Then, in this section, the problem becomes how to find an optimal function of lateral coordinate w.r.t. station coordinate in non-convex SL space. Quadratic programming is fast and efficient enough to solve a 2-D optimization problem but the premise is that the problem has to be within convex space. It is obvious that our motion planning task is not a convex problem. Therefore, dynamic programming is used before quadratic programming to make path decisions, or in other words, provide a rough path profile. Then based on that rough path profile, a convex space will be built where quadratic programming could be implemented easily to take into consideration dynamic vehicle constraints and make the final path smoothing which is important for autonomous vehicles to follow in the control part.

After constructing sampling space using the adaptive sampling method, each graph edge could be evaluated by the summation of cost functions. Smoothness, obstacle avoidance and reference line should be considered together in dynamic programming. Therefore, the total cost of every edge in dynamic programming could be defined in the form below:

| (11) |

-

1)

Smoothing Cost

Let us give the smoothing cost first. By Newton’s theory, it is easy to know that lateral velocity is the derivative of a lateral function . Due to the same reason, the derivative of lateral velocity means lateral acceleration . Here lateral jerk is defined as the derivative of lateral acceleration , which represents the rate at which an object’s acceleration changes with respect to time. The jerk will influence the comfort of passengers, so it should be decreased as possible as we can. The smoothing cost has the form below:(12) -

2)

Obstacle Cost

If the distance between lateral derivation and the obstacle centre point is less than the boundary, it means there will be a collision at that point, so a large value will be given as obstacle cost so as to detect an infeasible path. Here in equations, for simplicity of expression, we define the large value as . Then, if the distance is over the safety distance, the influence of that obstacle could be neglected, so the obstacle cost will become 0. Between collision distance and safety distance, the obstacle cost will be defined as a monotonically decreasing function with respect to the distance, as shown below:(13) -

3)

Reference Cost

If there are no obstacles on the road, our ego vehicle should try to follow our reference line. Therefore, a reference cost is defined to incrementally punish the path with respect to the distance with the reference line:(14)

In the content above, the cost function is defined which could give cost to every single edge. We could set the initialization point as layer 0, and the cost of nodes in this layer is 0 too. The costs of nodes in the current layer are equal to the minimal sum of edge cost and cost of nodes in the last layer. Repeat that step until the nodes in the final layer are given values of costs as well. Then try to search the minimal cost in each layer from the final layer back to layer 0, which will give us a feasible path that is the result of dynamic programming. The details of the DP process are given in Algorithm 1.

In our implementation, it is easy to know that it is impossible to connect sampled nodes using straight lines because they can not satisfy the constraints of curvature and jerk. To solve this problem, the fifth-degree polynomial curve is used which could satisfy the requirement to jerk and does not make the problem too complex to solve at the same time. In Appendix 9.1, I will explain why the fifth-degree is chosen, rather than another degree, for example, the fourth-degree polynomial which is easier to solve, and the sixth-degree polynomial which is more accurate.

Quadratic Programming in SL graph

A rough path profile has already been obtained by dynamic programming (QP) in the last step. That means we have already decided to pass the obstacle from the left side or right side. Next, a convex space is built based on the obtained rough path. Quadratic Programming could be seen as a refinement of the dynamic programming path step. Normally, before implementing QP, objective functions and constraints should be defined in advance. The objective function is mainly related to a linear combination of smoothness costs which have the same form as in dynamic programming. Mathematically, the QP path step will optimize the following function:

| (15) |

Here represents the DP path result.

The constraints in the QP path include boundary constraints and dynamic feasibility. They are defined in discrete formulation, so let us note:

| (16) |

Suppose the third order derivative of between and is a constant and the higher orders of that are 0. So we could use Taylor expansion here in and .

| (17) | ||||

By simple transformation for that equation, we will have:

| (18) | ||||

It could be written in matrix form:

| (19) |

Note the left matrix on the left side of the above equation is . This constraint is only for two neighbour points. Suppose we have that needs to be optimized. If we implement this constraint for each two neighbour points, we will have the total equation constraint:

Then the simple inequality constraint could be defined as:

| (20) |

If we consider the volume of the vehicle, like in Figure 10, we need to make sure that all four edge points have to be within the range of the road boundary. Therefore, we have the expression of four endpoints:

| (21) | ||||

Because is a very small value, by trigonometric functions, it is easier to do the approximation:

| (22) |

So the equation 21 could be rewritten as below:

| (23) |

For safer manoeuvre and easier implementation, we first find the minimum of between and and note it as . So our constraints become:

| (24) |

For the same reason, the same constraints are implemented for the lower boundary, therefore, in the end, we will have the inequality constraint:

| (25) |

Rewrite 25 in matrix form, we will obtain: ,

| (26) |

Then the last step is to write it in to satisfy the inequality constraint formulation in Quadratic Programming:

| (27) |

3.4 Speed Planning

As for static obstacles and dynamic obstacles at low speeds, the path profile obtained by path planning is enough to realize obstacle avoidance. However, in automated driving motion planning problems, it is totally not sufficient if only static and low-speed obstacles are considered for the reason that there are always other vehicles, pedestrians, bicycles, etc on the road. Therefore, speed planning is designed to avoid dynamic obstacles. We admitted that path planning has already been finished. That means we have a feasible path profile to avoid obstacles. Based on that path profile, speed planning will tell the automated vehicle to drive following a specific velocity so as to avoid dynamic obstacles. Similar to path planning, the first step is also adaptive sampling, but in the ST graph. Then, the formulations of DP and QP for the ST graph in adaptive sampling space are analysed.

Adaptive Sampling in ST graph

Firstly, the station coordinate system is the path in a Cartesian coordinate system. In the next several seconds, if the dynamic obstacles will be projected on the station coordinate system, that will be presented in the ST graph.

In the past, uniform sampling is implemented in the ST graph, leading to huge computation complexity. Therefore, we also proposed to implement adaptive sampling using dynamic vehicle constraints here so as to delete some impossible nodes before dynamic programming. To formalize the problem, vehicle dynamics are given in the form: , ,

Similar to path planning, here we defined the cost function of our ASAPF:

| (28) |

-

1)

Smoothing Cost

(29) -

2)

Obstacle Cost

(30) -

3)

Constraint Cost

(31)

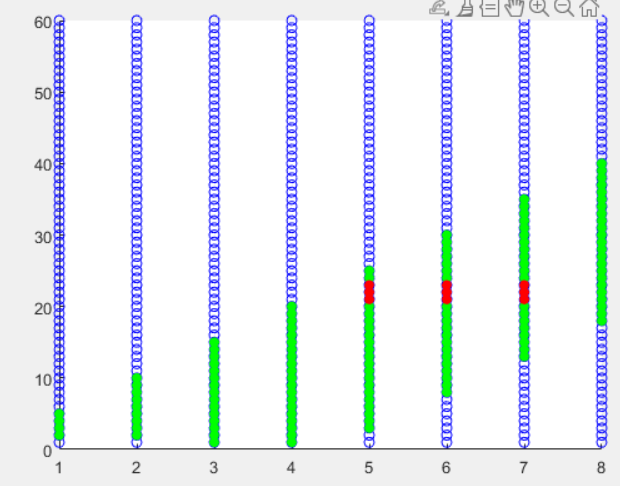

For example, if , , prediction period , , , by implementing our adaptive sampling method in ST graph, we will have Figure 11. During this figure, the blue points are the result of average sampling, and the green points are the result of our adaptive sampling. We could see from the graph that the useless nodes have already been removed before Dynamic Programming in ST graph, therefore the computational task is reduced too, same as adaptive sampling in path planning.

Dynamic Programming in ST graph

From the perception module, it is not difficult to obtain information on dynamic obstacles (x coordinate, y coordinate, velocity and heading angle). Then, these dynamic obstacles need to be projected on the ST graph we built in the last step to represent the prediction of the trajectory of dynamic obstacles. For the reason of simplicity, the velocity of dynamic obstacles is supposed to be constant. This assumption will not lead to the failure of speed planning, because our planning time interval is very short (around 100 milliseconds). During this short interval, speed could be approximated as constant. Similar to path planning, the goal of speed planning is to find the best speed profile on the ST graph which is a non-convex optimization problem too. Therefore, dynamic programming combined with quadratic programming is used to find a smooth speed profile on the ST graph. In detail, a piece-wise linear speed profile function is represented as on the grids. The finite difference method is taken to approximate the derivatives. If represents the time interval on the time axis between two evaluated points with equal space gap, then it is easy to give the formulation of velocity , acceleration , and jerk :

| (32) |

| (33) |

| (34) |

The cost function needs to be specifically defined for the speed profile here because it is different from that in the SL graph. The idea is also to optimize a cost function in the ST graph within the constraints. In detail, the cost function for dynamic programming in speed planning is represented as follows:

| (35) |

If there are no obstacles, the vehicle should try to follow the reference velocity, so the first term is the velocity-keeping cost. The acceleration and jerk square integral describes the smoothness of the speed profile. The last term, decided by the distance with obstacles’ boundaries, describes the total obstacle cost.

Quadratic Programming in ST graph

After Dynamic Programming in the last step, we have already known that the autonomous vehicle needs to accelerate or decelerate to avoid dynamic obstacles avoidance. Then, the next step is to refine the speed profile so as to improve the success of path following in the Control module and the comfort of passengers. Same as in path planning, we defined the cost function and constraints in Quadratic Programming too here. The cost function is described as follows:

| (36) |

4 Vehicle Models

4.1 Kinematic Model

According to [26], the Kinematic model of autonomous vehicles is the study of the motion of a system without taking into consideration the forces and torques. It is sufficiently approximate to perform non-aggressive manoeuvres at lower speeds. However, if we consider something like a racing car, automated driving on the highway or in complex scenarios, the kinematic model would fail to capture the actual system dynamics. In such occasions, dynamic models are needed to consider forces and torques as well.

In this section, the kinematic bicycle model which is the most widely used nowadays is presented. In practice, this model tends to strike a good balance between simplicity and accuracy and is therefore widely adopted. The basic idea of modelling is to see how the vehicle states change over time based on the previous states and current control inputs. First, vehicle states are defined, constituting and components of position, heading angle or orientation and velocity . In other words, self-vehicle state vector is defined as follows:

| (37) |

First, control vector is defined as following:

| (38) |

Due to the limitation of acceleration and deceleration based on the physical throttle and braking, the input should be limited in the range of . Additionally, The steering command alters the steering angle of the vehicle, where such that negative steering angles dictate left turns and positive steering angles otherwise.

The kinematic model of the vehicle as shown in figure 12. The slip angle can be computed using the distance between the rear wheel axle and the vehicle’s centre gravity, such that:

| (39) |

therefore,

| (40) |

The form of the slip angle could be further simplified if we ideally choose as half of L. Then we have:

| (41) |

The derivative of x and y is equal to the vectors following the direction of coordinate x and coordinate y separately. Therefore, we could easily compute them with the relation of , and velocity vector .

| (42) |

| (43) |

The next step is to calculate . According to Figure 12,

| (44) |

So should be computed in advance. Following the basic trigonometry, it is easy to get:

| (45) |

In addition,

| (46) |

Therefore,

| (47) |

Until now, we could combine 0.8 and 0.11 to compute , and

| (48) |

Finally, we can compute using differential relation.

| (49) |

Now let us formulate the continuous-time kinematic model of autonomous vehicle

| (50) |

The last step is to transfer the kinematic model from continuous time to discrete time to obtain a state transition equation(). Here means the current time instant and represents the next time instant.

4.2 Dynamic Model

The dynamic model of two wheels in a 2-D bicycle vehicle is shown in Figure 13, based on which we will analyze the dynamic model of vehicles. Suppose the front wheel angle is small in our model, and the lateral force of the wheels follows the equation below (C means cornering stiffness):

| (51) |

Then, by Newton’s Law, we have:

| (52) | ||||

Because is very small, , so the function 52 will become:

| (53) | ||||

From Figure 13, it is easy to obtain the expression of , and .

| (54) |

So Equation 53 could be expressed as:

| (55) |

Then it could be written in matrix form:

| (56) |

Until now, the form of a nonlinear control system for two wheels bicycle model has already been provided, similar to . Here . By adjusting the input of control , we could control and , stabilizing the system. In the next part, Linear Quadratic Regular (LQR) will be used for the lateral control in the path following module.

5 Control Algorithm

Normally the control system of an autonomous vehicle could be divided into longitudinal control and lateral control. The component controls the longitudinal motion of the ego vehicle, considering its longitudinal dynamics. The controlled variables in this case are throttle and brake inputs to the ego vehicle, which govern its motion including velocity, acceleration, jerk and higher derivatives in the longitudinal direction. As for lateral control, the controlled variable, in this case, is the steering input to the ego vehicle, which governs its steering angle and heading. It is important to mention that the steering angle and heading are different. The heading is related to the orientation of the ego vehicle, while the steering angle describes the orientation of the steerable wheels and hence the direction of motion of the ego vehicle.

5.1 Lateral Control

In the last section, we have already obtained the lateral control system of the 2-wheels bicycle model , and the error of lateral control is

| (57) |

is already given by the reference trajectory, and should satisfy the equation above. According to Control Theorem, it is easy to obtain:

| (58) |

The objective of lateral control is to decide to make sure and close enough, which means to minimize . Output

| (59) |

-

1.

Step 1: A, B matrices are computed by the computation module with and the parameters of vehicles. Then K will be computed with A, B, Q, and R as input.

-

2.

Step 2:According to the position and station, first do the prediction for the next step, then compute and .

(60) After stabilizing, , and

(61) -

3.

Step 3:Compute Our objective is to choose , so as to make to be 0 as soon as possible.

(62) So will become 0 when:

(63) could not be influenced by , so the system is not controllable. However, by estimation, we get the result that , so is almost a constant and equals to .

-

4.

Step 4:

(64)

We could define a cost function related to and

| (65) |

Q,R are the symmetric matrix, and are the column vectors The constraints for this cost function is

| (66) |

The constraint is linear form, therefore it represents L in LQR. L plus QR is called the LQR algorithm in control theory.

Here the proof is neglected because it is easy to find it in any traditional Control theory book. The result of is given here:

| (67) |

could be neglected here because changes very small and is almost 0. So we have the form:

| (68) |

During this equation, equals to , equals to steering angle . Until now, our problem has already been transformed into a linear control system, with matrix and . By adjusting , it is easy to make the system tend to be , or minimize in a limited time.

5.2 Longitudinal Control

As for longitudinal control, one of the easiest control laws - PID is chosen because of its simplicity and feasibility. PID is so easy and common that we will not discuss it in more detail about it here.

6 Simulation and Results

6.1 Simulation environment

In a real implementation, Matlab/Simulink is the main tool for motion planning and controlling algorithm. Besides Simulink, CARSim is used to offer the dynamic module of vehicles. Prescan is chosen because it can offer a huge variety of sensors and simulation environments. The most important thing is both of the simulation software offer an interface with Matlab/Simulink. The Simulink module and the initialization file of the Motion Planning and Controlling part have already been uploaded to my GitHub: link with all the models and codes in my experiment.

6.2 Adaptive sampling visualization

Here we used a scatter graph for the visualization of our Adaptive Sampling with an Artificial Potential Field. Firstly, an average sampling is implemented to divide the continuous space into discrete, as shown in Figure 15

Then, in the next, ASAPF is used to calculate the cost for all the nodes in average sampling depending on their distance with an optimal trajectory in the last cycle, obstacles and road boundaries. One example of the cost is shown in Figure 16. The label [-5,5] refers to the road width (10 meters). [0, 100] means the planning length is 100 meters. The value in the third dimension represents the cost of every single node in average sampling. Then, we just chose several nodes with a smaller cost in each station and build our adaptive sampling area. Our methods will delete the unnecessary points in average sampling, saving the running time of dynamic programming in motion planning without scarifying the performance of planning trajectory, as shown in Figure 17.

6.3 Result comparison

SL graph computation

The experiment result is shown in Figure 18. The blue bar graph means the computation time of dynamic programming with original average sampling, and the orange bars represent the result of our ASAPF. The results clearly show that the running time of programming is hugely decreased, around average. In the next Figure 19, the optimal path performance in original average sampling and in adaptive sampling are compared together. The value we chose to compare is the minimal cost of dynamic programming which means the optimal path computed by programming. The result is almost the same which means the path performance is not influenced by our ASAPF. Therefore, it shows clearly that our Adaptive Sampling Method is feasible and useful to decrease the running time of dynamic programming without scarifying the performance of optimal solution at the same time.

ST graph computation

In the ST graph, Adaptive Sampling is also implemented, aiming to delete unnecessary points depending on the vehicle constraints. As shown in Figure 20, with adaptive sampling, the green nodes mean the real feasible area for the next dynamic programming to solve the problem (red nodes mean obstacles). The ST computation time comparison is shown in Figure 21, the left bar graph represents the original average sampling, and the right graph means the running time of dynamic programming with our adaptive sampling in the ST graph. By comparison, it is easy to prove the feasibility of our method in saving running time and improving the performance of the motion planning algorithm in automated driving.

7 Conclusions and Future Works

7.1 Conclusion

The objective of this thesis has been to speed up the dynamic programming for the motion planning of automated driving, using adaptive sampling to replace the conventional average sampling. An adaptive sampling has been proposed and formulated on the basis of the information on obstacles, road boundaries, prior optimal trajectory in the last cycle, and vehicle dynamic constraints. Based on the motion planning algorithm in Baidu Apollo - EM Planner, we have implemented the motion planning and control algorithm in MATLAB/Simulink. They have been co-simulated with Prescan and CARSim, which offer the model of sensors, dynamic vehicles and simulation environment, the model in Simulink proved the feasibility of our proposed adaptive sampling and the running time of dynamic programming has been reduced by 2/3 while keeping the same performance of final optimal trajectory. The improvement is significant for the motion planning module for the reason that the efficiency of the algorithm is very important for real-time implementation and the less time the algorithm needs to run, the more time the automated vehicle will have to react to some accident occasions and the safer the whole automated driving process will be.

7.2 Future work

Due to the limitation of the time span of this thesis, there are still some points that can be improved and are here indicated as future work.

To simplify the prediction of dynamic vehicles, the speed of obstacle vehicles was assumed constant in simulation tests, which is not the case in real cases. In this regard, a tool usually used relies on Neural Networks. For example, RNN or LSTM are commonly used to train a model to predict the trajectory of dynamic vehicles in many recent papers. However, more study is necessary to accurately predict the speed and trajectory of dynamic obstacles with such a tool. In addition, Game Theory is recently studied in automated driving problems, because other drivers in dynamic vehicles or other autonomous vehicles have their manoeuvres too when encountering other vehicles. The problem that they will yield or overtake should also be taken into consideration in the automated driving process, otherwise, there will be a failure of automated driving which will lead to heavy traffic accidents and be dangerous to human society. Last but not least, Dynamic programming has already been proven feasible for motion planning problems, but not the best solution for this problem. Another algorithm such as RRT*, or control theory (MPC) should also be studied in my future period to compare the performance of different methods.

Acknowledgements

This is a master’s thesis, finished at L’Aquila University, supported by Epico (Electric Pulsion and Control), an Erasmus Mundus + Program. This program includes four universities: Ecole Centrale de Nantes in France, L’Aquila University in Italy, Kiel Univerity in Germany, and the University in Romania. I spent the first semester in ECN, Nantes, France. Then, the last three semesters are spent in UAQ, L’Aquila, Italy. My tutor in UAQ is Professor Stefano Di Gennaro and my co-tutor is Professor Maarouf Saad from ETS, Canada. This master’s thesis lasts around 4 months, from March to July. In the beginning, It was arranged to finish this thesis in Canada with Professor Maarouf Saad in present. However, due to the limitation of time and the visa issue, I could not go to Canada, so we changed our plan and then I decided to stay in L’Aquila to continue my thesis. Every Friday, we have a meeting with Professor Stefano and Professor Maarouf online in a Zoom meeting. Also, another Indian student whose name is Mohit is also supervised by these two professors. These two professors are really responsible for us and helped us a lot during this period. They give me a lot of suggestions and advice which helped me improve a lot in research. During this period, I found my interest in researching. They gave me enough freedom to find my interest in automated driving, and according to their knowledge, offered me sincere advice every time. I really appreciate the process of my master’s thesis in the last four months. Therefore, I would like to continue my PhD study in the next. There are a lot of problems in the automated driving area I would like to research more and try my best to solve them. For example, as mentioned in future work, the prediction of other vehicles and pedestrians still remains a difficult topic to be solved in automated driving. My dream is to create a fully autonomous vehicle, L4 or even L5 level. It will be my pleasure if I could try my best to contribute a little bit in this area of automated driving. In my mind, technologies and science are like magic because they could do something others think impossible. In the last ten years, smartphones and the Internet are the hot topics. In the next ten years, automated driving and IoT will become the future. Based on my experience and knowledge, I am really happy that I could join in this evolution.

Chapter 8 Appendices

9 Appendix

9.1 Appendix 1

First of all, the definition of a jerk is given here. Jerk equals the rate of change of acceleration in time intervals related to the comfort of passengers in the real implementation of automated driving. In other words, if the trajectory is too jerky, the passengers will feel uncomfortable. Therefore, our main objective is to minimize the jerk:

If function is quadratic or linear, definitely jerk will be minimal. However, there are six constraints the curve connecting two points needs to satisfy:

| (8.1) |

Then, it is easy to obtain that:

| (8.2) |

Let us note that , and . Then, the constraints will become:

| (8.3) |

Until now, the problem has already become how to solve problem under the constraints

| (8.4) |

Here Euler-Lagrange Equation is used to solve this problem:

| (8.5) |

Euler-Lagrange Equation tells us the objective function will have minimal value if the equation below could be satisfied:

| (8.6) |

By the expression of , we could have: , , , Then, the final result is obtained like that: Let us go back to deduce the expression of from this result, hence,

| (8.7) |

Until now, we have already proved why a fifth-degree polynomial could satisfy the requirement of a minimal jerk and it is also the minimum degree that can satisfy our standard in motion planning problem.

9.2 Appendix 2: Frenet-Cartesian Coordinate Transformation

First of all, the definitions of , and are given here:

| (8.8) |

It is important to mention that and could be transformed to each other easily, following the equations below:

| (8.9) |

Then, seven basic equations are needed before the real deduction.

| (8.10) |

| (8.11) |

| (8.12) |

| (8.13) |

| (8.14) |

| (8.15) |

| (8.16) |

The core equation is this:

| (8.17) |

-

1.

The nearest reference point in the reference road line can be expressed as . The in this reference point will be chosen as of in Frenet frame, therefore we have:(8.18) -

2.

Do derivative to both sides in Equation 8.17, we could obtain:(8.19) Combined with 8.11, 8.15 and 8.12, then the equation becomes:

(8.20) Then, Make dot product with in both sides, it becomes:

So, we achieved the final expression of :

(8.21) -

3.

Transform core Equation 8.17, it is not difficult to obtain:(8.22) So,

(8.23) -

4.

(8.24) -

5.

(8.25) -

6.

Based on the expression of in Equation 8.21, we could do the derivative to get :(8.26) - 7.

-

8.

(8.31) so,

(8.32)

References

- [1] Alberto Broggi, Pietro Cerri, Mirko Felisa, Maria Chiara Laghi, Luca Mazzei, and Pier Paolo Porta. The vislab intercontinental autonomous challenge: an extensive test for a platoon of intelligent vehicles. International Journal of Vehicle Autonomous Systems, 10(3):147–164, 2012.

- [2] Martin Buehler, Karl Iagnemma, and Sanjiv Singh. The DARPA urban challenge: autonomous vehicles in city traffic, volume 56. springer, 2009.

- [3] Mohamed Elbanhawi and Milan Simic. Sampling-based robot motion planning: A review. Ieee access, 2:56–77, 2014.

- [4] Cristofer Englund, Lei Chen, Jeroen Ploeg, Elham Semsar-Kazerooni, Alexey Voronov, Hoai Hoang Bengtsson, and Jonas Didoff. The grand cooperative driving challenge 2016: boosting the introduction of cooperative automated vehicles. IEEE Wireless Communications, 23(4):146–152, 2016.

- [5] Haoyang Fan, Fan Zhu, Changchun Liu, Liangliang Zhang, Li Zhuang, Dong Li, Weicheng Zhu, Jiangtao Hu, Hongye Li, and Qi Kong. Baidu apollo em motion planner. arXiv preprint arXiv:1807.08048, 2018.

- [6] Tianyu Gu and John M Dolan. On-road motion planning for autonomous vehicles. In International Conference on Intelligent Robotics and Applications, pages 588–597. Springer, 2012.

- [7] Yong K Hwang and Narendra Ahuja. Gross motion planning—a survey. ACM Computing Surveys (CSUR), 24(3):219–291, 1992.

- [8] Brian Ichter, James Harrison, and Marco Pavone. Learning sampling distributions for robot motion planning. In 2018 IEEE International Conference on Robotics and Automation (ICRA), pages 7087–7094. IEEE, 2018.

- [9] Sandip Kumar and Suman Chakravorty. Adaptive sampling for generalized probabilistic roadmaps. Journal of Control Theory and Applications, 10(1):1–10, 2012.

- [10] Voemir Kunchev, Lakhmi Jain, Vladimir Ivancevic, and Anthony Finn. Path planning and obstacle avoidance for autonomous mobile robots: A review. In International Conference on Knowledge-Based and Intelligent Information and Engineering Systems, pages 537–544. Springer, 2006.

- [11] Yoshiaki Kuwata, Gaston A. Fiore, Justin Teo, Emilio Frazzoli, and Jonathan P. How. Motion planning for urban driving using rrt. In 2008 IEEE/RSJ International Conference on Intelligent Robots and Systems, pages 1681–1686, 2008.

- [12] Yoshiaki Kuwata, Justin Teo, Gaston Fiore, Sertac Karaman, Emilio Frazzoli, and Jonathan P How. Real-time motion planning with applications to autonomous urban driving. IEEE Transactions on control systems technology, 17(5):1105–1118, 2009.

- [13] Steven M LaValle and Seth A Hutchinson. Optimal motion planning for multiple robots having independent goals. IEEE Transactions on Robotics and Automation, 14(6):912–925, 1998.

- [14] Steven M LaValle and James J Kuffner Jr. Randomized kinodynamic planning. The international journal of robotics research, 20(5):378–400, 2001.

- [15] Bai Li, Yakun Ouyang, Li Li, and Youmin Zhang. Autonomous driving on curvy roads without reliance on frenet frame: A cartesian-based trajectory planning method. IEEE Transactions on Intelligent Transportation Systems, 2022.

- [16] Xiaohui Li, Zhenping Sun, Dongpu Cao, Zhen He, and Qi Zhu. Real-time trajectory planning for autonomous urban driving: Framework, algorithms, and verifications. IEEE/ASME Transactions on mechatronics, 21(2):740–753, 2015.

- [17] Zhaoting Li, Wei Zhan, Liting Sun, Ching-Yao Chan, and Masayoshi Tomizuka. Adaptive sampling-based motion planning with a non-conservatively defensive strategy for autonomous driving. IFAC-PapersOnLine, 53(2):15632–15638, 2020.

- [18] Maxim Likhachev and Dave Ferguson. Planning long dynamically feasible maneuvers for autonomous vehicles. The International Journal of Robotics Research, 28(8):933–945, 2009.

- [19] Stephen R Lindemann and Steven M LaValle. Current issues in sampling-based motion planning. In Robotics research. The eleventh international symposium, pages 36–54. Springer, 2005.

- [20] Fabio M Marchese. Multiple mobile robots path-planning with mca. In International Conference on Autonomic and Autonomous Systems (ICAS’06), pages 56–56. IEEE, 2006.

- [21] W David Montgomery, Richard Mudge, Erica L Groshen, Susan Helper, John Paul MacDuffie, and Charles Carson. America’s workforce and the self-driving future: Realizing productivity gains and spurring economic growth. 2018.

- [22] Iram Noreen, Amna Khan, and Zulfiqar Habib. Optimal path planning using rrt* based approaches: a survey and future directions. International Journal of Advanced Computer Science and Applications, 7(11), 2016.

- [23] Scott Drew Pendleton, Hans Andersen, Xinxin Du, Xiaotong Shen, Malika Meghjani, You Hong Eng, Daniela Rus, and Marcelo H Ang Jr. Perception, planning, control, and coordination for autonomous vehicles. Machines, 5(1):6, 2017.

- [24] Bo Peng, Dexin Yu, Huxing Zhou, Xue Xiao, and Chen Xie. A motion planning method for automated vehicles in dynamic traffic scenarios. Symmetry, 14(2):208, 2022.

- [25] Mihail Pivtoraiko and Alonzo Kelly. Efficient constrained path planning via search in state lattices. In International Symposium on Artificial Intelligence, Robotics, and Automation in Space, pages 1–7. Munich Germany, 2005.

- [26] Chinmay Vilas Samak, Tanmay Vilas Samak, and Sivanathan Kandhasamy. Control strategies for autonomous vehicles. In Autonomous Driving and Advanced Driver-Assistance Systems (ADAS), pages 37–86. CRC Press, 2021.

- [27] Santokh Singh. Critical reasons for crashes investigated in the national motor vehicle crash causation survey. Technical report, 2015.

- [28] Guang Song, Shawna Thomas, and Nancy M Amato. A general framework for prm motion planning. In 2003 IEEE International Conference on Robotics and Automation (Cat. No. 03CH37422), volume 3, pages 4445–4450. IEEE, 2003.

- [29] Anthony Stentz. Optimal and efficient path planning for partially known environments. In Intelligent unmanned ground vehicles, pages 203–220. Springer, 1997.

- [30] Berthold Ulmer. Vita ii-active collision avoidance in real traffic. In Proceedings of the Intelligent Vehicles’ 94 Symposium, pages 1–6. IEEE, 1994.

- [31] Moritz Werling, Julius Ziegler, Sören Kammel, and Sebastian Thrun. Optimal trajectory generation for dynamic street scenarios in a frenet frame. In 2010 IEEE International Conference on Robotics and Automation, pages 987–993. IEEE, 2010.

Abstract