Contribution of directedness in graph spectra

Abstract

In graph analyses, directed edges are often approximated to undirected ones so that the adjacency matrices may be symmetric. However, such a simplification has not been thoroughly verified. In this study, we investigate how directedness affects the graph spectra by introducing random directization, which is an opposite operation of neglecting edge directions. We analytically reveal that uniformly random directization typically conserves the relative spectral structure of the adjacency matrix in the perturbative regime. The result of random directization implies that the spectrum of the adjacency matrix can be conserved after the directedness is ignored.

I Introduction

Many real-world datasets are represented by directed graphs. In social networks, the follower-followee relationship defines a directed edge [1]. In nervous systems, a signal transduction between neurons occurs only in one direction [2]. In this way, the directedness renders the relationship between a pair of vertices asymmetric and characterizes many properties on graphs, such as the diffusion [3] and reciprocity [4]. Nevertheless, in the studies of complex networks, the edge directions in graphs are often ignored, and directed graphs are converted to the undirected counterparts. Here, we refer to such simplification as undirectization. While undirectization may affect the result of an analysis only negligibly, it can be critical in some cases. In this study, we investigate the importance of the directedness in graph analyses by focusing on the change in graph spectra using the matrix perturbation theory.

Graphs are typically represented by matrices, such as adjacency matrices, combinatorial and normalized Laplacians [5], and non-backtracking matrices [6]. The associated graph spectra offer important tools for capturing the global properties of graphs, such as module structures [7] and network centralities [8]. For example, in spectral clustering for undirected graphs, the number of clusters is determined by the number of eigenvalues that are isolated from the (asymptotically) continuous spectral band. The eigenvectors corresponding to these isolated eigenvalues provide partitioning of a graph [9, 5]. There have been a number of studies on the spectral properties for variety of matrices in the context of statistical physics and random matrix theory [10, 11, 12]. In spectral graph theory, several bounds for the largest and second-largest eigenvalue for adjacency matrices and Laplacians have been studied [13, 14, 15].

|

|

Spectral methods for undirected graphs and directed graphs are not completely analogous to each other. Because the matrices are asymmetric for directed graphs, their spectra contain eigenvalues with non-zero imaginary parts. This is partly a reason that motivates researchers and practitioners to ignore edge directions. To formulate spectral methods for directed graphs, several graph Laplacians for the spectral clustering of directed graphs have been proposed in the literature [17, 18, 19, 20, 21, 22]. Although the choice of Laplacians is an important issue, herein, we focus only on adjacency matrices of directed and undirected graphs. There are several researches on the spectra of directed graphs. For example, in spectral graph theory, bounds of the spectral radius of adjacency matrices for directed graphs have been studied [23, 24]. In random graph theory and statistical physics, spectral densities of adjacency matrices for random directed graphs have been investigated [25, 26]. In contrast, we consider typical spectra of directed graphs based on its undirected counterpart.

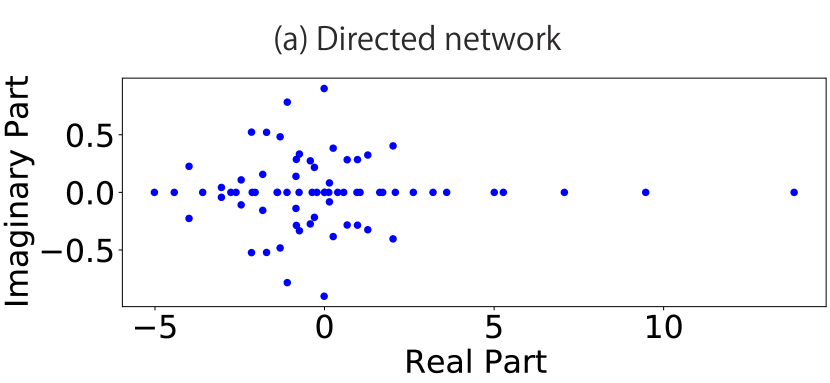

Figure 1 (a) shows the eigenvalue distribution of the adjacency matrix of a macaque-cortex network [16], which is partially directed; Fig. 1(b) presents the undirectized counterpart. We note that all eigenvalues are projected onto the real axis, and the scale along the real part is slightly larger in the undirectized graph. Despite these differences, the relative distances among the five largest eigenvalues along the real axis are almost unchanged between Figs. 1(a) and 1(b).

The last observation in this example motivates us to theoretically investigate the relationship between the spectral structures of directed graphs and their undirectized counterparts. To this end, we introduce random directization as an opposite operation of ignoring edge directions. That is, we consider an undirected graph as the original graph and randomly make undirected edges directed. We consider the typical variation of eigenvalues and eigenvectors under random directization. When the fraction of directized edges is sufficiently small compared to the total number of edges, the resulting adjacency matrix can be considered as a perturbed matrix of the original one. We apply the matrix perturbation theory to analytically evaluate variations of eigenvalues and eigenvectors after directization. As shown below, an important prediction of the perturbation theory is that the relative spectral structure along the real axis of the adjacency matrix is approximately conserved when the edges are directized uniformly randomly. This conversely explains our observation in Fig. 1 on undirectization.

There have been many works on perturbative analysis for undirected graph spectra. Let and be real-symmetric matrices, and we perturb by adding . Let , respectively denote the th eigenvalue and eigenvector of , and , respectively denote the th eigenvalue and eigenvector of . The bound for the variation of eigenvalues through this perturbation is known as the Weyl’s theorem [27]:

| (1) |

where represents the spectral norm of . As for the variation of eigenvectors, the Davis-Kahan theorem [28] explains how the eigenvector can change with the same perturbation: the angle between and is bounded as

| (2) |

where the sine of the angle between two vectors is defined by . In addition, many variants of the Weyl’s and Davis-Kahan theorems, such as the one convenient for application in statistical contexts [29] and the ones which assume low-rank matrices for and random matrices for [30, 31]. Sarkar et al. [32] extended the theorem in order to algorithmically estimate the number of modules in undirected graphs. Karrer and Newman [33] experimentally investigated the effect of adding edges to undirected graphs on their spectra. Note that in these studies, both the unperturbed and perturbed graphs were undirected, whereas in our study, we consider directizing perturbation.

The rest of the paper is organized as follows. In Sec. II, we formally define random directization and conduct a perturbative analysis. Then, we evaluate the variation in the spectra under undirectization in Sec. III. Finally, Sec. IV is devoted to a summary and discussions. The symbols used in this paper are listed in Appendix A.

II Random directization

An undirected graph has a symmetric adjacency matrix , in which when vertices and are connected, while otherwise. The degree, defined by , represents the number of the neighbors of vertex . For a directed graph, in contrast, the adjacency matrix is asymmetric, i.e., and when an edge has a direction from vertex to vertex only. Hereafter, we regard an undirected edge in a directed graph as a pair of directed edges in both directions. The in-degree and out-degree, defined by and , denote the number of in-neighboring vertices (with in-coming edges) and out-neighboring vertices (with out-going edges) of vertex , respectively.

We define uniformly random directization as follows. Let denote an undirected graph that consists of a set of vertices and edges and let denote a graph randomly directized from the original graph . The number of vertices is denoted by for both graphs, while the numbers of edges for and are respectively denoted by and ; note that each undirected edge is doubly counted in the latter, but not in the former. Hereafter, and denote the adjacency matrices of and , respectively. On directization, we alter an undirected edge between vertex and vertex to a directed edge (from to ) with probability , to (from to ) with probability , and remain undirected with probability , where ; see Fig. 2. The number of directed edges after the uniformly random directization becomes on average because we doubly count an undirected edge as a pair of directed edges with two directions. We express the adjacency matrix of the uniformly directized graph as

| (3) |

where is the perturbation matrix defined as follows: when , the set of matrix elements takes , , or with probabilities , , and , respectively; otherwise, . We express the th eigenvalue of as and its eigenvector as . The corresponding eigenvalue and eigenvector for are denoted by and , respectively. We express the ensemble of perturbation matrices for a given adjacency matrix as . Throughout this paper, the norm of each eigenvector is normalized to unity.

II.1 Perturbative analysis

Perturbation theory allows us to estimate the variation of each eigenvalue and the variation of each eigenvector under perturbative directization. We calculate the ensemble average and variance of these variations up to the first-order approximation.

II.1.1 Eigenvalues

For a given graph, the variation of the th eigenvalue along the real axis in the first-order approximation [34, 35] is

| (4) |

where denotes the transpose of a vector . Note that this is for a specific instance of graph, whereas we are interested in the ensemble of randomly directized graphs. To this end, we define a generating function for by

| (5) |

where is an auxiliary parameter, denotes the th element of , and represents the random average over the ensemble defined in

| (6) |

We find the average and variance of the variation for respectively by

| (7) | ||||

| (8) |

Because each edge is directized independently, the random average in Eq. (5) is calculated as

| (9) |

Then, the average and variance of the variation are given by

| (10) | ||||

| (11) |

respectively, where we used the fact

| (12) |

Equation (10) indicates that, in the range where the first-order approximation is valid, the average of the perturbed eigenvalue is

| (13) |

Thus, the relative spectral structure is conserved under uniformly random directization as long as the first-order approximation is valid.

Let us investigate the condition that the fluctuation of the variation is small. We consider the ratio between the average and standard deviation for the th eigenvalue:

| (14) |

Because each element of the eigenvector typically scales as and the number of nonzero elements in the summation is , where denotes the average degree of the original undirected graph, defined by , the ratio above scales as

| (15) |

In the case of regular random graphs, the eigenvalue at the edge of the spectral band is [36]. Thus, the fluctuation of the variation is expected to be negligible when is sufficiently large for the eigenvalues out of the spectral band.

|

|

|

|

II.1.2 Eigenvectors

For a given graph, the variation of the th eigenvector along the real axis in the first-order approximation [34, 35] is

| (16) |

For the th element of , we define its generating function by

| (17) |

We find the average and variance of the variation of the th element of respectively by

| (18) | ||||

| (19) |

As was done for the eigenvalues above, we can take the random average with respect to each edge, obtaining

| (20) |

From Eqs. (18) and (20), we find the average of ’s variation as follows:

| (21) |

Thus, in the first-order perturbative regime, uniformly random directization does not vary the eigenvectors of adjacency matrices on average. The variance of ’s variation is also obtained by using Eqs. (19) and (20):

| (22) |

II.2 Numerical confirmation

We now compare the first-order perturbative estimation with numerical calculations. We generated 10,000 uniformly randomly directized samples from the undirectized counterpart for real-world networks, and numerically calculated the eigenvalues and eigenvectors of the adjacency matrices.

Figure 3 exhibits the variation of the real part of each eigenvalue and the first element of each eigenvector of the undirectized macaque-cortex network [16] under random directization. We show the variation of all eigenvalues in the left panel and the variation of eigenvector elements corresponding to the top 20 eigenvalues in the right panel. In both panels, we observe that the first-order perturbative estimates and the numerical results agree well, particularly for the top eigenvalues, which are expected to be isolated eigenvalues.

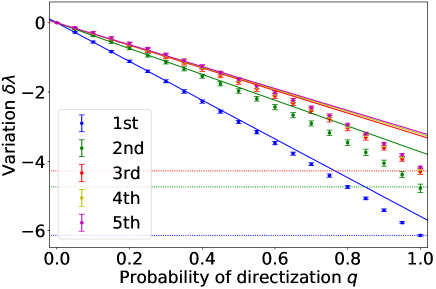

Figure 4 shows the -dependency of the top five eigenvalues along the real axis for two real-world directed networks; the macaque cortex network [16] and social network of employees at a consulting company [37]. The fractions of directed edges are 0.30 and 0.41, respectively. We numerically calculated the perturbed eigenvalues normalized by the original eigenvalues. Based on Eq. (10), we estimate the normalized eigenvalue to be regardless of the index of eigenvalue, which is illustrated by the dashed line in Fig. 4. For both networks, the estimation is reasonable when is sufficiently small. As increases, the difference between the theoretical estimate and the numerical result increases.

|

|

|

|

II.3 Distribution of directed in-degrees

Before we conclude this section, here we consider the degree distribution of directized edges after uniformly random directization. We define the directed in-degree for a directed graph as the number of in-coming edges for each vertex:

| (23) |

Here, we do not count undirected edges for , whereas we count them for . A simple example is shown in Fig. 5.

Note that because the directization of an edge affects the directed in-degrees of the vertices on both ends, the directed in-degrees of neighboring vertices are correlated with each other. Instead of deriving the directed in-degree distribution after actual random directization, we derive it after a process in which we uniformly randomly directize stubs (half-edges). This procedure is illustrated in Fig. 6. We independently alter an undirected stub to the in-coming one with probability and keep it undirected with probability . The probability that the number of directed stubs for vertex is is given by a binomial distribution:

| (24) |

We regard that each edge becomes a directed edge if one end of the edge is directized while the other end is not. After random directization of stubs, an edge is converted to with probability , converted to with probability , and remains undirected with probability . With probability , an edge becomes bi-directed and the resulting object is no longer a directed graph that we consider. Nonetheless, when is sufficiently small such that the emergence of the bi-directed edges is negligibly rare, this process is almost identical to the directization defined in Eq. (3) with . Thus, when is sufficiently small, the probability that a vertex has a directed in-degree after uniformly random directization approximately follows a binomial distribution:

| (25) |

Then, we obtain the directed in-degree distribution over a graph after uniformly random directization. For a subset of vertices with the same degree , the directed in-degree distribution approximately follows a binomial distribution:

| (26) |

Thus, the directed in-degree distribution over the whole graph approximately follows the mixture of binomial distributions:

| (27) |

where denotes the degree distribution of the original undirected graph.

III Variation of spectra under undirectization

We return to our original motivation of clarifying how the graph spectra are varied by ignoring edge directions, namely undirectization. Let denote a directed graph and let denote its undirectized graph. The numbers of edges for and are respectively denoted by and . The fraction of directed edges, , which corresponds to the probability of directization in random directization, should satisfy , and hence is given by . The adjacency matrices for and are respectively denoted by and .

The result from random directization implies that the relative spectral structure along the real axis is typically maintained under undirectization when the fraction is sufficiently small. The result of directization, Eq. (10), conversely implies that, after ignoring the edge directions, the real part of the th eigenvalue of is altered to the corresponding eigenvalue of as in

| (28) |

where denotes the real part of a complex value.

The perturbative analysis explains why the two spectra shown in Fig. 1 share almost the same relative spectral structure for the real parts. In Fig. 7, we compare the spectra of the macaque-cortex network, in which , with the theoretical estimates. The top panel shows the resulting spectrum after random directization in Eq. (10), and the bottom panel shows the resulting spectrum after undirectization in Eq. (28). Both panels show that the perturbative analysis is moderately accurate, particularly for isolated eigenvalues.

Apart from the accuracy of the perturbation theory, we can assess, using Eq. (27), to what degree a directed graph is regarded as a uniformly randomly directized one. Figure 8 compares the directed in-degree distribution of the original (directed) macaque-cortex network and the theoretical prediction [Eq. (27)] for a uniformly randomly directed graph. We assess the null hypothesis that the edges are directized uniformly randomly, by the goodness-of-fit test for the distributions in the range . As a result, we find that the -value of the empirical distribution is less than , which implies that the macaque-cortex network may not be regarded as a uniformly randomly directed graph, despite the accuracy of the perturbative analysis.

Note that the uniformity in random directization is not a necessary condition for the conservation of the relative spectral structure. Thus, the goodness-of-fit test of the in-degree distribution itself is not a criterion for the validity of our perturbative analysis. Nonetheless, this test partly explains why our analysis is plausible when the null hypothesis is not rejected.

IV Summary and discussions

In this study, we investigated the contribution of directedness in graph spectra. We introduced random directization as the inverse operation of ignoring the edge directions. We revealed that, in the perturbative regime, uniformly random directization does not destroy the relative spectral structure along the real axis of the undirected graphs. Additionally, we observed that the relative spectral structure along the real axis was also conserved for real-world datasets. Although the effects of directization and undirectization on the graph spectra are generally not symmetric, we showed that the results of random directization can be used to explain the behavior of the undirectization.

Several comments are in order. In Sec. II, we analyzed up to the first-order term in the perturbative expansion. However, it is not obvious whether the contributions from higher-order terms are negligible. In our formulation of perturbative random directization in Eq. (3), is not a perturbative expansion parameter, but simply defines the fraction of non-zero elements. Instead, we carry out the perturbative expansion with respect to the matrix , which does not consist of infinitesimally small elements. Here, let us consider the contribution from higher-order terms, especially the second-order term. The second-order terms in the perturbative expansion of the th eigenvalue along the real axis [34, 35] is given by

| (29) |

We numerically calculate the average of this second-order contribution over randomly directized graphs and show the result in Fig. 9 as solid lines. We also show the real part of the deviation from the first-order approximation in Fig. 9 as points. We observe that the second-order contribution is smaller when is sufficiently small, and the first-order approximation is indeed valid in that region. In addition, the average of the second-order term is not proportional to the original eigenvalues while that of the first-order term is. Therefore, the spectral structure can be much more complicated when higher-order contributions are dominant. In Appendix B, we analytically calculated the second-order perturbation expansion up to the first order in . As an exceptional case, we can analytically obtain the random average of the variation for random graphs when using the cavity method. We show the result for the stochastic block model in Appendix C.

Second, we can easily show that the conservation property of the relative spectral structure cannot be generalized to arbitrary directizations. For example, let us consider non-uniform directization on a graph with a module structure with two blocks. When a specific edge is altered to be directed as , the variation of each eigenvalue is expressed as

| (30) |

The largest eigenvalue varies negatively, regardless of the choice of the edges because and always have the same sign thanks to the Perron-Frobenius theorem. On the other hand, the variation of the second-largest eigenvalue , which is related to the module structure, depends on which edge is altered. When the edge functions as a bridge between the two blocks, is expected to be positive because the eigenvector elements and typically have different signs. In contrast, when both ends of the edge are located inside a common block, is expected to be negative because and typically have the same sign. Thus, the relative spectral structure is not conserved when the random directization is not uniformly random.

In this study, we evaluate the spectral variation only along the real axis. In the perturbative regime, all eigengaps along the real axis remain finite; thus, complex conjugate pairs never appear. As we further asymmetrize the adjacency matrix, some of the neighboring eigenvalues may collide at an exceptional point [35] and turn into a pair of complex conjugate eigenvalues. At the exceptional point, the corresponding eigenvectors become parallel to each other, and hence the matrix becomes undiagonalizable. The perturbation theory would no longer be valid because the matrices are assumed to be diagonalizable.

Random directization presented in this paper is applicable to resampling of graphs. Many complex systems have only one network data at one time, which makes it difficult to statistically analyze its properties, including the spectrum. To address this problem, there have been several resampling methods to duplicate similar undirected graphs from the original graph [38, 39]. The present study implies that we can utilize random directization for the purpose of resampling directed graphs with the same configuration of edges, whose relative spectral structure is typically close to the original undirected graphs.

Acknowledgements.

The authors thank Naomichi Hatano for his fruitful comments. This work was supported by JSPS KAKENHI No. 19H01506 (T.K. and M.O.), JST ACT-X Grant No. JPMJAX21A8 (T.K. and M.O.) and Quantum Science and Technology Fellowship Program (Q-STEP) (M.O.).References

- McAuley and Leskovec [2012] J. McAuley and J. Leskovec, Learning to discover social circles in ego networks, in Proceedings of the 25th International Conference on Neural Information Processing Systems - Volume 1, NIPS’12 (Curran Associates Inc., Red Hook, NY, 2012) pp. 539–547.

- Watts and Strogatz [1998] D. J. Watts and S. H. Strogatz, Collective dynamics of ‘small-world’networks, Nature 393, 440 (1998).

- Newman et al. [2002] M. E. Newman, S. Forrest, and J. Balthrop, Email networks and the spread of computer viruses, Phys. Rev. E 66, 035101(R) (2002).

- Garlaschelli and Loffredo [2004] D. Garlaschelli and M. I. Loffredo, Patterns of link reciprocity in directed networks, Phys. Rev. Lett. 93, 268701 (2004).

- Von Luxburg [2007] U. Von Luxburg, A tutorial on spectral clustering, Stat. Comput. 17, 395 (2007).

- Krzakala et al. [2013] F. Krzakala, C. Moore, E. Mossel, J. Neeman, A. Sly, L. Zdeborová, and P. Zhang, Spectral redemption in clustering sparse networks, Proc. Natl. Acad. Sci. U.S.A. 110, 20935 (2013).

- Newman [2006] M. E. J. Newman, Finding community structure in networks using the eigenvectors of matrices, Phys. Rev. E 74, 036104 (2006).

- Bonacich [1987] P. Bonacich, Power and centrality: A family of measures, Am. J. Sociol. 92, 1170 (1987).

- Fortunato [2010] S. Fortunato, Community detection in graphs, Phys. Rep. 486, 75 (2010).

- Rogers et al. [2008] T. Rogers, I. P. Castillo, R. Kühn, and K. Takeda, Cavity approach to the spectral density of sparse symmetric random matrices, Phys. Rev. E 78, 031116 (2008).

- Kühn [2008] R. Kühn, Spectra of sparse random matrices, J. Phys. A: Math. Theor. 41, 295002 (2008).

- Nadakuditi and Newman [2012] R. R. Nadakuditi and M. E. J. Newman, Graph spectra and the detectability of community structure in networks, Phys. Rev. Lett. 108, 188701 (2012).

- Chung et al. [2004] F. Chung, L. Lu, and V. Vu, The spectra of random graphs with given expected degrees, Int. Math. 1 (2004).

- Das and Kumar [2004] K. C. Das and P. Kumar, Some new bounds on the spectral radius of graphs, Discrete Math. 281, 149 (2004).

- Nilli [1991] A. Nilli, On the second eigenvalue of a graph, Discrete Math. 91, 207 (1991).

- Young [1993] M. P. Young, The organization of neural systems in the primate cerebral cortex, Proc. Biol. Sci. 252, 13 (1993).

- Chung [2005] F. Chung, Laplacians and the cheeger inequality for directed graphs, Ann. Combin. 9, 1 (2005).

- Zhou et al. [2005] D. Zhou, J. Huang, and B. Schölkopf, Learning from labeled and unlabeled data on a directed graph, in Proceedings of the 22nd international conference on Machine learning (Association for Computing Machinery, New York, NY, 2005) pp. 1036–1043.

- Li and Zhang [2012] Y. Li and Z.-L. Zhang, Digraph laplacian and the degree of asymmetry, Int. Math. 8, 381 (2012).

- Malliaros and Vazirgiannis [2013] F. D. Malliaros and M. Vazirgiannis, Clustering and community detection in directed networks: A survey, Phys. Rep. 533, 95 (2013).

- Rohe et al. [2016] K. Rohe, T. Qin, and B. Yu, Co-clustering directed graphs to discover asymmetries and directional communities, Proc. Natl. Acad. Sci. U.S.A. 113, 12679 (2016).

- Yoshida [2016] Y. Yoshida, Nonlinear laplacian for digraphs and its applications to network analysis, in Proceedings of the Ninth ACM International Conference on Web Search and Data Mining (Association for Computing Machinery, New York, NY, 2016) pp. 483–492.

- Brualdi [2010] R. A. Brualdi, Spectra of digraphs, Linear Algebra Appl. 432, 2181 (2010).

- Gudiño and Rada [2010] E. Gudiño and J. Rada, A lower bound for the spectral radius of a digraph, Linear Algebra Appl. 433, 233 (2010).

- Sommers et al. [1988] H. J. Sommers, A. Crisanti, H. Sompolinsky, and Y. Stein, Spectrum of large random asymmetric matrices, Phys. Rev. Lett. 60, 1895 (1988).

- Rogers and Castillo [2009] T. Rogers and I. P. Castillo, Cavity approach to the spectral density of non-hermitian sparse matrices, Phys. Rev. E 79, 012101 (2009).

- Weyl [1912] H. Weyl, Das asymptotische verteilungsgesetz der eigenwerte linearer partieller differentialgleichungen (mit einer anwendung auf die theorie der hohlraumstrahlung), Mathematische Annalen 71, 441 (1912).

- Davis and Kahan [1970] C. Davis and W. M. Kahan, The rotation of eigenvectors by a perturbation. iii, SIAM J. Numer. Anal. 7, 1 (1970).

- Yu et al. [2015] Y. Yu, T. Wang, and R. J. Samworth, A useful variant of the davis–kahan theorem for statisticians, Biometrika 102, 315 (2015).

- Fan et al. [2017] J. Fan, W. Wang, and Y. Zhong, An eigenvector perturbation bound and its application to robust covariance estimation, J. Mach. Learn. Res. 18, 1 (2017).

- Eldridge et al. [2018] J. Eldridge, M. Belkin, and Y. Wang, Unperturbed: spectral analysis beyond davis-kahan, in Proceedings of Algorithmic Learning Theory (PMLR, 2018) pp. 321–358.

- Sarkar et al. [2016] S. Sarkar, S. Chawla, P. A. Robinson, and S. Fortunato, Eigenvector dynamics under perturbation of modular networks, Phys. Rev. E 93, 062312 (2016).

- Karrer and Newman [2011] B. Karrer and M. E. J. Newman, Stochastic blockmodels and community structure in networks, Phys. Rev. E 83, 016107 (2011).

- Sakurai and Napolitano [2017] J. Sakurai and J. Napolitano, Modern Quantum Mechanics (Cambridge University Press, Cambridge, 2017).

- Kato [1995] T. Kato, Perturbation Theory for Linear Operators (Springer Berlin, Heidelberg, 1995).

- McKay [1981] B. D. McKay, The expected eigenvalue distribution of a large regular graph, Linear Algebra Appl. 40, 203 (1981).

- Cross and Parker [2004] R. Cross and A. Parker, The Hidden Power of Social Networks: Understanding How Work Really Gets Done in Organizations (Harvard Business Press, Boston, 2004).

- Rosvall and Bergstrom [2010] M. Rosvall and C. T. Bergstrom, Mapping change in large networks, PLoS One 5, e8694 (2010).

- Bhattacharyya and Bickel [2015] S. Bhattacharyya and P. J. Bickel, Subsampling bootstrap of count features of networks, Ann. Stat. 43, 2384 (2015).

- Holland et al. [1983] P. W. Holland, K. B. Laskey, and S. Leinhardt, Stochastic blockmodels: First steps, Soc. Networks 5, 109 (1983).

- Mézard et al. [1987] M. Mézard, G. Parisi, and M. A. Virasoro, Spin Glass Theory and Beyond: An Introduction to the Replica Method and Its Applications, Vol. 9 (World Scientific Publishing Company, Singapore, 1987).

- Neri and Metz [2016] I. Neri and F. L. Metz, Eigenvalue outliers of non-hermitian random matrices with a local tree structure, Phys. Rev. Lett. 117, 224101 (2016).

- Metz et al. [2019] F. L. Metz, I. Neri, and T. Rogers, Spectral theory of sparse non-hermitian random matrices, J. Phys. A: Math. Theor. 52, 434003 (2019).

- Neri and Metz [2020] I. Neri and F. L. Metz, Linear stability analysis of large dynamical systems on random directed graphs, Phys. Rev. Res. 2, 033313 (2020).

Appendix A List of symbols

Here, we show the list of notations used in the main article in Fig. 10.

Appendix B Contribution of the second-order terms in the perturbation theory

Here, we conduct an analytical calculation for the random average of the second-order terms of the eigenvalues and eigenvector elements up to the first order of . Note that, from the numerical calculation in Fig. 9, the random average of the second-order terms is not linear to , and thus this form of first-order evaluation is only valid when is sufficiently small.

The second-order terms in the perturbation theory of the th eigenvalue and the corresponding eigenvector along the real axis [34, 35] are, respectively, given by

| (31) | |||

| (32) |

Similarly to the case of the first-order approximation, we respectively obtain the average of the second-order term up to the first order of for the th eigenvalue and the th element of the th eigenvector under uniformly random directization in the forms

| (33) | |||

| (34) |

The detailed calculations are shown in Appendix D.

We find that the second-order variation of an eigenvalue is not typically proportional to the original one as in Eq. (10) and that of the eigenvector elements do not vanish as in Eq. (21). Thus, the relative spectral structure is not conserved under uniformly random directization when the contribution of the second-order term is sufficiently large.

Figure 11 numerically compares the second-order terms, Eqs. (33) and (34), with the first-order terms, Eqs. (10) and (21), for the undirectized macaque-cortex network. We observe that the second-order term is sufficiently smaller than the first-order term for some of the top eigenvalues. On the other hand, in the region in which the eigenvalues gather densely around zero, the second-order term is comparable to the first-order term, and our first-order approximation is invalidated.

|

|

Appendix C Spectrum out of the perturbative regime

We here investigate the behavior of eigenvalues out of the perturbative regime using synthetic graphs generated from the stochastic block model (SBM) [40, 33], which is a random graph model with a preassigned module structure. We particularly consider the SBM with two equally sized blocks, namely the symmetric SBM; we let and denote the vertex sets of the two blocks, with . For each pair of vertices, an edge is generated independently and randomly with probability if the vertices belong to the same block. Otherwise, they are connected with probability . The average degree is given by .

Figure 12 shows the variation of the top five eigenvalues for graphs generated from the SBM. Similarly to the real-world networks in Fig. 7, it is confirmed that the perturbation theory is valid when is sufficiently small, and the differences between the estimate and numerical results increase as increases.

We can analytically estimate the variation of isolated eigenvalues when the graph is fully directized, i.e., , by using the cavity method [41, 26] for the symmetric SBM. After full directization, the average number of in-neighbors in the same block and that in the different block are given by and , respectively, while the average number of out-neighbors in the same block and that in the different block are also given by and , respectively. From the cavity method, the spectral band edge of the adjacency matrix spectrum in the limit is given by [42, 43, 44]

| (35) |

Additionally, the cavity method yields the recursive equations, namely the cavity equations, for the eigenvector elements of the adjacency matrix. For a fully directed graph in the limit, the right-eigenvector elements and the left-eigenvector elements corresponding to the eigenvalue of the adjacency matrix are respectively given by the solution of the following cavity equation:

| (36) |

where and represent the set of out-neighbors and that of in-neighbors, respectively [42, 43].

We use these equations to estimate the isolated eigenvalues for the symmetric SBM. Considering the average over the symmetric SBM ensemble, we obtain the relations between the first and second moments of the eigenvector elements in each block as

| (37) | ||||

| (38) | ||||

| (39) | ||||

| (40) | ||||

| (41) | ||||

| (42) | ||||

| (43) | ||||

| (44) |

where the moments are taken over the SBM graph ensemble, and denote the th moments of the former eigenvector elements, and and denote the th moments of the latter eigenvector elements. For isolated eigenvalues, the first moments , , and are non-zero, which is satisfied when

| (45) |

By solving this, we find the following two isolated eigenvalues for the symmetric SBM with two blocks:

| (46) | ||||

| (47) |

which are real.

Appendix D Derivation of Eqs. (33) and (34)

D.1 Eigenvalues

D.2 Eigenvectors

From Eq. (32), we define the three generating functions for the second-order term of the th eigenvector as

| (54) |

Then, the random average of the second-order term is given by

| (55) |

We find the first generating function in the form

| (56) |

For small , we have

| (57) |

The second generating function gives

| (58) |

Again for small , we have

| (59) |

Finally, the third generating function takes the form

| (60) |

which is followed for small by

| (61) |

Thus, we arrive at the average of the second-order term in the perturbation theory as

| (62) |

which we used in Eq. (34).