Contrastive Learning for Joint Normal Estimation

and Point Cloud Filtering

Abstract

Point cloud filtering and normal estimation are two fundamental research problems in the 3D field. Existing methods usually perform normal estimation and filtering separately and often show sensitivity to noise and/or inability to preserve sharp geometric features such as corners and edges. In this paper, we propose a novel deep learning method to jointly estimate normals and filter point clouds. We first introduce a 3D patch based contrastive learning framework, with noise corruption as an augmentation, to train a feature encoder capable of generating faithful representations of point cloud patches while remaining robust to noise. These representations are consumed by a simple regression network and supervised by a novel joint loss, simultaneously estimating point normals and displacements that are used to filter the patch centers. Experimental results show that our method well supports the two tasks simultaneously and preserves sharp features and fine details. It generally outperforms state-of-the-art techniques on both tasks. Our source code is available at https://github.com/ddsediri/CLJNEPCF.

Index Terms:

Point cloud filtering, normal estimation, contrastive learning, machine learning.1 Introduction

Point clouds have numerous applications as they provide a natural representation of 3D geometric information. They have seen applications in fields such as autonomous driving, robotics, 3D printing and urban planning [Luo-Pillar-Motion, Bekiroglu-PCD-Robotics, Kim-3D-Printing, Urech-Urban-Planning]. Captured using 3D sensors, point clouds consist of unordered points which lack connectivity information between individual points. The captured point cloud information may be corrupted with noise. Therefore, one fundamental research problem is point cloud filtering, also known as denoising. Another fundamental task is normal estimation at individual points. Together, they facilitate other tasks such as 3D rendering and surface reconstruction.

Conventional normal estimation methods, such as Principal Component Analysis (PCA) and its variants [Hoppe-PCA, Mitra-Estimating-Surface-Normals, Pauly-Point-Sampled, Yoon-Surface-Normal-Ensembles] and Voronoi diagram based approaches [Amenta-Surface-Voronoi, Alliez-Voronoi-Variational, Dey-Voronoi-based-Normal-Estimation], perform poorly when estimating the normals at sharp features such as corners or edges and show high sensitivity to noise. To address these issues, a number of learning based methods have been recently proposed such as Deep Feature Preserving (DFP) [Lu-Deep-Feature-Preserving] and Nesti-Net [Ben-Shabat-Nesti-Net]. However, they have large network sizes and therefore are typically slow. Methods such as AdaFit [Zhu-AdaFit] and Deep Iterative (DI) [Lenssen-Deep-Iterative] offer more lightweight solutions that perform admirably, but still show less robust results at higher noise levels.

Point cloud filtering can be classified into two main types: normal based methods [RIMLS-Oztireli, Sun-L0, Avron-L1, Lu-Deep-Feature-Preserving] and position based methods [Lipman-LOP, Huang-WLOP, Rakotosaona-PCN, Zhang-Pointfilter]. The former utilizes normal information at a given point in order to apply a position update algorithm [Lu-Deep-Feature-Preserving], while the latter does not require normal information and relies solely on position information. Among position based methods, a common issue is the inability to preserve sharp features during the filtering process while normal based methods rely heavily on normal accuracy. Learning based approaches seek to resolve this. In particular, Pointfilter [Zhang-Pointfilter], performs effectively at preserving sharp feature information on CAD-like shapes yet fails to generalize to large scenes. Methods such as PointCleanNet [Rakotosaona-PCN] and TotalDenoising [Hermosilla-Total-Denoising] also perform sub-optimally, tending to smear sharp features.

In this paper, we propose a novel method capable of simultaneously inferring point normals and displacements while maintaining robustness to noise. Our method comprises of a feature encoder capable of generating latent representations of patches based on patch similarity and a regressor capable of inferring point normals and displacements simultaneously. We introduce a 3D patch based contrastive learning framework to train the feature encoder which employs noise corruption as an augmentation technique, allowing the encoder to identify the sharp geometric features of the underlying clean patch despite different levels of noise corruptions. The regressor consumes the latent representation of a patch and outputs the point normal and the displacement required to filter the central point of that patch. To train the regressor, we introduce a novel loss function that jointly penalizes inferred point position error and normal estimation error by exploiting the relationship between a point’s position and normal. We intuitively assume that a filtered point’s normal should correspond to a ground truth point’s normal if this ground truth point first corresponds to that filtered point in position, thus leading to the relationship between filtering and normal estimation.

The main contributions of this paper are as follows.

-

•

We develop a novel framework capable of inferring both points’ displacements and normals simultaneously by introducing a loss function capable of constraining both filtering and normal estimation tasks. This joint loss penalizes both position regression error and normal estimation prediction error and allows the network to learn both filtered displacements and point normals.

-

•

We introduce 3D patch based contrastive learning to generate effective patch-wise representations.

We conduct extensive experiments and demonstrate that our method, in general, outperforms state-of-the-art normal estimation and filtering techniques.

2 Related work

Normal estimation. In its earliest incarnation, normal estimation was based on Principal Component Analysis (PCA) [Hoppe-PCA]. Several variants of this initial PCA method have also been proposed [Mitra-Estimating-Surface-Normals, Pauly-Point-Sampled, Yoon-Surface-Normal-Ensembles]. Thereafter, approaches based on Voronoi cells were used to reconstruct surfaces while preserving sharp features and estimating normals [Amenta-Surface-Voronoi, Alliez-Voronoi-Variational, Dey-Voronoi-based-Normal-Estimation]. Recently, Lu et al. [Lu-Low-Rank] proposed a normal estimation method based on a Low Rank Matrix Approximation (LRMA) algorithm. Additionally, methods such as [Li-Robust-Sharp-Features, Zhang-Guided-Least-Squares, Zhang-Low-Rank, Zhang-Pair-Consistency-Voting] utilized point statistics and clustering to determine point normals.

Normal estimation (learning-based). One of the first learning models for normal estimation, HoughCNN, employs a voting mechanism for estimating normals. They utilize a local patch representation in Hough space that can be consumed by a CNN [Boulch-HoughCNN]. However, with the advent of PointNet [Qi-PointNet] and PointNet++ [Qi-PointNet++], newer methods have been proposed that directly consume point sets. PCPNet is one such example, which consumes point cloud patches at multiple scales [Guerrero-PCPNet]. Similarly, Nesti-Net consumes patches at multiple scales but also employs multiple sub-networks, Mixture-of-Experts, that specialize in estimating normals at these scales [Ben-Shabat-Nesti-Net]. Wang and Prisacariu introduced NINormal, a self-attention based normal estimation scheme [Wang-NINormal] while Lu et al. proposed Deep Feature Preserving (DFP), a two step mechanism that classifies points into feature and non-feature points and, subsequently, estimates their normals based on this classification [Lu-Deep-Feature-Preserving]. Finally, several deep learning methods based on weighted least squares plane fitting have been proposed [Lenssen-Deep-Iterative, Ben-Shabat-DeepFit, Zhu-AdaFit]. While these methods focus on accurately determining unoriented normals, the work of Wang et al. [Wang-Deep-Global-NO] focuses on estimating point normals and their orientations.

Point cloud filtering. Traditional filtering applications center around Moving Least Squares (MLS) approaches [MLS-Levin, Kolluri-MLS]. Alexa et al. [Alexa--MLS-PSS] built on MLS techniques to minimize the approximation error of denoised point set surfaces. These methods perform poorly on point sets with sharp features, an issue that Adamson and Alexa [IMLS-Adamson] and Guennebaud and Gross [APSS-Guennebaud] aimed to tackle. Lipman et al. developed the Locally Optimal Projection (LOP) operator which does not depend on a local data parametrization such as a local normal or tangent plane [Lipman-LOP]. This projection operator was further enhanced by Huang et al. and Preiner et al., who proposed a Weighted LOP (WLOP) [Huang-WLOP] and Continuous LOP (CLOP) [Preiner-CLOP], respectively. The main drawback to these MLS and LOP based techniques is their inability to preserve sharp features. Oztireli, Guennebaud and Gross [RIMLS-Oztireli] proposed Robust Implicit Moving Least Squares [RIMLS-Oztireli] which improves the filtering ability to preserve sharp features but relies heavily on the accuracy of normal information. Lu et al. proposed a point cloud filtering scheme based on normals estimated by their LRMA algorithm [Lu-Low-Rank]. Remil et al. reformulated point cloud filtering as a global, sparse optimization problem which is solved using Augmented Lagrangian Multipliers [Remil-Data-Driven-Sparse-Priors].

Point cloud filtering (learning-based). PointProNets used a CNN which consumes noisy height-maps and returns filtered ones [Roveri-PointProNets]. EC-Net employed a supervised scheme for edge aware filtering and upsampling [Yu-EC-Net]. PCN uses a norm loss based network to remove outliers and norm loss based network to filter points [Rakotosaona-PCN]. Pointfilter takes into account local structure by considering points and their ground-truth normals, during training time, to infer filtered positions [Zhang-Pointfilter]. DFP [Lu-Deep-Feature-Preserving] employs the position update mechanism of [Lu-Low-Rank] to filter points based on the estimated normals. ScoreDenoise (SD) models a noisy point cloud’s underlying surface with a 3D distribution supported by 2D manifolds and estimates the score for the gradient of the noise convolved distribution [Luo-Score-Based-Denoising]. TotalDenoising (TD) offers an unsupervised learning alternative to the aforementioned supervised schemes [Hermosilla-Total-Denoising].

Contrastive learning. Recently, we have seen the increased use of contrastive learning in generating faithful representations based on similarity between inputs. Self-supervised learning that maximizes agreement between similar inputs was first proposed by Becker and Hinton [Becker-Contrastive]. Thereafter, contrastive learning was further exploited to learn lower dimensional representations of high dimensional image data by the work of Hadsell, Chopra and LeCun [Hadsell-Contrastive]. Chen et al. utilized more recent neural network architectures and data augmentation methods in their SimCLR method [Chen-SimCLR]. Although initially designed for 2D image processing tasks, contrastive learning is now seeing applications in 3D representation learning [jiang2021unsupervised, Lal-CoCoNets, Du-Self-Supervised-Point-Cloud, Afham-CrossPoint] and for specific point cloud processing tasks such as shape completion, segmentation and scene understanding [Alliegro-Shape-Completion, Li-HybridCR, Hou-3D-Scene]. However, it has never before been explored in terms of the problems of normal estimation and point cloud filtering, which we focus on in this work.

3 Background and Motivation

In this section, we look at the motivation for our contrastive learning based joint normal estimation and filtering method.

3.1 Patch-based contrastive learning

As mentioned earlier, contrastive learning has emerged as an effective method of generating latent representations of inputs such as images or point clouds based on similarity between augmented pairs of inputs which are seen by the network during training [Chen-SimCLR]. The work of Xie et al. [Xie-PointContrast] extended this method to 3D point clouds. Crucially, their work focuses on generating representations of entire point clouds, i.e., they use a global approach. However, as Guerrero et al. [Guerrero-PCPNet] point out, normal estimation at a given point relies on the local structure of the point neighborhood rather than the global structure of the entire point cloud. This is also true for the problem of point cloud filtering [Rakotosaona-PCN] as effective filtering mechanisms must preserve sharp feature information locally. This motivates our approach of developing a patch-based contrastive learning mechanism where noise corruption of input patches is used as an augmentation to develop different views of the same underlying clean patch. Thereafter, we employ the Normalized Temperature-scaled Cross Entropy (NT-XEnt) loss function detailed in Sec. 4.3 which promotes similarity of generated latent representations for a given positive pair of augmented patches. Inspired by the work of [Chen-SimCLR], we do not explicitly sample negative pairs as the remaining augmented pairs within a batch can be used for this purpose. Furthermore, the goal of this contrastive process is to bring representations of patches of the same underlying clean structure closer together, which is unlike a triplet based learning process which simultaneously brings representations closer for similar patches while pushing away representations of dissimilar patches.

3.2 Joint normal estimation and filtering

Normal estimation and point cloud filtering are two interconnected tasks. Accurately predicted normals are central to reliable point cloud filtering and surface reconstruction as mentioned by [Lu-Deep-Feature-Preserving, RIMLS-Oztireli]. In a similar manner, predicting normals on less noisy patches provide more accurate results, as opposed to noisier patches where outliers affect the final prediction [Guerrero-PCPNet, Lenssen-Deep-Iterative]. This motivates our joint normal estimation and filtering approach where our regression network estimates patch normals along with point displacements to filter central patch points. Thereafter, the estimated normals are used to further refine the final filtered position. This approach helps exploit the interlinked relationship between normal estimation and point cloud filtering and motivates our joint approach.

3.3 Link between contrastive learning and regression tasks

Feature encoders trained using contrastive learning are adept at generating similar representations of similar inputs and dissimilar representations of dissimilar inputs. Guided by the intuition that two noisy variants of the same underlying clean patch should generate similar latent representations, we develop a contrastive learning framework with noise corruption as an augmentation to train a feature encoder (see Fig. 1). This encoder is later used as part of a regression network (Fig. 3) to infer point normals and filtered displacements simultaneously. During training of the regression network, the pretrained feature encoder’s weights are kept frozen and only the regressor is trained. The pretrained feature encoder is robust to noise and generates representations that can be consumed more effectively by the regression network during training. Fig. 2 illustrates t-SNE projections of 250 noisy variants of a given sharp feature patch and 250 noisy variants of a non-sharp feature patch obtained from the Cube shape in our dataset. The corresponding clean patches are illustrated at the top of Fig. 2. Each noisy variant contains Gaussian noise of standard deviation , ranging from to of the clean point cloud’s bounding box diagonal. We observe that a feature encoder trained without contrastive learning generates latent representations that are less similar for differing noisy patch variants sharing a common underlying clean structure, for both the sharp feature and non-sharp feature patch. This is evident from Fig. 2 as low noise patch variants (dark green markers) have projections that are far apart from their respective higher noise counterparts (light green/yellow markers).

The feature encoder pretrained using contrastive learning, with only noise corruption as an augmentation, generates latent representations whose t-SNE projections are clustered more closely, indicating that representations are more similar even as noise increases. This is due to the contrastive pretraining that exploits noise corruption as an augmentation and ensures robustness to noise. As such, latent representations generated by the feature encoder are easier for the regression network to distinguish, even for high noise patches, as these representations are similar to that of the underlying clean counterparts. Thereby, the contrastive learning based pretraining facilitates our joint normal estimation and point cloud filtering method.

4 Proposed Methodology

4.1 Overview

We first introduce 3D patch-based contrastive learning to train a feature encoder capable of producing representations of point cloud patches (Fig. 1). This feature encoder consists of a PointNet-like architecture, with 5 Conv1D layers and a global max pool layer that generates a 1024 dimensional representation of input point cloud patches. These representations are projected to a 256 dimensional vector by a projection head consisting of 3 fully connected layers. Once the feature encoder has been trained, we use it to generate latent representations of input patches for regression tasks. Next, we train a regression network (Fig. 3) to predict patch normals and displacements simultaneously (i.e., normal and displacement of the central point of a patch). The regressor consists of the pretrained feature encoder and a MLP of 5 fully connected layers that output the desired normals and displacements. During the testing phase, these displacements are added to the initial point cloud, which produces a filtered point cloud that is refined and becomes the input for the next iteration of inference.

4.2 Contrastive pair construction

A clean point cloud consisting of points is described by where enumerates all training shapes. A noisy point cloud can, thereafter, be characterized by the addition of noise onto the clean point cloud

| (1) |

where corresponds to additive Gaussian noise with a mean of and standard deviation of . For our training set, takes on values of 0.25%, 0.5%, 1.0%, 1.5% and 2.5% of the bounding box diagonal length of . For a given shape, the set of 6 variant point clouds (1 clean and 5 noisy), is given by

| (2) |

Patches sampled from point clouds in are utilized in the contrastive learning process.

An input patch centered at , the point of a given point cloud , can be described by

| (3) |

We create a contrastive pair by randomly sampling another point cloud such that,

| (4) |

Here, and correspond to two noisy variants of the same underlying clean point cloud and and are chosen randomly. The process of pairing with , where both share the same underlying clean patch structure, as both are subsets of and are centered at , is the first augmentation. We note that may have a different patch radius to as the bounding box diagonal length of differs from unless . The patch radii and are taken to be 5% of the respective bounding box diagonals. Patches are maintained at a fixed size to simplify the training procedure. Empirically, for each patch we sample 500 points. If the number of points is fewer, we increase it by copying random points in the patch and downsampling is applied otherwise. All patches are translated to the origin and normalized to [-1, 1], i.e., and .

For a batch of size , we have a total of augmented patches, i.e, we have input patches and their noise contrasted versions. The input patches are drawn from the training set , given by . All pairs and , where , form positive contrastive pairs as these patches are drawn from point clouds of the same shape, the set , and correspond to the same central point . Any pairs or where constitute negative pairs. For each augmented patch, there exists positive pair and negative pairs. We do not pair the augmented patch with itself, i.e., we do not consider or .

Positive contrastive pairs are put into the canonical basis by taking the inverse of the eigenvector matrix, obtained from the covariance matrix of , Eq. (5), and matrix multiplying both patches by it.

| (5) |

Finally, we apply a second augmentation to by randomly rotating it around either the , , or axis by an angle . This second augmentation allows the network to learn patch similarity based on patch structure despite rotations and reinforces the effect of contrastive learning.

4.3 Contrastive learning

Once contrastive pairs have been generated, we train our feature encoder in a contrastive learning manner. As patches within a positive pair correspond to the same ground-truth patch, albeit with different levels of additive noise and arbitrary rotations applied to them, they should produce similar representations. As such, we adopt the Normalized Temperature-scaled Cross Entropy (NT-XEnt) loss [Chen-SimCLR] to achieve this. The loss for a positive pair in the batch is given by

| (6) |

where enumerates over all projections. The function if and otherwise. We empirically set based on results. In Eq. (6), the projection with and parameterized by the projection head and feature encoder, respectively. Finally, the contrastive loss for the entire batch is,

| (7) |

where enumerates over all positive pairs. Fig. 1 shows how a single positive pair within a batch is processed by the feature encoder and projection head. All positive and negative pairs within the batch see the same copy of the feature encoder and projection head and all pairs are processed simultaneously. The larger the batch size, the greater the number of negative pairs available for the contrastive loss calculation. Once the training of the contrastive learning network has been completed, we discard the projection head and use the feature encoder as the backbone for our regression network. Representations generated by the feature encoder are consumed by the regressor, comprising 5 MLPs, and outputs a 2-tuple of displacement and normal vectors. During the training of the regressor, the weights for the feature encoder are kept frozen. The regression process is visualized in Fig. 3.

4.4 Joint loss

We propose a novel, joint position and normal based loss for training our regression network. This joint loss allows our network to, when trained, perform both a position update of a noisy point while also estimating its corresponding normal. Therefore, our regressor loss function comprises two main components: , the position loss and , the normal loss.

Approximating the clean surface. The position loss term is inspired by the work of [Rakotosaona-PCN]. We consider all points within the ground-truth patch and seek to minimize the squared norm instead of solely using a single fixed target.

| (8) |

where is the filtered point from the regressor given a noisy patch centered at , i.e., the point in . The ground truth patch is translated to the origin and normalized such that . This loss term allows the regressor to filter the noisy central point back to the clean patch surface. However, this does not ensure that the filtered point is centered within the ground truth patch which leads to unwanted clustering of filtered points.

Ensuring regular distribution of points. To avoid unwanted point clustering, we employ a regularization term which promotes the centering of filtered points within ground truth patches. By ensuring that filtered points lie close to the central points of their corresponding ground truth patches, we recover a regular distribution of points within the filtered point cloud.

| (9) |

Intuitively, if the filtered point lies away from the ground truth patch center, this leads to a larger penalization due to Eq. (9). For example, given a circular patch with radius , the minimum value of Eq. (9) is where the inferred point lies at the center of the ground truth patch and the furthest point is away. and form the position based loss contribution to the final loss

| (10) |

where is the parameter that controls the regularization term’s contribution to the position loss. We empirically set it to , as we notice that a large contribution affects the convergence of the final loss function.

Normal estimation. We then develop a relationship between a regressed position and its normal. Intuitively, if the ground-truth patch point, which minimizes the squared norm between positions, corresponds to the true position of the filtered point, then that point’s normal should correspond to the true normal of the filtered point. If the ground-truth patch’s central point which minimizes the squared distance is given by

| (11) |

then the angle difference between the predicted normal and the ground-truth normal can be expressed in terms of cosine similarity between the two:

| (12) |

where and correspond to the normals at and , respectively. This cosine term can now be used to construct our normal loss:

| (13) |

The loss function in Eq. (13) is a periodic function which penalizes values away from and . Therefore, it encourages the predicted normal to be as close to the ground-truth normal as possible. It also assumes that the predicted normal with an angle difference of is equivalent to the ground-truth normal. The term, which is empirically set to , serves to control the shape of loss function , wherein, angle differences close to are heavily penalized. This penalization decreases closer to and . Finally, we express our joint loss as

| (14) |

where controls the relative contributions of the position and normal losses to the final loss function. We empirically set to as the emphasis for the regressor is denoising the point cloud iteratively. This in turn leads to better normal estimation results.

4.5 Alternative joint loss

We also examine Eq. (17), a variant of the joint loss function, Eq. (14), which utilizes the point-to-point correspondences between ground truth points and filtered points , i.e., using fixed ground truth targets.

| (15) | ||||

| (16) | ||||

| (17) |

where with and being the predicted normal and ground truth normal, respectively. As we regress the filtered point directly back to the ground truth central point, does not contain a repulsion term. The regressor trained with this loss function performs sub-optimally to that of the regressor trained using Eq. (14). As noted in [Rakotosaona-PCN], multiple clean points, within a given neighbourhood, may be perturbed in such a way as to result in the same noisy point. Therefore, the norm minimization between a filtered point and ground truth point, Eq. (15), cannot successfully remove the noise component tangential to the surface and leads to a lower filtering performance. This motivates our use of Eq. (14) which regresses the filtered point back to the surface while ensuring it is centered as best possible within the ground truth patch. More details are given in Sec. LABEL:sec:alt-joint-loss.

4.6 Inference

The regression network , trained based on the loss function defined by Eq. (14), outputs a 6D vector or 2-tuple of 3D vectors. The first element corresponds to the displacement required to obtain the filtered point , and the second to the corresponding normal vector . As these two vectors are in the space defined by the eigenvectors of the patch covariance matrix, they must first be transformed back to the original space. Subsequently, the filtered point is given by with the original noisy point . The normal vector in the original space is where is the feature encoder.

Given , we apply refinement during post-processing to obtain the final filtered point as suggested by [Rakotosaona-PCN, Lu-Low-Rank]. The first is a Taubin smoothing-like inflation step [Rakotosaona-PCN] and the second is the LRMA position update [Lu-Low-Rank] to combat shrinking of the point cloud and avoid incorrect displacement of points along the surface, respectively. The ablation study on the post-processing refinement is discussed in Section LABEL:sec:no-post-processing. The new position after applying the inflation step is given by,

| (18) |

where we take as the neighborhood of 100 filtered points in the vicinity of and is the original point position before filtering. The LRMA position update yields our final filtered point. The position update is given by,

| (19) |

with being the neighborhood of 20 points in the vicinity of .

5 Experimental Results

5.1 Dataset

Our training set consists of 22 synthetic point clouds (Fig. 4): 11 CAD shapes and 11 non-CAD shapes. The validation set consists of 3 shapes, 1 CAD and 2 non-CAD shapes, while the test set consists of 23 shapes: 14 CAD and 9 non-CAD. Each shape is a point cloud of 100K points, which have been randomly sampled from their original surfaces. For training, we create 5 additional noisy variants of each training shape by adding Gaussian noise with standard deviations of 0.25%, 0.5%, 1.0%, 1.5% and 2.5% of the clean point cloud’s bounding box diagonal. These 6 variants (clean and 5 noise levels) for each shape give a total of 132 point clouds for training purposes. For validation, we consider 2 noisy variants of the initial 3 clean validation shapes, with 0.5% and 1.0% noise, resulting in a total of 6 validation point clouds. In the testing phase, we examine the robustness of our model at unseen noise levels by utilizing 0.6%, 0.8%, 1.1%, 1.5% and 2.0% Gaussian noise for each test shape, yielding 115 test point clouds.

Sharp features. To evaluate performance at sharp features, we classify points within our synthetic dataset as feature and non-feature points. Please refer to the supplementary document for more details.

5.2 Implementation

The contrastive learning and regression networks are both trained on NVIDIA A100 GPUs using PyTorch 1.7.1 with CUDA 11.0. The contrastive learning network is trained for 150 epochs, with the Adam optimizer and a learning rate of . The regression network is trained for 30 epochs, utilizing the SGD optimizer with a learning rate of .

5.3 Comparisons

We compare our method with state-of-the-art normal estimation and point cloud filtering methods. For normal estimation, we consider conventional PCA [Hoppe-PCA], HoughCNN [Boulch-HoughCNN], NINormal [Wang-NINormal], PCPNet [Guerrero-PCPNet], Nesti-Net [Ben-Shabat-Nesti-Net], Deep Iterative (DI) [Lenssen-Deep-Iterative] and AdaFit [Zhu-AdaFit] on our test set including synthetic and scanned point clouds. We do not compare with DeepFit [Ben-Shabat-DeepFit] as AdaFit is based on DeepFit and achieves better results. PCA requires the manual selection of neighborhood sizes for plane-fitting. For synthetic shapes, we utilize three different neighborhood sizes, i.e., 60 points for 0.6% Gaussian noise, 150 points for 0.8% Gaussian noise and 200 points for 1.1%, 1.5% and 2.0% Gaussian noise. For scanned surfaces a neighborhood of 100 points is used. Our metric for comparison is the Mean Squared Angular Error (MSAE) where we calculate angle differences between ground truth normals and predicted normals and take the mean of their squares.

| PCA | NINormal | HoughCNN | PCPNet | Nesti-Net | DI | AdaFit | Ours | |

|---|---|---|---|---|---|---|---|---|

| Syn. (=0.6%) | 0.1637 | 0.2113 | 0.7418 | 0.1184 | 0.1029 | 0.1037 | 0.0958 | 0.1038 |

| Syn. (=0.8%) | 0.1514 | 0.3277 | 1.0427 | 0.1414 | 0.1197 | 0.1246 | 0.1143 | 0.1126 |

| Syn. (=1.1%) | 0.1954 | 0.4813 | 1.1595 | 0.1762 | 0.1467 | 0.1539 | 0.1472 | 0.1268 |

| Syn. (=1.5%) | 0.2664 | 0.6160 | 1.1879 | 0.2174 | 0.1827 | 0.1932 | 0.1914 | 0.1502 |

| Syn. (=2.0%) | 0.3632 | 0.7175 | 1.1853 | 0.2646 | 0.2319 | 0.2405 | 0.2440 | 0.1951 |

| Syn. average | 0.2280 | 0.4708 | 1.0634 | 0.1836 | 0.1568 | 0.1632 | 0.1585 | 0.1377 |

| Sharp feat. ave. | 0.4216 | 0.5872 | 1.0003 | 0.3947 | 0.3504 | 0.3913 | 0.3445 | 0.3230 |

| Scanned average | 0.1010 | — | 0.4960 | 0.0550 | 0.0430 | 0.0370 | 0.0250 | 0.0390 |

| Overall average | 0.2237 | 0.4550 | 1.0443 | 0.1793 | 0.1530 | 0.1590 | 0.1540 | 0.1344 |

| Noisy | CLOP | WLOP | TD | SD | PCN | PF | Ours | ||

| \pbox100ptChamfer dist. () | Syn. (=0.6%) | 4.762 | 2.366 | 3.374 | 4.638 | 1.867 | 1.467 | 1.444 | 1.241 |

| Syn. (=0.8%) | 7.517 | 4.163 | 3.921 | 4.038 | 3.127 | 1.752 | 1.749 | 1.545 | |

| Syn. (=1.1%) | 12.746 | 8.311 | 6.533 | 3.508 | 6.903 | 2.572 | 2.355 | 1.987 | |

| Syn. (=1.5%) | 21.637 | 16.13 | 14.24 | 7.472 | 14.301 | 5.787 | 3.544 | 3.244 | |

| Syn. (=2.0%) | 35.906 | 29.177 | 27.318 | 22.838 | 27.943 | 17.658 | 5.307 | 8.055 | |

| Syn. average | 16.514 | 12.029 | 11.077 | 8.499 | 10.828 | 5.847 | 2.880 | 3.214 | |

| Scanned average | 2.764 | 3.317 | 5.618 | 3.835 | 3.443 | 0.542 | 0.482 | 0.472 | |

| Overall average | 16.052 | 11.736 | 10.894 | 8.342 | 10.58 | 5.669 | 2.799 | 3.122 | |

| \pbox100ptPoint2Surf. dist. () | Syn. (=0.6%) | 4.658 | 2.392 | 2.423 | 3.195 | 2.262 | 1.380 | 1.141 | 1.067 |

| Syn. (=0.8%) | 6.143 | 3.730 | 3.052 | 3.078 | 3.520 | 1.592 | 1.386 | 1.324 | |

| Syn. (=1.1%) | 8.306 | 5.976 | 4.810 | 3.063 | 6.009 | 2.108 | 1.810 | 1.807 | |

| Syn. (=1.5%) | 11.073 | 8.959 | 8.418 | 5.471 | 9.259 | 3.100 | 2.472 | 2.937 | |

| Syn. (=2.0%) | 14.432 | 12.685 | 12.460 | 11.282 | 13.315 | 5.124 | 3.440 | 5.020 | |

| Syn. average | 8.922 | 6.748 | 6.233 | 5.218 | 6.873 | 2.661 | 2.050 | 2.431 | |

| Sharp feat. ave. | 9.082 | 6.867 | 6.324 | 6.409 | 6.893 | 3.366 | 2.339 | 2.887 | |

| Scanned average | 3.795 | 3.410 | 4.037 | 2.990 | 3.957 | 1.000 | 0.722 | 0.705 | |

| Overall average | 8.750 | 6.636 | 6.159 | 5.143 | 6.775 | 2.605 | 2.005 | 2.373 |

For point cloud filtering, we compare with conventional methods CLOP [Preiner-CLOP] and WLOP [Huang-WLOP] and deep learning methods TotalDenoising (TD) [Hermosilla-Total-Denoising], ScoreDenoise (SD) [Luo-Score-Based-Denoising], PointCleanNet [Rakotosaona-PCN] and Pointfilter [Zhang-Pointfilter] on two metrics, the Chamfer distance (CD) and the Point2Surface distance (P2S) [Li-Dis-PU]. The Chamfer distance between a ground truth point cloud and a filtered point cloud is defined by Eq. (20). The first term provides a measure of the distance from filtered points to the ground truth surface while the second provides a measure of the relative even distribution of filtered points w.r.t. the clean point cloud. P2S measures the average distance between filtered points and the reconstructed ground truth mesh. All learning based methods are retrained on our dataset.

| (20) |

Evaluation at sharp features. We take the MSAE for sharp feature points as a measure of normal estimation accuracy and the P2S distance for these points as a measure of filtering accuracy. Tables I, 5.3, V and LABEL:tab:filtering-additional give the MSAE and P2S averages for each method on our dataset.

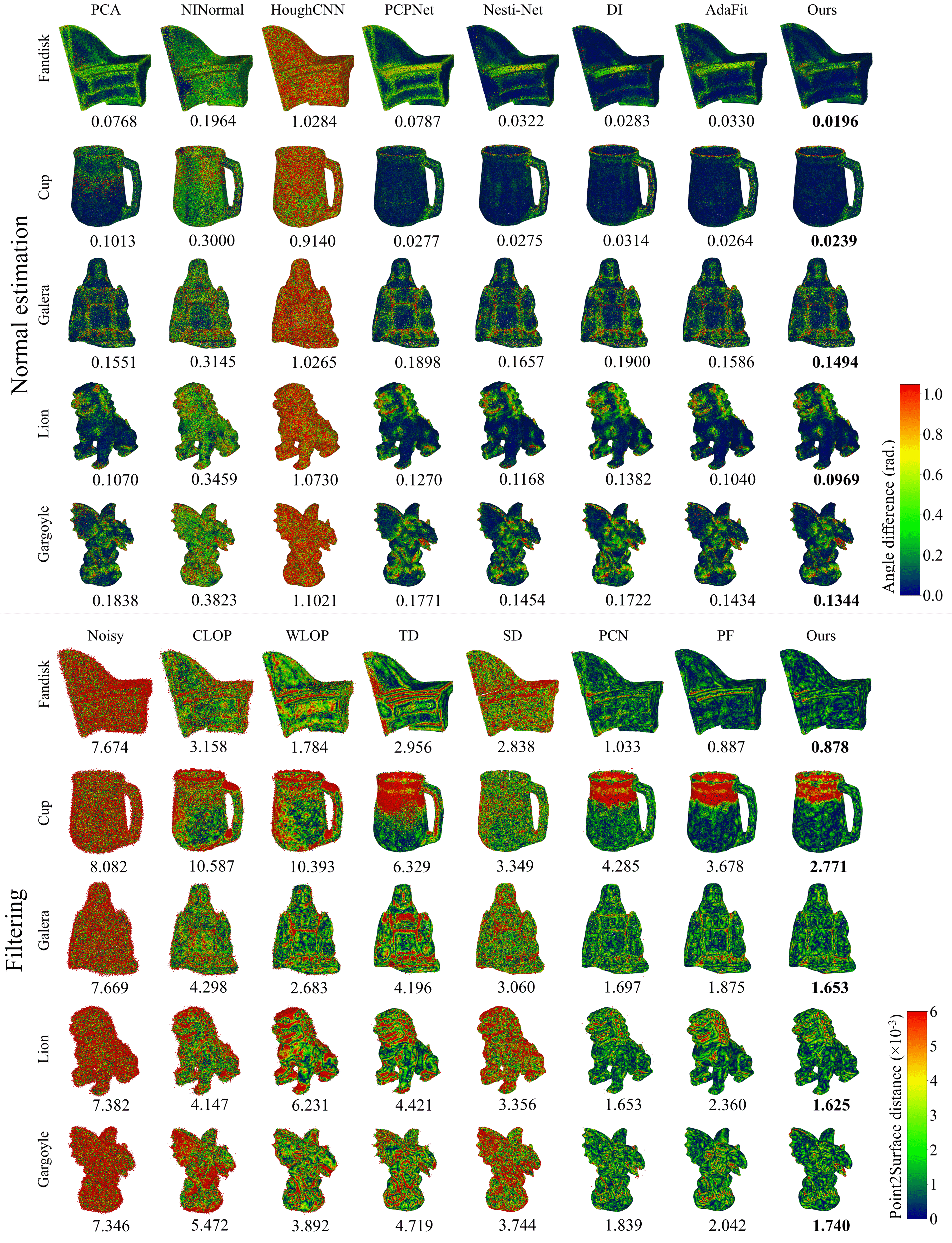

5.4 Performance on synthetic data

Tables I and III and the top half of Fig. 5 demonstrate normal estimation results on shapes with Gaussian noise. For the normal estimation task, our method outperforms all other methods including ones which employ larger networks, such as Nesti-Net, and recovers accurate normals in the presence of noise. AdaFit offers competitive results at lower noise levels but performs sub-optimally at higher noise. Another key attribute is its ability to accurately predict normals at sharp features. Both these attributes of the network can be seen by its performance on the Fandisk shape. Overall, it has the lowest MSAE at sharp feature points and shows higher robustness to noise. Contrastive learning facilitates this as patches from anisotropic surfaces are trained to produce distinct feature representations which can be better distinguished by the regressor. Additionally, as positive contrastive pairs share the same underlying clean surface, representations of a given patch at differing noise levels remain similar. This allows the regressor to reliably estimate normals as noise increases.

| Shape | Normal estimation - MSAE | Filtering - Chamfer distance () | ||||||||

| PCPNet | Nesti-Net | DI | AdaFit | Ours | Noisy | PCN | PF | Ours | ||

| Fandisk | 0.6% | 0.0615 | 0.0205 | 0.0148 | 0.0197 | 0.0159 | 4.735 | 0.896 | 0.748 | 0.747 |

| 0.8% | 0.0787 | 0.0322 | 0.0283 | 0.0329 | 0.0196 | 7.674 | 1.033 | 0.887 | 0.878 | |

| 1.1% | 0.1005 | 0.0534 | 0.0542 | 0.0563 | 0.0266 | 13.232 | 1.546 | 1.246 | 1.244 | |

| 1.5% | 0.1250 | 0.0708 | 0.0828 | 0.0948 | 0.0442 | 22.610 | 3.520 | 1.678 | 2.285 | |

| 2.0% | 0.1498 | 0.0945 | 0.1152 | 0.1366 | 0.0716 | 37.450 | 13.952 | 2.916 | 6.613 | |

| Cup | 0.6% | 0.0194 | 0.0218 | 0.0262 | 0.0198 | 0.0213 | 5.276 | 3.488 | 2.384 | 2.117 |

| 0.8% | 0.0277 | 0.0275 | 0.0314 | 0.0264 | 0.0239 | 8.082 | 4.285 | 3.678 | 2.771 | |

| 1.1% | 0.0451 | 0.0399 | 0.0389 | 0.0446 | 0.0287 | 12.961 | 5.609 | 5.228 | 2.957 | |

| 1.5% | 0.0773 | 0.0645 | 0.0518 | 0.0749 | 0.0390 | 21.329 | 10.267 | 8.462 | 2.995 | |

| 2.0% | 0.1153 | 0.1108 | 0.0755 | 0.1027 | 0.0640 | 34.351 | 22.665 | 10.917 | 5.057 | |

| Galera | 0.6% | 0.1504 | 0.1357 | 0.1469 | 0.1256 | 0.1324 | 4.924 | 1.422 | 1.516 | 1.334 |

| 0.8% | 0.1898 | 0.1657 | 0.1899 | 0.1586 | 0.1494 | 7.669 | 1.697 | 1.875 | 1.653 | |

| 1.1% | 0.2416 | 0.2085 | 0.2472 | 0.2091 | 0.1747 | 12.922 | 2.430 | 2.478 | 2.128 | |

| 1.5% | 0.2904 | 0.2609 | 0.2947 | 0.2670 | 0.2166 | 21.713 | 5.930 | 3.493 | 3.324 | |

| 2.0% | 0.3403 | 0.3136 | 0.3441 | 0.3219 | 0.2775 | 35.215 | 19.101 | 6.112 | 6.879 | |

| Lion | 0.6% | 0.0957 | 0.0896 | 0.0989 | 0.0741 | 0.0798 | 4.691 | 1.309 | 1.768 | 1.219 |

| 0.8% | 0.1270 | 0.1168 | 0.1382 | 0.1040 | 0.0969 | 7.382 | 1.653 | 2.360 | 1.625 | |

| 1.1% | 0.1707 | 0.1570 | 0.1786 | 0.1481 | 0.1262 | 12.489 | 2.567 | 3.160 | 2.273 | |

| 1.5% | 0.2205 | 0.2053 | 0.2185 | 0.2022 | 0.1684 | 21.135 | 6.105 | 4.130 | 3.606 | |

| 2.0% | 0.2755 | 0.2630 | 0.2718 | 0.2595 | 0.2279 | 34.894 | 19.627 | 6.181 | 8.796 | |

| Gargoyle | 0.6% | 0.1305 | 0.1113 | 0.1250 | 0.1038 | 0.1134 | 4.710 | 1.518 | 1.661 | 1.345 |

| 0.8% | 0.1771 | 0.1454 | 0.1722 | 0.1434 | 0.1344 | 7.346 | 1.839 | 2.042 | 1.74 | |

| 1.1% | 0.2445 | 0.2037 | 0.2340 | 0.2159 | 0.1753 | 12.16 | 2.968 | 3.034 | 2.441 | |

| 1.5% | 0.3077 | 0.2672 | 0.2953 | 0.2865 | 0.2253 | 19.903 | 6.594 | 5.179 | 3.902 | |

| 2.0% | 0.3725 | 0.3383 | 0.3616 | 0.3523 | 0.3019 | 32.178 | 19.408 | 8.000 | 8.597 | |

Table 5.3 and III and the bottom half of Fig. 5 demonstrate filtering results on shapes with Gaussian noise. A core attribute of our method is that it generalises well between both CAD-like and non-CAD like shapes. It is able to recover the sharp feature information of CAD shapes such as Fandisk while also achieving the highest accuracy on non-CAD shapes such as Galera and Gargoyle. Furthermore, on complex shapes such as Cup, our method outperforms others at filtering noisy points along closely neighboring surfaces. Pointfilter specializes in the point cloud filtering task and it only outperforms our method on both metrics at the highest noise level. Although, at higher noise, Pointfilter has slightly lower Point2Surface distances, the higher Chamfer distance indicates a relatively uneven distribution of points along the filtered surface. This is due to the fact that there are multiple displacements along which a point may return to the underlying clean surface. Therefore, even if the point is returned to the underlying surface, it may be incorrectly positioned along the surface. Our method reduces this longitudinal jitter by using estimated normals in conjunction with the LRMA position update scheme of [Lu-Low-Rank]. This takes regressed point positions, the output from our network, as input and updates them based on the neighboring points’ positions and normal information from the previous iteration. Thereby, we are able to optimize both the position and normal estimation predictions to generate a filtered point cloud which more accurately represents the original clean point cloud. Additional visual results are given in the supplementary document.

5.5 Performance on scanned data

Next, we look at the performance of each method on the scanned dataset which comprises 3 CAD-like scans and 1 non-CAD like scan where the ground-truth normal and position information are known. These scans are obtained using the virtual scanner introduced by Yu et al [Yu-EC-Net]. Additionally, we present comparisons on the Kinect v1 and Kinect v2 datasets, introduced by Wang, Liu and Tong [Wang-Kinect], comprising 71 and 72 scans respectively, obtained using Kinect v1 and v2 sensors. As these scans have no ground truth normal information, we only present filtering results. We also test the learning based filtering methods on two scans extracted from the Paris-Rue-Madame database [Serna-Rue-Madame]. They are scans taken from Rue Madame, a street in the 6th district of Paris. These scans capture many intricate details like the street’s building facades and parked vehicles and also provide a good example of real-world noise that is encountered when obtaining such scans. Finally, we provide results on two scans extracted from the Kitti-360 dataset [Liao-Kitti360] in the supplementary document. They capture scenes in several suburbs of Karlsruhe, Germany, using Velodyne HDL-64E sensors. As the Rue Madame and Kitti-360 scans have no ground truth, only visual results are presented. Tables I and 5.3 illustrate quantitative normal estimation and filtering results on the scanned data. AdaFit outperforms other methods in estimating normals on scanned data, but is less robust to high noise. Our method, on average, performs better than other methods and remains competitive at estimating normals near sharp features, as shown in Fig. 6.

| Noisy | PCN | PF | Ours | ||

|---|---|---|---|---|---|

| \pbox100ptKinect v1 | CD | 14.489 | 13.469 | 12.623 | 12.083 |

| P2S | 6.272 | 5.763 | 5.029 | 4.947 | |

| \pbox100ptKinect v2 | CD | 22.633 | 21.985 | 20.174 | 18.785 |

| P2S | 7.505 | 7.269 | 6.265 | 5.889 |

Among filtering methods, our method shows an advantage in recovering sharp feature information as well as fine details, such as the braids in the girl’s hair in Fig. 7. Our method also performs optimally on the Kinect v1 and Kinect v2 datasets, as demonstrated by Table IV. We outperform other methods on both the Chamfer distance and Point2Surface metrics, indicating its ability to approximate the surface and generate a regular distribution of points. Visual results on the Kinect data are presented in the supplementary document. Furthermore, Fig. 8 demonstrates our method’s ability to filter complex scenes. Results on Scene 1 and Scene 2 demonstrate our method’s ability to maintain sharp features, in the presence of real-world noisy artifacts, while other methods such as PointCleanNet (PCN) and Pointfilter (PF) [Rakotosaona-PCN, Zhang-Pointfilter] tend to smear feature information. For example, in Scene 1, the outlines of doors and windows are filtered accurately by our method while smoothing planar surfaces (surfaces of doors, walls). Fine details such as the headlights of vehicles and the outlines of tire rims are also recovered. Conversely, Pointfilter smears such details while PointCleanNet only partially filters them and is not successful in smoothing planar surfaces. In Scene 2, we demonstrate an ability to reliably filter details of vehicles including side-mirrors and windows while other methods perform sub-optimally.

Based on these results, we see that our method is very competitive in filtering while holding a clear advantage in normal estimation. On average (Tables I and 5.3), our method outperforms other methods in both tasks.

5.6 Performance on shapes with varying density and different noise patterns

The synthetic data results presented in Tables I and 5.3 correspond to point clouds generated by uniformly sampling their original meshes, with Gaussian random noise subsequently added to them. We also evaluate normal estimation and filtering methods on uniformly sampled point clouds with an impulsive noise pattern and on point clouds sampled with varying density. To generate point clouds with an impulsive noise pattern, we apply Gaussian noise of , with respect to the bounding box diagonal, to 30% of points in each clean point cloud within the test set. To obtain varying density point clouds, two different sampling regimes, gradient and striped, are applied to the original test meshes. Thereafter, Gaussian noise of is applied to these point clouds. Further details on varying density point clouds can be found in the supplementary document. When evaluating the performance of methods on test point clouds with varying density and different noise patterns, we use models trained on our synthetic dataset comprising of point clouds with Gaussian noise. Table V and Table LABEL:tab:filtering-additional demonstrate our method’s ability to generalize well on point clouds with varying density for normal estimation while performing competitively in filtering. For point clouds with an impulse noise pattern, we perform optimally at the filtering task.

| PCA | PCPNet | Nesti-Net | DI | AdaFit | Ours | |

|---|---|---|---|---|---|---|

| VD (=0.8%) | 0.143 | 0.102 | 0.098 | 0.089 | 0.083 | 0.075 |

| VD sharp feat. ave. | 0.298 | 0.294 | 0.273 | 0.278 | 0.247 | 0.242 |

| IN (=1.5%) | 0.118 | 0.125 | 0.106 | 0.077 | 0.104 | 0.111 |

| IN sharp feat. ave. | 0.334 | 0.329 | 0.275 | 0.208 | 0.304 | 0.309 |

and Point Cloud Filtering: Supplementary

Dasith de Silva Edirimuni, Xuequan Lu, Gang Li, and Antonio Robles-Kelly, D. de Silva Edirimuni, X. Lu, G. Li and A. Robles-Kelly are with the School of Information Technology, Deakin University, Waurn Ponds, Victoria, 3216, Australia (e-mail: {dtdesilva, xuequan.lu, gang.li, antonio.robles-kelly}@deakin.edu.au). A. Robles-Kelly is also with the Defense Science and Technology Group, Australia. Manuscript received Month Day, Year; revised Month Day, Year. (Corresponding author: Xuequan Lu.)

Here we provide supplementary material to the main paper. In particular, we provide additional information on the following:

-

1.

Sharp feature points classification

-

2.

Varying density point clouds

-

3.

Further comparisons on synthetic and real-world scan data

A Sharp Feature Points Classification

In order to explicitly analyze the performance of normal estimation and filtering methods at sharp features on our synthetic data, we must first determine which points correspond to sharp features, i.e., points that lie on anisotropic surfaces. To achieve this, we exploit the ground truth normal information for each clean synthetic point cloud. For each point in the clean point cloud, we consider neighborhoods of their 10 nearest neighbors. Nearest neighbors whose normals make an angle with the neighborhood’s central point’s normal are classified as feature points. Here, the threshold angle between normals for determining a sharp feature point is . If the angle is below the threshold, the surface varies smoothly. An angle greater than most likely corresponds to a normal of a point on a surface parallel to that of the central point and is not considered. This scheme allows us to extract points along sharp edges and corners. The unique indices corresponding to these points (on ground truth and filtered point clouds) are used to determine MSAE and Point2Surface distance values at sharp features.

B Varying density point clouds

Varying density point clouds are obtained by applying two different sampling regimes, gradient and striped, to the original test meshes. Fig. 12 illustrates point clouds from these two regimes. Thereafter, Gaussian noise of is added to each point cloud in order to evaluate the robustness of normal estimation and filtering methods on noisy, varying density point clouds.

C Further comparisons on synthetic and real-world scan data

Fig. 13 displays normal estimation and filtering results on additional shapes within our synthetic data for Gaussian noise with standard deviation of of the bounding box diagonal and Table XIII details performance across all noise levels, for each respective shape. In the normal estimation task, we outperform other methods and generalize well between CAD shapes such as StarSharp and non-CAD shapes such as Netsuke, especially at higher noise levels. For the filtering task, we perform competitively, and our method is able to reliably recover sharp features on CAD shapes such as StarSharp and PipeCurve and fine details on non-CAD shapes such as Netsuke.

| Shape | Normal estimation - MSAE | Filtering - Chamfer distance () | ||||||||

| PCPNet | Nesti-Net | DI | AdaFit | Ours | Noisy | PCN | PF | Ours | ||

| PipeCurve | 0.6% | 0.0169 | 0.0068 | 0.0056 | 0.0146 | 0.0115 | 5.190 | 1.482 | 1.015 | 1.024 |

| 0.8% | 0.0193 | 0.0105 | 0.0087 | 0.0303 | 0.0123 | 8.067 | 2.167 | 1.341 | 1.158 | |

| 1.1% | 0.0230 | 0.0173 | 0.0132 | 0.0689 | 0.0121 | 12.922 | 5.041 | 4.691 | 1.651 | |

| 1.5% | 0.0304 | 0.0324 | 0.0214 | 0.0597 | 0.0196 | 19.805 | 11.395 | 11.26 | 3.214 | |

| 2.0% | 0.0398 | 0.0433 | 0.0280 | 0.0421 | 0.0272 | 30.269 | 25.024 | 16.563 | 7.103 | |

| Netsuke | 0.6% | 0.1628 | 0.1552 | 0.1793 | 0.1440 | 0.1591 | 4.555 | 1.263 | 1.545 | 1.237 |

| 0.8% | 0.1953 | 0.1808 | 0.2125 | 0.1714 | 0.1712 | 7.209 | 1.49 | 1.823 | 1.500 | |

| 1.1% | 0.2462 | 0.2163 | 0.2526 | 0.2155 | 0.1964 | 12.209 | 2.160 | 2.294 | 2.040 | |

| 1.5% | 0.3122 | 0.2736 | 0.3054 | 0.2763 | 0.2459 | 20.832 | 4.585 | 3.171 | 3.826 | |

| 2.0% | 0.3714 | 0.3447 | 0.3631 | 0.3463 | 0.3044 | 33.812 | 14.521 | 5.077 | 9.593 | |

| StarSharp | 0.6% | 0.1371 | 0.1257 | 0.1029 | 0.0801 | 0.1039 | 4.002 | 0.93 | 0.895 | 0.822 |

| 0.8% | 0.1732 | 0.1684 | 0.1484 | 0.1177 | 0.1148 | 6.564 | 1.219 | 1.284 | 1.195 | |

| 1.1% | 0.2280 | 0.2309 | 0.1977 | 0.1671 | 0.1338 | 11.572 | 2.416 | 1.980 | 2.597 | |

| 1.5% | 0.2776 | 0.2811 | 0.2558 | 0.2226 | 0.1622 | 20.749 | 7.205 | 3.535 | 6.190 | |

| 2.0% | 0.3219 | 0.3206 | 0.3020 | 0.2849 | 0.1696 | 36.616 | 20.958 | 5.770 | 14.617 | |

| Dragon | 0.6% | 0.1562 | 0.1302 | 0.1337 | 0.1310 | 0.1526 | 4.644 | 1.494 | 1.613 | 1.443 |

| 0.8% | 0.2117 | 0.1725 | 0.1800 | 0.1725 | 0.1787 | 7.294 | 1.862 | 2.085 | 1.893 | |

| 1.1% | 0.2857 | 0.2279 | 0.2329 | 0.2287 | 0.2087 | 12.183 | 2.858 | 2.863 | 2.609 | |

| 1.5% | 0.3765 | 0.3170 | 0.3301 | 0.3245 | 0.2772 | 20.215 | 6.577 | 4.491 | 4.394 | |

| 2.0% | 0.4768 | 0.4279 | 0.4386 | 0.4240 | 0.3684 | 32.734 | 19.231 | 6.269 | 9.000 | |

| Genus | 0.6% | 0.0128 | 0.0119 | 0.0218 | 0.0103 | 0.0112 | 4.720 | 0.817 | 0.642 | 0.638 |

| 0.8% | 0.0184 | 0.0170 | 0.0274 | 0.0165 | 0.0134 | 7.645 | 0.901 | 0.676 | 0.721 | |

| 1.1% | 0.0318 | 0.0303 | 0.0394 | 0.0370 | 0.0178 | 13.249 | 1.370 | 0.909 | 0.973 | |

| 1.5% | 0.0570 | 0.0486 | 0.0526 | 0.0628 | 0.0248 | 22.718 | 3.647 | 2.025 | 2.000 | |

| 2.0% | 0.1022 | 0.0865 | 0.0724 | 0.1119 | 0.0478 | 37.945 | 14.607 | 3.835 | 6.064 | |

| Monkey | 0.6% | 0.2225 | 0.2113 | 0.2298 | 0.1953 | 0.2174 | 4.371 | 1.220 | 1.393 | 1.150 |

| 0.8% | 0.2715 | 0.2532 | 0.2738 | 0.2358 | 0.2442 | 6.877 | 1.461 | 1.712 | 1.473 | |

| 1.1% | 0.3372 | 0.3083 | 0.3297 | 0.2964 | 0.2788 | 11.706 | 2.112 | 2.175 | 1.978 | |

| 1.5% | 0.4125 | 0.3719 | 0.3948 | 0.3745 | 0.3326 | 19.968 | 4.956 | 2.896 | 3.621 | |

| 2.0% | 0.4856 | 0.4516 | 0.4754 | 0.4626 | 0.4103 | 32.578 | 16.503 | 4.651 | 9.033 | |

On the Kinect v1 and v2 datasets, we outperform other methods as depicted by Fig. 14. The average Chamfer distance and Point2Surface results are given in Table 4 of the main paper. Fig. 15 provides an evaluation on 2 scenes of the Kitti-360 dataset. This dataset contains sparse point clouds with high amounts of real world noise which makes it difficult to filter these scenes. However, as shown in scene 1, we perform better at filtering noise and retrieving the underlying shapes of parked cars as compared to other methods.