ContraNorm: A Contrastive Learning Perspective on Oversmoothing and Beyond

Abstract

Oversmoothing is a common phenomenon in a wide range of Graph Neural Networks (GNNs) and Transformers, where performance worsens as the number of layers increases. Instead of characterizing oversmoothing from the view of complete collapse in which representations converge to a single point, we dive into a more general perspective of dimensional collapse in which representations lie in a narrow cone. Accordingly, inspired by the effectiveness of contrastive learning in preventing dimensional collapse, we propose a novel normalization layer called ContraNorm. Intuitively, ContraNorm implicitly shatters representations in the embedding space, leading to a more uniform distribution and a slighter dimensional collapse. On the theoretical analysis, we prove that ContraNorm can alleviate both complete collapse and dimensional collapse under certain conditions. Our proposed normalization layer can be easily integrated into GNNs and Transformers with negligible parameter overhead. Experiments on various real-world datasets demonstrate the effectiveness of our proposed ContraNorm. Our implementation is available at https://github.com/PKU-ML/ContraNorm.

1 Introduction

Recently, the rise of Graph Neural Networks (GNNs) has enabled important breakthroughs in various fields of graph learning (Ying et al., 2018; Senior et al., 2020). Along the other avenue, although getting rid of bespoke convolution operators, Transformers (Vaswani et al., 2017) also achieve phenomenal success in multiple natural language processing (NLP) tasks (Lan et al., 2020; Liu et al., 2019; Rajpurkar et al., 2018) and have been transferred successfully to computer vision (CV) field (Dosovitskiy et al., 2021; Liu et al., 2021; Strudel et al., 2021). Despite their different model architectures, GNNs and Transformers are both hindered by the oversmoothing problem (Li et al., 2018; Tang et al., 2021), where deeply stacking layers give rise to indistinguishable representations and significant performance deterioration.

In order to get rid of oversmoothing, we need to dive into the modules inside and understand how oversmoothing happens on the first hand. However, we notice that existing oversmoothing analysis fails to fully characterize the behavior of learned features. A canonical metric for oversmoothing is the average similarity (Zhou et al., 2021; Gong et al., 2021; Wang et al., 2022). The tendency of similarity converging to 1 indicates representations shrink to a single point (complete collapse). However, this metric can not depict a more general collapse case, where representations span a low-dimensional manifold in the embedding space and also sacrifice expressive power, which is called dimensional collapse (left figure in Figure 1). In such cases, the similarity metric fails to quantify the collapse level. Therefore, we need to go beyond existing measures and take this so-called dimensional collapse into consideration. Actually, this dimensional collapse behavior is widely discussed in the contrastive learning literature (Jing et al., 2022; Hua et al., 2021; Chen & He, 2021; Grill et al., 2020), which may hopefully help us characterize the oversmoothing problem of GNNs and Transformers. The main idea of contrastive learning is maximizing agreement between different augmented views of the same data example (i.e. positive pairs) via a contrastive loss. Common contrastive loss can be decoupled into alignment loss and uniformity loss (Wang & Isola, 2020). The two ingredients correspond to different objectives: alignment loss expects the distance between positive pairs to be closer, while uniformity loss measures how well the embeddings are uniformly distributed. Pure training with only alignment loss may lead to a trivial solution where all representations shrink to one single point. Fortunately, the existence of uniformity loss naturally helps solve this problem by drawing representations evenly distributed in the embedding space.

Given the similarities between the oversmoothing problem and the representation collapse issue, we establish a connection between them. Instead of directly adding uniformity loss into model training, we design a normalization layer that can be easily used out of the box with almost no parameter overhead. To achieve this, we first transfer the uniformity loss used for training to a loss defined over graph node representations, thus it can optimize representations themselves rather than model parameters. Intuitively, the loss meets the need of drawing a uniform node distribution. Following the recent research in combining optimization scheme and model architecture (Yang et al., 2021; Zhu et al., 2021; Xie et al., 2021; Chen et al., 2022), we use the transferred uniformity loss as an energy function underlying our proposed normalization layer, such that descent steps along it corresponds with the forward pass. By analyzing the unfolding iterations of the principled uniformity loss, we design a new normalization layer ContraNorm.

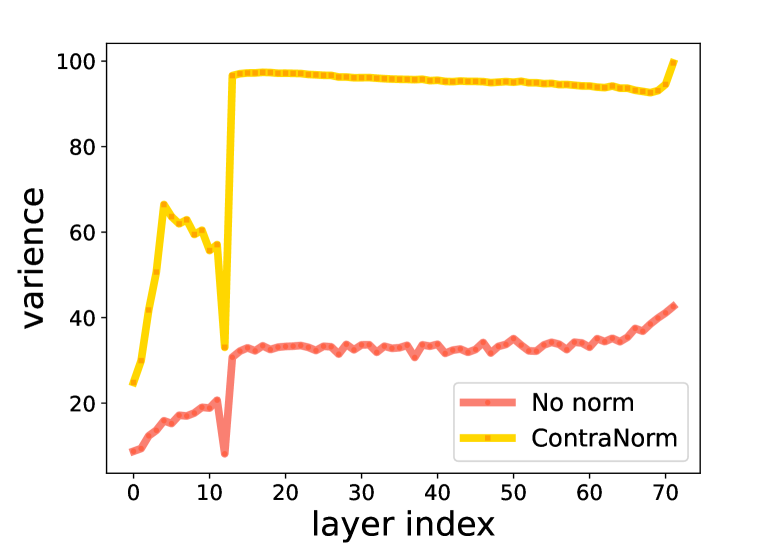

As a proof of concept, Figure 1 demonstrates that ContraNorm makes the features away from each other, which eases the dimensional collapse. Theoretically, we prove that ContraNorm increases both average variance and effective rank of representations, thus solving complete collapse and dimensional collapse effectively. We also conduct a comprehensive evaluation of ContraNorm on various tasks. Specifically, ContraNorm boosts the average performance of BERT (Devlin et al., 2018) from 82.59% to 83.54% on the validation set of General Language Understanding Evaluation (GLUE) datasets (Wang et al., 2019), and raises the test accuracy of DeiT (Touvron et al., 2021) with 24 blocks from 77.69% to 78.67% on ImageNet1K (Russakovsky et al., 2015) dataset. For GNNs, experiments are conducted on fully supervised graph node classification tasks, and our proposed model outperforms vanilla Graph Convolution Network (GCN) (Kipf & Welling, 2017) on all depth settings. Our contributions are summarized as:

-

•

We dissect the limitations of existing oversmoothing analysis, and highlight the importance of incorporating the dimensional collapse issue into consideration.

-

•

Inspired by the techniques from contrastive learning to measure and resolve oversmoothing, we propose ContraNorm as an optimization-induced normalization layer to prevent dimensional collapse.

-

•

Experiments on a wide range of tasks show that ContraNorm can effectively mitigate dimensional collapse in various model variants, and demonstrate clear benefits across three different scenarios: ViT for image classification, BERT for natural language understanding, and GNNs for node classifications.

2 Background & Related Work

Message Passing in Graph Neural Networks. In the literature of GNNs, message-passing graph neural networks (MP-GNNs) are intensively studied. It progressively updates representations by exchanging information with neighbors. The update of node ’s representation in -th layer is formalized as where denotes the neighborhood set of node , is the procedure where nodes exchange message, and is often a multi-layer perceptron (MLP). A classical MP-GNNs model is GCN (Kipf & Welling, 2017), which propagates messages among one-hop neighbors.

Self-Attention in Transformers. Transformers encode information in a global scheme with self-attention as the key ingredient (Vaswani et al., 2017). Self-attention module re-weights intermediate representations by aggregating semantically near neighbors. Formally, it estimates similarities between key and query, namely self-attention matrix, as , , and where , , and are the input, weight matrices for query and key, respectively. A multi-head self-attention module with a residual connection can be formularized as where is the number of heads, and , are weights for value and final output.

Connections between Message Passing and Self-Attention. Note that the self-attention matrix can be seen as a normalized adjacent matrix of a corresponding graph (Shi et al., 2022). Considering a weighted fully connected graph with adjacency matrix denoted as , we map nodes to token representations and set weights of the edge between node and node to . Then entry of a normalized adjacency matrix is , where diagonal matrix . Apparently, is equal to the form of self-attention matrix defined in Transformers. Simultaneously, plays a major part in the message-passing scheme by deciding which nodes to exchange information with.

Oversmoothing in GNNs and Transformers. The term oversmoothing is firstly proposed by Li et al. (2018) in research of GNNs. Intuitively, representations converge to a constant after repeatedly exchanging messages with neighbors when the layer goes to infinity. Zhou et al. (2020) mathematically proves that, under some conditions, the convergence point carries only information of the graph topology. Coincidentally, an oversmoothing-like phenomenon is also observed in Transformers. Unlike CNNs, Transformers can not benefit from simply deepening layers, and even saturates with depth increasing. Early works empirically ascribe it to attention/feature collapse or patch/token uniformity (Tang et al., 2021; Zhou et al., 2021; Gong et al., 2021; Yan et al., 2022). To be specific, the attention maps tend to be overly similar in later layers, whereby features insufficiently exchange information and lose diversity in the end. Outputs of pure transformers, i.e., attention without skip connections or MLPs, are also observed to converge to a rank-1 matrix (Dong et al., 2021). For illustration proposes, we also refer to the degeneration issue in Transformers as oversmoothing.

Whitening Methods for Dimensional Collapse. Whitening methods ensure that the covariance matrix of normalized outputs is diagonal, making dimensions mutually independent and implicitly solving dimensional collapse. Huang et al. (2018; 2019); Siarohin et al. (2018); Ermolov et al. (2021) all adopt the idea of whitening, but differ in calculating details of whitening matrix and application domain. Compared with them, we avoid complicated calculations on the squared root of the inverse covariance matrix and delicate design of backward pass for differentiability. Moreover, Huang et al. (2018; 2019) are proposed for convolution operations, Siarohin et al. (2018) is for GAN, and Ermolov et al. (2021) works in self-supervised learning. In contrast, we borrow the idea from contrastive learning and solve the oversmoothing of neural networks.

3 Mitigating Oversmoothing from the Perspective of Contrastive Learning

In this section, we first empirically demonstrate that current similarity metric fails to characterize dimensional collapse, thus overlooking a crucial part of oversmoothing. To address this problem, we draw inspiration from contrastive learning whose uniformity property naturally rules out dimensional collapse. Specifically, we transfer the uniformity loss to a loss that directly acts on representations. By unfolding the optimization steps along this loss, we induce a normalization layer called ContraNorm. Theoretically, we prove our proposed layer helps mitigate dimensional collapse.

3.1 The Characterization of Oversmoothing

In this part, we begin by highlighting the limitations of existing metrics in characterizing oversmoothing. These limitations motivate us to adopt the effective rank metric, which has been demonstrated to be effective in capturing the degree of dimensional collapse in contrastive learning.

Taking the oversmoothing problem of Transformers as an example without loss of generality, a prevailing metric is evaluating attention map similarity (Wang et al., 2022; Gong et al., 2021; Shi et al., 2022). The intuition is that as attention map similarity converges to one, feature similarity increases, which can result in a loss of representation expressiveness and decreased performance. However, by conducting experiments on transformer structured models like ViT and BERT, we find that high attention map similarity does not necessarily correspond to high feature similarity. As shown in Figure 2(a) and Figure 2(b), although attention map similarity is close to 0.8 or even higher, the feature similarity remains below 0.5 in most cases. It means the oversmoothing problem still occurs even with a low feature similarity. This finding suggests that similarity can not fully depict the quality of representations and the oversmoothing problem.

Intuitively, we can consider a case that representations in the latent embedding space do not shrink to one single point but span a low dimensional space. In such cases, feature similarity may be relatively low, but representations still lose expressive power. Such representation degeneration problem is known as dimensional collapse, and it is widely discussed in the literature of contrastive learning. In contrastive learning, common practice to describe dimensional collapse is the vanishing distribution of singular values (Gao et al., 2019; Ethayarajh, 2019; Jing et al., 2022). To investigate whether dimensional collapse occurs in Transformers, we draw the singular value distribution of features in the last block of 12-layer BERT. As shown in Figure 2(c), the insignificant (nearly zero) values dominate singular value distribution in the deep layer of vanilla BERT, indicating that representations reside on a low-dimensional manifold and dimensional collapse happens. To show the collapse tendency along layer index, we simplify the singular value distribution to a concise scalar effective rank (erank) (Roy & Vetterli, 2007), which covers the full singular value spectrum.

Definition 3.1 (Effective Rank).

Considering matrix whose singular value decomposition is given by , where is a diagonal matrix with singular values with . The distribution of singular values is defined as -normalized form The effective rank of the matrix , denoted as , is defined as where is the Shannon entropy given by

Based on erank, we revisit the oversmoothing issue of Transformers in Figure 2(d). We can see that the effective rank descends along with layer index, indicating an increasingly imbalanced singular value distribution in deeper layers. This finding not only verifies dimensional collapse does occur in Transformers, but also indicates the effectiveness of effective rank to detect this issue.

3.2 Inspirations from the Uniformity Loss in Contrastive Learning

The core idea of contrastive learning is maximizing agreement between augmented views of the same example (i.e., positive pairs) and disagreement of views from different samples (i.e. negative pairs). A popular form of contrastive learning optimizes feature representations using a loss function with limited negative samples (Chen et al., 2020). Concretely, given a batch of randomly sampled examples, for each example we generate its augmented positive views and finally get a total of samples. Considering an encoder function , the contrastive loss for the positive pair is

| (1) |

where denotes the temperature. The loss can be decoupled into alignment loss and uniformity loss:

| (2) |

The alignment loss encourages feature representations for positive pairs to be similar, thus being invariant to unnecessary noises. However, training with only the alignment loss will result in a trivial solution where all representations are identical. In other words, complete collapse happens. While batch normalization (Ioffe & Szegedy, 2015) can help avoid this issue, it cannot fully prevent the problem of dimensional collapse, which still negatively impacts learning (Hua et al., 2021).

Thanks to the property of uniformity loss, dimensional collapse can be solved effectively. Reviewing the form of uniformity loss in Eq. (2), it maximizes average distances between all samples, resulting in embeddings that are roughly uniformly distributed in the latent space, and thus more information is preserved. The inclusion of the uniformity loss in the training process helps to alleviate dimensional collapse. Intuitively, it can also serve as a sharp tool for alleviating oversmoothing in models such as GNNs and Transformers.

An alternative approach to address oversmoothing is to directly incorporate the uniformity loss into the training objective. However, our experiments reveal that this method has limited effectiveness (see Appendix B.2 for more details). Instead, we propose a normalization layer that can be easily integrated into various models. Our approach utilizes the uniformity loss as an underlying energy function of the proposed layer, such that a descent step along the energy function corresponds to a forward pass of the layer. Alternatively, we can view the layer as the unfolded iterations of an optimization function. This perspective is adopted to elucidate GNNs (Yang et al., 2021; Zhu et al., 2021), Transformers (Yang et al., 2022), and classic MLPs (Xie et al., 2021).

Note that the uniformity loss works by optimizing model parameters while what the normalization layer directly updates is representation itself. So we first transfer the uniformity loss, which serves as a training loss, to a kind of architecture loss. Consider a fully connected graph with restricted number of nodes, where node is viewed as representation of a random sample . Reminiscent of , we define over all nodes in the graph as

| (3) |

This form of uniformity loss is defined directly on representations, and we later use it as the underlying energy function for representation update.

3.3 The Proposed ContraNorm

Till now, we are able to build a connection between layer design and the unfolded uniformity-minimizing iterations. Specifically, we take the derivative of on node representations:

| (4) |

Denote the feature matrix as with the -th row , we can rewrite Eq. (4) into a matrix form:

| (5) |

where and 111 is a diagonal matrix, whose -th element equals to the sum of the -th row of .. To reduce the uniformity loss , a natural way is to take a single step of gradient descent that updates by

| (6) |

where and denote the representations before and after the update, respectively, and is the step size of the gradient descent. By taking this update after a certain representation layer, we can reduce the uniformity loss of the representations and thus help ease the dimensional collapse.

In Eq. (6), there exist two terms and multiplied with . Empirically, the two terms play a similar role in our method. Note that the first term is related to self-attention matrix in Transformers, so we only preserve it and discard the second one. Then Eq. (6) becomes

| (7) |

In fact, the operation corresponds to the stop-gradient technique, which is widely used in contrastive learning methods (He et al., 2020; Grill et al., 2020; Tao et al., 2022). By throwing away some terms in the gradient, stop-gradient makes the training process asymmetric and thus avoids representation collapse with less computational overhead, which is discussed in detail in Appendix B.1.

However, the layer induced by Eq. (7) still can not ensure uniformity on representations. Consider an extreme case where is equal to identity matrix . Eq. (7) becomes , which just makes the scale of smaller and does not help alleviate the complete collapse. To keep the representation stable, we explore two different approaches: feature norm regularization and layer normalization.

I. Feature Norm regularization. We add a regularization term to the uniformity loss to encourage a larger feature norm. When the regularization term becomes smaller, the norm of becomes larger. Therefore, adding this term can help prevent the norm of representation from becoming smaller. In this way, the update form becomes

| (8) |

Proposition 1.

Let . For attention matrix , let be the smallest eigenvalue of . For the update in Eq. (8), i.e. , we have . Especially, if , we have .

Proposition 1 gives a bound for the ratio of the variance after and before the update. It shows that the change of the variance is influenced by the symmetric matrix . If is a semi-positive definite matrix, we will get the result that , which indicates that the representations become more uniform. In Appendix F, we will give out some sufficient conditions for that is semi-positive definite.

II. Appending LayerNorm. Another option is to leverage Layer Normalization (LayerNorm) (Ba et al., 2016) to maintain the feature scale. The update form of LayerNorm is , where and are learnable parameters and . The learnable parameters and can rescale the representation to help ease the problem. We append LayerNorm to the original update in Eq. (7) and obtain

| (9) |

where applying the layer normalization to a representation matrix means applying the layer normalization to all its components .

ContraNorm. We empirically compare the two proposed methods and find their performance comparable, while the second one performs slightly better. Therefore, we adopt the second update form and name it Contrastive Normalization (ContraNorm). The ContraNorm layer can be added after any representation layer to reduce the uniformity loss and help relieve the dimensional collapse. We discuss the best place to plug our ContraNorm in Appendix B.3.

ContraNorm-D: Scalable ContraNorm for Large-scale Graphs. From the update rule in Eq. (9), we can see that the computational complexity of the ContraNorm layer is , where is the number of tokens and is the feature dimension per token, which is in the same order of self-attention (Vaswani et al., 2017). Therefore, this operation is preferable when the token number is similar or less than the feature dimension , as is usually the case in CV and NLP domains. However, for large-scale graphs that contain millions of nodes (), the quadratic dependence on makes ContraNorm computationally prohibitive. To circumvent this issue, we propose Dual ContraNorm (ContraNorm-D), a scalable variant of ContraNorm whose computational complexity has a linear dependence on , with the following dual update rule,

| (10) |

where we calculate the dimension-wise feature correlation matrix and multiple it to the right of features after softmax normalization. In Appendix A, we provide a more thorough explanation of it, showing how to derive it from the feature decorrelation normalization adopted in non-contrastive learning methods (like Barlow Twins (Zbontar et al., 2021) and VICReg (Bardes et al., 2022)). As revealed in recent work (Garrido et al., 2022), there is a dual relationship between contrastive and non-contrastive learning methods, and we can therefore regard ContraNorm-D as a dual version of ContraNorm that focuses on decreasing dimension-wise feature correlation.

3.4 Theoretical Analysis

In this part, we give a theoretical result of ContraNorm, and show that with a slightly different form of the uniformity loss, the ContraNorm update can help alleviate the dimensional collapse.

Proposition 2.

Consider the update form let be the largest singular value of . For satisfying , we have .

Proposition 2 gives a promise of alleviating dimensional collapse under a special update form. Denote , then shares a similar form with Barlow Twins (Zbontar et al., 2021). This loss tries to equate the diagonal elements of the similarity matrix to 1 and equate the off-diagonal elements of the matrix to 0, which drives different features becoming orthogonal, thus helping the features become more uniform in the space. Note that , therefore, the update above can be rewritten as , which implies that this update form is a ContraNorm with a different uniformity loss. Proposition 2 reveals that this update can increase the effective rank of the representation matrix, when satisfies . Note that no matter whether or not, if is sufficiently close to , the condition will be satisfied. Under this situation, the update will alleviate the dimensional collapse.

4 Experiments

In this section, we demonstrate the effectiveness of ContraNorm by experiments including 1) language understanding tasks on GLUE datasets with BERT and ALBERT (Lan et al., 2020) as the backbones; 2) image classification task on ImageNet100 and ImageNet1k datasets with DeiT as the base model; 3) fully supervised graph node classification task on popular graph datasets with GCN as the backbone. Moreover, we conduct ablative studies on ContraNorm variants comparison and hyperparameters sensitivity analysis.

4.1 Experiments on Language Models

Setup. To corroborate the potential of ContraNorm, we integrate it into two transformer structured models: BERT and ALBERT, and evaluate it on GLUE datasets. GLUE includes three categories of tasks: (i) single-sentence tasks CoLA and SST-2; (ii) similarity and paraphrase tasks MRPC, QQP, and STS-B; (iii) inference tasks MNLI, QNLI, and RTE. For MNLI task, we experiment on both the matched (MNLI-m) and mismatched (MNLI-mm) versions. We default plug ContraNorm after the self-attention module and residual connection (more position choices are in Appendix B.3). We use a batch size of 32 and fine-tune for 5 epochs over the data for all GLUE tasks. For each task, we select the best scale factor in Eq. (6) among . We use base models (BERT-base and ALBERT-base) of 12 stacked blocks with hyperparameters fixed for all tasks: number of hidden size 128, number of attention heads 12, maximum sequence length 384. We use Adam (Kingma & Ba, 2014) optimizer with the learning rate of .

Results. As shown in Table 1, ContraNorm substantially improves results on all datasets compared with vanilla BERT. Specifically, our ContraNorm improves the previous average performance from 82.59% to 83.39% for BERT backbone and from 83.74% to 84.67% for ALBERT backbone. We also submit our trained model to GLUE benchmark leaderboard and the results can be found in Appendix E.1. It is observed that BERT with ContraNorm also outperforms vanilla model across all datasets. To verify the de-oversmoothing effect of ContraNorm. We build models with/without ContraNorm on various layer depth settings. The performance comparison is shown in Figure 3(a). Constant stack of blocks causes obvious deterioration in vanilla BERT, while BERT with ContraNorm maintains competitive advantage. Moreover, for deep models, we also show the tendency of variance and effective rank in Figure 3(b) and Figure 3(c), which verifies the power of ContraNorm in alleviating complete collapse and dimensional collapse, respectively.

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| BERT-base | 55.28 | 92.89 | 88.96 | 88.24 | 88.61 | 84.65 | 84.61 | 91.51 | 68.59 | 82.59 |

| BERT + ContraNorm | 58.83 | 93.12 | 89.49 | 88.30 | 88.66 | 84.87 | 84.66 | 91.78 | 70.76 | 83.39 |

| BERT + ContraNorm* | 59.57 | 93.23 | 89.97 | 88.30 | 88.93 | 84.87 | 84.67 | 91.78 | 70.76 | 83.54 |

| ALBERT-base | 57.35 | 93.69 | 92.09 | 87.23 | 90.54 | 84.56 | 84.37 | 90.90 | 76.53 | 83.74 |

| ALBERT + ContraNorm | 58.51 | 92.89 | 92.86 | 87.45 | 90.56 | 84.33 | 84.62 | 91.76 | 79.06 | 84.67 |

| ALBERT + ContraNorm* | 58.76 | 93.23 | 92.89 | 87.67 | 90.72 | 84.69 | 84.95 | 92.28 | 79.06 | 84.92 |

4.2 Experiments on Vision Transformers

We also validate effectiveness of ContraNorm in computer vision field. We choose DeiT as backbone and models are trained from scratch. Experiments with different depth settings are evaluated on ImageNet100 and ImageNet1k datasets. Based on the Timm (Wightman, 2019) and DeiT repositories, we insert ContraNorm into base model intermediately following self-attention module.

Setup. We follow the training recipe of Touvron et al. (2021) with minimal hyperparameter changes. Specifically, we use AdamW (Loshchilov & Hutter, 2019) optimizer with cosine learning rate decay. We train each model for 150 epochs and the batch size is set to 1024. Augmentation techniques are used to boost the performance. For all experiments, the image size is set to be . For Imagenet100, we set the scale factor to 0.2 for all layer depth settings. For Imagenet1k, we set to 0.2 for models with 12 and 16 blocks, and raise it to 0.3 for models with 24 blocks.

Results. In Table 2, DeiT with ContraNorm outperforms vanilla DeiT with all layer settings on both datasets. Typically, our method shows a gain of nearly 5% on test accuracy for ImageNet100. For ImageNet1k, we boost the performance of DeiT with 24 blocks from 77.69% to 78.67%.

| Dataset | Model | L=12 | L=16 | L=24 |

|---|---|---|---|---|

| ImageNet100 | DeiT-tiny | 76.58 | 75.34 | 76.76 |

| +ContraNorm | 79.34 | 80.44 | 81.28 | |

| ImageNet1k | DeiT-small | 77.32 | 78.25 | 77.69 |

| +ContraNorm | 77.80 | 79.04 | 78.67 |

4.3 Experiments on Graph Neural Networks

Setup. We implement ContraNorm as a simple normalization layer after each graph convolution of GCN, and evaluate it on fully supervised graph node classification task. For datasets, we choose two popular citation networks Cora (McCallum et al., 2000) and Citeseer (Giles et al., 1998), and Wikipedia article networks Chameleon and Squirrel (Rozemberczki et al., 2021). More information of datasets is deferred to Appendix D. We compare ContraNorm against a popular normalization layer PairNorm (Zhao & Akoglu, 2020) designed for preventing oversmoothing. We also take LayerNorm as a baseline by setting the scale . We follow data split setting in Kipf & Welling (2017) with train/validation/test splits of 60%, 20%, 20%, respectively. To keep fair in comparison, we fix the hidden dimension to 32, and dropout rate to 0.6 as reported in Zhao & Akoglu (2020). We choose the best of scale controller in range of for both PairNorm and ContraNorm. For PairNorm, we choose the best variant presented by Zhao & Akoglu (2020).

Results. As shown in Table 3, in shallow layers (e.g., two layer), the addition of ContraNorm reduces the accuracy of vanilla GCN by a small margin, while it helps prevent the performance from sharply deteriorating as the layer goes deeper. ContraNorm outperforms PairNorm and LayerNorm in the power of de-oversmoothing. Here, we show the staple in diluting oversmoothing is ContraNorm, and LayerNorm alone fails to prevent GCN from oversmoothing, even amplifying the negative effect on Cora with more than 16 layers.

| Dataset | Model | L=2 | L=4 | L=8 | L=16 | L=32 |

|---|---|---|---|---|---|---|

| Cora | Vanilla GCN | 81.75 0.51 | 72.61 2.42 | 17.71 6.89 | 20.71 8.54 | 19.69 9.54 |

| +LayerNorm | 79.96 0.73 | 77.45 0.67 | 39.09 4.68 | 7.79 0.00 | 7.79 0.00 | |

| +PairNorm | 75.32 1.05 | 72.64 2.67 | 71.86 3.31 | 54.11 9.49 | 36.62 2.73 | |

| +ContraNorm | 79.75 0.33 | 77.02 0.96 | 74.01 0.64 | 68.75 2.10 | 46.39 2.46 | |

| CiteSeer | Vanilla GCN | 69.18 0.34 | 55.01 4.36 | 19.65 0.00 | 19.65 0.00 | 19.65 0.00 |

| +LayerNorm | 63.27 1.15 | 60.91 0.76 | 33.74 6.15 | 19.65 0.00 | 19.65 0.00 | |

| +PairNorm | 61.59 1.35 | 53.01 2.19 | 55.76 4.45 | 44.21 1.73 | 36.68 2.55 | |

| +ContraNorm | 64.06 0.85 | 60.55 0.72 | 59.30 0.67 | 49.01 3.49 | 36.94 1.70 | |

| Chameleon | Vanilla GCN | 45.79 1.20 | 37.85 1.35 | 22.37 0.00 | 22.37 0.00 | 23.37 0.00 |

| +LayerNorm | 63.95 1.29 | 55.79 1.25 | 34.08 2.62 | 22.37 0.00 | 22.37 0.00 | |

| +PairNorm | 62.24 1.73 | 58.38 1.48 | 49.12 2.32 | 37.54 1.70 | 30.66 1.58 | |

| +ContraNorm | 64.78 1.68 | 58.73 1.12 | 48.99 1.52 | 40.92 1.74 | 35.44 3.16 | |

| Squirrel | Vanilla GCN | 29.47 0.96 | 19.31 0.00 | 19.31 0.00 | 19.31 0.00 | 19.31 0.00 |

| +LayerNorm | 43.04 1.25 | 29.64 5.50 | 19.63 0.45 | 19.96 0.44 | 19.40 0.19 | |

| +PairNorm | 43.86 0.41 | 40.25 0.55 | 36.03 1.43 | 29.55 2.19 | 29.05 0.91 | |

| +ContraNorm | 47.24 0.66 | 40.31 0.74 | 35.85 1.58 | 32.37 0.93 | 27.80 0.72 |

4.4 Ablation Study

Recalling Section 3.3, we improve ContraNorm with stop-gradient technique (SG) by masking the second term. For solving data representation instability, we apply layer normalization (LN) to the original version, while for the convenience of theoretical analysis, layer normalization is replaced by normalization (N). Here, we investigate the effect of these tricks and the results are shown in Table 4. Compared with the variant with only LayerNorm, ContraNorm with both stop gradient and layer normalization presents better performance. As for the two normalization methods, they are almost comparable to our methods, which verifies the applicability of our theoretical analysis.

In Appendix E.2, we further conduct an ablation study regarding the gains of ContraNorm while using different values of scale factor , and show that ContraNorm is robust in an appropriate range of .

| Variants | Datasets | Avg | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SG | LN | N | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | |

| ✓ | 58.80 | 93.12 | 89.60 | 88.35 | 88.97 | 84.81 | 84.67 | 91.47 | 68.23 | 83.11 | ||

| ✓ | ✓ | 59.82 | 93.00 | 89.64 | 88.37 | 88.92 | 84.72 | 84.58 | 91.58 | 68.95 | 83.29 | |

| ✓ | ✓ | 59.57 | 93.12 | 89.97 | 88.30 | 88.93 | 84.84 | 84.67 | 91.58 | 68.95 | 83.33 | |

5 Conclusion

In this paper, we point out the deficiencies of current metrics in characterizing over-smoothing, and highlight the importance of taking dimensional collapse as the entry point for oversmoothing analysis. Inspired by the uniformity loss in contrastive learning, we design an optimization-induced normalization layer ContraNorm. Our theoretical findings indicate ContraNorm mitigates dimensional collapse successfully by reducing variance and effective rank of representations. Experiments show that ContraNorm boosts the performance of Transformers and GNNs on various tasks. Our work provides a new perspective from contrastive learning on solving the oversmoothing problem, which helps motivate the following extensive research.

Acknowledgement

Yisen Wang is partially supported by the National Key R&D Program of China (2022ZD0160304), the National Natural Science Foundation of China (62006153), Open Research Projects of Zhejiang Lab (No. 2022RC0AB05), and Huawei Technologies Inc. Xiaojun Guo thanks for Hanqi Yan for her kind guidance on the implementation of BERT models. We thank anonymous reviewers for their constructive advice on this work.

References

- Ali et al. (2021) Alaaeldin Ali, Hugo Touvron, Mathilde Caron, Piotr Bojanowski, Matthijs Douze, Armand Joulin, Ivan Laptev, Natalia Neverova, Gabriel Synnaeve, Jakob Verbeek, et al. Xcit: Cross-covariance image transformers. In NeurIPS, 2021.

- Ba et al. (2016) Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- Bardes et al. (2022) Adrien Bardes, Jean Ponce, and Yann LeCun. Vicreg: Variance-invariance-covariance regularization for self-supervised learning. In ICLR, 2022.

- Chen et al. (2020) Ming Chen, Zhewei Wei, Zengfeng Huang, Bolin Ding, and Yaliang Li. Simple and deep graph convolutional networks. In ICML, 2020.

- Chen et al. (2022) Qi Chen, Yifei Wang, Yisen Wang, Jiansheng Yang, and Zhouchen Lin. Optimization-induced graph implicit nonlinear diffusion. In ICML, 2022.

- Chen & He (2021) Xinlei Chen and Kaiming He. Exploring simple siamese representation learning. In CVPR, 2021.

- Chien et al. (2022) Eli Chien, Wei-Cheng Chang, Cho-Jui Hsieh, Hsiang-Fu Yu, Jiong Zhang, Olgica Milenkovic, and Inderjit S Dhillon. Node feature extraction by self-supervised multi-scale neighborhood prediction. In ICLR, 2022.

- Devlin et al. (2018) Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- Dong et al. (2021) Yihe Dong, Jean-Baptiste Cordonnier, and Andreas Loukas. Attention is not all you need: Pure attention loses rank doubly exponentially with depth. In ICML, 2021.

- Dosovitskiy et al. (2021) Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby. An image is worth 16x16 words: Transformers for image recognition at scale. In ICLR, 2021.

- Ermolov et al. (2021) Aleksandr Ermolov, Aliaksandr Siarohin, Enver Sangineto, and Nicu Sebe. Whitening for self-supervised representation learning. In ICML, 2021.

- Ethayarajh (2019) Kawin Ethayarajh. How contextual are contextualized word representations? comparing the geometry of bert, elmo, and gpt-2 embeddings. arXiv preprint arXiv:1909.00512, 2019.

- Fulton (2000) William Fulton. Eigenvalues, invariant factors, highest weights, and schubert calculus. Bulletin of the American Mathematical Society, 37(3):209–249, 2000.

- Gao et al. (2019) Jun Gao, Di He, Xu Tan, Tao Qin, Liwei Wang, and Tieyan Liu. Representation degeneration problem in training natural language generation models. In ICLR, 2019.

- Garrido et al. (2022) Quentin Garrido, Yubei Chen, Adrien Bardes, Laurent Najman, and Yann Lecun. On the duality between contrastive and non-contrastive self-supervised learning. arXiv preprint arXiv:2206.02574, 2022.

- Giles et al. (1998) C Lee Giles, Kurt D Bollacker, and Steve Lawrence. Citeseer: An automatic citation indexing system. In DL, 1998.

- Gong et al. (2021) Chengyue Gong, Dilin Wang, Meng Li, Vikas Chandra, and Qiang Liu. Vision transformers with patch diversification. arXiv preprint arXiv:2104.12753, 2021.

- Grill et al. (2020) Jean-Bastien Grill, Florian Strub, Florent Altché, Corentin Tallec, Pierre Richemond, Elena Buchatskaya, Carl Doersch, Bernardo Avila Pires, Zhaohan Guo, Mohammad Gheshlaghi Azar, et al. Bootstrap your own latent-a new approach to self-supervised learning. In NeurIPS, 2020.

- He et al. (2020) Kaiming He, Haoqi Fan, Yuxin Wu, Saining Xie, and Ross Girshick. Momentum contrast for unsupervised visual representation learning. In CVPR, 2020.

- Hu et al. (2020) Weihua Hu, Matthias Fey, Marinka Zitnik, Yuxiao Dong, Hongyu Ren, Bowen Liu, Michele Catasta, and Jure Leskovec. Open graph benchmark: Datasets for machine learning on graphs. In NeurIPS, 2020.

- Hua et al. (2021) Tianyu Hua, Wenxiao Wang, Zihui Xue, Sucheng Ren, Yue Wang, and Hang Zhao. On feature decorrelation in self-supervised learning. In ICCV, 2021.

- Huang et al. (2018) Lei Huang, Dawei Yang, Bo Lang, and Jia Deng. Decorrelated batch normalization. In CVPR, 2018.

- Huang et al. (2019) Lei Huang, Yi Zhou, Fan Zhu, Li Liu, and Ling Shao. Iterative normalization: Beyond standardization towards efficient whitening. In CVPR, 2019.

- Ioffe & Szegedy (2015) Sergey Ioffe and Christian Szegedy. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In ICML, 2015.

- Jing et al. (2022) Li Jing, Pascal Vincent, Yann LeCun, and Yuandong Tian. Understanding dimensional collapse in contrastive self-supervised learning. In ICLR, 2022.

- Kingma & Ba (2014) Diederik P Kingma and Jimmy Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Kipf & Welling (2017) Thomas N. Kipf and Max Welling. Semi-supervised classification with graph convolutional networks. In ICLR, 2017.

- Lan et al. (2020) Zhenzhong Lan, Mingda Chen, Sebastian Goodman, Kevin Gimpel, Piyush Sharma, and Radu Soricut. Albert: A lite bert for self-supervised learning of language representations. In ICLR, 2020.

- Li et al. (2021) Guohao Li, Matthias Müller, Bernard Ghanem, and Vladlen Koltun. Training graph neural networks with 1000 layers. In ICML, 2021.

- Li et al. (2018) Qimai Li, Zhichao Han, and Xiao-Ming Wu. Deeper insights into graph convolutional networks for semi-supervised learning. In AAAI, 2018.

- Liu et al. (2019) Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. Roberta: A robustly optimized bert pretraining approach. arXiv preprint arXiv:1907.11692, 2019.

- Liu et al. (2021) Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In ICCV, 2021.

- Loshchilov & Hutter (2019) Ilya Loshchilov and Frank Hutter. Decoupled weight decay regularization. In ICLR, 2019.

- McCallum et al. (2000) Andrew Kachites McCallum, Kamal Nigam, Jason Rennie, and Kristie Seymore. Automating the construction of internet portals with machine learning. Information Retrieval, 3:127–163, 2000.

- Rajpurkar et al. (2018) Pranav Rajpurkar, Robin Jia, and Percy Liang. Know what you don’t know: Unanswerable questions for squad. arXiv preprint arXiv:1806.03822, 2018.

- Roy & Vetterli (2007) Olivier Roy and Martin Vetterli. The effective rank: A measure of effective dimensionality. In EUSIPCO, 2007.

- Rozemberczki et al. (2021) Benedek Rozemberczki, Carl Allen, and Rik Sarkar. Multi-scale attributed node embedding. Journal of Complex Networks, 9(2):cnab014, 2021.

- Russakovsky et al. (2015) Olga Russakovsky, Jia Deng, Hao Su, Jonathan Krause, Sanjeev Satheesh, Sean Ma, Zhiheng Huang, Andrej Karpathy, Aditya Khosla, Michael Bernstein, et al. Imagenet large scale visual recognition challenge. In IJCV, 2015.

- Senior et al. (2020) Andrew W Senior, Richard Evans, John Jumper, James Kirkpatrick, Laurent Sifre, Tim Green, Chongli Qin, Augustin Žídek, Alexander WR Nelson, Alex Bridgland, et al. Improved protein structure prediction using potentials from deep learning. Nature, 577(7792):706–710, 2020.

- Shi et al. (2022) Han Shi, Jiahui Gao, Hang Xu, Xiaodan Liang, Zhenguo Li, Lingpeng Kong, Stephen M. S. Lee, and James Kwok. Revisiting over-smoothing in bert from the perspective of graph. In ICLR, 2022.

- Siarohin et al. (2018) Aliaksandr Siarohin, Enver Sangineto, and Nicu Sebe. Whitening and coloring batch transform for gans. arXiv preprint arXiv:1806.00420, 2018.

- Strudel et al. (2021) Robin Strudel, Ricardo Garcia, Ivan Laptev, and Cordelia Schmid. Segmenter: Transformer for semantic segmentation. In ICCV, 2021.

- Tang et al. (2021) Yehui Tang, Kai Han, Chang Xu, An Xiao, Yiping Deng, Chao Xu, and Yunhe Wang. Augmented shortcuts for vision transformers. In NeurIPS, 2021.

- Tao et al. (2022) Chenxin Tao, Honghui Wang, Xizhou Zhu, Jiahua Dong, Shiji Song, Gao Huang, and Jifeng Dai. Exploring the equivalence of siamese self-supervised learning via a unified gradient framework. In CVPR, 2022.

- Touvron et al. (2021) Hugo Touvron, Matthieu Cord, Matthijs Douze, Francisco Massa, Alexandre Sablayrolles, and Hervé Jégou. Training data-efficient image transformers & distillation through attention. In ICML, 2021.

- Vaswani et al. (2017) Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In NeurIPS, 2017.

- Wang et al. (2019) Alex Wang, Amanpreet Singh, Julian Michael, Felix Hill, Omer Levy, and Samuel R. Bowman. GLUE: A multi-task benchmark and analysis platform for natural language understanding. In ICLR, 2019.

- Wang et al. (2022) Peihao Wang, Wenqing Zheng, Tianlong Chen, and Zhangyang Wang. Anti-oversmoothing in deep vision transformers via the fourier domain analysis: From theory to practice. arXiv preprint arXiv:2203.05962, 2022.

- Wang & Isola (2020) Tongzhou Wang and Phillip Isola. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In ICML, 2020.

- Wightman (2019) Ross Wightman. Pytorch image models. https://github.com/rwightman/pytorch-image-models, 2019.

- Xie et al. (2021) Xingyu Xie, Qiuhao Wang, Zenan Ling, Xia Li, Yisen Wang, Guangcan Liu, and Zhouchen Lin. Optimization induced equilibrium networks. arXiv preprint arXiv:2105.13228, 2021.

- Yan et al. (2022) Hanqi Yan, Lin Gui, Wenjie Li, and Yulan He. Addressing token uniformity in transformers via singular value transformation. In UAI, 2022.

- Yang et al. (2021) Yongyi Yang, Tang Liu, Yangkun Wang, Jinjing Zhou, Quan Gan, Zhewei Wei, Zheng Zhang, Zengfeng Huang, and David Wipf. Graph neural networks inspired by classical iterative algorithms. In ICML, 2021.

- Yang et al. (2022) Yongyi Yang, Zengfeng Huang, and David Wipf. Transformers from an optimization perspective. arXiv preprint arXiv:2205.13891, 2022.

- Ying et al. (2018) Rex Ying, Ruining He, Kaifeng Chen, Pong Eksombatchai, William L Hamilton, and Jure Leskovec. Graph convolutional neural networks for web-scale recommender systems. In ACM SIGKDD, 2018.

- Zbontar et al. (2021) Jure Zbontar, Li Jing, Ishan Misra, Yann LeCun, and Stéphane Deny. Barlow twins: Self-supervised learning via redundancy reduction. In ICML, 2021.

- Zhao & Akoglu (2020) Lingxiao Zhao and Leman Akoglu. Pairnorm: Tackling oversmoothing in gnns. In ICLR, 2020.

- Zhou et al. (2021) Daquan Zhou, Bingyi Kang, Xiaojie Jin, Linjie Yang, Xiaochen Lian, Zihang Jiang, Qibin Hou, and Jiashi Feng. Deepvit: Towards deeper vision transformer. arXiv preprint arXiv:2103.11886, 2021.

- Zhou et al. (2020) Jie Zhou, Ganqu Cui, Shengding Hu, Zhengyan Zhang, Cheng Yang, Zhiyuan Liu, Lifeng Wang, Changcheng Li, and Maosong Sun. Graph neural networks: A review of methods and applications. AI open, 1:57–81, 2020.

- Zhu et al. (2021) Meiqi Zhu, Xiao Wang, Chuan Shi, Houye Ji, and Peng Cui. Interpreting and unifying graph neural networks with an optimization framework. In WWW, 2021.

Appendix A More Details on Dual ContraNorm

A.1 Methodology

The most time-consuming operation of ContraNorm is the matrix multiplication. Given , where and denote the number of samples in a batch and feature size respectively, the time complexity of ContraNorm is , which is the same order as the self-attention operation in Transformer. Empirically, we report the training time of BERT with or without ContraNorm on GLUE tasks in Table 5. All experiments are conducted on a single NVIDIA GeForce RTX 3090. On average, we raise the performance of BERT on GLUE tasks from 82.59% to 83.54% (see Table 1) with less than 4 minutes overhead. We think the time overhead is acceptable considering the benefits it brings.

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m (/mm) | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|

| BERT | 110 | 851 | 74 | 6458 | 110 | 8186 | 2402 | 74 | 2283 |

| +ContraNorm | 125 | 983 | 80 | 7150 | 122 | 8941 | 2594 | 80s | 2509 |

However, the computation bottleneck will become more apparent when applying ContraNorm to large-scale graphs with . And we draw our inspirations from the duality between contrastive and non-contrastive learning (Garrido et al., 2022) to resolve this issue. It is shown that the uniformity loss used for deriving ContraNorm is equivalent (under limited assumptions) to the feature decorrelation loss adopted in non-contrastive learning. As the computation complexity of the decorrelation loss is that has a linear dependence on . For the sake of consistency with the original ContraNorm, we choose the dual version of the InfoNCE uniformity loss, named VICReg-exp proposed in Garrido et al. (2022), and apply it to the graph features , leading to the following VICReg-exp decorrelation for ,

| (11) |

where denotes the -th column vector (i.e., a feature) of . We can observe its close resemblance to the InfoNCE uniformity loss in Eq. (2). Following the same procedure as in Section 3.3, we can drive the gradient descent rule of the decorrelation loss and arrive at the following normalization layer named Dual ContraNorm (ContraNorm-D):

| (12) |

which is also very close in form to the ContraNorm layer in Eq. (9). We can see that ContraNorm-D only involves computing the feature correlation matrix of complexity . The linear dependence on the node number makes it very suitable for large-scale graphs. We note that other forms of feature decorrelation objectives in non-contrastive learning (Zbontar et al., 2021; Bardes et al., 2022) can also be applied, and we leave a more systematic study of their effects on neural network dynamics to future work.

A.2 BERT Experiments on GLUE Benchmark

To verify whether it performs equally well as ContraNorm, we conduct experiments on the validation set of GLUE tasks. The learning rate of BERT with ContraNorm-D is set to and other experiment setups are the same in Section 4.1. As shown from Table 6, for each task the performance of BERT with ContraNorm-D surpasses the vanilla BERT, and the average performance is raised from to . The results imply effectiveness of this modified version of ContraNorm, which can also be explained with the relationship between Gram matrix ( ) and covariance matrix ( ) (Ali et al., 2021).

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| BERT | 55.28 | 92.89 | 88.96 | 88.24 | 88.61 | 84.65 | 84.61 | 91.51 | 68.59 | 82.59 |

| + ContraNorm-D | 62.08 | 93.69 | 91.78 | 88.36 | 89.59 | 85.24 | 85.19 | 91.95 | 70.04 | 84.21 |

A.3 GNN Experiments on Large-scale OGB Benchmark

Setup. In order to assess the efficacy of ContraNorm-D in enhancing existing GNN techniques, we conduct tests on the ogbn-arxiv (Hu et al., 2020) benchmark, which consists of a large number of nodes (169,343) and edges (1,166,243), rendering ContraNorm unsuitable for scalability. We choose the high-ranking GIANT (Chien et al., 2022) method from the OGB leaderboard 222See https://ogb.stanford.edu/docs/leader_nodeprop/#ogbn-arxiv. as the backbone, and combine it with RevGAT Li et al. (2021) and self-knowledge distillation (selfKD) as suggested in the original paper. We follow the experiment settings in Chien et al. (2022), but reduce the optimization step for each layer of the hierarchical label trees to 1,000 (as opposed to 2,000 in the original method). For hyperparameters in ContraNorm-D, we set the scale to and the temperature to . We insert a ContraNorm-D layer after the graph convolution operation. Our implement is based on the GIANT repository 333See https://github.com/elichienxD/deep_gcns_torch..

Results. As shown in Table 7, despite the various tricks that have been applied to the GIANT* setting, the use of our ContraNorm-D variant can still boost its test performance from 76.15% to 76.39%. At the time of paper revision (April 17, 2023), we find that this gain is significant enough to improve the rank of GIANT* from the 6th place to the 2nd place, and achieves SOTA results on the ogbn-arxiv dataset at this parameter scale, since the 1st ranking method GLEM+RevGAT has more than 100x parameters than our model. This finding indicates that ContraNorm-D can serve as a plug-and-play component and boost existing state-of-the-art GNNs on large-scale graph datasets.

| Method | GIANT* | GIANT* + ContraNorm-D | GLEM+RevGAT (SOTA) |

|---|---|---|---|

| Test Accuracy | 76.15 | 76.39 | 76.94 |

| Params | 1.3M | 1.3M | 140.4M |

| Peak Memory | 5.11G | 6.09G | - |

| Average epoch time | 0.44s | 0.61s | - |

| Rank on OGB leaderboard | 6 | 2 | 1 |

Appendix B Additional Discussions and Ablating Experiments

In this section, given more space, we elaborate more details on the choice of our architectural designs and evaluate their effects on real-world experiments.

B.1 Explaining and Evaluating The Stop-Gradient Operation in Eq. (7)

In this section, we will illustrate the stop-gradient operation in Eq. (7) by using the framework proposed by Tao et al. (2022). The original update form should be Eq. (6):

We take SimCLR (Chen et al., 2020) as the contrastive learning framework. Tao et al. (2022) have studied the stop-gradient form of SimCLR and illustrated that the stop-gradient operation will make a similar performance with the original one. Based on this, we will elaborate on how is removed in the following part. In fact, we can directly illustrate how the second term in Eq. (4) can be omitted.

In SimCLR, by denoting the normalized features from the online branch as , the normalized features from the target branch (although the two branches have no differences in SimCLR) are and the SimCLR loss can be represented as

where and are positive pairs and is the temperature. Then the gradient of on can be calculated as

where

is the softmax results over similarities between and other samples, and

is computed over similarities between sample and its contrastive samples . We can see that the first term of comes from the part which takes as the anchor, and the second term comes from the part which takes the other feature as the anchor. Tao et al. (2022) proposes to stop the second term and verifies that stopping the second gradient term will not affect the performance empirically.

Note that the term in the gradient is from the alignment loss. So the gradient of the uniformity loss on can be written as

| (13) |

It is noteworthy that by writing , Eq. (4) shares the same form as Eq. (13). By adopting the stop-gradient method just as Tao et al. (2022) takes, we remove the second term in Eq. (4), which is just the term in Eq. (6).

Empirically, we draw the singular value distribution of embeddings for vanilla BERT and +ContraNorm with only term or term on RTE task. As shown in Figure 4, compared with vanilla BERT with a long-tail distribution (dimensional collapse), adding ContraNorm with and both reduce the number of insignificant (nearly zero) singular values and make a more balanced distribution. The similar singular value distributions mean that they play a similar role in alleviating dimensional collapse.

B.2 Comparing ConraNorm to Uniformity Regularization

We conduct a comparative experiment on BERT model with straightforwardly applied uniformity loss and our proposed ContraNorm. Specifically, we add the uniformity loss () to the classification loss (MSELoss, CrossEntropyLoss or BCELoss depending on the task type, denoted by ). Formally, the final loss is written as

where and is the number of samples in the batch. We tune in the range of and choose the best one in terms of average performance. Other hyperparameters are kept the same as settings of ContraNorm. The results are shown in the Table 8

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| BERT-base | 55.28 | 92.89 | 88.96 | 88.24 | 88.61 | 84.65 | 84.61 | 91.51 | 68.59 | 82.59 |

| BERT + Uniformity Loss | 58.08 | 93.00 | 89.46 | 88.14 | 88.69 | 84.45 | 84.43 | 91.60 | 68.59 | 82.94 |

| BERT + ContraNorm | 58.83 | 93.12 | 89.49 | 88.30 | 88.66 | 84.87 | 84.66 | 91.78 | 70.76 | 83.39 |

We can see that +ContraNorm gets the best score in 8 / 9 tasks, while +Uniformity loss reaches the best only in 1 / 9 tasks. ContraNorm also has the highest average score among all tasks. The reason is that updating the total loss is a combined process for objectives of correct classification and uniform distribution. Thus, a lower may be only caused by a lower classification loss while uniformity loss is kept the same, which cannot ensure a more uniform distribution of representations. In contrast, ContraNorm acts directly on representations in each layer and enforces the uniform property.

In fact, there are many methods in GNNs such as Yang et al. (2021) and Zhu et al. (2021), which design the propagation mechanism under the guidance of the corresponding objective. The well-designed propagation mechanism is shown to be the most fundamental part of GNNs (Zhu et al., 2021). Instead of directly using the loss function, these methods transfer the loss function into a specific propagation method and achieve superior performance, which indicates that changing the network may be more effective than directly adding the objective to the loss function.

B.3 Analysis of the plugging position of ContraNorm

We explore two ways to integrate ContraNorm into BERT and ALBERT. Concretely, consider the update of in -th block of Transformers

| (14) | |||

| (15) | |||

| (16) |

where is the input tensor.

We choose positions 1) between Eq. (14) and Eq. (15), named as before-residual; 2) between Eq. (15) and Eq. (16), named as after-residual. The performance comparison between the two positions on GLUE datasets is shown in Table 9. It is observed that putting ContraNorm after the residual connection slightly outperforms that before residual. Therefore, we choose the after-residual variant as our basic ContraNorm.

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| BERT-base | 55.28 | 92.89 | 88.96 | 88.24 | 88.61 | 84.65 | 84.61 | 91.51 | 68.59 | 82.59 |

| before-residual | 59.57 | 93.12 | 89.97 | 88.30 | 88.93 | 84.84 | 84.67 | 91.58 | 68.95 | 83.33 |

| after-residual | 58.83 | 93.12 | 89.49 | 88.30 | 88.66 | 84.87 | 84.66 | 91.78 | 70.76 | 83.39 |

Appendix C More Experimental Details

Appendix D Introduction of graph datasets

Here, we introduce the properties of the graph datasets discussed in this work.

Citation Network. Cora, CiteSeer are three popular citation graph datasets. In these graphs, nodes represent papers and edges correspond to the citation relationship between two papers. Nodes are classified according to academic topics.

Wikipedia Network. Chameleon and Squirrel are Wikipedia page networks on specific topics, where nodes represent web pages and edges are the mutual links between them. Node features are the bag-of-words representation of informative nouns. The nodes are classified into four categories according to the number of the average monthly traffic of the page.

Statics of datasets are shown in Table 10.

| Category | Dataset | # Nodes | # Edges | # Features | Degree | # Classes |

|---|---|---|---|---|---|---|

| Citation | Cora | 2708 | 5278 | 1433 | 4.90 | 7 |

| CiteSeer | 3327 | 4552 | 3703 | 3.77 | 6 | |

| Wikipedia | Chameleon | 2277 | 36101 | 500 | 5.0 | 6 |

| Squirrel | 5201 | 217073 | 2089 | 154.0 | 4 |

D.1 Metrics calculating feature and attention map cosine similarity

Following Wang et al. (2022), given feature map and attention map of the -th head with batch size and hidden embedding size , the feature cosine similarity and the attention cosine similarity is computed as

where denotes the -th row of , is the -th column of , and is the number of attention heads.

Appendix E Additional Experimental Results

E.1 Results on test set of GLUE datasets

We submit our trained model to GLUE benchmark leaderboard and the resultant feedback of performance is shown in Table 11.

| Dataset | COLA | SST-2 | MRPC | QQP | STS-B | MNLI-m | MNLI-mm | QNLI | RTE | Avg |

|---|---|---|---|---|---|---|---|---|---|---|

| BERT-base | 53.3 | 92.8 | 86.8 | 71.2 | 82.8 | 84.4 | 83.2 | 90.9 | 66.3 | 79.1 |

| BERT + ContraNorm | 54.5 | 93.0 | 87.9 | 71.4 | 83.0 | 84.5 | 83.4 | 91.0 | 66.9 | 79.5 |

E.2 More results of the Ablation Study

We conduct experiments using BERT+ContraNorm with varying scaling factor on GLUE datasets. For each dataset, we vary the normalization scale around the best choice, and other parameters remain consistent. As shown in Figure 5, the results illustrate that model with ContraNorm is robust in an appropriate range of normalization scaling factor.

Appendix F Omitted Proofs

Here, we present the complete proof for Propositions in Section 3.4.

First, we give two lemmas that will be useful for the proof.

Lemma F.1.

Denote . For any update form and , if the eigenvalues of are all not larger than zero, then we have .

Proof.

We denote for the eigen-decomposition of , where is the orthogonal basis and all . Note that . Therefore,

| (17) | ||||

Similarly, we have

| (18) |

Therefore, we have

| (19) |

Thus, if the eigenvalues of are all not larger than zero, is semi-negative definite, then we have

| (20) |

which implies that . Therefore, . ∎

The second lemma is from the Eq. (13) in Fulton (2000).

Lemma F.2.

Let be symmetric matrices and Suppose the eigenvalues of are , the eigenvalues of are , and the eigenvalues of are . Then we have the inequality

| (21) |

We can now start to prove Proposition 1.

Proposition 1.

Let . For attention matrix , let be the smallest eigenvalue of . For the ContraNorm update , we have . Especially, if , we have .

Proof.

We denote . Then,

| (22) | ||||

Let be the eigenvalues of . Since has a eigenvalue of 0 and eigenvalues of 1, is a semi-definite positive matrix. Thus, is also a semi-definite positive matrix. Notice that the largest eigenvalue of is and the largest eigenvalue of is . Therefore, by Lemma 21, the largest eigenvalue of is less or equal to . Let , then the largest eigenvalue of is less or equal to . By Lemma F.1, we have .

Moreover, if , then , leading to . ∎

Remark. Now we discuss some sufficient conditions for If is a diagonally dominant matrix, then we will have the result Denote , and , where if and if , then we have

| (23) |

If we have for any , then we will have

| (24) |

Notice that , we have

| (25) |

Since is an attention matrix, we also have

| (26) |

Therefore, we have

| (27) | ||||

which indicates that is a diagonally dominated matrix, thus Therefore, for any is just a sufficient condition. A special case for this is .

We now move on to the proof of Proposition 2. We first give a lemma on the property of effective rank.

Lemma F.3.

Let the eigenvalues of be and the eigenvalues of be . If is increasing as increases, then we have

This lemma can be proved just by using the definition of the effective rank.

Proposition 2.

Consider the update form

| (28) |

let be the largest singular value of . For satisfying , we have .

Proof.

We have

| (29) |

Therefore,

| (30) |

Suppose are the eigenvalues of , then has the same eigenvectors as , and its eigenvalues are . Since satisfies , we have . Therefore, is increasing as increases, resulting the fact that by using Lemma F.3. ∎