Continuous-Time Fixed-Lag Smoothing for

LiDAR-Inertial-Camera SLAM

Abstract

Localization and mapping with heterogeneous multi-sensor fusion have been prevalent in recent years. To adequately fuse multi-modal sensor measurements received at different time instants and different frequencies, we estimate the continuous-time trajectory by fixed-lag smoothing within a factor-graph optimization framework. With the continuous-time formulation, we can query poses at any time instants corresponding to the sensor measurements. To bound the computation complexity of the continuous-time fixed-lag smoother, we maintain temporal and keyframe sliding windows with constant size, and probabilistically marginalize out control points of the trajectory and other states, which allows preserving prior information for future sliding-window optimization. Based on continuous-time fixed-lag smoothing, we design tightly-coupled multi-modal SLAM algorithms with a variety of sensor combinations, like the LiDAR-inertial and LiDAR-inertial-camera SLAM systems, in which online timeoffset calibration is also naturally supported. More importantly, benefiting from the marginalization and our derived analytical Jacobians for optimization, the proposed continuous-time SLAM systems can achieve real-time performance regardless of the high complexity of continuous-time formulation. The proposed multi-modal SLAM systems have been widely evaluated on three public datasets and self-collect datasets. The results demonstrate that the proposed continuous-time SLAM systems can achieve high-accuracy pose estimations and outperform existing state-of-the-art methods. To benefit the research community, we will open source our code at https://github.com/APRIL-ZJU/clic.

Index Terms:

Continuous-time trajectory, Fixed-Lag Smoothing, SLAM, Multi-sensor Fusion.I Introduction

Simultaneous Localization And Mapping (SLAM) are fundamental for mobile robots to navigate autonomously in various applications. Especially, in scenarios where external signals are unavailable, e.g., the GPS-denied environment, localization and mapping with only onboard sensors can be a practical solution. Plenty of sensors have been used for SLAM purposes, including the proprioceptive sensors and exteroceptive sensors. The common proprioceptive sensors measure the state of robot, e.g., wheel encoders, Inertial Measurement Unit (IMU), and magnetometer, etc. While the exteroceptive sensors perceive the surrounding environment information, for example, radar, sonar, LiDAR, camera, barometer, altimeter, etc. Among all of the sensors, camera, IMU and LiDAR are three of the most ubiquitous sensors leveraged in SLAM algorithms [1, 2, 3, 4, 5, 6].

Recently, LiDAR-Inertial-Camera (LIC) based localization and mapping systems [4, 6, 7] have attracted significant attention, due to their versatility, high accuracy, and robustness. By combining the three sensor modalities, LIC systems have more applicable scenarios than using only the component sensors. Besides, since a single LiDAR only has a limited field of view (FOV), multiple LiDARs fusion [8] is a rational choice for highly-accurate localization and efficient mapping.

Multi-modal sensor measurements are assigned with timestamps by individual sensors, and timeoffsets generally exist among different sensors [9, 10] without hardware synchronization. Even the timeoffsets between sensors can be removed, measurements from various sensor modalities are usually received at different rates and different time instants. In order to fuse the heterogeneous sensors, accurate alignment of the asynchronous sensors is the prerequisite. Without special hardware support for synchronization, timeoffset can also be online calibrated in estimators, such as online timeoffset estimation in Visual-Inertial(VI) system [9, 11, 12, 13] and LIC system [4]. Further efforts are required to deal with different sensor frequencies and sampling time instants. For example, sensors like IMU and LiDAR consecutively provide abundant measurements at high frequencies, and it is challenging to accurately align thousands of points in LiDAR scans to the asynchronous IMU measurements. Some approaches [10, 6, 14] linearly interpolate the integrated discrete-time IMU poses at the sampling time instants of LiDAR points, in order to compensate for motion distortion in LiDAR scans.

Another alternative way is to parameterize the trajectory in a continuous-time representation [15, 16, 17], which allows pose querying at any time instants without interpolations. Formulating the trajectory in continuous-time with B-spline gains popularity in calibration tasks [18, 19], visual odometry [20], as well as LiDAR odometry [17, 21], due to its versatility and convenience in aligning asynchronous sensor measurements. However, there are two main challenges preventing continuous-time trajectory from being widely deployed: (i) Real-time performance: In conventional discrete-time state estimation, the pose in the residual is just the state to be estimated. However, in continuous-time state estimation, the pose is computed from multiple control points on the Lie group, and the state to be estimated in the residual switches to multiple control points (depend on the B-spline order). This fact not only increases the computation load but also generally increases the complexity of the Jacobian computation. Existing continuous-time odometry methods [17] rarely achieve real-time performance. (ii) Fixed-lag smoothing: Discrete-time methods typically estimate states by fixed-lag smoothing [22, 23, 3] and preserve information of the old measurements/states with marginalization, however, continuous-time methods like VIO [24] or LIO [21] rarely consider how to preserve information of old measurements/states. In fact, some of informative measurements/states are directly discarded without leaving prior information for the estimation of remaining states. There is little work about marginalization for continuous-time trajectory optimization, probably because of the high complexity of optimizing continuous-time trajectory, and the sliding strategy and marginalization strategy are significantly different from discrete-time methods.

In general, although B-spline based continuous-time trajectory optimization methods have been studied in many existing research works [24, 21, 17], the continuous-time fixed-lag smoothing within a sliding window and with probabilistic state marginalization is rarely investigated in existing literature. To the best of our knowledge, this paper is among the first to utilize continuous-time fixed-lag smoothing with probabilistic marginalization for multi-sensor fusion, and even better, we can achieve real-time performance. The contributions can be summarized as follows:

-

•

We adopt continuous-time fixed-lag smoothing method for multi-sensor fusion in a factor-graph optimization framework. Specifically, we estimate B-spline based continuous-time trajectory within a constant size of sliding window by fusing asynchronous heterogeneous sensor measurements published at various frequencies and different time instants. To attain a bounded computation complexity, old control points of the continuous-time trajectory are strategically and probabilistically marginalized out of the sliding window.

-

•

Powered by continuous-time fixed-lag smoothing, we design some LiDAR-Inertial (LI) SLAM and LIC SLAM systems at a variety of sensor combinations (even with multiple LiDARs), and derive the analytical Jacobians for efficient factor-graph optimization. Benefiting from easy accessibility of continuous-time trajectory derivatives, timeoffsets between different sensors can be online calibrated. With careful and strategic implementation, our proposed continuous-time LI SLAM and LIC SLAM systems can achieve real-time performance.

-

•

The proposed continuous-time LI SLAM and LIC SLAM systems are extensively evaluated on three publicly available datasets and self-collected datasets. The experimental results show that the proposed LI system has competitive accuracy and the proposed LIC system outperforms several state-of-the-art methods and works well in degenerated sequences.

The remainder of the paper is organized as follows: Sec. II reviews the relevant literature. Then with the continuous-time trajectory preliminary provided in Sec. III, we present continuous-time fixed-lag smoothing method leveraged in Sec. IV. In Sec. V, we further detail multi-sensor fusion SLAM systems enabled by continuous-time fixed-lag smoothing with different sensor configurations, such as LiDAR-inertial systems and LiDAR-inertial-camera systems. Sec. VI demonstrates the performance of different sensor combinations and discloses the runtime of the different systems. Finally, Sec. VII concludes the paper and discusses future work.

II Related Works

| Paper | Year | IMU1 | Camera2 | LiDAR3 | Method4 |

| V-LOAM [25] | 2018-JFR | I1 | C1, C3 | L1 | F1 |

| Wang. [26] | 2019-IROS | I2 | C1 | L1 | F2 |

| Khattak. [27] | 2019-ICUAS | ROVIO [28] | L1 | F2 | |

| Lowe. [16] | 2018-RAL | I3 | C1, C4 | L5 | F4 |

| LIC-Fusion [4] | 2019-IROS | I1 | C1 | L1 | MSCKF |

| LIC-Fusion2.0 [10] | 2020-IROS | I1 | C1 | L1, L2 | MSCKF |

| LVI-SAM [6] | 2021-ICRA | I2 | C1, C3 | L1 | F3 |

| VILENS [29] | 2021-RAL | I2 | C1, C3 | L2 | F4 |

| VILENS [30] | 2021-Arxiv | I2 | C1, C3 | L2, L3 | F4 |

| R2live [31] | 2021-RAL | I1, I2 | C1 | L1 | ESIKF, F4 |

| R3live [7] | 2021-Arxiv | I1 | C2, C3 | L1 | ESIKF |

| Super Odom. [32] | 2021-IROS | I2 | C1, C3 | L4 | F3 |

| Lvio-Fusion [33] | 2021-IROS | I2 | C1 | L1 | F4 |

| 1 I1=Integration; I2=Preintegration; I3=Raw measurements. | |||||

| 2 C1=Indirect; C2=Direct; C3=Depth From LiDAR; C4=Depth From Surfel. | |||||

| 3 L1=LOAM Feature; L2=Tracked Plane/Line; L3=PCA based Feature; | |||||

| L4=PCA based Feature; L5=Surfel. | |||||

| 4 See Fig. 1 for details. | |||||

There is a rich body of literature on multi-sensor fusion for localization and mapping. Instead of providing a comprehensive literature review of SLAM with multi-sensor fusion, we extensively review the existing LiDAR-Inertial-Camera systems, multi-LiDAR systems and continuous-time trajectory based SLAM systems, which are most relevant to this paper.

II-A Multi-sensor Fusion for SLAM

II-A1 LiDAR-Inertial-Camera Fusion for SLAM

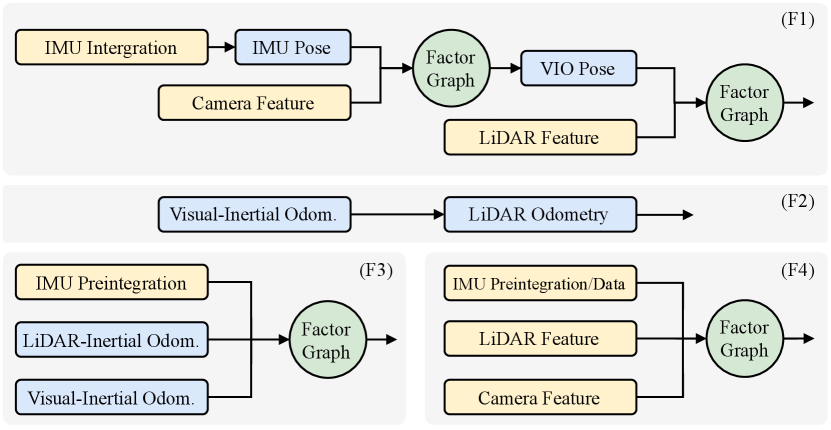

State estimation in LIC systems has been achieved either by graph-based optimization [25, 26, 16, 6, 32, 29, 30, 33] or filter-based methods [4, 10, 7], while work [31] also combines both the graph optimization and filter for localization and mapping. We summarize typical LIC systems in Tab. I, which depicts the processing methods of raw sensory measurements as well as the state estimation framework. Fig. 1 summarizes four typical pipelines using factor-graph optimization, including the loosely-coupled ones (F1, F2, and F3), as well as the tightly-coupled methods (F4). V-LOAM [2] is a loosely-coupled method, comprised of IMU integration, vision and integrated IMU poses fusion with factor-graph optimization, as well as LiDAR and VIO poses fusion with factor-graph optimization (see pipeline F1 in Fig. 1). All the three submodules can output pose estimation at different frequencies, and the estimated poses are refined step by step within the submodules. Some works [26, 27], utilizing visual-inertial odometry to provide initial pose guess for LiDAR point cloud registration (see F2 of Fig. 1), adopt another way to loosely couple sensor measurements. Some other works [6, 32] get pose estimation via IMU preintegration, visual-inertial odometry and LiDAR-inertial odometry separately, then fuse the three types of pose estimation in a pose graph, which can be solved by factor-graph optimization. In contrast to loosely-coupled methods, which somehow fuse intermediate pose estimation from sensor measurements, tightly coupled approaches [29, 30, 33, 16] directly integrate sensor data, estimating system state with IMU data (preintegration/raw measurements), image features and LiDAR features (illustrated in F4 of Fig. 1).

IMU measurement: Regarding the measurement processing for different sensor modalities, filter-based methods usually use IMU measurements to propagate system states, and optimization-based methods widely adopt preintegration [34] technique which integrates high-rate IMU data into a low-rate preintegration factor; continuous-time trajectory based methods naturally couple raw IMU measurements in the batch optimization.

Visual measurement: Visual algorithms could be categorized into the indirect and the direct upon the visual residual models [35]. Indirect methods extract and track features from images and construct geometric constraints in the estimation, while direct methods directly use the actual image values to formulate photometric error, allowing for a more finely grained geometry representation. Both methods rely on accurate depth estimation of landmarks, which can be enhanced by the highly accurate clouds of LiDAR. Specifically, Lowe et al.[16] set the depth of a feature to the nearest surfel along the feature ray, and set the depth uncertainty to the surfel’s covariance in the ray direction. Other methods [25, 6, 30, 32] first project the LiDAR cloud onto the spherical coordinates of the image, then associate the image feature with the nearest local planar patch that is formed by several nearest LiDAR points, and finally set the feature depth to the depth of the intersection between the ray (from the camera center to the feature) and the plane.

LiDAR measurement: Line and plane features based on local patch’s smoothness firstly defined in LOAM algorithm [2] are popular in data association of LiDAR clouds, followed by several methods [25, 26, 6, 4]. Zuo et al. [10] extend to track consecutive plane feature and VILENS [29, 30] tracks both line and plane feature.

II-A2 Multi-LiDAR Fusion for SLAM

Multi-LiDAR odometry LOCUS [8] and its following [36] combine motion-corrected scans from each LiDAR into a single point cloud, which is registrated by Generalized Iterative Closest Point (GICP). MILIOM [37] chooses to extract features from raw organized scan first and merges feature cloud of each LiDAR instead of merging the full scan, the feature cloud is registrated through feature-to-map matching method.

II-B SLAM with Continuous-Time Trajectory

Continuous-time trajectory representation includes linear interpolation, wavelets, Gaussian process and splines, in this paper we focus on B-spine based representation as explained in Sec. III-A. Pioneering work on solving B-spline based continuous-time SLAM problem is first systematically derived in [38], where the authors propose to represent the states as a weighted sum of continuous temporal basis function and illustrate its application case in the extrinsic calibration between IMU and camera. Thereafter, B-spline based continuous-time trajectory formulation has been widely applied to SLAM-relevant applications, such as event camera odometry [20], and LiDAR-inertial odometry [17, 21], multi-camera SLAM system [24] and LIC fusion [16]. Lowe et al. [16] tightly couple LIC measurements to estimate state in continuous-time trajectory, however they assume no timeoffset between sensors which does not hold well in real world application, and prior information is discarded without any explanation. Our previous work [17] proposes a continuous-time based method for LI system, which manages the measurements within a local window and presents a two-stage loop closure strategy to obtain global-consistent trajectory. Some IMU measurements older than current scan are included in local window rather than applying marginalization technique to retain information about the old measurements, and in addition, it uses automatic derivation to solve the NLS problem. Due to these two aspects, the system is not able to run in real time.

In this paper, we propose a continuous-time based method that supports fusing measurements from LiDAR, IMU and camera, which is very easy to expand to fuse more other sensors to improve the accuracy of localization and mapping, such as fusing GPS in outdoor cases and RFID [39] in indoor cases.

III Preliminary on Continuous-time Trajectory

This section presents in detail continuous-time trajectory representation based on B-spline and its time derivatives.

III-A B-spline Trajectory Representation

We employ B-spline to parameterize continuous-time trajectory as it has the good property of locality and closed-form analytic time derivatives, smoothness for a spline of order (degree ) [40]. Specifically, we parameterize the continuous-time 6-DoF trajectory with uniform cumulative B-splines in a split representation format [41]. The translation of order over time is controlled by the temporally uniformly distributed translational control points , , , , and the matrix format [42] could be written as:

| (1) | |||

with difference vectors . The cumulative spline matrix of uniform B-spline only depends on the B-spline order. We further define thus Eq. (1) can be written as

| (2) |

To parameterize the 3D rotation in , we adopt the cumulative B-splines in Lie groups with the following expression over time :

| (3) |

where are the control points for rotation. The difference vector between two rotations is defined as and where omitting the to simplify notation, Eq. (3) can be written in the following concise equation:

| (4) |

In this paper, we select cubic (degree = 3) B-spline and the corresponding cumulative spline matrix is

| (5) |

III-B Time Derivatives of B-spline

As mentioned before, B-spline provides closed-form analytic derivatives, enabling the proposed system to fuse high-frequency IMU measurements seamlessly. Continuous-time trajectory of IMU in global frame is denoted as , and its time derivatives can be derived:

| (6) | ||||

| (7) | ||||

| (8) |

where . Naturally, it’s straightforward to compute the linear accelerations and angular velocities in local IMU frame:

| (9) | ||||

| (10) |

where denotes the gravity vector in global frame.

In the following section, we model the continuous-time trajectory of IMU sensor in frame, termed as . The global frame is determined by the first IMU measurement after system initialization by aligning its z-axis with the gravity direction, and the gravity can be denoted as in . We assume the extrinsic rigid transformations between sensors are precalibrated [43], and we can get the camera trajectory , and LiDAR trajectory handily by transferring IMU trajectory with the known extrinsic transformations.

IV Continuous-Time Fixed-Lag Smoothing

| Symbols | Meaning |

|---|---|

| control points of B-splines in | |

| involved orientation/position control points at | |

| biases of temporal sliding window in | |

| timeoffsets of LiDAR, IMU and camera, respectively | |

| timestamp of trajectory or sensor measurements | |

| corrected timestamp of sensor measurements |

This section presents the continuous-time fixed-lag smoothing applied in factor-graph optimization, which is the core of estimator design. Before diving into details, we introduce some notations used in this paper which is summarized in Tab. II.

IV-A Factor-Graph Optimization

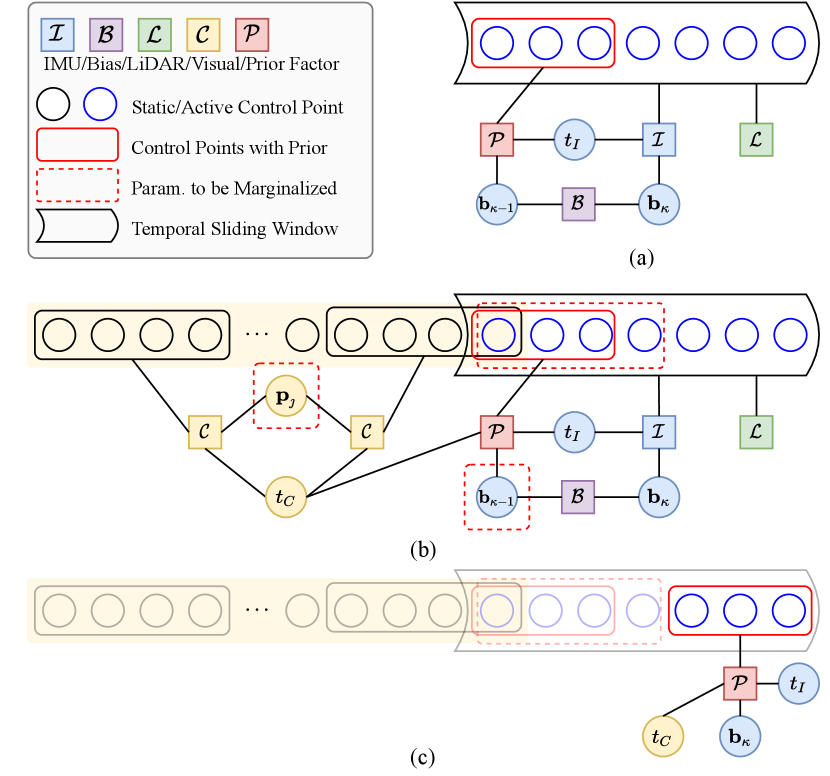

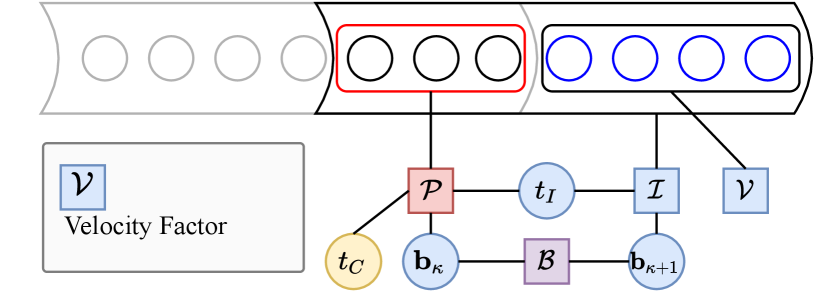

We fuse heterogeneous IMU, LiDAR, and camera measurements in a factor graph optimization framework with the continuous-time trajectory formulation. Figure. 2 (a) and (b) show the factor graphs of the LI system and LIC system, respectively. Here we only illustrate the factor graph for LIC system, and it is straightforward to apply to LI system with minor adaptions. Specifically, our estimator in the temporal sliding window (detailed in Sec. V-A2) over aims to estimate the following states:

| (11) | ||||

which include active control points of B-splines , the IMU biases , the parameters of visual landmarks , and the temporal offset between LiDAR and IMU or camera . Notably, LiDAR is taken as the base sensor in our multi-sensor fusion system, thus we need to align IMU and camera timestamps to LiDAR. Sec. V-C2 provides more details about the timeoffset estimation.

The factor graph needed to be solved consists of LiDAR factors , IMU factors , one bias factor , visual factors , and one prior factor induced from marginalization, the details of factors are provided in the following sections. With the LiDAR-inertial measurements during and tracked visual features in the keyframe sliding window (detailed in Sec. V-B2), we formulate the following Nonlinear Least-Squares (NLS) problem:

| (12) |

and solve the NLS problem by iterative optimization methods. At each iteration, the system is linearized at current estimate , and we define its error state as , where is the true state. In practice we adopt the Levenberg-Marquardt algorithm from the Ceres Solver [44] library and employ analytical derivatives to speed up the NLS problem-solving.

IV-B LiDAR Factor

A LiDAR point measurement with noise , measured at time , is associated with a 3D plane in closest point parameterization [14, 45], , where and denote the distance of the plane to origin and unit normal vector, respectively. We can transform LiDAR point to global frame by

| (13) |

and the point-to-plane distance is given by:

| (14) | ||||

where is the measure time of LiDAR point, is Jacobian matrix w.r.t error state (see our supplementary file for detail). is assumed to be under independent and identically distributed (i.i.d.) white Gaussian noise in our experiments. is the Jacobian with respect to which can be easily computed.

IV-C IMU Factor and Bias Factor

Considering raw IMU measurements at with angular velocity and linear acceleration , and the true angular velocity and linear acceleration are denoted by and , respectively. The following equations hold:

| (15) | |||

| (16) | |||

| (17) |

where are zero-mean Gaussian white noise. The gyroscope bias and accelerometer bias are modeled as random walks, driving by the white Gaussian noises and , respectively. With the timeoffset of IMU between LiDAR, we have the following IMU factor

| (18) | ||||

and bias factor

| (19) | ||||

where is the corrected IMU timestamp, and are Jacobian matrices with respect to states (see supplementary file), and are Jacobian matrices with respect to noise. By substituting the derivative of continuous-time trajectory (Eq. (9)) at time instant into Eq. (LABEL:eq:imu_factor), we can optimize the continuous-time trajectory by raw IMU measurements directly, avoiding the efforts of IMU propagation or pre-integration.

IV-D Visual Factor

A landmark observed in its anchor keyframe at timestamp and observed again in frame at timestamp , can be given the initialized inverse depth as through triangulation (as Sec. V-B1). Let denotes 2D raw observation in with noise , the estimated position of landmark in frame is

| (20) |

where denotes the back projection which transforms a pixel to the normalized image plane. are corrected timestamps to remove timeoffsets. The corresponding visual factor based on reprojection error is defined as:

| (21) | ||||

where denotes a vector with its -th element to be 1 and the others to be 0, and describes 2D raw observation in . denotes Jacobian matrix (see supplementary file).

IV-E Marginalization

To bound the size of sliding window in our continuous-time fixed-lag smoother, marginalization has to be resorted to. Although marginalization is universally leveraged in discrete-time sliding-window estimator [23, 3], it is rarely investigated in continuous-time sliding-window estimator. By utilizing probabilistic marginalization, information on the active states can be well reserved in the estimator, when measurements and old states are removed from the sliding window. Linearizing the factors at the best estimate of the state gives:

| (22) |

where we organize the reserved parameters in , and will be marginalized. Using the Schur-Complement [46], the following equation holds:

| (23) |

and by introducing new notations, we can denote the above equation by:

| (24) |

which is exactly the prior factor. Figure. 2 (b) displays the entries needed to be marginalized out of the LI temporal- and visual keyframe-sliding windows. The entries needed to be marginalized vary at different statuses. We marginalize out the control points and IMU biases when sliding the LI temporal window, and marginalize out the inverse depths of landmarks when sliding the oldest keyframe out of visual keyframe sliding window, producing a prior on

where denotes the affected control points in next sliding window. The prior factor induced from marginalization is shown in Fig. 2 (c) and will be involved in future factor-graph optimization.

V Multi-sensor Fusion

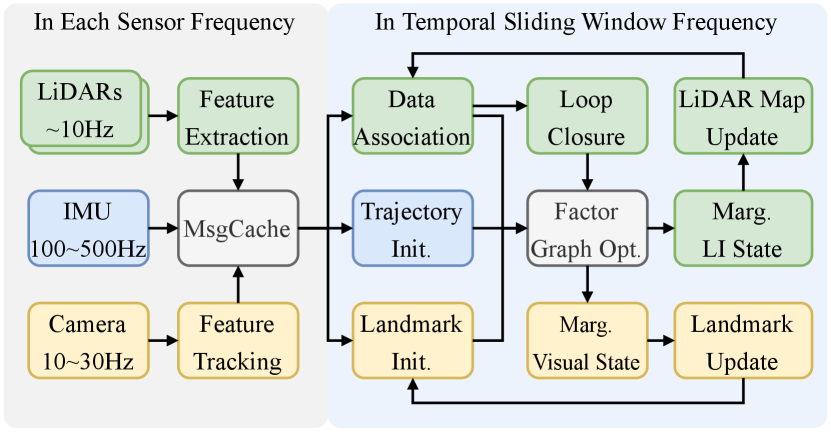

This section presents in detail the multi-sensor fusion method powered by the versatile continuous-time fixed-lag smoothing presented above. Fig. 3 shows the overall architecture of a LIC fusion system. In this section, we first introduce the LiDAR-IMU system (Sec. V-A) and visual system (Sec. V-B). Then we reveal some implementation details (Sec. V-C) to accommodate the particularity of continuous-time trajectory estimation, which is with significant distinction from discrete-time methods.

V-A LiDAR-Inertial System

V-A1 LiDAR Measurement Processing

There are three main stages for LiDAR point cloud processing: feature extraction, data association, and local map management. Inspired by [2], for each new incoming LiDAR scan, we first compute the curvature of each point according to its neighboring points, and select points with small curvature as planar points. The motion distortion in raw LiDAR scan is inevitable due to the motion when the LiDAR is scanning. Since we have formulated the continuous-time trajectory, it is handy to query poses at every time instant, which allows us to compensate for the motion distortion by aligning all the LiDAR points in one scan to the sweeping start time of that scan. After removing the incidental motion distortion in raw LiDAR scan and projecting undistorted LiDAR scan to the map frame using the initialized (or optimized) continuous-time trajectory, we can perform the point-to-plane data association by associating planar LiDAR points to tiny planes in the map. The tiny planes are found by fitting neighboring planar points on the map. The point-to-plane distance (see Eq. (14)) will be minimized in the continuous-time fixed-lag smoother. After finishing the first optimization, we update the data association using the optimized trajectory and optimize again to refine the estimation. Upon completion of the optimization, we individually transform each LiDAR point into the scan’s start time according to queried pose from the optimized trajectory, and select keyscans based on the displacement of poses or time span. Keyscans are leveraged to build the local LiDAR map in frame, and the local LiDAR map comprised of certain keyscans keeps updated upon newly-added scan.

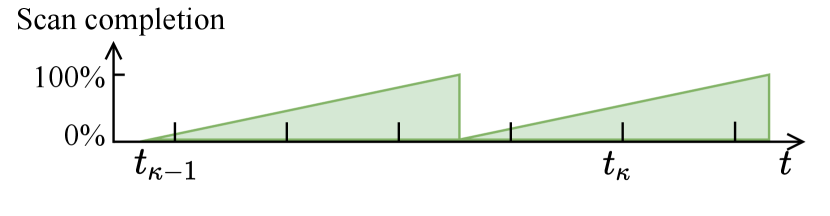

V-A2 LI Temporal Sliding Window

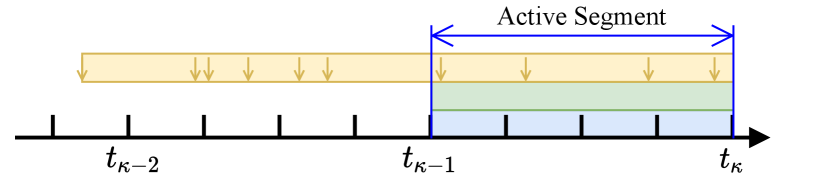

For the LI system, we maintain a temporal sliding window within a constant time duration, , where denotes the B-spline temporal knot distance and is an integer. The continuous-time trajectory of LI system is optimized and updated every seconds. Compared to optimizing the trajectory within every LiDAR scan individually [17], the temporal sliding-window optimization (fixed-lag smoothing) makes full use of all measurements within the sliding window (see Fig. 4) and prior information from marginalization, which could achieve higher accuracy. Note that the IMU bias sampling frequency is set as Hz, that is to say, we add a new IMU bias state when sliding the temporal window forward, and the discrete noise of bias factor (see Eq. (19)) is determined accordingly.

V-B Visual System

The main purpose of incorporating camera to the multi-sensor fusion estimator is to improve the robustness of LI system. We expect the LiDAR-Inertial-Camera system not to have degraded performance in structureless scenarios, which is a significant challenge for LI systems.

V-B1 Visual Front End

We extract corner features [47] from the images with KLT tracking [48] and determine image keyframe based on parallax variation and the number of features tracked, similar to [3]. Furthermore, we triangulate the landmarks tracked throughout the whole keyframe sliding window (see Sec. V-B2) using keyframe poses queried from current best-estimated continuous-time trajectory, and only keep the landmarks with low reprojection errors. Landmarks are parameterized by inverse depth represented in anchor frame, which in our case is always the oldest keyframe in the keyframe sliding window.

V-B2 Visual Keyframe Sliding Window

When processing images, we maintain a visual keyframe sliding window with a constant number of keyframes, in contrast to the constant time duration for LI temporal sliding window. This is due to the consideration that visual landmark triangulation needs observations across multiple keyframes with sufficient parallaxes. The duration of the keyframe sliding window is normally longer than the LI temporal sliding window, and estimating the entire trajectory covered by all the keyframes is time-consuming. In practice, we determine the trajectory optimization range based on the temporal sliding window of the LI system and define the control points to be optimized as active control points (shown in Fig. 4). We only optimize the control points of active trajectory segment within the LI temporal sliding window, while the other control points are involved in the optimization but kept static in the optimization. That formulation of visual system may not the best choice of high-accuracy estimation, but rather a trade-off to achieve real-time. While for the marginalization of visual keyframe sliding window, the oldest keyframe and the landmarks in it are marginalized out.

The strategy of sliding the keyframe window is similar to [3], where the newest frame in the sliding window is always the latest image of the system. If the second newest frame is determined as keyframe, we will marginalize out the oldest keyframe and landmarks in it from the sliding window, in order to keep a constant number of keyframes. Otherwise, we discard the second newest frame directly. Note that, we need to transfer the anchor frame of visual landmark if its anchor frame is marginalized.

V-C Extra Implementation Details

V-C1 Initialization

We initialize the IMU bias and gravity direction using the raw IMU measurements, assuming that the system is stationary at the start, which shares the same spirit as [49]. The system appends new IMU biases and control points when sliding the LI temporal window. The new IMU biases are initialized to the value of the previous temporal sliding window bias, and the new control points are first assigned values of the neighboring control point and further initialized via factor graph optimization as shown in Fig. 5. The velocity factor is defined as

| (25) |

where is defined in Eq. (7), which is derivative of continuous-time trajectory. is an estimate of global linear velocity at the end of current LI temporal window, and is derived by forward integrating IMU measurements from the pose at the start of temporal window. When solving the initialization problem, only the newly added control points are optimized while all the other states remain constant during optimization. After initialization, we remove the distortion of LiDAR scans with that initialized trajectory for better association results.

V-C2 Online Calibration of Timeoffset

Estimating timeoffsets between sensors is natural and convenient when modeling the trajectory in continuous time. In this paper, we choose the LiDAR sensor as the time baseline. Thus the timeoffsets of the camera and IMU with respect to the LiDAR need to be known or calibrated online. The main reason of choosing LiDAR as the base sensor is that local LiDAR map needs to be maintained, and once the timeoffset of LiDAR trajectory is changed, LiDAR map needs to be transformed accordingly, which is time-consuming. In contrast, the extra computation arising from the change of camera or IMU timestamps is insignificant.

V-C3 Loop Closure

We utilize Euclidean distance-based loop closure detection method [50] and adopt the two-stage continuous-time trajectory correction method [17] to tackle loop closures. After a loop closure optimization, we will remove the prior information of states since the current best-estimated states may be away from the linearized points, resulting in inappropriate prior constraints.

VI Experiment

| Method | Sensor(1) |

|

|

|

|

|

|

|

|

|

average | ||||||||||||||||||

| LIO-SAM(2) [50] | L, I | 0.075 | 0.069 | 0.101 | 0.076 | 0.090 | 0.137 | 0.089 | 0.083 | 0.140 | 0.096 | ||||||||||||||||||

| MILIOM (horz. LiDAR)(2) [37] | L, I | 0.104 | 0.065 | 0.063 | 0.083 | 0.072 | 0.058 | 0.076 | 0.081 | 0.088 | 0.077 | ||||||||||||||||||

| VIRAL (horz. LiDAR)(2) [51] | L, I | 0.064 | 0.051 | 0.060 | 0.063 | 0.042 | 0.039 | 0.051 | 0.056 | 0.060 | 0.054 | ||||||||||||||||||

| CLINS (w/o loop) [17] | L, I | 0.059 | 0.030 | 0.029 | 0.034 | 0.040 | 0.039 | 0.029 | 0.031 | 0.033 | 0.036 | ||||||||||||||||||

| CLIO (w/o loop) | L, I | 0.030 | 0.023 | 0.028 | 0.042 | 0.053 | 0.042 | 0.028 | 0.032 | 0.030 | 0.034 | ||||||||||||||||||

| CLIC (w/o loop) | L, I, C | 0.030 | 0.029 | 0.028 | 0.040 | 0.054 | 0.041 | 0.029 | 0.031 | 0.033 | 0.035 | ||||||||||||||||||

| MILIOM (2 LiDARs)(2) [37] | L2, I | 0.067 | 0.066 | 0.052 | 0.057 | 0.067 | 0.042 | 0.066 | 0.082 | 0.093 | 0.066 | ||||||||||||||||||

| VIRAL (2 LiDARs)(2) [51] | L2, I, C | 0.060 | 0.058 | 0.037 | 0.051 | 0.043 | 0.032 | 0.048 | 0.062 | 0.054 | 0.049 | ||||||||||||||||||

| CLIO2 (w/o loop) | L2, I | 0.040 | 0.021 | 0.031 | 0.030 | 0.037 | 0.034 | 0.033 | 0.037 | 0.044 | 0.034 | ||||||||||||||||||

| CLIC2 (w/o loop) | L2, I, C | 0.038 | 0.025 | 0.030 | 0.029 | 0.036 | 0.035 | 0.034 | 0.035 | 0.043 | 0.034 | ||||||||||||||||||

| (1) Sensors L,I,C are abbreviations of LiDAR, IMU, camera, respectively, L2 represents using two LiDAR sensors. | |||||||||||||||||||||||||||||

| (2) Results are from [51]. The horz. LiDAR in table means only the horizontal LiDAR is used for odometry. | |||||||||||||||||||||||||||||

| Method |

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|

| LIO-SAM (w/o loop) | 1.660 | 2.305 | 0.272 | ||||||

| CLIO (w/o loop) | 0.792 | 2.686 | 0.091 | ||||||

| LIO-SAM (w/ loop) | 0.544 | 0.592 | 0.272 | ||||||

| CLIO (w/ loop) | 0.408 | 0.381 | 0.091 |

| Method | Sensor | Handheld (1642s) | Jackal (2182s) |

|---|---|---|---|

| LIO-SAM (w/o loop) | L, I | 53.62 | 3.54 |

| CLIO (w/o loop) | L, I | fail | 3.43 |

| LVI-SAM (w/o loop) | L, I, C | 7.87 | 4.05 |

| CLIC (w/o loop) | L, I, C | 2.56 | 2.55 |

| LIO-SAM (w/ loop) | L, I | fail | 1.52 |

| CLIO (w/ loop) | L, I | fail | 1.01 |

| LVI-SAM (w/ loop) | L, I, C | 0.83 | 0.67 |

| CLIC (w/ loop) | L, I, C | 0.65 | 0.88 |

| CLIC (w/ loop, w/ calib) | L, I, C | 0.56 | 0.84 |

In the experiments, we evaluate the proposed continuous-time fixed-lag smoother in terms of pose estimation accuracy in various scenarios, systematically analyze the convergence speed and the accuracy of online timeoffset calibration, and disclose the runtime of the main stages in the proposed method. We assess a variety of sensor combinations powered by the continuous-time fixed-lag smoothing method, including

-

•

CLIO: one LIDAR and one IMU.

-

•

CLIO2: two LiDARs and one IMU.

-

•

CLIC: one LiDAR, one IMU and one camera.

-

•

CLIC2: two LiDAR, one IMU and one camera.

We adopt the Absolute Pose Error (APE) as evaluation metric to compare the proposed method against three LI systems (CLINS [17], LIO-SAM [50]), and three LIC systems (LVI-SAM [6], VIRAL-SLAM [51], LIC-Fusion 2.0 [10]) and two multi-LiADR systems (VIRAL-SLAM, MILIOM [37]). In all experiments, the temporal knot distance is 0.03s, and the duration of LI temporal sliding window length is 0.12 seconds while the visual keyframe sliding window size is 10.

VI-A Evaluation Datasets

We evaluate the following three publicly-available datasets and our self-collected datasets:

-

•

VIRAL [52]: The Visual-Inertial-RAnging-Lidar Dataset contains a variety of sensors, including two 16-beam Ouster LiDARs at 10Hz, two monocular cameras at 10Hz, and a VectorNav VN100 IMU at 385Hz, etc. The dataset comprises nine sequences collected indoors and outdoors by a MAV (Micro Aerial Vehicle).

-

•

NCD [53]: The Newer College Dataset is collected using a handheld device that consists of a 64-beam Ouster LiDAR at 10Hz with its internal IMU at 100Hz, and a stereo camera at 30Hz. The dataset combines built environments, open spaces and vegetated areas.

-

•

LVI-SAM [6]: The LVI-SAM Dataset features both handheld and vehicle (Jackal) platforms in outdoor open vegetated environments including geometrically degenerate surroundings with 16-beam LiDAR at 10Hz, camera at 20Hz, and IMU at 500Hz.

-

•

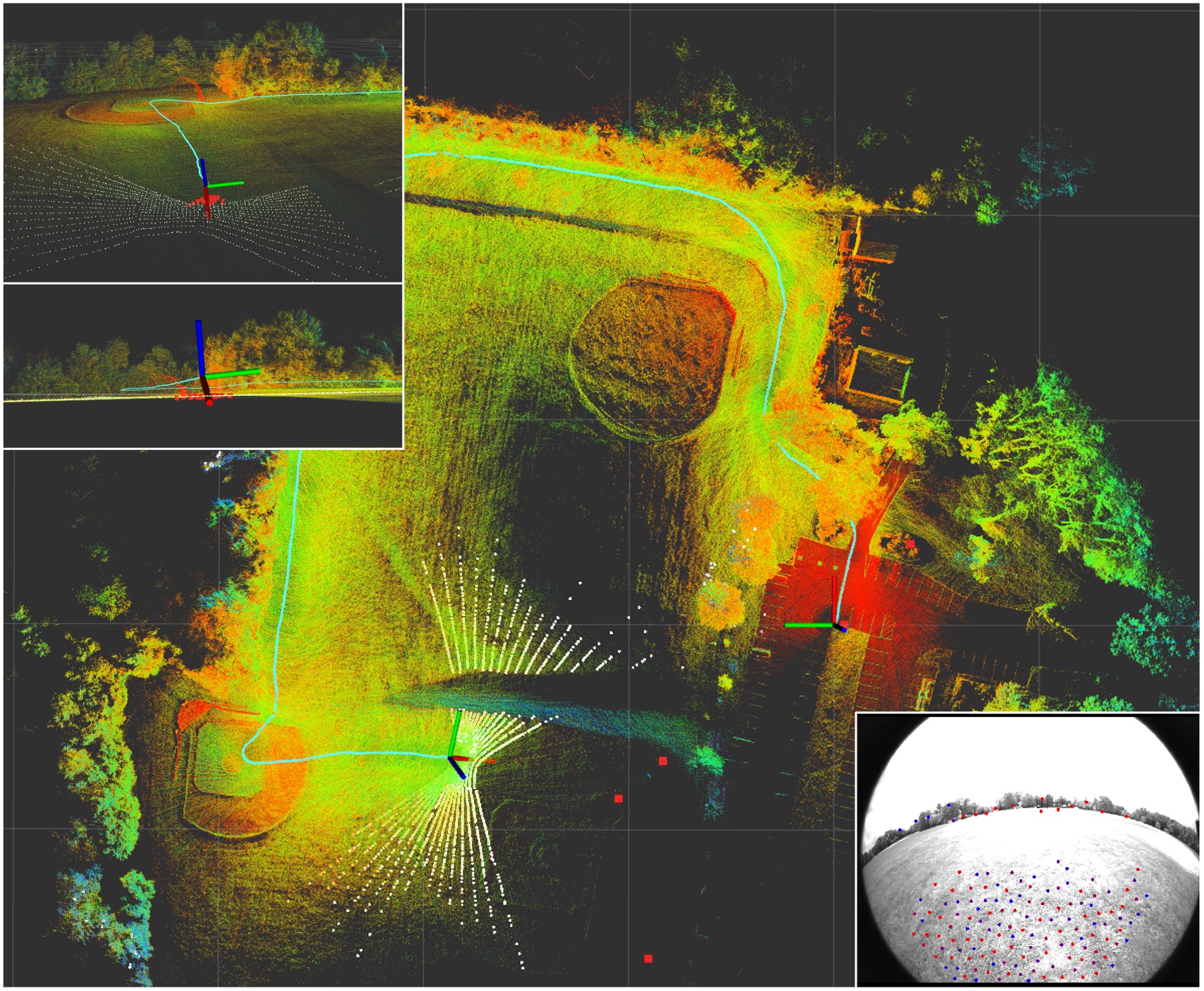

YQ: The self-collected YuQuan Dataset collected on our university campus consists of seven sequences using an electric car with a sensor rig mounted on the top shown in Fig. 6. The sensor rig comprises a 16-beam LiDAR at 10Hz, a camera at 20Hz, and an IMU at 400Hz. The trajectories of different sequences overlaid on Google map are shown in Fig. 7. GPS measurements are collected to provide groundtruth for this outdoor dataset.

-

•

Vicon Room: The self-collected Vicon Room Dataset shares the identical sensor rig as YQ dataset. This indoor dataset is collected with hand-held random motion, and a motion capture system is leveraged to provide groundtruth trajectories.

| Sequence |

|

|

|

|

|

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| YQ-01 (1005m) | 1.614 | 3.300 | 1.826 | 1.227 | 1.537 | ||||||||||

| YQ-02 (1021m) | 1.790 | 1.804 | 1.626 | 1.610 | 1.363 | ||||||||||

| YQ-03 (1058m) | 3.017 | 2.798 | 2.616 | 1.886 | 1.701 | ||||||||||

| YQ-04 (1233m) | 3.163 | 2.697 | 2.450 | 2.402 | 1.917 | ||||||||||

| YQ-05 (673m) | 1.721 | 1.550 | 1.537 | 8.465 | 1.439 | ||||||||||

| YQ-06 (1644m) | 3.753 | 3.862 | 3.082 | 3.682 | 1.607 | ||||||||||

| YQ-07 (414m) | 0.800 | 1.306 | 0.761 | 0.795 | 0.761 |

| Seq. |

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|---|

| Seq1 (43m) | 0.393 | 0.033 | 0.080 | ||||||

| Seq2 (84m) | 0.232 | 0.096 | 0.073 | ||||||

| Seq3 (34m) | 0.364 | 0.052 | 0.073 | ||||||

| Seq4 (53m) | 0.395 | 0.092 | 0.089 | ||||||

| Seq5 (50m) | 0.155 | 0.044 | 0.171 | ||||||

| Seq6 (88m) | 0.459 | 0.046 | 0.128 |

VI-B LiDAR-Inertial Fusion: CLIO

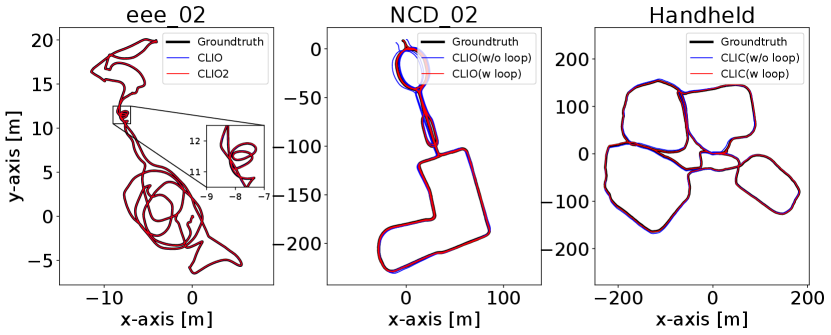

In this experiment, we evaluate the accuracy of continuous-time trajectory estimation of the CLIO system enabled by the proposed continuous-time fixed-lag smoothing. The results on VIRAL dataset are shown in Tab. III, CLIO outperforms other LI methods in most sequences and achieves an average RMSE of 0.034m. CLINS is much more accurate than all the discrete-time methods, including LIO-SAM [50], MILIOM [37], and VIRAL [51]. Compared to continuous-time method, CLINS [17], which only optimizes control points of trajectory within the time span of the newest LiDAR scan and lacks probabilistic marginalization, the proposed CLIO, optimizing all the control points in a sliding window involved with several LiDAR scans (see Fig. 4 and Fig. 8) and benefiting from marginalization, can achieve higher accuracy.

We further conduct evaluations in large-scale scenarios on the LVI-SAM dataset and NCD dataset (Tab. V and IV), CLIO achieves higher accuracy on most sequences compared to LIO-SAM. Especially, it is worth noting that on the NCD_06 sequence with the handheld sensor shaking vigorously, CLIO shows significant superiority over the discrete-time LIO-SAM. The improvement in accuracy could be attributed to our continuous-time trajectory formulation as it adequately addresses the motion distortion of LiDAR point cloud, which is of significant importance in highly-dynamic motion scenarios.

The presented continuous-time fixed-lag smoothing method supports fusion of multiple sensors conveniently. In Tab III, We also showcase the pose estimation accuracy of a system with the fusion of IMU and two LiDARs, dubbed CLIO2. We can see that CLIO2 has on-par accuracy with CLIO on most of the sequences, and shows more accurate pose estimation over the outdoor sequences (nya_xx).

VI-C LiDAR-Inertial-Camera Fusion: CLIC

In this section, we discuss the accuracy of the LiDAR-Inertial-Camera pose estimation system enabled by continuous-time fixed-lag smoothing. The experimental results on the VIRAL and LVI datasets are summarized in Tab. III and Tab. V. In addition, the evaluation results on our self-collected indoor and outdoor datasets are shown in Tab. VI and VII. CLIC tightly fuses LiDAR, IMU and camera measurements, and successfully generates good pose estimation in Handheld sequences collected over large open areas while CLIO fails, achieving significantly higher accuracy compared to the discrete-time method LVI-SAM [6]. It indicates that visual factors fused into CLIO can help improve the system’s applicability. Figure. 10 showcases a snapshot on the Handheld sequence where the LiDAR could only detect the ground while camera can track stable visual features to further constrain the optimization problem. As discussed in Sec. V-B2, we only optimize the control points within the LI temporal sliding window for computation efficiency reasons, and the other control points involved in visual keyframe sliding window are kept fixed during optimization, which might degrade the effects of visual factors. In Tab. VI and VII, LIC-Fusion 2.0 [10] shows pleasing performance in both indoor and outdoor scenarios, which benefits from reliable sliding-window plane-feature tracking. However, we can see that LIC-Fusion 2.0 has degraded performance in outdoor scenarios (see Tab. VI). The possible reason is that LIC-Fusion 2.0 relies on stably-tracked plane features to update LiDAR poses, while the outdoor scenarios are filled with tree clumps, and can fail to provide sufficient structural planes compared to indoors.

The accuracy of CLIC can be further improved when enabling online timeoffset calibration between different sensors (see Tab. V). In Fig. 9, we also show some representative estimated trajectories of different methods aligned with the ground truth trajectories.

| CLINS | CLIO | CLIC | |

|---|---|---|---|

| Update Local Map | 17.39 | 11.56 | 11.52 |

| Update Trajectory | 1184.34 | 58.55 | 80.64 |

| Update Prior | 0.00 | 8.45 | 8.44 |

| Others | 400.13 | 139.27 | 193.97 |

| Total Time Cost (second) | 1601.86 | 217.82 | 294.57 |

VI-D Online Temporal Calibration

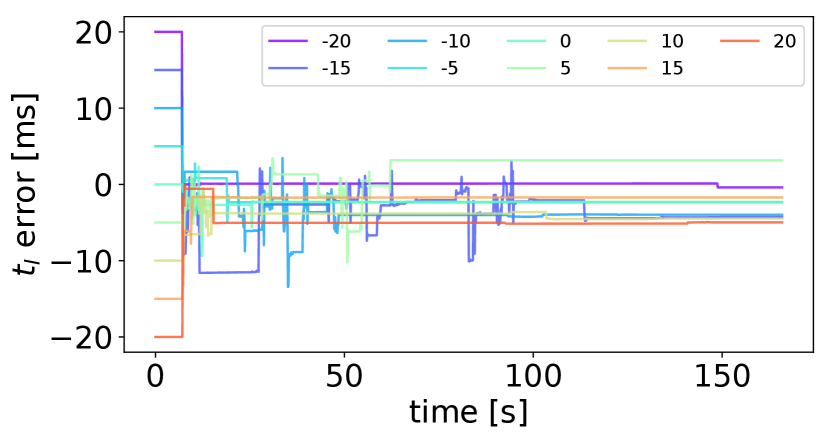

In this section, we examine the performance of online temporal calibration in the proposed multi-sensor fusion systems. Experiments are conducted on the NCD_01 sequence of NCD dataset [53]. We manually add additional timeoffsets of ms to the raw timestamps, and the timeoffest provided from NCD dataset is 0ms. Fig. 11 shows the error of estimated timeoffset over time. We start to estimate the timeoffset 5 seconds after the start of the system, and the IMU timeoffset converges quickly, with most of the trials converging within 3 seconds. In addition, the final estimated timeoffset mean(std) is -2.0ms(2.7ms).

VI-E Runtime analysis

We investigate runtime of the main modules in CLIO and CLIC in eee_01 sequence of VIRAL dataset [51]. Importantly, we notice the average runtime of proposed method remains almost constant whether in a long sequence or a short sequence. The method is implemented in C++ and executed on the desktop PC with an Intel i7-7700K and 32GB RAM, and Tab. VIII summarizes the time consumption of CLINS [17], CLIO and CLIC. The term Update Local Map in Tab. VIII represents update local LiDAR map for associating LiDAR feature, Update Trajectory represents solving the problem in Eq. 12 and Update Trajectory represents the marginalization process. The term Others includes feature extraction of image and LiDAR cloud, trajectory initialization, data association, et al. With analytical Jacobian computations for optimization and using sliding window and marginalization to bound computation complexity by the continuous-time fixed-lag smoothing, the enabled multi-sensor fusion methods (CLIO and CLIC) achieve real-time capability. In contrast, the continuous-time method, CLINS [17], consumes much more time and fails to run in real time. Here, real-time performance refers to the total elapsed time to process measurements from the sensors is less than the sensor data collection time. We also note that our current implementation is not optimal, and there is still significant space to further improve efficiency.

VII Conclusion

This paper exploits continuous-time fixed-lag smoothing for asynchronous multi-sensor fusion in a factor graph framework. Specifically, we propose to probabilistically marginalize old states and measurements out of the sliding window, and derive analytic Jacobians for continuous-time optimization. Benefiting from the nature of continuous-time trajectory formulation, heterogeneous multi-sensor measurements at any time instants can be seamlessly fused. Empowered by the continuous-time fixed-lag smoothing, we design the estimators at different sensor configurations, for tight fusion of multiple LiDARs, IMU and camera sensors. Our estimators, including LiDAR-Inertial systems and LiDAR-Inertial-Camera systems, show significant advances in pose estimation accuracy over the existing state-of-the-art methods. Online temporal calibration between sensors is also naturally supported in the continuous-time estimator. We demonstrate the accuracy and applicability of our method on three available public datasets and compare it with the state-of-art LiDAR-Inertial, LiDAR-Inertial-Camera and multi-LiDAR systems, respectively. Future work includes more efficient management of LiDAR map [54], utilizing more reliable LiDAR feature tracking and estimation method [10], and exploring the potential benefits of non-uniform B-spline.

VIII Acknowledgement

This work is supported by NSFC 62088101 Autonomous Intelligent Unmanned Systems. We would like to thank Kewei Hu and Xiangrui Zhao for fruitful discussion.

References

- [1] Raúl Mur-Artal and Juan D. Tardós “ORB-SLAM2: an Open-Source SLAM System for Monocular, Stereo and RGB-D Cameras” In IEEE Transactions on Robotics 33.5, 2017, pp. 1255–1262 DOI: 10.1109/TRO.2017.2705103

- [2] Ji Zhang and Sanjiv Singh “LOAM: Lidar Odometry and Mapping in Real-time.” In Robotics: Science and Systems 2.9, 2014

- [3] Tong Qin, Peiliang Li and Shaojie Shen “Vins-mono: A robust and versatile monocular visual-inertial state estimator” In IEEE Transactions on Robotics 34.4 IEEE, 2018, pp. 1004–1020

- [4] Xingxing Zuo, Patrick Geneva, Woosik Lee, Yong Liu and Guoquan Huang “LIC-Fusion: Lidar-inertial-camera odometry” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 5848–5854 IEEE

- [5] Xingxing Zuo, Wenlong Ye, Yulin Yang, Renjie Zheng, Teresa Vidal-Calleja, Guoquan Huang and Yong Liu “Multimodal localization: Stereo over LiDAR map” In Journal of Field Robotics 37.6 Wiley Online Library, 2020, pp. 1003–1026

- [6] Tixiao Shan, Brendan Englot, Carlo Ratti and Daniela Rus “LVI-SAM: Tightly-coupled lidar-visual-inertial odometry via smoothing and mapping” In 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 5692–5698 IEEE

- [7] Jiarong Lin and Fu Zhang “R3LIVE: A Robust, Real-time, RGB-colored, LiDAR-Inertial-Visual tightly-coupled state Estimation and mapping package” In arXiv preprint arXiv:2109.07982, 2021

- [8] Matteo Palieri, Benjamin Morrell, Abhishek Thakur, Kamak Ebadi, Jeremy Nash, Arghya Chatterjee, Christoforos Kanellakis, Luca Carlone, Cataldo Guaragnella and A. Agha-mohammadi “LOCUS - A Multi-Sensor Lidar-Centric Solution for High-Precision Odometry and 3D Mapping in Real-Time” In IEEE Robotics and Automation Letters 6.2 IEEE, 2020, pp. 421–428

- [9] Mingyang Li and Anastasios I Mourikis “Online temporal calibration for camera–IMU systems: Theory and algorithms” In The International Journal of Robotics Research 33.7 SAGE Publications Sage UK: London, England, 2014, pp. 947–964

- [10] Xingxing Zuo, Yulin Yang, Patrick Geneva, Jiajun Lv, Yong Liu, Guoquan Huang and Marc Pollefeys “LIC-Fusion 2.0: Lidar-inertial-camera odometry with sliding-window plane-feature tracking” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 5112–5119 IEEE

- [11] Tong Qin and Shaojie Shen “Online temporal calibration for monocular visual-inertial systems” In 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 3662–3669 IEEE

- [12] Yulin Yang, Patrick Geneva, Xingxing Zuo and Guoquan Huang “Online imu intrinsic calibration: Is it necessary?” In Robotics: Science and Systems, 2020

- [13] Xiaolei Lang, Jiajun Lv, Jianxin Huang, Yukai Ma, Yong Liu and Xingxing Zuo “Ctrl-VIO: Continuous-Time Visual-Inertial Odometry for Rolling Shutter Cameras” In IEEE Robotics and Automation Letters 7.4 IEEE, 2022, pp. 11537–11544

- [14] Patrick Geneva, Kevin Eckenhoff, Yulin Yang and Guoquan Huang “Lips: Lidar-inertial 3d plane slam” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2018, pp. 123–130 IEEE

- [15] Christiane Sommer, Vladyslav Usenko, David Schubert, Nikolaus Demmel and Daniel Cremers “Efficient Derivative Computation for Cumulative B-Splines on Lie Groups” In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 11148–11156

- [16] Thomas Lowe, Soohwan Kim and Mark Cox “Complementary perception for handheld slam” In IEEE Robotics and Automation Letters 3.2 IEEE, 2018, pp. 1104–1111

- [17] Jiajun Lv, Kewei Hu, Jinhong Xu, Yong Liu, Xiushui Ma and Xingxing Zuo “CLINS: Continuous-Time Trajectory Estimation for LiDAR-Inertial System” In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021, pp. 6657–6663 IEEE

- [18] Joern Rehder, Janosch Nikolic, Thomas Schneider, Timo Hinzmann and Roland Siegwart “Extending kalibr: Calibrating the extrinsics of multiple IMUs and of individual axes” In 2016 IEEE International Conference on Robotics and Automation (ICRA), 2016, pp. 4304–4311 IEEE

- [19] Jiajun Lv, Jinhong Xu, Kewei Hu, Yong Liu and Xingxing Zuo “Targetless Calibration of LiDAR-IMU System Based on Continuous-time Batch Estimation” In arXiv preprint arXiv:2007.14759, 2020

- [20] Elias Mueggler, Guillermo Gallego, Henri Rebecq and Davide Scaramuzza “Continuous-time visual-inertial odometry for event cameras” In IEEE Transactions on Robotics 34.6 IEEE, 2018, pp. 1425–1440

- [21] Chanoh Park, Peyman Moghadam, Jason L Williams, Soohwan Kim, Sridha Sridharan and Clinton Fookes “Elasticity meets continuous-time: Map-centric dense 3D LiDAR SLAM” In IEEE Transactions on Robotics IEEE, 2021

- [22] Tue-Cuong Dong-Si and Anastasios I Mourikis “Motion tracking with fixed-lag smoothing: Algorithm and consistency analysis” In 2011 IEEE International Conference on Robotics and Automation, 2011, pp. 5655–5662 IEEE

- [23] Stefan Leutenegger, Simon Lynen, Michael Bosse, Roland Siegwart and Paul Furgale “Keyframe-based visual–inertial odometry using nonlinear optimization” In The International Journal of Robotics Research 34.3 SAGE Publications Sage UK: London, England, 2015, pp. 314–334

- [24] Anqi Joyce Yang, Can Cui, Ioan Andrei Bârsan, Raquel Urtasun and Shenlong Wang “Asynchronous multi-view slam” In 2021 IEEE International Conference on Robotics and Automation (ICRA), 2021, pp. 5669–5676 IEEE

- [25] Ji Zhang and Sanjiv Singh “Laser–visual–inertial odometry and mapping with high robustness and low drift” In Journal of Field Robotics 35.8 Wiley Online Library, 2018, pp. 1242–1264

- [26] Zengyuan Wang, Jianhua Zhang, Shengyong Chen, Conger Yuan, Jingqian Zhang and Jianwei Zhang “Robust high accuracy visual-inertial-laser slam system” In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019, pp. 6636–6641 IEEE

- [27] Shehryar Khattak, Huan Nguyen, Frank Mascarich, Tung Dang and Kostas Alexis “Complementary multi–modal sensor fusion for resilient robot pose estimation in subterranean environments” In International Conference on Unmanned Aircraft Systems (ICUAS), 2020, pp. 1024–1029 IEEE

- [28] Michael Bloesch, Michael Burri, Sammy Omari, Marco Hutter and Roland Siegwart “Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback” In The International Journal of Robotics Research 36.10 SAGE Publications Sage UK: London, England, 2017, pp. 1053–1072

- [29] David Wisth, Marco Camurri, Sandipan Das and Maurice Fallon “Unified multi-modal landmark tracking for tightly coupled lidar-visual-inertial odometry” In IEEE Robotics and Automation Letters 6.2 IEEE, 2021, pp. 1004–1011

- [30] David Wisth, Marco Camurri and Maurice Fallon “VILENS: Visual, inertial, lidar, and leg odometry for all-terrain legged robots” In arXiv preprint arXiv:2107.07243, 2021

- [31] Jiarong Lin, Chunran Zheng, Wei Xu and Fu Zhang “R LIVE: A Robust, Real-Time, LiDAR-Inertial-Visual Tightly-Coupled State Estimator and Mapping” In IEEE Robotics and Automation Letters 6.4 IEEE, 2021, pp. 7469–7476

- [32] Shibo Zhao, Hengrui Zhang, Peng Wang, Lucas Nogueira and Sebastian Scherer “Super odometry: IMU-centric LiDAR-visual-inertial estimator for challenging environments” In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021, pp. 8729–8736 IEEE

- [33] Yupeng Jia, Haiyong Luo, Fang Zhao, Guanlin Jiang, Yuhang Li, Jiaquan Yan, Zhuqing Jiang and Zitian Wang “Lvio-Fusion: A Self-adaptive Multi-sensor Fusion SLAM Framework Using Actor-critic Method” In 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021, pp. 286–293 IEEE

- [34] Christian Forster, Luca Carlone, Frank Dellaert and Davide Scaramuzza “On-manifold preintegration for real-time visual–inertial odometry” In IEEE Transactions on Robotics 33.1 IEEE, 2016, pp. 1–21

- [35] Jakob Engel, Vladlen Koltun and Daniel Cremers “Direct sparse odometry” In IEEE transactions on pattern analysis and machine intelligence 40.3 IEEE, 2017, pp. 611–625

- [36] Andrzej Reinke, Matteo Palieri, Benjamin Morrell, Yun Chang, Kamak Ebadi, Luca Carlone and Ali-Akbar Agha-Mohammadi “LOCUS 2.0: Robust and Computationally Efficient Lidar Odometry for Real-Time 3D Mapping” In IEEE Robotics and Automation Letters, 2022, pp. 1–8 DOI: 10.1109/LRA.2022.3181357

- [37] Thien-Minh Nguyen, Shenghai Yuan, Muqing Cao, Lyu Yang, Thien Hoang Nguyen and Lihua Xie “MILIOM: Tightly coupled multi-input lidar-inertia odometry and mapping” In IEEE Robotics and Automation Letters 6.3 IEEE, 2021, pp. 5573–5580

- [38] Paul Furgale, Timothy D Barfoot and Gabe Sibley “Continuous-time batch estimation using temporal basis functions” In 2012 IEEE International Conference on Robotics and Automation, 2012, pp. 2088–2095 IEEE

- [39] Shenghao Li, Shuang Liu, Qunfei Zhao and Qiaoyang Xia “Quantized Self-supervised Local Feature for Real-time Robot Indirect VSLAM” In IEEE/ASME Transactions on Mechatronics IEEE, 2021

- [40] Tom Lyche and Knut Morken “Spline methods draft” In Department of Informatics, Center of Mathematics for Applications, University of Oslo, Oslo, 2008, pp. 3–8

- [41] Adrian Haarbach, Tolga Birdal and Slobodan Ilic “Survey of higher order rigid body motion interpolation methods for keyframe animation and continuous-time trajectory estimation” In 2018 International Conference on 3D Vision (3DV), 2018, pp. 381–389 IEEE

- [42] Kaihuai Qin “General matrix representations for B-splines” In Proceedings Pacific Graphics’ 98. Sixth Pacific Conference on Computer Graphics and Applications (Cat. No. 98EX208), 1998, pp. 37–43 IEEE

- [43] Jiajun Lv, Xingxing Zuo, Kewei Hu, Jinhong Xu, Guoquan Huang and Yong Liu “Observability-Aware Intrinsic and Extrinsic Calibration of LiDAR-IMU Systems” In IEEE Transactions on Robotics IEEE, 2022

- [44] Sameer Agarwal, Keir Mierle and The Ceres Solver Team “Ceres Solver”, 2022 URL: https://github.com/ceres-solver/ceres-solver

- [45] Yulin Yang, Patrick Geneva, Xingxing Zuo, Kevin Eckenhoff, Yong Liu and Guoquan Huang “Tightly-coupled aided inertial navigation with point and plane features” In International Conference on Robotics and Automation (ICRA), 2019, pp. 6094–6100 IEEE

- [46] Gabe Sibley, Larry Matthies and Gaurav Sukhatme “Sliding window filter with application to planetary landing” In Journal of Field Robotics 27.5 Wiley Online Library, 2010, pp. 587–608

- [47] Jianbo Shi “Good features to track” In 1994 Proceedings of IEEE conference on computer vision and pattern recognition, 1994, pp. 593–600 IEEE

- [48] Bruce D Lucas and Takeo Kanade “An iterative image registration technique with an application to stereo vision” Vancouver, 1981

- [49] Patrick Geneva, Kevin Eckenhoff, Woosik Lee, Yulin Yang and Guoquan Huang “OpenVINS: A Research Platform for Visual-Inertial Estimation” In Proc. of the IEEE International Conference on Robotics and Automation, 2020

- [50] Tixiao Shan, Brendan Englot, Drew Meyers, Wei Wang, Carlo Ratti and Daniela Rus “Lio-sam: Tightly-coupled lidar inertial odometry via smoothing and mapping” In arXiv preprint arXiv:2007.00258, 2020

- [51] Thien-Minh Nguyen, Shenghai Yuan, Muqing Cao, Thien Hoang Nguyen and Lihua Xie “Viral slam: Tightly coupled camera-imu-uwb-lidar slam” In arXiv preprint arXiv:2105.03296, 2021

- [52] Thien-Minh Nguyen, Shenghai Yuan, Muqing Cao, Yang Lyu, Thien H Nguyen and Lihua Xie “Ntu viral: A visual-inertial-ranging-lidar dataset, from an aerial vehicle viewpoint” In The International Journal of Robotics Research 41.3 SAGE Publications Sage UK: London, England, 2022, pp. 270–280

- [53] Milad Ramezani, Yiduo Wang, Marco Camurri, David Wisth, Matias Mattamala and Maurice Fallon “The Newer College Dataset: Handheld LiDAR, Inertial and Vision with Ground Truth” In 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 4353–4360 DOI: 10.1109/IROS45743.2020.9340849

- [54] Chunge Bai, Tao Xiao, Yajie Chen, Haoqian Wang, Fang Zhang and Xiang Gao “Faster-LIO: Lightweight Tightly Coupled Lidar-Inertial Odometry Using Parallel Sparse Incremental Voxels” In IEEE Robotics and Automation Letters 7.2 IEEE, 2022, pp. 4861–4868

IX Appendix

In the following, we first derive the Jacobians of the continuous-time trajectory value w.r.t control points (Sec. IV-A), then derive the Jacobians of residuals w.r.t trajectory value (Sec. IV-B, IV-C, IV-D, IV-E) and finally use chain rule to get the Jacobians of residuals w.r.t estimated state (Eq. (11)). All the analytic Jacobians are utilized in the factor graph optimization (Eq. (12)), which significantly speeds up the problem-solving. The number of control points in the error state is

| (26) |

where is B-Spline order and is the number of knot intervals in the temporal sliding window (see Sec. V-A2). Note that, not every control point in the state vector (Eq. (11)) is involved in a specific factor, thus we define there are (or ) control points before (or after) the involved control points. Here, we also define as the number of landmarks in the state.

IX-A Jacobian for Control Points

We follow the notation in [15] to give the Jacobians of the trajectory value to the control points:

| (27) |

for and for we obtain

| (28) |

where is the queried pose or velocity. Note that we follow the recursive scheme in [15] to efficient compute Jacobians of queried pose , angular velocity and linear acceleration .

IX-B Jacobians for IMU Factor

Given the IMU factor defined at Eq. (18), the Jacobians w.r.t error state is

| (29) | ||||

where

| (30) | ||||

| (31) | ||||

| (32) | ||||

| (33) |

and

| (34) | ||||

| (35) |

IX-C Jacobians for Bias Factor

Given the Bias factor defined at Eq. (19), the Jacobians w.r.t error state is

| (36) | ||||

where

| (37) |

IX-D Jacobians for LiDAR Factor

Given the LiDAR factor defined at Eq. (14), the Jacobians w.r.t error state is

| (38) | ||||

where

| (39) | ||||

| (40) |

and

| (41) |

IX-E Jacobians for Visual Factor

The visual factor (Eq. (21)) is related to two poses at time instants, and , and the Jacobians w.r.t error state is

| (42) |

where

| (43) | ||||

| (44) | ||||

| (45) | ||||

| (46) | ||||

| (47) |

and

| (48) | ||||

| (49) | ||||

| (50) | ||||

| (51) |