[1]\fnmTao \surZhuo

1]\orgdivShandong Artificial Intelligence Institute, \orgnameQilu University of Technology (Shandong Academy of Sciences, \orgaddress\cityJinan, \countryChina

2]\orgdivCollege of Computer Science and Technology, \orgnameZhejiang University, \orgaddress\cityHangZhou, \countryChina

3]\orgdivSchool of Computing, \orgnameNational University of Singapore, \orgaddress\countrySingapore

Continual Learning with Strong Experience Replay

Abstract

Continual Learning (CL) aims at incrementally learning new tasks without forgetting the knowledge acquired from old ones. Experience Replay (ER) is a simple and effective rehearsal-based strategy, that optimizes the model with current training data and a subset of old samples stored in a memory buffer. Although various ER extensions have been developed in recent years, the updated model suffers from the risk of overfitting the memory buffer when a few previous samples are available, leading to forgetting. In this work, we propose a Strong Experience Replay (SER) method that utilizes two consistency losses between the new model and the old one to further reduce forgetting. Besides distilling past experiences from the data stored in the memory buffer for backward consistency, we additionally explore future experiences of the old model mimicked on the current training data for forward consistency. Compared to previous methods, SER effectively improves the model generalization on previous tasks and preserves the learned knowledge. Experimental results on multiple image classification datasets show that our SER method surpasses the state-of-the-art methods by a noticeable margin. Our code is available at: https://github.com/visiontao/cl.

keywords:

Continual Learning, Experience Replay, Catastrophic Forgetting, Model Consistency1 Introduction

Deep neural networks have been widely used in computer vision tasks, such as object detection in images and action recognition in videos. Conventional deep neural networks are usually trained offline with the assumption that all data is available. However, for a stream of nonstationary tasks, a deep model has to continually learn new tasks without forgetting the acquired knowledge from old ones [19, 28]. Due to the previously seen samples being unavailable for joint training, simply finetuning the model usually leads to drastic performance degradation on old tasks. This phenomenon is known as catastrophic forgetting and it seriously limits the applications in practice. To address this issue, Continual Learning (CL) [23, 7] aims at preserving the acquired knowledge when learning new tasks in dynamic environments.

The core challenge in CL is to strike an optimal balance between plasticity and the stability of the model [18]. Without revisiting any previously seen samples, it is hard to guarantee that a trained deep model with updated parameters still well fits the data of previous tasks. Therefore, the rehearsal-based technique [7, 14, 16, 35, 3, 41] reduces the difficulty of CL by jointly training the model on the new data and a subset of previous data stored in the memory buffer. To leverage the memory buffer efficiently, Experience Replay (ER) [27] employs a reservoir sampling method to update the memory buffer over time. Then each data has the same probability to be stored in the memory buffer without knowing the length of the input data stream in advance. Based on such a simple strategy, ER effectively retains the acquired knowledge in various scenarios.

In order to further reduce the number of samples stored in the memory buffer and further mitigate forgetting, recent CL methods [7, 2, 31] extend ER with different strategies. For example, MIR [1] retrieves interfered samples stored in the memory buffer instead of random sampling. MER [27] combines experience replay with meta-learning to maximize transfer and minimize interference based on future gradients. DRI [39] produces imaginary data and leverages knowledge distillation [15] for retrieving past experiences in a balanced manner. Additionally, to prevent drastic model changes, rehearsal-based methods often incorporate a consistency loss for the model training. DER++ [7] mixes rehearsal with knowledge distillation in the memory buffer. CLS-ER [2] adopts a dual-memory method to maintain short-term and long-term semantic memories, and it also incorporates a consistency loss to prevent drastic model changes. TAMiL [5] entails both experience rehearsal and self-regulated scalable neurogenesis to further mitigate catastrophic forgetting. However, these methods suffer from the risk of overfitting the memory buffer when a few previous samples are available [35], leading to forgetting.

In this work, we propose a Strong Experience Replay (SER) method that extends ER with two consistency losses. Besides memorizing the label of previous data, we seek consistent prediction distributions between the new model and the old one. Ideally, when a new model has the same output logits as its original ones, the acquired knowledge can be regarded as well preserved. However, due to the samples of new classes being unseen to the old model, this goal cannot be achieved in practice. Instead, we expect the output logits of an updated model can approximate its original ones by using a consistency loss. Different from previous methods [7, 38, 2] that seek consistency on the data stored in a limited memory buffer only, we additionally seek consistent predictions on the current training data, improving the model generalization on previous tasks and further reducing forgetting.

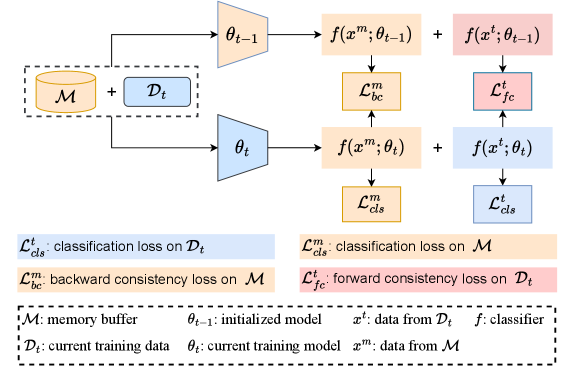

As illustrated in Figure 1, the backward consistency memorizes past experiences from the previous samples stored in the memory buffer. However, when a limited number of previous samples are available, using backward consistency may lead to a local optimum solution, resulting in overfitting. To alleviate this issue, we develop a forward consistency loss to improve the model generalization on previous tasks. Specifically, by mimicking the future experiences of the old model on the current training data, seeking consistent predictions will increase the overlapping predictions [20] between the new model and the old one. Besides, since the parameters of the old model are frozen, the previous knowledge can be well preserved when optimizing the model on new tasks. Compared to backward consistency, forward consistency leverages the complete training data and it can propagate prediction distributions to new tasks over time.

The proposed SER method effectively reduces the forgetting issue by combining both backward and forward consistency. Compared to the closest method DER++ [7], the SER method additionally incorporates a forward consistency loss for model training. Despite its simplicity, extensive experiments on multiple image classification benchmark datasets show that SER outperforms DER++ by a large margin in class-incremental scenarios, e.g., 9.2% on the CIFAR100 dataset (20 tasks) and more than 17.54% on the TinyImageNet dataset (10 tasks), see Table 1 and 2. Furthermore, comprehensive experiments on multiple image classification datasets also demonstrate the superiority of our SER method surpasses the state-of-the-art methods by a noticeable margin, especially when a few previous samples are available.

In summary, our contributions are as follows:

-

1.

We propose a forward consistency loss that improves model generalization by seeking consistent prediction distributions on the current training data. Compared to the backward consistency loss used in previous approaches, the forward consistency loss can efficiently leverage the complete training data and propagate consistent prediction distributions to new tasks over time.

-

2.

We propose a Strong Experience Replay (SER) method that distills the acquired knowledge of a trained model from both past experiences stored in the memory buffer and future experiences mimicked on the current training data. Despite its simplicity, SER greatly reduces the forgetting issue.

-

3.

The proposed method is simple and effective, extensive experiments on multiple image classification datasets show that SER outperforms the state-of-the-art methods by a large margin when a few previous samples are available.

2 Related Work

2.1 Rehearsal-based Methods

Rehearsal-based methods [26, 29, 3, 39, 43] reduce catastrophic forgetting by replaying a subset of previously seen samples stored in a memory buffer. Experience Replay (ER) [27] is a simple but effective rehearsal-based method that jointly trains the model with current data and a mini-batch of randomly selected old samples. Besides, it applies a reservoir sampling strategy to update the memory buffer over time. Based on the core idea of ER, recent CL approaches further reduce forgetting with various techniques. For example, GSS [1] stores optimally chosen examples in the memory buffer. GEM [24] and AGEM [12] leverage episodic memory to avoid forgetting and favor positive backward transfer. ERT [8] adopts a balanced sampling method and bias control. MER [27] considers experience replay as a meta-learning problem to maximize transfer and minimize interference. iCaRL [26] uses a nearest-mean-of-exemplars classifier and an additional memory buffer for model training. Besides, it adopts a knowledge distillation method to reduce forgetting further. RM [3] develops a novel memory management strategy based on per-sample classification uncertainty and data augmentation to enhance the sample diversity in the memory. DER++ [7] mixes rehearsal with knowledge distillation and regularization. CLS-ER [2] adopts a dual-memory experience replay method to maintain short-term and long-term semantic memories. LVT [38] designs a vision transformer for continual learning with replay. SCoMMER [31] enforces activation sparsity along with a complementary semantic dropout mechanism to encourage consistency. Different from these methods that distill past experiences from a limited memory buffer only, we also explore future experiences mimicked on the current training data, which improves the model generalization on previous tasks.

2.2 Regularization-based Methods

Regularization-based methods usually incorporate an additional penalty term into the loss function to prevent model changes in parameter or prediction spaces [17]. Elastic Weight Consolidation (EWC) [19, 32], Synaptic Intelligence (SI) [44], and Riemmanian Walk (RW) [10] prevent the parameter changes between the new model and the old one. LwF [23] and PASS [46] mitigate forgetting with task-specific knowledge distillation. Without any previous data for replay, recent studies [7, 25] show that these methods usually fail to handle class-incremental scenarios. Therefore, the regularization-based strategy is often simultaneously used with other CL approaches for better performance, such as iCaRL [26] and DER++ [7, 6]. In this work, besides memorizing ground truth labels of previous samples, we also seek consistent logit distributions. Therefore, we incorporate two consistency losses as model regularization to reduce forgetting.

2.3 Other CL Methods

The early CL methods usually adopt a task identity to learn task-specific knowledge. For example, PNN (Progressive Neural Networks) [30] instantiates new networks incrementally and adopts a task identity at both training and inference times. However, identity might be unknown in inference time, limiting its applications. Recently, DER [42] expanded a new backbone per incremental task without using task identities during the testing time. FOSTER [37] adds an extra model compression stage to maintain limited model storage. L2P and Dualprompt [41, 40] adopt a prompt pool to learn and extract task-specific knowledge. In addition, for rehearsal-based methods, storing raw samples is impossible sometimes due to privacy concerns, some generative approaches [33, 34] attempt to use a data generator (such as GAN [13]) to produce synthetic data for replay. But it needs a long time for model training and the generated data might be unsatisfactory for complex datasets. Additionally, to distill more knowledge from old tasks, DMC [45] and GD [22] sample external data to assist model training. Although much progress has been achieved in recent years, efficient CL with less forgetting remains a challenging problem.

3 Method

In this section, we first introduce the problem formulation of continual learning and the conventional experience replay method. Then we describe the proposed Strong Experience Replay (SER) method in detail.

3.1 Problem Formulation

Formally, given a sequence of nonstationary datasets , represents the samples with corresponding ground truth labels of the -th task. At a time step, , the goal of a CL method is to sequentially update the model on without forgetting the previously learned knowledge on .

In general, when all training data is available in advance, the loss function of conventional learning can be formulated as:

| (1) |

where denotes cross-entropy loss and denotes the network’s output logits, represents the model parameter to be optimized.

By contrast, for the -th task with training data in CL, given a trained model with the initialized parameter , the conventional model finetuning can be achieved by minimizing a classification loss function as:

| (2) |

However, when the previous data is not accessible, simply updating the model with Equation 2 usually leads to a drastic performance drop on previous datasets , i.e. catastrophic forgetting.

3.2 Experience Replay (ER)

ER [27] is a classical rehearsal-based strategy that alleviates forgetting by replaying a subset of previously seen data stored in a permanent memory buffer . By jointly training the model on current training data and previous data stored in , the training loss is computed as:

| (3) |

where is a classification loss function on the samples stored in the memory buffer and it is used to preserve the acquired knowledge as:

| (4) |

Theoretically, the larger the memory buffer, the more knowledge would be preserved. For the extreme case, when all the previous data is stored in , it is equivalent to the joint training in one task. Unfortunately, due to privacy or storage concerns, the previous data might be unavailable in practice, storing a subset of previous data in reduces the difficulty of CL.

To leverage the limited memory buffer effectively, ER adopts a reservoir sampling method [36] to update the buffer over time. In this way, when we randomly select samples from the input stream, each sample still has the same probability of being stored in the memory buffer without knowing the length of the input data stream in advance. Although ER alleviates the forgetting issue effectively and outperforms many elaborately designed CL approaches [7], its performance may drastically drop when few samples are available [35].

3.3 Strong Experience Replay (SER)

To further mitigate the forgetting issue, various ER extensions [7, 41, 2, 40] are developed to preserve learned knowledge when learning new tasks. However, these methods may overfit the memory buffer when a few samples are available. In this work, we mix the rehearsal-based strategy with model regularization and we extend ER by incorporating two consistency losses, which helps the CL model consistently evolve during training. Ideally, if an updated model still has the same outputs as its original ones when learning new tasks, it can be considered that the previously learned knowledge is not forgotten. However, this goal cannot be achieved in practice because of disjoint class labels in class-incremental scenarios. Instead, we aim to obtain approximate output logits between the new model and the old one.

Our method consists of a backward consistency loss and a forward consistency loss. Specifically, the backward consistency is used to distill the model’s past experiences on the data stored in the memory buffer. On the other hand, the forward consistency is used to distill the model’s future experiences on the current training data, as the new data is from the future for the old model. An overview of the proposed SER method is illustrated in Figure 2. Unlike the backward consistency used in DER++ [7], forward consistency can leverage all training data and it can propagate consistent predictions to new tasks over time. Details of these two consistency losses are as follows.

Backward consistency. For the -th task, given a trained model with initialized parameter , we measure the backward consistency between two models on the memory buffer with a Mean Square Error (MSE) loss:

| (5) |

As discussed in DER++ [7], the optimization of Equation 5 is equivalent to minimizing the KL divergence loss in knowledge distillation [15]. Instead of using smoothing softmax responses, directly using the logits with an MSE loss can avoid information loss occurring in prediction spaces due to the squashing function (e.g., softmax).

In addition, to reduce the computational cost, we store the logits of the network outputs when updating the memory buffer [7]. Let denote the output logits of past experience on sample , by replacing with , then Equation 5 can be rewritten as:

| (6) |

To clearly illustrate the core idea of the proposed method, we directly use in Figure 2, instead of stored in the memory buffer. Based on the classification loss on the samples stored in the memory buffer and backward consistency loss, the updated model will mimic its training trajectory and its output logits will approximate its original ones, reducing the forgetting issue.

Forward consistency. Intuitively, the previous data stored in the memory buffer only contains a small subset of . When a few previous samples are available, using a backward consistency alone cannot properly make the outputs of the new model approximate its original ones. As a result, preserving the learned knowledge on a limited memory buffer suffers from the risk of overfitting the , leading to the forgetting problem.

To address this issue, we design an additional consistency loss to distill future experiences on . In particular, we first compute the output logits of the old model on , and then employ an MSE loss as in Equation 6 to measure the new consistency as:

| (7) |

Since data in is from a future task for the old model, we simply refer to as a “forward consistency” loss.

As illustrated in Figure 1, the backward consistency retains a subset of past experiences stored in . In contrast, the forward consistency can propagate the future experiences mimicked on to new tasks over time. Therefore, forward consistency can effectively leverage the complete training data. Moreover, during the model training, the parameters of the old mode are frozen, seeking consistent predictions on the current training data enhances the updated model has a larger overlap with the old model, improving the model generalization on old tasks and further preserving the acquired knowledge.

Total training loss. Based on the above analysis, the proposed SER method combines two classification losses and two consistency losses during model training. Formally, the total loss used in SER is represented as:

| (8) |

where and are the balancing parameters.

The core challenge in CL is the stability-plasticity dilemma. For the -th task, focuses on the plasticity by finetuning the model on the new dataset ; and the other losses focus on the stability by memorizing the experiences of the old model. Specifically, and preserve past experiences on the memory buffer . retains the future experiences on the current training data . Therefore, compared to the classical ER method, the two consistency losses used in SER effectively improve the stability of the model, and they prevent model changes in prediction spaces and further reduce forgetting.

Input: initialized model parameter , dataset , memory buffer , balancing factors and .

Output: optimized parameter , updated memory buffer .

Simple Implementation. The proposed SER algorithm is described in Algorithm 1, where “aug” denotes the data augmentation. During model training, we randomly sample one batch of samples from the memory buffer and the augmented data is used for classification and backward consistency. After each training batch, a reservoir sampling method is employed to update the memory buffer. To reduce the computational cost, we store the original training data with its output logits in the memory buffer. It can be seen that SER does not need any complex computation, which makes it easy to implement. Besides, notice that we do not use the consistency losses for the first task, as there is no previous knowledge to be preserved.

4 Experiments

We evaluate the proposed method in all three CL settings: Class Incremental Learning (Class-IL), Task Incremental Learning (Task-IL), and Domain Incremental Learning (Domain-IL). For Class-IL, the model needs to incrementally learn new classes in each subsequent task and the task identity is unknown at inference. For Task-IL, the task identity is given during testing. By selecting corresponding classifiers for inference, Task-IL is easier than Class-IL. For Domain-IL, the classes remain the same in each task, but the data distributions are different.

In our experiments, we strictly follow the experimental settings in DER++ [7] and LVT [38]. To evaluate the performance of our CL method, we do not use any task identity to select the task-specific knowledge at training, even including the Task-IL. Moreover, the network architecture used in our method is fixed for all tasks, thus respecting the constant memory constraint.

| Buffer | Method | 5 tasks | 10 tasks | 20 tasks | |||

|---|---|---|---|---|---|---|---|

| Class-IL | Task-IL | Class-IL | Task-IL | Class-IL | Task-IL | ||

| - | Joint | 70.21 0.15 | 85.25 0.29 | 70.21 0.15 | 91.24 0.27 | 71.25 0.22 | 94.02 0.33 |

| SGD | 17.27 0.14 | 42.24 0.33 | 8.62 0.09 | 34.40 0.53 | 4.73 0.06 | 40.83 0.46 | |

| - | oEWC [32] | 16.92 0.28 | 31.51 1.02 | 8.11 0.47 | 23.21 0.49 | 4.44 0.17 | 26.48 2.07 |

| SI [44] | 17.60 0.09 | 43.64 1.11 | 9.39 0.61 | 29.32 2.03 | 4.47 0.07 | 32.53 2.70 | |

| LwF [23] | 18.16 0.18 | 30.61 1.49 | 9.41 0.06 | 28.69 0.34 | 4.82 0.06 | 39.38 1.10 | |

| 200 | ER [27] | 21.94 0.83 | 62.41 0.93 | 14.23 0.12 | 62.57 0.68 | 9.90 1.67 | 70.82 0.74 |

| GEM [24] | 19.73 0.34 | 57.13 0.94 | 13.20 0.21 | 62.96 0.67 | 8.29 0.18 | 66.28 1.49 | |

| A-GEM [12] | 17.97 0.26 | 53.55 1.13 | 9.44 0.29 | 55.04 0.87 | 4.88 0.09 | 41.30 0.56 | |

| iCaRL [26] | 30.12 2.45 | 55.70 1.87 | 22.38 2.79 | 60.81 2.48 | 12.62 1.43 | 62.17 1.93 | |

| FDR [4] | 22.84 1.49 | 63.75 0.49 | 14.85 2.76 | 65.88 0.60 | 6.70 0.79 | 59.13 0.73 | |

| GSS [1] | 19.44 2.83 | 56.11 1.50 | 11.84 1.46 | 56.24 0.98 | 6.42 1.24 | 51.64 2.89 | |

| HAL [11] | 13.21 1.24 | 35.61 2.95 | 9.67 1.67 | 37.49 2.16 | 5.67 0.91 | 53.06 2.87 | |

| DER++ [7] | 27.46 1.16 | 62.55 2.31 | 21.76 0.78 | 63.54 0.77 | 15.16 1.53 | 71.28 0.91 | |

| ERT [8] | 21.61 0.87 | 54.75 1.32 | 12.91 1.46 | 58.49 3.12 | 10.14 1.96 | 62.90 2.72 | |

| RM [3] | 32.23 1.09 | 62.05 0.62 | 22.71 0.93 | 66.28 0.60 | 15.15 2.14 | 68.21 0.43 | |

| LVT [38] | 39.68 1.36 | 66.92 0.40 | 35.41 1.28 | 72.80 0.49 | 20.63 1.14 | 73.41 0.67 | |

| SER (Ours) | 47.96 1.03 | 77.12 0.34 | 35.29 1.88 | 79.85 0.63 | 24.35 1.71 | 78.86 1.69 | |

| 500 | ER [27] | 27.97 0.33 | 68.21 0.29 | 21.54 0.29 | 74.97 0.41 | 15.36 1.15 | 74.97 1.44 |

| GEM [24] | 25.44 0.72 | 67.49 0.91 | 18.48 1.34 | 72.68 0.46 | 12.58 2.15 | 78.24 0.61 | |

| A-GEM [12] | 18.75 0.51 | 58.70 1.49 | 9.72 0.22 | 58.23 0.64 | 5.97 1.13 | 59.12 1.57 | |

| iCaRL [26] | 35.95 2.16 | 64.40 1.59 | 30.25 1.86 | 71.02 2.54 | 20.05 1.33 | 72.26 1.47 | |

| FDR [4] | 29.99 2.23 | 69.11 0.59 | 22.81 2.81 | 74.22 0.72 | 13.10 3.34 | 73.22 0.83 | |

| GSS [1] | 22.08 3.51 | 61.77 1.52 | 13.72 2.64 | 56.32 1.84 | 7.49 4.78 | 57.42 1.61 | |

| HAL [11] | 16.74 3.51 | 39.70 2.53 | 11.12 3.80 | 41.75 2.17 | 9.71 2.91 | 55.60 1.83 | |

| DER++ [7] | 38.39 1.57 | 70.74 0.56 | 36.15 1.10 | 73.31 0.78 | 21.65 1.44 | 76.55 0.87 | |

| ERT [8] | 28.82 1.83 | 62.85 0.28 | 23.00 0.58 | 68.26 0.83 | 18.42 1.92 | 73.50 0.82 | |

| RM [3] | 39.47 1.26 | 69.27 0.41 | 32.52 1.53 | 73.51 0.89 | 23.09 1.72 | 75.06 0.75 | |

| LVT [38] | 44.73 1.19 | 71.54 0.93 | 43.51 1.06 | 76.78 0.71 | 26.75 1.29 | 78.15 0.42 | |

| SER (Ours) | 52.23 0.29 | 78.94 0.23 | 45.17 1.02 | 83.73 0.75 | 34.14 0.53 | 83.69 1.70 | |

4.1 Experimental Setup

Datasets. The proposed method is evaluated on 5 image classification benchmark datasets. The CIFAR100 dataset contains 100 classes and each class has 500 images for training and 100 images for testing. CIFAR10 dataset has 10 classes, each consisting of 5000 training images and 1000 test images. The image size of CIFAR10 and CIFAR100 is . TinyImageNet has 200 classes and it includes 100,000 training images and 10,000 test images in total, and the image size is . Both Permuted MNIST [19] and Rotated MNIST [24] are built upon the MNIST dataset [21], which has 60,000 training images and 10,000 test images, and the image size is . Permuted MNIST applies a random permutation to the pixels and Rotated MNIST rotates the digits by a random angle in the interval . In our experiments, CIFAR10, CIFAR100, and TinyImageNet are used to evaluate the performance of Class-IL and Task-IL. Permuted MNIST and Rotated MNIST are used for Domain-IL.

Baselines. We compare the proposed SER method with three rehearsal-free methods, including oEWC [32], SI [44], and LwF [23]. We also compare SER with multiple rehearsal-based methods: iCaRL [26], ER [27], GEM [24], A-GEM [12], FDR [4], GSS [1], HAL [11], DER++ [7], Co2L [9], ERT [8], RM [3], LVT [38], CLS-ER [2], and TAMiL [5]. Notice that CLS-ER [2] updates three models (a plastic model, a stable model, and the current training model) during training. In contrast, SER only updates the current training model (the old model is frozen). To show the effectiveness of CL methods, we further provide two baselines without CL techniques: SGD (a lower bound) and Joint (an upper bound). The results of baselines are obtained from the published papers or source codes. Besides, the best parameter configurations of baselines are used for comparison.

Metrics. We evaluate the continual learning methods with two metrics: final average accuracy and average forgetting. Let denote the testing accuracy on -th task when the model is trained on -th task, and the final average accuracy on all tasks is computed as:

| (9) |

Besides, the average forgetting on tasks is defined as:

| (10) |

4.2 Implementation Details

Architectures. We employ the same network architectures used in DER++ [7]. For CIFAR10, CIFAR100, and Tiny ImageNet, we adopt a modified ResNet18 without pretraining. For the MNIST dataset, we adopt a fully connected network with two hidden layers each one comprising 100 ReLU units.

Augmentation. For fairness, we use the same data augmentation as in DER++ [7], which applies random crops and horizontal flips to both stream and buffer examples. It is worth mentioning that small data transformations enforce implicit consistency constraints [7].

Training. Following the training strategy in DER++ [7], we employ a Stochastic Gradient Descent (SGD) optimizer in all experiments. For the CIFAR10 dataset, we train the model with 20 epochs, and CIFAR100 with 50 epochs, respectively. For the Tiny ImageNet dataset, we increase the number of epochs to 100. Besides, we only use one epoch for MNIST variants. The learning rate and batch size are the same as in DER++ [7]. In addition, we employ a MultiStepLR to decay the learning rate by parameter 0.1. The milestones are as follows. CIFAR10: [15]; CIFAR100: [35, 45]; TinyImageNet: [70, 90].

Due to the dynamic learning scenarios in continual learning, it is very difficult to adjust the hyper-parameter automatically. Therefore, we follow the previous methods [7, 2, 5] that tune the balancing parameters for each dataset. In our experiments, the balancing parameters are as CIFAR10 (, ), CIFAR100 (, ), and TinyImageNet (, ), P-MNIST and R-MNIST (, ). Other parameter configurations may obtain better performance.

4.3 Comparison with Previous Methods

Evaluation on CIFAR100. We follow the experimental setting in LVT [38] to evaluate the proposed method in Class-IL and Task-IL scenarios. Table 1 reports the performance of each CL method on CIFAR100 with 5, 10, and 20 tasks and each task has disjoint class labels. It can be seen that all the rehearsal-free methods fail to reduce forgetting on the CIFAR100 dataset when compared to SGD (a low-bound). Although LwF seeks consistent predictions with knowledge distillation, without the help of previous samples for replaying, the learned model severely drifts over time. In contrast, by storing a small number of previously seen samples in a memory buffer, rehearsal-based methods can effectively preserve the learned knowledge.

Among the rehearsal-based methods, SER outperforms DER++ by a large margin on all tasks. For example, given a memory buffer with 200 samples for Class-IL, SER outperforms DER++ by 20.5% on 5 tasks and 9.2% on 20 tasks. Besides, for Task-IL with 10 tasks, SER achieves a 16.3% accuracy improvement. Figure 3 and Figure 4 plot the average accuracy of ER, DER++, and SER on CIFAR100 when learning each task. It can be seen that SER substantially improves the accuracy in both Class-IL and Task-IL for each learning stage. By adding a forward consistency loss, DER++ surpasses ER by a noticeable margin in both Class-IL and Task-IL. Moreover, with the help of a forward consistency loss, SER substantially outperforms DER++ on CIFAR100 with different lengths of tasks.

| Buffer | Method | CIFAR10 | TinyImageNet | P-MNIST | R-MNIST | ||

|---|---|---|---|---|---|---|---|

| Class-IL | Task-IL | Class-IL | Task-IL | Domain-IL | Domain-IL | ||

| - | Joint | 92.20 0.15 | 98.31 0.12 | 59.99 0.19 | 82.04 0.10 | 94.33 0.17 | 95.76 0.04 |

| SGD | 19.62 0.05 | 61.02 3.33 | 7.92 0.26 | 18.31 0.68 | 40.70 2.33 | 67.66 8.53 | |

| - | oEWC [32] | 19.49 0.12 | 68.29 3.92 | 7.58 0.10 | 19.20 0.31 | 75.79 2.25 | 77.35 0.04 |

| SI [44] | 19.48 0.17 | 68.05 5.91 | 6.58 0.31 | 36.32 0.13 | 65.86 1.57 | 71.91 8.53 | |

| LwF [23] | 19.61 0.05 | 63.29 2.35 | 8.46 0.22 | 15.85 0.58 | - | - | |

| 200 | ER [27] | 44.79 1.86 | 91.19 0.94 | 8.49 0.16 | 38.17 2.00 | 72.37 0.87 | 85.01 1.90 |

| GEM [24] | 25.54 0.76 | 90.04 0.94 | - | - | 66.93 1.25 | 80.80 1.15 | |

| A-GEM [12] | 20.04 0.34 | 83.88 1.49 | 8.07 0.08 | 22.77 0.03 | 66.42 4.00 | 81.91 0.76 | |

| iCaRL [26] | 49.02 3.20 | 88.99 2.13 | 7.53 0.79 | 28.19 1.47 | - | - | |

| FDR [4] | 30.91 2.74 | 91.01 0.68 | 8.70 0.19 | 40.36 0.68 | 74.77 0.83 | 85.22 3.55 | |

| GSS [1] | 39.07 5.59 | 88.80 2.89 | - | - | 63.72 0.70 | 79.50 0.41 | |

| HAL [11] | 32.36 2.70 | 82.51 3.20 | - | - | 74.15 1.65 | 84.02 0.98 | |

| DER++ [7] | 64.88 1.17 | 91.92 0.60 | 10.96 1.17 | 40.87 1.16 | 83.58 0.59 | 90.43 1.87 | |

| Co2L [9] | 65.57 1.37 | 93.43 0.78 | 13.88 0.40 | 42.37 0.74 | - | - | |

| CLS-ER [2] | 66.19 0.75 | 93.59 0.87 | 23.47 0.80 | 58.41 1.72 | 84.63 0.40 | 92.26 0.18 | |

| TAMiL [5] | 68.84 1.18 | 94.28 0.31 | 20.46 0.40 | 55.44 0.52 | - | - | |

| SER (Ours) | 69.86 1.28 | 94.51 0.61 | 28.50 1.21 | 70.12 0.67 | 82.76 0.62 | 91.78 0.81 | |

| 500 | ER [27] | 57.74 0.27 | 93.61 0.27 | 9.99 0.29 | 48.64 0.46 | 80.60 0.86 | 88.91 1.44 |

| GEM [24] | 26.20 1.26 | 92.16 0.69 | - | - | 76.88 0.52 | 81.15 1.98 | |

| A-GEM [12] | 22.67 0.57 | 89.48 1.45 | 8.06 0.04 | 25.33 0.49 | 67.56 1.28 | 80.31 6.29 | |

| iCaRL [26] | 47.55 3.95 | 88.22 2.62 | 9.38 1.53 | 31.55 3.27 | - | - | |

| FDR [4] | 28.71 3.23 | 93.29 0.59 | 10.54 0.21 | 49.88 0.71 | 83.18 0.53 | 89.67 1.63 | |

| GSS [1] | 49.73 4.78 | 91.02 1.57 | - | - | 76.00 0.87 | 81.58 0.58 | |

| HAL [11] | 41.79 4.46 | 84.54 2.36 | - | - | 80.13 0.49 | 85.00 0.96 | |

| DER++ [7] | 72.70 1.36 | 93.88 0.50 | 19.38 1.41 | 51.91 0.68 | 88.21 0.39 | 92.77 1.05 | |

| Co2L [9] | 74.26 0.77 | 95.90 0.26 | 20.12 0.42 | 53.04 0.69 | - | - | |

| CLS-ER [2] | 75.22 0.71 | 94.35 0.38 | 31.03 0.56 | 61.57 0.63 | 88.30 0.14 | 94.06 0.07 | |

| TAMiL [5] | 74.45 0.27 | 94.61 0.19 | 28.48 1.50 | 65.19 0.82 | - | - | |

| SER (Ours) | 75.67 0.90 | 95.67 0.56 | 33.20 0.84 | 71.53 1.14 | 88.58 0.51 | 92.14 0.63 | |

Even compared to the most recent LVT method in Class-IL, SER surpasses it by a large margin of 8.3% on 5 tasks and 3.0% on 20 tasks when 200 previous samples are stored. Moreover, SER obtains at least 6% accuracy improvement in Task-IL. Furthermore, given a larger memory buffer with 500 samples, it can be observed that SER also obtains consistent accuracy improvement. These results demonstrate that SER can effectively preserve preserves the learned knowledge with a strong experience replay.

Evaluation on CIFAR10. Table 2 shows the performance of each CL method on the CIFAR10 dataset (5 tasks). Similar to the results observed in Table 1, rehearsal-based methods surpass the rehearsal-free methods by a large margin and our SER achieves the best performance among compared algorithms. CIFAR10 is a small dataset that contains 10 classes. When the memory size is 200, the average number of stored samples for each class is 20. In contrast, for the CIFAR100 dataset with 100 classes, there are only 2 samples for each class. Therefore, by storing more samples for each class, the average accuracy on CIFAR10 is much better for all CL approaches.

Compared to DER++, SER further reduces the forgetting issue and boosts performance with the forward consistency loss. For example, SER outperforms DER++ by 5% and 3.7% in Class-IL when the memory size is 200 and 500, respectively. Notice that CLS-ER is a dual memory experience replay architecture, which computes a consistency loss on the data stored in the memory buffer and maintains short-term and long-term semantic memories with episodic memory. By contrast, our SER incorporates a forward consistency loss on current training data only. Compared to CLS-ER on CIFAR10, SER improves the accuracy by 3.7% when the memory size is 200. Furthermore, although SER does not employ the task identities during model training, it slightly outperforms the most recent method TAMiL by 1%.

Evaluation on TinyImageNet. In Table 2, the TinyImageNet data is divided into 10 tasks and each task has 20 classes. TinyImageNet is more challenging than CIFAR10 and CIFAR100 for CL when a few previous samples are available. As the results reported in Table 2, given a limited memory buffer with a buffer size of 200, which indicates that there is only 1 sample for each class on average. Compared to the low-bound baseline SGD (7.92% in Class-IL), all rehearsal-free methods and most rehearsal-based methods cannot effectively reduce forgetting with a noticeable margin. Because there are a few samples for each class only, it is very difficult to retain the acquired knowledge from limited past experiences. Therefore, these rehearsal-based methods fail to reduce forgetting.

By mimicking the future experiences of the old model on current training data, SER can distill additional knowledge and further reduce forgetting. From Table 2, we can observe that SER substantially improves the performance on TinyImageNet when compared to most previous CL methods. In particular, the average accuracy of SER is much better than DER++ in Class-IL, i.e. 28.50% vs. 10.96%. For Task-IL, SER significantly outperforms DER++ with an absolute improvement of 29.25%. Compared to another recent method Co2L, SER also obtains 14.6% accuracy improvement in Class-IL and more than 27.7% in Task-IL. Moreover, SER surpasses CLS-ER and TAMiL by a noticeable margin of 5.03% and 8.04% in Class-IL, respectively. These results suggest that SER can effectively preserve the learned knowledge when few samples are available for experience replay.

| Method | CIFAR10 | CIFAR100 | ||

|---|---|---|---|---|

| Class-IL | Task-IL | Class-IL | Task-IL | |

| ER [27] | 54.12 | 5.72 | 83.54 | 23.72 |

| DER++ [7] | 39.84 | 8.25 | 71.02 | 20.64 |

| SER (Ours) | 20.25 | 2.52 | 60.61 | 13.61 |

Evaluation on P-MNIST and R-MNIST. To evaluate the performance of the proposed method in Domain-IL, we follow the experimental settings in DER++[7], which adopts 20 tasks for Permuted MNIST (P-MNIST) and Rotated MNIST (R-MNIST). As the average accuracy reported in Table 2, based on a memory buffer with 500 samples, most rehearsal-based methods outperform the rehearsal-free methods (except A-GEM) on both two datasets. However, by reducing the memory size to 200, the performance of ER is slightly worse than oEWC on P-MNIST. In contrast, DER++ and SER are also effective in Domain-IL by seeking consistent predictions. Besides, CLS-ER achieves the best performance on P-MNIST and R-MNIST and it is comparable to SER and DER++ on both two datasets.

Based on these observations, it can be concluded that the proposed forward consistency is more effective in Class-IL and Task-IL than Domain-IL. The reason is that the category spaces in Class-IL and Task-IL are disjoint while it is the same in Domain-IL. Therefore, seeking consistency with the current training data may overwrite the previous knowledge and it does not further mitigate forgetting.

Average Forgetting. Besides average accuracy, forgetting is another important metric to measure the performance of a CL method. Table 3 shows the average forgetting of ER, DER++, and our SER. It can be observed that SER achieves the lowest forgetting in all the comparison experiments, including both Class-IL and Task-IL on two datasets. These results indicate that SER effectively reduces the forgetting issue, verifying the effectiveness of our proposed forward consistency loss.

| Loss | CIFAR10 | CIFAR100 | |||||

|---|---|---|---|---|---|---|---|

| Class-IL | Task-IL | Class-IL | Task-IL | ||||

| ✓ | 19.26 | 61.02 | 4.73 | 40.83 | |||

| ✓ | ✓ | 44.79 | 91.19 | 9.90 | 70.82 | ||

| ✓ | ✓ | ✓ | 64.88 | 91.92 | 15.16 | 71.28 | |

| ✓ | ✓ | ✓ | 69.04 | 95.79 | 19.50 | 79.82 | |

| ✓ | ✓ | ✓ | ✓ | 69.86 | 95.51 | 24.35 | 79.86 |

4.4 Ablation Study

To further analyze the effectiveness of each loss in our method, we report the ablation study results in Table 4. Without storing any previous samples, simply finetuning the model with on current training data nearly forgets all previous knowledge in Class-IL, which is only 19.26% on CIFAR10 and 4.73% on CIFAR100. By replaying a small number of previous samples, ER effectively reduces the forgetting issue on both two datasets with . Besides, based on the backward consistency loss , DER++ further reduces forgetting by seeking consistency predictions on the data stored in the memory buffer. Furthermore, fusing two classification losses and two consistency losses, i.e. , SER achieves the best performance on all the comparison experiments.

It is worth mentioning that simply extending ER with a forward consistency loss alone also surpasses DER++. For example, from the results on CIFAR10 reported in Table 4, the average accuracy is improved from 64.88% to 69.04% on Class-IL and 91.92% to 95.79% on Task-IL. These observations indicate that seeking consistent predictions on current training data can also help to preserve the acquired knowledge. Moreover, the forward consistency can be computed on the complete training data and it can propagate the consistent distributions to new tasks over time. Therefore, the forward consistency loss demonstrates better performance when a small memory buffer is given.

4.5 Stability-Plasticity Dilemma

To better understand how well different CL methods strike a balance between stability and plasticity, we report the performance of several CL methods after sequentially learning 5 tasks on the CIFAR10 dataset in Figure 5. In terms of average accuracy, DER++, CLS-ER, and SER outperform ER with the help of consistency loss, showing the effectiveness of stability. On the other hand, ER achieves better accuracy on the current training data, and it outperforms other methods on plasticity. Based on the proposed forward consistency, SER improves the model generalization on previous tasks and it maintains a better balance. Compared to DER++ and CLS-ER, SER preserves more knowledge of previous tasks and it also achieves good performance on the recent task. Therefore, SER outperforms the compared methods with the metric of average accuracy, verifying its effectiveness.

4.6 Computational Cost Analysis

Based on the same backbone, the computational cost of different CL methods relies on the efficiency of model training. Since we adopt the less or the same training epochs as the baseline methods [7, 2], we mainly analyze the computational cost by comparing the model design. As illustrated in Algorithm 1, besides the basic model optimization on the current training data, we draw one batch of samples from the memory buffer for both the experience replay and backward consistency at the same time. In contrast, DER++ [7] draws two batches of samples from the memory buffer, where one batch of samples is for the experience replay while the other is for backward consistency. Therefore, although we incorporate a forward consistency loss by computing the output logits of the old model on the current training data, SER does not increase the computational cost when compared to DER++. By contrast, CLS-ER [2] adopts a working model for experience replay, and it additionally computes the consistency with the memory buffer by a stable model and a plastic model. Compared to the computational cost of CLS-ER, the proposed SER method is more efficient.

4.7 Discussion

The proposed SER method mixes the experience replay and model regularization in CL. Compared to the previous rehearsal-based methods, we additionally design a forward consistency loss to improve the model generalization on previous tasks. Since forward consistency can leverage more data to reduce drastic model changes during training and propagate the past logit distributions to new tasks over time, the forgetting issue is effectively reduced. Moreover, the proposed method is easy to implement, and its computational cost is not expensive when compared to the state-of-the-art methods, e.g., DER++ and CLS-ER. Therefore, the proposed SER method is simple and effective. We hope it can improve the incremental learning ability of other deep models in the future.

5 Conclusion

We propose a Strong Experience Replay (SER) that distills the learned knowledge from both past and future experiences. Besides memorizing the ground truth labels of previous samples stored in the memory buffer, SER also seeks consistent predictions between the new model and the old one on the data from both the memory buffer and the input stream. By imposing a backward and a forward consistency loss into model training, SER effectively reduces the forgetting issue when a few samples are available. Extensive experiments demonstrate that SER outperforms state-of-the-art methods by a large margin. Moreover, ablation study results on two datasets show the effectiveness of the proposed forward consistency.

Acknowledgments This research is supported by the National Natural Science Foundation of China under Grant 62002188, and the Shandong Excellent Young Scientists Fund Program (Overseas) 2023HWYQ-114.

Data Availability All data sets used in this article are publicly available. Data sharing does not apply to this article as no new data sets were generated during the current study.

References

- [1] Rahaf Aljundi, Min Lin, Baptiste Goujaud, and Yoshua Bengio. Gradient based sample selection for online continual learning. In Adv. Neural Inform. Process. Syst. (NeurIPS), 2019.

- [2] Elahe Arani, Fahad Sarfraz, and Bahram Zonooz. Learning fast, learning slow: A general continual learning method based on complementary learning system. In Int. Conf. Learn. Represent. (ICLR), 2022.

- [3] Jihwan Bang, Heesu Kim, YoungJoon Yoo, Jung-Woo Ha, and Jonghyun Choi. Rainbow memory: Continual learning with a memory of diverse samples. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 8218–8227, 2021.

- [4] Ari S Benjamin, David Rolnick, and Konrad Kording. Measuring and regularizing networks in function space. In Int. Conf. Learn. Represent. (ICLR), 2019.

- [5] Prashant Bhat, Bahram Zonooz, and Elahe Arani. Task-aware information routing from common representation space in lifelong learning. In Int. Conf. Learn. Represent. (ICLR), 2023.

- [6] Matteo Boschini, Lorenzo Bonicelli, Pietro Buzzega, Angelo Porrello, and Simone Calderara. Class-incremental continual learning into the extended der-verse. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI), 2022.

- [7] Pietro Buzzega, Matteo Boschini, Angelo Porrello, Davide Abati, and Simone Calderara. Dark experience for general continual learning: a strong, simple baseline. Adv. Neural Inform. Process. Syst. (NeurIPS), 33:15920–15930, 2020.

- [8] Pietro Buzzega, Matteo Boschini, Angelo Porrello, and Simone Calderara. Rethinking experience replay: a bag of tricks for continual learning. In Int. Conf. Pattern Recog. (ICPR), 2021.

- [9] Hyuntak Cha, Jaeho Lee, and Jinwoo Shin. Co2l: Contrastive continual learning. In Proc. IEEE Int. Conf. Comput. Vis. (ICCV), pages 9516–9525, 2021.

- [10] Arslan Chaudhry, Puneet K Dokania, Thalaiyasingam Ajanthan, and Philip HS Torr. Riemannian walk for incremental learning: Understanding forgetting and intransigence. In Proc. Eur. Conf. Comput. Vis. (ECCV), pages 532–547, 2018.

- [11] Arslan Chaudhry, Albert Gordo, Puneet Dokania, Philip Torr, and David Lopez-Paz. Using hindsight to anchor past knowledge in continual learning. In AAAI, 2021.

- [12] Arslan Chaudhry, Marc’Aurelio Ranzato, Marcus Rohrbach, and Mohamed Elhoseiny. Efficient lifelong learning with a-gem. In Int. Conf. Learn. Represent. (ICLR), 2019.

- [13] Ian Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, and Yoshua Bengio. Generative adversarial nets. Adv. Neural Inform. Process. Syst. (NeurIPS), 2014.

- [14] Tyler L. Hayes, Kushal Kafle, Robik Shrestha, Manoj Acharya, and Christopher Kanan. REMIND your neural network to prevent catastrophic forgetting. In Proc. Eur. Conf. Comput. Vis. (ECCV). Springer, 2020.

- [15] Geoffrey Hinton, Oriol Vinyals, and Jeff Dean. Distilling the knowledge in a neural network. arXiv preprint arXiv:1503.02531, 2015.

- [16] Ahmet Iscen, Jeffrey Zhang, Svetlana Lazebnik, and Cordelia Schmid. Memory-efficient incremental learning through feature adaptation. In Proc. Eur. Conf. Comput. Vis. (ECCV). Springer, 2020.

- [17] Zhong Ji, Jin Li, Qiang Wang, and Zhongfei Zhang. Complementary calibration: Boosting general continual learning with collaborative distillation and self-supervision. IEEE Trans. Image Process (TIP), 2022.

- [18] Dongwan Kim and Bohyung Han. On the stability-plasticity dilemma of class-incremental learning. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 20196–20204, 2023.

- [19] James Kirkpatrick, Razvan Pascanu, Neil Rabinowitz, Joel Veness, Guillaume Desjardins, Andrei A Rusu, Kieran Milan, John Quan, Tiago Ramalho, Agnieszka Grabska-Barwinska, et al. Overcoming catastrophic forgetting in neural networks. Proceedings of the national academy of sciences, 114(13):3521–3526, 2017.

- [20] Jeremias Knoblauch, Hisham Husain, and Tom Diethe. Optimal continual learning has perfect memory and is np-hard. In Proc. Int. Conf. Mach. Learning (ICML), pages 5327–5337. PMLR, 2020.

- [21] Yann LeCun, Léon Bottou, Yoshua Bengio, and Patrick Haffner. Gradient-based learning applied to document recognition. Proceedings of the IEEE, 1998.

- [22] Kibok Lee, Kimin Lee, Jinwoo Shin, and Honglak Lee. Overcoming catastrophic forgetting with unlabeled data in the wild. In Proc. IEEE Int. Conf. Comput. Vis. (ICCV), pages 312–321, 2019.

- [23] Zhizhong Li and Derek Hoiem. Learning without forgetting. IEEE Trans. Pattern Anal. Mach. Intell. (TPAMI), 40(12):2935–2947, 2017.

- [24] David Lopez-Paz and Marc’Aurelio Ranzato. Gradient episodic memory for continual learning. Adv. Neural Inform. Process. Syst. (NeurIPS), 30, 2017.

- [25] Zheda Mai, Ruiwen Li, Jihwan Jeong, David Quispe, Hyunwoo Kim, and Scott Sanner. Online continual learning in image classification: An empirical survey. Neurocomputing, 469:28–51, 2022.

- [26] Sylvestre-Alvise Rebuffi, Alexander Kolesnikov, Georg Sperl, and Christoph H Lampert. icarl: Incremental classifier and representation learning. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 2001–2010, 2017.

- [27] Matthew Riemer, Ignacio Cases, Robert Ajemian, Miao Liu, Irina Rish, Yuhai Tu, and Gerald Tesauro. Learning to learn without forgetting by maximizing transfer and minimizing interference. In Int. Conf. Learn. Represent. (ICLR), 2019.

- [28] Anthony Robins. Catastrophic forgetting, rehearsal and pseudorehearsal. Connection Science, 7(2):123–146, 1995.

- [29] David Rolnick, Arun Ahuja, Jonathan Schwarz, Timothy Lillicrap, and Gregory Wayne. Experience replay for continual learning. Adv. Neural Inform. Process. Syst. (NeurIPS), 32, 2019.

- [30] Andrei A Rusu, Neil C Rabinowitz, Guillaume Desjardins, Hubert Soyer, James Kirkpatrick, Koray Kavukcuoglu, Razvan Pascanu, and Raia Hadsell. Progressive neural networks. arXiv preprint arXiv:1606.04671, 2016.

- [31] Fahad Sarfraz, Elahe Arani, and Bahram Zonooz. Sparse coding in a dual memory system for lifelong learning. In AAAI, 2023.

- [32] Jonathan Schwarz, Wojciech Czarnecki, Jelena Luketina, Agnieszka Grabska-Barwinska, Yee Whye Teh, Razvan Pascanu, and Raia Hadsell. Progress & compress: A scalable framework for continual learning. In Proc. Int. Conf. Mach. Learning (ICML), pages 4528–4537, 2018.

- [33] Hanul Shin, Jung Kwon Lee, Jaehong Kim, and Jiwon Kim. Continual learning with deep generative replay. Adv. Neural Inform. Process. Syst. (NeurIPS), 2017.

- [34] James Smith, Yen-Chang Hsu, Jonathan Balloch, Yilin Shen, Hongxia Jin, and Zsolt Kira. Always be dreaming: A new approach for data-free class-incremental learning. In Proc. IEEE Int. Conf. Comput. Vis. (ICCV), pages 9374–9384, 2021.

- [35] Eli Verwimp, Matthias De Lange, and Tinne Tuytelaars. Rehearsal revealed: The limits and merits of revisiting samples in continual learning. In Proc. IEEE Int. Conf. Comput. Vis. (ICCV), pages 9385–9394, 2021.

- [36] Jeffrey S Vitter. Random sampling with a reservoir. ACM Transactions on Mathematical Software (TOMS), 11(1):37–57, 1985.

- [37] Fu-Yun Wang, Da-Wei Zhou, Han-Jia Ye, and De-Chuan Zhan. Foster: Feature boosting and compression for class-incremental learning. In Proc. Eur. Conf. Comput. Vis. (ECCV). Springer, 2022.

- [38] Zhen Wang, Liu Liu, Yiqun Duan, Yajing Kong, and Dacheng Tao. Continual learning with lifelong vision transformer. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 171–181, 2022.

- [39] Zhen Wang, Liu Liu, Yiqun Duan, and Dacheng Tao. Continual learning through retrieval and imagination. In AAAI, volume 36, pages 8594–8602, 2022.

- [40] Zifeng Wang, Zizhao Zhang, Sayna Ebrahimi, Ruoxi Sun, Han Zhang, Chen-Yu Lee, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer Dy, et al. Dualprompt: Complementary prompting for rehearsal-free continual learning. In Proc. Eur. Conf. Comput. Vis. (ECCV), pages 631–648. Springer, 2022.

- [41] Zifeng Wang, Zizhao Zhang, Chen-Yu Lee, Han Zhang, Ruoxi Sun, Xiaoqi Ren, Guolong Su, Vincent Perot, Jennifer Dy, and Tomas Pfister. Learning to prompt for continual learning. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 139–149, 2022.

- [42] Shipeng Yan, Jiangwei Xie, and Xuming He. Der: Dynamically expandable representation for class incremental learning. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), 2021.

- [43] Jaehong Yoon, Divyam Madaan, Eunho Yang, and Sung Ju Hwang. Online coreset selection for rehearsal-based continual learning. Int. Conf. Learn. Represent. (ICLR), 2022.

- [44] Friedemann Zenke, Ben Poole, and Surya Ganguli. Continual learning through synaptic intelligence. In Proc. Int. Conf. Mach. Learning (ICML), 2017.

- [45] Junting Zhang, Jie Zhang, Shalini Ghosh, Dawei Li, Serafettin Tasci, Larry Heck, Heming Zhang, and C-C Jay Kuo. Class-incremental learning via deep model consolidation. In Winter Conference on Applications of Computer Vision (WACV), pages 1131–1140, 2020.

- [46] Fei Zhu, Xu-Yao Zhang, Chuang Wang, Fei Yin, and Cheng-Lin Liu. Prototype augmentation and self-supervision for incremental learning. In Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), pages 5871–5880, 2021.