Containing Analog Data Deluge at Edge through Frequency-Domain Compression in Collaborative Compute-in-Memory Networks

Abstract

Edge computing is a promising solution for handling high-dimensional, multispectral analog data from sensors and IoT devices for applications such as autonomous drones. However, edge devices’ limited storage and computing resources make it challenging to perform complex predictive modeling at the edge. Compute-in-memory (CiM) has emerged as a principal paradigm to minimize energy for deep learning-based inference at the edge. Nevertheless, integrating storage and processing complicates memory cells and/or memory peripherals, essentially trading off area efficiency for energy efficiency. This paper proposes a novel solution to improve area efficiency in deep learning inference tasks. The proposed method employs two key strategies. Firstly, a Frequency domain learning approach uses binarized Walsh-Hadamard Transforms, reducing the necessary parameters for DNN (by 87% in MobileNetV2) and enabling compute-in-SRAM, which better utilizes parallelism during inference. Secondly, a memory-immersed collaborative digitization method is described among CiM arrays to reduce the area overheads of conventional ADCs. This facilitates more CiM arrays in limited footprint designs, leading to better parallelism and reduced external memory accesses. Different networking configurations are explored, where Flash, SA, and their hybrid digitization steps can be implemented using the memory-immersed scheme. The results are demonstrated using a 65 nm CMOS test chip, exhibiting significant area and energy savings compared to a 40 nm-node 5-bit SAR ADC and 5-bit Flash ADC. By processing analog data more efficiently, it is possible to selectively retain valuable data from sensors and alleviate the challenges posed by the analog data deluge.

Index Terms:

Analog Data Deluge; Compute-in-SRAM; deep neural network; frequency transforms; low power computing

I Introduction

The advent of deep learning and its application in critical domains such as healthcare, finance, security, and autonomous vehicles has led to a data deluge, necessitating efficient computational strategies [1, 2, 3]. Deep neural networks (DNNs), which are increasingly deployed at the network’s edge, are particularly challenging due to their complexity and the limited computing and storage resources at the edge [4]. This work presents novel techniques to address these challenges, focusing on the compute-in-memory (CiM) processing of DNNs and frequency-domain model compression.

CiM integrates model storage and computations, reducing significant data movements between intermediate memory hierarchy and processing modules that hinder the performance of conventional digital architectures for DNNs. Traditional memory structures such as SRAM [5], RRAM [6], and embedded-DRAM [7, 8] can be adapted for CiM, making it an attractive scheme for cost-effective adoption in various systems-on-chip (SOC). CiM schemes leverage analog representations of operands to simplify their summation over a wire by exploiting Kirchhoff’s law, thereby minimizing the necessary workload and processing elements [9, 10, 11, 12, 13].

However, the analog computations in CiM present significant implementation challenges, particularly the need for digital-to-analog converters (DAC) and analog-to-digital converters (ADC) to operate on digital inputs and digitize the analog output for routing and storage. This work proposes a novel memory-immersed digitization that can preclude a dedicated ADC and its associated area overhead, Fig. 1(b) The proposed scheme uses parasitic bit-lines of memory arrays to form within-memory capacitive DAC, and neighboring memory arrays collaborate for resource-efficient digitization [14]. Although [13] similarly explored memory-immersed digitization, the presented results were based on simulations only, and only SA functionality was shown. Meanwhile, in this work, the proposed techniques are characterized on a test chip designed in 65 nm CMOS technology. We demonstrate 5-bit memory-immersed ADC operation using a network of 1632 compute-in-SRAM arrays. Compared to a 40 nm-node 5-bit SAR ADC, our 65 nm design requires 25 less area and 1.4 less energy by leveraging in-memory computing structures. Compared to a 40 nm-node 5-bit Flash ADC, our design requires 51 less area and 13 less energy.

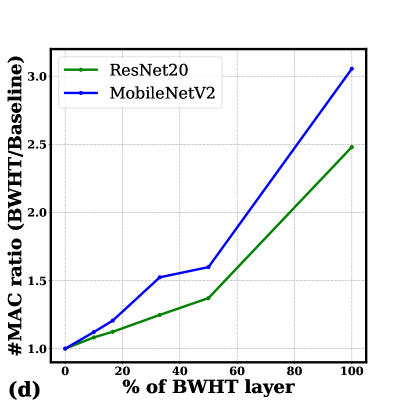

On the other hand, frequency-domain model compression, an efficient alternative to traditional model pruning techniques, leverages fast algorithms for transforms such as the discrete cosine transform (DCT) or discrete Fourier transform (DFT) to identify and remove redundant or uncorrelated information in the frequency domain [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26]. This work focuses on Walsh-Hadamard transform (WHT)-based model compression, which can reduce model size with limited accuracy loss, shown in Fig. 1(c). However, as demonstrated in Fig. 1(d) it also introduces a notable increase in the required multiply-accumulate (MAC) operations, offsetting the benefits of model size reduction. To address this challenge, this work presents a novel analog acceleration approach[27].

These techniques and architectures present a promising solution for sustainable edge computing, effectively addressing the challenges posed by the analog data deluge [5, 6, 7, 8, 28, 29, 9, 10, 13]. By improving area efficiency in deep learning inference tasks, reducing energy consumption, and leveraging output sparsity for efficient computation, these techniques enable better handling of high-dimensional, multispectral analog data [15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26]. This makes them a promising solution for sustainable data processing at the edge, paving the way for the next generation of deep learning applications in scenarios where area and power resources are limited [1, 3, 4].

-

•

Firstly, we introduce a Compute-In-Memory (CIM) architecture that capitalizes on Blockwise Walsh-Hadamard Transform (BWHT) and soft-thresholding techniques to compress deep neural networks effectively. This innovative CIM design enables computation in just two clock cycles at a speed of 4 GHz, a feat made possible by implementing full parallelism. Notably, our design eliminates the need for ADCs or DACs. Furthermore, we propose an early termination strategy that exploits output sparsity, thereby reducing both computation time and energy consumption.

-

•

Secondly, in scenarios where the inclusion of ADCs in our design becomes necessary, we present a memory-immersed collaborative digitization technique. This method significantly reduces the area overheads typically associated with conventional ADCs, thus facilitating the efficient digitization of large data volumes. This technique not only optimizes the use of space but also enhances the system’s overall efficiency by streamlining the conversion process. It ensures that even with the inclusion of ADCs, the system maintains high performance while handling substantial data volumes.

Section II provides the essential background information required to understand the techniques proposed in this paper. Section III delves into the proposed design for a CMOS-based in-memory frequency-domain transform. In Section IV, we elaborate on the proposed memory-immersed collaborative digitization technique. Section V is dedicated to discussing the sustainability aspects of our design. Finally, Section VI concludes the paper, summarizing the key points and findings.

II Background and Related Works

II-A Walsh-Hadamard Transform (WHT)

The Walsh-Hadamard Transform (WHT) is akin to the Fast Fourier Transform (FFT) as both can transmute convolutions in the time or spatial domain into multiplications in the frequency domain. A distinguishing feature of WHT is that its transform matrix consists solely of binary values (-1 and 1), eliminating the need for multiplications, thereby enhancing efficiency.

Given as the vector in the time-domain and WHT-domain, respectively, where m is an integer power of 2 (), the WHT can be expressed as:

| (1) |

Here, is a Walsh matrix. The Hadamard matrix for WHT of is constructed as follows:

| (2) |

The Hadamard matrix is then rearranged to increase the sign change order, resulting in the Walsh matrix. This matrix exhibits the unique property of orthogonality, where every row of the matrix is orthogonal to each other, with the dot product of any two rows being zero. This property makes the Walsh matrix particularly advantageous in a wide range of signal and image processing applications [30].

However, WHT poses a computational challenge when the dimension of the input vector is not a power of two. To address this, a technique called blockwise Walsh-Hadamard transforms (BWHT) was introduced [31]. BWHT divides the transform matrix into multiple blocks, each sized to an integer power of two, significantly reducing the worst-case size of operating tensors and mitigating excessive zero-padding.

II-B Frequency-Domain Compression of Deep Neural Networks

Frequency-domain transformations, such as BWHT, can be incorporated into deep learning architectures for model compression. For instance, in MobileNetV2, which uses convolutions in its bottleneck layers to reduce computational complexity, BWHT can replace these convolution layers, achieving similar accuracy with fewer parameters. Unlike convolution layers, BWHT-based binary layers use fixed Walsh-Hadamard matrices, eliminating trainable parameters. Instead, a soft-thresholding activation function with a trainable parameter can be used to selectively attend to important frequency bands. The activation function for this frequency-domain compression is given as

| (3) |

Similarly, in ResNet20, convolutions can be replaced with BWHT layers. These transformations maintain matching accuracy while achieving significant compression on benchmark datasets such as CIFAR-10, CIFAR-100, and ImageNet [31]. However, BWHT transforms also increase the necessary computations for deep networks. The subsequent section discusses how micro-architectures and circuits can enhance the computational efficiency of BWHT-based tensor transformations.

III Analog-Domain Frequency Transforms

The escalating data deluge in the digital world has brought analog domain processing to the forefront as a potential solution for accelerating vector and matrix-level parallel instructions, particularly for low-precision computations such as matrix-vector products in DNNs. Analog computations, by their nature, simplify processing cells and allow for the integration of storage and computation within a single cell. This feature is prevalent in many compute-in-memory designs. This integration significantly mitigates the data deluge by reducing data movement during deep learning computations, a critical bottleneck for energy and performance in traditional processors [32].

However, analog domain processing does not resolve the data deluge challenge entirely due to its reliance on ADCs and DACs for domain conversions of operands. The ADC and DAC operations introduce design complexities, significant area/power overheads, and limitations in technology scalability, all contributing to the data deluge. Furthermore, the performance of ADCs and DACs is constrained by speed, power consumption, and cost, further limiting the overall capabilities of analog domain computations. In the subsequent discussion, we present our proposed techniques for analog domain processing of frequency operations. These aim to alleviate the data deluge by eliminating the need for ADC/DAC conversions, even when operating on digital input vectors and computing output vectors in the digital domain. Our approach incorporates bitplane-wise input vector processing and co-designs learning methods that can operate accurately under extreme quantization, enabling ADC/DAC-free operations.

III-A Crossbar Microarchitecture Design and Operation

In Fig. 2, we propose a design that leverages analog computations for frequency domain processing of neural networks to address the data deluge. The design’s crossbar combines six transistors (6T) NMOS-based cells for analog-domain frequency transformation. The corresponding cells for ‘-1’ and ‘1’ entries in the Walsh-Hadamard transform matrix are shown to the figure’s right. The crossbar combines these cells according to the elements in the transform matrix. Since the transform matrix is parameter-free, computing cells in the proposed design are simpler by being based only on NMOS for a lower area than conventional 6T or 8T SRAM-based compute-in-memory designs. Additionally, processing cells in the proposed crossbar are stitchable along rows and columns to enable perfect parallelism and extreme throughput, mitigating the data deluge further.

The operation of the crossbar comprises four steps, which are marked in Fig. 2. These steps are designed to minimize data movement and thus address the data deluge. The steps include precharging, independent local computations, row-wise summing, and single-bit output generation.

Fig. 3 shows the signal flow diagram for the above four steps. The 16 nm predictive technology models (PTM) simulation results are shown using the low standby power (LSTP) library in [33] and operating the system at 4 GHz clock frequency and VDD = 0.85V. Row-merge and column-merge signals in the design are boosted at 1.25V to avoid source degeneration. Unlike comparable compute-in-memory designs such as [12], which place product computations on bit lines, in our design, these computations are placed on local nodes in parallel at all array cells. This improves parallelism, energy efficiency, and performance by computing on significantly less capacitive local nodes than bit lines in traditional designs, thereby further addressing the data deluge.

III-B ADC-Free by Training against 1-bit Quantization

Fig. 4 presents the high-level operation flow of our scheme for processing multi-bit digital input vectors and generating the corresponding multi-bit digital output vector using the analog crossbar illustrated in Fig. 2. This scheme is designed to address the data deluge by eliminating the need for ADC and DAC operations. The scheme utilizes bitplane-wise processing of the multi-bit digital input vector and is trained to operate effectively with extreme quantization. In the figure, the input vector’s elements with the same significance bits are grouped and processed in a single step using the scheme described in Fig. 2, which spans two clock cycles. The analog charge-represented output is computed along the row-wise charge sum lines and thresholded to generate the corresponding digital bits. This extreme quantization approach is applied to the computed MAC output, eliminating the need for ADCs. With multiple input bitplanes, labeled as - - - in the figure, the corresponding output bitplanes are concatenated to form the final multi-bit output vector.

Due to the extreme quantization applied to the analog output in the above scheme, the resulting digital output vector only approximates the true output vector under transformation. However, unlike a standard DNN weight matrix, the transformation matrix used in our frequency domain processing is parameter-free. This characteristic enables training the system to effectively mitigate the impact of the approximation while allowing for a significantly simpler implementation without needing ADCs or DACs, thereby further addressing the data deluge. The following training methodology achieves this.

Consider the frequency-domain processing of an input vector . In Fig. 4, we process by transforming it to the frequency domain, followed by parameterized thresholding, and then reverting the output to the spatial domain. Consider a DNN with layers that chain the above sequence of operations as . Here, is a parameter-free approximate frequency transformation as followed in our scheme in Fig. 4. is a parameterized thresholding function at the ith layer whose parameters are learned from the training data.

III-C Early Termination for Energy Efficiency

To further mitigate the data deluge, we introduce an early termination mechanism in our design. This mechanism is based on the observation that the contribution of higher bitplanes in the input vector to the final output is significantly less than the lower bitplanes due to the binary representation of numbers. Therefore, we can terminate the computation early if the higher bitplanes do not significantly affect the final output, thus saving energy and reducing data movement.

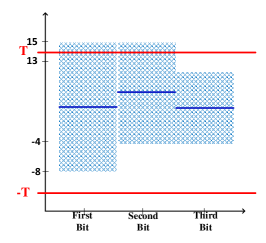

We illustrate the early termination mechanism in Fig. 6. The mechanism involves a thresholding operation that compares the partial sum of the output bitplanes with a predefined threshold. If the partial sum is less than the threshold, the computation is terminated early, and the remaining higher bitplanes are ignored. This mechanism reduces the number of computations and data movement, thereby mitigating the data deluge. The threshold for early termination is a design parameter that can be tuned based on the accuracy-energy trade-off. A lower threshold leads to more frequent early terminations, saving more energy but potentially reducing the accuracy, and vice versa. Therefore, the threshold should be carefully chosen to balance the trade-off between energy efficiency and accuracy.

Our proposed techniques for analog domain processing of frequency operations, including bitplane-wise input vector processing, ADC/DAC-free operations, and early termination mechanism, effectively address the data deluge by reducing data movement and computational complexity. These techniques also maintain high accuracy and energy efficiency, making them promising for future low-precision computations in deep neural networks. Fig. 7 shows our simulation results supporting the claim.

IV Memory-Immersed Collaborative Digitization

IV-A Integrating CiM Arrays for Collaborative Digitization

Fig. 1(b) illustrates the implementation of memory-integrated ADC. We specifically focus on our methods and results for eight-transistor (8T) compute-in-SRAM arrays, widely utilized in numerous platforms. Unlike 6T compute-in-SRAM, 8T cells are less prone to bit disturbances due to separate write and inference ports, making them more suitable for technology scaling. However, our suggestions for memory-integrated ADC apply to other memory types, including 10-T compute-in-SRAM/eDRAM and non-volatile memory crossbars.

In the proposed model, two adjacent CiM arrays work together for in-memory digitization, as depicted in Figure 8(a). When the left array calculates the input-weight scalar product, the right array performs SRAM-integrated digitization on the produced analog-mode multiply-average (MAV) outputs. Both arrays then switch their operating modes. Each array consists of 8-T cells, combining standard 6-T SRAM cells with a two-transistor weight-input product port shown in the figure. Memory cells for input-weight products are accessed using three control signals.

For in-memory digitization of charge-domain product-sum computed in the left array, column lines (CLs) in the right array realize the unit capacitors of a capacitive DAC formed within the memory array. A precharge transistor array is integrated with the column lines to generate the reference voltages. The first reference voltage is generated by summing the charges of all column lines. The developed MAV voltage in the left CiM array is compared to the first reference voltage to determine the most significant bit of the digitized output. The next precharge state of memory-immersed capacitive DAC is determined, and the precharge and comparison cycles continue until the MAV voltage has been digitized to necessary precision.

Using neighboring CiM arrays for the first reference voltage generation for in-memory digitization offers several key advantages. First, various non-idealities in analog-mode MAV computation become common-mode due to using an identical array for the first reference voltage generation. Thus, the non-idealities only minimally impact the accuracy of digitization. Second, collaborative digitization minimizes peripheral overheads. Only an analog comparator and simple modification in the precharge array are sufficient to realize a successive approximation search.

Compared to traditional CiM approaches with a dedicated ADC at each array, our scheme’s interleaving of scalar product computation and digitization cycles affects the achievable throughput. However, with simplified low-area peripherals, more CiM arrays can be accommodated than prior works employing dedicated ADCs. Therefore, our scheme compensates for the overall throughput at the system level by operating many parallel CiM arrays. This improved area efficiency of CiM arrays in our scheme minimizes the necessary exchanges from off-chip DRAMs to on-chip structures in mapping large DNN layers, a significant energy overhead in conventional techniques.

IV-B Hybrid SRAM-Integrated Flash and SAR ADC Operation

In addition to the nearest neighbor networking in Figure 8, more complex CiM networks can also be orchestrated for more time-efficient collaborative digitization in Flash and/or hybrid SAR + Flash mode. Figure 9 shows an example networking scheme where Array-1 couples with three memory arrays to the right for collaborative digitization in Flash mode. Here, the three right arrays simultaneously generate the respective reference voltages for the Flash mode of digitization and to determine the first two most significant bits in one comparison cycle.

IV-C Leveraging MAV Statistics for ADC’s Time-Efficiency

The hybrid scheme for data conversion further benefits from exploiting the statistics of the multiply average (MAV) computed by the CiM. In many CiM schemes, the computed MAV is not necessarily uniformly distributed. For example, in bit-plane-wise CiM processing, DACs are avoided by processing a one-bit input plane in one time step. This results in a skewed distribution of MAV, Fig. 10(a). The skewed distribution of MAV can be leveraged by implementing an asymmetric binary search for digitization, Fig. 10(b). Under the asymmetric search, the average number of comparisons reduces, thus proportionally reducing the energy and latency for the operation, Fig. 10(c). The proposed hybrid digitization scheme further exploits the asymmetric search, which can be accelerated by Flash digitization mode.

IV-D Test-Chip Design, Measurement Results, and Comparison to Traditional ADC

A 65 nm CMOS test chip characterized the proposed SRAM-integrated ADC. The fabricated chip’s micrograph and measurement setup are shown in Figures 11(a, b). Four compute-in-SRAM arrays of size 16x32 were implemented. The coupling of CiM arrays can also be programmed to realize hybrid Flash-SAR ADC operations, such as obtaining the two most significant bits in Flash mode and the remaining in SAR.

Figure 11(c) shows the transient waveforms of different control signals and comparator outputs, showing a hybrid Flash + SAR ADC operation. Flash mode is activated in the first comparison cycle where CiM arrays generate the corresponding reference voltages, and the first two bits of MAV digitization are extracted. Subsequently, the operation switches to SAR mode, where the remaining digitization bits are obtained by engaging one array alone with another. In the last four cycles, other arrays become free to similarly operate on a proximal CiM array to digitize MAV in SAR mode. Figure 12(a) shows the measured staircase plot of input voltage to output codes and the comparison to an ideal staircase, demonstrating near-ideal performance. This suggests that the proposed SRAM-integrated ADC operates effectively, with the hybrid Flash + SAR ADC operation providing a flexible and efficient approach to digitization within the memory array.

Fig. 13 and Table I show the design space exploration of pro- posed memory-immersed ADC compared to other ADC styles. In Fig. 13(a), leveraging in-memory structures for capacitive DAC formation, the proposed in-memory ADC is more area efficient than Flash and SAR styles. Significantly, Flash ADC’s size increases exponentially with increasing bit precision. In Fig. 13(b), SAR ADC’s latency increases with bit precision while Flash ADC can maintain a consistent latency but at the cost of the increasing area as shown in Fig. 13(a). A hybrid data conversion in the proposed in-memory provides a middle ground, i.e., lower latency than in SAR ADC. Figs. 13(c-d) show the impact of supply voltage and frequency scaling on in-memory ADC’s power and accuracy for MNIST character recognition.

In conclusion, the proposed method of integrating compute-in-memory (CiM) arrays for collaborative digitization offers a promising approach to handling the data deluge in modern computing systems. By leveraging the unique properties of 8T compute-in-SRAM arrays, the method provides a scalable and efficient solution for in-memory digitization. The hybrid SRAM-integrated Flash and SAR ADC operation further enhances time efficiency, while the exploitation of MAV statistics contributes to the ADC’s time efficiency. The successful implementation and characterization of the proposed SRAM-integrated ADC on a 65 nm CMOS test chip further validate the effectiveness of this approach.

V Sustainability

The proposed CIM architecture and the associated techniques significantly contribute to sustainability in deep learning applications. The key to this sustainability lies in the efficient use of resources and the reduction of energy consumption.

Firstly, the architecture leverages BWHT and soft-thresholding techniques to compress deep neural networks. This compression reduces the computational resources required, leading to more efficient use of hardware. By reducing the number of parameters in the BWHT layer, the architecture minimizes the memory footprint of deep learning models, reducing the energy required for data storage and retrieval. The early termination strategy enhances energy efficiency by leveraging output sparsity to reduce computation time.

Secondly, the memory-immersed collaborative digitization among CiM arrays minimizes the area overheads of ADCs for deep learning inference. This allows significantly more CiM arrays to be accommodated within limited footprint designs, improving parallelism and minimizing external memory accesses. The results demonstrate the potential of the proposed techniques for area-efficient and energy-efficient deep learning applications.

Lastly, the proposed techniques and architectures are designed to be robust and resilient, reducing the need for frequent hardware replacements or upgrades. This longevity contributes to sustainability by reducing electronic waste and the environmental impact associated with the production and disposal of hardware.

VI Conclusions

The proposed frequency-domain CIM architecture with early termination technique, and memory-immersed collaborative digitization present a comprehensive solution for sustainable and efficient deep learning applications. This leads to more efficient use of hardware and reduces the energy required for data storage and retrieval. The memory-immersed collaborative digitization among CiM arrays minimizes the area overheads of a conventional ADC for deep learning inference. This allows significantly more CiM arrays to be accommodated within limited footprint designs, improving parallelism and minimizing external memory accesses. The results demonstrate the potential of the proposed techniques for area-efficient and energy-efficient deep learning applications.

These techniques contribute significantly to sustainability in deep learning applications. By improving area efficiency in deep learning inference tasks and reducing energy consumption, these techniques contribute to the sustainability of data processing at the edge. This approach enables better handling of high-dimensional, multispectral analog data. It helps alleviate the challenges the analog data deluge poses, making it a promising solution for sustainable data processing at the edge. In conclusion, the proposed techniques and architectures pave the way for the next generation of deep learning applications, particularly in scenarios where area and power resources are limited.

VII Acknowledgment

This work was supported by COGNISENSE, one of the seven centers in JUMP 2.0, a Semiconductor Research Corporation (SRC) program sponsored by DARPA.

References

- [1] J. Chen and X. Ran, “Deep learning with edge computing: A review,” Proceedings of the IEEE, vol. 107, no. 8, pp. 1655–1674, 2019.

- [2] S. Tayebati and K. T. Cho, “A hybrid machine learning framework for clad characteristics prediction in metal additive manufacturing,” 2023.

- [3] M. Hassanalieragh, A. Page, T. Soyata, G. Sharma, M. Aktas, G. Mateos, B. Kantarci, and S. Andreescu, “Health monitoring and management using internet-of-things (iot) sensing with cloud-based processing: Opportunities and challenges,” in 2015 IEEE international conference on services computing. IEEE, 2015, pp. 285–292.

- [4] F. Yu, L. Cui, P. Wang, C. Han, R. Huang, and X. Huang, “Easiedge: A novel global deep neural networks pruning method for efficient edge computing,” IEEE Internet of Things Journal, vol. 8, no. 3, pp. 1259–1271, 2020.

- [5] R. Sehgal and J. P. Kulkarni, “Trends in analog and digital intensive compute-in-sram designs,” in IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), 2021.

- [6] S. Yu, H. Jiang, S. Huang, X. Peng, and A. Lu, “Compute-in-memory chips for deep learning: Recent trends and prospects,” IEEE Circuits and Systems Magazine, 2021.

- [7] S. Jung, J. Lee, H. Noh, J.-H. Yoon, and J. Kung, “Dualpim: A dual-precision and low-power cnn inference engine using sram-and edram-based processing-in-memory arrays,” in IEEE International Conference on Artificial Intelligence Circuits and Systems (AICAS), 2022.

- [8] S. Xie, C. Ni, A. Sayal, P. Jain, F. Hamzaoglu, and J. P. Kulkarni, “16.2 edram-cim: compute-in-memory design with reconfigurable embedded-dynamic-memory array realizing adaptive data converters and charge-domain computing,” in IEEE International Solid-State Circuits Conference (ISSCC), 2021.

- [9] J.-M. Hung, T.-H. Wen, Y.-H. Huang, S.-P. Huang, F.-C. Chang, C.-I. Su, W.-S. Khwa, C.-C. Lo, R.-S. Liu, C.-C. Hsieh et al., “8-b precision 8-mb reram compute-in-memory macro using direct-current-free time-domain readout scheme for ai edge devices,” IEEE Journal of Solid-State Circuits, 2022.

- [10] C. Sakr and N. R. Shanbhag, “Signal processing methods to enhance the energy efficiency of in-memory computing architectures,” IEEE Transactions on Signal Processing, vol. 69, pp. 6462–6472, 2021.

- [11] S. Nasrin, A. Shylendra, N. Darabi, T. Tulabandhula, W. Gomes, A. Chakrabarty, and A. R. Trivedi, “Enos: Energy-aware network operator search in deep neural networks,” IEEE Access, vol. 10, pp. 81 447–81 457, 2022.

- [12] P. Shukla, S. Nasrin, N. Darabi, W. Gomes, and A. R. Trivedi, “Mc-cim: Compute-in-memory with monte-carlo dropouts for bayesian edge intelligence,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 70, no. 2, pp. 884–896, 2023.

- [13] S. Nasrin, D. Badawi, A. E. Cetin, W. Gomes, and A. R. Trivedi, “Mf-net: Compute-in-memory sram for multibit precision inference using memory-immersed data conversion and multiplication-free operators,” IEEE Transactions on Circuits and Systems I: Regular Papers, vol. 68, no. 5, pp. 1966–1978, 2021.

- [14] S. Nasrin, M. B. Hashem, N. Darabi, B. Parpillon, F. Fahim, W. Gomes, and A. R. Trivedi, “Memory-immersed collaborative digitization for area-efficient compute-in-memory deep learning,” 2023.

- [15] W. Chen, J. Wilson, S. Tyree, K. Q. Weinberger, and Y. Chen, “Compressing convolutional neural networks in the frequency domain,” in Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, 2016, pp. 1475–1484.

- [16] Z. Liu, J. Xu, X. Peng, and R. Xiong, “Frequency-domain dynamic pruning for convolutional neural networks,” Advances in neural information processing systems, vol. 31, 2018.

- [17] K. Xu, M. Qin, F. Sun, Y. Wang, Y.-K. Chen, and F. Ren, “Learning in the frequency domain,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2020, pp. 1740–1749.

- [18] S. Rossi, S. Marmin, and M. Filippone, “Walsh-hadamard variational inference for bayesian deep learning,” Advances in Neural Information Processing Systems, vol. 33, pp. 9674–9686, 2020.

- [19] H. Pan, D. Badawi, and A. E. Cetin, “Fast walsh-hadamard transform and smooth-thresholding based binary layers in deep neural networks,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2021, pp. 4650–4659.

- [20] H. Pan, X. Zhu, S. Atici, and A. E. Cetin, “Dct perceptron layer: A transform domain approach for convolution layer,” arXiv preprint arXiv:2211.08577, 2022.

- [21] Y. Koizumi, N. Harada, Y. Haneda, Y. Hioka, and K. Kobayashi, “End-to-end sound source enhancement using deep neural network in the modified discrete cosine transform domain,” in 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2018, pp. 706–710.

- [22] S. Liao, Z. Li, X. Lin, Q. Qiu, Y. Wang, and B. Yuan, “Energy-efficient, high-performance, highly-compressed deep neural network design using block-circulant matrices,” in 2017 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), 2017, pp. 458–465.

- [23] Z.-Q. J. Xu, Y. Zhang, T. Luo, Y. Xiao, and Z. Ma, “Frequency principle: Fourier analysis sheds light on deep neural networks,” arXiv preprint arXiv:1901.06523, 2019.

- [24] H. Mohsen, E.-S. A. El-Dahshan, E.-S. M. El-Horbaty, and A.-B. M. Salem, “Classification using deep learning neural networks for brain tumors,” Future Computing and Informatics Journal, vol. 3, no. 1, pp. 68–71, 2018.

- [25] J. Xue, J. Li, and Y. Gong, “Restructuring of deep neural network acoustic models with singular value decomposition.” in Interspeech, 2013, pp. 2365–2369.

- [26] M. Riera, J. M. Arnau, and A. González, “Dnn pruning with principal component analysis and connection importance estimation,” Journal of Systems Architecture, vol. 122, p. 102336, 2022.

- [27] N. Darabi, M. B. Hashem, H. Pan, A. Cetin, W. Gomes, and A. R. Trivedi, “Adc/dac-free analog acceleration of deep neural networks with frequency transformation,” arXiv preprint arXiv:2309.01771, 2023.

- [28] N. Darabi, M. B. Hashem, S. Bandyopadhyay, and A. R. Trivedi, “Exploiting programmable dipole interaction in straintronic nanomagnet chains for ising problems,” in 2023 24th International Symposium on Quality Electronic Design (ISQED), 2023, pp. 1–1.

- [29] M. B. Hashem, N. Darabi, S. Bandyopadhyay, and A. R. Trivedi, “Solving boolean satisfiability with stochastic nanomagnets,” in 2022 29th IEEE International Conference on Electronics, Circuits and Systems (ICECS), 2022, pp. 1–2.

- [30] A. Jayathilake, A. Perera, and M. Chamikara, “Discrete walsh-hadamard transform in signal processing,” IJRIT Int. J. Res. Inf. Technol, vol. 1, pp. 80–89, 2013.

- [31] H. Pan, D. Badawi, and A. E. Cetin, “Block walsh-hadamard transform based binary layers in deep neural networks,” ACM Transactions on Embedded Computing Systems (TECS), 2022.

- [32] Y. Kong, X. Chen, X. Si, and J. Yang, “Evaluation platform of time-domain computing-in-memory circuits,” IEEE Transactions on Circuits and Systems II: Express Briefs, vol. 70, no. 3, pp. 1174–1178, 2023.

- [33] Predictive technology model (ptm). [Online]. Available: https://ptm.asu.edu/

- [34] H. Jiang, W. Li, S. Huang, S. Cosemans, F. Catthoor, and S. Yu, “Analog-to-digital converter design exploration for compute-in-memory accelerators,” IEEE Design & Test, vol. 39, no. 2, pp. 48–55, 2021.