[c1]Corresponding author \vol? (201?) \pages1–?

received date

Constructing Segmented Differentiable Quadratics to Determine Algorithmic Run Times and Model Non-Polynomial Functions

Abstract

We propose an approach to determine the continual progression of algorithmic efficiency, as an alternative to standard calculations of time complexity, likely, but not exclusively, when dealing with data structures with unknown maximum indexes and with algorithms that are dependent on multiple variables apart from just input size. The proposed method can effectively determine the run time behavior at any given index , as well as , as a function of only one or multiple arguments, by combining quadratic segments, based upon the principles of Lagrangian Polynomials and their respective secant lines. Although the approach used is designed for analyzing the efficacy of computational algorithms, the proposed method can be used within the pure mathematical field as a novel way to construct non-polynomial functions, such as or , as a series of segmented differentiable quadratics to model functional behavior and reoccurring natural patterns. After testing, our method had an average accuracy of above of 99% with regard to functional resemblance.

keywords:

Time Complexity \sepAlgorithmic Run Time \sepPolynomials \sepLagrangian Interpolation1 Introduction

Runtime, and it’s theoretical subset, time complexity, are imperative to understanding the speed and continual efficiency of all algorithmsNasar Aho . Particularly because runtime information allows for thorough comparisons between the performance of competing approaches. Due to the varying environments in which algorithms are executed, time complexity is implemented as a function of inputted argumentsSipser rather than accounting for the situational execution timePusch ; this removes the need to address every extraneous factor that affects the speed of such algorithmsDean . There are countless methods on determining the formulaic runtime complexityQi Guzman , particularly because, from a theoretical perspective, the true runtime can never be determined without thoroughly examining the algorithm itselfullman ; however, this does not mean that the process cannot be expedited, simplified, or made easier.

The goal is to produce a function that can model the time complexity of any given algorithmMohr , primarily who’s runtime is defined as a function of more than just a single variable. We define where is any given algorithm and denotes the execution in a controlled environment. The following method can be used to determine run time with respect to a several variables (not just element size) by evaluating CPU time with respect to an input size. Any confounding variables such as CPU type, computing power, and/or programming language, will be bypassed as they will remain controlled during testing. The constructed polynomial series, which will be a piece-wise of segmented quadratics, will then produce the same functional asymptotic behavior as the true time complexity , which can then be independently determined through the correlation with their respective parent functions. In addition, the methods found for computing such runtimes has profound mathematical implications for representing various non-polynomial functions as differentiable quadratic segments, similar, but not identical, to the outcome of evaluating Taylor SeriesJumarie Corliss . In short, we do this by using reference points of any given non-polynomial, and developing a quadratic (using polynomial interpolationBoor ) over a particular segment that accurately matches the true functional behavior.

2 Methods

Our primary condition is the following:

Additionally,

This ensures that the targeted Time Complexity function must be constructed of only real numbers and be differentiable throughout, except for segemented bounds. It is important to note that . We also define , where k is any constant of proportionality that converts the predicted time complexity into execution time or vice-versa.

2.1 Lagrangian Polynomial Principles

We first construct a single line of intersection amongst every consecutive ordered pair of segmented indexes and respective computing time (or any alternative performance modeling metric). We use the following standard point slope formula to do so:

| (2.1) |

The polynomial of any given segment can be constructed using the explicit formula belowsauer Rashed , in this case the first three indexes within a data set are used; however, this applies for any given 3 point segment within the data set. Defined as: . Note: The proof for the following formulas is shown in section 2.5.

| (2.2) |

We then factor in the polynomial model above and the respective secant line equation, to construct the explicit average form of the initial 3 point segment such that each point is equivalent to the difference between the secant line and the original polynomial.

| (2.3) |

Before we implement this method, we must account for any given segment, and to do so, we must simplify the method of polynomial construction. First we define F(x) to be dependent on our outputs.

| (2.4) |

These outputs are determined accordingly (Note: k = 3 in our case; however, the model would work for any value of k):

| (2.5) |

Such that,

| (2.6) |

2.2 Estimating as a Function of Quadratic Segments

We can then average this with the constructed Lagrangian polynomial to get our model for any given 3-point segment. Note: We can simplify the given expression toBerrut :

| (2.7) |

Such that,

| (2.8) |

as well as the segmented averageComenetz ,

| (2.9) |

We then implement the proposed method of each selected segment to construct the function for every iteration of natural numbers by redefining from a single constructed polynomial to a multi-layered, piece-wise construction of the primary segments of such polynomials.

| (2.10) |

In order to retrieve the complexity of the algorithm at a particular index we can now simply compute . Note: but . Additionally, however, the proposed method, when graphed, will construct a continuous function, making it easy to determine the true runtime of the function as

2.3 Evaluating Quadratic Segments of Multi-variable Algorithms

2.3.1 Run Times with Non-Composite Operations

The following method will suffice if, and only if, the arguments are not directly correlated through any mathematical operation, excluding addition, subtraction, or any non-composite operation. For example, if our unknown time complexity of Algorithm was . We must first evaluate the execution time with respect to a single variable. We use , to denote the execution time of the given function; this can be determined by implementing a computing timer into the algorithm. In this case we evaluate the algorithm accordingly:

| (2.11) |

Such that,

| (2.12) |

And the same for the other argument:

| (2.13) |

Such that,

| (2.14) |

In this particular case, we first isolate the in terms of x. To do so we must first ensure and are independent of each other. Since, in our sample scenario,

| (2.15) |

Now, we can conclude that,

| (2.16) |

And,

| (2.17) |

Now, we can evaluate the over a set of fixed data points. First with respect to x:

| (2.18) |

Then with respect to b:

| (2.19) |

Once we have computed our segmented quadratics with respect a particular index group, we can construct our piece-wise function of as two independent, graphical representations.

| (2.20) |

Although our method produces non-differentiable points at segmented bounds, we can still compute partial derivatives at points such as:

| (2.21) |

We can justify the accuracy of with assuming only one inputted argument accordingly:

| (2.22) |

We can conclude that:

| (2.23) |

Alternatively,

| (2.24) |

2.3.2 Run times with Composite Operations

In order to explain the approach used in cases with unknown runtime functions that consist of composite operations, we must implement the following proof.

Theorem 2.1.

If the unknown run time function consists of composite operations, such as in , this can be instantly determined if the functional difference across a set of input values is not just a graphical translation.

Proof of Theorem 2.1.

If,

| (2.25) |

Then,

| (2.26) |

Additionally,

| (2.27) |

But,

| (2.28) |

Due to the non-composite operations of , the value of does not directly impact the value of , rather just the output of the mulitvariable function. The same can be done conversely with other variables; however, if they are directly correlated, such as in it prevents the difference from being just a translation.

| (2.29) |

Above, it is clear that both independent variables cannot be determined through inputting a constant of 0, causing a non-linear intervariable relationship. ∎

In order to construct the primary segmented function with equivalent behavior to the multivariable runtime or with any number of arguments, we must run execution tests with respect to each variable such that the remaining are treated as constants. If, like in the example stated earlier, the unknown runtime function was , then when graphically modeled for number of tests in terms of , a set of skewed logarithmic curves would be constructed, where as with respect to , a set of hyperbolic functions would be produced. By treating each temporary, non-functional, constant argument as the graphical differences can be factored in, when creating the single time complexity formula. Although there are several values that can be used, to keep the methodology consistent, we decide to take the input value that produces the average functional value over a given segment as the select constant. Although the true function is unknown, we can use the constructed, segmented quadratic to do so.

| (2.30) |

Then we can simply solve for the value of accordinglyIrving , such that :

| (2.31) |

While this process is still comprehensible, once we start introducing functions with more than 2 arguments, we must test their values in planes with multiple dimensional layers, rather than just one or two dimensions. To do so, we determine the intervariable relationships between every potential pair of arguments and construct a potential runtime formula accordingly. Note: The higher dimensional order of the function, the more convoluted the formulaic determination becomes.

Suppose the intervariable runtime function such that the corresponding segmented quadratic function is . We would evaluate the unknown with respect to a single variable such that the remaining are treated as constants. Using the example above, we would first plug in constant values into and , while graphically modeling the rate of change of as an independent function. Then we begin to adjust with increments of to determine, their respective transformational relationship. We would repeat this process for every potential pair , , , and so forth.

| (2.32) |

| (2.33) |

| (2.34) |

From their we can use the graphical model to help deduce the formulaic runtime with respect to all variables.

Similar to the analysis method with respect to a single variable, we can justify the accuracy by approximately equating the average integrated value of each independent segment with it’s true, algorithmic counterpart: Note: We define as the total number of input arguments.

| (2.35) |

This method can be simplified accordingly:

| (2.36) |

2.4 Segmented Quadratics to Construct Non-Polynomials

The following subsection will discuss the mathematical applications of this method, and will focus on the proofs behind constructing non-polynomials as piece-wise functions built upon segmented quadratics.

Lemma 2.2.

Given values of with corresponding values of a representative polynomial can be constructed such that

Proof of Lemma 2.2.

Let,

| (2.37) |

Therefore,

| (2.38) |

Then evaluate,

| (2.39) |

Therefore, is a constructed polynomial such that . It is built upon subsidiary polynomials of degree ∎

Theorem 2.3.

Referencing Lemma 1, given any real non-polynomial, an approximate quadratic piece-wise function can be constructed using segments produced by 3 values, defined over 2 values, of and their corresponding outputs such that is continuous at all x values including respective transition points, but not necessarily differentiable at such values.

Proof of Theorem 2.3.

Since the initial portion of the polynomial is based upon Lemma 1, it is clear that 3 base points will construct a quadratic polynomial, unless their respective derivatives are equivalent which would produce a sloped line. The following method is shown:

| (2.40) |

When simplified, the function would be defined accordingly:

| (2.41) |

By definition any polynomial is continuous throughout it’s designated boundsCucker , therefore, for all values within each segment, is continuous. And, since the bounded values of each segment are equivalent, we can conclude that the produced function is continuous everywhere. Formally where is any bounded point. However, this does not guarantee that ; therefore it’s derivative at the bounded point is likely undefined. ∎

Theorem 2.4.

Given any segmented quadratic constructed through averaging Lagrangian Interpolation with it’s respective secant, the graphical concavity can be determined by looking at the sign of variable such that .

Proof of Theorem 2.4.

Since constructed with three base points, the only polynomial function produced are segmented quadratics. Upward concavity exists ; while downward concavity exists . However, this process can be expedited without the need to compute second derivatives.

Since our segmented polynomial is constructed using only three points we can conclude that,

| (2.42) |

Therefore,

| (2.43) |

Thus, the sign of is the only significant value to determine the segmented concavity . Determining the functional concavity of the Lagrangian construction is important, as with certain functions, primarily those with upward concavity, it may not be necessary to compute secant line averages. ∎

When testing the mathematical accuracy of our approach, we use the segmented average value and compare it to that of the original function . In cases where we simply compute the reciprocal of the following function. We use and as placeholder variables to represent the segmented bounds.

| (2.44) |

3 Results

We divide the results section into the primary perspective (algorithmic implications) and the secondary perspective (pure mathematical implications).

3.1 Algorithmic Test Results

We tested our method on four algorithms (two single variable functions and two multivariable functions) and compared the produced time complexity formulas to the true, known, complexities to see how accurate our formulations were, and the extremity of deviations, if, at all. The first algorithm (single variable) was a binary search of elements, with a complexity of . The second (single variable) was a sort of elements, with a complexity of . The third (multivariable) was a combined search sort algorithm of unsorted elements, and select sorted elements, with a complexity of . The fourth (multivariable) was a custom algorithm of elements, with a complexity of . Although coefficients and additional constants are implemented in the predicted complexity, due to time being the only output variable, the only relevant component is the contents of the big- as it represents the asymptotic behavior of the algorithmic runtime regardless of any confounding variables. For multivariable algorithms, like stated in the methods, runtime complexities were computed with respect to each variable, and put together in the form of the final predicted complexity.

Complexity Constructed Polynomial Predicted Complexity

3.2 Mathematical Test Results

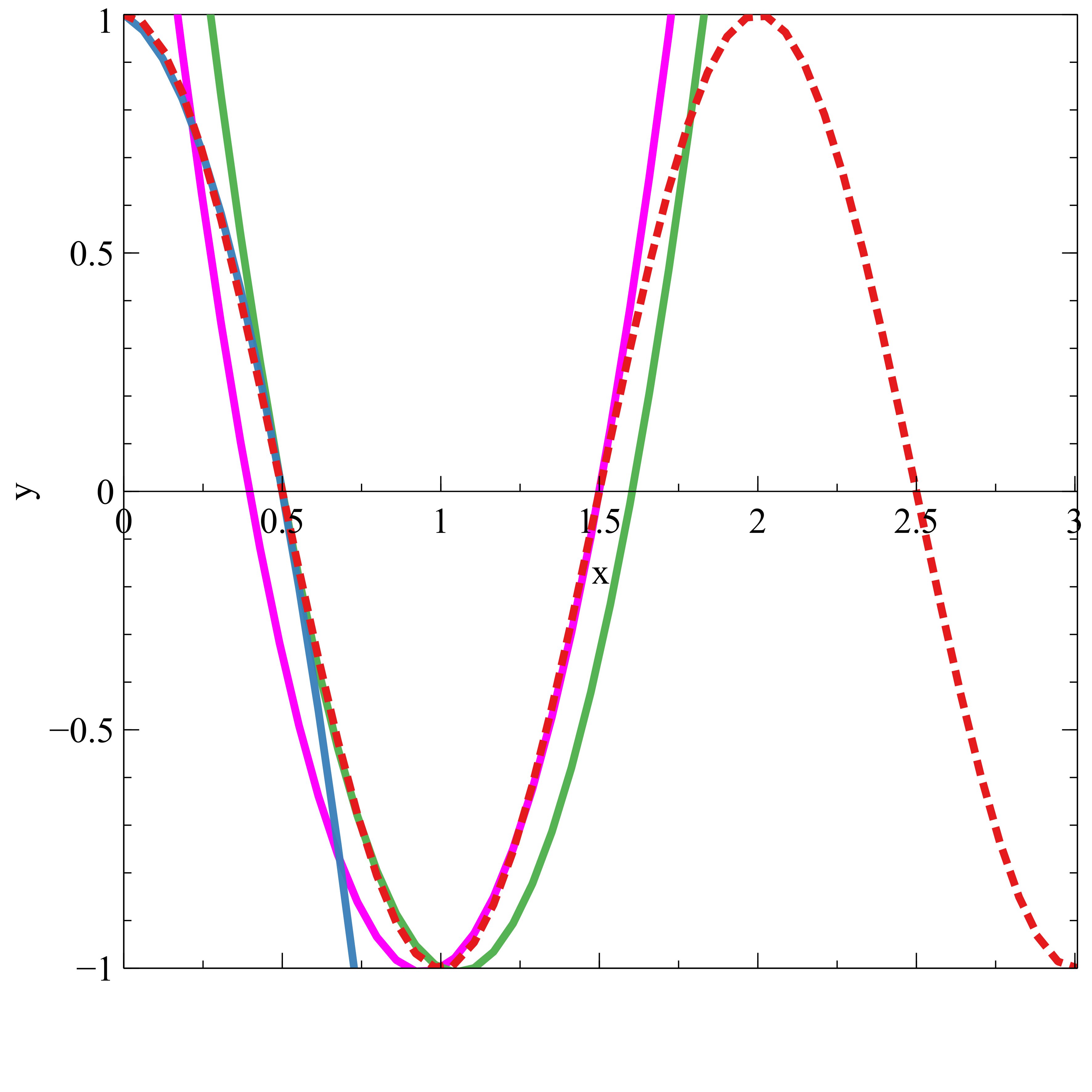

We tested our method against various non-polynomial functions, and selected one example of each common type of non-polynomial to be representative of the accuracy with it’s functional family. We made sure to remove any non-composite components such as any constants, as that would only adjust the function by a graphical translation. The functions used were (logarithm family), (rational family), (exponential family), and (trigonometric family); although we could choose more convoluted functions, we wanted to showcase performance for functions that are similar to their parent functions to attain a holistic perspective. In most cases, the Lagrangian constructions tend to exceed the vertical level of their respective non-polynomials, making secant line averages most useful with downward concavity. We defined our piece wise function until some relatively close, arbitrary whole value that leaves numbers simple, to stay consistent; however, the accuracy is still indicative of potential performance regardless of the final bound, due to the natural progression of such functions.

| Function | Constructed Polynomial | Calculated Accuracy |

|---|---|---|

4 Discussion and Implications

4.1 Algorithmic Discussion

After testing the proposed approach against several known algorithms, we were able to swiftly determine the runtime functions that correspond with their true time complexities. We tested the approach on two single variable algorithms and two multivariable algorithms such that we could compare the produced complexity behaviour with the true, known, complexity. In practice, this method will be used on algorithm’s where complexities are unknown to help determine their runtime functions; however, experimentally, we needed to know the true complexity beforehand to deduce the comparative accuracy. Regardless, in all cases our method was able to produce the correct big- runtime function. This was determined through the automated construction of segmented polynomial models given a set of input data. By treating each variable independently and graphing their grouped correlation, it made it easy to deduce the respective time complexity. To reiterate, any external coefficients and constants are a result of the particular test environment and because time is the output value. The only relevant component in determining the accuracy of the method are the contents of the big-O function. Most of the predicted runtime complexity functions followed the format of where is the constant of proportionality between execution time and standard time complexity and is any factor of translation that matches the produced graphical curve/line with their true counterpart. While these values help us overlay our constructions with their parent functions, they aren’t necessarily important in determining the accuracy of our approach as the asymptotic behavior of our construction will be the same regardless. We are confident that the proposed method can significantly help expedite the process of determining functional time complexities in all cases, including both single and mulitvariable algorithms.

4.2 Mathematical Discussion

After reviewing the results, we were able to confirm the accuracy of the proposed approach with constructing matching segmented differentiable quadratics given any non-polynomials. These include logarithmic, exponential, trigonometric, and rational functions. To determine the approaches accuracy with select functions, we calculated the average value of the formulated function over a particular segment. And after doing so, as well as reviewing the formulaic relationships between computed segments, we found a collective functional resemblance score of greater than and began to notice profound mathematical implications. After testing just a few data points, we can produce a rule that can construct the next consecutive segmented polynomial based upon the functional patterns that surface. For example, with regard to , we were able to determine that every consecutive segment was equivalent to such that the variables are the constants of the previous segment and the accuracy of any additional segments would remain identical. Not only is this a revolutionary method for accurate, polynomial replications; but it’s sheer simplicity combats flaws found in leading methods of doing so (primarily with non-sinusoidal functions), most notably Taylor series. Using these methods mathematicians and scientists can construct accurate, differentiable functions to represent patterned data, non-polynomials, and functions found in higher theoretical dimensions. Additionally, a similar approach can be used to determine the natural progression of repetitious systems such as natural disasters, planetary orbits, or pandemic-related death tolls, to lead to a better understanding of their nature. As in theory, their physical attributes and properties are built upon reoccurring, natural functions.

4.3 Conclusion

In this paper we proposed an approach to use segmented quadratic construction, based upon the principles of Lagrangian Interpolation to help determine algorithmic runtimes, as well as model non-polynomials with advanced, foreseeable applications in pure mathematics and pattern modeling/recognition found in science and nature. We hope to build upon this approach by improving and determine new ways to apply this research in all computational and mathematical based fields.

Acknowledgments

I would like to thank Professor Jeffery Ullman, Mr. Sudhir Kamath, Mr. Robert Gendron, Mr. Phillip Nho, and Ms. Katie MacDougall for their continual support with my research work.

References

- (1) Nasar, A. A. (2016). The history of Algorithmic complexity. The Mathematics Enthusiast, 13(3), 217-242.

- (2) Aho, A., Lam, M., Sethi, R., & Ullman, J. (2007). Compilers: Principles, Techniques and Tools, 2nd Editio.

- (3) Sipser, M. (1996). Introduction to the Theory of Computation. ACM Sigact News, 27(1), 27-29.

- (4) Puschner, P., & Koza, C. (1989). Calculating the maximum execution time of real-time programs. Real-time systems, 1(2), 159-176..

- (5) Dean, W. (2015). Computational complexity theory.

- (6) Qi, Q., Weise, T., & Li, B. (2017, July). Modeling optimization algorithm runtime behavior and its applications. In Proceedings of the Genetic and Evolutionary Computation Conference Companion (pp. 115-116).

- (7) Guzman, J. P., & Limoanco, T. (2017). An Empirical Approach to Algorithm Analysis Resulting in Approximations to Big Theta Time Complexity. JSW, 12(12), 946-976.

- (8) Aho, A. V., & Ullman, J. D. (1994). Foundations of computer science. WH Freeman & Co..

- (9) Mohr, A. (2014). Quantum computing in complexity theory and theory of computation. Carbondale, IL.

- (10) Jumarie, G. (2006). Modified Riemann-Liouville derivative and fractional Taylor series of nondifferentiable functions further results. Computers & Mathematics with Applications, 51(9-10), 1367-1376.

- (11) Corliss, G., & Chang, Y. F. (1982). Solving ordinary differential equations using Taylor series. ACM Transactions on Mathematical Software (TOMS), 8(2), 114-144.

- (12) De Boor, C., & Ron, A. (1990). On multivariate polynomial interpolation. Constructive Approximation, 6(3), 287-302.

- (13) Sauer, T., & Xu, Y. (1995). On multivariate Lagrange interpolation. Mathematics of computation, 64(211), 1147-1170.

- (14) Rashed, M. T. (2004). Lagrange interpolation to compute the numerical solutions of differential, integral and integro-differential equations. Applied Mathematics and computation, 151(3), 869-878.

- (15) Berrut, J. P., & Trefethen, L. N. (2004). Barycentric lagrange interpolation. SIAM review, 46(3), 501-517.

- (16) Comenetz, M. (2002). Calculus: the elements. World Scientific Publishing Company.

- (17) Irving, R. (2020). Beyond the quadratic formula (Vol. 62). American Mathematical Soc..

- (18) Cucker, F., & Corbalan, A. G. (1989). An alternate proof of the continuity of the roots of a polynomial. The American Mathematical Monthly, 96(4), 342-345.