Constant matters: Fine-grained Error Bound on Differentially Private Continual Observation Using Completely Bounded Norms

Abstract

We study fine-grained error bounds for differentially private algorithms for counting under continual observation. Our main insight is that the matrix mechanism, when using lower-triangular matrices, can be used in the continual observation model. More specifically, we give an explicit factorization for the counting matrix and upper bound the error explicitly. We also give a fine-grained analysis, specifying the exact constant in the upper bound. Our analysis is based on upper and lower bounds of the completely bounded norm (cb-norm) of . Furthermore, we are the first to give concrete error bounds for various problems under continual observation such as binary counting, maintaining a histogram, releasing an approximately cut-preserving synthetic graph, many graph-based statistics, and substring and episode counting. Finally we note that our result can be used to get a fine-grained error bound for non-interactive local learning and the first lower bounds on the additive error for -differentially-private counting under continual observation. Subsequent to this work, Henzinger et al. (SODA, 2023) showed that our factorization also achieves fine-grained mean-squared error.

1 Introduction

In recent times, many large scale applications of data analysis involved repeated computations because of the incidence of infectious diseases [App21, CDC20], typically with the goal of preparing some appropriate response. However, privacy of the user data (such as a positive or negative test result) is equally important. In such an application, the system is required to continually produce outputs while preserving a robust privacy guarantee such as differential privacy. This setting was already used as motivating example by [DNPR10] in the first work on differential privacy under continual release, where they write:

“Consider a website for H1N1 self-assessment. Individuals can interact with the site to learn whether symptoms they are experiencing may be indicative of the H1N1 flu. The user fills in some demographic data (age, zipcode, sex), and responds to queries about his symptoms (fever over F?, sore throat?, duration of symptoms?). We would like to continually analyze aggregate information of consenting users in order to monitor regional health conditions, with the goal, for example, of organizing improved flu response. Can we do this in a differentially private fashion with reasonable accuracy (despite the fact that the system is continually producing outputs)?"

In the continual release (or observation) model the input data arrives as a stream of items , with data arriving in round and the mechanism has to be able to output an answer after the arrival of each item. The study of the continual release model was initiated concurrently by [DNPR10] and [CSS11] through the study of the (continual) binary counting problem: Given a stream of bits, i.e., zeros and ones, output after each bit the sum of the bits so far, i.e., the number of ones in the input so far. [DNPR10] showed that there exists a differentially private mechanism, called the binary (tree) mechanism, for this problem with an additive error of , where the additive error is the maximum additive error over all rounds . This was further improved to at time by [CSS11] (see their Corollary 5.3). These algorithms use Laplace noise for differential privacy. [JRSS21] showed that using Gaussian noise instead an additive error of can be achieved. However, the constant has never been explicitly stated. Given the wide applications of binary counting in many downstream tasks, such as counting in the sliding window model [BFM+13], frequency estimation [CR21], graph problems [FHO21], frequency estimation in the sliding window model [CLSX12, EMM+23, HQYC21, Upa19], counting under adaptive adversary [JRSS21], optimization [CCMRT22, DMR+22, HUU23, KMS+21, STU17], graph spectrum [UUA21], and matrix analysis [UU21], constants can define whether the output is useful or not in practice. In fact, from the practitioner’s point of view, it is important to know the constant hidden in the notation. With this in mind, we ask the following central question:

Can we get fine-grained bounds on the constants in the additive error of differentially private algorithms for binary counting under continual release?

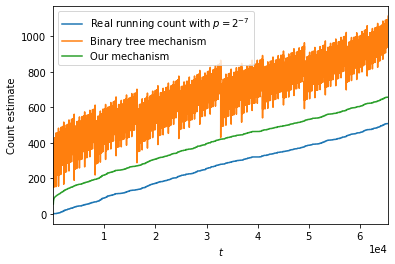

The problem of reducing the additive error for binary counting under continual release has been pursued before (see [WCZ+21] and references therein). Most of them use some “smoothening" technique [WCZ+21], assume some structure in the data [RN10], or measure error in mean squared loss [WCZ+21]111 While mean squared error is useful in some applications like learning [DMR+22, HUU23, KMS+21], in many application we prefer a worst case additive error, the metric of choice in this paper.. There is a practical reason to smoothen the output of the binary mechanism as its additive error is highly non-smooth (see Figure 1) due to the way the binary mechanism works: its additive error at any time depends on how many dyadic intervals are summed up in the output for . This forces the error to have non-uniformity of order , which makes it hard to interpret222 For example, consider a use case of ECG monitoring on a smartwatch of a heart patient. Depending on whether and for some , the error of the output of binary mechanism might send an SOS signal or not.. For example in exposure-notification systems that has to operate when the stream length is in order of , it is desirable that the algorithm is scalable and its output fulfills properties such as monotonicity and smoothness to make the output interpretable. Thus, the focus of this paper is to design a scalable mechanism for binary counting in the continual release model with a smooth additive error and to show a (small) fine-grained error bound.

Our contributions. We prove concrete bounds on the additive error for counting under continual release that is tight up to a small additive gap. Since our bounds are closed-form expressions, it is straightforward to evaluate them for any data analysis task. Furthermore, our algorithms only perform few matrix-vector multiplications and additions, which makes them easy to implement and tailor to operations natively implemented in modern hardware. Finally, our algorithms are also efficient and the additive error of the output is smooth. As counting is a versatile building block, we get concrete bounds on a wide class of problems under continual release such as maintaining histograms, generating synthetic graphs that preserve cut sizes, computing various graph functions, and substring and counting episode in string sequences (see Section 1.2 for more details). Furthermore, this also leads to an improvement in the additive error for non-interactive local learning [STU17], private learning on non-Euclidean geometry [AFKT21], and private online prediction [AFKT22]: These algorithms use the binary mechanism as a subroutine and using our mechanism instead would give a constant-factor improvements in the additive error. This in turn allows to decrease the privacy parameter in private learning, which is highly desirable and listed as motivation in several recent works [AFT22, DMR+22, HUU23]333Note that [AFT22] and [HUU23] appeared subsequent to this work on arXiv..

Our bounds bridge the gap between theoretical work on differential privacy, which mostly concentrates on asymptotic analysis to reveal the capabilities of differential private algorithms, and practical applications, which need to obtain useful information from differential private algorithms for their specific use cases.

Organization. The rest of this section gives the formal problem statement, an overview of results, technical contribution, and comparison with related works. Section 2 gives the necessary definitions, Section 3 presents the formal proof of our main result, Section 5 contains all its applications we explored. We give lower bounds in Section 6 and present experiments in Section 7.

1.1 The Formal Problem

Linear queries are classically defined as follows: There is a universe of values and a set of functions with . Given a vector of values of (with repetitions allowed) a linear query for the function computes .444Usually a linear query is defined to return the value , but as we assume that is publicly known it is simpler to use our formula. A workload for a vector and a set of functions computes the linear query for each function with . This computation can be formalized using linear algebra notation as follows: Assume there is a fixed ordering of all elements of . The workload matrix is defined by , i.e. there is a row for each function and a column for each value . Let be the histogram vector of , i.e. appears times in . Then answering the linear queries is equivalent to computing .

In the continual release setting, the vector is given incrementally to the mechanism in rounds or time steps. In time step , is revealed to the mechanism and it has to output under differential privacy, where is the workload matrix and denotes the principal submatrix of .

Binary counting corresponds to a very simple linear query in this setting: The universe equals , there is only one query with and . However, alternatively, binary counting could also be expressed as follows and this is the notation that we will use: There is only one query with for all giving rise to a simple workload matrix for the static setting and the mechanism outputs . Thus, in the continual release setting, we study the following the following workload matrix, :

| (1) |

where for any matrix , denote its th coordinate.

There has been a large body of work on designing differentially private algorithms for general workload matrices in the static setting, i.e., not under continual release. One of the scalable techniques that provably reduces the error on linear queries is a query matrix optimization technique known as workload optimizer (see [MMHM21] and references therein). There have been various algorithms developed for this, one of them being the matrix mechanism [LMH+15], which first determines two matrices and such that and then outputs , where is a vector of Gaussian values for a suitable choice of and is the identity matrix. For a privacy budget , it can be shown that the additive error, denoted as error of the answer vector (see Definition 3), of the matrix mechanism for linear queries with -sensitivity (eq. 7) represented by a workload matrix using the Gaussian mechanism is as follows: with probability over the random coins of the algorithm, the additive error is at most

| (2) |

where (resp., ) is the maximum norm of rows (resp. columns) of . The function is a function arising as in the proof of the privacy guarantee of the Gaussian mechanism when (see Theorem A.1 in [DR14]. If , one can analytically compute using Algorithm 1 in [BW18]. For the rest of this paper, we use to denote this function. Therefore, we need to find a factorization that minimizes . Note that the quantity

is the completely bounded norm555[Pau86] in Section 7.7 attributes the second equality to [Haa80]. (abbreviated as cb-norm). Here denotes the Schur product [Sch11].

The factor in equation (2) is due to the error bound of the Gaussian mechanism followed by the union bound and is the same for all factorizations. Thus to get a concrete additive error, we need to find a factorization such that the quantity is not just small, but also can be computed concretely. Furthermore we observe that if both and are lower-triangular matrices, then the resulting mechanism works not only in the static setting, but also in the continual release model. Therefore, for the rest of the paper, we only focus on finding such a factorization of the workload matrix , which is a fundamental query in the continual release model.

1.2 Our Results

1. Bounding . The question of finding the optimal value of was also raised in the conference version of [MNT20], who showed asymptotically tight bound. In the past, there has been considerable effort to get a tight bound on [Dav84, Mat93] with the best-known result is the following due to Mathias [Mat93, Corollary 3.5]:

| (3) | ||||

The key point to note is that the proof of [Mat93] relies on the dual characterization of cb-norm, and, thus, does not give an explicit factorization. In contrast, we give an explicit factorization into lower triangular matrices that achieve a bound in terms of the function, defined over natural numbers as follows:

| (4) |

Theorem 1 (Upper bound on ).

Let be the matrix defined in eq. 1. Then, there is an explicit factorization into lower triangular matrices such that

| (5) |

The bounds in eq. 3 do not have a closed form formula; however, we can show that it converges in limit as (Lemma 6). However, our focus in this paper is on concrete bounds and exact factorization. Our (theoretical) upper bound and the (analytically computed) lower bound of [Mat93] are less than apart for all (Figure 2).

Additionally, our result has the advantage that we achieve the bound with an explicit factorization of such that both and are lower-triangular matrices. As discussed above, this allows us to use it for various applications. Using this fact and carefully choosing the “noise vector” for every time epoch, the following result is a consequence of Theorem 1 and eq. 2:

Theorem 2 (Upper bound on differentially private continual counting).

Let be the privacy parameters. There is an -differentially private algorithm for binary counting in the continual release model with output in round such that in every execution, with probability at least over the coin tosses of the algorithm, simultaneously, for all rounds with , it holds that

| (6) |

where is as in eq. 4. The time to output in round is .

As mentioned above, the binary mechanism of [CSS11] and [DNPR10] and its improvement (when the error metric is expected mean squared) by [Hon15] can be seen as factorization mechanism. This has been independently noticed by [DMR+22]. While [CSS11] and [DNPR10] do work in the continual release model, [Hon15]’s optimization does not because for a partial sum, , it also uses the information stored at the nodes formed after time . Therefore, for the comparison with related work, we do not include Honaker’s optimization [Hon15]. Moreover, Honaker’s optimization is for minimizing the expected mean squared error (i.e., in norm), and not the error, which is the focus of our work. To the best of our knowledge, only [CSS11] and [DNPR10] consider additive error in terms of norm. All other approaches used for binary counting under continual observation (see [WCZ+21] and references therein) use some form of smoothening of the output and consider the expected mean squared error. While useful in some applications, many applications require to bound the additive error.

The most relevant work to ours is the subsequent work by [HUU23] that show that our factorization gives almost tight concrete bounds on performing counting under continual observation with respect to the expected mean squared error. On the other hand, we bound the maximum absolute additive error, i.e., in norm of the error. Note that our explicit factorization for has the nice property that there are exactly distinct entries (instead of possibly entries in [DMR+22])’s factorization. This has a large impact on computation time.

Remark 1 (Suboptimality of the binary mechanism with respect to the constant).

The binary mechanism computes a linear combination of the entries in the streamed vector as all of the internal nodes of the binary tree can be seen as a linear combinations of the entries of . Now we can consider the binary mechanism as a matrix mechanism. The right factor is constructed as follows: where are defined recursively as follows:

That is, , with each row corresponding to the partial sum computed for the corresponding node in the binary tree from leaf to the root. The corresponding matrix is a matrix in , where row has a one in at most entries, corresponding exactly to the binary representation of .

In particular, this factorization view tells us that , which implies that the -error is suboptimal by a factor of . Our experiments (Figure 3) confirm this behavior experimentally. With respect to the mean-squared error this was observed by several works [DMR+22, Hon15] culminating in the work of [HUU23], who gave a theoretical proof for the suboptimality of binary mechanism for mean-squared error.

Applications.

Our result for continual binary counting can be extended in various directions. We show how to use it to quantify the additive error for (1) outputting a synthetic graph on the same vertex set which approximately preserves the values of all -cuts with and being disjoint vertex sets of the graph, (2) frequency estimation (aka histogram estimation), (3) various graph functions, (4) substring counting, and (5) episode counting. Our mechanism can also be adapted for the locally private non-interactive learners of [STU17] by replacing the binary mechanism with our matrix mechanism, which requires major changes in the analysis. In Table 1, we tabulate these applications. Based on a lower bound construction of [JRSS21], we show in Section 6 that for large enough and constant the additive error in (1) is tight up to polylogarithmic factors and the additive error in (4) is tight for large enough up to a factor that is linear in , where is the universe of letters (see Corollary 4 and Section 6 for details). Finally, we can use our mechanism to estimate the running average of a sequence of bits with absolute error . All the applications are presented in detail in Section 5. We note that all our algorithms are differentially private in the setting considered in [DNPR10].

| Problem | Additive error | Reference |

|---|---|---|

| -cuts (with ) | Corollary 1 | |

| Histogram estimation | Corollary 2 | |

| Graph functions | Corollary 3 | |

| Counting all length substrings | Corollary 4 | |

| Counting all length episodes | Corollary 5 | |

| -dimensional local convex risk min. | Corollary 6 |

2. Lower Bounds. We now turn our attention to new lower bounds on continual counting under approximate differential privacy. Prior to this paper, the only known lower bound for differentially private continual observation was for counting under pure differential privacy. There are a few other methods to prove lower bounds. For example, we can use the relation between hereditary discrepancy and private linear queries [MN12] along with the generic lower bound on hereditary discrepancy [MNT20] to get an lower bound. This can be improved by using the reduction of continual counting to the threshold and get an [BNSV15].

Our lower bound is for a class of mechanisms, called data-independent mechanisms, that add a random variable sampled from the distribution which is independent of the input. Most commonly used differentially private mechanisms, such as the Laplace mechanism and the Gaussian mechanism fall under this class.

For binary counting, recall from eq. 3 that there exists a lower bound on in terms of . In Lemma 6, we show that

from above. Combined with the proof idea of [ENU20], this gives the following bound for non-adaptive input streams, i.e., where the input stream is generated before seeing any output of the mechanism.

Theorem 3 (Lower bound).

Let be a sufficiently small constant and be the set of data-independent -differentially private algorithms for binary counting under non-adaptive continual observation. Then

1.3 Our Technical Contribution

1. Using the matrix mechanism in the continual release model. Our idea to use the matrix mechanism in the continual release model is as follows: Assume is known to before any items of the stream arrive and there exists an explicit factorization of into lower triangular matrices and that can be computed efficiently by during preprocessing. As we show, this is the case for the matrix . This property leads to the following mechanism: At time , the mechanism creates with consists of the current -vector with zeros appended, and then returns the th entry of , where is a suitable “noise vector”. As and are lower-triangular, the th entry of is identical to the th entry in , where is the final input vector , and, thus, it suffices to analyze the error of the static matrix mechanism. Note that our algorithm can be implemented so that it requires time at time . The advantage of this approach is that it allows us to use the analysis of the static matrix mechanism while getting an explicit bound on the additive error of the mechanism in the continual release model.

Factorization in terms of lower triangular matrices is not known to be necessary for the continual release model; however, [DMR+22] pointed out that an arbitrary factorization will not work. For example, they discuss that [Hon15]’s optimization of the binary mechanism can be seen as a factorization but it cannot be used for continual release as the output of his linear program at time can give positive weights to values of generated at a future time . Furthermore, as instead of computing we work with -dimensional submatrices of and , we can replace a factor in the upper bound on the additive error due to the binary mechanism by a , where denotes the current time step.

2. Bounding . The upper bound can be derived in various ways. One direct approach would be to find appropriate Kraus operators of a linear map and then use the characterization by [HP93] of completely bounded norm. This approach yields an upper bound of ; however, it does not directly give lower triangular factorization and .

Instead we use the characterization given by [Pau82], which gives us a factorization in terms of lower triangular matrices. More precisely, using three basic trigonometric identities, we show that the th entry of and is an integral of every even power of the cosine function, for . This choice of matrices leads to the upper bound in eq. 5.

3. Applications. While counting and averaging under continual release follows from bounds in Theorem 1, computing cut-functions requires some ingenuity. In particular, one can consider -cuts for an -vertex graph as linear queries by constructing a matrix whose rows correspond to each possible cut query and whose columns corresponds to all possible edges in . The entry of equals to if the edge crosses the boundary of the cut . However, it is not clear how to use it in the matrix mechanism efficiently because known algorithm for finding a factorization as well as the resulting factorization depend polynomial on the dimension of the matrix and the number of rows in is . Instead we show how to exploit the algebraic structure of cut functions so that at each time step the mechanism only has to compute , where is a -dimensional matrix, is -dimensional matrix and is -dimensional. This gives an mechanism that has error (see Corollary 1 for exact constant) and can be implemented to run in time per time step.

Binary counting can also be extended to histogram estimation. [CR21] gave a differentially private mechanism for histogram estimation where in each time epoch exactly one item either arrives or is removed. We extend our approach to work in the setting that they call known-domain, restricted -sensitivity and improve the constant factor in the additive error by the same amount as for binary counting.

We also show in Section 5.6 an application of our mechanism to non-interactive local learning. The non-interactive algorithm for local convex risk minimization is an adaption of the algorithm of [STU17], which uses the binary tree mechanism for binary counting as a subroutine. Replacing it by our mechanism for binary counting (Theorem 2) leads to various technical challenges: From the algorithmic design perspective, [STU17] used the binary mechanism with a randomization routine from [DJW13], which expects as input a binary vector, while we apply randomization to , where has real-valued entries. We overcome this difficulty by using two instead of one binary counter. From an analysis point of view, the error analysis in [STU17] is based on the error analysis in [BS15] that uses various techniques, such as the randomizer of [DJW13], error-correcting codes, and the Johnson-Lindenstrauss lemma. However, one can show that we can give the same uniform approximation (see Definition 5 in [STU17] for the formal definition) as in [STU17] by using the Gaussian mechanism and two binary counters so that the rest of their analysis applies.

2 Notations and Preliminaries

We use to denote the th coordinate of a vector . For a matrix , we use to denote its th coordinate, to denote its th column, to denote its th row, to denote its trace norm of square matrix, to denote its Frobenius norm, to denote its operator norm, and to denote transpose of . We use to denote identity matrix of dimension . If all the eigenvalues of a symmetrix matrix are non-negative, then the matrix is known as positive semidefinite (PSD for short) and is denoted by . For symmetric matrices , the notations implies that is PSD. For an matrix , its tensor product (or Kronecker product) with another matrix is

We use to denote the tensor product of and . In our case, the matrix would always be identity matrix of appropriate dimension.

One main application of our results is in differential privacy formally defined below:

Definition 1.

A randomized mechanism gives -differential privacy if for all neighboring data sets and in the domain of differing in at most one row, and all measurable subset in the range of , where the probability is over the private coins of .

This definition requires, however, to define neighboring data sets in the continual release model. In this model the data is given as a stream of individual data items, each belonging to a unique user, each arriving one after the other, one per time step. In the privacy literature, there are two well-studied notions of neighboring streams [CSS11, DNPR10]: (i) user-level privacy, where two streams are neighboring if they differ in potentially all data items of a single user; and (ii) event-level privacy, where two streams are neighboring if they differ in a single data item in the stream. We here study event-level privacy.

Our algorithm uses the Gaussian mechanism. To define the Gaussian mechanism, we need to first define -sensitivity. For a function its -sensitivity is defined as

| (7) |

Definition 2 (Gaussian mechanism).

Let be a function with -sensitivity . For a given the Gaussian mechanism , which given returns , where , satisfies -differential privacy.

Definition 3 (Accuracy).

A mechanism is -accurate for a function if, for all finite input streams of length , the maximum absolute error satisfies with probability at least over the coins of the mechanism.

3 Proof of Theorem 1

The proof of Theorem 1 relies on the following lemmas.

Lemma 1 ([CQ05]).

For integer , define Then,

Proof of Theorem 1.

Define a function, , recursively as follows

| (8) |

Since the function satisfies a nice recurrence relation, it is very easy to compute. Let and be defined as follows666Recently, Amir Yehudayoff (through Rasmus Pagh) communicated to us that this factorization was stated in the 1977 work by Bennett [Ben77, page 630]:

| (9) |

Lemma 2.

Let be the matrix defined in eq. 1. Then .

Proof.

One way to prove the lemma is by using trigonometric inequalities for and that (i) For any , , (ii) For even , , (iii) For all , This proof would require a lot of algebraic manipulations. However, our proof relies on an observation that all the three matrices, and are principal submatrix of Toeplitz operators. The main idea would be to represent Toeplitz operator in its functional form: Let denote the entries of the Toeplitz operator, , with complex entries. Then its unique associated symbol is

where such that . We can then write for . Then for operator whose principal submatrix is the matrix , we arrive that its associated symbol is

Let denote the Toeplitz operator whose submatrix is the matrix and denote the Toeplitz operator whose submatrix is the matrix . Then the symbol associated with and is

| (10) |

Now . Therefore, comparing the terms, we can rewrite eq. 10 as follows:

Since, for any two Toeplitz operators, and with associated symbols and , respectively, has the associated symbol [BG00], we have the lemma. ∎

Using Lemma 1, we have

Combining with the fact that and , we have the following:

Lemma 3.

Let and be matrices defined by eq. 9. Then . Further,

4 Proof of Theorem 2

Fix a time . Let denote the principal submatrix of and be the principal submatrix of . Let the vector formed by the streamed bits be . Let be a freshly sampled Gaussian vector such that .

Let denote the principal submatrix of . The algorithm computes

and outputs the th coordinate of (denoted by ). So far, the data structure maintained by the mechanism when it enters round is simply . Now, note that the th coordinate of equals the sum of the first bits and can be computed in constant time when the sum of the bits of the previous round is known (i.e., maintained). Then, sampling a fresh Gaussian vector and computing the th coordinate of takes time . For privacy, note that the -sensitivity of is ; therefore, adding Gaussian noise with variance preserves -differential privacy. Now for the accuracy guarantee,

Therefore,

Recall that . The Cauchy-Schwarz inequality shows that the function has Lipschitz constant , i.e., the maximum row norm. Now define and note that and . Using the concentration inequality for Gaussian random variables with unit variance and a function with Lipschitz constant (e.g., Proposition 4 in [Zei16]) implies that

Setting

the result follows using a union bound over all time steps and Theorem 1, which implies that .

5 Applications in Continual Release

5.1 Continuously releasing a synthetic graph which approximates all cuts.

For a weighted graph , we let denote the size of the vertex set and denote the size of the edge set . When the graph is uniformly weighted (i.e., all existing edges have the same weight, all non-existing have weight 0), then the graph is denoted . Let be a diagonal matrix with non-negative edge weights on the diagonal. If we define an orientation of the edges of graph, then we can define the signed edge-vertex incidence matrix as follows:

One important matrix representation of a graph is its Laplacian (or Kirchhoff matrix). For a graph , its Laplacian is the matrix form of the negative discrete Laplace operator on a graph that approximates the negative continuous Laplacian obtained by the finite difference method.

Definition 4 (-cut).

For two disjoint subsets and , the size of the cut -cut is denoted and defined as

When , we denote by .

In this section we study the following problem. Let be a weighted graph and consider a sequence of updates to the edges of , where each update consists of an (edge,weight) tuple with weight in that adds the weight to the corresponding edge. For , , let denote the graph that is obtained by applying the first updates to . We give a differentially private mechanism that returns after each update a graph such that for every cut with , the number of edges crossing the cut in differs from the number of edges crossing the same cut in by at most :

Corollary 1.

There is an -differentially private algorithm that, for any stream of edge updates of length , outputs a synthetic graph in round and with probability at least 2/3 over the coin tosses of the algorithm, simultaneously for all rounds with , it holds that for any with ,

where is the graph formed at time through edge updates and is as defined in eq. 2. The time for round is .

Proof.

Let us first analyze the case where . In this case, we encode the updates as an vector and consider the following counting matrix:

For the rest of this subsection, we drop the subscript and denote by . Recall the function defined by eq. 8. Let . Using this function, we can compute the following factorization of : Let and denote the principal submatrix of and , respectively. They both consist of block matrices, each being formed by all columns and rows of and , respectively. Further, let and denote the th block matrix of rows of and , respectively. Let be the vector formed by the first updates, i.e., the edges of which are given by the vector

Let be the function of and stated in eq. 6 and . Then the edges of the weighted graph which is output at time are given by the vector where . Note that computing the output naively takes time to compute , time to generate and add , and time to multiply the result with . However, if we store the vector of of the previous round and only compute in round , then the vector can be created by “appending” to the vector . Thus, can be computed in time , which reduces the total computation time at time step to .

We next analyse the additive error of this mechanism. The output at time of the algorithm is a vector indicating the edges as Let be a row vector whose coordinates are the evaluations of the function on . Then .

As is the weighted sum of random variables from , it holds that In other words, the error is due to a graph, , with weights sampled from a Gaussian distribution .

For a subset , let

where is the th standard basis. It is known that for any positive weighted graph , the -cut . So, for any subset ,

The proof now follows on the same line as in Upadhyay et al. [UUA21]. In more details, if denotes the Laplacian of the complete graph with vertices, then . Here, means that for all . Setting , the union bound gives that with probability at least , simultaneously for all rounds ,

| (11) |

We next consider the case of cuts, where and . Without loss of generality, let . Let us denote by the graph induced by a vertex set . In this case, for the analysis, we can consider the subgraph, , formed by the vertex set . By Fiedler’s result [Fie73], , where denotes the th singular value of the Laplacian of the graph . Considering this subgraph, we have reduced the analysis of cut on to the analysis of -cut on . Therefore, using the previous analysis, we get the result.

We now give the privacy proof. At time , we only need to prove privacy for as multiplication by can be seen as post-processing. Now consider two neighboring graphs form by the stream of (edge, weight) tuple, where at time , they differ in weight. If , the output distribution is the same because the input is the same. So, consider . At this point, and differ in exactly positions by at most . Breaking in blocks of coordinates, the position where is is exactly corresponding to the edge they differ. Now multiplying with results in vector whose non-zero entries are . Using Lemma 3, . Therefore, we have -differential privacy from the choice of and Definition 2. ∎

Remark 2.

In the worst case when for some constant , this results in an additive error of order . This result gives a mechanism for maintaining the minimum cut as well as a mechanism for maintaining the maximum cut, sparsest cuts, etc with such an additive error. Moreover, we can extend the result to receive updates with weights in as long as the underlying graph only has positive weights at all time.

For maintaining the minimum cut in the continual release model we show in Appendix 6 that our upper bound is tight up to polylogarithmic factors in and for large enough and constant using a reduction from a lower bound in [JRSS21].

Our mechanism can implement a mechanism for the static setting as it allows to insert all edges of the static graph in one time step. The additive error that we achieve can even be a slight improvement over the additive error of , where is the sum of the edge weights, achieved by the mechanism in [EKKL20]. Note also that our bound does not contradict the lower bound for the additive error in that paper, as they show a lower bound only for the case that .

5.2 Continual histogram

Continual histogram. Modifying the analysis for cut functions, we can use our algorithm to compute the histogram of each column for a data base of -dimensional binary vectors in the continual release model in a very straightforward manner. Said differently, assume is a universe of size and the input at a time step consists of the indicator vector of a subset of , which is a -dimensional binary vector. Let with be the maximum number of 1s in the vector, i.e., the maximum size of a subset given at a time step.

Corollary 2.

Let be the universe of size and let be a given integer. Consider a stream of vectors such that if and for all where at time the subset with is streamed. Then there is an efficient -differentially private algorithm which outputs a vector in round such that, with probability at least 2/3 over the coin tosses of the algorithm, simultaneously, for all rounds with , it holds that

The same bounds hold if items can also be removed, i.e., as long as for all and all .

Proof.

We consider the following matrix: with every update being the indicator vector in . We drop the subscript on and denote by in the remainder of this subsection. Recall the function defined by eq. 8. Let . Using this function, we can compute the following factorization of : Let , resp. , be the principal submatrix of , resp. . Let , resp. , be the th block matrix of , resp. , consisting of all columns and the last rows. Then at any time epoch we output , where is the row-wise stacking of and for . For privacy, note that the -sensitivity of is ; therefore, adding Gaussian noise with variance preserves -differential privacy.

Using the same proof as in the case of we obtain

Using Theorem 1, we have the corollary. ∎

5.3 Other graph functions

Our upper bounds can also be applied to continual release algorithms that use the binary mechanism to compute prefix sums. Let be a sequence of function values. The difference sequence of is . For a graph function under continual release, its sensitivity may depend on the allowed types of edge updates. [FHO21] show that the -sensitivity of the difference sequence of the cost of a minimum spanning tree, degree histograms, triangle count and -star count does not depend on for partially dynamic updates (either edge insertions or deletions) and is for fully dynamic updates (edge insertions and/or deletions). Using this result, they prove that one can privately compute these graph functions under continual observation by releasing noisy partial sums of the difference sequences of the respective functions. More generally, they show the following result for any graph function with bounded sensitivity of the difference sequence.

Lemma 4 ([FHO21], cf Corollary 13).

Let be a graph function whose difference sequence has -sensitivity . Let and . For each , the binary mechanism yields an -differentially private algorithm to estimate on a graph sequence, which has additive error with probability at least .

We replace the summation by the binary mechanism in Lemma 4 by summation using , getting the following result.

Corollary 3.

Let be a graph function whose difference sequence has -sensitivity . There is an -differentially private algorithm that, for any sequence of edge updates of length to a graph , with probability at least 2/3 over the coin tosses of the algorithm, simultaneously for all rounds with , outputs at time an estimate of that has additive error at most .

5.4 Counting Substrings

We can also extend our mechanism for counting all substrings of length at most , where , in a sequence of letters. After each update (i.e., a letter), we consider the binary vector that is indexed by all substrings of length at most . The value of , which corresponds to the substring , indicates whether the suffix of length of the current sequence equals . We can cast the problem of counting substrings as a binary sum problem on the sequence of vectors and apply to the concatenated vectors, where .

Corollary 4.

Let be a universe of letters, let . There exists an -differentially private algorithm that, given a sequence of letters from , outputs, after each letter, the approximate numbers of substrings of length at most . With probability at least 2/3 over the coin tosses of the algorithm, simultaneously, for all rounds with , the algorithm has at time an additive error of at most , where is as defined in eq. 2.

Proof.

Let and be two sequences of letters that differ in only one position , i.e., if and only if . We observe that for any . Furthermore, for any , and , , there exist only two substrings of length so that . It follows that the -sensitivity is at most . Using , the proof concludes analogously to the proof of Corollary 2. ∎

5.5 Episodes

Given a universe of events (or letters) , an episode of length is a word over the alphabet , i.e., so that for each , , . Given a string , an occurence of in is a subsequence of that equals . A minimal occurrence of an epsiode in is a subsequence of that equals and whose corresponding substring of does not contain another subsequence that equals . In other words, is a minimal occurence of in if and only if (1) for all , , and (2) there does not exist so that for all , , , and either and , or and . The support of an episode on a string is the number of characters from the string that are part of at least one minimal occurrence of . Note that for an episode , its minimal occurrences may overlap. For the non-differentially private setting, Lin et al. [LQW14] provide an algorithm that dynamically maintains the number of minimal occurrences of episodes in a stream of events. For better performance, the counts may be restricted to those episodes that have some minimum support on the input (i.e., frequent episodes).

Lemma 5 ([LQW14]).

Let be a universe of events, let , and let . There exists a (non-private) algorithm that, given a sequence of events from , outputs, after each event, the number of minimal occurrences for each episode of length at most that has support at least . The time complexity per update is and the space complexity of the algorithm is .

There can be at most one minimal occurrence of that ends at a fixed element . Therefore, we can view the output of the algorithm after event as a binary vector that is indexed by all episodes of length at most and that indicates, after each event , if a minimal occurrences of epsiode ends at . Summing up the (binary) entries corresponding to in yields the number of minimal occurrences of in . Therefore, we can cast this problem of counting minimal occurrences of episodes as a binary sum problem and apply .

Corollary 5.

Let be a universe of events, let , and let . There exists an -differentially private mechanism that, given a sequence of events from , outputs, after each event, the approximate number of minimal occurrences for each episode of length at most that has support at least . With probability at least 2/3 over the coin tosses of the algorithm, simultaneously for all rounds with the algorithm has at time an additive error of at most .

Proof.

Let and be two sequences of letters that differ in only one position , i.e., if and only if . Recall that we are only interested in minimal occurences of episodes. Therefore, the number of query answers that are different for and are trivially upper bounded by two times the maximum number of episodes that end on the same character (once for and once for ), times the maximum length of an episode (as for every episode that ends at , only the one with the latest start is a minimal occurrence). This is bounded by . It follows that the global -sensitivity is at most . Using , the proof concludes analogously to the proof of Corollary 2. ∎

On sparse streams. Until now, we have focused mainly on worst case analysis. We next consider the case when the stream is sparse, i.e., number of ones in the stream is upper bounded by a parameter . Dwork et al. [DNRR15] showed that under this assumption, one can asymtotically improve the error bound on continual release to while preserving -differential privacy. Using their analysis combined with our bounds we directly get the error bound

5.6 Non-interactive Local Learning

In this section, we consider convex risk minimization in the non-interactive local differential privacy mode (LDP) using Theorem 1. That is, there are participants (also known as clients) and one server. Every client has a private input from a fixed universe . To retain the privacy of this input, each client applies a differentially-private mechanism to their data (local model) and then sends a single message to the server which allows the server to perform the desired computation (convex risk minimization in our case). After receiving all messages, the server outputs the result without further interaction with the clients (non-interactive).

In -dimensional convex risk minimization, a problem is specified by a convex, closed and bounded constraint set in and a function which is convex in its first argument, that is, for all , is convex. A data set defines a loss (or empirical risk) function: , where is a variable that is chosen as to minimize the loss function. The goal of the algorithm is to output a function that assigns to each input a value that minimizes the average loss over the data sample . For example, finding the median of the 1-dimensional data set consisting of points in the interval corresponds to finding that minimizes the loss .

When the inputs are drawn i.i.d. from an underlying distribution over the data universe , one can also seek to minimize the population risk: We will use some notations in this section. Let be disjoint intervals of of size . Let . Given a vector let be a “continuous intrapolation” of the vector , namely such that , where , with ties broken for smaller values. Also, let be defined as .

[STU17] showed the following:

Theorem 4 (Corollary 8 in [STU17]).

For every 1-Lipschitz777A function , defined over endowed with norm, is -Lipschitz with respect if for all , loss function , there is a randomized algorithm , such that for every distribution on , the distribution on obtained by running on a single draw from satisfies for all , where .

In other words, differentially private small error for 1-dimensional median is enough to solve differentially private loss minimization for general -Lipschitz functions. Prior work used a binary mechanism to determine the 1-dimensional median. We show how to replace this mechanism by the factorization mechanism of Theorem 2. As the reduction in Theorem 4 preserves exactly the additive error, our analysis of the additive error in Theorem 2 carries through, giving a concrete upper bound on the additive error.

We first recall the algorithm of [STU17]. Median is non-differentiable at its minimizer , but in any open interval around , its gradient is either or . first divides the interval into disjoint intervals of of size . Let . Every client constructs a -dimensional binary vector that has only on the coordinate if its data point . The client then executes the binary mechanism with the randomizer of Duchi et al. [DJW13] on its vector and sends the binary tree to the server. Based on this information the server computes a vector , where is the times the difference of the number of points in the interval and the number of data points in the interval . The server outputs the function .

To replace the binary tree mechanism used in [STU17] (dubbed as ) into a factorization mechanism based algorithm is not straightforward because of two reasons: (i) Smith et al. used the binary mechanism with a randomization routine from [DJW13], which expects as input a binary vector, while we apply randomization to , where is the binary vector, and (ii) the error analysis is based on the error analysis in [BS15] which does not carry over to the factorization mechanism.

We now describe how we modify to give an LDP algorithm . Instead of forming a binary tree, every client forms two binary vectors with if and 0 otherwise. Note that in both vectors exactly 1 bit is set and that

gives the number of bits in the interval minus the number of data points in the interval . The user now sends two vectors to the server formed by running the binary counter mechanism defined in Theorem 2 on and with privacy parameters . Since the client’s message is computed using a differentially private mechanism for each vector, the resulting distributed mechanism is -LDP using the basic composition theorem.

On receiving these vectors, the server first computes the aggregate vector

| (12) |

The server then computes and outputs .

To analyze our mechanism let be the vector formed in and be the vector that the server in would have formed if clients did not use any randomizer. Equation (3) in [STU17] first showed that, for all

| (13) | ||||

[STU17] (see Theorem 6) then use the fact that to show that and use eq. 13 to get their final bound, which is . We remark that we can replace by any as long as for all .

We now show an equivalent result to eq. 13. We argue that the vector serves the same purpose as . The key observation here is that contains the partial sum for the intervals and contains the partial sum for . Let be the vector corresponding to the estimates in eq. 12 if no privacy mechanism was used. Note that . Since the randomness used by different clients is independent,

where is the variance used in the binary counting mechanism of Theorem 2. Using the concentration bound as in the proof of Theorem 2, we have with . By the definition of , we therefore have ,

Now using the same line of argument as in [STU17], we get the following bound:

Corollary 6.

For every distribution on , with probability over and , the output satisfies , where . Further, is -LDP.

6 Lower bounds

Definition 5 (Max-Cut).

Given a graph , the maximum cut of the graph is the optimization problem

Let denote the maximum value.

In this section we use a reduction from the maximum sum problem. Let , let , , and for , let denote the -th coordinate of record . A mechanism for the -dimensional maximum sum problem under continual observation is to return for each , the value .

In [JRSS21] Jain et al. studied the problem of computing in the continual release model the maximum sum of a -dimensional vector. Two vectors and are neighboring if they differ in only one -dimensional vectors for some . They showed that for any -differentially private and -accurate mechanism for maximum sum problem under continual observation it holds that

-

1.

if and ;

-

2.

if .

We use this fact to show a lower bound for maintaining a minimum cut under continual observation, where each update consists of a set of edges that are inserted or deleted.

Theorem 5.

For all sufficiently large and any mechanism that returns the value of the minimum cut in a multi-graph with at least 3 nodes in the continual release model, is -differentially private, and -accurate it holds that

-

1.

if and ;

-

2.

if .

The same hold for any mechanism maintaining the minimum degree.

Proof.

Using a mechanism for the minimum cut problem under continual observation for a graph with nodes we show how to solve the -dimensional maximum sum problem under continual observation. During this reduction the input sequence of length for the maximum sum problem is transformed into a input sequence of length for the minimum cut problem. The lower bound then follows from this and the fact that in our reduction.

Let be a clique with nodes such that one of the nodes is labeled and all other nodes are numbered consecutively by . For every pair of nodes that does not contain , give it parallel edges, and give every node with parallel edges to . Note that has initially degree , every other node has initially degree and the minimum degree corresponds to the minimum cut. Whenever a new vector arrives, give to a sequence update that removes one of the parallel edges for every with . Let be the index that maximizes Note that the corresponding node labeled has degree , while has degree at least as , and every other node has degree at least . Furthermore the minimum degree also gives the minimum cut in . Thus can be used to solve the maximum sum problem and the lower bound follows from the above.

Note that the proof also shows the result for a mechanism maintaining the minimum degree. ∎

It follows that for the additive error for any -differentially private mechanism is , which implies that our additive error is tight up to a factor of if the minimum cut has constant size.

We now show a lower bound for counting substrings:

Theorem 6.

For all sufficiently large , universe , and and for any mechanism that, given a sequence of letters from , outputs, after each letter the approximate number of substrings of length at most that has support at least , is -differentially private, and -accurate it holds that

-

1.

if and ;

-

2.

if .

Proof.

Using a mechanism for substring counting under continual observation up to length and universe of letters of size we show how to create a mechanism for the -dimensional maximum sum problem under continual observation. During this reduction the input sequence of length for the maximum sum problem is transformed into a sequence of length . The lower bound follows from this and the fact that .

Let consist of many letters for , one per possible record in . Given a -dimensional bit-vector at time step we append to the input string the corresponding letter . Thus, two neighboring inputs for the maximum sum problem lead to two neighboring sequences and for the substring counting problem. The substring counting mechanism outputs at time step an approximate count of all substrings of length with maximum error over all counts and all time steps. Our mechanism determines the maximum count returned for any substring of length and returns it. This gives an answer to the maximum sum problem with additive error at most . ∎

This implies that for large enough and constant the additive error of our mechanism is tight up to a factor of .

Proof of Theorem 3.

Define the function, It is easy to see that From the basic approximation rule of Reimann integration, this implies that The following limit follows using L’Hospital rule:

That is, we have the following:

Lemma 6.

from above.

Let us consider the case when we are using an additive, data-independent mechanism for non-adaptive continual observation. That is, , where is a random variable over whose distribution does not depend on . The proof follows similarly as in the mean-squared case in Edmonds et al. [ENU20]. Note that,

| (14) |

where the expectation is over the coin tosses of .

Let be the covariance matrix of so that . Now let us define to be the so called sensitivity polytope and to be the -dimensional unit ball. As has full rank, it follows that is full dimensional. Now using Lemma 27 in [ENU20], we have that there exists an absolute constant such that

Define and . Then

That is, Further,

7 Experiments

We empirically evaluated algorithms for two problems, namely continual counting and continual top-1 statistic, i.e. the frequency of the most-frequent element in the histogram. As the focus of this paper is on specifying the exact constants beyond the asymptotic error bound on differentially private counting, we do not make any assumption on the data or perform no post-processing on the output. The main goal of our experiments is to compare the additive error of (1) our mechanism and (2) the binary mechanism instantiated with Gaussian noise (i.e., the binary mechanism that achieves -differential privacy). We do not compare our mechanism with the binary mechanism with Laplacian noise as it achieves a stronger notion of differential privacy, namely -differential privacy, and has an asymptotically worse additive error.

We also implemented Honaker’s variant of the binary mechanism [Hon15], but it was so slow that the largest value for which it terminated before reaching a 5 hour time limit was 512. For these small values of its -error was worse than the -error of the binary mechanism with Gaussian noise, which is not surprising as Honaker’s variant is optimized to minimize the -error, not the -error. Thus, we omit this algorithm in our discussion below.

Data sets for continual counting. For 8 different values of , namely for every

we generated a stream of Bernoulli random variables . Note that the eighth stream is an all-zero stream. Using Bernoulli random variables with ensures that our data streams do not satisfy any smoothness properties, i.e., it makes it challenging for the mechanism to output smooth results.

We conjectured that using different values would not affect the magnitude of the additive -error, as the noise of both algorithms is independent of the data. Indeed our experiments confirmed this conjecture, i.e., the additive error in the output is not influenced by the different input streams. Note that the same argument also applies to real-world data, i.e., we would get the same results with real-world data. This was observed before and exploited in the empirical evaluation of differentially private algorithms for industrial deployment. For example, Apple used an all-zero stream to test its first differentially private algorithm, see the discussion on the accuracy analysis in the talk of Thakurta at Usenix 2017 [Tha17]. An all-zero stream was also used in the empirical evaluation of the differentially private continual binary counting mechanism in [DMR+22] (see Figure 1 in the cited paper).

We evaluated data streams with varying values of to not only study the additive error, but also the signal to noise ratio (SNR) in data streams with different sparsity of ones.

Data sets for top-1 statistic. We generated a stream of elements from a universe of 20 items using Zipf’s law [Zip16]. Zipf’s Law is a statistical distribution that models many data sources that occur in nature, for example, linguistic corpi, in which the frequencies of certain words are inversely proportional to their ranks. This is one of the standard distributions used in estimating the error of differentially private histogram estimation [CR21].

Experimental setup. To ensure that the confidence in our estimates is as high as possible and reduce the fluctuation due to the stochasticity of the Gaussian samples, we ran both the binary tree mechanism and our matrix mechanism for repetitions and took the average of the outputs of these executions.

Results. Figure 3 shows the output of the algorithms for continual counting. Figure 4 shows the private estimate of the frequency of the most frequent item for each algorithm. On the -axis, we report the output of the algorithms and the non-private output, i.e., the real count. The -axis is the current time epoch.

(1) The first main takeaway is that our additive error (i.e., difference between our estimate and real count) is consistently less than that of the binary mechanism. For for the improvement in the additive error is factor of roughly 4. This aligns with our 1. We note that a similar observation was made in the recent work [HUU23] with respect to the -error.

(2) The second main takeway of our experiments is that the error due to the binary mechanism is a non-smooth and a non-monotonic function of while our mechanism distributes the error smoothly and it is monotonically increasing. This can be explained from the fact that the variance of the noise in our algorithm is a monotonic function, , while that of the binary mechanism is , where is the number of ones in the bitwise representation of , i.e., a non-smooth, non-monotonic function.

| Binary | 4.72 | 2.40 | 1.12 | 0.61 | 0.31 | 0.15 | 0.072 |

| Our mech | 14.50 | 7.37 | 3.46 | 1.86 | 0.96 | 0.47 | 0.22 |

(3) In Table 2, we present the average SNR over all time epochs between the private estimates and the true count for different sparsity values of the stream. We notice that our output is consistently better and is about three times better when . We noticed that for when the fraction of ones is about , the average SNR for the binary mechanism drops below , i.e., the error is larger than the true count, while for our mechanism it only drops below when the fraction of ones is . That is, our mechanism can handle three times sparser streams than the binary mechanism for the same SNR. This observation continued to hold for different privacy parameters.

(4) For histogram estimation, our experiments reveal that our mechanism performs consistently better than the binary mechanism, both in terms of the absolute value of the additive error incurred as well as in terms of the smoothness of the error. This is consistent with our theoretical results. Further, on average over all time epochs, the SNR for our mechanism is while that of binary mechanism is , i.e., it is a factor of about 3 better.

8 Conclusion

In this paper, we study the problem of binary counting under continual release. The motivation for this work is that (1) only an asymptotic analysis is known for the additive error for the classic mechanism (known as the binary mechanism) for binary counting under continual release, and (2) in practice the additive error is very non-smooth, which hampers its practical usefulness. Thus, we ask

Is it possible to design differentially private algorithms with fine-grained bounds on the constants of the additive error?

We first observe that the matrix mechanism can actually be used for binary counting in the continual release model if the factorization uses lower-triangular matrices. Then we give an explicit factorization for that fulfill the following properties:

(1) We improved a 28 years old result on to give an analysis of the additive error that only has a small gap between the upper and lower bound for the counting problem. This means that the behavior of the additive error is very well understood.

(2) The additive error is a monotonic smooth function of the number of updates performed so far. In contrast, previous algorithms would either output with the error that changes non-smoothly over time, making them less interpretable and reliable.

(3) The factorization for the binary mechanism consists of two lower-triangular matrices with exactly distinct non-zero entries that follow a simple pattern so that only space is needed.

(4) We show that all these properties are not just theoretical advantages, but also makes a big difference in practice (see Figure 3).

(5) Our algorithm is very simple to implement, consisting of a matrix-vector multiplication and the addition of two vectors. Simplicity is an important design principle in large-scale deployment due to one of the important goals, which is to reduce the points of vulnerabilities in a system. As there is no known technique to verify whether a system is indeed -differentially private, it is important to ensure that a deployed system faithfully implements a given algorithm that has provable guarantee. This is one main reason for us to pick the Gaussian mechanism: it is easy to implement with floating point arithmetic while maintaining the provable guarantee of privacy. Further, the privacy guarantee can be easily stated in the terms of concentrated-DP or Renyi-DP.

Finally, we show that our bounds have diverse applications that range from binary counting to maintaining histograms, various graph functions, outputting a synthetic graph that maintains the value of all cuts, substring counting, and episode counting. We believe that there are more applications of our mechanism, and this work will bring more attention to leading constants in the analysis of differentially private algorithms.

Acknowledgements

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (Grant agreement

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/c04c27b5-c121-4e8f-b7ba-53debe16f4a3/LOGO_ERC-FLAG_EU_crop.jpg)

No. 101019564 “The Design of Modern Fully Dynamic Data Structures (MoDynStruct)” and from the Austrian Science Fund (FWF) project “Fast Algorithms for a Reactive Network Layer (ReactNet)”, P 33775-N, with additional funding from the netidee SCIENCE Stiftung, 2020–2024. JU’s research was funded by Decanal Research Grant. The authors would like to thank Rajat Bhatia, Aleksandar Nikolov, Rasmus Pagh, Vern Paulsen, Ryan Rogers, Thomas Steinke, Abhradeep Thakurta, and Sarvagya Upadhyay for useful discussions.

References

- [AFKT21] Hilal Asi, Vitaly Feldman, Tomer Koren, and Kunal Talwar. Private stochastic convex optimization: Optimal rates in l1 geometry. In International Conference on Machine Learning, pages 393–403. PMLR, 2021.

- [AFKT22] Hilal Asi, Vitaly Feldman, Tomer Koren, and Kunal Talwar. Private online prediction from experts: Separations and faster rates. arXiv preprint arXiv:2210.13537, 2022.

- [AFT22] Hilal Asi, Vitaly Feldman, and Kunal Talwar. Optimal algorithms for mean estimation under local differential privacy. arXiv preprint arXiv:2205.02466, 2022.

- [App21] Apple. https://covid19.apple.com/contacttracing, 2021.

- [Ben77] G Bennett. Schur multipliers. 1977.

- [BFM+13] Jean Bolot, Nadia Fawaz, Shan Muthukrishnan, Aleksandar Nikolov, and Nina Taft. Private decayed predicate sums on streams. In Proceedings of the 16th International Conference on Database Theory, pages 284–295. ACM, 2013.

- [BG00] Albrecht Böttcher and Sergei M Grudsky. Toeplitz matrices, asymptotic linear algebra and functional analysis, volume 67. Springer, 2000.

- [BNS19] Mark Bun, Jelani Nelson, and Uri Stemmer. Heavy hitters and the structure of local privacy. ACM Transactions on Algorithms, 15(4):51, 2019.

- [BNSV15] Mark Bun, Kobbi Nissim, Uri Stemmer, and Salil Vadhan. Differentially private release and learning of threshold functions. In 2015 IEEE 56th Annual Symposium on Foundations of Computer Science, pages 634–649. IEEE, 2015.

- [BS15] Raef Bassily and Adam Smith. Local, private, efficient protocols for succinct histograms. In Proceedings of the forty-seventh annual ACM symposium on Theory of computing, pages 127–135. ACM, 2015.

- [BW18] Borja Balle and Yu-Xiang Wang. Improving the gaussian mechanism for differential privacy: Analytical calibration and optimal denoising. In International Conference on Machine Learning, pages 394–403. PMLR, 2018.

- [CCMRT22] Christopher A Choquette-Choo, H Brendan McMahan, Keith Rush, and Abhradeep Thakurta. Multi-epoch matrix factorization mechanisms for private machine learning. arXiv preprint arXiv:2211.06530, 2022.

- [CDC20] CDC. https://www.cdc.gov/coronavirus/2019-ncov/daily-life-coping/contact-tracing.html, 2020.

- [CLSX12] T-H Hubert Chan, Mingfei Li, Elaine Shi, and Wenchang Xu. Differentially private continual monitoring of heavy hitters from distributed streams. In International Symposium on Privacy Enhancing Technologies Symposium, pages 140–159. Springer, 2012.

- [CQ05] Chao-Ping Chen and Feng Qi. The best bounds in wallis? inequality. Proceedings of the American Mathematical Society, 133(2):397–401, 2005.

- [CR21] Adrian Cardoso and Ryan Rogers. Differentially private histograms under continual observation: Streaming selection into the unknown. arXiv preprint arXiv:2103.16787, 2021.

- [CSS11] T.-H. Hubert Chan, Elaine Shi, and Dawn Song. Private and continual release of statistics. ACM Trans. Inf. Syst. Secur., 14(3):26:1–26:24, 2011.

- [Dav84] Kenneth R Davidson. Similarity and compact perturbations of nest algebras. 1984.

- [DJW13] John C Duchi, Michael I Jordan, and Martin J Wainwright. Local privacy and statistical minimax rates. In 2013 IEEE 54th Annual Symposium on Foundations of Computer Science, pages 429–438. IEEE, 2013.

- [DMR+22] Sergey Denisov, Brendan McMahan, Keith Rush, Adam Smith, and Abhradeep Thakurta. Improved differential privacy for sgd via optimal private linear operators on adaptive streams. arXiv preprint arXiv:2202.08312, 2022.

- [DNPR10] Cynthia Dwork, Moni Naor, Toniann Pitassi, and Guy N. Rothblum. Differential privacy under continual observation. In Proceedings of the 42nd ACM Symposium on Theory of Computing, pages 715–724, 2010.

- [DNRR15] Cynthia Dwork, Moni Naor, Omer Reingold, and Guy N Rothblum. Pure differential privacy for rectangle queries via private partitions. In International Conference on the Theory and Application of Cryptology and Information Security, pages 735–751. Springer, 2015.

- [DR14] Cynthia Dwork and Aaron Roth. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- [EKKL20] Marek Eliáš, Michael Kapralov, Janardhan Kulkarni, and Yin Tat Lee. Differentially private release of synthetic graphs. In Proceedings of the Annual Symposium on Discrete Algorithms, pages 560–578. SIAM, 2020.

- [EMM+23] Alessandro Epasto, Jieming Mao, Andres Munoz Medina, Vahab Mirrokni, Sergei Vassilvitskii, and Peilin Zhong. Differentially private continual releases of streaming frequency moment estimations. arXiv preprint arXiv:2301.05605, 2023.

- [ENU20] Alexander Edmonds, Aleksandar Nikolov, and Jonathan Ullman. The power of factorization mechanisms in local and central differential privacy. In Proceedings of the 52nd Annual ACM SIGACT Symposium on Theory of Computing, pages 425–438, 2020.

- [FHO21] Hendrik Fichtenberger, Monika Henzinger, and Wolfgang Ost. Differentially private algorithms for graphs under continual observation. In 29th Annual European Symposium on Algorithms, ESA 2021, September 6-8, 2021, Lisbon, Portugal (Virtual Conference), 2021.

- [Fie73] Miroslav Fiedler. Algebraic connectivity of graphs. Czechoslovak mathematical journal, 23(2):298–305, 1973.

- [Haa80] Uffe Haagerup. Decomposition of completely bounded maps on operator algebras, 1980.

- [Hon15] James Honaker. Efficient use of differentially private binary trees. Theory and Practice of Differential Privacy (TPDP 2015), London, UK, 2015.

- [HP93] Uffe Haagerup and Gilles Pisier. Bounded linear operators between -algebras. Duke Mathematical Journal, 71(3):889–925, 1993.

- [HQYC21] Ziyue Huang, Yuan Qiu, Ke Yi, and Graham Cormode. Frequency estimation under multiparty differential privacy: One-shot and streaming. arXiv preprint arXiv:2104.01808, 2021.

- [HUU23] Monika Henzinger, Jalaj Upadhyay, and Sarvagya Upadhyay. Almost tight error bounds on differentially private continual counting. SODA, 2023.

- [JRSS21] Palak Jain, Sofya Raskhodnikova, Satchit Sivakumar, and Adam Smith. The price of differential privacy under continual observation. arXiv preprint arXiv:2112.00828, 2021.

- [KMS+21] Peter Kairouz, Brendan McMahan, Shuang Song, Om Thakkar, Abhradeep Thakurta, and Zheng Xu. Practical and private (deep) learning without sampling or shuffling. In International Conference on Machine Learning, pages 5213–5225. PMLR, 2021.

- [LMH+15] Chao Li, Gerome Miklau, Michael Hay, Andrew McGregor, and Vibhor Rastogi. The matrix mechanism: optimizing linear counting queries under differential privacy. The VLDB journal, 24(6):757–781, 2015.

- [LQW14] Shukuan Lin, Jianzhong Qiao, and Ya Wang. Frequent episode mining within the latest time windows over event streams. Applied intelligence, 40(1):13–28, 2014.

- [Mat93] Roy Mathias. The hadamard operator norm of a circulant and applications. SIAM journal on matrix analysis and applications, 14(4):1152–1167, 1993.

- [MMHM21] Ryan McKenna, Gerome Miklau, Michael Hay, and Ashwin Machanavajjhala. Hdmm: Optimizing error of high-dimensional statistical queries under differential privacy. arXiv preprint arXiv:2106.12118, 2021.

- [MN12] Shanmugavelayutham Muthukrishnan and Aleksandar Nikolov. Optimal private halfspace counting via discrepancy. In Proceedings of the forty-fourth annual ACM symposium on Theory of computing, pages 1285–1292, 2012.

- [MNT20] Jiří Matoušek, Aleksandar Nikolov, and Kunal Talwar. Factorization norms and hereditary discrepancy. International Mathematics Research Notices, 2020(3):751–780, 2020.

- [Pau82] Vern I Paulsen. Completely bounded maps on -algebras and invariant operator ranges. Proceedings of the American Mathematical Society, 86(1):91–96, 1982.

- [Pau86] Vern I Paulsen. Completely bounded maps and dilations. New York, 1986.

- [RN10] Vibhor Rastogi and Suman Nath. Differentially private aggregation of distributed time-series with transformation and encryption. In Proceedings of SIGMOD International Conference on Management of data, pages 735–746, 2010.

- [Sch11] Jssai Schur. Remarks on the theory of restricted "a ned bilinear forms with infinitely many ä mutable ones. 1911.

- [STU17] Adam Smith, Abhradeep Thakurta, and Jalaj Upadhyay. Is interaction necessary for distributed private learning? In IEEE Symposium on Security and Privacy, 2017.

- [Tha17] Abhradeep Thakurta. Differential privacy: From theory to deployment, https://www.youtube.com/watch?v=Nvy-TspgZMs&t=2320s&ab_channel=USENIX, 2017.

- [Upa19] Jalaj Upadhyay. Sublinear space private algorithms under the sliding window model. In International Conference on Machine Learning, pages 6363–6372, 2019.

- [UU21] Jalaj Upadhyay and Sarvagya Upadhyay. A framework for private matrix analysis in sliding window model. In International Conference on Machine Learning, pages 10465–10475. PMLR, 2021.

- [UUA21] Jalaj Upadhyay, Sarvagya Upadhyay, and Raman Arora. Differentially private analysis on graph streams. In International Conference on Artificial Intelligence and Statistics, pages 1171–1179. PMLR, 2021.

- [WCZ+21] Tianhao Wang, Joann Qiongna Chen, Zhikun Zhang, Dong Su, Yueqiang Cheng, Zhou Li, Ninghui Li, and Somesh Jha. Continuous release of data streams under both centralized and local differential privacy. In Proceedings of the 2021 ACM SIGSAC Conference on Computer and Communications Security, pages 1237–1253, 2021.

- [Zei16] Ofer Zeitouni. Gaussian fields, 2016.

- [Zip16] George Kingsley Zipf. Human behavior and the principle of least effort: An introduction to human ecology. Ravenio Books, 2016.