Consensus Function from an norm Regularization Term for its Use as Adaptive Activation Functions in Neural Networks

Abstract

The design of a neural network is usually carried out by defining the number of layers, the number of neurons per layer, their connections or synapses, and the activation function that they will execute. The training process tries to optimize the weights assigned to those connections, together with the biases of the neurons, to better fit the training data. However, the definition of the activation functions is, in general, determined in the design process and not modified during the training, meaning that their behavior is unrelated to the training data set. In this paper we propose the definition and utilization of an implicit, parametric, non-linear activation function that adapts its shape during the training process. This fact increases the space of parameters to optimize within the network, but it allows a greater flexibility and generalizes the concept of neural networks. Furthermore, it simplifies the architectural design since the same activation function definition can be employed in each neuron, letting the training process to optimize their parameters and, thus, their behavior. Our proposed activation function comes from the definition of the consensus variable from the optimization of a linear underdetermined problem with an regularization term, via the Alternating Direction Method of Multipliers (ADMM). We define the neural networks using this type of activation functions as networks. Preliminary results show that the use of these neural networks with this type of adaptive activation functions reduces the error in regression and classification examples, compared to equivalent regular feedforward neural networks with fixed activation functions.

Index Terms:

Activation Functions, ADMM, Functional Analysis, norm regularization, Neural NetworksI Introduction

Neural Networks are widely used for different applications, such as identification, security, defense, face and speech recognition, healthcare, or weather and stock market prediction, among others, [1, 2, 3, 4, 5, 6, 7, 8]. Although the architectural design of the neural networks may amply vary depending on the particular application, they all share the basic intrinsic foundation of mimicking the behavior of a biological brain by connecting several computational nodes, the so-called neurons, that perform simple non-linear operations, with the aim of developing complex practices. Mathematically, neural networks are a powerful tool that can basically describe any relationship between any given input-output set of data, potentially modeling any imaginable non-linear mapping. Despite its questionless capabilities, neural networks rely on the simplicity of connecting several layers of neurons that enchains two straightforward operations: a linear combination of the results provided from the neurons of a previous layer, and a non-linear function, which outcome is passed to the next layer. The number of layers, the number of neurons per layer, and the type of non-linear functions implemented to the neurons of each layer, the so-called activation or transfer function, is part of the architectural design of the neural network. The larger the network, the more complex capabilities and highly non-linearities could be modeled, [9].

The fitting of the data to the designed network is done by adapting the weights and biases that define the linear combination on the first operation done in each neuron during the training process. However, the selection of the activation functions generally is reduced to a limited set of non-linear functions, such as the sigmoid, rectified linear units, max-pooling, etc. This selection has, a priori, little or no influence from the dataset that is used for training the network. The exploration of adaptive activation functions to increase the flexibility of the neural networks during the training process is an active topic of research. [10] points out the importance that the activation functions have on the neural network performance and review some initial attempts to find an optimal distribution of activation functions from a reduced set of options, by using evolutionary algorithms. [11] defines the maxout activation function, which extracts the maximum of a set of linear functions, arbitrarily approximating any convex function. [12] proposes adaptive piecewise linear units that are learned using gradient descend during the training process, enabling the approximation of both convex and non-convex functions; and [13] introduces an unit, which performs the norm of the normalized input data, adapting the values of during the training process and generalizing the pooling operator, for which the maxpooling and maxout units are particular cases of it. These methods progress towards neural network architectures whose training process learns, not only the relationships among the neurons, but also the neurons themselves. However, they do not totally generalize the activation functions and require software applications to approximate the gradients during the training process, thus, a better understanding of the underlying functional analysis of generalized activation functions is still required.

In this paper, we present a parametric activation function whose parameters are learned during the training process and that can adapt to mimic most of the common activation functions regularly used. On one hand, this imply to increase the space of training parameters, but on the other hand, this generalizes the global structure of the neurons, since their activation functions do not need to be predefined. The definition of the proposed activation function comes from the functional analysis of the consensus variable when performing a linear optimization problem with a general norm regularization term, via the Alternating Direction Method of Multipliers (ADMM), as it is described in Sect. II. The mathematical development for the use of the proposed activation function, and the analysis for the computation of the gradients for the optimization of the implied parameters is detailed in Sect. III. Section IV presents some preliminary results, showing that the use of these adaptive activation functions reduces both the training and testing errors when they are applied on shallow neural networks, compared to equivalent regular feedforward networks in which the activation functions are predetermined. Finally, Sect. V concludes the paper.

II Activation function definition

In this work, we propose an implicit, parametric, non-linear activation function that can adapt its shape during the training process to create a flexible neural network. This function appears as the result of the research carried out by the same authors on the field of optimization of linear under-determined problems, via the ADMM, [14, 15, 16, 17, 18, 19, 20, 21, 22]. Specifically, the linear problem can be optimized with an norm regularization term as follows:

| (1) |

On one hand, is each of the submatrices in which is divided by rows, where is the number of data and the number of unknowns, usually having . is each of the subvectors in which is divided; and is each of the independent solutions that can be generated for each division. The optimization process using the ADMM algorithm imposes the agreement of all solutions via the consensus variable , as depicted in Fig. 1.

On the other hand, the regularization term imposes a certain structure in the particular solution sought for the problem, where is just a design hyperparameter to weight the importance of this type of structure in the optimization process. The parameter induces a preferred direction of searching for the solution. In this sense, a norm-1 regularization (lasso) imposes a sparse solution, [22, 23, 24], a norm-2 regularization (ridge regression) seeks for the solution with minimum energy [25, 26, 27], meanwhile a norm-infinity minimizes the magnitude of the greatest component [28, 29, 30]. As a more general expression, the bridge regression regularization term, of the form , accounts for the previously mentioned lasso for and ridge regression for , and performs a smooth version of the elastic net regularization for , [31, 32, 33, 18, 34, 35]. Meanwhile, the parameter defines the way the distances are measured, distorting the metric space. Particularly, for large values of , the distance between two different points tends to be very similar, and specifically, , leads to the discrete distance.

The optimization problem on (1) is solved by introducing the Lagrangian multipliers or dual variables , one for each constrain, and sequentially optimizing , v, and , in this order. See Ref. [21] for a detailed explanation. The optimization of the primal and dual variables and are always the same regardless the type of regularization, since this one only affects the definition of the consensus variable v. Its optimization, therefore, comes from the computation of the gradient of the Lagrangian with respect to each component :

| (2) |

where is the augmented parameter. Notice that the input variable in the expression (2) is , namely, the sum of the average of the components of the variables and , while the output is, indeed, . Consequently, this is, in general, a non explicit expression except for some particular values of and , and it has to be solved by iteratively reweighting the expression with the previous iterations of .

Definitely, the selection of the values of and for the regularization term will determine the shape of this implicit function. Interestingly, the behavior of this function resemble the definition of the common activation functions, such as the ReLU (), PReLU (), linear (), sigmoid (), or soft clipping (), as depicted in Figure 2, [36, 37]. By redefining as the input in Eqn. (2), dropping the component dependence , grouping and redefining the constant as a tuning hyperparameter, and for the particular case of , we define the implicit, non-linear, parametric activation function as follows:

| (3) |

which defines the non-linear mapping

| (4) |

where is the input and so-called activation variable, is the learning parameter of the function, and is the output of the activation function . We call the -network to the neural networks designed with this type of activation function. The general case for would lead to the denominated -network, which will extend this work in the future.

III Network implementation

The top part of Fig. 3 depicts an example of a fully connected network of layers with neurons per layer, for ( indicates a super index, not a power). Additionally, the layer is considered the input layer, and does not have neurons properly, but is it just the input data; the layer is the output layer and all the others are the intermediate or hidden layers. The parameters that define the network include the weights of the synapses that connect the different layers and the internal parameter of each neuron for defining the activation function. The benefit of using a parametric activation function is that the non-linear general expression is the same in all neurons. In this way, as represented at the bottom part of Fig. 3, the activation variable , namely, the input to the activation function of the neuron of layer , can be expressed as follows:

| (5) |

where is the bias of the neuron of layer , thus considering . The output of the neuron is , and notice that corresponds to the input data to the network, where is its dimensionality, and is the output data, with dimensionality .

III-A Feedforward pass

The first challenge appears in the actual implementation of the activation function, since this is an implicit expression. In order to overcome this, a reweighted iteration process during each feedforward pass is proposed.

-

•

Method 1: Taking into account that , the activation function in (3), where is the input and is the output, can be expressed as follows:

(6) In this way, a quick iteration can be done to approach the actual value of the output by considering the output of the previous iteration on the evaluation of the right hand side of equation (6). However, this method has the disadvantage of a potential divergence for values of , when the input is large in magnitude, thus only assuring the convergence for .

-

•

Method 2: A second approach is proposed since the activation function (3) can be represented in a different way. Taking into account that, for this function, , the implicit expression can be reformulated as

(7) in which, again, a quick iteration over the output values can approach the actual output of the activation function. This second method will have convergence problems when is large for small input values in magnitude, as they will tend to be close to since , though the function is expected to have a quasi-linear behavior for input values smaller than in magnitude.

Since both methods result to be complementary, a solution for implementing the activation function consists on combining these two methods: if , use (6); if , use (6) for small input values and (7) for large input values. For is one of the few particular cases in which the activation function has an explicit expression, as .

The last step for the feedforward evaluation consists on determine the threshold that discriminates the input values between small or large. As shown on the left side of Fig. 2, the activation functions are odd and strictly monotonically increasing functions, and have three clear intervals: one for large negative values, one for values around zero, and another one for large positive values. The points in which the tendency changes depends on the parameter , and marks the threshold for considering the input values as small or large. For values of , the slope of the functions gets a value close to for inputs around , while it gets small values for large inputs in magnitude. Then, the threshold can be selected as this point of change of tendency, selecting the input value for which the derivative of the activation functions is equal to .

From the implicit expression of the activation function in (3), its derivative can be expressed as follows:

| (8) |

By setting , the output value for the threshold, , is computed:

| (9) |

Because of the properties of the activation function, it can be considered the positive value for the positive input and the negative value for the negative input. The expression in (9) indicates the value of the output of the activation function to achieve a slope of , but it is necessary to compute the value of the input . To this end, it is easy to extract the input given the output from (3), since

| (10) |

And by introducing (9) into (10),

| (11) |

In summary, for evaluating the implicit activation function employ the following casuistic:

| (12) |

III-B Optimization of the parameters of the network via Backpropagation

Training a neural network is the process of obtaining the optimal parameters that defines it, commonly the weights and biases, given a set of input-output training data. Backpropagation is the training method that evaluates the error in the last layer and transfers it throughout the network, to the first layer. In the proposed network, besides the weights and biases, the neural network is tuned as well with the parameters of the activation function in each neuron.

In regards of the general structure of the network described in Fig. 3, consider a training dataset of size , where is the input data and is the output data, for all . The mean square error for a specific parameter of the network is

| (13) |

where is the computed output of the network for the input . Notice that, for each sample data , the component of the output vector is , namely, the output of the neuron of the last layer.

The parameter is updated based on the gradient descend:

| (14) |

where is the iteration step and is the learning ratio, which can be different for the parameters , , than for the weights and biases, .

Since the error can be accumulated for each training pair, the analysis can be done for each pair independently, dropping the dependence of the training sample.

III-B1 Optimization of the parameter in the activation functions

Consider a multidimensional regression neural network and start with the optimization of the parameters of the last layer. The error with respect to the parameter of the activation function of the neuron, is

| (15) | |||

The partial derivative of the activation function with respect to the parameter is required to complete the expression. Similar as shown in Eqn. (8), and remembering the implicit expression of the activation function in (3), this partial derivative can be computed as follows:

| (16) |

Therefore,

| (17) |

For the intermediate layers, the chain rule can be applied. The partial derivative of the error with respect to the parameter of the node of the layer can be expressed as follows:

| (18) |

since this parameter affects the nodes of layer , where is the activation variable of the node of layer . Similarly, and making use of Eqn. (5),

| (19) |

By defining the error term as

| (20) |

and introducing it into (19),

| (21) |

Knowing that

| (22) |

by introducing (20)-(22) into (18), the final expression is

| (23) |

and defining, for the last layer,

| (24) |

The computation of the expression is defined in (16) and, on the same way,

| (25) |

III-B2 Optimization of the weights and biases

The optimization of the weights and biases is done as in any regular neural network. Considering the weight that goes from the neuron of layer to the neuron of layer , and taking into account that the bias of neuron of layer is , the partial derivative of the error with respect to the weight can be represented as follows:

| (26) |

III-B3 Modification for a classification network

In case of using the network for classification, the last layer should be modified to a regular softmax function. The output of the neuron of the last layer would be

| (29) |

This last layer does not have parameters to optimize, thus its presence will only affect the optimization of the weights and biases. The gradient of the error with respect to is

| (30) |

where

| (31) | ||||

The rest of parameters are optimized as in Sect. III-B.

III-B4 Summary of expressions of gradients

In summary, the final expressions for the optimization of the parameters of the network are the following:

-

•

Gradient of the error with respect to :

(32) for for regression, or for for classification.

-

•

Gradient of the error with respect to the weights and biases:

(33) for . Where the error term is defined as

(34) and, for the last layer,

(35) for regression, and

(36) for classification.

IV Numerical Results

The network has been implemented in Matlab and tested to validate its correct performance and to see how the activation functions adapt to the given data. The training is performed until reaching a maximum of number of iterations or when the training error goes below maximum error threshold.

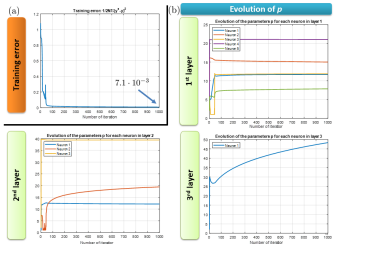

IV-A Example #1: Evolution of the parameters

The first example simply tries to visualize the evolution of the parameters of each neuron during the training process. A simple 3-layers network with , , and neurons, respectively, is defined to match the non-linear function . The training data set is values taken from a uniform random distribution in the range [-5,5]. The configuration parameters are shown in Table I and the training results in Fig. 4. It can be seen how the parameter of each neuron evolves to adapt the shape of the activation function in order to better match the training data. Figure 5 illustrates the performance of the trained network. It performs as expected, although is shows some error since the output should be closer to , due to the reduced size of the training dataset.

| Parameter | Value |

|---|---|

| Initial | 2 |

| Initial | random |

| Max # of iterations | 1000 |

| Max error | |

| Iterations for the activation function | 100 |

| 100 | |

| 0.1 |

IV-B Example #2: Comparison with a regular feedforward network

The second example tries to compare the performance of the proposed network with a regular feedforward network for regression. To this end, a 4-layers neural network with , , , and neurons per layer, respectively, is trained to implement (a) the function , and (b) the function . To make the comparison as fair as possible, the activation functions of the feedforward network are selected to be saturation linear and symmetric, (’satlins’) for the hidden layers, since they have the same shape as the proposed activation functions for ; and purelin, (’linear’) for the output layer, as a linear neuron is recommended for the last layer of regression networks, and it has the same shape as the proposed activation function for , and also to have the same slope. Likewise, the learning ratios of the weights and biases are set the same. For numerical reasons, we set the initial value of to for the hidden layers, and the value of to . The configuration parameters of both networks are shown in Tables II and III, respectively. The training data set is values taken from a uniform random distribution in the range [-1,1]. Two types of training are done for the network: (i) fixing the value of of each neuron, namely, and the activation functions get fixed, and (ii), allowing the adaptation of the activation functions. The training progress and the performance results are shown in Figs. 6 and 7, while Table IV shows the error computed as defined in (13).

| Parameter | Value |

|---|---|

| Initial | hidden layers: 100 output layer: 2 |

| Initial | random |

| Max # of iterations | 1000 |

| Max error | |

| Iterations for the activation function | 10 |

| (i) 0, (ii) | |

| 0.01 |

| Parameter | Value |

|---|---|

| Activation functions | hidden layers: ’satlins’ output layer: ’linear’ |

| Initial | NGuyen-Widrow |

| Max # of iterations | 1000 |

| Max gradient | |

| 0.01 |

| Network | Error case | Error case |

|---|---|---|

| Feedforward | ||

| network, | ||

| network, |

It can be seen how the adaptative network reduces the error with respect to a equivalent regular network. It is also interesting to see how the final values of for each neuron greatly vary even within the same layer. Table V shows the final values of case (a) after the training is complete. Neuron #1 of layer 3 generates an activation function similar to a saturation linear and symmetric, while neuron #2 of the same layer generates an activation function similar to a ReLU. Notice that, since , the minimum allowed value of is .

| Parameter | Layer 1 | Layer 2 | Layer 3 | Layer 4 |

|---|---|---|---|---|

| Neuron #1 | 139.95 | 395.13 | 326.07 | 2.00 |

| Neuron #2 | 93.16 | 17.06 | 1.01 | |

| Neuron #3 | 39.22 | 43.27 | 28.00 | |

| Neuron #4 | 100.00 | 120.19 | ||

| Neuron #5 | 100.00 | 165.76 | ||

| Neuron #6 | 25.06 | |||

| Neuron #7 | 100.00 | |||

| Neuron #8 | 30.45 | |||

| Neuron #9 | 190.52 | |||

| Neuron #10 | 48.08 |

IV-C Example #3: Classification Application

Matlab contains a dataset of examples for describing types of human activities based on features. The type of activities are Sitting, Standing, Walking, Running, and Dancing, while the features include mean acceleration, root mean square body acceleration in , , and , among others.

The network and a regular feedforward network are designed with 3 layers containing , , and neuron each, respectively. The last layer is configured as a softmax layer for classification. The configuration parameters for the network and the feedforward network are shown in Tables VI and VII, respectively. The network is trained with (i) fixed activation functions, , and (ii) allowing the adaptation of the activation functions, , to compare the results.

Out of the total dataset, randomly select data per class, for a total of training cases, and randomly selected cases from the remaining set for testing. This selection is done five times, computing the training and testing classification errors for each case.

| Parameter | Value |

|---|---|

| Initial | hidden layers: 5 output layer: ’softmax’ |

| Initial | random |

| Max # of iterations | 1000 |

| Max error | |

| Iterations for the activation function | 10 |

| (i) 0, (ii) | |

| 0.1 |

| Parameter | Value |

|---|---|

| Activation functions | hidden layers: ’tansig’ output layer: ’softmax’ |

| Initial | NGuyen-Widrow |

| Max # of iterations | 1000 |

| Max gradient | |

| 0.1 |

Table VIII and Fig. 8 show the results of the mean and standard deviation of the training and classification errors. Although the feedforward network provides a smaller training error, the adaptative network is able to achieve a smaller testing error. However, non-adaptative network gets the worse results.

| Network | Training Error (std) | Testing Error (std) |

|---|---|---|

| network, | ||

| network, | ||

| Feedforward |

V Conclusion

This paper has proposed a new structure for the design of neural networks by defining a parametric non-linear activation function that can adapt its shape during the training process. This allows to configure the whole network with one single activation function definition, not having to predefine its expression for each layer, and also allowing different shapes for the neurons within the same layer after training. This increases the space of parameters for optimization, but provides more flexibility and generalization on the architecture of neural networks.

The proposed activation functions comes as the expression of the consensus variable when optimizing a linear problem with an norm regularization term. This expression is, in general, implicit, meaning that there is no an explicit expression of the output variable given the input value. Although this is a challenge for its use, a detailed analysis is provided for its evaluation by the use of a reweighted iteration process. In this paper, the particular case of is implemented. The evaluation of the feedforward pass of the network with this activation function, as well as a detailed explanation of the training process via the backpropagation method for the optimization of the parameter and the weigths and biases is also provided for completeness.

Preliminary results for both regression and classification show that the proposed network reduces the error of the testing sets when comparing with an equivalent regular neural network with the same number of layers and neuron, and with predefined activation functions that are as similar as possible as the network activation function with the initial values of at the beginning of the training process.

Future work will implement the networks, that is, allowing the distinction of the parameters and during the training process to account for a larger set of possible shapes of the activation function. The application of this methodology to more complex networks, such as Deep, Convolutional, or Recurrent Neural Networks will open the way to the definition of more general and flexible neural networks. It is expected, consequently, a boost in the performance of these neural networks in terms of error reduction when using the proposed adaptive activation functions.

ACKNOWLEDGEMENT

This work has been funded by the Department of Energy (Award # DE-SC0017614).

References

- [1] S. C. KR et al., “Real time object identification using deep convolutional neural networks,” in 2017 International Conference on Communication and Signal Processing (ICCSP). IEEE, 2017, pp. 1801–1805.

- [2] S. Akcay, M. E. Kundegorski, C. G. Willcocks, and T. P. Breckon, “Using deep convolutional neural network architectures for object classification and detection within x-ray baggage security imagery,” IEEE transactions on information forensics and security, vol. 13, no. 9, pp. 2203–2215, 2018.

- [3] A. Agarwal, S. Kumar, and D. Singh, “Development of neural network based adaptive change detection technique for land terrain monitoring with satellite and drone images,” Defence Science Journal, vol. 69, no. 5, p. 474, 2019.

- [4] S. Lawrence, C. L. Giles, A. C. Tsoi, and A. D. Back, “Face recognition: A convolutional neural-network approach,” IEEE transactions on neural networks, vol. 8, no. 1, pp. 98–113, 1997.

- [5] L. Deng, G. Hinton, and B. Kingsbury, “New types of deep neural network learning for speech recognition and related applications: An overview,” in 2013 IEEE international conference on acoustics, speech and signal processing. IEEE, 2013, pp. 8599–8603.

- [6] F. Amato, A. López, E. M. Peña-Méndez, P. Vaňhara, A. Hampl, and J. Havel, “Artificial neural networks in medical diagnosis,” pp. 47–58, 2013.

- [7] J. A. Weyn, D. R. Durran, R. Caruana, and N. Cresswell-Clay, “Sub-seasonal forecasting with a large ensemble of deep-learning weather prediction models,” Journal of Advances in Modeling Earth Systems, vol. 13, no. 7, p. e2021MS002502, 2021.

- [8] J. Shen and M. O. Shafiq, “Short-term stock market price trend prediction using a comprehensive deep learning system,” Journal of big Data, vol. 7, no. 1, pp. 1–33, 2020.

- [9] Z. Zhang, “Artificial neural network,” in Multivariate time series analysis in climate and environmental research. Springer, 2018, pp. 1–35.

- [10] X. Yao, “Evolving artificial neural networks,” Proceedings of the IEEE, vol. 87, no. 9, pp. 1423–1447, 1999.

- [11] I. Goodfellow, D. Warde-Farley, M. Mirza, A. Courville, and Y. Bengio, “Maxout networks,” in International conference on machine learning. PMLR, 2013, pp. 1319–1327.

- [12] F. Agostinelli, M. Hoffman, P. Sadowski, and P. Baldi, “Learning activation functions to improve deep neural networks,” arXiv preprint arXiv:1412.6830, 2014.

- [13] C. Gulcehre, K. Cho, R. Pascanu, and Y. Bengio, “Learned-norm pooling for deep feedforward and recurrent neural networks,” in Joint European Conference on Machine Learning and Knowledge Discovery in Databases. Springer, 2014, pp. 530–546.

- [14] S. Boyd and L. Vandenberghe, Convex optimization. Cambridge university press, 2009.

- [15] S. Boyd, N. Parikh, E. Chu, B. Peleato, and J. Eckstein, “Distributed optimization and statistical learning via the alternating direction method of multipliers,” Foundations and Trends® in Machine Learning, vol. 3, no. 1, pp. 1–122, July 2011.

- [16] J. Heredia-Juesas, L. Tirado, A. Molaei, and J. A. Martinez-Lorenzo, “Admm based consensus and sectioning norm-1 regularized algorithm for imaging with a cra,” in 2019 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting. IEEE, 2019, pp. 549–550.

- [17] J. Heredia Juesas, G. Allan, A. Molaei, L. Tirado, W. Blackwell, and J. A. M. Lorenzo, “Consensus-based imaging using admm for a compressive reflector antenna,” in 2015 IEEE International Symposium on Antennas and Propagation & USNC/URSI National Radio Science Meeting. IEEE, 2015, pp. 1304–1305.

- [18] J. Heredia-Juesas, A. Molaei, L. Tirado, and J. A. Martinez-Lorenzo, “Fast node communication admm-based imaging algorithm with a compressive reflector antenna,” in 2018 IEEE International Symposium on Antennas and Propagation & USNC/URSI National Radio Science Meeting. IEEE, 2018, pp. 535–536.

- [19] J. Heredia-Juesas, L. Tirado, and J. A. Martinez-Lorenzo, “Fast source reconstruction via admm with elastic net regularization,” in 2018 IEEE International Symposium on Antennas and Propagation & USNC/URSI National Radio Science Meeting. IEEE, 2018, pp. 539–540.

- [20] J. Heredia-Juesas, A. Molaei, L. Tirado, and J. A. Martinez-Lorenzo, “Sectioning-based admm imaging for fast node communication with a compressive antenna,” IEEE Antennas and Wireless Propagation Letters, vol. 18, no. 2, pp. 226–230, 2018.

- [21] ——, “Consensus and sectioning-based admm with norm-1 regularization for imaging with a compressive reflector antenna,” IEEE Transactions on Computational Imaging, vol. 7, pp. 1189–1204, 2021.

- [22] J. Heredia-Juesas, A. Molaei, L. Tirado, W. Blackwell, and J. A. Martinez-Lorenzo, “Norm-1 regularized consensus-based admm for imaging with a compressive antenna,” IEEE Antennas and Wireless Propagation Letters, vol. 16, pp. 2362–2365, 2017.

- [23] M. R. Osborne, B. Presnell, and B. A. Turlach, “On the lasso and its dual,” Journal of Computational and Graphical statistics, vol. 9, no. 2, pp. 319–337, 2000.

- [24] R. Tibshirani, “Regression shrinkage and selection via the lasso,” Journal of the Royal Statistical Society: Series B (Methodological), vol. 58, no. 1, pp. 267–288, 1996.

- [25] A. E. Hoerl and R. W. Kennard, “Ridge regression: Biased estimation for nonorthogonal problems,” Technometrics, vol. 12, no. 1, pp. 55–67, 1970.

- [26] H.-P. Piepho, “Ridge regression and extensions for genomewide selection in maize,” Crop Science, vol. 49, no. 4, pp. 1165–1176, 2009.

- [27] Z. Zhang, G. Dai, C. Xu, and M. I. Jordan, “Regularized discriminant analysis, ridge regression and beyond,” Journal of Machine Learning Research, vol. 11, no. Aug, pp. 2199–2228, 2010.

- [28] S. Shahabuddin, M. Juntti, and C. Studer, “Admm-based infinity norm detection for large mu-mimo: Algorithm and vlsi architecture,” in 2017 IEEE International Symposium on Circuits and Systems (ISCAS). IEEE, 2017, pp. 1–4.

- [29] J. Shen, H. Xu, and P. Li, “Online optimization for max-norm regularization,” in Advances in Neural Information Processing Systems, 2014, pp. 1718–1726.

- [30] I. Gravagne and I. D. Walker, “Properties of minimum infinity-norm optimization applied to kinematically redundant robots,” in Proceedings. 1998 IEEE/RSJ International Conference on Intelligent Robots and Systems. Innovations in Theory, Practice and Applications (Cat. No. 98CH36190), vol. 1. IEEE, 1998, pp. 152–160.

- [31] J. Huang, S. Ma, H. Xie, and C.-H. Zhang, “A group bridge approach for variable selection,” Biometrika, vol. 96, no. 2, pp. 339–355, 2009.

- [32] C. Park and Y. J. Yoon, “Bridge regression: adaptivity and group selection,” Journal of Statistical Planning and Inference, vol. 141, no. 11, pp. 3506–3519, 2011.

- [33] W. J. Fu, “Penalized regressions: the bridge versus the lasso,” Journal of computational and graphical statistics, vol. 7, no. 3, pp. 397–416, 1998.

- [34] C. De Mol, E. De Vito, and L. Rosasco, “Elastic-net regularization in learning theory,” Journal of Complexity, vol. 25, no. 2, pp. 201–230, 2009.

- [35] H. Zou and T. Hastie, “Regularization and variable selection via the elastic net,” Journal of the royal statistical society: series B (statistical methodology), vol. 67, no. 2, pp. 301–320, 2005.

- [36] B. Karlik and A. V. Olgac, “Performance analysis of various activation functions in generalized mlp architectures of neural networks,” International Journal of Artificial Intelligence and Expert Systems, vol. 1, no. 4, pp. 111–122, 2011.

- [37] P. Sibi, S. A. Jones, and P. Siddarth, “Analysis of different activation functions using back propagation neural networks,” Journal of Theoretical and Applied Information Technology, vol. 47, no. 3, pp. 1264–1268, 2013.