Connect the Dots: Tighter Discrete Approximations of Privacy Loss Distributions

Abstract

The privacy loss distribution (PLD) provides a tight characterization of the privacy loss of a mechanism in the context of differential privacy (DP). Recent work [24, 19, 20, 18] has shown that PLD-based accounting allows for tighter -DP guarantees for many popular mechanisms compared to other known methods. A key question in PLD-based accounting is how to approximate any (potentially continuous) PLD with a PLD over any specified discrete support.

We present a novel approach to this problem. Our approach supports both pessimistic estimation, which overestimates the hockey-stick divergence (i.e., ) for any value of , and optimistic estimation, which underestimates the hockey-stick divergence. Moreover, we show that our pessimistic estimate is the best possible among all pessimistic estimates.

Experimental evaluation shows that our approach can work with much larger discretization intervals while keeping a similar error bound compared to previous approaches and yet give a better approximation than an existing method [24].

1 Introduction

Differential privacy (DP) [9, 8] has become widely adopted as a notion of privacy in analytics and machine learning applications, leading to numerous practical deployments including in industry [13, 29, 16, 3, 7] and government agencies [2]. The DP guarantee of a (randomized) algorithm is parameterized by two real numbers and ; the smaller these values, the more private the algorithm.

The appeal of DP stems from the strong privacy that it guarantees (which holds even if the adversary controls the inputs of all other users in the database), and from its nice mathematical properties. These include composition, whose basic form [8] says that executing an -DP algorithm and an -DP algorithm and returning their results gives an algorithm that is -DP. While basic composition can be used to bound the DP properties of algorithms, it is known to not be tight, in particular for large values of . In fact, advanced composition [12] yields a general improvement, often translating to reduction in the privacy bound of the composition of mechanisms each of which being -DP. Such a reduction can be sizeable in practical deployments, and therefore much research has been focusing on obtaining tighter composition bounds in various settings.

In the aforementioned setting where each mechanism has the same DP parameters, Kairouz et al. [17] derived the optimal composition bound. For the more general case of composing mechanisms whose privacy parameters are possibly different, i.e., the th mechanism is guaranteed to be -DP for some parameters , computing the (exact) DP parameters of the composed mechanism is known to be #P-complete [27].

While the results of [17, 27] provide a complete picture of privacy accounting when we assume only that the th mechanism is -DP, we can often arrive at tighter bounds when taking into account some additional information about the privacy loss of the mechanisms. For example, the Moments Accountant [1] and Rényi DP [26] methods keep track of (upper bounds on) the Renyi divergences of the output distributions on two adjacent databases; this allows one to compute upper bounds on the privacy parameters. These tools were originally introduced in the context of deep learning with DP (where the composition is over multiple iterations of the learning algorithm) in which they provide significant improvements over simply using the DP parameters of each mechanism. Other known tools that can also be used to upper-bound the privacy parameters of composed mechanisms include concentrated DP [11, 6] and its truncated variant [5]. These methods are however all known not to be tight, and do not allow a high-accuracy estimation of the privacy parameters.

A numerical method for estimating the privacy parameters of a DP mechanism to an arbitrary accuracy, which has been the subject of several recent works starting with [24, 30], relies on the privacy loss distribution (PLD). This is the probability mass function of the so-called privacy loss random variable in the case of discrete mechanisms, and its probability density function in the case of continuous mechanisms. From the PLD of a mechanism, one can easily obtain its (tight) privacy parameters. Moreover, a crucial property is that the PLD of a composition of multiple mechanisms is the convolution of their individual PLDs. Thus, [19] used the Fast Fourier Transform (FFT) in order to speed up the computation of the PLD of the composition. Furthermore, explicit bounds on the approximation error for the resulting algorithm were derived in [19, 20, 18, 15]. The PLD has been the basis of multiple open-source implementations from both industry and academia including [23, 22, 25]. We note that the PLD can be applied to mechanisms whose privacy loss random variables do not have bounded moments, and thus for which composition cannot be analyzed using the Moments Accountant or Rényi DP methods. An example such mechanism is DP-SGD-JL from [4].

A crucial step in previous papers that use PLDs is in approximating the distribution so that it has finite support; this is especially needed in the case where the PLD is continuous or has a support of a very large size, as otherwise the FFT cannot be performed efficiently. With the exception of [15]111Gopi et al. [15] uses an estimator that is neither pessimistic nor optimistic, and instead derive their final values of using concentration bound-based error estimates., previous PLD-based accounting approaches [24, 19, 20, 18] employ pessimistic estimators and optimistic estimators of PLDs. Roughly speaking, the former overestimate (i.e., give upper bounds on) , whereas the latter underestimate . For efficiency reasons, we would like the support of the approximate PLDs to be as small as possible, while retaining the accuracy of the estimates.

Our Contributions

Our main contributions are the following:

-

We obtain a new pessimistic estimator for a PLD and a given desired support set. Our pessimistic estimator is simple to construct and is based on the idea of “connecting the dots” of the hockey-stick curve at the discretization intervals. Interestingly, we show that this is the best possible pessimistic estimator (and therefore is at least as good as previous estimators).

-

We complement the above result by obtaining a new optimistic estimator that underestimates the PLD. This estimator is based on the combination of a greedy algorithm and a convex hull computation. In contrast to the pessimistic case, we prove that there is no “best” possible optimistic estimator.

-

We conduct an experimental evaluation showing that our estimators can work with much larger discretization intervals while keeping a similar error bound compared to previous approaches and yet give a better approximation than existing methods.

2 Preliminaries

For , we use to denote . For a set , we write to denote . Similarly, for , we use to denote . Here we use the (standard) convention that and ; we also use the convention that . Moreover, we use as a shorthand for .

We use to denote the support of a probability distribution . For two distributions , we use to denote the product distribution of the two. Furthermore, when are over an additive group, we use to denote the convolution of the two distributions.

2.1 Hockey-Stick Divergence and Curve

Let . The -hockey-stick divergence between two probability distributions and over a domain is given as

| (1) |

where is over all measurable sets .

For any pair of distributions, let be its hockey-stick curve, given as .

The following characterization of hockey-stick curves, due to [32], is helpful:

Lemma 2.1 ([32]).

A function is a hockey-stick curve for some pair of distributions if and only if the following three conditions hold:

-

(i)

is convex and non-increasing,

-

(ii)

,

-

(iii)

for all .

2.2 Differential Privacy

The definition of differential privacy (DP) [9, 8]222For more background on differential privacy, we refer the reader to the monograph [10]. can be stated in terms of the hockey-stick divergence as follows.

Definition 2.2.

For a notion of adjacent datasets, a mechanism is said to satisfy -differential privacy (denoted, -DP) if for all adjacent datasets , it holds that .

We point out that the techniques developed in this paper are general and do not depend on the specific adjacency relation. For the rest of the paper, for convenience, we always use to denote .

In most situations however, mechanisms satisfy -DP for multiple values of and . This is captured by the privacy loss profile of a mechanism given as . It will be more convenient to consider the hockey-stick curve instead of the privacy profile. The only difference is that the hockey-stick curve takes as parameter instead of as in the privacy profile.

2.3 Dominating Pairs

A central notion in our work is that of a dominating pair for a mechanism, defined by Zhu et al. [32].

Definition 2.3 (Dominating Pairs [32]).

A pair of distributions dominates a pair of distributions if it holds that

we denote this as .

A pair of distributions is a dominating pair for a mechanism if for all adjacent datasets , it holds that ; we denote this as .

A pair of distributions is a tightly dominating pair for if for every , it holds that .

Note that, by definition, if and only if is no smaller than pointwise, i.e., for all .

The following result highlights the importance of dominating pairs.

Theorem 2.4 ([32]).

If and , then , where is the composition of and . Furthermore, this holds even for adaptive composition.333In adaptive composition of , can also take the output of as an auxiliary input. Here the has to hold for all possible auxiliary input.

Thus, in order to upper bound the privacy loss profile , it suffices to compute for a dominating pair for .

2.4 Privacy Loss Distribution

Privacy Loss Distribution (PLD) [11, 30] is yet another way to represent the privacy loss. For simplicity, we give a definition below specific to discrete distributions ; it can be extended, e.g., to continuous distributions by replacing the probability masses with probability densities of at .

Definition 2.5 ([11]).

The privacy loss distribution (PLD) of a pair of discrete distributions, denoted by , is the distribution of the privacy loss random variable generated by drawing and let .

As alluded to earlier, PLD can be used to compute the hockey-stick divergence [24, 30] (proof provided in Appendix A for completeness):

Lemma 2.6.

For any pair of discrete distributions and , we have

Note that the RHS term above depends only on and not directly on themselves. For convenience, we will abbreviate the RHS term as .

The main advantage in dealing with PLDs is that composition simply corresponds to convolution of PLDs [24, 30]:

Lemma 2.7.

Let be discrete distributions. Then we have

2.5 Accounting Framework via Dominating Pairs and PLDs

Dominating pairs and PLDs form a powerful set of building blocks to perform privacy accounting. Recall that in privacy accounting, we typically have a mechanism where each is a “simple” mechanism (e.g., Laplace or Gaussian mechanisms) and we would like to understand the privacy profile of .

The approach taken in previous works [24, 19, 20, 18] can be summarized as follows.444Note that their results are not phrased in terms of dominating pairs, since the latter is only defined and studied in [32]. Nonetheless, these previous works use similar (but more restricted) notions for “worst case” distributions.

-

1.

Identify a dominating pair for each .

-

2.

Find a pessimistic estimate555We remark that this is slightly inaccurate as the “pessimistic estimate” in previous works may actually not be a valid PLD; please see Section 4.1 for a more detailed explanation. such that is supported on a certain set of prespecified values.

-

3.

Compute .

-

4.

Compute from using the formula from Lemma 2.6.

By Theorem 2.4 and Lemmas 2.7 and 2.6, we can conclude that ; in other words, the mechanism is -DP as desired.

Note that the reason that one needs to have finite support in the second step is so that it can be computed efficiently via the Fast Fourier Transform (FFT). Currently, there is only one approach used in previous works, called Privacy Buckets [24]. Roughly speaking, this amounts simply to rounding the PLD up to the nearest point in the specified support set. (See Section 4.1 for a more formal description.)

While the above method gives us an upper bound of , there are scenarios where we would like to find a lower bound on ; for example, this can be helpful in determining how tight our upper bound is. Computing such a lower bound is also possible under the similar framework, except that we need to know a list of tightly dominating pairs such that there exists an adjacent datasets for which . If such tightly dominating pairs can be identified, then we can follow the same blueprint as above except we replace a pessimistic estimate with an optimistic estimate . This would indeed gives us a lower bound of .

The described framework is illustrated in Figure 1.

3 Finitely-Supported PLDs

As alluded to in the previous section, to take full advantage of FFT, it is important that a PLD is discretized in a way such that its support is finite. For exposition purposes, we will assume that the discretization points include and . We will use for discretization points for the PLD and for the corresponding discretization points for the hockey-stick curve:

Assumption 3.1.

Let be any finite subset of such that , and let be such that and for all .

For the remaining of this work, we will operate under the above assumption and we will not state this explicitly for brevity.

Using the characterization in Lemma 2.1, we can also characterize the hockey-stick curve of PLDs whose support is on a prespecified finite set , stated more precisely in the lemma below. Furthermore, the “inverse” part of the lemma yields an algorithm (Algorithm 1) that can construct given which we will use in the sequel.

Lemma 3.2.

A function is a hockey-stick curve for some pair such that if and only if the following conditions hold:

-

(i)

is convex and non-increasing,

-

(ii)

,

-

(iii)

for all ,

-

(iv)

For all , the curve restricted to is linear: i.e., for all , we have .

-

(v)

For all , .

A consequence of Lemma 3.2 is that is completely specified by . More formally, given , the only possible extension of to a hockey-stick curve is its piecewise-linear extension defined by

for all . Note that this may still not be a hockey-stick curve, as it may not be convex.

-

Proof of Lemma 3.2.

We start with the converse direction by describing an algorithm that, given , can construct the desired . In fact, we will construct distributions and with supports contained in satisfying the following:

-

()

for all ,

-

()

,

-

()

for all .

The first two conditions imply that and the last condition implies that as desired.

The construction of is described in Algorithm 1.

Algorithm 1 PLD Discretization. procedure DiscretizePLD()for dofor do -

()

Let us now verify that both and are valid probability distributions. First, notice that due to the convexity of . Furthermore,

where the last inequality follows from (iii). Thus, is indeed a probability distribution. As for , notice that for all . Finally, we also have

meaning that is a probability distribution as desired.

Properties 1 and 2 are immediate from the construction. We will now check Property 3, based on two cases whether .

-

Case I: . In this case, we have .

-

Case II: . Suppose that for . We have

Furthermore, we have

Similarly, we also have . Combining the three equalities, we arrive at

which is equal to due to assumption (iv).

As a result, as desired.

We will now prove this direction. (i), (ii), and (iii) follow immediately from Lemma 2.1. As a result, it suffices to only prove (iv) and (v). Suppose that for some pair such that . Let be a shorthand for the distribution of . To prove (iv), consider any for some . We then have

which completes the proof of (iv).

Next, we prove (v). Consider any . We have

thereby completing our proof. ∎

4 Pessimistic PLDs with Finite Support

As we have described in Figure 1, pessimistic estimates of PLDs with finite supports are crucial in the PLD-based privacy accounting framework. The better these pessimistic estimates approximate the true PLD, the more accurate is the resulting upper bound .

Equipped with tools developed in the previous section, we will now describe our finite-support pessimistic estimate of a PLD. Specifically, given a pair of distributions, we would like to compute such that with . In fact, as we will show below (Lemma 4.1), our choice of “best approximates” .

Our construction of the pair is simple: run DiscretizePLD (Algorithm 1) on the input .

Recall from the proof of Lemma 3.2 that this construction simply gives , which is a piecewise-linear extension of . In other words, we simply “connect the dots” to construct the hockey-stick curve of our pessimistic estimate. Note that this, together with the convexity of (Lemma 2.1), implies that as desired.

Additionally, it is not hard to observe that our choice of pessimistic PLD is the best possible, in sense that is the least element (under the domination partial order) among all pairs that dominate :

Lemma 4.1.

Let be any pair of distributions such that is supported on and . Then, we must have

- Proof.

4.1 Comparison to Privacy Loss Buckets

The primary previous work that also derived a pessimistic estimate of PLD is that of Meiser and Mohammadi [24], which has also been used (implicitly) in later works [19, 20, 18]. In our terminology, the Privacy Buckets (PB) algorithm of Meiser and Mohammadi [24]666This is referred to as grid approximation in [19, 20, 18]. can be restated as follows: let the pessimistic-PB estimate be the probability distribution where

for all . In other words, is a probability distribution on that stochastically dominates ; furthermore, is the least such distribution under stochastic dominant (partial) ordering. In previous works [24, 19, 20, 18], such an estimate is then used in place of the true (non-discretized) PLD for accounting and computing ’s (via Lemma 2.7 and Lemma 2.6).

A priori, it is not clear whether is even a valid PLD (for some pair of distributions). However, it not hard to prove that this is indeed the case:

Lemma 4.2.

There exists a pair of distributions such that .

-

Proof.

Let be defined by

for all . It is clear that is a valid distribution.

Then, define by

for all and let . To check that is a valid distribution, it suffices to show that . This is true because

Finally, it is obvious from the definitions of that . ∎

Combining the above lemma and the fact that dominates with Lemma 4.1, we can conclude that our estimate is no worse than the PB estimate:

Corollary 4.3.

Let be as defined above. Then, for all , we have .

5 Optimistic PLDs with Finite Support

We next consider optimistic PLDs, i.e., dominated by a given . We start by showing that, unlike pessimistic PLDs for which there is the “best” possible choice (Lemma 4.1), there is no such a choice for optimistic PLDs:

Lemma 5.1.

There exists a pair of distributions and a finite set such that, for any pair such that , there exists a pair such that is supported on and .

-

Proof.

Let be the result of the -DP binary randomized response, i.e.,

It is simple to verify that

Let be where for any .

Let be defined as

and let be its piecewise-linear extension. It is again simple to verify that satisfies the conditions in Lemma 3.2 and therefore for some such that is supported on . Furthermore, it can be checked from our definition that .

Similarly, let be defined as

and let be its piecewise-linear extension. Again, for some such that is supported on and .

Now, consider any such that is supported on . We claim that or . To prove this, assume for the sake of contradiction that and . This means that

From piecewise-linearity of restricted to (Lemma 3.2), we then have , a contradiction to the assumption that . ∎

5.1 A Greedy and Convex Hull Construction

The previous lemma shows that, unfortunately, there is no canonical choice for an optimistic estimate for a given PLD. Due to this, we propose a simple greedy algorithm to construct an optimistic estimate for the PLD of a given pair . Similar to before, it will be more convenient to deal directly with the hockey-stick curve. Here we would like to construct such that its piecewise-linear extension point-wise lower bounds . The distribution (and ) can then be computed using Algorithm 1.

Our algorithm will assume that we can compute the derivative of at any given point (denoted by ). We remark that, for many widely used mechanisms including Laplace and Gaussian mechanisms, the closed-formed formula for can be easily computed.

First Greedy Attempt. Before describing our algorithm, let us describe an approach that does not work; this will demonstrate the hurdles we have to overcome. Consider the following simple greedy algorithm: start with and, if we are currently at , then find the largest possible such that the line is below . (In other words, the line is tangent to .)

While this is a natural approach, there are two issues with this algorithm:

-

First and more importantly, it is possible that at some discretization point becomes negative! Obviously this invalidates the construction as will not correspond to a hockey-stick curve.

-

Secondly, each computation of tangent line requires several (and sequential) computations of the derivative —rendering the algorithm inefficient—and is also subject to possible numerical instability.

We remark that if we instead start from right (i.e., ) and proceed greedily to the left (in decreasing order of ), then the first issue will become that can be smaller than , which also makes it an invalid hockey-stick curve due to Lemma 2.1(iii). As will be explained below, we will combine these two directions of greedy together with a convex hull algorithm in our revised approach.

An Additional Assumption. For our algorithm, we will also need a couple of assumptions. The first one is that 1 belongs to the discretization set:

Assumption 5.2.

(or equivalently ).

For the remainder of this section, we will use to denote the index for which (i.e., ).

We show below that this assumption is necessary. When it does not hold, then it may simply be impossible to find an optimistic estimate at all:

Lemma 5.3.

There exists a pair of distributions such that any satisfies .

-

Proof.

Let . We simply have . Due to Lemma 2.1(iii), we must have . This simply implies as desired. ∎

Our Greedy + Convex Hull Algorithm. We are now ready to describe our final algorithm. The main idea is to not attempt to create the curve in one left-to-right or right-to-left sweep, but rather to simply generate a “candidate set” for each . (Such a set will in fact be a singleton for all except , for which , but we will refer to ’s as sets here for simplicity of notation.) We then compute the convex hull of these points and take it (or more precisely its lower curve) as our optimistic estimate. This last step immediately ensures the convexity of our curve, which is required for it to be a valid hockey-stick curve (Lemma 2.1(i)).

To construct the candidate set , we combine the left-to-right and right-to-left greedy approaches. Specifically, for , we draw the tangent line of the true curve at and let its intersection with the vertical line be ; then we add into . Similarly, for , we draw the tangent line of the true curve at and let its intersection with the vertical line be ; then we add into . At the very end points and , we also add and respectively to .

Notice that this algorithm, unlike the previous (failed) greedy approach, only requires a calculation of at each , which can be done in parallel. Furthermore, efficient algorithms for convex hull are well known in the literature and can be used directly.

The complete and more precise description of our algorithm is given in Algorithm 2. We also note here that for all and (as the point constructed from the left may be different from the point from the right). Nonetheless, we write ’s as sets for simplicity of notation. An illustration of the algorithm can be found in Figure 4.

Having described our algorithm, we will now proof its correctness, i.e., that it outputs a pair of distributions dominated by the input pair.

Theorem 5.4.

Let denote the output of where . Then, under 5.2, we have .

To prove Theorem 5.4, it will be crucial to have the following lower bounds on the candidate points.

Lemma 5.5.

-

(i)

For all , .

-

(ii)

For all , .

-

(iii)

For all , .

-

(iv)

For all , .

-

Proof.

- (i)

-

(ii)

The statement obviously holds for . Next, consider . Since is non-increasing (from Lemma 2.1(i)), we have . Therefore, .

-

(iii)

The statement obviously holds for . For , the convexity of (Lemma 2.1(i)) immediately implies that

-

(iv)

The statement obviously holds for . For , the convexity of (Lemma 2.1(i)) immediately implies that

We are now ready to prove Theorem 5.4.

-

Proof of Theorem 5.4.

We start by observing that the vertices of the convex hull consists of the points , where and .

First, we have to show that constitute a valid input to the DiscretizePLD algorithm. Per Lemma 3.2, we only need to show that (i) is non-increasing, (ii) is convex, and (iii) for all . Notice also that is simply the piecewise-linear curve connecting .

To see that (i) holds, observe that the second-rightmost point in the convex hull must be for some where or . In either case, Lemma 5.5 implies that . Since is the lower curve of the convex hull and is its rightmost segment, we can conclude that is non-increasing in the range . Finally, since we simply have for all , it is also non-increasing in the range , thereby proving (i).

As for (ii), since forms the lower boundary of the convex hull , is convex in the range . Again, since we simply have for all , we can conclude that it is convex for the entire range .

Finally, for (iii), Lemma 5.5 states that all the points is above the curve . Since is in the convex hull , we can conclude that also lies above this curve, as desired.

Now that we have proved that is a valid input to the DiscretizePLD algorithm (and therefore the output is a pair of valid probability distributions), we will next show that . This is equivalent to showing that for all . To prove this, we consider three cases based on the value of . For brevity, we say that a curve is below a curve when they share the same domain and for all .

-

Case I: . In this case, .

-

Case II: . Suppose that . Let denote the line segment from to , and denote the line segment from to . From Lemma 5.5(iv), is below . Furthermore, since is a lower boundary of the convex hull containing , it must also be below . Therefore, we have

where the last inequality follows from convexity of .

-

Case III: . Suppose that . Similarly to the previous case, let denote the line segment from to , and denote the line segment from to . From Lemma 5.5(iii), is below . Furthermore, since is a lower boundary of the convex hull containing , it must also be below . Therefore, we have

where the last inequality follows from convexity of .

As a result, we can conclude that , completing our proof. ∎

5.2 Comparison to Privacy Loss Buckets

Similar to Section 4.1, PB [24] can also be applied for optimistic-estimate: let be the probability distribution where

for all . That is, is a probability distribution on that is stochastically dominated by ; furthermore, is the greatest such distribution under stochastic dominant (partial) ordering. It is important to note that, unlike the pessimistic-PB estimate, the optimistic-PB estimate is not necessarily a valid PLD for some pair of distributions. This can easily be seen by, e.g., taking a PLD of any -DP mechanism for finite and let ; the optimistic-PB estimate puts all of its mass at , which is clearly not a valid PLD.

We present illustrations of our optimistic PLD and optimistic-PB estimates in Figure 3. Recall that in the pessimistic case, we can show that the pessimistic-PB estimate is no better than our approach (Corollary 4.3). Although we observe similar behaviours in the optimistic case in simple examples (e.g. Figure 3) and also in our experiments in Section 6, this unfortunately does not hold in general. Indeed, if has a non-zero mass at (or equivalently ), then the optimistic-PB estimate still keeps this mass while our does not. The latter is because we set in Algorithm 2. Note here that we cannot set here because the monotonicity may not hold anymore; it is possible that for some . Such examples highlight the challenge in finding a good optimistic estimate (especially in light of the non-existence of the best one, i.e., Lemma 5.1), and we provide further discussion regarding this in Section 7.

6 Evaluation

We compare our algorithm with the Privacy Buckets algorithm [24] as implemented in the Google DP library777Even though there are several other papers [19, 20, 18] that build on PB, all of them still use the same PB-based approximation, with the differences being how the truncation is computed for FFT. We use the implementation in the Google DP library github.com/google/differential-privacy/tree/main/python, and the algorithm of Gopi et al. [15] implemented in Microsoft PRV Accountant.888Implementation from github.com/microsoft/prv_accountant Gopi et al.’s algorithm does not fit into the pessimistic/optimistic framework as described in Section 2.5. Instead, their algorithm uses an approximation of PLD that is neither optimistic nor pessimistic and uses a concentration bound to derive pessimistic and optimistic estimates. Indeed, their approximate distribution maintains the same expectation as the true PLD, which is the main ingredient in their improvement over previous work.

As a first cut, we evaluate pessimistic and optimistic estimates on the privacy parameter , for a fixed value of , for varying number of compositions of the Gaussian mechanism with noise scale , while comparing our approach to the two other implementations mentioned above. We use the same discretization interval to evaluate each algorithm. The reason for choosing the Gaussian mechanism is that the exact value of can be computed explicitly. We find that the estimates given by our approach are the tightest.

Remark 6.1.

For any specified discretization interval, each algorithm has a different choice of how many discretization points are included in . Our implementation uses the same set of discretization points as used by the Google DP implementation. The number of discretization points increases with the number of self compositions (we use the Google DP implementation to perform self composition999We found that the Google DP implementation has a significantly worse running time when computing optimistic estimates, due to lack of truncation. We modify the self-composition method in the Google DP library to incorporate truncation when computing optimistic estimates, and evaluate both ours and Google DP implementation with this minor modification. These do not change the estimates significantly, but drastically reduce the running time.). On the other hand, Microsoft PRV Accountant chooses a number of discretization points, depending on the number of compositions desired, and this number does not change after self composition. In all the evaluation experiments mentioned in this paper, we find that the number of discretization points in our approach are lower than the number of discretization points in the PRV Accountant (even after composition).

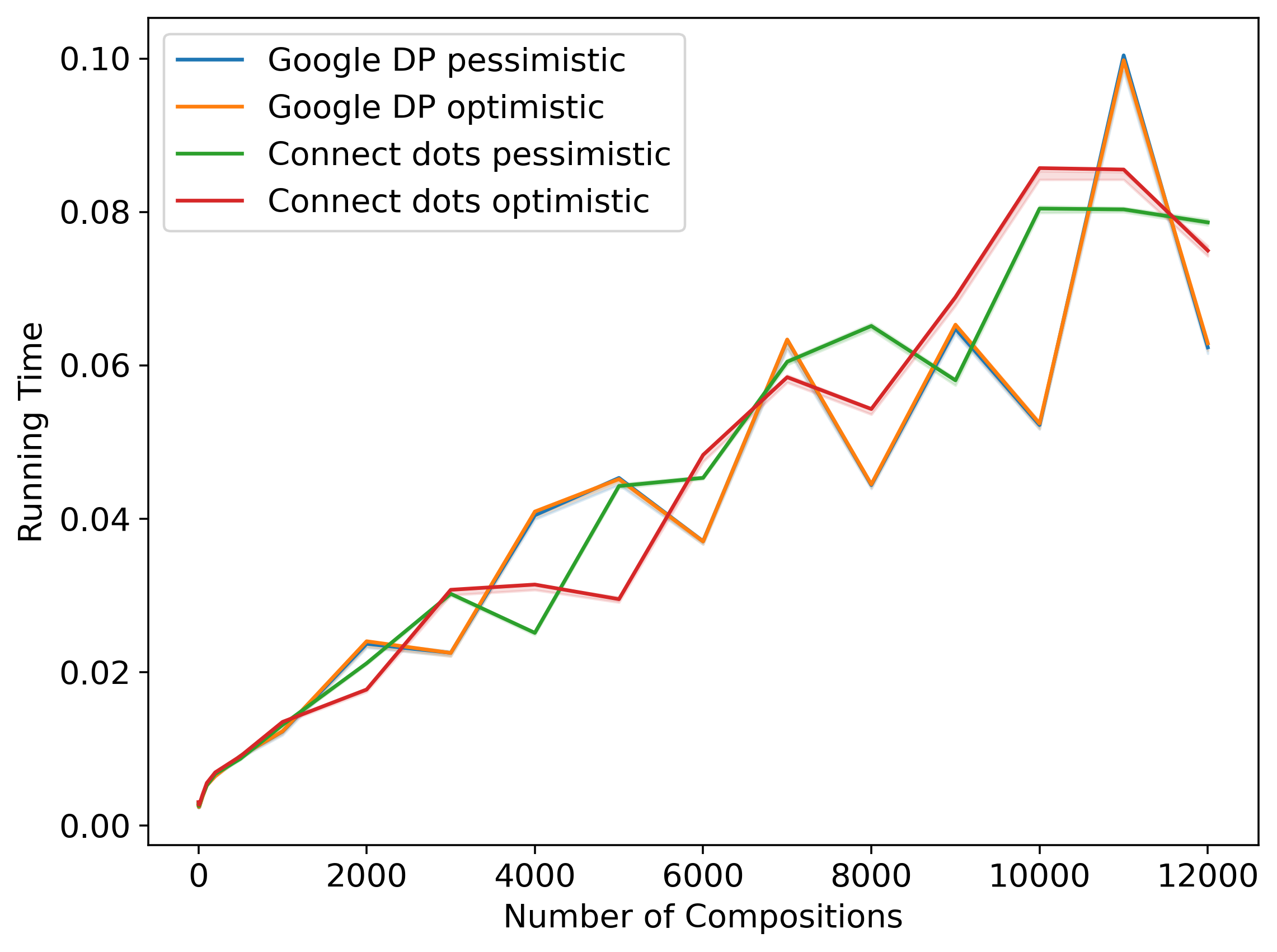

Our main evaluation involves computing pessimistic and optimistic estimates on the privacy parameter , for a fixed value of , for varying number of compositions of the Poisson sub-sampled Gaussian mechanism and comparing our approach to each of the two other implementations. Note that this particular mechanism is quite popular in that it captures the privacy analysis of DP-SGD where the number of compositions is equal to the number of iterations of the training algorithm, and the subsampling rate is equal to the fraction of the batch size divided by the total number of training examples [1]. In particular, we consider the Gaussian mechanism with noise scale , Poisson-subsampled with probability . We compare against each competing algorithm twice, once where both algorithms use the same discretization interval, and once where our approach uses a larger discretization interval than the competing algorithm. We additionally plot the running time required for this computation for each number of compositions; we ran the evaluation for each number of compositions and plot the mean running time along with a shaded region indicating 25th–75th percentiles of running time.

Comparison with Google DP.

The comparison with Google DP is presented in Figure 6. Figures 6(a) and 6(c) compare the ’s and runtimes for both methods using the same discretization interval, and finds that our method gives a significantly tighter estimate for a mildly larger running time. Figures 6(b) and 6(d) compare the ’s and runtimes for both methods with different discretization intervals, and using a discretization interval that is larger, our method gives comparable estimates, with a drastic speed-up ().

Comparison with Microsoft PRV Accountant.

The comparison with Microsoft PRV Accountant is presented in Figure 7. Figures 7(a) and 7(c) compare the ’s and runtimes for both methods using the same discretization interval, and finds that our method gives a significantly tighter estimate with already shorter running time. Figures 7(b) and 7(d) compare the ’s and runtimes for both methods with different discretization intervals, and using a discretization interval that is larger, our method gives comparable estimates, with an even larger speed-up.

In Appendix B, we perform a similar evaluation for the Poisson-subsampled Laplace mechanism.

7 Discussion & Open Problems

In this work, we have proposed a novel approach to pessimistic and optimistic estimates for PLDs, which outperforms previous approaches under similar discretization intervals, and allows for a more compact representation of PLDs while retaining similar error guarantees. There are still several interesting future directions that one could consider.

As we have proved in Lemma 5.1, there is no unique “best” way to pick an optimistic estimate, and we proposed a greedy algorithm (Algorithm 2) for this task. However, it is difficult to determine how good this greedy algorithm is in general. Instead, it might also be interesting to find that minimizes a certain objective involving and . For example, one could consider the area between the two curves, or the Fréchet distance between them. An intriguing direction here is to determine (i) which objective captures the notion of “good approximation” better in terms of composition, and (ii) for a given objective, whether there is an efficient algorithm to compute such . We remark that for some objectives, such as the area between the two curves, it is possible to discretize the candidate values for each and use dynamic programming in an increasing order of (with the state being ). Even for these objectives, it remains interesting to determine whether such discretization is necessary and whether more efficient algorithms exist.

Also related is the question of how to theoretically explain our experimental findings (Section 6). Although we see significant numerical improvements, it is intriguing to understand theoretically where these improvements come from and which properties of PLDs govern how big such improvements are. More broadly, given an estimate of a PLD, how can we quantify how “good” it is? Previous work (e.g., [19, 15]) has obtained certain theoretical bounds on the errors; it would be interesting to investigate whether these bounds can help answer the aforementioned question.

Furthermore, the entire line of work on PLD-based accounting [24, 19, 20, 18], including this paper, has so far considered only non-interactive compositions, meaning that the mechanisms that are run in subsequent steps cannot be changed based on the outputs from the previous steps. This is not a coincidence: interactive composition is highly non-trivial and in fact it is known that the advanced composition theorem (which is even a more specific form of PLD) does not hold in this regime [28]. Several solutions have been proposed here, such as modified formulae for advanced compositions [28, 31] and Renyi DP [14, 21]. However, as discussed earlier, these methods may be loose even in the non-interactive setting, which is the original motivation for PLD-based accounting. Therefore, it would be interesting to understand whether there is a tighter method similar to PLDs that also works in the interactive setting.

Acknowledgments

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

References

- [1] Martin Abadi, Andy Chu, Ian Goodfellow, H Brendan McMahan, Ilya Mironov, Kunal Talwar, and Li Zhang. Deep learning with differential privacy. In CCS, pages 308–318, 2016.

- [2] John M Abowd. The US Census Bureau adopts differential privacy. In KDD, pages 2867–2867, 2018.

- [3] Apple Differential Privacy Team. Learning with privacy at scale. Apple Machine Learning Journal, 2017.

- [4] Zhiqi Bu, Sivakanth Gopi, Janardhan Kulkarni, Yin Tat Lee, Judy Hanwen Shen, and Uthaipon Tantipongpipat. Fast and memory efficient differentially private-SGD via JL projections. In NeurIPS, 2021.

- [5] Mark Bun, Cynthia Dwork, Guy N Rothblum, and Thomas Steinke. Composable and versatile privacy via truncated CDP. In STOC, pages 74–86, 2018.

- [6] Mark Bun and Thomas Steinke. Concentrated differential privacy: Simplifications, extensions, and lower bounds. In TCC, pages 635–658, 2016.

- [7] Bolin Ding, Janardhan Kulkarni, and Sergey Yekhanin. Collecting telemetry data privately. In NeurIPS, pages 3571–3580, 2017.

- [8] Cynthia Dwork, Krishnaram Kenthapadi, Frank McSherry, Ilya Mironov, and Moni Naor. Our data, ourselves: Privacy via distributed noise generation. In EUROCRYPT, pages 486–503, 2006.

- [9] Cynthia Dwork, Frank McSherry, Kobbi Nissim, and Adam D. Smith. Calibrating noise to sensitivity in private data analysis. In TCC, pages 265–284, 2006.

- [10] Cynthia Dwork and Aaron Roth. The algorithmic foundations of differential privacy. Found. Trends Theor. Comput. Sci., 9(3-4):211–407, 2014.

- [11] Cynthia Dwork and Guy N Rothblum. Concentrated differential privacy. arXiv:1603.01887, 2016.

- [12] Cynthia Dwork, Guy N Rothblum, and Salil Vadhan. Boosting and differential privacy. In FOCS, pages 51–60, 2010.

- [13] Úlfar Erlingsson, Vasyl Pihur, and Aleksandra Korolova. RAPPOR: Randomized aggregatable privacy-preserving ordinal response. In CCS, pages 1054–1067, 2014.

- [14] Vitaly Feldman and Tijana Zrnic. Individual privacy accounting via a Rényi filter. In NeurIPS, 2021.

- [15] Sivakanth Gopi, Yin Tat Lee, and Lukas Wutschitz. Numerical composition of differential privacy. In NeurIPS, 2021.

- [16] Andy Greenberg. Apple’s “differential privacy” is about collecting your data – but not your data. Wired, June, 13, 2016.

- [17] Peter Kairouz, Sewoong Oh, and Pramod Viswanath. The composition theorem for differential privacy. In ICML, pages 1376–1385, 2015.

- [18] Antti Koskela and Antti Honkela. Computing differential privacy guarantees for heterogeneous compositions using FFT. arXiv:2102.12412, 2021.

- [19] Antti Koskela, Joonas Jälkö, and Antti Honkela. Computing tight differential privacy guarantees using FFT. In AISTATS, pages 2560–2569, 2020.

- [20] Antti Koskela, Joonas Jälkö, Lukas Prediger, and Antti Honkela. Tight differential privacy for discrete-valued mechanisms and for the subsampled Gaussian mechanism using FFT. In AISTATS, pages 3358–3366, 2021.

- [21] Mathias Lécuyer. Practical privacy filters and odometers with Rényi differential privacy and applications to differentially private deep learning. arXiv:2103.01379, 2021.

- [22] Google’s Differential Privacy Libraries. DP Accounting Library. https://github.com/google/differential-privacy/tree/main/python/dp_accounting, 2020.

- [23] Antti Koskela. Lukas Prediger. Code for computing tight guarantees for differential privacy. https://github.com/DPBayes/PLD-Accountant, 2020.

- [24] Sebastian Meiser and Esfandiar Mohammadi. Tight on budget? Tight bounds for -fold approximate differential privacy. In CCS, pages 247–264, 2018.

- [25] Microsoft. A fast algorithm to optimally compose privacy guarantees of differentially private (DP) mechanisms to arbitrary accuracy. https://github.com/microsoft/prv_accountant, 2021.

- [26] Ilya Mironov. Rényi differential privacy. In CSF, pages 263–275, 2017.

- [27] Jack Murtagh and Salil Vadhan. The complexity of computing the optimal composition of differential privacy. In TCC, pages 157–175, 2016.

- [28] Ryan M. Rogers, Salil P. Vadhan, Aaron Roth, and Jonathan R. Ullman. Privacy odometers and filters: Pay-as-you-go composition. In NIPS, pages 1921–1929, 2016.

- [29] Stephen Shankland. How Google tricks itself to protect Chrome user privacy. CNET, October, 2014.

- [30] David M Sommer, Sebastian Meiser, and Esfandiar Mohammadi. Privacy loss classes: The central limit theorem in differential privacy. PoPETS, 2019(2):245–269, 2019.

- [31] Justin Whitehouse, Aaditya Ramdas, Ryan Rogers, and Steven Wu. Improved privacy filters and odometers: Time-uniform bounds in privacy composition. In TPDP, 2021.

- [32] Yuqing Zhu, Jinshuo Dong, and Yu-Xiang Wang. Optimal accounting of differential privacy via characteristic function. In AISTATS, pages 4782–4817, 2022.

Appendix A Proofs

See 2.6

-

Proof.

We have from the definition of hockey-stick divergence that

where the last line follows from the fact that

Appendix B Evaluation of Poisson-Subsampled Laplace Mechanism

Similar to Section 6, we compute pessimistic and optimistic estimates on the privacy parameter , for a fixed value of , for varying number of compositions of the Poisson sub-sampled Laplace mechanism and comparing our approach to the Google DP implementation.101010we were unable to compare against Microsoft PRV Accountant, since their implementation does not have support for the Laplace mechanism yet. In particular, we consider the Laplace mechanism with noise scale , Poisson-subsampled with probability . We compare against Google DP implementation twice, once where both algorithms use the same discretization interval, and once where our approach uses a larger discretization interval than the competing algorithm. We additionally plot the running time required for this computation for each number of compositions; we ran the evaluation for each number of compositions and plot the mean running time along with a shaded region indicating 25th–75th percentiles of running time.

The comparison with Google DP is presented in Figure 8. Figures 6(a) and 8(c) compare the ’s and runtimes for both methods using the same discretization interval, and finds that our method gives a significantly tighter estimate for a mildly larger running time. Figures 8(b) and 8(d) compare the ’s and runtimes for both methods with different discretization intervals, and using a discretization interval that is larger, our method gives comparable estimates, with a significant running time speed-up ().

Appendix C Inaccuracies from Floating-Point Arithmetic

We briefly discuss the errors due to floating-point arithmetic. In our implementation, we use the default float datatype in python, which conforms to IEEE-754 “double precision”. Roughly speaking, this means that the resolution of each floating-point number of . The number of operations performed in our algorithms scales linearly with the support size of the (discretized) PLD, which is less than in all of our experiments. Therefore, a rough heuristic suggests that the numerical error for here would be less than . We stress however that this is just a heuristic and is not a formal guarantee: achieving a formal guarantee is much more complicated, e.g., our optimistic algorithm requires computing a convex hull and one would have to formalize how the numerical error from convex hull computation affects the final .

Finally, we also remark that, while Gopi et al. [15, Appendix A] note that they experience numerical issues around , we do not experience the same issues in our algorithm even for similar setting of parameters even for as small as .