Conformal Contextual Robust Optimization

Abstract

Data-driven approaches to predict-then-optimize decision-making problems seek to mitigate the risk of uncertainty region misspecification in safety-critical settings. Current approaches, however, suffer from considering overly conservative uncertainty regions, often resulting in suboptimal decision-making. To this end, we propose Conformal-Predict-Then-Optimize (CPO), a framework for leveraging highly informative, nonconvex conformal prediction regions over high-dimensional spaces based on conditional generative models, which have the desired distribution-free coverage guarantees. Despite guaranteeing robustness, such black-box optimization procedures alone inspire little confidence owing to the lack of explanation of why a particular decision was found to be optimal. We, therefore, augment CPO to additionally provide semantically meaningful visual summaries of the uncertainty regions to give qualitative intuition for the optimal decision. We highlight the CPO framework by demonstrating results on a suite of simulation-based inference benchmark tasks and a vehicle routing task based on probabilistic weather prediction.

1 INTRODUCTION

Predict-then-optimize or contextual robust optimization problems are of long-standing interest in safety-critical settings where decision-making happens under uncertainty [58, 22, 23, 50]. In traditional robust optimization, results are made to be robust to distributions anticipated to be present upon deployment [8, 10]. Since such decisions are sensitive to proper model specification, recent efforts have sought to supplant this with data-driven uncertainty regions [15, 9, 56, 35].

Model misspecification is ever more present in contextual robust optimization, spurring efforts to define similar data-driven uncertainty regions [46, 14, 58]. Such methods, however, focus on box- and ellipsoid-based uncertainty regions, both of which are necessarily convex and often overly conservative, resulting in suboptimal decision-making.

Conformal prediction provides a principled framework for producing distribution-free prediction regions with marginal frequentist coverage guarantees [4, 55]. By using conformal prediction on a user-defined score function and obtaining an empirical quantile of over a calibration set , prediction regions attain marginal coverage guarantees. Such prediction regions, however, are notably defined implicitly. For simple scores, such as residuals, an explicit expression of such regions can be written, making these the most common approaches used in practice [59, 31, 5, 33, 42].

The disadvantage is that such score functions ignore the structure that is often present in high-dimensional data, such as images. Choices of simplistic scores, thus, tend to be overly conservative and often produce convex prediction regions even when is non-convex. Recent work has demonstrated that defining scores using conditional generative models produces sharper and, hence, more informative prediction regions [26, 60, 49]. We, thus, extend the line of data-driven predict-then-optimize work by considering such generative model-based prediction regions.

In addition to contributing to the predict-then-optimize line of inquiry, we view this work as addressing a concern of the conformal prediction community: how to use implicitly defined non-convex, high-dimensional prediction regions. Works producing such regions have themselves noted the difficulty in their use [54, 34]. Initial works on coverage for images have framed the utility of their results in highlighting regions of the image with the greatest variability and, hence, uncertainty [5, 31, 7].

Extending such visualization gives invaluable intuition to the end user. For instance, a black-box optimization procedure for producing drug candidates to robustly bind to a predicted protein structure offers little insight into the decision-making process; however, semantic summaries of the uncertainty region would reveal regions of flexibility of the protein, clarifying why particular structures were deemed optimal in the candidate drug. Such interest in explainable robust decision-making was highlighted in a recent survey [52], especially given the “right to explanation” mandated by the EU’s “General Data Protection Regulation” [18, 36]. Our main contributions, thus, are:

-

•

Proposing Conformal-Predict-Then-Optimize (CPO) to leverage informative, non-convex prediction regions for decision-making.

-

•

Providing interpretable visual summaries of uncertainty regions using representative points.

-

•

Demonstrating the generality of CPO across a suite of benchmark tasks and a traffic routing task based on probabilistic weather prediction.

2 BACKGROUND

2.1 Conformal Prediction

Given a dataset of i.i.d. observations from a distribution , conformal prediction [4, 55] produces prediction regions with distribution-free theoretical guarantees. A prediction region maps from observations of to sets of possible values for and is said to be marginally valid at the level if .

Split conformal is one popular version of conformal prediction. In this approach, marginally calibrated regions are designed using a “score function” . Intuitively, the score function should have the quality that is smaller when it is more reasonable to guess that given the observation . For example, if one has access to a function which attempts to predict from , one might take . The score function is evaluated on each point of a dataset called the “calibration dataset,” yielding , where . Note that the calibration dataset cannot be used to pick the score function; if data is used to design the score function, it must independent of . We then define as the quantile of . For any future , the set satisfies . This inequality is known as the coverage guarantee, and it arises from the exchangeability of the score of a test point with . The coverage guarantee possesses finite-sample properties.

As noted in Vovk’s tutorial [55], while the coverage guarantee holds for any score function, different score functions may lead to more or less informative prediction regions. For example, the score leads to the highly uninformative prediction region of all possible values of . Predictive efficiency is one way to quantify informativeness, defined as the inverse of the expected Lebesgue measure of the prediction region, i.e. [61, 53]. Methods employing conformal prediction often seek to identify prediction regions that are efficient and calibrated.

2.2 Representative Points

The problem of summarizing the distribution of a random vector with points arises in many contexts, such as in optimal stratification [16, 17], density estimation [28], and signal quantization [43]. Such points are known as representative points (RPs). Denoting the space of all sets such that as , the RPs of a random variable are

| (1) |

For a comprehensive review of representative points, see [24]. Despite extensive study, no general algorithm exists for the efficient construction of representative points for arbitrary distributions. Typical implementations use clustering algorithms, such as Lloyd’s algorithm, on .

2.3 Predict-Then-Optimize

Predict-then-optimize problems are formulated as

| (2) |

where are decision variables, an unknown cost parameter, observed contextual variables, and a compact feasible region. The predict-then-optimize framework is so called as the nominal approach first predicts and subsequently solves . Alternatively, a predictive contextual distribution is assumed, with respect to which the optimization formulation is solved. A full review is presented in [22].

This formulation, however, is inappropriate in risk-sensitive downstream tasks. For this reason, recent works have begun investigating a risk-sensitive variant or “robust” alternative to this traditional formulation, namely by replacing with [46, 14, 58], where is constructed to guarantee coverage of , precisely stated in Lemma 3.1.

3 METHOD

We now propose CPO, a way to perform robust predict-then-optimize decision-making over informative, non-convex prediction regions based on generative models. We then discuss how to construct visual summaries of the contents of the conformal prediction regions using a collection of representative points.

3.1 CPO: Problem Formulation

Let , where is a general metric space, and be the -field of . While the standard predict-then-optimize framework assumes a linear objective function , we consider general convex-concave objective functions that are -Lipschitz in under the metric for any fixed , as follows:

| (3) |

where is a uncertainty region predictor. Exact solution of this problem is intractable, as no practical methods exist to optimize over the predictor function space . Practical solution of this optimization problem, thus, involves optimizing over several prespecified uncertainty region predictors . For any fixed , this robust counterpart to the nominal predict-then-optimize problem produces a valid upper bound if . Denoting the pessimism gap as , we clearly see if , formalized below.

Lemma 3.1.

Consider any that is -Lipschitz in under the metric for any fixed . Assume further that . Then,

| (4) |

The proof is deferred to Appendix A. Thus, validity of ensures the RO procedure produces a valid bound with probability , with more efficient prediction regions resulting in tighter upper bounds.

3.2 CPO: Score Function

We assume a conditional generative model is learned for this prediction task. For most score functions, the min-max optimization problem of Equation 3 is computationally intractable. Crucially, however, we can consider an extension to the score proposed in [60], which lends itself to a decomposition under which such optimization becomes tractable. For a fixed and , let

| (5) |

We refer to this score as “Generalized Probabilistic Conformal Prediction,” (GPCP) whose validity follows from that of the original PCP framework [60]. We discuss the selection of in Section 3.4.

3.3 CPO: Optimization Algorithm

We fix and take to be the prediction region . Let . It follows that is convex by Danskin’s Theorem by assumption of the convexity of in . Exact solution of the min-max problem, thus, follows using standard gradient-based optimization techniques on . By Danskin’s Theorem, , where . We follow the standard projected gradient descent optimization scheme, projecting into at each iterate, denoted by .

Efficient solution of this RO problem, therefore, reduces to being able to efficiently solve the maximization problem over . While challenging over general nonconvex regions, the GPCP score formulation lends itself to a highly structured prediction region, namely of the form with being a ball of radius , the conformal quantile, under the metric. This decomposition of means the maximum can be efficiently computed by aggregating the maxima over the individual balls:

| (6) |

where the maximum over a ball can be efficiently computed with traditional convex optimization techniques. This procedure is summarized in Algorithm 1. The convergence of this procedure proceeds as follows, whose proof is deferred to Appendix B.

Lemma 3.2.

Let for , , and convex-concave and -Lipschitz in for any fixed . Let be a minimizer of . For any , define and . Then the iterates returned by Algorithm 1 satisfy

| (7) |

3.4 CPO: Selection

Crucially, the convergence highlighted in Lemma 3.2 reveals that the number of “outer” iterations (i.e. ) has no dependence on . This is apparent from the proof, in which the iterate count hinges upon the Lipschitz constant of , which critically is -Lipschitz regardless of what is selected, as each is -Lipschitz.

We can, thus, solely focus attention on the impact the choice of has on the “inner” optimization computational cost, namely . This linearly increasing cost with , however, must be juxtaposed with the improved statistical efficiency of such prediction regions. In particular, [60] empirically demonstrated region size generally decreased nonlinearly up to a saturation point as a function of .

Critically, this inflection point can be determined prior to performing the optimization, since doing so only requires access to and test samples to estimate the prediction region size. As pointed out in [60] and proven in [13], estimation of the volume of a union of hyperspheres is complicated by the need to account for overlapped regions. is, thus, chosen based on Monte Carlo estimates of the prediction region volume using Voronoi cells of the hypersphere centers given by [21]:

| (8) |

where denotes a random variable defined uniformly over the region associated with , the volume of a hypersphere of radius , and the Voronoi cell of , defined as . Muller’s method enables efficient sampling of [45, 27].

We then choose to be the inflection point, namely the for some user-specified volume tolerance. Critically, these volume estimates must be performed on a distinct subset of the data from as exchangeability with future test points is otherwise lost in conditioning on for selecting [61]. We, thus, partition , using for calibration and for volume estimation, detailed in Algorithm 2.

3.5 CPO: Representative Points

We now frame the problem of summarizing the prediction region . We critically note that this issue of interpretability is non-existent in traditional approaches to robust predict-then-optimize, where uncertainty regions are interpretable by construction, being balls around nominal estimates . In other words, there is a fundamental tension in qualitative interpretability and the expressiveness of uncertainty regions, requiring a bespoke method for recovering intuition when leveraging conformal prediction regions. Formally, for a user-specified number of summary points and query , we seek

| (9) |

We use the shorthand . In other words, we wish to construct representative points for a uniform sampling of the prediction region. A naive approach would simply involve explicitly gridding the output space , filtering such points with the rejection criterion of , and clustering the remaining points per the metric. This, however, is intractable in high-dimensional cases. Thus, a sampling method is employed to circumvent gridding, paralleling the technique leveraged for volume estimation.

samples are initially drawn for each . Importantly, such uniform sampling of the balls leads to non-uniform sampling over if naively aggregated across , as overlapped regions will be more densely sampled. For this reason, we subsample by discarding those samples for which for . This results in samples drawn from the desired .

RPs must be aggregated separately for each connected subregion of to ensure each . That is, we must identify . To do so, we determine if two points belong to the same by considering the corresponding connected components problem defined on the graph induced by the edge criterion . For each , we find a subset of the total representative points, for which we use K-Means++ with the metric. The full procedure is in Algorithm 3.

3.6 CPO: Projection

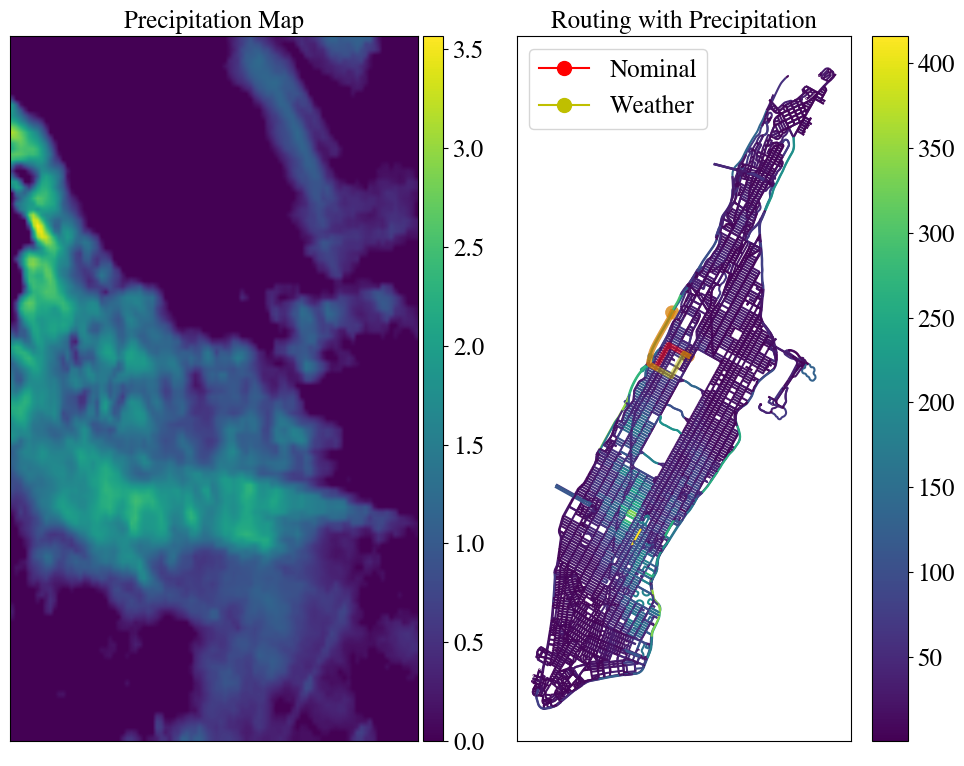

After obtaining , further insight can be gleaned by exploring the local projection around each . An example of this is visualizing the road-level variability in traffic predictions from uncertainty in upstream weather predictions, shown in Figure 5. To do this, we visualize the extent of the Voronoi cell associated with along the space dimensions. That is, for each Voronoi cell, we visualize the Frechet variance along the projections , where . Such projections preserve the structure of the objects being modeled, making them visually interpretable. For instance, in the traffic example corresponds to the projection of to a single road . Similarly, would project to a single atom for a molecular reconstruction task. Formally,

| (10) |

4 EXPERIMENT

We now demonstrate the utility of the CPO framework. Code will be made public upon acceptance.

4.1 SBI: Fractional Knapsack

We first study the fractional knapsack problem under various complex contextual mappings, namely

| (11) | |||

where and . The distributions and are taken to be those from various simulation-based inference (SBI) benchmark tasks provided by [30], chosen as they have with complex structure. We specifically study Two Moons, Lotka-Volterra, Gaussian Linear Uniform, Bernoulli GLM, Susceptible-Infected-Recovered (SIR), and Gaussian Mixture, fully described in Appendix C. We note that, while these particular distributions have little semantic meaning in the traditional context of fractional knapsack, this experiment highlights the capacity for CPO to succeed even for complex distributions, which we leverage in a more semantically meaningful case in Section 4.2.

4.1.1 SBI: Quantitative Assessment

| Box | PTC-B | Ellipsoid | PTC-E | CPO | |

|---|---|---|---|---|---|

| Gaussian Uniform | 0.94 | 0.96 | 0.95 | 0.95 | 0.95 |

| Gaussian Mixture | 0.95 | 0.93 | 0.94 | 0.93 | 0.94 |

| Bernoulli GLM | 0.96 | 0.95 | 0.95 | 0.94 | 0.94 |

| Lotka Volterra | 0.95 | 0.96 | 0.94 | 0.94 | 0.95 |

| SIR | 0.94 | 0.95 | 0.93 | 0.95 | 0.93 |

| Two Moons | 0.93 | 0.94 | 0.94 | 0.94 | 0.96 |

| Box | PTC-B | Ellipsoid | PTC-E | CPO | Nominal |

|---|---|---|---|---|---|

| 0.0 (0.0) | 0.0 (0.0) | 0.0 (0.0) | -0.27 (0.35) | -0.43 (0.4) | -4.48 (0.56) |

| 0.0 (0.0) | -6.6 (1.67) | 0.0 (0.0) | -7.38 (1.78) | -7.77 (1.87) | -11.66 (1.23) |

| 0.0 (0.0) | -0.18 (0.49) | 0.0 (0.0) | -0.06 (0.25) | -0.18 (0.37) | -3.53 (0.27) |

| -0.52 (0.02) | -0.05 (0.24) | -0.02 (0.0) | -0.22 (0.18) | -0.68 (0.26) | -1.88 (0.01) |

| -0.16 (0.02) | -0.22 (0.09) | -0.08 (0.01) | -0.22 (0.06) | -0.38 (0.05) | -0.52 (0.02) |

| 0.0 (0.0) | 0.0 (0.0) | 0.0 (0.0) | 0.0 (0.0) | -0.15 (0.11) | -0.38 (0.01) |

We first demonstrate the quantitative improvement in decision-making from leveraging CPO over the box- (PTC-B) and ellipsoid-based (PTC-E) regions proposed in [58], as well as box- and ellipsoid-based sets constructed based solely on observations of , i.e. where we ignore , referred to as Box and Ellipsoid. For CPO, we use

| (12) |

was taken to be a neural spline normalizing flow [19] trained with FAVI [2]. Visualizations of the exact and variational posteriors are provided in Appendix E. s were chosen by studying the inflections of the prediction region volume estimate under each distributional setup, with , seen in Figure 2. Inflection points were around for most setups.

For assessing coverage and the robust objective value, we sampled test points i.i.d. from . Coverage was assessed across all 1000 samples by measuring the proportion of samples for which . For assessing the objective, optimization was performed across 10 samples, with , , and sampled per run.

The results are seen in Table 1. We include the nominal optima as a reference, i.e. for the true . Recall that, by Lemma 3.1, with proper , the robust objective values should be valid upper bounds on the nominal optima, with more conservative regions resulting in more vacuous bounds. We see this as, although all approaches result in valid coverage guarantees and hence produce valid upper bounds, the overly conservative nature of alternate regions results in their consistent looseness compared to CPO. Notably, these differences are more accentuated in cases where has complex structure; level sets under the Gaussian Linear, Gaussian Mixture, and Bernoulli GLM cases are roughly ellipsoidal, seen in Appendix E, resulting in comparable performance between CPO and PTC-E. Thus, as discussed and highlighted in Section 4.2, the benefits of CPO primarily manifest under difficult-to-model contextual distributions, where sets for simple geometries become overly large.

4.1.2 SBI: Representative Point Recovery

We next demonstrate that Algorithm 3 can approximately recover RPs for such uncertainty regions, leveraged to glean insights in the modeling task of Section 4.2. Notably, RPs are not unique; for instance, any rigid rotation of for a uniform distribution over a 2D ball results in a distinct yet optimal set of RPs. The RP objective minimum, however, is unique, meaning suboptimality can be assessed by measuring

| (13) |

representative points were produced per setup. To compute , a grid discretization over the space was performed followed by a clustering for each connected component of this discretization. That is, the support was discretized into bins per dimension. Each discretized point was assessed for membership in , resulting in a collection of points , from which we could recover in the manner described in Section 3.5. Visualizations of the exact and approximate RPs are provided for tasks where in Appendix F.

To make explicit discretization possible, problems were projected into lower-dimensional versions, namely . Figure 3 demonstrates the suboptimality of decreases with increasing samples. Of note is that this convergence is slower in higher dimensional problems: for low dimensional cases, recovery of optimal RPs happens for small , meaning any fluctuations thereafter are noise, as seen in the Two Moons case.

| Box | PTC-B | Ellipsoid | PTC-E | CPO | Nominal | |

|---|---|---|---|---|---|---|

| Coverage | 0.94 | 0.93 | 0.94 | 0.92 | 0.94 | — |

| Objective | 7863.45 (0.0) | 34559.03 (171.3) | 7038.77 (0.0) | 8807.68 (4.22) | 4171.22 (321.34) | 299.50 (0.0) |

4.2 Robust Vehicle Routing

Optimal routing is a long-standing point of interest in the operations research community, with widespread applications such as in resource distribution and urban traffic flow management [44, 51, 47, 38]. We study the traffic flow problem from [3].

Recent work has demonstrated the utility of generative models in quantifying uncertainty for weather predictions over traditional physics-based approaches [1, 6, 29, 57]. We specifically leverage a latent diffusion model for such forecasting from [39]. Formally, a forecaster maps precipitation readings from radar networks , specifically over time steps with resolutions , to , the precipitation for some fixed point beyond .

We consider the robust traffic flow problem (RTFP) for a source-target pair over the network graph of Manhattan, where and . The precipitation was combined with the nominal speed limits to obtain the final travel costs along edges, fully described in Appendix G. Formally, we seek

| (14) | |||

where represents the proportion of traffic routed along edge , is the edge weight vector, is the node-arc incidence matrix, and has entries and for .

We again demonstrate the quantitative improvement in decision-making resulting from using the more informative CPO prediction regions. Experiments were conducted with and chosen uniformly at random from . We take the score as defined in Equation 12 on the edge weight space rather than the initial precipitation map space. Results are shown in Table 2. Again, although all approaches achieve coverage guarantees, bounds resulting from alternate regions are significantly looser compared to those from CPO. This is especially prominent in this task compared to those of Section 4.1 due to the high dimension of the prediction space() and complex nature of .

Notably, the formulation in Equation 14 is a relaxation of the standard LP formulation of the robust shortest paths problem (RSPP), in which . Given that is a totally unimodular matrix, the solutions of the box-constrained RTFP and RSPP are equivalent, i.e. for both Box and PTC-B; they, however, are not equivalent under more general constraint sets [12], i.e. Ellipsoid, PTC-E, and CPO, resulting in the observed suboptimality of box constraints. This is highlighted in Figure 4, where the Box constraint results in a fully concentrated allocation of traffic along a single path.

Despite apparent quantitative improvements resulting from the CPO optimal solution, it is difficult to directly understand why such allocations were deemed optimal without a qualitative impression of , as framed in Section 3.5. We, therefore, now construct representative points and their corresponding projections, two of which are visualized in Figure 5. The RPs highlight the multimodal nature of the edge weights distribution, where exhibits a case of precipitation more heavily concentrating along the northeast corridor across Manhattan and one where it concentrates on the west. In addition, the projection around reveals especially high uncertainty on the path through Central Park with less on surrounding roads. CPO, thus, hedges its allocation in Figure 4 more evenly across paths, unlike the concentrated allocation under the Box region.

5 DISCUSSION

We have presented CPO, a framework to leverage informative, non-convex conformal regions for predict-then-optimize decision-making. This work suggests many directions for future work. We are pursuing the use of CPO for sequential decision-making, where non-exchangeable conformal prediction is required to handle sampling [25]. Another interesting extension would be applications of CPO to discrete objects using GFlowNets for conditional sampling [41, 32]. Finally, leveraging CPO over function spaces would enable its use to distributionally robust optimization.

References

- [1] Shreya Agrawal et al. “Machine learning for precipitation nowcasting from radar images” In arXiv preprint arXiv:1912.12132, 2019

- [2] Luca Ambrogioni et al. “Forward amortized inference for likelihood-free variational marginalization” In The 22nd International Conference on Artificial Intelligence and Statistics, 2019, pp. 777–786 PMLR

- [3] Enrico Angelelli, Valentina Morandi, Martin Savelsbergh and Maria Grazia Speranza “System optimal routing of traffic flows with user constraints using linear programming” In European journal of operational research 293.3 Elsevier, 2021, pp. 863–879

- [4] Anastasios N Angelopoulos and Stephen Bates “A gentle introduction to conformal prediction and distribution-free uncertainty quantification” In arXiv preprint arXiv:2107.07511, 2021

- [5] Anastasios N Angelopoulos et al. “Image-to-image regression with distribution-free uncertainty quantification and applications in imaging” In International Conference on Machine Learning, 2022, pp. 717–730 PMLR

- [6] Georgy Ayzel, Tobias Scheffer and Maik Heistermann “RainNet v1. 0: a convolutional neural network for radar-based precipitation nowcasting” In Geoscientific Model Development 13.6 Copernicus GmbH, 2020, pp. 2631–2644

- [7] Omer Belhasin et al. “Principal Uncertainty Quantification with Spatial Correlation for Image Restoration Problems” In arXiv preprint arXiv:2305.10124, 2023

- [8] Aharon Ben-Tal, Laurent El Ghaoui and Arkadi Nemirovski “Robust optimization” Princeton university press, 2009

- [9] Dimitris Bertsimas, Vishal Gupta and Nathan Kallus “Data-driven robust optimization” In Mathematical Programming 167 Springer, 2018, pp. 235–292

- [10] Hans-Georg Beyer and Bernhard Sendhoff “Robust optimization–a comprehensive survey” In Computer methods in applied mechanics and engineering 196.33-34 Elsevier, 2007, pp. 3190–3218

- [11] Geoff Boeing “OSMnx: New methods for acquiring, constructing, analyzing, and visualizing complex street networks” In Computers, Environment and Urban Systems 65 Elsevier, 2017, pp. 126–139

- [12] Diah Chaerani, Cornelis Roos and A Aman “The robust shortest path problem by means of robust linear optimization” In Operations Research Proceedings 2004: Selected Papers of the Annual International Conference of the German Operations Research Society (GOR). Jointly Organized with the Netherlands Society for Operations Research (NGB) Tilburg, September 1–3, 2004, 2005, pp. 335–342 Springer

- [13] Timothy M Chan “A (slightly) faster algorithm for Klee’s measure problem” In Proceedings of the twenty-fourth annual symposium on Computational geometry, 2008, pp. 94–100

- [14] Abhilash Reddy Chenreddy, Nymisha Bandi and Erick Delage “Data-driven conditional robust optimization” In Advances in Neural Information Processing Systems 35, 2022, pp. 9525–9537

- [15] Meysam Cheramin, Richard Li-Yang Chen, Jianqiang Cheng and Ali Pinar “Data-driven robust optimization using scenario-induced uncertainty sets” In arXiv preprint arXiv:2107.04977, 2021

- [16] Tore Dalenius “The problem of optimum stratification” In Scandinavian Actuarial Journal 1950.3-4 Taylor & Francis, 1950, pp. 203–213

- [17] Tore Dalenius and Margaret Gurney “The problem of optimum stratification. II” In Scandinavian Actuarial Journal 1951.1-2 Taylor & Francis, 1951, pp. 133–148

- [18] Finale Doshi-Velez and Been Kim “Towards a rigorous science of interpretable machine learning” In arXiv preprint arXiv:1702.08608, 2017

- [19] Conor Durkan, Artur Bekasov, Iain Murray and George Papamakarios “Neural spline flows” In Advances in Neural Information Processing Systems 32, 2019

- [20] Conor Durkan, Artur Bekasov, Iain Murray and George Papamakarios “nflows: normalizing flows in PyTorch” Zenodo, 2020 DOI: 10.5281/zenodo.4296287

- [21] H. Edelsbrunner “The union of balls and its dual shape” In Discrete & Computational Geometry 13.3, 1995, pp. 415–440 DOI: 10.1007/BF02574053

- [22] Adam N Elmachtoub and Paul Grigas “Smart “predict, then optimize”” In Management Science 68.1 INFORMS, 2022, pp. 9–26

- [23] Adam N Elmachtoub, Jason Cheuk Nam Liang and Ryan McNellis “Decision trees for decision-making under the predict-then-optimize framework” In International Conference on Machine Learning, 2020, pp. 2858–2867 PMLR

- [24] Kai-Tai Fang and Jianxin Pan “A Review of Representative Points of Statistical Distributions and Their Applications” In Mathematics 11.13 MDPI, 2023, pp. 2930

- [25] Clara Fannjiang et al. “Conformal prediction under feedback covariate shift for biomolecular design” In Proceedings of the National Academy of Sciences 119.43 National Acad Sciences, 2022, pp. e2204569119

- [26] Shai Feldman, Stephen Bates and Yaniv Romano “Calibrated multiple-output quantile regression with representation learning” In Journal of Machine Learning Research 24.24, 2023, pp. 1–48

- [27] George Fishman “Monte Carlo: concepts, algorithms, and applications” Springer Science & Business Media, 2013

- [28] Bernard D Flury and Thaddeus Tarpey “Representing a large collection of curves: A case for principal points” In The American Statistician 47.4 Taylor & Francis, 1993, pp. 304–306

- [29] Gabriele Franch et al. “Precipitation nowcasting with orographic enhanced stacked generalization: Improving deep learning predictions on extreme events” In Atmosphere 11.3 MDPI, 2020, pp. 267

- [30] Joeri Hermans et al. “Averting a crisis in simulation-based inference” In arXiv preprint arXiv:2110.06581, 2021

- [31] Eliahu Horwitz and Yedid Hoshen “Conffusion: Confidence intervals for diffusion models” In arXiv preprint arXiv:2211.09795, 2022

- [32] Edward J Hu et al. “GFlowNet-EM for learning compositional latent variable models” In International Conference on Machine Learning, 2023, pp. 13528–13549 PMLR

- [33] Yuge Hu, Joseph Musielewicz, Zachary W Ulissi and Andrew J Medford “Robust and scalable uncertainty estimation with conformal prediction for machine-learned interatomic potentials” In Machine Learning: Science and Technology 3.4 IOP Publishing, 2022, pp. 045028

- [34] Rafael Izbicki, Gilson Shimizu and Rafael B Stern “Cd-split and hpd-split: Efficient conformal regions in high dimensions” In The Journal of Machine Learning Research 23.1 JMLRORG, 2022, pp. 3772–3803

- [35] Chancellor Johnstone and Bruce Cox “Conformal uncertainty sets for robust optimization” In Conformal and Probabilistic Prediction and Applications, 2021, pp. 72–90 PMLR

- [36] Margot E Kaminski “The right to explanation, explained” In Berkeley Technology Law Journal 34.1 JSTOR, 2019, pp. 189–218

- [37] Diederik P Kingma and Jimmy Ba “Adam: A method for stochastic optimization” In arXiv preprint arXiv:1412.6980, 2014

- [38] Václav Kořenář “Vehicle routing problem with stochastic demands” In ALLOCATION FRAGMENTS OF THE DISTRIBUTED DATABASE, 2003, pp. 24

- [39] Jussi Leinonen et al. “Latent diffusion models for generative precipitation nowcasting with accurate uncertainty quantification” In arXiv preprint arXiv:2304.12891, 2023

- [40] Jan-Matthis Lueckmann et al. “Benchmarking simulation-based inference” In International Conference on Artificial Intelligence and Statistics, 2021, pp. 343–351 PMLR

- [41] Nikolay Malkin et al. “GFlowNets and variational inference” In arXiv preprint arXiv:2210.00580, 2022

- [42] Huiying Mao, Ryan Martin and Brian J Reich “Valid model-free spatial prediction” In Journal of the American Statistical Association Taylor & Francis, 2022, pp. 1–11

- [43] Joel Max “Quantizing for minimum distortion” In IRE Transactions on Information Theory 6.1 IEEE, 1960, pp. 7–12

- [44] Andrea Mor and Maria Grazia Speranza “Vehicle routing problems over time: a survey” In Annals of Operations Research 314.1 Springer, 2022, pp. 255–275

- [45] Mervin E Muller “A note on a method for generating points uniformly on n-dimensional spheres” In Communications of the ACM 2.4 ACM New York, NY, USA, 1959, pp. 19–20

- [46] Shunichi Ohmori “A predictive prescription using minimum volume k-nearest neighbor enclosing ellipsoid and robust optimization” In Mathematics 9.2 MDPI, 2021, pp. 119

- [47] Michał Okulewicz and Jacek Mańdziuk “A metaheuristic approach to solve dynamic vehicle routing problem in continuous search space” In Swarm and Evolutionary Computation 48 Elsevier, 2019, pp. 44–61

- [48] Adam Paszke et al. “Pytorch: An imperative style, high-performance deep learning library” In Advances in Neural Information Processing Systems 32, 2019

- [49] Yash Patel et al. “Variational Inference with Coverage Guarantees” In arXiv preprint arXiv:2305.14275, 2023

- [50] Egon Peršak and Miguel F Anjos “Contextual robust optimisation with uncertainty quantification” In International Conference on Integration of Constraint Programming, Artificial Intelligence, and Operations Research, 2023, pp. 124–132 Springer

- [51] Meead Saberi and İ Ömer Verbas “Continuous approximation model for the vehicle routing problem for emissions minimization at the strategic level” In Journal of Transportation Engineering 138.11 American Society of Civil Engineers, 2012, pp. 1368–1376

- [52] Utsav Sadana et al. “A Survey of Contextual Optimization Methods for Decision Making under Uncertainty” In arXiv preprint arXiv:2306.10374, 2023

- [53] Matteo Sesia and Emmanuel J Candès “A comparison of some conformal quantile regression methods” In Stat 9.1 Wiley Online Library, 2020, pp. e261

- [54] Matteo Sesia and Yaniv Romano “Conformal prediction using conditional histograms” In Advances in Neural Information Processing Systems 34, 2021, pp. 6304–6315

- [55] Glenn Shafer and Vladimir Vovk “A Tutorial on Conformal Prediction.” In Journal of Machine Learning Research 9.3, 2008

- [56] Chao Shang and Fengqi You “A data-driven robust optimization approach to scenario-based stochastic model predictive control” In Journal of Process Control 75 Elsevier, 2019, pp. 24–39

- [57] Xingjian Shi et al. “Deep learning for precipitation nowcasting: A benchmark and a new model” In Advances in neural information processing systems 30, 2017

- [58] Chunlin Sun, Linyu Liu and Xiaocheng Li “Predict-then-Calibrate: A New Perspective of Robust Contextual LP” In arXiv preprint arXiv:2305.15686, 2023

- [59] Renukanandan Tumu, Lars Lindemann, Truong Nghiem and Rahul Mangharam “Physics constrained motion prediction with uncertainty quantification” In arXiv preprint arXiv:2302.01060, 2023

- [60] Zhendong Wang et al. “Probabilistic conformal prediction using conditional random samples” In arXiv preprint arXiv:2206.06584, 2022

- [61] Yachong Yang and Arun Kumar Kuchibhotla “Finite-sample efficient conformal prediction” In arXiv preprint arXiv:2104.13871, 2021

Checklist

-

1.

For all models and algorithms presented, check if you include:

-

(a)

A clear description of the mathematical setting, assumptions, algorithm, and/or model. [Yes]

-

(b)

An analysis of the properties and complexity (time, space, sample size) of any algorithm. [Yes]

-

(c)

(Optional) Anonymized source code, with specification of all dependencies, including external libraries. [No, will be released upon acceptance]

-

(a)

-

2.

For any theoretical claim, check if you include:

-

(a)

Statements of the full set of assumptions of all theoretical results. [Yes]

-

(b)

Complete proofs of all theoretical results. [Yes]

-

(c)

Clear explanations of any assumptions. [Yes]

-

(a)

-

3.

For all figures and tables that present empirical results, check if you include:

-

(a)

The code, data, and instructions needed to reproduce the main experimental results (either in the supplemental material or as a URL). [Yes]

-

(b)

All the training details (e.g., data splits, hyperparameters, how they were chosen). [Yes]

-

(c)

A clear definition of the specific measure or statistics and error bars (e.g., with respect to the random seed after running experiments multiple times). [Yes]

-

(d)

A description of the computing infrastructure used. (e.g., type of GPUs, internal cluster, or cloud provider). [Yes]

-

(a)

-

4.

If you are using existing assets (e.g., code, data, models) or curating/releasing new assets, check if you include:

-

(a)

Citations of the creator If your work uses existing assets. [Not Applicable]

-

(b)

The license information of the assets, if applicable. [Not Applicable]

-

(c)

New assets either in the supplemental material or as a URL, if applicable. [Not Applicable]

-

(d)

Information about consent from data providers/curators. [Not Applicable]

-

(e)

Discussion of sensible content if applicable, e.g., personally identifiable information or offensive content. [Not Applicable]

-

(a)

-

5.

If you used crowdsourcing or conducted research with human subjects, check if you include:

-

(a)

The full text of instructions given to participants and screenshots. [Not Applicable]

-

(b)

Descriptions of potential participant risks, with links to Institutional Review Board (IRB) approvals if applicable. [Not Applicable]

-

(c)

The estimated hourly wage paid to participants and the total amount spent on participant compensation. [Not Applicable]

-

(a)

Appendix A Prediction Region Validity Lemma

Lemma A.1.

Consider any that is -Lipschitz in under the metric for any fixed . Assume further that . Then,

| (15) |

Proof.

We consider the event of interest conditionally on a pair where :

Since we have the assumption that , the result immediately follows. ∎

Appendix B Optimization Convergence Lemma

We first begin by citing a standard result of projected gradient descent, from which the result of interest immediately follows.

Lemma B.1.

Let be a closed convex set, and be convex, differentiable, and -Lipschitz. Let be a minimizer of , and define and . Then the iterates returned by projected gradient descent satisfy

| (16) |

Lemma B.2.

Let for , , and convex-concave and -Lipschitz in for any fixed . Let be a minimizer of . For any , define and . Then the iterates returned by Algorithm 1 satisfy

| (17) |

Proof.

Notice that is convex by Danskin’s Theorem by assumption of the convexity of in . By Danskin’s Theorem, , where . Further notice

| (18) |

Denote . Clearly, is -Lipschitz by assumption on the structure of . Further, as the point-wise maximum of -Lipschitz functions is itself -Lipschitz, it follows that is also -Lipschitz. The conclusion, thus, follows by applying Lemma B.1 to . ∎

Appendix C Simulation-Based Inference Benchmarks

The benchmark tasks are a subset of those provided by [40]. For convenience, we provide brief descriptions of the tasks curated by this library; however, a more comprehensive description of these tasks can be found in their manuscript.

C.1 Gaussian Linear

10-dimensional Gaussian model with a Gaussian prior:

C.2 Gaussian Linear Uniform

10-dimensional Gaussian model with a uniform prior:

C.3 SLCP with Distractors

Simple Likelihood Complex Posterior (SLCP) with Distractors has uninformative dimensions in the observation over the standard SLCP task:

C.4 Bernoulli GLM Raw

10-parameter GLM with Bernoulli observations and Gaussian prior. Observations are not sufficient statistics, unlike the standard “Bernoulli GLM” task:

C.5 Gaussian Mixture

A mixture of two Gaussians, with one having a much broader covariance structure:

C.6 Two Moons

Task with a posterior that has both global (bimodal) and local (crescent-shaped) structure:

C.7 SIR

Epidemiology model with (susceptible), (infected), and (recovered). A contact rate and mean recovery rate of are used as follows:

C.8 Lotka-Volterra

An ecological model commonly used in describing dynamics of competing species. parameterizes this interaction as :

Appendix D Training Details

All encoders were implemented in PyTorch [48] with a Neural Spline Flow architecture. The NSF was built using code from [20]. Specific architecture hyperparameter choices were taken to be the defaults from [20] and are available in the code. Optimization was done using Adam [37] with a learning rate of over 5,000 training steps. Minibatches were drawn from the corresponding prior and simulator as specified per task in the preceding section. Training these models required between 10 minutes and two hours using an Nvidia RTX 2080 Ti GPUs for each of the SBI tasks.

Appendix E Posteriors

We provide visualizations of approximate and reference posteriors (produced with MCMC from [40]).

E.1 Gaussian Linear

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/gaussian_linear_SNPE.png)

E.2 Gaussian Mixture

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/gaussian_mixture_SNPE.png)

E.3 Gaussian Linear Uniform

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/gaussian_linear_uniform_SNPE.png)

E.4 Two Moons

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/two_moons_SNPE.png)

E.5 SLCP

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/slcp_SNPE.png)

E.6 Bernoulli GLM

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/bernoulli_glm_SNPE.png)

Appendix F SBI Representative Points

F.1 Gaussian Mixture

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/gaussian_mixture_rps.png)

F.2 Two Moons

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/9aeab011-bd6c-438a-925f-edd111b48960/two_moons_rps.png)

Appendix G Robust Vehicle Routing Setup

The routing graph of Manhattan was extracted using OSMnx, with local highway speeds extracted using OpenStreetMap [11]. Highway speed imputation was performed on edges where such information was not available, specifically by averaging over those highways of comparable categorization, namely “residential,” “secondary,” or “tertiary.” Doing so defined a nominal travel cost .

We now wish to modify these nominal travel costs to account for the weather predictions made upstream. That is, we wish to account for the precipitation map in these edge weights. To do so, we use the global coordinates of each to find the precipitation at the corresponding location. Concretely, we determine the pixel coordinate by scaling the coordinate to the range of the region that was forecasted. So, for a forecast over the window , the corresponding pixel lookup is:

The corresponding precipitation associated with each vertex, therefore, is . We define the final travel cost for each edge with endpoints as:

| (19) |

We then solve SPP on the weighted directed graph with edge weights . An example of this weighting and the corresponding shortest path is illustrated in Figure 6.