
On Lie-Bracket Averaging for a Class of Hybrid Dynamical Systems with Applications to Model-Free Control and Optimization

Abstract

The stability of dynamical systems with oscillatory behaviors and well-defined average vector fields has traditionally been studied using averaging theory. These tools have also been applied to hybrid dynamical systems, which combine continuous and discrete dynamics. However, most averaging results for hybrid systems are limited to first-order methods, hindering their use in systems and algorithms that require high-order averaging techniques, such as hybrid Lie-bracket-based extremum seeking algorithms and hybrid vibrational controllers. To address this limitation, we introduce a novel high-order averaging theorem for analyzing the stability of hybrid dynamical systems with high-frequency periodic flow maps. These systems incorporate set-valued flow maps and jump maps, effectively modeling well-posed differential and difference inclusions. By imposing appropriate regularity conditions, we establish results on $(T,\varepsilon)$-closeness of solutions and semi-global practical asymptotic stability for sets. These theoretical results are then applied to the study of three distinct applications in the context of hybrid model-free control and optimization via Lie-bracket averaging.

Keywords: Hybrid systems, averaging theory, multi-time scale dynamical systems, extremum seeking

1 Introduction

The theory of averaging has been crucial over the past century for analyzing and synthesizing systems with fast oscillatory or time-varying dynamics [41], including nonlinear controllers [27], estimation methods, and model-free optimization algorithms [6, 48, 40, 44]. Stability analyses based on averaging theory have been extensively explored for systems modeled as Ordinary Differential Equations (ODEs) [41, 33] and extended to certain Hybrid Dynamical Systems (HDS) [53, 49, 39], as well as to systems in which dithers are injected into a non-smooth/switched system and averaging is used to obtain a continuous approximation of the dynamics [26, 24].

Stability results based on averaging theory typically rely on a well-defined “average system” whose solutions remain “close” to those of the original system over compact time domains and state space subsets. This closeness is established by transforming the original dynamics into a perturbed version of the average dynamics. By leveraging uniform stability properties via $\mathcal{KL}$ bounds, it can be shown that the trajectories of the original dynamics converge similarly to those of the average dynamics as the time scale separation increases. This method has been applied to dynamical systems modeled as ODEs [41, 27, 49] and some classes of HDS and switching systems [53, 32, 39, 15, 25, 36]. These findings have led to the development of new hybrid algorithms for extremum-seeking (ES) and adaptive systems [40, 29, 28, 3, 9].

Despite significant progress over the last decade, gaps remain between the averaging tools available for ODEs and those suitable for HDS. Most averaging results for HDS have been limited to “first-order” averaging, where the nominal stabilizing vector field is derived by neglecting higher-order perturbations. As indicated in [41, Section 2.9], [5], and [16], first-order approximations may not accurately characterize the stability of certain highly oscillatory systems, such as those in vibrational control [44] and Lie-bracket ES [16, 22]. In these cases, the stabilizing average dynamics require consideration of higher-order terms. This high-order averaging, well known in the ODE literature, is the focus of this paper, which aims to develop similar tools for hybrid dynamical systems with highly oscillatory flow maps and to demonstrate their applications in control and optimization problems.

Based on this, our main contributions are the following:

(a) First, a novel high-order averaging theorem is introduced to study the “closeness of solutions” property between certain HDS with periodic flow maps and their corresponding average dynamics. In contrast to existing literature, we focus on hybrid systems with differential and difference inclusions, where stabilizing effects are determined by an average hybrid system obtained through the recursive application of averaging at high orders of the inverse frequency. These higher-order averages become essential when traditional first-order average systems fail to capture stability properties of the system. For compact time domains and compact sets of initial conditions, it is established that each solution of the original HDS is $(T,\varepsilon)$-close to some solution of its average hybrid dynamics. This notion of closeness between solutions is needed because, in general, HDS generate solutions with discontinuities, or “jumps”, for which the standard (uniform) distance between solutions is not a suitable metric; see [49, 53] and [20, Ex. 3, p. 43]. Moreover, the models studied in this paper admit multiple solutions from a given initial condition, a property that is particularly useful to study families of functions of interest, as in, e.g., switching systems. To establish the closeness of solutions property, we introduce a new change of variable that is recursive in nature and that leverages the structure in which the “high-order” oscillations appear in the flow map.

(b) Next, by leveraging the property of closeness of solutions between the original and the average hybrid dynamics, as well as a uniform asymptotic stability assumption on the (second-order) average hybrid dynamics, we establish a semi-global practical asymptotic stability result for the original hybrid system. For the purpose of generality, we allow the average hybrid dynamics to have a compact set that is locally asymptotically stable with respect to some basin of attraction, which recovers the entire space whenever the stability properties are actually global. By using proper indicators in the stability analysis, the original hybrid system is shown to render the same compact set semi-globally practically asymptotically stable with respect to the same basin of attraction, thus effectively extending [49, Thm. 2] to high-order averaging.

(c) Finally, by exploiting the structure of the HDS, as well as the high-order averaging results, we study three different novel stabilization and optimization problems that involve hybrid set-valued dynamics and highly oscillatory control: (i) distance-based synchronization in networks with switching communication topologies and control directions, extending the results of [18, 17] to oscillators on dynamic graphs, operating under intermittent control; (ii) source-seeking problems in non-holonomic vehicles operating under faulty and spoofed sensors, which extends the results of [16] to vehicles operating in adversarial environments, and those of [38] to vehicles with non-holonomic models with angular actuation; and (iii) the solution of model-free global extremum seeking problems on smooth compact manifolds, which extends the recent results of [37] to Lie-bracket-based algorithms. In this manner, an important gap in the hybrid extremum-seeking control literature is also filled by integrating hybrid dynamics into Lie-bracket-based extremum-seeking algorithms.

2 Preliminaries

2.1 Notation

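The bilinear form $\langle\cdot,\cdot\rangle$ is the canonical inner product on $\mathbb{R}^n$, i.e., $\langle x,y\rangle = x^\top y$ for any two vectors $x,y\in\mathbb{R}^n$. Given a compact set $\mathcal{A}\subset\mathbb{R}^n$ and a vector $x\in\mathbb{R}^n$, we use $|x|_{\mathcal{A}} := \min_{y\in\mathcal{A}}\|x-y\|_2$. We also use $\overline{\mathrm{co}}(\mathcal{A})$ to denote the closure of the convex hull of $\mathcal{A}$. A set-valued mapping $M:\mathbb{R}^p\rightrightarrows\mathbb{R}^n$ is outer semicontinuous (OSC) at $z$ if for each sequence $\{(z_i,s_i)\}\to(z,s)$ satisfying $s_i\in M(z_i)$ for all $i$, we have $s\in M(z)$. A mapping $M$ is locally bounded (LB) at $z$ if there exists an open neighborhood $N_z$ of $z$ such that $M(N_z)$ is bounded. The mapping $M$ is OSC and LB relative to a set $K$ if $M$ is OSC at all $z\in K$ and $M(K)$ is bounded. A function $\beta:\mathbb{R}_{\ge 0}\times\mathbb{R}_{\ge 0}\to\mathbb{R}_{\ge 0}$ is of class $\mathcal{KL}$ if it is nondecreasing in its first argument, nonincreasing in its second argument, $\lim_{r\to 0^+}\beta(r,s)=0$ for each $s\in\mathbb{R}_{\ge 0}$, and $\lim_{s\to\infty}\beta(r,s)=0$ for each $r\in\mathbb{R}_{\ge 0}$. Throughout the paper, for two (or more) vectors $u,v$, we write $(u,v)=[u^\top,v^\top]^\top$. Let . Given a Lipschitz continuous function , we use to denote the generalized Jacobian [13] of with respect to the variable . A function is said to be of class $\mathcal{C}^k$ if its $k$th derivative is locally Lipschitz continuous. For two vectors $u,v\in\mathbb{R}^n$, the notation $u\otimes v$ denotes the outer product defined as $u\otimes v=uv^\top$. We let and , where the dimension will be clear from the context. Finally, all simulation time units may be assumed to be in seconds.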

2.2 Hybrid Dynamical Systems

2.2.1 Model

We consider HDS aligned with the framework of [21], given by the following inclusions:

$$x\in C,\qquad \dot{x}\in F(x), \tag{1a}$$

$$x\in D,\qquad x^{+}\in G(x), \tag{1b}$$

where $F:\mathbb{R}^n\rightrightarrows\mathbb{R}^n$ is called the flow map, $G:\mathbb{R}^n\rightrightarrows\mathbb{R}^n$ is called the jump map, $C\subset\mathbb{R}^n$ is called the flow set, and $D\subset\mathbb{R}^n$ is called the jump set. We use $\mathcal{H}=(C,F,D,G)$ to denote the data of the HDS $\mathcal{H}$. We note that systems of the form (1) generalize purely continuous-time systems (obtained when $D=\emptyset$) and purely discrete-time systems (obtained when $C=\emptyset$). Of particular interest to us are time-varying systems, which can also be represented as (1) by using an auxiliary state $\tau$ with dynamics $\dot{\tau}=\rho$ and $\tau^{+}=\tau$, where $\rho\ge 0$ indicates the rate of change of $\tau$. In this paper, we will always work with well-posed HDS that satisfy the following standing assumption [21, Assumption 6.5].

Assumption 1

The sets $C$ and $D$ are closed. The set-valued mapping $F$ is OSC, LB, and for each $x\in C$ the set $F(x)$ is convex and nonempty. The set-valued mapping $G$ is OSC, LB, and for each $x\in D$ the set $G(x)$ is nonempty.

2.2.2 Properties of Solutions

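Solutions to (1) are parameterized by a continuous-time index $t\in\mathbb{R}_{\ge 0}$, which increases continuously during flows, and a discrete-time index $j\in\mathbb{Z}_{\ge 0}$, which increases by one during jumps. Therefore, solutions to (1) are defined on hybrid time domains (HTDs). A set $E\subset\mathbb{R}_{\ge 0}\times\mathbb{Z}_{\ge 0}$ is called a compact HTD if $E=\bigcup_{j=0}^{J-1}\left([t_j,t_{j+1}]\times\{j\}\right)$ for some finite sequence of times $0=t_0\le t_1\le\dots\le t_J$. The set $E$ is a HTD if for all $(T,J)\in E$, the set $E\cap\left([0,T]\times\{0,\dots,J\}\right)$ is a compact HTD.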

The following definition formalizes the notion of solution to HDS of the form (1).

Definition 1

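A hybrid arc $x$ is a function defined on a HTD. In particular, $x:\mathrm{dom}(x)\to\mathbb{R}^n$ is such that $t\mapsto x(t,j)$ is locally absolutely continuous for each $j$ such that the interval $I_j:=\{t:(t,j)\in\mathrm{dom}(x)\}$ has a nonempty interior. A hybrid arc $x$ is a solution to the HDS (1) if $x(0,0)\in C\cup D$, and:

1. For all $j$ such that $I_j$ has nonempty interior: $x(t,j)\in C$ for all $t\in\mathrm{int}(I_j)$, and $\dot{x}(t,j)\in F(x(t,j))$ for almost all $t\in I_j$.

2. For all $(t,j)\in\mathrm{dom}(x)$ such that $(t,j+1)\in\mathrm{dom}(x)$: $x(t,j)\in D$ and $x(t,j+1)\in G(x(t,j))$.

A solution is maximal if it cannot be further extended. A solution $x$ is said to be complete if $\mathrm{dom}(x)$ is unbounded.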
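To make the flow/jump mechanics of Definition 1 concrete, the following is a minimal simulation sketch in Python, not an implementation used in this paper. It assumes a scalar state, single-valued maps, forward-Euler integration, and a "jumps take priority" rule when the state lies in both sets. The function name `simulate_hds` and the periodic timer used as test system ($\dot{x}=1$ on $C=[0,1]$, $x^{+}=0$ on $D=[1,\infty)$) are illustrative choices.

```python
def simulate_hds(x0, flow, in_C, jump, in_D, t_end, dt=1e-3, max_jumps=100):
    """Forward-Euler sketch of one solution of a hybrid system.

    Returns a sampled hybrid arc as a list of (t, j, x) triples.
    Assumption: when x is in both C and D, the jump is taken.
    """
    t, j, x = 0.0, 0, x0
    arc = [(t, j, x)]
    while t < t_end and j < max_jumps:
        if in_D(x):                      # jump: j increases by one, t is frozen
            x = jump(x)
            j += 1
        elif in_C(x):                    # flow: t increases, j is frozen
            step = min(dt, t_end - t)
            x = x + step * flow(x)
            t += step
        else:                            # neither flow nor jump is possible
            break
        arc.append((t, j, x))
    return arc


# Illustrative data: a timer that flows at unit rate and resets at x = 1.
arc = simulate_hds(
    x0=0.0,
    flow=lambda x: 1.0,
    in_C=lambda x: 0.0 <= x <= 1.0,
    jump=lambda x: 0.0,
    in_D=lambda x: x >= 1.0,
    t_end=3.5,
)
t_final, j_final, x_final = arc[-1]
print(t_final, j_final, x_final)
```

The returned list is a sampled version of a hybrid arc: consecutive entries with the same `j` belong to one interval of flow, and consecutive entries with the same `t` correspond to a jump.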

In this paper, we also work with an “inflated” version of (1), which is instrumental for robustness analysis [21, Def. 6.27].

Definition 2

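For $\delta>0$, the $\delta$-inflation of the HDS $\mathcal{H}$ with data (1) is given by $\mathcal{H}_\delta:=(C_\delta,F_\delta,D_\delta,G_\delta)$, where the sets $C_\delta,D_\delta$ are defined as:

$$C_\delta:=\{x\in\mathbb{R}^n:(x+\delta\mathbb{B})\cap C\neq\emptyset\}, \tag{2a}$$

$$D_\delta:=\{x\in\mathbb{R}^n:(x+\delta\mathbb{B})\cap D\neq\emptyset\}, \tag{2b}$$

and the set-valued mappings $F_\delta,G_\delta$ are defined as:

$$F_\delta(x):=\overline{\mathrm{co}}\,F\big((x+\delta\mathbb{B})\cap C\big)+\delta\mathbb{B}, \tag{2c}$$

$$G_\delta(x):=\{v\in\mathbb{R}^n: v\in g+\delta\mathbb{B},\ g\in G\big((x+\delta\mathbb{B})\cap D\big)\}, \tag{2d}$$

for all $x\in\mathbb{R}^n$, where $\mathbb{B}$ denotes the closed unit ball.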

The following definition, corresponding to [21, Def. 5.23], will be used in this paper.

Definition 3

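Given $T,\varepsilon>0$, two hybrid arcs $x_1$ and $x_2$ are said to be $(T,\varepsilon)$-close if:

• for each $(t,j)\in\mathrm{dom}(x_1)$ with $t+j\le T$ there exists $s$ such that $(s,j)\in\mathrm{dom}(x_2)$, with $|t-s|<\varepsilon$ and $|x_1(t,j)-x_2(s,j)|<\varepsilon$.

• for each $(t,j)\in\mathrm{dom}(x_2)$ with $t+j\le T$ there exists $s$ such that $(s,j)\in\mathrm{dom}(x_1)$, with $|t-s|<\varepsilon$ and $|x_2(t,j)-x_1(s,j)|<\varepsilon$.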
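The two conditions of Definition 3 can be checked numerically on sampled hybrid arcs. The sketch below is only illustrative: it assumes that each arc is stored as a list of `(t, j, x)` samples with `x` a tuple, that the test is applied to the samples only (so it approximates the definition on a grid), and that strict inequalities are used as in [21, Def. 5.23]. The helper names `is_close` and `timer_arc` are hypothetical.

```python
import math


def _one_sided(arc_a, arc_b, T, eps):
    """Every sample of arc_a with t + j <= T has a nearby sample of arc_b with the same j."""
    for (t, j, x) in arc_a:
        if t + j > T:
            continue
        if not any(jb == j and abs(t - s) < eps and math.dist(x, y) < eps
                   for (s, jb, y) in arc_b):
            return False
    return True


def is_close(arc1, arc2, T, eps):
    """Sample-based check of (T, eps)-closeness: the one-sided test in both directions."""
    return _one_sided(arc1, arc2, T, eps) and _one_sided(arc2, arc1, T, eps)


def timer_arc(t_jump, t_end, dt=0.01):
    """Sampled arc of a timer that flows at unit rate and resets to zero at time t_jump."""
    arc = [(k * dt, 0, (k * dt,)) for k in range(round(t_jump / dt) + 1)]
    arc += [(t_jump + k * dt, 1, (k * dt,))
            for k in range(round((t_end - t_jump) / dt) + 1)]
    return arc


# Two arcs whose jump times differ by 0.05.
arc1 = timer_arc(1.00, 2.0)
arc2 = timer_arc(1.05, 2.0)
close_loose = is_close(arc1, arc2, T=2.5, eps=0.1)
close_tight = is_close(arc1, arc2, T=2.5, eps=0.01)
print(close_loose, close_tight)
```

In this example the two arcs differ by almost one unit at times between the two resets, so their uniform distance is large, yet they are close in the sense of Definition 3 once $\varepsilon$ exceeds the mismatch between the jump times.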

2.2.3 Stability Notions

To study the stability properties of the HDS (1), we make use of the following notion, which is instrumental for the study of “local” stability properties.

Definition 4

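Let $\mathcal{A}$ be a compact set contained in an open set $\mathcal{B}_{\mathcal{A}}$. A function $\omega:\mathcal{B}_{\mathcal{A}}\to\mathbb{R}_{\ge 0}$ is said to be a proper indicator function for $\mathcal{A}$ on $\mathcal{B}_{\mathcal{A}}$ if $\omega$ is continuous, $\omega(x)=0$ if and only if $x\in\mathcal{A}$, and if the sequence $\{x_i\}_{i=1}^{\infty}$, $x_i\in\mathcal{B}_{\mathcal{A}}$, approaches the boundary of $\mathcal{B}_{\mathcal{A}}$ or is unbounded, then the sequence $\{\omega(x_i)\}_{i=1}^{\infty}$ is also unbounded.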

The use of proper indicators is common when studying (uniform) local stability properties [45]. The following definition is borrowed from [21, Def. 7.10 and Thm. 7.12].

Definition 5

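A compact set $\mathcal{A}$ contained in an open set $\mathcal{B}_{\mathcal{A}}$ is said to be uniformly asymptotically stable (UAS) with a basin of attraction $\mathcal{B}_{\mathcal{A}}$ for the HDS (1) if for every proper indicator $\omega$ for $\mathcal{A}$ on $\mathcal{B}_{\mathcal{A}}$ there exists $\beta\in\mathcal{KL}$ such that for each solution $x$ to (1) starting in $\mathcal{B}_{\mathcal{A}}$, we have

$$\omega(x(t,j))\le\beta\big(\omega(x(0,0)),\,t+j\big), \tag{3}$$

for all $(t,j)\in\mathrm{dom}(x)$. If $\mathcal{B}_{\mathcal{A}}$ is the whole space, then one can take $\omega(x)=|x|_{\mathcal{A}}$, and in this case the set $\mathcal{A}$ is said to be uniformly globally asymptotically stable (UGAS) for the HDS (1).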

3 Motivational Example

The problem of target seeking in mobile robotic systems is ubiquitous across various engineering applications, including rescue missions [8], gas leak detection, and general autonomous vehicles performing exploration missions in hazardous environments that could be too dangerous for humans [55, 38]. Different algorithms have been considered for solving source-seeking problems in mobile robots [55]. Naturally, these algorithms rely on real-time exploration and exploitation mechanisms that are robust with respect to different types of disturbances, including small bounded measurement noise and implementation errors [38], as well as certain structured and bounded additive perturbations [47]. Nevertheless, in many realistic applications, the seeking robots operate in environments where their sensors rarely have continuous and perfect access to measurements of the environment. Common reasons for faulty measurements include intermittent communication networks and interference due to obstacles or external environmental conditions, to name just a few [30, 19]. In addition to encountering faulty and intermittent measurements, autonomous robots operating in adversarial environments might also be subject to malicious spoofing attacks that deliberately modify some of the signals used by the controllers. In such situations, it is natural to ask whether the mobile robots can still complete their missions, and under what conditions (if any) such success can be guaranteed.

To study the above question, we consider a typical model of a mobile robot studied in the context of source seeking problems: a planar kinematic model of a non-holonomic vehicle, with equations

where the vector models the position of the vehicle in the plane, the vector captures the orientation of the vehicle, is the forward velocity, and is the angular velocity. We use the above model for the kinematics of the vehicle to simplify the discussion since our focus is on modeling the effect of intermittent measurements and spoofing attacks. Nevertheless, this model is ubiquitous in the source-seeking literature, see [7], [14], [38], [55], and [50].

The main objective of the vehicle is to stabilize its position at a particular unknown target point using only real-time measurements of a distance-like signal , which for simplicity is assumed to be , strongly convex with minimizer at the point , and having a globally Lipschitz gradient (these assumptions will be relaxed later in Section 5.1.2). In other words, the vehicle seeks to solve the problem without knowledge of the mathematical form of . Since non-holonomic vehicles cannot be simultaneously stabilized in position and orientation using smooth feedback, we let the vehicles oscillate continuously using a predefined constant angular velocity . This model can also capture vehicles that have no directional control over , such as quad-copters that have lost one propeller, leading to a constant yaw rate during hover that can be affected, though not eliminated or reversed, by modulating the remaining propellers [51].

Under “nominal” operating conditions, and to stabilize (a neighborhood of) the target , we can consider the following feedback controller that only uses real-time measurements of the potential field evaluated at the current position

where is a tunable parameter. To capture the effect of having intermittent measurements and malicious spoofing attacks in the algorithm, we re-write the nominal closed-loop system as the following mode-dependent dynamical system:

| (4a) | ||||||

| (4b) | ||||||

| (4c) | ||||||

where is a logic mode that is kept constant during the evolution of (4). In this case, the map is

| (4d) | ||||||

where describes the nominal operation mode, describes the mode in which no measurement of is available to the vehicle, and corresponds to the mode in which the control algorithm is under spoofing that is able to reverse the sign of the measurements of . In this way, the dynamics of the vehicle switch in real-time between the three operating modes as the robot simultaneously seeks the target . Note that, at every switching instant, the states remain constant, and jumps according to , i.e., the system is allowed to switch from its current mode to any of the other two modes. Figure 1 illustrates this switching behavior. To study the stability properties of the system, we consider the coordinate transformation:

which is defined via a rotation matrix and therefore is invertible for all . Using , the transformation leads to the new system

| (5a) | ||||

| (5b) | ||||

with jumps and . In (5), the vector is now constant, but still restricted to the compact set . For this system, we can investigate the stability properties of the state with respect to the set . To do this, we model the closed-loop system as a HDS of the form (1), with switching signal being generated by an extended auxiliary hybrid automaton, with states , and set-valued dynamics

| (6d) | |||

| (6h) | |||

where , , is the classic indicator function, the set of logic modes has been partitioned as , with and , and the sets are given by

| (7) |

for some constants and . In this system, the states and can be seen as timers used to coordinate when the system jumps.

In particular, every hybrid arc generated by the hybrid automaton (6) satisfies the following two properties for any two times that are in its domain [21, Ex. 2.15],[40, Lemma 7]:

| (8a) | ||||

| (8b) | ||||

where is the total number of jumps during the time interval , and is the total activation time during , and during flows of the system, of the modes in the set . In fact, condition (8a) imposes an average dwell-time (ADT) constraint [23] on the switches of , while condition (8b) imposes an average activation time (AAT) constraint [54] on the time spent in modes 1 and 2. These conditions are parameterized by the tunable constants and , respectively. Note that (8a) immediately rules out Zeno behavior. By modeling the switching signals as solutions of the hybrid automaton (6), we can study the closed-loop system without pre-specifying the switching times of , which are generally unknown. Instead, we consider any possible solution generated by the interconnection (5)-(7).
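As an illustration of how constraints of the type (8a) and (8b) restrict a switching signal, the following Python sketch checks them on a given signal. It assumes the affine forms that are common in the ADT/AAT literature, namely "number of jumps in a window is at most rate × window length + offset" and "activation time in a window is at most rate × window length + offset". The parameter names `rate` and `offset`, and the data used below, are hypothetical and are not taken from (6)-(8).

```python
def jump_count_ok(jump_times, rate, offset):
    """ADT-type bound: #jumps in [s, t] <= rate * (t - s) + offset for all windows.

    For a fixed set of jumps inside the window, the bound is tightest when the
    window starts and ends exactly at jump instants, so only those are checked.
    """
    ts = sorted(jump_times)
    return all((k - i + 1) <= rate * (ts[k] - ts[i]) + offset
               for i in range(len(ts)) for k in range(i, len(ts)))


def activation_time_ok(unstable_intervals, rate, offset):
    """AAT-type bound: time spent in the unstable modes within [s, t] is at most
    rate * (t - s) + offset.

    unstable_intervals: disjoint (start, end) pairs during which an unstable mode
    is active. Only windows that start and end at interval endpoints are checked.
    """
    iv = sorted(unstable_intervals)
    for i in range(len(iv)):
        active = 0.0
        for k in range(i, len(iv)):
            active += iv[k][1] - iv[k][0]
            if active > rate * (iv[k][1] - iv[i][0]) + offset:
                return False
    return True


# One switch per unit of time, with short excursions into the unstable modes.
slow_enough = jump_count_ok([1.0, 2.0, 3.0, 4.0], rate=1.0, offset=1.0)
too_fast = jump_count_ok([1.0, 2.0, 3.0, 4.0], rate=0.5, offset=1.0)
short_attacks = activation_time_ok([(1.0, 1.2), (3.0, 3.2)], rate=0.2, offset=0.2)
long_attack = activation_time_ok([(1.0, 2.0)], rate=0.2, offset=0.2)
print(slow_enough, too_fast, short_attacks, long_attack)
```

A check of this kind applies only to a recorded signal. In the analysis above, the constraints are instead enforced structurally, for every solution, by the timers of the hybrid automaton (6).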

While the -dynamics in (5) are periodic with high-frequency oscillations, existing averaging tools in the literature [49] do not capture the stabilizing effect of the control law (4d). This can be observed by introducing a new time scale , which leads to the following dynamics (in the s-time scale):

| (9) |

Using (4d) and the property , it is easy to see that for any constant vector the average of the vector field (9) along the fast varying state is equal to zero. Since the first-order average vector field is zero, which is only marginally stable, no stability conclusions can be obtained from a direct application of first-order averaging theory. Indeed, in this case, the stabilizing effects are dictated by higher-order terms that are neglected from the first-order average. Similar obstacles emerge when using first-order averaging theory in the analysis of Lie-bracket-based extremum seeking controllers [16] and in certain vibrational controllers [44]. On the other hand, as we will show in the next section, by using second-order averaging theory for HDS, we can obtain the following second-order average hybrid dynamics of (5)-(7), with states :

In turn, under the smoothness and strong convexity assumption on , this HDS renders UGAS the compact set for and sufficiently small (see Section 5.1.2). By using Theorem 2 in Section 4, we will be able to conclude that, for any compact set and any , there exists such that for all every trajectory of (5) that starts in , satisfies

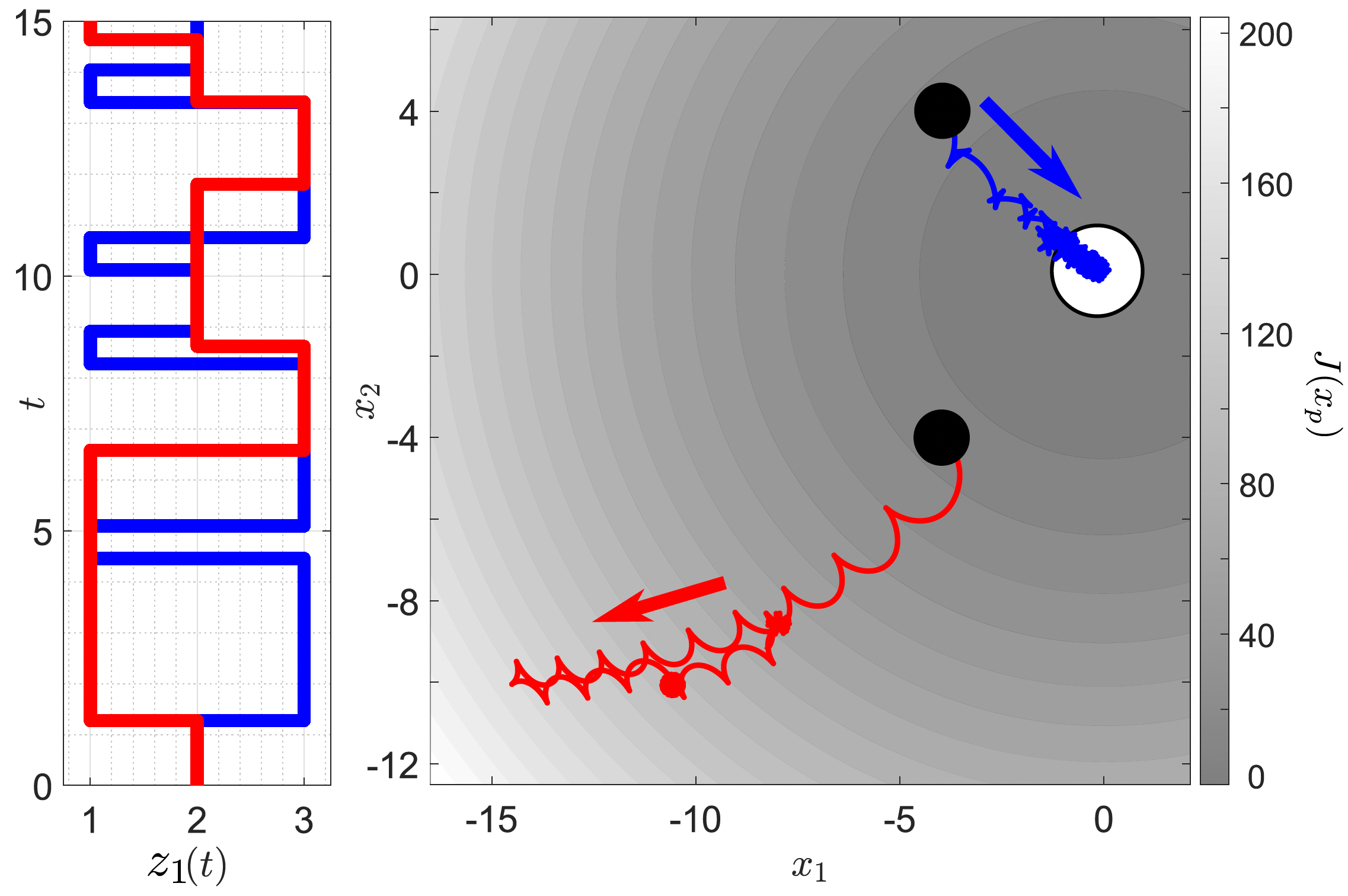

for all in the domain of the solution. A more general result will be stated later in Section 5.1 for a class of hybrid Lie-bracket averaging-based systems. In the meantime, we illustrate in Figure 2 (in blue color) the convergence properties of the vehicle under the controller (4d). The inset also shows the evolution in time of the switching signal , indicating which mode is active at each time. As observed, the vehicle operating under spoofing and intermittent sensing () is able to successfully complete the source-seeking mission. We also show in red color an unstable trajectory of the vehicle under the same controller and a more frequent spoofing attack () that does not satisfy (8b) (i.e., with a larger value of in (8b)), also shown in the inset. For both scenarios depicted in Figure 2, the switching signals are generated as solutions of the hybrid automaton (6).

Remark 1

The results and tools discussed in this section (extended in Section 5.1) can help practitioners evaluate the resilience of non-holonomic vehicle seeking dynamics against intermittent measurements and spoofing attacks. By characterizing the parameters and that ensure stable (practical) source seeking, practitioners can design effective detection and rejection mechanisms to ensure the control system operates in its nominal mode “sufficiently often”.

The motivational problem in this section was modeled using a high-amplitude, high-frequency oscillatory system, whose averaged system corresponds to a switching system. However, the results presented in this paper are applicable to a broader class of hybrid systems, with switching systems representing just one specific case.

4 Second-Order Averaging for a Class of Hybrid Dynamical Systems

We consider a subclass of HDS (1), given by

| (10a) | |||||

| (10b) | |||||

where is a small parameter, , , , , for , and . The set-valued mapping is given by

| (11) |

where is a continuous function of the form

| (12) |

The functions , the sets , and the set-valued mappings , are application-dependent. Their regularity properties are characterized by the following assumption:

Assumption 2

and are closed. is OSC, LB, and convex-valued relative to ; is OSC and LB relative to ; for all the set is nonempty; and for all the set is nonempty.

For the function defined in (12), we require stronger regularity conditions in terms of smoothness and periodicity of the mappings in a small inflation of (cf. Eq. (2a)).

Assumption 3

There exists and , for , such that for all the following holds:

(a) Smoothness: For , the functions satisfy that: with respect to , with respect to , and is continuous in .

(b) Periodicity: For , satisfies

for all .

(c) Zero-Average of in :

for all .

Remark 2

The periodicity and smoothness assumptions on are standard [41, Ch. 2]. Periodicity is particularly important in higher-order averaging as it simplifies the computations of the near-identity transformations required to establish the closeness-of-solutions property [41, Section 2.9]. Without the periodicity assumption, rigorous proofs become significantly more complex. For example, in the general time-varying case, higher-order averaging necessitates the use of general-order functions of the small parameter rather than polynomials. This increases the complexity of the asymptotic approximations that can be achieved [41, Theorem 4.5.4] without providing additional practical benefits, since in the applications of interest, the periodicity assumption holds by design.

To study the stability properties of the HDS (10), we first characterize its second-order average HDS. To define this system, for each we define the following auxiliary function:

| (13a) | |||

as well as the Lie bracket between (13a) and :

| (13b) | |||

where we omitted the function arguments to simplify notation. Using (13), we introduce the second-order average mapping of , denoted , given by:

| (14) |

where . The following definition leverages .

Definition 6

Remark 3

It is worth mentioning that if satisfies

for some functions and some , then the Lie bracket in (13b) reduces to:

where is the time-varying function:

and, consequently, the average of reduces to:

where . However, in contrast to the standard Lie-bracket averaging framework [16], here we do not necessarily assume that admits such a decomposition, which enables the study of more general vector fields .
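The mechanism discussed in Remark 3 can be observed numerically in the simplest setting of the standard Lie-bracket framework [16]. The Python sketch below is an illustration under stated assumptions and does not use the system (10): it takes the scalar dynamics $\dot{x}=\sqrt{\omega}\,\big(\alpha\cos(\omega t)-k\,J(x)\sin(\omega t)\big)$ with a hypothetical cost $J(x)=(x-1)^2$, for which the first-order average vanishes and the Lie-bracket (second-order) average is $\dot{z}=-\tfrac{k\alpha}{2}J'(z)$. The gains, frequency, horizon, and step size are arbitrary illustrative values.

```python
import math


def cost(x):
    """Hypothetical cost with minimizer at x = 1; only its values are used in feedback."""
    return (x - 1.0) ** 2


def es_field(t, x, omega, k=1.0, alpha=1.0):
    """Scalar Lie-bracket extremum-seeking vector field (sign chosen for minimization)."""
    return math.sqrt(omega) * (alpha * math.cos(omega * t)
                               - k * cost(x) * math.sin(omega * t))


def rk4(f, x0, t_end, dt):
    """Classical fixed-step fourth-order Runge-Kutta integration from t = 0 to t_end."""
    t, x = 0.0, x0
    for _ in range(int(round(t_end / dt))):
        k1 = f(t, x)
        k2 = f(t + dt / 2, x + dt / 2 * k1)
        k3 = f(t + dt / 2, x + dt / 2 * k2)
        k4 = f(t + dt, x + dt * k3)
        x += dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        t += dt
    return x


def first_order_average(x, omega, n=1000):
    """Average of the vector field over one period with the state x frozen."""
    period = 2 * math.pi / omega
    return sum(es_field(i * period / n, x, omega) for i in range(n)) / n


omega = 400.0
avg1 = first_order_average(0.3, omega)                    # vanishes up to round-off
x_T = rk4(lambda t, x: es_field(t, x, omega), 0.0, 5.0, 1e-4)
z_T = 1.0 - math.exp(-5.0)   # solution of z' = -(z - 1), z(0) = 0, i.e. k = alpha = 1
print(avg1, x_T, z_T)
```

With these values the oscillatory trajectory ends in a neighborhood of the minimizer whose size is of the order of $1/\sqrt{\omega}$, even though the frozen-state average of the vector field carries no information about $J'$.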

The next lemma follows directly from Assumption 2 and the continuity properties of , , cf. Assumption 3.

Lemma 1

The following theorem is the first main result of this paper. It uses the notion of $(T,\varepsilon)$-closeness, introduced in Definition 3. All the proofs are deferred to Section 6.

Theorem 1

Suppose that Assumptions 2-3 hold. Let be a compact set such that every solution of the HDS (15) with initial conditions in has no finite escape times. Then, for each and each , there exists such that for each every solution of (10) starting in is $(T,\varepsilon)$-close to some solution of (15) starting in .

Remark 4

The result of Theorem 1 establishes a novel closeness-of-solutions property (in the sense of Definition 3) between the high-frequency, high-amplitude, periodic-in-the-flows HDS (10) and the second-order average HDS defined in (15). Unlike Lie-bracket averaging results for Lipschitz ODEs [16, Thm. 1], the result does not assert closeness between all solutions of (10) and , but rather that for every solution of the original HDS (10) there exists a solution of the second-order average dynamics that is $(T,\varepsilon)$-close. Thus, Theorem 1 effectively extends existing first-order averaging results for HDS, such as [49, Thm. 1], to the second-order case.

As a result of Theorem 1, if all trajectories of satisfy suitable convergence bounds, then the trajectories of the original HDS (10) will approximately inherit the same bounds on compact sets and time domains. To extend these bounds to potentially unbounded hybrid time domains, we use the following stability definition [49, Def. 6].

Definition 7

For the HDS (10), a compact set is said to be Semi-Globally Practically Asymptotically Stable (SGPAS) with respect to the basin of attraction as if, for each proper indicator function on there exists such that, for each compact set and for each , there exists such that for all and for all solutions of (10) with we have

| (16) |

for all .

As discussed in Definition 5, if covers the whole space, then we can also take in (16). If, additionally, is compact, then (16) describes a Global Practical Asymptotic Stability (GPAS) property.

With Definition 7 at hand, we can now state the second main result of this paper, which establishes a novel second-order averaging result for HDS:

Theorem 2

The discussion preceding Theorem 2 directly implies the following corollary, which we will leverage in Section 5 for the study of globally practically stable Lie-bracket hybrid ES systems on smooth boundaryless compact manifolds.

Corollary 1

Remark 5

In Theorem 2, it is assumed that renders UAS the set . However, in certain cases might depend on additional tunable parameters , and might only be SGPAS as , see [40, 39, 28]. In this case, the SGPAS result of Theorem 2 still applies as , where the tunable parameters are now sequentially tuned, starting with . This observation follows directly by modifying the proof of Theorem 2 as in [39, Thm. 7]. An example will be presented in Section 5.1 in the context of slow switching systems.

5 Applications to Hybrid Model-Free Control and Optimization

We present three different applications to illustrate the results of Section 4 in the context of model-free optimization and control. In all our examples, we will consider a HDS of the form (10), where is allowed to switch between a finite number of vector fields, some of which might not necessarily have a stabilizing second-order average HDS. Specifically, we consider systems where the main state evolves in an application-dependent closed set , under the following dynamics:

| (18) |

where is a logic mode allowed to switch between values in the set , where . The switching behavior can be either time-triggered (modeled via the hybrid automaton (6)) or state-triggered (modeled via a hysteresis-based mechanism incorporated into the sets and ). In both cases, Zeno behavior will be precluded by design. Since for each the functions will be designed to satisfy Assumption 3, the second-order average mapping can be directly computed for each mode using (14):

| (19) | ||||

To guarantee that is well-defined, we let depend on a vector of frequencies , for some , which satisfies the following:

Assumption 4

The frequencies satisfy and , .

The conditions of Assumption 4 are standard in vibrational control [44] and extremum seeking [6, 48, 40, 47, 22].

5.1 Switching Model-Free Optimization and Stabilization

In our first two applications, the set of logic modes satisfies , where , indicates “stable” modes, and indicates “unstable” modes. The logic mode models a switching signal generated by the hybrid automaton (6) studied in Section 3, with state , which induces the ADT constraint (8a) and the AAT constraint (8b) on the switching, effectively ruling out Zeno behavior. Using the data of system (6), the closed-loop switched system has the form of (10), with sets

| (20) |

flow map , given by (18), , and jump map:

| (21) |

By construction, Assumption 2 is satisfied and the corresponding HDS can be directly obtained using (15):

| (22a) | ||||

| (22b) | ||||

where . We consider two different applications where this model is applicable.

5.1.1 Synchronization of Oscillators with Switching Control Directions and Graphs

Consider a collection of oscillators evolving on the -torus , which is an embedded (and compact) submanifold in the Euclidean space. Let be the state of the oscillator, for , and denote its input by . Moreover, let , where , , such that for all . The dynamics of the overall network of oscillators are given by

| (23) |

where is the unknown vector of control directions. Let , and note that is invariant under the dynamics in (23). The connectivity between oscillators is dictated by the network topology which, at any given instant, is defined by a unique element from a collection of graphs , , where is the set of nodes representing the oscillators, and is the edge set representing the interconnections. To simplify our presentation, we make the following assumption.

Assumption 5

For each the graph is connected and undirected.

Our goal is to synchronize the oscillators under switching communication topologies and unknown control directions . To solve this problem, we consider the feedback law

| (24) |

where , and is a tuning parameter. In (24), the set indicates the neighbors of the agent according to the topology of the graph . We consider a model where both the network topology and the control directions are allowed to switch in (23) and (24). To capture this behavior, let , and fix a choice of all possible bijections . In this way, for each there is a particular control direction and graph acting in (23) and (24). To study the overall dynamics of the system, we define the coordinates

where , and , which lead to the following polar representation of (23):

| (25) |

where the feedback law becomes:

| (26) |

with distance function . Using (19), the second-order average mapping is given by

| (27) |

where , , and

which is the well-known Kuramoto model over the graph [18, 17]. The subset that characterizes synchronization has the polar coordinate representation Now observe that the vector field in (23) under the feedback law (24), or equivalently the vector field in (25) under the feedback law (26), has the same structure as the vector fields in (18) with trivial dependence on the intermediate timescale . Moreover, using (6) with to generate the switching signal , the closed-loop system has the form (10) with and given by (20), and given by (21). For this system, we study stability of the set using two tunable parameters (c.f. Remark 5). The following proposition establishes a novel “model-free” synchronization result for oscillators under switching graphs and control directions via averaging.

Proposition 1

Example 1

To illustrate the above result, we consider two scenarios. In the first scenario, is static and the control directions are switching. In this case, we consider two oscillators (i.e., ), and . We let , , and we consider the bijection that satisfies , , , . For the numerical simulation results, shown in Fig. 4, we used , , , , and a switching mode generated by (6) with , , and arbitrary and . As observed in the figure, (local) practical synchronization is achieved.

In the second scenario, we incorporate switching graphs . We let , , , and we consider the set , and the collection of graphs shown in Fig. 3. For the numerical simulation results, shown in Fig. 5, we used , , , , , , and a switching mode generated by (6) with , , and arbitrary and . As shown in Fig. 5, (local) practical synchronization is also achieved despite simultaneous switches happening in the network topology and the control directions.
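The averaged behavior targeted in both scenarios can be illustrated with a minimal sketch, which is not the authors' simulation code (that code is available at [2]). It integrates a Kuramoto model whose graph switches periodically between two connected, undirected graphs. The graphs `PATH` and `STAR`, the unit coupling gain, the dwell time, the initial phases, and all function names are assumptions made only for illustration; they are not the graphs of Fig. 3 or the parameters used for Figs. 4 and 5.

```python
import math

def kuramoto_rhs(theta, edges, gain=1.0):
    """Right-hand side of a Kuramoto model on an undirected graph."""
    dtheta = [0.0] * len(theta)
    for i, j in edges:
        coupling = gain * math.sin(theta[j] - theta[i])
        dtheta[i] += coupling  # node i is pulled towards node j
        dtheta[j] -= coupling  # and node j towards node i
    return dtheta

def simulate(theta0, edge_sets, dwell=0.5, t_end=20.0, dt=1e-3):
    """Forward-Euler integration with periodic switching between graphs."""
    theta = list(theta0)
    for k in range(int(round(t_end / dt))):
        t = k * dt
        # Hypothetical switching rule: cycle through the graphs every `dwell` seconds.
        edges = edge_sets[int(t / dwell) % len(edge_sets)]
        rhs = kuramoto_rhs(theta, edges)
        theta = [th + dt * r for th, r in zip(theta, rhs)]
    return theta

def order_parameter(theta):
    """Magnitude of the mean phasor; equals 1 when all phases coincide."""
    n = len(theta)
    re = sum(math.cos(t) for t in theta) / n
    im = sum(math.sin(t) for t in theta) / n
    return math.hypot(re, im)

# Two connected, undirected graphs on four nodes (illustrative assumption).
PATH = [(0, 1), (1, 2), (2, 3)]
STAR = [(0, 1), (0, 2), (0, 3)]

theta_final = simulate([0.0, 0.8, -0.6, 1.2], [PATH, STAR])
r_final = order_parameter(theta_final)
```

Because the initial phases lie in an arc shorter than half a circle and every graph is connected, the phase spread shrinks under each graph, so `r_final` should be close to one. The sketch covers only the averaged dynamics; it does not include the dithers or the unknown control directions of the original system.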

5.1.2 Lie-Bracket Extremum Seeking under Intermittence and Spoofing

Consider the following control-affine system

| (28) |

where the goal is to steer the state towards the set of solutions of the optimization problem

| (29) |

where is the parameter search space, and is a continuously differentiable cost function whose mathematical form is unknown, but which is available via measurements or evaluations. Problem (29) describes a standard extremum seeking (ES) problem [16, 6, 40, 43], where exact knowledge of the functions is in general not required. However, unlike traditional ES, we aim to achieve convergence to the solutions of (29) despite sporadic failures in accessing measurements of and intermittent cost measurements affected by malicious external spoofing designed to destabilize the system, as in the motivational example of Section 3, see also [30, 19].
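For orientation, the following sketch simulates the classical, non-hybrid Lie-bracket ES scheme of [16] for a scalar integrator, without intermittence or spoofing. It is not the mode-dependent law (30). The quadratic cost, the gains `c` and `alpha`, the frequency `omega`, and all function names are assumptions chosen for illustration. For this scheme, the Lie-bracket average is the gradient flow scaled by `c * alpha / 2`, so the trajectory should settle in a neighborhood of the minimizer whose size shrinks as `omega` grows.

```python
import math

def cost(x):
    """Hypothetical cost with minimizer x* = 1; the controller only uses its values."""
    return (x - 1.0) ** 2

def es_rhs(t, x, omega, c=1.0, alpha=1.0):
    """Lie-bracket ES vector field for a scalar integrator (scheme of [16])."""
    s = math.sqrt(omega)
    return c * cost(x) * s * math.cos(omega * t) + alpha * s * math.sin(omega * t)

def simulate_es(x0, omega=400.0, t_end=10.0, dt=1e-4):
    """Classical fourth-order Runge-Kutta integration of the ES dynamics."""
    x, t = x0, 0.0
    for _ in range(int(round(t_end / dt))):
        k1 = es_rhs(t, x, omega)
        k2 = es_rhs(t + dt / 2, x + dt * k1 / 2, omega)
        k3 = es_rhs(t + dt / 2, x + dt * k2 / 2, omega)
        k4 = es_rhs(t + dt, x + dt * k3, omega)
        x += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += dt
    return x

# Start away from the minimizer; the gradient of the cost is never evaluated.
x_final = simulate_es(x0=3.0)
```

The residual oscillation around the minimizer has amplitude of order `alpha / sqrt(omega)`, which is the "practical" part of the stability notion used throughout this section.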

To study this scenario, we consider the following mode-dependent Lie-bracket-based control law

| (30) |

where is a tuning parameter and is the logic mode generated by the hybrid automaton (6). Note that (4) is a particular case of (28). Using equation (19) to study system (28) under the feedback law (30), we obtain:

where the matrix-valued mapping is given by

We make the following regularity assumptions on and :

Assumption 6

The following holds:

1. There exists and such that

2. The cost function is , strongly convex with strong-convexity parameter , and minimizer , and there exists such that , for all .

Remark 6

The following result formalizes the discussions of Section 3, and establishes a novel resilience result for Lie-bracket ES algorithms under spoofing and intermittent feedback:

5.2 Switched Global Extremum Seeking on Smooth Compact Manifolds

We now leverage the tools presented in this paper to solve global ES problems of the form (29) on sets that describe a class of smooth, boundaryless, compact manifolds. This application illustrates the use of Corollary 1. As thoroughly discussed in the literature [35, 11, 37, 10], for such problems, no smooth algorithm with global stability bounds of the form (17) can be employed as the average system due to inherent topological obstructions. Here, we show how to remove this obstruction by using adaptive switching between multiple ES controllers in problems that satisfy the following assumption:

Assumption 7

The following holds:

(a) The set is a smooth, embedded, connected, and compact submanifold without boundary, endowed with a Riemannian structure by the metric induced by the metric of the ambient Euclidean space .

(b) The function is smooth on an open set containing , has a unique minimizer satisfying , , and there exists a known number such that for all we have , where is the orthonormal projection (defined by the metric ) of the gradient onto the tangent space , .

While Assumption 7 suffices to guarantee the local, or at best, almost-global uniform practical asymptotic stability of the minimizer for a smooth Lie-bracket-based extremum seeking controller on [18, 17], its (smooth) average system will necessarily have more than one critical point in due to the topology of the manifold [35, 34]. To remove this obstruction and achieve uniform global ES, we consider a hybrid algorithm that implements state-triggered switching between certain functions introduced in the following definition:

Definition 8

Let , and . A family of functions is said to be a -gap synergistic family of functions subordinate to on if:

1. , is smooth, its unique minimizer on is , and has a finite number of critical points in , i.e. , where .

2. , , i.e. the family agrees with on the neighborhood of the minimizer .

3. such that

In words, the constructions in Definition 8 involve families of diffeomorphisms that “warp the manifold in sufficiently many ways so as to distinguish the minimizer from other critical points of the function ”. The reader is referred to [46] and [37] for the full details (and examples) of these constructions, which are leveraged in [37] for the design of algorithms using classic averaging. We stress that constructing these diffeomorphisms does not necessarily require exact knowledge of the mathematical form of (although knowledge of is required), but only a qualitative description of that allows one to identify the threshold below which the only critical point corresponds to . Such knowledge is reasonable for most practical applications where the optimal value is known to lie within a certain bounded region, or to be equal to a certain value. A similar assumption was considered in [52]. Under such qualitative knowledge, our goal is to attain global ES from all initial conditions, ensuring that (29) is solved regardless of where the algorithm’s trajectories are initialized or land after a sudden disturbance.

To design hybrid Lie-bracket-based algorithms with global stability properties, we consider dynamics of the form (28), where, for simplicity we allow the functions to be independent of . We formalize this in the following assumption:

Assumption 8

The following holds for problem (29):

(a) There exists a family of smooth vector fields on , for which the operator defined by

is such that

(b) There exists a -gap synergistic family of functions subordinate to on , which are available for measurement.

Assumption 8 is natural in the setting we consider herein. Indeed, item (a) in Assumption 8 is a standard assumption in the context of Lie-bracket extremum seeking on manifolds, see e.g. [17, Assumption 1]. On the other hand, item (b) is a standard assumption in the context of robust global stabilization problems on manifolds via hybrid control, see e.g. [37, 35, 12].

To solve (29), we consider the HDS (10), with given by (28), and the following hybrid feedback law

where is a tuning gain, is a logic state, the sets and are given by

and the flow map in (11) uses . Finally, the jump map in (10b) is defined as

Using equation (19) with , we obtain:

| (31) |
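The state-triggered switching between the functions of the synergistic family can be sketched as follows. This is a minimal illustration that assumes the hysteresis rule commonly used in synergistic hybrid feedback [37, 35, 12]: flow while the current function is within a gap of the smallest value in the family, and otherwise jump to a minimizing index. The exact flow set, jump set, and jump map of this section should be taken from the original equations; the names `gap`, `in_jump_set`, `jump`, and the parameter `delta` are hypothetical.

```python
def gap(values, q):
    """Current function value minus the smallest value in the family."""
    return values[q] - min(values)

def in_jump_set(values, q, delta):
    """Assumed jump condition: the gap has reached the threshold delta."""
    return gap(values, q) >= delta

def jump(values, q, delta):
    """Return the updated logic state; q is unchanged outside the jump set."""
    if not in_jump_set(values, q, delta):
        return q
    # Assumed jump rule: switch to an index attaining the minimum value.
    return min(range(len(values)), key=lambda p: values[p])

# values[p] stands for the measured value of the p-th function at the current state.
measured = [0.9, 0.2, 0.5]
q_next = jump(measured, q=0, delta=0.3)   # gap is 0.7, so a jump occurs
q_stay = jump([0.4, 0.2, 0.5], q=0, delta=0.3)  # gap is 0.2, so no jump
```

Under this rule, the value of the active function drops by at least `delta` at every jump, which is the kind of decrease across jumps used in Lyapunov arguments for synergistic controllers.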

We can now state the following global result for Lie-bracket ES systems on smooth compact manifolds:

Proposition 3

Example 2

To illustrate the result of Proposition 3, let be the unit sphere, and consider the cost . We define the vector fields for by , where is the unit vector in with zero elements except the -element which is set to . The function has two critical points where vanishes: a critical point that corresponds to the minimum value , and a critical point that corresponds to the maximum value . Let , and define the family of functions by , where the maps are defined as follows:

and where , , is the matrix exponential where is the group of real invertible -matrices, and is the skew-symmetric matrix associated with the unit vector . It can be verified, see [37], that, when , the family is a -gap synergistic family of functions subordinate to on for any . We use to emulate the situation in which no switching takes place since, when , , for all . To generate the numerical results, we used , , , and , which completely defines the HDS (10). We simulate two scenarios. In the first scenario, a small adversarial input, tailored to (locally) stabilize the critical point for an ES algorithm without switching, is added to the vector field , i.e., we use , where . For details on the construction of , we refer the reader to the appendix in the extended manuscript [4]. The simulation results of the first scenario for and , starting from the initial condition , which is near , are shown in Fig. 6. As observed, without switching (), the small disturbance effectively traps the non-hybrid ES algorithm in the vicinity of the problematic critical point , despite the fact that and that the system is persistently perturbed by the dithers. On the other hand, as predicted by Proposition 3, the hybrid ES algorithm () renders the set GPAS as .

In the second scenario, a similarly constructed adversarial input, tailored to (locally) stabilize the problematic critical points of the functions and , in the absence of switching, is added to . The simulation results for , with and starting from , which coincides with the problematic critical point of , are shown in Fig. 7. It can be observed that a state jump from to is triggered, allowing the ES algorithm to escape the problematic critical point of . The detailed construction of the adversarial signals can be found in the Appendix of the Supplemental Material. All code used to generate the simulations in this paper is available at [2].
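The warping maps in this example are built from matrix exponentials of skew-symmetric matrices, which are rotations and therefore map the unit sphere onto itself. The sketch below evaluates such an exponential with Rodrigues' formula and applies it to a point on the sphere. The fixed rotation angle and the function names are assumptions for illustration; the state-dependent maps of this example are not reproduced here.

```python
import math

def skew(e):
    """Skew-symmetric matrix K such that K v equals the cross product e x v."""
    x, y, z = e
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]

def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def rotation(e, angle):
    """exp(angle * skew(e)) for a unit vector e, via Rodrigues' formula."""
    k = skew(e)
    k2 = matmul(k, k)
    s, c = math.sin(angle), 1.0 - math.cos(angle)
    return [[(1.0 if i == j else 0.0) + s * k[i][j] + c * k2[i][j]
             for j in range(3)] for i in range(3)]

def apply(r, v):
    """Matrix-vector product."""
    return [sum(r[i][j] * v[j] for j in range(3)) for i in range(3)]

e3 = [0.0, 0.0, 1.0]                 # rotation axis (unit vector)
R = rotation(e3, math.pi / 2)        # quarter turn about e3 (illustrative angle)
image = apply(R, [1.0, 0.0, 0.0])    # image of a point on the unit sphere
norm_image = math.sqrt(sum(c * c for c in image))
```

The rotation leaves its axis fixed and preserves norms, so the image of any point of the sphere stays on the sphere. This is the property that lets such maps be composed with a cost defined on the sphere.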

6 Analysis and Proofs

In this section, we present the proofs of our main results.

6.1 Proof of Theorem 1

We follow similar ideas as in standard averaging theorems [27, Thm. 10.4], [49, Thm.1], but using a recursive variable transformation applied to the analysis of the HDS.

Fix , and , and let Assumption LABEL:asmp:A1 generate . For any , consider the -inflation of , given by

| (32) |

where the data is constructed as in Definition 2 from the data of in (6). Fix such that for any solution to the system (32) starting in there exists a solution to system (15) starting in such that the two solutions are -close. Such exists due to [21, Proposition 6.34]. Without loss of generality, we may take and .

Let be the set of maximal solutions to (15) starting in , and define the following sets:

| (33) |

where is compact by [49, Proposition 2]. Consider the functions defined as follows:

| (34a) | ||||

| (34b) | ||||

where for simplicity we omitted the arguments in the right-hand side of (34). Using integration by parts and item (c) in Assumption LABEL:asmp:A1, we have , for all . Let be defined as:

| (35) |

which satisfies .

Below, Lemmas 2-4 follow directly from the continuity and periodicity properties of the respective functions. For completeness, the proofs can be found in the Appendix of the supplemental material, or in the Extended Manuscript [4].

Lemma 2

Let be a compact set. Then, there exist such that and , , for all and all .

Next, for each , we define

as well as the auxiliary functions

| (36a) | ||||

| (36b) | ||||

| (36c) | ||||

The functions , , will play an important role in our analysis. We state some of their regularity properties.

Lemma 3

Let be a compact set. Then, there exist such that for :

| (37a) | |||

| (37b) | |||

| (37c) | |||

, and .

The following technical lemma will be key for our results. The proof of the first items leverages the Lipschitz extension lemma of [49, Lemma 2].

Lemma 4

Let be a compact set, and for let be given by (36), and be given by Lemma 3. Consider the closed sets

| (38) |

Then, there exist functions , such that the following holds:

(a) For all , we have and .

(b) For all , we have , and .

(c) For all and :

(d) For all :

| (39) |

| (40) |

(e) There exists such that for all , , where

| (41) |

(f) There exists such that for all , , where

| (42) |

Continuing with the proof of Theorem 1, let , , , , and , which satisfies due to the definition of . Let and consider the restricted HDS:

| (43d) | ||||

| (43h) | ||||

with , , and flow set and jump set defined in (38), with given by (33). We divide the rest of the proof into four main steps:

Step 1: Construction and Properties of Auxiliary Functions: Using the compact set and the functions given by (36), let Lemmas 2-3 generate . Using these constants, the set , and the functions (), let Lemma 4 generate the functions . Then, for each and each , we define

| (44) |

where

| (49) |

Using the inequalities in item (b) of Lemma 4, we have

| (50) |

for all . Similarly, due to items (b)-(c) of Lemma 4, the definition of in (44), and inequality (50),

| (51) |

for all . Also, note that due to item (a) of Lemma 4, the functions and satisfy and for all , with given by (37).

Step 2: Construction of First Auxiliary Solution: Let be a solution to (43) with . By construction, we have

| (52) |

Using (37a), item (b) of Lemma 4, (50), and the choice of

| (53) |

For each , let be defined via (44). It follows that is a hybrid arc, and due to (52) and (53) it satisfies

| (54) |

Thus, using (37a), item (b) of Lemma 4, and (6.1), we get

| (55) |

for all .

Next, for each such that , we have that:

Using (53) and the construction in (2b), we conclude that . Thus, using (43h) and (53), we obtain:

| (56) |

Similarly, from (44), for all such that has a nonempty interior, we have:

| (57) |

for all . Therefore, using the construction of the inflated flow set (2a), and the bound (53), we have that . Since is Lipschitz continuous due to Lemma 4, is locally absolutely continuous, and it satisfies

| (58) |

for almost all . To compute (58), note that

where we used (11), the structure of (12), and (43). Therefore, using the definition of , we have that (58) can be written as

for almost all . Using the last containment in (57), and items (a), (d), (e), and (f) of Lemma 4, we obtain

for all . Thus, and

| (61) |

for almost all , where

Finally, using the equality in (57), we can write (61) as:

| (64) | ||||

| (65) |

which holds for almost all .

Step 3: Construction of Second Auxiliary Solution: Using the solution to the restricted HDS (43) considered in Step 2, as well as the hybrid arc , we now define a new auxiliary solution. In particular, for each , using (44) we define:

| (66) |

By construction, is a hybrid arc. Since, by combining equation (44) and inequalities (53) and (55) we have that

| (67) |

for all , and for all , it follows that the hybrid arc (66) satisfies .

Now, for each such that it satisfies , where we used (44) and (66). Therefore, using the construction of the inflated jump set (2b), we conclude that , and using (55)-(56) we have:

where we also used (66) and the definition of the inflated jump map in (2d). On the other hand, for each such that has a nonempty interior, we must have:

| (68) |

and therefore, due to (55), the inclusion in (68), and the definition of inflated flow set (2a):

| (69) |

Since is Lipschitz continuous in all arguments due to Lemma 4, it follows that is locally absolutely continuous and satisfies:

Using the definition of in (49), as well as (69), item (a) of Lemma 4, and (36c), we obtain:

Therefore, using (65) we have that for almost all :

| (74) |

where the last term can be written in compact form as

Using (66), (68), (69) and Lemma 2, we have:

| (75a) | ||||

| (75b) | ||||

for all and all , where we omitted the time arguments to simplify notation. Using , we have that

where the inclusion follows from (65), and are defined in (39), (40), (41), and (42). Since is globally Lipschitz continuous by Lemma 4, it follows that . Using (68), (69), items (c), (e) and (f) of Lemma 4, as well as (75b), we obtain for all and all :

| (76) |

where , the constant comes from the proof of items (e)-(f) in Lemma 4, and where we used the fact that . Therefore, using the above bounds, as well as (53) to bound (75a), we conclude that for all and all : , where , and we used the fact that and the choice of . Using (55), (66), and (74), we conclude that for almost all :

where is the inflated average flow map in (32). It follows that is -close to some solution of the average system (15), with . Using (44), (66), and the fact that (67) also holds with due to the fact that , we conclude that is -close to .

Step 4: Removing from the HDS (43). We now study the properties of the solutions to the unrestricted system (10) from , based on the properties of the solutions of the restricted system (43) initialized also in . Let be a solution of (10) starting in . We consider two scenarios:

(a) For all such that we have . Then, it follows that is -close to .

(b) There exists such that for all such that and either:

(1) there exists a sequence satisfying such that and for each , or else

(2) and .

Then, we must have that the solution agrees with a solution to (43) up to time , which implies that . But, since , and due to the construction of in (33), we have that is contained in the interior of , so neither of the above two cases can occur.

Since item (b) cannot occur, we obtain the desired result.

6.2 Proof of Theorem 2

The proof proceeds in a similar manner to the proof of Theorem 1 and the proof of [49, Theorem 2].

Let be a proper indicator for on , and let such that each solution of the averaged HDS (15) starting in satisfies

Let be compact, and let

| (77) | ||||

| (78) |

By construction, the continuity of , and the OSC property of , the set is compact and satisfies . Let and observe that, due to the robustness properties of well-posed HDS [21, Lemma 7.20], there exists such that all solutions to the inflated averaged HDS (32) that start in satisfy for all :

| (79) |

Without loss of generality we may assume that , and we define . Using we let Lemmas 2 and 3 generate the constants so that the bounds Lemma 2 and (37) hold for all and all . Using these constants, we define as in the proof of Theorem 1, for all . Since and are continuous, and converges to zero as the argument grows unbounded, there exists such that for all and satisfying and all :

| (80) |

Then, we let and fix . As in the proof of Theorem 1, we define the restricted HDS:

| (81a) | |||||

| (81b) | |||||

and we let denote a solution to (81) starting in . As in the proof of Theorem 1, using (44), we define, for each , the arc , which, as shown in the proof of Theorem 1, is a hybrid arc that is a solution to the inflated HDS (32), and therefore satisfies (79) for all . We can now use (80) to obtain:

| (82) |

for all . Since , remains in the set , which is compact and satisfies due to the fact that . We can now use the exact same argument of Step 4 in the proof of Theorem 1 to establish that (82) also holds for every solution starting in of the unrestricted HDS (10).

6.3 Proofs of Propositions 1-3

Proof of Proposition 1: Since , and can be arbitrary. Assumption 5 guarantees that the subset is UAS for the averaged vector field (27) for each fixed . Hence, since system (6d) satisfies Assumption 1, and is compact, by [21, Thm. 7.12 and Lemma 7.20], the set is SGPAS as with respect to some basin of attraction . Therefore, by Theorem 2, we conclude that is SGPAS as for (10) with respect to .

Proof of Proposition 2: We show that the average HDS (22) renders UGAS the set , so that Theorem 2 can be directly used. Indeed, consider the Lyapunov function , and note that for each , we have . Using strong convexity and the global Lipschitz property of , we obtain: For : ; For : ; For : . By [40, Proposition 3], we obtain that for and for sufficiently small, the set is UGAS for the average HDS (22). Hence, by Theorem 2, is SGPAS as for the original system (10).

Proof of Proposition 3: We show that renders UGAS the set . To do this, we consider the Lyapunov function , which during flows satisfies . If for some , then we have three possible cases:

1. , but , which implies that due to item (a) in Assumption 8. Consequently, we must have ; or

2. , which again implies that ; or

3. and , but In that case, we can decompose where , and therefore , and However, by definition of , we have which contradicts the assumption that . Hence, this case cannot happen.

Therefore, for some implies that . Due to item (b) in Assumption 8 and item (b) in Assumption 7, and since flows are allowed only in the set , which contains no critical points other than in , it follows that if and only if . Next, observe that immediately after jumps we have for all : , since, by definition of the jump map we have and, using the structure of ,

By [42, Theorem 3.19], is UGAS for the HDS (15). By Corollary 1, is GPAS as for the HDS (10).

7 Conclusions

A second-order averaging result is introduced for a class of hybrid dynamical systems that combine differential and difference inclusions. Under regularity conditions, this result establishes the closeness of solutions between the original and average dynamics, enabling semi-global practical asymptotic stability when the average hybrid system has an asymptotically stable compact set. The findings are demonstrated through the analysis and design of various hybrid and highly oscillatory algorithms for model-free control and optimization problems. The theoretical tools developed in this paper also enable the study of systems for which global control Lyapunov functions, as defined in [44], do not exist. For additional applications, we refer the reader to the recent manuscript [1]. Future research directions include incorporating resets into the controllers [31, 39], accounting for stochastic phenomena, developing hybrid source-seeking controllers with multi-obstacle avoidance capabilities [9], and validating the proposed algorithms experimentally.

References

- [1] M Abdelgalil and Jorge I. Poveda. Hybrid Minimum-Seeking in Synergistic Lyapunov Functions: Robust Global Stabilization under Unknown Control Directions. arXiv:2408.04882, 2024.

- [2] Mahmoud Abdelgalil. Higher order averaging for hybrid dynamical systems. https://github.com/maabdelg/HOA-HDS.

- [3] Mahmoud Abdelgalil, Daniel E Ochoa, and Jorge I Poveda. Multi-time scale control and optimization via averaging and singular perturbation theory: From odes to hybrid dynamical systems. Annual Reviews in Control, 56:100926, 2023.

- [4] Mahmoud Abdelgalil and Jorge I Poveda. On Lie-bracket averaging for a class of hybrid dynamical systems with applications to model-free control and optimization: Extended manuscript. arXiv preprint arXiv:2308.15732, 2023.

- [5] Mahmoud Abdelgalil and Haithem Taha. Recursive averaging with application to bio-inspired 3-d source seeking. IEEE Control Systems Letters, 6:2816–2821, 2022.

- [6] K. B. Ariyur and M. Krstić. Real-Time Optimization by Extremum-Seeking Control. Wiley, 2003.

- [7] S. Azuma, M. S. Sakar, and G. J. Pappas. Stochastic Source Seeking by Mobile Robots. IEEE Transactions on Automatic Control, 57(9):2308–2321, 2012.

- [8] Ilario A Azzollini, Nicola Mimmo, and Lorenzo Marconi. An extremum seeking approach to search and rescue operations in avalanches using arva. IFAC-PapersOnLine, 53(2):1627–1632, 2020.

- [9] M. Benosman and J. I. Poveda. Robust source seeking and formation learning-based controller. US Patent 10,915,108 B2, 2019.

- [10] Emmanuel Bernuau, Wilfrid Perruquetti, and Emmanuel Moulay. Retraction obstruction to time-varying stabilization. Automatica, 49(6):1941–1943, 2013.

- [11] P. Casau, R. Cunha, R. G. Sanfelice, and C. Silvestre. Hybrid Control for Robust and Global Tracking on Smooth Manifolds. IEEE Transactions on Automatic Control, 65:1870–1885, 2020.

- [12] Pedro Casau, Ricardo G Sanfelice, and Carlos Silvestre. Robust synergistic hybrid feedback. IEEE Transactions on Automatic Control, 2024.

- [13] Francis H Clarke, Yuri S Ledyaev, Ronald J Stern, and Peter R Wolenski. Nonsmooth analysis and control theory, volume 178. Springer, 2008.

- [14] J. Cochran and M. Krstić. Nonholonomic source seeking with tuning of angular velocity. IEEE Transactions on Automatic Control, 54:717–731, 2009.

- [15] Avik De, Samuel A Burden, and Daniel E Koditschek. A hybrid dynamical extension of averaging and its application to the analysis of legged gait stability. The International Journal of Robotics Research, 37(2-3):266–286, 2018.

- [16] H. Dürr, M. Stanković, C. Ebenbauer, and K. H. Johansson. Lie bracket approximation of extremum seeking systems. Automatica, 49:1538–1552, 2013.

- [17] H. Dürr, M. Stanković, K. H. Johansson, and C. Ebenbauer. Extremum seeking on submanifolds in the Euclidian space. Automatica, 50:2591–2596, 2014.

- [18] Hans-Bernd Dürr, Miloš S Stanković, Karl Henrik Johansson, and Christian Ebenbauer. Examples of distance-based synchronization: An extremum seeking approach. In 51st Allerton Conference, pages 366–373, 2013.

- [19] Felipe Galarza-Jimenez, Jorge I Poveda, Gianluca Bianchin, and Emiliano Dall’Anese. Extremum seeking under persistent gradient deception: A switching systems approach. IEEE Control Systems Letters, 6:133–138, 2021.

- [20] R. Goebel, R. G. Sanfelice, and A. R. Teel. Hybrid Dynamical Systems. IEEE Control Systems Magazine, 29(2):28–93, 2009.

- [21] R. Goebel, R. G. Sanfelice, and A. R. Teel. Hybrid Dynamical Systems: Modeling, Stability, and Robustness. Princeton University Press, 2012.

- [22] Victoria Grushkovskaya, Alexander Zuyev, and Christian Ebenbauer. On a class of generating vector fields for the extremum seeking problem: Lie bracket approximation and stability properties. Automatica, 94:151–160, 2018.

- [23] J. P. Hespanha and A. S. Morse. Stabilization of switched systems with average dwell-time. 38th IEEE Conference on Decision and Control, pages 2655–2660, 1999.

- [24] Luigi Iannelli, Karl Henrik Johansson, Ulf T Jönsson, and Francesco Vasca. Averaging of nonsmooth systems using dither. Automatica, 42(4):669–676, 2006.

- [25] Luigi Iannelli, Karl Henrik Johansson, Ulf T Jönsson, and Francesco Vasca. Subtleties in the averaging of a class of hybrid systems with applications to power converters. Control Eng. Practice, 16(8):961–975, 2008.

- [26] Luigi Iannelli, Karl Henrik Johansson, UT Jonsson, and Francesco Vasca. Dither for smoothing relay feedback systems. IEEE Transactions on Circuits and Systems I: Fundamental Theory and Applications, 50(8):1025–1035, 2003.

- [27] H. K. Khalil. Nonlinear Systems. Prentice Hall, 2002.

- [28] Suad Krilašević and Sergio Grammatico. Learning generalized nash equilibria in monotone games: A hybrid adaptive extremum seeking control approach. Automatica, 151:110931, 2023.

- [29] R. J. Kutadinata, W. Moase, and C. Manzie. Extremum-Seeking in Singularly Perturbed Hybrid Systems. IEEE Transactions on Automatic Control, 62(6):3014–3020, 2017.

- [30] Christophe Labar, Christian Ebenbauer, and Lorenzo Marconi. Extremum seeking with intermittent measurements: A lie-brackets approach. IEEE Transactions on Automatic Control, 67(12):6968–6974, 2022.

- [31] Yaoyu Li and John E Seem. Extremum seeking control with reset control, June 12 2012. US Patent 8,200,344.

- [32] Daniel Liberzon and Hyungbo Shim. Stability of linear systems with slow and fast time variation and switching. In 2022 IEEE 61st Conference on Decision and Control (CDC), pages 674–678. IEEE, 2022.

- [33] Marco Maggia, Sameh A Eisa, and Haithem E Taha. On higher-order averaging of time-periodic systems: reconciliation of two averaging techniques. Nonlinear Dynamics, 99:813–836, 2020.

- [34] C. G. Mayhew. Hybrid Control for Topologically Constrained Systems, Ph.D Dissertation. University of California, Santa Barbara, 2010.

- [35] Christopher G Mayhew and Andrew R Teel. On the topological structure of attraction basins for differential inclusions. Systems & Control Letters, 60(12):1045–1050, 2011.

- [36] Daniel E Ochoa and Jorge I Poveda. Momentum-based nash set-seeking over networks via multi-time scale hybrid dynamic inclusions. IEEE Transactions on Automatic Control, 2023.

- [37] Daniel E Ochoa and Jorge I Poveda. Robust global optimization on smooth compact manifolds via hybrid gradient-free dynamics. Automatica, 171:111916, 2025.

- [38] J. I. Poveda, M. Benosman, A. R. Teel, and R. G. Sanfelice. Coordinated hybrid source seeking with robust obstacle avoidance in multi-vehicle autonomous systems. IEEE Transactions on Automatic Control, 67(2):706–721, 2022.

- [39] J. I. Poveda and N. Li. Robust hybrid zero-order optimization algorithms with acceleration via averaging in continuous time. Automatica, 123, 2021.

- [40] J. I. Poveda and A. R. Teel. A framework for a class of hybrid extremum seeking controllers with dynamic inclusions. Automatica, 76:113–126, 2017.

- [41] Jan A Sanders, Ferdinand Verhulst, and James Murdock. Averaging methods in nonlinear dynamical systems, volume 59. Springer, 2007.

- [42] R. Sanfelice. Hybrid Feedback Control. Princeton University Press, 2021.

- [43] Alexander Scheinker and Miroslav Krstić. Minimum-seeking for clfs: Universal semiglobally stabilizing feedback under unknown control directions. IEEE Transactions on Automatic Control, 58(5):1107–1122, 2012.

- [44] Alexander Scheinker and Miroslav Krstić. Model-free stabilization by extremum seeking. Springer, 2017.

- [45] Eduardo D Sontag. Remarks on input to state stability of perturbed gradient flows, motivated by model-free feedback control learning. Systems & Control Letters, 161:105138, 2022.

- [46] T. Strizic, J. I. Poveda, and A. R. Teel. Hybrid gradient descent for robust global optimization on the circle. 56th IEEE Conference on Decision and Control, pages 2985–2990, 2017.

- [47] Raik Suttner and Sergey Dashkovskiy. Robustness and averaging properties of a large-amplitude, high-frequency extremum seeking control scheme. Automatica, 136:110020, 2022.

- [48] Y. Tan, D. Nes̆ić, and I. Mareels. On non-local stability properties of extremum seeking controllers. Automatica, 42(6):889–903, 2006.

- [49] A. R. Teel and D. Nes̆ić. Averaging Theory for a Class of Hybrid Systems. Dynamics of Continuous, Discrete and Impulsive Systems, 17:829–851, 2010.

- [50] H. Dürr, M. Krstić, A. Scheinker, and C. Ebenbauer. Extremum seeking for dynamic maps using Lie brackets and singular perturbations. Automatica, 83:91–99, 2017.

- [51] M. Mueller and R. D’Andrea. Stability and control of a quadrocopter despite the complete loss of one, two, or three propellers. In IEEE International Conference on Robotics and Automation, pages 45–52, 2014.

- [52] R. Suttner and S. Dashkovskiy. Exponential stability for extremum seeking control systems. IFAC-PapersOnLine, 50(1):15464–15470, 2017.

- [53] W. Wang, A. Teel, and D. Nes̆ić. Analysis for a class of singularly perturbed hybrid systems via averaging. Automatica, 48(6), 2012.

- [54] G. Yang and D. Liberzon. A Lyapunov-based small-gain theorem for interconnected switched systems. Systems and Control Letters, 78:47–54, 2015.

- [55] Chunlei Zhang, Daniel Arnold, Nima Ghods, Antranik Siranosian, and Miroslav Krstic. Source seeking with non-holonomic unicycle without position measurement and with tuning of forward velocity. Systems & control letters, 56(3):245–252, 2007.

Appendix A Proofs of Auxiliary Lemmas 2-4

Proof of Lemma 2: By \threfasmp:A1, is in and and in , and therefore so is since it is the integral of with respect to . Moreover, is in , , and . It follows that is in , , and , since it is the integral with respect to of terms that involve and , which are all in , , and . Consequently, is in all its arguments. In addition, since is the integral with respect to of , it follows that is also in all its arguments. The conclusion of the lemma follows from the fact that the set is compact and that all functions are periodic in , and therefore is uniformly Lipschitz continuous and bounded in , and the vector field is uniformly Lipschitz continuous on .

Proof of Lemma 3: By Assumption 1, is continuous with respect to , and is with respect to and , i.e., continuously differentiable and its differential (the Jacobian matrix ) is locally Lipschitz continuous. Since is the integral of with respect to , it follows that , , and are in all arguments. In addition, we note that is in and , and continuous with respect to . Therefore, is the integral with respect to of terms that are locally Lipschitz continuous in and , and continuous in . Consequently, is also in all arguments. Moreover, since all terms are periodic in and , we obtain that all terms are globally Lipschitz in and . This establishes the inequalities for the maps for . An identical argument establishes the inequality for the map

Proof of Lemma 4: By invoking [49, Lemma 2], there exist functions , constructed using the saturation function as in [49, Lemma 2], such that, due to Lemma 3, the properties in items (a), (b), and (c) hold. To establish item (d), we note that since in the set , and by using the construction of in (36a) and the definition of in (13a), we directly obtain , which establishes (39). Similarly, since

| (83a) | ||||

| (83b) | ||||

and since , and

| (84) |

we can combine (83)-(84) to obtain (40). To establish items (e) and (f), let satisfy , for , for all , which exists due to the continuity of , the compactness of and the periodicity of with respect to . In addition, since is LB, there exist such that . Moreover, since and are globally Lipschitz by construction, it follows that the generalized Jacobians and are OSC, compact, convex, and bounded [13]. Therefore, , , and , we have

which establishes the result.

Appendix B Construction of an adversarial input on

Let , and let be a set of points such that there exists such that, if , then for all . Consider the auxiliary functions , given by

Using the function , the constant , and an arbitrary choice of , we define the smooth bump functions , for , by the expression:

| (85) |

It is straightforward to verify that , for all . In other words, , where . Moreover, it can be shown that there exists such that for all . Using the above construction, let be given by

where is a tuning parameter. It is straightforward to see that the map is smooth and that

Define the candidate Lyapunov functions by , then observe that, due to the properties of the functions , the derivatives of the functions along the vector field are given by

for all , and that . In other words, the vector field , locally stabilizes all the points in the set . Moreover, if is another smooth vector field such that

for some and for all , then we have that:

That is, if , then the perturbation does not destroy the (local) stability of the point .
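The construction in this appendix can be illustrated numerically. The following is a minimal Python sketch under stated assumptions, not a reproduction of the exact expressions used here: it assumes the standard smooth auxiliary function exp(-1/s) for s > 0 (zero otherwise), a bump function built as a ratio of such functions, a feedback of the form -k Σ φ_i(x)(x - x_i), and quadratic candidate Lyapunov functions ½|x - x_i|². The names `psi`, `bump`, `u`, and `lyapunov_rate`, the radii `r_in` and `r_out`, and the perturbation `L * (x - x_i)` are hypothetical choices made only for illustration.

```python
import math
import numpy as np


def psi(s):
    """Assumed auxiliary function: exp(-1/s) for s > 0, and 0 otherwise (smooth on R)."""
    return math.exp(-1.0 / s) if s > 0 else 0.0


def bump(x, center, r_in, r_out):
    """Assumed smooth bump: equals 1 if |x - center| <= r_in and 0 if |x - center| >= r_out."""
    d2 = float(np.sum((x - center) ** 2))
    a = psi(r_out ** 2 - d2)   # vanishes outside the outer ball
    b = psi(d2 - r_in ** 2)    # vanishes inside the inner ball
    return a / (a + b)         # a + b > 0 whenever r_in < r_out


def u(x, centers, k, r_in, r_out):
    """Assumed vector field: -k * sum_i bump_i(x) * (x - x_i), with tuning gain k."""
    return -k * sum(bump(x, c, r_in, r_out) * (x - c) for c in centers)


def lyapunov_rate(x, center, centers, k, L, r_in, r_out):
    """Derivative of V(x) = 0.5 * |x - center|^2 along u(x) + f(x).

    The perturbation f(x) = L * (x - center) is a hypothetical worst case
    satisfying |f(x)| <= L * |x - center| and pushing away from the center.
    """
    e = x - center
    f = L * e
    return float(e @ (u(x, centers, k, r_in, r_out) + f))


if __name__ == "__main__":
    # Centers are separated by more than 2 * r_out, so the bumps do not overlap.
    centers = [np.array([0.0, 0.0]), np.array([3.0, 0.0]), np.array([0.0, 3.0])]
    x = centers[1] + np.array([0.3, 0.1])
    for k in (2.0, 0.5):
        rate = lyapunov_rate(x, centers[1], centers, k, 1.0, 0.5, 1.0)
        print(f"k = {k}: dV/dt = {rate:.4f}")
```

Inside the inner ball around a given center the assumed bump equals one and all other bumps vanish, so the computed rate reduces to -(k - L)|x - x_i|². Under these assumed forms, the rate is negative when the gain k exceeds the perturbation bound L and positive when it does not, which mirrors the robustness statement above.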