Conditional Denoising Diffusion Probabilistic Model for Ground-roll Attenuation

Abstract

Ground-roll attenuation is a challenging seismic processing task in land seismic survey. The ground-roll coherent noise with low frequency and high amplitude seriously contaminate the valuable reflection events, corrupting the quality of seismic data. The transform-based filtering methods leverage the distinct characteristics of the ground roll and seismic reflections within the transform domain to attenuate the ground-roll noise. However, the ground roll and seismic reflections often share overlaps in the transform domain, making it challenging to remove ground-roll noise without also attenuating useful reflections. We propose to apply a conditional diffusion denoising probabilistic model (c-DDPM) to attenuate the ground-roll noise and recover the reflections efficiently. We prepare the training dataset by using the finite-difference modelling method and the convolution modelling method. After the training process, the c-DDPM can generate the clean data given the seismic data as condition. The ground roll obtained by subtracting the clean data from the seismic data might contain some residual reflection energy. Thus, we further improve the c-DDPM to allow for generating the clean data and ground roll simultaneously. We then demonstrate the feasibility and effectiveness of our proposed method by using the synthetic data and the field data. The methods based on the local time-frequency (LTF) transform and U-Net are also applied to these two examples for comparing with our proposed method. The test results show that the proposed method perform better in attenuating the ground-roll noise from the seismic data than the LTF and U-Net methods.

Index Terms:

conditional denoising diffusion probabilistic model, ground-roll attenuation, deep generative model.I Introduction

Ground roll is often dominated by Rayleigh surface waves, generated from seismic sources near the Earth’s surface [1]. In land seismic survey, ground roll is a typical coherent noise with the main characteristics of low frequency, low velocity, high amplitude [2, 3, 4]. Dispersion is also observed in ground roll, that is, different frequency components propagate at different velocities and arrive at the receiver at different times [5]. Thus, ground roll usually appears as fan-shaped distribution with downward oblique straight lines in seismic recording. The ground-roll noise will corrupt the useful reflections, and thus needing to be attenuated for improving the quality of seismic data and the fidelity of seismic reflection imaging [6, 7].

The transform-based filtering methods play an important role for suppressing ground roll in seismic data processing. Since ground roll typically has lower frequency than seismic reflection signals, low-cut filters or high-pass filters can be applied to remove the low-frequency noise associated with ground roll. However, they also remove the low-frequency components of seismic signals. Thus, a variety of transform-based seismic processing methods are developed to suppress ground-roll noise while preserving valuable seismic signals. These methods leverage the distinct characteristics of ground roll and seismic reflections within the transform domain, such as frequency-wavenumber () transform [8, 9], local time-frequency (LTF) transform [10], Radon transform [11, 12], wavelet transform [13, 14, 15], S-transform [16, 17] and Karhunen-Loeve (KL) transform [18, 3, 19]. These transforms provides a different representation of seismic data, enabling the identification and attenuation of ground roll in the transformed seismic data. However, the ground roll and reflections can have significant overlap in the transform domain in complex real data scenario. This will make it very challenging to separate ground roll from seismic reflections.

Deep learning (DL) has received widespread attention in the field of geophysics, involving various tasks in seismic processing, inversion, and interpretation [20, 21, 22, 23, 24, 25]. In recent years, the DL methods are increasingly applied for attenuating ground roll [26, 27, 28, 29, 30, 31]. Li et al. applied a Convolutional Neural Network (CNN) for learning the features of scattered ground-roll noise and then removing the learned noise from shot gathers [26]. Kaur et al. applied the LTF and regularized non-stationary regression to a few shot gathers to prepare the labeled data for training a Generative Adversarial Network (GAN) for ground-roll attenuation [32]. Yuan et al. also adopted the GAN to attenuate ground roll in seismic data [33]. Oliveira et al. combined a CNN and a conditional GAN to build the self-supervised two-step scheme for attenuating ground-roll noise [34]. To avoid the preparation for clean data as training labels, Liu et al. designed the blind-fan networks to suppress the coherent ground-roll noise in seismic data in a self-supervised manner, but a careful selection of the mask structure is really needed [35].

Denoising Diffusion Probabilistic Model (DDPM) is an advanced generative model with superior ability to generate high-quality, diverse and complex samples [36]. Considering the powerful performance of DDPM, Durall et al. introduced a DDPM to handle seismic data processing tasks, including demultiple, denoising and interpolation [37]. Conditional DDPM (c-DDPM) further extends the capability of standard DDPM by guiding the generation process with the condition [38]. Thus, we propose to apply the c-DDPM to suppress ground-roll noise in seismic data.

Typically, we can design the clean data as the target data distribution for the c-DDPM. Once the c-DDPM is trained, we can generate the clean data corresponding to the conditioning seismic data, contaminated by ground-roll noise. The ground roll can then be obtained by subtracting the generated clean data from the seismic data. However, the obtained ground roll usually contains some reflection residuals due to the inaccurate prediction of the clean data. Thus, we propose an improved c-DDPM to predict the ground roll with higher accuracy. Here, we adjust the forward and reverse processes of the diffusion model and modify the network architecture. Given the conditioning seismic data, the improved c-DDPM is able to generate the clean data and ground roll simultaneously.

To the best of our knowledge, this is the first work that applies c-DDPM to ground-roll attenuation task, showing promising results compared with the methods based on LTF and U-Net. The rest of the paper is organized as follows. First, we introduce the theory of the conventional c-DDPM and our improved c-DDPM. Secondly, we introduce the network architecture and how to prepare the training dataset and train the model. In the Examples section, we use test the synthetic and field data to test our proposed method. The LTF and U-Net methods are also applied to these data for comparing with the proposed method. At the end, we make discussions and conclusion.

II C-DDPM

II-A Conventional c-DDPM

For conventional c-DDPM, the target data distribution should be either clean data or ground roll data. Once one of them is obtained by the c-DDPM, the other one can be obtained by subtracting it from the noisy data. In the conventional c-DDPM, we typically set the clean data as the target distribution and the noisy data as the condition. Correspondingly, the ground roll can be obtained by subtracting the generated clean data from the noisy data. The forward process for the c-DDPM is defined on a Markov chain within timesteps:

| (1) |

where is sampled from the training datasets , are the latent variables fixed on the Markov chain. is the pre-defined Gaussian translation and obtains by adding random noise to :

| (2) |

where is a predefined variance schedule that increases with timestep . The property of the Markov chain enables:

| (3) |

A more explicit form of Eq. 3 is:

| (4) |

where and . At timestep , converges to a prior distribution, i.e, a standard normal distribution. Thus, the forward process is a diffusion process that gradually converts a clean image to a pure noise image.

The reverse process is also defined on a Markov chain, which converts pure noise to the data distribution , under the guidance of :

| (5) |

where the Gaussian translation has learned mean and fixed variance :

| (6) |

For the detailed inference of the formulas, see [36]. The optimization function of the network is:

| (7) |

After training the network, we can gradually generate the clean data with the sampling step in c-DDPM:

| (8) |

where is the estimated latent variable in the sampling process.

II-B Improved c-DDPM

One limit of the conventional c-DDPM is that the ground roll obtained by subtracting the predicted clean data from the noisy data usually suffer from some leakage of reflections because of the inaccurate prediction of . Thus, we design two target distribution, clean data and ground roll, in the improved c-DDPM given the noisy data as condition. The forward and reverse processes for the improved c-DDPM are shown in Fig. 1. In detail, the forward process in the improved c-DDPM is

| (9) |

| (10) |

where and refer to the clean data and ground roll, respectively. The reverse process is formulated as:

| (11) |

| (12) |

Correspondingly, the optimization function becomes

| (13) |

where and represents the estimated noise for sampling and respectively. Note that, both the noise are jointly estimated from and with the network. Once the training process is finished, we can gradually generate the clean data and the ground roll with the noisy data as condition:

| (14) |

| (15) |

The proposed training process and sampling process of the improved c-DDPM are shown in Algorithm 1 and Algorithm 2, respectively.

III Network architecture and training

We adopt the modified U-Net architecture, an adaptation of the network used by [39], as the backbone for the c-DDPM. The only difference between the two U-Nets used for the conventional and improved c-DDPM is the channel numbers of the input and output layers. According to Eq. 7, the U-Net used in the conventional c-DDPM takes the condition and noisy clean data as input and produces the estimated noise as output. We concatenate and in the input layer with 2 channels. The input and output layer for the U-Net used in conventional c-DDPM is shown in Fig. 2a. Compared with the U-Net in the conventional c-DDPM, we include the noisy ground roll data as an additional input channel and the estimated noise as an additional output channel in the improved c-DDPM (Eq. 13).

Fig. 2b shows the input and output layers for the U-Net used in improved c-DDPM. For convenience, we refer to the conventional c-DDPM and the improved c-DDPM as ”DDPM-1c” and ”DDPM-2c”, respectively. The architecture of the U-Net used jointly for the DDPM-1c and the DDPM-2c is shown in Fig. 3. The U-Net consists of the encoder and decoder. The skip connection in the U-Net enables conveying the information from the encoder to the decoder. Our U-Net mainly includes 5 Resnet blocks in both the encoder and decoder, a MidAtten block that connects the encoder and decoder, a time-embedding block and an output block. The self-attention mechanism is introduced into the MidAtten block to help the U-Net to learn the features of the image[40].Furthermore, the time embedding block introduces position encoding to inform the U-Net of the current time step in the reverse step [40].

Given the network architecture, we then prepare the training dataset for training the network. In a supervised manner, the training dataset should include noisy seismic data as input and corresponding clean data as label. There are various methods, such as finite difference modeling and convolution modeling, for preparing the datasets.

Finite difference modeling is capable of generating seismic data with excellent dynamic features, but it is computationally intense. Convolution modeling is computationally simple and efficient and allows for flexible adjustment of the location, amplitude, and frequency of seismic events. However, the convolution modeling often fails to capture the dynamic features of seismic data. To balance the cost and accuracy of the data generation, we apply the finite difference modelling to produce the clean data accurately, while using the convolution modelling to generate coherent ground-roll noise efficiently. Similar strategy is also used in [33].

To prepare the clean data for training, we first simulate 792 shot gathers by using various layered velocity models and then extract 792 shot gathers from the 2007 BP Anisotropic Velocity Benchmark datasets. We generate the ground roll randomly by adjusting the location of linear reflectivity and the frequency and amplitude of the wavelet in the convolution modeling. To adapt the trained network to the data of the example section, we need to select a reasonable frequency range in the convolution modelling. When the ground roll are much stronger than the clean data, the performance of the network greatly deteriorates. Thus, we set the average amplitude of the ground roll to be 1.5-3 times larger than the clean data. We then make the summation of the clean data and ground roll as the noisy data. The dimension of each sample of the training datasets is fixed to 640 in the time direction and 224 in the horizontal. The volume of our training datasets is 1584. Two examples of the prepared datasets are shown in Figure 4.

We train the DDPM-1c and DDPM-2c with 63 epochs and the batch size of 4. We use an Adam optimization with an initial learning rate of 0.0001. As a comparison, we use the same training parameters to train the U-Net in a supervised fashion, where the noisy and clean data are used as the input and output of this U-Net, respectively. Then, we directly use the trained models in the synthetic and field data examples.

IV Examples

IV-A SEAM Arid synthetic example

We first test the proposed methods DDPM-1c and DDPM-2c on the synthetic data example. Besides, we also apply the LTF and U-Net to the synthetic data as comparison. Fig. 5 shows the 2D slice of the SEAM Arid model. To provide the benchmark for comparison, we can directly obtain the clean data and the ground roll by applying the elastic finite-difference modeling method to the modified velocity model. We use the and model shown in Fig. 5a and 5c but set to zero to simulate the clean data, while we retain the near-surface part above 600 m of Fig. 5 but make the model beneath 600 m homogeneous to simulate the ground roll. The 25-Hz Ricker wavelet is used to simulate these seismic data. The simulated clean data and ground roll are shown in Fig. 6b and Fig. 6c, respectively. The simulated data has a total of 640 traces and 224 time samples per trace, with a time sampling interval of 0.004 s. We first apply automatic gain control (AGC) to these simulated data. Then, we add the weighted ground-roll data to the clean data to obtain the noisy data shown in Fig. 6a. The weighting factor for the ground roll can be used to adjust the SNR of noisy data. We can see from Fig. 6a that the coherent ground-roll noise corrupts the shallow reflections at near offsets and deeper reflections at far offsets. We use our proposed method (DDPM-1c, DDPM-2c), LTF and U-Net to remove the ground roll from the noisy data. Fig. 6d and 6e show the clean data and ground roll obtained by the LTF method, respectively. We can obviously see some ground-roll noise in the denoising result. The clean data and ground roll corresponding to the U-Net method are shown in Fig. 6f and 6g. We can still see that the ground roll is not removed completely, especially for the part pointed by the red box. Besides, the energy leakage of reflections is observed in Fig. 6g. The clean data predicted by using DDPM-1c is shown in Fig. 6h. As pointed by the red box, the ground-roll energy leakage is relatively weak. The separated ground-roll data by using DDPM-1c is shown in Fig. 6i. We can see that the energy leakage of reflections is less visible compared with LTF and U-Net. Fig. 6j and 6k show the predicted clean data and ground roll with DDPM-2c. We can see that the predicted clean data is comparable to the simulated clean with very slight contamination from ground roll. As pointed by the red box, the ground roll is completely removed, and the masked reflections are recovered well. In addition, there is obviously less remaining reflection energy in the predicted ground roll compared with the result of DDPM-1c. The comparison between these figures tell us that the proposed DDPM-2c shows better performance in ground-roll attenuation than LTF, U-Net and DDPM-1c.

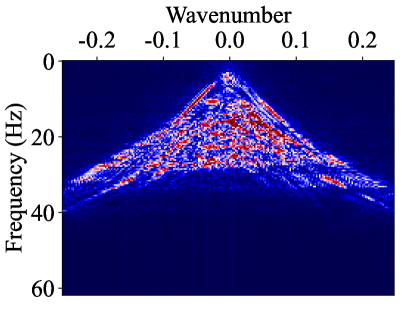

We apply the F-K plane analysis to further evaluate the performance of the ground-roll attenuation methods. The F-K spectra corresponding to the data in Fig. 6a and Fig. 6b are shown in Fig. 7a and 7b, respectively. From the spectra, we observe that the clean data and ground roll have overlap in the frequency band of 20 - 35Hz. Fig. 7c displays the F-K spectra of the predicted clean data in Fig. 6d, containing some residual ground-roll energy. Fig. 7d, 7e, and 7f show the F-K spectra corresponding to the predicted clean data in Fig. 6f, 6h, and 6j, respectively. The F-K spectra corresponding to DDPM-2c has less residual ground roll and looks closer to that of the simulated clean data.

Moreover, we utilize amplitude spectra to assess the performance of these methods for attenuating ground roll in noisy data. The amplitude spectra of the true clean data and the predicted clean data in Fig. 6 are illustrated in Fig. 8a. We compute the spectra of these data over the same time and space window. Obviously, the U-Net result has an increased energy from 10 to 40 Hz, arising from the inaccurate amplitude of the predicted clean data. On the other hand, the clean data predicted by LTF, DDPM-1c and DDPM-2c have consistent frequencies with the true clean data. Furthermore, the amplitude spectra of the true noise data and the separated ground-roll data in Fig. 6 are shown in Fig. 8b. We can see that amplitude spectra corresponding to DDPM-2c shows the best consistency with the target spectra. Although the prediction of DDPM-1c slightly degrades, it is still better than the LTF and U-Net methods.

| Methods | MAE | MSE | SSIM | PSNR |

|---|---|---|---|---|

| LTF | 0.0229 | 0.0014 | 0.7463 | 28.4057 |

| U-Net | 0.0328 | 0.0026 | 0.7272 | 25.7754 |

| DDPM-1c | 0.0189 | 0.0009 | 0.7426 | 30.3792 |

| DDPM-2c | 0.0191 | 0.0009 | 07807 | 30.1405 |

| Methods | MAE | MSE | SSIM | PSNR |

|---|---|---|---|---|

| LTF | 0.0229 | 0.0014 | 0.5991 | 28.4307 |

| U-Net | 0.0328 | 0.0026 | 0.4516 | 25.7766 |

| DDPM-1c | 0.0188 | 0.0009 | 0.6084 | 30.4044 |

| DDPM-2c | 0.0174 | 0.0008 | 0.7038 | 30.5280 |

For a quantitation assessment, we then use four evaluation metrics, including Mean absolute error (MAE), mean square error (MSE), peak signal-to-noise ratio (PSNR) and structural similarity index measure (SSIM) to measure the difference between the ground truth and predicted results. You are referred to Appendix A for more details about these evaluation metrics. The lower the values of MAE and MSE, and the higher the values of PSNR and SSIM, the closer the predicted data is to the target. Considering that the DDPMs has the inherent generative diversity, we perform the sampling process for the DDPM-based methods five times and compute the mean value of the metrics. The evaluation results for the clean data and ground roll predicted by different methods are summarized in Table. I and II, respectively. We can see from Table. I that our proposed methods (DDPM-1c and DDPM-2c) perform better than the LTF and U-Net methods in predicting clean data. Meanwhile, Table. II illustrates that DDPM-2c has superior performance in predicting ground roll.

IV-B Field data example

We then apply the c-DDPM method to the field data with no needs to re-train the model. Fig. 9a shows a shot gather from the Line 001 dataset after AGC and down-sampling. The trace number is 640, and the recording length is 224 with a time sampling of 0.0047 s. The ground-roll noise is predominantly located at near offset and seriously contaminates the reflection events. The denoising result of the LTF method is shown in Fig. 9b. We can see that the reflections are still masked by strong ground-roll residuals. The ground roll corresponding to the LTF method is shown in Fig. 9c. There is obvious leakage of reflections at the masked area of the ground roll and reflections. The predicted clean data using U-Net is shown in Fig. 9d. As shown by the red box, the reflections are partially recovered, but the ground roll still obviously contaminates the seismic data. Fig. 9e shows the corresponding ground roll. We can see the ground roll is more complete, while there are some leakage of reflections as pointed by the red arrows. The clean data predicted by DDPM-1c and DDPM-2c are shown in Fig. 9f and Fig. 9h, respectively. As pointed by the red box, the reflections are recovered better than the LTF and U-Net methods. The predicted ground roll by using DDPM-1c and DDPM-2c are shown in Fig. 9g and Fig. 9i, respectively. As pointed out by the red arrows, we can see the slight reflection energy in the result of DDPM-1c, while the result of DDPM-2c has no visible reflections. These figures show that the DDPM-2c has better performance on the field data than the LTF, U-Net and DDPM-1c methods.

We also show the f-k spectra of the original seismic data and the denoising results of different methods in Fig. 10. The amplitude spectra of the original seismic data and denoising results are shown in Fig. 11a. We can see that the LTF does not attenuate the ground roll with frequency from 8 Hz to 15 Hz effectively. The amplitude spectra of the original seismic data and the predicted ground roll in Fig. 9 are shown in Fig. 11b. We can see that DDPM-1c and DDPM-2c predicts the ground roll with frequency from 10 to 20 Hz very well.

V Discussion

The proposed method (DDPM-1c and DDPM-2c) shows better performance on the synthetic and field data examples in ground-roll attenuation than the LTF and U-Net methods. Moreover, DDPM-2c can output the clean data and ground roll simultaneously with high quality. To make a further investigation of the proposed method, we then analyse the influence of AGC and training dataset on the denoising performance, along with the acceleration in diffusion denoising implicit model(DDIM).

V-A The influence of AGC

It is more challenging when the energy of ground roll is much stronger than the reflections in the seismic data. Thus, we perform AGC on the noisy input in the synthetic and field data examples, to balance the amplitudes across the whole data. AGC adjust the amplitudes of seismic data in a window according to the mean energy within the window. The reflections overlapped by strong ground-roll noise will have smaller gain than the reflections unaffected by the ground-roll noise. Thus, the predicted reflections in the strongly-affected area tends to be weaker than the predicted reflections in the unaffected area. This can be seen in Fig. 9f and 9h.

There are two possible solutions to this issue:

1) Improve the network so that it can work well when the ground roll energy is dominant in the noisy data. DDPMs are deep generative models originally made for image synthesis. The network architecture for a range of 0-255 RGB of an image should be carefully modified for the high-resolution task such as seismic processing.

2) Reconstruct the clean data instead of separating it from the noisy data after AGC. Both the DDPM-1c and DDPM-2c are required to separate the reflection energy from the noisy condition after AGC. The network can learn to reconstruct the contaminated area with continuous reflection energy by lessening the constraint of the condition. In other words, the c-DDPM should possess more diversity to construct the contaminated area.

V-B The influence of training datasets

The preparation of training dataset is an important component of the DL method. We applied the convolution modeling to generate the ground roll. We configure the source wavelet frequency for the convolution modeling to fall within a certain range, ensuring the trained model generalizes effectively across both synthetic and field data examples. The training dataset used above is named as ”dataset1”. To test how the frequency of the ground roll influence the generation quality, we enlarge the frequency range of the ground roll and generate a new training dataset, named as ”dataset2”. The new dataset is then used to train the DDPM-2c with the same training parameters as before. After training, we then apply the trained model to the noisy field data in Fig. 9a. We can see that the residual ground-roll noise with higher frequency are also removed, but the direct arrivals are also missing. Additionally, there are more leakage of reflections into the predicted ground roll when using dataset2 for training compared with the scenario for dataset1. This is because the model trained on dataset2 tends to generate the events with higher frequency (which usually refer to the reflections), especially in the area where the reflections and the ground roll heavily overlap.

We then compute the amplitude spectra of the predicted clean data and ground roll corresponding to dataset2 to further demonstrate the influence of the frequency band of the ground roll for training on the data generation. The amplitude spectra of the predicted clean data using dataset2 exhibits an obvious decrease after 20Hz, while the amplitude spectra of the ground roll increases after 20Hz. The experiment result demonstrates that the frequency band of the training dataset affects the generation quality of the ground roll and the clean data. Thus, we should carefully prepare the training dataset with a reasonable frequency band to improve the predicted clean data and ground roll.

V-C DDIM

We implemented a conventional sampling process of the DDPM in the c-DDPM. This sampling process is time-consuming because many timesteps are required. In order to accelerate the sampling, DDIM defines both the forward and reverse processes on a non-Markov chain instead of a Markov chain [41]. This non-Markov chain allows DDIM to sample within significantly reduced timesteps, making it much more computationally efficient. However, the reduced timesteps inevitably degrades the quality of the generation. Therefore, we employ the conventional sampling process of the DDPM instead of the sampling process of the DDIM. Here, we provide a brief illustration of the sampling process of the DDIM for reference. The sampling step in DDIM is

| (16) |

where is the sampled timesteps from the original range 0 … , is the pre-defined noise schedule, and . When , there is no random noise added in every sampling step, thus the sampling process is fixed. When , the sampling process is the same to the DDPM, only with reduced timesteps.

VI Conclusion

We propose to apply the c-DDPM to attenuate the ground-roll noise in the seismic recordings. We use the finite-difference modelling and convolution modelling methods to prepare the training datasets. After the training process, the c-DDPM can generate the clean data given the seismic recordings as condition. To improve the accuracy of prediction for ground roll, we further improve the c-DDPM to simultaneously generating the clean data and ground-roll data. Tests on one synthetic data and one field data show that the proposed method (the conventional c-DDPM and improved c-DDPM) performs better in ground-roll attenuation than the other methods using LTF and U-Net. Besides, the improved c-DDPM directly predict the ground roll with high accuracy.

VII Appendix A

In the appendix, we will provide a brief introduction to the algorithms of MAE, MSE, SSIM and PSNR. The MSE is calculated by

| (17) |

where and is the size of the data, is the true data, and is the estimated data. The MSE is calculated by

| (18) |

The PSNR is calculated by

| (19) |

The SSIM is calculated by

| (20) |

where is the mean of the true data and estimated data , respectively, is the variance of and , respectively, is the covariance of and , , where is the dynamic scope,

References

- [1] G. Beresford-Smith and R. N. Rango, “Dispersive noise removal in tx space: Application to arctic data,” Geophysics, vol. 53, no. 3, pp. 346–358, 1988.

- [2] R. Saatcilar and N. Canitez, “A method of ground-roll elimination,” Geophysics, vol. 53, no. 7, pp. 894–902, 1988.

- [3] X. Liu, “Ground roll suppression using the karhunen-loeve transform,” Geophysics, vol. 64, no. 2, pp. 564–566, 1999.

- [4] M. J. Porsani, M. G. Silva, P. E. Melo, and B. Ursin, “Ground-roll attenuation based on svd filtering,” in SEG International Exposition and Annual Meeting. SEG, 2009, pp. SEG–2009.

- [5] M. I. Al-Husseini, J. B. Glover, and B. J. Barley, “Dispersion patterns of the ground roll in eastern saudi arabia,” Geophysics, vol. 46, no. 2, pp. 121–137, 1981.

- [6] Ö. Yilmaz, Seismic data analysis: Processing, inversion, and interpretation of seismic data. Society of exploration geophysicists, 2001.

- [7] Y. Chen, S. Jiao, J. Ma, H. Chen, Y. Zhou, and S. Gan, “Ground-roll noise attenuation using a simple and effective approach based on local band-limited orthogonalization,” IEEE Geoscience and Remote Sensing Letters, vol. 12, no. 11, pp. 2316–2320, 2015.

- [8] S. Treitel, J. L. Shanks, and C. W. Frasier, “Some aspects of fan filtering,” Geophysics, vol. 32, no. 5, pp. 789–800, 1967.

- [9] G. Beresford-Smith and R. Rango, “Suppression of ground roll by windowing in two domains,” First Break, vol. 7, no. 2, 1989.

- [10] Y. Liu and S. Fomel, “Seismic data analysis using local time-frequency decomposition,” Geophysical Prospecting, vol. 61, no. 3, pp. 516–525, 2013.

- [11] D. Trad, T. Ulrych, and M. Sacchi, “Latest views of the sparse radon transform,” Geophysics, vol. 68, no. 1, pp. 386–399, 2003.

- [12] D. C. Henley, “Coherent noise attenuation in the radial trace domain,” Geophysics, vol. 68, no. 4, pp. 1408–1416, 2003.

- [13] A. J. Deighan and D. R. Watts, “Ground-roll suppression using the wavelet transform,” Geophysics, vol. 62, no. 6, pp. 1896–1903, 1997.

- [14] R. Zhang and T. J. Ulrych, “Physical wavelet frame denoising,” Geophysics, vol. 68, no. 1, pp. 225–231, 2003.

- [15] J. Gilles, “Empirical wavelet transform,” IEEE transactions on signal processing, vol. 61, no. 16, pp. 3999–4010, 2013.

- [16] R. G. Stockwell, L. Mansinha, and R. Lowe, “Localization of the complex spectrum: the s transform,” IEEE transactions on signal processing, vol. 44, no. 4, pp. 998–1001, 1996.

- [17] R. Askari and H. R. Siahkoohi, “Ground roll attenuation using the s and x-f-k transforms,” Geophysical Prospecting, vol. 56, no. 1, pp. 105–114, 2008.

- [18] I. Jones and S. Levy, “Signal-to-noise ratio enhancement in multichannel seismic data via the karhunen-loeve transform,” Geophysical prospecting, vol. 35, no. 1, pp. 12–32, 1987.

- [19] R. Montagne and G. L. Vasconcelos, “Optimized suppression of coherent noise from seismic data using the karhunen-loève transform,” Physical Review E, vol. 74, no. 1, p. 016213, 2006.

- [20] X. Wu, L. Liang, Y. Shi, and S. Fomel, “Faultseg3d: Using synthetic data sets to train an end-to-end convolutional neural network for 3d seismic fault segmentation,” Geophysics, vol. 84, no. 3, pp. IM35–IM45, 2019.

- [21] S. Yu and J. Ma, “Deep learning for geophysics: Current and future trends,” Reviews of Geophysics, vol. 59, no. 3, p. e2021RG000742, 2021.

- [22] C. Song and T. A. Alkhalifah, “Wavefield reconstruction inversion via physics-informed neural networks,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–12, 2021.

- [23] Y. Li, T. Alkhalifah, and Z. Zhang, “Deep-learning assisted regularized elastic full waveform inversion using the velocity distribution information from wells,” Geophysical Journal International, vol. 226, no. 2, pp. 1322–1335, 2021.

- [24] S. M. Mousavi and G. C. Beroza, “Deep-learning seismology,” Science, vol. 377, no. 6607, p. eabm4470, 2022.

- [25] R. Harsuko and T. A. Alkhalifah, “Storseismic: A new paradigm in deep learning for seismic processing,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–15, 2022.

- [26] H. Li, W. Yang, and X. Yong, “Deep learning for ground-roll noise attenuation,” in SEG Technical Program Expanded Abstracts 2018. Society of Exploration Geophysicists, 2018, pp. 1981–1985.

- [27] D. Liu, W. Chen, M. D. Sacchi, and H. Wang, “Should we have labels for deep learning ground roll attenuation?” in SEG Technical Program Expanded Abstracts 2020. Society of Exploration Geophysicists, 2020, pp. 3239–3243.

- [28] C. Zhang and M. van der Baan, “Ground-roll attenuation using a dual-filter-bank convolutional neural network,” IEEE Transactions on Geoscience and Remote Sensing, vol. 60, pp. 1–11, 2021.

- [29] N. Pham and W. Li, “Physics-constrained deep learning for ground roll attenuation,” Geophysics, vol. 87, no. 1, pp. V15–V27, 2022.

- [30] L. Yang, S. Wang, X. Chen, O. M. Saad, W. Cheng, and Y. Chen, “Deep learning with fully convolutional and dense connection framework for ground roll attenuation,” Surveys in Geophysics, pp. 1–34, 2023.

- [31] L. Xing, H. Lin, L. Wang, Z. Xu, H. Liu, and Q. Li, “Ground roll intelligent suppression based on spatial domain synchrosqueezing wavelet transform convolutional neural network,” Geophysical Prospecting, vol. 72, no. 1, pp. 247–267, 2024.

- [32] H. Kaur, S. Fomel, and N. Pham, “Seismic ground-roll noise attenuation using deep learning,” Geophysical Prospecting, vol. 68, no. 7, pp. 2064–2077, 2020.

- [33] Y. Yuan, X. Si, and Y. Zheng, “Ground-roll attenuation using generative adversarial networks,” Geophysics, vol. 85, no. 4, pp. WA255–WA267, 2020.

- [34] D. A. Oliveira, D. G. Semin, and S. Zaytsev, “Self-supervised ground-roll noise attenuation using self-labeling and paired data synthesis,” IEEE Transactions on Geoscience and Remote Sensing, vol. 59, no. 8, pp. 7147–7159, 2020.

- [35] S. Liu, C. Birnie, A. Bakulin, A. Dawood, I. Silvestrov, and T. Alkhalifah, “A self-supervised scheme for ground roll suppression,” arXiv preprint arXiv:2310.13967, 2023.

- [36] J. Ho, A. Jain, and P. Abbeel, “Denoising diffusion probabilistic models,” Advances in neural information processing systems, vol. 33, pp. 6840–6851, 2020.

- [37] R. Durall, A. Ghanim, M. R. Fernandez, N. Ettrich, and J. Keuper, “Deep diffusion models for seismic processing,” Computers & Geosciences, vol. 177, p. 105377, 2023.

- [38] J. Ho and T. Salimans, “Classifier-free diffusion guidance,” arXiv preprint arXiv:2207.12598, 2022.

- [39] C. Saharia, J. Ho, W. Chan, T. Salimans, D. J. Fleet, and M. Norouzi, “Image super-resolution via iterative refinement,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 45, no. 4, pp. 4713–4726, 2022.

- [40] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [41] J. Song, C. Meng, and S. Ermon, “Denoising diffusion implicit models,” arXiv preprint arXiv:2010.02502, 2020.