
Concatenating Binomial Codes with the Planar Code

Abstract

Rotation-symmetric bosonic codes are an attractive encoding of qubits into oscillator degrees of freedom, particularly in superconducting qubit experiments. While these codes can tolerate considerable loss and dephasing, they will need to be combined with higher-level codes to achieve large-scale devices. We investigate concatenating these codes with the planar code in a measurement-based scheme for fault-tolerant quantum computation. We focus on binomial codes as the base-level encoding, and estimate break-even points for such encodings under loss for various types of measurement protocol. Binomial codes with higher rotational symmetry are more resistant to photon loss errors, but require both higher mean photon numbers and higher phase resolution for gate operations and measurements. We find that it is necessary to implement adaptive phase measurements, maximum likelihood quantum state inference, and weighted minimum-weight perfect matching decoding to obtain good performance for a planar code using binomial code qubits.

I Introduction

One of the major challenges in building a quantum computer is implementing error correction. Error correction, the encoding of quantum information to protect it against environmental noise, is crucial in order to perform large-scale calculations. In this work we are interested in the performance of bosonic codes in which qubits are encoded into the infinite-dimensional Hilbert space of a harmonic oscillator. Bosonic codes can provide protection against typical sources of noise such as loss and dephasing [1, 2, 3] and can be implemented in a variety of physical architectures, including electromagnetic modes, for example microwave modes controlled by superconducting qubits [4, 5, 6], or mechanical degrees of freedom such as trapped ion motional modes [7, 8].

Experiments with superconducting qubits have shown that bosonic codes are amongst the most promising approaches to practical quantum computing on that platform. Specifically, it has been possible to operate these experiments beyond the memory break-even point, at which the lifetime of an encoded qubit equals that of an unencoded qubit [4, 9]. In addition, it is possible to perform logical gates and fault-tolerant measurements in such experiments [5, 10, 11, 12, 13, 14, 15].

No matter how good bosonic codes become, however, there will be residual errors. Large scale quantum computation will require bosonic codes to be concatenated with a higher level code, such as a surface code [16, 17], that allows fault tolerant quantum computation [2]. The bosonic code must be able to suppress the noise below the fault tolerance threshold of the higher level code. For the so-called GKP codes [2] there have been studies of how to achieve this [18, 19, 20] but for widely used codes such as the so-called cat codes [21, 22, 23] and binomial codes [24] there is not a quantitative understanding of the performance required for large-scale computation.

In this work we describe and implement simulations to determine the performance of an architecture that concatenates the surface code with a class of bosonic codes that Grimsmo and collaborators termed rotation-symmetric bosonic codes [25]. They provided a unified analysis of these codes, which include both cat codes and binomial codes, as well as a theoretical scheme for logical gates, measurements, and state preparation [25]. Our approach uses this gate set to study the performance of a specific choice of higher-level code and architecture for quantum computation.

The gate set proposed in [25] suggests that a natural approach to realising fault-tolerant computation with concatenated bosonic codes is Measurement Based Quantum Computation (MBQC) with code foliation [26, 27, 28]. In particular, for rotation-symmetric codes the natural “easy” operations include a CZ gate, realised with a cross-Kerr interaction between two modes, and X-basis measurement, realised as a phase measurement [25]. Together with the preparation of $|+\rangle$ states, these operations form the basis for MBQC. These considerations motivate our scheme, which uses bosonic encoded qubits, in particular binomial qubits, as the ‘physical qubits’ of a 2D surface code realised by MBQC.

The aim of this work is to conduct a preliminary investigation of this quantum computation scheme, taking into account a realistic model of photon loss which is the primary source of noise for bosonic codes. Furthermore, the X basis measurements that we require for our scheme will be realised as phase measurements of a bosonic mode. The phase measurement itself, as well as the procedure for inferring the qubit state, are imperfect processes which will introduce inaccuracy to our scheme. We will compare different methods of phase measurement such as heterodyne and adaptive homodyne measurement [29], combined with different procedures for qubit state inference.

We obtain threshold values for varying orders of discrete rotational symmetry. We find that whilst increasing the order of discrete rotational symmetry generally improves the threshold value, thresholds for binomial encoded qubits tend to be lower than for the trivial Fock-space encoding, $\{|0\rangle, |1\rangle\}$, when using the most naive measurement and qubit state inference techniques. By using more sophisticated measurement and state inference methods, and by incorporating the soft information from measurements into decoding, we find schemes that beat the trivial encoding when the qubit measurement error for the trivial encoding is not too small.

The point at which the lifetime of a bosonic encoded qubit outperforms the trivial encoding is referred to as break-even [30]. The competition between the bosonic codes and the trivial encoding arises because the rotation-symmetric codes achieve tolerance to loss errors at the expense of an increased mean photon number. In comparison, the trivial encoding has no intrinsic robustness to loss but has a very low mean photon number. We find that optimal measurement and state inference are required for the rotation-symmetric codes to outperform the trivial encoding. Finally, we examine the sub-threshold performance of binomial codes and find that below threshold the loss tolerance of a binomial code becomes more beneficial: codes with increased rotational symmetry perform better than the trivial code and better than codes with reduced symmetry.

This paper is structured as follows. In Sec. II we review rotation symmetric bosonic codes and measurement based quantum computation. In Sec. III we introduce the measurement models and qubit state inference techniques used to realise the computational scheme. Sec. III.4 then quantifies the performance of these methods. In Sec. III.5 we introduce methods used for the 2D planar code, including an X basis Pauli twirling approximation and modified MWPM decoding algorithm. Sec. III.6 compares the code thresholds obtained using these techniques, as well as examining the subthreshold scaling of the code. Finally, in Sec. IV we review our findings and highlight the main conclusions.

II Setup and Description of Cluster State Model

In this section we define the various elements of the error correction scheme we investigate. This scheme involves encoding qubits in bosonic modes using a rotation-symmetric encoding, specifically the binomial encoding. These encoded qubits are then entangled using CZ gates to form a specific two-dimensional many-body entangled resource state, known as a cluster state, which maps onto the two-dimensional surface code [26]. In this work we restrict to the 2D surface code. The rotation-symmetric bosonic (RSB) encoded qubits are then measured and subjected to error correction in order to enact the identity gate on the code. The scheme could be extended to non-trivial gates, but this is not considered in this work. Although the 2D surface code is not fault tolerant, it provides a more straightforward setting for a preliminary investigation of our concatenated scheme.

II.1 Rotation Symmetric Bosonic Codes

Encoding a qubit in a single bosonic mode involves defining a two dimensional subspace of the mode’s Hilbert space as the span of the logical codewords. The remaining space can be used to detect and correct errors. The most prevalent error sources to which bosonic modes are subject are loss and dephasing. Rotation-symmetric encodings have been tailored specifically to be able to correct loss and can exactly correct up to a certain order of photon loss. This property is tightly connected to the discrete rotational symmetry of these codes.

Rotation-Symmetric Bosonic (RSB) codes [25] have logical $Z$-operators of the form

$$\hat{Z}_N = e^{i\pi\hat{n}/N}, \tag{1}$$

where $\hat{n} = \hat{a}^\dagger\hat{a}$ is the Fock-space number operator, and $\hat{a}$ and $\hat{a}^\dagger$ are the Fock-space annihilation and creation operators, respectively. Such codes have discrete rotational symmetry of order $N$, as the projector onto the codespace commutes with the discrete rotation operator

$$\hat{R}_N = e^{2\pi i\hat{n}/N} = \hat{Z}_N^{2}. \tag{2}$$

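Indeed, the choice of the logical $Z$ operator determines the form of the logical codewords as finite rotated superpositions of a “primitive” state $|\Theta\rangle$ [25], where different types of rotation-symmetric codes, such as cat and binomial codes, differ only in the choice of primitive. Explicitly, for $\mu \in \{0, 1\}$,

$$|\mu_N\rangle = \frac{1}{\sqrt{\mathcal{N}_\mu}}\sum_{m=0}^{2N-1}(-1)^{\mu m}\,e^{i m\pi\hat{n}/N}|\Theta\rangle, \tag{3}$$

where $\mathcal{N}_\mu$ is a normalisation constant.

The operator $\hat{Z}_N$ also enforces a specific Fock-grid structure on the logical codewords. In particular, $|0_N\rangle$ has support only on the Fock states $|2kN\rangle$ and $|1_N\rangle$ only on $|(2k+1)N\rangle$, for $k = 0, 1, 2, \dots$, as shown in Fig. 1. This structure results in a Fock-grid distance $N$ between the logical zero and logical one states.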
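A short numerical check can make the construction of Eq. (3) concrete. The sketch below is ours rather than the authors' code and rests on stated assumptions: the Fock space is truncated at 40 levels, a coherent state with amplitude 2 stands in for the primitive $|\Theta\rangle$ (which yields a cat code), and the helper names `coherent_state` and `rsb_codewords` are hypothetical. It sums the $2N$ rotated copies of the primitive and then inspects which Fock states carry weight.

```python
# Sketch with hypothetical helper names; truncated Fock space, coherent-state primitive.
import numpy as np

def coherent_state(alpha, dim):
    """Fock amplitudes of |alpha>, truncated to `dim` levels (built recursively)."""
    c = np.zeros(dim, dtype=complex)
    c[0] = np.exp(-abs(alpha) ** 2 / 2)
    for n in range(1, dim):
        c[n] = c[n - 1] * alpha / np.sqrt(n)
    return c

def rsb_codewords(primitive, N):
    """Eq. (3): sum the 2N rotations exp(i m pi n / N) of a primitive state.

    Returns the normalised pair (|0_N>, |1_N>) in the primitive's Fock basis.
    """
    n = np.arange(len(primitive))
    zero = np.zeros(len(primitive), dtype=complex)
    one = np.zeros(len(primitive), dtype=complex)
    for m in range(2 * N):
        rotated = np.exp(1j * m * np.pi * n / N) * primitive
        zero += rotated
        one += (-1) ** m * rotated
    return zero / np.linalg.norm(zero), one / np.linalg.norm(one)

N, dim = 3, 40
zero, one = rsb_codewords(coherent_state(2.0, dim), N)  # cat-code example
n = np.arange(dim)
support0 = n[np.abs(zero) > 1e-12]   # expected: multiples of 2N
support1 = n[np.abs(one) > 1e-12]    # expected: odd multiples of N
Z_N = np.exp(1j * np.pi * n / N)     # Eq. (1), diagonal in the Fock basis
R_N = Z_N ** 2                       # Eq. (2), discrete rotation by 2*pi/N
```

With $N = 3$ the surviving Fock states of $|0_N\rangle$ are multiples of 6 and those of $|1_N\rangle$ are odd multiples of 3, so the two codewords sit a Fock-grid distance $N$ apart.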

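Dual basis codewords are defined in the usual way,

$$|\pm_N\rangle = \frac{1}{\sqrt{2}}\left(|0_N\rangle \pm |1_N\rangle\right). \tag{4}$$

In contrast to the primal codewords, the dual codewords have support on every $N$th Fock state, $|kN\rangle$. They are separated by an angle $\pi/N$ in phase space: $\hat{Z}_N = e^{i\pi\hat{n}/N}$ is a rotation by $\pi/N$ and maps $|+_N\rangle$ to $|-_N\rangle$. Note that whilst the Fock-grid distance $N$ grows with $N$, the phase-space separation $\pi/N$ shrinks. Therefore, whilst increasing $N$ is advantageous for the resilience of codewords to loss and gain errors, it is detrimental to their capacity to tolerate dephasing errors.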

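Binomial codes are so called due to the binomial-coefficient weighting of their Fock-state amplitudes. In particular,

$$|0_{N,K}\rangle = \frac{1}{\sqrt{2^{K-1}}}\sum_{k\ \mathrm{even}}^{K}\sqrt{\binom{K}{k}}\,|kN\rangle, \qquad |1_{N,K}\rangle = \frac{1}{\sqrt{2^{K-1}}}\sum_{k\ \mathrm{odd}}^{K}\sqrt{\binom{K}{k}}\,|kN\rangle, \tag{5}$$

where the parameter $K$ relates to the number of loss and dephasing errors that are correctable by the code, as detailed in [24].

In the following we will omit the subscript $N$ in our notation and simply use $|\psi\rangle$ to denote an RSB qubit encoded in the state $|\psi_N\rangle$. When referring to ‘logical’ operations and states, we will mean operations and states at the level of the surface code.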
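As a quick illustration of Eq. (5), the following sketch builds binomial codewords in a truncated Fock space and evaluates their mean photon number. The function names are hypothetical and the code is ours. For the two examples checked ($N=2, K=2$ and $N=3, K=4$), the mean photon number of each codeword equals $NK/2$. This is why varying $K$ at fixed $N$ is a convenient way to scan the mean photon number.

```python
# Sketch with hypothetical helper names, not the authors' implementation.
import numpy as np
from math import comb

def binomial_codewords(N, K):
    """Eq. (5): |0_{N,K}> on even multiples kN, |1_{N,K}> on odd multiples kN."""
    if K < 1:
        raise ValueError("K must be at least 1")
    dim = N * K + 1                       # highest occupied Fock state is |K N>
    zero, one = np.zeros(dim), np.zeros(dim)
    for k in range(K + 1):
        amp = np.sqrt(comb(K, k) / 2 ** (K - 1))
        if k % 2 == 0:
            zero[k * N] = amp
        else:
            one[k * N] = amp
    return zero, one

def mean_photon_number(psi):
    """<n> for a real or complex Fock-basis state vector."""
    n = np.arange(len(psi))
    return float(np.sum(n * np.abs(psi) ** 2))

zero, one = binomial_codewords(N=2, K=2)
plus = (zero + one) / np.sqrt(2)          # dual codeword, Eq. (4)
means = {(N, K): [mean_photon_number(c) for c in binomial_codewords(N, K)]
         for N, K in [(2, 2), (3, 4)]}
```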

We study a quantum computing scheme which requires the preparation of RSB $|+\rangle$ states, destructive X-basis measurement, and an RSB CZ gate. These three elements are sufficient to enact all Clifford gates [25]. Universality may be achieved for this scheme by injecting magic states, but non-trivial gates are beyond the scope of this work.

II.1.1 RSB Gate

As discussed above, the only logical gate we need to enact on RSB encoded qubits in our computational scheme is the controlled-phase (CZ) gate. It may be realised by a controlled rotation (CROT) at the physical level, generated by a cross-Kerr interaction between the modes [21, 31],

$$\mathrm{CROT}_{NM} = \exp\left(\frac{i\pi}{NM}\,\hat{n}_1\hat{n}_2\right), \tag{6}$$

where modes 1 and 2 encode RSB qubits with discrete rotational symmetry of order $N$ and $M$, respectively. Loss is the dominant imperfection in our model, and if the cross-Kerr gate is slow the effect of loss is exacerbated. Both the strength of the loss and the time required for the gate are captured by the dimensionless parameter $\kappa t$, where $\kappa$ is the photon loss rate and $t$ the gate time, which we use to characterise the noise level. The gate time is chosen such that $\chi t = \pi/(NM)$, where $\chi$ is the cross-Kerr nonlinearity; thus $\kappa t = \pi\kappa/(\chi NM)$ is proportional to the photon loss rate and inversely proportional to the cross-Kerr nonlinearity. In all that follows we only consider CROT gates between identical encodings, so $N = M$.
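The CZ action of Eq. (6) on codeword support, and the resulting loss parameter, can be checked with a few lines. In this sketch the names are hypothetical. As in the text, we assume a gate time fixed by $\chi t = \pi/(NM)$, with $\chi$ the cross-Kerr strength and $\kappa$ the photon loss rate expressed in the same units.

```python
# Sketch with hypothetical helper names; assumes chi * t = pi / (N M) as in the text.
import itertools
import numpy as np

def crot_phase(n1, n2, N, M):
    """Phase that CROT_{NM} = exp(i pi n1 n2 / (N M)) applies to |n1>|n2> (Eq. 6)."""
    return np.exp(1j * np.pi * n1 * n2 / (N * M))

def acts_as_cz(N, M, a_max=6):
    """Codeword support has n1 = a N, n2 = b M; CZ requires the phase (-1)^(a b)."""
    return all(
        np.isclose(crot_phase(a * N, b * M, N, M), (-1) ** (a * b))
        for a, b in itertools.product(range(a_max), repeat=2)
    )

def loss_parameter(kappa, chi, N, M):
    """Hypothetical helper: kappa * t for a gate time fixed by chi * t = pi / (N M)."""
    return kappa * np.pi / (chi * N * M)
```

For example, with $\kappa/\chi = 0.01$ and $N = M = 2$ this gives $\kappa t = \pi/400 \approx 0.0079$.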

Note that low cross-Kerr nonlinearity in practical devices might lead to a different coupling non-linearity being preferred, such as a SNAIL [32]. In this case as well the effect of loss will depend on the per photon loss probability during a gate. So while the details of code performance would differ, we expect qualitatively similar results for such a gate.

The other RSB operation we need is a destructive single qubit X measurement, which we investigate in Sec.III.1.

II.2 Noise Model

A primary noise source plaguing bosonic modes is photon loss. We consider a model in which photon loss occurs during the implementation of each CROT gate.

II.2.1 Photon Loss Noise

To describe the noise due to photon loss, we use the framework of quantum trajectory theory [33] to analyse a master equation describing losses occurring on each qubit during the implementation of the CROT gate. The master equation describing the combined effects of the gate and loss is

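$$\dot{\hat\rho} = -i\left[\hat{H}, \hat\rho\right] + \kappa\left(\mathcal{D}[\hat{a}_1] + \mathcal{D}[\hat{a}_2]\right)\hat\rho, \tag{7}$$

where $\hat{H} = -\chi\hat{n}_1\hat{n}_2$ (with $\hbar = 1$ and $\chi$ the cross-Kerr nonlinearity) generates the CROT gate of Eq. (6) after a gate time $t$ satisfying $\chi t = \pi/(NM)$, $\kappa$ is the photon loss rate, and $\mathcal{D}[\hat{a}]\hat\rho = \hat{a}\hat\rho\hat{a}^\dagger - \frac{1}{2}\{\hat{a}^\dagger\hat{a}, \hat\rho\}$ is the Lindblad superoperator. We will describe the effects of loss using the parameter $\kappa t$.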

In the trajectory approach the density matrix can be written as a sum over the number of photon emission events that occur during the gate. Each term involves an integral over the emission time of the photons. As described in more detail in Appendix A we commute the loss past the CROT gate in each such term so that we are left with an effective error operator acting on each qubit.

We introduce a notation that can be generalised to the case of many modes. Suppose that the times at which losses occur on mode 1 are listed in a vector $\mathbf{t}_1 = (t_{1,1}, \dots, t_{1,k_1})$. We suppose that these emission times are arranged in time order, such that $t_{1,i} < t_{1,j}$ for $i < j$. Similarly, the emission times for mode 2 are $\mathbf{t}_2 = (t_{2,1}, \dots, t_{2,k_2})$. We will sometimes need to refer to the emission times for all modes, and we do this by defining an array of emission-time vectors $\mathbf{T} = (\mathbf{t}_1, \mathbf{t}_2)$. The number of photon emissions $k_j$ for mode $j$ is the number of entries in the vector $\mathbf{t}_j$. The total number of photon emissions is the sum of the $k_j$, so for two modes we have $k = k_1 + k_2$. Then the noisy CROT gate with a fixed number of photon emissions can be expressed as

| (8) |

where $\hat{E}_1$ and $\hat{E}_2$ are error operators acting on qubits 1 and 2, respectively, given by

| (9a) | ||||

| (9b) | ||||

This expression is derived in Appendix A (Eq. A.1). The effective delay parameters appearing in these expressions are given by

| (10) |

The various factors in the error operator for mode 1 have a natural interpretation. The factor $\hat{a}_1^{k_1}$ removes the $k_1$ emitted photons from mode 1. The probability of an event with this number of photon emissions, and the resulting conditioning of the wavefunction, are described by a constant prefactor and a non-unitary operator that depends on $\hat{n}_1$. Finally, because the gate acts while photons are being lost, photon loss events on the neighbouring mode lead to a phase shift on mode 1. This correlated noise process is described by a unitary phase-rotation factor whose angle is set by the effective delay parameters of Eq. (10). As we will see, it has very significant consequences for the performance of error correction.
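The origin of the correlated phase error can be checked directly in a truncated Fock space. The sketch below is our own consistency check under explicit assumptions. It uses a cross-Kerr evolution $e^{i\chi\tau\hat n_1\hat n_2}$ (our sign convention, chosen to reproduce Eq. (6) with $\chi t = \pi/N^2$ for $N=M=2$) and a single photon emitted from mode 2 at time $s$. The non-unitary damping factors are dropped, because they do not alter the phase rotation. The check verifies that a jump in the middle of the gate equals the ideal gate followed by the jump and a rotation $e^{-i\chi(t-s)\hat n_1}$ of mode 1. Photons lost early in the gate therefore produce the largest phase kick on the neighbour.

```python
# Sketch under stated assumptions (sign convention, no damping factors); names are ours.
import numpy as np

dim = 8                                        # Fock truncation per mode (assumption)
a = np.diag(np.sqrt(np.arange(1, dim)), k=1)   # annihilation operator
a2 = np.kron(np.eye(dim), a)                   # acts on mode 2
n1 = np.kron(np.arange(dim), np.ones(dim))     # diagonal of n_1 in the product basis
n2 = np.kron(np.ones(dim), np.arange(dim))     # diagonal of n_2

def U(theta):
    """Cross-Kerr evolution exp(i theta n1 n2) with theta = chi * elapsed time."""
    return np.diag(np.exp(1j * theta * n1 * n2))

def rotation(theta):
    """Phase rotation exp(-i theta n1) on mode 1."""
    return np.diag(np.exp(-1j * theta * n1))

chi, t, s = 1.0, np.pi / 4, 0.2                # chi * t = pi / 4, i.e. N = M = 2
jump_during_gate = U(chi * (t - s)) @ a2 @ U(chi * s)
gate_then_error = a2 @ rotation(chi * (t - s)) @ U(chi * t)
```

The identity holds exactly even in the truncated space, since $\hat a_2$ maps Fock states within the truncation.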

We now turn to the multimode case, where we will need to perform many simultaneous CROT gates. Specifically, the gates to be performed are represented by a graph $G$ whose vertices represent qubits and whose edges represent the locations of the gates. We will also use the notation of neighbourhoods: a qubit $i$ is said to be in the neighbourhood of qubit $j$, $i \in \mathcal{N}(j)$, if qubits $i$ and $j$ share an edge. We consider applying all CROT gates simultaneously. Using the same notation as for the two-qubit case, we can express the net noise operator for qubit $j$ as

| (11) |

Note that mode $j$ acquires a phase shift for each photon emission on any of its neighbouring qubits.
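Extending this to simultaneous gates on a graph, the net phase rotation on each mode is a sum over emissions on its neighbours. The function below is a hypothetical helper that illustrates this bookkeeping and is not taken from the paper's code. It assumes the single-emission rule $e^{-i\chi(t-s)\hat n_j}$ from the two-mode case and identical encodings with $\chi t = \pi/N^2$.

```python
# Sketch with a hypothetical helper; assumes rotation exp(-i chi (t - s) n_j) per emission.
import math

def neighbour_rotation_angles(adjacency, emission_times, chi, t):
    """Rotation angle acquired by each mode from photon emissions on its neighbours.

    adjacency: dict mapping qubit -> list of neighbouring qubits (graph edges).
    emission_times: dict mapping qubit -> list of emission times in [0, t].
    A photon emitted on mode i at time s rotates each neighbour j of i by
    chi * (t - s); rotations on the same mode add.
    """
    angles = {j: 0.0 for j in adjacency}
    for i, times in emission_times.items():
        for j in adjacency[i]:
            angles[j] += sum(chi * (t - s) for s in times)
    return angles

chain = {0: [1], 1: [0, 2], 2: [1]}      # three-qubit 1D cluster
chi, t = 1.0, math.pi / 4                # chi * t = pi / N**2 for N = 2
angles = neighbour_rotation_angles(chain, {1: [0.0, 0.0]}, chi, t)
```

Two photons lost at the very start of the gate on the middle qubit of a three-qubit chain with $N=2$ rotate both neighbours by $\pi/2 = \pi/N$. On the codespace this rotation is a logical $\hat Z_N$ error.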

The overall effect of the noise for a given set of photon emission times is given by the operator

| (12) |

This equation, derived in the Appendix as equation A.1, expresses the noise as an ideal gate followed by a modified photon loss noise operator. We can think of the overall effect of this noise as defining a noise operator that acts on the ideal cluster state as follows

| (13) |

where

| (14) |

and the remaining operator is the ideal gate, i.e. the product of all CROT gates with no photon loss events.

This leaves us to determine how to correctly sample a set of emission times. We show in Appendix A that the probability distribution for photon emission times is unaffected by the application of the CROT gates. This greatly simplifies our simulations, since the photon emission times can be determined independently for each qubit according to a probability distribution that is independent of the particular choice of graph. The details of the sampling of emission times are given in Appendix A.2.
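One simple way to sample emission times is sketched below. It is an illustration under our own assumptions, not the procedure of Appendix A.2. For pure loss at rate $\kappa$, the jump statistics depend only on the photon-number populations $|\psi_n|^2$. One can therefore draw a photon number $n$, give each photon an exponentially distributed lifetime, and keep the lifetimes shorter than the gate time $t$. The function name and its arguments are hypothetical.

```python
# Sketch with a hypothetical function; assumes pure loss at rate kappa (not Appendix A.2 verbatim).
import numpy as np

def sample_emission_times(psi, kappa, t, rng):
    """Sample sorted photon-emission times in [0, t) for one lossy mode.

    Draw a photon number n from |psi_n|^2, give each photon an independent
    exponential lifetime with rate kappa, and keep lifetimes shorter than t.
    """
    probs = np.abs(psi) ** 2
    n = rng.choice(len(psi), p=probs / probs.sum())
    lifetimes = rng.exponential(scale=1.0 / kappa, size=n)
    return np.sort(lifetimes[lifetimes < t])

rng = np.random.default_rng(1)
fock4 = np.zeros(5)
fock4[4] = 1.0                                  # Fock state |4>
kappa, t = 0.1, 1.0
samples = [sample_emission_times(fock4, kappa, t, rng) for _ in range(20000)]
mean_count = np.mean([len(s) for s in samples])
```

Averaged over many draws, the number of emissions from the Fock state $|4\rangle$ approaches $4(1 - e^{-\kappa t})$.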

II.3 Measurement Based Quantum Computation

Measurement Based Quantum Computation (MBQC) [34, 26] is an alternative to the circuit-based model of quantum computing. In MBQC, computation is performed by preparing an entangled many-body resource state upon which single-qubit measurements are performed, realising quantum gates. The resource state, often referred to as a cluster state, is entangled exclusively using CZ gates. The single-qubit measurements on the cluster state effectively consume the qubits and drive the computation.

In this work we will use MBQC to implement the 2D surface code [35]. Whilst the 2D surface code is not fault-tolerant, it provides a simpler setting to explore the implications of realistic noise and measurement on code performance than a full 3D simulation.

In this section we will first describe some preliminary notation used for cluster states, before detailing the construction of 1D cluster states. We will then explain how 1D cluster states can be entangled to realise the 2D surface code as a foliation of the repetition code.

II.3.1 Cluster States

A cluster state can be associated with a graph whose vertices represent qubits. Two vertices share an edge if the two qubits of the cluster state are entangled via a CZ gate. In our model, each vertex of the graph represents an RSB qubit, and vertices share an edge if the qubit pair is entangled via a CROT gate. The cluster state provides the resource for the quantum computation. Computation is carried out by single-qubit measurements, as elaborated upon in the following subsections.

II.3.2 1D Cluster State Description

We begin by constructing a 1D cluster state and using it to teleport a qubit along the line. For a length-$n$ 1D cluster state, we prepare a single qubit in an arbitrary state and the remaining $n-1$ qubits in the $|+\rangle$ state. We then entangle the qubits in a line by applying CZ gates between adjacent qubits. Finally, we measure the first $n-1$ qubits in the X basis, which teleports the logical state from the first qubit down the chain to the final qubit.

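We can understand the 1D cluster state in terms of its stabilisers, as in [36] for example. Initially, the logical operators describing the 1D chain are simply $\bar{X} = X_1$ and $\bar{Z} = Z_1$, and before the gates the stabiliser group is generated by $X_2, \dots, X_n$. Applying CZ gates transforms the single-qubit Pauli operators as

$$X_j \mapsto X_j\prod_{k\in\mathcal{N}(j)}Z_k, \qquad Z_j \mapsto Z_j, \tag{15}$$

where $\mathcal{N}(j)$ denotes all qubits which are entangled with qubit $j$ by a CZ gate. This causes the logicals and stabilisers to transform as

$$\bar{X} = X_1Z_2, \qquad \bar{Z} = Z_1, \qquad S_j = Z_{j-1}X_jZ_{j+1} \quad (j = 2, \dots, n), \tag{16}$$

with $Z_{n+1} \equiv I$. At the conclusion of the measurements the first $n-1$ qubits are left in $X$ eigenstates, and by multiplication with stabilisers we find that the logicals become $\pm X_n$ and $\pm Z_n$ (for odd $n$; for even $n$ the roles of $X_n$ and $Z_n$ are exchanged), reflecting teleportation along the chain.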
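The stabiliser bookkeeping of Eqs. (15) and (16) is easy to reproduce with binary symplectic vectors. The sketch below uses hypothetical helper names and ignores phases and measurement signs. It conjugates Paulis through the CZ chain. It then searches for a product of stabilisers that leaves only $X$ or identity on the measured qubits, which reveals the Pauli that the logical operator becomes on the last qubit. The brute-force search is exponential in $n$ and is meant only for short chains.

```python
# Sketch with hypothetical helper names; Paulis as (x-bits, z-bits), phases ignored.
import itertools
import numpy as np

def pauli(n, x=(), z=()):
    """Pauli on n qubits as binary rows: row 0 holds x-bits, row 1 holds z-bits."""
    p = np.zeros((2, n), dtype=int)
    for q in x:
        p[0, q] = 1
    for q in z:
        p[1, q] = 1
    return p

def apply_cz(p, i, j):
    """Conjugate by CZ_ij as in Eq. (15): X_i -> X_i Z_j and X_j -> Z_i X_j."""
    q = p.copy()
    q[1, j] ^= p[0, i]
    q[1, i] ^= p[0, j]
    return q

def entangle_chain(p):
    """Apply CZ between every pair of neighbouring qubits in a line."""
    for k in range(p.shape[1] - 1):
        p = apply_cz(p, k, k + 1)
    return p

def teleported_logical(n, logical):
    """Pauli on qubit n-1 equivalent (up to sign) to `logical` after X-measuring 0..n-2."""
    stabs = [entangle_chain(pauli(n, x=[k])) for k in range(1, n)]
    op0 = entangle_chain(logical)
    for r in range(len(stabs) + 1):
        for subset in itertools.combinations(stabs, r):
            op = op0.copy()
            for s in subset:
                op ^= s
            if not op[1, : n - 1].any():   # only X or I left on measured qubits
                bits = (int(op[0, n - 1]), int(op[1, n - 1]))
                return {(0, 0): "I", (1, 0): "X", (0, 1): "Z", (1, 1): "Y"}[bits]
    return None
```

For example, `teleported_logical(3, pauli(3, x=[0]))` returns `"X"`, while for $n = 2$ the images of $\bar X$ and $\bar Z$ come back exchanged. This is consistent with each teleportation step applying a Hadamard.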

It is useful to consider how loss at any given location on the chain affects the performance of the scheme. Loss on qubit $j$ propagates through the controlled rotations (CROT gates) to act as dephasing on qubits $j \pm 1$. In our simulations of this teleportation scheme, we assess performance by assuming that the final qubit in the chain has no noise and by applying a noise-free recovery to assign a fidelity to the teleportation procedure.

II.3.3 2D Surface Code

In order to construct the 2D surface code in Fig. 2, we start with several parallel 1D cluster states, prepared as before. We entangle every odd (primal) qubit of each 1D cluster with the corresponding primal qubit of its neighbouring 1D cluster state, via a dual qubit prepared in the $|+\rangle$ state. Every second qubit of the 1D cluster state is also a dual qubit. The resulting cluster state is depicted in Fig. 4. Measuring every qubit in the X basis realises a measurement of the primal stabilisers, each given by a product of $X$ operators on the qubits around a primal plaquette. A primal plaquette stabiliser checks for $Z$-type errors on that plaquette. More explicitly, consider the stabilisers of the cluster state. For a plaquette of the cluster state, the associated stabiliser is $X_aX_bX_cX_d$, where $a$, $b$, $c$, $d$ denote the primal qubits represented by the edges of the given plaquette, as in Fig. 3. To teleport logical information across the 2D foliated state, we measure both primal and dual qubits in the X basis. This also provides the stabiliser outcomes, from which we extract the error syndrome.

Having obtained the error syndrome, error correction can be carried out in the usual way [16, 17]. We use a minimum weight perfect matching (MWPM) algorithm to pair up violated stabilisers in the error syndrome so as to minimise the total distance between paired stabilisers. The paths between violated stabilisers given by MWPM determine which qubits have experienced errors, and therefore give the Pauli correction to which the final logical state will be subjected. After the correction has been applied, the simulation can perform a hypothetical logical measurement that determines whether the correction has succeeded or whether the code has suffered a logical error.
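The simulated model can equivalently be viewed as a foliated 1D repetition code, so a toy version of the matching step can be shown on a single repetition-code round. The sketch below is ours, not the paper's decoder, and the function names are hypothetical. It brute-forces the minimum-weight pairing, which is feasible only for a handful of defects, and it lets each defect match either to another defect or to a boundary. It uses unit edge weights; the weighted decoding mentioned in the abstract would replace these with measurement-dependent weights.

```python
# Sketch with hypothetical names: exhaustive minimum-weight matching, unit edge weights.
from functools import lru_cache

def syndrome_of(error, d):
    """Violated checks c (comparing data qubits c and c+1) for a set of flipped qubits."""
    return [c for c in range(d - 1) if (c in error) != (c + 1 in error)]

def decode_repetition(syndrome, d):
    """Minimum-weight pairing of defects, each matched to another defect or to a boundary.

    Weights are path lengths (one unit per data qubit). Returns the data qubits to flip.
    """
    @lru_cache(maxsize=None)
    def best(remaining):
        if not remaining:
            return 0, frozenset()
        c, rest = remaining[0], remaining[1:]
        sub_cost, sub_flips = best(rest)
        candidates = [
            (c + 1 + sub_cost, frozenset(range(0, c + 1)) ^ sub_flips),      # left boundary
            (d - 1 - c + sub_cost, frozenset(range(c + 1, d)) ^ sub_flips),  # right boundary
        ]
        for k, c2 in enumerate(rest):                                     # pair with c2 > c
            cost, flips = best(rest[:k] + rest[k + 1:])
            candidates.append((c2 - c + cost, frozenset(range(c + 1, c2 + 1)) ^ flips))
        return min(candidates, key=lambda item: item[0])

    return set(best(tuple(sorted(syndrome)))[1])

def logical_failure(error, d):
    """True if the error plus correction flips every data qubit (a logical error)."""
    residual = set(error) ^ decode_repetition(syndrome_of(error, d), d)
    return len(residual) == d
```

For distance $d = 7$, a single flip on qubit 4 is corrected, whereas four adjacent flips starting at the left boundary are "corrected" into a logical error.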

The model we simulate can either be viewed as the foliated 1D repetition code, or equivalently a single 2D timeslice of plaquette stabilizers of the surface code. The noise model we adopt for this scheme includes photon loss occurring during gates and measurement errors on single qubit X measurements, the latter of which arise naturally from the realistic measurement model employed. In the commonly used phenomenological error models for the surface code, both these noise sources map to gate noise rather than phenomenological measurement noise, so that we are still able to observe an error correction threshold for this scheme. Note however that there is only a single logical operator that can be corrected. Therefore while it is possible to learn a lot about the interplay between loss and measurement errors in this code concatenation scheme, a larger scale simulation corresponding to a surface code simulation would still be desirable.

III Analysis and Numerics

III.1 Single Qubit Phase Measurement

We require X basis measurements of individual qubits for our quantum computation scheme. This measurement translates to a phase-estimation problem based on measurements of a single bosonic mode. Feasible methods for conducting phase measurements are heterodyne measurement and adaptive homodyne (AHD) measurement [29]. We compare the performance of heterodyne and AHD measurement to an ideal canonical phase measurement which would be the optimal choice of measurement if it could be implemented.

All phase measurements can be described by the general positive operator-valued measure (POVM) [29]

$$\hat{E}(\phi) = \frac{1}{2\pi}\sum_{m,n=0}^{\infty}H_{mn}\,e^{i(m-n)\phi}\,|m\rangle\langle n|, \tag{17}$$

where $\phi$ is the measurement outcome and $H$ is a Hermitian matrix with real positive entries and $H_{nn} = 1$ for all $n$, defined explicitly in Appendix B. The quality of the phase measurement depends on the choice of $H$.

III.1.1 Canonical Phase Measurement

The ideal realisation of a phase measurement of a bosonic mode is simply the projector onto an (unnormalised) phase eigenstate [37]. This measurement model is very hard to implement, however we include it in simulations as a benchmark of the best possible measurement to which we can compare the other schemes implemented. It has POVM elements

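$$\hat{E}_{\mathrm{can}}(\phi) = \frac{1}{2\pi}|\phi\rangle\langle\phi|, \tag{18}$$

where the phase eigenstate is $|\phi\rangle = \sum_{n=0}^{\infty}e^{in\phi}|n\rangle$, corresponding to $H_{mn} = 1$ in Eq. (17).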

III.1.2 Heterodyne Measurement

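Heterodyne measurement involves simultaneous homodyne measurements of orthogonal quadratures of a bosonic mode, at the cost of added noise in both quadratures. Heterodyne measurement projects the mode onto a coherent state $|\alpha\rangle$, from which we obtain the phase as $\phi = \arg\alpha$. The POVM for heterodyne measurement with outcome $\alpha$ is

$$\hat{E}_{\mathrm{het}}(\alpha)\,d^2\alpha = \frac{1}{\pi}|\alpha\rangle\langle\alpha|\,d^2\alpha, \tag{19}$$

with

$$|\alpha\rangle = e^{-|\alpha|^2/2}\sum_{n=0}^{\infty}\frac{\alpha^n}{\sqrt{n!}}|n\rangle. \tag{20}$$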
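The densities defined by Eq. (17) can be evaluated directly for a given code state. The sketch below is ours, and the function names are hypothetical. For the canonical measurement it takes $H_{mn}=1$. For heterodyne detection it uses $H_{mn}=\Gamma(\tfrac{m+n}{2}+1)/\sqrt{m!\,n!}$, which we obtain by integrating Eq. (19) over $|\alpha|$; we expect this to agree with Appendix B up to notation, but we have not checked it against that appendix. The AHD (Mark II) matrix is not reproduced. The test state is the binomial $|+\rangle$ with $N=K=2$, i.e. $\tfrac12|0\rangle + \tfrac{1}{\sqrt2}|2\rangle + \tfrac12|4\rangle$.

```python
# Sketch with hypothetical names; the heterodyne H is our own derivation from Eq. (19).
import numpy as np
from math import lgamma

def h_canonical(dim):
    """Canonical phase measurement, Eq. (18): H_mn = 1."""
    return np.ones((dim, dim))

def h_heterodyne(dim):
    """Phase marginal of Eq. (19): H_mn = Gamma((m+n)/2 + 1) / sqrt(m! n!)."""
    H = np.empty((dim, dim))
    for m in range(dim):
        for n in range(dim):
            H[m, n] = np.exp(lgamma((m + n) / 2 + 1) - 0.5 * (lgamma(m + 1) + lgamma(n + 1)))
    return H

def phase_density(psi, H, phis):
    """P(phi) = <psi|E(phi)|psi> for the POVM of Eq. (17), at each angle in phis."""
    n = np.arange(len(psi))
    f = psi[None, :] * np.exp(-1j * np.outer(phis, n))   # f[k, n] = psi_n exp(-i n phi_k)
    return np.real(np.einsum("km,mn,kn->k", f.conj(), H, f)) / (2 * np.pi)

plus = np.array([0.5, 0.0, np.sqrt(0.5), 0.0, 0.5])   # binomial |+> for N = 2, K = 2
phis = 2 * np.pi * (np.arange(4096) + 0.5) / 4096
p_can = phase_density(plus, h_canonical(5), phis)
p_het = phase_density(plus, h_heterodyne(5), phis)
```

Both densities integrate to one. The heterodyne distribution is broader, with a lower peak than the canonical one, which reflects the extra noise discussed above.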

III.1.3 Adaptive Homodyne Measurement

Adaptive homodyne (AHD) measurement [29] is a better-performing alternative to heterodyne measurement. Ordinary homodyne measurement measures a single quadrature of the harmonic oscillator mode. It has lower noise than heterodyne measurement but cannot determine the phase of the bosonic mode. In AHD measurement, however, the phase of the local oscillator field is continuously updated based on prior measurement results. This rotates the quadrature being measured, and the scheme is designed to lock onto the phase of the field. Various adaptive schemes are possible; we use the Mark II scheme from [29]. The AHD POVM elements are determined by a specific choice of the matrix $H$ that appears in Eq. 17, which is defined in Appendix B.

III.1.4 Measurement Error

In our scheme, phase measurements are used to implement X-basis measurements of an RSB code. Since $|+_N\rangle$ and $|-_N\rangle$ are separated by an angle $\pi/N$, the phase measurement must be able to resolve angles smaller than $\pi/N$ in order to distinguish the qubit states. Thus, for a fixed phase measurement, it becomes harder to perform a qubit measurement as $N$ increases. Neither AHD nor heterodyne measurements provide ideal projective qubit measurements. Both have an inherent measurement error that depends on the mean photon number, in addition to the requirement to resolve angles of order $\pi/N$.

Since in this setting we only require the ability to distinguish the two qubit $X$-eigenstates based on a phase measurement, we use a Qubit State Inference (QSI) procedure to achieve this. If the inferred value of the qubit state differs from the ‘actual’ state of the qubit determined by our simulation, then that constitutes a qubit measurement error. As we explain in greater detail below, this QSI procedure can be chosen to partially compensate for the phase errors that, as we have seen, occur in correlation with photon losses on neighbouring qubits.

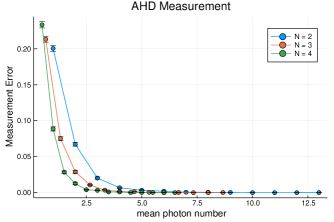

In Figs. 5 and 6, the measurement error rate as a function of mean photon number is generated numerically. We set the loss parameter to zero in order to decouple the measurement error from the noise due to photon loss. We then simulate many shots of qubit measurement and calculate the fraction of shots in which the logical state given by the Qubit State Inference (see Sec. III.2) differs from the actual answer found according to Eq. 39. By varying $K$ for fixed $N$, we vary the mean photon number.

Fig. 5 shows the measurement error as a function of mean photon number for heterodyne measurement, for binomial codes of different $N$. We see that, for a given $K$, codes of higher $N$ have a higher mean photon number.

Fig. 6 shows the measurement error as a function of mean photon number for AHD measurement. As expected, the overall measurement error is much lower than in the heterodyne case; the measurement error is also more consistent across values of $N$. As we will see in Sec. III.6, this has a significant impact on threshold values.

It is important to note that the ‘measurement error’ we are referring to is measurement error at the level of the bosonic mode. This manifests as Pauli errors of the binomial code qubits, rather than as errors in the binomial code single qubit measurement outcomes. Correcting binomial code qubit measurement outcomes would require multiple measurement rounds. Measurement error in our context contributes to Pauli noise independently from noise due to photon loss.

III.2 Qubit State Inference

Once we have obtained the phase of an RSB encoded qubit, we must infer how the phase of the bosonic mode maps onto an X-basis qubit measurement outcome. In this section, we quantify the performance of such qubit state inference (QSI) techniques in terms of the fidelity of teleportation along a 3-qubit 1D cluster state, as described in Sec. II.3.2, using heterodyne measurement for the phase measurement.

III.2.1 Binning Algorithm

The binning algorithm utilises the discrete rotational symmetry of RSB codes to straightforwardly bin the phase measurement in the complex plane according to the position of the logical plus and minus states in phase space. Fig. 7 below represents a binning algorithm QSI for an RSB code.
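A minimal version of the binning rule, together with the loss-free measurement error it implies, can be sketched as follows. The sketch assumes an ideal canonical phase measurement rather than heterodyne or AHD, uses the binomial code with $N=K=2$, and has hypothetical function names. A phase $\phi$ is assigned to $|+\rangle$ if it is closest to an even multiple of $\pi/N$, and to $|-\rangle$ if it is closest to an odd multiple. The error probability is obtained by integrating the phase density over the wrong bins on a fine grid, instead of by sampling shots.

```python
# Sketch with hypothetical names; canonical phase measurement and no loss are assumed.
import numpy as np

def binning_qsi(phis, N):
    """+1 (|+>) if phi is nearest an even multiple of pi/N, -1 (|->) if nearest an odd one."""
    j = np.floor(np.asarray(phis) * N / np.pi + 0.5).astype(int)
    return np.where(j % 2 == 0, 1, -1)

def canonical_density(psi, phis):
    """Canonical phase distribution |sum_n psi_n exp(-i n phi)|^2 / (2 pi)."""
    n = np.arange(len(psi))
    amp = np.exp(-1j * np.outer(phis, n)) @ psi
    return np.abs(amp) ** 2 / (2 * np.pi)

def binning_error(psi, expected, N, grid=4096):
    """Probability that binning disagrees with `expected` (+1 or -1)."""
    phis = 2 * np.pi * (np.arange(grid) + 0.5) / grid
    wrong = binning_qsi(phis, N) != expected
    return float(np.sum(canonical_density(psi, phis)[wrong]) * 2 * np.pi / grid)

plus = np.array([0.5, 0.0, np.sqrt(0.5), 0.0, 0.5])     # binomial |+>, N = K = 2
minus = np.array([0.5, 0.0, -np.sqrt(0.5), 0.0, 0.5])   # binomial |->
err_plus = binning_error(plus, +1, N=2)
err_minus = binning_error(minus, -1, N=2)
```

For this state the result is close to $\tfrac12 - \sqrt{2}/\pi \approx 0.050$. That value follows from integrating the canonical density $\tfrac{1}{2\pi}(\cos 2\phi + \tfrac{1}{\sqrt2})^2$ analytically over the wrong bins.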

III.2.2 Maximum Likelihood QSI

For a three-qubit 1D cluster state, the maximum likelihood QSI uses the phase measurement outcomes of the first two qubits to infer the states of these qubits simultaneously. It takes the full noise channel into account for each qubit, and is therefore the most accurate of the QSI techniques we examine. The maximum likelihood QSI works by calculating the probability of the two qubits being in states $x_1, x_2$ given their phase measurement outcomes, and choosing the $x_1, x_2$ that maximise this probability.

To design our QSI techniques, we analyse their performance relative to a simplified noise model in which the photon loss occurs before the CROT gates. The loss may then be commuted past the CROT gates, where it spreads to dephasing noise on adjacent qubits. This contrasts with how we model the noise in our full simulations, where loss occurs during the CROT gates, as described in Sec. II.2. We use the simpler noise model to design our QSI techniques so that they are tractable to implement and analyse numerically. Consequently, for the QSI techniques we model photon loss using Kraus operators for photon loss of order $k$,

$$\hat{A}_k = \sqrt{\frac{\gamma^k}{k!}}\,(1-\gamma)^{\hat{n}/2}\,\hat{a}^k. \tag{21}$$

Here the parameter $\gamma$ describes the loss probability for each photon. It corresponds to $1 - e^{-\kappa t}$, with $\kappa$ the photon loss rate and $t$ the gate time, in the full model discussed previously, in which photon losses can occur at any time during the gate. The dephasing operators acting on qubit $j$, obtained by commuting the CROT gate past the order-$k_i$ loss Kraus operator on a neighbouring qubit $i$, are

$$\hat{D}_j(k_i) = \exp\left(-\frac{i\pi k_i}{N_iN_j}\,\hat{n}_j\right). \tag{22}$$
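Eqs. (21) and (22) can be checked numerically in a small truncated Fock space. The sketch below is our own, uses hypothetical names, and follows the sign convention of Eq. (6). It confirms three things. First, the Kraus operators form a complete set. Second, loss before a CROT gate is equivalent to the gate followed by the same loss and a rotation of the neighbour. Third, losing $N$ photons on a neighbour acts on the codespace like the logical $\hat Z_N$.

```python
# Sketch with hypothetical names; sign convention follows CROT = exp(i pi n1 n2 / N^2).
import numpy as np
from math import factorial

def loss_kraus(k, gamma, dim):
    """Eq. (21): A_k = sqrt(gamma^k / k!) (1 - gamma)^(n/2) a^k on a truncated mode."""
    a = np.diag(np.sqrt(np.arange(1, dim)), k=1)
    damping = np.diag((1 - gamma) ** (np.arange(dim) / 2))
    return np.sqrt(gamma ** k / factorial(k)) * damping @ np.linalg.matrix_power(a, k)

def neighbour_dephasing(k, N, M, dim):
    """Eq. (22): exp(-i pi k n / (N M)) on a mode whose CROT partner lost k photons."""
    return np.diag(np.exp(-1j * np.pi * k * np.arange(dim) / (N * M)))

dim, gamma, N = 6, 0.2, 2
completeness = sum(loss_kraus(k, gamma, dim).conj().T @ loss_kraus(k, gamma, dim)
                   for k in range(dim))

# Loss of k photons on mode 2 before the gate equals the gate followed by the
# same loss on mode 2 and a rotation of mode 1.
n1 = np.kron(np.arange(dim), np.ones(dim))
n2 = np.kron(np.ones(dim), np.arange(dim))
crot = np.diag(np.exp(1j * np.pi * n1 * n2 / N ** 2))
k = 1
before = crot @ np.kron(np.eye(dim), loss_kraus(k, gamma, dim))
after = np.kron(neighbour_dephasing(k, N, N, dim), loss_kraus(k, gamma, dim)) @ crot

# On codeword support (multiples of N), losing N neighbour photons acts like Z_N.
Z_N = np.diag(np.exp(1j * np.pi * np.arange(dim) / N))
same_on_codespace = np.allclose(neighbour_dephasing(N, N, N, dim)[::N, ::N], Z_N[::N, ::N])
```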

Suppose that the first two qubits in the cluster have measurement outcomes $\phi_1, \phi_2$, and we aim to infer the qubit states $x_1, x_2$ in order to obtain the Pauli correction we need to apply to qubit three. We define the likelihood function $L(x_1, x_2) = P(\phi_1, \phi_2 \mid x_1, x_2)$, the conditional probability of the phase measurement outcomes given the initial qubit states.

Each qubit of the cluster is subject to noise due to its own photon loss, as well as dephasing from the photon loss occurring on neighbouring qubits. Therefore

| (23) |

Explicitly, maximum likelihood QSI chooses $(\hat{x}_1, \hat{x}_2)$, giving

$$(\hat{x}_1, \hat{x}_2) = \operatorname*{arg\,max}_{x_1, x_2 \in \{+,-\}} L(x_1, x_2). \tag{24}$$

For schemes with more qubits the exact maximum likelihood QSI can be formulated in the same way. However it becomes intractable as the number of qubits in the cluster state is increased. For this reason we consider an approximation that can be performed efficiently.
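To see why exact maximum likelihood QSI becomes intractable, note that Eq. (24) generalises to an arg max over all $2^n$ joint assignments. The sketch below enumerates these assignments for an arbitrary likelihood callable. The toy likelihood is hypothetical and only serves to count evaluations.

```python
# Sketch with hypothetical names; the toy likelihood is for illustration only.
import itertools

def max_likelihood_qsi(likelihood, n_qubits):
    """Exhaustive generalisation of Eq. (24): try all 2**n_qubits X-basis assignments."""
    best, best_value = None, float("-inf")
    for x in itertools.product((+1, -1), repeat=n_qubits):
        value = likelihood(x)
        if value > best_value:
            best, best_value = x, value
    return best

target = (+1, -1, +1)
calls = []

def toy_likelihood(x):
    """Hypothetical likelihood peaked at `target`; records every evaluation."""
    calls.append(x)
    return -sum(xi != ti for xi, ti in zip(x, target))

estimate = max_likelihood_qsi(toy_likelihood, 3)
```

The number of likelihood evaluations doubles with every added qubit, which motivates the local approximation below.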

III.2.3 Local Maximum Likelihood QSI

We define a local maximum likelihood QSI that uses a local approximation of the noise channel acting on any given qubit to obtain an efficient approximation for the likelihood function.

The form of the likelihood function $L_j(x_j \mid \phi_j)$ for qubit $j$ being in state $x_j$, conditioned upon its phase measurement outcome $\phi_j$, depends upon the number of qubits to which the qubit in question is entangled. Suppose that the qubits entangled to qubit $j$ are denoted by $\mathcal{N}(j) = \{j_1, \dots, j_d\}$. Let the state of qubit $j$ be $x_j$, and let the states of all the neighbouring qubits be indicated by $\mathbf{x}_{\mathcal{N}(j)}$. Let $\mathcal{K}$ denote the set containing all vectors $\mathbf{k}$ of length $d$ whose entries $k_i$ indicate the number of photon emissions on mode $j_i$. Let

| (25) |

and

| (26) |

where the a priori photon emission probabilities are

| (27) |

and

| (28) |

Then we define the approximate likelihood function to be

| (29) |

where we truncate the two sums over numbers of photon losses at a finite maximum for numerical tractability. Given $\phi_j$, the local maximum likelihood QSI works by choosing

$$\hat{x}_j = \operatorname*{arg\,max}_{x_j \in \{+,-\}} L_j(x_j \mid \phi_j). \tag{30}$$
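A much simplified stand-in for the local likelihood of Eqs. (25)-(29) is sketched below; it is not the paper's expression. It assumes an ideal canonical phase measurement and ignores loss on the measured mode itself. Each neighbour is treated as a binomial code state, so its lost-photon count follows from its photon-number distribution. Each lost neighbour photon shifts the phase by $\pi/N^2$, as in Eq. (22). The neighbour loss counts are combined by convolution and truncated at `k_max`, mirroring the truncation described above. All names are hypothetical.

```python
# Simplified sketch with hypothetical names; not the paper's Eqs. (25)-(29).
import numpy as np
from math import comb

def binomial_plus_minus(N, K):
    """Binomial |+> and |-> (Eqs. 4-5): amplitudes sqrt(C(K,k)/2^K) on |kN>."""
    plus = np.zeros(N * K + 1)
    for k in range(K + 1):
        plus[k * N] = np.sqrt(comb(K, k) / 2 ** K)
    minus = plus.copy()
    minus[N::2 * N] *= -1                 # odd multiples of N belong to |1>
    return plus, minus

def canonical_density(psi, phis):
    """Canonical phase distribution of psi at each angle in phis."""
    n = np.arange(len(psi))
    return np.abs(np.exp(-1j * np.outer(phis, n)) @ psi) ** 2 / (2 * np.pi)

def loss_count_prior(psi, gamma):
    """P(k photons lost) for per-photon loss probability gamma (same for |+> and |->)."""
    pn = np.abs(psi) ** 2
    return np.array([sum(pn[n] * comb(n, k) * gamma ** k * (1 - gamma) ** (n - k)
                         for n in range(k, len(psi))) for k in range(len(psi))])

def local_ml_qsi(phi, N, K, gamma, degree, k_max=6):
    """Decide +1/-1 for one qubit with `degree` neighbours; own-mode loss is ignored."""
    plus, minus = binomial_plus_minus(N, K)
    prior = np.array([1.0])
    for _ in range(degree):               # neighbours lose photons independently
        prior = np.convolve(prior, loss_count_prior(plus, gamma))
    prior = prior[: k_max + 1]            # truncate the number of neighbour losses
    shifts = np.arange(len(prior)) * np.pi / N ** 2
    like = {x: float(np.sum(prior * canonical_density(psi, phi + shifts)))
            for x, psi in ((+1, plus), (-1, minus))}
    return max(like, key=like.get), like
```

The design choice here is to average the phase density over the unknown neighbour loss counts rather than to condition on the neighbours' inferred states. This keeps the cost linear in the number of neighbours at the price of ignoring correlations between qubits.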

III.3 1D Performance Metric

Having used a QSI procedure to determine the states of the qubits in the chain up to the final qubit $n$, we apply a Pauli operation that depends on these outcomes. In the ideal case, applying this recovery operator to the final qubit returns the original logical state on that qubit. We can write the effect of both the QSI procedure and the recovery operation as a CPTP map $\mathcal{R}_{\boldsymbol{\phi}}$, where $\boldsymbol{\phi}$ is the set of all measurement outcomes.

The quantum channel representing the overall teleportation operation, including encoding into a linear cluster state chain, photon loss, phase measurement QSI and recovery is

| (31) |

where

and

The quantum channel $\mathcal{E}$ acts on an initial qubit state and maps it to an output qubit state encoded in the final RSB qubit of the cluster. In Eq. (31), the noise channel is the one commuted past the CROT gates, as defined in Eq. 13, and the remaining operators are the phase measurement operators.

We will use the entanglement fidelity as the performance metric to quantify the performance of the 1D cluster state. We follow the standard definition of entanglement fidelity [39] of the quantum channel by supposing that our system of interest , in our case the final qubit of the cluster state, is coupled to an ancilla qubit in a reservoir and letting

| (32) |

where denotes the identity channel on the ancilla qubit. may be any maximally entangled state but we choose .
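For a channel available as a set of Kraus operators, Eq. 32 can be evaluated directly. The Python sketch below takes the system to be a single qubit and, as an assumption, uses the Bell state (|00⟩ + |11⟩)/√2 as the maximally entangled state. The bit-flip channel in the usage lines is a toy channel, not the teleportation channel of Eq. 31.

```python
import numpy as np


def entanglement_fidelity(kraus_ops):
    """F_e = <Phi| (id_R ⊗ E)(|Phi><Phi|) |Phi> for a single-qubit channel E.

    |Phi> = (|00> + |11>)/sqrt(2); the first tensor factor is the ancilla R and
    the second is the system Q on which the Kraus operators act.
    """
    phi = np.array([1, 0, 0, 1], dtype=complex) / np.sqrt(2)
    rho = np.outer(phi, phi.conj())
    out = np.zeros((4, 4), dtype=complex)
    for K in kraus_ops:
        K_full = np.kron(np.eye(2), K)      # identity on the ancilla
        out += K_full @ rho @ K_full.conj().T
    return float(np.real(phi.conj() @ out @ phi))


p = 0.1
X = np.array([[0, 1], [1, 0]], dtype=complex)
bit_flip = [np.sqrt(1 - p) * np.eye(2), np.sqrt(p) * X]
print(entanglement_fidelity(bit_flip))  # approximately 1 - p for this channel
```

For a qubit channel the same quantity can also be written as the sum of |Tr K|² over the Kraus operators divided by 4, which gives a quick consistency check.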

III.4 1D Cluster State Results

III.4.1 Numerical Performance of Phase Measurements

We performed simulations of the minimal instance of the binomial encoded 1D cluster state scheme with a 3-qubit cluster state. Fig. 8 quantifies the performance of the different measurement schemes. We see that both canonical phase and AHD measurement schemes significantly outperform heterodyne. Furthermore, there does not appear to be a notable difference between the performance of AHD and canonical phase measurements, which indicates that AHD is a good alternative to the optimal canonical phase measurement. Note that the nonzero infidelity at zero loss is due to the inherent measurement error described in Sec. III.1.4: even in the absence of photon loss, the finite mean photon number of the binomial encoded qubits means that measurement error leads to qubit-level errors.

III.4.2 Qubit State Inference Comparison

We now examine a numerical comparison of the performance of the binning algorithm QSI, maximum likelihood QSI and local maximum likelihood QSI for the three qubit cluster state telecorrection scheme. These qubit state inference techniques are described in detail in Sec. III.2.

Fig. 9 shows the performance of the three QSI techniques for a 3-qubit cluster state with binomial code qubits, as a function of the loss parameter. As expected, for no loss the QSI techniques converge on the same value of infidelity. However, as the loss is increased, the binning algorithm performs significantly worse than both the maximum likelihood and local maximum likelihood QSI techniques. Notably, the performance of maximum likelihood and local maximum likelihood is statistically identical here, indicating that the local maximum likelihood QSI is a good replacement for the full maximum likelihood QSI.

Together these results indicate that adaptive phase measurement combined with a local maximum likelihood QSI is a considerable improvement on the straightforward heterodyne and binning approach to qubit measurement for the binomial code.

III.5 2D Surface Code Methods

To enable simulations of the full 2D surface code we need to introduce some additional methods. In the following we will discuss the twirling methods that we used in order to have tractable simulations as well as the details of the surface code decoder that we implemented.

III.5.1 X-Basis Pauli Twirl

For the purpose of numerical tractability when simulating these larger systems, we introduce an X-basis Pauli twirl for the RSB qubits. We show in Appendix C that in the limit of no photon loss the twirl has no effect on the measurement statistics, so that this approximation becomes exact. For non-zero photon loss the twirled noise model is only approximately the same as the physical photon loss model. By removing certain coherences in the photon loss error model, the twirl makes simulations of the planar code tractable enough to locate its threshold. We do not expect that the twirl qualitatively changes our results. Avoiding it would require much more expensive simulations, for example based on tensor networks [40].

We define the twirl as a mapping applied to each qubit of the RSB code cluster state. The X-basis Pauli twirl acting on a state is given by

| (33) |

Note that while the twirl defined here affects qubit , the density matrix describes the state of the whole set of qubits, not all of which are affected by the twirl. The operator acts on the qubits that are not affected by the twirl.
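As an illustration only, the Python sketch below assumes that Eq. 33 takes the standard form of a Pauli twirl that averages the state with its conjugate under the logical X operator of the twirled qubit j, i.e. (ρ + X_j ρ X_j)/2, with each RSB qubit idealised as a two-level system. Under that assumption the twirl removes coherences between the logical plus and minus states of qubit j while leaving the other qubits untouched.

```python
import numpy as np

X = np.array([[0, 1], [1, 0]], dtype=complex)
I2 = np.eye(2, dtype=complex)


def x_twirl(rho: np.ndarray, j: int, n: int) -> np.ndarray:
    """Assumed X-basis twirl (rho + X_j rho X_j) / 2 on qubit j of n qubits.

    Qubit 0 is the leftmost tensor factor.
    """
    X_j = np.array([[1.0 + 0j]])
    for k in range(n):
        X_j = np.kron(X_j, X if k == j else I2)
    return 0.5 * (rho + X_j @ rho @ X_j)


plus = np.array([1, 1], dtype=complex) / np.sqrt(2)
minus = np.array([1, -1], dtype=complex) / np.sqrt(2)
zero = np.array([1, 0], dtype=complex)

# |0> = (|+> + |->)/sqrt(2), so removing its X-basis coherence leaves I/2.
twirled = x_twirl(np.outer(zero, zero.conj()), j=0, n=1)
print(np.round(twirled.real, 3))
```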

Consider the first measurement to be performed on qubit given times and locations of the photon emissions labelled by . The state of the remaining qubits for a given phase measurement outcome will be

| (34) |

The norm of this state relates to the probability of the measurement outcome, and in fact we will only be interested in integrals over values of that map to a given outcome for qubit .

In our simulations we replace these states with states where an X-basis twirl is applied prior to the measurement:

| (35) |

To see why this replacement could be justified, let denote the POVM elements of the noise-plus-measurement-plus-QSI process that is applied to the ideal RSB code qubits and aggregates the phase measurements into the outcomes for the measurement. The application of the Pauli twirl to this encoded ideal initial state is justified so long as is diagonal in the X-basis of the RSB code qubit since in this case . For the case that we provide a proof that in the case of the binning QSI in Appendix C.

Note that this replacement works only when, as here, we are interested in an averaged quantity for the schemes, such as the average entanglement fidelity of a logical qubit channel. If we instead analysed the data conditioned on the phase measurements in a more fine-grained way, this twirling approximation would not necessarily be justified.

This twirl dramatically simplifies our numerical implementation. Suppose that the ideal initial cluster state of the scheme is . So in particular is in the codespace of the RSB code. Consider measuring qubit of the cluster as before. Then measuring qubit of an qubit cluster state will result in the state

| (36) |

This is a state on qubits. The adjoint noise map on qubit is

| (37) |

where denotes the loss operator acting on qubit with the times and locations of photon emissions given by . The noise map on all qubits other than is

| (38) |

Eq. III.5.1 is simply stating that after the measurement, the remaining cluster state is in a mixture of “qubit was in the logical plus state when measured”, and “qubit was in the logical minus state when measured”, with probabilities

| (39) |

where and the denominator of the above expression can be inferred by the normalisation requirement. Note that to obtain this we have used the fact that which is a readily verified property of the ideal cluster state . While we have focussed here on a single measurement, clearly each successive measurement can be treated in the same way since after the first measurement the states are again ideal cluster states in the RSB code subspace.

In our numerical simulations we sample using these probabilities to determine which state the qubit was in when measured. The Pauli X-basis twirl is implicit in this step of the algorithm. We then compare this outcome to the outcome we get by inferring the qubit state from the phase measurement outcome . A bit flip error is placed on the qubit if the ‘actual’ state of the qubit disagrees with the inferred measurement outcome.
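The sampling step described above can be sketched in a few lines of Python. Here `p_plus` is a hypothetical input standing for the probability from Eq. 39 that the qubit was in the logical plus state when measured, and `inferred` is the ±1 output of whichever QSI is in use.

```python
import random


def sample_bit_flip(p_plus: float, inferred: int, rng: random.Random) -> bool:
    """Sample the 'actual' X-basis state (+1 with probability p_plus, else -1)
    and return True if it disagrees with the QSI's inferred outcome."""
    actual = +1 if rng.random() < p_plus else -1
    return actual != inferred


rng = random.Random(1)
flips = sum(sample_bit_flip(0.8, +1, rng) for _ in range(100_000))
print(flips / 100_000)  # close to 0.2 = 1 - p_plus
```

Keeping the sampled ‘actual’ state separate from the inferred one mirrors the split between information available to the simulation and information available to the decoder.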

III.5.2 Modified MWPM Decoder

We can further optimise the performance of RSB codes in our concatenated model by modifying the standard MWPM decoder. The standard MWPM decoder takes the error syndromes and forms a complete graph, where edge weights between nodes are given by the Manhattan distance, that is, each edge of the lattice is assigned unit weight. A modified MWPM decoder can instead assign edge weights based on phase measurement results. We follow the approach of [41] by utilising the information contained in realistic phase measurements, which is discarded when mapping to a binary X-basis measurement outcome. As in [41], we adopt the concepts of hard and soft measurement. In our model, the phase measurement of the RSB qubit gives the ‘soft’ measurement outcome, which is then processed by a QSI technique and mapped onto an observed ‘hard’ outcome, corresponding to the logical plus and minus states, respectively. Recall from Sec. III.5.1 that after subjecting a qubit of the cluster to the X-basis Pauli twirl, we sample according to Eq. 39 to determine whether the qubit was in the logical plus or minus state when measured. We define the ‘ideal’ hard outcome to be this sampled outcome, which is tracked in our numerical simulation but is not accessible to the decoder.

During the MWPM algorithm, a complete graph is created from the nodes of the syndrome graph, and distances are calculated between all possible node pairs. If, for a given node pair, edge weights of the syndrome graph are calculated using soft measurement outcomes, distances cannot be precomputed and must be evaluated using the modified MWPM algorithm, which therefore has to be run again for each node pair in the complete graph. In contrast, if edge weights for a node pair are given fixed unit weight, the modified MWPM algorithm need only be run a single time. We use a hybrid model in which, for nodes separated by Manhattan distances greater than a cutoff, edge weights are calculated using observed hard measurement outcomes, whilst for node pairs within the cutoff, edge weights are calculated using soft measurement outcomes. This exploits soft information for a more accurate syndrome decoder whilst avoiding the long runtime of exhaustively calculating shortest paths between every possible node pair.
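The hybrid distance computation can be sketched in Python under several assumptions not fixed by the text: syndrome nodes live on a rectangular grid, per-edge soft weights are supplied in a dictionary `edge_w`, the shortest-path routine is Dijkstra's algorithm (our choice for illustration), and all edges weighted from hard outcomes share one weight `w_hard`, so their path length reduces to `w_hard` times the Manhattan distance. The parameter `d_max` plays the role of the Manhattan-distance cutoff; all function names are hypothetical.

```python
import heapq
from itertools import combinations


def grid_edges(rows, cols, w=1.0):
    """All nearest-neighbour edges of a rows x cols grid, keyed by frozenset({u, v})."""
    edges = {}
    for r in range(rows):
        for c in range(cols):
            if r + 1 < rows:
                edges[frozenset({(r, c), (r + 1, c)})] = w
            if c + 1 < cols:
                edges[frozenset({(r, c), (r, c + 1)})] = w
    return edges


def dijkstra(source, rows, cols, edge_w):
    """Shortest weighted path lengths from source to every grid node."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, (r, c) = heapq.heappop(heap)
        if d > dist[(r, c)]:
            continue  # stale heap entry
        for v in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= v[0] < rows and 0 <= v[1] < cols:
                nd = d + edge_w[frozenset({(r, c), v})]
                if nd < dist.get(v, float("inf")):
                    dist[v] = nd
                    heapq.heappush(heap, (nd, v))
    return dist


def hybrid_distances(nodes, rows, cols, edge_w, w_hard, d_max):
    """Pairwise node distances for the complete graph passed to MWPM."""
    cache, out = {}, {}
    for a, b in combinations(nodes, 2):
        manhattan = abs(a[0] - b[0]) + abs(a[1] - b[1])
        if manhattan <= d_max:            # nearby pair: soft weights
            if a not in cache:
                cache[a] = dijkstra(a, rows, cols, edge_w)
            out[(a, b)] = cache[a][b]
        else:                             # distant pair: uniform hard weight
            out[(a, b)] = w_hard * manhattan
    return out


edges = grid_edges(3, 3)
edges[frozenset({(0, 0), (0, 1)})] = 0.2  # an edge the soft data marks as likely flipped
dists = hybrid_distances([(0, 0), (0, 2), (2, 2)], 3, 3, edges, w_hard=1.5, d_max=2)
print(dists)
```

Caching one shortest-path tree per source node means each nearby source is explored once, which is where the runtime saving of the cutoff comes from.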

Let the soft outcome observed be , the probability distribution function of which, conditioned on the ideal hard outcome , is . For the maximum likelihood and local maximum likelihood QSI techniques, this distribution is given by Eqs. 23 and 29, respectively. The soft outcome is then mapped onto the observed hard outcome according to the hardening map

| (40) |

The QSI technique we choose gives the explicit form of the hardening map. In our 2D surface code simulations, the two QSI techniques we employ are the binning algorithm QSI and local maximum likelihood QSI. The full maximum likelihood QSI would be too computationally expensive.

We say that a bit flip occurs on the qubit if the observed hard outcome disagrees with the ideal hard outcome. Again, this information is accessible only to the simulation and not to the decoder.

As mentioned, a standard MWPM decoder assigns each edge of the surface code lattice unit weight. This is equivalent to assuming that each qubit is equally likely to have experienced an error. However, this assumption is not accurate in general, and the information contained in the soft measurement outcomes can be used to weight the qubits (edges) according to the probability that the observed hard outcome disagrees with the ideal hard outcome, and thus the probability that the qubit experienced an error. We define the likelihood ratio for a given qubit as

| (41) |

where is the soft measurement outcome, is the observed hard measurement outcome and is the ‘other’ value of the hard outcome, i.e. the value that was not observed. Edges for which the soft measurement outcome is used to determine the weight are then weighted according to

| (42) |

whilst edges whose weights are determined by the observed hard measurement outcome are weighted as

| (43) |

where is the physical error rate. In our model, the physical error rate is the effective bit flip rate due to measurement error and photon loss, which we calculate numerically: for a given loss rate, we simulate the code subject to loss and average the number of qubits suffering a bit flip to obtain an effective bit flip rate.
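The weighting scheme can be sketched in Python. Eqs. 40 to 43 are not implemented verbatim here; the sketch assumes the log-likelihood conventions commonly used for soft-information decoding: the hard outcome is the hypothesis with the larger conditional density, the soft edge weight is minus the logarithm of the likelihood ratio, and the hard edge weight is log((1 − p)/p). The function names and the input format of `flip_records` are hypothetical.

```python
import math


def harden(p_plus: float, p_minus: float) -> int:
    """Assumed hardening map: pick the hard outcome with the larger density p(x|b)."""
    return +1 if p_plus >= p_minus else -1


def soft_weight(p_plus: float, p_minus: float) -> float:
    """-log of the likelihood ratio p(x | other) / p(x | observed hard outcome)."""
    if harden(p_plus, p_minus) == +1:
        p_obs, p_other = p_plus, p_minus
    else:
        p_obs, p_other = p_minus, p_plus
    if p_obs == 0:
        raise ValueError("both conditional densities are zero")
    return math.inf if p_other == 0 else -math.log(p_other / p_obs)


def hard_weight(p_phys: float) -> float:
    """Uniform weight log((1 - p)/p) for edges weighted by hard outcomes."""
    return math.log((1 - p_phys) / p_phys)


def effective_flip_rate(flip_records) -> float:
    """Fraction of simulated qubits carrying a bit flip, pooled over shots.

    flip_records: one list of booleans (True = bit flip) per simulated shot.
    """
    total = sum(len(shot) for shot in flip_records)
    return sum(sum(shot) for shot in flip_records) / total


print(soft_weight(0.9, 0.1), hard_weight(0.1))  # both equal log(9) here
```

With these conventions a soft edge whose likelihood ratio equals p/(1 − p) receives exactly the hard weight, so the soft weights refine rather than rescale the unit-weight picture.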

III.5.3 2D Performance Metrics

We quantify the behaviour of the 2D foliated surface code in terms of its threshold value.

The threshold of a code refers to the error rate below which increasing the size of the code decreases the logical error rate. For realistic computations, it will be necessary to operate in the sub-threshold regime. Therefore, having a high threshold is a desirable property as it reflects a code’s capacity to tolerate noise whilst still being able to do computations.

Finally, we note that we benchmark the performance of the binomial code against the trivial Fock space encoding, as well as binomial codes of different orders of discrete rotational symmetry.

III.5.4 Overview of Numerical Model

We now provide a sketch of the numerical model used.

Implementation of noise: As described in Eq. 14 and in Appendix A, the photon emission times for each qubit can be sampled independently according to a probability distribution that ignores the CROT gates. The outcome of this sample is an array describing the times and locations of photon emissions. Given this, the error operator for each qubit is determined, and the overall error operation is simply the product of these single-qubit error operators.
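A per-qubit emission sampler can be sketched in Python under stated assumptions: loss is the only noise process during the gate, at a hypothetical rate `kappa` over a gate time `T`, and emission times are sampled independently per qubit as described above, so that the record depends only on the qubit's photon-number distribution `photon_probs`. For a Fock state with m photons, the waiting time to the next emission is then exponential with rate `kappa * m`.

```python
import random


def sample_emissions(photon_probs, kappa, T, rng):
    """Sample the emission times on one mode during a gate of duration T."""
    n = rng.choices(range(len(photon_probs)), weights=photon_probs)[0]
    t, times = 0.0, []
    while n > 0:
        t += rng.expovariate(kappa * n)  # next emission with n photons remaining
        if t > T:
            break
        times.append(t)
        n -= 1
    return times


# Example input: the maximally mixed code space of the smallest binomial code,
# |0_L> = (|0> + |4>)/sqrt(2), |1_L> = |2>, so P(0) = P(4) = 1/4 and P(2) = 1/2.
probs = [0.25, 0.0, 0.5, 0.0, 0.25]
rng = random.Random(7)
records = [sample_emissions(probs, kappa=0.05, T=1.0, rng=rng) for _ in range(5)]
print(records)
```

Each returned list holds the emission times for one qubit, which is the per-qubit input from which the single-qubit error operators are built.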

Phase measurement: Iterating over the lattice, each qubit is subject to a phase measurement modelled by a rejection sampling algorithm with . Assuming a phase measurement with POVM the probability distribution used in rejection sampling is where the error operator for the qubit being measured is as defined in Eq. 13, and is the maximally mixed state. The initial state of the qubit is taken as the maximally mixed state which is the reduced density matrix of any single qubit in a cluster state, as can be shown by a simple stabilizer argument. This probability distribution corresponds to the -basis twirled phase measurement probability distribution implied by Eq. III.5.1. If the qubit being measured is primal, the measurement outcome is stored in a primal measurement outcome array, and similarly if the qubit is dual.
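Rejection sampling of the phase outcome can be sketched as follows. The sketch assumes only that an unnormalised density on [0, 2π] can be evaluated pointwise, standing in for the POVM-based distribution described above, and that a constant `bound` dominates it. The density `1 + cos(theta)` in the usage lines is a toy choice, not a binomial-code distribution.

```python
import math
import random


def rejection_sample_phase(density, bound, rng):
    """Draw theta in [0, 2*pi] from an unnormalised density via a uniform proposal.

    Requires density(theta) <= bound everywhere; the acceptance rate is
    (integral of density) / (2*pi*bound).
    """
    while True:
        theta = rng.uniform(0.0, 2 * math.pi)
        if rng.uniform(0.0, bound) < density(theta):
            return theta


rng = random.Random(42)
toy = lambda th: 1 + math.cos(th)  # toy density, bounded by 2
samples = [rejection_sample_phase(toy, 2.0, rng) for _ in range(20_000)]
print(sum(math.cos(s) for s in samples) / len(samples))  # near the exact mean 0.5
```

A tighter `bound` raises the acceptance rate; sharply peaked densities at large photon numbers would make a uniform proposal inefficient.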

Single-qubit decoding: The binning algorithm QSI requires no input other than the soft measurement outcome of the qubit in question, and uses the discrete rotational symmetry of RSB codes to bin the measurement outcome in the complex plane, as previously described. The local maximum likelihood QSI uses the (soft) measurement outcome of each qubit, as well as the (hard) inferred measured outcomes of neighbouring qubits to determine the explicit form of the probability distribution to be maximised. Soft measurement outcomes are inferred sequentially across the lattice left to right, top to bottom, so that each qubit has already had measurement outcomes of neighbours ‘above’ it in the lattice inferred to use as input for its own QSI. Once the soft measurement outcome of the qubit has been inferred, for dual qubits we are done. For primal qubits we determine the ‘actual state’ of the qubit as described in Sec. III.5.1. The ‘actual state’ of the primal qubit is compared to the inferred measurement outcome. If they disagree, a bit flip error is placed on that qubit which is represented by a . Otherwise, no error has occurred and the qubit is assigned the value
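The binning step can be sketched in Python. As an assumption about conventions, the sketch takes the logical plus state of an order-N rotation-symmetric code to have its phase peaks at 2πm/N and the logical minus state at (2m + 1)π/N. The measured phase is rounded to the nearest multiple of π/N, and the parity of that multiple gives the outcome. For a heterodyne outcome, the phase is the argument of the complex measurement result, here obtained with `cmath.phase`.

```python
import cmath
import math


def binning_qsi(theta: float, N: int) -> int:
    """Map a measured phase to +1 (logical plus) or -1 (logical minus)."""
    k = round(theta * N / math.pi)  # index of the nearest multiple of pi/N
    return +1 if k % 2 == 0 else -1


alpha = 1.3 * cmath.exp(1j * 1.5)  # hypothetical heterodyne outcome
print(binning_qsi(cmath.phase(alpha), N=2))  # phase 1.5 is nearest pi/2, so -1
```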

Error correction: We begin by measuring logical Z before error correction. We choose one of the smooth boundaries. If an odd number of boundary qubits have errors, the logical Z measurement is , otherwise it is . Error correction proceeds as in Sec. II.3. Stabiliser measurements are modelled by multiplying together, for each plaquette, the stored values of all primal qubits comprising the plaquette. If a plaquette has an odd number of qubits with errors, there will be an odd number of values and so the result of the multiplication will be ; otherwise, it will be . Stabilisers with measurement outcomes form the error syndrome. The BlossomV implementation of the MWPM algorithm is then used to pair up nodes in the error syndrome. As we are modelling the planar code, there can be an odd number of stabiliser outcomes, so syndrome nodes may also be paired with the boundary. If the modified MWPM decoder is used, it calculates the weights between pairs of syndrome nodes fed into MWPM as described in Sec. III.5.2; otherwise, each edge of the lattice is assigned weight one. The paired syndrome nodes are then used to determine whether a logical error has occurred. We pick the same smooth boundary we used for our initial logical Z measurement and, for each pair of syndrome nodes, check whether the path between them crosses this boundary. If an odd number of crossings occur over all pairs, the logical Z measurement is , otherwise it is . We compare the logical Z measurement before correction to this new logical Z measurement. If they disagree, a logical error has occurred.
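The parity bookkeeping in this step can be sketched in Python. The sketch covers only the products of ±1 values and the crossing count; the lattice geometry, the matching itself (performed with BlossomV in the text) and the boundary-crossing test `crosses_boundary` are treated as hypothetical inputs.

```python
from math import prod


def syndrome(plaquettes, values):
    """Indices of plaquettes whose product of primal-qubit values is -1.

    values[q] is +1 (no error) or -1 (bit flip) for each primal qubit q.
    """
    return [i for i, qs in enumerate(plaquettes) if prod(values[q] for q in qs) == -1]


def logical_z(values, boundary_qubits):
    """-1 if an odd number of qubits on the chosen smooth boundary have errors."""
    return prod(values[q] for q in boundary_qubits)


def matched_logical_z(pairs, crosses_boundary):
    """-1 if an odd number of matched paths cross the chosen smooth boundary."""
    crossings = sum(1 for a, b in pairs if crosses_boundary(a, b))
    return -1 if crossings % 2 else +1


def logical_error(values, boundary_qubits, pairs, crosses_boundary):
    return logical_z(values, boundary_qubits) != matched_logical_z(pairs, crosses_boundary)


values = [-1, +1, +1]                     # toy example: qubit 0 is flipped
plaquettes = [[0, 1], [1, 2]]
print(syndrome(plaquettes, values))       # [0]
pairs = [(0, "boundary")]                 # node 0 matched to the boundary
crosses = lambda a, b: b == "boundary"    # hypothetical geometry
print(logical_error(values, [0], pairs, crosses))  # False: the error is corrected
```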

III.6 2D Surface Code Results

In this section we will discuss the results of our simulations of the planar code concatenated with binomial codes under loss errors.

III.6.1 Threshold curves

In this section we refer to codes using heterodyne phase measurement and the binning algorithm QSI as Method 1 Codes, and to codes using AHD measurement and local ML QSI as Method 2 Codes. Figs. 10, 12, 13 and 14 show the threshold for different codes as a function of measurement error and the photon loss rate. Measurement error is related to mean photon number as per Figs. 5 and 6, and is determined by the code parameters through . The thresholds in the plots are determined for each code (fixed by ) by sweeping for different code distances.
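One simple way to locate a threshold from such sweeps, sketched below in Python, is to linearly interpolate where the logical error rate curves for two code distances cross. This is an illustrative choice: the text does not specify whether the thresholds were obtained this way or by a fit, and the data in the usage lines are synthetic.

```python
import numpy as np


def crossing_point(p, pl_small, pl_large):
    """Loss rate at which the larger-distance curve first crosses the
    smaller-distance one, by linear interpolation; None if they do not cross."""
    p = np.asarray(p, dtype=float)
    diff = np.asarray(pl_large, dtype=float) - np.asarray(pl_small, dtype=float)
    sign_change = np.nonzero(np.sign(diff[:-1]) != np.sign(diff[1:]))[0]
    if sign_change.size == 0:
        return None
    i = sign_change[0]
    return float(p[i] - diff[i] * (p[i + 1] - p[i]) / (diff[i + 1] - diff[i]))


# Synthetic curves: below 0.025 the larger code is better, above it worse.
p = [0.01, 0.02, 0.03, 0.04]
pl_d3 = [0.1, 0.1, 0.1, 0.1]
pl_d5 = [0.085, 0.095, 0.105, 0.115]
print(crossing_point(p, pl_d3, pl_d5))
```

With more than two distances, the spread of pairwise crossings gives a rough indication of finite-size effects.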

Fig. 10 shows the threshold for a range of binomial codes against photon loss . Binomial codes with varying values of are compared to the trivial encoding. The data point for a given value of and is plotted at the effective measurement error determined for this code in Sec. III. This allows a comparison of the threshold for the trivial encoding with a given qubit measurement error. In this initial example, Method 1 codes are used. The most striking feature of this plot is that the trivial encoding significantly outperforms the binomial codes, for all values of . Furthermore, contrary to what might be expected, increasing does not improve the threshold. Finally, varying the binomial code parameter , and therefore the effective measurement error (for a code of fixed ), also does not appear to significantly change the threshold.

These results can be understood as follows. Firstly examining Fig. 5 we see that for heterodyne measurement of binomial codes, increasing causes a significant jump in the measurement error, for a given . This is likely because each higher value of requires increased phase resolution for the phase measurement, going as . Moreover the total photon loss probability is proportional to mean photon number , which in turn is proportional to for binomial codes. Finally the phase uncertainty of the heterodyne phase measurement is proportional to . Therefore, the improved tolerance to loss we expect to see when increasing for a binomial code is counteracted by the increase in measurement error and the increase in the total photon loss probability, meaning that we do not see an improvement in threshold. As decreases, the measurement error increases, meaning that the decreased mean photon number and therefore reduced probability of photon loss is counteracted by the increase in measurement error. This explains why the threshold values for the binomial codes of varying roughly lie on a straight line in Fig. 10.

These effects are strongly suggested by Fig. 11, which plots the same data as Fig. 10, except against rather than . This effectively normalises the data against mean photon number. We see that binomial codes of higher do better by this measure, and furthermore that binomial codes outperform the trivial encoding. Thus, if we look at the thresholds against the probability of a single photon emission occurring rather than against the probability for each photon to be lost, then there is indeed increased tolerance to photon loss errors for the higher codes, although it is the original thresholds measured against that matter in practice.

We can improve upon the threshold results from Fig. 10 by using the Local Maximum Likelihood QSI rather than the Binning Algorithm QSI for single qubit measurements. Fig. 12 shows that using the Local ML QSI, whilst still using heterodyne measurement, results in improved threshold values for codes of lower . Local ML QSI takes into account an approximation of the local noise experienced by a qubit; the loss to which the qubit itself is subject, but also the dephasing errors that are propagated from loss errors on neighbouring qubits. Crucially, the dephasing errors are proportional to which is just enough to cause a measurement error on neighbouring qubits. Therefore, the Local ML QSI can be expected to have a significant effect on threshold compared to the Binning Algorithm QSI.

We can further improve threshold results by using AHD measurement rather than heterodyne measurement. As shown in Fig. 6, for AHD measurement, not only is the overall measurement error much lower than for heterodyne measurement, but also increasing increases the measurement error by a much smaller margin. The effects of this on threshold values are shown in Fig. 13. We have significant overall improvements in threshold using AHD measurement, and in particular increasing corresponds to an increase in threshold, for a given value of measurement error. As increasing does not cause a large increase in measurement error for AHD measurement, the increase in reflects the increased capacity of a code to tolerate loss.

The final improvement to the threshold results comes from using modified MWPM decoding in place of standard MWPM decoding. Modified MWPM decoding utilises the continuous-variable nature of bosonic codes to weight error paths and improve the accuracy of the matching step. Fig. 14 shows that using modified MWPM decoding significantly increases threshold values relative to standard MWPM decoding. Interestingly, we see and outperforming the code. For codes of higher , it is more difficult to distinguish between codewords, as their angular separation in phase space is smaller, and in addition they have higher photon numbers, leading to more photon loss events. Despite the increased loss tolerance of the high codes, it appears that is the optimal value when subject to these competing effects.

As we noted above, the measurement errors resulting from imperfect phase measurement depend on the parameters of the binomial code. Higher values of offer enhanced phase resolution at the price of increased , as shown in Figs. 5 and 6. For this reason the best choice of code balances better phase resolution against increased loss due to higher mean photon number. For both regular and modified MWPM decoding, this optimum appears to be at a value of measurement error . This corresponds to an optimal choice of for each value of . This behaviour is quite distinct from what we saw in Fig. 10, where there was only a very weak dependence of the threshold on . This is likely because the phase resolution in Method 1 is dominated by the poor performance of heterodyne phase measurement, which has phase uncertainty scaling like , relative to the AHD phase measurement, which has uncertainty scaling like . This opens a window over which it is possible to improve performance by increasing phase resolution at the price of increased .

III.6.2 Sub-threshold Behaviour

While we have found that the thresholds against photon loss of the binomial codes are at best only comparable to the trivial encoding, this reflects the behaviour of these codes at high levels of photon loss. Far below the threshold, the increased loss tolerance of the binomial codes could result in reduced overhead. This motivates us to look at the sub-threshold performance of these codes by investigating the scaling of logical errors with the distance of the planar code.

Below the code threshold, it is well known [42] that the logical error rate of the code will scale as

| (44) |

where is the code distance. Clearly, we may obtain as the slope of a plot of against . We quantify the performance of the codes in the sub-threshold regime by this parameter. A steeper slope corresponds to a faster decay of the logical failure rate with increased code distance. This is desirable as it means that, for a fixed target logical failure rate, a code with a larger would require a smaller code size.
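Extracting the slope and extrapolating to a target error rate can be sketched in Python. Because Eq. 44 is not implemented here, the sketch assumes that the logarithm of the logical error rate is linear in the distance, log P_L ≈ c − α d, and returns α so that a larger value means faster suppression. The data are synthetic and the function names hypothetical.

```python
import numpy as np


def fit_decay_rate(distances, logical_rates):
    """Least-squares fit of log(P_L) = c - alpha * d; returns (alpha, c)."""
    slope, intercept = np.polyfit(np.asarray(distances, dtype=float),
                                  np.log(np.asarray(logical_rates, dtype=float)), 1)
    return -slope, intercept


def distance_for_target(alpha, c, target):
    """Smallest integer d with c - alpha * d <= log(target), assuming alpha > 0."""
    return int(np.ceil((c - np.log(target)) / alpha))


d = np.array([3, 5, 7, 9])
p_l = 0.5 * np.exp(-0.3 * d)  # synthetic sub-threshold data
alpha, c = fit_decay_rate(d, p_l)
print(alpha, distance_for_target(alpha, c, 1e-6))
```

Extrapolating far beyond the simulated distances assumes the linear trend persists, which should be checked against data where possible.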

Fig. 15 shows vs for binomial codes of for both Method 1 and Method 2 Codes. For Method 1 Codes, we see increase as decreases, as expected. We also see that higher corresponds to higher , an effect which grows as decreases. This is expected as codes with higher are better able to correct loss errors. In the sub-threshold regime where is low, this property of higher codes is evident as it is not outweighed by the detrimental effect of higher mean photon number. For Method 2 Codes, we see a similar pattern. In both cases, having higher appears to be advantageous. For a given value of , Method 2 codes have higher values than Method 1 codes, leading us to the conclusion that Method 2 codes with a higher discrete rotational symmetry number are the best performing codes to use in the sub-threshold regime. Note that when Method 2 is used, the codes have a higher threshold compared to Method 1. Therefore, for a given , Method 2 Codes will be at a lower fraction of the threshold value than Method 1 Codes. The trivial encoding outperforms both Method 1 and Method 2 codes in the subthreshold regime.

However again we note that the threshold of the trivial encoding is significantly higher than the binomial codes; therefore, for a given the trivial encoding is at a lower proportion of threshold, so better performance is unsurprising.

The comparison to the trivial encoding in the sub-threshold regime in particular should be interpreted as a helpful benchmark rather than a rigorous prediction of how the trivial encoding would actually perform in this regime. We have not attempted to use a realistic measurement model for the trivial encoding but rather ideal projective measurements with no measurement error. In contrast, we have subjected the binomial codes to realistic models of measurement. This effect is particularly important to note in the sub-threshold regime, where the strength of noise due to photon loss is low and therefore the impact of measurement error is more significant.

Figs. 16 and 17 correct for this by plotting against normalised by the threshold value, . We are most interested in the right-hand side of these plots, which is the region in which we are further away from , i.e. further below threshold. Sufficiently below threshold, Method 2 codes outperform their Method 1 counterparts with the same values. For Method 2 codes outperform Method 1 for all fractions of threshold. For Method 2 codes do better lower than below threshold. Realistic quantum computers are expected to operate far below threshold and Method 2 codes have superior subthreshold scaling in this regime.

Furthermore, codes of higher outperform their lower counterparts sufficiently far below threshold. For Method 2 codes, below of threshold it is advantageous to have higher . For Method 1 codes this is true below of threshold. For instance, for the Method 1 code at we have whilst for the Method 2 code, . This difference would scale up to a significant reduction in overhead in a realistic setting. Consider, for instance, using these codes to achieve a target logical error rate of which is standard for quantum chemistry algorithms [43]. For the Method 1 code, this would require a code distance of 115, whilst for the Method 2 code it would require a code distance of 28. Given that the codes are 2D, this amounts to saving over 12000 qubits.
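As a check on the quoted saving, taking the number of qubits to scale as the square of the code distance (the convention implied by the figure of 12000):

```latex
115^2 - 28^2 = (115 - 28)(115 + 28) = 87 \times 143 = 12441 > 12000
```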

Compared to the trivial encoding we see codes do better for Method 1 and codes do better for Method 2 when the location of threshold is taken into account. This reinforces the advantage obtained by using higher codes in the sub-threshold regime, especially for Method 2 codes.

In this comparison, where we have attempted to factor out the location of the threshold in order to study sub-threshold performance, note that the location of the threshold will be a crucial consideration in practice: for codes with a higher threshold, it will be easier to access the sub-threshold regime. Overall, the main point here is that codes with lower mean photon number can be highly advantageous, and that the choice of RSB code in a large-scale computation needs careful consideration. The best choice likely depends on details that we have not attempted to capture in this preliminary investigation.

IV Discussion and Conclusion

In this paper, we have investigated a scheme for quantum computation in which the binomial code was concatenated with the surface code and realised by MBQC. We subjected this scheme to a realistic model of photon loss and compared several methods of phase measurement and qubit state inference. We also incorporated the soft information available from phase measurements into the decoding process by using a hybrid modified MWPM decoder. Using these techniques, we found phase diagrams of code thresholds as a function of loss, and measurement error, , for codes of varying orders of discrete rotational symmetry, . Finally, we investigated the performance of these codes in the subthreshold regime.

A key conclusion of our analysis is the impact of the quality of phase measurement on the performance of binomial codes. As shown in Fig. 5, increasing the order of discrete rotational symmetry , and therefore increasing the mean photon number, significantly increases the effective measurement error for heterodyne measurement. This counteracts the advantage of increased tolerance to photon loss possessed by codes of higher . This effect can be clearly seen in Figs. 10 and 12, in which, for a fixed , varying the measurement error by changing the binomial code parameter gives a roughly flat line in . It arises because the probability of a photon loss event is roughly linear in , while the phase resolution of the heterodyne measurement is roughly inversely proportional to . The phase resolution of heterodyne measurement does not improve sufficiently with increased mean photon number to counteract the increased rate of photon loss events in that limit.

It is therefore necessary to use an adaptive homodyne phase measurement to realise the full power of the binomial codes. The improved phase resolution of adaptive homodyne schemes enables the increased loss tolerance of higher codes to ‘win out,’ resulting in higher thresholds against loss.

The other main performance improvement we saw came from using modified MWPM decoding rather than unweighted MWPM decoding. By using the soft information of the continuous-variable measurement outcomes, we were able to achieve a threshold against loss of , compared to using MWPM. This is not only a significant improvement but also a high threshold against loss in absolute terms.

An important consideration is our comparison of the performance of binomial codes to the trivial encoding. We find that even our best scheme for phase measurement and quantum state inference results in thresholds that are at best comparable to those of the trivial encoding with some measurement error probability. The trivial encoding has at most a single photon per qubit, and therefore performs relatively well against loss because it has a very low mean photon number. We believe that the conclusion to be drawn from this is that which error-correcting code is best in a given situation will depend on details of the noise model in a given physical system that are beyond the scope of this work. When modelling the trivial encoding we assumed ideal measurements and added the measurement error as classical noise on the measurement outcome. A more accurate comparison would simulate realistic measurement for the trivial encoding. Whether or not the trivial encoding does actually outperform the binomial code would depend on the noise of such a detailed implementation. One way of comparing the trivial and binomial codes in these simulations is to note that the threshold against loss that we found corresponds to a trivial encoding with a measurement error rate of around .

Finally, we studied the suppression of logical errors below threshold. Here the binomial codes do suppress logical errors more rapidly with increasing  when the photon loss rate is low enough.

Our analysis has shown that for the binomial code concatenated with the surface code, the performance is highly dependent upon accurate phase measurement, qubit state inference and decoding. All of these features need to be well chosen for the apparent advantage of binomial codes in tolerating photon loss errors to be realised in practice.

Acknowledgements

We thank Ben Brown for his contributions to the initial stages of this project. We acknowledge support from Australian Research Council via the Centre of Excellence in Engineered Quantum Systems (CE170100009). We acknowledge the traditional owners of the land on which this work was undertaken at the University of Sydney, the Gadigal people of the Eora Nation.

Appendix A Quantum Trajectory Simulation of Loss

A.1 Justification of independent sampling of cluster qubits

We aim to produce the graph state corresponding to the graph , which has  vertices in total. This state can be produced by applying a CROT gate to every pair of qubits that share an edge of :

| (45) |

where

| (46) |

and

We simulate photon losses occurring at random times during the implementation of these gates using a quantum trajectories approach, as in [44]. After deriving an analytic expression for these quantum trajectories, we analyse the norm of the full evolution of the cluster and show that loss times can be sampled independently for each qubit of the cluster.

According to quantum trajectory theory the evolution of the quantum state between photon emissions is given by the non-Hermitian Hamiltonian

| (47) |

where the tilde indicates that the state is unnormalised.

Suppose that photons in total have been emitted during the gate time for the CROT gate. The th photon emission removes a photon from the mode with . The time between the th and th photon emissions is , where  is the final photon emission time.

As in the main text the vector is a temporally ordered list of photon emission times for mode . The number of emissions for mode is . The full set of emission times is recorded in the array . We have .

Trajectory theory states that the non-Hermitian time evolution operator corresponding to the noisy gate with the specified photon emission times is

| (48) |

We will make use of the following identities [25] to simplify this expression,

| (49) | ||||

| (50) |

One can show using induction that the time evolution operator corresponding to this sequence of photon loss events is

| (51) |

The operators are as defined in the main text. We have used the fact that .

This expression gives the error process as the product of ideal CROT gates followed by photon losses on each qubit. We used this representation of the errors in the main text and also in our simulations. However, for the simulations it is necessary to be able to sample accurately from the distribution over photon loss events . To compute the probability density for this pattern of emissions, it is helpful to also write the noise operator as photon emissions followed by the ideal gate. We can rearrange the expression above as follows:

| (52) |

We now consider the inner product

| (53) |

where  indicates the normally ordered product of operators, and so

| (54) |

Eq. (54) shows that the probability of obtaining a given pattern of photon emissions is a simple product distribution over the modes. This greatly simplifies the task of drawing random samples of photon emission events during the CROT gate. Moreover, the probability distribution clearly has no dependence on . A simple calculation shows that the photon emission probabilities are in fact the same as for uncoupled modes experiencing photon loss at rate  for a time  and no other dynamics. This justifies the approach of sampling loss times independently for the cluster qubits, described in detail in the following subsection.
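Because the emission statistics reduce to independent loss on each mode, the number of photons lost from one mode over the gate time follows the familiar binomial thinning of its photon-number distribution: each photon is lost independently with probability `gamma = 1 - exp(-kappa*T)`. The sketch below assumes pure loss at rate `kappa` for time `T` (our names for the loss rate and gate time) and a Fock-basis amplitude vector `coeffs` for the mode; the function name is hypothetical.

```python
from math import comb

import numpy as np


def lost_photon_distribution(coeffs, kappa, T):
    """Probability of losing k photons from one mode under pure loss.

    coeffs are Fock-basis amplitudes c_n of the mode. Each photon survives
    with probability exp(-kappa * T), so
        P(k) = sum_n |c_n|^2 C(n, k) gamma^k (1 - gamma)^(n - k).
    """
    probs = np.abs(np.asarray(coeffs)) ** 2
    probs = probs / probs.sum()              # photon-number distribution
    gamma = 1.0 - np.exp(-kappa * T)         # single-photon loss probability
    dist = np.zeros(len(probs))
    for n, pn in enumerate(probs):
        for k in range(n + 1):
            dist[k] += pn * comb(n, k) * gamma**k * (1 - gamma) ** (n - k)
    return dist
```

The mean number of lost photons is `gamma` times the mean photon number, which is one way to see why codes with larger mean photon number suffer more loss events per gate.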

A.2 Sampling Algorithm

Given this result on the statistics of the photon emissions, we simplify the numerical recipe by iterating over the qubits of the cluster and sampling each qubit independently as follows:

1. Generate a uniform random number .

2. Iteratively solve the Schrödinger equation

| (55) |

3. Continue step 2 up to the time  at which

Then apply the jump operator  and renormalise the state.

4. Repeat steps 1-3 until

The output of this sampling is a particular set of photon emissions . The noisy state is then obtained by applying the noise operator to each qubit .
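A minimal single-mode sketch of this recipe follows, assuming pure loss at rate `kappa` over a time `T` (our names) and a Fock-basis state truncated to a finite dimension. Because pure loss generates a no-jump evolution that is diagonal in the Fock basis, the sketch replaces the numerical integration of step 2 with the closed-form decay and finds the jump time of step 3 (where the squared norm falls to the random number) by bisection; a simulation of the full CROT dynamics would integrate the non-Hermitian equation numerically instead. The function name is hypothetical.

```python
import numpy as np


def sample_loss_trajectory(psi0, kappa, T, rng=None):
    """Quantum-jump sampling of photon loss on a single mode over a time T.

    Assumes pure loss at rate kappa with no other dynamics, so the no-jump
    evolution exp(-kappa * n * t / 2) is diagonal in the Fock basis.
    Returns (list of jump times, final normalised state).
    """
    rng = np.random.default_rng() if rng is None else rng
    psi = np.asarray(psi0, dtype=complex)
    psi = psi / np.linalg.norm(psi)
    n = np.arange(len(psi))
    t, jump_times = 0.0, []
    while True:
        r = rng.uniform()                      # step 1: uniform random number
        p = np.abs(psi) ** 2

        def norm_sq(s):                        # squared norm after no-jump evolution for time s
            return np.sum(p * np.exp(-kappa * n * s))

        remaining = T - t
        if norm_sq(remaining) >= r:            # no further jump before T (step 4 terminates)
            psi = psi * np.exp(-kappa * n * remaining / 2)
            return jump_times, psi / np.linalg.norm(psi)
        lo, hi = 0.0, remaining                # step 3: bisect for norm_sq(s) = r
        for _ in range(100):
            mid = 0.5 * (lo + hi)
            if norm_sq(mid) > r:
                lo = mid
            else:
                hi = mid
        psi = psi * np.exp(-kappa * n * hi / 2)
        psi = np.roll(psi * np.sqrt(n), -1)    # jump operator a: (a psi)_m = sqrt(m+1) psi_{m+1}
        psi = psi / np.linalg.norm(psi)        # renormalise
        t += hi
        jump_times.append(t)
```

Over many calls with a single-photon input, the fraction of trajectories containing a jump should approach `1 - exp(-kappa*T)`, matching the uncoupled-loss statistics above.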

Appendix B POVMs for AHD Measurement

Following the presentation of [30], the POVM elements for AHD measurement are

| (56) |

where

| (57) | ||||

| (58) |

and

| (59) |

are generalised binomial coefficients. The  are defined recursively by

| (60) | ||||

| (61) |
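For reference, a generalised binomial coefficient extends the usual one to a real upper argument. The sketch below assumes the standard falling-factorial definition; the specific arguments that appear in Eq. (59) are not reproduced here, and `gen_binom` is a hypothetical name.

```python
import math


def gen_binom(alpha, k):
    """Generalised binomial coefficient C(alpha, k) for real alpha, integer k.

    C(alpha, k) = alpha (alpha - 1) ... (alpha - k + 1) / k!, and 0 for k < 0.
    """
    if k < 0:
        return 0.0
    num = 1.0
    for i in range(k):
        num *= alpha - i                 # falling factorial alpha^(k)
    return num / math.factorial(k)
```

As a check, the coefficients reproduce the binomial series: summing `gen_binom(0.5, k) * 0.2**k` over the first few dozen `k` gives `sqrt(1.2)` to high accuracy.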

Appendix C X Basis Pauli Twirl

In the following we will show the invariance of the probability distribution of qubit measurement outcomes under an X-basis Pauli twirl in the case .

We start by defining the measurement operators described in the main text in the limit of no photon loss. Given a phase measurement, the POVM elements for the qubit measurement are as follows:

| (62) |

The region of integration over  is determined by the QSI technique, which bins the measurement outcome as either  or . We restrict our attention here to pure binning QSI.
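A pure binning QSI reduces to a rounding rule on the measured phase. The sketch below assumes the bin layout of Grimsmo et al. [25] for an order-N rotation-symmetric code: 2N bins of width π/N centred at mπ/N for m = 0, 1, ..., 2N - 1, with even m read as + and odd m as −. The function name `bin_phase` is hypothetical.

```python
import math


def bin_phase(theta, N):
    """Pure binning QSI: map a measured phase to an X-basis outcome (+1 or -1).

    Assumes 2N bins of width pi/N centred at m*pi/N (m = 0, ..., 2N-1);
    even m gives +1 and odd m gives -1. Angles are taken modulo 2*pi.
    """
    m = round(theta * N / math.pi) % (2 * N)   # index of the nearest bin centre
    return 1 if m % 2 == 0 else -1
```

Because the rule depends only on the nearest bin centre, it discards how close the phase was to a bin boundary; that discarded information is what soft-decision inference can exploit.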

We will show that

| (63) |

where are the -eigenstates of our RSB code.

If we perform this measurement on a qubit that is part of an RSB code cluster state, then this property is sufficient to ensure that the conditioned state satisfies

| (64) |

This identity means performing an -basis twirl on qubit does not affect either the probability of the measurement outcome or the post-measurement state.

Before making this calculation, we recall the following definitions. An arbitrary phase measurement can be represented by the general POVM [29]

| (65) |

where  is a Hermitian matrix with real positive entries,  for all , and . For a rotation-symmetric bosonic code [25],

| (66) |

where the  are real coefficients. The  satisfy the following normalisation conditions:

Suppose that we are using the binning QSI algorithm. There are  binning regions centred at  for . Each binning region spans the angles , where even  corresponds to  bins and odd  corresponds to  bins. The POVM element for the + (−) X-basis outcome is therefore given by the sum over binning regions with even (odd) , respectively. We now integrate the -dependent term of Eq. (62) over the binning regions:

| (67) | ||||

| (68) |

Consider the case

| (69) |

This is zero whenever is even, and is an even function of when that number is odd.

In the case we have

| (70) |

We can now compute

| (71) |

The first term here is zero because  for all  and  due to the normalisation conditions on the . The final term is also zero. When  is even, the coefficients  are zero. When  is odd, the terms with  and  cancel pairwise: the factor  in the summand does not change if we swap  and , while the factor  changes sign, because if  is odd then exactly one of  is odd. Thus we have  as required.

This gives the result for ; the argument for  is exactly the same.
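The pairwise cancellation can be checked numerically in the simplest case. The sketch below assumes canonical phase measurement (every entry of the Hermitian matrix in the general phase POVM set to one), the bin layout of 2N bins of width π/N centred at mπ/N, and the binomial code words of Michael et al. [24], written here with spacing N and truncation parameter K so that |0⟩ (|1⟩) is the sum over even (odd) p of sqrt(C(K+1, p)/2^K)|pN⟩. It builds the binned POVM elements and evaluates the off-diagonal element between the ± code states; the function names are hypothetical.

```python
from math import comb

import numpy as np


def binomial_plus_minus(N, K):
    """|+> and |-> of a binomial code with spacing N and truncation K.

    The Fock space is truncated just above the largest occupied level (K+1)N.
    """
    dim = (K + 1) * N + 1
    zero, one = np.zeros(dim), np.zeros(dim)
    for p in range(K + 2):
        target = zero if p % 2 == 0 else one
        target[p * N] = np.sqrt(comb(K + 1, p) / 2**K)
    return (zero + one) / np.sqrt(2), (zero - one) / np.sqrt(2)


def binned_povm(N, dim, parity):
    """Canonical phase POVM integrated over the bins with m % 2 == parity.

    Element (n, n') is (1 / 2pi) times the sum over bins of the integral of
    exp(i d theta), d = n - n', over a bin of width pi/N centred at m*pi/N.
    """
    n = np.arange(dim)
    d = n[:, None] - n[None, :]
    safe_d = np.where(d == 0, 1, d)                       # avoid 0/0 on the diagonal
    width = np.where(d == 0, np.pi / N, 2 * np.sin(d * np.pi / (2 * N)) / safe_d)
    centres = np.arange(parity, 2 * N, 2) * np.pi / N
    phase_sum = np.exp(1j * d[..., None] * centres).sum(axis=-1)
    return width * phase_sum / (2 * np.pi)
```

For N = 2, K = 1 the element `plus @ P_plus @ minus` vanishes to rounding error, while `plus @ P_plus @ plus` (the probability of correctly reading +) equals 1/2 + √2/π, about 0.95.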

References

- Chuang et al. [1997] I. L. Chuang, D. W. Leung, and Y. Yamamoto, Bosonic quantum codes for amplitude damping, Phys. Rev. A 56, 1114 (1997).

- Gottesman et al. [2001] D. Gottesman, A. Kitaev, and J. Preskill, Encoding a qubit in an oscillator, Phys. Rev. A 64, 012310 (2001).

- Cochrane et al. [1999a] P. T. Cochrane, G. J. Milburn, and W. J. Munro, Macroscopically distinct quantum-superposition states as a bosonic code for amplitude damping, Phys. Rev. A 59, 2631 (1999a).

- Ofek et al. [2016] N. Ofek, A. Petrenko, R. Heeres, P. Reinhold, Z. Leghtas, B. Vlastakis, Y. Liu, L. Frunzio, S. Girvin, L. Jiang, et al., Extending the lifetime of a quantum bit with error correction in superconducting circuits, Nature 536, 441 (2016).

- Hu et al. [2019] L. Hu, Y. Ma, W. Cai, X. Mu, Y. Xu, W. Wang, Y. Wu, H. Wang, Y. Song, C.-L. Zou, et al., Quantum error correction and universal gate set operation on a binomial bosonic logical qubit, Nature Physics 15, 503 (2019).

- Campagne-Ibarcq et al. [2020] P. Campagne-Ibarcq, A. Eickbusch, S. Touzard, E. Zalys-Geller, N. E. Frattini, V. V. Sivak, P. Reinhold, S. Puri, S. Shankar, R. J. Schoelkopf, L. Frunzio, M. Mirrahimi, and M. H. Devoret, Quantum error correction of a qubit encoded in grid states of an oscillator, Nature 584, 368 (2020).

- Flühmann et al. [2019] C. Flühmann, T. L. Nguyen, M. Marinelli, V. Negnevitsky, K. Mehta, and J. Home, Encoding a qubit in a trapped-ion mechanical oscillator, Nature 566, 513 (2019).

- De Neeve et al. [2022] B. De Neeve, T.-L. Nguyen, T. Behrle, and J. P. Home, Error correction of a logical grid state qubit by dissipative pumping, Nature Physics 18, 296 (2022).

- Sivak et al. [2022] V. V. Sivak, A. Eickbusch, B. Royer, S. Singh, I. Tsioutsios, S. Ganjam, A. Miano, B. L. Brock, A. Z. Ding, L. Frunzio, S. M. Girvin, R. J. Schoelkopf, and M. H. Devoret, Real-time quantum error correction beyond break-even (2022).

- Rosenblum et al. [2018a] S. Rosenblum, P. Reinhold, M. Mirrahimi, L. Jiang, L. Frunzio, and R. J. Schoelkopf, Fault-tolerant detection of a quantum error, Science 361, 266 (2018a), https://www.science.org/doi/pdf/10.1126/science.aat3996.

- Rosenblum et al. [2018b] S. Rosenblum, P. Reinhold, M. Mirrahimi, L. Jiang, L. Frunzio, and R. J. Schoelkopf, Fault-tolerant detection of a quantum error, Science 361, 266 (2018b), https://www.science.org/doi/pdf/10.1126/science.aat3996.

- Ma et al. [2020] W.-L. Ma, M. Zhang, Y. Wong, K. Noh, S. Rosenblum, P. Reinhold, R. J. Schoelkopf, and L. Jiang, Path-independent quantum gates with noisy ancilla, Phys. Rev. Lett. 125, 110503 (2020).

- Reinhold et al. [2020] P. Reinhold, S. Rosenblum, W.-L. Ma, L. Frunzio, L. Jiang, and R. J. Schoelkopf, Error-corrected gates on an encoded qubit, Nature Physics 16, 822 (2020).

- Ma et al. [2021] W.-L. Ma, S. Puri, R. J. Schoelkopf, M. H. Devoret, S. Girvin, and L. Jiang, Quantum control of bosonic modes with superconducting circuits, Science Bulletin 66, 1789 (2021).

- Vlastakis et al. [2013] B. Vlastakis, G. Kirchmair, Z. Leghtas, S. E. Nigg, L. Frunzio, S. M. Girvin, M. Mirrahimi, M. H. Devoret, and R. J. Schoelkopf, Deterministically encoding quantum information using 100-photon Schrödinger cat states, Science 342, 607 (2013), https://www.science.org/doi/pdf/10.1126/science.1243289.

- Dennis et al. [2002] E. Dennis, A. Kitaev, A. Landahl, and J. Preskill, Topological quantum memory, Journal of Mathematical Physics 43, 4452–4505 (2002).

- Kitaev [2003] A. Kitaev, Fault-tolerant quantum computation by anyons, Annals of Physics 303, 2–30 (2003).

- Noh and Chamberland [2020] K. Noh and C. Chamberland, Fault-tolerant bosonic quantum error correction with the surface–Gottesman-Kitaev-Preskill code, Phys. Rev. A 101, 012316 (2020).

- Noh et al. [2022] K. Noh, C. Chamberland, and F. G. Brandão, Low-overhead fault-tolerant quantum error correction with the surface-GKP code, PRX Quantum 3, 010315 (2022).

- Vuillot et al. [2019] C. Vuillot, H. Asasi, Y. Wang, L. P. Pryadko, and B. M. Terhal, Quantum error correction with the toric Gottesman-Kitaev-Preskill code, Phys. Rev. A 99, 032344 (2019).

- Cochrane et al. [1999b] P. T. Cochrane, G. J. Milburn, and W. J. Munro, Macroscopically distinct quantum-superposition states as a bosonic code for amplitude damping, Phys. Rev. A 59, 2631 (1999b).

- Leghtas et al. [2013] Z. Leghtas, G. Kirchmair, B. Vlastakis, R. J. Schoelkopf, M. H. Devoret, and M. Mirrahimi, Hardware-efficient autonomous quantum memory protection, Phys. Rev. Lett. 111, 120501 (2013).

- Mirrahimi et al. [2014] M. Mirrahimi, Z. Leghtas, V. V. Albert, S. Touzard, R. J. Schoelkopf, L. Jiang, and M. H. Devoret, Dynamically protected cat-qubits: a new paradigm for universal quantum computation, New Journal of Physics 16, 045014 (2014).

- Michael et al. [2016] M. H. Michael, M. Silveri, R. Brierley, V. V. Albert, J. Salmilehto, L. Jiang, and S. Girvin, New class of quantum error-correcting codes for a bosonic mode, Phys. Rev. X 6, 031006 (2016).

- Grimsmo et al. [2020] A. L. Grimsmo, J. Combes, and B. Q. Baragiola, Quantum computing with rotation-symmetric bosonic codes, Phys. Rev. X 10, 011058 (2020).

- Raussendorf et al. [2002] R. Raussendorf, D. Browne, and H. Briegel, The one-way quantum computer–a non-network model of quantum computation, Journal of Modern Optics 49, 1299 (2002).

- Bolt et al. [2016] A. Bolt, G. Duclos-Cianci, D. Poulin, and T. Stace, Foliated quantum error-correcting codes, Phys. Rev. Lett. 117, 070501 (2016).

- Brown and Roberts [2020] B. J. Brown and S. Roberts, Universal fault-tolerant measurement-based quantum computation, Phys. Rev. Research 2, 033305 (2020).

- Wiseman and Killip [1998] H. M. Wiseman and R. B. Killip, Adaptive single-shot phase measurements: The full quantum theory, Phys. Rev. A 57, 2169–2185 (1998).

- Hillmann et al. [2021] T. Hillmann, F. Quijandría, A. L. Grimsmo, and G. Ferrini, Performance of teleportation-based error correction circuits for bosonic codes with noisy measurements (2021), arXiv:2108.01009 [quant-ph].

- Zhang et al. [2017] Y. Zhang, X. Zhao, Z.-F. Zheng, L. Yu, Q.-P. Su, and C.-P. Yang, Universal controlled-phase gate with cat-state qubits in circuit QED, Phys. Rev. A 96, 052317 (2017).

- Frattini et al. [2017] N. E. Frattini, U. Vool, S. Shankar, A. Narla, K. M. Sliwa, and M. H. Devoret, 3-wave mixing Josephson dipole element, Applied Physics Letters 110, 10.1063/1.4984142 (2017).

- Wiseman and Milburn [2009] H. M. Wiseman and G. J. Milburn, Quantum measurement and control (Cambridge University Press, 2009).