Computing Semilinear Sparse Models for Approximately Eventually Periodic Signals

Abstract

Some elements of the theory and algorithmics corresponding to the computation of semilinear sparse models for discrete-time signals are presented. In this study, we will focus on approximately eventually periodic discrete-time signals, that is, signals that can exhibit an aperiodic behavior for an initial amount of time, and then become approximately periodic afterwards. The semilinear models considered in this study are obtained by combining sparse representation methods, linear autoregressive models and GRU neural network models, initially fitting each block model independently using some reference data corresponding to some signal under consideration, and then fitting some mixing parameters that are used to obtain a signal model consisting of a linear combination of the previously fitted blocks using the aforementioned reference data, computing sparse representations of some of the matrix parameters of the resulting model along the process. Some prototypical computational implementations are presented as well.

keywords:

Autoregressive models, neural-network models, parameter identification, least-squares approximation, time-series analysis.1 Introduction

In this document, some elements of the theory and algorithmics corresponding to the computation of semilinear sparse models for discrete-time signals are presented. The study reported in this document is focused on approximately eventually periodic discrete-time signals, that is, signals that can exhibit an aperiodic behavior for an initial amount of time, and then become approximately periodic afterwards.

The main contribution of the work reported in this document is the application of a colaborative scheme involving sparse representation methods, linear autoregressive models and GRU neural network models, where each block model is first fitted independently using some reference data corresponding to some given signal. Subsequently, some mixing parameters are fitted, in order to obtain a signal model consisting of a linear combination of the previously fitted blocks, using the aforementioned reference data to fit the mixing coefficients. Along the process, some of the matrices of parameters of the resulting model are fitted using sparse representation methods. Some theoretical aspects of the aforementioned process are described in §3. As a byproduct of the work reported in this document, a toolset of Python programs for semilinear sparse signal model computation based on the ideas presented in §3 and §4 has been developed, and is available in Vides (2021a).

The applications of the sparse signal model identification technology developed as part of the work reported in this document, range from numerical simulation for predictive maintenance of industrial equipement and structures, to geological data analysis.

2 Preliminaries and Notation

Given , let us consider the function defined by the expression

Given a matrix with singular values denoted by the expressions for . We will write to denote the number

Given a time series , a positive integer and any , we will write to denote the vector

Given an ordered sample from a time series , we will write to denote the Hankel type trajectory matrix corresponding to , that is determined by the following expression.

We will write to denote de identity matrix in , and we will write to denote the matrices in representing the canonical basis of (each equals the -column of ).

We will write to denote the set . Given any matrix , we will write to denote the conjugate transpose of . A matrix will be called an orthogonal projector whenever . Given any matrix , we will write to denote the spectrum of , that is, the set of eigenvalues of .

3 Semilinear Modeling of Approximately Eventually Periodic Signals

A discrete-time signal represented by a times series is said to be approximately eventually periodic (AEP) if it can be aperiodic for an initial amount of time, and then becomes approximately periodic afterwards. In other words, there are and two integers such that for each and each integer . The integer will be called an approximate period of .

Remark 1

Based on the notion of approximately eventually periodic signal considered on this study, it can be seen that given an AEP signal , there is a positive integer such that the tail of is approximately periodic.

3.1 Semilinear Sparse Autoregressors

Given an AEP signal and a lag value . For the study reported in this document we will consider semilinear signal models of the form:

| (1) |

where denotes a linear operation defined by the expression

| (2) |

the term represents a linear combination of neural networks based on gated recurrent unit (GRU) layers, whose structure is described by the block diagram

| (3) |

and represents some suitable error term. For some given integer , each GRU cell in the GRU block in (3) is described for each by the following equations:

| (4) |

with , and where denotes the sigmoid function. The configuration considered in (3) is based on a GRU layer in order to prevent vanishing gradients, by taking advantage of the GRU structure presented in Cho et al. (2014). Although LSTM networks can be used to prevent vanishing gradients as well, for the study reported in this document GRU networks were chosen instead of LSTM networks, as they have fewer trainable parameters. The affine layer of the neural network described in (3) is determined by the expression

In order to compute models of the form (1), we can combine sparse autoregressive models of the form (2) that can be computed using the methods presented in Vides (2021b), with GRU neural network models of the form (3) that can be computed using the computational tools provided as part of TensorFlow, Keras and PyTorch, that are described as part of Chollet et al. (2015) and Paszke et al. (2019).

An approximate representation

of the linear component of (1) such that

can be computed using some sample and a corresponding subsample for some suitable , by approximately solving the matrix equation

| (5) |

using sparse least-squares approximation methods.

Schematically, the semilinear autoregressors considered in this study can be described by a block diagram of the form,

| (6) |

where the block is represented by (2), each block is represented by (3), and where the block is a mixing block defined by the expression

for some coefficients to be determined and some given , with and for each and each .

The details of the computation of the neural network blocks of model (1) will be omitted for brevity, for details on the theory and computation of the GRU neural network models considered for this study the reader is kindly referred to Cho et al. (2014), Chollet et al. (2015), Paszke et al. (2019) and Vides (2021a).

Several interesting papers have been written on the subject of hybrid time series models that combine ARIMA and ANN models, two important references on this matter are Zhang (2003) and Khandelwal et al. (2015). Besides using sparse AR models instead of ARIMA models, another important distinctive aspect of the modeling approach reported in this document, is that instead of using the recurrent neural network components of (6) represented by in (1) to approximate the residuals . Using some suitable training subsets of a given data sample from an arbitrary AEP signal under consideration, first the parameters of the blocks , , , of (6) are fitted independently using , and then the coefficients of the block of (6) are fitted using and some corresponding predicted values generated with , , , . Computing sparse representations of some of the matrix parameters of the resulting model along the process.

3.2 An Operator Theoretic Approach to the Computation

of Linear Components of Semilinear Sparse Autoregressors

Given an AEP signal whose behavior can be approximately described by a model of the form (2), that can be computed by approximately solving an equation of the form (5) for some suitable integers with , and given some sample , if we consider any sample , such that for some positive integer the states in satisfy the conditions , for each . We will have that the matrix

| (7) |

will approximately satisfy the condition

| (8) |

Using matrices of the form (7) one can express linear models of the form (2) as follows.

| (9) |

One can observe that to each model of the form (2), there corresponds a matrix of the form (7). From here on, a matrix that satisfies the previous conditions will be called the matrix form of a linear model of the form (2).

Given , and two matrices and , let us write to represent the problem of finding , and an orthogonal projector such that . The matrix will be called a solution to the problem .

Theorem 2

Given , two integers , a sample from an AEP signal with , and a matrix . If , then there is a sparse matrix with at most nonzero entries such that .

Since by (Vides, 2021b, Lemma 3.2). This result is a consequence of the application of (Vides, 2021b, Theorem 3.6) to the problem .

Given an AEP signal with approximate period and a signal model for of the form (1), if for the linear component of the corresponding residuals are small, then the significative contribution of to the modeling process of , will be beneficial for interpretability purposes.

Let us consider an AEP signal with approximate period and approximately periodic tail . Given some suitable integer lag value such that there is an approximate linear model for of the form (2) with small residuals . Let be the matrix form of and let . By applying a Krylov subspace approach along the lines presented in (Saad, 2011, §6.1), and as a consequence of (Vides, 2021b, Theorem 4.3.), one can find a matrix whose columns form an orthonormal basis of the subspace determined by the expression

such that each satisfies the relation , for some . The matrix will be called an approximately periodic (AP) -section of and will be denoted by .

Remark 3

Based on the previous considerations, when a given AEP signal with approximate period is well explained by the linear component of a semilinear model of the form (1), that is, when the corresponding residuals are relatively small, the matrix corresponding to the model should mimic the approximate periodicity of the approximately periodic tail of , in the sense that the number should be relatively small for some suitable matrix norm (in the sense of (Saad, 2011, §1.5)). Consequently, the points in should cluster around .

4 Algorithms

The ideas in section §3 can be tanslated into a prototypical algorithm represented by algorithm 1, that relies on (Vides, 2021b, Algorithm 1), Theorem 2 and (Vides, 2021b, Theorem 4.3.).

-

0:

Estimate the lag value using auto-correlation function based methods;

5 Numerical Experiments

In this section, some computational implementations of the methods reported in this document are presented. The experimental results documented in this section can be replicated using the function NumericalExperiment.py or the Jupyter notebook SLSpAARModelsDemo.ipynb, that are available in Vides (2021a). The configuration required to replicate the results in this section is available as part of the aforementioned programs.

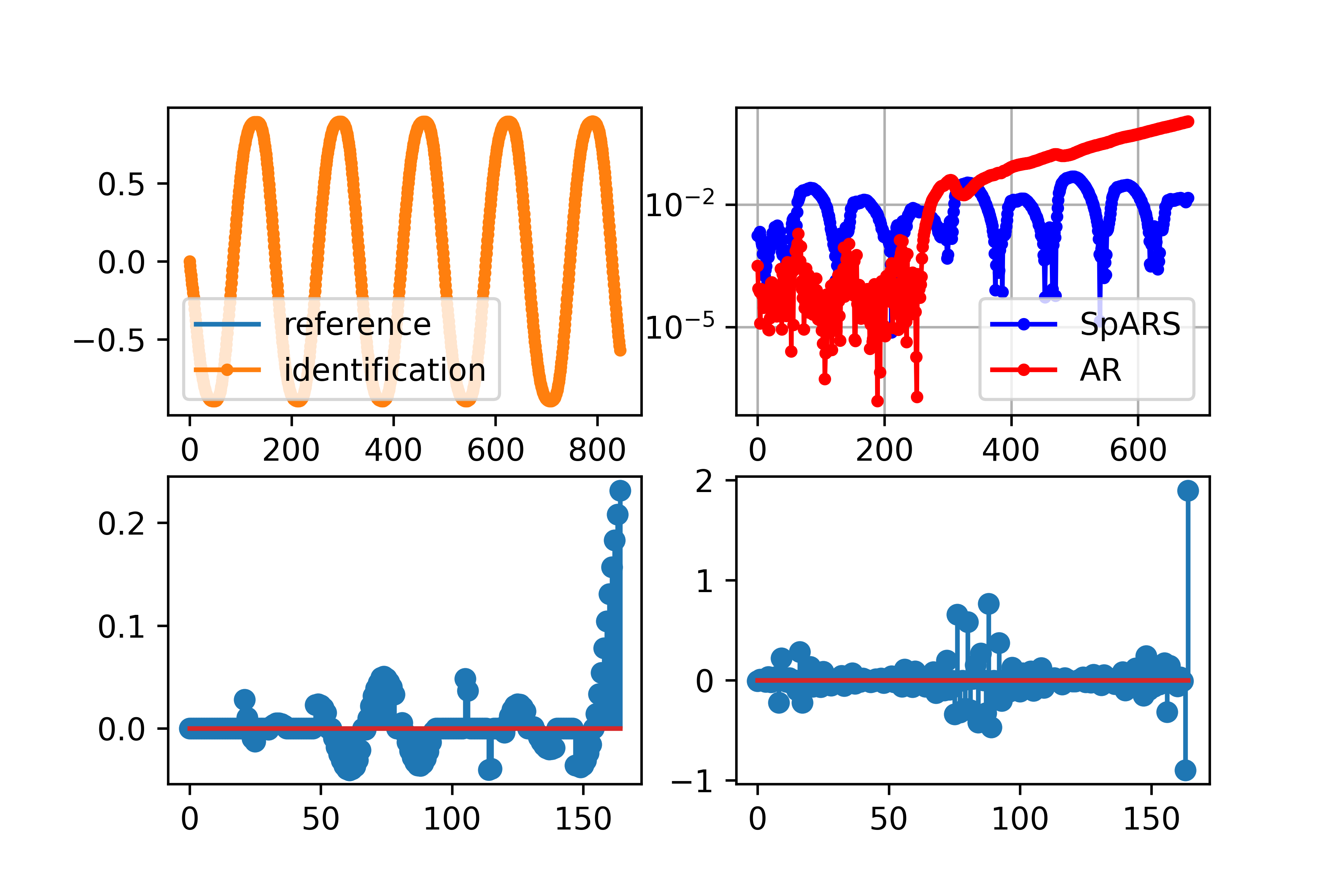

From here on, we will refer to the sparse semilinear models proposed in this document as SpARS models. The signal approximations computed using the SpARS models presented in this document are compared with the approximations obtained using standard AR models, and the standard AR models are computed using the Python program Autoreg included as part of the statsmodels module. In this section we will write to denote nonzero elements. For the experiments documented in this section two GRU RNN blocks were used, the block was computed using TensorFlow 2.6.0 and its input weights were replaced by their corresponding sparse representations, that were computed using (Vides, 2021b, Algorithm 1) along the lines of Theorem 2, and the block was computed using PyTorch 1.9.1+cpu and its input weights were left unchanged.

5.1 Numerical Experiment 1

In this section, algorithm 1 is applied to compute a SpARS model for the signal data sample recorded in the csv file VortexSheddingSignal.csv in the DataSets folder in Vides (2021a). The graphic representations of the results produced by the command line

>>> NumericalExperiment(1)

In every figure like figure 2, the red dots represent the points in each considered spectrum, the blue lines represent , and the number represents the estimated approximate period for each signal considered.

5.2 Numerical Experiment 2

In this section, algorithm 1 is applied to compute a SpARS model for the signal data sample recorded in the csv file NLOscillatorSignal.csv in the DataSets folder in Vides (2021a). The graphic representations of the results produced by the command line

>>> NumericalExperiment(2)

5.3 Numerical Experiment 3

In this section, algorithm 1 is applied to compute a SpARS model for the signal data sample recorded in the csv files:

-

•

art_daily_no_noise.csv

-

•

art_daily_small_noise.csv

that are included as part of the datasets described in Ahmad et al. (2017). The graphic representations of the results produced by the command line

>>> NumericalExperiment(3.1)

for the periodic signal without noise are shown in figures 5 and 6, respectively. The graphic representations of the results produced by the command line

>>> NumericalExperiment(3.2)

for the periodic signal with noise are shown in figures 7 and 8.

5.4 Approximation Errors

The approximation root mean square errors (RMSE) are summarized in table 1.

| Experiments | SpARS Model | AR Model |

|---|---|---|

| Experiment 1 | 0.0031265041 | 0.0000035594 |

| Experiment 2 | 0.0162975118 | 0.3100591516 |

| Experiment 3.1 | 0.0000000000 | 0.0000000074 |

| Experiment 3.2 | 3.9894749119 | 4.0939437825 |

It is appropriate to mention that the root mean square errors can present little fluctuations as one performs several numerical simulations, due primarily to the nature of the neural-network models, as the linear components tend to present very low or no variability from simulation to simulation.

5.5 Data Availability

The Python programs that support the findings of this study are openly available in the SPAAR repository, reference number Vides (2021a). The time series data used for the experiments 1 and 2 documented in §5.1 and §5.2, respectively, are available as part of Vides (2021a). The time series data used for experiment 3 in §5.3 are available as part of the Numenta Anomaly Benchmark (NAB) described in Ahmad et al. (2017).

6 Conclusion

Although in some experiments in §5 the root mean square errors corresponding to the AR and SpARS models are similar, the AP -sections corresponding to the SpARS models exhibit a better mimetic approximately periodic behavior in the sense of remark 3, as it can be visualized in figures 2, 4, 6 and 8. This mimetic behavior is interesting not just from a theoretical point of view, as it provides a criterion for how well the linear component of a given model mimics or captures the eventual approximate periodic behavior of the signal under study, but also for practical computational reasons, as long term predictions or simulations can be affected when the eigenvalues of the AP -section of the matrix form corresponding to the linear component of a signal model, lie outside the set , as one can observe in figures 3 and 4. Another advantage of the SpARS modeling technology is the reduction of the amount of data needed to fit the corresponding linear component, when compared to a nonsparse linear model, as illustrated in §5.1. Although the RMSE for the standard AR model was smaller than the RMSE of the SpARS model, only of the reference data were needed to fit the sparse model, while of the reference data were needed to fit the standard AR model. This difference for the amounts of training data was only necessary for the experiment in §5.1 due to the relatively small data sample size with respect to the lag value, for the other experiments, of the reference data were used to train both the sparse and the nonsparse models.

The numerical simulations documented in this paper were performed with computational resources from the Scientific Computing Innovation Center of UNAH, as part of the researh project PI-063-DICIHT. Some experiments were performed on Google Colab and IBM Quantum Lab as well.

References

- Ahmad et al. (2017) Ahmad, S., Lavin, A., Purdy, S., and Agha, Z. (2017). Unsupervised real-time anomaly detection for streaming data. Neurocomputing, 262, 134–147. https://doi.org/10.1016/j.neucom.2017.04.070. Online Real-Time Learning Strategies for Data Streams.

- Cho et al. (2014) Cho, K., van Merriënboer, B., Gulcehre, C., Bahdanau, D., Bougares, F., Schwenk, H., and Bengio, Y. (2014). Learning phrase representations using RNN encoder–decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), 1724–1734. Association for Computational Linguistics, Doha, Qatar. 10.3115/v1/D14-1179. URL https://aclanthology.org/D14-1179.

- Chollet et al. (2015) Chollet, F. et al. (2015). Keras. https://keras.io.

- Khandelwal et al. (2015) Khandelwal, I., Adhikari, R., and Verma, G. (2015). Time series forecasting using hybrid arima and ann models based on dwt decomposition. Procedia Computer Science, 48, 173–179. https://doi.org/10.1016/j.procs.2015.04.167. International Conference on Computer, Communication and Convergence (ICCC 2015).

- Paszke et al. (2019) Paszke, A., Gross, S., Massa, F., Lerer, A., Bradbury, J., Chanan, G., Killeen, T., Lin, Z., Gimelshein, N., Antiga, L., Desmaison, A., Kopf, A., Yang, E., DeVito, Z., Raison, M., Tejani, A., Chilamkurthy, S., Steiner, B., Fang, L., Bai, J., and Chintala, S. (2019). Pytorch: An imperative style, high-performance deep learning library. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett (eds.), Advances in Neural Information Processing Systems 32, 8024–8035. Curran Associates, Inc.

- Saad (2011) Saad, Y. (2011). Numerical Methods for Large Eigenvalue Problems. Society for Industrial and Applied Mathematics. 10.1137/1.9781611970739.

- Vides (2021a) Vides, F. (2021a). Spaar: Sparse signal identification python toolset. URL https://github.com/FredyVides/SPAAR.

- Vides (2021b) Vides, F. (2021b). Sparse system identification by low-rank approximation. CoRR, abs/2105.07522. URL https://arxiv.org/abs/2105.07522.

- Zhang (2003) Zhang, G. (2003). Time series forecasting using a hybrid arima and neural network model. Neurocomputing, 50, 159–175. https://doi.org/10.1016/S0925-2312(01)00702-0.