Compression Ratio Learning and Semantic Communications for Video Imaging

Abstract

Camera sensors have been widely used in intelligent robotic systems. Developing camera sensors with high sensing efficiency has always been important to reduce the power, memory, and other related resources. Inspired by recent success on programmable sensors and deep optic methods, we design a novel video compressed sensing system with spatially-variant compression ratios, which achieves higher imaging quality than the existing snapshot compressed imaging methods with the same sensing costs. In this article, we also investigate the data transmission methods for programmable sensors, where the performance of communication systems is evaluated by the reconstructed images or videos rather than the transmission of sensor data itself. Usually, different reconstruction algorithms are designed for applications in high dynamic range imaging, video compressive sensing, or motion debluring. This task-aware property inspires a semantic communication framework for programmable sensors. In this work, a policy-gradient based reinforcement learning method is introduced to achieve the explicit trade-off between the compression (or transmission) rate and the image distortion. Numerical results show the superiority of the proposed methods over existing baselines.

I Introduction

Cameras have become ubiquitous in robotic systems and enable robots to evaluate the environment, infer their status, and make intelligent decisions. However, modern cameras always suffer from the age-old problem of limited dynamic range caused by the full-well capacity, dark current noise, and read noise [1], and the challenge of motion blur resulting from either object motion during the image exposure or camera motions due to the robot movements.

To overcome some of these challenges, computational imaging techniques have been widely used for generating high dynamic range (HDR) images [2, 3, 4] or high-speed videos [5, 6]. These methods use spatially-varying pixel exposure or pixel-wise coded exposure along with optimization techniques to get improved performance in imaging systems. Among these methods, snapshot compressive imaging (SCI) [7, 8, 9] captures a set of consecutive video frames with one single exposure and is popular for realizing sparse measurements and low requirements on memory, bandwidth, and power [10].

Due to the hardware limitation of conventional cameras, existing SCI systems capture an image by exposing photo-sensitive elements for a fixed exposure time, leading to a fixed temporal compression ratio for all pixels. The fixed compression ratio of the current SCI systems severely limits their abilities to record natural scenes in a measurement-efficient way. With a small compression ratio, more measurements are sampled, increasing the power, memory, and related-resources. With a large compression ratio, fewer samples are needed but the reconstructed video frames have poor quality. If pixels within SCI systems can be generated under different compression ratios, the video compressive sensing system can maintain high quality reconstruction and achieve efficient measurement. However, such a video compressive sensing system not only has special requirements on the hardware, but also requires an optimal pixel-wise compression ratio assignment policy, which is not trivial as both the shot/read noises and object/camera motions will affect the choice of compression ratios.

Fortunately, programmable sensors or focal-plane sensor–processors [11, 12, 13] can vary compression ratios spatially through pixel-level control of exposure time and read-out operations. Recent works in deep optics, on the other hand, demonstrate the superiority of jointly learning optic parameters and image processing methods over the traditional computational imaging techniques with heuristic designs on optics for applications in HDR imaging [14], video compressive sensing [15, 16]111In these deep optic-based works on video compressive sensing, the coded aperture and the exposure time for generating a snapshot image is optimized but the compression ratio, the number of measurements over a time window, is fixed. Different from these works, we focus on studying the shutter speed and read-out frequencies to adjust per-pixel compression ratio. The most significant difference is that some pixel locations will have more measurements than other locations in the captured sensor data of our system., and motion deblurring [17]. Inspired by these pioneering works, we focus on minimizing the number of measurements for various applications and develop a novel video compressive sensing system with pixel-wise compression ratios, where the ratio allocation policy and the video reconstruction algorithms are optimized through a combination of supervised learning and policy gradient reinforcement learning (RL) [18, 19].

In addition to sensor development, we also study the optimal transmission method for sensor data generated by programmable sensors in terms of source and channel coding methods since the raw sensor data collected by the sensors at the robots need to be sent to the admins for human-robot interaction. For the sensing systems with image-type data [17, 15, 20], off-the-shelf codecs (e.g. BPG [21] or JPEG [22]) as well as other deep image compression methods [23] can be used as potential source coding and the fifth‐generation (5G)-standardized LDPC [24] or polar codes [25] can be chosen for channel coding. However, it is sub-optimal to design the source and channel coding for sensor data independently from the deep optics and video reconstruction algorithms for the following two-fold reasons. The distribution of sensor data will be different from that of regular images under the influence of deep optics. Different pixels in the sensor data contribute differently to the following video reconstruction process and should be compressed differently.

To address these challenges, semantic communications [26, 27, 28, 29] are promising solutions. The deep optics and video reconstruction networks define special message generation and interpretation processes between transceivers, which are called semantic encoders and decoders in semantic communications, respectively. The communication systems should be optimized to ensure the interpretations of the messages through semantic decoders are correct rather than the delivery of raw sensor data itself. To realize semantic communications, one potential way is to introduce task-aware compression [30] for programmable sensors and design the compression methods under the guidance of the video reconstruction process. In this case, the channel coding is still designed separately while earlier studies on joint source and channel coding (JSCC) [31] have demonstrated the benefits of co-designing the source and channel coding process. In this work, a semantic communication framework that achieves the joint optimization of deep optics, data compression, channel coding, and reconstruction is designed, which significantly improves the transmission efficiency of sensor data.

Specifically, our contributions can be summarized as follows,

-

•

We introduce a RL-based method for adjusting the parameters in programmable sensors, which differs from existing deep optic methods based on differentiable models or functions [17].

-

•

We build a novel video compressive sensing system with spatially-variant compression ratios, where the ratio allocation policy is learned through an explicit rate-distortion function.

-

•

We introduce a RL-based method for the explicit trade-off between transmission rates and task accuracy in semantic communications, where the rate allocation policy is trained jointly with coding modules.

-

•

We propose a semantic communication system for programmable sensors, realizing the co-design of deep optics, data compression, channel coding, and reconstruction algorithms.

II Video Compressed Sensing with Spatially-variant Ratios

As shown in Fig. 1, the principle of the proposed video compressed sensing system is to learn spatially-variant compression ratios for better imaging quality but with a fewer number of measurements. This idea can be further combined with the existing deep optic-based SCI systems with the pixel-wise coded aperture and shuttering functions [20]. In SCI systems with a fixed compression ratio, a fixed number of measurements will be generated for all spatial locations. While in the proposed system with spatially-variant ratios, some locations will have more measurements than others. For example, given four video frames, two snapshots (m1, m2) will be captured in the current SCI systems with ratio. By contrast, the proposed systems will generate one snapshot with dense measurements (m1) and three other snapshots with sparse measurements (m2, m3, m4). Specifically, spatial locations with ratio will only have measurements on m1 after one readout operation while locations with ratio will have values on all four snapshots after four readout operations. To improve the sensing efficiency, the ratio map should be jointly designed with the deep optics and video reconstruction algorithms. In this section, we will introduce the details of the proposed system including the forward model and the training losses.

II-A System overview

We show the overall pipeline of the proposed sensing system in Fig 2. Denote and as the height and width of video frames. We first generate a compression ratio map, , from a small trainable matrix using a ratio generation network with one convolution layer, three residual blocks, and one transposed convolution layer. The spatial size of the trainable matrix is set as 1/8 of the ratio map. We then simulate the capture of a scene, , with a programmable sensor using and possibly an extra random binary mask, , which is referred to as coded apertures in earlier works [20, 10]. The captured sensor data, , stacked with is then fed into a video reconstruction network to produce a reconstructed video, . Two training losses (, ) are designed to update the learnable matrix, the ratio generation network, and the video reconstruction network to improve the reconstruction quality while at the same time reduce the average compression ratio.

II-B Compression ratio generation

Given video frames, five kinds of compression ratios are considered during the ratio map design, i.e. , , , , . Therefore, each pixel in takes one of the five discrete values. To generate the ratio map, the feature maps generated by the ratio generation network are designed to have five channels. After applying a Softmax function to the channel dimension of the feature maps, each channel indicates the discrete possibility of taking the corresponding ratio. Denote the feature maps representing the discrete probability distributions as . The final ratio map is obtained through a sampling operation according to .

II-C Sensor forward model with varying ratios

The learned ratio map will guide the behaviors of the programmable sensor. The exposure time and readout operations under different ratios are summarized in Fig. 3. We consider that each measurement is read out after a continuous exposure ends and before a new round of exposure begins. Also, the generated ”signal” electrons are cleared after each read-out operation. For example, when the ratio is , each exposure lasts frame time, generating measurements in total. In each exposure, the signal will integrate from 0. For a specific ratio, , the equivalent measurement matrix, , is of size with each row representing one measurement base. For the -th () row, is a binary vector with the column to the column taking values 1 while for other columns 0. For example. . The first row indicates the first measurement is the integrated signal from frame time to while for the second row indicating the second measurement from time to . With the measurement matrices, the sensing process at spatial location with ratio can be modelled as,

| (1) |

where is the signal at spatial location , is the captured sensor data at location , represents the camera exposure function capturing the noise effects of the camera [17].

If the random binary masks, are used to modulate the signal [20], the sensing process can be modelled as,

| (2) |

where represents element-wise multiplication, is the signal at spatial location .

Following [17], we consider two kinds of noises, i.e. shot noise and read noise in the camera exposure function. The level of shot noise is proportional to the signal electrons’ strength. For a signal in [0,1] range, , where is independent of signals. By contrast, the level of read noise is fixed for a camera, depending on the photon flux. We use the same settings for and as [17], that is, and . With the definition of noises, .

Note that increasing will decrease the signal strength of each measurement because of the short exposure. Since the distortion effects of shot noise and read noise on signals will increase as the signal strength decreases, the imaging quality will not increase unlimitedly as increases. Also, using a long exposure will be more beneficial for stationary scenes as the signal strength can increase but not good for moving objects. The best choice of ratios should be a mix of small and large compression ratios for different locations so that the captured sensor data can take benefits from both the long exposure with a larger signal-to-noise ratio and the short exposure with less motion blur.

II-D Video reconstruction network

After obtaining , the next step is to reconstruct the targeted video using a deep neural network. The overall architecture of the proposed video reconstruction network is shown in Fig. 4. Our network consists of three components, an initial reconstruction stage (IR), a fusion network (FN), and a deep reconstruction network (DRN) built based on the single-stage spectral-wise transformers (SST) proposed in [32]. The IR and FN are introduced to mitigate the influence of spatially-variant and . Specifically, if is not considered, we get the initial reconstruction, , in IR by considering Eq. (1),

| (3) |

where is fortunately a diagonal matrix as defined in Sec. II-C. By contrast, if is considered, we first rewrite Eq. (2) as,

| (4) |

where and denotes the diagonal matrix constructed from . In this case, some rows of may be all zero, making non-invertible. Since the row vectors of are orthogonal to each other, we reconstruct by using the non-zero row vectors of independently,

| (5) |

Following the coarse-to-fine criteria, we further use a shallow FN to deal with the spatially-variant coded exposure and ratios, which takes , and possibly as inputs and outputs the second-level reconstruction results, . The architecture of FN is shown in Fig 4.

Next, as illustrated in Fig 4, the DRN takes as the input and reconstructs the final videos, , by cascading three SSTs [32]. Each SST follows the design of U-net [33] with an encoder, a bottleneck, and a decoder. The embedding and mapping block are convolutional layers (conv) with 3×3 kernels. The feature maps in the encoder sequentially pass through one spectral-wise attention block (SAB), one conv with stride 2 (for downsampling), one SAB and one conv with stride 2. The bottleneck is one SAB. The decoder has a symmetrical architecture to the encoder. Following the spirit of U-Net, the skip connections are used for feature aggregation between the encoder and decoder to alleviate the information loss from the downsampling operation. The basic unit of SST is SAB, whose architecture is also shown in Fig 4. It has one feed forward network (FFN), one spectral-wise multi-head self-attention (S-MSA), and two layer-normalization. Different from original MSA that calculates the self-attention along the spatial dimension, S-MSA regards each feature map as a token and calculates the self-attention along the channel dimension, making it computational-effective. More details of S-MSA are explained in [32] 222As the network design of DRN is not the main focus of the paper, we omit some details here for page limits. Interesting readers can refer to the original paper for more details..

II-E Training losses

We use , to train the ratio generation parts and the video reconstruction network, respectively. Specifically, the learnable matrix and the ratio generation network are trained based on policy gradients. In our system, each spatial location in is regarded as an agent and its action space is the available compression ratios. Earlier works have proved the global convergence of policy gradient RL in multi-agency situation [34]. We define the reward of each location, , under action according to the rate-distortion trade-off theory,

| (6) |

where denotes target videos, denotes the mean-squared error (MSE) (or called distortion) of the reconstructed videos at spatial location , denotes the number of measurements employed at location , which can also be viewed as the compression rate, is an introduced parameters for rate-distortion trade-off. Increasing will penalize more on the compression rate, leading to a smaller average compression ratio. As this is not a sequential decision problem, there is no need to define future rewards. Although can only approximately evaluate the effect of action on the as the DRN will aggregate information from neighbouring pixels, it is also the most direct way to evaluate action .

At the same time, the expected reward, , is the value function for spatial location , where the expectation is w.r.t. with probability . Note that, . Following [19], we can approximate the gradient of the to parameters in ratio generation parts with samples generated from ,

| (7) |

where denotes the probability of chosen action from distribution . Note that the update of is based on the average value of from different .

On the other hand, the video reconstruction network is trained in a supervised way based on the MSE between and ,

| (8) |

III Efficient transmission of data from programmable sensors

Sometimes the sensor data captured by robots’ on-device sensors need to be sent to remote servers/devices through wireless channels for data storage or video reconstruction. Efficient transmission of these sensor data requires advanced data compression techniques to reduce the data rates and bandwidth requirement. As discussed above, existing communication systems focusing on reproducing the raw sensor data are sub-optimal. As a part of semantic communications, designing the compression methods concerning the video reconstruction network based on task-aware compression [30] is promising. After compressing the original sensor data into compact bit streams, the transmitter will add parity bits and modulate the streams for robust transmission over unreliable channels using off-the-shelf methods. The number of modulated symbols after channel coding and modulation is the communication cost.

Nevertheless, there is an increasing belief in the communication community that the classic framework based on the Shannon separation theory needs to be upgraded for joint designs [29]. For semantic communication systems, jointly optimizing the channel coding and modulation with the other components may lead to better video reconstruction quality with fewer communication resources.

In this section, we will design semantic communication frameworks for the proposed video compressed sensing systems based on both the task-aware compression and semantic communications with joint designs.

III-A Task-aware compression

III-A1 Architecture

The overall architecture of the deployed deep compression methods for the proposed video compressed sensing systems is shown in Fig. 5. It is mainly based on the architecture used in [30] by substituting the basic feature extraction unit from convolutional blocks to SABs. Some other changes are also made for simplicity333As the learned compression ratio is universal to all scenes, the spatial importance of should also be independent from the contents of , therefore, the side links conditioned on a quality map used in [30] can be safely removed. Also, instead of estimating the quality map by a task loss function, we directly change the the distortion metric in the training loss of [30] to task loss, which should be more precise in describing the spatial importance.. For a sensor data , we first reshape its dimension to , as each spatial location has at most eight measurements. Zero-padding is used for locations with fewer measurements. An encoder, , then takes the reshaped sensor data as inputs and generates a latent representation , which is given by, . is then quantized to by a quantizer. The embedding and mapping blocks are conv3x3. The features inside the encoder subsequently go through five SAB-downsampling pairs. Each downsampling operation will decrease the spatial size of the features by but double the channel dimension. Next, a hyper-encoder, , takes as inputs and generates an image-specific side information via . The channel dimension of the features keeps constant in . Then the quantized side information is saved as a lossless bitstream through a factorized entropy model and entropy coding. After that is forwarded to the hyper-decoder, , to draw the parameters of a Gaussian entropy model, which approximates the distribution of and is used to save as a lossless bitstream. For reconstructing , a decoder, , operates on and generates by . At last, the reconstructed is used for video reconstruction. Note that and , and have symmetric architectures, respectively.

III-A2 Training losses

The goal of task-aware compression is to minimize the length of the bitstreams and the distortion between and . This objective raises an optimization problem of minimizing , where denotes the video reconstruction quality, represents the number of bits used to encode and , and is an introduced trade-off parameter between coding rate and video distortion. Increasing will increase the compression ratio but decrease the video reconstruction quality.

III-A3 Communication costs

After obtaining the compression systems with different compression ratios, we can then estimate the required number of modulated symbols to transmit these bit streams under different channel conditions. Specifically, the number of modulated symbols (in complex number) used for transmitting a bitstream depends on the implemented channel coding rate (e.g. 1/3, 1/2, 2/3) and modulation order (e.g. 4, 16, 64). Suppose the length of bitstream is , the length of modulated symbols can be calculated as . Another way to evaluate the communication costs is to use Shannon capacity theorem, if the signal-to-noise (SNR) ratio is .

III-B Semantic communications with joint design

Although the aforementioned deep compression method enables the co-design of deep optics, algorithms, and source coding, the widely-used entropy coding method [30, 23] prohibits the co-design of communication-specific components with deep compression methods. When entropy coding methods are used, each bit from entropy coding methods needs to be transmitted error-freely; otherwise, error propagation will happen in the entropy decoding process. This property leaves the communication systems with no choice but treat each bit equally and carefully. Semantic communications with joint design, on the other hand, allow the effect of channel noise to be considered during the compression process.

III-B1 Architecture

As shown in Fig. 6, the framework consists of three components: semantic coders, semantic-channel coders, and a rate allocation network (RAN). Semantic coders define a special message generation and interpretation method between transceivers based on a shared knowledge base. Semantic-channel coders directly learn the end-to-end mappings between semantic messages and modulated symbols. RAN is responsible for controlling the transmission rates. Different from deep compression methods that focus on the source coding rate only, the transmission rates in semantic communications depend on source coding rate, channel coding rate, and modulation order.

Specifically, the deep optic methods are special semantic encoders, which encode natural scene into sensor data in a predefined way and the video reconstruction networks, which decode the target video from , are special semantic decoders. The sensor data, , is the semantic message of a scene to be shared between transmitters and receivers. Note that conventional communication systems emphasized the accurate transmission of . With the semantic decoder (defining ), the semantic communication systems should be optimized to maximize the quality of under limited communication costs. Also, this is the first attempt to define the semantic coders in the generation process of source data.

Given , the transmitter will use a semantic-channel encoder (SCE) to generate a predefined maximum number of modulated symbols, , from which some symbols will be chosen to transmit through noisy channels by rate control techniques 444Otherwise, if all the messages are transmitted with the same number of modulated symbols, it disobeys the Shannon source coding theory saying that the minimal possible expected length of codewords should be a function of the entropy of the input word. . This process can be modelled as,

| (9) |

where the number of modulated symbols is measured in real number. The SCE is composed of an embedding layer, three consecutive SAB-downsampling pairs, two stacked SAB-3Conv pairs, and a mapping layer. The size of feature maps after each operation is shown in Fig. 6.

To adjust the communication costs according to the semantic contents of message , the RAN takes as inputs, which are not only generated modulated symbols but also the high-level representations of in feature space, and generates a spatially-variant coding rate map . Each element in the spatial location of indicates how many symbols out of the symbols in at the same spatial location will be kept for transmission. Specifically, takes four discreet value , and only the first symbols of in the channel dimension will be transmitted. The generation of is similar to the generation of in Sec. II-B: the RAN generates a feature map representing the discreet probability distribution of taking each value from and a sampling operation is conducted to generate . With , a mask operation is conducted on to delete unnecessary symbols. The remaining symbols are then reshaped into a vector , where denotes the number of remaining symbols. Next, power normalization is applied to to satisfy power constraints,

| (10) |

Finally, is directly transmitted through wireless environments. The effect of channel noise on can be represented as,

| (11) |

where is multiplicative noise and is additive noise. In additive white Gaussian noise (AWGN) channel, and the value of depends on the current signal-to-noise ratio (SNR). Simultaneously, each element of can be quantized using 2 bits and the bitstream from is also transmitted. Different from the transmission of , the bitstream of is transmitted in an error-free way as in the deep compression methods because a minor error in will make it impossible to reshape the flatten vector back to .

At the receiver side, and are reshaped from and bitstreams respectively. After concatenation, they are fed into a semantic-channel decoder (SCD), which has a symmetric architecture to the SCE. This process can be modelled as,

| (12) |

The recovered sensor data is used to generate videos using the video generation network.

III-B2 Training losses

Similar to Sec. II-E, we use and to train the RAN and SCE/SCD, respectively. Specifically, the RAN is trained based on policy gradients RL, where is the state, describes the actions, and each spatial location of is an agent. Note that the spatial size of is 8 times that of and so the action taken at each spatial location of will have a strong effect on the reconstruction quality of a area of . Considering this, we first define and apply a average pooling to , obtaining . We then define the reward of location , , under action as follows,

| (13) |

where describes the distortion caused by the agent at spatial location , is the transmission rate (also the action) at , is an introduced parameter for rate-distortion trade-off. Increasing will penalize more on the transmission rate, leading to fewer modulated symbols to be transmitted. We adjust the communication costs by tuning .

At the same time, the expected reward, , is the value function for spatial location . and the gradient of to the parameters in RAN can be approximated as follows,

| (14) |

Note that the update of is based on the average value of from different locations.

On the other hand, the SCE and SCD are trained in a supervised way based on the MSE between and ,

| (15) |

III-B3 Communication costs

The communication costs consist of two parts: the transmission of and . As is of shape , the number of complex modulated symbols used to transmit can be denoted as . On the other hand, is of shape and each element can be represented by 2 bits, therefore the bitstream length from can be modelled as . If the channel coding rate is and the modulation order is , the length of the modulated symbols for can be calculated as . The total communication costs are .

IV Experiments

In this section, we first demonstrate the superiority of the proposed video compressed sensing system in terms of sampling rate. Based on the developed sensors, we then evaluate the performance of different communication systems regarding communication costs.

IV-A Video imaging with spatially-variant compression ratios

The following experiments are conducted to evaluate the effectiveness of the method proposed in Sec. II.

IV-A1 Dataset

Following [17], we use the Need for Speed (NfS) dataset [35] to train the network and evaluate its performance. The NfS dataset is collected with significant camera motions and suitable for representing the scene captured by moving robots’ on-board cameras. The dataset consists of 100 videos obtained from the internet, from which 80 are used for training and 20 for testing. Each video is captured at 240 frames per second (fps) with a resolution that we center crop to . For each video, we select 80 random -frame-long segments within the video, therefore in our experiments. The images are turned to gray images and normalized to be within . Our input and output to the end-to-end model are both the 16-frame video segment.

IV-A2 Implementation details

Our model is implemented in PyTorch. The ratio generation parts are trained with the SGD optimizer (momentum=0.9) at a learning rate of . The video reconstruction network is trained with the Adam optimizer at a learning rate of . The training process is as follows. We first fix the ratio at for all spatial locations and train the video reconstruction network for 100 epochs. After that, we gradually increase in Eq. (6) from to and jointly train the ratio generation parts and video reconstruction network. For each , we train for about epochs. For baselines with a fixed compression ratio for all locations, we set the ratio from to and train the video reconstruction network for epochs in each fixed ratio. Furthermore, we consider both cases where the binary mask, , is used or not used. When is used, it is randomly initialized and fixed during the experiments.

IV-A3 Results without binary mask

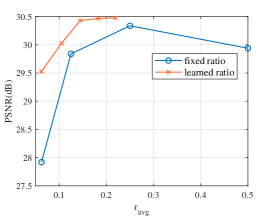

We first compare our method with its fixed-ratio version when is not used. We use the peak signal-to-noise ratio (PSNR), , as the performance metric. The results are shown in Fig. 7, where denotes the average compression ratio for all spatial locations. As shown in Fig. 7, the imaging quality increases along with the growth of for both methods until reaches where the effects of shot noise and read noise surpass the growth of the number of measurements. From the figure, the proposed method with learned ratios has a significant performance gain over the method with fixed ratio. For example, when , the learned-ratio method has nearly dB gain over the fixed-ratio method, demonstrating the superiority of learning a compression ratio map in reducing the number of measurement samples.

IV-A4 Results with binary mask

We then consider and show the performance comparison in Fig. 8. Due to the usage of binary mask, the system performance of the fixed-ratio method increases when , showing the positive effect of coded apertures. However, as using will decrease the signal strength, the performance of fixed-ratio method decreases when increases when compared to the same method without in Fig. 7. From the Figure, the proposed method with learned ratio also has a steady performance gain over the fixed-ratio method, further proving the effectiveness of the developed sensors.

IV-A5 Learned compression ratio maps

We show the learned ratio maps when is used in Fig. 9. As discussed above, there are only five ratio choices from to and their colors are shown in the color bar. From the figure, the learned ratio maps have fixed patterns for a local area. The patterns are different for different . Besides, the learned ratio maps are a mix of low ratios and high ratios so that the benefits from both long exposure and low exposure can be considered.

IV-A6 Visual results

IV-B Semantic communications for programmable sensors

In this subsection, we will first evaluate semantic communication frameworks based on the task-aware compression and the joint design, respectively. After that, we will compare semantic communications with the existing transmission methods.

In the following experiments, we first restore the network parameters related to the sensors from pretrained models in the previous subsection and fix them when training the semantic communication frameworks. We use the sensor network with in Fig. 7. The experiments are called fixed-sensing experiments. Next, we jointly train the sensing network with the communication parts, which are called joint-sensing experiments.

IV-B1 Channel condition

We assume the sensor data is transmitted through the AWGN channel with dB.

IV-B2 Implementation details of different semantic communication frameworks

We now describe in more details about the implementations of different semantic communication frameworks.

-

•

Task-aware compression plus capacity-achieving channel coding (Compr+Cap): We first convert the sensor data into bitstreams and then assume the transmission of the bitstreams can reach Shannon channel capacity. In the considered channel condition, . Note that it is hard to achieve Shannon capacity in real systems, so its performance can only be regarded as an ideal reference for compression methods. To adjust the communication costs, we train the compression network under different . During training, we first set and gradually decrease to increase the bitstream length. The network is first trained with the Adam optimizer at a learning rate of for about epochs and then at for another epochs under each .

-

•

Task-aware compression plus LDPC plus QAM (Compr+LDPC): The bitstreams from task-aware compression methods are transmitted through LDPC channel coding and quadrature amplitude modulation (QAM) modulation. As stated in earlier work [27], when dB for AWGN channels, we should use 16QAM and 2/3 LDPC. In this case, .

-

•

Semantic communications without RAN (SemCom+noRAN): In earlier task-oriented semantic communications [29], the RAN is not implemented and all the source data is transmitted with the same communication costs. We also delete the RAN in the proposed framework and demonstrate its performance as a reference. To adjust the communication costs, we change the channel dimension of in Eq. (9) from to . The network is trained for about epochs at a learning rate of and then for epochs at under each dimension of .

-

•

Semantic communications (SemCom): This is the proposed end-to-end semantic communication framework with rate control. To adjust the transmission rate, we train the network under different in Eq. (13). The training consists of two steps: we first set for all locations and train the SCE/SCD with all 48 symbols used for about epochs. After that, we set and gradually increase it to decrease communication costs. The RAN is trained with the SGD optimizer and the other parts are trained with the Adam optimizer at a learning rate of for epochs and at for another epochs under each . For low situations, we transmit the first symbols of rather than . Also, exploration strategies are used when sampling . Specifically, the sampling probability is set to , where we set ==== to encourage the RAN to explore more on other actions so that the SCE/SCD can perform well on all actions.

IV-B3 Comparison of semantic communications in fixed-sensing experiments

We show the performance comparison of different semantic communication methods in the fixed-sensing experiment in Fig. 11, where denotes the average number of modulated symbols used for the video clips in the test dataset. From the figure, the ’Compr+LDPC’ performs slightly better than ’SemCom+noRAN’ while the proposed ’SemCom’ method outperfroms these methods to a relatively large extend, showing the advantage of jointly designing the channel coding and modulation. It also demonstrates the effectiveness of directly implementing the rate-distortion trade-off on the modulated symbols through the proposed RAN. Furthermore, the proposed ’SemCom’ has a similar performance with ’Compr+Cap’, showing that semantic communications with joint designs are promising ways to approach Shannon capacity.

IV-B4 Comparison of semantic communications in joint-sensing experiments

The performance comparison of different semantic communication methods in the joint-sensing experiment is shown in Fig. 12. From the figure, SemCom performs significantly better than ’Compr+LDPC’ and even surpasses the ’Compr+Cap’ in large cases, further proving the benefits of joint designs. However, we cannot conclude for sure that semantic communications can surpass Shannon capacity as the performance of ’Compr+Cap’ depends on our implementation of task-aware compression methods.

IV-B5 Implementation of conventional communication methods

We now explain how the sensor data can be transmitted in conventional communication systems and introduce their implementation details.

-

•

Transmit sensor data by joint source and channel coding (Sensordata+JSCC): In conventional communication systems, the most straightforward way is to directly transmit the raw sensor data regardless of its usage. To simulate this process, the raw sensor data need to be compressed and channel-coded. Recent works on deep JSCC [31] have shown that training a network for joint source and channel coding can perform better than using standardized image compression methods and channel coding methods. Therefore, we follow its design and build a deep network similar to SCE/SCD to transmit the raw sensor. The network is optimized by the mean-squared error (MSE) between original sensor data and reconstructed sensor data.

-

•

Transmit reconstructed video by H.264 video coding plus LDPC plus QAM (Video+H.264+LDPC): Another choice is to reconstruct the video first via a locally-deployed video reconstruction network and then transmit the reconstructed video. In this way, the computational-costive reconstruction network need to be run at the transmitter. To transmit the video, we use H.264 [36] for video source coding, LDPC for channel coding, and QAM for modulation.

IV-B6 Comparison of semantic communications with conventional communication systems

The performance comparison between semantic communications and conventional communication systems is shown in Fig. 13. From the figure, Sensordata+JSCC performs the worst. This is because the important data for video reconstruction networks does not get targeted protection during transmission. Video+H.264+LDPC performs better than Sensordata+JSCC as the videos have been reconstructed at the transmitter side. However, this method is still not as effective as SemCom, which shows we can achieve the efficient transmission of sensor data without running time-consuming and resource-costive reconstruction algorithm at the transmitter side.

V Conclusion

In this work, we propose a novel video imaging system with learned compression ratios. We also propose a semantic communication framework for programmable sensors with task-oriented reconstruction algorithms. From the perspective of algorithm development, we show that by combining the policy-gradient reinforcement learning and supervised learning, we can achieve the explicit (compression or transmission) rate-distortion trade-off in different cases. The proposed training pipelines can be extended to many other applications.

References

- [1] P. E. Debevec and J. Malik, “Recovering high dynamic range radiance maps from photographs,” in Seminal Graph. Pap.: Pushing Boundaries, Vol. 2, 2023, pp. 643–652.

- [2] S. W. Hasinoff, D. Sharlet, R. Geiss, A. Adams, J. T. Barron, F. Kainz, J. Chen, and M. Levoy, “Burst photography for high dynamic range and low-light imaging on mobile cameras,” ACM Trans. Graph., vol. 35, no. 6, pp. 1–12, 2016.

- [3] S. K. Nayar and T. Mitsunaga, “High dynamic range imaging: Spatially varying pixel exposures,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit., vol. 1. IEEE, 2000, pp. 472–479.

- [4] Nayar and Branzoi, “Adaptive dynamic range imaging: Optical control of pixel exposures over space and time,” in Proc. IEEE Int. Conf. Comput. Vis. IEEE, 2003, pp. 1168–1175.

- [5] J. Gu, Y. Hitomi, T. Mitsunaga, and S. Nayar, “Coded rolling shutter photography: Flexible space-time sampling,” in Proc. IEEE Int. Conf. Comput. Photography. IEEE, 2010, pp. 1–8.

- [6] A. C. Sankaranarayanan, P. K. Turaga, R. G. Baraniuk, and R. Chellappa, “Compressive acquisition of dynamic scenes,” in Proc. Eur. Conf. Comput. Vis. Springer, 2010, pp. 129–142.

- [7] P. Llull, X. Liao, X. Yuan, J. Yang, D. Kittle, L. Carin, G. Sapiro, and D. J. Brady, “Coded aperture compressive temporal imaging,” Opt. Express, vol. 21, no. 9, pp. 10 526–10 545, 2013.

- [8] A. Wagadarikar, R. John, R. Willett, and D. Brady, “Single disperser design for coded aperture snapshot spectral imaging,” Appl. Opt., vol. 47, no. 10, pp. B44–B51, 2008.

- [9] A. A. Wagadarikar, N. P. Pitsianis, X. Sun, and D. J. Brady, “Video rate spectral imaging using a coded aperture snapshot spectral imager,” Opt. Express, vol. 17, no. 8, pp. 6368–6388, 2009.

- [10] X. Yuan, Y. Liu, J. Suo, and Q. Dai, “Plug-and-play algorithms for large-scale snapshot compressive imaging,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2020, pp. 1447–1457.

- [11] S. J. Carey, A. Lopich, D. R. Barr, B. Wang, and P. Dudek, “A 100,000 fps vision sensor with embedded 535gops/w 256 256 simd processor array,” in 2013 Symp. VLSI Circuits. IEEE, 2013, pp. C182–C183.

- [12] J. Chen, S. J. Carey, and P. Dudek, “Feature extraction using a portable vision system,” in IEEE/RSJ Int. Conf. Intell. Robots Syst., Workshop Vis.-based Agile Auton. Navigation UAVs, vol. 2, 2017.

- [13] M. Z. Wong, B. Guillard, R. Murai, S. Saeedi, and P. H. Kelly, “Analognet: Convolutional neural network inference on analog focal plane sensor processors,” arXiv preprint arXiv:2006.01765, 2020.

- [14] C. A. Metzler, H. Ikoma, Y. Peng, and G. Wetzstein, “Deep optics for single-shot high-dynamic-range imaging,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2020, pp. 1375–1385.

- [15] J. N. Martel, L. K. Mueller, S. J. Carey, P. Dudek, and G. Wetzstein, “Neural sensors: Learning pixel exposures for hdr imaging and video compressive sensing with programmable sensors,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 42, no. 7, pp. 1642–1653, 2020.

- [16] M. Iliadis, L. Spinoulas, and A. K. Katsaggelos, “Deepbinarymask: Learning a binary mask for video compressive sensing,” Digit. Signal Process., vol. 96, p. 102591, 2020.

- [17] C. M. Nguyen, J. N. Martel, and G. Wetzstein, “Learning spatially varying pixel exposures for motion deblurring,” in Proc. IEEE Int. Conf. Comput. Photogr. (ICCP). IEEE, 2022, pp. 1–11.

- [18] R. S. Sutton, D. McAllester, S. Singh, and Y. Mansour, “Policy gradient methods for reinforcement learning with function approximation,” Adv. Neural Inf. Process. Syst., vol. 12, 1999.

- [19] S. Liu, Z. Zhu, N. Ye, S. Guadarrama, and K. Murphy, “Improved image captioning via policy gradient optimization of spider,” in Proc. IEEE Int. Conf. Comput. Vis., 2017, pp. 873–881.

- [20] E. Vargas, J. N. Martel, G. Wetzstein, and H. Arguello, “Time-multiplexed coded aperture imaging: Learned coded aperture and pixel exposures for compressive imaging systems,” in Proc. IEEE Int. Conf. Comput. Vis., 2021, pp. 2692–2702.

- [21] F. Bellard. (2018) BPG image format. [Online]. Available: https://bellard.org/bpg/

- [22] G. K. Wallace, “The jpeg still picture compression standard,” Commun. ACM, vol. 34, no. 4, pp. 30–44, 1991.

- [23] J. Ballé, V. Laparra, and E. P. Simoncelli, “End-to-end optimized image compression,” in Int. Conf. Learn. Represent., 2017.

- [24] J. Thorpe, “Low-density parity-check (ldpc) codes constructed from protographs,” IPN Prog. Rep., vol. 42, no. 154, pp. 42–154, 2003.

- [25] H. Vangala, E. Viterbo, and Y. Hong, “A comparative study of polar code constructions for the awgn channel,” arXiv preprint arXiv:1501.02473, 2015.

- [26] Z. Qin, X. Tao, W. Tong, J. Lu, and G. Y. Li, “Semantic communications: Principles and challenges,” arXiv preprint arXiv:2201.01389, 2021.

- [27] B. Zhang, Z. Qin, and G. Y. Li, “Semantic communications with variable-length coding for extended reality,” IEEE J. Sel. Top., pp. 1–14, 2023.

- [28] Q. Lan, D. Wen, Z. Zhang, Q. Zeng, X. Chen, P. Popovski, and K. Huang, “What is semantic communication? a view on conveying meaning in the era of machine intelligence,” J. Commun. Inf. Netw., vol. 6, no. 4, pp. 336–371, 2021.

- [29] M. Jankowski, D. Gündüz, and K. Mikolajczyk, “Wireless image retrieval at the edge,” IEEE J. Sel. Areas Commun., vol. 39, no. 1, pp. 89–100, 2020.

- [30] M. Song, J. Choi, and B. Han, “Variable-rate deep image compression through spatially-adaptive feature transform,” in Proc. IEEE Int. Conf. Comput. Vis., 2021, pp. 2380–2389.

- [31] E. Bourtsoulatze, D. B. Kurka, and D. Gündüz, “Deep joint source-channel coding for wireless image transmission,” IEEE Trans. Cogn. Commun. Netw., vol. 5, no. 3, pp. 567–579, 2019.

- [32] Y. Cai, J. Lin, Z. Lin, H. Wang, Y. Zhang, H. Pfister, R. Timofte, and L. M. Van Gool, “Multi-stage spectral-wise transformer for efficient spectral reconstruction,” in Proc. IEEE/CVF Conf. Comput. Vis. Pattern Recognit., 2022, pp. 19–20.

- [33] O. Ronneberger, P. Fischer, and T. Brox, “U-net: Convolutional networks for biomedical image segmentation,” in Proc. Med. Image Comput. Comput.-Assist. Interv. Springer, 2015, pp. 234–241.

- [34] S. Leonardos, W. Overman, I. Panageas, and G. Piliouras, “Global convergence of multi-agent policy gradient in markov potential games,” in Proc. Int. Conf. Learn. Represent., 2021.

- [35] H. Kiani Galoogahi, A. Fagg, C. Huang, D. Ramanan, and S. Lucey, “Need for speed: A benchmark for higher frame rate object tracking,” in Proc. IEEE Int. Conf. Comput. Vis., 2017, pp. 1125–1134.

- [36] T. Wiegand, G. J. Sullivan, G. Bjontegaard, and A. Luthra, “Overview of the h. 264/avc video coding standard,” IEEE Trans. Circuits Syst. Video Technol., vol. 13, no. 7, pp. 560–576, 2003.