Completion Delay of Random Linear Network Coding in Full-Duplex Relay Networks

Abstract

As the next-generation wireless networks thrive, full-duplex and relay techniques are combined to improve the network performance. Random linear network coding (RLNC) is another popular technique to enhance the efficiency and reliability of wireless communications. In this paper, in order to explore the potential of RLNC in full-duplex relay networks, we investigate two fundamental perfect RLNC schemes and theoretically analyze their completion delay performance. The first scheme is a straightforward application of conventional perfect RLNC studied in wireless broadcast, so it involves no additional process at the relay. Its performance serves as an upper bound for all perfect RLNC schemes. The other scheme allows sufficiently large buffer and unconstrained linear coding at the relay. It attains the optimal performance and serves as a lower bound for all RLNC schemes. For both schemes, closed-form formulae to characterize the expected completion delay at a single receiver as well as for the whole system are derived. Numerical results are also demonstrated to validate the theoretical characterizations, and compare the two fundamental schemes with the existing one.

Index Terms:

Full-duplex, relaying, random linear network coding (RLNC), completion delay, throughputI Introduction

Among the emerging techniques for promoting evolution of the next generation (5G) wireless networks, relay techniques [2] is widely considered for the purpose of catering to the ever-growing demand for throughput and coverage. As an up-and-coming paradigm, network coding (NC) and particularly random linear network coding (RLNC) [3] has shown great capabilities to realize higher transmission efficiency and throughput in wireless communications. Different NC schemes have shown significant gains in a multitude of wireless transmission scenarios, from wireless broadcast [4, 5, 6, 7, 8, 9] and wireless sensor networks [10], to D2D [11] and Wi-Fi direct transmission [12]. A considerable amount of research (e.g., [13, 14, 15, 16, 17]) has investigated the combination of relay techniques with RLNC for the two-hop relay networks, which are the network model proposed in IEEE 802.16j and 3GPP LTE-Advanced [18, 19] for the sake of simplicity and explicitness of system design. In particular, in the two-hop relay network depicted in Fig. 1 with the relay station operating in the half-duplex mode, Ref. [17] proposed an RLNC scheme with online scheduling that can achieve near-optimal completion delay performance, a key metric for transmission efficiency (e.g., [9],[13, 14, 15, 16, 17],[20],[21]).

Compared with the traditional half-duplex relay, the full-duplex relay can potentially double the throughput by simultaneously fetching and forwarding data [22, 23]. Moreover, with recent developments in the self-interference cancellation technology [24], the full-duplex relay has been considered as a key component to construct versatile networks in 5G. In the literature, there have been studies on combining full-duplex relaying with NC for performance enhancement over broadcast networks. For example, physical-layer NC has been applied in full-duplex relay networks with multiple receivers [25]. An NC-based Automatic Repeat Request (ARQ) scheme [26] was proposed to enhance the downlink throughput for a two-way full-duplex relay network. When the relay station in Fig. 1 operates in the full-duplex mode, the recent work [27] proposed an RLNC scheme with scheduling, known as FBPF (Fewest Broadcast Packet First), which demonstrated a better throughput (equivalently, completion delay) performance than the ARQ. FBPF assumes full linear independence among the packets generated by the source, so it belongs to perfect RLNC, which is always considered in analyzing the optimal performance RLNC can achieve (e.g., [4, 28, 29, 13, 17]). Even though FBPF is a type of perfect RLNC, it does not shed light on the best possible completion delay performance that RLNC can achieve because it does not fully utilize the packets buffered in the relay, i.e., it does not invoke coding while stores and forwards packets via an unnecessarily large buffer at the relay.

In this paper, in order to further explore the potential of RLNC in the full-duplex relay network in Fig. 1, we are inspired to investigate two fundamental perfect RLNC schemes and study their completion delay performance. The main contributions of this paper are summarized as follows.

-

•

We first investigate perfect RLNC without buffer, which does not involve any buffer or additional process at the relay. As a result, this scheme provides a fundamental performance guarantee among all possible perfect RLNC schemes. For this scheme, explicit formulae of expected completion delay at a single receiver as well as for the system are deduced.

-

•

We then investigate perfect RLNC with buffer, which allows sufficiently large buffer and unconstrained linear coding at the relay. It turns out that it attains the best completion delay performance among all RLNC schemes. For this scheme, by modeling the transmission process as a Markov chain, we deduce a closed-form formula of the expected completion delay at a single receiver, which involves combinatorial numbers related to Schroeder paths. In order to compute the expected completion delay at a single receiver in a more handy manner, we further derive a recursive formula for it.

-

•

For perfect RLNC with buffer, we also model such a Markov chain that the expected system completion delay can be calculated by a formula built upon its -step transition probability matrix, whose size grows exponentially with the increasing number of receivers. Furthermore, we characterize a non-trivial closed-form lower bound, which is the maximum of two individual ones that can be explicitly and recursively computed. The first stems from the expected system completion delay in wireless broadcast, and the other is selected to be the expected completion delay at a single receiver with the worst channel condition.

For full-duplex relay networks, the formulae of the expected completion delay of perfect RLNC without buffer obtained in this paper serve as upper bounds for the completion delay performance of all perfect RLNC schemes, including FBPF [27], the relay-centered RLNC schemes [13], and the one adapted from [17]. The formulae of the expected completion delay of perfect RLNC with buffer obtained in this paper serves as lower bounds for the completion delay performance of all RLNC schemes, including Fulcrum codes [30], DSEP Fulcrum codes [31], Sparse RLNC [32], Telescopic codes[33]. The study of this paper provides a theoretical guideline for future works on the detailed design of RLNC-based transmission.

We would like to remark that when the RS in the system operates in the half-duplex mode, the completion delay performance of perfect RLNC was analyzed in [17], but it does not shed light on the completion delay analyses in this paper, because its focus is to determine whether to fetch a packet from the BS or to broadcast a packet to the receivers at every timeslot at the RS.

The remainder of this paper is organized as follows. Section II introduces the system model and two fundamental perfect RLNC schemes. Section III theoretically analyzes the expected completion delay of two fundamental schemes. Section IV provides extensive numerical analyses to justify the theoretical characterizations and compare the two fundamental schemes’ performance with FBPF. Section V concludes the paper.

Throughout the paper, we shall use and to respectively represent an identity matrix and an all- matrix, where the matrix size, if not explained, can be inferred in the context.

II System Model and Two Fundamental Perfect RLNC Schemes

II-A System Model

We consider the two-hop full-duplex relay network depicted in Fig. 1, where a base station (BS) attempts to deliver packets to a set of receivers via a full-duplex relay station (RS) with a limited buffer size. The network transmission is considered to be time-slotted, that is, at every timeslot, the BS can deliver one packet to the RS, while the full-duplex RS can simultaneously fetch a coded packet from the BS and broadcast a coded packet to all receivers. The memoryless channel between the BS and the RS, together with the channel between the RS and every receiver , are subject to independent random packet erasures with erasure probability and , respectively. Every receiver is interested in recovering all original packets. Herein, the completion delay refers to the total number of packets transmitted by the BS before every receiver is able to recover all original packets. Notice that the definition of completion delay is the same as the one in [13][27], which takes the initial packets transmitted by the BS into account, so it is slightly different from the one in [13][6]. The packet number divided by the completion delay is set as a measurement of throughput in [27].

In practice, at every timeslot, even though the BS and the RS transmit simultaneously, the RS obtains what the BS transmits at the end of the timeslot. Thus, for the RS, the broadcast process is always one timeslot behind the reception process, i.e., what the RS broadcasts at timeslot has nothing to do with what it receives at timeslot . Same as the consideration in [13], in this paper, we shall also ignore this constant timeslot lag at the RS and this assumption does not affect the analysis on the completion delay performance.

For the full-duplex relay network, Ref. [27] has proposed the FBPF scheduling scheme, in which if the RS buffer is not empty, the RS selects a packet that has been broadcast the fewest number of times from the unlimited buffer, and broadcasts the selected packets to the receivers. Since perfect RLNC is adopted in the design of the FBPF scheme, any different packets received by a receiver are assumed to be linearly independent.

II-B Two Fundamental Perfect RLNC Schemes

Since the FBPF perfect RLNC scheme permits unlimited buffer at the RS for additional scheduling procedures, it is not a perfect RLNC scheme with the simplest setting at the RS. Thus, it does not reflect a fundamental performance guarantee provided by perfect RLNC in the full-duplex relay network, that is, there exist other perfect RLNC schemes with simpler settings at the RS and their completion delay performance is not as good as that of the FBPF scheme. On the other hand, the FBPF perfect RLNC scheme cannot yield the best performance gain in terms of completion delay as it does not involve NC at the RS.

In order to study the fundamental completion delay performance of perfect RLNC in the full-duplex relay network, we consider two basic perfect RLNC schemes, one without buffering at the RS and the other with buffering and recoding at the RS.

The first scheme, called perfect RLNC without buffer, does not require buffer and thus there is no recoding at the RS. The role of the RS is just to directly forward the received packets. At every timeslot, the BS delivers one coded packet, which is a random linear combination of original packets, to the RS. If the RS successfully receives the coded packet, then it will broadcast it to all receivers. Otherwise, the RS will not transmit anything. Notice that the full-duplex RS can simultaneously fetch the coded packet from the BS and broadcast it to all receivers. As perfect RLNC is considered, any coded packets generated by the BS are assumed linearly independent and sufficient to recover the original packets. As a result, once every receiver obtains packets, the transmission completes. Since this scheme does not involve any extra operation at the RS, it is the most straightforward and simplest application of perfect RLNC in the full-duplex relay network. As a result, it provides a fundamental performance guarantee for all perfect RLNC schemes designed for the full-duplex relay network in terms of completion delay.

The other scheme, called perfect RLNC with buffer, assumes buffer size and no coding constraints at the RS. At each timeslot, the BS transmits a coded packet to the RS, and if the RS receives the packet and its buffer is not full, then it stores the received packet in its buffer. Meanwhile, no matter whether the RS successfully receives the packet from the BS or not, it broadcasts a packet which is a random linear combination of all the packets stored in the buffer. Due to the causality at the RS to firstly buffer received packets and then broadcast a linear combination of buffered packets to receivers, the number of linearly independent packets obtained at a receiver is always no larger than the number of packets buffered at the RS. Once every receiver obtains linearly independent packets, the transmission completes. As perfect RLNC is considered, the original packets can be recovered from any coded packets generated by the BS. Moreover, there is no coding constraint at the RS in this scheme. As a result, as long as the RS stores coded packets transmitted from the BS, the original packets can be recovered from any randomly generated coded packets at the RS. This justifies why setting the buffer size to is sufficient, and actually, when the RS successfully stores coded packets transmitted from the BS in the buffer, there is no need for the BS to transmit new coded packets to the RS at all. To sum up, the scheme perfect RLNC with buffer attains the best completion delay performance among all perfect RLNC schemes in the full-duplex relay network.

III Completion Delay Analysis

One of the main contributions in this paper is to theoretically analyze the expected completion delay of the benchmark schemes introduced in Sec. II-B, that is, the perfect RLNC scheme without buffer and with buffer, respectively.

III-A Perfect RLNC without Buffer

In perfect RLNC without buffer, the completion delay at a single receiver , denoted by , is defined to be the number of packets the BS transmits till receiver is able to recover all original packets, and the completion delay for the system, denoted by , is defined as

The completion delay at a single receiver follows the negative binomial distribution with the probability mass function , so that the expectation of is equal to

| (1) |

Proposition 1.

The expected completion delay of the perfect RLNC scheme without buffer is

| (2) |

where is the regularized incomplete beta function.

Proof:

Let denote the number of packets broadcast from the RS till every receiver is able to decode all original packets. Since the considered perfect RLNC scheme does not have any buffer at the RS, any out of the packets broadcast from the RS are linearly independent. Thus, can be regarded as the completion delay for the wireless broadcast system with original packets, receivers and independent erasure probability . By Theorem 1 in [6],

| (3) |

On the other hand, as the RS immediately broadcasts a packet it successfully receives from the BS, also represents the number of successfully received packets from the BS at the RS, and just represents the number of transmissions from the BS till the RS successfully receives packets. As it takes on average transmissions to successfully receive one packet at the RS from the BS, , which implies (2) based on (3). ∎

For the special case that the channel from the RS to every receiver is perfect, i.e., , the full-duplex relay network becomes essentially the same as a point-to-point transmission, so the system completion delay of both perfect RLNC schemes considered herein follows the negative binomial distribution with the expected value . For the other special case , the full-duplex relay network degenerates to the wireless broadcast with erasure probability , so is given by , same as the one obtained in [6].

III-B Perfect RLNC with Buffer, Single Receiver Case

In perfect RLNC with buffer, the completion delay at receiver is denoted by , where means the number of original packets generated by the source and to be recovered at every receiver. The system completion delay, denoted by , is defined as

In order to characterize the expected completion delay , we first recall the following combinatorial number

| (4) |

The Schroeder path (see, e.g., [34]) from to is a path with possible movement , , in every step transition and with for every point in the path. Then, represents the number of Schroeder paths from to with step transitions.

Theorem 2.

At a single receiver , the expected completion delay of the perfect RLNC scheme with buffer is

| (5) |

Proof:

Model the transmission process as a Markov chain consisting of states. Every state, labeled as , represents the scenario that the RS and the receiver have respectively obtained and packets. Due to the causality at the RS to firstly buffer received packets (from the BS) and then broadcast a random linear combination of the buffered packets to receivers, all states in have , and the only absorbing state in is (assuming ). The -step transition probability from a transient state to another state is given by

-

•

for , ;

-

•

for , ,,,.

-

•

for , ,.

Let denote the matrix of -step transition probabilities among all transient states. Assume the states are ordered lexicographically, so that the first row/column in is indexed by the state . By the standard technique to calculate the expected transition times from a transient state to an absorbing one (see, e.g., Sec. 4.6 in [35]), the expected completion delay can be formulated as

| (6) |

where represents the -dimensional row unit vector with the first entry equal to . The details to derive (5) based on (6) is provided in Appendix--A. ∎

Remark.

In the literature, Ref. [9, 13, 37, 30, 36] also studied the completion delay performance from a Markov chain approach in different network settings. For example, [9] characterized the system completion delay for wireless broadcast with feedback by means of a moment generating function. For wireless broadcast with receivers, Ref. [36] characterized the cumulative distribution function of the system completion delay of RLNC by means of a Markov chain model. For the relay-centered network with a single receiver, Ref. [13] established a Markov chain model to obtain an implicit recursive formula for the expected completion delay of perfect RLNC. However, in the above references, there is no explicit expression for the completion delay studied. In comparison, we also adopt the Markov chain approach to model the transmission process in full-duplex relay networks, and by deliberate analysis we obtain a closed-form formula of the completion delay at a single receiver.

With increasing, the calculation of according to (5) becomes tedious because it involves the combinatorial number , which can be extremely large. Stemming from (5), we next deduce an equivalent recurrence expression for .

Corollary 3.

can be recursively expressed as

| (10) |

with the initial value .

Proof:

Same as in the proof of Theorem 2, write for short. Thus, can be expressed as

| (11) |

First, it is trivial to see .

Recall that represents the number of Schroeder paths from to with exactly step transitions. Moreover, for every Schroeder path in , the number of step transitions that are in the form of from to , from to and from to are respectively equal to , and . Now assign a weight to every step transition as follows. If the step transition is from to , then its weight is ; if the step transition is from to or from to , then its weight is . For every Schroeder path, define its weight as the product of all the weights for the step transitions in the path. Thus, based on (11), can be regarded as the sum of weights of all Schroeder paths from to .

All Schroeder paths from to can be partitioned into categories. The first category consists of all those Schroeder paths that contain the step transition from to , which has weight . Thus, the sum of weights of all Schroeder paths in the first category is equal to . For , the category consists of all those Schroeder paths that satisfy the followings:

-

•

the step transitions from to and from to are contained;

-

•

the step transitions from to is equivalent to a Schroeder path from to ;

-

•

the step transitions from to is equivalent to a Schroeder path from to .

As a result, the sum of weights of all Schroeder paths in category equals to . To add up the weights of all Schroeder paths in all categories, we obtain

that is, (10) holds. ∎

Based on (9) and (10), can be recursively computed for arbitrarily large . It is also interesting to observe from Eq. (1), (5), (9) and (10) that the parameters and have the same impact on the expected completion delay at a single receiver for both perfect RLNC without buffer and perfect RLNC with buffer.

III-C Perfect RLNC with Buffer, Multiple-Receiver Case

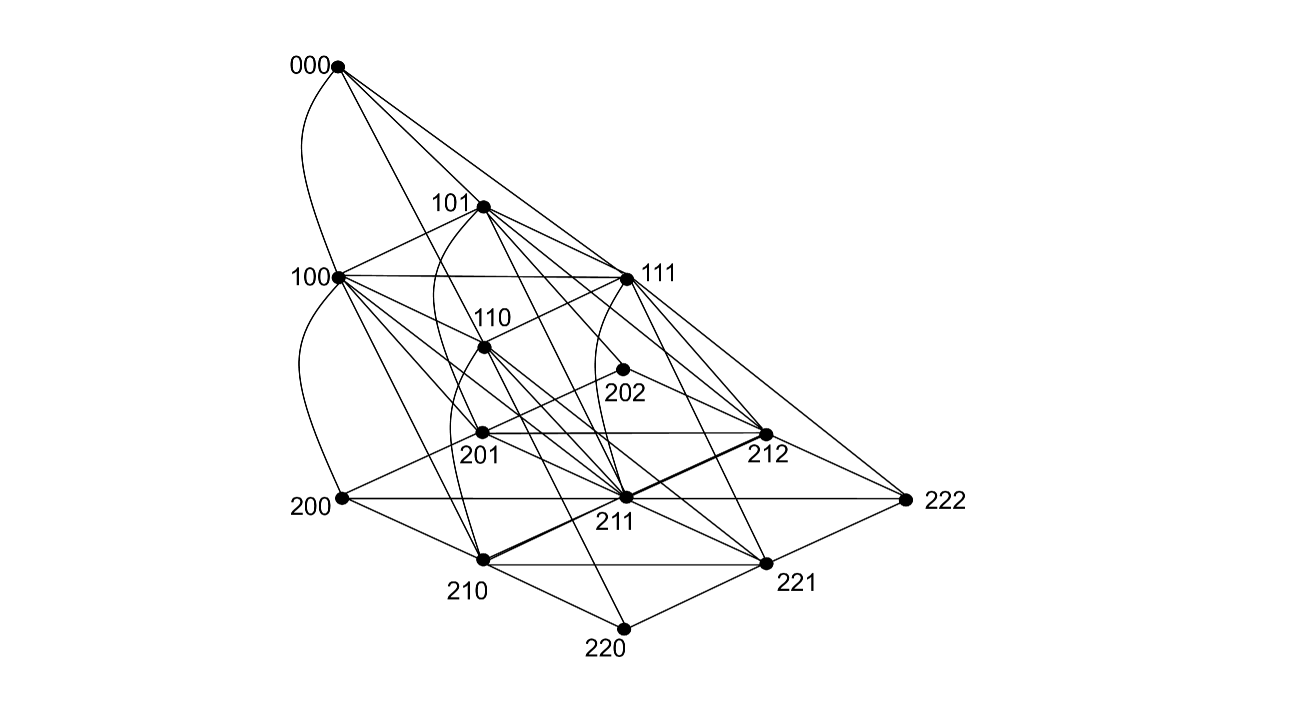

The transmission process of the perfect RLNC scheme with buffer for multiple receivers on the full-duplex relay network can be modeled as a Markov chain, denoted by , in the following way. Every state in can be labeled by an -tuple , where represents the number of packets successfully received by the RS, and represents the number of packets successfully received by receiver . Notice that for every receiver . Thus, by conditioning on , we can compute the number of states in the Markov chain as states. Except for the state , which is absorbing, all other states are transient (assuming ). There is a -step transition in the Markov chain once the BS broadcasts a new packet in a timeslot. An illustration of the -step transition diagram for the case is given in Fig. 2. We next define the -step transition probability from state to state for the Markov chain.

Let denote the set of receivers who have not obtained packets at state yet, that is, . In addition, denote by the set of receivers who have obtained a new packet after the -step transition from to , that is, . The -step transition probability from to can be formulated by the following different cases depending on the value of and .

-

•

Case : . In this case . We have

(12) -

•

Case : . In this case . We have

(13) -

•

Case : . Notice that is not necessarily contained in in this case. We have

(14)

Analogous to (6), the expected completion delay for the system can be expressed as

| (15) |

where denotes the matrix of -step transition probabilities among all transient states, with the first row/column indexed by the state .

Since the number of states in the Markov chain increases exponentially with increasing , it may not be convenient to compute based on (15). We next provide an alternative way to analyze by exploring its connection with , which is given by (3) and denotes the expected system completion delay for the special case , or equivalently, the expected system completion delay in wireless broadcast. For this purpose, we first focus on a single receiver and compare with , which represents the expected completion delay at receiver for the special case . For , let and respectively denote the number of timeslots that the RS and receiver take to receive the packet. Thus, , , and for all . By conditioning on the relation between and , we can deduce the followings.

-

•

, which means the RS has received the packet upon the reception of the packet by receiver . In this case, it takes on average timeslots for receiver to further obtain the packet so that ;

-

•

, which means upon the reception of the packet at the RS, receiver has only received fewer than packets. In this case, it takes on average additional timeslots for the RS to receive the packet, and timeslots for receiver to receive the packet. Thus, .

In all, we have

| (16) |

Since and , we have

| (17) |

Moreover, due to (9), (16) and , the following lemma can be obtained.

Lemma 4.

When , .

We next make a connection between and similar to that between and in (17). For independent geometrically distributed random variables (starting from ) with respective parameters , define

| (18) |

can be explicitly computed by the min-max identity (see, e.g., [35]). Based on ,

| (19) |

For , the connection between and can be analyzed by conditioning on the relation among , and , . To ease the following presentation, we first elaborate the case of , and then give a general conclusion for .

Lemma 5.

For the case ,

| (20) |

Proof:

We analyze by conditioning on the following different cases:

-

•

Let represent the case and , which is equivalent to . In this case, when both receivers have obtained packets, receiver has obtained packet as well. Thus, it only takes additional timeslots on average for receiver to get the packet, that is, .

-

•

Let represent the case and , which is equivalent to . In a similar argument to case , we have .

-

•

Let represent the case and . We further divide into two subcases, that is, and . In the first subcase , when both receivers have obtained packets, it takes extra timeslots on average for the RS to get the packet and then timeslots on average to make both receivers obtain the packets, that is,

In the second subcase , when both receivers have obtained packets, the RS has already received the packet, so it takes extra timeslots on average to make both receivers obtain the packets, that is,

In all,

and consequently

-

•

The last case and has probability to occur.

Eq. (LABEL:eqn:recursive_expression_of_fullduplex1) can now be proved to be correct due to . ∎

Let denote the number of timeslots receiver takes to receive the packet in the special case . Thus, (LABEL:eqn:recursive_expression_of_fullduplex1) implies

| (21) |

Let denote the following identity

| (22) |

Eq. (23) in Theorem 6 extends (16) from the single receiver case to the case . It implies an approximation, which can also serve as a lower bound, for based on . First, because , we have

| (24) |

Since and can be explicitly computed based on Lemma 4 together with the recursive formula (10), we shall adopt as an explicitly computable lower bound on . Second, we shall omit in the approximation whose performance will be justified below. For brevity, let denote

| (25) |

Theorem 7.

For the case and ,

| (26) |

We can now consider as an approximation as well as a lower bound for when and . Notice that and can be explicitly computed by (3) and by Lemma 4 together with the recursive formula (10), respectively.

Before proceeding to generalize the approximation of to the case , we briefly discuss the approximation accuracy, which depends on how close approximates the difference . In the process of obtaining the approximation value in Theorem 7, we neglect the term , approximate as , and add , which represents the expected number of timeslots the RS takes to obtain the first packet. Observe that with increasing , both and decrease by Lemma 4, and so does . Without loss of generality, we next analyze the loss by approximating as via the following cases.

-

•

Case 1: , so that , and are small. Thus, tends to zero fast with increasing . Moreover, also converges to fast with increasing . Actually, with increasing , when a receiver obtains the new packet, there is a higher probability that the RS has obtained at least packets from the BS. It turns out that the approximation of the transmission scenario from the RS to receivers as a wireless broadcast is accurate, that is, is a close approximation of .

-

•

Case 2: , so that both and are relatively small. The gap between and is negligible for small . However, for larger , the approximation of as becomes less accurate than that in Case 1. Moreover, tends to with increasing (but not as fast as in Case 1). Hence, the approximation of by in this case performs a little less accurate than that in Case 1 (with the same , ).

-

•

Case 3: . In this case, with increasing , , and decrease slower than those in Case 1 and 2 (which have the same , but larger ). Consequently, is not negligible even for large . Similarly, does not converge to with increasing. As a result, for large , the approximation of by is not as accurate as in Case 1 and 2 (with the same , ).

Notice that for every receiver , the expected completion delay , which has been explicitly characterized in Theorem 2 and can be efficiently computed according to (9), is naturally a lower bound of . When is much smaller than , or with large enough , the probability that the completion delay at receiver is no larger than that at receiver , that is, is high. As a result, the following simple lower bound for

| (27) |

may provide a better approximation compared with (7) when the difference of and is large or is relatively large, particularly for Case 2 and 3 discussed above.

Corollary 8.

For the case and ,

| (28) |

We now generalize the approximation of as from to . Write

| (29) |

Because , we can express

| (30) |

where . Based on (29), (30) and (19), we have

| (31) |

For , it has been proved in Theorem 7 that . For , further analysis of involves much more details which are beyond the scope of this paper, so we directly ignore this term in our approximation. Because Similar to (24), . Consequently, we obtain the following approximation of

| (32) |

where is generalized from (25) to denote . Recall that represents the expected system completion delay for the special case , which degenerates to the wireless broadcast, and according to (3), we have the following explicit expression

| (33) |

Based on Lemma 4 and the definition of in (7), we can also express in the following closed-form, which is a function of and

| (34) |

Moreover, notice that can be explicitly computed by the following recursive procedure:

-

•

.

- •

For fixed , keeps the same so that the accuracy for the approximation of depends on . In particular, both and in decrease with increasing , and converges to when increases to , which represents the case . Moreover, as can be explicitly computed by Lemma 4, the use of in is to approximate the joint probability , which is a key component in characterizing in (31). Analogous to the discussion above Corollary 8 for the case , we further argue that for sufficiently large , when , is a closer approximation of compared with the case , while the approximation performs the worst for the case .

In (32), we use the sign “” instead of “” because we did not prove the term in the explicit characterization of in (31) to be non-negative for , which we conjecture to be correct. By further taking the simple lower bound into account, we obtain the following approximation of for ,

| (35) |

where is rigorously proved to be in Theorem 7 and Corollary 8 for . The tightness of the above approximation will be numerically analyzed in the next section.

It is worthwhile noting that in the case that is relatively small and there is a receiver whose successful receiving probability is much smaller than others’, is a better lower bound of compared with . Otherwise, will be a better approximation of , because it is built upon the expected system completion delay of wireless broadcast, which has already taken all receivers into consideration. In the full-duplex relay network modeled in this paper, as is a lower bound for the expected system completion delay of an arbitrary RLNC scheme, so is .

IV Numerical Analysis

In this section, we numerically analyze the average completion delay of the two fundamental perfect RLNC schemes and compare the numerical results with the theoretical characterizations obtained in the previous section. Moreover, we compare the performance of the two fundamental schemes with the one proposed in [27] — from the perspective of the average completion delay and the average buffer size taken at the RS, both of which are normalized by . We adopt the following abbreviations in the figure legends. The FBPF perfect RLNC scheme, the perfect RLNC scheme without buffer, and the perfect RLNC scheme with buffer are respectively labeled as “FBPF”, “PRLNC w/t buffer”, and “PRLNC w/ buffer”. The results obtained by simulation are labeled with “simu”, and the theoretical results from Proposition 1 and Theorem 2 are labeled with “th”. The performance for the single receiver and for the system is respectively labeled as “single r” and “system”.

Under the settings , and , Fig. 3 depicts the average completion delay per packet at a single receiver as well as for the system with varying . One may observe the followings. First, the average completion delay of every scheme decreases with increasing , and converges to . Second, the average completion delay of FBPF is upper bounded by that of perfect RLNC without buffer and lower bounded by that of perfect RLNC with buffer. Most importantly, the simulation results numerically verify the theoretical derivations in Proposition 1 and Theorem 2, as well as validate the lower bound (35) for the expected system completion delay for perfect RLNC with buffer.

Under the settings , and , Fig. 3 depicts the average completion delay per packet at a single receiver as well as for the system with increasing . In addition to similar observations to Fig. 3, one may further conclude the followings. The average completion delay for the system of all three schemes converges to . Moreover, the plots for the average completion delay at a single receiver in Fig. 3 and Fig. 3 are almost identical, which infers that for all three schemes, the exchange of the values of and does not affect the completion delay performance at a single receiver. Theoretically, (1) and (5) justify this observation for perfect RLNC without buffer and with buffer, respectively. Lastly, with increasing , the lower bound (35) becomes tighter, which is in line with the discussion in the previous section.

Table I lists the average buffer size per packet needed at the RS for the FBPF scheme with the settings , , and different . It is interesting to notice that the required buffer size increases with increasing . In comparison, the perfect RLNC scheme without buffer demands no buffer, and the buffer size per packet of the perfect RLNC scheme with buffer is always , which is smaller than that of the FBPF scheme when .

| 0.5 | 0.6 | 0.7 | 0.8 | 0.9 | 1 | ||

|---|---|---|---|---|---|---|---|

|

1.293 | 1.383 | 1.474 | 1.559 | 1.63 | 1.698 |

Fig. 3 has demonstrated the tightness of the lower bound (35) for the expected system completion delay for perfect RLNC with buffer. Recall that the bound (35) consists of two parts, both of which can be explicitly computed. One is , which is a lower bound deduced from the perspective of wireless broadcast, and the other is , which is the expected completion delay at the single receiver with the worst channel condition. Above Corollary 8, we have discussed, for , the accuracy of the lower bound and conclude that is necessary to form the better lower bound (28). In the remaining part of this section, we shall further numerically compare the accuracy of the two lower bounds and .

Under the settings , , Fig. 4 compares the average system completion delay per packet with the two lower bounds (labeled as “lower bound : Eq. (7)”) and (labeled as “lower bound : Eq. (27)”). The comparison is conducted under different choices of , that is, . We can conclude the following observations from Fig. 4 about the two lower bounds.

-

•

First, the average system completion delay per packet as well as its two lower bounds decrease with fixed and increasing . They also decrease and converge to some values with fixed and increasing .

-

•

Second, for all choices of , is tighter than for small . This is because is obtained from the perspective of wireless broadcast so it takes all receivers’ completion delay into account. In particular, the approximation of by is relatively accurate for small and when , the bound is exactly equal to , that is, .

-

•

Moreover, in the two cases with , is always better than . When , converges to the average system completion delay with increasing . However, when , decreases faster with increasing , so that outperforms. This justifies the usefulness to supplement in the tighter lower bound (28).

-

•

Last, by comparing the three curves related to , we can find that approximating by merely will be much looser with decreasing because it does not take into consideration. Therefore, the additional term we introduce in the lower bound (7) is indispensable in estimating .

In summary, what have been observed from Fig. 4 are consistent with the (3-case) discussion about the accuracy to approximate as in the previous section (above Corollary 8), and they affirm that (28) is a tighter lower bound than the individual use of (7) or (27).

For multi-receiver case , Fig. 5 compares the average system completion delay per packet with (labeled as “approx”) and (labeled as “single r”), which constitute the lower bound in (35) as an extension of (28). The comparison is conducted under three different settings of and : (a) and is evenly distributed over ; (b) and for each receiver ; (c) and for each receiver . The followings can be observed.

-

•

For all settings, there is a noticeable gap between and the average system completion delay per packet even for large (compare with Fig. 4 in which the gap is very small for ). This is mainly because the number of receivers considered herein is much more than that in Fig. 4, so that the approximation accuracy from the perspective of a single receiver declines.

-

•

In Settings (a) and (c), the curve of is close to the average system completion delay per packet for all choices of . However, the tightness of in Setting (a) is slightly worse than that in Setting (c) mainly because half of the receivers’ in Setting (a) are larger than .

-

•

In Setting (b), the accuracy of to approximate is not as good as that in Settings (a) and (c), because herein. Meanwhile, the approximation becomes more accurate for large , affirming that (35) is a tighter lower bound of compared with or .

V Concluding Remarks

In this paper, for full-duplex relay networks, we consider two fundamental perfect RLNC schemes to investigate the fundamental benefit RLNC can provide, and derive closed-form formulae for their expected completion delay, both at a single receiver and for the whole system. The expected completion delay of the two schemes can respectively serve as an upper bound for all perfect RLNC schemes and a lower bound for all RLNC schemes. It provides a theoretical guideline for future works on the detailed design of RLNC-based transmission schemes in the full-duplex relay networks. As an ensuing work, by adapting recently proposed efficient RLNC schemes such as Fulcrum [30] or circular-shift RLNC [38], we will further design practical RLNC schemes with small buffer as well as low coding complexity at the RS, and with the completion delay performance closer to the theoretical limit characterized in this paper. Another ensuing work is to study the completion delay performance of perfect RLNC in a more general network model which contains direct links between the BS and the receivers as well as multiple full-duplex relays.

-A Proof of Eq. (40)

For brevity, write . Let denote the total probability to enter state starting from state in the Markov chain under the constraint that the transitions from to are not allowed to visit states for all and . E.g., when . For brevity, will be written as .

To reflect the number of original packets in the notation, write . Stemming from (6), we can obtain the following equivalent characterization

| (36) |

Let represent the total probability that state can be entered starting from in the Markov chain , so that for all . For , because and , we have and thus

| (37) |

Since is the -step transition probability from state to itself in as well as from state to itself in , and is the -step transition probability from state to itself in , based on (36) and (37), we obtain the following recursive expression

| (38) |

As , we have and

| (39) |

As , in order to prove (5) based on (39) for , it remains to show

| (40) |

which is equivalent to prove

| (41) |

In the remaining proof, we shall first make a connection between and for . Notice that for every , the -step transition probability for state keeps the same, and , for all . Thus, . Moreover, by making use of the property

for and , one may readily verify that for ,

| (42) |

On the other hand, for , can also be expressed as

| (43) |

where the last equality holds due to for all subject to and . Then, the addition of (-A) and (42) multiplied by on both sides yields , which implies for ,

| (44) |

We last characterize given that . Notice that for . It turns out that

| (45) |

where represents the number of all those transitions from to that contain

-

•

exactly -step transitions in the form , -step transitions in the form , and -step transitions in the form ;

-

•

no -step transitions in the form .

Under this constraint, coincides with the Narayana number (see, e.g., Chapter 2 in [39]) with parameters , so . As ,

| (46) |

where the second last equality can be readily verified based on the combinatorial equation for . By plugging (-A) back to (45),

Thus, the right-hand side of (44) can be expressed as

| (47) |

As , the first term in (-A) can be expressed as

Moreover, by altering the index of and the fact , we can express the second term in (-A) as

Consequently,

| (48) |

where the second equality holds because .

-B Proof of Theorem 6

Given that there are receivers and source packets in the network. For the parameter defined in (18), by the min-max identity, , where . Moreover, we have

and taking a summation on each side of the above two equations yields

| (49) |

As a result, Eq. (LABEL:eqn:recursive_expression_of_fullduplex1) in Lemma 5 and (21) can be respectively rewritten as

By the above two equations and (22), in order to prove (23), it suffices to show

| (50) |

Observe that

| (51) |

Due to the memoryless property of geometric distribution, under the condition and , the events and are independent of the arrival timeslot of packet at the RS, and they have respective probability and to occur. Hence,

It turns out that . By plugging the above expression back to (-B), we obtain

| (52) |

and similarly

| (53) |

Based on (52), (53), and , the correctness of (50) can be verified.

-C Proof of Theorem 7

It remains to prove . To ease the analysis, we make use of the following Markov chain consisting of states, in which (i) state , , represents the scenario that receivers and have respectively obtained and packets; (ii) there is a -step transition once at least one receiver obtains a new packet. In the Markov chain, let represent the -step transition probability from state to , and denote the total probability to visit state among all paths from to . Notice that the Markov chain defined herein is different from the one modeled at the beginning of Sec. III-C and illustrated in Fig. 2, because it does not involve the number of received packets at the RS in the state description. Observe that for ,

| (54) |

For brevity, write for and write for . In this way,

| (55) |

| (56) |

for . We next characterize the -step transition probability in .

For , by the memoryless property of a geometric distribution, the -step transition probability starting from state is not affected by the arrival time of the packet at the RS and thus is invariant of . Specifically,

| (57) |

For , the -step transition probability starting from state is affected by the arrival time of the packet at the RS. Notice that when is in state , it implies the occurrence of the joint event of and , which will be denoted by . Thus, we can respectively express , , and as

| (58) | ||||

| (59) | ||||

| (60) |

where .

Similarly, for ,

| (61) |

For the special case , let , and respectively represent , and . Based on the above derivation of -step transition probabilities in , we obtain the following comparisons. For ,

| (62) |

For ,

| (63) |

For ,

| (64) |

Assume and . Eqs. (62)-(64) together imply that there are higher probabilities to visit state along the paths from to compared with the case , that is, . Since , . Since ,

| (65) |

When , we have , so (65) implies and . Therefore, and .

Assume . In this case, , , and converges to while and converge to with increasing (assume is sufficiently large). For relatively small , is still larger than due to the effect of (63), so we have . However, with increasing , will occur because decreases faster than , ( may or may not converge to depending on whether is larger than ). As a result, for large , (65) is insufficient to imply so that we need further manipulation on the expression of .

For , write , and let , respectively represent , for the special case . In terms of , we can express , , recursively as

| (66) |

where the last equality holds by (57) and the definition of . Similarly,

| (67) |

By plugging (66), (67) back to (56), we can deduce the following recursive expression of ,

where , . In this way, for , we have , where , respectively refer to , in the case of . Because

| (68) |

we has . When or , and , so that for all .

Assume . Based on (-C) and (61), we have

so that . With increasing , both and decrease and converges to a nonnegative constant value (for sufficiently large ). We can then deduce, based on (68), that both and converge to zero with increasing too. As a result, even if is possible to occur, is negligible compared with . We can now assert .

Acknowledgement

The authors would like to appreciate the valuable suggestions by the editor as well as anonymous reviewers to help improve the quality of the paper.

References

- [1] R. Su, Q. T. Sun and Z. Zhang, “On the delay of random linear network coding in full-duplex relay networks,” IEEE ISIT, 2021.

- [2] G. Liu, F. R. Yu, H. Ji, V. C. M. Leung and X. Li, “In-band full-duplex relaying: a survey, research issues and challenges,” IEEE Commun. Surv., vol. 17, no. 2, 2015.

- [3] T. Ho, M. Médard, R. Koetter, D. R. Karger, M. Effros, J. Shi and B. Leong, “A random linear network coding approach to multicast,” IEEE Trans. Inf. Theory, vol. 52, no. 10, 2006.

- [4] A. Eryilmaz, A. Ozdaglar, M. Medard and E. Ahmed, “On the delay and throughput gains of coding in unreliable networks,” IEEE Trans. Inf. Theory, vol. 54, no. 12, 2008.

- [5] E. Tsimbalo, A. Tassi and R. J. Piechocki, “Reliability of multicast under random linear network coding,” IEEE Trans. Commun., vol. 66, no. 6, 2018.

- [6] R. Su, Q. T. Sun and Z. Zhang, “Delay-complexity trade-off of random linear network coding in wireless broadcast,” IEEE Trans. Commun., vol. 68, no. 9, 2020.

- [7] I. Chatzigeorgious and A. Tassi, “Decoding delay performance of random linear network coding for broadcast,” IEEE Trans. Veh. Technol., vol. 66, no. 8, 2017.

- [8] B. T. Swapna, A. Eryilmaz, and N. B. Shroff, “Throughput-delay analysis of random linear network coding for wireless broadcasting,” IEEE Trans. Inf. Theory, vol. 59, no. 10, 2013.

- [9] D. E. Lucani, M. Medard and M. Stojanovic, “On coding for delay — network coding for time-division duplexing,” IEEE Trans. Inf. Theory, vol. 58, no. 4, 2012.

- [10] L. Wang, Y. Yang and W. Zhao, “Network coding-based multipath routing for energy efficiency in wireless sensor networks,” EURASIP J. WIREL. COMM., vol. 2012, 2012.

- [11] J. Huang, H. Gharavi, H. Yan and C. Xing, “Network coding in relay-based device-to-device communications,” IEEE Netw., vol. 31, no. 4, 2017.

- [12] S. Chieochan and E. Hossain, “Wireless fountain coding with IEEE 802.11E block ACK for media streaming in wireline-cum-Wifi networks: a performance study,” IEEE Trans. on Mob. Comput.,vol. 10, no. 10, 2011.

- [13] G. Giacaglia, X. Shi, M. Kim, D. E. Lucani and M. Médard, “Systematic network coding with the aid of a full-duplex relay,” IEEE ICC, 2013.

- [14] H. Khamfroush, P. Pahlevani, D. E. Lucani, M. Hundebøll and F. H. P. Fitzek, “On the coded packet relay network in the presence of neighbors: benefits of speaking in a crowded room,” IEEE ICC, 2014.

- [15] Y. Li, S. Blostein and W.-Y. Chan, “Systematic network coding for two-hop lossy transmissions,” EURASIP J. Adv. Signal Process, vol. 2015, 2015.

- [16] B. Maboudi, P. Pahlevani, “Security overhead of random linear network coding in multicast relay networks,” T. EMERG. TELECOMMUN. T., 2020.

- [17] C. Chen, S. J. Baek, and G. De Veciana, “Opportunistic scheduling of randomly coded multicast transmissions at half-duplex relay stations,” IEEE Trans. Inf. Theory, vol. 62, no. 10, 2016.

- [18] K. Loa, et al., “IMT-advanced relay standards [WiMAX/LTE update],” IEEE Commun. Mag., vol. 48, no. 8, 2010.

- [19] A. Damnjanovic, et al., “A survey on 3GPP heterogeneous networks,” IEEE Wireless Commun., vol. 18, no. 3, 2011.

- [20] P. Sadeghi, R. Shams and Danail Traskov, “An optimal adaptive network coding scheme for minimizing decoding delay in broadcast erasure channels,” EURASIP J. Wireless Commun. and Netw., vol. 2010, 2010.

- [21] S. Sorour and S. Valaee, “Completion delay minimization for instantly decodable network codes,” IEEE/ACM Trans. Netw., vol. 23, no. 5, 2015.

- [22] Z. Zhang, X. Chai, K. Long, A. V. Vasilakos and L. Hanzo, “Full duplex techniques for 5G networks: self-interference cancellation, protocol design, and relay selection,” IEEE Commun. Mag., vol. 53, no. 5, 2015.

- [23] Z. Zhang, K. Long, A. V. Vasilakos and L. Hanzo, “Full-duplex wireless communications: challenges, solutions, and future research directions,” Proc. IEEE, vol. 104, no. 7, 2016.

- [24] Y. Zhang, M. Xiao, S. Han, M. Skoglund and W. Meng, “On precoding and energy efficiency of full-duplex millimeter-wave relays,” IEEE Trans. Wirel. Commun., vol. 18, no. 3, 2019.

- [25] S. Han, Y. Zhang, W. Meng and H. Chen, “Self-interference-cancellation-based SLNR precoding design for full-duplex relay-assisted system,” IEEE Trans. Veh. Technol., vol. 67, no. 9, 2018.

- [26] H. Zhu, B. Smida and D. J. Love, “An efficient network-coded ARQ scheme for two-way wireless communication with full-duplex relaying,” IEEE Access, vol. 7, 2019.

- [27] C. Chen, Z. Meng, S. J. Baek, X. Yu, C. Li and R. Yin, “Low-complexity coded transmission without CSI for full-duplex relay networks,” IEEE GLOBECOM, 2020.

- [28] B. Shrader, A. Ephremides, “Queueing delay analysis for multicast with random linear coding,” IEEE Trans. Inf. Theory, vol. 58, no. 1, 2011.

- [29] H. Khamfroush, D.E. Lucani, and J. Barros, “Network coding for wireless coopertive networks: Simple rules, near-optimal delay,” in Proc. IEEE ICC Workshops, 2014.

- [30] D. E. Lucani, M. V. Pedersen, D. Ruano, C. W. Sørensen, F. H. P. Fitzek, J. Heide, O. Geil, V. Nguyen and M.Reisslein, “Fulcrum: flexible network coding for heterogeneous devices,” IEEE Access, vol. 6, 2018.

- [31] V. Nguyen, E. Tasdemir, G. T. Nguyen, D. E. Lucani, F. H. P. Fitzek, M.Reisslein, “DSEP Fulcrum: Dynamic sparsity and expansion packet for Fulcrum network coding,” IEEE Access, vol. 8, 2020.

- [32] A. Tassi, I. Chatzigeorgiou and D. E. Lucani, “Analysis and optimization of sparse random linear network coding for reliable multicast services,” IEEE Trans. Commun., vol. 64, no. 1, 2016.

- [33] J. Heide and D. Lucani, “Composite extension finite fields for low overhead network coding: telescopic codes,” IEEE ICC, 2015.

- [34] N. J. A. Sloane. The On-Line Encyclopedia of Integer Sequence. Accessed: 22nd, Jan. 2021[Online]. Available: https://oeis.org/A088617.

- [35] S. M. Ross, Introduction to Probability Models, Academic Press, 11th ed., 2014.

- [36] M. Nistor, D. E. Lucani, T. T. V. Vinhoza, R. A. Costa and J. Barros, “On the delay distribution of random linear network coding,” IEEE Journal on Selected Areas in Communications, vol. 29, no. 5, 2011.

- [37] J. Heide, M. V. Pedersen, F. H. P. Fitzek and M. Medard, “On code parameters and coding vector representation for practical RLNC,” IEEE ICC, 2011.

- [38] H. Tang, Q. T. Sun, Z. Li, X. Yang and K. Long, “Circular-shift linear network coding,” IEEE Trans. Inf. Theory, vol. 65, no. 1, 2019.

- [39] T. K. Petersen, Eulerian Numbers, Birkhuser Basel, 1st ed., 2015.