Completely Positive Factorization by a Riemannian Smoothing Method

Abstract

Copositive optimization is a special case of convex conic programming, and it consists of optimizing a linear function over the cone of all completely positive matrices under linear constraints. Copositive optimization provides powerful relaxations of NP-hard quadratic problems or combinatorial problems, but there are still many open problems regarding copositive or completely positive matrices. In this paper, we focus on one such problem; finding a completely positive (CP) factorization for a given completely positive matrix. We treat it as a nonsmooth Riemannian optimization problem, i.e., a minimization problem of a nonsmooth function over a Riemannian manifold. To solve this problem, we present a general smoothing framework for solving nonsmooth Riemannian optimization problems and show convergence to a stationary point of the original problem. An advantage is that we can implement it quickly with minimal effort by directly using the existing standard smooth Riemannian solvers, such as Manopt. Numerical experiments show the efficiency of our method especially for large-scale CP factorizations.

Keywords: completely positive factorization, smoothing method, nonsmooth Riemannian optimization problem

AMS: 15A23, 15B48, 90C48, 90C59

1 Introduction

The space of real symmetric matrices is endowed with the trace inner product . A matrix is called completely positive if for some there exists an entrywise nonnegative matrix such that , and we call a CP factorization of . We define as the set of completely positive matrices, equivalently characterized as

where denotes the convex hull of a given set . We denote the set of copositive matrices by It is known that and are duals of each other under the trace inner product; moreover, both and are proper convex cones [3, Section 2.2]. For any positive integer , we have the following inclusion relationship among other important cones in conic optimization:

where is the cone of symmetric positive semidefinite matrices and is the cone of symmetric nonnegative matrices. See the monograph [3] for a comprehensive description of and .

Conic optimization is a subfield of convex optimization that studies minimization of linear functions over proper cones. Here, if the proper cone is or its dual cone , we call the conic optimization problem a copositive programming problem. Copositive programming is closely related to many nonconvex, NP-hard quadratic and combinatorial optimizations [29]. For example, consider the so-called standard quadratic optimization problem,

| (1) |

where is possibly not positive semidefinite and e is the all-ones vector. Bomze et al. [9] showed that the following completely positive reformulation,

where is the all-ones matrix, is equivalent to (1). Burer [15] reported a more general result, where any quadratic problem with binary and continuous variables can be rewritten as a linear program over . As an application to combinatorial problems, consider the problem of computing the independence number of a graph with nodes. De Klerk and Pasechnik [24] showed that

where is the adjacency matrix of . For surveys on applications of copositive programming, see [6, 10, 16, 28, 29].

The difficulty of the above problems lies entirely in the completely positive conic constraint. Note that because neither nor is self-dual, the primal-dual interior point method for conic optimization does not work as is. Besides this, there are many open problems related to completely positive cones. One is checking membership in , which was shown to be NP-hard by [27]. Computing or estimating the cp-rank, as defined later in (3), is also an open problem. We refer the reader to [4, 28] for a detailed discussion of those unresolved issues.

In this paper, we focus on finding a CP factorization for a given , i.e., the CP factorization problem:

| (CPfact) |

which seems to be closely related to the membership problem . Sometimes, a matrix is shown to be completely positive through duality, or rather, for all , but in this case, a CP factorization will not necessarily be obtained.

1.1 Related work on CP factorization

Various methods of solving CP factorization problems have been studied. Jarre and Schmallowsky [33] stated a criterion for complete positivity, based on the augmented primal dual method to solve a particular second-order cone problem. Dickinson and Dür [26] dealt with complete positivity of matrices that possess a specific sparsity pattern and proposed a method for finding CP factorizations of these special matrices that can be performed in linear time. Nie [36] formulated the CP factorization problem as an -truncated -moment problem, for which the author developed an algorithm that solves a series of semidefinite optimization problems. Sponsel and Dür [45] considered the problem of projecting a matrix onto and by using polyhedral approximations of these cones. With the help of these projections, they devised a method to compute a CP factorization for any matrix in the interior of . Bomze [7] showed how to construct a CP factorization of an matrix based on a given CP factorization of an principal submatrix. Dutour Sikirić et al. [43] developed a simplex-like method for a rational CP factorization that works if the input matrix allows a rational CP factorization.

In 2020, Groetzner and Dür [31] applied the alternating projection method to the CP factorization problem by posing it as an equivalent feasibility problem (see (FeasCP)). Shortly afterwards, Chen et al. [19] reformulated the split feasibility problem as a difference-of-convex optimization problem and solved (FeasCP) as a specific application. In fact, we will solve this equivalent feasibility problem (FeasCP) by other means in this paper. In 2021, Boţ and Nguyen [12] proposed a projected gradient method with relaxation and inertia parameters for the CP factorization problem, aimed at solving

| (2) |

where is the closed ball centered at 0. The authors argued that its optimal value is zero if and only if .

1.2 Our contributions and organization of the paper

Inspired by the idea of Groetzner and Dür [31], wherein (CPfact) is shown to be equivalent to a feasibility problem called (FeasCP), we treat the problem (FeasCP) as a nonsmooth Riemannian optimization problem and solve it through a general Riemannian smoothing method. Our contributions are summarized as follows:

1. Although it is not explicitly stated in [31], (FeasCP) is actually a Riemannian optimization formulation. We propose a new Riemannian optimization technique and apply it to the problem.

2. In particular, we present a general framework of Riemannian smoothing for the nonsmooth Riemannian optimization problem and show convergence to a stationary point of the original problem.

3. We apply the general framework of Riemannian smoothing to CP factorization. Numerical experiments clarify that our method is competitive with other efficient CP factorization methods, especially for large-scale matrices.

In Section 2, we review the process to reconstruct (CPfact) into another feasibility problem; in particular, we take a different approach to this problem from those in other studies. In Section 3, we describe the general framework of smoothing methods for Riemannian optimization. To apply it to the CP factorization problem, we employ a smoothing function named LogSumExp. Section 4 is a collection of numerical experiments for CP factorization. As a meaningful supplement, in Section 5, we conduct further experiments (FSV problem and robust low-rank matrix completion) to explore the numerical performance of various sub-algorithms and smoothing functions on different applications.

2 Preliminaries

2.1 cp-rank and cp-plus-rank

First, let us recall some basic properties of completely positive matrices. Generally, many CP factorizations of a given may exist, and they may vary in their numbers of columns. This gives rise to the following definitions: the cp-rank of , denoted by , is defined as

| (3) |

where if Similarly, we can define the cp-plus-rank as

Immediately, for all , we have

| (4) |

Every CP factorization of is of the same rank as since holds for any matrix . The first inequality of (4) comes from the fact that for any CP factorization ,

The second is trivial by definition.

Note that computing or estimating the cp-rank of any given is still an open problem. The following result gives a tight upper bound of the cp-rank for in terms of the order .

Theorem 2.1 (Bomze, Dickinson, and Still [8, Theorem 4.1]).

For all , we have

| (5) |

The following result is useful for distinguishing completely positive matrices in either the interior or on the boundary of .

Theorem 2.2 (Dickinson [25, Theorem 3.8]).

We have

2.2 CP factorization as a feasibility problem

Groetzner and Dür [31] reformulated the CP factorization problem as an equivalent feasibility problem containing an orthogonality constraint.

Given , we can easily get another CP factorization with columns for every integer , if we also have a CP factorization with columns. The simplest way to construct such an matrix is to append zero columns to i.e., Another way is called column replication, i.e.,

| (6) |

where denotes the -th column of . It is easy to see that The next lemma is easily derived from the previous discussion, and it implies that there always exists an CP factorization for any . Recall that definition of is given in (5).

Lemma 2.3.

Suppose that , . Then if and only if has a CP factorization with columns.

Let denote the orthogonal group of order , i.e., the set of orthogonal matrices. The following lemma is essential to our study. (Note that many authors have proved the existence of such an orthogonal matrix (see, e.g., [17, Lemma 2.1] and [31, Lemma 2.6]).

Lemma 2.4.

Let . if and only if there exists .

The next proposition puts the previous two lemmas together.

Proposition 2.5.

Let , where may possibly be not nonnegative. Then there exists an orthogonal matrix such that and

This proposition tells us that one can find an orthogonal matrix which can turn a “bad” factorization into a “good” factorization . Let and be an arbitrary (possibly not nonnegative) initial factorization . The task of finding a CP factorization of can then be formulated as the following feasibility problem,

| (FeasCP) |

We should notice that the condition is necessary; otherwise, (FeasCP) has no solution even if Regardless of the exact which is often unknown, one can use in (5). Note that finding an initial matrix is not difficult. Since a completely positive matrix is necessarily positive semidefinite, one can use Cholesky decomposition or spectral decomposition and then extend it to columns by using (6). The following corollary shows that the feasibility of (FeasCP) is precisely a criterion for complete positivity.

Corollary 2.6.

Set , an arbitrary initial factorization of . Then if and only if is feasible. In this case, for any feasible solution , is a CP factorization of .

In this study, solving (FeasCP) is the key to finding a CP factorization, but it is still a hard problem because is nonconvex.

2.3 Approaches to solving (FeasCP)

Groetzner and Dür [31] applied the so-called alternating projections method to (FeasCP). They defined the polyhedral cone, and rewrote (FeasCP) as

The alternating projections method is as follows: choose a starting point ; then compute and , and iterate this process. Computing the projection onto amounts to solving a second-order cone problem (SOCP), while computing the projection onto amounts to a singular value decomposition. Note that we need to solve an SOCP alternately at every iteration, which is still expensive in practice. A modified version without convergence involves calculating an approximation of by using the Moore-Penrose inverse of ; for details, see [31, Algorithm 2].

Our way is to use the optimization form. Here, we denote by (resp. ) the max-function (resp. min-function)) that selects the largest (resp. smallest) entry of a vector or matrix. Notice that We associate (FeasCP) with the following optimization problem:

For consistency of notation, we turn the maximization problem into a minimization problem:

| (OptCP) |

The feasible set is known to be compact [32, Observation 2.1.7]. In accordance with the extreme value theorem [40, Theorem 4.16], (OptCP) attains the global minimum, say . Summarizing these observations together with Corollary 2.6 yields the following proposition.

3 Riemannian smoothing method

The problem of minimizing a real-valued function over a Riemannian manifold , which is called Riemannian optimization, has been actively studied during the last few decades. In particular, the Stiefel manifold,

(when , it reduces to the orthogonal group) is an important case and is our main interest here. We treat the CP factorization problem, i.e., (OptCP) as a problem of minimizing a nonsmooth function over a Riemannian manifold, for which variants of subgradient methods [11], proximal gradient methods [23], and the alternating direction method of multipliers (ADMM) [34] have been studied.

Smoothing methods [21], which use a parameterized smoothing function to approximate the objective function, are effective on a class of nonsmooth optimizations in Euclidean space. Recently, Zhang, Chen and Ma [47] extended a smoothing steepest descent method to the case of Riemannian submanifolds in . This is not the first time that smoothing methods have been studied on manifolds. Liu and Boumal [35] extended the augmented Lagrangian method and exact penalty method to the Riemannian case. The latter leads to a nonsmooth Riemannian optimization problem to which they applied smoothing techniques. Cambier and Absil [18] dealt with the problem of robust low-rank matrix completion by solving a Riemannian optimization problem, wherein they applied a smoothing conjugate gradient method.

In this section, we propose a general Riemannian smoothing method and apply it to the CP factorization problem.

3.1 Notation and terminology of Riemannian optimization

Let us briefly review some concepts in Riemannian optimization, following the notation of [13]. Throughout this paper, will refer to a complete Riemannian submanifold of Euclidean space . Thus, is endowed with a Riemannian metric induced from the Euclidean inner product, i.e., for any , where is the tangent space to at . The Riemannian metric induces the usual Euclidean norm for . The tangent bundle is a disjoint union of the tangent spaces of . Let be a smooth function on . The Riemannian gradient of is a vector field on that is uniquely defined by the identities: for all ,

where is the differential of at . Since is an embedded submanifold of , we have a simpler statement for that is also well defined on the whole :

where is the usual gradient in and denotes the orthogonal projector from to . For a subset , the function is smooth, i.e., continuously differentiable on . Given a point and , denotes a closed ball of radius centered at . denotes the set of positive real numbers. We use subscript notation to select the th entry of a vector and superscript notation to designate an element in a sequence .

3.2 Ingredients

Now let us consider the nonsmooth Riemannian optimization problem (NROP):

| (NROP) |

where and is a proper lower semi-continuous function (maybe nonsmooth or even non-Lipschitzian) on . For convenience, the term smooth Riemannian optimization problem (SROP) refers to (NROP) when is continuously differentiable on . To avoid confusion in this case, we use instead of ,

| (SROP) |

Throughout this subsection, we will refer to many of the concepts in [47].

First, let us review the usual concepts and properties related to generalized subdifferentials in . For a proper lower semi-continuous function , the Fréchet subdifferential and the limiting subdifferential of at are defined as

The definition of above is not the standard one: the standard definition follows [39, 8.3 Definition]. But these definitions are equivalent by [39, 8.5 Proposition]. For locally Lipschitz functions, the Clarke subdifferential at , , is the convex hull of the limiting subdifferential. Their relationship is as follows:

Notice that if is convex, and coincide with the classical subdifferential in convex analysis [39, 8.12 Proposition].

Example 1 (Bagirov, Karmitsa, and Mäkelä [2, Theorem 3.23]).

From a result on the pointwise max-function in convex analysis, we have

where ’s are the standard bases of and

Next, we extend our discussion to include generalized subdifferentials of a nonsmooth function on submanifolds . The Riemannian Fréchet subdifferential and the Riemannian limiting subdifferential of at (see, e.g., [47, Definition 3.1]) are defined as

If , the above definitions coincide with the usual Fréchet and limiting subdifferentials in . Moreover, it follows directly that, for all , one has . According to [47, Proposition 3.2], if is a local minimizer of on , then . Thus, we call a point a Riemannian limiting stationary point of (NROP) if

| (7) |

In this paper, we will treat it as a necessary condition for a local solution of (NROP) to exist.

The smoothing function is the most important tool of the smoothing method.

Definition 3.1 (Zhang and Chen [46, Definition 3.1]).

A function is called a smoothing function of , if is continuously differentiable in for any ,

and there exist a constant and a function such that

| (8) |

Example 2 (Chen, Wets, and Zhang [22, Lemma 4.4]).

The LogSumExp function, , given by

is the smoothing function of because we can see that

(i) is smooth on for any . Its gradient is given by ,

| (9) |

where is the unit simplex.

(ii) For all and , we have Then, the constant and . The above inequalities imply that

Gradient sub-consistency or consistency is crucial to showing that any limit point of the Riemannian smoothing method is also a limiting stationary point of (NROP).

Definition 3.2 (Zhang, Chen and Ma [47, Definition 3.4 & 3.9]).

A smoothing function of is said to satisfy gradient sub-consistency on if, for any ,

| (10) |

where the subdifferential of associated with at is given by

Similarly, is said to satisfy Riemannian gradient sub-consistency on if, for any ,

| (11) |

where the Riemannian subdifferential of associated with at is given by

If one substitutes the inclusion with equality in (10), then satisfies gradient consistency on , and similarly in (11) for . Thanks to the following useful proposition from [47], we can induce gradient sub-consistency on from that on if is locally Lipschitz.

Proposition 3.3 (Zhang, Chen and Ma [47, Proposition 3.10]).

Let be a locally Lipschitz function and a smoothing function of For , if gradient sub-consistency holds on , then Riemannian gradient sub-consistency holds on as well.

The next example illustrates Riemannian gradient sub-consistency on for in Example 2, since any convex function is locally Lipschitz continuous.

3.3 Riemannian smoothing method

Motivated by the previous papers [18, 35, 47] on smoothing methods and Riemannian manifolds, we propose a general Riemannian smoothing method. Algorithm 1 is the basic framework of this general method.

| (12) |

Now let us describe the convergence properties of the basic method. First, let us assume that the function has a minimizer on for each value of .

Theorem 3.4.

Proof.

Let be a global solution of (NROP), that is,

From the Definition 3.1 of the smoothing function, there exist a constant and a function such that, for all ,

| (13) |

with Substituting and combining with the global solution , we have that

By rearranging this expression, we obtain

| (14) |

Since minimizes on for each , we have that , which leads to

| (15) |

The second inequality above follows from (13). Combining (14) and (15), we obtain

| (16) |

Now, suppose that is a limit point of , so that there is an infinite subsequence such that Note that because is complete. By taking the limit as , on both sides of (16), again by the definition of the smoothing function, we obtain

Thus, it follows that Since is a point whose objective value is equal to that of the global solution , we conclude that , too, is a global solution. ∎

This strong result requires us to find a global minimizer of each subproblem, which, however, cannot always be done. The next result concerns the convergence properties of the sequence under the condition that has the following additional property:

| (17) |

Example 4.

The above property holds for in Example 2; i.e., we have on , provided that . Note that under the equality,

the -th component of can be rewritten as

For any fixed , consider the derivative of a real function . Then we have

where “, ” are shorthand for and . For the last inequality above, we observe that ; hence, the term is a convex combination of all entries of , which implies that This completes the proofs of our claims.

In [18], the authors considered a special case of Algorithm 1, wherein the smoothing function of also satisfies (17) and a Riemannian conjugate gradient method is used for (12).

Theorem 3.5.

Proof.

For each , is obtained by approximately solving

starting at . Then at least, we have

Since , property (17) ensures

The claim that sequence is strictly decreasing follows from these two inequalities.

Suppose that, for all and for all ,

| (18) |

Then for each ,

which proves our claims.

Now, we show (18) is true if the smoothing function has property (17). Fix any ; (17) implies that is strictly decreasing as

Actually, is monotonically increasing on

On the other hand, from the definition of the smoothing function, we have that

Hence, we have as claimed. ∎

Note that the above weak result does not ensure that . Next, for better convergence (compared with Theorem 3.5) and an effortless implementation (compared with Theorem 3.4), we propose an enhanced Riemannian smoothing method: Algorithm 2. This is closer to the version in [47], where the authors use the Riemannian steepest descent method for solving the smoothed problem (19).

| (19) |

| (20) |

The following result is adapted from [47, Proposition 4.2 & Theorem 4.3]. Readers are encouraged to refer to [47] for a discussion on the stationary point associated with on .

Theorem 3.6.

In Algorithm 2, suppose that the chosen sub-algorithm has the following general convergence property for (SROP):

| (21) |

Moreover, suppose that, for all , the function satisfies the convergence assumptions of the sub-algorithm needed for above and satisfies the Riemannian gradient sub-consistency on . Then

- 1.

- 2.

Proof.

Fix any . By (21), we have . Hence, there is a convergent subsequence of whose limit is 0. This means that, for any , there exists an integer such that If , we get . Thus, statement (1) holds.

Next, suppose that is a limit point of generated by Algorithm 2, so that there is an infinite subsequence such that From (1), we have

and we find that for . Hence,

∎

Now let us consider the selection strategy of the nonnegative sequence with . In [47], when shrinks, the authors set

| (22) |

with an initial value of and constant . In the spirit of the usual smoothing methods described in [21], one can set

| (23) |

with a constant . The latter is an adaptive rule, because determines subproblem (19) and its stopping criterion at the same time. The merits and drawbacks of the two rules require more discussion, but the latter seems to be more reasonable.

We conclude this section by discussing the connections with [18] and [47]. Our work is based on an efficient unification of them. [18] focused on a specific algorithm and did not discuss the underlying generalities, whereas we studied a general framework for Riemannian smoothing. Recall that the “smoothing function” is the core tool of the smoothing method. In addition to what are required by its definition (see Definition 3.1), it needs to have the following “additional properties” (AP) in order for the algorithms to converge:

We find that not all smoothing functions satisfy (AP1) and for some functions it is hard to prove whether (AP2) holds. For example, all the functions in Table 1 are smoothing functions of , but only the first three meet (AP1); the last two do not. In [21], the authors showed that the first one in Table 1, , has property (AP2). The others remain to be verified, but doing so will not be a trivial exercise. To a certain extent, Algorithm 1 as well as Theorem 3.5 guarantee a fundamental convergence result even if one has difficulty in showing whether one’s smoothing function satisfies (AP2). Therefore, it makes sense to consider Algorithms 1 and 2 together for the sake of the completeness of the general framework.

| , where is the hyperbolic tangent function. | ||

| , where is the Gauss error function. |

Algorithm 2 expands on the results of [47]. It allows us to use any standard method of (SROP), not just steepest descent, to solve the smoothed problem (19). Various standard Riemannian algorithms for (SROP), such as the Riemannian conjugate gradient method [41] (which often performs better than Riemannian steepest descent), the Riemannian Newton method [1, Chapter 6], and the Riemannian trust region method [1, Chapter 7], have extended the concepts and techniques used in Euclidean space to Riemannian manifolds. As shown by Theorem 3.6, no matter what kind of sub-algorithm is implemented for (19), it does not affect the final convergence as long as the chosen sub-algorithm has property (21). On the other hand, we advocate that the sub-algorithm should be viewed as a “Black Box” and the user should not have to care about the code details of the sub-algorithm at all. We can directly use an existing solver, e.g., Manopt [14], which includes the standard Riemannian algorithms mentioned above. Hence, we can choose the most suitable sub-algorithm for the application and quickly implement it with minimal effort.

4 Numerical experiments on CP factorization

The numerical experiments in Section 4 and 5 were performed on a computer equipped with an Intel Core i7-10700 at 2.90GHz with 16GB of RAM using Matlab R2022a. Our Algorithm 2 is implemented in the Manopt framework [14] (version 7.0). The number of iterations to solve the smoothed problem (19) with the sub-algorithm is recorded in the total number of iterations. We refer readers to the supplementary material of this paper for the available codes.111Alternatively, https://github.com/GALVINLAI/General-Riemannian-Smoothing-Method.

In this section, we describe numerical experiments that we conducted on CP factorization in which we solved (OptCP) using Algorithm 2, where different Riemannian algorithms were employed as sub-algorithms and was used as the smoothing function. To be specific, we used three built-in Riemannian solvers of Manopt 7.0 — steepest descent (SD), conjugate gradient (CG), and trust regions (RTR), denoted by SM_SD, SM_CG and SM_RTR, respectively. We compared our algorithms with the following non-Riemannian numerical algorithms for CP factorization that were mentioned in subsection 1.1. We followed the settings used by the authors in their papers.

- •

- •

- •

We have shown that is a smoothing function of with gradient consistency. of the matrix argument can be simply derived from entrywise operations. Then from the properties of compositions of smoothing functions [5, Proposition 1 (3)], we have that is a smoothing function of with gradient consistency. In practice, it is important to avoid numerical overflow and underflow when evaluating . Overflow occurs when any is large and underflow occurs when all are small. To avoid these problems, we can shift each component by and use the following formula:

whose validity is easy to show.

The details of the experiments are as follows.

If was of full rank, for accuracy reasons, we obtained an initial by using Cholesky decomposition. Otherwise, was obtained by using spectral decomposition.

Then we extended to columns by column replication (6). We set if was known or was sufficiently large.

We used RandOrthMat.m [42] to generate a random starting point on the basis of the Gram-Schmidt process.

For our three algorithms, we set and used an adaptive rule (23) of with . Except for RIPG_mod, all the algorithms terminated successfully at iteration , where was attained before the maximum number of iterations (5,000) was reached. In addition, SpFeasDC_ls failed when . Regarding RIPG_mod, it terminated successfully when was attained before at most 10,000 iterations for , and before at most 50,000 iterations in all other cases. In the tables of this section, we report the rounded success rate (Rate) over the total number of trials, although the definitions of “Rate” in the different experiments (described in Sections 4.1-4.4) vary slightly from one experiment to the other. We will describe them later.

4.1 Randomly generated instances

We examined the case of randomly generated matrices to see how the methods were affected by the order or . The instances were generated in the same way as in [31, Section 7.7]. We computed by setting for all where is a random matrix based on the Matlab command randn, and we took to be factorized. In Table 2, we set and for the values . For each pair of and , we generated 50 instances if and 10 instances otherwise. For each instance, we initialized all the algorithms at the same random starting point and initial decomposition , except for RIPG_mod. Note that each instance was assigned only one starting point.

Table 2 lists the average time in seconds (Times) and the average number of iterations (Iters) among the successful instances. For our three Riemannian algorithms, Iters contains the number of iterations of the sub-algorithm. Table 2 also lists the rounded success rate (Rate) over the total number (50 or 10) of instances for each pair of and . Boldface highlights the two best results in each row.

As shown in Table 2, except for APM_mod, each method had a success rate of 1 for all pairs of and . Our three algorithms outperformed the other methods on the large-scale matrices with . In particular, SM_CG with the conjugate-gradient method gave the best results.

4.2 A specifically structured instance

Let denote the all-ones vector in and consider the matrix [31, Example 7.1],

Theorem 2.2 shows that for every By construction, it is obvious that . We tried to factorize for the values in Table 3. For each , using and the same initial decomposition , we tested all the algorithms on the same 50 randomly generated starting points, except for RIPG_mod. Note that each instance was assigned 50 starting points.

Table 3 lists the average time in seconds (Times) and the average number of iterations (Iters) among the successful starting points. It also lists the rounded success rate (Rate) over the total number (50) of starting points for each . Boldface highlights the two best results for each .

We can see from Table 3 that the success rates of our three algorithms were always 1, whereas the success rates of the other methods decreased as increased. Likewise, SM_CG with the conjugate-gradient method gave the best results.

| Methods | SM_SD | SM_CG | SM_RTR | SpFeasDC_ls | RIPG_mod | APM_mod | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | |

| 20 | 1 | 0.0409 | 49 | 1 | 0.0394 | 41 | 1 | 0.0514 | 35 | 1 | 0.0027 | 24 | 1 | 0.0081 | 1229 | 0.32 | 0.3502 | 2318 |

| 30 | 1 | 0.0549 | 58 | 1 | 0.0477 | 44 | 1 | 0.0690 | 36 | 1 | 0.0075 | 24 | 1 | 0.0231 | 1481 | 0.04 | 1.0075 | 2467 |

| 40 | 1 | 0.0735 | 65 | 1 | 0.0606 | 46 | 1 | 0.0859 | 37 | 1 | 0.0216 | 46 | 1 | 0.0574 | 1990 | 0 | - | - |

| 100 | 1 | 0.2312 | 104 | 1 | 0.1520 | 56 | 1 | 0.4061 | 45 | 1 | 0.2831 | 109 | 1 | 0.8169 | 4912 | 0 | - | - |

| 200 | 1 | 1.0723 | 167 | 1 | 0.5485 | 69 | 1 | 1.9855 | 53 | 1 | 2.2504 | 212 | 1 | 5.2908 | 9616 | 0 | - | - |

| 400 | 1 | 14.6 | 314 | 1 | 4.1453 | 86 | 1 | 22.1 | 69 | 1 | 36.9 | 636 | 1 | 90.6 | 17987 | 0 | - | - |

| 600 | 1 | 50.6 | 474 | 1 | 14.7 | 105 | 1 | 46.4 | 80 | 1 | 140.1 | 882 | 1 | 344.7 | 26146 | 0 | - | - |

| 800 | 1 | 133.3 | 643 | 1 | 30.0 | 109 | 1 | 93.5 | 89 | 1 | 413.3 | 1225 | 1 | 891.1 | 34022 | 0 | - | - |

| Methods | SM_SD | SM_CG | SM_RTR | SpFeasDC_ls | RIPG_mod | APM_mod | ||||||||||||

| Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | |

| 20 | 1 | 0.0597 | 52 | 1 | 0.0551 | 42 | 1 | 0.0842 | 37 | 1 | 0.0057 | 15 | 1 | 0.0105 | 1062 | 0.30 | 0.7267 | 2198 |

| 30 | 1 | 0.0793 | 59 | 1 | 0.0673 | 45 | 1 | 0.1161 | 39 | 1 | 0.0128 | 17 | 1 | 0.0336 | 1127 | 0 | - | - |

| 40 | 1 | 0.1035 | 67 | 1 | 0.0882 | 48 | 1 | 0.1961 | 41 | 1 | 0.0256 | 19 | 1 | 0.0822 | 1460 | 0 | - | - |

| 100 | 1 | 0.5632 | 103 | 1 | 0.3395 | 57 | 1 | 1.4128 | 50 | 1 | 0.8115 | 86 | 1 | 1.1909 | 4753 | 0 | - | - |

| 200 | 1 | 4.5548 | 163 | 1 | 2.4116 | 68 | 1 | 14.3 | 65 | 1 | 8.1517 | 184 | 1 | 9.2248 | 9402 | 0 | - | - |

| 400 | 1 | 46.5 | 296 | 1 | 19.2 | 89 | 1 | 77.1 | 80 | 1 | 124.3 | 453 | 1 | 156.6 | 17563 | 0 | - | - |

| 600 | 1 | 209.0 | 446 | 1 | 65.7 | 99 | 1 | 294.5 | 76 | 1 | 981.8 | 795 | 1 | 616.7 | 25336 | 0 | - | - |

| 800 | 1 | 609.7 | 628 | 1 | 160.4 | 114 | 1 | 650.7 | 83 | 1 | 4027.4 | 1070 | 1 | 1289.4 | 26820 | 0 | - | - |

| Methods | SM_SD | SM_CG | SM_RTR | SpFeasDC_ls | RIPG_mod | APM_mod | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | Rate | Times | Iters | |

| 10 | 1 | 0.0399 | 71 | 1 | 0.0313 | 49 | 1 | 0.0424 | 45 | 1 | 0.0043 | 149 | 1 | 0.0074 | 2085 | 0.80 | 0.0174 | 616 |

| 20 | 1 | 0.0486 | 85 | 1 | 0.0408 | 63 | 1 | 0.0637 | 55 | 0.98 | 0.0139 | 201 | 0.74 | 0.0212 | 3478 | 0.90 | 0.0591 | 864 |

| 50 | 1 | 0.2599 | 295 | 1 | 0.1073 | 101 | 1 | 0.2104 | 76 | 0.98 | 0.3389 | 770 | 0 | - | - | 0.76 | 0.6948 | 1416 |

| 75 | 1 | 0.3843 | 329 | 1 | 0.1923 | 135 | 1 | 0.4293 | 93 | 0.98 | 1.0706 | 1186 | 0 | - | - | 0.64 | 1.4809 | 1510 |

| 100 | 1 | 0.7459 | 458 | 1 | 0.3289 | 168 | 1 | 0.9074 | 108 | 0.80 | 1.6653 | 1083 | 0 | - | - | 0.60 | 2.8150 | 1690 |

| 150 | 1 | 1.8076 | 647 | 1 | 0.7837 | 241 | 1 | 2.6030 | 145 | 0.70 | 3.7652 | 1170 | 0 | - | - | 0.35 | 9.9930 | 2959 |

4.3 An easy instance on the boundary of

Consider the following matrix from [44, Example 2.7]:

The sufficient condition from [44, Theorem 2.5] ensures that this matrix is completely positive and Theorem 2.2 tells us that , since .

We found that all the algorithms could easily factorize this matrix. However, our three algorithms returned a CP factorization whose smallest entry was as large as possible. In fact, they also maximized the smallest entry in the symmetric factorization of , since (OptCP) is equivalent to

When we did not terminate as soon as , for example, after 1000 iterations, our algorithms gave the following CP factorization whose the smallest entry is around :

4.4 A hard instance on the boundary of

Next, we examined how well these methods worked on a hard matrix on the boundary of . Consider the following matrix on the boundary taken from [30]:

Since and is of full rank, it follows from Theorem 2.2 that ; i.e., there is no strictly positive CP factorization for Hence, the global minimum of (OptCP), , is clear. None of the algorithms could decompose this matrix under our tolerance, , in the stopping criteria. As was done in [31, Example 7.3], we investigated slight perturbations of this matrix. Given

we factorized for different values of using Note that provided and approached the boundary as . We chose the largest . For each we tested all of the algorithms on 50 randomly generated starting points and computed the success rate over the total number of starting points.

Table 4 shows how the success rate of each algorithm changes as approaches the boundary. The table sorts the results from left to right according to overall performance. Except for SM_RTR, whose success rate was always 1, the success rates of all the other algorithms significantly decreased as increased to 0.9999. Surprisingly, the method of SM_CG, which performed well in the previous examples, seemed unable to handle instances close to the boundary.

| SM_RTR | SM_SD | RIPG_mod | SM_CG | SpFeasDC_ls | APM_mod | |

| 0.6 | 1 | 1 | 1 | 1 | 1 | 0.42 |

| 0.65 | 1 | 1 | 1 | 1 | 1 | 0.44 |

| 0.7 | 1 | 1 | 1 | 1 | 1 | 0.48 |

| 0.75 | 1 | 1 | 1 | 1 | 1 | 0.52 |

| 0.8 | 1 | 1 | 1 | 1 | 0.96 | 0.46 |

| 0.82 | 1 | 1 | 1 | 1 | 0.98 | 0.4 |

| 0.84 | 1 | 1 | 1 | 1 | 0.86 | 0.24 |

| 0.86 | 1 | 1 | 1 | 1 | 0.82 | 0.1 |

| 0.88 | 1 | 1 | 1 | 1 | 0.58 | 0.18 |

| 0.9 | 1 | 1 | 1 | 1 | 0.48 | 0.18 |

| 0.91 | 1 | 1 | 1 | 1 | 0.4 | 0.14 |

| 0.92 | 1 | 1 | 1 | 1 | 0.2 | 0.18 |

| 0.93 | 1 | 1 | 0.98 | 1 | 0.22 | 0.22 |

| 0.94 | 1 | 1 | 0.98 | 1 | 0.1 | 0.2 |

| 0.95 | 1 | 1 | 1 | 1 | 0.12 | 0.32 |

| 0.96 | 1 | 1 | 0.96 | 0.98 | 0.06 | 0.34 |

| 0.97 | 1 | 1 | 0.86 | 0.82 | 0.06 | 0.14 |

| 0.98 | 1 | 1 | 0.76 | 0.28 | 0.02 | 0 |

| 0.99 | 1 | 0.68 | 0.42 | 0 | 0 | 0 |

| 0.999 | 1 | 0 | 0.14 | 0 | 0 | 0 |

| 0.9999 | 1 | 0 | 0 | 0 | 0 | 0 |

5 Further numerical experiments: comparison with [18, 47]

As described at the end of Section 3, the algorithms in [47] and [18] are both special cases of our algorithm. In this section, we compare them to show whether it performs better when we use other sub-algorithms or other smoothing functions. We applied Algorithm 2 to two problems: finding a sparse vector (FSV) in a subspace and robust low-rank matrix completion, which are problems implemented in [47] and [18], respectively. Since they both involve approximations to the norm, we applied the smoothing functions listed in Table 1.

We used the six solvers built into Manopt 7.0, namely, steepest descent; Barzilai-Borwein (i.e., gradient-descent with BB step size); Conjugate gradient; trust regions; BFGS (a limited-memory version); ARC (i.e., adaptive regularization by cubics).

5.1 FSV problem

The FSV problem is to find the sparsest vector in an -dimensional linear subspace ; it has applications in robust subspace recovery, dictionary learning, and many other problems in machine learning and signal processing [37, 38]. Let denote a matrix whose columns form an orthonormal basis of : this problem can be formulated as

where is the sphere manifold, and counts the number of nonzero components of . Because this discontinuous objective function is unwieldy, in the literature, one instead focuses on solving the norm relaxation given below:

| (24) |

where is the norm of the vector .

Our synthetic problems of the minimization model (24) were generated in the same way as in [47]: i.e., we chose for and for . We defined a sparse vector , whose first components are 1 and the remaining components are 0. Let the subspace be the span of and some random vectors in . By mgson.m [20], we generated an orthonormal basis of to form a matrix . With this construction, the minimum value of should be equal to . We chose the initial points by using the M.rand() tool of Manopt 7.0 that returns a random point on the manifold M and set x0 = abs(M.rand()). The nonnegative initial point seemed to be better in the experiment. Regarding the the settings of our Algorithm 2, we chose the same smoothing function in Table 1 and the same gradient tolerance strategy (22) as in [47]: We compared the numerical performances when using different sub-algorithms. Note that with the choice of the steepest-descent method, our Algorithm 2 is exactly the same as the one in [47].

For each , we generated 50 pairs of random instances and random initial points. We claim that an algorithm successfully terminates if , where is the -th iteration. Here, when we count the number of nonzeros of , we truncated the entries as

| (25) |

where is a tolerance related to the precision of the solution, taking values from to . Tables 5 and 6 report the number of successful cases out of 50 cases. Boldface highlights the best result for each row.

|

|

|

|

BFGS | ARC | ||||||||||

| 21 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 21 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 21 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 16 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 36 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 36 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 36 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 34 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 44 | 47 | 1 | 44 | 47 | 45 | ||||||||||

| 44 | 47 | 0 | 44 | 47 | 45 | ||||||||||

| 44 | 47 | 0 | 44 | 47 | 45 | ||||||||||

| 43 | 47 | 0 | 44 | 47 | 45 | ||||||||||

| 47 | 47 | 2 | 45 | 45 | 45 | ||||||||||

| 47 | 47 | 2 | 45 | 45 | 45 | ||||||||||

| 47 | 47 | 0 | 45 | 45 | 45 | ||||||||||

| 46 | 47 | 0 | 45 | 45 | 45 | ||||||||||

|

|

|

|

BFGS | ARC | ||||||||||

| 0 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 0 | 19 | 0 | 22 | 23 | 23 | ||||||||||

| 0 | 19 | 0 | 22 | 23 | 19 | ||||||||||

| 0 | 18 | 0 | 22 | 22 | 17 | ||||||||||

| 8 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 1 | 42 | 0 | 34 | 36 | 35 | ||||||||||

| 0 | 42 | 0 | 34 | 36 | 33 | ||||||||||

| 0 | 42 | 0 | 34 | 34 | 29 | ||||||||||

| 3 | 47 | 0 | 44 | 47 | 45 | ||||||||||

| 2 | 47 | 0 | 44 | 47 | 45 | ||||||||||

| 1 | 47 | 0 | 44 | 47 | 44 | ||||||||||

| 0 | 46 | 0 | 44 | 44 | 36 | ||||||||||

| 5 | 47 | 0 | 45 | 45 | 45 | ||||||||||

| 2 | 47 | 0 | 45 | 45 | 45 | ||||||||||

| 0 | 47 | 0 | 45 | 45 | 45 | ||||||||||

| 0 | 47 | 0 | 45 | 45 | 37 |

As shown in Table 5 and 6, surprisingly, the conjugate-gradient method, which performed best on the CP factorization problem in Section 4, performed worst on the FSV problem. In fact, it was almost useless. Moreover, although the steepest-descent method employed in [47] was not bad at obtaining low-precision solutions with , it had difficulty obtaining high-precision solutions with . The remaining four sub-algorithms easily obtained high-precision solutions, with the Barzilai-Borwein method performing the best in most occasions. Combined with the results in Section 4, this shows that in practice, the choice of sub-algorithm in the Riemannian smoothing method (Algorithm 2) is highly problem-dependent. For the other smoothing functions in Table 1, we obtained similar results as in Table 5 and 6.

|

|

|

|

BFGS | ARC | ||||||||||

| 24 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 24 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 24 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 23 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 39 | 37 | 1 | 40 | 39 | 40 | ||||||||||

| 39 | 37 | 0 | 40 | 39 | 40 | ||||||||||

| 39 | 37 | 0 | 40 | 39 | 40 | ||||||||||

| 39 | 37 | 0 | 40 | 39 | 40 | ||||||||||

| 45 | 48 | 3 | 45 | 43 | 41 | ||||||||||

| 45 | 48 | 0 | 45 | 43 | 41 | ||||||||||

| 45 | 48 | 0 | 45 | 43 | 41 | ||||||||||

| 45 | 48 | 0 | 45 | 43 | 41 | ||||||||||

| 44 | 46 | 1 | 44 | 44 | 43 | ||||||||||

| 44 | 46 | 0 | 44 | 44 | 43 | ||||||||||

| 44 | 46 | 0 | 44 | 44 | 43 | ||||||||||

| 44 | 46 | 0 | 44 | 44 | 43 | ||||||||||

|

|

|

|

BFGS | ARC | ||||||||||

| 3 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 0 | 28 | 0 | 28 | 28 | 25 | ||||||||||

| 0 | 28 | 0 | 28 | 28 | 22 | ||||||||||

| 0 | 28 | 0 | 28 | 27 | 12 | ||||||||||

| 5 | 37 | 0 | 40 | 39 | 40 | ||||||||||

| 0 | 37 | 0 | 40 | 39 | 40 | ||||||||||

| 0 | 37 | 0 | 40 | 39 | 39 | ||||||||||

| 0 | 37 | 0 | 40 | 37 | 30 | ||||||||||

| 13 | 48 | 0 | 45 | 43 | 41 | ||||||||||

| 2 | 48 | 0 | 45 | 43 | 41 | ||||||||||

| 0 | 48 | 0 | 45 | 43 | 40 | ||||||||||

| 0 | 48 | 0 | 45 | 43 | 37 | ||||||||||

| 14 | 46 | 0 | 44 | 44 | 43 | ||||||||||

| 0 | 46 | 0 | 44 | 44 | 43 | ||||||||||

| 0 | 46 | 0 | 44 | 44 | 43 | ||||||||||

| 0 | 46 | 0 | 44 | 43 | 40 |

5.2 Robust low-rank matrix completion

Low-rank matrix completion consists of recovering a rank- matrix of size from only a fraction of its entries with . The situation in robust low-rank matrix completion is one where only a few observed entries, called outliers, are perturbed, i.e.,

where is the unperturbed original data matrix of rank and is a sparse matrix. This is a case of adding non-Gaussian noise for which the traditional minimization model,

is not well suited to recovery of . Here, is a fixed rank manifold, denotes the set of indices of observed entries, and is the projection onto , defined as

In [18], the authors try to solve

because the sparsity-inducing property of the norm leads one to expect exact recovery when the noise consists of just a few outliers.

In all of the experiments, the problems were generated in the same way as in [18]. In particular, after picking the values of , we generated the ground truth , with independent and identically distributed (i.i.d.) Gaussian entries of zero mean and unit variance and . We then sampled observed entries uniformly at random, where is the oversampling factor. In our experiments, we set throughout. We chose the initial points by using the rank- truncated SVD decomposition of .

Regarding the setting of our Algorithm 2, we tested all combinations of the five smoothing functions in Table 1 and six sub-algorithms mentioned before (30 cases in total). We set and chose an aggressive value of for reducing , as in [18]. The stopping criterion of the loop of the sub-algorithm was set to a maximum of 40 iterations or the gradient tolerance (23), whichever was reached first. We monitored the iterations through the root mean square error (RMSE), which is defined as the error on all the entries between and the original low-rank matrix , i.e.,

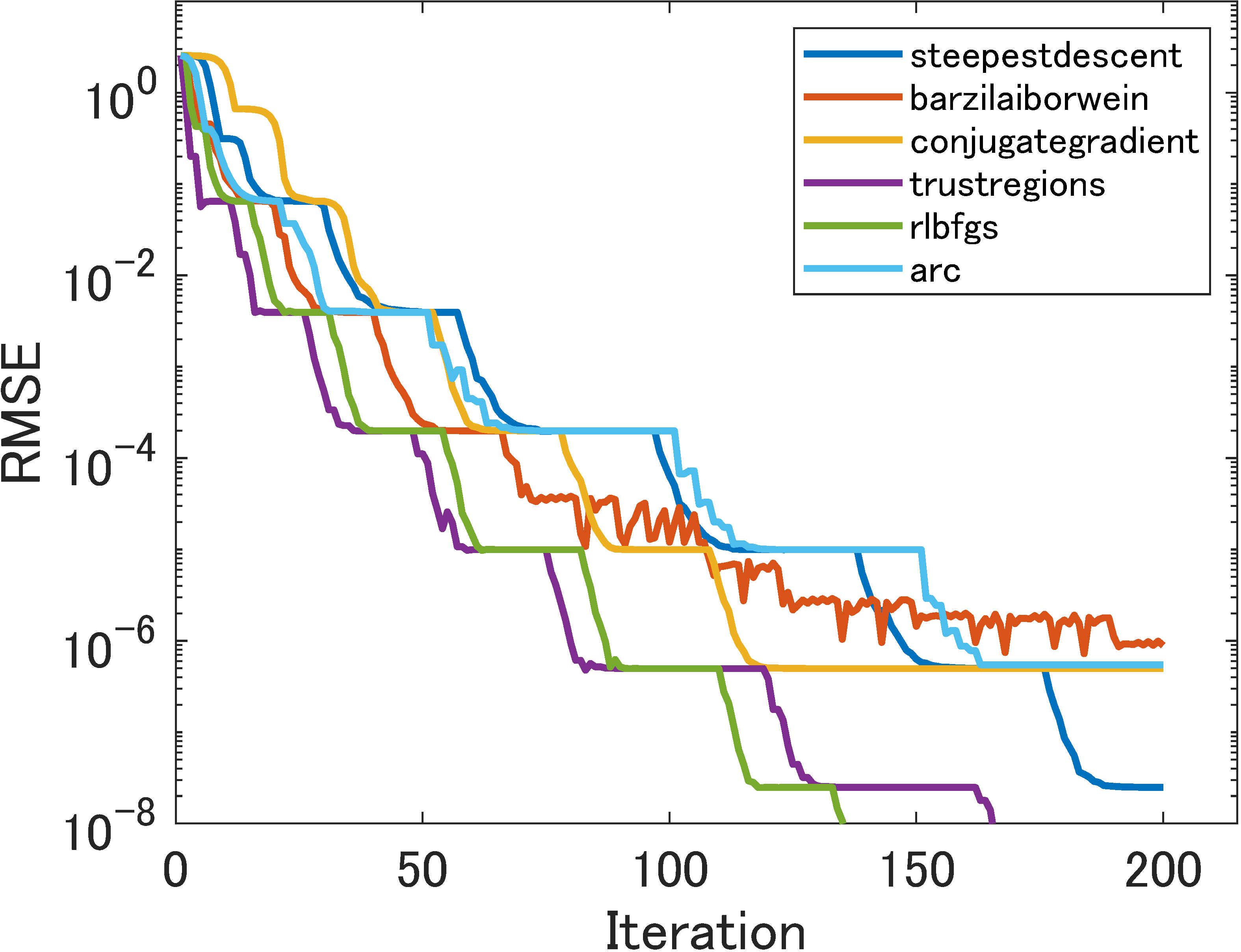

5.2.1 Perfect low-rank matrix completion

As in [18], we first tested all the methods on a simple perfect matrix (without any outliers) of size and rank 10. The results are shown in Figure 1. We can see that the choice of smoothing function does not have much effect on numerical performance. In terms of the number of iterations ((a)–(e)), our Algorithm 2 inherits the convergence of its sub-algorithm at least Q-superlinearly when trust regions or ARC are used. But the single iteration cost of trust regions and ARC is high; they are not efficient in terms of time. Specifically, the conjugate-gradient method employed in [18] stagnates at lower precision. Overall, Barzilai-Borwein performed best in terms of time and accuracy.

5.2.2 Low-rank matrix completion with outliers

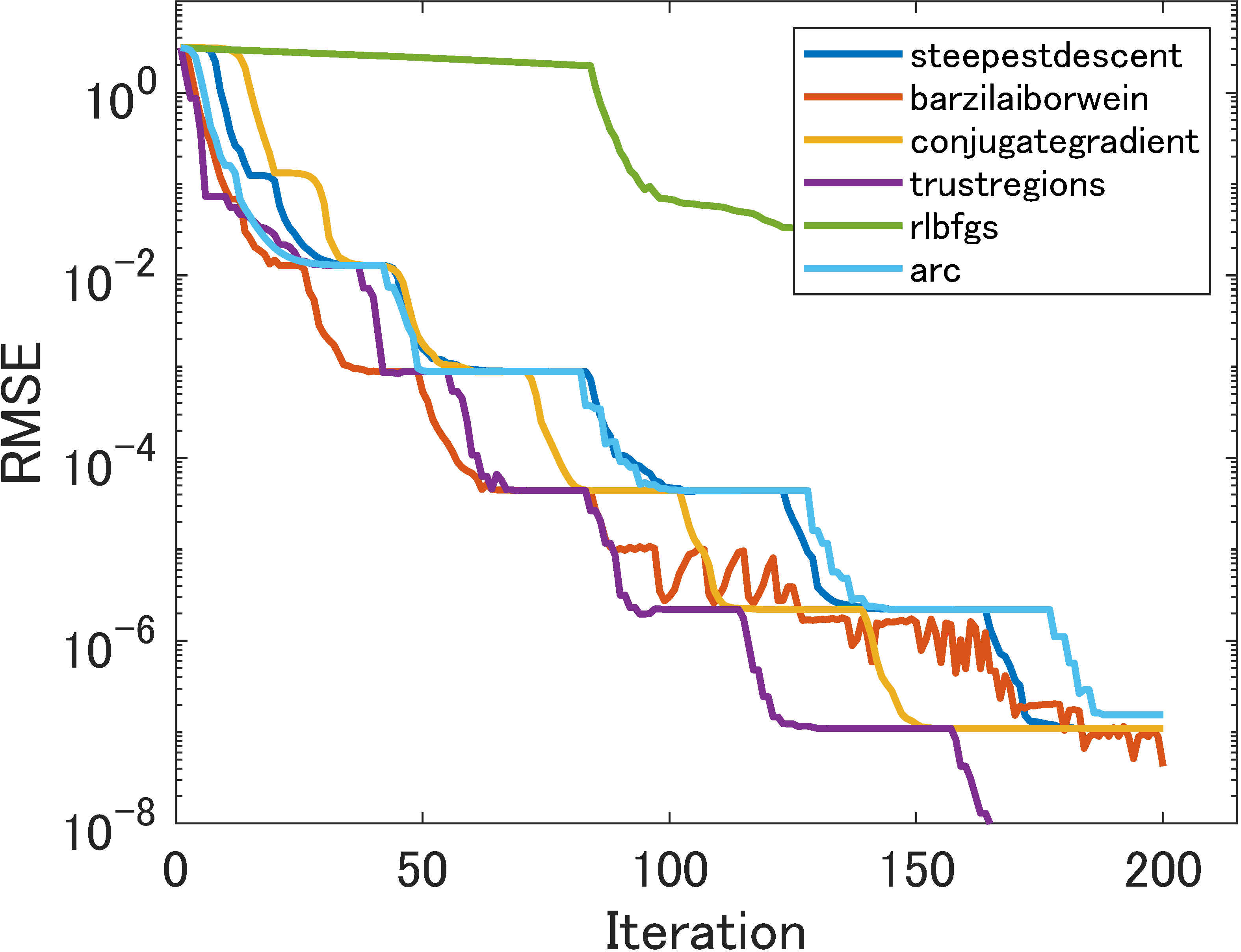

Given a matrix for which we observed the entries uniformly at random with an oversampling of 5, we perturbed of the observed entries by adding noise to them in order to create outliers. The added item was a random variable defined as where is a random variable with equal probability of being equal to or , while is a Gaussian random variable of mean and variance .

Figure 2 reports the results of two instances with outliers generated using and . Again, we can see that the choice of smoothing function does not have much effect. In most cases, BFGS and trust regions were better than the other methods in terms of number of iterations, and BFGS was the fastest. Furthermore, the conjugate-gradient method employed in [18] still stagnated at solutions with lower precision, approximately , while steepest descent, BFGS, and trust regions always obtained solutions with at least precision.

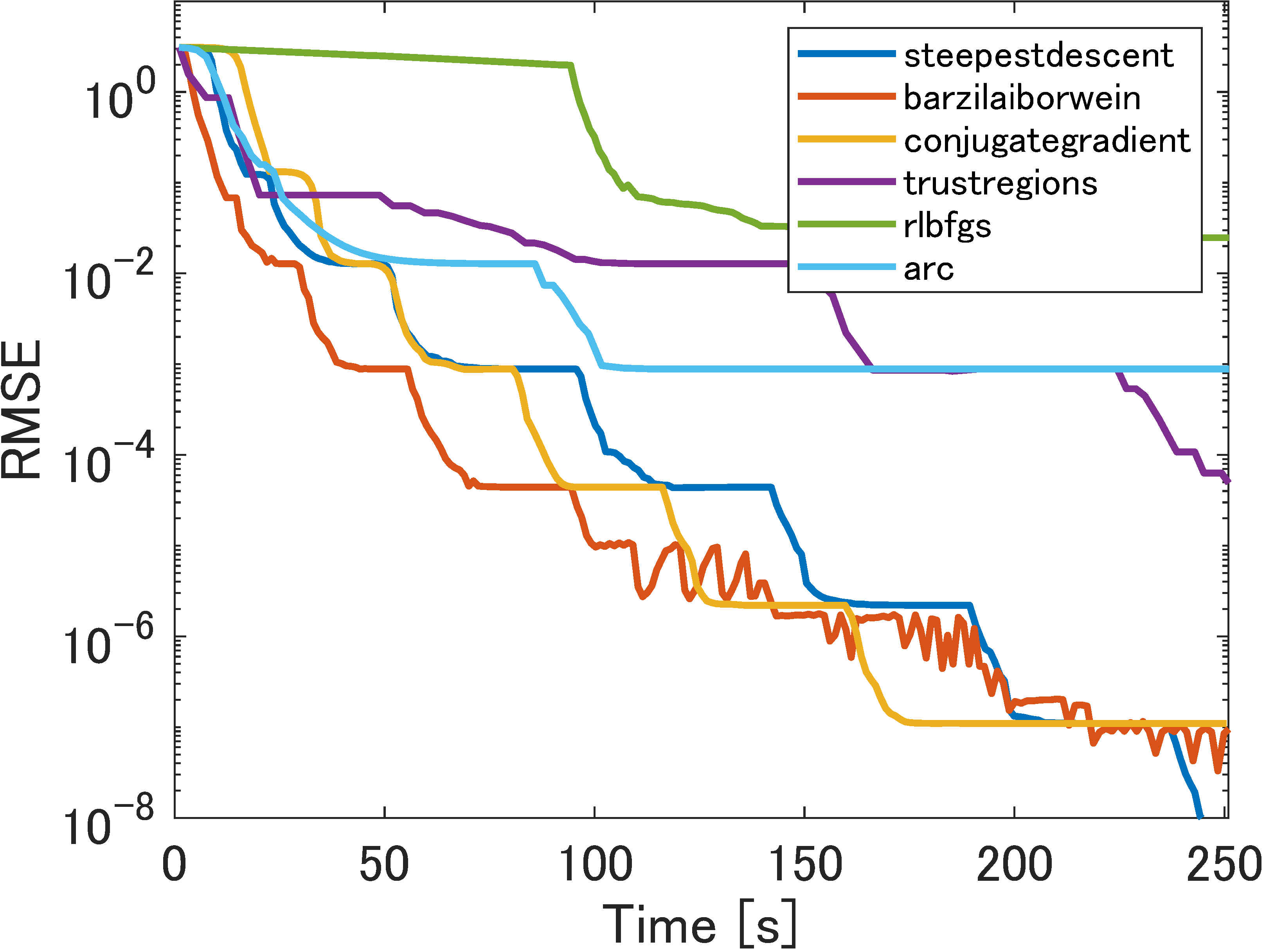

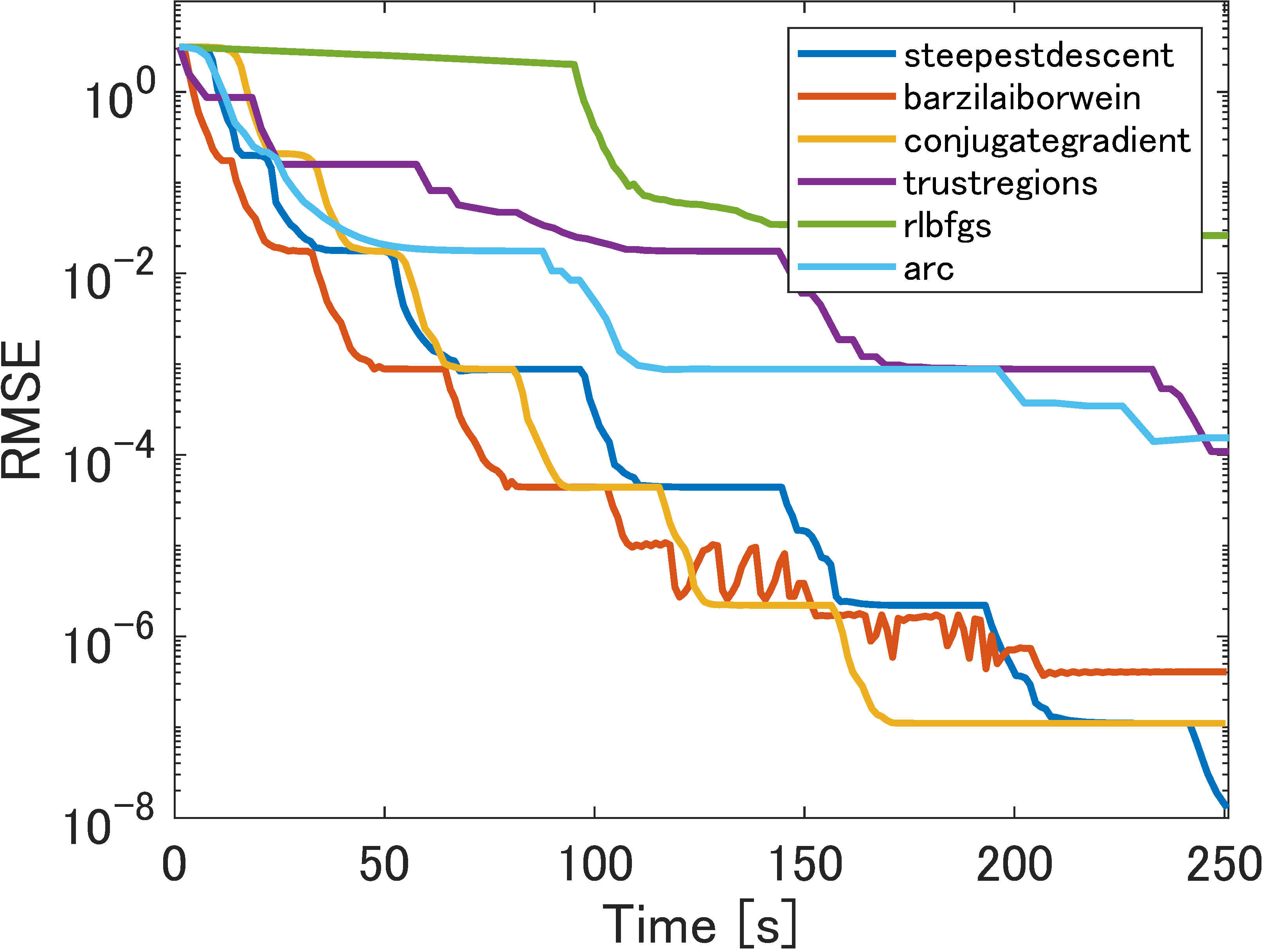

Next, we ran the same experiment on larger matrices, with outliers. Figure 3 illustrates the results of these experiments, with and . In most cases, trust regions still outperformed the other methods in terms of number of iterations, while BFGS performed poorly. Barzilai-Borwein and the conjugate-gradient method were almost as good in terms of time.

6 Concluding remarks

We examined the problem of finding a CP factorization of a given completely positive matrix and treated it as a nonsmooth Riemannian optimization problem. To this end, we studied a general framework of Riemannian smoothing for Riemannian optimization. The numerical experiments clarified that our method can compete with other efficient CP factorization methods, in particular on large-scale matrices.

Let us we summarize the relation of our approach to the existing CP factorization methods. Groetzner and Dür [31] and Chen et al. [19] proposed different methods to solve (FeasCP). Boţ and Nguyen [12] tried to solve another model (2). However, the methods they used do not belong to the Riemannian optimization techniques, but are rather Euclidean ones, since they treated the set as a usual constraint in Euclidean space. By comparison, we recognize the existence of manifolds, namely, the Stiefel manifold , and use optimization techniques specific to them. This change in perspective suggests the possibility of using the rich variety of Riemannian optimization techniques. As the experiments in Section 4 show, our Riemannian approach is faster and more reliable than the Euclidean methods.

In the future, we plan to extend Algorithm 2 to the case of general manifolds and, particularly, to quotient manifolds. This application is believed to be possible, although effort should be put into establishing analogous convergence results. In fact, convergence has been verified in a built-in example in Manopt 7.0 [14]: robust_pca.m computes a robust version of PCA on data and optimizes a nonsmooth function over a Grassmann manifold. The nonsmooth term consists of the norm, which is not squared, for robustness. In robust_pca.m, Riemannian smoothing with a pseudo-Huber loss function is used in place of the norm.

As in the other numerical methods, there is no guarantee that Algorithm 2 will find a CP factorization for every . It follows from Proposition 2.7 that if and only if the global minimum of (OptCP), say , is such that . Since our methods only converge to a stationary point, Algorithm 2 provides us with a local minimizer at best. We are looking forward to finding a global minimizer of (OptCP) in our future work.

Acknowledgments

This work was supported by the Research Institute for Mathematical Sciences, an International Joint Usage/Research Center, at Kyoto University, JSPS KAKENHI Grant, number (B)19H02373, and JST SPRING Grant, number JPMJSP2124. The authors would like to sincerely thank the anonymous reviewers and the coordinating editor for their thoughtful and valuable comments which have significantly improved the paper.

Data availability

The data that support the findings of this study are available from the corresponding author upon request.

Conflict of interest

All authors declare that they have no conflicts of interest.

References

- [1] Absil, P.-A., Mahony, R., Sepulchre, R.: Optimization Algorithms on Matrix Manifolds. Princeton University Press, Princeton (2009)

- [2] Bagirov, A., Karmitsa, N., Mäkelä, MM.: Introduction to Nonsmooth Optimization: Theory, Practice and Software. Springer International Publishing, Berlin (2014)

- [3] Berman, A., Shaked-Monderer, N.: Completely Positive Matrices. World Scientific, Singapore (2003)

- [4] Berman, A., Dür, M., Shaked-Monderer, N.: Open problems in the theory of completely positive and copositive matrices. Electron. J. Linear Algebra. 29, 46–58 (2015)

- [5] Bian, W., Chen, X.: Neural network for nonsmooth, nonconvex constrained minimization via smooth approximation. IEEE Trans. Neural Netw. Learn. Syst. 25, 545–556 (2013)

- [6] Bomze, I.M.: Copositive optimization–recent developments and applications. Eur. J. Oper. Res. 216, 509–520 (2012)

- [7] Bomze, I.M.: Building a completely positive factorization. Cent. Eur. J. Oper. Res. 26, 287–305 (2018)

- [8] Bomze, I.M., Dickinson, P.J., Still, G.: The structure of completely positive matrices according to their cp-rank and cp-plus-rank. Linear Algebra Appl. 482, 191–206 (2015)

- [9] Bomze, I.M., Dür, M., De Klerk, E., Roos, C., Quist, A.J., Terlaky, T.: On copositive programming and standard quadratic optimization problems. J. Glob. Optim. 18, 301–320 (2000)

- [10] Bomze, I.M., Schachinger, W., Uchida, G.: Think co(mpletely) positive! Matrix properties, examples and a clustered bibliography on copositive optimization. J. Glob. Optim. 52, 423–445 (2012)

- [11] Borckmans, P.B., Selvan, S.E., Boumal, N., Absil, P.-A.: A Riemannian subgradient algorithm for economic dispatch with valve-point effect, J. Comput. Appl. Math. 255, 848–866 (2014)

- [12] Boţ, R. I., Nguyen, D.-K.: Factorization of completely positive matrices using iterative projected gradient steps. Numer. Linear Algebra Appl. 28, e2391 (2021)

- [13] Boumal, N.: An Introduction to Optimization on Smooth Manifolds. To appear with Cambridge University Press, Mar 2022.

- [14] Boumal, N., Mishra, B., Absil, P.-A., Sepulchre, R.: Manopt, a Matlab toolbox for optimization on manifolds. J. Mach. Learn. Res. 15, 1455–1459 (2014)

- [15] Burer, S.: On the copositive representation of binary and continuous nonconvex quadratic programs. Math. Program. 120, 479–495 (2009)

- [16] Burer, S.: A gentle, geometric introduction to copositive optimization. Math. Program. 151, 89–116 (2015)

- [17] Burer, S., Monteiro, R.D.C.: Local minima and convergence in low-rank semidefinite programming. Math. Program. 103, 427–444 (2005)

- [18] Cambier, L., Absil, P.-A.: Robust low-rank matrix completion by Riemannian optimization. SIAM J. Sci. Comput. 38, S440–S460 (2016)

- [19] Chen, C., Pong, T.K., Tan, L., Zeng, L.: A difference-of-convex approach for split feasibility with applications to matrix factorizations and outlier detection. J. Glob. Optim. 78, 107–136 (2020)

- [20] Chen, M.: Gram-Schmidt orthogonalization. MATLAB Central File Exchange. https://www.mathworks.com/matlabcentral/fileexchange/55881-gram-schmidt-orthogonalization (2022). Accessed 24 June 2022

- [21] Chen, X.: Smoothing methods for nonsmooth, nonconvex minimization. Math. Program. 134, 71–99 (2012)

- [22] Chen, X., Wets, R.J.B., Zhang, Y.: Stochastic variational inequalities: residual minimization smoothing sample average approximations. SIAM J. Optim. 22, 649–673 (2012)

- [23] De Carvalho Bento, G., da Cruz Neto, J.X., Oliveira, P.R.: A new approach to the proximal point method: convergence on general Riemannian manifolds. J. Optim. Theory Appl. 168, 743–755 (2016)

- [24] De Klerk, E., Pasechnik, D.V.: Approximation of the stability number of a graph via copositive programming. SIAM J. Optim. 12, 875–892 (2002),

- [25] Dickinson, P.J.: An improved characterization of the interior of the completely positive cone. Electron. J. Linear Algebra. 20, 723–729 (2010)

- [26] Dickinson, P.J., Dür, M.: Linear-time complete positivity detection and decomposition of sparse matrices. SIAM J. Matrix Anal. Appl. 33, 701–720 (2012)

- [27] Dickinson, P.J., Gijben, L.: On the computational complexity of membership problems for the completely positive cone and its dual. Comput. Optim. Appl. 57, 403–415 (2014)

- [28] Dür, M.: Copositive programming — a survey. In: Diehl, M., Glineur, F., Jarlebring, E., Michiels W. (eds.) Recent advances in optimization and its applications in engineering, pp. 3–20. Springer, Berlin, Heidelberg (2010).

- [29] Dür, M., Rendl, F.: Conic optimization: A survey with special focus on copositive optimization and binary quadratic problems. EURO J. Comput. Optim. 9, 100021 (2021)

- [30] Dür, M., Still, G.: Interior points of the completely positive cone. Electron. J. Linear Algebra. 17, 48-53 (2008).

- [31] Groetzner, P., Dür, M.: A factorization method for completely positive matrices. Linear Algebra Appl. 591, 1–24 (2020)

- [32] Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (2012)

- [33] Jarre, F., Schmallowsky, K.: On the computation of certificates. J. Glob. Optim. 45, 281–296 (2009)

- [34] Kovnatsky, A., Glashoff, K., Bronstein, M.M.: MADMM: a generic algorithm for non-smooth optimization on manifolds. In: European Conference on Computer Vision, pp. 680–696. Springer, Cham (2016)

- [35] Liu, C., Boumal, N.: Simple algorithms for optimization on Riemannian manifolds with constraints. Appl. Math. Optim. 82, 949–981 (2020)

- [36] Nie, J.: The -truncated -moment problem. Found. Comput. Math. 14, 1243–1276 (2014)

- [37] Qu, Q., Sun, J., Wright, J.: Finding a sparse vector in a subspace: Linear sparsity using alternating directions. Adv. Neural. Inf. Process. Syst. 27 (2014)

- [38] Qu, Q., Zhu, Z., Li, X., Tsakiris, M.C., Wright, J., Vidal, R.: Finding the sparsest vectors in a subspace: Theory, algorithms, and applications. arXiv preprint arXiv:2001.06970. (2020)

- [39] Rockafellar, R.T., Wets, R.J.B.: Variational Analysis. Springer Science & Business Media, Berlin (2009)

- [40] Rudin, W.: Principles of Mathematical Analysis. McGraw-Hill, New York (1964)

- [41] Sato, H., Iwai, T.: A new, globally convergent Riemannian conjugate gradient method. Optim. 64, 1011–1031 (2015)

- [42] Shilon O.: Randorthmat. MATLAB Central File Exchange. https://www.mathworks.com/matlabcentral/fileexchange/11783-randorthmat (2022). Accessed 7 April 2022

- [43] Dutour Sikirić, M., Schürmann, A., Vallentin, F.: A simplex algorithm for rational cp-factorization. Math. Program. 187, 25–45 (2020)

- [44] So, W., Xu, C.: A simple sufficient condition for complete positivity. Oper. Matrices. 9, 233–239 (2015)

- [45] Sponsel, J., Dür, M.: Factorization and cutting planes for completely positive matrices by copositive projection. Math. Program. 143, 211–229 (2014)

- [46] Zhang, C., Chen, X.: A smoothing active set method for linearly constrained non-Lipschitz nonconvex optimization, SIAM J. Optim. 30, 1–30 (2020)

- [47] Zhang, C., Chen, X., Ma, S.: A Riemannian smoothing steepest descent method for non-Lipschitz optimization on submanifolds. arXiv preprint arXiv:2104.04199. (2021)