∎

Comparisons of Algorithms in Big Data Processing

Abstract

Change management of information systems includes careful assessment of increasing number of algorithms’ robustness. MapeReduce is a popular parallel computing framework for big data processing. Algorithms used in the framework prove to be effective only when certain conditions are true. The First-In-First-Out (FIFO) and Hadoop Fair Scheduler (HFS) algorithms do not take the rack structure of data centers into account, so they are known to not be heavy-traffic delay optimal or even throughput optimal. The recent advances on scheduling for data centers considering the rack structure and the heterogeneity of servers resulted in the state-of-the-art Balanced-PANDAS algorithm that outperforms the classic MaxWeight algorithm and its derivation, JSQ-MaxWeight algorithm. In both Balanced-PANDAS and MaxWeight algorithms, the processing rate of local, rack-local, and remote servers are assumed to be known. However, with the change of traffic over time in addition to estimation errors of processing rates, it is not realistic to consider the processing rates to be known. In this research, we study the robustness of Balanced-PANDAS and MaxWeight algorithms in terms of inaccurate estimations of processing rates. We observed that Balanced-PANDAS is not as sensitive as MaxWeight on the accuracy of processing rates, making it more appealing to use in Big Data processing centers.

Keywords:

Hadoop MapReduce Data center Scheduling Load balancing Robustness1 Introduction and Related Work

Parallel computing for big data has different applications from online social networks, health-care industry, advertisement placement, and machine learning to search engines and data centers. The most popular big data parallel computing framework is MapReduce which is broadly used in Hadoop [White, 2012], Dryad [Isard et al., 2007], Google [Dean and Ghemawat, 2008], Deep Learning [Daghighi, 2019], and grid-computing [Isard et al., 2009, Saadatmand et al., 2019a, b]. Before talking about MapReduce, we present some details on the network structure of data centers. Data centers used to mainly consist of two parts, storage and computing, and these two parts were connected to each other through a network link with high bandwidth. When this structure was being used for normal data processing, the communication of data from storage to processing unit was not creating any bottleneck. However, with the emergence of Big Data, the network between the two units was unable to accommodate fast and reliable data transfer. Hence, scientists come up with the idea of bringing data into processing unit by splitting both data and processing units into hundreds of small units and combining each computing unit with a small storage unit. As a result, each small unit consisting of storage and processing units, called a server, is capable of storing small pieces of data and processing them at the same time. In other words, data does not need to be transferred from storage unit to processing unit since they are already together. However, note that the big data cannot be stored in the storage unit of a single server. The solution is to split the big data into small chunks of data, typically 68-128 MB, and storing them on multiple servers. In practice, each data chunk is stored on three servers to increase availability and decrease data loss probability. As a result, processing of big data consists of processing of multiple data chunks that make the whole big data, and concatenating the data chunk processing results for the completion of the big data process. The processing of each data chunk is called Map task and the concatenation of the results on all the Map tasks is called Reduce task, which make up the MapReduce processing framework for big data. Note that at least for Reduce tasks, servers need to be connected and cannot completely be isolated. We later see that even for executing Map tasks, servers may need to exchange data. Hence, servers are connected to each other through links and switches. The structure of switches connecting servers is a complete field of research in computer science, but servers are generally connected to each other by top of the rack switches as well as core switches in the following way. The hundred servers are grouped into batches of 20-50 servers, where each batch is inter-connected to each other by a switch, called rack switch, and all rack switches are connected to one or more core switches which make all the servers connected.

The rack structure of data centers brings a lot of complexity for load balancing. As a result, most theoretical work on load balancing for data centers either consider homogeneous model of servers or ignore the rack structure and only consider data locality, where data locality refers to the fact that the data chunk associated to a Map task is stored on three servers, so is not available on other servers immediately. Examples of works that consider homogeneous server model are [Livny and Melman, 1982, Singh, 1970, Kreimer, 2002], and [Lowery et al., 2008]. A branch of research on homogeneous servers is utilization of the power of two or more choices for load balancing that lowers the messaging overhead between hundreds of servers and the core load balancing scheduler. For example you can refer to [Dahlgaard et al., 2016, Mitzenmacher, 2001, Gardner et al., 2017, Richa et al., 2001, Byers et al., 2004, Doerr et al., 2011, Lumetta and Mitzenmacher, 2007, Cooper et al., 2014, Luczak et al., 2005], and [Gast, 2015]. Although there has been a huge body of work on heuristic algorithms for heterogeneous server model, examples of which are [White, 2010, Isard et al., 2009, Zaharia et al., 2010, Jin et al., 2011, He et al., 2011, Polo et al., 2011, Zaharia et al., 2008, Daghighi and Kavousi, 2017, Kavousi, 2017, Moaddeli et al., 2019], and [Salehi, 2017], there has been a few recent works on algorithms with theoretical guarantees on such more complicated models.

The scheduling problem for a data center with a rack structure is a specific case of the open affinity scheduling problem, where each task type can be processed by each server but with different processing rates. The classic MaxWeight algorithm [van de Ven et al., 2009, Sadiq and De Veciana, 2009, Stolyar et al., 2004, Meyn, 2009], c--rule [Van Mieghem, 1995, Mandelbaum and Stolyar, 2004], and the work by Harrison and López [1999], Harrison [1998], and by Bell et al. [2001, 2005] have different approaches on solving the load balancing problem for the affinity scheduling, but they either not solve the delay optimality or have unrealistic assumptions including known task arrival rates and existence of one queue per task type. The state-of-the-art on scheduling for data centers considering the rack structure, no knowledge of task arrival rates, and having queues on the order of servers not the number of task types is presented by Xie et al. [2016] and by Yekkehkhany [2017], which is extended for a general number of data locality levels by Yekkehkhany et al. [2018]. The central idea to all algorithms in [Xie et al., 2016, Yekkehkhany, 2017], and [Yekkehkhany et al., 2018] is to use weighted workload on servers instead of the queue lengths, which leads to a better perfromance in terms of average delay expereinced by submitted tasks. The Balanced-PANDAS alborithm, where PANDAS stands for Priority Algorithm for Near-Data Scheduling, is the name for the weighetd-workload based algorithms proposed in [Xie et al., 2016, Yekkehkhany, 2017], and [Yekkehkhany et al., 2018]. The Join-the-Shortest-Queue-MaxWeight (JSQ-MaxWeight, JSQ-MW) proposed by Wang et al. [2013] that only considers data locality is also extended to the case where rack structure is considered by Xie et al. [2016]. The priority algorithm proposed by Xie and Lu [2015] is another work that only considers data locality, not the rack structure of data centers which is interesting in its own rights since both throughput and heavy-traffic delay optimality are proved for it; however, it is not even throughput optimal for a system with rack structure.

All of the algorithms mentioned above consider complete knowledge about the processing rates of different task types on different servers. However, the reality is that the processing rates are mostly not known due to errors in estimation methods and the change of the system structure over time or the change of traffic which can change the processing rates. Hence, it is important that the algorithm that is used for load balancing is robust to estimation errors of processing rates. In this work, we run extensive simulations to evaluate the robustness of the state-of-the-art algorithms on load balancing with different levels of data locality. It is observed that the Balanced-PANDAS algorithm not only has a better heavy-traffic delay performance, but it also is more robust to changes of processing rate estimations, while MaxWeight based algorithm does not perform as well as Balanced-PANDAS under processing rate estimation errors. In order to estimate the processing rates of tasks on servers and better model the data center structure, reinforcement learning methods can be used as it is discussed in [Musavi et al., 2016b, Yildiz et al., 2013, Musavi et al., 2016a], and [Musavi, 2019]. A recent work by Yekkehkhany and Nagi [2020] considers an exploration-exploitation approach as in the reinforcement learning method to both learn the processing rates and exploit load balancing based on the current estimation of the processing rates. They propose the Blind GB-PANDAS algorithm that is proven to be throughput-optimal and have a lower mean task completion time than the existing methods. A more sophisticated risk-averse exploration-exploitation approach can be considered for this problem when different tasks have different risk-levels as discussed in [Yekkehkhany et al., 2019] and [Yekkehkhany et al., 2020].

The rest of the paper is organized as follows. Section 2 presents the system model that is used throughout the paper, section 3 summarizes the preliminary materials including the description of Balanced-PANDAS, Priority, and MaxWeight based algorithms that are needed before we present the robustness comparison among different algorithms in section 4.

2 System Model

We consider the same system model described in [Xie et al., 2016] and [Yekkehkhany et al., 2018] as follows. A discrete time model is considered, where time is indexed by . Assume a data center with servers and denote the set of all servers as . Without loss of generality, assume that the first servers are connected to each other with a top of the rack switch and are called the first rack, the second servers are connected to each other with another top of the rack switch and are called the second rack, and so on. Hence, there are racks in total. All the top of the rack switches are connected to each other with one or more core switches in a symmetric manner. As a result, there are three levels of data locality as described below. Recall that the data chunk associated to a map task is stored on three servers by Hadoop’s default, so all those three servers are called local servers for the map task or in other words the map task can receive service locally from those three servers. Since servers have the data chunk of local tasks, the processing is immediately started after servers are assigned to process them. Note that the three servers storing the data for a map task is normally different for different map tasks. Hence, we associate a type to each map task, which is the label of the three servers, i.e. such that . This gives us a unique and informative way of representation for different task types as follows:

where a task type is denoted by given that and are the three local servers for task of type and the set of all task types is denoted by .

A map task is not limited to receive service from one of the local servers. It can receive service from one of the servers that are in the same rack as the local servers with a slightly lower service rate. The slight depreciation of processing rate for such servers, which are called rack-local servers, is for the travel time of the data associated to a map task from a local server to the rack-local server that is assigned for processing the map task rack-locally. Finally, all other servers other than the local and rack-local servers, which are called remote servers, have the lowest processing rate for a map task, since data needs to be transmitted through at least two of the top rack switches and a core switch, so the server cannot immediately start processing the task when it is assigned to do so remotely. In order to formally define the rack-local and remote servers, we need to propose a notation. Let denotes the label of the rack that the -th server belongs to. Then, the set of rack-local and remote servers to task of type , denoted by and , respectively, are as follows:

The service and arrival process of tasks is described below.

Task arrival process: Let denote the number of tasks of type that arrive to the system at time slot , where and it is assumed that and . The set of arrival rates for all task types is denoted by .

Service process:

The processing of a task on a local server is assumed to be faster than on a rack-local server, and the processing of a task on a rack-local server is faster than on a remote server. This fact is formalized as follows. The processing time of a task on a local, rack-local, and remote server has means and respectively, where Note that the processing time of a task can have any distribution with the given means, but the heavy-traffic delay optimality of Balanced-PANDAS algorithm is only proven under Geometric service time distribution, while MaxWeight based algorithm does not have a general heavy-traffic delay optimality under any distribution for service time.

Capacity region characterization:

An arrival rate for all task types is supportable for service by the servers if and only if the load on each server is strictly less than the capacity of the server. Considering a processing rate of one for each server, an arrival rate vector is in the capacity region of the system if and only if:

where is the rate of incoming tasks of type that are processed by server .

3 Load Balancing Algorithms

In this section, we introduce the main three algorithms on scheduling for data centers with more than or equal to two levels of data locality that have theoretical guarantees on optimality in some senses and under some conditions. In order to introduce the load balancing algorithm of each method, we also need to present the queueing structure required for that method. The three algorithms are

-

1.

Priority algorithm [Xie and Lu, 2015], which is best fit for applications with two levels of data locality, e.g. for the cases that only data locality is taken into account. An example is scheduling for Amazon Web Services inside a rack.

-

2.

Balanced-PANDAS [Xie et al., 2016, Yekkehkhany, 2017], and [Yekkehkhany et al., 2018], which is the state-of-the-art for scheduling applications with multiple levels of data locality and is observed to perform better in terms of average task completion time by fourfold in comparison to MaxWeight based algorithms. It is proven by [Xie et al., 2016] that under mild conditions, Balanced-PANDAS is both throughput and heavy-traffic delay optimal for a system with three levels of data locality and a rack structure.

-

3.

MaxWeight based algorithms [Stolyar et al., 2004] and [Wang et al., 2016], which can be used for multiple levels of data locality and are throughput optimal, but not heavy-traffic delay optimal, and it is observed that they generally have poor performance at high loads compared to weighted workload based algorithm used in Balanced-PANDAS algorithm.

The following three subsections present a complete introduction to these three main algorithms.

3.1 Priority algorithm

The Priority algorithm is designed for a system with two levels of data locality. In other words, it only considers data locality, but not the rack structure. Hence, there are only local and remote servers from the perspective of a task. The queueing structure under this algorithm is to have a single queue per server, where the queue corresponding to a server only keeps tasks that are local to that server. At the arrival of a task, a central scheduler routes the incoming task to the local server with the shortest queue length. An idle server is scheduled to process a task in its corresponding queue as long as there is one, and if the idle server’s queue length is zero, it is scheduled to process a task from the longest queue in the system. The priority algorithm is proved to be both throughput and heavy-traffic delay optimal. However, its extension to more than two levels of data locality is not even throughput optimal, let alone heavy-traffic delay optimal.

3.2 Balanced-PANDAS algorithm

The Balanced-PANDAS algorithm can be used for a system with multiple levels of data locality, but here we propose the algorithm for a data center with a rack structure with three levels of data locality. The queueing structure under using this algorithm is to have three queues per server, one queue for storing local tasks to the server, another queue for storing rack-local tasks to the server, and a third queue for storing remote tasks to the server. Hence, server has a tuple of three queues denoted by , where they refer to the queues storing local, rack-local, and remote tasks respectively. The corresponding queue lengths at time are denoted by . The workload on server at time slot is defined as follows:

An incoming task of type is routed to the corresponding queue of the server with the minimum weighted workload, where ties are broken randomly, in the set below:

An idle server at time slot is scheduled to process a local task from if ; otherwise, it is scheduled to process a rack-local task from if ; otherwise, it is scheduled to process a remote task from if ; otherwise, it remains idle until a task joins one of its three queues. The Balanced-PANDAS algorithm is throughput optimal. It is also heavy-traffic delay optimal for a system with a rack structure of three levels of data locality if , which means that the rack-local service is faster than the remote service in a specific manner.

3.3 MaxWeight based algorithm

The MaxWeight algorithm is proposed by Stolyar et al. [2004] and a modification of it called JSQ-MaxWeight algorithm is proposed by Wang et al. [2016], which is described below. Consider one queue per server, i.e. server has a single queue called , where its queue length at time slot is denoted by . The routing policy is as the Priority algorithm, i.e. an incoming task of type is routed to the queue of the shortest length in . This routing policy is called join-the-shortest-queue (JSQ). An idle server at time slot on the other hand is scheduled to process a task from a queue with the maximum weighted queue length, where ties are broken at random, in the set below:

The JSQ-MaxWeight algorithm is throughput optimal, but it is not heavy-traffic delay optimal.

4 Robustness Comparison of Scheduling Algorithms

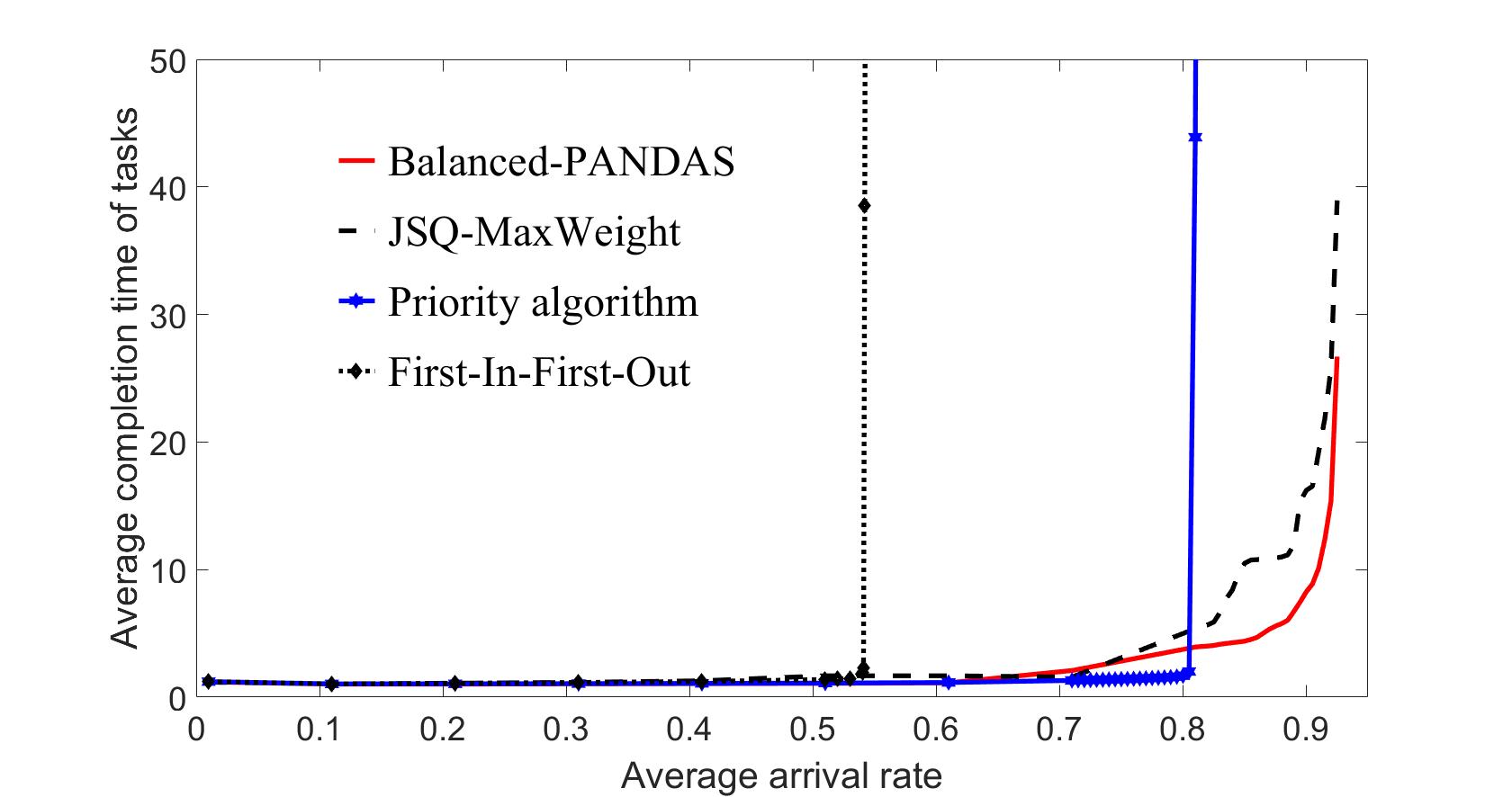

In this section, we present the results on our extensive simulations on robustness of scheduling algorithms presented in section 3. To this end, we run the algorithms with parameters that have error to study which algorithm can tolerate errors better than others. More specifically, we use incorrect and in the algorithms for calculating weighted workloads or weighted queue lengths and observe the average task completion time under these scenarios. The arrival process is a Poisson process and the processing time has an exponential distribution. We have also tested the algorithms for processing times with heavy-tailed distributions and observed similar results. We make these parameters and off their real value, either greater than the real value or smaller than the real value, and evaluate algorithms under these cases. The traffic load is assumed the same under all algorithms so that the comparison makes sense. We further compare all the three algorithms mentioned in section 3 with the Hadoop’s default scheduler which is First-In-First-Out (FIFO). Figure 1 shows the comparison between the four algorithms when precise value of parameters are known by the central scheduler. As we see, Balanced-PANDAS algorithm has the lowest average task completion time at high loads. A closer look of high loads is presented in figure 2, where Balanced-PANDAS obviously outperform JSQ-MaxWeight in terms of average task completion time.

The performance of the four algorithms are compared to each other when the parameters are lower than their real values by certain percentages, where the results are shown in figure 3. As is seen, Balanced-PANDAS has best performance among all algorithms by changing the parameters’ error from 5% to 30%. In fact, figure 4 shows that the Balanced-PANDAS has the least sensitivity against change of parameters while JSQ-MaxWeight’s performance varies notably by the increase of error in parameter estimations.

Comparison of the algorithms when the parameters are off for some percentages, but higher than their real values are given in figure 5. It is again observed that the Balanced-PANDAS algorithm has consistent better performance than the JSQ-MaxWeight algorithm. The sensitivity comparison of the Balanced-PANDAS and JSQ-MaxWeight algorithms in this case is presented in figure 6.

5 Conclusion and Future Work

In this work, we did a literature review on both classical and state-of-the-art scheduling algorithms for the affinity scheduling problem. Data center load balancing is a special case of the affinity scheduling problem. Considering the rack structure of data centers, there are three levels of data locality. The priority algorithm that is heavy-traffic delay optimal is not even throughput optimal for three levels of data locality. The Balanced-PANDAS algorithm is the state-of-the-art in heavy-traffic delay optimality. We investigated the robustness of Balanced-PANDAS and JSQ-MaxWeight algorithms with respect to errors in parameter estimation. We observe that Balanced-PANDAS keeps its better performance even in the absence of precise parameter values versus JSQ-MaxWeight. Note that the JSQ-MaxWeight algorithm is also robust under parameter estimation errors, but it is more sensitive than Balanced-PANDAS, specially at high loads close to the boundary of the capacity region. For future work, one can use machine learning tools to estimate the system parameters and make them more precise in the meanwhile that the load balancing algorithm is working with the estimated parameters. The scheduling algorithms presented in this work can also be applied to a vast number of applications including but not limited to healthcare and super market models [Winkler, 1987, Clower and Leijonhufvud, 1975, Eisenhauer, 2001, Hosseini et al., 2017], web search engines [Schwartz, 1998, Salehi et al., 2018, Broder, 2002, Krishna and Rani, 2018, Xie et al., 2018], electric vehicle charging [Alinia et al., 2018, Gan et al., 2013, Wang et al., 2005, Deilami et al., 2011, Chehardeh and Hatziadoniu, 2018, Almalki et al., 2015, Chehardeh et al., 2016, Saadatmand et al., 2020a, b] and so on.

References

- Alinia et al. [2018] Bahram Alinia, Mohammad Sadegh Talebi, Mohammad H Hajiesmaili, Ali Yekkehkhany, and Noel Crespi. Competitive online scheduling algorithms with applications in deadline-constrained ev charging. In 2018 IEEE/ACM 26th International Symposium on Quality of Service (IWQoS), pages 1–10. IEEE, 2018.

- Almalki et al. [2015] Mishari Metab Almalki, Maziar Isapour Chehardeh, and Constantine J Hatziadoniu. Capacitor bank switching transient analysis using frequency dependent network equivalents. In North American Power Symposium (NAPS), 2015. IEEE, 2015.

- Bell et al. [2005] Steven Bell, Ruth Williams, et al. Dynamic scheduling of a parallel server system in heavy traffic with complete resource pooling: Asymptotic optimality of a threshold policy. Electronic Journal of Probability, 10:1044–1115, 2005.

- Bell et al. [2001] Steven L Bell, Ruth J Williams, et al. Dynamic scheduling of a system with two parallel servers in heavy traffic with resource pooling: asymptotic optimality of a threshold policy. The Annals of Applied Probability, 11(3):608–649, 2001.

- Broder [2002] Andrei Broder. A taxonomy of web search. In ACM Sigir forum, volume 36, pages 3–10. ACM, 2002.

- Byers et al. [2004] John W Byers, Jeffrey Considine, and Michael Mitzenmacher. Geometric generalizations of the power of two choices. In Proceedings of the sixteenth annual ACM symposium on Parallelism in algorithms and architectures, pages 54–63. ACM, 2004.

- Chehardeh and Hatziadoniu [2018] Maziar Isapour Chehardeh and Constantine J Hatziadoniu. A systematic method for reliability index evaluation of distribution networks in the presence of distributed generators. In 2018 North American Power Symposium (NAPS). IEEE, 2018.

- Chehardeh et al. [2016] Maziar Isapour Chehardeh, Mishari Metab Almalki, and Constantine J Hatziadoniu. Remote feeder transfer between out-of-phase sources using sts. In Power and Energy Conference at Illinois (PECI), 2016 IEEE. IEEE, 2016.

- Clower and Leijonhufvud [1975] Robert Clower and Axel Leijonhufvud. The coordination of economic activities: a keynesian perspective. The American Economic Review, 65(2):182–188, 1975.

- Cooper et al. [2014] Colin Cooper, Robert Elsässer, and Tomasz Radzik. The power of two choices in distributed voting. In International Colloquium on Automata, Languages, and Programming, pages 435–446. Springer, 2014.

- Daghighi [2019] Amirali Daghighi. Application of an artificial neural network as a third-party database auditing system. 2019.

- Daghighi and Kavousi [2017] Amirali Daghighi and Mohammadamir Kavousi. Scheduling for data centers with multi-level data locality. In Electrical Engineering (ICEE), 2017 Iranian Conference on, pages 927–936. IEEE, 2017.

- Dahlgaard et al. [2016] Søren Dahlgaard, Mathias Bæk Tejs Knudsen, Eva Rotenberg, and Mikkel Thorup. The power of two choices with simple tabulation. In Proceedings of the twenty-seventh annual ACM-SIAM symposium on Discrete algorithms, pages 1631–1642. SIAM, 2016.

- Dean and Ghemawat [2008] Jeffrey Dean and Sanjay Ghemawat. Mapreduce: simplified data processing on large clusters. Communications of the ACM, 51(1):107–113, 2008.

- Deilami et al. [2011] Sara Deilami, Amir S Masoum, Paul S Moses, and Mohammad AS Masoum. Real-time coordination of plug-in electric vehicle charging in smart grids to minimize power losses and improve voltage profile. IEEE Transactions on Smart Grid, 2(3):456–467, 2011.

- Doerr et al. [2011] Benjamin Doerr, Leslie Ann Goldberg, Lorenz Minder, Thomas Sauerwald, and Christian Scheideler. Stabilizing consensus with the power of two choices. In Proceedings of the twenty-third annual ACM symposium on Parallelism in algorithms and architectures, pages 149–158. ACM, 2011.

- Eisenhauer [2001] Elizabeth Eisenhauer. In poor health: Supermarket redlining and urban nutrition. GeoJournal, 53(2):125–133, 2001.

- Gan et al. [2013] Lingwen Gan, Ufuk Topcu, and Steven H Low. Optimal decentralized protocol for electric vehicle charging. IEEE Transactions on Power Systems, 28(2):940–951, 2013.

- Gardner et al. [2017] Kristen Gardner, Mor Harchol-Balter, Alan Scheller-Wolf, Mark Velednitsky, and Samuel Zbarsky. Redundancy-d: The power of d choices for redundancy. Operations Research, 65(4):1078–1094, 2017.

- Gast [2015] Nicolas Gast. The power of two choices on graphs: the pair-approximation is accurate? ACM SIGMETRICS Performance Evaluation Review, 43(2):69–71, 2015.

- Harrison [1998] J Michael Harrison. Heavy traffic analysis of a system with parallel servers: asymptotic optimality of discrete-review policies. Annals of applied probability, pages 822–848, 1998.

- Harrison and López [1999] J Michael Harrison and Marcel J López. Heavy traffic resource pooling in parallel-server systems. Queueing systems, 33(4):339–368, 1999.

- He et al. [2011] Chen He, Ying Lu, and David Swanson. Matchmaking: A new mapreduce scheduling technique. In Cloud Computing Technology and Science (CloudCom), 2011 IEEE Third International Conference on, pages 40–47. IEEE, 2011.

- Hosseini et al. [2017] Mohammad Hosseini, Yu Jiang, Ali Yekkehkhany, Richard R Berlin, and Lui Sha. A mobile geo-communication dataset for physiology-aware dash in rural ambulance transport. In Proceedings of the 8th ACM on Multimedia Systems Conference. ACM, 2017.

- Isard et al. [2007] Michael Isard, Mihai Budiu, Yuan Yu, Andrew Birrell, and Dennis Fetterly. Dryad: distributed data-parallel programs from sequential building blocks. In ACM SIGOPS operating systems review, volume 41, pages 59–72. ACM, 2007.

- Isard et al. [2009] Michael Isard, Vijayan Prabhakaran, Jon Currey, Udi Wieder, Kunal Talwar, and Andrew Goldberg. Quincy: fair scheduling for distributed computing clusters. In Proceedings of the ACM SIGOPS 22nd symposium on Operating systems principles, pages 261–276. ACM, 2009.

- Jin et al. [2011] Jiahui Jin, Junzhou Luo, Aibo Song, Fang Dong, and Runqun Xiong. Bar: An efficient data locality driven task scheduling algorithm for cloud computing. In Proceedings of the 2011 11th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, pages 295–304. IEEE Computer Society, 2011.

- Kavousi [2017] Mohammadamir Kavousi. Affinity scheduling and the applications on data center scheduling with data locality. arXiv preprint arXiv:1705.03125, 2017.

- Kreimer [2002] Joseph Kreimer. Real-time system with homogeneous servers and nonidentical channels in steady-state. Computers & Operations Research, 29(11):1465–1473, 2002.

- Krishna and Rani [2018] K Hari Krishna and Kosuru Anusha Rani. Reducing the energy consumption energy-efficient query processing node in web search engines. 2018.

- Livny and Melman [1982] Miron Livny and Myron Melman. Load balancing in homogeneous broadcast distributed systems. In ACM SIGMETRICS Performance Evaluation Review, volume 11, pages 47–55. ACM, 1982.

- Lowery et al. [2008] James Craig Lowery, Mark Andrew Collins, and Brent Schroeder. Systems and methods for provisioning homogeneous servers, May 22 2008. US Patent App. 11/562,921.

- Luczak et al. [2005] Malwina J Luczak, Colin McDiarmid, et al. On the power of two choices: balls and bins in continuous time. The Annals of Applied Probability, 15(3):1733–1764, 2005.

- Lumetta and Mitzenmacher [2007] Steve Lumetta and Michael Mitzenmacher. Using the power of two choices to improve bloom filters. Internet Mathematics, 4(1):17–33, 2007.

- Mandelbaum and Stolyar [2004] Avishai Mandelbaum and Alexander L Stolyar. Scheduling flexible servers with convex delay costs: Heavy-traffic optimality of the generalized c-rule. Operations Research, 52(6):836–855, 2004.

- Meyn [2009] Sean Meyn. Stability and asymptotic optimality of generalized maxweight policies. SIAM Journal on control and optimization, 47(6):3259–3294, 2009.

- Mitzenmacher [2001] Michael Mitzenmacher. The power of two choices in randomized load balancing. IEEE Transactions on Parallel and Distributed Systems, 12(10):1094–1104, 2001.

- Moaddeli et al. [2019] Amir Moaddeli, Iman Nabati Ahmadi, and Negin Abhar. The power of d choices in scheduling for data centers with heterogeneous servers. arXiv preprint arXiv:1904.00447, 2019.

- Musavi [2019] Negin Musavi. A game theoretical framework for the evaluation of unmanned aircraft systems airspace integration concepts. arXiv preprint arXiv:1904.08477, 2019.

- Musavi et al. [2016a] Negin Musavi, Deniz Onural, Kerem Gunes, and Yildiray Yildiz. Unmanned aircraft systems airspace integration: A game theoretical framework for concept evaluations. Journal of Guidance, Control, and Dynamics, pages 96–109, 2016a.

- Musavi et al. [2016b] Negin Musavi, Kaan Bulut Tekelioglu, Yildiray Yildiz, Kerem Gunes, and Deniz Onural. A game theoretical modeling and simulation framework for the integration of unmanned aircraft systems in to the national airspace. AIAA Infotech@ Aerospace, pages 6–19, 2016b.

- Polo et al. [2011] Jorda Polo, Claris Castillo, David Carrera, Yolanda Becerra, Ian Whalley, Malgorzata Steinder, Jordi Torres, and Eduard Ayguadé. Resource-aware adaptive scheduling for mapreduce clusters. In ACM/IFIP/USENIX International Conference on Distributed Systems Platforms and Open Distributed Processing, pages 187–207. Springer, 2011.

- Richa et al. [2001] Andrea W Richa, M Mitzenmacher, and R Sitaraman. The power of two random choices: A survey of techniques and results. Combinatorial Optimization, 9:255–304, 2001.

- Saadatmand et al. [2019a] Sepehr Saadatmand, Mohammad Saleh Sanjarinia, Pourya Shamsi, and Mehdi Ferdowsi. Dual heuristic dynamic programing control of grid-connected synchronverters. North American Power Symposium (NAPS), 2019, pages 1–6, 2019a.

- Saadatmand et al. [2019b] Sepehr Saadatmand, Mohammad Saleh Sanjarinia, Pourya Shamsi, Mehdi Ferdowsi, and Donald C Wunsch. Heuristic dynamic programming for adaptive virtual synchronous generators. North American Power Symposium (NAPS), 2019, pages 1–6, 2019b.

- Saadatmand et al. [2020a] Sepehr Saadatmand, Sima Azizi, Mohammadamir Kavousi, and Donald Wunsch. Autonomous control of a line follower robot using a q-learning controller. In 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), pages 0556–0561. IEEE, 2020a.

- Saadatmand et al. [2020b] Sepehr Saadatmand, Mohammadamir Kavousi, and Sima Azizi. The voltage regulation of boost converters using dual heuristic programming. In 2020 10th Annual Computing and Communication Workshop and Conference (CCWC), pages 0531–0536. IEEE, 2020b.

- Sadiq and De Veciana [2009] Bilal Sadiq and Gustavo De Veciana. Throughput optimality of delay-driven maxweight scheduler for a wireless system with flow dynamics. In Communication, Control, and Computing, 2009. Allerton 2009. 47th Annual Allerton Conference on, pages 1097–1102. IEEE, 2009.

- Salehi [2017] Mehdi Salehi. Optimal physiology-aware scheduling of clinical states in rural ambulance transport. In 2017 International Conference on Inventive Computing and Informatics (ICICI), pages 247–252. IEEE, 2017.

- Salehi et al. [2018] Sara Salehi, Jia Tina Du, and Helen Ashman. Use of web search engines and personalisation in information searching for educational purposes. Information Research: An International Electronic Journal, 23(2):n2, 2018.

- Schwartz [1998] Candy Schwartz. Web search engines. Journal of the American Society for Information Science, 49(11):973–982, 1998.

- Singh [1970] Vijendra P Singh. Two-server markovian queues with balking: heterogeneous vs. homogeneous servers. Operations Research, 18(1):145–159, 1970.

- Stolyar et al. [2004] Alexander L Stolyar et al. Maxweight scheduling in a generalized switch: State space collapse and workload minimization in heavy traffic. The Annals of Applied Probability, 14(1):1–53, 2004.

- van de Ven et al. [2009] Peter van de Ven, Sem Borst, and Seva Shneer. Instability of maxweight scheduling algorithms. In INFOCOM 2009, IEEE, pages 1701–1709. IEEE, 2009.

- Van Mieghem [1995] Jan A Van Mieghem. Dynamic scheduling with convex delay costs: The generalized c— mu rule. The Annals of Applied Probability, pages 809–833, 1995.

- Wang et al. [2005] Chwei-Sen Wang, Oskar H Stielau, and Grant A Covic. Design considerations for a contactless electric vehicle battery charger. IEEE Transactions on industrial electronics, 52(5):1308–1314, 2005.

- Wang et al. [2013] Weina Wang, Kai Zhu, Lei Ying, Jian Tan, and Li Zhang. A throughput optimal algorithm for map task scheduling in mapreduce with data locality. ACM SIGMETRICS Performance Evaluation Review, 40(4):33–42, 2013.

- Wang et al. [2016] Weina Wang, Kai Zhu, Lei Ying, Jian Tan, and Li Zhang. Maptask scheduling in mapreduce with data locality: Throughput and heavy-traffic optimality. IEEE/ACM Transactions on Networking (TON), 24(1):190–203, 2016.

- White [2010] Tom White. Hadoop: The definitive guide, yahoo, 2010.

- White [2012] Tom White. Hadoop: The definitive guide. ” O’Reilly Media, Inc.”, 2012.

- Winkler [1987] Fedelma Winkler. Consumerism in health care: beyond the supermarket model. Policy & Politics, 15(1):1–8, 1987.

- Xie and Lu [2015] Qiaomin Xie and Yi Lu. Priority algorithm for near-data scheduling: Throughput and heavy-traffic optimality. In Computer Communications (INFOCOM), 2015 IEEE Conference on, pages 963–972. IEEE, 2015.

- Xie et al. [2016] Qiaomin Xie, Ali Yekkehkhany, and Yi Lu. Scheduling with multi-level data locality: Throughput and heavy-traffic optimality. In INFOCOM 2016-The 35th Annual IEEE International Conference on Computer Communications, IEEE. IEEE, 2016.

- Xie et al. [2018] Xiaohui Xie, Yiqun Liu, Maarten de Rijke, Jiyin He, Min Zhang, and Shaoping Ma. Why people search for images using web search engines. In Proceedings of the Eleventh ACM International Conference on Web Search and Data Mining, pages 655–663. ACM, 2018.

- Yekkehkhany [2017] Ali Yekkehkhany. Near data scheduling for data centers with multi levels of data locality. (Dissertation, University of Illinois at Urbana-Champaign), 2017.

- Yekkehkhany and Nagi [2020] Ali Yekkehkhany and Rakesh Nagi. Blind gb-pandas: A blind throughput-optimal load balancing algorithm for affinity scheduling. IEEE/ACM Transactions on Networking, 2020.

- Yekkehkhany et al. [2018] Ali Yekkehkhany, Avesta Hojjati, and Mohammad H Hajiesmaili. Gb-pandas:: Throughput and heavy-traffic optimality analysis for affinity scheduling. ACM SIGMETRICS Performance Evaluation Review, 45(2), 2018.

- Yekkehkhany et al. [2019] Ali Yekkehkhany, Ebrahim Arian, Mohammad Hajiesmaili, and Rakesh Nagi. Risk-averse explore-then-commit algorithms for finite-time bandits. arXiv preprint arXiv:1904.13387, 2019.

- Yekkehkhany et al. [2020] Ali Yekkehkhany, Ebrahim Arian, Rakesh Nagi, and Ilan Shomorony. A cost-based analysis for risk-averse explore-then commit finite-time bandits. 2020.

- Yildiz et al. [2013] Yildiray Yildiz, Adrian Agogino, and Guillaume Brat. Predicting pilot behavior in medium scale scenarios using game theory and reinforcement learning. In AIAA Modeling and Simulation Technologies (MST) Conference, page 4908, 2013.

- Zaharia et al. [2008] Matei Zaharia, Andy Konwinski, Anthony D Joseph, Randy H Katz, and Ion Stoica. Improving mapreduce performance in heterogeneous environments. In Osdi, volume 8, page 7, 2008.

- Zaharia et al. [2010] Matei Zaharia, Dhruba Borthakur, Joydeep Sen Sarma, Khaled Elmeleegy, Scott Shenker, and Ion Stoica. Delay scheduling: A simple technique for achieving locality and fairness in cluster scheduling. In Proceedings of the 5th European Conference on Computer Systems, pages 265–278. ACM, 2010.