
Comparative Analysis of Change Blindness in Virtual Reality and Augmented Reality Environments

Abstract

Change blindness is a phenomenon in which an individual fails to notice alterations in a visual scene when the change occurs during a brief interruption or distraction. Understanding this phenomenon is particularly important for technologies that rely on visual stimuli, such as Virtual Reality (VR) and Augmented Reality (AR). Previous research has primarily focused on 2D environments or conducted limited controlled experiments in 3D immersive environments. In this paper, we design and conduct two formal user experiments to investigate the effects of different visual attention-disrupting conditions (Flickering and Head-Turning) and object alteration conditions (Removal, Color Alteration, and Size Alteration) on change detection in VR and AR environments. Our results reveal that participants detected changes more quickly and had a higher detection rate with Flickering compared to Head-Turning. Furthermore, they spent less time detecting changes when an object disappeared compared to changes in color or size. Additionally, we provide a comparison of the results between VR and AR environments.

Human-centered computing → Visualization → Visualization techniques → Treemaps; Human-centered computing → Visualization → Visualization design and evaluation methods

1 Introduction

A visual stimulus, a virtual environment created by computer graphics, is the primary component of immersive technologies [8]. It delivers visual information to provide an immersive experience to Virtual Reality (VR) and Augmented Reality (AR) users. Users in these immersive environments are subject to the same limitations of the human visual system that they encounter in the real world. Change blindness, for example, refers to the failure of individuals to notice significant changes in an environment [23]. These changes include object displacement or removal from the Field of View (FoV), alterations in the appearances of observers, or complete scene transformations. Change blindness can even occur when the changes are directly in front of individuals [28, 32].

Change blindness presents substantial challenges, particularly in time-sensitive and irreversible scenarios like vehicular navigation, criminal investigations, or military operations [29, 42, 9, 20, 11, 10]. While earlier studies show that training can temporarily improve change detection, such as pop-out detection [1], its effectiveness is limited to specific domains, and errors can still occur [13]. Therefore, it is crucial to adopt a systematic approach to understand change blindness itself and to improve visual content.

The emergence of immersive technologies, especially Head-Mounted Displays (HMDs), has opened a new era of visual perception research. It enables nearly unlimited alteration and control of variables in immersive environments. Virtual environments facilitate numerous alterations that would be unattainable in the real world, allowing for the investigation of change blindness under various conditions. Object alterations such as modifying object orientation, removing objects, or changing a room structure may be unfeasible in a real-world setting, but they can be readily applied within VR environments [35, 7, 38]. However, VR/AR HMDs have a limited FoV [2, 22]. The FoV refers to the extent of the visual environment that a user can perceive through the HMD. This limited FoV could restrict the user's ability to perceive changes in their surroundings if the changes occur outside the FoV. Moreover, the quantitative relationship between the change blindness effects and the object alteration conditions or attention-disrupting conditions remains unclear.

To address these research gaps, we establish the following research questions (RQs):

- RQ1: How do the limited FoVs influence change detection in immersive environments, and how does the influence differ between VR and AR?

- RQ2: How do different types of object alteration methods, such as color change, size modification, or displacement, affect change detection in both VR and AR environments?

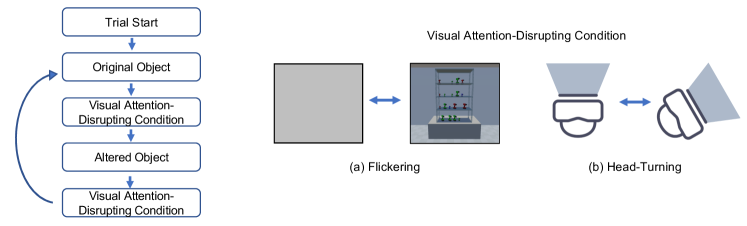

To tackle the research questions, we designed and conducted two user studies. In Study 1, we evaluated different types of visual attention-disrupting methods (Flickering and Head-Turning) and object manipulation methods (Object Removal, Color Alteration, and Size Alteration) in VR. Study 2 evaluated the same conditions with an additional Head-Turning angle in AR.

Our results show that participants identified changes faster when an object was altered within the FoV than when it was altered outside of the FoV. Change detection was more challenging when object attributes, such as color or size, were altered than when the object was entirely removed. Additionally, the results show differences in change detection between AR and VR environments, highlighting the distinct effects of different immersive technologies.

2 Related Work

2.1 Change Blindness

Change blindness is a visual perception phenomenon that occurs when a stimulus changes without being noticed by observers. Earlier psychology and cognitive studies reported that change blindness is caused by the limitations of visual short-term memory [5, 40, 3, 6, 21]. According to coherence theory and the triadic architecture [26, 24], human visual attention involves three stages: early processing, focused attention, and release of attention. The early processing stage is low-level processing that takes place in parallel across the entire visual environment. It generates proto-objects, which have weak spatial and temporal coherence. In the focused attention stage, the generated proto-objects are selected and combined into spatially and temporally coherent individual object images. These images enable humans to understand the continuity of objects across interruptions. Finally, they are dissolved when attention is released. Detecting changes in visual stimuli is highly relevant to the focused attention stage.

Change blindness occurs when the focused attention stage is interrupted [33, 16, 25, 27]. Earlier research investigated what could divert focused attention and result in change blindness. Flickering is a widely used attention-disrupting method in change blindness studies in 2D display settings [28]. It distracts observers by repeatedly displaying a blank image between the original and altered images. Changing an object while the entire scene is moving [36] and asking observers to perform complex tasks [31, 39] can also confuse observers about where to focus. Moreover, if an object is changed outside of the observers' sight, the observers may not have a chance to attend to the change. Some research has investigated change blindness in VR; the details are reported in the next subsection.

2.2 Change Blindness in Virtual Environments

Previous research reported that change blindness can also occur in virtual environments. Steinicke et al. [35] investigated change blindness in various stereoscopic display systems, including an Active Stereoscopic Workbench, a Passive Back-Projection Wall, and a VR HMD, using flickering as a distraction method. They reported that change blindness occurred in all types of display systems and that the participants' response times to find changes differed depending on the display. Triesch et al. [39] investigated change blindness in a virtual environment by asking participants to move virtual objects or change their sizes. Their findings showed that as task complexity increases, so does change blindness.

Change blindness in VR has also been investigated as a method to render a larger virtual environment in a limited physical space [38]. Suma et al. [37] used change blindness to make participants perceive a virtual space as larger than the actual tracking area. The authors changed the position of a door while the participant was exploring a room so that the participant exited in a different direction than the one from which he or she entered. Consequently, while the participants visited many different rooms, they only walked in circles within a limited tracking space and perceived the virtual area as larger than the real one. Surprisingly, the participants failed to detect these changes, resulting in an illusion in which they perceived themselves to be in a vast virtual environment.

Factors that can either amplify or diminish change blindness remain underexplored. The majority of change blindness research has only used manipulation methods that add or remove objects in a scene. This is likely due to the limitations of the study environments. For example, changing the color or size of an object, such as a tree, car, building, or animal, in a short time is not feasible in the real world. In VR, however, change blindness can be explored beyond such limitations. Martin et al. [18] recently investigated the effect of object shapes, colors, and locations on change blindness. They experimented with factors that can affect change blindness (the altered object's complexity, the distance between the object and the observer, the alteration type, and the alteration timing), and they found that the distance between the object and the observer and the complexity of the altered object affected the change detection ratio. However, they could not find an effect of alteration type, which had been found in 2D change blindness studies [28, 17]. In our work, we investigate which visual attention-disrupting and object alteration conditions reinforce or weaken the change blindness phenomenon in VR and AR environments, using identically shaped objects arranged in a grid-like layout on shelves to exclude other uncontrolled variables.

3 Experiment Design

The primary objective of our research is to investigate the impact of limited FoV of VR/AR HMDs and object alteration methods on change blindness in VR/AR environments. To achieve this goal, we conduct two controlled experiments.

In the first experiment, we evaluate two visual attention-disrupting conditions (Flickering and 60° Head-Turning) and three object alteration conditions (Object Removal, Color Alteration, and Size Alteration) in VR. The second experiment investigates the impact of the same conditions with an additional visual attention-disrupting condition (30° Head-Turning) in an AR environment.

Previous change blindness studies in VR environments reported unclear results because the manipulated objects had different shapes and positions and the background images were not uniform [18]. Therefore, we design an experiment scene to investigate the change blindness phenomenon with identically shaped objects (hand drills) and an unobtrusive background design (a monochromatic gray wall).

3.1 Experiment Environment

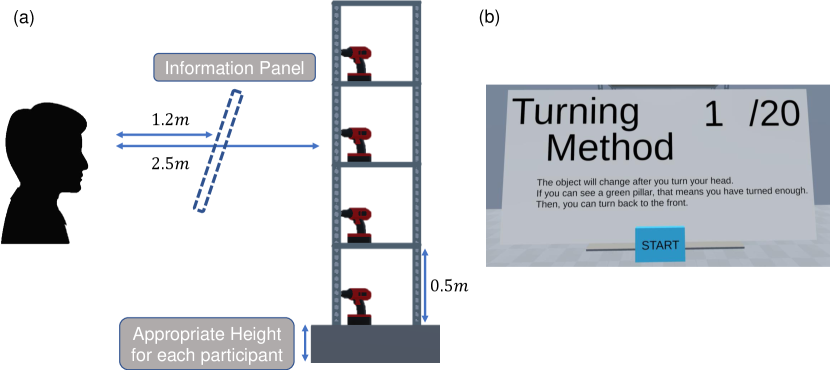

The virtual scene is a 10 m × 10 m room with a shelf (Figure 1). In VR, the scene has a monochromatic gray background, while there is no background in AR. The shelf has four layers, each containing multiple drill objects that can be altered. These drills can be in three different sizes (small: 3 cm (width) × 6 cm (height) × 10 cm (depth), medium: 5 cm × 10 cm × 15 cm, or large: 7 cm × 14 cm × 20 cm) and three colors (red, green, or blue). During a trial, participants can select the one drill object that they believe has been altered. To prevent participants from anticipating the changes, the position, size, and color of the drills are randomized. The altered object's new color or size is also randomized for each trial to make the alteration unpredictable for the participants. For the Head-Turning condition, two gray columns are located on the left and right sides of the shelf and change their color to green to notify participants that the object has been altered when they turn their head.

The distance between a participant and the shelf is 2.5 m, and the shelf is positioned so that the center of the objects on the 3rd shelf matches the participant's eye level (Figure 4).

An information panel is located between the participant and the shelf to show the visual attention-disrupting condition and the remaining number of tasks. The participant starts a trial by clicking a button located on the bottom of the panel.

3.2 Visual Attention-Disrupting Conditions

To evaluate the impact of the limited FoV of VR/AR HMDs on change blindness, we use two visual attention-disrupting conditions:

- Flickering: This is a traditional method used to investigate change blindness [28, 33]. In this condition, a monochromatic gray barrier periodically obstructs the observer's view, and an object is altered while the observer's sight is blocked. The barrier is designed to block the observer's sight for 250 ms, and this blocking period repeats every 500 ms. This flickering time is chosen with the task difficulty and the range of human eye blinking in mind, which typically falls between 100 ms and 400 ms [4, 18].

- Head-Turning: In this condition, an observer is required to voluntarily rotate his or her head to the left or right until the shelf is outside the FoV, and an object is altered while the shelf is out of the FoV. In the VR environment, a Head-Turning angle of 60° is used to ensure that the shelf is completely outside of the FoV. Because the FoV is narrower in the AR environment, a reduced Head-Turning angle of 30° is also sufficient to ensure that the alteration occurs outside of the FoV; a Head-Turning angle of 60° is additionally used in AR to enable a direct comparison between the VR and AR conditions. To make sure that the alteration occurs outside the FoV, the turning angle is measured as the horizontal angle of the HMD, and the observer is required to stand still in one location. To ensure that the observer is aware that he or she has turned his or her head sufficiently, the virtual scene includes columns on the left and right sides that change color from red to green when the turning angle exceeds the set angle (Figure 1). This visual feedback serves as a confirmation that the required Head-Turning motion has been performed adequately.
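The timing and angle rules of the two conditions can be sketched as two small predicates. This is a minimal illustration, not the study's Unity implementation: the function names are hypothetical, the cycle is assumed to start with the visible phase, and the yaw is assumed to be measured relative to the direction facing the shelf.

```python
FLICKER_PERIOD_MS = 500   # one full cycle: visible phase + blocked phase (from the text)
BLOCK_DURATION_MS = 250   # the barrier hides the shelf for this long in each cycle


def barrier_blocks_view(elapsed_ms: float) -> bool:
    """Return True while the gray barrier hides the shelf.

    Assumption: each 500 ms cycle starts with 250 ms of visibility,
    followed by 250 ms of blocking.
    """
    phase = elapsed_ms % FLICKER_PERIOD_MS
    return phase >= FLICKER_PERIOD_MS - BLOCK_DURATION_MS


def head_turn_reached(yaw_deg: float, threshold_deg: float = 60.0) -> bool:
    """Return True once the horizontal HMD angle exceeds the set angle.

    Assumption: yaw_deg is 0 when facing the shelf; turning left or right
    both count, so the absolute value is used.
    """
    return abs(yaw_deg) > threshold_deg
```

Under these assumptions, an object swap would be scheduled only while `barrier_blocks_view` or `head_turn_reached` is true, so the alteration itself is never directly visible.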

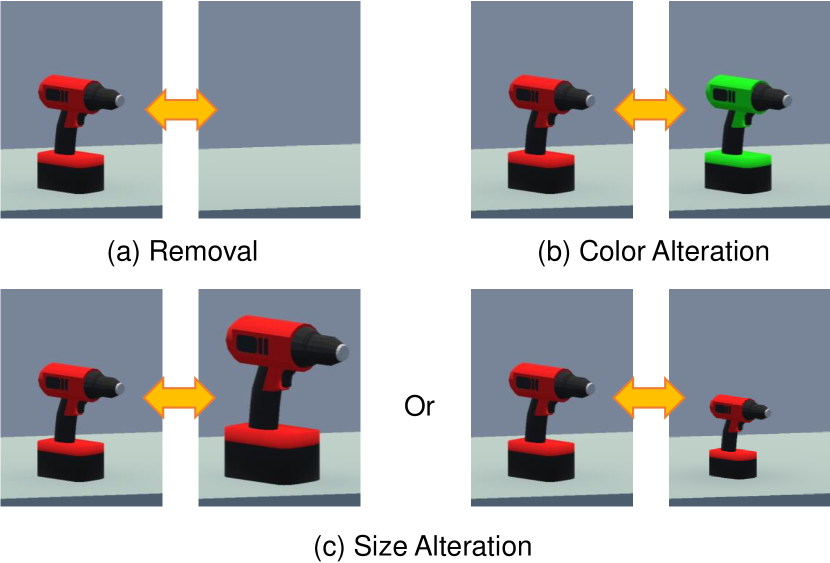

3.3 Object Alteration Conditions

Object alteration occurs while the participant's visual attention is disrupted by one of the two visual attention-disrupting conditions. One of the drills on the shelf is changed by one of the following alteration methods:

- Object Removal: The target object temporarily vanishes from the scene and then reappears.

- Color Alteration: This condition changes the target object's color to a different one (i.e., red, blue, or green) from its original color.

- Size Alteration: This condition involves altering the target object's size, either transitioning between small and medium or medium and large.
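The three alteration methods can be expressed as a single function over a drill's state. The sketch below is illustrative only: the `Drill` type, the `alter` function, and the method names are hypothetical, and size changes are assumed to move one step between adjacent sizes, as the description above suggests.

```python
import random
from dataclasses import dataclass, replace

COLORS = ("red", "green", "blue")
SIZES = ("small", "medium", "large")


@dataclass(frozen=True)
class Drill:
    """Hypothetical state of one drill on the shelf."""
    color: str
    size: str
    visible: bool = True


def alter(drill: Drill, method: str, rng: random.Random) -> Drill:
    """Return the altered version of a drill for one alteration method."""
    if method == "removal":
        # The object vanishes in the altered state of the scene.
        return replace(drill, visible=False)
    if method == "color":
        # Pick any color other than the original one.
        others = [c for c in COLORS if c != drill.color]
        return replace(drill, color=rng.choice(others))
    if method == "size":
        # Assumption: move one step to an adjacent size
        # (small <-> medium or medium <-> large).
        i = SIZES.index(drill.size)
        neighbours = [SIZES[j] for j in (i - 1, i + 1) if 0 <= j < len(SIZES)]
        return replace(drill, size=rng.choice(neighbours))
    raise ValueError(f"unknown alteration method: {method}")
```

Keeping `Drill` immutable means the original and altered states can both be held and swapped back and forth during a trial.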

In the VR experiment, each participant is asked to complete a total of 40 tasks ((5 trials each for the Removal and Color Alteration conditions + 10 trials for the Size Alteration condition) × 2 visual attention-disrupting conditions) to find and select an altered object. Similarly, in the AR experiment, each participant is asked to complete a total of 60 tasks ((5 trials each for the Removal and Color Alteration conditions + 10 trials for the Size Alteration condition) × 3 visual attention-disrupting conditions).
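As a quick check of the task counts, the per-participant totals follow directly from the trials per alteration condition and the number of attention-disrupting conditions. The names below are illustrative.

```python
# Trials per object alteration condition within one attention-disrupting condition.
TRIALS_PER_ALTERATION = {"removal": 5, "color": 5, "size": 10}


def total_tasks(n_disrupting_conditions: int) -> int:
    """Total tasks for one participant."""
    return sum(TRIALS_PER_ALTERATION.values()) * n_disrupting_conditions


vr_tasks = total_tasks(2)  # Flickering, 60-degree Head-Turning
ar_tasks = total_tasks(3)  # Flickering, 30- and 60-degree Head-Turning
```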

It is worth noting that we initially conducted the study with two types of Size Alteration conditions: medium to small, and medium to large. Upon analysis, however, both were found to be equivalent. As a result, we merged the two conditions, which doubles the number of trials for the Size Alteration condition.

3.4 Measurements

We employ multiple measures to evaluate the impact of limited FoV and object alteration conditions. These measures offer a comprehensive understanding of participants' performance and their subjective experience throughout the experiments.

Detection Time: It is the duration from the start of a task until the participant identifies and selects the altered object. This measurement assesses the time it takes for the participant to perceive and recognize alteration in each task. A shorter detection time indicates a quicker and more efficient perception and recognition of alteration. On the other hand, a longer detection time suggests a delayed perception and recognition of alteration, indicating a higher presence of change blindness. If a participant fails to detect the altered object within the designated time limit (60 seconds), that case is excluded from the detection time analysis.

Detection Rate: In a trial, a participant's selection may not always be correct. The detection rate assesses the participant's ability to correctly identify the altered objects within the given time limit. It is the ratio of the number of correct selections to the total number of responses, excluding the trials that ended in a timeout. A higher detection rate indicates that the participant could remember the objects accurately without misconceiving them.

Timeout Rate: If a participant fails to detect the altered object within the time limit, we consider this as the occurrence of change blindness. The timeout rate is the ratio of the number of timeouts to the total number of trials for each condition. A higher timeout rate indicates a higher occurrence of change blindness.

Head-Turning Count: This measurement counts how many times a participant turns his or her head during the trials in the Head-Turning condition. In the Flickering condition, the alternation count is proportional to the response time. In the Head-Turning condition, the Head-Turning count can indicate the alternation count.
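The three trial-based measures can be computed from per-trial records as sketched below. The `Trial` record and `summarize` function are hypothetical, and the sketch assumes that the mean detection time is taken over every trial answered within the limit, whether or not the selection was correct; the text does not state this detail.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass
class Trial:
    """Hypothetical record of one trial."""
    response_time_s: Optional[float]  # None if the 60 s limit was reached
    correct: bool = False             # ignored for timed-out trials


def summarize(trials: Sequence[Trial]) -> Dict[str, Optional[float]]:
    """Compute mean detection time, detection rate, and timeout rate."""
    answered = [t for t in trials if t.response_time_s is not None]
    n_timeouts = len(trials) - len(answered)
    if answered:
        # Timed-out trials are excluded from both of these measures.
        mean_time = sum(t.response_time_s for t in answered) / len(answered)
        detection_rate = sum(t.correct for t in answered) / len(answered)
    else:
        mean_time = detection_rate = None
    timeout_rate = n_timeouts / len(trials) if trials else None
    return {
        "mean_detection_time_s": mean_time,
        "detection_rate": detection_rate,
        "timeout_rate": timeout_rate,
    }
```

For example, four trials consisting of two correct selections, one incorrect selection, and one timeout give a detection rate of 2/3 and a timeout rate of 1/4.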

Participants’ preference and feedback: After finishing all the tasks, the participant is asked to complete questionnaires. The questionnaires ask which manipulation method was the hardest or the easiest, which visual attention-disrupting method was the hardest, and what strategy the participant used to detect the change.

3.5 Procedures

Upon arrival, a participant is asked to read and sign an informed consent form according to the IRB protocol (IRB VR: #12970, AR: #13243). The participant then completes a demographic questionnaire. Following that, the participant receives a description of the experiment's purpose and procedures, the visual attention-disrupting conditions, and the object alteration conditions. A training session in an immersive VR or AR environment is then provided. In the training session, the participant is instructed on how to select an object using either a controller with ray casting (VR experiment) or his or her hand with a pinch gesture (AR experiment). During the training session, the participant also becomes familiar with the visual attention-disrupting conditions. The participant is not required to physically move around the scenes during the experiments.

The main study is conducted after the training session. For each visual attention-disrupting condition, the participant is required to complete 20 trials across the object alteration conditions. A new trial begins when the participant selects a start button on the information panel (Figure 4). The information panel shows the current visual attention-disrupting condition and the number of remaining trials. For each trial, 16 to 21 drill objects are randomly generated on the shelf with random positions, colors, and sizes. The participant is asked to find the altered object as quickly and accurately as possible.

After completing all trials with a specific visual attention-disrupting condition, the participant is asked to complete a questionnaire. The questionnaire asks about the experience of the visual attention-disrupting condition, the object alteration conditions, and the strategy for identifying the altered object during the trials. Next, the participant undergoes the same procedure with another visual attention-disrupting condition. After all the trials, the participant is asked to complete a post-questionnaire to compare the difficulty of the visual attention-disrupting methods and to explain the reasons. The order of the visual attention-disrupting and object alteration conditions is fully counterbalanced using a Latin square.
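One common way to derive condition orders from a Latin square is the cyclic construction sketched below. The text does not say which construction was used, so this is an assumption for illustration; the function names and the condition labels are hypothetical.

```python
from typing import List, Sequence


def latin_square(conditions: Sequence[str]) -> List[List[str]]:
    """Build a cyclic Latin square: row r is the list rotated left by r.

    Every condition appears exactly once in each row and each column.
    """
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]


def order_for(participant_index: int, conditions: Sequence[str]) -> List[str]:
    """Assign a participant the row matching his or her index, cycling through rows."""
    square = latin_square(conditions)
    return square[participant_index % len(square)]
```

With three attention-disrupting conditions, participants 0, 1, and 2 would each start with a different condition, and participant 3 would repeat the order of participant 0.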

3.6 Hypothesis

We set the following five hypotheses.

- H1: In both VR and AR, Head-Turning would have a longer detection time, lower detection rate, and higher timeout rate than Flickering because participants will take more time to physically turn their heads and refocus on the scene after head movement.

- H2: In both VR and AR environments, the Removal condition is expected to result in a shorter detection time and higher detection rate compared to the other alteration conditions. This is because an object's spatial information can be perceived non-attentionally, whereas other visual channels cannot, according to the triadic architecture [24].

- H3: In both VR and AR, each visual channel of an object, such as color, size, or shape, is expected to have a different perception difficulty and to produce a different change detection result. This is because visual channels such as size and color have traditionally been regarded as separate in visual perception [41, 34].

- H4: In the AR environment, Head-Turning with a larger angle has a longer detection time, a lower detection rate, and a higher timeout rate than Head-Turning with a smaller angle. We assume the larger turning angle makes refocusing on the scene harder than the smaller angle.

- H5:

3.7 Apparatus

In the VR experiment, the Vive Pro HMD with a wireless adapter and a single controller is utilized. It features a 110° vertical and horizontal FoV, and its resolution is 1440 × 1600 pixels per eye (2880 × 1600 pixels combined). The wireless adapter offers near-zero latency. In the AR experiment, Microsoft HoloLens 2 is used. It features a 43° horizontal and 29° vertical FoV, a resolution of 2048 × 1080 for each eye, and a refresh rate of 75 Hz. According to Sauer et al. [30], however, these manufacturer FoV angles are not consistent with real-world use. The true FoV boundaries of the HMDs are shown in Figure 1. The application for both experiments is built with Unity 2021.3.7f1 and runs on a Windows 10 desktop with an Intel Xeon W-2245 CPU (3.90 GHz), 64 GB RAM, and an Nvidia GeForce RTX 3090 graphics card. The average illuminance of the tracking space is about 250 lux, and the brightness setting on HoloLens 2 is 100%.

4 Results

In this section, we report the user study results. We use a two-way (visual attention-disrupting × object alteration) repeated measures Analysis of Variance (ANOVA) test at a significance level of 5% for the detection time, detection rate, and timeout rate analyses. A one-way repeated measures ANOVA test at the same significance level of 5% is used for the Head-Turning count and qualitative analyses.
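The third value reported alongside each F statistic in the results below (for example, .609 for F(1,21)=32.7) is consistent with partial eta squared. Assuming that is the effect size used, it can be recovered from the F value and its degrees of freedom as F·df_effect / (F·df_effect + df_error). The function name below is illustrative.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Check against two values reported for the VR detection time analysis.
vr_disruption = round(partial_eta_squared(32.7, 1, 21), 3)  # reported as .609
vr_alteration = round(partial_eta_squared(18.7, 2, 42), 3)  # reported as .471
```

Because the reported F values are themselves rounded, a recomputed value can differ from the reported one in the last digit.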

In this experiment, we aim to investigate how the change blindness phenomenon in a VR environment is affected by the object manipulation conditions and the visual attention-disrupting conditions. Previous studies of change blindness in VR environments reported unclear results because the altered objects had different shapes and positions and the background images were not uniform [18]. Therefore, in this experiment, we designed a VR scene to investigate the change blindness phenomenon with identically shaped objects and an unobtrusive background design.

4.1 Experiment 1: VR

4.1.1 Participants

A total of 22 participants (11 males and 11 females) are recruited from the university's participant recruitment system (SONA). Their average age is 19.7, ranging from 18 to 22. All participants have 20/20 (or corrected 20/20) vision, and they do not have impairments in using VR devices. Each participant receives 1 SONA credit in accordance with the university's SONA policy. According to the pre-questionnaire, 18 out of 22 participants have experience using a VR device.

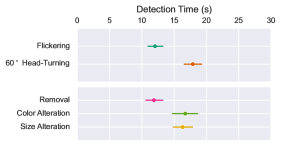

4.1.2 Quantitative Analysis

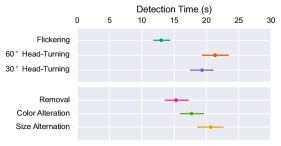

Detection Time: The results with each condition are reported in Figure 5. No interaction effect is found between the visual attention-disrupting conditions and the object alteration conditions (p=.873). We find a main effect of the visual attention-disrupting conditions (F(1,21)=32.7, p<.001, ηp²=.609). Flickering has a faster detection time (M=12.0s, SD=4.90) than Head-Turning (M=17.9s, SD=5.58). In addition, a main effect of the object alteration conditions (F(2,42)=18.7, p<.001, ηp²=.471) is also found. The pairwise comparisons show that Removal (M=11.9s, SD=4.58) has a faster detection time than Color Alteration (M=16.7s, SD=6.56, p<.001) and Size Alteration (M=16.3s, SD=5.48, p<.001).

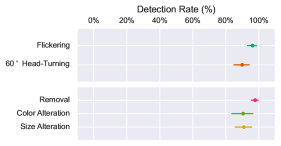

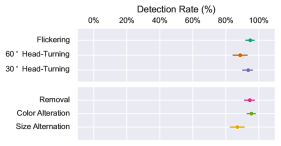

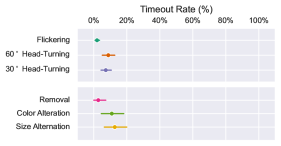

Detection Rate: Figure 6 shows the detection rate results with each condition. A main effect of the object alteration conditions (F(2,42)=5.90, p=.006, ηp²=.219) is found. Removal (M=97.7%, SD=7.65) produced a higher detection rate than Color Alteration (M=90.5%, SD=20.7, p=.017) and Size Alteration (M=91.0%, SD=17.0, p=.001).

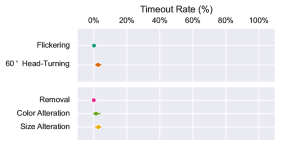

Timeout Rate: The timeout rate results with each condition are reported in Figure 7. These results show an interaction effect between the visual attention-disrupting and the object alteration conditions (F(2,42)=3.22, p=.050, ηp²=.133). In the Head-Turning condition, the participants have a lower timeout rate with the Removal condition (M=0.00%, SD=0.00) than with the Size Alteration condition (M=5.00%, SD=6.57, p=.002). With the Size Alteration condition, the Flickering condition (M=0.50%, SD=2.08) has a lower timeout rate than the Head-Turning condition (p=.005). There is a main effect of the visual attention-disrupting condition (F(1,21)=6.37, p=.020, ηp²=.233): the Flickering condition shows a lower timeout rate (M=0.152%, SD=1.22) than the Head-Turning condition (M=2.58%, SD=6.81). There is another main effect of the object alteration conditions (F(2,42)=4.11, p=.023, ηp²=.164): the Removal condition has a lower timeout rate (M=0.00%, SD=0.00) than the Size Alteration condition (M=2.73%, SD=5.38).

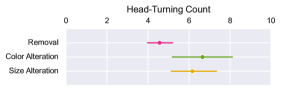

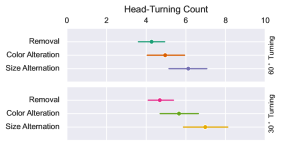

Head-Turning Count: The results are reported in Figure 8. A main effect is found for the object alteration conditions (F(2,42)=8.67, p<.001, ηp²=.292); the participants turned their heads fewer times with Removal (M=4.56, SD=1.47) than with Color Alteration (M=6.66, SD=3.42, p=.003) and Size Alteration (M=6.16, SD=2.49, p<.001).

4.1.3 Qualitative Analysis

We find a significant difference in the subjective difficulty of finding the altered object (F(1,42)=4.776, p=.034, ηp²=.102) between Flickering (M=3.27) and Head-Turning (M=4.27), rated from 1 (easiest) to 7 (hardest). Many participants commented that they could easily remember the objects as images in their heads in the Flickering condition. In the Head-Turning condition, however, they noted that it was not easy to remember the objects and to keep their eyes focused on them. P15 commented, “When I turned my head, it was a lot harder to pay attention to the changes because I would slowly forget the details of the environment.” P22 stated, “Self turning was more difficult. The change was less noticeable and harder to recognize whether that be color or size. With the faster flickering, I was able to quickly spot the change whether that be size or color. I could focus on rows at a time with this method or sometimes the whole picture and notice it. However with self turning, I had to focus on smaller parts of the picture and focus on either size or color change, not both.”

Among the object alteration conditions, the participants rated the Removal condition as the easiest: 16 participants chose it for the Flickering condition, and 14 selected it for the Head-Turning condition. Conversely, the Size Alteration condition was consistently regarded as the most challenging, with 16 participants selecting it under both the Flickering and Head-Turning conditions.

4.2 Experiment 2: AR

4.2.1 Participants

A total of 22 participants (10 males and 12 females) are recruited from SONA. Their average age is 20, ranging from 18 to 24. All participants have 20/20 (or corrected 20/20) vision, and they do not have impairments in using AR devices. Each participant receives 2 SONA credits in accordance with the university's SONA policy. According to the pre-questionnaire, 10 out of 22 participants (45.5%) have experience using an AR device. None of the participants from the VR study took part in the AR study.

4.2.2 Quantitative Analysis

Detection Time: The detection time results with each condition are reported in Figure 9. No significant interaction effect is found.

Two main effects, of the visual attention-disrupting conditions (F(2,42)=18.1, p<.001, ηp²=.463) and of the object alteration conditions (F(2,42)=21.6, p<.001, ηp²=.504), are found. Flickering has a faster detection time (M=13.0s, SD=5.15) than 30° Head-Turning (M=19.3s, SD=7.63, p<.001) and 60° Head-Turning (M=21.3s, SD=8.29, p<.001). Removal has a faster detection time (M=15.3s, SD=7.77) than Color Alteration (M=17.7s, SD=7.38, p=.005) and Size Alteration (M=20.7s, SD=7.86, p<.001). In addition, Color Alteration has a faster detection time than Size Alteration (p=.001).

Detection Rate: The detection rate results are reported in Figure 10. The results only show a main effect of the object alteration conditions (F(2,42)=7.15, p=.002, ηp²=.254). Pairwise comparisons show that Removal (M=94.5%, SD=12.2) has a higher detection rate than Size Alteration (M=87.0%, SD=18.6, p=.011). Color Alteration (M=95.5%, SD=10.6) also has a higher detection rate than Size Alteration (p<.001).

Timeout Rate: Figure 11 presents the timeout rate results with each condition. Two main effects are found, for the visual attention-disrupting conditions (F(2,42)=4.03, p=.025, ηp²=.161) and the object alteration conditions (F(2,42)=9.90, p<.001, ηp²=.320). Flickering (M=1.97%, SD=5.57) has a lower timeout rate than both Head-Turning conditions (30°: M=7.30%, SD=12.7, p=.014; 60°: M=8.79%, SD=15.4, p=.006). We also find that Removal (M=1.52%, SD=6.33) has a significantly lower timeout rate than Color Alteration (M=7.27%, SD=12.9, p=.006) and Size Alteration (M=9.24%, SD=14.8, p<.001).

Head-Turning Count: A main effect is found for the object alteration condition (F(2,42)=12.4, p<.001, ηp²=.371). Removal (M=4.48, SD=1.56, p<.001) and Color Alteration (M=5.31, SD=2.39, p=.002) required fewer head turns than Size Alteration (M=6.55, SD=2.54).

4.2.3 Qualitative Analysis

There is a significant difference in the difficulty of finding the altered objects between the visual attention-disrupting conditions (F(1,42)=5.988, p=.004, ηp²=.160). The participants responded that 60° Head-Turning (M=4.32) and 30° Head-Turning (M=4.09) are more difficult than Flickering (M=2.95). They mentioned that Head-Turning is more difficult because the movement makes it hard for them to focus on one part of the scene. P1 stated, “The flickering method was easier for me because it didn’t require me to think as hard or keep as much stored in my short term memory at a time. I could just take in a few objects at a time, wait to see if they changed at all, then move on with confidence that I didn’t miss the changing object.” P4 also mentioned, “I had to refocus my attention after I turned back on the object I was looking at specifically whereas with flickering I could easily compare the two different shelves while maintaining my focus on the same location.” However, consistent with the scores of the two Head-Turning conditions not being significantly different, the participants did not specifically mention the difference in angle.

Among the object alteration conditions, the participants stated that Removal was the easiest condition. For the Flickering method, 13 participants chose the Removal condition. For both the 60° Head-Turning and 30° Head-Turning conditions, 14 participants chose the Removal condition. Conversely, the Size Alteration condition was reported as the most challenging.

5 Discussion

The two user studies in VR and AR provide insights into the participants’ performance and perception. In this section, we discuss our findings and compare the results between VR and AR using the between-subject design.

Detection Time

Participants detect the altered object faster in the Flickering condition than in the Head-Turning condition in both VR and AR. This result indicates that when an object alteration occurs outside the FoV, participants take more time to detect the change than when it occurs within the FoV. The participants’ comments also support this, as they mentioned difficulty paying attention to the objects once the objects moved out of the FoV. This finding supports H1, that the participants would spend more time turning their heads and refocusing on the scene, leading to slower detection in the Head-Turning condition.

In the AR experiment, the different angles of the Head-Turning conditions do not affect the participants’ detection time. This also indicates that the narrower FoV does not significantly impact the detection of changes occurring outside of the FoV, which rejects H4. However, detection might be affected if the altered object remained outside of the FoV for a significantly longer period than the duration of the head turn alone. Further studies are needed to evaluate the effect of such a long period during which the altered object is out of the FoV.

Regarding the object alteration conditions, our findings in both VR and AR align with previous studies conducted in a 2D environment [28, 17]. In the Removal condition, detection is significantly faster than in the Color Alteration and Size Alteration conditions in both VR and AR. This finding supports H2, that the participants would find the altered object easily in the Removal condition.

In AR, however, the Size Alteration condition has a significantly slower detection time than the Removal and Color Alteration conditions. Interestingly, the detection time in the Color Alteration condition increases by only 5.7% in AR compared to VR, while the other conditions show increases between 26.7% and 29.0%. This result suggests that perception of the color visual channel is not significantly degraded in AR compared to VR. This supports H3, that the visual channels would be perceived differently in AR and VR. Additionally, this result aligns with the conventional accounts of visual perception (i.e., the coherence theory and triadic architecture) [25, 26], which propose that each visual channel is perceived differently. Consequently, these detection time results indicate that the limited FoV and the type of visual channel influence how fast the observer can detect changes.

The 3-way mixed ANOVA (2 immersive environments (between-subject) × 2 visual attention-disrupting conditions × 3 object alteration conditions) shows a main effect of the immersive environment on the detection time (F(1, 42)=5.051, p=.030, ηₚ²=.107); for this analysis, we include only the 60° Head-Turning condition from the AR study. However, no interaction effect involving the immersive environment is found. Overall, the average detection time in the VR environment (M=14.97s, SD=6.013) is significantly faster than that in the AR environment (M=17.89s, SD=7.981). This difference may stem from the different FoV sizes, which can introduce an effect of vertical displacement, and from different environmental characteristics, such as the different transparency of objects in VR and AR environments.
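To put the overall difference on the same scale as the per-condition percentages quoted in this subsection, the relative increase of the AR mean over the VR mean can be computed directly from the two reported means. This is a minimal sketch; it assumes that a percentage increase is defined relative to the VR value, and the function name `relative_increase` is hypothetical.

```python
def relative_increase(baseline: float, value: float) -> float:
    """Return the percentage increase of `value` over `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (value - baseline) / baseline * 100.0


# Overall mean detection times as reported in the text (seconds).
vr_mean_s = 14.97
ar_mean_s = 17.89

increase = relative_increase(vr_mean_s, ar_mean_s)
print(f"AR detection time is {increase:.1f}% longer than VR")
```

With the reported means, this gives an overall increase of roughly 19.5%, which lies between the 5.7% reported for Color Alteration and the larger increases reported for the other conditions.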

Detection Rate

These detection rate results indicate which conditions are more likely to confuse observers during change detection. In both immersive environments, the Removal condition has a higher detection rate than the Size Alteration condition. In VR, the Color Alteration condition has a significantly lower detection rate than the Removal condition. In AR, however, its detection rate is significantly higher than that of the Size Alteration condition. This supports H1 and suggests that color perception is not significantly different between the two immersive environments.

The mixed ANOVA does not reveal a significant main effect on the detection rate between VR and AR. This suggests that, despite potential differences in visual perception speed between VR and AR, the accuracy of perception remains similar in both VR and AR.

Timeout Rate

Unlike the detection rate, the timeout rate represents the ratio of tasks in which the participants were unable to find the altered object despite knowing that one of the objects had been altered. It highlights instances where participants had difficulty identifying the altered object within the given time limit (60 seconds). Consistent with the detection time and detection rate, the participants find the altered object faster when it changes inside the FoV in both types of immersive environments. In VR and AR, the Flickering condition has a significantly lower timeout rate than the Head-Turning condition. In the VR environment, an interaction effect is observed between the visual attention-disrupting and the object alteration conditions. Within the Head-Turning condition, the Removal condition has a lower timeout rate than the Size Alteration condition. Furthermore, under the Size Alteration condition, the timeout rate for the Flickering condition is lower than for the Head-Turning condition. This result indicates that change blindness is more likely to occur when an object changes outside the FoV. In VR, Size Alteration is more strongly affected by object changes outside the FoV than Removal. Consequently, it supports H1. Additionally, in AR, the different angles of the Head-Turning conditions do not affect the timeout rate. This matches the detection time results and rejects H4.
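The timeout rate described above can be sketched as a simple ratio over trial records. The `Trial` structure, its field names, and the sample records below are assumptions made for illustration and are not the study's logging format or data; only the 60-second limit comes from the text.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

TIME_LIMIT_S = 60.0  # time limit per task, as stated in the text


@dataclass
class Trial:
    condition: str                     # hypothetical condition label
    detection_time_s: Optional[float]  # None when no answer was given in time


def timeout_rate(trials: Sequence[Trial]) -> float:
    """Return the percentage of trials that ended without an answer in time."""
    if not trials:
        raise ValueError("at least one trial is required")
    timed_out = sum(
        1
        for trial in trials
        if trial.detection_time_s is None
        or trial.detection_time_s >= TIME_LIMIT_S
    )
    return 100.0 * timed_out / len(trials)


# Made-up records for illustration only; not data from the study.
sample_trials = [
    Trial("Flickering", 12.4),
    Trial("Flickering", 8.1),
    Trial("Flickering", None),
    Trial("Flickering", 20.0),
]
print(timeout_rate(sample_trials))
```

In this sketch a trial counts as a timeout when no detection time was recorded or when the recorded time reaches the limit; whether the study treated a response at exactly 60 seconds as a timeout is not stated.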

The Removal condition results in a significantly lower timeout rate than the Size Alteration condition in both environments. However, only in AR does the Removal condition yield a lower timeout rate than the Color Alteration condition. Although the effect of the Color Alteration condition is not significant in VR, the ordering of the timeout rates across conditions is the same as in AR. From this, we speculate that the AR environment may reinforce the effect of the Color Alteration condition.

The previous study on change blindness in VR conducted by Martin et al. [18] reported the effect of FoV on the timeout rate. This is in line with our findings, where changes outside the FoV are more difficult to detect than those within the FoV. However, Martin et al. did not find any significant effects based on the type of object alteration. Their experiments were conducted in an environment that closely resembled daily visual experiences, populated with a variety of object shapes that might divert the participant’s attention. This might account for the differences observed in our results.

Head-Turning Count

This Head-Turning count represents the number of times the participants need to rotate their heads to detect a change that occurs outside of the FoV.

In both VR and AR, the Removal condition required the fewest head turns to detect the change. This finding indicates that changes in the Removal condition are detected more easily, with fewer alterations, in line with the detection time and detection rate results. This result supports H1 that the presence or absence of objects is the most memorable aspect of the visual stimulus.

In VR, the required counts for the different object alteration conditions are similar to one another, but this is not the case in AR. This difference may come from the mixture of virtual and real entities in AR.

5.1 Comparison to the previous studies

Previous studies in immersive environments designed experiments using objects of various shapes, backgrounds, and distances [35, 18]. Such variations can lead to results that are influenced by a mix of the environment and object characteristics, such as shape, color, size, and position. Even though Martin et al. [18] conducted their study in a VR setting, they were not able to identify any effects related to object alteration types. They noted that their results differed in some aspects from a previous change blindness study that concentrated on types of 2D image alteration in a traditional desktop environment [17]. Recognizing this gap and the difficulty of drawing clear conclusions from complex settings, our study aimed to set up a more controlled environment to better analyze the factors involved. Consequently, our findings highlight distinct effects across the alteration types and offer a comparative analysis between VR and AR. The controlled setting helped minimize confounding variables, thereby providing clearer insight into the factors at play in immersive environments.

5.2 Limitations

In this section, we discuss the limitations of our work. The first limitation arises from the devices and their FoVs. Our study was conducted in VR and AR environments, and we compared the results between them. However, due to the constraints of the devices and their FoVs, we were not able to fully control all variables, such as the FoV, the interaction technique (controller in VR and hand gesture in AR), and the transparency of virtual objects. Further studies are needed to evaluate the effect of a significantly longer period during which the altered object is out of the FoV.

The second limitation stems from the difficulty of accurately measuring the duration of a user’s head-turning. We did not explicitly record the actual duration of each head turn, because it was difficult to separate that duration from the time spent refocusing on the scene; the two are expected to be intermixed. During the experiment, participants were free to move their heads vertically and horizontally while they searched for the changed object. In the Flickering condition, the object was altered periodically with a constant period. In the Head-Turning condition, however, the alteration period was controlled by the participants, leading to potential variability. A more precise measurement of head-turning duration could provide more accurate results regarding the effect of the different visual attention-disrupting conditions on detection time.

The third limitation pertains to the age group of our study’s participants. According to related research, visual memory and perception decline with age [19, 15]. The age of the participants in our study ranged from 18 to 24. Therefore, outcomes for participant groups in other age ranges might differ from our findings.

Finally, most change blindness studies, including ours, use repeated-measures experiments that ask participants to find a changed item. In such a procedure, however, participants focus on the objects and try to remember as many of them as they can. Consequently, this kind of study may effectively become a visual memory test, even though visual working memory is itself related to the change blindness phenomenon.

6 Conclusion

In this paper, we examine the effects of the limited FoV and the object alteration conditions on change blindness within controlled VR and AR environments. Our results indicate that a limited FoV impacts both the detection time and the detection rate. Specifically, when object alterations occur outside the FoV, detecting the changes becomes significantly more challenging than when they occur within the FoV. Additionally, our findings reveal that different object alteration conditions influence the user’s ability to detect changes. In both VR and AR environments, it is significantly easier to detect changes when an object alternates between appearing and disappearing than when its size or color is altered. Our results also highlight differences in change detection between VR and AR environments. Detecting changes is generally easier in VR than in AR, a difference that might be attributed to factors such as variations in FoV and the contrast between real and virtual backgrounds.

References

- [1] M. Ahissar and S. Hochstein. Learning pop-out detection: Specificities to stimulus characteristics. Vision research, 36(21):3487–3500, 1996.

- [2] P. Baudisch and R. Rosenholtz. Halo: a technique for visualizing off-screen objects. In Proceedings of the SIGCHI conference on Human factors in computing systems, pp. 481–488, 2003.

- [3] P. M. Bays, R. F. Catalao, and M. Husain. The precision of visual working memory is set by allocation of a shared resource. Journal of vision, 9(10):7–7, 2009.

- [4] V. Beanland, A. J. Filtness, and R. Jeans. Change detection in urban and rural driving scenes: Effects of target type and safety relevance on change blindness. Accident Analysis & Prevention, 100:111–122, 2017.

- [5] T. F. Brady, T. Konkle, and G. A. Alvarez. A review of visual memory capacity: Beyond individual items and toward structured representations. Journal of vision, 11(5):4–4, 2011.

- [6] J. Castillo Escamilla, J. J. Fernández Castro, S. Baliyan, J. J. Ortells-Pareja, J. J. Ortells Rodríguez, and J. M. Cimadevilla. Allocentric spatial memory performance in a virtual reality-based task is conditioned by visuospatial working memory capacity. Brain Sciences, 10(8):552, 2020.

- [7] S. G. Charlton and N. J. Starkey. Driving on familiar roads: Automaticity and inattention blindness. Transportation research part F: traffic psychology and behaviour, 19:121–133, 2013.

- [8] J. J. Cummings and J. N. Bailenson. How immersive is enough? A meta-analysis of the effect of immersive technology on user presence. Media psychology, 19(2):272–309, 2016.

- [9] G. Davies and S. Hine. Change blindness and eyewitness testimony. The Journal of psychology, 141(4):423–434, 2007.

- [10] J. DiVita, R. Obermayer, W. Nugent, and J. M. Linville. Verification of the change blindness phenomenon while managing critical events on a combat information display. Human factors, 46(2):205–218, 2004.

- [11] R. J. Fitzgerald, C. Oriet, and H. L. Price. Change blindness and eyewitness identification: Effects on accuracy and confidence. Legal and Criminological Psychology, 21(1):189–201, 2016.

- [12] Y. Gaffary, B. Le Gouis, M. Marchal, F. Argelaguet, B. Arnaldi, and A. Lécuyer. AR feels “softer” than VR: Haptic perception of stiffness in augmented versus virtual reality. IEEE transactions on visualization and computer graphics, 23(11):2372–2377, 2017.

- [13] J. G. Gaspar, M. B. Neider, D. J. Simons, J. S. McCarley, and A. F. Kramer. Change detection: training and transfer. PloS one, 8(6):e67781, 2013.

- [14] J. A. Jones, J. E. Swan, G. Singh, E. Kolstad, and S. R. Ellis. The effects of virtual reality, augmented reality, and motion parallax on egocentric depth perception. In Proceedings of the 5th symposium on Applied perception in graphics and visualization, pp. 9–14, 2008.

- [15] P. C. Ko, B. Duda, E. Hussey, E. Mason, R. J. Molitor, G. F. Woodman, and B. A. Ally. Understanding age-related reductions in visual working memory capacity: Examining the stages of change detection. Attention, Perception, & Psychophysics, 76:2015–2030, 2014.

- [16] D. T. Levin and D. J. Simons. Failure to detect changes to attended objects in motion pictures. Psychonomic Bulletin & Review, 4(4):501–506, 1997.

- [17] L.-Q. Ma, K. Xu, T.-T. Wong, B.-Y. Jiang, and S.-M. Hu. Change blindness images. IEEE transactions on visualization and computer graphics, 19(11):1808–1819, 2013.

- [18] D. Martin, X. Sun, D. Gutierrez, and B. Masia. A study of change blindness in immersive environments. IEEE Transactions on Visualization and Computer Graphics, 2023.

- [19] M. Naveh-Benjamin. Adult age differences in memory performance: tests of an associative deficit hypothesis. Journal of Experimental Psychology: Learning, Memory, and Cognition, 26(5):1170, 2000.

- [20] K. J. Nelson, C. Laney, N. B. Fowler, E. D. Knowles, D. Davis, and E. F. Loftus. Change blindness can cause mistaken eyewitness identification. Legal and criminological psychology, 16(1):62–74, 2011.

- [21] Y. Pertzov, M. Y. Dong, M.-C. Peich, and M. Husain. Forgetting what was where: The fragility of object-location binding. PLoS One, 7(10):e48214, 2012.

- [22] T. Piumsomboon, Y. Lee, G. Lee, and M. Billinghurst. CoVAR: a collaborative virtual and augmented reality system for remote collaboration. In SIGGRAPH Asia 2017 Emerging Technologies, pp. 1–2. 2017.

- [23] M. C. Potter, B. Wyble, C. E. Hagmann, and E. S. McCourt. Detecting meaning in RSVP at 13 ms per picture. Attention, Perception, & Psychophysics, 76:270–279, 2014.

- [24] R. A. Rensink. The dynamic representation of scenes. Visual cognition, 7(1-3):17–42, 2000.

- [25] R. A. Rensink. Visual search for change: A probe into the nature of attentional processing. Visual cognition, 7(1-3):345–376, 2000.

- [26] R. A. Rensink. Change detection. Annual review of psychology, 53(1), 2002.

- [27] R. A. Rensink. Failure to see more than one change at a time. Journal of vision, 2(7):245–245, 2002.

- [28] R. A. Rensink, J. K. O’Regan, and J. J. Clark. To see or not to see: The need for attention to perceive changes in scenes. Psychological science, 8(5):368–373, 1997.

- [29] D. Romer, Y.-C. Lee, C. C. McDonald, and F. K. Winston. Adolescence, attention allocation, and driving safety. Journal of Adolescent Health, 54(5):S6–S15, 2014.

- [30] Y. Sauer, A. Sipatchin, S. Wahl, and M. García García. Assessment of consumer VR-headsets’ objective and subjective field of view (FoV) and its feasibility for visual field testing. Virtual Reality, 26(3):1089–1101, 2022.

- [31] D. J. Simons and C. F. Chabris. Gorillas in our midst: Sustained inattentional blindness for dynamic events. Perception, 28(9):1059–1074, 1999.

- [32] D. J. Simons and D. T. Levin. Change blindness. Trends in cognitive sciences, 1(7):261–267, 1997.

- [33] D. J. Simons and R. A. Rensink. Change blindness: Past, present, and future. Trends in cognitive sciences, 9(1):16–20, 2005.

- [34] S. Smart and D. A. Szafir. Measuring the separability of shape, size, and color in scatterplots. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems, pp. 1–14, 2019.

- [35] F. Steinicke, G. Bruder, K. Hinrichs, and P. Willemsen. Change blindness phenomena for virtual reality display systems. IEEE Transactions on visualization and computer graphics, 17(9):1223–1233, 2011.

- [36] J. W. Suchow and G. A. Alvarez. Motion silences awareness of visual change. Current Biology, 21(2):140–143, 2011.

- [37] E. A. Suma, S. Clark, S. L. Finkelstein, and Z. Wartell. Exploiting change blindness to expand walkable space in a virtual environment. In 2010 IEEE Virtual Reality Conference (VR), pp. 305–306. IEEE, 2010.

- [38] E. A. Suma, S. Clark, D. Krum, S. Finkelstein, M. Bolas, and Z. Wartell. Leveraging change blindness for redirection in virtual environments. In 2011 IEEE Virtual Reality Conference, pp. 159–166. IEEE, 2011.

- [39] J. Triesch, D. H. Ballard, M. M. Hayhoe, and B. T. Sullivan. What you see is what you need. Journal of vision, 3(1):9–9, 2003.

- [40] L. G. Ungerleider, S. M. Courtney, and J. V. Haxby. A neural system for human visual working memory. Proceedings of the National Academy of Sciences, 95(3):883–890, 1998.

- [41] C. Ware. Information visualization: perception for design. Morgan Kaufmann, 2019.

- [42] C. B. White and J. K. Caird. The blind date: The effects of change blindness, passenger conversation and gender on looked-but-failed-to-see (LBFTS) errors. Accident Analysis & Prevention, 42(6):1822–1830, 2010.