Communication-Efficient Federated Learning over MIMO Multiple Access Channels

Abstract

Communication efficiency is of importance for wireless federated learning systems. In this paper, we propose a communication-efficient strategy for federated learning over multiple-input multiple-output (MIMO) multiple access channels (MACs). The proposed strategy comprises two components. When sending a locally computed gradient, each device compresses a high dimensional local gradient to multiple lower-dimensional gradient vectors using block sparsification. When receiving a superposition of the compressed local gradients via a MIMO-MAC, a parameter server (PS) performs a joint MIMO detection and the sparse local-gradient recovery. Inspired by the turbo decoding principle, our joint detection-and-recovery algorithm accurately recovers the high-dimensional local gradients by iteratively exchanging their beliefs for MIMO detection and sparse local gradient recovery outputs. We then analyze the reconstruction error of the proposed algorithm and its impact on the convergence rate of federated learning. From simulations, our gradient compression and joint detection-and-recovery methods diminish the communication cost significantly while achieving identical classification accuracy for the case without any compression.

Index Terms:

Federated learning, distributed machine learning, gradient compression, gradient reconstruction, compressed sensing.I Introduction

Federated learning is a distributed machine learning technique for training a global model at a parameter server (PS) through collaboration with wireless devices, each with its own local training data set, where the local data never leaves the devices. [1, 2, 3, 4, 5]. In federated learning, each wireless device updates a local model based on its local training data set and then sends the local model update (e.g., a gradient vector of the local model) to the PS. The PS then updates a global model by aggregating the local model updates and then broadcasts the updated global model to the devices. By repeating this collaboration between the PS and the devices, the PS is able to train the global model without directly accessing the devices’ data, thereby enhancing the privacy of data generated at the devices. Thanks to this advantage, federated learning has received a great deal of attention as a viable solution for privacy-sensitive machine learning applications at the wireless edge [3, 4, 5, 6, 7].

One of the primary research goals in federated learning is to improve communication efficiency in transmitting the local model updates from the devices. The primary reason is that the communication overhead required by these local updates increases with the number of parameters representing the global model, which is typically an enormous value. To reduce the communication overhead, gradient compression methods based on lossy compression of the local gradient vectors computed at the devices have been widely considered in the literature [8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19]. Two representative approaches for gradient compression are gradient quantization and gradient sparsification. In gradient quantization, local gradients are quantized and then transmitted using digital transmission [8, 9, 10, 11, 12, 13]. The most widely adopted technique is one-bit quantization, in which the device only transmits the sign of each local gradient entry. It is shown in [10] that aggregating the signs of different local gradient vectors based on majority voting achieves the same variance reduction as transmission without quantization. This scalar quantization technique is extended in [12, 13] to exploit vector quantization. In these works, a high-dimensional local gradient vector is partitioned and then quantized using vector quantizers such as lattice quantizers [12] and Grassmannian quantizers [13]. Gradient sparsification considers sparsifying the gradient by removing less significant elements. Digital transmission with gradient sparsification is studied in [14, 15, 16] by adopting an encoding function to leverage the sparsity of the gradient vector. Analog transmission with gradient sparsification is developed in [17, 18] in which the sparsified gradient vector is compressed by projection onto a lower-dimensional space as in compressed sensing (CS) [20, 21]. Gradient compression that exploits the advantages of both gradient quantization and sparsification is also studied in [19] by leveraging the idea of quantized CS. It is reported in [19] that gradient compression based on quantized CS not only reduces communication overhead for federated learning but also mitigates gradient reconstruction error at the PS.

Federated learning over a wireless multiple-access channel (MAC) is another promising solution to reduce communication overhead, particularly when a large number of devices participate in federated learning [22, 23, 24, 25, 26, 27, 28]. In this solution, multiple devices simultaneously transmit their local model updates (e.g., local gradient vectors) via shared radio resources; thereby, the communication overhead required for the local update transmission does not increase with the number of the devices. The most widely adopted approach for federated learning over the wireless MAC is over-the-air computation which exploits the superposition property of the wireless MAC [22]. To further improve the reliability of communication under channel fading, federated learning over a wireless multiple-input multiple-output (MIMO) MAC has recently been studied by developing efficient multi-antenna transmission and/or reception techniques for the PS and/or the wireless devices [23, 24, 25, 26, 27, 28]. These studies demonstrate that the use of multiple antennas improves model recovery at the PS by mitigating channel fading and interference effects. For example, a multi-antenna technique proposed in [27] enables almost perfect reconstruction of the local gradient vectors when the PS is equipped with a massive number of antennas. One major challenge, however, is that the communication overhead in the wireless MIMO MAC is still considerable if no gradient compression is applied at the wireless devices. Moreover, despite the potentials of both the gradient compression and simultaneous transmission over the wireless MIMO MAC to reduce communication overhead, none of the existing studies have investigated communication-efficient federated learning to take advantage of both techniques.

In this work, we study communication-efficient federated learning over a wireless MIMO MAC operating with gradient compression at the wireless devices. We particularly consider the analog gradient compression technique in [17, 18] in which a local gradient vector is sparsified and then projected onto a lower-dimensional space as in CS [20, 21]. Under this consideration, we reformulate the reconstruction problem of the local gradient vectors from uplink received signals at the PS into a large-scale CS problem with the double-sided linear transformation. To be specific, reconstructing a sparse matrix from its noisy linear measurement , where is a local gradient matrix whose columns are the local gradient vectors sent from different devices, is a MIMO channel matrix, is a random measurement matrix used for the compression, and is a channel noise matrix. Existing CS algorithms to solve this problem require significant computational complexity since the number of the entries of is proportional to both the number of the devices and the number of global model parameters, which can be on the order of millions in federated learning applications. To overcome this limitation, we propose a computationally-efficient algorithm to solve the gradient reconstruction problem at the PS based on a divide-and-conquer strategy. We then analyze the reconstruction error of the proposed algorithm and its impact on the convergence rate of federated learning. Simulation results demonstrate that the proposed algorithm enables almost lossless reconstruction of the local gradient vectors at the PS while achieving significant performance gain over the existing CS algorithms. The major contributions of this paper are summarized as follows:

-

•

We propose a computationally-efficient algorithm to solve the gradient reconstruction problem at the PS for enabling communication-efficient federated learning over a wireless MIMO MAC. Our key observation is that the gradient reconstruction problem in the form of can be decomposed into parallel small-scale CS recovery problems if a compressed gradient matrix is explicitly estimated from MIMO detection. Inspired by this observation, the proposed algorithm first estimates the compressed gradient matrix based on the minimum-mean-square-error (MMSE) MIMO detection, then solves small-scale CS recovery problems in parallel by employing the expectation-maximization generalized-approximate-message-passing (EM-GAMP) algorithm in [29]. A major drawback of our divide-and-conquer strategy is that MIMO detection error propagates into the subsequent CS recovery process. To alleviate this drawback, the proposed algorithm also leverages a turbo decoding principle that allows the MIMO detection and the CS recovery processes to iteratively exchange their beliefs on the estimate of the compressed gradient matrix. One prominent feature of the proposed algorithm is that the accuracy of the gradient reconstruction at the PS improves as the iteration continues because, in each iteration, the belief from uplink received signals at the PS is complemented by the sparse property of the local gradient vectors.

-

•

We analyze the reconstruction error of the proposed algorithm and its impact on the convergence rate of federated learning. In this analysis, we model each local gradient vector as an independent and identically distributed (IID) random vector whose distribution is assumed to be known at the PS a priori. Under this consideration, we characterize a mean-square-error (MSE) upper bound in reconstructing a global gradient vector when employing the proposed gradient reconstruction algorithm. Furthermore, our analysis demonstrates that the reconstruction error reduces as the gradient compression ratio at the devices decreases and the orthogonality of the channels from the devices to the PS increases. We also characterize the convergence rate of federated learning operating with a stochastic gradient descent (SGD) algorithm. Our result shows that if the reconstruction error at the PS is below a certain level, federated learning converges to a stationary point of a smooth loss function at the rate of , where is the number of total iterations of the SGD algorithm. The trade-off between communication overhead and the convergence rate of federated learning is also captured in our analysis.

-

•

Using simulations, we evaluate the performance of federated learning over a wireless MIMO MAC for an image classification task using the MNIST dataset [30]. These simulations compare the classification accuracy and the normalized MSE of the proposed gradient reconstruction algorithm with the existing CS recovery algorithms under the same compression ratio and a similar computational complexity. Simulation results demonstrate that the proposed algorithm achieves almost the same classification accuracy as perfect reconstruction while superior performance to the existing CS algorithms. It is also shown that the proposed algorithm is more robust to the increase in the gradient compression ratio than other algorithms. We also investigate the effect of the number of turbo iterations and the sparsification level of the gradient compression on the performance of the proposed algorithm. Based on these simulation results, we demonstrate that using the proposed algorithm at the PS enables accurate reconstruction of the local gradient vectors while effectively reducing the communication overhead by taking advantage of the gradient compression and simultaneous transmission over the wireless MIMO MAC.

Notation

Upper-case and lower-case boldface letters denote matrices and column vectors, respectively. is the statistical expectation, is the probability, and is the transpose. is the cardinality of set . represents the -th entry of vector . is the Euclidean norm of a real vector . represents a multivariate Gaussian distribution with mean vector and covariance matrix , while denotes the probability density function of a Gaussian random vector with mean vector and covariance matrix . and are -dimensional vectors whose elements are zero and one, respectively.

II System Model

In this section, we present a federated learning framework considered in our work. We then describe uplink transmission and reception procedures in federated learning over a wireless MIMO MAC.

II-A Federated Learning Framework

We consider federated learning over a wireless MIMO MAC in which a PS equipped with antennas trains a global model (e.g., deep neural network) by collaborating with single-antenna wireless devices, as illustrated in Fig. 1. We assume that the global model is fully represented by a parameter vector , where is the number of the parameters (e.g., the weights and biases of a deep neural network). We also assume that the training data samples to optimize the global model are available only at the wireless devices and differ across the devices. Let be a local training data set available at device , which consists of training data samples. A local loss function at device for the parameter vector is defined as

| (1) |

where is a loss function computed with a training data sample for the parameter vector . Then a global loss function for the parameter vector is represented as

| (2) |

We focus on a scenario where a gradient-based algorithm (e.g., SGD algorithm) is adopted to optimize the parameter vector for minimizing the global loss function in (2). We denote as the number of total iterations of the optimization algorithm, and as the parameter vector at iteration . Each iteration of the optimization algorithm corresponds to one communication round consisting of a pair of downlink and uplink communications. During the downlink communication, the PS broadcasts the current parameter vector to the wireless devices. Then, during the uplink communication, the wireless devices transmit the gradient of the parameter vector computed based on their local training data sets to the server. The major focus of our work is to investigate uplink transmission and reception strategies at the server for federated learning; thereby, for simplicity of the analysis, we assume that the downlink communication is error free111The framework under consideration and the proposed approach can be readily extended to a noisy downlink scenario[35]. Under this assumption, all the devices have a globally consistent parameter vector for all . In what follows, we describe uplink transmission at the wireless devices and uplink reception at the PS during each uplink communication.

II-B Uplink Transmission at Wireless Devices

For the uplink transmission, each device computes a local gradient vector for the current parameter vector based on its local training data set. A local gradient vector computed at device is given by

| (3) |

where is a mini-batch randomly drawn from , and is a gradient operator. We assume that the mini-batch size at device is fixed as for all . Since direct transmission of the local gradient vector in (3) imposes large communication overhead when , we assume that each device applies lossy compression to its local gradient vector before uplink transmission. Details of the gradient compression technique considered in our work will be elaborated in Sec. III-A. Let be a compressed gradient vector determined at device with . Then an uplink signal vector transmitted from device at iteration is denoted by , where is a power scaling factor for device at iteration . In particular, the power scaling factor is set to be , so that an average power of transmitting each entry of the uplink signal vector becomes one (i.e., , ). All devices simultaneously transmit their uplink signal vectors over a wireless MAC by utilizing shared radio resources that are orthogonal in either the time or the frequency domain. We assume that the power scaling factor is separately conveyed to the PS in an error-free fashion because the overhead to transmit this scalar value is negligible compared to communication overhead to transmit the compressed gradient vector.

II-C Uplink Reception at Parameter Server

Once the uplink transmission of the wireless devices ends, the PS reconstructs the local gradient vectors from uplink received signals. Let be the aggregation of the compressed gradient entries sent by devices at the -th resource, where is the -th element of . Then an uplink received vector at the PS for the -th resource is given by

| (4) |

where is a power allocation matrix, is a MIMO channel matrix from devices to the server, , and is a Gaussian noise vector. We assume that the channel matrix remains constant within one communication round (i.e., block-fading assumption) and is perfectly known at the PS via uplink channel estimation. Based on the received signal vectors , the PS reconstructs the local gradient vectors, , sent from all the wireless devices. Details of the gradient reconstruction algorithm developed in our work will be elaborated in Sec. IV.

Let be the reconstruction of the local gradient vector at the PS. Then the PS compute a global gradient vector from the reconstructed local gradient vectors as follows:

| (5) |

where is assumed to be known at the PS a priori. The global gradient vector in (5) is utilized to update the parameter vector . For example, if the PS adopts a gradient descent algorithm to optimize the parameter vector, the corresponding update rule is given by

| (6) |

where is a learning rate at iteration .

III Communication-Efficient Federated Learning over MIMO MAC

One major problem of the uplink transmission in federated learning over a wireless MIMO MAC is that direct transmission of the local gradient vector in (3) imposes considerable communication overhead when the number of the model parameters is large (i.e., ). To alleviate this problem, in this section, we present a gradient compression technique for wireless devices, which takes the advantage of both gradient compression and simultaneous transmission over the wireless MIMO MAC. We then formulate a gradient reconstruction problem at the PS when employing the gradient compression technique at the devices and shed light on the challenges of solving this problem.

III-A Gradient Compression at Wireless Devices

The key idea of the gradient compression technique is to sparsify a local gradient vector in a block-wise fashion and then compress the sparsified vector as in CS [20, 21]. This technique is a simple variant of the gradient compression technique in [17, 18, 19]. Our compression technique consists of two steps, block sparsification and dimensionality reduction, as elaborated below.

Block Sparsification: The first step of our compression technique is to divide the local gradient vector into sub-vectors with dimension , then sparsify each sub-vector by dropping some gradient entries with small magnitudes. Meanwhile, to compensate for information loss caused by the gradient dropping, the dropped gradient entries are stored at each device and then added to the local gradient vector at the next iteration. Under the above strategy, the local gradient vector that needs to be sent by device at iteration is determined as

| (7) |

where is a residual gradient vector stored by device before iteration . Then the -th local gradient sub-vector at device is defined as

| (8) |

where is the -th entry of , and is a set of parameter indices associated with the -th sub-vector at device . We assume that are mutually exclusive subsets of such that and known a priori at the PS. After constructing sub-vectors, each local gradient sub-vector is sparsified by setting all but the top- entries with the largest magnitudes as zeros, where is a sparsification level such that . We define as a sparsification ratio. As a result, device attains local gradient sub-vectors , each of which is an -sparse vector. We denote the block sparsification step described above as . In the sequel, we will show that increasing the number of the gradient sub-vectors, , reduces the computational complexity of the gradient reconstruction process at the PS. However, if is set too large, the non-zero entries in can significantly differ from the top- entries of ; this mismatch may lead to a decrease in the performance of federated learning. Therefore, in practical systems, can be determined according to a target learning performance and the computing power of the PS.

After the block sparsification, the residual gradient vector is updated as

| (9) |

where such that for all .

Dimensionality Reduction: The second step of our compression technique is to compress each sparse sub-vector by random projection onto a lower dimensional space as in CS [20, 21]. Let be a compressed gradient sub-vector generated from . Then is determined as

| (10) |

where is a random measurement matrix with at iteration . In this work, we set as an IID random matrix with which provides promising properties (e.g., restricted isometry property, null space property) for guaranteeing accurate recovery of a sparse signal [21]. Furthermore, is randomly and independently drawn for each iteration, in order to avoid a null-space problem caused by a fixed random measurement matrix, as discussed in [31].

By concatenating these compressed sub-vectors, a compressed gradient vector for device at iteration is finally obtained as

| (11) |

The dimension of the compressed vector is ; thereby, the compression ratio of our technique is . The overall procedures of our gradient compression technique is illustrated in Fig. 1 and also summarized in Steps 5–8 in Algorithm 1.

A significant advantage of our gradient compression technique is that it requires times lower communication overhead than the direct transmission of the local gradient vector, utilizing radio resources per device. Another prominent advantage is that the communication overhead of our technique does not increase with the number of devices participating in federated learning because all the devices utilize the same resources available in the wireless MAC. Therefore, our compression technique effectively reduces the communication overhead of federated learning with a large number of devices.

Input: , ,

Output:

Remark 1 (Digital Transmission Strategy): In this work, we focus only on analog transmission at the wireless devices in which the uplink signal vector is transmitted without digital modulation. Nevertheless, digital transmission of the uplink signal vector is also feasible by utilizing a digital symbol modulator as a scalar quantizer. When employing our gradient compression technique, the distribution of the uplink signal vector can be modeled as for large , which will be justified in Sec. IV-B. Consequently, a complex-valued signal vector, defined as , follows for large . This fact implies that each entry of can be effectively modulated using quadrature amplitude modulation (QAM). Therefore, our gradient compression technique can be compatible with commercial digital communication systems by utilizing the above digital transmission strategy.

III-B Gradient Reconstruction at Parameter Server

When employing the compression technique in Sec. III-A, the PS faces a new challenge in enabling accurate reconstruction of the global gradient vector in (5). The underlying reason is that the local gradient vectors are not only compressed, but also simultaneously sent via a wireless MIMO MAC that causes different channel fading across devices. To shed light on this challenge, we formulate a gradient reconstruction problem at the PS when employing the compression technique in Sec. III-A.

Let be a set of resource indices associated with the transmission of the -th compressed sub-vector. Then an uplink received signal matrix associated with the -th compressed sub-vector is given by . From (4) and (11), the uplink received signal matrix is rewritten as

| (12) |

where , , , and the equality (a) holds because from (10). Note that is an -sparse matrix because are -sparse vectors by the sparsification step during the gradient compression. Therefore, the gradient reconstruction problem reduces to parallel CS recovery problems, each of which aims at finding an -sparse matrix from a noisy linear measurement . The overall procedures of gradient reconstruction and parameter update at the PS are illustrated in Fig. 1 and also summarized in Steps 12–17 in Algorithm 1, where denotes the gradient reconstruction algorithm chosen by the PS.

III-C Limitations of Conventional Approaches

The CS recovery problem with the form of (12) has also been studied for various applications [32, 33, 34]. The simplest approach to solve this problem is to formulate an equivalent CS recovery problem by converting the received signal matrix in (12) into an equivalent vector form:

| (13) |

Based on this form, an existing compressed sensing algorithm can be applied to recover a sparse vector from its noisy linear measurement . This approach, however, requires tremendous computational complexity because the dimensions of both and are extremely large in many federated learning applications. For example, Table I in Sec. VI shows that the orthogonal matching pursuit (OMP) algorithm to solve the Kronecker-form problem in (13) requires the complexity order of in our simulation with , , and . Another existing approach is to directly solve a matrix-form problem in (12) by modifying conventional CS algorithms such as orthogonal matching pursuit (OMP) [32, 33]. This approach, however, still requires significant computational complexity that comes from large-scale matrix multiplications. For example, Table I in Sec. VI shows that the OMP algorithm to solve the matrix-form problem in (12) requires the complexity order of in our simulation with , , and . To overcome the limitations of the existing CS approaches, in the following section, we will present a new low-complexity approach to solve the CS recovery problem in (12).

IV Proposed Gradient Reconstruction Algorithm

When employing the gradient compression technique in Sec. III-A, it is essential to develop a computationally-efficient solution for reconstructing the global gradient vector at the PS while minimizing reconstruction error. To achieve this goal, in this section, we propose a novel low-complexity algorithm for solving the gradient reconstruction problem in Sec. III-B, on the basis of a divide-and-conquer strategy combined with a turbo decoding principle. In the rest of this section, we omit the index for ease of notation.

IV-A Basic Principle: Divide-and-Conquer Strategy

The basic principle of the proposed algorithm is to decompose the gradient reconstruction problem in (12) into small-scale CS recovery problems by applying MIMO detection to explicitly estimate a compressed gradient matrix . To be more specific, the output of the MIMO detection applied to detect from a received signal matrix in (12) can be expressed as

| (14) |

where is a MIMO detection error. Then the -th column of , namely , is expressed as

| (15) |

where is the -th column of . Since every local gradient sub-vector sent by each device is an -sparse vector as explained in Sec. III-A, reconstructing from is exactly a noisy CS recovery problem which finds an -dimensional sparse vector from an -dimensional observation. Solving this problem in parallel for all and requires much smaller complexity than directly solving the CS recovery problem in (12) which finds a -dimensional unknown vector from a -dimensional observation.

Despite the advantage in reducing the computational complexity, a major drawback of our strategy is that error in the MIMO detection (e.g., in (14)) propagates into the subsequent CS recovery process. To alleviate this drawback, we leverage a turbo decoding principle that allows the MIMO detection and the CS recovery processes to exchange their beliefs about the entries of the compressed gradient matrix. The proposed algorithm based on the above principle is illustrated in Fig. 3.

IV-B Statistical Modeling

To enable the turbo decoding principle based on a Bayesian approach, we establish a statistical model for local gradient sub-vectors as well as for compressed gradient sub-vectors, as done in [19]. For statistical modeling of the local gradient sub-vectors, we focus on the fact that every local gradient sub-vector is sparse, while its non-zero entries are arbitrarily distributed. We also use the fact that the entries of each local gradient sub-vector are likely to be independent because they are associated with a randomly drawn subset of the model parameters, as explained in Sec. III-A. Motivated by these facts, we model each local gradient sub-vector as an IID random vector whose entry follows the Bernoulli Gaussian-mixture distribution which is well known for its suitability and generality for modeling a sparse random vector [29]. Note that the probability density function of the Bernoulli Gaussian-mixture distribution with parameter is given by

| (16) |

where . As can be seen in (16), the above distribution is suitable for modeling the local gradient sub-vector because the sparsity of this sub-vector is effectively captured by the Bernoulli model with parameter , while the distribution of non-zero local gradient entries is well approximated by the Gaussian-mixture model with components. How to tune the parameter for each gradient sub-vector will be discussed in Sec. IV-D.

To further justify the Bernoulli Gaussian-mixture model, in Fig. 2, we compare the empirical cumulative distribution function (CDF) of the entries of the local gradient sub-vector obtained from simulation222In this simulation, we employ Algorithm 3 with and . Further details of the simulation are described in Sec. VI. with the CDF of the Bernoulli Gaussian-mixture distribution whose parameters are determined by Algorithm 3. Fig. 2 shows that the empirical CDF is very similar to the corresponding model-based CDF. This result implies that the local gradient sub-vector can be well modeled by an IID random vector with the Bernoulli Gaussian-mixture distribution.

On the basis of the Bernoulli Gaussian-mixture model, we also determine a statistical model for the compressed gradient entries. First of all, as can be seen from (10), the -th entry of the compressed gradient sub-vector is given by . This implies that is nothing but the sum of independent random variables because the random measurement matrix has IID entries, while the local gradient sub-vector can be modeled as an IID random vector. Therefore, for large , every compressed gradient entry can be modeled as a Gaussian random variable by the central limit theorem.

IV-C Module A: MIMO Detection

In Module A of the proposed algorithm depicted in Fig. 3, the compressed gradient matrix is estimated from the uplink received signal matrix . To minimize the MSE of the estimate of , we employ the MMSE MIMO detection based on a Gaussian model justified in Sec. IV-B. The input of Module A is the prior information about the mean and variance of , denoted by and , respectively, . This information is set approximately at the beginning of the algorithm and is passed from Module B during the turbo iterations of the algorithm. As discussed in Sec. IV-B, the prior distribution of can be modeled as for large by the central limit theorem, where , , and . Note that is diagonal because are associated with different parameters in the global model. Then, the posterior distribution of for a given observation with is determined as , where the posterior mean and covariance are computed as [36]

| (17) | ||||

| (18) |

respectively, where . The -th entry of , denoted by , is the MMSE estimate of for a given observation . Meanwhile, the -th diagonal entry of , denoted by , represents the MSE between and .

After computing the MMSE estimates of the compressed gradient entries, Module A passes its knowledge about these values to Module B. As illustrated in Fig. 3, the prior information utilized in Module A has been passed from Module B; thereby, Module A extracts the extrinsic information by excluding the contribution of the prior information from the posterior information. In particular, the extrinsic distribution of can be determined by assuming that its prior and posterior distributions are given by and , respectively. Then, by Bayes’ rule, the extrinsic distribution of is given by , where the extrinsic mean and variance are computed as [37, 38]

| (19) |

respectively. Then the values of are passed to Module B which utilizes these values as the prior information about .

IV-D Module B: CS Recovery

In Module B of the proposed algorithm, each local gradient sub-vector is reconstructed from the prior information about the compressed gradient entries. To this end, the extrinsic mean and variance of passed from Module A are harnessed as the prior mean and variance of , respectively, i.e., , where and , . Then the prior mean and covariance of are determined as and , respectively, where . Note that is diagonal because and are associated with different parameters in the global model for . For large with the IID random measurement matrix in (10), all the entries of are statistically identical by the central limit theorem. Motivated by this fact, we approximate the prior covariance as , where . Then, with some tedious manipulations from (17)–(19), the prior mean is rewritten as an additive white Gaussian noise (AWGN) observation of , i.e.,

| (20) |

where is an effective noise independent of , as also derived in [38].

To reconstruct the local gradient sub-vector from the prior mean in (20), we need to solve a noisy CS recovery problem. Unfortunately, finding the exact MMSE solution to this problem is infeasible as the exact distribution of the local gradient sub-vector is unknown at the PS. To circumvent this challenge, we employ the EM-GAMP algorithm in [29] based on the statistical model in Sec. IV-B. This algorithm iteratively computes an approximate MMSE solution to the CS recovery problem by modeling the distribution of an unknown sparse vector using the Bernoulli Gaussian-mixture model; the parameters of this model are also updated iteratively based on the EM principle. As discussed in Sec. IV-B, the local gradient sub-vectors are well modeled by the Bernoulli Gaussian-mixture distribution; thereby, the EM-GAMP algorithm is a powerful means of solving our CS recovery problem in (20). This algorithm also computes the posterior mean and variance of each compressed gradient entry as a byproduct; thereby, it can be directly combined with the turbo decoding principle in the proposed algorithm. In Algorithm 2, we summarize the EM-GAMP algorithm employed in Module B of the proposed algorithm, where we omit the indices and for ease of notation.

The major steps in Algorithm 2 are described below. In Step 3, the pseudo-prior mean and variance of are determined. In Step 4, the posterior mean and variance of are computed based on (20) and . In Step 6, the estimate of and the corresponding error variance are determined. In Step 7, the posterior mean and variance of are computed under the assumptions of and with as derived in [29], where , ,

for all . In Step 8, the parameters of the Bernoulli Gaussian-mixture model are computed based on the EM principle as derived in [29], where ,

| (21) |

The asymptotic convergence of the EM-GAMP algorithm was studied in [39], in which the authors showed that under certain conditions, the performance of this algorithm asymptotically coincides with that of the original GAMP algorithm with perfect knowledge of the input distribution. We refer interested readers to [39] for more details. Meanwhile, the complexity order of the EM-GAMP algorithm is given by in terms of the number of real multiplications (see Step 3 and Step 6 of Algorithm ).

function

Once the EM-GAMP algorithm applied to converges, Module B acquires not only the approximate MMSE estimate of , but also the posterior mean and variance of compressed gradient entries, given by (see Step 4 in Algorithm 2). As done in Module A, the extrinsic mean and variance, denoted by and , respectively, are computed from and according to (19), . Then these values are passed to Module A which utilizes these values as the prior information about .

IV-E Summary

In Algorithm 3, we summarize the operations of the proposed algorithm that iterates between Module A and Module B based on the turbo decoding principle. We denote as the total number of the iterations of the proposed algorithm. As can be seen in Algorithm 3, in Module A, the compressed gradient entries are estimated based on the observation of the uplink received signals. Meanwhile, in Module B, the estimates of the compressed gradient entries are refined by exploiting their sparse property. Since Module A and Module B utilize different sources of information for estimating the compressed gradient entries, these modules can complement each other by iteratively exchanging their extrinsic beliefs about the compressed gradient entries. Recall that the reduction in the estimation error of the compressed gradient entries leads to the decrease in the noise in the CS recovery process for reconstructing the local gradient sub-vectors, as discussed in Sec. IV-A. Therefore, the use of the turbo decoding principle in the proposed algorithm improves the reconstruction accuracy of the local gradient sub-vectors at the PS.

V Performance Analysis

In this section, we analyze the reconstruction error of the proposed algorithm and its impact on the convergence rate of federated learning over a wireless MIMO MAC.

V-A Reconstruction Error Analysis

In this analysis, we characterize the MSE of a global gradient vector reconstructed from the proposed algorithm. To this end, we model each local gradient sub-vector as an IID random vector, which can be justified using the Bernoulli Gaussian-mixture distribution, as discussed in Sec. IV-B. Meanwhile, we assume that the distribution of the local gradient sub-vectors can differ across devices and blocks, while the mean and variance of each gradient sub-vector are known333To realize this assumption, the information of the means and variances of the local gradient sub-vectors needs to be conveyed from the devices to the PS. Fortunately, the overhead to transmit this information is negligible compared to communication overhead to transmit the compressed gradient vectors. at the PS. Under these assumptions, extrinsic means and variances passed from Module A of the proposed algorithm are characterized as given in the following lemma:

Lemma 1

Let be the extrinsic mean of computed from Module A of the proposed algorithm for a given observation . For large , an extrinsic mean vector is an AWGN observation of with noise variance , i.e.,

| (22) |

where

| (23) |

, is the -th largest eigenvector of , is the eigenvector associated with , is the rank of , , is the -th column of , and is the variance of each entry of .

Proof:

See Appendix A. ∎

Utilizing the result in Lemma 1, we characterize an MSE upper bound for the global gradient vector reconstructed by the proposed algorithm. This result is given in the following theorem:

Theorem 1

In the asymptotic regime of and for some , the global gradient vector, , reconstructed by the proposed algorithm with satisfies the following MSE bound:

| (24) |

where is a global gradient vector at iteration .

Proof:

See Appendix B. ∎

Theorem 1 shows that the reconstruction error of the proposed algorithm reduces as the compression ratio approaches to one (i.e., no compression). Since the communication overhead increases as the compression ratio decreases, Theorem 1 clearly reveals the trade-off between communication overhead at the devices and reconstruction accuracy at the PS in federated learning over a wireless MIMO MAC. Another important observation is that in (24) decreases not only as the signal-to-noise ratio (SNR) of device increases, but also as decreases, which can be seen from (23). This observation implies that decreases with the orthogonality between the channel of device and other devices’ channels because , , as , . Therefore, our analytical result demonstrates that the reconstruction error of the proposed algorithm depends on both the gradient compression ratio and wireless environment (e.g., SNR or channel distribution).

V-B Convergence Rate Analysis

Now, we characterize the convergence rate of federated learning over the wireless MIMO MAC when employing a SGD algorithm. To this end, we make the following assumptions:

Assumption 1: The loss function is -smooth and lower bounded by some constant , i.e., , .

Assumption 2: For a given parameter vector , the global gradient vector in (5) is unbiased, i.e., , while its variance is upper bounded as with a constant , for all .

Rationale: Assumptions 1–2 are typical for analyzing the convergence rate of the SGD algorithm for a smooth loss function, as considered in [10, 19]. In particular, a variance scaling factor depends on both the mini-batch size and the sparsification level employed at each device, while this factor decreases with the mini-batch size and the sparsification level.

Under Assumptions 1–2, we characterize the convergence rate of federated learning, as given in the following theorem:

Theorem 2

Let be the maximum normalized reconstruction error of the global gradient vector at the PS. Under Assumptions 1–2, if , federated learning based on SGD with a learning rate satisfies the following bound:

| (25) |

for any , where and .

Proof:

See Appendix C. ∎

Theorem 2 shows that federated learning converges to a stationary point of a smooth loss function at the rate of , which is the same order as the original SGD algorithm [10], if the maximum normalized reconstruction error at the PS is lower than a certain level . Theorem 2 also shows that the convergence rate of federated learning improves as the reconstruction error of the global gradient vector at the PS reduces because increases as decreases. Fortunately, the reconstruction error of the global gradient vector can be effectively reduced by employing the proposed gradient reconstruction algorithm, as will be demonstrated in Sec. VI. Therefore, our analytical and numerical results together imply that the convergence rate of federated learning can be improved using the proposed algorithm. As discussed in Theorem 1, the reconstruction error of the proposed algorithm reduces as the compression ratio decreases; thereby, Theorem 2 also reveals the trade-off between the communication overhead and convergence rate of federated learning.

VI Simulation Results

In this section, using simulation, we evaluate the performance of federated learning over a wireless MIMO MAC when employing the communication-efficient federated learning framework in Algorithm 1. We set , , and , while modeling the entries of the MIMO channel matrix as IID Gaussian random variables with zero mean and unit variance. We assume that the downlink communication is error free unless specified otherwise. For the gradient compression technique in Sec. III-A, we set and , where is the ratio of the sparsification level. For the gradient reconstruction at the PS, we consider the following algorithms:

-

•

Proposed algorithm: This is given in Algorithm 3. To initialize the algorithm, we set444In this initialization, we use and . , , and . An initial estimate is randomly drawn from . The parameters of the Bernoulli Gaussian-mixture model for are initialized with , , , , , , where and are the largest and the smallest entry of , respectively. Other parameters are set as , , and , unless otherwise specified.

-

•

LMMSE-OMP: This is a variation of the proposed algorithm that employs the LMMSE MIMO detector followed by the OMP algorithm in [20, 21, 40] without harnessing the turbo decoding principle. In this algorithm, the distribution of the compressed gradient vector is modeled as . The OMP algorithm is set to stop after iterations.

- •

- •

The computational complexities of the above algorithms are characterized in Table I. Note that in our simulations, different numbers of blocks, , is adopted for different algorithms, in order to ensure that they have a similar complexity order, as specified in Table I. Meanwhile, the same sub-vector index sets are adopted by every device (i.e., , ).

| Algorithm | Complexity order | Value in simulation for , | Value in simulation for , |

| Proposed algorithm () | |||

| LMMSE-OMP () | |||

| 2D-OMP () | |||

| Kron-OMP () |

In this simulation, we consider an image classification task using the MNIST dataset in which a handwritten digit (from to ) of a grayscale image is classified using a neural network [30]. We particularly adopt the neural network that consists of input nodes, a single hidden layer with hidden nodes, and output nodes. The activation functions of the hidden layer and the output layer are set as the ReLU and the softmax functions, respectively. The weights of this neural network at iteration is mapped into a parameter vector with . To train the network, we consider a mini-batch SGD algorithm with mini-batch size , a fixed learning rate , and the cross-entropy loss function. The local training data set of device , , is determined by randomly drawing training data samples labeled with digit among training data samples in the MNIST dataset; this corresponds to a non-IID setting because each device has the information of only one digit. For performance evaluation, we consider two metrics: Classification accuracy computed for training data samples in the MNIST dataset, and normalized MSE (NMSE) of the global gradient vector, defined as with .

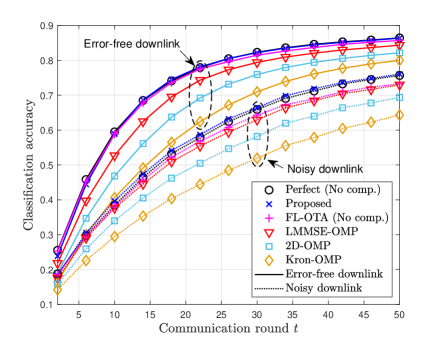

In Fig. 4, we evaluate the classification accuracy and the NMSE of federated learning with different gradient reconstruction algorithms when and . In Fig. 4(a), we also plot the classification accuracy of federated learning based on over-the-air computation (FL-AirComp) developed in [28] by assuming no device selection and no intelligent reflecting surface between the PS and the wireless devices. In a noisy downlink communication scenario, we assume that the parameter vector received by device at iteration is modeled as , where , is an independent Gaussian random vector distributed as , and is the -th entry of . Fig. 4(a) shows that for both the error-free and the noisy downlink scenarios, the proposed algorithm achieves almost the same classification accuracy as perfect reconstruction with no compression. Meanwhile, Fig. 4(b) shows that the proposed algorithm enables almost lossless (less than dB) reconstruction of the global gradient vector at the PS. Unlike the proposed algorithm, conventional CS algorithms (2D-OMP and Kron-OMP) cause degradation in classification accuracy (see Fig. 4(a)) due to severe loss of reconstruction accuracy (see Fig. 4(b)). Although both the proposed algorithm and LMMSE-OMP adopt the divide-and-conquer strategy described in Sec. IV, the proposed algorithm outperforms LMMSE-OMP, attained by harnessing the turbo decoding principle with the EM-GAMP algorithm. FL-AirComp provides a similar classification accuracy as the proposed algorithm, but this framework assumes no gradient compression at the devices; thereby, AirComp requires times higher communication overhead than the federated learning framework considered in the proposed algorithm.

In Fig. 6, we evaluate the classification accuracy of federated learning with different compression ratios when . Fig. 6 shows that the classification accuracies of all the gradient reconstruction algorithms degrade with the compression ratio. This result follows from the fact that the smaller the projected dimension in (10), the more difficult the reconstruction of the local gradient vectors at the PS. Since communication overhead of federated learning reduces with the compression ratio, this result also reveals the trade-off between communication overhead and learning performance of federated learning. Despite this trade-off, the proposed algorithm provides almost the same accuracy even at the compression ratio of , implying that the proposed algorithm has the best robustness against the increase in the compression ratio. Therefore, the proposed algorithm minimizes the communication overhead of federated learning required to achieve a certain level of classification accuracy.

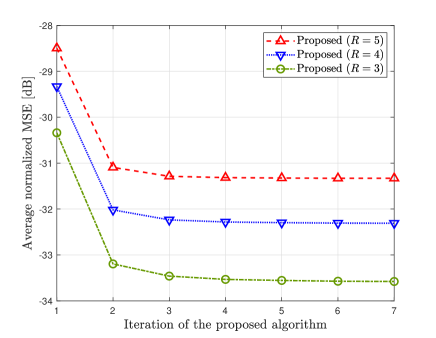

In Fig. 6, we evaluate the average NMSE of the proposed algorithm with different numbers of turbo iterations when . The NMSE is averaged over the first 20 iterations. Fig. 6 shows that the reconstruction accuracy of the proposed algorithm improves with the number of turbo iterations. This performance improvement comes from the information exchange between Module A and Module B in the proposed algorithm; during this exchange, the information from the uplink received signals and the sparse property of the local gradient vector complement each other to improve the reconstruction accuracy of the local gradient vectors. Since this effect vanishes as the information exchange repeats, the performance improvement of the proposed algorithm becomes marginal after a few iterations (i.e., 3 iterations).

In Fig. 7, we evaluate the average classification accuracy and NMSE of the proposed gradient reconstruction algorithm with different sparsification ratios. These performance measures are averaged over the first 50 iterations. Fig. 7(a) shows that there exists the optimal sparsification ratio that provides the highest classification accuracy. This result is due to the trade-off between the amount of the local gradient information conveyed to the PS and the reconstruction accuracy of the local gradient vectors at the PS. To be more specific, increasing the sparsification ratio increases the number of non-zero gradient entries conveyed to the PS per iteration but, at the same time, decreases the sparsity of the local gradient vectors, which leads to the degradation in the reconstruction accuracy, as shown in Fig. 7(b). Our simulation results imply that the performance of the proposed algorithm can be maximized by optimizing the selection of the sparsification level, which would be interesting for future work.

VII Conclusion

In this paper, we have studied communication-efficient federated learning over a wireless MIMO MAC in which communication overhead for local gradient transmission is effectively reduced by employing gradient compression at wireless devices and simultaneous transmission via shared radio resources. Under this scenario, we have presented a turbo-iterative algorithm that solves a computationally demanding gradient reconstruction problem at the PS based on a divide-and-conquer strategy. One prominent feature of the presented algorithm is that gradient reconstruction accuracy at the PS improves as the iteration continues. In each iteration, the belief from uplink received signals at the PS is complemented by the sparse property of the local gradient vectors. We have also characterized the reconstruction error of the presented algorithm and a sufficient condition to guarantee the convergence of federated learning. Using simulations, we have demonstrated that the presented algorithm achieves almost the same performance as a perfect reconstruction scenario while providing a superior performance compared to existing CS algorithms.

An important direction for future research is to optimize device scheduling and power control for uplink communication in federated learning, taking into account the different locations of devices in wireless networks. It would also be important to study downlink communication of federated learning to enable accurate and efficient broadcasting of the global model to wireless devices.

Appendix A Proof of Lemma 1

Suppose that the -th local gradient sub-vector at device , , follows an IID random distribution with mean and variance . Since is an IID random matrix with , the projection in (10) implies that for large by the central limit theorem. Then from (17) and (18), the posterior mean and variance of for are computed as

| (26) |

respectively, where , , , and is the -th column of . By plugging , , , and into (19), the extrinsic mean and variance of for are computed as

| (27) |

respectively. From the eigendecomposition of , the extrinsic variance can be rewritten as

| (28) |

where , is the -th largest eigenvector of , is the eigenvector associated with , and is the rank of . Since is an IID Gaussian vector for large , the extrinsic mean becomes an AWGN observation of where the noise variance is the extrinsic variance [38]. This completes the proof.

Appendix B Proof of Theorem 1

Suppose that the -th local gradient sub-vector at device , , follows an IID random distribution with mean and variance . Under the assumption that the distributions of are known at the PS in prior, the EM-GAMP algorithm in Module B of the proposed algorithm reduces to the GAMP algorithm in [43] which does not have the EM step (i.e., without Step 8 in Algorithm 2). In the asymptotic regime of and , the behavior of the GAMP algorithm applied to reconstruct from is characterized by a scalar state evolution. If this state evolution has a unique fixed point, the output of the GAMP algorithm, , converges to the MMSE estimate of for a given observation [41, 42, 43]. Therefore, the MSE between and is less than or equal to the MSE between and its linear MMSE estimate, i.e.,

| (29) |

where is the linear MMSE estimate of for a given observation in (22). From (22) with , the MSE of the linear MMSE estimate is computed as [36]

| (30) |

where the equality (a) follows from as under the assumption that is an IID random matrix with . Since the -th local gradient vector is reconstructed as , the MSE between and is expressed as

| (31) |

where the equality (a) holds because different parameters in the global model are simultaneously transmitted by different devices when for . Applying (29) and (30) into (31) yields the result in (24).

Appendix C Proof of Theorem 2

Under Assumption 1, the improvement of the loss function at iteration is upper bounded as

| (32) |

where the equality (a) follows from (6) with , and the inequality (b) follows from . Let be a normalized reconstruction error of the global gradient vector at iteration . Then the inequality in (32) is rewritten as

| (33) |

Since and under Assumption 2, taking the expectation of both sides of (33) conditioned on yields

| (34) |

where , , and .

A lower bound of the initial loss with is expressed as

| (35) |

where the inequality (c) follows from (C). Plugging an adaptive learning rate into (35) yields [11]

| (36) |

If , from and for , the right-hand-side of (36) is further lower bounded as

| (37) |

Since for , the inequality in (37) is rewritten as in (25) for any with .

References

- [1] J. Konec̆ný, B. H. McMahan, and D. Ramage, “Federated optimization: Distributed optimization beyond the datacenter,” arXiv:1511.03575 [cs.LG], Nov. 2015 [Online] Available: https://arxiv.org/abs/1511.03575

- [2] H. B. McMahan, E. Moore, D. Ramage, S. Hampson, and B. A. Arcas “Communication-efficient learning of deep networks from decentralized data,” in Proc. 20th Int. Conf. Artif. Intell. Statist. (AISTATS), 2017, pp. 1273–-1282.

- [3] S. Niknam, H. S. Dhillon, and J. H. Reed, “Federated learning for wireless communications: Motivation, opportunities and challenges,” IEEE Commun. Magazine, vol. 58, no. 1, pp. 19–25, Jan. 2020.

- [4] G. Zhu, D. Liu, Y. Du, C. You, J. Zhang, and K. Huang, “Toward an intelligent edge: Wireless communication meets machine learning,” IEEE Commun. Magazine, vol. 58, no. 1, pp. 19–25, Jan. 2020.

- [5] D. Gündüz, D. B. Kurka, M. Jankowski, M. M. Amiri, E. Ozfatura, and S. Sreekumar, “Communicate to learn at the edge,” IEEE Commun. Magazine, vol. 58, no. 12, pp. 14–19, Dec. 2020.

- [6] S. Samarakoon, M. Bennis, W. Saad, and M. Debbah, “Distributed federated learning for ultra-reliable low-latency vehicular communications,” IEEE Trans. Commun., vol. 68, no. 2, pp. 1146–1159, Feb. 2020.

- [7] M. Chen, O. Semiari, W. Saad, X. Liu, and C. Yin, “Federated echo state learning for minimizing breaks in presence in wireless virtual reality networks,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 177–191, Jan. 2020.

- [8] J. Konec̆ný, H. B. McMahan, F. X. Yu, P. Richtárik, A. T. Suresh, and D. Bacon, “Federated learning: Strategies for improving communication efficiency,” arXiv:1610.05492v2 [cs.LG], Oct. 2016 [Online] Available: https://arxiv.org/abs/1610.05492

- [9] D. Alistarh, J. Li, R. Tomioka, and M. Vojnovic, “QSGD: Randomized quantization for communication-optimal stochastic gradient descent,” in Proc. Advances Neural Inf. Process. Syst. (NeurIPS), 2017, pp. 1709–-1720.

- [10] J. Bernstein, Y.-X. Wang, K. Azizzadenesheli, and A. Anandkumar, “signSGD: Compressed optimisation for non-convex problems,” in Proc. Int. Conf. Mach. Learn. (ICML), 2018, pp. 560–569.

- [11] S. Lee, C. Park, S.-N. Hong, Y. C. Eldar, and N. Lee, “Bayesian federated learning over wireless networks,” arXiv:2012.15486 [eess.SP], Dec. 2020 [Online] Available: https://arxiv.org/abs/2012.15486

- [12] N. Shlezinger, M. Chen, Y. C. Eldar, H. V. Poor, and S. Cui, “UVeQFed: Universal vector quantization for federated learning,” IEEE Trans. Signal Process., vol. 69, pp. 500–514, 2021.

- [13] Y. Du, S. Yang, and K. Huang, “High-dimensional stochastic gradient quantization for communication-efficient edge learning,” IEEE Trans. Signal Process., vol. 68, pp. 2128–2142, 2020.

- [14] A. F. Aji and K. Heafield, “Sparse communication for distributed gradient descent,” in Proc. Empirical Methods in Natural Language Process. (EMNLP), 2017.

- [15] J. Wangni, J. Wang, J. Liu, and T. Zhang, “Gradient sparsification for communication-efficient distributed optimization,” in Proc. Advances Neural Inf. Process. Syst. (NeurIPS), 2018, pp. 1299–-1309.

- [16] Y. Lin, S. Han, H. Mao, Y. Wang, and W. J. Dally, “Deep gradient compression: Reducing the communication bandwidth for distributed training,” in Proc. Int. Conf. Learn. Represent. (ICLR), 2018, pp. 1–13.

- [17] M. M. Amiri and D. Gündüz, “Machine learning at the wireless edge: Distributed stochastic gradient descent over-the-air,” IEEE Trans. Signal Process., vol. 68, pp. 2155–2169, Mar. 2020.

- [18] M. M. Amiri and D. Gündüz, “Federated learning over wireless fading channels,” IEEE Trans. Wireless Commun., vol. 19, no. 5, pp. 3546–3557, May 2020.

- [19] Y. Oh, N. Lee, Y.-S. Jeon, and H. V. Poor “Communication-efficient federated learning via quantized compressed sensing,” arXiv:2111.15071 [cs.DC], Dec. 2021 [Online] Available: https://arxiv.org/abs/2111.15071

- [20] Y. C. Eldar and G. Kutyniok, Compressed Sensing: Theory and Applications, Cambridge, U.K.: Cambridge University Press, 2012.

- [21] S. Foucart and H. Rauhut, A Mathematical Introduction to Compressive Sensing, Boston, MA: Birkhüuser, 2013.

- [22] K. Yang, T. Jiang, Y. Shi, and Z. Ding, “Federated learning via over-the-air computation,” IEEE Trans. Wireless Commun., vol. 19, no. 3, pp. 2022–2035, Mar. 2020.

- [23] M. M. Amiri, T. M. Duman, and D. Gündüz, “Collaborative machine learning at the wireless edge with blind transmitters,” in Proc. IEEE Global Conf. Sig. Inf. Process. (GlobalSIP), Nov. 2019, pp. 1–6.

- [24] D. Wen, G. Zhu, and K. Huang, “Reduced-dimension design of MIMO over-the-air computing for data aggregation in clustered IoT networks,” IEEE Trans. Wireless Commun., vol. 18, no. 11, pp. 5255–5268, Nov. 2019.

- [25] G. Zhu, Y. Wang, and K. Huang, “Broadband analog aggregation for low-latency federated edge learning,” IEEE Trans. Wireless Commun., vol. 19, no. 1, pp. 491–506, Jan. 2020.

- [26] T. T. Vu, D. T. Ngo, N. H. Tran, H. Q. Ngo, M. N. Dao, and R. H. Middleton, “Cell-free massive MIMO for wireless federated learning,” IEEE Trans. Wireless Commun., vol. 19, no. 10, pp. 6377–6392, Oct. 2020.

- [27] Y.-S. Jeon, M. M. Amiri, J. Li, and H. V. Poor “A compressive sensing approach for federated learning over massive MIMO communication systems,” IEEE Trans. Wireless Commun., vol. 20, no. 3, 1990–2004, Mar. 2021.

- [28] Z. Wang, J. Qiu, Y. Zhou, Y. Shi, L. Fu, W. Chen, and K. B. Letaief, “Federated Learning via Intelligent Reflecting Surface,” IEEE Trans. Wireless Commun., vol. 21, no. 2, pp. 808–822, Feb. 2022.

- [29] J. P. Vila and P. Schniter, “Expectation-maximization Gaussian-mixture approximate message passing,” IEEE Trans. Signal Process., vol. 61, no. 19, pp. 4658–4672, Oct. 2013.

- [30] Y. LeCun, C. Cortes, and C. Burges, “The MNIST database of handwritten digits,” [Online]. Available: http://yann.lecun.com/exdb/mnist/

- [31] A. Abdi, Y. M. Saidutta, and F. Fekri, “Analog compression and communication for federated learning over wireless MAC,” in Proc. IEEE Workshop Signal Process. Adv. Wirel. Commun. (SPAWC), May 2020, pp. 1–5.

- [32] Z. Y. Peng, “An iterative method for the least squares symmetric solution of the linear matrix equation AXB = C,” Appl. Math. Comput., vol. 170, no. 1, pp. 711–-723, 2005.

- [33] T. Wimalajeewa, Y. C. Eldar, and P. K. Varshney, “Recovery of sparse matrices via matrix sketching,” arXiv:1311.2448 [cs.NA], Nov. 2013 [Online] Available: http://arxiv.org/abs/1311.2448

- [34] D. Cohen, D. Cohen, Y. C. Eldar, and A. M. Haimovich, “SUMMeR: Sub-Nyquist MIMO radar,” IEEE Trans. Signal Process., vol. 66, no. 16, pp. 4315–4330, Aug. 2018.

- [35] M. M. Amiri, D. Gündüz, S. R. Kulkarni, and H. V. Poor, “Convergence of federated learning over a noisy downlink,” IEEE Trans. Wireless Commun., vol. 21, no. 3, pp. 1422–1437, Mar. 2022.

- [36] S. M. Kay, Fundamentals of Statistical Signal Processing: Estimation Theory, NJ: Prentice Hall, 1993.

- [37] Q. Guo and D. D. Huang, “A concise representation for the soft-in soft-out LMMSE detector,” IEEE Commun. Letts., vol. 15, no. 5, pp. 566–568, May 2011.

- [38] L. Liu, Y. Chi, C. Yuen, Y. L. Guan, and Y. Li, “Capacity-achieving MIMO-NOMA: Iterative LMMSE detection,” IEEE Trans. Signal Process., vol. 67, no. 7, pp. 1758–1773, Apr. 2019.

- [39] U. S. Kamilov, S. Rangan, A. K. Fletcher, and M. Unser, “Approximate message passing with consistent parameter estimation and applications to sparse learning,” IEEE Trans. Inf. Theory, vol. 60, no. 5, pp. 2969–2985, May 2014.

- [40] J. A. Tropp, “Greed is good: Algorithmic results for sparse approximation,” IEEE Trans. Inf. Theory, vol. 50, no. 10, pp. 2231–2242, Oct. 2004.

- [41] D. Guo and S. Verdú, “Randomly spread CDMA: Asymptotics via statistical physics,” IEEE Trans. Inf. Theory, vol. 51, no. 6, pp. 1983–2010, Jun. 2005.

- [42] M. Bayati and A. Montanari, “The dynamics of message passing on dense graphs with applications to compressed sensing,” IEEE Trans. Inf. Theory, vol. 57, no. 2, pp. 764–785, Feb. 2011.

- [43] S. Rangan, “Generalized approximate message passing for estimation with random linear mixing,” in Proc. IEEE Int. Symp. Inf. Theory (ISIT), Aug. 2011, pp. 2168–2172.