Communication-Efficient Consensus Mechanism for Federated Reinforcement Learning

Abstract

The paper considers independent reinforcement learning (IRL) for multi-agent decision-making process in the paradigm of federated learning (FL). We show that FL can clearly improve the policy performance of IRL in terms of training efficiency and stability. However, since the policy parameters are trained locally and aggregated iteratively through a central server in FL, frequent information exchange incurs a large amount of communication overheads. To reach a good balance between improving the model’s convergence performance and reducing the required communication and computation overheads, this paper proposes a system utility function and develops a consensus-based optimization scheme on top of the periodic averaging method, which introduces the consensus algorithm into FL for the exchange of a model’s local gradients. This paper also provides novel convergence guarantees for the developed method, and demonstrates its superior effectiveness and efficiency in improving the system utility value through theoretical analyses and numerical simulation results.

Index Terms:

Independent Reinforcement Learning, Federated Learning, Consensus Algorithm, Communication Overheads, Utility FunctionI Introduction

With the development of wireless communication and advanced machine learning technologies in the past few years, a large amount of data has been generated by smart devices and can enable a variety of multi-agent systems, such as smart road traffic control [1], smart home energy management [2], and the deployment of unmanned aerial vehicles (UAVs) [3]. Through deep reinforcement learning (DRL), an intelligent agent can gradually improve the performance of its parameterized policy via the trial-and-error interaction with the environment. Applying DRL directly to multi-agent systems commonly faces several challenging problems, such as the non-stationary learning environment and the difficulty of reward assignment [4]. As an alternative, independent reinforcement learning (IRL) is often employed in practical applications to alleviate the above-mentioned problems, where each agent undergoes an independent learning process with only self-related sensations [5].

For each IRL agent, the training samples are obtained by going through a trajectory with the predefined terminal state or a certain number of Markov state transitions from Markov decision processes (MDPs). With the obtained samples, an agent can calculate the policy gradients and improve its performance by updating its parameters along the gradient descent direction. Typically, performance of the trained policy is closely related to the amount and variety of obtained samples, since the more fully explored state space leads to more accurate estimation of the cumulative reward signal. On the other hand, federated learning (FL) is a parallelly distributed machine learning paradigm, aiming to train specific model through the samples distributed across different devices. In this paper, we model FL in IRL by indicating each device with an agent, and believe that FL can help to indirectly enrich the sample information of each IRL agent through the included gradients aggregation phase, thus improving the policy performance of agents.

Since agents in IRL are naturally distributed, the policy gradients are calculated locally and need to be shared among the multi-agent system through a coordination channel. In the paper, this coordination channel is achieved by deploying a central server, aiming to iteratively aggregate the policy gradients from agents and provide new parameters to update their policies. However, there may be a large number of agents or policy iterations during the training phase. This naive implementation of FL may cause excessive communication overheads between agents and the central server. Recent studies [6, 7] which try to combine distributed DRL with FL mostly focus on improving the involved agents’ capability and their collaboration efficiency, while these excessive communication overheads are ignored. In this paper, we introduce the periodic averaging method in FL to alleviate this problem [8], in which agents are allowed to perform several local updates to the model within a period before their local gradients are transmitted to the central server for averaging. The periodic averaging method can reduce the frequency of information exchange between agents and the central server, thus decreasing the corresponding communication overheads dramatically. However, an increase in the number of local updates becomes essential to guarantee the model’s convergence performance. Therefore, appropriate optimization methods should consider a better balance between reducing communication overheads and improving convergence performance.

To jointly take account of the model’s error convergence bound and the mainly required communication and computation overheads during the training phase, this paper proposes a system utility function and develops a consensus-based optimization scheme on top of the periodic averaging method. We introduce the consensus algorithm [9] into both IRL and FL, where agents are allowed to exchange their models’ local gradients with neighbors directly before performing each local update. The consensus algorithm has been applied in several decentralized averaging methods [10, 11], which normally disable the central server’s functionality but allow agents to average their models’ parameters directly with neighbors. These decentralized averaging methods will highlight their advantages in terms of the communication overheads when the cost of devices-to-devices is much less than that of devices-to-server. However, decentralized averaging methods are unable to take full advantage of FL, and will slow the convergence speed as the size of the network of agents increases. Therefore, in the consensus-based method, we combine FL with the consensus algorithm to guarantee the convergence performance. We also theoretically show that the consensus-based scheme can reduce the model’s error convergence bound dramatically. Different from the spectral radius of a matrix applied in theoretical analyses about the consensus algorithm in FL [12], this paper gives the model’s error convergence bound under the consensus-based method from the perspective of the algebraic connectivity of the graph comprised by agents and their connections, which can additionally reflect the effect of interaction metric (i.e., local interaction step size ) in the consensus algorithm on convergence results. Furthermore, through a multi-agent reinforcement learning (MARL) simulation scenario, we demonstrate the superiority of the developed method in terms of improving the convergence performance and system utility value.

The remainder of this paper is organized as follows. In Section II, we introduce the system model, formulate the optimization problem, and define a system utility function. In Section III, we describe the developed optimization method and analyze its error convergence bound. In Section IV, we introduce a MARL simulation scenario, and present the corresponding results of the developed method. In Section V, we conclude this paper with a summary.

II System Model and Problem Formulation

The framework of federated IRL (FIRL) is illustrated in Fig. 1. We assume that there are totally agents involved in this multi-agent distributed learning scenario. Each agent is designed to learn independently in the training phase, and operate autonomously in the execution phase via DRL. Similar to Dec-POMDP [13], we suppose that each agent can only observe a local state of the global environment, and an individual reward will be returned to the agent immediately after an action being performed. Within this scenario, the role of a conventional central server is played by a virtual agent, which can be deployed at a remote cloud center or some high-powered local agent. Meanwhile, to facilitate the multi-agent collaboration, we allow agents to exchange their local gradients with nearby collaborators through the device-to-device communication whenever needed.

We assume that each agent maintains a DRL model with parameterized policy , where is sampled from the local state space, is selected from the individual action space, is a stochastic policy, and , where indicates the dimension of parameters. According to stochastic policy gradient methods for the policy optimization process of DRL [14], the model’s parameters are updated by

| (1) |

where represents the index of policy iteration, is the learning rate with a reasonable step size, and represents the loss function that needs to be minimized. In this paper, on-policy policy gradient methods are used to improve agents’ policy performance by optimizing the loss function which can be defined by , and can be different expressions, such as the advantage function [15] used in this paper. In addition, according to the well-known experience replay mechanism in DRL [16], we define the sample used in the policy iteration as , where represents the time-stamp within an epoch, and is calculated by a reward function . In practical applications, a certain number of samples are picked out at each iteration to comprise a mini-batch [16]. Therefore, the practical gradients used for training are written as

| (2) |

where denotes the mini-batch at iteration , and indicates its corresponding size. Combining the periodic averaging method in FL, we can obtain

| (3) |

where is the mini-batch from agent , and indicates a predefined number of local updates in a period, which is specially designed for the agent with the fast training speed. The reason for this design is that this fast agent can collect the most samples in the learning environment, and thus has the greatest weight on the training model. To reduce the total training time, we also assume that only the agents which have finished at least one local update at the end of a period need to transmit their local gradients to the virtual agent, and set the maximal number of these involved agents as , where . In the rest of this paper, we will focus on these agents and use the notation to represent . Besides, the maximal length of an epoch and the total number of epochs for training are denoted as and , respectively. To facilitate the convergence speed of policy in DRL, an epoch is further uniformly divided into several steps, each of which generally contains a predefined number of sequential Markov state transitions. In particular, each step involves transitions at most as a mini-batch.

Considering the resources spent by agents in the training process of FIRL, we basically suppose that the main communication overheads required for an agent to transmit its model’s local gradients to the virtual agent are , while the main computation overheads to perform a local updating are . Then, the system’s resource cost can be formulated as

| (4) |

where , for . On the other hand, according to stochastic policy gradient methods, for any epoch, the optimization objective of policy iteration is expressed as

| (5) |

According to and the definition of , we accomplish the optimization objective in (5) by performing stochastic gradient descent (SGD) for the policy parameters . Next, we will replace the notation with in the following discussions. Furthermore, we can define an empirical risk function as

| (6) |

In practical applications, since the empirical risk function may be non-convex, the learning model’s convergence may fall into a local minimum or saddle point. In our framework, similar to [17, 18], the expected gradient norm is used as an indicator of the model’s convergence performance to guarantee that it falls into a stationary point

| (7) |

where represents the expected total number of policy iterations, and . denotes the error convergence bound and helps define a bounded value for a targeted optimal solution. denotes the vector norm. represents the model’s average parameters at iteration , which is also regarded as the final result at the end of each iteration. The model with is maintained by the virtual agent and updated periodically. Accordingly, we define as the model’s initial parameters for all agents and can obtain

| (8) |

where denotes the expected gradient norm of the initial model, which represents the initial convergence error and is gradually decreased by SGD. Finally, we can present the optimization objective of FIRL as

| (9) |

where is a positive constant to reflect the relative importance of convergence performance and resource cost. Hereinafter, we will use (9) as the targeted system’s utility function, for which the optimization progress can help achieve a good balance between improving the model’s convergence performance and reducing the required resource cost.

III Consensus in Periodic Averaging Method

In this paper, the model’s error convergence bound is inferred under the following general assumptions, which are similar to those presented in previous studies for distributed SGD [17, 18].

Assumption 1 (A1).

-

1.

(Smoothness): ;

-

2.

(Lower bounded): ;

-

3.

(Unbiased gradients): ;

-

4.

(Bounded variance): ,

where represents the Lipschitz constant, which implies that the empirical risk function is -smooth [19]. denotes the lower bound of , and we suppose that it can be reached when is large enough. In addition, and are both non-negative constants and are inversely proportional to the size of mini-batch [17]. Condition 3 and 4 in A1 on the bias and variance of the mini-batch gradients are customary for SGD methods [20]. Specifically, the variance of the mini-batch gradients is bounded by Condition 4 through the value that fluctuates with the exact gradients rather than through a fixed constant as in previous studies [8, 20], which thus sets up a looser restriction.

During the training phase of DRL, it is common to keep the learning rate as a proper constant and decay it only when the performance saturates. In the following discussions, we will investigate the model’s error convergence bound under a fixed learning rate. Since the original objective of the consensus algorithm is to make all the distributed nodes in an ad-hoc network reach a consensus, it can be used to reduce the variance of the mini-batch gradients from a cluster of agents. Therefore, we employ the consensus algorithm [9] to improve the local updating process of each agent. Next, we will use the notation to represent , where denotes the index of local interaction, and . To enable all the participating agents to reach a consensus successfully, we make the following assumption for the network of participating agents.

Assumption 2 (A2). The network of participating agents with topology is a strongly connected undirected graph.

Then, according to the consensus algorithm [9], we can obtain the following local interaction process of each agent

| (10) |

where represents the set of neighboring agents that are directly connected with agent in . denotes the local interaction step size, which plays a similar role as the learning rate in the local interaction process. And , where denotes the maximal degree of the graph and is defined by . Furthermore, we can present the update rule of the model’s parameters under the consensus-based method by

| (11) |

| (12) |

where , , and represents the index of the iteration, at which the virtual agent performs the latest periodic averaging before iteration . It can be observed that agents need to exchange their local gradients with nearby collaborators before performing the local updating, and these exchanged gradients are also used to update the model’s average parameters. Note that (11) and (12) also consider the delay of agents in obtaining local gradients, where equals to for some agents at the beginning of local interaction. Thus, although local interactions occur synchronously between neighboring agents, it is not necessary for an agent to wait for all the neighbors to complete the calculation of local gradients, so as to reduce the training time. Finally, we can obtain the following theorem

Theorem 1 (T1). Suppose the number of local updates for agent is , and the total number of iterations is large enough, which can be divided by . Under A1 and A2, if the learning rate satisfies

| (13) |

then the expected gradient norm after iterations is bounded by

| (14) | ||||

where represents the total number of local interactions before each local updating. denotes the Laplace matrix of the graph . denotes the second smallest eigenvalue of , which is also called algebraic connectivity [9].

Remarks: The corresponding proofs are presented in the Appendix. Note that we leave the Appendix in the Supplemental Material due to the space limitation111The Supplemental Material is available at https://www.rongpeng.info/files/sup_icc2022.pdf.. It can be observed that (13) indicates an upper bound for the value of learning rate . Moreover, the right side of (14) shows that the model’s error convergence bound (i.e., ) is determined by several key parameters. In particular, the convergence bound will decrease as the total number of iterations increases, and it can be also declined by the model’s better initial parameters . Taking into account the multi-agent parallel training paradigm, increasing the number of participating agents in the framework of FIRL can reduce the convergence bound, but this comes at the expense of more resource cost according to (4) and may lead to a reduction in the system’s utility value according to (9). Besides, it can be observed that although the implementation of the periodic averaging method can reduce the communication overheads by many times, it will enlarge the convergence bound and may be harmful to the system’s utility value. The part in square brackets of the third term on the right side of (14) describes the topological properties of the graph comprised by agents and their connections through the algebraic connectivity and the local interaction step size, instead of an upper bound on the spectral radius of a stochastic matrix for interaction [12]. In particular, since and the equality holds only when is a fully connected graph, we can find that . Therefore, it can be inferred that the implementation of local interactions can further reduce the convergence bound greatly. In addition, a larger step size or a more densely connected network of participating agents can also help reduce this bound. In practical applications, a small number of local interactions can make the model’s convergence bound decrease dramatically, such as .

On the other hand, considering the resource cost under the consensus-based method, we assume that the communication overheads required for an agent to exchange the mini-batch gradients with one of its neighbors are , and the computation overheads required to perform a local interaction are . Then the system’s resource cost can be updated from (4) as

| (15) |

where represents the size of . Here the possible collision or interference issues in actual communication are omitted. Compared with defined in (4), we can observe that the resource cost under the consensus-based method is increased, resulting from the additionally embedded local interactions. However, since the model’s convergence bound is reduced at the same time, the system’s utility value may be improved in certain cases. For example, suppose that the overheads required for participating agents to implement the device-to-device communication are much less than those to transmit the same messages to the remote virtual agent, then the effectiveness of the consensus-based method will be clearly demonstrated. In addition, the network of participating agents can be also set up as a sparse graph to decrease the number of local interactions between agents, so as to further reduce the total resource cost.

| Methods | Local Updates | Communication overheads | Computation overheads | Expected gradient norm |

|---|---|---|---|---|

| FIRL | 21000 | 21000 | 1.5590 | |

| FIRL | 2100 | 21000 | 6.3421 | |

| FIRL | 1400 | 21000 | 9.6069 | |

| FIRL | 1400 | 19000 | 10.1892 | |

| FIRL | 1400 | 16200 | 8.7182 | |

| FIRL | 1400 | 12600 | 7.6476 | |

| FIRL_C | , , | 2100 + 78000 | 21000 + 78000 | 3.9577 |

| FIRL_C | , , | 2100 + 96000 | 21000 + 96000 | 1.5560 |

| FIRL_C | , , | 2100 + 156000 | 21000 + 156000 | 2.6094 |

| FIRL_C | , , | 2100 + 78000 | 15600 + 78000 | 3.4070 |

IV Simulation Results

In this part, we talk about the simulation results after applying the consensus-based method. The simulation scenario is taken from [21], which is released as a new benchmark in traffic control through the implementation of DRL to create controllers for mixed-autonomy traffic. As illustrated in Fig. 2, the “Figure Eight” simulation scenario in this benchmark is selected in this paper to verify the effectiveness and efficiency of the developed method. Specifically, there are totally 14 vehicles running circularly along a one-way lane that resembles the shape of a figure “8”. An intersection is located at the lane, and each vehicle must adjust its acceleration to pass through this intersection in order to increase the average speed of the whole vehicle team. Note that slamming on the brakes will be forced on the vehicles that are about to crash, and the current epoch will be terminated once the collision occurs. Furthermore, the “Figure Eight” scenario is modified in this paper to assign the related local state of the global environment to each vehicle, including the position and speed of its own, the vehicle ahead and behind. Depending on its local state, each vehicle needs to optimize its acceleration, which is a normalized continuous variable between and . All the involved vehicles are the same, and unless additional controllers are assigned, these vehicles are controlled by the underlying simulation of urban mobility (SUMO) in the same mode, which is an open source with highly portable and widely used traffic simulation package [21]. In the “Figure Eight” scenario, half of the vehicles are assigned the DRL-based controllers. We take the normalized average speed of all vehicles at each iteration as the individual reward, which is assigned to each training vehicle after its action being performed. And unless otherwise specified, the DRL-based controllers are optimized through the proximal policy optimization (PPO) algorithm [22].

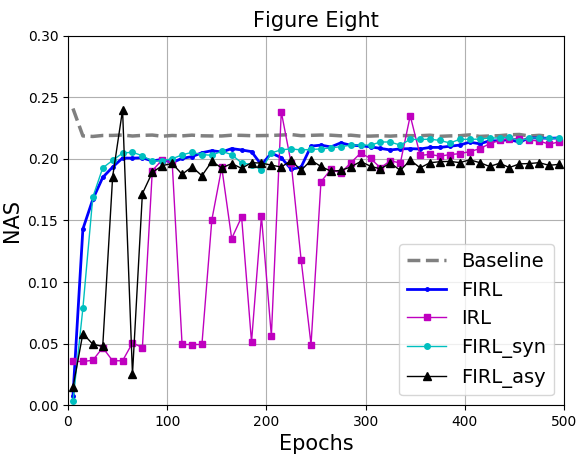

In Fig. 3, we present the simulation performance of naive IRL and FIRL (IRL with naive FL). Here, NAS denotes the normalized average speed of all vehicles during an entire testing epoch. The test is performed every 10 epochs and takes the average of five repetitions. We provide the performance of all vehicles controlled by the underlying SUMO as the optimal baseline. In Fig. 3, we show that FL can clearly improve the policy performance of IRL in terms of training efficiency and stability, while verify the necessity of this combination. We also test the effect of transmission delay in FL on the performance of IRL in the method FIRL_syn and FIRL_asy. Both methods introduce the delay of 2 epochs (i.e. 300 seconds) to the up-link and down-link between each agent and the virtual agent. However, agents in FIRL_syn can update their parameters every iteration, while agents in FIRL_asy can only update their parameters every 2 epochs, which means a worser communication. As indicated in Fig. 3, a smaller frequency of agents updating their parameters may decline the effectiveness of FL in IRL.

In Table I, we present a summarized comparison on the numerical simulation results to verify the effectiveness and efficiency of the developed method. In particular, we set , , , and . The expected gradient norm is calculated upon a predetermined sample set, which is comprised by samples that are uniformly collected during the learning model’s training process when . In addition, the expected gradient norm is calculated whenever the model’s average parameters (i.e., ) are updated, and its final value is averaged across the entire training process of the corresponding learning model. In Table I, we present the performance of FIRL with different local updates in a period. The notation “” denotes that the numbers of local updates from agents are uniformly distributed between and . We can observe a trend that the learning model’s error convergence bound will increase as the number of local updates increases, which is consistent with our previous discussions. In Table I, we also present the performance of FIRL under the consensus-based method (i.e. FIRL_C). On the premise of strong connectivity, the topology of agents’ network with is constructed by random connections from each agent to nearby collaborators, while these connections are increased to when . Note that there is only one connection between any pair of agents. We can observe that being consistent with the conclusions in T1, the error convergence bound of targeted model is decreased when the local interactions are considered, even when the delay in local updating is taken into account (i.e., in case ). In addition, the topology with either a larger (i.e., more connections) or more local interactions (i.e., greater ) generally obtains better training performance. Compared to FIRL with , FIRL_C with requires the same amount of resource cost in FL, and although there are more resources required in local interactions, its expected gradient norm is lower. Thus, the system utility value can be also declined when and are small in FIRL_C.

V Conclusions

This paper has taken advantage of the paradigm of FL to improve the policy performance of IRL agents. Meanwhile, considering the excessive communication overheads generated between agents and a central server in FL, this paper builds the framework of FIRL on the basis of the periodic averaging method. Moreover, to reach a good balance between reducing the system’s communication and computation overheads and improving the model’s convergence performance, a novel utility function has been proposed. Furthermore, to improve the system’s utility value, we have put forward a consensus-based optimization scheme on top of the periodic averaging method. By analyzing the theoretic convergence bounds and performing extensive simulations, both effectiveness and efficiency of the developed method have been verified.

For future works, we plan to implement the proposed optimization method in the real-world application scenarios. In practice, when there is a large number of participating agents in the system, multiple virtual central agents may exist simultaneously and their organization tends to be hierarchical. This is a more complex scenario and requires more careful considerations on the optimization methods. Besides, whether the common shared deep neural network can be applied to heterogeneous tasks faced by different agents remains an open question that is worth exploring.

References

- [1] A. Ata, M. A. Khan, S. Abbas, and M. S. Khan, “Adaptive IoT Empowered Smart Road Traffic Congestion Control System Using Supervised Machine Learning Algorithm,” The Computer Journal, 2020.

- [2] A. R. Al-Ali, I. A. Zualkernan, M. Rashid, and R. Gupta, “A smart home energy management system using IoT and big data analytics approach,” IEEE Trans. on Consumer Electronics, vol. 63, no. 4, pp. 426–434, 2017.

- [3] J. Schwarzrock, I. Zacarias, A. L. C. Bazzan, and L. H. Moreira, “Solving task allocation problem in multi Unmanned Aerial Vehicles systems using Swarm intelligence,” Engineering Applications of Artificial Intelligence, vol. 72, pp. 10–20, 2018.

- [4] K. Arulkumaran, M. P. Deisenroth, M. Brundage, and A. A. Bharath, “Deep Reinforcement Learning: A Brief Survey,” IEEE Signal Processing Magazine, vol. 34, no. 6, pp. 26–38, 2017.

- [5] M. Tan, Multi-Agent Reinforcement Learning: Independent vs. Cooperative Agents. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc., 1997, p. 487–494.

- [6] X. Xu, R. Li, Z. Zhao, and H. Zhang, “Stigmergic Independent Reinforcement Learning for Multi-Agent Collaboration,” November 2019, arXiv. [Online]. Available: https://arxiv.org/abs/1911.12504.

- [7] H. Cha, J. Park, H. Kim, M. Bennis, and S. L. Kim, “Proxy Experience Replay: Federated Distillation for Distributed Reinforcement Learning,” IEEE Intelligent Systems, vol. 35, no. 4, pp. 94–101, 2020.

- [8] H. Yu, S. Yang, and S. Zhu, “Parallel Restarted SGD with Faster Convergence and Less Communication: Demystifying Why Model Averaging Works for Deep Learning,” November 2018, arXiv. [Online]. Available: https://arxiv.org/abs/1807.06629v2.

- [9] R. Olfati-Saber, J. A. Fax, and R. M. Murray, “Consensus and Cooperation in Networked Multi-Agent Systems,” Proceedings of the IEEE, vol. 95, no. 1, pp. 215–233, 2007.

- [10] Z. Jiang, A. Balu, C. Hegde, and S. Sarkar, “Collaborative Deep Learning in Fixed Topology Networks,” in Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, California, USA, December 2017, p. 5906–5916.

- [11] X. Lian, W. Zhang, C. Zhang, and J. Liu, “Asynchronous Decentralized Parallel Stochastic Gradient Descent,” March 2018, arXiv. [Online]. Available: https://arxiv.org/abs/1710.06952.

- [12] S. Hosseinalipour, S. S. Azam, C. G. Brinton, and N. Michelusi, “Multi-Stage Hybrid Federated Learning over Large-Scale Wireless Fog Networks,” February 2020, arXiv. [Online]. Available: https://arxiv.org/abs/2007.09511v4.

- [13] S. Omidshafiei, J. Pazis, C. Amato, and J. P. How, “Deep Decentralized Multi-task Multi-Agent Reinforcement Learning under Partial Observability,” in Proceedings of the 34th International Conference on Machine Learning, vol. 70, Sydney, Australia, 2017, pp. 2681–2690.

- [14] J. Schulman, S. Levine, P. Abbeel, and M. Jordan, “Trust Region Policy Optimization,” in Proceedings of the 32nd International Conference on Machine Learning, vol. 37, Lille, France, 2015, pp. 1889–1897.

- [15] R. Sutton and A. Barto, “Reinforcement Learning: An Introduction,” IEEE Trans. on Neural Networks, vol. 9, no. 5, pp. 1054–1054, 1998.

- [16] M. Volodymyr, K. Koray, S. David, and A. A. Rusu, “Human-level control through deep reinforcement learning,” Nature, vol. 518, no. 7540, pp. 529–533, 2015.

- [17] J. Wang and G. Joshi, “Cooperative SGD: A unified Framework for the Design and Analysis of Communication-Efficient SGD Algorithms,” August 2018, arXiv. [Online]. Available: https://arxiv.org/abs/1808.07576.

- [18] L. Bottou, F. E. Curtis, and J. Nocedal, “Optimization Methods for Large-Scale Machine Learning,” SIAM Review, vol. 60, no. 2, pp. 223–311, 2018.

- [19] X. Zhou, “On the Fenchel Duality between Strong Convexity and Lipschitz Continuous Gradient,” March 2018, arXiv. [Online]. Available: https://arxiv.org/abs/1803.06573.

- [20] R. Hu, Y. Gong, and Y. Guo, “CPFed: Communication-Efficient and Privacy-Preserving Federated Learning,” March 2020, arXiv. [Online]. Available: https://arxiv.org/abs/2003.13761v1.

- [21] E. Vinitsky, A. Kreidieh, L. L. Flem, and N. Kheterpal, “Benchmarks for reinforcement learning in mixed-autonomy traffic,” in Proceedings of the 2nd Conference on Robot Learning, vol. 87, Zurich, Switzerland, 2018, pp. 399–409.

- [22] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal Policy Optimization Algorithms,” July 2017, arXiv. [Online]. Available: http://arxiv.org/abs/1707.06347.