Communication-efficient Byzantine-robust distributed learning with statistical guarantee

Abstract

Communication efficiency and robustness are two major issues in modern distributed learning framework. This is due to the practical situations where some computing nodes may have limited communication power or may behave adversarial behaviors. To address the two issues simultaneously, this paper develops two communication-efficient and robust distributed learning algorithms for convex problems. Our motivation is based on surrogate likelihood framework and the median and trimmed mean operations. Particularly, the proposed algorithms are provably robust against Byzantine failures, and also achieve optimal statistical rates for strong convex losses and convex (non-smooth) penalties. For typical statistical models such as generalized linear models, our results show that statistical errors dominate optimization errors in finite iterations. Simulated and real data experiments are conducted to demonstrate the numerical performance of our algorithms.

Key Words and Phrases: Distributed statistical learning; Byzantine failure; Communication efficiency; Surrogate likelihood; Proximal algorithm

1 Introduction

In many real-world applications, such as computer vision, natural language processing and recommendation systems, the exceedingly large size of data has made it impossible to store all of them on a single machine. Now, more and more data are stored locally in individual agents’ or users’ devices. Statistical analysis in modern era has to deal with the distributed storage data, which faces tremendous challenge on statistical method, computation and communication.

In several practical situations, smart-phone or remote devices with limited communication powers may sever as local nodes, or sometimes communication decay occurs from the constraint of network bandwidth (Konečnỳ et al., 2016). To address communication issue, communication efficiency-oriented algorithms for distributed optimization have been the focus of amounts of works in the past several years, for example, Zhang et al. (2013), Shamir et al. (2014), Wang et al. (2017a), Jordan et al. (2019) and among others. This literature has focused on data-parallel mode in which the overall dataset is partitioned and stored on worker machines that are processed independently. Among those existing distributed approaches, the divide and conquer may be the simplest strategy with a single communication round, where a master machine takes responsible to ultimately aggregate all local results computed independently at each local worker.

Although the divide and conquer strategy has been proved to achieve optimal estimation rates for parametric models and kernel methods (Zhang, Duchi and Wainwright, 2013, 2015), the global estimator based on the naive average may not inherent some useful structures from the model, such as sparsity. Moreover, a lower bound of the sample size assigned at the local nodes is required to attain the optimal statistical rates, that is, , where is the total sample size. This deviates from some practical scenarios where the dataset with the size is also too large to store at a single node/machine. In addition, existing numerical analysis (Jordan, Lee and Yang, 2019) have shown that the naive averaging often performs poorly for nonlinear models, and even its generalization performance is usually unreliable when the local sample sizes among workers differ significantly (Fan et al., 2019).

In the distributed learning literature for communication efficiency, most of existing works on distributed machine learning consist of two categories: 1) how to design communication efficient algorithms to reduce the round of communications among workers (Jordan, Lee and Yang, 2019; Konečnỳ, McMahan, Yu, Richtárik, Suresh and Bacon, 2016; Lee, Lin, Ma and Yang, 2017; Shamir, Srebro and Zhang, 2014); 2) how to choose a suitable (lossy) compression for broadcasting parameters (Wang, Wang and Srebro, 2017b). Notably, Jordan et al. (2019) and Wang et al. (2017a) independently propose a Communication-efficient Surrogate Likelihood (CSL) framework for solving regular M-estimation problems, which also works for high-dimensional penalized regression and Bayesian statistics. Under the master-worker architectures, CSL makes full use of the total information of the data over the master machine, while only merges the first-order gradients from all the workers. Specially, a quasi-newton optimization at the master is solved as the final estimator, instead of merely aggregating all the local estimators like one-shot methods. It has been shown in (Jordan, Lee and Yang, 2019; Wang, Kolar, Srebro and Zhang, 2017a) that CSL-based distributed learning can preserve sparsity structure and achieve optimal statistical estimation rates for convex problems in finite-step iterations.

Despite the generality and elegance of the CSL framework, it is not a wisdom that if it would be directly applied to Byzantine learning. In view that CSL aggregation rule heavily depends on the local gradients, the learning performance will be degraded significantly if these received gradients from local workers are highly noisy. In fact, Byzantine-failure is frequently encountered in distributed or federated learning (Yin et al., 2018). In a decentralized environment, some computing units may exhibit abnormal behavior due to crashes, stalled computation or unreliable communication channels. It is typically modeled as Byzantine failure, meaning that some worker machines may behave arbitrary and potentially adversarial behavior. Thus, it leads to the misleading learning process (Vempaty et al., 2013; Yang et al., 2019; Wu et al., 2019). Robustifying learning against Byzantine failures has attracted a great of attention in recent years.

To copy with Byzantine failures in distributed statistical learning, most resilient approaches in a few recent works tend to combine stochastic gradient descent (SGD) with different robust aggregation rules, such as geometric median (Minsker, 2015; Chen et al., 2017), median (Xie et al., 2018; Yin et al., 2018), trimmed mean (Yin et al., 2018), iterative filtering (Su and Xu, 2018) and Krum (Blanchard et al., 2017). These learning algorithms can tolerate a small number of devices attacked by Byzantine adversaries. Yin et al. (2018) developed two distributed learning algorithms that were provably robust against the Byzantine failures, and also these proposed algorithms can achieve optimal statistical error rates for strongly convex losses. Yet, the above works did not consider the communication cost issue and an inappropriate robust technique can result in increasing the number of communications.

In this paper, we develop two efficient distributed learning algorithms with both communication-efficiency and Byzantine-robustness, in pursuit of accurate statistical estimators. The proposed algorithms integral the framework of CSL for effective communication with two robust techniques, which will be described in Section 3. At each round, the 1st non-Byzantine machine needs to solve a regularized M-estimation problem on its local data. Other workers only need to compute the gradients on their individual data, and then send these local gradients to the 1st non-Byzantine machine. Once receiving these gradient values from the workers, the 1st non-Byzantine machine further aggregates them on basis of coordinate-wise median or coordinate-wise trimmed mean technique, so as to formulate a robust proxy of the global gradient.

In our communication-efficient and Byzantine-robust framework, our estimation error indicates that there exist several trade offs between statistical efficiency, computation efficiency and robustness. In particular, our algorithms attempt to guard against Byzantine failures meanwhile not sacrifice the quality of learning. Theoretically, we show the first algorithm achieves the following statistical error rates

where is the effective standard deviation for each machine with data points, is the bias effect (price) of Byzantine machines, is the averaging effect of normal machines, and is due to the dependence of the median on the skewness of the gradients. For strongly convex problems, Yin et al. (2018) proved that no algorithm can achieve an error lower than under regular conditions. Hence, this shows the optimality of our methods in some senses. As an natural extension of our first algorithm, our 2nd algorithm embeds the proximal algorithm (Parikh and Boyd, 2014) into the distributed procedure. They still perform well even under extremely mild conditions. Particularly, it is more suitable for solving very large scale or high-dimensional problems. In addition, algorithmic convergence can be proved under more mild conditions, without requiring good initialization or a large sample size on each worker machine.

The remainder of this paper is organized as follows. In Section 2, we introduce the problem setup and communication-efficient surrogate likelihood framework for the distributed learning. Section 3 proposes a Byzantine-robust CSL distributed learning algorithm and gives statistical guarantees under general conditions. Section 4 presents another Byzantine-robust CSL-proximal distributed learning algorithm and analyzes their theoretical properties. Section 5 provides simulated and real data examples that illustrate the numerical performance of our algorithms, and thus validate the theoretical results.

Notations. For any positive integer , we denote the set by for brevity. For a vector, the standard -norm and the -norm is written by and , respectively. For a matrix, the operator norm and the Frobenius norm is written by and , respectively. For a different function , denote its partial derivative (or sub-differential set) with respect to the -th argument by . Given a Euclidean space , and , define to be a closed ball with the center , where refers to the th component of . We assume that and is a convex and compact set with diameter . Let to be the set of Byzantine machines, . Without loss of generality, we assume that the 1st worker machine is normal and the other worker machine may be Byzantine. For matrices and , means is strictly positive. Given two sequences and , we denote if for some absolute positive constant , if for some absolute positive constant . Furthermore, we also use notations and to hide logarithmic factors in and respectively.

2 Problem Formulation

In this section, we formally describe the problems setup. We focus on a standard statistical learning problem of (regularized) empirical risk minimization (ERM). In a distributed setting, suppose that we have access to one master and worker machines, and each worker machine independently communicates to the master one; each machine contains data points; and of the worker machines are Byzantine for some proportional level and the remaining fraction of worker machines are normal. Byzantine works can send any arbitrary values to the master machine. In addition, Byzantine workers may completely know the learning algorithm and are allowed to collude with each other (Yin et al., 2018). In this setting, the total number of data points is .

Suppose that the observed data are sampled independently from an unknown probability distribution over some metric space . Let be a loss function of a parameter associated with the data point . To measure the population loss of , we define the expected risk by . Theoretically, the true data-generating parameter we care about is a global minimizer of the population risk,

It is known that negative log-likelihood functions are viewed as typical examples of the loss function , for example, the Gaussian distribution corresponds to the least square loss, while the Bernoulli distribution for the logistic loss. Given that all the available samples are stored on machines, the empirical loss of the th machine is given as , where is the index set of samples over the th machine with for all , and for any . In this paper, we are mainly concerned with learning via minimizing the regularized empirical risk

| (2.1) |

where and is a deterministic penalty function and independent of sample points, such as the square -norm in ridge estimation, the -norm in the Lasso penalty (Tibshirani, 1996).

In the ideal non-Byzantine failure situation, one of core goals in the distributed framework is to develop efficient distributed algorithms to approximate well. As a leading work in the literature, Jordan et al. (2019) and Wang et al. (2017a) independently proposed an efficient distributed approach via the quasi-likelihood estimation. We now introduce the formulation of this method. Without loss of generality, we take the first machine as our master one. An initial estimator in the 1st machine is broadcasted to all other machines, which compute their individual gradients at . Then each gradient vector is communicated back to the 1st machine. This constitutes one round of communication with a communication cost of . At the -th iteration, the 1st machine calculates the following regularized surrogate loss

| (2.2) |

Next, the (approximate) minimizer without any aggregation operation is communicated to all the local machines, which is used to compute the local gradients, and then iterates as (2.2) until convergence.

Different from any first-order distributed optimization, the refined objective (2.2) leverages both global first-order information and local higher-order information (Wang et al., 2017a). The idea of using such an adaptive enhanced function also has been developed in Shamir et al. (2014) and Fan et al. (2019).

Throughout the paper, we assume that and are convex in , and is twice continuously differentiable in . We allow to be non-smooth, for example, the -penalty ().

From (2.2), we observe that this update for interested parameters strongly depends upon local gradients at any iteration. Hence, the standard learning algorithm only based on average aggregation of the workers’ messages would be arbitrarily skewed if some of local workers are Byzantine-faulty machines. To address this robust-related problem, we develop two Byzantine-robust distributed learning algorithms given in next two sections.

3 Byzantine-robust CSL distributed learning

In this section, we introduce our first communication-efficient Byzantine-robust distributed learning algorithm based on the CSL framework, and particularly introduce two robust operations to handle the Byzantine failures. After giving some technical assumptions, we present optimization error and statistical analysis of multi-step estimators. In the end of this section, we further clarify our results by a concrete example of generalized linear models (GLMs).

3.1 Byzantine-robust CSL distributed algorithm

When the Byzantine failures occur, the aggregation rule (2.2) will be sensitive to the bad gradient values. More precisely, although the master machine communicates with the worker machines via some predefined protocol, the Byzantine machines do not have to obey this protocol and may send arbitrary messages to the master machine. At this time, the gradients received by the master machine are not always reliable, since the information from Byzantine machine may be completely out of its local data. To state it clearly, we assume that the Byzantine workers can provide arbitrary values written by the symbol “” to the master machine. In this situation, several robust operations should be implemented to substitute the simply average of local gradients as in (2.2).

Inspired by robust techniques developed recently in Yin et al. (2018), we apply for the coordinate-wise median and coordinate-wise trimmed mean to formulate our Byzantine-robust CSL distributed learning algorithm.

Definition 3.1

(Coordinate-wise median) For vectors , , the coordinate-wise median is a vector with its -th coordinate being for each , where is the usual (one-dimensional) median.

Definition 3.2

(Coordinate-wise trimmed mean) For and vectors , , the coordinate-wise -trimmed mean is a vector with its -th coordinate being for each . Here is a subset of obtained by removing the largest and small fraction of its elements.

See Algorithm 1 below for details, and we call it Algorithm BCSL. In each parallel iteration of Algorithm 1, the 1st machine (the normal master machine) broadcasts the current model parameter to all worker machines. The normal worker machines calculate their own gradients of loss functions based on their local data and then send them to the 1st machine. Considering that the Byzantine machines may send any messages due to their abnormal or adversarial behavior, we implement the coordinate-wise median or trimmed mean operation for these received gradients at the 1st machine. Then the aggregation algorithm in (3.1) is conducted to update the global parameter.

| (3.1) |

In order to provide an optimization error and statistical error of Algorithm 1, we need to introduce some basic conditions for our theoretical analysis.

Assumption 3.1

(Lipschitz Conditions and Smoothness). For any and , the partial derivative with respect to its first component is -Lipschitz.The loss function itself is -smooth in sense that its gradient vector is -Lipschitz continuous under the -norm. Let . Further assume that the population loss function is -smooth.

For Option I in Algorithm 1: median-based algorithm, some moment conditions of the gradient of is introduced to control stochastic behaviors.

Assumption 3.2

There exist two constants and , for any and all , such that

(i) (Bounded variance of gradient). .

(ii) (Bounded skewness of gradient). . Here refers to the coordinate-wise skewness of vector-valued random variables.

Assumption 3.2 is standard in the literature and is satisfied in many learning problems. See Proposition 1 in Yin et al. (2018) for a specific linear regression problem.

Assumption 3.3

(Strong convexity). has a unique minimizer , and is -strongly convex in for some and .

Assumption 3.4

(Homogeneity) for and .

In most existing studies, it is usually assumed that the population risk is smooth and strongly convex. The empirical risk also enjoys such good properties, as long as are i.i.d. and the total sample size is sufficiently large relative to (Fan et al., 2019). From (3.1) in Algorithm 1, we know the local data at the 1st machine are used to optimize. So we need to control the gap between and to contract optimization rate of the algorithm. The similarity between and is depicted by Assumption 3.4. Indeed, the empirical risk should not be too far away from their population risk as long as the sample size of the 1st machine is not too small. Mei et al. (2018) showed it holds with reasonably small and large with high probability under general conditions. Specially, a large implies a small homogeneity index . Obviously, Assumption 3.4 always holds when taking .

The following theorem establishes the global convergence of the proposed algorithm BCSL, involving a trade off between the optimization error and statistical error.

Theorem 3.1

Assume that Assumptions 3.1-3.4 hold, the iterates produced by Option I in Algorithm 1 with for , , and the fraction of Byzantine machines satisfies

| (3.2) |

for some . Then, with probability at least , we have

where

Here and , where being the inverse of the cumulative distribution function of the standard Gaussian distribution .

Theorem 3.1 shows the linear convergence of , which depends explicitly on the homogeneity index , the strong convex index , and the fraction of Byzantine machines . The result is viewed as an extension of that in Jordan et al. (2019) and Fan et al. (2019). A significant difference from theirs is that, we allow the initial estimator to be inaccurate, and with high probability we have more explicit rates of convergence on optimization errors under the Byzantine failures.

Specially, the factor in Theorem 3.1 is a function of , for example, if setting . After running for Algorithm 1, with high probability, we have

| (3.3) |

Notice that . Theorem 3.1 guarantees that after parallel iterating , with high probability we can obtain a solution with an error

In this case, the derived rate of the statistical error between and the centralized empirical risk minimizer is of the order up to some constants, alternatively, up to the logarithmic factor. Note that

Intuitively, the above error rate is a near optimal rate that one should target, as is the effective standard deviation for each machine with data points, is the bias effect of Byzantine machines, is the averaging effect of normal machines, and is the effect of the dependence of median on skewness of the gradients. If , then is the order-optimal rate (Yin et al., 2018). When (no Byzantine machine), one sees the usual scaling with the global sample size; when (some machines are Byzantine), their influence remains bounded and is proportional to . So we do not sacrifice the quality of learning to guard against Byzantine failures, provided that the Byzentine failure proportion satisfies .

Remark also that, our results for convex problems follow for any finite-bounded of the initial radius.

We next turn to an analysis for Option II in Algorithm 1: The robust distributed learning based on coordinate-wise trimmed mean. Compared to Option I, a stronger assumption on the tail behavior of the partial derivatives of the loss functions is needed as follows.

Assumption 3.5

(Sub-exponential gradients). For all and , the partial derivative of with respect to the -th coordinate of , , is -sub-exponential.

The sub-exponential assumption implies that all the moments of the derivatives are bounded. Hence, this condition is stronger a little than the bounded absolute skewness (Assumption 3.2(ii)). Fortunately, Assumption 3.5 can be satisfied in some learning problems, and see Proposition 2 in Yin et al. (2018).

Theorem 3.2

Theorem 3.2 also shows the linear convergence of , which depends explicitly on the homogeneity index , the strong convex index , and the trimmed mean index with choosing the index to satisfy , where is a fraction of Byzantine machines. Note that, the hyperparameter in Assumption (3.5) only affect the statistical error appearing in .

Similar to (3.3), we also have

after -step iterations. By running parallel computations, we can obtain a solution satisfying , since the term can be reduced to be .

It should be pointed out that, the trimmed mean index is strictly controlled by the fraction of Byzantine machines , that is . By choosing with , we still achieve the optimization error rate , which is also order-optimal in the statistical literature.

We now take comparable analysis for the above two Byzantine-robust CSL distributed learning in Algorithm 1 (Options I and II). The trimmed-mean-based algorithm (Option II) has an order-optimal optimization error rate . By contrast, the median-based algorithm (Option I) has the rate involving an additional term , and thus the optimality is achieved only for . Note that Option I algorithm needs milder moment conditions (bounded skewness) on the tail of the loss derivatives than the Option II algorithm (sub-exponentiality). In other words, this provides a profound insight into the underlying relation between the tail decay of the loss derivatives and the block number of local machines ().

On the other hand, Algorithm 1 based on Option II has an additional parameter such that , which requires that the fraction of Byzantine machines is absolutely dominated by the normal ones for guaranteeing robustness. In contrast to this, Algorithm 1 based on Option I has a weaker restriction on .

3.2 Specific Example: Generalized linear models

We now unpack Theorems 3.1 and 3.2 in generalized linear models, taking into consideration the effects of iterations in the proposed estimator, the roles of the initial estimator and the Byzantine failures. We will find an explicit rate of convergence of in Assumption 3.4 in the setting of generalized linear models. Theorem 3.1 and 3.2 guarantee that after running parallel iterations, with high probability we achieve an optimization error with a linear rate. Moreover, through finite steps, the optimization errors are eventually negligible in comparison with the statistical errors. We will give a specific analysis below.

For GLMs, the loss function is the negative partial log-likelihood of an exponential-type variable of the response given any input feature . Suppose that the i.i.d. pairs are drawn from a generalized linear model. Recall that the conditional density of given takes the form

where is some known convex function, and is a known function such that is a valid probability density function. The loss function corresponding to the negative log likelihood of the whole data is given by with

| (3.4) |

We further impose the following standard regularity technical conditions.

Assumption 3.6

(i) There exist universal positive constants such that , where .

(ii) are i.i.d. sub-Gaussian random vectors with bounded .

(iii) are i.i.d. random vectors, and each component is -sub-exponential.

(iv) For all , and are both bounded.

(v) is bounded.

Assumption 3.7

is -strongly convex in , where for some universal constants , and .

Assumptions (3.6)(i)(ii)(iv)(v)-(3.7) have been proposed by Fan et al. (2019) to establish the rate of optimization errors for a distributed statistical inference. In our paper, these assumptions mainly are used to obtain asymptotic properties of in Option I algorithm. For establishing the rate of optimization errors of Option II algorithm, we need Assumption (3.6) (iii) (sub-exponentiality) instead of Assumption (3.6)(ii) (sub-Gaussian), which is similar to Theorem 3.2.

From (3.4), we easily obtain

By Lemma A.5 in Fan et al. (2019), we immediately get

as long as for a given positive constant . So, can be chosen with high probability. From Theorems 3.1 and 3.2, we have the contraction factor with . The explicit parameter is comparable to the condition number in Jordan et al. (2019), where finite and were imposed.

Equipped with these above facts, we have the following corollary.

Theorem 3.3

Theorem 3.4

Theorems 3.3 and 3.4 clearly present how Algorithm 1 depend on structural parameters of the problem. When is bounded, , through finite steps, can be much smaller than . Thus,

| (3.5) |

So, By contrast to that results in Jordan et al. (2019), we allow an inaccurate initial value and give more explicit rates of optimization error even when diverges.

We know that the statistical error of the estimator can be controlled by the optimization error of and statistical error of , that is,

| (3.6) |

Note that the first term is not a deterministic optimization error, it holds in probability. Therefore, it is an optimization error in a statistical sense. We call it statistical optimization error. The second term is of order under mild conditions, which has been well studied in statistics. Thus, the statistical error of is controlled by the magnitude of the first term. If we adopt two-step iteration, and when is obtained in 1st machine, one gets

by (3.5)-(3.6), where is defined in (3.5), provided that for Option I

or for Option II

It implies that Algorithm 1 has the order-optimal statistical error rate, for Option I which needs .

4 Byzantine-robust CSL-proximal distributed learning

For Algorithm 1, we establish the contraction rates of optimization errors and statistical analysis under sufficiently strong convexity of and small discrepancy between and . It requires the data points of each machine to be large enough, and even the required data size of each machine depends on structural parameters, which may not be realistic in practice. The coordinate-wise median and coordinate-wise trimmed mean are proposed in algorithm 1, which is robust for the Byzantine failure, but it is unstable in the optimization process of the 1st worker machine even for moderate . In the section, we propose another Byzantine-robust CSL algorithm via embedding the proximal algorithm. See Rockafellar (1976) and Parikh and Boyd (2014) for proximal algorithm.

4.1 Byzantine-robust CSL-proximal distributed algorithm

First, recall that the proximal operator is defined by

By the proximal operator of the function with , the proximal algorithm for minimizing iteratively computes

starting from some initial value . Rockafellar (1976) showed the converges linearly to some .

For our problem (2.1), the proximal iteration algorithm is

In our setting, we adopt the distributed learning, and optimization is mainly on the 1st worker machine. The is a penalty function, which is used to the optimization step, and keep the local data of the 1st worker machine in . So, the penalty function in our proximal algorithm becomes . Further, the optimization (3.1) in Algorithm 1 is replaced by

| (4.1) |

where is the gradient information at from the other worker machines. This optimization (4.1) can make the Byzantine-robust CSL-proximal distributed learning converges rapidly. See the following Algorithm 2. We call it Algorithm BCSLp.

| (4.2) |

The above Algorithm 2 is a communication-efficient Byzantine-robust accurate statistical learning, which adopts coordinate-wise median and coordinate-wise trimmed mean cope with Byzantine fails, and use the proximal algorithm as the backbone. In each iteration, it has one round of communication and one optimization step similar to Algorithm 1. It is regularized version of Algorithm 1 by adding a strict convex quadratic term in the objective function. The technique has been used in the distributed stochastic optimization such as accelerating first-order algorithm (Lee et al., 2017) and regularizing sizes of updates (Wang et al., 2017b), and in the communication-efficient accurate distributed statistical estimation (Fan et al., 2019).

Now, we give contraction guarantees for Algorithm 2.

Theorem 4.1

Theorem 4.2

Theorems 4.1 and 4.2 present the linear convergence of Algorithm 2 Options I and II, respectively. Obviously, the contraction factor consists of two parts: which comes from the error of the inexact proximal update , and which comes from the residual of proximal point . Remarking similar to Theorems 3.1 and 3.2, within a finite -step , we have for Option I, which is a order-optimal if ; and for Option II, which also is a order optimal. These results just need that are convex and smooth while the penalty is allowed to be non-smooth, for example, norm. However, Most distributed statistical learning algorithms are only designed for smooth problems, and don’t consider Byzantine problems, for instance, Shamir et al. (2014), Wang et al. (2017a), Jordan et al. (2019), and so on.

In Theorems 3.1 and 3.2, we require the homogeneity assumption between and . That is, we need they must be similar enough. By the law of large numbers, the sample size of the 1st worker machine must to be large. From Theorems 4.1 and 4.2, we see that such a condition is no longer needed, as long as the condition . The condition holds definitely by choosing sufficiently large regularity . Therefore, Algorithm 2 needs a weaker homogeneous hypothesis than Algorithm 1. After running a finite step parallel iterations, with high probability we can obtain the error (Option I) or (Option II). Furthermore, the choice of a large can accelerate its contraction. At this time, has little effect on the contraction factor. This is an important aspect of Algorithm 2 contribution.

The following corollary gives the choice of that makes Algorithm 2 converge.

Corollary 4.1

(i) if , then with high probability,

From Corollary 4.1, we can choose

as a default choice for Algorithm 2 to make the algorithm converge naturally. And then we achieve the order-optimal error rate after running a finite parallel iterations; They are for Option I, which is a order-optimal if , and for Option II. From Corollary 4.1(ii), we see that with a regularizer up to , the contraction fact is essentially the same as the case of the unregularized problem (). It also tell us how large can be chose so that the contraction factor is the same order as Algorithm 1. Also see Theorems 3.1 and 3.2.

4.2 Statistical analysis for general models

In the subsection, we consider the case of generalized linear models as in Subsection 3.2. In Algorithm 2, is a regularization parameter which is very important for adapting to the different scenarios of . That is, by specifying the correct order of the , Algorithm 2 can solve the dilemma of small local sample size , while enjoying all the characteristics of Algorithm 1 in the large- local data.

Theorem 4.3

Under the assumptions of Theorem 3.3 or 3.4, except that with high probability for some . Let and . For any , there exists , after parallel iterations,

(i) if and , then the algorithms have linear convergence

For Theorem 4.3(i), we assume that , which is reasonable especially for many big data situations. Theorem 4.3(ii) shows that by taking , Algorithm 2 inherits all the advantages of Algorithm 1 in the large regime, one of them is fast linear contraction with the rate . In practice, it is difficult for us to determine whether the sample size is sufficiently large, but Algorithm 2 always guarantees the convergence via proper choice of . And Theorem 4.3(ii) guarantees that after running parallel iterations, with high probability we can obtain a solution with error . Similar to the discussion to Theorems 3.3 and 3.4, we have

where is defined in (3.5). It means that for Option I, which is a order-optimal if , and for Option II, which also is a order-optimal.

As mentioned, the distributed contraction rates depend strongly on the conditional number , even for generalized linear models. Here, we give another specific case of distributed linear regression on loss, and then obtain the strong results under some specific conditions. We define a loss on the th worker machine as

where and ; and

where and .

We present the following assumptions.

Assumption 4.1

(i) and . The minimum eigenvalue is bounded away from zero.

(ii) and where and are constants.

(iii) are i.i.d. sub-Gaussian random vectors with bounded .

(iv) are i.i.d. random vectors, and each component is -sub-exponential.

Theorem 4.4

Assume that there exist positive constants such that (1) and or (2) , and is bound away from zero. In addition,

Note that if is large enough, then

by choosing based on the weak requirement for regularization (, see Condition (1) in Theorem 4.4); We know that most distributed learning algorithms do not work even without the Byzantine failure if is not very large. But we still guarantee linear convergence with the rate

by choosing (see Condition (2) in Theorem 4.4). Further, in most scenarios, which implies . Therefore, we choose

as a universal and adaptive choice for Algorithm 2 regardless of the size of . Thus, Theorem 4.4 shows that no matter the size of , proper regularization always obtains linear convergence. Hence, we can handle the distributed learning problems where the amount of data per worker machine is not large enough. This situation is difficult for some algorithms (Zhang et al. (2013), Jordan et al. (2019)). We still achieve an order-optimal error rate up to logarithmic factors for two options of Algorithm 2 no matter in the large sample regime or in the general regime.

Another benefit of the Algorithm 2 is that the contraction factor in Theorem 4.4 does not depend on the condition number at all, and has hardly any effect on the optimal statistical rate of the Algorithm 2. Therefore, it helps relax the commonly used boundedness assumption on the condition number in Zhang et al. (2013), Jordan et al. (2019) among others. Also see the remark in Theorem 3.3 of Fan et al. (2019). But their algorithms can not handle the distributed learning of Byzantine-failure.

5 Numerical experiments

5.1 Simulation experiments

In the subsection, we present several simulated examples to illustrate the performance of our algorithms BCLS and BCLSp, which are developed in Sections 3 and 4.

First, we conduct our numerical algorithms using the distributed logistic regression. In the logistic regression model, all the observations for all are generated independently from the model

| (5.1) |

where with . In our simulation, we keep the total sample size and the dimension fixed; the covariate vector is independently generated from with ; for each replicate of the simulation, is a random vector with whose direction is chosen uniformly at random from the sphere.

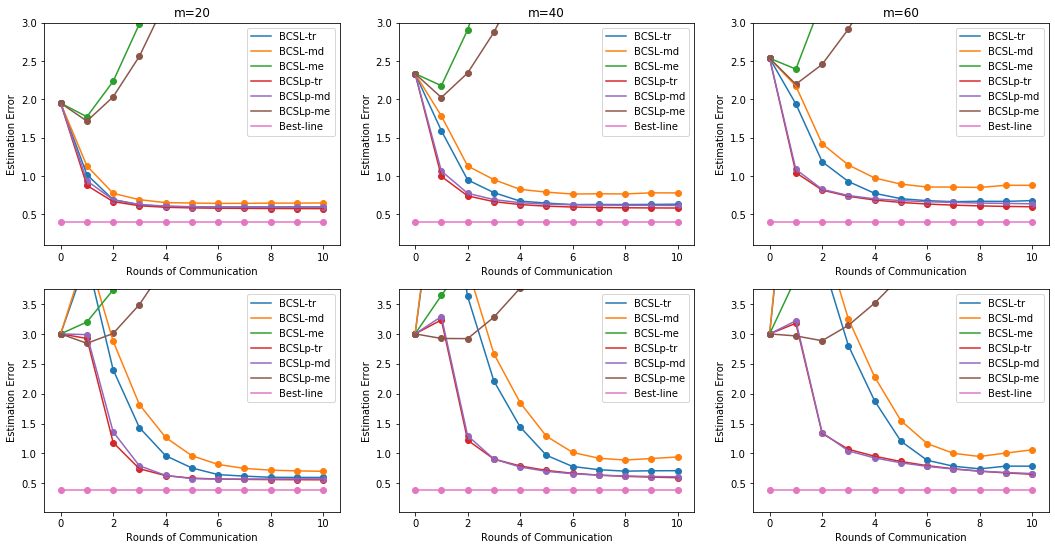

In the distributed learning, we split the whole dataset into each worker machine. According to the regimes “large ”, “moderate ” and “small ”, we set the local sample size and the number of machines as , and respectively. For our BCLS and BCLSp algorithms, we need to simulate the Byzantine failures. The worker machines are randomly chosen to be Byzantine, and one of the rest work machines as the 1st worker machine. In the experiments, we set . In the coordinate-wise trimmed mean, set . For evaluating the effect of initialization on the convergence, we respectively take , and which is the local estimator based on the data of the 1st machine. They are referred to “zero initialization” and “good initialization”, respectively.

We implement Algorithms BCLS and BCLSp based on median, trimmed mean and mean for aggregating all local gradients, which are called BCLS-md, BCLS-tr, BCLS-me, BCLSp-md, BCLSp-tr and BCLSp-me, respectively. In the first experiment, we choose . The optimizations are carried out by mini batch stochastic gradient descent in the above algorithms. The estimation error is used to measure the performance of these different algorithms based on the 50 simulation replications.

Figure 1 shows how the estimation errors evolve with iterations for the case of fixed. We find that our algorithms (BCLS-tr, BCLS-md, BCLSp-tr and BCLSp-md) converge reapidly in all scenarios, but the mean methods (BCLS-me and BCLSp-me) do not converge at all. Our algorithms almost converge after 2 iterations and do not require good initialization, which are in line with our theoretical results. This implies that our algorithms are robust against Byzantine failures, but the mean methods can’t tolerate such failures. In addition, our proposed Algorithm BCLSp is more robust and stable than Algorithm BCLS, especially for large . Note that large implies the more Byzantine worker machines. The embedding of the proximal technique in Algorithm BCLSp adds strict convex quadratic regularization, which leads to better performance.

Next, we use the distributed logistic regression model (5.1) with sparsity. The experiment is used to validate the efficiency of our algorithms in the presence of a nonsmooth penalty. In the simulation, we still set the total sample size , but the dimensionality of is fixed to ; the covariate vector is i.i.d. and with . The -norm of is constrained to 3. We choose the penalty function with so that the nonzeros of can be recovers accurately by the regularized maximum likelihood estimation over the whole dataset. As in the first experiment, we set , and , , , (“zero initialization”) and (“good initialization”). The is the local estimator based on the dataset of the 1st machine. In the Algorithm BCLSp, the penalty parameters are selected appropriately for the cases of “good initialization” and “zero initialization”, respectively. The optimizations are carried out by mini batch stochastic sub-gradient descent in the algorithms. All the results are average values of 20 independent runs.

Figure 2 presents the performance of our algorithms and the mean aggregate method (BCLS-me and BCLSp-me). With proper regularization, our algorithms still work well whether the initial value is “good” or “bad”. The mean aggregate methods (BCLS-me and BCLSp-me) fail to converge. For this nonsmooth problem, Algorithms BCLSp-tr and BCLSp-md are more robust than Algorithms BCLS-tr and BCLS-md, and start to converge after just 2 rounds of communication, specially for the case of “good initialization”.

Here, we summarize the above simulations to highlight several outstanding advantages of our algorithms.

(1) Our proposed Byzantine-Robust CSL distributed learning algorithm (BCLS-md, BCLSp-tr) and Byzantine-Robust CSL-proximal distributed learning algorithm (BCLSp-md and BCLSp-tr) can indeed defend against Byzantine failures.

(2) Our proposed algorithms converge rapidly, usually with several rounds of communication, and do not require good initialization; these are consistent with our statistical theory.

(3) The Algorithms BCLSp-md and BCLSp-tr are more robust than Algorithms BCLS-md and BCLS-tr, due to add strict convex quadratic regularization by embedding proximal technique into Algorithm BCLSp.

5.2 Real data

In the subsection, we further assess the performance of our proposed algorithms by a real data example. We choose Spambase dataset from the UC Irvine Machine Learning Repository (Dua and Graff, 2017). The collection of Span e-mails in Spambase dataset come from their postmaster and individuals who had filed spam, and the collection of non-spam e-mails came from filed work and personal e-mails. Number of instances (total sample) is 4600, and Number of Attributes (features) is 57 based on their word frequencies and other characteristics. The goal is to use distributed logistic regression to construct a personalized spam filter that distinguishes spam emails from normal ones. In the experiment, randomly selecting 1000 instances as the testing set and the rest of 3600 instances as the training set; split the training set to each worker machine according to (“small ”), (“moderate ”) and (“large ”), respectively; set for the fraction of Byzantine worker machines and for the BCLS-tr and BCLSp-tr algorithms. We use classification errors on the test set as the evaluation criteria.

Figure 3 shows the average performance of the 6 algorithms mentioned in Subsection 5.1. We find that the testing errors of our algorithms are very low. It implies our algorithms can accurately filter spam and non spam, even for more Byzantine worker machines (“large ”). But the filters based on Algorithms BCLS-me and BCLSp-me fail. These results are consistent with the ones of simulation experiments. Totally, the experiments on the real data also support our theoretical findings.

Acknowledgments

This work is partially supported by Chinese National Social Science Fund (No. 19BTJ034).

References

- Blanchard et al. (2017) Blanchard P, El Mhamdi EM, Guerraoui R, Stainer J. Machine learning with adversaries: Byzantine tolerant gradient descent. Proceedings of NIPS 2017;:118–28.

- Chen et al. (2017) Chen Y, Su L, Xu J. Distributed statistical machine learning in adversarial settings: Byzantine gradient descent. Proc ACM Meas Anal Comput Syst 2017;1:1–25.

- Dua and Graff (2017) Dua D, Graff C. Uci machine learning repository 2017;URL: https://archive.ics.uci.edu/ml/datasets/Spambase.

- Fan et al. (2019) Fan J, Guo Y, Wang K. Communication-efficient accurate statistical estimation. arXiv preprint 2019;arXiv:1906.04870.

- Jordan et al. (2019) Jordan MI, Lee JD, Yang Y. Communication-efficient distributed statistical inference. Journal of the American Statistical Association 2019;114(526):668–81.

- Konečnỳ et al. (2016) Konečnỳ J, McMahan HB, Yu FX, Richtárik P, Suresh AT, Bacon D. Federated learning: Strategies for improving communication efficiency. arXiv preprint 2016;arXiv:1610.05492.

- Lee et al. (2017) Lee JD, Lin Q, Ma T, Yang T. Distributed stochastic variance reduced gradient methods by sampling extra data with replacement. Journal of Machine Learning Research 2017;18:4404–46.

- Mei et al. (2018) Mei S, Bai Y, Montanari A. The landscape of empirical risk for nonconvex losses. The Annals of Statistics 2018;46:2747–74.

- Minsker (2015) Minsker S. Geometric median and robust estimation in banach spaces. Bernoulli 2015;21(4):2308–35.

- Nesterov (2004) Nesterov Y. Introductory Letures on Convex Optimization: A Basic Course. New York: Springer Science and Business Media, 2004.

- Parikh and Boyd (2014) Parikh N, Boyd S. Proximal algorithms. Foundations and Trends® in Optimization 2014;1:127–239.

- Rockafellar (1976) Rockafellar RT. Monotone operators and the proximal point algorithm. SIAM Journal on control and optimization 1976;14:877–98.

- Shamir et al. (2014) Shamir O, Srebro N, Zhang T. Communication efficient distributed optimization using an approximate newton-type method. Proceedings of the 31st International Conference on Machine Learning 2014;:1000–8.

- Su and Xu (2018) Su L, Xu J. Securing distributed machine learning in high dimensions. arxiv Preprint 2018;arXiv:1804.10140.

- Tibshirani (1996) Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society Series B (Methodological) 1996;58(1):267–88.

- Vempaty et al. (2013) Vempaty A, Tong L, Varshney PK. Distributed inference with byzantine data: State-of-the-art review on data falsification attacks. IEEE Signal Processing Magazine 2013;30(5):65–75.

- Wang et al. (2017a) Wang J, Kolar M, Srebro N, Zhang T. Efficient distributed learning with sparsity. Proceedings of Machine Learning Research, PMLR 2017a;70:3636–45.

- Wang et al. (2017b) Wang J, Wang W, Srebro N. Memory and communication efficient distributed stochastic optimization with minibatch prox. Proceedings of the 2017 Conference on Learning Theory, PMLR 2017b;65:1882–919.

- Wu et al. (2019) Wu Z, Ling Q, Chen T, Giannakis GB. Federated variance-reduced stochastic gradient descent with robustness to byzantine attacks. arXiv Preprint 2019;arXiv:1912.12716v1.

- Xie et al. (2018) Xie C, Koyejo O, Gupta I. Generalized byzantine-tolerant sgd. arXiv Preprint 2018;arXiv:1802.10116.

- Yang et al. (2019) Yang Z, Gang A, Bajwa WU. Adversary-resilient inference and machine learning: From distributed to decentralized. arXiv Preprint 2019;arXiv:1908.08649.

- Yin et al. (2018) Yin D, Chen Y, Ramchandran K, Bartlett P. Byzantine-robust distributed learning: towards optimal statistical rates. Proceedings of the 35th International Conference on Machine Learning, PMLR 2018;80:5650–9.

- Zhang et al. (2015) Zhang Y, Duchi J, Wainwright M. Divide and conquer kernel ridge regression: A distributed algorithm with minimax optimal rates. The Journal of Machine Learning Research 2015;16:3299–340.

- Zhang et al. (2013) Zhang Y, Duchi JC, Wainwright MJ. Communication-efficient algorithms for statistical optimization. Journal of Machine Learning Research 2013;14:3321–63.

Appendix A

A.1 Proof of Theorems 3.1 and 3.2

Proof of Theorem 3.1: Define

Obviously, . By Theorem 8 in Yin et al. (2018) and the law of large numbers, for , we have

| (A.1) | |||||

Therefore, , that is, is a asymptotic fixed point of . For the fixed , by the first order condition of , we have that

| (A.2) |

Further,

| (A.3) |

by using the fact .

By the Taylor expansion, Assumption 3.4 and Theorem 8 in Yin et al. (2018), with probability at least , we have

| (A.4) | |||||

If we know in advance, then

Here the 1st inequality follows from the -strong convex in of and (A.2)-(A.3); the 2nd step uses the Cauchy-Schwarz inequality. Then, we obtain the desired result (A.5). Suppose on the contrary that . We use reduction to absurdity. Define . Obviously, . By the strong convexity of in and (A.2)-(A.3) again,

| (A.6) |

Notice that

Thus, by the convexity of and (A.2), we always have

| (A.7) | |||||

for any . Summing up the (A.6), and by (A.7) and Cauchy-Schwarz inequality, one gets

Thus, , which contradicts (A.4). Therefore, we must have only . And then (A.5) holds. Together with (A.4), with probability at least , we have

Take , then . We complete the proof of Theorem 3.1.

A.2 Proof of Theorems 3.3 and 3.4

A.3 Proofs of Theorems 4.1, 4.2 and Corollary 4.1

Proof of Theorem 4.1: Under the conditions of Theorem 4.1, if , then (4.3) holds. Then Theorem 4.1 can directly follow from the result and induction. Below we prove the result.

Recall that . Denote . By the triangle inequality,

| (A.8) |

For the first term on the right of (A.8), , we can obtain its contracting optimization errors by Theorem 3.1, taking in Algorithm 1 as . Thus, . Together with (4.2), is regarded as the first iterate of Algorithm 1 initialized at for obtaining . Here, is similar to in Algorithm 1. Contrasting the assumptions of Theorem 3.1, we still need the following assertions:

(i) ;

(ii) is -strongly convex in ;

(iii) , and .

Let . Notice that

| (A.9) |

By the well-known “firm non-expansiveness” property of the proximal operation (Parikh and Boyd, 2014), we have

| (A.10) |

From (A.9)-(A.10), we have . So, the condition implies . Assumptions 3.3 and 3.4 imply (ii) and (iii) hold, respectively. In the other hand,

that is,

Further,

| (A.11) |

which leads to . Therefore, (i) holds.

Based the above assertions and by , by Theorem 3.1, we have

| (A.12) |

From (A.8), (A.11) and (A.12), we have

| (A.13) | |||||

where .

Here, we will prove

| (A.14) |

which makes (A.13) valid. Indeed, on the hand, ; on the other hand, and imply that and . Because is -strongly convex in and the basic properties of strong convex functions (Nesterov, 2004), we have

Thus, we get (A.14).

For in (A.14), and is an increasing function on . Notice that implies . Therefore,

Then, by (A.14) and ,

Combining with (A.13), we complete the proof of Theorem 4.1.

A.4 Proofs of Theorems 4.3 and 4.4

Proof of Theorem 4.3: The proof is implied by combining proof of Corollary 4.1 with Lemma A.5 in Fan et al. (2019), which provides the order of Hessian difference on the 1st worker machine in the GLM. Further, it presents a contraction rate and a choice of .

Proof of Theorem 4.4: We only prove (a). Proof of (b) is similar to (a) by applying Bernstein’s inequality for sub-exponential random variables. Let .

First, we have the following basic facts:

(i) for some constant , which is determined by , under the condition (1) and or (2) .

(ii) for some constant in (i).

(iii) for some constants and .

By the fact (i), we can appropriately choose , such that with high probability . Then

(iv) , for any .

These facts can found in Lemmas A.6-A.8 of Fan et al. (2019).

Now we give the main proof. From (4.4), the law of large numbers and Theorem 8 in Yin et al. (2018), we have

Further, one gets

Together with (iv), we have

| (A.15) |

From (i)-(iii), we have , and simultaneously with high probability, where and are some positive constants. So, with high probability, we have

| (A.16) |

| (A.17) | |||||

In addition, from (ii), we have , and then . Therefore,

Thus,

| (A.18) |

We complete the proof (a).