Collaborative Optimization of Wireless Communication and Computing Resource Allocation based on Multi-Agent Federated Weighting Deep Reinforcement Learning

Abstract

As artificial intelligence (AI)-enabled wireless communication systems continue their evolution, distributed learning has gained widespread attention for its ability to offer enhanced data privacy protection, improved resource utilization, and enhanced fault tolerance within wireless communication applications. Federated learning further enhances the ability of resource coordination and model generalization across nodes based on the above foundation, enabling the realization of an AI-driven communication and computing integrated wireless network. This paper proposes a novel wireless communication system to cater to a personalized service needs of both privacy-sensitive and privacy-insensitive users. We design the system based on based on multi-agent federated weighting deep reinforcement learning (MAFWDRL). The system, while fulfilling service requirements for users, facilitates real-time optimization of local communication resources allocation and concurrent decision-making concerning computing resources. Additionally, exploration noise is incorporated to enhance the exploration process of off-policy deep reinforcement learning (DRL) for wireless channels. Federated weighting (FedWgt) effectively compensates for heterogeneous differences in channel status between communication nodes. Extensive simulation experiments demonstrate that the proposed scheme outperforms baseline methods significantly in terms of throughput, calculation latency, and energy consumption improvement.

Index Terms:

AI-enabled wireless communication systems, distributed learning, multi-agent federated weighting deep reinforcement learning (MAFWDRL), privacy protectionI Introduction

According to relevant studies [1, 2, 3, 4], the current number of wireless communication access networks is significantly and continuously growing. A large number of wireless devices will be connected to these networks, resulting in massive data transmission and task computation requirements. The traditional cloud computing paradigm is not suitable for latency-sensitive applications and fails to meet the wireless communication latency-sensitive demands of future network systems. To address this issue, researchers have introduced mobile edge computing (MEC) into the field of wireless communication. Internet of things (IoT) devices can offload data and computational tasks to edge servers. This could have several advantages to the future wireless systems: 1) Processing computational tasks at edge devices or edge servers reduces round-trip data transmission time, which is crucial for latency-sensitive communication scenarios like autonomous driving, virtual reality (VR), and augmented reality (AR). 2) Edge computing nodes can locally process computational tasks, which reduce server load and time delay for data transmission from terminal devices to server. 3) Edge computing can decentralize computation loads, thereby lowering the probability of network congestion. 4) Deploying computational resources at the edge allows for local task computation, thereby reducing security risks associated with long distance transmitting privacy-sensitive data.

In addition, considering the distributed deployment characteristics of the future wireless network, if the centralized learning method is still adopted in the future network, this will cause the algorithm strategy to be unable to expand to large-scale network systems, and cannot cope with increasingly complex wireless network communication needs. Therefore, in order to meet the requirements of future distributed communication scenarios, research on network optimization for distributed communication problems is essential. In a distributed mobile communication scenario, effectively managing computing tasks and communication resources is a complex issue that needs to jointly consider computing task priorities, resource allocation, and scheduling strategies to achieve efficient computing and communication services. Tang et al. [5] considered deployment of a deep learning model (deep learning, DL) to optimize the problem that is difficult to complete local training and upload weights to the edge server in time, which effectively reduces the computing delay. Zhao et al. [6] introduced federated learning (FL) method into edge computing, and the performance impact of data distribution differences in FL was explored, and a wireless data sharing resource optimization method was also designed. For privacy-sensitive and privacy-insensitive user equipments (UEs) in distributed networks, Huang et al. [7] adjusted an FL strategy to optimize computing resources allocation and reduce computing delay. Guo et al. [8] considered the multi-agent deep reinforcement learning (MADRL) method in terms of communication optimization based on distributed learning, and the channel access problem is optimized based on the centralized training and distributed execution (centralized training with decentralized execution, CTDE) architecture.

However, when multiple nodes are involved in computing and communication tasks, it is necessary to consider the issue of coordinating data updates and synchronization among nodes to ensure the accuracy and consistency of calculations. In addition, task computing and communication will also affect each other. When the computing task delay is too long, the waiting time for communication will increase, and vice versa, which will directly degrade the overall performance of the system. In addition, privacy and security issues are also important and difficult challenges in wireless communication systems [9]. Each node may face security risks such as tampering, eavesdropping, and unauthorized access during data transmission. For privacy-sensitive UEs with security risks, effective security measures must be taken to protect the confidentiality and integrity of the data.

Given the above problems, this paper proposes an optimization method of multi-agent federated deep reinforcement learning to solve the joint optimization problem of computing and communication resource allocation and simultaneously to ensure the privacy security of user communication. Our main contributions are as follows:

-

•

We develop an intelligent distributed computing and channel resource allocation method, using the MADRL method to train the local channel state, considering the dynamic optimization of computing and communication resources allocation of each node. The ultimate goal is to solve the joint non-convex optimization problem and coordinate computing and communication resource allocation to achieve optimal system performance.

-

•

We propose a new network framework MAFWDRL, which integrates FedWgt and MADRL. This framework takes into account state heterogeneity among training nodes and further improves distributed machine learning model performance. In addition, we design a novel exploration noise function to fully explore the wireless channel status and further optimize the network model of the communication system.

-

•

Considering privacy and security issues of wireless communication systems, we develop a variety of model training and selection methods for privacy-sensitive and privacy-insensitive UEs. Distributed computing and execution strategies are adopted when dealing with privacy-sensitive UEs, and privacy-sensitive UEs only consider performing computing tasks locally. UEs that are not sensitive to privacy can consider distributed or centralized computing and distributed execution strategies, and tasks can be sent back to the server for computing processing. This personalized service strategy can maximize the application efficiency of the intelligent model based on ensuring the needs of UEs.

The rest of the paper is organized as follows: Section II describes the details of the system model. Section III presents the proposed algorithm. Section IV reports and discusses the simulation results. Finally, Section V presents conclusions and future work.

II System Model and Problem Formulation

We consider a typical wireless communication network serving different classes of devices, as shown in Fig. 1, including privacy-sensitive and privacy-insensitive UEs. Each wireless communication device works in a non-unique communication standard (to simulate the diversity of communication devices in reality). Both the UEs and BS of the communication system are equipped with a neural network (NN) to learn and optimize the communication and computing resource allocation. The UEs (actor networks) perform their own state collection and action determination, and the BS (actor and critic networks) perform task calculation, state collection, and evaluation of the actor value, to achieve the purpose of adaptively adjusting the operating parameters of the communication system.

II-A Channel Propagation Model

To accurately simulate real and complex wireless communication scenarios, we consider the Log Distance Path Loss (LDPL) model [10] for our system. This model takes into account the random shadowing effects caused by obstacles such as hills, trees, and buildings, in different environments, to capture a broader range of propagation losses. Therefore, the path loss model between the BS and the -th UE in the -th time slot can be formulated as follows:

| (1) |

| (2) |

where called close-in reference distance, is obtained by using Friis path loss equation. The variable exists in the path loss equation to account for the effective aperture of the receiving antenna, which is an indicator of the antenna’s ability to collect power. represents field measurements distance, that specifically denotes a value of 1m. is the path loss exponent (PLE) that depends on the type of environment. is a zero-mean Gaussian distributed random variable with standard deviation expressed in dB, used only when there is a shadowing effect. Then, the signal-to-noise ratio (SNR) received by the -th UE can be expressed as follows:

| (3) |

where represents transmission power, the noise power spectral density is denoted as , and the -th UE bandwidth is denoted as . Consequently, the achievable rate of the distributed system during the -th time slot can be expressed as:

| (4) |

The system takes into account the broadband transmission demands of the future wireless network and investigates the enhancements of MAFWDRL in terms of multi-parameter adjustment performance. Consequently, we consider SU-MIMO in the wireless communication system. Herein, the BS is equipped with antennas, while the -th UE possesses antennas. During the -th observation slot , the channel transmission model of the -th UE transmitting data can be represented as follows:

| (9) | ||||

| (18) |

where represents the received data of the -th spatial stream, while denotes the vector employed to calculate the sum of . The channel matrix associated with the -th UE is symbolically represented as . Furthermore, we consider that the amount of data in the transmission queue within the -th time slot is denoted as , while the received data is denoted as . represents the total data volume of the -th user during the -th time slot, the reality of throughput can be formally expressed as follows:

| (19) |

Therefore, the actual throughput of overall system during the period can be expressed as:

| (20) |

However, the inherent instability of wireless systems caused by factors such as system congestion, access contention, and channel state fluctuations, the actual system throughput and Shannon limit within the -th time slot have the following constraints, which are denoted as:

| (21) |

II-B Computing Energy Consumption Model

In the context of AI-enabled future wireless communication systems, it is crucial to establish an AI framework that covers user needs and can intelligently optimize communication and computing resource allocation. To accomplish this, we introduce a binary indicator known as . Here, denotes the privacy-insensitive user category, while corresponds to the privacy-sensitive user category. It is imperative to address privacy and security concerns during the design phase of this model. Consequently, the computing architecture model, rooted in privacy and security considerations within this system, primarily focuses on adapting the following modules.

1) Privacy-insensitive computing model: For , the user type is categorized as privacy-insensitive. Consequently, as there are no privacy security concerns for this type of user, they have an option to select either a semi-centralized or decentralized training mode based on the current distributed learning paradigm. In this mode, the computing model takes into account the energy consumption associated with local actor NN training, server-side critic NN training energy consumption, task calculation energy consumption, as well as the influence of uploading and offloading training parameters on system performance. Therefore, we establish the initial CPU frequency of the -th local UE as , define the data size of each returned gradient vector (or environment observation space) in the UE as , and quantify the local computing task as . In line with the network training process, we denote the number of sampling samples for the -th round of batch gradient descent as , the number of cycles required to process 1 bit of data as , and the computed value of floating point operations per second (FLOPS) in a single cycle as . Consequently, the energy consumption [7] of the actor NN local calculation energy consumption in the -th time slot is expressed as:

| (22) |

| (23) |

| (24) |

where is the CPU effective switched capacitance. In addition, the energy consumption [11] of the actor NN training model is typically denoted as:

| (25) |

| (26) |

Furthermore, the system must also consider the energy consumption associated with computing by the critic NN on the server, which is specifically expressed as:

| (27) |

| (28) |

Similarly, the training energy overhead of the critic NN can be expressed as:

| (29) |

| (30) |

where the size of each channel state sample, representing the environmental observation space in -th UE, is defined as . We denote the number of sampling samples for the -th round of batch gradient descent as . Additionally, computational tasks are quantified as , while the CPU frequency of the server is denoted as . Furthermore, we represent the number of cycles required to process 1-bit data as .

2) Privacy-sensitive computing model: When the type , the user type is categorized as privacy-sensitive. Privacy-sensitive users must carefully consider data security concerns, thus need to deploy NNs locally and the adoption of a decentralized distributed training architecture. This training architecture primarily addresses the computational energy consumption of the local NN network, as well as the performance implications of uploading and downloading NN models on the system. Then the energy consumption in the -th time slot of NN local computing is expressed as:

| (31) |

| (32) |

| (33) |

The size of each return gradient in -th agent, which belongs to an NN, is defined as . Furthermore, represents the size of the channel state in the wireless system. In terms of the network training process, represents the number of sampling samples utilized for batch gradient descent in the -th round. Additionally, denotes the number of cycles required to process 1-bit. The local NN model training energy consumption is denoted as :

| (34) |

| (35) |

Clearly, based on the aforementioned computational model, the computation cost of the entire distributed system, which includes both privacy-sensitive and non-sensitive users, within the framework of the distributed learning architecture during the -th time slot, can be expressed as:

| (36) |

where refers to the energy consumption of the -th time slot in the overall distributed wireless system.

II-C Problem Formulation

Without loss of generality, to realize the MAFWDRL application, this paper constructs a wireless communication environment following Wi-Fi standards using the NS3-simulator. It is worth mentioning that the actions and states of individual agents can be adjusted as necessary to suit Non-Wi-Fi standard wireless scenarios. The objective of this paper is to optimize communication and computing resource allocation in scenarios with varying degrees of communication sensitivity, aiming to minimize the dispersion of task weights by federated learning in the wireless system, minimize federated energy consumption or calculation latency, and maximize system throughput. The objective function is denoted as . The communication network environment is influenced by factors such as aggregation frame length, contention window, and CPU frequency. In this environment, we define the decision variables as follows: ,,. The optimization problem of the system for the -th training round is formulated as:

| (37a) | |||

| (37b) | |||

| (37c) | |||

| (37d) | |||

| (37e) | |||

| (37f) | |||

| (37g) |

where denotes system throughput in -th time slot, and calculation energy consumption is denoted as . Constraints (24a) and (24b) define the limits on CPU frequency for STAs and AP, while constraints (24c) and (24d) specify the limits on CW and aggregate frame length in the action space for channel status. Additionally, constraint (24e) presents the maximum queue length limit for channel transmission. Constraints (24f) and (24g), respectively, guarantee the maximum computation delay for privacy-insensitive and privacy-sensitive tasks, thereby ensuring timely system responsiveness and preventing task failures or performance degradation caused by exceeding the expected computation time.

We consider that the wireless system is dynamic and intricate, leading to unpredictable communication conditions. To guarantee the robustness of the system, it is crucial to ensure its adaptability. Evidently, the interdependence among the variables , , and renders the objective function as a non-convex optimization problem for the wireless system. Consequently, we employ the MAFWDRL method to acquire the optimal action strategy for the system, thereby enabling the objective function to obtain an optimal action at any given point in time.

III resource allocation and energy consumption optimization based on MAFWDRL

In this section, we present the MAFWDRL algorithm, which combines FedWgt and MADRL to address the non-convex optimization problem in wireless systems. The algorithm is intelligently designed for wireless systems, as illustrated in Fig. 2. Furthermore, we examine the impact of privacy issues on the algorithm architecture, discuss the advantage of the FedWgt strategy, and design the explore noise function for MADRL.

III-A Federated Weighting Design

The discrepancy in channel states between different UEs is observed in wireless communication systems, which are influenced by multiple factors, including noise, interference, communication distance, and resource allocation. The performance of FL is affected by the data state distribution (IID and Non-IID) [12, 13, 14]. Based on this, we propose to assess the quality of channels by defining an abstract reward for the system, .

We consider the communication states between each training round to be mutually independent. The channel state for the -th agent during the training process is denoted as , and the probability of the state occurring is denoted as . The local reward of -th agent in the wireless system is . The state of the global channel characteristic is denoted as , and the probability of the state occurring is . Consequently, the global state reward in the wireless system is . Therefore, the distributed learning expected reward for the -th agent under the current channel state can be expressed as:

| (38) |

where is the amount of data to be transmitted. Similarly, in this case, if centralized learning is adopted, the expected reward that should be pursued by the -th agent is denoted as:

| (39) |

where denotes the aggregate data volume intended for global transmission, and represents the total number of UEs. The deviation between local and global states is likely to result in a decrease in model accuracy as a consequence of NN model parameter . Within each training round, the impact of this factor on the accuracy of the neural network model can be expressed as follows:

| (40) |

| (41) |

where represents the model gradient of the current training round, and is 1-norm. Based on the corollary in [6], the difference between the local and global system states can be captured by establishing the correlation between federation weight and loss accuracy. Consequently, based on reference [6, Corollary 1], the model accuracy loss caused by the discrepancy of channel states can be refined as follows:

| (42) |

where , is the learning rate, and are the derivable constraint coefficients, representing the Lipschitz and smooth assumptions respectively. Modifying the weight parameter can alleviate the discrepancy between the local and global states, which can reduce the upper bound of the model accuracy loss . Hence, to mitigate the impact of this discrepancy, we define the weight of FedWgt as follows [6, eq.(17)]:

| (43) |

where is the FedWgt coefficient.

III-B Exploration Noise Design for Off-policy DRL

Introducing exploration noise is an effective approach to address the limited exploration capability in off-policy DRL. During the learning process of NN, our objective is to encourage extensive exploration of the environment by the early-stage network, enabling the collection of a diverse range of environmental states. With the exploration progressing, the scale of noise decreases at an accelerated rate. As the later-stage NN network approaches convergence, the noise level tends to a lower bound for the exploration. Thus, it is essential to design a noise function that ensures the rate of change increases gradually. This strategy facilitates reasonable exploration and aligns better with the NN learning characteristics. As a result, several conditions need to be met in the design of the noise function .

1) For a function defined on set , if for any points , , and on the function where hold, then the condition

| (44) |

is always satisfied.

2) The noise decay curve defined on set is monotonically decreasing and the rate of decrease is monotonically increasing. Within the domain of , it exhibits a concave function with gradually increasing and monotonically decreasing rate of change, expressed as:

| (45) |

III-C MAFWDRL algorithm design for distributed wireless system

From a mathematical perspective, a multi-agent task can be defined as a decentralized partially observable Markov decision process (Dec-POMDP) [15, 16, 17], where each agent independently selects actions to react to the environment ultimately. The Markov game of agents can be defined as the set of states , including the set of action spaces , and the set of observation spaces , where each agent selects actions based on the policy . In addition, this paper considers the issue of local training discrepancies between , and , and introduces FedWgt to integrate the distributed NN models. Thus, the multi-agent policy can be adjusted as follows: , where . The state transition equation for the entire environment can be represented as: . At this point, the mapping between actions and states of the -th agent under the current conditions can be directly characterized by rewards: , where . The goal of each agent is to maximize long-term expected returns, i.e., , where is the discount factor, and is a complete wireless task period in the wireless system. The Dec-POMDP problem in this paper, specifically in the context of distributed wireless systems, can be represented as a tuple .

1) Action Space : In this paper, the optimization of MAC and CPU parameters to optimize the access and computation efficiency of the wireless channel is considered. The optimization is performed using an NN model, which takes into account the learned channel feature and the state of the task volume . As a result, the action of agent at slot is defined as , where represents the contention window, and refers to the aggregation of frame length. Additionally, and denote the CPU computing frequency of the server and clients, respectively.

2) Observation Space : The observation space in the time slot is focused on by each agent in order to capture the changing environmental states within the system. This observation space enables the direct reflection of the communication state information of the wireless system. The information is primarily composed of the following three parts: 1) SNR of each agent is considered (ignore the interference). The SNR is influenced by factors such as user movement and environmental noise, thereby allowing for the intuitive depiction of the system’s communication quality. 2) The packet loss rate of the system is examined. With the number of ACKs received by the device associated with agent ( ) and the number of packets sent by agent ( ) within the time slot , the packet loss rate can be calculated as . This indicator provides insights into the extent of data loss during transmission. 3) The percentage of idle time is taken into account. The proportion of data transmission time during the TXOP period by agent ( ), denoted as , is monitored. Utilizing the formula allows for the estimation of the proportion of idle time during transmission for agent . Then, the local state of agent at slot is denoted as:

| (46) |

At the present moment, the observation space of all agents is represented as . Furthermore, the global state information, in the context of the off-policy, can be denoted as , where represents the action space of all agents.

3) Reward : The optimization objective function is defined as , where is strongly related to the reward, serving as a positive indicator for evaluating network performance. Furthermore, when the system exceeds the defined maximum permissible computing latency threshold, a penalty must be imposed against the DRL model. This penalty is realized by adjusting the reward to a negative value (i.e., ). Such an adjustment aims to instruct the DRL model in recognizing and quantifying the adverse effects that incorrect actions can have on the system’s performance. Consequently, it is inferred that a larger delay signifies a greater deviation of the network from the correct direction. When prioritizing energy consumption, the system demands lower energy consumption within the computing delay limit to achieve an improved overall system reward. In addition, in order to eliminate the impact of numerical deviations of , and on the DRL model as much as possible, we use the arctangent normalization function (i.e., ) to normalize the reward . Given these considerations, the rewards for wireless systems are defined as follows:

| (47) |

where , , and represent the throughput, computing latency, and computing energy consumption of the -th STA, respectively.

The objective of our distributed wireless system is to optimize the throughput and calculation latency or calculation energy consumption of the overall system. If the actions are entirely independent, certain constraints will arise that impact the system as a whole. Taking these considerations into account, we propose a system optimization framework based on the MAFWDRL method for wireless systems with privacy-sensitive and privacy-insensitive requirements. We consider soft MADRL under the off-policy mechanism as the core optimization algorithm. Our algorithm, as shown in Algorithm 1, consists of three main components: 1) Privacy-sensitive users learn actor NN and critic NN based solely on local information. 2) Privacy-insensitive users, having no concerns about privacy issues, would benefit more from prioritizing the semi-centralized training mode to enhance system performance. 3) A FedWgt learning approach is employed to optimize the global NN model.

1) Fully decentralized MADRL algorithm for privacy-sensitive: Due to data security constraints and the privacy concerns of sensitive users (represented by the set , where the identifier ), a fully decentralized soft MADRL optimization algorithm is considered more suitable for communication applications. In this context, the Critic network parameters of agents are denoted as , and the policy network parameter as . The action is governed by the policy function . The updated formula for the training of each critic is as follows:

| (48) |

| (49) |

where represents the -th time slot, represents mini-batch of samples, and the target network parameter denoted as , is subject to updates according to the following equation:

| (50) |

where represents the learning coefficient. Similarly, the updated formula based on Q-value of agent for the training of each actor is as follows:

| (51) |

where is the policy gradient of the NN model for sensitive users.

2) Semi-centralized MADRL algorithm for privacy-insensitive: For privacy-insensitive users , with the identifier , regardless of data security constraints, sufficient state information can be provided for Critic training. Thus, a model framework featuring centralized training and distributed execution is more conducive to the learning of the system environment by the privacy-insensitive users (i.e., semi-centralized MADRL). The observation space of the -th privacy-insensitive agent, denoted as , is represented as . The action of each agent is denoted as , and the policy function follows . Therefore, the centralized action-value function can be expressed as .The updated formula for the training of centralized critic NN is as follows:

| (52) |

| (53) |

The training update formula, within the decentralized architecture, can be expressed as follows:

| (54) |

where is the policy gradient of the NN model for insensitive users.

3) Model Ensemble Based on FedWgt: The fully centralized DRL for privacy-sensitive users and the semi-centralized DRL weighted integration strategy for privacy-insensitive users can be expressed as follows:

| (55) |

In order to address the disparity between distributed learning and centralized learning in wireless network optimization, the substitution of the currently learned model parameters and with is contemplated for different learning architectures. By incorporating into the training process, we aim to bridge the gap and compensate for the differences between the two learning paradigms.

The total complexity of the MAFWDRL algorithm is primarily determined by several key factors: the number of agents, the complexity involved in the NN’s forward and backward propagation, the complexity associated with model integration, the interactions between the NN and wireless systems, and the overall number of iterations. The complexity arising from the training process is influenced by the type of training mode employed. Specifically, for privacy-sensitive agents, the training complexity is given by , whereas for privacy-insensitive agents, it stands at . Here, denotes the number of neurons in the -th layer of the network, and represents the total number of layers in the NN. Furthermore, both the complexities of model integration and agent interactions exhibit a linear relationship with the number of iterations , represented as . Consequently, the overall complexity of the MAFWDRL algorithm can be accurately quantified as .

IV Simulation results

The experiment involves the construction of a cross-platform collaboration simulation for NS3 (communication terminal) and Python (AI terminal) using NS3AI [18]. This simulation platform ensures the reality and reliability of the communication system simulation. The communication process between the STAs and the AP is fully captured by the NS3 terminal, spanning from the application layer to the physical layer. However, our focus lies in the adjustment of certain network parameters in the MAC layer through AI methods. Within this section, we present simulation results to validate the effectiveness of the proposed MAFWDRL algorithm for jointly optimizing computing and communication resource allocation in distributed wireless systems. The impact of adjusting specific parameters of the MAFWDRL algorithm and the execution strategy on wireless communication networks is considered in our analysis.

We construct a mobility model of wireless communication based on NS3-simulator to simulate UEs dynamic access control. We divide the task period into time slots to observe a variation of wireless channel state information within the time slot length . Specifically, a wireless communication system is considered, comprising AP, privacy-sensitive STAs, and privacy-insensitive STAs. The AP is designed to support up to antennas, while each STA is equipped with antennas and is integrated with an individual AI model. Furthermore, the STAs are distributed randomly within a circular area of meters radius. Each STA is assigned a constant velocity of , with a maximum permissible movement radius of meters. Fig. 3 illustrates an instance of the visualization system built on the basis of the NS3-simulator. In Fig. 3 (a) and 3(b), we observe the spatial distribution of user locations and their communication statuses at the 10th and 14th seconds, respectively. The blue arrow symbolizes the connection between the STA and the AP at that specific moment, indicating the establishment of a communication link. Additionally, to meet the constraints imposed by the wireless communication environment, the actor and critic NN models are designed with hidden layers, with one hidden layer containing neurons and uses ReLU activation. This configuration is chosen deliberately to avoid adverse effects on the wireless features [19] and the risk of gradient vanishing. For a detailed summary of the remaining system parameters, please refer to Table I [11, 15, 20, 21]

| Parameters for Communication System | Values |

|---|---|

| Communication Frequency | 5GHz |

| Maximum Channel Bandwidth | 80 MHz |

| AP/STA TX power | 23/20 dBm |

| Antenna TX/RX gain | 3 dB |

| Maximum CPU frequency of AP | cycle/sec |

| Maximum CPU frequency of STA | cycle/sec |

| Effective Switched Capacitance | |

| Maximum Number of AP/STA Antennas | 4/2 |

| CPU Calculation Cycle of 1-bit | 330 cycle/bit |

| FLOPS Calculation Rate of 1-cycle | 8 flops/cycle |

| Number of Privacy-sensitive STAs | 8 |

| Number of Privacy-insensitive STAs | 8 |

| Interaction step | 200 ms |

| Parameters for MAFDRL | Values |

| Memory Capacity | 100 |

| Actor NN Learning Rate | 0.002 |

| Critic NN Learning Rate | 0.02 |

| Soft Update Parameter | 0.1 |

| Mini-batch Size | 8 |

| Discount rate | 0.1 |

IV-A Experiments on noise design for off-policy MADRL

In this section, our primary objective is to conduct a comprehensive and equitable assessment of noise adjustment’s impact on the performance of distributed wireless systems. To achieve this, we have taken several measures. First, we have categorized all experimental users within the communication scenario as privacy non-sensitive, ensuring a level playing field for our evaluations. Additionally, we have employed the off-policy DRL algorithm MADDPG for our testing procedures.

To facilitate meaningful comparisons, we have considered two distinct noise adjustment functions. The first is the conventional noise linear decrease function, denoted as . The second is an illustrative example, which adheres to the criteria outlined in Section III, Subsection B, expressed as . Here, it is important to note that serves as a controlling factor, determining the maximum offset scale for actions that MADDPG can select. Meanwhile, and play crucial roles in regulating the magnitude of decrease in each iteration for the linear function and the concave function , respectively. Our criteria for assessing system performance depend on the observed throughput of the entire system, which provides an objective basis for evaluating the various noise adjustment approaches.

Fig. 4 shows the performance comparison results of MADDPG in optimizing wireless communication throughput using the noise design method and the baseline scheme outlined in this paper. Specifically, Figs. 4(a) and 4(b) examine the impact of noise change functions, denoted as and , at different offset scales () and various change rates ( and ) on the performance of wireless communication networks. When comparing Figs. 4(a) and 4(b), it becomes apparent that the model exhibits greater stability when in comparison to when . This increased stability at is attributed to its ability to provide a better foundation for the off-policy policy model and a broader exploratory space. The change rate, determined by and , influences the distribution range of communication samples within the exploratory space. Optimal values for these change rates result in a richer set of samples in the memory replay.

However, it is crucial to strike a balance with the change rates. If and are set too low, the offset remains consistently at the maximum state, skewing the distribution of communication samples towards larger or smaller values. Conversely, excessively high change rates can limit exploration capabilities of the model, hindering effective training of communication models. For wireless communications, selecting and setting the change rate leads to relatively stable model performance. Additionally, upon closer examination of Fig. 4, we observe that the state distribution of during the initial exploration phase is broader compared to that of .

IV-B Experiments on MAFWDRL

In this section, we conduct experiments involving scenarios depicted in Fig. 2, encompassing both privacy-sensitive and non-sensitive STAs. Utilizing noise design function detailed in this paper, we conducted a comparative analysis of the application between the classic federated averaging (FedAvg) strategy for MADRL (, MAFADRL) and our proposed FedWgt strategy for MADRL (, MAFWDRL) under our proposed model architecture. Among them, these experiments were conducted under varying change rates of exploration function . Our main objective was to evaluate the overall system throughput as the reward feedback for our model. This measure provides a more intuitive reflection of the model’s capability to optimize the communication network, and the results are presented in Fig. 5. We set the change rates to three different values, specifically , , and , for the comparative analysis.

Our objectives were twofold: firstly, to validate the effectiveness of the design and secondly, to evaluate the optimization performance of MAFWDRL in a distributed communication system. The results clearly indicate that our proposed method has substantially enhanced the overall system throughput while ensuring greater model stability when compared to MAFADRL. The heterogeneity causes variability in the performance of DRL models, a phenomenon that our experimental results confirm. Models employing the federated averaging strategy remain relatively stable, unaffected by . Conversely, our MAFWDRL method, as proposed in this paper, exhibits results consistent with those presented in Fig. 4, achieving superior model performance, particularly at .

Fig. 6 presents the convergence results of the MAFWDRL model, which involves joint optimization of computing consumption and throughput. As illustrated in Fig. 6, several notable trends emerge. Initially, as the model iterates, the average loss of the agents shows a diminishing trend while fluctuating. Simultaneously, the average reward is increasing while fluctuating. After approximately 100 iterations, the model reaches a state of convergence. These trends observed during model training align closely with our ideal expectations.

To thoroughly assess the optimization capabilities of MAFWDRL within distributed wireless networks and its adaptability to various real-world challenges, we conducted experiments employing two distinct reward settings:

1) Joint Optimization of Throughput Performance and Delay Priority: Our first reward setting focuses on achieving the dual objectives of minimizing computing delay while maximizing throughput, defined as . Essentially, we aim to strike a balance between minimizing the delay and maximizing system throughput. The objective is to adjust the reward to minimize computing delay and maximize throughput.

2) Joint Optimization of Throughput and Computing Energy under Maximum Computing Delay Constraints: Our second reward setting emphasizes the optimization of throughput and computing energy while adhering to a stringent constraint on the maximum permissible computing delay, as shown in Formula (34). In this case, we aim to jointly optimize throughput and computing energy while ensuring that the computed solutions adhere to the limitations imposed by Formula (34).

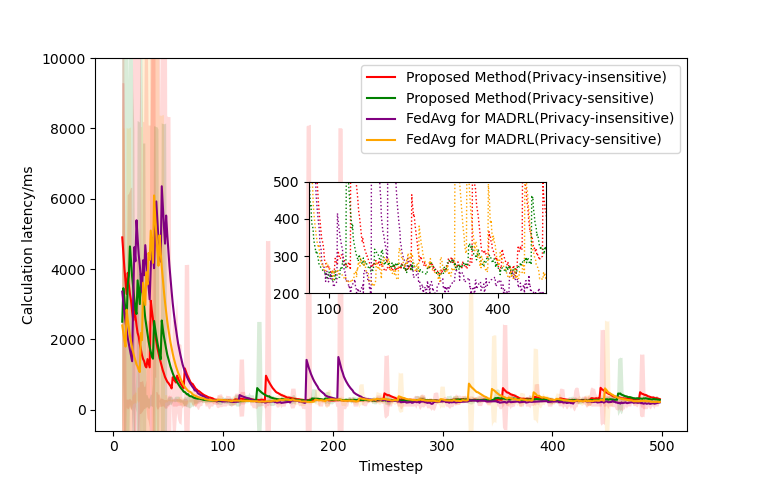

Figs. 7, 8, and 9 depict the performance variations in model system throughput, calculation latency, and energy consumption of the MAFADRL and the proposed MAFWDRL under the aforementioned two reward settings. The maximum calculation delay constraint of reward 2) is set to . Figs. 7(a) and 8(a) illustrate the training curves for throughput and calculation delay under reward 1), while Figs. 7(b) and 8(b) display the training outcomes for reward 2).

In the performance comparison between Fig. 7(a) and Fig. 7(b), we observe that the convergence throughput of the system with reward 1) is approximately 500 Mbps, surpassing the 290 Mbps achieved with reward 2). The setting of this penalty mechanism restricts the throughput of the system to a certain extent to ensure that the system can meet the computing delay constraints. As for the reward scheme 1) (which is no computing delay constraint), the agent will be guided to act in the direction of maximizing . Therefore, reward 1) is more conducive to throughput improvement than reward 2). The simulation analysis results align with our theoretical analysis, thereby enabling the MAFWDRL model to effectively address the diverse communication needs for the future. Furthermore, in Fig. 7, we note that the throughput for both privacy-sensitive and privacy-insensitive users remains relatively consistent. This consistency arises from the federated weighted policy, which synchronously updates all training model parameters on the server and transmits them to the agent within the same communication environment. This approach allows the model to compensate for differences between privacy-sensitive and privacy-insensitive training modes. From the perspective of algorithm application, the simulation results show that the throughput performance of the proposed FedWgt strategy is significantly better than that of the FedAvg strategy in scheme 1) and scheme 2). Theoretical analysis shows that the greater the difference between agents, the better the FedWgt strategy is than the FedAvg strategy, and the model has stronger generalization ability.

Fig. 8 present the simulation results for the calculation latency of both MAFADRL and our proposed MAFWDRL models, utilizing two distinct reward schemes. Figs 8(a) and 8(c) depict the global average latency and convergence latency achieved under reward scheme 1, while Figs. 8(b) and 8(d) illustrate the outcomes for reward scheme 2. The global average latency is indicative of the multi-agent algorithm’s stability, whereas convergence latency reflects the optimal performance attainable by the model. Under reward scheme 2, the MAFWDRL exhibits superior performance in terms of global average latency, maintaining stability within a 500 ms latency constraint, thereby demonstrating enhanced algorithmic stability. Conversely, the MAFADRL achieves a lower convergence latency, correlated to its reduced throughput compared to MAFWDRL. With reward scheme 1, both MAFWDRL and MAFADRL display not stable enough for the wireless system. MAFWDRL’s higher system throughput results in a larger optimal convergence latency which absent a latency constraint. Furthermore, our simulations reveal that under the architecture designed in this study, both FedAvg and FedWgt enable agents to converge to an improved system state.

Fig.s 7 and 8 present the results that form the basis for the analysis in Fig. 9, which illustrates the total energy consumption of both MAFADRL and the proposed MAFWDRL. It is observed that MAFWDRL demonstrates superior system throughput compared to MAFADRL. Consequently, the lower bound for the total energy consumption of MAFWDRL is expected to be higher than that of MAFADRL. Moreover, the trend in total energy consumption depicted in Fig. 9 is consistent with the patterns observed in Fig. 7, further corroborating this conclusion.

V Conclusion

In this paper, we present a novel wireless intelligent distributed communication system based on MAFWDRL. The system comprises two core modules: the MADRL module and the FedWgt module. To address the crucial issue of privacy security, we have devised distinct MADRL training modes for various privacy categories. These training modes effectively tackle the challenge of distributed wireless resource allocation while ensuring robust user privacy protection. However, one limitation of this training approach is that it mainly concentrates on the local communication status of individual agents, overlooking the intricate interplay of communication resource allocation across the entire distributed system. To overcome this limitation, we introduce a FedWgt strategy that considers the diverse training needs of each agent model while protecting the security of privacy. This innovative approach optimizes the global critic model, endowing it with the versatility to accommodate different privacy types. Additionally, we delve into the design of the noise curve within the off-policy DRL model, enabling the model to explore more extensively, enriching the experiment pool of the system, and elevating model optimization performance. The results of our simulation experiments underscore the substantial enhancements achieved by our MAFWDRL model. Notably, it significantly boosts the throughput of the entire communication system, and diminishes computing latency and energy consumption, all while maintaining stringent privacy security requisites.

Nevertheless, the intelligent optimization of wireless communication scenarios continues to confront a range of increasingly complex practical challenges. Looking forward, our research will delve into both intention-driven and data-driven generative AI-aided approaches within wireless communication systems [22, 23], which aims to enhance the model’s generalization capabilities, adaptability, and interpretability. These research are poised to expedite the evolution of intelligent communications, aligning them with the demands of the forthcoming 6G intelligent era.

References

- [1] C. Deng, X. Fang, X. Han, X. Wang, L. Yan, R. He, Y. Long, and Y. Guo, “IEEE 802.11 be Wi-Fi 7: New challenges and opportunities,” IEEE Communications Surveys & Tutorials, vol. 22, no. 4, pp. 2136–2166, 2020.

- [2] Cisco annual internet report (2018–2023) white paper, Mar 2020.

- [3] 6G: The Next Horizon White Paper, Jan 2022.

- [4] W. Xu, Z. Yang, D. W. K. Ng, M. Levorato, Y. C. Eldar, and M. Debbah, “Edge learning for B5G networks with distributed signal processing: Semantic communication, edge computing, and wireless sensing,” IEEE Journal of Selected Topics in Signal Processing, vol. 17, no. 1, pp. 9–39, 2023.

- [5] S. Tang, L. Chen, K. He, J. Xia, L. Fan, and A. Nallanathan, “Computational intelligence and deep learning for next-generation edge-enabled industrial iot,” IEEE Transactions on Network Science and Engineering, vol. 10, no. 5, pp. 2881–2893, 2023.

- [6] Z. Zhao, C. Feng, W. Hong, J. Jiang, C. Jia, T. Q. S. Quek, and M. Peng, “Federated learning with Non-IID data in wireless networks,” IEEE Transactions on Wireless Communications, vol. 21, no. 3, pp. 1927–1942, 2022.

- [7] N. Huang, M. Dai, Y. Wu, T. Q. S. Quek, and X. Shen, “Wireless federated learning with hybrid local and centralized training: A latency minimization design,” IEEE Journal of Selected Topics in Signal Processing, vol. 17, no. 1, pp. 248–263, 2023.

- [8] Z. Guo, Z. Chen, P. Liu, J. Luo, X. Yang, and X. Sun, “Multi-agent reinforcement learning-based distributed channel access for next generation wireless networks,” IEEE Journal on Selected Areas in Communications, vol. 40, no. 5, pp. 1587–1599, 2022.

- [9] X. Huang, P. Li, H. Du, J. Kang, D. Niyato, D. I. Kim, and Y. Wu, “Federated learning-empowered AI-generated content in wireless networks,” arXiv:2307.07146, 2023.

- [10] M. Viswanathan, Wireless Communication Systems in Matlab (second edition). Independently published, 2020.

- [11] WikiChip. FLOPS. https://en.wikichip.org/wiki/flops. Accessed on: June 23, 2023.

- [12] X. Li, K. Huang, W. Yang, S. Wang, and Z. Zhang, “On the convergence of FedAvg on Non-IID data,” arXiv:1907.02189, 2019.

- [13] H. Zhu, J. Xu, S. Liu, and Y. Jin, “Federated learning on non-IID data: A survey,” Neurocomputing, vol. 465, pp. 371–390, 2021.

- [14] Z. Yang, W. Xia, Z. Lu, Y. Chen, X. Li, and Y. Zhang, “Hypernetwork-based physics-driven personalized federated learning for ct imaging,” IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [15] O. Frans A and A. Christopher, A concise introduction to decentralized POMDPs. Springer, 2016, vol. 1.

- [16] Z. Guo, Z. Chen, P. Liu, J. Luo, X. Yang, and X. Sun, “Multi-agent reinforcement learning-based distributed channel access for next generation wireless networks,” IEEE Journal on Selected Areas in Communications, vol. 40, no. 5, pp. 1587–1599, 2022.

- [17] M. L.Littman, “Markov games as a framework for multi-agent reinforcement learning,” in Machine learning proceedings 1994. Elsevier, 1994, pp. 157–163.

- [18] H. Yin, P. Liu, K. Liu, L. Cao, L. Zhang, Y. Gao, and X. Hei, “ns3-ai: Fostering artificial intelligence algorithms for networking research,” in Proceedings of the 2020 Workshop on ns-3, 2020, pp. 57–64.

- [19] Y. E. Sagduyu, S. Ulukus, and A. Yener, “Task-oriented communications for nextg: End-to-end deep learning and ai security aspects,” IEEE Wireless Communications, vol. 30, no. 3, pp. 52–60, 2023.

- [20] T. Q. Dinh, J. Tang, Q. D. La, and T. Q. S. Quek, “Offloading in mobile edge computing: Task allocation and computational frequency scaling,” IEEE Transactions on Communications, vol. 65, no. 8, pp. 3571–3584, 2017.

- [21] S. Bi, L. Huang, and Y.-J. A. Zhang, “Joint optimization of service caching placement and computation offloading in mobile edge computing systems,” IEEE Transactions on Wireless Communications, vol. 19, no. 7, pp. 4947–4963, 2020.

- [22] Y. Huang, M. Xu, X. Zhang, D. Niyato, Z. Xiong, S. Wang, and T. Huang, “AI-generated network design: A diffusion model-based learning approach,” IEEE Network, pp. 1–1, 2023.

- [23] H. Du, R. Zhang, Y. Liu, J. Wang, Y. Lin, Z. Li, D. Niyato, J. Kang, Z. Xiong, S. Cui, B. Ai, H. Zhou, and D. In Kim, “Beyond deep reinforcement learning: A tutorial on generative diffusion models in network optimization,” arXiv:2308.05384, 2023.