Collaborative Machine Learning with Incentive-Aware Model Rewards

Abstract

Collaborative machine learning (ML) is an appealing paradigm to build high-quality ML models by training on the aggregated data from many parties. However, these parties are only willing to share their data when given enough incentives, such as a guaranteed fair reward based on their contributions. This motivates the need for measuring a party’s contribution and designing an incentive-aware reward scheme accordingly. This paper proposes to value a party’s reward based on Shapley value and information gain on model parameters given its data. Subsequently, we give each party a model as a reward. To formally incentivize the collaboration, we define some desirable properties (e.g., fairness and stability) which are inspired by cooperative game theory but adapted for our model reward that is uniquely freely replicable. Then, we propose a novel model reward scheme to satisfy fairness and trade off between the desirable properties via an adjustable parameter. The value of each party’s model reward determined by our scheme is attained by injecting Gaussian noise to the aggregated training data with an optimized noise variance. We empirically demonstrate interesting properties of our scheme and evaluate its performance using synthetic and real-world datasets.

1 Introduction

Collaborative machine learning (ML) is an appealing paradigm to build high-quality ML models. While an individual party may have limited data, it is possible to build improved, high-quality ML models by training on the aggregated data from many parties. For example, in healthcare, a hospital or healthcare firm whose data diversity and quantity are limited due to its small patient base can draw on data from other hospitals and firms to improve the prediction of some disease progression (e.g., diabetes) (Center for Open Data Enterprise, 2019). This collaboration can be encouraged by a government agency, such as the National Institute of Health in the United States. In precision agriculture, a farmer with limited land area and sensors can combine his collected data with the other farmers to improve the modeling of the effect of various influences (e.g., weather, pest) on his crop yield (Claver, 2019). Such data sharing also benefits other application domains, including real estate in which a property agency can pool together its limited transactional data with that of the other agencies to improve the prediction of property prices (Conway, 2018).

However, any party would have incurred some nontrivial cost to collect its data. So, they would not altruistically donate their data and risk losing their competitive edge. These parties will be motivated to share their data when given enough incentives, such as a guaranteed benefit from the collaboration and a fair higher reward from contributing more valuable data. To this end, we propose to value each party’s contributed data and design an incentive-aware reward scheme to give each party a separate ML model as a reward (in short, model reward) accordingly. We use only model rewards and exclude monetary compensations as (a) in the above-mentioned applications, every party such as a hospital is mainly interested in improving model quality for unlimited future test predictions; (b) there may not be a feasible and clear source of monetary revenue to compensate participants (e.g., due to restrictions, the government may not be able to pay firms using tax revenues); and (c) if parties have to pay to participate in the collaboration, then the total payment is debatable and many may lack funding while still having valuable data.

How then should we value a party’s data and its effect on model quality? To answer this first question, we propose a valuation method based on the informativeness of data. In particular, more informative data induces a greater reduction in the uncertainty of the model parameters, hence improving model quality. In contrast, existing data valuation methods (Ghorbani & Zou, 2019; Ghorbani et al., 2020; Jia et al., 2019a, b; Yoon et al., 2020) measure model quality via its validation accuracy, which calls for a tedious or even impossible process of needing all parties to agree on a common validation dataset. Inaccurate valuation can also arise due to how a party’s test queries, which are likely to change over time, differ from the validation dataset. Our data valuation method does not make any assumption about the current or future distribution of test queries.

Next, how should we design a reward scheme to decide the values of model rewards for incentivizing a collaboration? Intuitively, a party will be motivated to collaborate if it can receive a better ML model than others who have contributed less valuable data, and than what it can build alone or get from collaborating separately with some parties. Also, the parties often like to maximize the total benefit from the collaboration. These incentives appear related to solution concepts (fairness, individual rationality, stability, and group welfare) from cooperative game theory (CGT), respectively. However, as CGT assumptions are restrictive for the uniquely freely replicable111Data and model reward, like digital goods, can be replicated at zero marginal cost and given to more parties. nature of our model reward, these CGT concepts have to be adapted for defining incentives for our model reward. We then design a novel model reward scheme to provide these incentives.

Finally, how can we realize the values of the model rewards decided by our scheme? How should we modify the model rewards or the data used to train them? An obvious approach to control the values of the model rewards is to select and only train on subsets of the aggregated data. However, this requires considering an exponential number of discrete subsets of data, which is intractable for large datasets such as medical records. We avoid this issue by injecting noise into the aggregated data from multiple parties instead. The value of each party’s model reward can then be realized by simply optimizing the continuous noise variance parameter.

The specific contributions of our work here include:

-

Proposing a data valuation method using the information gain (IG) on model parameters given the data (Sec. 3);

-

Defining new conditions for incentives (i.e., Shapley fairness, stability, individual rationality, and group welfare) that are suitable for the freely replicable nature of our model reward (Sec. 4.1). As these incentives cannot be provided all at the same time, we design a novel model reward scheme with an adjustable parameter to trade off between them while maintaining fairness (Sec. 4.2);

-

Injecting Gaussian noise into the aggregated data from multiple parties and optimizing the noise variance parameter for realizing the values of the model rewards decided by our scheme (Sec. 4.3); and

-

Demonstrating interesting properties of our model reward scheme empirically and evaluating its performance with synthetic and real-world datasets (Sec. 5).

To the best of our knowledge, our work here is the first to propose a collaborative ML scheme that formally considers incentives beyond fairness and relies solely on model rewards to realize them. Existing works (Jia et al., 2019a, b; Ohrimenko et al., 2019) have only looked at fairness and have to resort to monetary compensations if considered.

2 Problem Formulation

In our problem setting, we consider honest and non-malicious parties, each of whom owns some data and assume the availability of a trusted central party222In reality, such a central party can be found in established data sharing platforms like Ocean Protocol (Ocean Protocol Foundation, 2019) and Data Republic (https://datarepublic.com). who aggregates data from these parties, measures the value of their data, and distributes a resulting trained ML model to each party. We first introduce the notations and terminologies used in this work: Let denote a set of parties. Any subset is called a coalition of parties. The grand coalition is the set of all parties. Parties will team up and partition themselves into a coalition structure . Formally, is a set of coalitions such that and for any and . The data of party is represented by where and are the input matrix and output vector, respectively. Let denote the value of the (aggregated) data of any coalition . We use to represent to ease notation. For each party , denotes the value of its received model reward.

The objective is to design a collaborative ML scheme for the central party to decide and realize the values of model rewards distributed to parties . The scheme should satisfy certain incentives (e.g., fairness and stability) to encourage the collaboration. Some works (Ghorbani & Zou, 2019; Jia et al., 2019a, b) have considered similar problems and can fairly partition the (monetary) value of the entire aggregated data into for . They achieved this using results from cooperative game theory (CGT). Our problem, however, differs and cannot be addressed directly using CGT. This is due to the freely replicable nature of our model reward – the total value of received model rewards over all parties can exceed .

3 Data Valuation with Information Gain

A set of data is considered more valuable (i.e., higher ) if it can be used to train a higher-quality (hence more valuable) ML model. Existing data valuation methods (Ghorbani & Zou, 2019; Ghorbani et al., 2020; Jia et al., 2019b; Yoon et al., 2020) measure the quality of a trained ML model via its validation accuracy. However, these methods require the central party to carefully select a validation dataset that all parties must agree on. This is often a tedious or even impossible process, especially if every party’s test queries, which are likely to change over time, differ from the validation dataset.333In the unlikely event that all parties can agree on a common validation dataset, validation accuracy would be the preferred measure of model quality and hence data valuation method. For example, two private hospitals and aggregate their data for diabetes prediction. and prefer accurate test predictions for female and young patients, respectively. Due to the differences in their data sizes, data qualities, and preferred test queries, it is difficult for the central party to decide the demographics of the patients in the validation dataset such that the data valuation is unbiased and accurate.

To circumvent the above-mentioned issues, our proposed data valuation method instead considers an information-theoretic measure of the quality of a trained model in terms of the reduction in uncertainty of the model parameters, denoted by vector , after training on data . We use the prior entropy and posterior entropy to represent the uncertainty of before and after training on , respectively. So, if the data for can induce a greater reduction in the uncertainty/entropy of or, equivalently, information gain (IG) on :

| (1) |

then a higher-quality (hence more valuable) model can be trained using this more valuable/informative data . IG is an appropriate data valuation method as it is often used as a surrogate measure of the test/predictive accuracy of a trained model (Krause & Guestrin, 2007; Kruschke, 2008) since the test queries are usually not known a priori. We empirically demonstrate such a surrogate effect in Appendix F.3. The predictive distribution of the output at the test input given data is calculated by averaging over all possible model parameters weighted by their posterior belief , i.e., By reducing the uncertainty in , we can further rule out models that are unlikely given and place higher weights (i.e., by increasing ) on models that are closer to the true model parameters, thus improving the predictive accuracy for any test query in expectation. The value (1) of data has the following properties:

-

Data of an empty coalition has no value:

-

Data of any coalition has non-negative value:

-

Monotonicity. Adding more parties to a coalition cannot decrease the value of its data:

-

Submodularity. Data of any party is less valuable to a larger coalition which has more parties and data:

4 Incentive-Aware Reward Scheme with Model Rewards

Recall our problem setting in Sec. 2 that the central party will train a model for each party as a reward. The reward should be decided based on the value of each party’s data relative to that of the other parties’. Let be the data (i.e., derived from ) used to train party ’s model reward. The value of party ’s model reward is according to Sec. 3. In this section, we will first present the incentives to encourage collaboration (Sec. 4.1), then describe how our incentive-aware reward scheme will satisfy them (Sec. 4.2), and finally show how to vary to realize the values of the model rewards decided by our scheme (Sec. 4.3).

We desire a collaboration involving the grand coalition (i.e., ) as it results in the largest aggregated data and hence allows the highest value of model reward to be achieved, which eliminates the tedious process of deciding how the parties should team up and partition themselves. In Sec. 4.1.2, we will discuss how to incentivize the grand coalition formation and increase the total benefit from the collaboration.

4.1 Incentives

Our reward scheme has to be valid, fair to all the parties, and guarantee an improved model for each party. To achieve these, we exploit and adapt key assumptions and constraints about rewards in CGT. As has been mentioned in Sec. 2, the modifications are necessary as the model reward is uniquely freely replicable. We require the following incentive conditions to hold for the values of model rewards based on the chosen coalition structure :

-

R1

Non-negativity.

-

R2

Feasibility. The model reward received by each party in any coalition cannot be more valuable than the model trained on their aggregated data :

-

R3

Weak Efficiency. In each coalition , the model reward received by at least a party is as valuable as the model trained on the aggregated data of :

-

R4

Individual Rationality. Each party should receive a model reward that is at least as valuable as the model trained on its own data:

R1 and R4 are the same as the solution concepts in CGT while R2 and R3 have been adapted.444R2 and R3 are, respectively, adapted from and in CGT (Chalkiadakis et al., 2011). When (i.e., grand coalition), R2 and R3 become and , respectively. So, each party cannot receive a more valuable model reward than the model trained on as it would involve creating data artificially.

4.1.1 Fairness

In addition, when (i.e., grand coalition), to guarantee that the reward scheme is fair to all parties, the values of their model rewards must satisfy the following properties which are inspired by the fairness concepts in CGT (Maschler & Peleg, 1966; Shapley, 1953; Young, 1985) and have also been experimentally studied from a normative perspective (de Clippel & Rozen, 2013):

-

F1

Uselessness.555These properties are axioms of Shapley Value (Shapley, 1953) and have been widely used in existing ML works (Ghorbani & Zou, 2019; Jia et al., 2019b; Ohrimenko et al., 2019) for data valuation. If including the data of party does not improve the quality of a model trained on the aggregated data of any coalition (e.g., when ), then party should receive a valueless model reward: For all ,

-

F2

Symmetry.5 If including the data of party yields the same improvement as that of party in the quality of a model trained on the aggregated data of any coalition (e.g., when ), then they should receive equally valuable model rewards: For all s.t. ,

-

F3

Strict Desirability (Maschler & Peleg, 1966). If the quality of a model trained on the aggregated data of at least a coalition improves more by including the data of party than that of party , but the reverse is not true, then party should receive a more valuable model reward than party : For all s.t. ,

-

F4

Strict Monotonicity.666Our definition is similar to coalitional monotonicity in (Young, 1985) except that we consider instead of . This rules out the scenario where the value of party ’s data improves but not that of its model reward. We further check the feasibility of improvement in the value of its model reward. If the quality of a model trained on the aggregated data of at least a coalition containing party improves (e.g., by including more data of party ), ceteris paribus, then party should receive a more valuable model reward than before: Let and denote any two sets of values of data over all coalitions , and and be the corresponding values of model rewards received by party . For all ,

We have the following incentive condition:

- R5

Both F3 and F4 imply that marginal contribution () matters. F4 is the only property guaranteeing that if party adds more valuable/informative data to a coalition containing it, ceteris paribus, then it should receive a more valuable model reward than before. Additionally, F3 establishes a relationship between parties and that if their marginal contributions only differ for coalition (i.e., w.l.o.g.), then party should receive a more valuable model reward than party .

To illustrate their significance, we consider two simpler reward schemes where we directly set the value of model reward received by every party as (a) the value of its data (i.e., ) or (b) the decrease in the value of its data if it leaves the grand coalition (i.e., ). Both schemes violate F3 and F4 as they ignore the values of the other parties’ data: The value of model reward received by party does not change when its marginal contribution to any non-empty coalition increases. Intuitively, a party with a small value of data (e.g., few data points) can be highly valuable to the other parties if its data is distinct. Conversely, a party with a high value of data should be less valuable to the other parties with similar data as its marginal contribution is lower.

Hence, we consider the Shapley value which captures the idea of marginal contribution precisely as it uses the expected marginal contribution of a party when it joins the parties preceding it in any permutation:

| (2) |

where is the set of all possible permutations of and is the coalition of parties preceding in permutation .

Proposition 1.

for all satisfy R5.

Its proof is in Appendix B. A party will have a larger Shapley value and hence value of model reward when its data is highly valuable on its own (e.g., with low inherent noise) and/or to the other parties (e.g., due to low correlation).

Fairness with Weak Efficiency (R3). We simplify the notation of to . Such a reward scheme may not satisfy R3 as the total value of model rewards is only (Chalkiadakis et al., 2011). Due to the freely replicable nature of our model reward, we want the total value to exceed and the value of some party’s model reward to be . To satisfy other incentive conditions, we consider a function to map to (i.e., ). To achieve fairness in R5, must be strictly increasing with . These motivate us to propose the following:

Definition 1 (Shapley Fairness).

Given , if there exists a constant s.t. for all , then the values of model rewards are Shapley fair.

Note that CGT sets (Proposition 1). Also, we can control constant to satisfy other incentive conditions such as R3. A consequence of Definition 1 is that . So, increasing the ratio of expected marginal contributions of party vs. of party (e.g., by including more valuable/informative data of party , ceteris paribus) results in the same increase in the ratio of values of model rewards received by party vs. received by party .

Fairness with Individual Rationality (R4). However, another issue persists in the above modified definition of fairness (Definition 1): R4 may not be satisfied when the value of data is submodular (e.g., IG). We show an example below and leave the detailed discussion to Appendix C:

Example 1.

Since our model reward is freely replicable, it is possible to give all parties in a larger coalition more valuable model rewards. How then should we redefine to derive larger values of model rewards to achieve R4 while still satisfying R5 and R3 (i.e., )? To answer this question, we further modify the above definition of Shapley fairness (Definition 1) to the following:

Definition 2 (-Shapley Fairness).

Given , if there exist constants and s.t. for all , then the values of model rewards are -Shapley fair.

It follows from Definition 2 that when , implies . Furthermore, reducing from “weakens” proportionality of to and decreases the ratio of vs. while preserving . So, if the value of a party’s model reward is (hence satisfying R3), then the values of the other parties’ model rewards will become closer to , thereby potentially satisfying R4. We will discuss the choice of and the effect of in Sec. 4.2.

4.1.2 Stability and Group Welfare

At the beginning of Sec. 4, we have provided justifications for desiring the grand coalition. We will now introduce two other solution concepts in CGT (i.e., stability and group welfare) to incentivize its formation and increase the total benefit from the collaboration, respectively. To the best of our knowledge, these solution concepts have not been considered in existing collaborative ML works.

A coalition structure with a given set of values of model rewards is said to be stable if no subset of parties has a common incentive to abandon it to form another coalition on their own. With stability, parties can be assured that the collaboration will not fall apart and they cannot get a more valuable model reward from adding or removing parties. Similar to other solution concepts in CGT introduced previously, the definition of stability in CGT777In CGT, a coalition structure with given is stable if does not suit the freely replicable nature of our model reward and the redefined incentive condition on feasibility R2. Therefore, we propose the following new definition of stability:

Definition 3 (Stability).

A coalition structure with a given set of values of model rewards is stable if

Conversely, supposing , all parties in may be willing to deviate to form coalition as they can feasibly increase the values of their model rewards (up) to . The condition in Definition 3 is computationally costly to check as it involves an exponential (in ) number of constraints. To ease computation and since we are mainly interested in the grand coalition (i.e., ), we look at the following sufficient condition instead:

-

R6

Stability of Grand Coalition. Suppose that the value of data is monotonic. The grand coalition is stable if for every coalition , the value of the model reward received by the party with largest Shapley value is at least :

The values of model rewards that satisfy R6 will also satisfy Definition 3. To ensure R6, for each party , we will only need to check one constraint involving : where includes all parties whose Shapley value is at most . The monotonic property of the value of data guarantees that for any . The total number of constraints is linear in the number of parties. Unlike R1 to R5, R6 is an optional incentive condition.

The last incentive condition stated below does not have to be fully provided:

-

R7

Group Welfare. The values of model rewards should maximize the group welfare .

4.2 Reward Scheme Considering All Incentives

In this subsection, we will present a reward scheme which considers all the incentives in Sec. 4.1 and assumes that the grand coalition will form. It uses parameter in Definition 2 to trade off between achieving Shapley fairness vs. satisfying the other incentives, as detailed below:

Theorem 1.

Its proof is in Appendix D. As decreases from 1, the values of model rewards deviate further from pure Shapley fairness (i.e., less proportional to ) and the value of any party ’s model reward with will increase. This increases group welfare (R7) and the values of model rewards can potentially satisfy individual rationality (R4) and stability (R6). Thus, a smaller addresses the limitations of pure Shapley fairness. We do not consider (a) as it reduces group welfare and is not needed to satisfy other incentives, nor (b) as it demands that more valuable/informative data and higher Shapley value lead to less valuable model reward, hence not satisfying fairness (R5).

Fig. 1 gives an overview of the incentive conditions and how Theorem 1 uses to satisfy them and trade off between the various incentives. Note that R6 guarantees R4. Ideally, we want the values of model rewards to satisfy fairness (R5), stability (R6), and individual rationality (R4) (i.e., shaded region in Fig. 1). However, the values of model rewards that are purely Shapley fair may not always lie in the desired shaded region. So, a smaller is needed.

Consider how much each party benefits from the collaboration by choosing a smaller than , specifically, by measuring the ratio of the values of its received model reward that are -Shapley fair () vs. purely Shapley fair (). Such a ratio can be evaluated to and is smaller (larger) for a party with a larger (smaller) . So, when a smaller is chosen, a party with close to needs to be “altruistic” as it cannot benefit as much due to a smaller ratio than any party with a smaller . However, note that party is already getting close to the maximum value of model reward. Regardless of the chosen , the party with the largest (i.e., ) always receives the most valuable model reward with the highest value . In practice, the parties may agree to use a smaller if they want to increase their total benefit from the collaboration or if they do not know their relative expected marginal contributions beforehand.

4.3 Realization of Model Rewards

Finally, we will have to train a model for each party as a reward to realize the values of model rewards decided by the above reward scheme. Recall from the beginning of Sec. 4 that the value of model reward received by each party is the IG on model parameters given the data . How is the training data for each party selected to realize ?

A direct approach is to select and only train on a subset of the aggregated data from all parties: . However, this is infeasible due to the need to consider an exponential number of discrete subsets of data, all of which may not be able to realize the decided value of model reward exactly.

To avoid the above issue, we instead consider injecting Gaussian noise into the aggregated data from multiple parties and optimizing the continuous noise variance parameter for realizing the decided value of model reward. Specifically, the central party collects data from every party and constructs by concatenating the input matrices for and the output vector with . When training a model for party , we use the original so that each party gets all the information from its own data and cannot improve its received model reward by subsequently training on it. We inject noise with variance only to the other parties’ data (i.e., ) to reduce the information from parties conveyed to party . In particular, when and when . By varying , we can span different values of . We use an efficient root-finding algorithm to find the optimal such that for each party . The effect of adding noise to the data will be reflected in the variance of party ’s model reward parameters and its predictive distribution. We will show that the added noise affects predictive accuracy reasonably in Sec. 5.

5 Experiments and Discussion

This section empirically evaluates the performance and properties of our reward scheme (Sec. 4.2) on Bayesian regression models with the (a) synthetic Friedman dataset with input features (Friedman, 1991), (b) diabetes progression (DiaP) dataset on the diabetes progression of patients with input features (Efron et al., 2004), and (c) Californian housing (CaliH) dataset on the value of houses with input features (Pace & Barry, 1997). We use a Gaussian likelihood and assume that the model hyperparameters are known or learned using maximum likelihood estimation.

The performance of our reward scheme is evaluated using the IG and mean negative log probability (MNLP) metrics:

| (3) |

where denotes a test dataset, and and denote, respectively, the predictive mean and variance of the predictive distribution . We then use these metrics to show how an improvement in IG can affect the predictive accuracy of a trained model on a common test dataset.999We use a randomly sampled test dataset to illustrate how IG is a suitable surrogate measure of the test/predictive accuracy of a trained model. In Sec. 3, we have discussed that in practice, it is often tedious or even impossible for all parties to agree on a common validation dataset, let alone a common set of test queries. More details of the experimental settings such as how to select the test dataset are in Appendix E. The performance of our reward scheme cannot be directly compared against that of the existing works (Ghorbani & Zou, 2019; Jia et al., 2019b) as they mainly focus on non-Bayesian classification models and assume monetary compensations. For all experiments, we consider a collaboration of parties as it suffices to show interesting results.

Gaussian process (GP) regression with synthetic Friedman dataset. For each party, we generate data points from the Friedman function. We assign party the most valuable data by spanning the first input feature over the entire input domain . In contrast, for parties and , the same input feature spans only the non-overlapping ranges of and , respectively. This makes the data of parties and highly valuable to each other, i.e., is high relative to . Using (2), the Shapley values of the three parties are , , and . Party has the largest and will always receive the most valuable model reward with the highest IG or value .

|

|

| (a) IG | (b) MNLP |

Fig. 2a shows that our reward scheme indeed satisfies fairness (R5): A party with a larger always receives a higher (i.e., more valuable model reward). Also, since here, our reward scheme always satisfies individual rationality (R4): Every party receives a more valuable model reward than that trained on its own data, i.e., its IG is higher than (see dotted lines in Fig. 2a). As decreases (rightwards), party ’s most valuable model reward is unaffected but it has to be “altruistic” to parties and with increasing and (i.e., increasingly valuable model rewards) but smaller and , as discussed in the last paragraph of Sec. 4.2. When decreases to , stability (R6) is reached: Party ’s model reward matches what it will receive by only collaborating with party . When , all parties receive an equally valuable model reward with the same IG/value despite their varying expected marginal contributions. This shows how can be reduced to trade off proportionality in pure Shapley fairness for satisfying other incentives such as stability (R6) and group welfare (R7).

In Fig. 2b, we report the averaged MNLP and shade the confidence interval over different realizations of . As decreases and each party receives an increasingly valuable model reward (i.e., increasing IG/), MNLP decreases, thus showing that an improvement in IG translates to a higher predictive accuracy of its trained model.

|

|

| (a) IG, | (b) IG, |

|

|

| (c) MNLP, | (d) MNLP, |

GP regression with DiaP dataset. We train a GP regression model with a composite kernel (i.e., squared exponential kernel exponential kernel) and partition the training dataset among parties, as detailed later. The results are shown in Fig. 3.

Neural network regression with CaliH dataset. We consider Bayesian linear regression (BLR) on the last layer of a neural network (NN). of the CaliH data is designated as the “public” dataset101010 In practice, the “public” dataset can be provided by the government to kickstart the collaborative ML. Alternatively, it can be historical data that companies are more willing to share. and used to pretrain a NN. Since the correlation of the house value with the input features in the data of the parties may differ from that in the “public” dataset, we perform transfer learning and selectively retrain only the last layer using BLR with a standard Gaussian prior. So, IG is only computed based on BLR. From the remaining data, we select data points for the test dataset and data points111111We restrict the total number of data points so that every individual party cannot train a high-quality model alone. for the training dataset to be partitioned among parties, as detailed later. The results are shown in Fig. 5.

Sparse GP regression with synthetic Friedman dataset (10 parties). We also evaluate the performance of our reward scheme on the collaboration between a larger number of parties. We consider a larger synthetic Friedman dataset of size and partition the training dataset among parties such that each party owns at least of the dataset. We train a sparse GP model based on the deterministic training conditional approximation (Hoang et al., 2015, 2016) and a squared exponential kernel. Since the exact Shapley value (2) is expensive to evaluate with a large number of parties, we approximate it using the simple random sampling algorithm in (Maleki et al., 2013). The results are shown in Fig. 6.

Partitioning Algorithm. To divide any training dataset among parties and vary their contributed data, we first choose an input feature uniformly at random from all the input features. Next, the data point is chosen uniformly at random. We partially sort the dataset and divide it into continuous blocks of random sizes, as detailed in Fig. 4.

Such a setup allows parties to have different quantities of unique or partially overlapping data. The input feature may have real-world significance: If is the age of patients, then this models the scenario where hospitals have data of patients with different demographics.

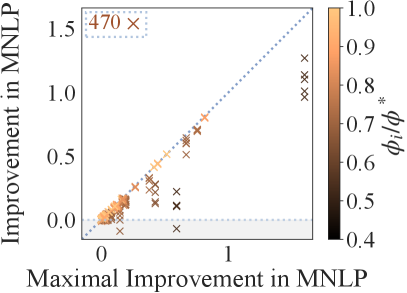

Summary of Observations. For any dataset, we consider different train-test splits and partitions of the training dataset using the above algorithm. For each partition, we compute the optimized noise variance to realize and consider realizations of for each party . For a given partition and choice of , if the values of model rewards satisfy individual rationality (R4), then we compute the ratio , improvement in IG () and MNLP, and maximal (possible) improvement in IG () and MNLP for each party . The improvement is measured relative to a model trained only on party ’s data. The best/lowest MNLP is assumed to be achieved by a model trained on the aggregated data of all parties. Each realization of (from each party ) will produce a point in the scatter graphs in Figs. 3, 5, and 6. If a point lies on the diagonal identity line, then the corresponding party (e.g., with the largest , i.e., ) receives a model reward with MNLP . We also report the number of points with positive improvement in IG and MNLP in the top left hand corner of each graph.

In Figs. 3c-d, 5c-d, and 6c-d, the improvement in MNLP is usually positive: The predictive performance of each party benefits from the collaboration. Occasionally but reasonably, this does not hold when the maximal improvement in MNLP is extremely small or even negative. Lighter colored points lie closer to the diagonal line: Parties with larger receive more valuable model rewards (Figs. 3a-b, 5a-b, 6a-b) which translates to lower MNLP (Figs. 3c-d, 5c-d, 6c-d).

In Figs. 3, 5 and 6, when decreases from to , darker colored points move closer to the diagonal line, which implies that parties with smaller can now receive more valuable model rewards with higher predictive accuracy. The number of points (reported in the top left corner) also increase as more of them satisfy R4.

In Fig. 6d with , most points are close to the diagonal identity line because it may be the case that not all training data points are needed to achieve the lowest MNLP. Instead, fewer or noisier data points are sufficient. In this scenario, a larger may be preferred to reward the parties differently. More experimental results for different Bayesian models and datasets are shown in Appendix F.1.

|

|

| (a) IG, | (b) IG, |

|

|

| (c) MNLP, | (d) MNLP, |

|

|

| (a) IG, | (b) IG, |

|

|

| (c) MNLP, | (d) MNLP, |

Limitations. Though IG is a suitable surrogate measure of the predictive accuracy of a trained model, a higher IG does not always translate to lower MNLP. In Figs. 3c-d and 5c-d, occasionally, the improvement in MNLP is negative (around of the time) and darker points lie above the diagonal line. The latter suggests that parties with smaller may receive model rewards with MNLP lower than (i.e., awarded to the party with largest , i.e., ). This may be purely due to randomness, but we have also investigated factors (e.g., model selection) leading to a weak relationship between IG and MNLP and reported the results in Appendix F.2. Our reward scheme works best when a suitable model is selected and the model prior is not sufficiently informative for any party to achieve a high predictive accuracy by training a model on its own data. In practice, this is reasonable as collaboration precisely happens only when every individual party cannot train a high-quality model alone but can do so from working together.

6 Conclusion

This paper describes a collaborative ML scheme that distributes only model rewards to the parties. We perform data valuation based on the IG on the model parameters given the data and compute each party’s marginal contribution using the Shapley value. To decide the appropriate rewards to incentivize the collaboration, we adapt solution concepts from CGT (i.e., fairness, Shapley fairness, stability, individual rationality, and group welfare) for our freely replicable model rewards. We propose a novel reward scheme (Theorem 1) with an adjustable parameter that can trade off between these incentives while maintaining fairness. Empirical evaluations show that a smaller and more valuable model rewards translate to higher predictive accuracy. Current efforts on designing incentive mechanisms for federated learning can build upon our work presented in this paper. For future work, we plan to address privacy preservation in our reward scheme. The noise injection method used for realizing the model rewards in this work is closely related to the Gaussian mechanism of differential privacy (Dwork & Roth, 2014). This motivates us to explore how the injected noise will affect privacy in the model rewards.

Acknowledgements

This research/project is supported by the National Research Foundation, Singapore under its Strategic Capability Research Centres Funding Initiative and its AI Singapore Programme (Award Number: AISG-GC--). Any opinions, findings, and conclusions or recommendations expressed in this material are those of the author(s) and do not reflect the views of National Research Foundation, Singapore.

References

- Center for Open Data Enterprise (2019) Center for Open Data Enterprise. Sharing and utilizing health data for AI applications. Roundtable report, 2019.

- Chalkiadakis et al. (2011) Chalkiadakis, G., Elkind, E., and Wooldridge, M. Computational aspects of cooperative game theory. In Brachman, R. J., Cohen, W. W., and Dietterich, T. G. (eds.), Synthesis Lectures on Artificial Intelligence and Machine Learning. Morgan & Claypool Publishers, 2011.

- Claver (2019) Claver, H. Data sharing key for AI in agriculture. Future Farming, Feb. 2019. URL https://www.futurefarming.com/Tools-data/Articles/2019/2/Data-sharing-key-for-AI-in-agriculture-389844E/.

- Conway (2018) Conway, J. Artificial Intelligence and Machine Learning: Current Applications in Real Estate. PhD thesis, Massachusetts Institute of Technology, 2018.

- Cover & Thomas (1991) Cover, T. and Thomas, J. Elements of Information Theory. John Wiley & Sons, Inc., 1991.

- de Clippel & Rozen (2013) de Clippel, G. and Rozen, K. Fairness through the lens of cooperative game theory: An experimental approach. Cowles Foundation discussion paper no. 1925, 2013.

- Dwork & Roth (2014) Dwork, C. and Roth, A. The algorithmic foundations of differential privacy. Foundations and Trends® in Theoretical Computer Science, 9(3–4):211–407, 2014.

- Efron et al. (2004) Efron, B., Hastie, T., Johnstone, I., and Tibshirani, R. Least angle regression. Ann. Statist., 32(2):407–499, 2004.

- Friedman (1991) Friedman, J. H. Multivariate adaptive regression splines. Ann. Statist., 19(1):1–67, 1991.

- Ghorbani & Zou (2019) Ghorbani, A. and Zou, J. Data Shapley: Equitable valuation of data for machine learning. In Proc. ICML, pp. 2242–2251, 2019.

- Ghorbani et al. (2020) Ghorbani, A., Kim, M. P., and Zou, J. A distributional framework for data valuation. In Proc. ICML, 2020.

- Harrison & Rubinfeld (1978) Harrison, D. and Rubinfeld, D. Hedonic housing prices and the demand for clean air. Journal of Environmental Economics and Management, 5(1):81–102, 1978.

- Hoang et al. (2015) Hoang, T. N., Hoang, Q. M., and Low, K. H. A unifying framework of anytime sparse Gaussian process regression models with stochastic variational inference for big data. In Proc. ICML, pp. 569–578, 2015.

- Hoang et al. (2016) Hoang, T. N., Hoang, Q. M., and Low, K. H. A distributed variational inference framework for unifying parallel sparse Gaussian process regression models. In Proc. ICML, pp. 382–391, 2016.

- Jia et al. (2019a) Jia, R., Dao, D., Wang, B., Hubis, F. A., Gurel, N. M., Li, B., Zhang, C., Spanos, C., and Song, D. Efficient task-specific data valuation for nearest neighbor algorithms. Proc. VLDB Endowment, 12(11):1610–1623, 2019a.

- Jia et al. (2019b) Jia, R., Dao, D., Wang, B., Hubis, F. A., Hynes, N., Gurel, N. M., Li, B., Zhang, C., Song, D., and Spanos, C. Towards efficient data valuation based on the Shapley value. In Proc. AISTATS, pp. 1167–1176, 2019b.

- Krause & Guestrin (2007) Krause, A. and Guestrin, C. Nonmyopic active learning of Gaussian processes: an exploration-exploitation approach. In Proc. ICML, pp. 449–456, 2007.

- Kruschke (2008) Kruschke, J. K. Bayesian approaches to associative learning: From passive to active learning. Learning & Behavior, 36(3):210–226, 2008.

- Maleki et al. (2013) Maleki, S., Tran-Thanh, L., Hines, G., Rahwan, T., and Rogers, A. Bounding the estimation error of sampling-based Shapley value approximation. arXiv:1306.4265, 2013.

- Maschler & Peleg (1966) Maschler, M. and Peleg, B. A characterization, existence proof and dimension bounds for the kernel of a game. Pacific J. Mathematics, 18(2):289–328, 1966.

- Ocean Protocol Foundation (2019) Ocean Protocol Foundation. Ocean protocol: A decentralized substrate for AI data and services. Technical whitepaper, 2019.

- Ohrimenko et al. (2019) Ohrimenko, O., Tople, S., and Tschiatschek, S. Collaborative machine learning markets with data-replication-robust payments. arXiv:1911.09052, 2019.

- Pace & Barry (1997) Pace, R. K. and Barry, R. Sparse spatial auto-regressions. Statistics and Probability Letters, 33(3):291–297, 1997.

- Shapley (1953) Shapley, L. S. A value for -person games. In Kuhn, H. W. and Tucker, A. W. (eds.), Contributions to the Theory of Games, volume 2, pp. 307–317. Princeton Univ. Press, 1953.

- Yoon et al. (2020) Yoon, J., Arik, S. O., and Pfister, T. Data valuation using reinforcement learning. In Proc. ICML, 2020.

- Young (1985) Young, H. P. Monotonic solutions of cooperative games. International Journal of Game Theory, 14(2):65–72, 1985.

Appendix A Proof of Submodularity of IG (1)

Firstly, we assume that the data and are conditionally independent given model parameters for any and . Then,

where the third and sixth equalities are due to the symmetric property of mutual information, the fourth and fifth equalities are due to the conditional independence of and given , and the inequality is due to the property that conditioning on more information (i.e., ) never increases the entropy (i.e., “information never hurts” bound for entropy) (Cover & Thomas, 1991).

Appendix B Proof of Proposition 1

We prove below that for all satisfy each of the properties F1 to F4:

-

F2 Symmetry. For all s.t. , if

(4) then

Note that is obtained from by swapping the positions of and in . Then, we prove the third equality by considering the following two cases: (a) When precedes in , (i.e., both excluding and ) and thus . It also follows from (4) that by setting , ; (b) when precedes in , (i.e., both containing and ) and thus . It also follows from (4) that by setting , .

-

F3 Strict Desirability. For all s.t. , if

(5) then

Note that is obtained from by swapping the positions of and in . Similar to the proof of F2, we prove the inequality by considering the following two cases: (a) When precedes in , (i.e., both excluding and ) and thus . It also follows from (5) that by setting , ; (b) when precedes in , (i.e., both containing and ) and thus . It also follows from (5) that by setting , . By (5), a strict inequality must hold for either case a or b.

-

F4 Strict Monotonicity. Let and denote any two sets of values of data over all coalitions , and and be the corresponding values of model rewards received by party . For all , if

(6) then

Note that for any , does not contain . Hence, it follows from (6) that which results in the third equality. The inequality also follows from (6).

Appendix C Enforcing Shapley Fairness may not satisfy Individual Rationality (R4) for Submodular Value of Data

When the value of data is submodular, for any party and any coalition , . Then,

| (7) |

Also, for any , due to the efficiency of Shapley value (Chalkiadakis et al., 2011).

Appendix D Proof of Theorem 1

Firstly, the values of model rewards satisfy non-negativity (R1): For each party , due to (2) and the non-negative and monotonic value of data using IG (1).

Next, the values of model rewards satisfy feasibility (R2): For each party , when . Also, weak efficiency (R3) is satisfied since for the party with the largest Shapley value .

When , the values of model rewards are -Shapley fair with in Definition 2.

D.1 Proof of Individual Rationality (R4)

For each party , when , . Since the value of data is monotonic, . So, . It is possible that .

D.2 Proof of Stability (R6)

For each party , when , . Since the value of data is monotonic, . So, . It is possible that .

D.3 Proof of Fairness (R5)

Firstly, we show that the Shapley value satisfies the following party monotonicity property,121212Another solution concept that satisfies the party monotonicity property is the Banzhaf index. which will be used in our proof of F4: For all , if

| (8) |

then

Proof.

Let for any coalition . It follows from (2) that for any party ,

where is a subset of containing all permutations with preceding . We consider only the subset of in the first equality due to (8) where the quality of a model trained on the aggregated data of a coalition can only change/increase when containing party . The first inequality is also due to (8) where for every and thus . The second equality can be understood by swapping the positions of and in permutation to obtain such that . The second inequality holds because there may exist a permutation where (i.e., ) and . The last equality holds since due to (8) where . ∎

Let us now consider . We will prove the following properties for R5:

-

F1 Uselessness.

-

F2 Symmetry. Since each is multiplied by the same factor ,

-

F4 Strict Monotonicity. Let and denote any two sets of values of data over all coalitions , and and be the corresponding Shapley values of party . Let be the party with the largest Shapley value based on . For any party satisfying (6) (i.e., premise of F4), due to definition of party and due to party monotonicity property when . Together, it follows that . Then,

(9) If , then instead. The equality in (9) holds only if . Let be the party with the largest Shapley value based on . Then,

where the first inequality follows from (6), the second inequality is due to (9), and the last inequality is due to the definition of party . For equality in to possibly hold, it has to be the case that and . Based on the definitions of and in Theorem 1, this implies which does not satisfy in (6) (i.e., premise of F4). For all other cases, .

Appendix E Details of Experimental Settings

In all the experiments, we optimize for (Sec. 4.3) by finding the root of using a root-finding algorithm called the TOMS Algorithm method.131313Alefeld, G. E., Potra, F. A., and Shi, Y. Algorithm : Enclosing zeros of continuous functions. ACM Trans. Math. Softw., , –, .

E.1 Gaussian Process (GP) Regression with Synthetic Friedman Dataset

The following Friedman function is used in our experiment:

where is the index of data point and its input features (i.e., ) are independent and uniformly distributed over the input domain . The last input feature is an independent variable which does not affect the output . We standardize the values of output and train a GP model141414For all GP models, we use automatic relevance determination such that each input feature has a different lengthscale parameter. with a squared exponential kernel.

For each tested value of , we reset the random seed to a fixed value and draw samples of from with an optimized (Sec. 4.3). Though differs for varying , the fixed random seed makes the standardized samples of the same across all values of , thus allowing a fair comparison. We evaluate the performance of our reward scheme on a test dataset which consists of points generated from the Friedman function.

E.2 Gaussian Process (GP) Regression with DiaP Dataset

We remove the gender feature and standardize the values of both input matrix and output vector . We train a GP model with a composite kernel comprising the squared exponential kernel and the exponential kernel.

We consider different train-test (i.e., - at random) splits. For each split, we create different partitions of the training dataset using the partitioning algorithm detailed in Sec. 5.

E.3 Neural Network (NN) Regression with CaliH Dataset

We first standardize the values of both input matrix and output vector . Then, we use of the CaliH dataset to train a NN with dense and fully connected hidden layers of and units and use the rectified linear unit (ReLU) as the activation function. Subsequently, we perform BLR on the outputs from the last hidden layer.

We consider different train-test (i.e., - at random) splits. For each split, we create different partitions of the training dataset using the partitioning algorithm detailed in Sec. 5.

E.4 Sparse GP Regression with Synthetic Friedman Dataset (10 Parties)

We consider a larger synthetic Friedman dataset of size and different train-test (i.e., - at random) splits. For each split, we create different partitions of the training dataset using the partitioning algorithm detailed in Sec. 5. But, we divide the training dataset into consecutive blocks instead with the constraint that each party owns at least of the dataset.

E.5 Calculating IG for BLR and GP Models

Let be the training data where is an input matrix and () is the corresponding noisy (latent) output vector such that is perturbed from by an additive Gaussian noise with noise variance .

For BLR with model parameters/weights and prior belief , the IG on given is

We also consider modeling an unknown/latent function with a full-rank GP and setting such that the latent output vector comprises components for all in . Then, the IG on given is

| (10) |

where is a covariance matrix with components for all in and is a kernel function. When we use a sparse GP model151515We use a sparse GP model called the deterministic training conditional (DTC) approximation of the GP model. with inducing input matrix , we replace in (10) with where is a covariance matrix with components for all in and is a covariance matrix with components for all in and in .

When calculating the IG for heteroscedastic data (i.e., data points with different noise variances), instead of dividing by , we multiply by the diagonal matrix such that each diagonal component of represents the noise variance corresponding to a data point in .

Appendix F Additional Experimental Results

F.1 Additional Results for Synthetic Friedman and BosH Datasets

GP regression with synthetic Friedman dataset. We also evaluate the performance of our reward scheme on a synthetic Friedman dataset with data points. We standardize the values of output and train a GP model with a squared exponential kernel. We consider different train-test (i.e., - at random) splits. For each split, we create different partitions of the training dataset using the partitioning algorithm detailed in Sec. 5.

The results are shown in Fig. 7. As before, the improvement in MNLP is usually positive. When , most points move closer to the diagonal line, which implies that parties with smaller can now receive more valuable model rewards with higher predictive accuracy.

|

|

| (a) IG, | (b) IG, |

|

|

| (c) MNLP, | (d) MNLP, |

NN regression with BosH dataset. The Boston housing (BosH) dataset contains the value of houses with selected input features (Harrison & Rubinfeld, 1978). We train a NN with dense and fully connected hidden layers of units each and use the rectified linear unit (ReLU) as the activation function. Subsequently, we perform BLR on the outputs from the last hidden layer.

We remove input features (i.e., proportion of residential land zoned for lots over sq. ft., proportion of non-retail business acres per town, and the Charles River dummy variable) and standardize the values of both input matrix and output vector . Then, we use of the BosH dataset to train the first layers of the NN and divide the entire BosH dataset into a test dataset and among parties using our partitioning algorithm. Due to the small data size, there is overlap between the data used to train the “public” NN layers and the data that is divided among parties.

Fig. 8 shows results of our reward scheme for multiple partitions of BosH dataset among parties. The same observations follow: Most model rewards achieve positive improvement in MNLP.

When a smaller is used (i.e., Figs. 8b and 8d) or when the parties have higher Shapley values relative to the others (i.e., lighter color), they will receive more valuable model rewards which translates to lower MNLP (i.e., points lie closer to the diagonal identity line).

|

|

| (a) IG, | (b) IG, |

|

|

| (c) MNLP, | (d) MNLP, |

F.2 Empirical Analysis of Relationship between IG and MNLP

In this work, our proposed data valuation method and reward scheme are based on IG. Our experimental results have shown that IG is closely related to the predictive accuracy (i.e., MNLP) of a trained model. A model with higher IG usually achieves lower MNLP as it produces smaller predictive variances (i.e., related to the first term of (3)). However, the relationship between IG and MNLP is not strictly monotonic as it is also affected by the second term of (3), i.e., the scaled squared error. In this subsection, we will demonstrate some poor use cases of our scheme where improvement in IG fails to translate to higher predictive accuracy of the trained model more frequently.

The first poor use case is when an unsuitable model is selected. To demonstrate this, we train an alternative GP regression model with an unsuitable squared exponential kernel on the DiaP dataset. Even when ample training data is provided, the squared error remains high relative to the variance in the data. The results achieved using this unsuitable GP model are shown in Figs. 9c-d. For ease of comparison, we copy the results of the GP model with a suitable kernel in Figs. 3c-d to Figs. 9a-b. We can observe that the maximal and actual improvement in MNLP are mostly positive for the suitable model (Figs. 9a-b). However, for the unsuitable model, fewer positive values of the maximal and actual improvement in MNLP can be observed in Figs. 9c-d. In Figs. 9b and 9d, there are, respectively, and points with positive improvement in MNLP (out of a maximum of points). Improvement in IG fails to translate to a higher predictive accuracy of its trained model more frequently when an unsuitable model is selected.

|

|

| (a) , suitable model | (b) , suitable model |

|

|

| (c) , unsuitable model | (d) , unsuitable model |

The next poor use case is when the model prior, which determines its predictive performance with limited data, is sufficiently informative, hence leading to a high predictive accuracy even if the model is only trained on a small amount of data. An example of an informative model prior is when the prior mean of the model parameters is close to the true mean and the prior variance is small. In this case, although the first term of (3) is smaller for a model with higher IG, the small predictive variance will magnify the squared error, thus increasing the second term of (3). So, on the overall, the model with a higher IG may not have a significantly lower MNLP. Instead, it is possible that it has a worse/higher MNLP. We demonstrate this with the BosH dataset by setting the prior mean of the BLR parameters to be the NN last layer’s weights (instead of the zero vector as in Appendix F.1) and prior variance to be a small value of (instead of ). The MNLP results are shown in Fig. 10. It can be observed that the largest maximal improvement in MNLP in Fig. 10 is around which is significantly smaller than that (i.e., ) in Figs. 8c-d. In Fig. 10a, there are more instances with negative improvement in MNLP compared to that in Fig. 8c.

The last poor use case is related to the previous one. Even if the model prior is insufficiently informative, the phenomenon can also happen if each party has ample data to independently train a model with a reasonable predictive accuracy. More training data lead to a higher IG and reduce the first term of (3) but magnify the squared error, thus potentially increasing MNLP.

|

|

| (a) MNLP, | (b) MNLP, |

Summary. Ideally, improvement in IG by training on the additional data decided by our reward scheme should translate to a higher predictive accuracy of the model reward. This should occur when a suitable model is selected and the model prior is not sufficiently informative for any party to achieve a high predictive accuracy by training a model on its own data. In practice, this is reasonable as collaboration precisely happens only when every individual party cannot train a high-quality model alone but can do so from working together.

F.3 Comparing IG-Based vs. MNLP-Based Data Valuation Methods

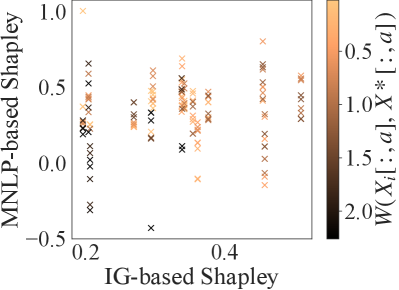

In Sec. 3, our data valuation method is based on IG as it can avoid the need and difficulty of selecting a common validation dataset, unlike other data valuation methods (e.g., using MNLP). We also claim that IG is a suitable surrogate measure of the predictive accuracy of a trained model. In this subsection, we will demonstrate this by comparing the values of data and Shapley values when the data valuation is performed using IG vs. MNLP.

For the MNLP-based data valuation method, let the value of data for any be where is defined in the same way as (3) except that in (3) is replaced by . For each party , we can compute its alternative Shapley value using (2) by replacing with for all .

Since we are more concerned about the relative values of data and Shapley values between parties, Figs. 11a-b show results of the IG-based vs. MNLP-based normalized values of data (respectively, vs. ) of different partitions, while Figs. 11c-d show results of the IG-based vs. MNLP-based normalized Shapley values (respectively, vs. ) of the same partitions. These partitions are generated using the partitioning algorithm in Sec. 5 on a training dataset of data points. For each partition, we consider different validation datasets of size , which results in possible sets of and . The validation datasets are either randomly selected or generated using the partitioning algorithm on the larger withheld test dataset (using the same feature ) so that it may overlap or exclude any party’s data range. We measure the similarity between the party’s data and the validation dataset using the Wasserstein distance between both their values of data or Shapley values for feature . The smaller the distance, the more similar the party’s data and the validation dataset.

|

|

| (a) GP Friedman | (b) NN CaliH |

|

|

| (c) GP Friedman | (d) NN CaliH |

In Fig. 11, as the validation dataset varies, the normalized values of data and Shapley values for the MNLP-based data valuation method differ significantly (i.e, large spread along the y-axis). This shows that the MNLP-based data valuation method is highly sensitive to the choice of the validation dataset. Parties are valued significantly lower if their data is very different from the test dataset. This is reflected by the position of the darker colored points below the lighter colored points.

The IG-based data valuation method can serve as a good surrogate for the MNLP-based data valuation method as it will not favor any specific party. In particular, for the Friedman dataset, the normalized IG-based value of data/Shapley value (i.e., diagonal line) (additionally) closely approximates the normalized MNLP-based values of data/Shapley values over different validation datasets (i.e., scatter points) on average.