Clustering-Based Subset Selection in Evolutionary Multiobjective Optimization*

Abstract

Subset selection is an important component in evolutionary multiobjective optimization (EMO) algorithms. Clustering, as a classic method to group similar data points together, has been used for subset selection in some fields. However, clustering-based methods have not been evaluated in the context of subset selection from solution sets obtained by EMO algorithms. In this paper, we first review some classic clustering algorithms. We also point out that another popular subset selection method, i.e., inverted generational distance (IGD)-based subset selection, can be viewed as clustering. Then, we perform a comprehensive experimental study to evaluate the performance of various clustering algorithms in different scenarios. Experimental results are analyzed in detail, and some suggestions about the use of clustering algorithms for subset selection are derived. Additionally, we demonstrate that decision maker’s preference can be introduced to clustering-based subset selection.

I Introduction

Evolutionary multiobjective optimization (EMO) algorithms have demonstrated great success in solving multi-objective optimization problems. In the EMO field, subset selection is one of the most important topics, which is involved in many phases of EMO algorithms.

During environmental selection, a subset of parents and offspring needs to be selected for the next generation. To this end, various subset selection methods have been used, such as dominance and density-based selection (e.g., NSGA-II [1]), reference vector-based selection (e.g., MOEA/D [2]), and indicator-based selection (e.g., SMS-EMOA [3]).

Additionally, if we run an EMO algorithm with an unbounded external archive (UEA) [4, 5, 6], a pre-specified number of solutions should be selected from non-dominated solutions in the UEA as the final solution set. In this scenario, distance-based subset selection [5, 7], hypervolume-based subset selection [8] and IGD-based subset selection [9] are usually employed.

In recent years, some EMO algorithms with clustering-based subset selection, such as CLUMOEA [10] and CA-MOEA [11], achieve the state-of-the-art performance on a variety of multiobjective optimization problems. Besides, in some adaptive reference vector-based EMO algorithms, clustering algorithms are used to update reference vectors (e.g., SRV [12]). In the UEA scenario, Singh et al. [5] use K-means clustering as a plausible baseline for distance-based subset selection. Although clustering-based subset selection is adopted in many scenarios, different methods have never been analyzed and compared in detail in the literature.

In the past few decades, a huge number of clustering algorithms have been proposed and examined in the literature. However, they are not proposed specially for subset selection in EMO algorithms. Thus, the objective of clustering and the basic assumption about data distributions are different between its traditional applications and its use in EMO algorithms. Currently, only a few clustering algorithms have been used for subset selection in EMO algorithms, which are not always the optimal choice. How to select a clustering algorithm in different EMO scenarios is a difficult problem.

To bridge the gap between clustering algorithms and subset selection in EMO algorithms, in this paper, the performance of different clustering approaches for subset selection on various data sets is examined and analyzed. The main contributions of our paper can be summarized as follows:

-

1.

We point out that IGD-based subset selection can be viewed as clustering-based subset selection.

-

2.

Various promising clustering algorithms are examined on representative data sets. Based on experimental results, we derive some suggestions about the choice of clustering algorithms in different scenarios.

-

3.

We demonstrate that clustering-based subset selection can be extended to include decision-maker’s preference.

The remainder of this paper is organized as follows. We first review clustering algorithms and IGD-based subset selection, and discuss their relation in Section II. We also discuss the difference between general applications of clustering and clustering-based subset selection in Section II. Then, through computational experiments, we compare and analyze the performance of different representative point selection methods and different clustering algorithms in Section III. Based on experimental results, some suggestions about the choice of clustering methods are given. In Section IV, we further extend clustering-based subset selection to include the decision-maker’s preference. Finally, we draw a brief conclusion and suggest some feature research directions in Section V.

II Clustering

II-A General Clustering Algorithms

For a given data set , a clustering algorithm divides into clusters where and .

Partition-based clustering algorithms, such as K-means [13], K-means++ [14], and K-medoids [15], are the most widely-used clustering algorithms. In this type of clustering algorithms, cluster centers are first initialized randomly as in K-means or based on some heuristics as in K-means++. Then, each data point is assigned to the closest cluster center. The data points assigned to the same cluster center belong to the same cluster. After that, the cluster center is updated. In K-means and K-means++, the center of each cluster is updated to the mean (centroid) of all data points in that cluster. In K-medoids, the data point with the smallest total distance to all the other points in each cluster is chosen as the new cluster center of the corresponding cluster. Therefore, the objective of K-medoids clustering is

| (1) |

where .

The main disadvantage of partition-based clustering is that it is not suitable for non-convex clusters. In order to deal with clusters of arbitrary shapes, density-based methods (e.g., DBSCAN [16]) are proposed. This type of clustering algorithms assume that clusters in high-density areas are separated by low-density areas in the data space. These algorithms can obtain clusters with arbitrary shapes efficiently but clustering results are usually sensitive to the parameter setting.

Another popular approach is hierarchical clustering [17], which aims to construct a hierarchical relationship among the given points. The agglomerative approach is usually employed in hierarchical clustering. Each point is considered as a separate cluster at the initial stage. Then, in each iteration, two clusters that are closest to each other are found and merged. There are various criteria (i.e., linkage functions) to evaluate the distance between two clusters, such as single linkage, complete linkage, weighted average linkage [18] and Ward’s linkage [19]. Single linkage calculates the smallest distance between data points in two clusters while complete linkage calculates the largest distance between them. Weighted average linkage calculates the distance between two clusters in a recursive way. If cluster is obtained by merging clusters and , the distance between clusters and is calculate by . The Ward’s linkage calculates the incremental sum of squares, which can be written as where and are the number of data points in clusters and , respectively, and and are the cluster centers of clusters and , respectively.

II-B Clustering-Based Subset Selection

Since clustering algorithms can divide a data set into a pre-specified number of clusters, clustering algorithms can be used for subset selection in EMO algorithms [10, 11, 5]. To select a subset of size from a given data set, we first apply a clustering algorithm to the data set. Based on the obtained clusters, a representative point will be selected from each cluster. These representative points compose the selected subset.

There are some differences between the general use of clustering algorithms and the use of clustering algorithms for subset selection in EMO algorithms. Firstly, since the subset size is usually pre-specified, clustering-based subset selection prefers algorithms that can obtain a fixed number of clusters instead of a variable number of clusters. Besides, some clustering algorithms are designed to deal with complicated cluster shapes or special distributions, which is of little use for subset selection. Additionally, the pre-specified number of clusters is usually much larger in clustering-based subset selection than that in standard applications of clustering algorithms.

Due to these differences, clustering algorithms that perform well in their standard applications may not be suitable for clustering-based subset selection. The performance of clustering algorithms for subset selection are examined in some applications (e.g., NIR spectra analysis [24] and IT service [25]). In our paper, we focus on subset selection in EMO algorithms. More promising clustering algorithms are considered, and different methods for selecting a representative point in each cluster is discussed. To the best of our knowledge, this issue has not been examined in the literature.

II-C IGD-based Subset Selection

Inverted generational distance (IGD) [26] is one of the most widely-used indicators to evaluate the performance of an EMO algorithm. It calculates the average distance from each point in the reference set to the closest point in the obtained solution set . The IGD contribution of a solution to the solution set is defined as

Since the IGD indicator considers the convergence and diversity simultaneously, IGD-based subset selection can be used to select representative solutions from an archive. In this case, all solutions in the archive are used as the reference set for IGD calculation. It aims to select a subset of size with the smallest IGD value with respect to all solutions in the archive. Formally, the objective of IGD-based subset selection is

| (2) |

where is a point in the objective space included in the archive, and is the number of points in the archive (i.e., is the reference point set for IGD calculation). We can divide the whole data set into groups according to the closest point in the subset (where ). Let us define an indicator function as:

| (3) |

Then, the objective of IGD-based subset selection can be re-written as

| (4) |

which is exactly the same as the objective of K-medoids clustering (i.e., Eq. (1)). Therefore, although IGD-based subset selection is usually classified as indicator-based subset selection, it can also be viewed as a clustering-based subset selection method.

Greedy inclusion is usually used in IGD-based subset selection from large data sets. A greedy inclusion algorithm starts from an empty subset. In each iteration, it selects the data point with the largest IGD contribution to the currently selected subset until a pre-specified number of data points are selected. On the contrary, a greedy removal algorithm starts with the whole data set and iteratively removes the solution with the smallest IGD contribution to the current subset. When the data set size is much larger than the subset size, the greedy removal strategy is not efficient.

Greedy inclusion IGD-based subset selection can be viewed as a top-down clustering approach. In the beginning, the entire data set is treated as a single cluster. Then, new cluster centers are added one by one until the pre-specified number of clusters is found. For example, Fig. 1 shows a data set with seven data points. Let us assume that we want to select three data points. In the initial stage, all the data points belong to a big cluster whose center is . In the next iteration, the first cluster center is fixed, and the algorithm aims to find another point to minimize the objective in (4). The cluster center is found and fixed. Now the data set is divided into two clusters (shaded in green). In the same manner, the third cluster center is found and the data set is divided into three clusters (shown by three pink dashed circles).

III Experimental Study

III-A Data Sets Generation

In our experimental study, as a candidate solution set , we consider randomly sampled data points on the true Pareto fronts of six test problems with various Pareto front shapes, including linear (DTLZ1 [27]), concave (DTLZ2 [27]), convex (MaF3 [28]), inverted (IDTLZ2 [29]), and disconnected (DTLZ7 [27] and WFG2 [30]).

Three candidate solution sets (i.e., ) of different size are generated for each test problem as follows. We first generate a large number of uniformly distributed points on the true Pareto front by the method in Tian et al. [31]. Note that this method cannot generate an arbitrary number of points. In our experiments, the number of generated points is specified as the number closest to 200,000 among the number of points that this method can generate. Then, we randomly select 200, 4,000, and 10,000 points from these uniformly generated points. The data set of size 200 is used to test the performance in the environmental selection scenario while the data sets of size 4,000 and 10,000 are used to test the performance in the final solution selection from the UEA scenario.

III-B Compared Algorithms

We examine six promising and well-known clustering algorithms on each candidate data set. The details of the examined algorithms are as follows.

-

1.

K-means++. An improved version of K-means with heuristic cluster center initialization. The maximum number of iterations is specified as 100.

-

2.

K-medoids. We use the same cluster center initialization method as K-means++. The maximum number of iterations is specified as 100.

-

3.

Greedy IGD-based subset selection (Greedy IGD). Since the select subset size is smaller than or equal to half of the data set size , we choose greedy inclusion IGD-based subset selection. Lazy greedy inclusion IGD-based subset selection [32] is used to accelerate the selection. The obtained subset by the lazy greedy inclusion algorithm is exactly the same as the naive greedy inclusion algorithm.

-

4.

Three variants of of Hierarchical Clustering (HC). Following common practice, we use the agglomerative hierarchical clustering approach. Three linkage functions are tested, i.e., Ward’s linkage, weighted average linkage and single linkage.

Since density-based clustering algorithms and grid-based clustering algorithms cannot generate a pre-specified number of clusters, they are not examined in this paper. Model-based clustering algorithms are very expensive when the data set is large. Therefore, they are not suitable for our test scenarios.

In all experiments in this section, the number of clusters (i.e., subset size) is set to 100. The IGD value of the obtained subset with respect to the corresponding candidate data set (i.e., with respect to the reference point set for the IGD calculation) is used to evaluate the performance of each algorithm. All the experiments are carried out on the server equipped with Intel Xeon E5-2620.

III-C How to Select a Representative Point in Each Cluster?

In some clustering algorithms, such as K-medoids and IGD-based subset selection, the representative point of each cluster is obtained as the cluster center when the execution of the clustering algorithm is terminated. We can directly use these points (i.e., the obtained cluster centers) as the final subset. However, in K-means++, although a pre-specified number of cluster centers are determined, each cluster center is not a data point but the mean (centroid) of the data points in the cluster. In hierarchical clustering algorithms, no clear cluster center is determined. Therefore, we need to select a representative point for each cluster in those clustering algorithms where the cluster center is not a data point or no cluster center is explicitly calculated. We can choose the representative point using the following two strategies.

-

1.

Strategy 1: Choose the data point closest to the mean (centroid) of the data points in the cluster.

(5) -

2.

Strategy 2: Choose the point with the smallest total distance to all data points in the cluster.

(6)

Table I shows the comparison results between these two strategies on the clusters obtained by K-means++ and hierarchical clustering with Ward’s linkage. We compare these two strategies using the Wilcoxon rank-sum test [33] with the significance level 0.1. The ”+” and ”-” in the table mean Strategy 2 is significantly better and significantly worse than Strategy 1, respectively, while ”=” means there is no statistically significant difference between the two strategies.

As shown in Table I, we can see that when the data set size is 200, there is no significant difference between these two strategies. The reason is that the expected number of data points in each cluster is two when the number of clusters is set to 100. For a cluster with two data points, if Strategy 1 is used, the cluster center is the midpoint of the two data points, and the distance from to each data point is the same. Therefore, one of the two points is randomly selected as the representative point. If Strategy 2 is used, the distance from one point to the other point is the same (independent of the choice of one of the two points). Thus, one of the two points is randomly selected as the representative point. Thus, the difference between these two strategies is not significant.

When the size of the data set is larger, we can see that Strategy 2 always has the better or equal performance to Strategy 1 since the objective of Strategy 2 is consistent with IGD minimization while the objective of Strategy 1 is slightly different from IGD minimization. Besides, Strategy 2 is more robust to outliers in the cluster. Therefore, we use Strategy 2 to select representative data points in the remaining experiments.

| Data Set | K-Means++ | HC (Ward) | |

|---|---|---|---|

| Size | +/=/- | +/=/- | |

| 3-objective | 200 | 0/6/0 | 0/6/0 |

| 4000 | 1/5/0 | 6/0/0 | |

| 10000 | 3/3/0 | 6/0/0 | |

| 8-objective | 200 | 0/6/0 | 0/6/0 |

| 4000 | 3/3/0 | 5/1/0 | |

| 10000 | 2/4/0 | 4/2/0 |

III-D Performance on Three-Objective Data Sets

Table II shows the average IGD value of the subsets obtained by each algorithm on each three-objective data set over 11 runs. The number in parentheses shows the ranking of the corresponding algorithm (among the six algorithms) for the corresponding test problem.

| Data set size | Shape | K-means++ | K-medoids | Greedy IGD | HC (ward) | HC (weighted) | HC (single) |

|---|---|---|---|---|---|---|---|

| 200 | Linear | 1.5605E-02 (6) | 1.5046E-02 (4) | 1.4034E-02 (3) | 1.3951E-02 (2) | 1.3815E-02 (1) | 1.5251E-02 (5) |

| Concave | 1.9853E-02 (6) | 1.9256E-02 (4) | 1.8238E-02 (3) | 1.8126E-02 (2) | 1.7905E-02 (1) | 1.9356E-02 (5) | |

| Convex | 1.1120E-02 (6) | 1.0633E-02 (4) | 9.9369E-03 (2) | 9.8542E-03 (1) | 9.9591E-03 (3) | 1.0866E-02 (5) | |

| Inverted | 2.1808E-02 (6) | 2.1619E-02 (5) | 2.0249E-02 (3) | 1.9523E-02 (2) | 1.9387E-02 (1) | 2.1053E-02 (4) | |

| Disconnected 1 | 2.0288E-02 (5) | 1.9955E-02 (4) | 1.8635E-02 (3) | 1.8026E-02 (1) | 1.8094E-02 (2) | 2.2321E-02 (6) | |

| Disconnected 2 | 5.1448E-02 (5) | 5.0210E-02 (4) | 4.6697E-02 (3) | 4.5358E-02 (2) | 4.5127E-02 (1) | 5.3329E-02 (6) | |

| Avg. Rank | 5.67 | 4.16 | 2.83 | 1.67 | 1.5 | 5.17 | |

| 4000 | Linear | 3.4245E-02 (1) | 3.4610E-02 (3) | 3.5086E-02 (4) | 3.4267E-02 (2) | 3.5127E-02 (5) | 5.4144E-02 (6) |

| Concave | 4.5270E-02 (1) | 4.5841E-02 (3) | 4.6600E-02 (4) | 4.5425E-02 (2) | 4.6955E-02 (5) | 9.7713E-02 (6) | |

| Convex | 2.4799E-02 (2) | 2.5095E-02 (3) | 2.5222E-02 (4) | 2.4690E-02 (1) | 2.7967E-02 (5) | 1.1114E-01 (6) | |

| Inverted | 4.5342E-02 (2) | 4.5933E-02 (3) | 4.6540E-02 (4) | 4.5275E-02 (1) | 4.7141E-02 (5) | 1.3222E-01 (6) | |

| Disconnected 1 | 4.2280E-02 (2) | 4.2566E-02 (3) | 4.3181E-02 (4) | 4.1740E-02 (1) | 4.4241E-02 (5) | 1.1989E-01 (6) | |

| Disconnected 2 | 1.1831E-01 (2) | 1.1997E-01 (4) | 1.1970E-01 (3) | 1.1717E-01 (1) | 1.3169E-01 (5) | 4.2332E-01 (6) | |

| Avg. Rank | 1.67 | 3.16 | 3.83 | 1.33 | 5 | 6 | |

| 10000 | Linear | 3.5000E-02 (1) | 3.5515E-02 (3) | 3.6214E-02 (4) | 3.5495E-02 (2) | 3.6623E-02 (5) | 5.7334E-02 (6) |

| Concave | 4.6263E-02 (1) | 4.6975E-02 (3) | 4.7350E-02 (4) | 4.6844E-02 (2) | 4.8787E-02 (5) | 1.3004E-01 (6) | |

| Convex | 2.5000E-02 (1) | 2.5479E-02 (3) | 2.5527E-02 (4) | 2.5258E-02 (2) | 2.8881E-02 (5) | 1.2888E-01 (6) | |

| Inverted | 4.6183E-02 (1) | 4.6816E-02 (2) | 4.7502E-02 (4) | 4.6865E-02 (3) | 4.8578E-02 (5) | 1.3600E-01 (6) | |

| Disconnected 1 | 4.2736E-02 (1) | 4.3048E-02 (3) | 4.4097E-02 (4) | 4.2901E-02 (2) | 4.5914E-02 (5) | 1.1568E-01 (6) | |

| Disconnected 2 | 1.2030E-01 (1) | 1.2231E-01 (3) | 1.2324E-01 (4) | 1.2134E-01 (2) | 1.3523E-01 (5) | 4.6655E-01 (6) | |

| Avg. Rank | 1 | 2.83 | 4 | 2.17 | 5 | 6 |

When the data set size is 200, hierarchical clustering with weighted average linkage achieves the best average rank while K-means++ gets the worst average rank. However, when the data set size increases, which is similar to subset selection from the archive, the performance of K-means++ clearly improves and the performance of hierarchical clustering with weighted average linkage clearly degrades. When the data set size is 10,000, K-means++ always achieves the best performance. This shows that K-means++ is not suitable when the number of data points in a cluster is too small. This is because the distance between the cluster center of K-means++ and the selected representative point is large when the data set size is small. By increasing the data set size, this distance becomes small. As a result, the performance of K-means++ as a subset selection method is improved. For higher-dimensional problems (e.g., Table III on the 8-objective data sets), much more data points are needed to have a close data point to each cluster center. Thus, K-means++ is not the best even in the case of 10,000 data points.

Computation time is also important in evaluating clustering algorithms. Fig. 2 shows the relation between the average IGD value of the obtained subsets and the average computation time of each algorithm for different data set sizes. Since the IGD values of the selected subsets from data sets on different Pareto fronts have large differences (depending on the Pareto front shape), each IGD value is normalized by dividing the largest IGD value obtained for each data set before calculating the average value. To be consistent, we do the same normalization on the computation time. On the data sets of size 10,000, since the computation time of greedy IGD-based subset selection is much larger than that of the other algorithms, we use the log scale when plotting the figure.

As shown in Fig. 2(a), when the data set size is small (i.e., 200), hierarchical clustering with weighted average linkage dominates all the other algorithms (with respect to both the IGD value and the computation time), and hierarchical clustering with Ward’s linkage achieves similar performance. When the data set size is large (i.e., 10,000), we can observe from Fig. 2(b) that K-means++ is the most efficient one and achieves the best average IGD value. K-medoids and hierarchical clustering with Ward’s linkage are similar in terms of the IGD value and the computation time.

In general, on the three-objective data sets, if the data set size is small, hierarchical clustering with weighted average linkage is the best choice among the examined algorithms. If the data set size is large, K-means++, which can obtain the best IGD value in the shorted computation time is the best choice.

| Data set size | Shape | K-means++ | K-medoids | Greedy IGD | HC ward | HC (weight) | HC (single) |

|---|---|---|---|---|---|---|---|

| 200 | Linear | 9.2909E-02 (5) | 8.9396E-02 (4) | 8.6373E-02 (1) | 8.7361E-02 (3) | 8.7329E-02 (2) | 9.8237E-02 (6) |

| Concave | 1.7983E-01 (6) | 1.7308E-01 (4) | 1.6833E-01 (1) | 1.6986E-01 (2) | 1.6999E-01 (3) | 1.7969E-01 (5) | |

| Convex | 2.5646E-02 (5) | 2.4751E-02 (4) | 2.3729E-02 (1) | 2.4097E-02 (2) | 2.4464E-02 (3) | 2.9236E-02 (6) | |

| Inverted | 1.7442E-01 (5) | 1.6856E-01 (4) | 1.6219E-01 (1) | 1.6429E-01 (3) | 1.6349E-01 (2) | 1.7501E-01 (6) | |

| Disconnected 1 | 3.0614E-01 (5) | 2.9561E-01 (4) | 2.8529E-01 (1) | 2.9072E-01 (3) | 2.8885E-01 (2) | 3.1355E-01 (6) | |

| Disconnected 2 | 2.7587E-01 (5) | 2.6896E-01 (4) | 2.5453E-01 (1) | 2.5721E-01 (3) | 2.5477E-01 (2) | 3.5119E-01 (6) | |

| Avg. Rank | 5.17 | 4 | 1 | 2.67 | 2.33 | 5.83 | |

| 4000 | Linear | 1.7655E-01 (2) | 1.7679E-01 (3) | 1.7381E-01 (1) | 1.7755E-01 (4) | 1.8155E-01 (5) | 2.3244E-01 (6) |

| Concave | 3.3520E-01 (2) | 3.3587E-01 (3) | 3.3000E-01 (1) | 3.3711E-01 (4) | 3.4313E-01 (5) | 4.4839E-01 (6) | |

| Convex | 5.4729E-02 (2) | 5.5212E-02 (4) | 5.3800E-02 (1) | 5.4770E-02 (3) | 6.2500E-02 (5) | 9.2508E-02 (6) | |

| Inverted | 3.3606E-01 (3) | 3.3589E-01 (2) | 3.3106E-01 (1) | 3.3721E-01 (4) | 3.4376E-01 (5) | 4.6123E-01 (6) | |

| Disconnected 1 | 5.6078E-01 (3) | 5.5921E-01 (2) | 5.5612E-01 (1) | 5.6186E-01 (4) | 5.8916E-01 (5) | 7.5353E-01 (6) | |

| Disconnected 2 | 6.2260E-01 (2) | 6.2575E-01 (4) | 6.1100E-01 (1) | 6.2471E-01 (3) | 7.1781E-01 (5) | 1.1343E+00 (6) | |

| Avg. Rank | 2.33 | 3 | 1 | 3.67 | 5 | 6 | |

| 10000 | Linear | 1.7777E-01 (2) | 1.7977E-01 (3) | 1.7637E-01 (1) | 1.7994E-01 (4) | 1.8207E-01 (5) | 2.1790E-01 (6) |

| Concave | 3.3675E-01 (2) | 3.4223E-01 (4) | 3.3569E-01 (1) | 3.4089E-01 (3) | 3.4824E-01 (5) | 4.5376E-01 (6) | |

| Convex | 5.5902E-02 (2) | 5.6630E-02 (4) | 5.5219E-02 (1) | 5.6231E-02 (3) | 6.5573E-02 (5) | 1.0163E-01 (6) | |

| Inverted | 3.3701E-01 (2) | 3.4166E-01 (3) | 3.3555E-01 (1) | 3.4176E-01 (4) | 3.4809E-01 (5) | 4.5171E-01 (6) | |

| Disconnected 1 | 5.7045E-01 (2) | 5.7501E-01 (3) | 5.7029E-01 (1) | 5.7680E-01 (4) | 5.9937E-01 (5) | 7.2398E-01 (6) | |

| Disconnected 2 | 6.3377E-01 (2) | 6.4113E-01 (4) | 6.2533E-01 (1) | 6.3674E-01 (3) | 7.6525E-01 (5) | 1.2024E+00 (6) | |

| Avg. Rank | 2 | 3.5 | 1 | 3.5 | 5 | 6 |

III-E Performance on Eight-Objective Data Sets

We use the eight-objective data sets to test the performance of each algorithm in many-objective scenarios. Table III shows the average IGD value of the subsets obtained by each algorithm on each eight-objective data set over 11 runs.

Although greedy IGD-based subset selection does not have very good performance in the three-objective case in Table II, it always achieves the best performance in the eight-objective case in Table III. We can see that in the three-objective case (Table II), hierarchical clustering with Ward’s linkage consistently outperforms greedy IGD-based subset selection. However, in the eight-objective case, greedy IGD-based subset selection always outperforms hierarchical clustering with Ward’s linkage. This is due to the curse of dimensionality. The linkage functions to measure the distance between two clusters are usually designed for clustering in two or three dimensions. Thus, the performance of hierarchical clustering tends to degrade as the number of dimensions increases. Such performance deterioration can also be observed on the other clustering algorithms from the comparison between Table II and Table III. Besides, we can observe in Table III that the rank of hierarchical clustering with weighted average linkage significantly decreases when the data set size increases as in Table II.

In Fig. 3, we show the relation between the average IGD value of the obtained subsets and the average computation time of each algorithm on the eight-objective data sets. The normalization is performed in the same manner as in Fig. 2.

We can see from Fig. 3(a) that greedy IGD-based subset selection and hierarchical clustering with weighted average linkage are non-dominated to each other. Although greedy IGD-based subset selection can achieve the best IGD performance, it uses a much longer computation time than hierarchical clustering. Hierarchical clustering with weighted average linkage is a cheaper alternative with a slight performance deterioration. In Fig. 3(b), we can see that K-means++ and greedy IGD-based subset selection are non-dominated to each other. All the other algorithms are dominated by K-means++ in Fig. 3(b).

On the eight-objective data sets, greedy IGD-based subset selection always achieves the best IGD performance among the examined algorithms. If the computation time is severely limited, hierarchical clustering with weighted average linkage is a cheaper alternative when the data set size is small, and K-means++ is a cheaper alternative when the data set size is large.

IV Introduce Preference into Clustering

Clustering-based subset selection tends to select uniformly distributed data points from a given data set. However, we may prefer some special solutions among the solutions obtained by an EMO algorithm (i.e., prefer some parts of the Pareto front). For example, in some applications, decision-makers are interested in knee regions [34] since solutions in these regions achieve a better trade-off between objectives. For a solution in the knee region, a small improvement in one objective may cause a large deterioration in other objectives.

To select more useful solutions for decision-makers, we can introduce the preference for knee regions to the K-medoids clustering algorithm by modifying the medoids update and data points assignment procedure. When updating the medoids, instead of using Euclidean distance, we can use the similarity measure in the IGD+ indicator [35]: That is, the distance is calculated only for inferior objectives of than . Therefore, in each iteration, we aim to find a new medoid for each cluster which minimizes . Then, each data point is re-assigned to the medoid with the smallest .

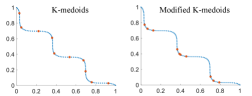

Fig. 4 shows the subsets obtained by K-medoids and the modified K-medoids on two-objective data sets with 100 data points. The blue points in Fig. 4 are data sets and the red points are selected subsets. The number of clusters (i.e., subset size) is set to 10. We generate four data sets with various shapes. The linear and convex data sets contain uniformly distributed data points on the true Pareto front of DTLZ1 [27] and MaF3 [28], respectively. The other two data sets (i.e., wave and DEB2K) with three knee points are generated according to [36] and [34], respectively.

We can see that for the linear data set, similar results are obtained from K-medoids and the modified K-medoids in Fig. 4 (a). However, for the convex data set in Fig. 4 (b), most of the points selected by the modified K-medoids are distributed in the knee region. In Fig. 4(c) and Fig. 4(d), we can see that knee regions are clearly identified by the modified K-medoids, and most of the selected solutions are in these regions.

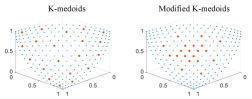

Fig. 5 shows subsets selected by K-medoids and modified K-medoids on three-objective data sets with 210 data points. Accordingly, the size of the subset is increased to 20. We can observe a similar result from Fig. 5 as in Fig. 4 (i.e., most solutions are in knee regions). These experiments clearly demonstrate that we can modify K-medoids to introduce decision-maker’s preference for knee regions, which is important to decision-makers. Other clustering-based subset selection methods can also be modified in a similar way.

V Conclusion and Future Work

In this paper, we reviewed popular clustering algorithms and pointed out that IGD-based subset selection can be viewed as clustering. Then, we compared the IGD value of the obtained subset and the computation time of each algorithm on three-objective and eight-objective data sets. Our experimental results showed that when the data set size is small, hierarchical clustering with weighted average linkage is the best choice in the low-dimensional case and greedy IGD-based subset selection is the best choice in the high-dimensional case. When the data set size is large (e.g., subset selection from the UEA), K-means++ is the best in the low-dimensional case and greedy IGD-based subset selection is the best choice in the high-dimensional case. In addition, we showed that by modifying the similarity measure, we can include decision-maker’s preference in clustering.

As shown in this paper, general clustering algorithms have weakness in some scenarios since they are not specially designed for subset selection in EMO algorithms. Proposing clustering algorithms suitable for EMO algorithms is a promising future research direction. Additionally, comparing different clustering algorithms in more scenarios using various performance indicators is also an important future research topic. Clustering algorithms will be further utilized in EMO algorithms.

References

- [1] K. Deb, A. Pratap, S. Agarwal, and T. Meyarivan, “A fast and elitist multiobjective genetic algorithm: NSGA-II,” IEEE Transactions on Evolutionary Computation, vol. 6, no. 2, pp. 182–197, Apr. 2002.

- [2] Q. Zhang and H. Li, “MOEA/D: A multiobjective evolutionary algorithm based on decomposition,” IEEE Transactions on Evolutionary Computation, vol. 11, no. 6, pp. 712–731, Dec. 2007.

- [3] N. Beume, B. Naujoks, and M. Emmerich, “SMS-EMOA: Multiobjective selection based on dominated hypervolume,” European Journal of Operational Research, vol. 181, no. 3, pp. 1653–1669, Sep. 2007.

- [4] H. Ishibuchi, L. M. Pang, and K. Shang, “A new framework of evolutionary multi-objective algorithms with an unbounded external archive,” in Proc. of 24th European Conference on Artificial Intelligence, vol. 325, 2020, pp. 283–290.

- [5] H. K. Singh, K. S. Bhattacharjee, and T. Ray, “Distance-based subset selection for benchmarking in evolutionary multi/many-objective optimization,” IEEE Transactions on Evolutionary Computation, vol. 23, no. 5, pp. 904–912, Oct. 2019.

- [6] R. Tanabe, H. Ishibuchi, and A. Oyama, “Benchmarking multi- and many-objective evolutionary algorithms under two optimization scenarios,” IEEE Access, vol. 5, pp. 19 597–19 619, Sep. 2017.

- [7] W. Chen, H. Ishibuchi, and K. Shang, “Modified distance-based subset selection for evolutionary multi-objective optimization algorithms,” in Proc. of IEEE Congress on Evolutionary Computation, 2020, pp. 1–8.

- [8] J. Bader and E. Zitzler, “HypE: An algorithm for fast hypervolume-based many-objective optimization,” Evolutionary Computation, vol. 19, no. 1, pp. 45–76, Mar. 2011.

- [9] H. Ishibuchi, L. M. Pang, and K. Shang, “Solution subset selection for final decision making in evolutionary multi-objective optimization,” in arXiv, 2020.

- [10] H. Zhang, S. Song, A. Zhou, and X.-Z. Gao, “A clustering based multiobjective evolutionary algorithm,” in Proc. of IEEE Congress on Evolutionary Computation, 2014, pp. 723–730.

- [11] Y. Hua, Y. Jin, and K. Hao, “A clustering-based adaptive evolutionary algorithm for multiobjective optimization with irregular pareto fronts,” IEEE Transactions on Cybernetics, vol. 49, no. 7, pp. 2758–2770, Jul. 2019.

- [12] S. Liu, Q. Lin, K.-C. Wong, C. A. C. Coello, J. Li, Z. Ming, and J. Zhang, “A self-guided reference vector strategy for many-objective optimization,” IEEE Transactions on Cybernetics (Early Access), 2020.

- [13] J. MacQueen, “Some methods for classification and analysis of multivariate observations,” in Proc. of the Fifth Berkeley Symposium on Mathematical Statistics and Probability, 1967, pp. 281–297.

- [14] D. Arthur and S. Vassilvitskii, “k-means++: The advantages of careful seeding,” Stanford, Tech. Rep., 2006.

- [15] H.-S. Park and C.-H. Jun, “A simple and fast algorithm for k-medoids clustering,” Expert Systems with Applications, vol. 36, no. 2, pp. 3336–3341, Mar. 2009.

- [16] M. Ester, H.-P. Kriegel, J. Sander, X. Xu et al., “A density-based algorithm for discovering clusters in large spatial databases with noise,” in Proc. of the Second International Conference on Knowledge Discovery and Data Mining, vol. 96, no. 34, 1996, pp. 226–231.

- [17] S. C. Johnson, “Hierarchical clustering schemes,” Psychometrika, vol. 32, no. 3, pp. 241–254, Sep. 1967.

- [18] R. R. Sokal, “A statistical method for evaluating systematic relationships,” Science Bulletin, vol. 38, pp. 1409–1438, 1958.

- [19] J. H. Ward Jr, “Hierarchical grouping to optimize an objective function,” Journal of the American Statistical Association, vol. 58, no. 301, pp. 236–244, Mar. 1963.

- [20] C. E. Rasmussen, “The infinite gaussian mixture model,” in Proc. of Annual Conference on Neural Information Processing Systems, vol. 12, 1999, pp. 554–560.

- [21] W. Wang, J. Yang, R. Muntz et al., “STING: A statistical information grid approach to spatial data mining,” in Proc. of International Conference on Very Large Data Bases, vol. 97, 1997, pp. 186–195.

- [22] J. C. Bezdek, R. Ehrlich, and W. Full, “FCM: The fuzzy c-means clustering algorithm,” Computers & Geosciences, vol. 10, no. 2-3, pp. 191–203, 1984.

- [23] D. Xu and Y. Tian, “A comprehensive survey of clustering algorithms,” Annals of Data Science, vol. 2, no. 2, pp. 165–193, Aug. 2015.

- [24] M. Daszykowski, B. Walczak, and D. Massart, “Representative subset selection,” Analytica Chimica Acta, vol. 468, no. 1, pp. 91–103, Sep. 2002.

- [25] C. Boutsidis, J. Sun, and N. Anerousis, “Clustered subset selection and its applications on it service metrics,” in Proc. of the 17th ACM Conference on Information and Knowledge Management, 2008, pp. 599–608.

- [26] A. Zhou, Y. Jin, Q. Zhang, B. Sendhoff, and E. Tsang, “Combining model-based and genetics-based offspring generation for multi-objective optimization using a convergence criterion,” in Proc. of IEEE International Conference on Evolutionary Computation, 2006, pp. 892–899.

- [27] K. Deb, L. Thiele, M. Laumanns, and E. Zitzler, “Scalable multi-objective optimization test problems,” in Proc. of IEEE Congress on Evolutionary Computation, vol. 1, 2002, pp. 825–830.

- [28] R. Cheng, M. Li, Y. Tian, X. Zhang, S. Yang, Y. Jin, and X. Yao, “A benchmark test suite for evolutionary many-objective optimization,” Complex & Intelligent Systems, vol. 3, no. 1, pp. 67–81, Mar. 2017.

- [29] H. Jain and K. Deb, “An evolutionary many-objective optimization algorithm using reference-point based nondominated sorting approach, part II: Handling constraints and extending to an adaptive approach,” IEEE Transactions on Evolutionary Computation, vol. 18, no. 4, pp. 602–622, Aug. 2014.

- [30] S. Huband, P. Hingston, L. Barone, and L. While, “A review of multiobjective test problems and a scalable test problem toolkit,” IEEE Transactions on Evolutionary Computation, vol. 10, no. 5, pp. 477–506, Oct. 2006.

- [31] Y. Tian, X. Xiang, X. Zhang, R. Cheng, and Y. Jin, “Sampling reference points on the pareto fronts of benchmark multi-objective optimization problems,” in Proc. of IEEE Congress on Evolutionary Computation, 2018, pp. 1–6.

- [32] W. Chen, H. Ishibuchi, and K. Shang, “Fast greedy subset selection from large candidate solution sets in evolutionary multi-objective optimization,” IEEE Transactions on Evolutionary Computation (Early Access), 2021.

- [33] T. Ulrich, D. Brockhoff, and E. Zitzler, “Pattern identification in pareto-set approximations,” in Proc. of 10th Annual Conference on Genetic and Evolutionary Computation, 2008, pp. 737–744.

- [34] J. Branke, K. Deb, H. Dierolf, and M. Osswald, “Finding knees in multi-objective optimization,” in Proc. of International Conference on Parallel Problem Solving from Nature, 2004, pp. 722–731.

- [35] H. Ishibuchi, H. Masuda, Y. Tanigaki, and Y. Nojima, “Modified distance calculation in generational distance and inverted generational distance,” in Proc. of International Conference on Evolutionary Multi-criterion Optimization, 2015, pp. 110–125.

- [36] A. P. Guerreiro, C. M. Fonseca, and L. Paquete, “Greedy hypervolume subset selection in low dimensions,” Evolutionary Computation, vol. 24, no. 3, pp. 521–544, Sep. 2016.