Cleaning Images with Gaussian Process Regression

Abstract

Many approaches to astronomical data reduction and analysis cannot tolerate missing data: corrupted pixels must first have their values imputed. This paper presents astrofix, a robust and flexible image imputation algorithm based on Gaussian Process Regression (GPR). Through an optimization process, astrofix chooses and applies a different interpolation kernel to each image, using a training set extracted automatically from that image. It naturally handles clusters of bad pixels and image edges and adapts to various instruments and image types. For bright pixels, the mean absolute error of astrofix is several times smaller than that of median replacement and interpolation by a Gaussian kernel. We demonstrate good performance on both imaging and spectroscopic data, including the SBIG 6303 0.4m telescope and the FLOYDS spectrograph of Las Cumbres Observatory and the CHARIS integral-field spectrograph on the Subaru Telescope.

1 Introduction

The history of data artifacts is as long as the history of observational astronomy. Artifacts such as dead pixels, hot pixels, and cosmic ray hits are common in astronomical images. They at best render the pixels’ data unusable while at worst disable the entire image in downstream approaches.

In dealing with missing pixels, some astronomical procedures simply ignore them while others require imputing their values first. Optimal extraction of spectra (Horne, 1986) and Point Spread Function (PSF) photometry ignore missing data, while box spectral extraction and aperture photometry do not. Aperture photometry and box extraction have the advantage of requiring little knowledge about the PSF or line-spread function (LSF). For this reason, aperture photometry has been used for ultra-precise Kepler photometry (Jenkins et al., 2010). Box extraction is currently standard for the SPHERE (Claudi et al., 2008) and GPI (Macintosh et al., 2008) integral-field spectrographs.

In general, correcting the corrupted data in an image involves two steps: identifying what they are and imputing their values. Existing algorithms have emphasized bad pixel identification and rejection. For example, there are well-developed packages that detect cosmic rays (CRs) by comparing multiple exposures (e.g. Windhorst et al., 1994). When multiple exposures are not available, Rhoads (2000) rejects CRs by applying a PSF filter, van Dokkum (2001) by Laplacian edge detection (LACosmic), and Pych (2004) by iterative histogram analysis. Among the above methods, LACosmic offers the best performance (Farage & Pimbblet, 2005). Approaches based on machine learning like deepCR (Zhang & Bloom, 2020), a deep-learning algorithm, may offer further improvements.

In contrast, the literature on methods of imputing missing data is sparse. Currently, the most common approach is the median replacement, which replaces a bad pixel with the median of its neighbours. Algorithms that apply median replacement include LACosmic. Some other packages, such as astropy.convolution, take an average of the surrounding pixels, weighted by Gaussian kernels. An alternative is a 1D linear interpolation. This approach is standard for the integral-field spectrographs GPI (Macintosh et al., 2008) and SPHERE (Claudi et al., 2008). deepCR, on the other hand, predicts the true pixel values by a trained neural network. However, none of these methods are statistically well-motivated, and they usually apply a fixed interpolation kernel to all images. In reality, however, the optimal kernel could vary from images to images. Moreover, in a continuous region of bad pixels or near the boundary of an image, most existing data imputation approaches either have their performance compromised or have to treat these regions as special cases. Only deepCR can handle them naturally with minimal performance differences.

In this paper, we present astrofix, a robust and flexible data imputation approach based on Gaussian Process Regression (GPR). GPR defines a family of interpolation kernels, within which we choose the optimal kernel for each image, using a training set that is extracted automatically from that image. We structure the paper as follows: Section 2 introduces GPR and the covariance function, explains how they lead to a parametric family of interpolation kernels and shows how this formalism handles additional missing data naturally. Section 3 discusses our strategies of choosing the optimal kernel. Section 4 specifies the implementation of our algorithm. Section 5 shows four examples—a CCD image of a globular cluster, a spectroscopic CCD exposure, a raw CHARIS image, and PSF photometry and astrometry on a stellar image—to demonstrate the consistently good performance of our algorithm. Finally, Section 6 summarizes our approach, discusses its limitations, and proposes directions for future work.

2 An Interpolation Kernel from Gaussian Process Regression

GPR is a statistical method of inferring the values of a function at locations , given that we know its values at locations and the covariance function that gives the covariance between any two points and . The true values of the function are assumed to be drawn from a multivariate Gaussian distribution with zero mean and an infinite-dimensional covariance matrix given by the covariance function:

| (1) |

In many cases, we assume that we have only imperfect measurements of the true function values . We assume the measurement errors to be Gaussian with zero mean and covariance matrix . Then, the function values at locations are related to the measured values at locations by

| (2) |

The function then has the expectation value

| (3) |

GPR has been implemented in code packages such as GPy (The GPy authors, 2014) and George (Ambikasaran et al., 2015) and is commonly used for data interpolation and model inference, but its applications in image processing are rare because of the enormous size of imaging data. In the present paper we apply GPR to the problem of imputing missing data in a two-dimensional image. We take the function to be the measured counts by an instrument subject to read noise, shot noise, and instrumental effects; the function to be the true intensity on the sky; to be the good pixels; and to be the bad pixels where the measured values are not available. The problem is then equivalent to finding from by Equation (3) given a covariance function . The values approximate the missing data at pixels and can replace them in the original two-dimensional image.

Because of the complexity of matrix inversion, using Equation (3) on an entire image with several million pixels is not possible. We instead correct for one bad pixel at a time using only its neighbors. The resulting estimate of a bad pixel’s value turns out to be a weighted sum of its neighbours’ counts. This may be thought of as an interpolation kernel applicable to the entire image. For example, if we use all pixels that are in the region centered at the bad pixel, then would be a scalar, an vector, a vector, and an matrix. If is diagonal with constant variance , and if depends only on the relative position , then the vector would be the same for all . Filling in zero for the missing central pixel (so that it is neglected when estimating its value) and reshaping to a array, becomes an interpolation kernel. Figure 1 compares the convolution of an image with a GPR kernel to that with a median filter and with a Gaussian kernel.

The residual of the convolution at a pixel can be thought of as the residual of image imputation if that pixel were the only bad pixel on the image. The convolution kernel that produces the least residual is thus the most suitable for image imputation. In our comparison, the GPR kernel best restores the original image because we have optimized it for image imputation using methods described in Section 3.1. In Figure 1 and all subsequent figures, the kernels and residuals are plotted on a color scale such that white is zero, red is positive, and blue is negative.

2.1 The Covariance Function

The choice of covariance function determines the smoothness and the extent of the kernel. In principle, a wide variety of covariance functions could be used, but we will focus on the squared exponential covariance function because of its simplicity and its good approximation to a typical instrumental PSF:

| (4) |

The parameter in Equation (4) describes the magnitude of the correlation between pixels; it should be compared to the data variance . The kernel does not depend on and individually but on the correlation-to-noise ratio , due to the fact that and , so , canceling in Equation (3). Hence, in the rest of the paper we will set (so that is the identity matrix) and use to mean . The noiseless limit implies a constant, dark image, and a kernel independent of . The other limit, , implies a noisy image where no useful information about a pixel’s value can be extracted from its neighbors. The parameter gives the characteristic scale over which the image varies. Larger values of correspond to broader kernels. The values of the hyperparameters and , together with a particular choice of the covariance function, parameterize a family of GPR kernels.

Figure 2 shows four examples of GPR kernels with different hyperparameters and . As increases, GPR makes use of more pixels in the region and assigns larger weights to the adjacent pixels, allowing significant negative weights to be used. As increases, the absolute values of the weights decay more slowly with increasing distance; the weights change over a larger scale. All GPR kernels with a single bad pixel have ring-like structure because the value of the squared exponential covariance function depends only on the Euclidean distance to the central pixel, and they are zero at the center where the bad pixel is located.

2.2 Additional Missing Pixels in the Kernel

The fact that each particular choice of , , and covariance function uniquely determines a convolution kernel has several advantages. One of them is that when there is a continuous region of bad pixels to be assigned zero weights, GPR is able to recompute the weights on all other pixels instead of simply re-normalizing them. To give zero weights to additional bad pixels near the bad pixel to be corrected, all we need to do is remove the corresponding entries in the covariance function and GPR automatically compensates for the loss of information by increasing or reducing the weights of their neighbours. Figure 3 panels (a) through (c) show how additional bad pixels near the central bad pixel re-weight the entire GPR kernel and break its symmetry.

Another advantage of GPR is its straightforward handling of bad pixels on the edges of an image. Similar to the accommodation of additional bad pixels, we only need to remove all points that are outside of the image from the covariance function, and GPR will recompute the weights of the rest of the points. Figure 3 panel (d) gives an example of a truncated GPR kernel due to a bad pixel on the top left corner of the image. Because the bad pixel is at relative to the corner, with a kernel width of , we are only able to construct a kernel. GPR enables the weights to change accordingly to deal with the loss of information.

3 Optimizing the GPR Kernel

Because , , and the covariance function parameterize a family of convolution kernels, we can optimize within the parameter space for each image to obtain a kernel that is the most suitable for repairing that image. In the high signal-to-noise ratio (SNR) limit, the optimization process can be viewed as modelling the instrumental effects on the structure of an image. For an image of distant stars, for example, it is roughly equivalent to finding the correlation function that best represents the instrumental PSF. The optimal kernel should not change as long as this PSF is stable across the image. Variations in the image itself, such as a spatially changing flux, do not affect the optimal kernel as long as the kernel is normalized. Hence, we optimize only once per image, and in general, one can optimize only once per instrument and per configuration.

3.1 Choosing the Hyperparameters

To choose the optimal hyperparameters and , our strategy is to identify a set of well-measured pixels in the image to serve as the training set. Given and , we can then convolve the entire image by the kernel defined by and and compute the subsequent change in value at each pixel in the training set. We find the and that minimize the mean absolute deviation of these residuals.

The training set has to be large enough to be representative of the image, and it should focus on the pixels of scientific importance. Generally this means pixels with count rates appreciably higher than the background level. A kernel trained on low-SNR background pixels would simply learn to average a large set of surrounding pixels rather than identifying the correlation between pixels containing astronomically useful flux. A kernel optimized on a high-SNR training set would be good at correcting pixels of scientific importance even for low-SNR images (see Section 5.4). For a typical application, then, the training set should consist of pixels well above the background level but below saturation. Section 4.1 describes astrofix’s choice of training set.

3.2 Choosing the Optimal Covariance Function

In principle, any covariance function can be used to generate GPR kernels, but only covariance functions that resemble the instrumental PSF would be useful. The squared exponential covariance function is therefore a reasonable choice. One notable alternative that we investigate here is the Ornstein-Uhlenbeck covariance function:

| (5) |

Compared to the squared exponential covariance function, the Ornstein-Uhelenbeck covariance function is not quadratic but linear in Euclidean distance in the exponent. As a consequence, it tends to under-emphasize pixels that are the closest to the central bad pixel, which often contain the most important information. Figure 4 compares the optimized kernels for the star image in Figure 1 using the Squared Exponential covariance function (Equation (4)) with those using the Ornstein-Uhelenbeck covariance function (Equation (5)). The magnitude of the weights in the central region is significantly smaller for the kernels than for the kernels.

One other feature of a kernel is that its more slowly changing function values lead to smaller fluctuations in the weights and less prominent negative weights. As a consequence, most outer pixels have almost zero weights. In fact, for most values of smaller than the kernel width, almost all of the weight in a kernel is concentrated in the central region. Hence, a kernel of width is almost identical to a kernel of width , which is not the case for kernels.

As a result of the two aforementioned features, a kernel is usually not as accurate and as flexible as a kernel. Figure 5 shows the histogram of residuals (normalized by the ) from convolving the star image with the two types of kernels. In terms of the mean absolute deviations of the residuals, the kernel with width 9 performs better than the other kernels, while the kernel with width 9 has almost the same residual distribution as the kernel with width 5.

astrofix does have other covariance functions implemented, but we have found the squared exponential to produce the narrowest residual distribution. We restrict further analysis in this paper to the squared exponential covariance function.

3.3 A Stretched Kernel for 2D Spectroscopic Data

So far, we have discussed GPR kernels that have weights depending only on the Euclidean distance , but for anisotropic images, it is sometimes preferred to use a kernel that has directional dependence. Such a kernel can be particularly useful to the imputation of 2D spectroscopic data. For this purpose, we define the stretched squared exponential covariance function:

| (6) |

which produces kernels determined by three parameters: , , and . We assume here that the preferred direction (e.g. the dispersion direction) approximately follows one of the orthogonal axes of the detector, though it would be straightforward to adopt a different angle between the preferred direction of the image and the detector axes. For isotropic images, we expect the optimization to give . For vertical spectroscopic lines (horizontal dispersion) in a slit spectrograph, we expect that because the pixel values vary on a larger scale in the -direction (along the slit). Figure 6 compares the isotropic and the stretched kernel optimized for the spectroscopic arc lamp image in Section 5.3, and Figure 10 shows that the stretched kernel produces smaller residuals in this example.

We encourage the user to exercise care with a stretched covariance function. The extra free parameter increases the risk of overfitting the hyperparameters. A stretched kernel can also smooth out a point source on the slit, especially if the hyperparameters are optimized on the sky background, a galaxy stretching the length of the slit, or a lamp flat. Our training image in this case was an arc lamp for wavelength calibration; the best-fit hyperparameters would differ for a galaxy or a twilight spectrum.

4 Implementation and Computational Cost

We implement the GPR image imputation algorithm in the Python package astrofix (Zhang & Brandt, 2021), which is available at https://github.com/HengyueZ/astrofix. astrofix accepts images with a bad pixel mask or images with bad pixels flagged as NaN, and it fixes any given image in three steps:

-

1.

Determine the training set of pixels that astrofix will attempt to reproduce.

-

2.

Find the optimal hyperparameters and (or , and ) given the training set from Step 1.

-

3.

Fix the image by imputing data for the bad pixels using the optimized kernel from Step 2.

We describe the details of each step in the subsections below. Appendix A details the computational cost of our approach. The computation time is a function of the image size and the fraction of bad pixels. On a single processor, it is 10 seconds for a image with 0.5% bad pixels, or a image with 0.1% bad pixels.

4.1 Defining the Training Set

The set of pixels used to optimize the GPR should include as many pixels of scientific importance as possible, while avoiding pixels that are close to the background level, saturated, or themselves bad. By default, we include all good (unflagged) pixels that are below of the brightest pixel’s value and median absolute deviations (MAD) above the median of the background. We take the median and the MAD of the image in lieu of the mean and standard deviation, because of the robustness and convenience of the median. The MAD is an underestimate of the standard deviation: for a Gaussian background distribution, MAD correspond to about .

In many cases, the users can replace the default training set selection parameters ( and ) with better ones by deriving the background distribution and the saturation level from the histogram of the image, but for high-SNR images with sufficient bright pixels, the quality of the training is largely insensitive to the parameters. The default training set usually works sufficiently well without requiring a highly accurate model of the background. For low-SNR images, one has to tune the parameters more carefully or use a high-SNR image taken by the same instrument (and under the same configuration) as the training set.

The training set might include pixels that are themselves good but are close to bad pixels that can ruin the convolution. We remove such pixels during the training process described in the next subsection.

4.2 Tuning the Hyperparameters

In the high-SNR limit, the training process can be considered as modeling the structure that an instrument imparts to an image, such as the PSF. Therefore, an efficient strategy is to run the training only once for each instrument using a combined training set built from multiple images. However, the optimal kernel may still vary from image to image due to, e.g., variations in the seeing. It is not computationally expensive to redo the training as appropriate for each image (see Appendix A).

In the training process, we convolve the training set pixels with GPR kernels and vary the hyperparameters and (or , , and ) to minimize the mean absolute residual in the training set. We only need to compute the kernel once at fixed values for and . The computational cost then scales as , where is the width (in pixels) of the kernel and is the number of pixels in the training set. We construct an image array of size to perform the convolution.

A problem naturally occurs in the limit of noiseless data, where the optimal value of the parameter (i.e. in our notation) would be infinite. If and is relatively large, evaluating Equation (3) requires the inversion of an ill-conditioned (though not formally singular) matrix. This leads to numerical instability in the constructed GPR kernel, and the optimization procedure can end up in a spurious minimum.

We restrict to small values by multiplying the mean absolute residual with a penalty function:

| (7) |

Here, can be interpreted as a ”soft” upper bound on . For , ; for , increases exponentially. determines how much can penetrate the soft upper bound. The best values of and are determined by the largest condition number that the machine can handle in a matrix inversion without much loss of accuracy. Figure 7 shows how the condition number depends on the hyperparameters and for 3 different kernel widths. A larger kernel width requires inverting a larger matrix, which leads to a larger condition number. The condition number increases quickly with for small and increases quickly with for larger . The contours indicate the upper bound on given a desired upper bound on the condition number. For instance, if the maximum acceptable condition number is , then in the worst case scenario (largest and ), is roughly the upper bound on . By default, we set and , which corresponds to a maximum condition number of about for and . The user can change the upper bound parameters according to their own needs and referring to Figure 7.

Then, for a given image and a chosen training set, astrofix’s steps to tune the GPR hyperparameters are:

-

1.

Flag all bad pixels as NaN.

-

2.

Construct the image array for pixels in the training set, and remove rows that contain NaN values.

-

3.

Propose the values of and (or , , and ), and construct the corresponding kernel.

-

4.

Convolve the image with the kernel, restricting the convolution to pixels in the training set.

-

5.

Find the mean absolute residual of the convolved image and the original image for pixels in the training set.

-

6.

Minimize the mean absolute residual times the penalty function with respect to the parameters.

-

7.

Return the optimal parameter values and the residual distribution.

Steps 3 through 5 are wrapped into one single function to be passed to scipy.optimize.minimize, which is responsible for step 6. In step 3, we do not reweight the kernel in the presence of additional bad pixels because of its expense. We simply remove, in step 2, all affected pixels from the training set by checking each row of the image array for NaN values. In step 6, we require that because would imply that the correlation between pixels is smaller than the measurement noise. If is small, neighboring pixels would contribute little information about a missing data point and our effort would be meaningless. We also require that . A characteristic scale larger than the kernel width would imply a spatially uniform image, while one much smaller than a pixel would again mean that a pixel’s neighbors do not contain meaningful information to interpolate its value.

The computational expense of the optimization process depends on the size of the training set, but it usually takes only a few seconds. For example, it took 6 seconds to train on the CHARIS spectrograph in Section 5.2 with 439,000 pixels in the training set. Appendix A includes a detailed analysis of the computational cost of astrofix.

4.3 Fixing the Image

The optimal kernel found in the training process relies on the assumption that for every bad pixel, no other bad pixels or image edges lie within the region of the kernel. In this case, correcting the image is equivalent to a simple convolution with the optimal kernel at the location of each bad pixel. However, if there are additional bad pixels or pixels off the edges of the image, we must set their weights to zero and recompute the entire kernel by removing the corresponding entries in the covariance function (as discussed in Section 2.2). When the bad pixel density is large, almost every bad pixel requires computing a new kernel, so the resulting computational cost scales linearly with the bad pixel density and can be expensive (see Appendix A).

The optimal kernel that corresponds to an isolated bad pixel is usually used many times, so we pre-construct it before looping through all bad pixels. Because we correct only one bad pixel at a time and only a small fraction of the image overall, we use the weighted sum of the neighboring pixels rather than convolving the entire image. Finally, we replace the bad pixels with their imputed values only after exiting the loop. This avoids using imputed data as if they were actual measurements.

To sum up, astrofix’s steps to impute the missing data in an image are as follows:

-

1.

Pre-construct the optimal kernel for an isolated bad pixel.

-

2.

For each bad pixel:

Check if there are additional bad pixels or image edges nearby.

If not, use the optimal kernel. Otherwise, construct a kernel that has the largest possible size ( in each direction) and has weights reassigned according to the locations of additional missing pixels.

Compute the sum of the neighboring pixels weighted by the kernel to find the bad pixel’s imputed value. Store this value.

-

3.

Replace the bad pixels with the imputed values.

-

4.

Return the fixed image.

5 Examples and Results

We tested astrofix on a variety of images with real and artificial bad pixels. In this section we give a few examples to demonstrate its consistently good performance.

5.1 Artificial Bad Pixels on a CCD Image of a Globular Cluster

Images of star clusters often contain a high density of useful information. While approaches like PSF photometry can handle missing data appropriately (if a good model of the PSF is available), such an analysis is not always possible. Aperture photometry, for example, cannot tolerate missing data. We therefore test astrofix on a real CCD exposure of the globular cluster NGC 104, taken by the SBIG 6303 0.4m telescope at the Las Cumbres Observatory (LCO). We randomly generated 62069 (1%) artificial bad pixels and imputed their values.

The left panel of Figure 8 compares the residual of image imputation by GPR to that by two standard approaches: astropy.convolution (with a Gaussian kernel of standard deviation 1) and the median filter used by LACosmic. The residual is normalized by the shot noise. Because most bad pixels that we have randomly selected were near the background level, we only include in the histogram the 2642 bad pixels that originally had counts 10 above the background. This tests astrofix’s ability to restore useful information. The right panels of Figure 8 show the unnormalized residuals in a region at the center of the cluster. GPR outperforms the other approaches, especially in dealing with continuous regions of bad pixels. In terms of the outlier-resistant mean absolute deviation, GPR outperforms astropy’s interpolation by about a factor of 2, and median replacement by more than a factor of 3.

5.2 Raw CHARIS Imaging Data

Infrared detectors are more specialized than CCDs, and often have a higher incidence of bad pixels. The CHARIS integral-field spectrograph (IFS), for example, uses a Hawaii2-RG array (Peters et al., 2012), and has a bad pixel fraction of about 0.5% (Brandt et al., 2017). Similar instruments include the GPI (Macintosh et al., 2014) and SPHERE (Beuzit et al., 2019) IFSs; these have comparable bad pixel rates. The data processing pipelines for GPI (Perrin et al., 2014) and SPHERE both use box extraction to reconstruct the spectra of each lenslet. This approach cannot accommodate missing data, so both pipelines interpolate to fill in bad pixel values.

We test astrofix on a raw CHARIS image with 26338 intrinsic bad pixels flagged by values of zero. There were 438996 pixels in the training set, and the total computational time was 14.96 seconds. The top panels of Figure 9 show a slice of the raw image as well as the corrected images by GPR, by median replacement, and by astropy.convolution (). The bottom panels show a slice through the corresponding extracted data cubes. The original data cube comes from the standard CHARIS data reduction pipeline (Brandt et al., 2017) which ignores bad pixels. GPR clearly outperforms both astropy and the median filter and performs comparably well to the standard pipeline. When the data processing pipeline cannot ignore bad pixels (e.g. box extraction for GPI and SPHERE), astrofix would become a useful interpolation method.

5.3 Artificial Bad Pixels on 2D Spectroscopic CCD Data

The standard GPR approach does not require any special treatment to adapt well to spectroscopic data, but we can apply a stretched GPR kernel to further improve its performance. In a real spectroscopic CCD exposure taken by the FLOYDS spectrograph (Sand et al., 2011) at LCO, we randomly generated 53537 (5%) artificial bad pixels, of which 1524 pixels had counts above the background. We applied both GPR and stretched GPR (sGPR) to impute the missing data, and their residuals (normalized by the shot noise) are compared in the left panel of Figure 10 with the residuals from astropy.convolution () and the median filter. The right panels of Figure 10 show the unnormalized residuals in a region. Both GPR and sGPR outperform other approaches by a factor , and sGPR offers a small improvement over GPR.

The data in this case are from an arc lamp and show little structure perpendicular to the dispersion direction. The results would differ for spectroscopy of a point source. Depending on the strength of spectral features, the image in the dispersion direction might appear smoother than in the slit direction, the reverse of this case. For this reason, we urge caution in the use of sGPR with astrofix.

5.4 PSF Photometry and Astrometry on Low-SNR Stellar Images with Bad Pixels

The previous examples demonstrate astrofix’s ability to repair high-SNR images. In the low-SNR limit, however, the residual minimization process of astrofix favors a uniform kernel with to smooth out the background noise. This reduces astrofix to a simple average, which is suboptimal for restoring the useful information in a pixel. Therefore, we recommend that the user restrict the training to the brighter pixels in the low-SNR image or train on a high-SNR image taken by the same instrument (and under the same configuration) and apply the optimized kernel to the low-SNR image. Because such a kernel is trained on strongly correlated neighbouring pixels, it would be non-uniform and could produce a wider residual distribution for low-SNR bad pixels. However, the optimized kernel would perform substantially better than the uniform kernel in repairing pixels of practical use, such as pixels containing the PSF of a star.

As an example, we compare astrofix and astropy.convolution in terms of PSF photometry and astrometry on the truncated stellar image in Figure 1. We generate artificial bad pixels in its PSF and add white Gaussian noise to lower its SNR. We then apply both astrofix and astropy.convolution to repair the PSF at . We train the GPR kernel on the rest of the image with high SNR and get . For astropy.convolution, we apply a Gaussian kernel of to match the of the fitted PSF. Finally, we perform PSF photometry and astrometry on the fixed images and compute the deviations of the fitted centroids from the true centroid position ( and , in the unit of pixels) and the fractional difference between the fitted flux and the true flux (). For both photometry and astrometry, we assume Gaussian PSFs and use IterativelySubtractedPSFPhotometry and IRAFStarFinder in the Python package Photutils with the default parameters. We repeat the procedure for 1000 random noise samples at each SNR level.

Figure 11 shows the results for two different sets of bad pixels. astrofix produces much smaller systematic errors on all three fitted parameters, showing that the optimized GPR kernel is the more accurate model of the PSF, although, at very small SNRs, it generally produces a slightly larger spread in the fitted parameters. In other words, we trade precision for accuracy by training the kernel on high-SNR pixels, putting greater weights on modeling the PSF instead of averaging out the noise. This strategy maintains the scientific value of astrofix even for low-SNR images, and a high-SNR training set is almost always available from the brighter regions on the same image or other images taken by the same instrument.

A fully uniform background would remove the bias seen in Figure 11. In this case, each neighboring pixel would contain the same information as the bad pixel, and the optimal approach would be a simple average of neighboring good pixels. Our approach with astrofix, however, will better retain small-scale structure atop a smoothly varying background.

6 Conclusions

We have presented astrofix, an image imputation algorithm based on Gaussian Process Regression. The ability to optimize the interpolation kernel within a parametric family of kernels, combined with the simple and natural handling of clusters of bad pixels and image edges, enables astrofix to perform several times better than median replacement and astropy.convolution. It adapts well to various instruments and image types, including both imaging and spectroscopy.

As discussed in Section 5.4, the actual performance of astrofix depends on the SNR of the image, and its optimization process requires a high-SNR training set. For a low-SNR image, the training set can only be obtained from other images taken by the same instrument, assuming that the instrument has negligible variations from images to images. The assumption is usually reliable, but it can sometimes fail due to changes in the seeing conditions, so it takes some extra effort to check these conditions before choosing a training set. The sGPR covariance function in astrofix should be used with caution because it may smooth out a point source in the slit direction. The default parameters in astrofix, such as the initial guess for the optimization and the cutoffs for the training set selection, were chosen carefully but have not been tested extensively on all image types. Nevertheless, they worked sufficiently well in all of our examples.

It is worth noting that astrofix works best when there is sufficient structure on the scale of the kernel (). For a uniform background or a smooth, extended source varying only on a large scale , the correlation-to-noise ratio would be extremely low within the size of the kernel, meaning that neighbouring pixels contribute almost no information to interpolate a pixel’s value. In this case, astrofix may not be as good as a simple average of neighbouring pixels. However, astrofix will still outperform other methods in correcting bad pixels if the extended source does have structure on the kernel scale for astrofix to learn, which is often the case. A better interpolation for each bad pixel then leads naturally to a better recovery of large-scale information.

Appendix A Computational Cost of astrofix

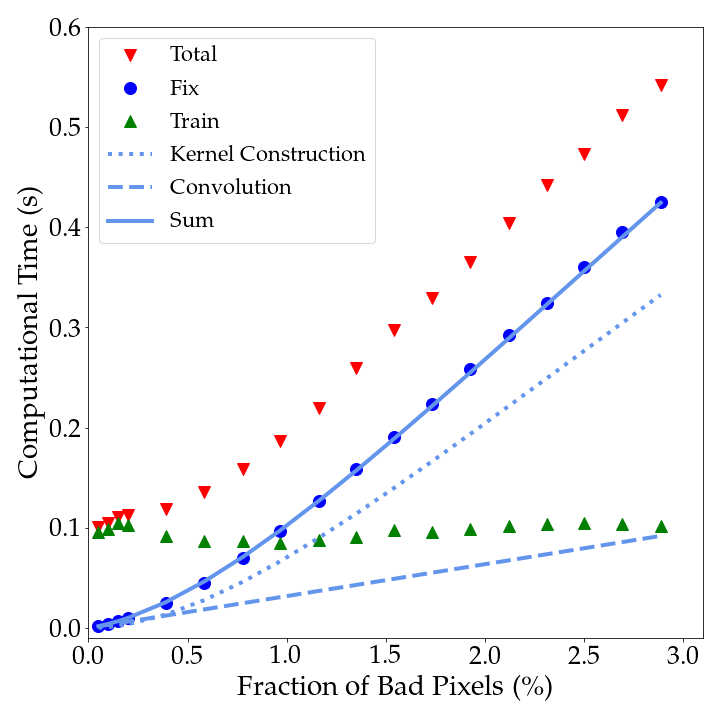

Because astrofix requires optimization and reconstruction of kernels, it has a greater computational cost than most existing image imputation approaches. Nevertheless, one can reduce the cost by using a smaller kernel width or by optimizing only once for each instrument without much loss in accuracy (see Figure 5). The computational cost also depends on the number of bad pixels in an image. In particular, the cost of training is largely independent of the bad pixel fraction, while the cost of fixing the image scales with the bad pixel fraction as follows:

| (A1) |

Here, the first term gives the total cost of convolving bad pixels with a kernel of width , and the constant is the computational cost of floating point operations. The second term gives the total cost of reconstructing the kernel, where is the bad pixel fraction and is the probability that a bad pixel has at least one bad pixel neighbour in the surrounding region. The constant is the average time of applying Equation (3), which consists of a matrix inversion of complexity and a matrix-vector multiplication of complexity . As a consequence, and the cost of kernel construction is greater than the cost of convolution for large values of . Assuming the bad pixels to be randomly positioned throughout the detector, the scaling of with depends on how compares to :

| (A2) |

In the limit of , , and both terms in increase linearly with . The cost per bad pixel stays constant because almost every bad pixel has at least one bad neighbour which requires reconstructing the kernel. In the limit of , , so depends quadratically on . The cost per bad pixel becomes smaller because the kernel does not need to be recomputed for most bad pixels. The two limits are visible in Figure 12, which shows how the computational time of different components of astrofix scales with the fraction of bad pixels on the cluster image in Section 5.1. Each point is the median of 100 samples. The solid blue line fits for using Equation (A1), while the dashed and dotted lines are the corresponding convolution cost and kernel construction cost. With , only when does the scaling of the computational cost deviate from the linear relation of the large limit. This linear scaling of the computational cost will set in at much lower values of if bad pixels tend to come in clusters (for example, due to cosmic rays).

For real images with real bad pixels, it usually takes 1 second on our Intel Core i5-8300H 2.30 GHz CPU to correct 2000 bad pixels, and the total time ranges from a few seconds to almost a minute. For example, the CHARIS data in Section 5.2 took a total of 14.96 seconds, of which 8.56 seconds were spent on fixing 26338 bad pixels on the image, and 6.33 seconds were spent on the optimization process with 438996 pixels in the training set. The rest of the time went to defining the training set.

References

- Ambikasaran et al. (2015) Ambikasaran, S., Foreman-Mackey, D., Greengard, L., Hogg, D. W., & O’Neil, M. 2015, IEEE Transactions on Pattern Analysis and Machine Intelligence, 38, 252

- Astropy Collaboration et al. (2018) Astropy Collaboration, Price-Whelan, A. M., Sipőcz, B. M., et al. 2018, AJ, 156, 123

- Astropy Collaboration et al. (2013) Astropy Collaboration, Robitaille, T. P., Tollerud, E. J., et al. 2013, A&A, 558, A33

- Beuzit et al. (2019) Beuzit, J. L., Vigan, A., Mouillet, D., et al. 2019, A&A, 631, A155

- Bradley et al. (2020) Bradley, L., Sipőcz, B., Robitaille, T., et al. 2020, astropy/photutils: 1.0.0

- Brandt et al. (2017) Brandt, T. D., Rizzo, M., Groff, T., et al. 2017, Journal of Astronomical Telescopes, Instruments, and Systems, 3, 048002

- Claudi et al. (2008) Claudi, R. U., Turatto, M., Gratton, R. G., et al. 2008, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, Vol. 7014, Ground-based and Airborne Instrumentation for Astronomy II, ed. I. S. McLean & M. M. Casali, 70143E

- Farage & Pimbblet (2005) Farage, C. L., & Pimbblet, K. A. 2005, PASA, 22, 249

- Harris et al. (2020) Harris, C. R., Millman, K. J., van der Walt, S. J., et al. 2020, Nature, 585, 357–362

- Horne (1986) Horne, K. 1986, PASP, 98, 609

- Hunter (2007) Hunter, J. D. 2007, Computing in Science Engineering, 9, 90

- Jenkins et al. (2010) Jenkins, J. M., Caldwell, D. A., Chandrasekaran, H., et al. 2010, ApJ, 713, L87

- Macintosh et al. (2014) Macintosh, B., Graham, J. R., Ingraham, P., et al. 2014, Proceedings of the National Academy of Science, 111, 12661

- Macintosh et al. (2008) Macintosh, B. A., Graham, J. R., Palmer, D. W., et al. 2008, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, Vol. 7015, Adaptive Optics Systems, ed. N. Hubin, C. E. Max, & P. L. Wizinowich, 701518

- Perrin et al. (2014) Perrin, M. D., Maire, J., Ingraham, P., et al. 2014, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, Vol. 9147, Ground-based and Airborne Instrumentation for Astronomy V, ed. S. K. Ramsay, I. S. McLean, & H. Takami, 91473J

- Peters et al. (2012) Peters, M. A., Groff, T., Kasdin, N. J., et al. 2012, in Society of Photo-Optical Instrumentation Engineers (SPIE) Conference Series, Vol. 8446, Ground-based and Airborne Instrumentation for Astronomy IV, ed. I. S. McLean, S. K. Ramsay, & H. Takami, 84467U

- Pych (2004) Pych, W. 2004, PASP, 116, 148

- Rhoads (2000) Rhoads, J. E. 2000, PASP, 112, 703

- Sand et al. (2011) Sand, D. J., Brown, T., Haynes, R., & Dubberley, M. 2011, in American Astronomical Society Meeting Abstracts, Vol. 218, American Astronomical Society Meeting Abstracts #218, 132.03

- The GPy authors (2014) The GPy authors. 2012–2014, GPy: A gaussian process framework in python, http://github.com/SheffieldML/GPy

- van Dokkum (2001) van Dokkum, P. G. 2001, PASP, 113, 1420

- Virtanen et al. (2020) Virtanen, P., Gommers, R., Oliphant, T. E., et al. 2020, Nature Methods, 17, 261

- Windhorst et al. (1994) Windhorst, R. A., Franklin, B. E., & Neuschaefer, L. W. 1994, PASP, 106, 798

- Zhang & Brandt (2021) Zhang, H., & Brandt, T. D. 2021, Hengyuez/astrofix: v0.1.0

- Zhang & Bloom (2020) Zhang, K., & Bloom, J. S. 2020, ApJ, 889, 24