inkscapelatex=true

Classification of Radio Galaxies with trainable COSFIRE filters

Abstract

Radio galaxies exhibit a rich diversity of characteristics and emit radio emissions through a variety of radiation mechanisms, making their classification into distinct types based on morphology a complex challenge. To address this challenge effectively, we introduce an innovative approach for radio galaxy classification using COSFIRE filters. These filters possess the ability to adapt to both the shape and orientation of prototype patterns within images. The COSFIRE approach is explainable, learning-free, rotation-tolerant, efficient, and does not require a huge training set. To assess the efficacy of our method, we conducted experiments on a benchmark radio galaxy data set comprising of 1180 training samples and 404 test samples. Notably, our approach achieved an average accuracy rate of 93.36%. This achievement outperforms contemporary deep learning models, and it is the best result ever achieved on this data set. Additionally, COSFIRE filters offer better computational performance, 20 fewer operations than the DenseNet-based competing method (when comparing at the same accuracy). Our findings underscore the effectiveness of the COSFIRE filter-based approach in addressing the complexities associated with radio galaxy classification. This research contributes to advancing the field by offering a robust solution that transcends the orientation challenges intrinsic to radio galaxy observations. Our method is versatile in that it is applicable to various image classification approaches.

keywords:

COSFIRE – image processing – radio continuum: galaxies – trainable filters1 Introduction

Automatically classifying radio galaxies according to their morphology has been extensively studied within the literature over the last few years. This has been motivated by the introduction of various innovative and diverse machine/deep learning techniques. Three main factors have driven the adoption of these state-of-the-art methods in the processing of interferometric data and images: the availability of high-resolution images from modern radio telescopes such as MeerKAT (Knowles et al., 2022) and LOFAR (Shimwell et al., 2022); the availability of labelled data sets from public initiatives such as Radio Galaxy Zoo (Banfield et al., 2015), LOFAR Galaxy Zoo111https://www.zooniverse.org/projects/chrismrp/radio-galaxy-zoo-lofar, and other catalogs (Proctor, 2011; Best & Heckman, 2012; Baldi et al., 2015; Miraghaei & Best, 2017; Capetti et al., 2017a, b; Baldi et al., 2018; Ma et al., 2019); and the interdisciplinary and collaborative research with other fields such as computer science, statistics, and data science. These techniques have opened up new possibilities in the field of radio astronomy, as they provide fundamental insights into astrophysical phenomena and facilitate serendipitous discoveries. Automatic galaxy classification is essential for understanding the physical processes that shape and transform radio galaxies (Ndung’u et al., 2023). This is especially true in the case of radio interferometry, due to the large data sets that are currently being generated by modern radio surveys. These data sets can be terabytes to exabytes in size (Booth & Jonas, 2012; Swart et al., 2022).

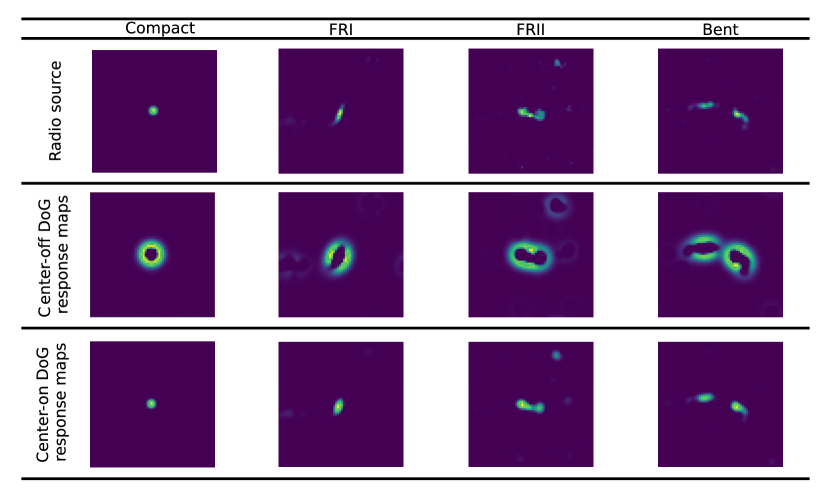

Radio galaxies can be divided into four primary categories based on their intrinsic morphological differences. The most important two are Fanaroff & Riley I (FRI) and Fanaroff & Riley II (FRII) (Fanaroff & Riley, 1974). FRI galaxies have bright cores relative to their lobes (have decreasing luminosity from the core). FRII galaxies, on the other hand, have edge-brightened lobes with a core at the centre. The third and most common category of radio galaxies is the Compact class, which refers to point-like radio sources (Baldi et al., 2015, 2018). The fourth category is Bent, which is composed of radio sources with jets that are bent at an angle, either in a narrow-angled tail (NAT) or a wide-angled tail (WAT) configuration (Rudnick & Owen, 1976). While the Compact and FRI classes are quite distinctive, the FRII and Bent classes are much more challenging. In Fig. 1 we illustrate a few examples of each of the four classes as well as superimposed variants created by utilizing all images from the data set described in Table 1. In addition to the difficulties related to their shape characteristics, the classification of FRI, FRII, and Bent galaxies is further exacerbated by the fact that the galaxies in the data set have differing orientations. Upon visual inspection, it becomes apparent that each category exhibits a discernibly distinct brightness distribution. Nonetheless, it is worth noting that the FRII and Bent classes share substantial similarities, making it difficult to discern between the two classes hence a challenging task to differentiate them.

In this paper, we propose a lightweight paradigm that involves trainable COSFIRE (Combination of Shifted Filter Responses) filters (Azzopardi & Petkov, 2012a; Azzopardi et al., 2016), which have been found effective in various computer vision applications. This approach is efficient, learning-free, rotation-tolerant, explainable, and does not require a huge training set.

The implementation of a COSFIRE-based classification pipeline is relatively easy and straightforward from a conceptual standpoint as described in Section 4. It involves the configuration of COSFIRE filters (Azzopardi & Petkov, 2012a) whose selectivity of each filter is automatically determined from the shape properties of a single training example. The objective is to set up multiple filters, whose combined responses generate a feature signature for the type of galaxy present in an image. This approach is analogous to how visual cells in the mammalian brain encode visual information, a concept known as population coding (Pasupathy & Connor, 1999, 2002). COSFIRE filters have been applied in various computer vision tasks: gender recognition (Azzopardi et al., 2016), contour detection (Azzopardi & Petkov, 2012b) and handwritten digit classification (Azzopardi & Petkov, 2013). In this work, COSFIRE filters are configured to extract the hyperlocal geometric arrangements that uniquely describe the patterns of radio sources (in terms of blobs) in a given image.

The rest of the paper is structured as follows. Section 2 presents the current state-of-the-art approaches. Section 3 describes the data set used in this study. Section 4 describes the proposed COSFIRE-based paradigm. Section 5 presents the evaluation criteria used to assess the performance of our approach. Section 6 describes the experiments and the results obtained. Section 7 provides a discussion of the results obtained in relation to the relevant work. Finally, we draw conclusions in Section 8.

2 Related Works

Different automated computer intelligence paradigms have been exploited to automate the rather daunting manual classification approaches that radio astronomers use to identify different types of radio sources (Ndung’u et al., 2023). Convolutional neural networks (CNNs) have dominated the field in recent years. In particular, Aniyan & Thorat (2017a), used the AlexNet architecture (Krizhevsky et al., 2017), calling the trained model Toothless222https://github.com/ratt-ru/toothless, to achieve accuracies of 91%, 75% and 95% for the FRI, FRII, and Bent-tailed morphologies, respectively. Subsequently, notable incremental breakthroughs have been made in the applications of deep learning to the field of radio astronomy, ranging from shallow CNN architectures (Lukic et al., 2019) to deep and complex architectures such as DenseNet (Huang et al., 2017; Samudre et al., 2022). Furthermore, other noteworthy advancements within the field are: model-centric strategies such as group-equivariant CNNs (G-CNNs) (Scaife & Porter, 2021) to support equivariance translations on various isometries of radio galaxies and multidomain multibranch CNNs (Tang et al., 2022) to allow models to learn jointly from various survey inputs; data-centric approaches such as data augmentation (Maslej-Krešňáková et al., 2021; Kummer et al., 2022; Ma et al., 2019; Slijepcevic et al., 2022); transfer learning (Tang et al., 2019; Lukic et al., 2019) and -shot learning333The algorithms for -shot learning have been developed to optimally utilize the limited amount of supervised information that is accessible, i.e., labelled data sets, to create precise predictions while circumventing the obstacles like overfitting. (Samudre et al., 2022) to overcome the limited availability of annotated data sets in radio astronomy. These models have been shown to perform competitively, providing promising alternatives to prior models such as the one by Aniyan & Thorat (2017a), which showed signs of overfitting.

Conventional machine learning has also been explored for the morphological classification of Fanaroff-Riley (FR) radio galaxies (Sadeghi et al., 2021; Becker et al., 2021; Ntwaetsile & Geach, 2021; Darya et al., 2023). This paradigm involves extracting handcrafted features such as intensity, shape, lobe size, number of lobes, and texture from input radio image data, which are then utilized in machine learning algorithms (Brand et al., 2023). Previous studies have leveraged feature descriptors to capture the texture of radio images, such as Haralick features, which consist of a group of 13 non-parametric measures derived from the Grey Level Co-occurrence Matrix (Ntwaetsile & Geach, 2021). Gradient boosting methods (Friedman, 2002) such as XGBoost (Chen & Guestrin, 2016), LightGBM (Ke et al., 2017) and CatBoost (Dorogush et al., 2018) have been explored (Darya et al., 2023). Research has shown that feature engineering can provide machine learning algorithms with highly significant features, leading to promising results (Sadeghi et al., 2021). Moreover, Darya et al. (2023) demonstrated that conventional machine learning (LightGBM, XGBoost, and CatBoost) perform competitively to CNN-based models with deep learning on relatively small data sets (about 10,000 images and below).

CNNs are regarded as the state-of-the-art in various image classification applications (Aniyan & Thorat, 2017a; Lukic et al., 2019; Scaife & Porter, 2021; Tang et al., 2022). However, they require large amounts of training data and are susceptible to overfitting when trained with small data sets, which is the case in radio astronomy. Moreover, the high computational demands of deep architectures for training and applying CNNs often require GPUs, which can be costly and limit their applicability in resource-limited settings. Moreover, CNN-based models lack insufficient intrinsic robustness to rotations. To address rotational variations in radio sources, multiple approaches have been taken. One approach is to utilize group-equivariant CNNs, where the network is designed to capture the diverse orientation information of a given input galaxy in encoded form (Scaife & Porter, 2021). Another method involves augmenting the training data by applying rotations to the training samples, enabling the CNNs to learn different orientations of the classes. Furthermore, a pre-processing step can be employed to standardize the rotation of all radio sources. This may be achieved by using principal component analysis (PCA) to align the galaxies’ principal components with the coordinate system’s axes, effectively normalizing their orientations (Brand et al., 2023).

As evident from this literature review, numerous challenges remain, including the need for efficient (computationally inexpensive) and rotationally invariant methods. In this work we address these limitations with the proposed COSFIRE filter approach.

3 Data

The data set of radio galaxies used in this paper was compiled and processed by Samudre et al. (2022). It was constructed by selecting well-resolved radio galaxies from multiple catalogs: Proctor catalog (Proctor, 2011) (derived from the FIRST444Faint Images of the Radio Sky at Twenty Centimeters survey, 2003) for the Bent radio galaxies; FR0CAT (Baldi et al., 2018) and CoNFIG555Combined NVSS–FIRST galaxies catalogs (Gendre & Wall, 2008; Gendre et al., 2010) for Compact radio galaxies; FRICAT (Capetti et al., 2017a) and CoNFIG catalogs for FRI radio galaxies and finally FRIICAT catalog (Capetti et al., 2017b) and CoNFIG catalogs for FRII radio galaxies. This data set is used in this paper with the objective of conducting comparative analyses of similar work done by Samudre et al. (2022).

The initial data set is composed of the following classes: Compact (406 samples), Bent (508 samples), FRI (389 samples), and FRII (679 samples). These samples are further divided into training, validation, and testing data sets as shown in Table 1. According to Samudre et al. (2022), the original data set’s underrepresented classes were balanced by adding randomly duplicated samples to the training and validation data sets. Table 2 depicts the distribution of the balanced data set.

The images were pre-processed by utilizing sigma-clipping with a threshold of 3 (Aniyan & Thorat, 2017b). This technique involves eliminating or discarding pixels that have background noise levels above or below 3 standard deviations from the mean (Aniyan & Thorat, 2017b).

Although there exist more recent catalogs as described by Ndung’u et al. (2023), the latest catalogs, such as LoTSS (DR1 & DR2)666The LOFAR Two-metre Sky Survey (Data Release I & II) (Shimwell et al., 2019; Shimwell et al., 2022), are not annotated into Compact, FRI, FRII and Bent classes. Instead, they are labelled by automated tools such as the Python Blob Detector and Source-Finder (PyBDSF) (Mohan & Rafferty, 2015) that categorize astronomical sources into three types: ‘S’ for single isolated sources modeled with one Gaussian, ‘C’ for sources that are within a group but can be individually modeled with a single Gaussian, and ‘M’ for extended sources that need multiple Gaussians for accurate modeling. This system aids in the efficient identification and analysis of space emissions.

| Source catalog | Type | Total | Training | Validation | Test |

|---|---|---|---|---|---|

| Proctor | Bent | 508 | 305 | 100 | 103 |

| FR0CAT & CoNFIG | Compact | 406 | 226 | 80 | 100 |

| FRICAT & CoNFIG | FRI | 389 | 215 | 74 | 100 |

| FRIICAT & CoNFIG | FRII | 679 | 434 | 144 | 101 |

| Total | 1982 | 1180 | 398 | 404 |

| Source catalog | Type | Total | Training | Validation | Test |

|---|---|---|---|---|---|

| Proctor | Bent | 680 | 433 | 144 | 103 |

| FR0CAT & CoNFIG | Compact | 675 | 431 | 144 | 100 |

| FRICAT & CoNFIG | FRI | 674 | 430 | 144 | 100 |

| FRIICAT & CoNFIG | FRII | 679 | 434 | 144 | 101 |

| Total | 2708 | 1728 | 576 | 404 |

4 Methods

This section gives a comprehensive overview of the methodology that we propose for radio galaxy image classification with the COSFIRE filter approach. We explain the process of radio source blob detection, the configuration of COSFIRE filters with rotation invariance, the formation of feature descriptors, and the utilization of these descriptors for classification. An overview of this pipeline is illustrated in Fig. 2.

4.1 Blob detection

Blob detection is a technique used for identifying points or regions in an image that exhibit a sudden change in intensity (areas that are either brighter or darker than the surrounding areas), known as a "blob". This approach enables the identification of regions that may correspond to objects or structures of interest within the image in our case radio source(s). In computer vision, one of the commonly used blob detectors is based on the Laplacian of Gaussian (LoG) (Wang et al., 2017, 2020). The LoG is the second-order derivative of the Gaussian function, denoted by :

| (1) |

where is the standard derivation. The Laplacian of Gaussian is typically estimated by a Difference-of-Gaussian (DoG) function, which is separable, and thus convolving it with a 2D image is much more computationally efficient. Given two Gaussian functions, and with their respective standard deviations and , the Gaussians are defined as:

| (2) |

| (3) |

The DoG function is derived by subtracting two Gaussian functions with different standard deviations:

| (4) |

For a point and an image with intensity distribution , we calculate the response of a DoG filter with a kernel function by convolution:

| (5) |

where the rectification linear unit (ReLU) is an activation function that sets to zero all values below and represents convolution.

When we refer to the resulting function as a center-on or for brevity, with the central region exhibiting a positive response and the surround exhibiting a negative response. Conversely, when the configuration results in a center-off function, which we denote by . In this work, we adopt the approach used by Azzopardi et al. (2015) and always set the smaller standard deviation to be half of the larger standard deviation.

The centre-on DoG filter highlights bright blobs on a dark background and is more sensitive to intensity increases at the centre of the blob. This filter responds well to objects and is less sensitive to edges or sharp changes in intensity. On the other hand, the centre-off DoG filter highlights dark blobs on a bright background and is more sensitive to intensity decreases at the centre of the blob. This filter responds well to corners and edges and is less sensitive to objects or regions of uniform intensity. Therefore, both centre-on and centre-off DoG filters are used to detect blobs or regions of interest in an image, see Fig. 3 for an illustration on Compact, FRI, FRII and Bent radio sources. The DoG filter is a type of band-pass filter that can eliminate high-frequency components that represent noise as well as some low-frequency components that represent homogenous areas.

4.2 COSFIRE filter configuration

A COSFIRE filter is automatically configured by examining the shape properties of a given prototype pattern of interest in an image, which ultimately determines its selectivity. The process of configuration can be summarized in three main steps: convolve-ReLU-keypoint detection. The first two steps involve the convolution of center-on and center-off DoG filters followed by ReLU as described above. Finally, keypoint detection requires the determination of local maximum thresholded DoG responses along a set of concentric circles around a point of interest. For this application, we use the center of the image as the point of interest, but in principle, any location can be used for this purpose. The point of interest is the location that best characterizes a pattern of interest. The number and radii of the concentric circles along with the threshold used by the ReLU function are hyperparameters of the COSFIRE approach.

A keypoint denoted by , which is identified as a local maximum of a DoG filter along a concentric circle, is characterized by a four-element tuple: (, , , ). Here, and indicate the standard deviation of the outer Gaussian function of the DoG function and its polarity that achieved the highest response in the polar coordinates with radius at an angle of radians with respect to the given point of interest. We denote by a COSFIRE filter, which is represented as a list of such tuples:

| (6) |

where refers to the number of DoG responses considered in , which plays a crucial role in the selectivity and generalization of the COSFIRE filter. Typically, selectivity increases and generalization decreases with increasing value of (i.e. number of keypoints).

Fig. 4 illustrates an example of the automatic configuration of a COSFIRE filter from the superposition of synthetic center-on and center-off DoG maps. The example uses two concentric circles and the resulting COSFIRE filter is a set of five tuples describing the five keypoints indicated in Fig. 4b. The keypoints are identified at the positions along the circles where the DoG responses reach local maxima.

|

|

| (a) | (b) |

4.3 COSFIRE filter response

The response of a COSFIRE filter for a given location is computed by combining the responses of the DoG functions whose scale , polarity and position ( with respect to are indicated in the set . The process of computing the response can be summarized in five main steps: convolve-ReLU-blur-shift-combine. The convolve step refers to the convolution of center-on and center-off DoG filters followed by the ReLU operation that sets to zero all values below the given threshold . These are the same two steps that were required for the configuration described above. Then, in order to allow for some tolerance in the preferred positions of the involved DoG responses, we blur each DoG response with a Gaussian function , whose standard deviation is a linear function of the distance : , with and being hyperparameters. Moreover, we shift the blurred responses in the direction opposite of the polar coordinates such that all DoG responses of interest meet at the same location. Finally, the combine step is implemented as suggested by Azzopardi & Petkov (2012a), where a COSFIRE filter response, which we denote by , is obtained by the geometric mean function that combines all blurred and shifted thresholded DoG responses:

| (7) |

where

| (8) |

is the combined blurred and shifted DoG filter response map of tuple . The shifting operation displaces each blurred DoG response of interest to the support centre of the COSFIRE filter. The shift vector is denoted by , where and . Additionally, .

4.4 Tolerance to rotations

Tolerance to rotations can be attained by configuring multiple COSFIRE filters using rotated versions of a single prototype pattern. An effective approach to achieve this involves defining new filters by modifying the parameters of an existing COSFIRE filter. For instance, consider a COSFIRE filter denoted as , designed to be selective for the same underlying pattern that was employed to configure the original COSFIRE filter , but rotated by radians. This new filter is defined as follows:

| (9) |

The rotation-tolerant response of a COSFIRE filter is then achieved by taking the maximum response across all COSFIRE filters selective for the same pattern at 12 equally-spaced orientations:

| (10) |

4.5 COSFIRE descriptor

For a given image, a COSFIRE descriptor, which we denote by and define below, is generated by applying all COSFIRE filters in rotation-tolerant mode and extracting the maximum value from each filter, regardless of its location. Consequently, for a set of filters, the resulting description of a given image is represented by a -dimensional vector.

| (11) |

This concept is inspired by neurophysiology research, which suggests that the shape representation of a stimulus is based on the combined activity of a group of shape-selective neurons in area V4777V4 cells are neurons in the visual cortex that are involved in form perception (recognizing objects and their features such as shape). (Wielaard et al., 2001; Azzopardi & Petkov, 2012b; Weiner & Ghose, 2015).

4.6 COSFIRE descriptor pre-processing

The only pre-processing step done to the COSFIRE descriptors is L2 normalization888The L2 norm is determined by taking the square root of the sum of the squares of all the values in the vector. Also known as Euclidean norm.. Data normalization is a common preprocessing step in tabular machine learning tasks that transforms the input data into a more uniform scale across the features. In particular, the Support Vector Machines (SVM) objective function includes the distance between data points, making it sensitive to the scale of the input features. Therefore, non-normalized features with larger scales can dominate distance calculations and may hence affect the performance (Hsu et al., 2003).

4.7 Classification model

The SVM model, introduced by Cortes & Vapnik (1995), has been selected for the classification of COSFIRE-based image descriptors due to its capacity to handle high-dimensional data, manage outliers, and achieve robust generalization. The training of the SVM model is conducted using the COSFIRE descriptors extracted from a training set that encompasses four distinct classes of radio galaxies. Given that the training set is characterized by an imbalance in class distribution (as indicated in Table 1), a bagging (bootstrap aggregating) approach is employed to train an ensemble of classification models. This technique improves model generalization and suppresses any biases towards the majority classes (Breiman, 1996). Figure 2 illustrates the entire process of training and inference. In the training phase, we employ a resampling technique with replacement to randomly select a balanced subset of 290 images from each class. The rationale behind this choice is as follows: we aim to create a balanced training data set for learning an SVM model. To achieve this balance, we calculate two-thirds of the size of the majority class (in this case, 434), which results in 290 images. By selecting 290 images from each class, we also ensure that the resulting balanced subset is roughly the same size as the original imbalanced training set. With the subsets, we then train a set of ten different SVM models. In the inference phase, we use the ten SVM models to perform classification by aggregating their predictions on the test data set. In practice, the subset size and the number of classifiers are two hyperparameters, which can be fine-tuned by cross validation. However, we choose to work with the mentioned default values as the fine-tuning of such parameters is beyond the scope of the proposed COSFIRE paradigm.

5 Performance metrics for evaluation

5.1 Accuracy

As in prior research on morphological classification (Lukic et al., 2019; Samudre et al., 2022), we evaluate the performance of the proposed COSFIRE approach following the widely adopted accuracy metric. To assess the performance of our model in the experiments, we leverage the validation set for determining the hyperparameter values.

5.2 Floating Point Operations (FLOPs)

FLOPs is a measure of computer performance that indicates the number of floating-point operations that a processor executes to complete a task. This measurement is commonly used in scientific programs/applications that heavily depend on floating-point (FP) calculations such as CNNs. We will evaluate the computational complexity of our approach by measuring the number of floating point operations (FLOPs) required, and then compare it to the computational complexity of DenseNet161 (Samudre et al., 2022).

FLOPs estimation for both algorithms is performed during the inference phase rather than the training phase of the classification process. This phase entails only the forward pass of input data through the model to produce predictions, focusing on the computational cost of executing the already trained model.

6 Experiments and results

In this section, we present and analyze the experimental results obtained in our research on radio galaxy classification. We also compare the performance and computational complexities of our method with other existing approaches in radio galaxy classification.

6.1 Performance

We conducted a series of experiments to evaluate the performance of the proposed trainable COSFIRE filter approach. As detailed in Section 4, our pipeline involves the automatic configuration of COSFIRE filters and their application to training, validation and test data sets for each distinct class (Bent, Compact, FRI, and FRII). Our primary objective was to attain the highest classification accuracy for distinguishing among the four radio source classes. Through this rigorous evaluation process, we conducted an in-depth exploration of the trainable COSFIRE filters’ performance and their potential in the realm of radio source classification.

We used the validation data set to determine the hyperparameters of the COSFIRE filter configuration (, 999These are the radii used to configure COSFIRE filters., 101010This is the threshold used by the ReLU function.), and application (, and ). In our experimentation, we explored three values per hyperparameter: , , , , and . This resulted in a total of 243 unique parameter sets. For every unique set, we configured up to 100 COSFIRE filters for each class, leading to a total of 400 COSFIRE filters. The filters were configured by selecting random images from the training set. To compensate for the randomness of the image selection, we executed three experiments with the same set of hyperparameters and then took the average results across the three experiments. These experiments resulted in a matrix of accuracy rates. We then identified the maximum accuracy rate for each row (i.e. for each set of hyperparameters). Importantly, multiple sets of hyperparameters yielded very close accuracies. Therefore, to account for all the hyperparameters generating similar results, we performed a right-tailed student -test statistic between the row with the global maximum accuracy rate and all the other rows. It turned out that 26 sets of hyperparameters yielded results that are not statistically different from the global maximum. The average maximum accuracy of the experiments with these 26 sets of hyperparameters was achieved with 93 COSFIRE filters per class on the validation set.

Subsequently, we employ the COSFIRE filters in conjunction with the corresponding classifiers, which are configured using 26 distinct sets of hyperparameters determined previously on the validation set, to the test data. The outcomes of this process are graphically represented in Fig. 5. This figure includes two principal plots illustrating the variation in accuracy rates as a function of the number of COSFIRE filters used. One plot delineates the performance when the filters operate in a rotation-invariant manner, while the other depicts the scenario without rotation invariance. Each plot is the average across the results obtained by the experiments using the 26 sets of hyperparameters, with the grey shading indicating the standard deviations. With 93 filters per class (as determined from the validation data) and rotation invariance, the average accuracy on the test set is 93.36% ± 0.57 (with the minimum being 92.24% and the maximum being 94.31%), whereas, without rotation invariance, the same filters yielded an average accuracy of 82.25% ± 2.06. This performance trend is visually depicted in Fig. 5, highlighting that as the number of filters increases, the disparity in performance between including and excluding rotation becomes more pronounced. Additionally, the figure shows that the model performance is more stable with rotation invariance than without it. The latter has high variability even with more COSFIRE filters.

6.2 Comparison with previous works

The most direct comparison to our study is the research that used DenseNet-161 (Huang et al., 2017) and Siamese networks (Koch et al., 2015) methodologies conducted by Samudre et al. (2022) on the same data. In their work, they conducted their experiments on two versions of the data: the original and the balanced data set as presented in Table 1 and Table 2, respectively. According to their reported results, Siamese networks and DenseNet-161 achieved the highest accuracies of 71.1% ± 0.40 and 91.2% ± 0.60, respectively, on the original data set. On the other hand, Siamese networks achieved 73.9% ± 0.50 and DenseNet-161 achieved 92.1% ± 0.40 accuracy on the balanced data set. Notably, our COSFIRE approach achieves an accuracy of 93.36% ± 0.57 without data augmentation or any further image pre-processing. This is more than 16% reduction in the classification error rate compared to the DenseNet-161 model (Samudre et al., 2022). The significant reduction in the error rate is mainly attributed to the rotation invariance properties of the COSFIRE approach. We did not perform runs on the balanced data set (Table 2) since this would not generate new insights, as the data set was augmented through image duplication of the minority classes.

6.3 Computational complexity

6.3.1 Convolutional Neural Networks

Calculating the FLOPs in a convolutional neural network involves determining the number of arithmetic operations performed during the forward pass. This includes the multiplications and additions between the input data elements and the trainable convolution kernels. The FLOPs computation takes into account factors such as the input size, kernel size, number of filters, and the presence of padding, dilation, and stride. A CNN convolutional operation with padding set to 0, dilation set to 1, and stride set to 1, for simplicity (Freire et al., 2022), can be summarised as follows:

| (12) |

In equation 12, the output of a specific convolutional layer is denoted by , which represents a feature map. Here, the superscript indicates the filter index, while the subscripts , , and represent the row, column, and channel indices of the output feature map, respectively. The trainable convolution kernel is denoted by , and it performs element-wise multiplications with the corresponding input elements of image of dimensions 111111To meet the input requirements of a DenseNet model, the original images are resized from to pixels.. The bias term is added to the sum, and the resulting value undergoes the activation function to generate the output feature map element . This equation embodies the convolution operation in a CNN, where each output feature map element is computed by convolving the corresponding region of the input feature maps with trainable filters and introducing non-linearity through the activation function.

The output size of the CNN at each layer is important when calculating the FLOPs of the CNN architecture used. It is given by,

| (13) |

where is the image input size, is padding, is the kernel size and is the stride. The number of FLOPs can then be estimated as:

| (14) |

where is the number of filters. In each sliding window, there are multiplications and the sliding window process needs to be repeated as many times as the OutputSize . This entire procedure is then repeated for all filters. The estimated number of FLOPs of the DenseNet-161 model is 15.6 GFLOPs based on the workflow121212Roughly GMACs = 0.5 * GFLOPs 131313https://github.com/sovrasov/flops-counter.pytorch of (Sovrasov, 2023). Importantly, the 15.6 GFLOPs calculations include the operations involving the dense and activation layers in the DenseNet architecture.

6.3.2 COSFIRE filter approach

The FLOPs associated with the single convolution required by the COSFIRE filter approach are computationally inexpensive due to the exploitation of the separability property of Gaussian filters. For the separability of the Gaussian filter, equation 15, the 2D Gaussian can be written as the multiplication of two functions: one that depends on and the other that depends on . In this case, the two functions are the same and they are both 1D Gaussian141414This reduces the number of operations required to apply the filter to an image. For example, applying a filter to an image requires 25 multiplications per pixel, but applying two 1D filters of length 5 requires only 10 multiplications per pixel.. Because the DoG function combines two Gaussian functions linearly, the separability property is also preserved in the DoG function.

| (15) | ||||

Table 3 provides a breakdown of the FLOPs that are required by the COSFIRE methodology at the inference stage. In that Table, we use the following set of hyperparameters as an example: , , , and . The FLOPs computations are mainly on the processes of convolve-ReLU-blur-shift-combine. For a given image of pixels and 400 COSFIRE filters, the total estimated number of FLOPs is 1.5 GFLOPs. Also, in order to optimize the computational efficiency in a system using 4800 (i.e. 100 filters for each of the four classes applied in 12 orientations) COSFIRE filters that collectively involve 64,284 tuples, we employ the following strategy:

-

•

Elimination of Redundancy: Recognizing that numerous tuples are repeated across these filters, we first isolate every distinct tuple from the entire set. In fact, the number of tuples increases sublinearly with the increase in the number of COSFIRE filters used, Fig. 7.

-

•

Computation and Storage: We calculate the response map for each unique tuple only once. This response map is essentially a modified version of the center-on or center-off DoG response map, subjected to blurring and shifting. We then store each computed response map in a hash table for quick retrieval, avoiding redundant computations.

-

•

Configuration Sharing: Upon further analysis, we find that the configurations of many COSFIRE filters share identical pairs of tuples.

-

•

Pairwise Response Maps: We compile a list of these common tuple pairs and pre-calculate the combined response map for each pair by performing a multiplication of the individual response maps.

-

•

HashTable for Pairs: The resulting combined response maps of tuple pairs are also stored in a hash table. This ensures that the response for any pair that is used by more than one filter can be quickly fetched without recalculating it.

By implementing this approach, we make the process more efficient, as we avoid unnecessary recalculations for both individual tuples and their pairs, which significantly reduce the computational load and speeds up the overall filtering process. Using this efficient technique, the total number of FLOPs required is reduced to 1.1 GFLOPs; i.e. 26% reduction in the FLOPs (comparison of FLOPs basic and FLOPs column values in Table 3).

Similarly, conducting three trials with 26 sets of optimal hyperparameters from the validation data set, we noted a significant decrease in FLOPs, as depicted in Figure 6, corresponding to an increasing number of COSFIRE filters. In fact, with reference to Fig. 6 and Fig. 5 we demonstrate that we can achieve very high performance with fewer filters using the COSFIRE approach. Using just 48 filters for each class yields an accuracy rate of 92.46% ± 0.76, with a computational cost of 0.8 GFLOPs—approximately 20 times less than the DenseNet161 model that achieves a similar result.

| Step | Formula | FLOPs basic | FLOPs |

|---|---|---|---|

| Center-On DoG sigma: Convolve the image with a DoG kernel whose size is (here ). The expression combines the product and summation operations, and the result is multiplied by 2 to account for the vertical and horizontal separable filters of the DoG functions. | 2,745,000 | – | |

| Center-off DoG (same sigma): Center-off is simply the negative of the center-on response map. | 22,500 | – | |

| ReLU of center-on DoG map: Set all values below to zero across the center-on responses using ReLU activation function. | 22,500 | – | |

| ReLU of center-off DoG map: Same as above but for the center-off response map. | 22,500 | – | |

| Blurring: Similar to , convolve the image with a DoG kernel of size: . The expression is multiplied by two to account for center-on and center-off DoG response maps. The extent of blurring depends on the radii: 0, 5, 10, 15, and 20. The kernel size of the blurring function for the -th radius is calculated as : . | = | 1,170,000 | – |

| = | 1,170,000 | – | |

| = | 2,250,000 | – | |

| = | 2,250,000 | – | |

| = | 3,330,000 | – | |

| Shifting: Shift the two DoG response maps as many times as the number of all unique tuples across all COSFIRE filters. In our experiments, the was 3187 (with rotation invariance). | 71,707,500 | – | |

|

Multiplication: Compute the responses of all COSFIRE filters by multiplying the corresponding shifted response maps. The number of rotations is denoted by . The variable represents the number of COSFIRE filters, which here is set to 400 (100 per class) and multiplied by the total number of rotations (). represents the total number of tuples across all the COSFIRE filters ().

The set of total tuples configured considering rotation invariance have repetitions that do not need to be re-computed. In this step, we pair the tuples that appear more than once among the 64,284 tuples. Then, we only compute and store in the memory a single pair of the shared tuples (duplicates) to save on computations. Therefore, the FLOPS are calculated from the pre-computed response maps of pairs of tuples and those of unique tuples. |

1,338,390,000 | 937,305,300 | |

| Hashkey: Before applying the multiplication operation, the shifted response map must first be retrieved from a hashtable residing in memory. The keys of this hashtable are determined by multiplying the first four prime numbers raised to given , , and : . The retrieval of each tuple response map from memory is therefore 7 FLOPS. | . | 449,988 | – |

| Taking the Root for Geometric Mean: Geometric mean calculation operations for each response map of all COSFIRE filters. | 108,000,000 | – | |

| Descriptor formation: Determination of a -element descriptor by taking the maximum value of each of the response maps. | 9,000,000 | – | |

| Decision fusion of 10 SVM classifiers: SVM calculations depend on the number of decision function hyperplanes () given the number of classes under classification. This experiment uses the one-vs-one (‘ovo’) decision function approach, which means that . In this case, and hence . Each hyperplane separates one class from the rest of the classes. The number of FLOPs is therefore based on the dot products needed for one SVM between the given feature vector of size and the hyperplanes. Since the dot product involves multiplications and additions then one SVM takes FLOPs. Having 10 SVM, this is repeated 10 times and finally, we choose the maximum which takes another 9 FLOPs. | ) + 9 | 48,009 | – |

| Total FLOPs | 1,540,825,497 | 1,139,740,797 |

7 Discussion

The proposed trainable COSFIRE filter approach, which is designed to capture radio galaxies at different orientations, achieved better results than the CNN-based approach for radio galaxy classification on the same data set. This suggests that the trainable COSFIRE filter approach can capture more relevant salient features of the radio galaxy images than the CNN-based approach. Rotation invariance plays a vital role in robust galaxy classification151515The shape of radio galaxies that we observe depends on how their jets are oriented in the plane of the sky., facilitating accurate and consistent outcomes. Also, the limited availability of annotated training data is attributed to the high cost of the labelling and the limited number of experienced astronomers dedicated to this task (considering the size of the astronomical data generated by modern telescopes). Therefore, for effective model generalization, it is essential that a classification model remains invariant to rotations, ensuring consistent predictions irrespective of the input’s orientation.

Our results are consistent with previous studies exploring different approaches to incorporating rotation invariants of radio galaxies in the training set as a means of improving classification model accuracy and generalization. In other similar studies, in radio astronomy, Brand et al. (2023) showed that classification performance is improved when the orientations of training galaxies are normalized as opposed to when no such attempt was made to address rotational variations. Scaife & Porter (2021) used group-equivariant convolutional neural networks that encode multiple orientation information of a given input galaxy. Moreover, the widely adopted data augmentation pre-processing step in machine model development is another approach that also addresses this challenge of radio galaxy equivariance (Becker et al., 2021). While data augmentation is a useful tool, especially in small data sets, it comes with its own problems, namely the potential introduction of unrealistic or irrelevant variations into the data, which can lead to model overfitting and reduced interpretability. In addition, it requires domain knowledge to develop a good augmentation strategy, which considers all possible equivariant transformations given a galaxy image. The rotation invariance in the COSFIRE approach is intrinsic to the method and does not require data augmentation. This makes the approach more robust, versatile, and completely data-driven, with no domain expertise required, and hence highly adaptable to various computer vision applications.

CNN-based networks are computationally expensive. For instance, the forward pass of DenseNet161 consumes 15.6 GFLOPs. On the other hand, the COSFIRE filter approach demonstrates efficiency by utilizing a substantially lower number of FLOPs (Fig. 6), resulting in significantly lower demand for computational resources. The workflow of the COSFIRE approach is also more flexible than conventional CNN architectures. The number of filters used is a hyperparameter of this paradigm, which is in contrast to the fixed number of filters used in its counterpart. Our approach, based on the COSFIRE filter, is a novel algorithm for the classification of radio galaxies and comes with a low computational cost.

8 Conclusion

In this work, we introduce a novel descriptor based on trainable COSFIRE filters for radio galaxy classification. We combine this descriptor with an SVM classifier using a linear kernel and achieve superior results compared to a DenseNet161 transfer learning-based pre-trained network and a few-shot learning-based Siamese network on the same training data set. Our technique is computationally inexpensive (20 lower cost with the same accuracy as the DenseNet161 model), rotation invariant, free from data augmentation and does not rely on domain expert knowledge. These features make our technique not only effective (in terms of accuracy) but also efficient (in terms of FLOPs) for radio galaxy image classification tasks. The accuracy rate of 93.36% that we achieved is the best result ever reported for the benchmark data set prepared by (Samudre et al., 2022)161616https://github.com/kiryteo/RG_Classification_code.

This work contributes to the field of radio astronomy by providing an alternative technique for identifying and analyzing radio sources. As the next-generation telescopes (such as LOFAR, MeerKAT, and SKA) produce high-resolution images of the sky, our future work will explore the effectiveness of our methodology on these new data and assess the possibility of cross-survey predictions.

Acknowledgements

Part of this work is supported by the Foundation for Dutch Scientific Research Institutes. This work is based on the research supported in part by the National Research Foundation of South Africa (grant numbers 119488 and CSRP2204224243).

We thank the Center for Information Technology of the University of Groningen for their support and for providing access to the Hábrók high performance computing cluster.

Data Availability

The data set is available at: https://github.com/kiryteo/RG_Classification_code.

References

- Aniyan & Thorat (2017a) Aniyan A., Thorat K., 2017a, The Astrophysical Journal Supplement Series, 230, 20

- Aniyan & Thorat (2017b) Aniyan A., Thorat K., 2017b, The Astrophysical Journal Supplement Series, 230, 20

- Azzopardi & Petkov (2012a) Azzopardi G., Petkov N., 2012a, IEEE Transactions on Pattern Analysis and Machine Intelligence, 35, 490

- Azzopardi & Petkov (2012b) Azzopardi G., Petkov N., 2012b, Biological cybernetics, 106, 177

- Azzopardi & Petkov (2013) Azzopardi G., Petkov N., 2013, in Computer Analysis of Images and Patterns: 15th International Conference, CAIP 2013, York, UK, August 27-29, 2013, Proceedings, Part II 15. pp 9–16

- Azzopardi et al. (2015) Azzopardi G., Strisciuglio N., Vento M., Petkov N., 2015, Medical image analysis, 19, 46

- Azzopardi et al. (2016) Azzopardi G., Greco A., Vento M., 2016, in 13th IEEE international conference on advanced video and signal based surveillance (AVSS). pp 235–241

- Baldi et al. (2015) Baldi R. D., Capetti A., Giovannini G., 2015, Astronomy & Astrophysics, 576, A38

- Baldi et al. (2018) Baldi R. D., Capetti A., Massaro F., 2018, Astronomy & Astrophysics, 609, A1

- Banfield et al. (2015) Banfield et al. 2015, Monthly Notices of the Royal Astronomical Society, 453, 2326

- Becker et al. (2021) Becker B., Vaccari M., Prescott M., Grobler T., 2021, Monthly Notices of the Royal Astronomical Society, 503, 1828

- Best & Heckman (2012) Best P. N., Heckman T. M., 2012, Monthly Notices of the Royal Astronomical Society, 421, 1569

- Booth & Jonas (2012) Booth R., Jonas J., 2012, African Skies, 16, 101

- Brand et al. (2023) Brand K., Grobler T. L., Kleynhans W., Vaccari M., Prescott M., Becker B., 2023, Monthly Notices of the Royal Astronomical Society, 522, 292

- Breiman (1996) Breiman L., 1996, Machine learning, 24, 123

- Capetti et al. (2017a) Capetti A., Massaro F., Baldi R. D., 2017a, Astronomy & Astrophysics, 598, A49

- Capetti et al. (2017b) Capetti A., Massaro F., Baldi R., 2017b, Astronomy & Astrophysics, 601, A81

- Chen & Guestrin (2016) Chen T., Guestrin C., 2016, in Proceedings of the 22nd acm sigkdd international conference on knowledge discovery and data mining. pp 785–794

- Cortes & Vapnik (1995) Cortes C., Vapnik V., 1995, Machine learning, 20, 273

- Darya et al. (2023) Darya et al. 2023, arXiv preprint arXiv:2304.12729

- Dorogush et al. (2018) Dorogush et al. 2018, arXiv preprint arXiv:1810.11363

- Fanaroff & Riley (1974) Fanaroff B. L., Riley J. M., 1974, Monthly Notices of the Royal Astronomical Society, 167, 31P

- Freire et al. (2022) Freire P. J., Srivallapanondh S., Napoli A., Prilepsky J. E., Turitsyn S. K., 2022, arXiv preprint arXiv:2206.12191

- Friedman (2002) Friedman J. H., 2002, Computational Statistics & Data Analysis, 38, 367

- Gendre & Wall (2008) Gendre M. A., Wall J. V., 2008, Monthly Notices of the Royal Astronomical Society, 390, 819

- Gendre et al. (2010) Gendre M. A., Best P. N., Wall J. V., 2010, Monthly Notices of the Royal Astronomical Society, 404, 1719

- Hsu et al. (2003) Hsu C.-W., Chang C.-C., Lin C.-J., et al., 2003, A practical guide to support vector classification

- Huang et al. (2017) Huang G., Liu Z., Van Der Maaten L., Weinberger K. Q., 2017, in Proceedings of the IEEE conference on computer vision and pattern recognition. pp 4700–4708

- Ke et al. (2017) Ke et al. 2017, Advances in neural information processing systems, 30

- Knowles et al. (2022) Knowles K., et al., 2022, Astronomy & Astrophysics, 657, A56

- Koch et al. (2015) Koch G., Zemel R., Salakhutdinov R., et al., 2015, in ICML deep learning workshop.

- Krizhevsky et al. (2017) Krizhevsky A., Sutskever I., Hinton G. E., 2017, Communications of the ACM, 60, 84

- Kummer et al. (2022) Kummer et al. 2022, arXiv preprint arXiv:2206.15131

- Lukic et al. (2019) Lukic et al. 2019, Monthly Notices of the Royal Astronomical Society, 487, 1729

- Ma et al. (2019) Ma et al. 2019, The Astrophysical Journal Supplement Series, 240, 34

- Maslej-Krešňáková et al. (2021) Maslej-Krešňáková V., El Bouchefry K., Butka P., 2021, Monthly Notices of the Royal Astronomical Society, 505, 1464

- Miraghaei & Best (2017) Miraghaei H., Best P. N., 2017, Monthly Notices of the Royal Astronomical Society, 466, 4346

- Mohan & Rafferty (2015) Mohan N., Rafferty D., 2015, Astrophysics Source Code Library, ascl–1502

- Ndung’u et al. (2023) Ndung’u S., Grobler T., Wijnholds S. J., Karastoyanova D., Azzopardi G., 2023, New Astronomy Reviews, p. 101685

- Ntwaetsile & Geach (2021) Ntwaetsile K., Geach J. E., 2021, Monthly Notices of the Royal Astronomical Society, 502, 3417

- Pasupathy & Connor (1999) Pasupathy A., Connor C. E., 1999, Journal of neurophysiology, 82, 2490

- Pasupathy & Connor (2002) Pasupathy A., Connor C., 2002, Neuroscience, 5, 1252

- Proctor (2011) Proctor D. D., 2011, The Astrophysical Journal Supplement Series, 194, 31

- Rudnick & Owen (1976) Rudnick L., Owen F. N., 1976, The Astrophysical Journal, 203, L107

- Sadeghi et al. (2021) Sadeghi M., Javaherian M., Miraghaei H., 2021, The Astronomical Journal, 161, 94

- Samudre et al. (2022) Samudre A., George L. T., Bansal M., Wadadekar Y., 2022, Monthly Notices of the Royal Astronomical Society, 509, 2269

- Scaife & Porter (2021) Scaife A. M., Porter F., 2021, Monthly Notices of the Royal Astronomical Society, 503, 2369

- Shimwell et al. (2019) Shimwell et al. 2019, Astronomy & Astrophysics, 622, A1

- Shimwell et al. (2022) Shimwell T., et al., 2022, Astronomy & Astrophysics, 659, A1

- Slijepcevic et al. (2022) Slijepcevic et al. 2022, Monthly Notices of the Royal Astronomical Society, 514, 2599

- Sovrasov (2023) Sovrasov V., 2018-2023, ptflops: a flops counting tool for neural networks in pytorch framework, https://github.com/sovrasov/flops-counter.pytorch

- Swart et al. (2022) Swart G. P., Dewdney P. E., Cremonini A., 2022, Journal of Astronomical Telescopes, Instruments, and Systems, 8, 011021

- Tang et al. (2019) Tang H., Scaife A. M., Leahy J., 2019, Monthly Notices of the Royal Astronomical Society, 488, 3358

- Tang et al. (2022) Tang H., Scaife A., Wong O., Shabala S., 2022, Monthly Notices of the Royal Astronomical Society, 510, 4504

- Wang et al. (2017) Wang G., Lopez-Molina C., De Baets B., 2017, in Proceedings of the IEEE International Conference on Computer Vision. pp 4817–4825

- Wang et al. (2020) Wang G., Lopez-Molina C., De Baets B., 2020, Digital Signal Processing, 96, 102592

- Weiner & Ghose (2015) Weiner K. F., Ghose G. M., 2015, Journal of neurophysiology, 113, 3021

- Wielaard et al. (2001) Wielaard D. J., Shelley M., Mclaughlin D., Shapley R., 2001, The Journal of Neuroscience, 21, 14