Classification of Bark Beetle-Induced Forest Tree Mortality Using Deep Learning

Abstract

Bark beetle outbreaks can dramatically impact forest ecosystems and services around the world. Early detection of infested trees is essential for developing effective forest policies and management plans. Despite the visible symptoms of bark beetle infestation, this task remains challenging because of overlapping tree crowns and non-homogeneous crown foliage discoloration. In this work, a deep learning-based method is proposed to classify different stages of bark beetle attack at the individual tree level. The proposed method uses the RetinaNet architecture (exploiting a robust feature extraction backbone pre-trained for tree crown detection) to train a shallow subnetwork that classifies the attack stages of trees in images captured by unmanned aerial vehicles (UAVs). Moreover, various data augmentation strategies are examined to address the class imbalance problem, and affine transformation is found to be the most effective one for this purpose. Experimental evaluations demonstrate the effectiveness of the proposed method, which achieves an average accuracy of 98.95% and outperforms the baseline method by about 10 percentage points. The code and results are publicly available at https://github.com/rudrakshkapil09/BarkBeetle-Damage-Classification-DL

I Introduction

Bark beetle outbreaks significantly impact forests worldwide, disrupting the functioning and properties of natural ecosystems. Depending on various factors (e.g., population density, tree moisture and condition, and beetle and host tree species), a successful bark beetle attack gradually reveals itself by affecting various parts of the host tree [1]. Over time, the crown of an infested tree begins to fade: the foliage gradually changes color from healthy green to yellow, then red, and finally to a leafless (i.e., needle-less) gray. These phases are referred to as attack stages. The rate of discoloration depends on the progress of the bark beetle-induced fungal infection, which interrupts nutrient and water flow through the phloem and xylem, as well as on environmental conditions such as soil moisture content [2]. The fading process is linked to the ecology of bark beetles (see Fig. 1): female bark beetles bore tunnels (called oviposition galleries) in the phloem to lay their eggs, and the hatched larvae excavate additional larval galleries to feed on phloem tissue. If colonization is successful, the tree ultimately dies, and the next generation of beetles disperses from the parental tree in search of new hosts [3]. This paper studies the detection of trees infested by Dendroctonus mexicanus, which is among the most damaging insects to pine forests in Mexico.

Emerging bark beetles disperse in a number of ways in search of new hosts, with the majority engaging in short-range dispersal [4]: they fly below the forest canopy and attack suitable host trees within a few hundred meters. Hence, identifying attacked trees helps determine the next likely locations of infestation and guides beetle management activities (e.g., sanitation, removal, or disposal) to prevent infestations from spreading further [5]. Automated systems can be designed to detect and analyze bark beetle infestations using remote sensing and machine learning (ML), avoiding the labor- and cost-intensive ocular (visual) assessments traditionally employed.

Although satellite and aircraft platforms are widely used at the landscape level, recent research has focused on UAVs for data collection because of their advantages at the individual tree level (e.g., higher spatial and temporal resolution). In addition, classical ML-based approaches (e.g., random forests (RF) or support vector machines (SVM)) require feature selection, which demands prior experience and extensive effort to achieve satisfactory results. Thus, deep learning (DL)-based models are of interest due to their capacity for learning powerful representations and generalizing well by discovering intricate, underlying patterns in the data.

Although DL has achieved great success in a wide range of computer vision applications, only a few recent studies have used DL-based models to classify infested trees from UAV-captured images [7, 8, 9]. Due to the lack of sufficient samples, these works either train customized shallow networks (e.g., six convolutional layers in [7, 8]) or apply transfer learning to models pre-trained on ImageNet [10] (e.g., VGGNet [11] or ResNet [12] in [7, 9]). However, transferring pre-trained models has produced lower accuracy, potentially because the frozen weights were previously trained for generic object classification (e.g., cats vs. dogs).

This paper proposes a DL-based method that exploits a modified RetinaNet architecture (pre-trained for tree crown detection) to classify four infestation stages of trees attacked by bark beetles (see Fig. 2). This simple and efficient architecture handles class imbalance and performs well in aerial imagery applications with dense targets. Moreover, the proposed method considers various data augmentation strategies to alleviate the limited number of samples and investigates their effects on network performance. Empirical evaluations demonstrate that the proposed method considerably outperforms the baseline and classical ML methods.

II Related Work

This section briefly describes DL-based approaches that detect trees infested by different bark beetle species in UAV-captured images (i.e., at the individual tree level).

First, [7] studies the potential of deep neural networks (DNNs) to detect bark beetle outbreaks in fir forests. It employs a two-stage method consisting of classical image processing-based crown detection followed by a six-layer convolutional neural network (CNN) that predicts red- and gray-attacked trees infested by the four-eyed fir bark beetle (Polygraphus proximus Blandford, Coleoptera, Curculionidae). This method uses RGB images captured by a DJI Phantom 3 Pro quadcopter, and its performance is compared with six well-known CNN models (e.g., VGGNet [11], ResNet [12], and DenseNet [13]). Next, [8] investigates the classification accuracy of infested trees in a temperate forest by training two shallow CNNs (with three and six convolutional layers) and applying transfer learning to a pre-trained DenseNet-169 [13]. Despite the availability of multispectral images from a DJI Matrice 210 RTK, the best results of this method are obtained using only the RGB bands for detecting yellow-attacked trees. Finally, [9] evaluates the health status of Maries fir trees by adopting the pre-trained CNN models AlexNet [14], SqueezeNet [15], VGGNet [11], ResNet [12], and DenseNet [13]. Using a DJI Mavic 2 Pro and a DJI Phantom 4 quadcopter, this method selects treetops in RGB images with conventional techniques and classifies healthy and gray-attacked trees.

In contrast, we propose to adapt a state-of-the-art deep network [16] for the classification of bark beetle attacks by exploiting the weights that have been specifically trained for tree crown detection from UAV images and training a shallow subnetwork for discriminating attack stages.

III Proposed Method

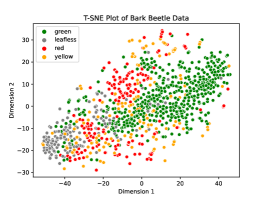

Even though this task may seem like simple color classification, ill-defined attack labels and imbalanced datasets make it more challenging than it appears. For instance, Fig. 3 visualizes the class distribution and some challenging samples, showing that the green and leafless (needle-less) classes overlap with other classes.

Also, Fig. 4 shows the RGB histograms for each class, which reveal similarities between the yellow and red attack stages due to the gradual nature of foliage discoloration.
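Per-class histograms like those in Fig. 4 can be computed with a few lines of code. The sketch below is illustrative only: it assumes 8-bit RGB pixels given as `(R, G, B)` tuples, and the bin count and the histogram-intersection overlap score (a standard similarity measure, not one used in this paper) are our own choices.

```python
from typing import Sequence

Pixel = tuple[int, int, int]  # 8-bit (R, G, B); assumed input format


def rgb_histograms(pixels: Sequence[Pixel], bins: int = 8) -> list[list[float]]:
    """Per-channel histograms (R, G, B), each normalized to sum to 1."""
    width = 256 / bins
    hists = [[0] * bins for _ in range(3)]
    for p in pixels:
        for c in range(3):
            # Clamp to the last bin so that value 255 never overflows.
            hists[c][min(int(p[c] // width), bins - 1)] += 1
    n = len(pixels)
    return [[count / n for count in h] for h in hists]


def intersection(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Histogram intersection: 1.0 for identical histograms, 0.0 for disjoint ones."""
    return sum(min(a, b) for a, b in zip(h1, h2))
```

For example, `intersection(rgb_histograms(yellow)[0], rgb_histograms(red)[0])` would score how much the red-channel distributions of yellow- and red-attacked crowns overlap, where `yellow` and `red` are hypothetical lists of crown pixels.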

Our proposed method is based on the RetinaNet architecture [16], which has been successfully used in other remote sensing applications (e.g., [17]) owing to its ability to detect dense targets in data with highly imbalanced classes. The proposed RetinaNet-based architecture includes a backbone network (i.e., ResNet-50 [12]), a feature pyramid network (FPN), a classification subnetwork, and the focal loss. While the backbone network extracts multi-scale features, the FPN combines semantically strong, low-resolution features with semantically weak, high-resolution ones. The classification subnetwork then predicts the bark beetle attack category (i.e., green (healthy), yellow-attacked, red-attacked, or leafless tree) using the focal loss. This loss function helps handle both the inherent similarity of attack classes and the limited size of the data by focusing training on hard samples and down-weighting easy ones.
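To make the role of the focal loss concrete, the following minimal sketch computes it for a single sample. It assumes a softmax (multi-class) formulation, FL(p_t) = -α_t (1 - p_t)^γ log(p_t), where p_t is the predicted probability of the true class; the original RetinaNet [16] applies the same modulating factor to per-class sigmoid outputs with γ = 2 and α = 0.25 as defaults, and the exact variant and values used here are not stated.

```python
import math
from typing import Optional, Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax over the attack-stage scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def focal_loss(logits: Sequence[float], target: int, gamma: float = 2.0,
               alpha: Optional[Sequence[float]] = None) -> float:
    """Focal loss of one sample: -alpha_t * (1 - p_t)**gamma * log(p_t).

    p_t is the softmax probability of the true class. With gamma = 0 and
    alpha = None this reduces to ordinary cross-entropy.
    """
    p_t = softmax(logits)[target]
    alpha_t = 1.0 if alpha is None else alpha[target]
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = [4.0, 0.0, 0.0, 0.0]  # confidently correct (true class 0)
hard = [0.0, 0.0, 0.0, 0.0]  # maximally uncertain over four stages
ratio_easy = focal_loss(easy, 0) / focal_loss(easy, 0, gamma=0.0)  # about 0.003
ratio_hard = focal_loss(hard, 0) / focal_loss(hard, 0, gamma=0.0)  # about 0.56
```

With γ = 2, the confidently correct sample (p_t ≈ 0.95) keeps well under 1% of its cross-entropy loss, whereas the uncertain one (p_t = 0.25) keeps about 56%, which is how training concentrates on hard samples.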

As shown in Fig. 2, the cropped images of tree crowns are normalized with the mean and standard deviation of the training set images and then fed into the backbone network. Features are forward-propagated through the backbone's bottleneck layers and combined with the layers of the feature pyramid network. Each pyramid level feeds its features to a classification subnetwork consisting of four convolutional layers. The network then outputs a score for each attack stage. Finally, the stage with the highest score is selected (i.e., the scores are converted into a one-hot encoding) to obtain the class prediction for each individual tree. (The bounding box regression subnetwork has been removed, since the tree crowns are already available as cropped images.)
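The preprocessing and decision steps around the network can be sketched as follows. This is a hypothetical illustration, not the authors' code: crops are represented as flat lists of `(R, G, B)` pixels, the standard deviation is the population one, the class order is assumed, and the score vector stands in for whatever the classification subnetwork outputs (how scores from different pyramid levels are combined is not specified here).

```python
import math
from typing import Sequence

Pixel = tuple[float, float, float]  # (R, G, B)
CLASSES = ("green", "yellow", "red", "leafless")  # assumed output order


def channel_stats(train_crops: Sequence[Sequence[Pixel]]) -> tuple[list[float], list[float]]:
    """Per-channel mean and population standard deviation over all training pixels."""
    pixels = [p for crop in train_crops for p in crop]
    n = len(pixels)
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    stds = [math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n) for c in range(3)]
    return means, stds


def normalize(crop: Sequence[Pixel], means: Sequence[float], stds: Sequence[float]) -> list[Pixel]:
    """Standardize every pixel of a crop with the training-set statistics."""
    return [tuple((p[c] - means[c]) / stds[c] for c in range(3)) for p in crop]


def predict(scores: Sequence[float]) -> tuple[str, list[int]]:
    """Return the highest-scoring attack stage and its one-hot encoding."""
    best = max(range(len(scores)), key=lambda i: scores[i])
    return CLASSES[best], [int(i == best) for i in range(len(scores))]
```

Computing the statistics on the training crops only (and reusing them for validation and test crops) avoids leaking test information into preprocessing.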

In contrast to previous studies that train either a shallow network or deep models pre-trained on ImageNet [10], we exploit a deep model pre-trained for tree crown detection (i.e., DeepForest [6]) and train the modified network to classify attack stages.

Because the network weights are initialized with features relevant to tree crowns, the classification subnetwork can learn to differentiate the bark beetle attack stages.

Meanwhile, several data augmentation strategies are considered to address the imbalance in the dataset. Although data augmentation is generally assumed to improve performance, we show that blindly applying these techniques can drastically degrade classification results for this task.

IV Empirical Evaluations

We evaluate the proposed method on the dataset presented in [18], which used a Tarot FY680 Pro hexacopter to capture multiple top-down RGB video sequences of a forested region in Northern Mexico. Five flights in total were conducted at three different average heights above ground (60 m, 90 m, and 100 m) over three months (June, July, and August). The individual frames from each flight were combined into five orthomosaics, and the ground truth for each tree's center and attack stage was made available (see [18] for more details). The proposed method is compared with the baseline method [18] as well as the best-performing SVM, RF, and K-nearest neighbors (KNN) classifiers, whose hyperparameters were tuned by grid search.
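Grid search simply evaluates every combination of candidate hyperparameter values and keeps the best one. A minimal, library-free sketch follows; the parameter names, candidate values, and toy scoring function are hypothetical stand-ins for a real classifier (e.g., KNN) and its validation accuracy.

```python
from itertools import product
from typing import Any, Callable, Mapping, Sequence


def grid_search(param_grid: Mapping[str, Sequence[Any]],
                score_fn: Callable[[dict], float]) -> tuple[dict, float]:
    """Evaluate every combination in the grid and return the best one with its score."""
    names = list(param_grid)
    best_params: dict = {}
    best_score = float("-inf")
    for values in product(*(param_grid[n] for n in names)):
        params = dict(zip(names, values))
        score = score_fn(params)  # e.g. validation accuracy of a fitted classifier
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy scoring function standing in for "train a KNN, measure validation accuracy".
def toy_score(p: dict) -> float:
    return -abs(p["n_neighbors"] - 5) - 0.1 * (p["weights"] == "uniform")


best, score = grid_search({"n_neighbors": [1, 3, 5, 7],
                           "weights": ["uniform", "distance"]}, toy_score)
```

The cost grows multiplicatively with each added hyperparameter, which is manageable here because the classical models are cheap to fit on a few hundred crowns.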

IV-A Implementation Details

We cropped individual tree crowns from the five orthomosaics as square patches and split them into separate training, validation, and test sets for each flight, as shown in Table I. One model is trained per flight, each model is evaluated individually, and the results are averaged. The networks were trained using the AdamW optimizer for 50 epochs with a batch size of 2. Training was performed on an NVIDIA GeForce RTX 3090 GPU, with each model taking approximately 1.5 hours to train. The dataset was augmented by generating minority class samples using i) random affine warps, ii) vertical/horizontal flips, iii) 90°/180°/270° rotations, iv) cropping by a factor of 85%, v) color jittering with random brightness, contrast, and saturation, and vi) Gaussian blurring with a kernel size of 5. Furthermore, early stopping was used to avoid overfitting during training.
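Early stopping is only mentioned in passing above, so the following is a minimal sketch under our own assumptions: it monitors validation loss with a hypothetical `patience` of 3 epochs and caps training at the stated 50 epochs. The validation losses are made up to show when it triggers.

```python
class EarlyStopping:
    """Signal a stop once the monitored validation loss stops improving.

    Monitoring validation loss, `patience`, and `min_delta` are assumptions
    for illustration; the paper does not report these settings.
    """

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.best_epoch = -1
        self.bad_epochs = 0

    def step(self, epoch: int, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            # Improvement: remember it (a real loop would also checkpoint weights here).
            self.best, self.best_epoch, self.bad_epochs = val_loss, epoch, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


MAX_EPOCHS = 50  # epoch budget stated in the text
val_losses = [0.9, 0.6, 0.5, 0.52, 0.51, 0.55, 0.53]  # hypothetical values
stopper = EarlyStopping(patience=3)
for epoch, loss in enumerate(val_losses[:MAX_EPOCHS]):
    if stopper.step(epoch, loss):
        break  # stops after epoch 5; the best weights came from epoch 2
```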

Table I: Number of tree crowns per class and per data subset for each flight.

| Subsets of Samples | Jun60 | Jul90 | Jul100 | Aug90 | Aug100 |
|---|---|---|---|---|---|
| Green Trees | 68 | 81 | 103 | 141 | 98 |
| Yellow Trees | 34 | 19 | 28 | 45 | 49 |
| Red Trees | 24 | 26 | 48 | 52 | 48 |
| Leafless Trees | 25 | 28 | 26 | 33 | 25 |
| Train | 128 | 130 | 174 | 230 | 187 |
| Augmented Train | 232 | 276 | 352 | 480 | 332 |
| Validation | 7 | 7 | 10 | 13 | 11 |
| Test | 16 | 17 | 21 | 28 | 22 |

Table II: Average accuracy of the compared methods and of the data augmentation ablation study.

| Model | Average Accuracy (%) |
|---|---|
| SVM | 53.10 |
| KNN | 53.10 |
| RF | 40.24 |
| Baseline [18] (best result) | 89 |
| Ours (with warping) | 98.95 |
| Ablation study | |
| Ours (without augmentation) | 97.69 |
| Ours (with cropping) | 96.29 |
| Ours (with flips) | 94.74 |
| Ours (with blurring) | 92.23 |
| Ours (with rotation) | 84.71 |
| Ours (with color jittering) | 83.90 |

IV-B Experimental Results

Table II compares the proposed method (including the best model, trained with affine-warping data augmentation) with the baseline and the best-performing classical ML models. According to the results, the proposed method considerably outperforms the cellular automaton baseline, improving average accuracy by 9.95 (and 8.69) percentage points with (and without) data augmentation. The classical ML methods also achieve significantly lower accuracy than our method, which can be explained by the ill-defined separation between classes in the RGB color space, as shown in Fig. 3. The confusion matrices for the challenging flights are shown in Fig. 5. The proposed method has no misclassifications for four of the flights, and in the June 60 m flight only one leafless tree is incorrectly predicted as red, due to considerable overlap from nearby red-attacked trees. Since classical ML methods rely on manual feature selection, applying them directly to raw data results in poor performance. In contrast, the proposed DL-based method automatically learns the most relevant and robust features from the dataset, enabling it to perform significantly better.
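Per-flight accuracy and the reported average can be derived from confusion matrices like those in Fig. 5. The sketch below uses a small hypothetical test set (not the paper's data) in which, as in the June 60 m case, one leafless tree is predicted as red; the class order is assumed.

```python
from typing import Sequence

CLASSES = ("green", "yellow", "red", "leafless")


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str],
                     classes: Sequence[str] = CLASSES) -> list[list[int]]:
    """Rows index the true stage, columns the predicted stage."""
    idx = {c: i for i, c in enumerate(classes)}
    m = [[0] * len(classes) for _ in classes]
    for t, p in zip(y_true, y_pred):
        m[idx[t]][idx[p]] += 1
    return m


def accuracy(m: list[list[int]]) -> float:
    """Fraction of samples on the diagonal (correct predictions)."""
    return sum(m[i][i] for i in range(len(m))) / sum(map(sum, m))


def average_accuracy(per_flight: Sequence[float]) -> float:
    """Mean of the per-flight test accuracies (one model per flight)."""
    return sum(per_flight) / len(per_flight)


# Hypothetical test set (not the paper's data): one leafless crown predicted as red.
y_true = ["green"] * 4 + ["yellow"] * 2 + ["red"] * 2 + ["leafless"] * 2
y_pred = y_true[:-1] + ["red"]
cm = confusion_matrix(y_true, y_pred)
flight_acc = accuracy(cm)  # 9 of 10 correct
```

Averaging this flight with four error-free flights would give 0.98; because each flight's accuracy is weighted equally, one error on a small test set moves the average noticeably.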

IV-C Ablation Study

To assess the effectiveness of data augmentation, various probabilistic augmentation strategies are applied. For each strategy, additional samples of the red, yellow, and leafless classes are randomly generated until each class has as many samples as the green class (i.e., the dataset is balanced). The classification results of the models trained with each strategy are shown in Table II. Affine warping is found to be the most effective strategy, likely because tree crowns are not always circular: warping changes the apparent geometry of the trees, promoting more diversity in the dataset, and it also accounts for angular variation of the UAV during data collection. Unsurprisingly, color jittering causes the largest performance degradation, since it alters the color cues that constitute the visual symptoms of attack. These results can be further explained using the t-SNE visualizations in Fig. 6. The left and middle plots display similar class separations, indicating that warping adds minority class samples without adversely affecting the separation of the classes. In contrast, the right plot, obtained from the color-jittered dataset, shows significantly more overlap between the classes (e.g., in the bottom right corner). The remaining strategies (cropping, flips, blurring, and rotation) also fail to improve on training without augmentation.
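The balancing procedure described above can be sketched as follows. This is a hypothetical, dependency-free illustration rather than the authors' implementation: the nearest-neighbour warp, the border fill value, and the rotation, scale, shear, and shift ranges are all our assumptions. Each minority class is topped up with warped copies of randomly chosen crops until it matches the largest class, which is green in every flight of Table I.

```python
import math
import random
from typing import Any, Dict, List

Grid = List[List[Any]]  # a crop as rows of pixel values (e.g. RGB tuples)


def affine_warp(img: Grid, angle_deg: float = 0.0, scale: float = 1.0,
                shear_deg: float = 0.0, tx: float = 0.0, ty: float = 0.0,
                fill: Any = 0) -> Grid:
    """Nearest-neighbour affine warp about the crop centre (rotation, shear, scale, shift)."""
    h, w = len(img), len(img[0])
    cx, cy = (w - 1) / 2, (h - 1) / 2
    a, s = math.radians(angle_deg), math.radians(shear_deg)
    # Forward linear map M = scale * R(a) * Shear(s)
    m00 = scale * math.cos(a)
    m01 = scale * (math.cos(a) * math.tan(s) - math.sin(a))
    m10 = scale * math.sin(a)
    m11 = scale * (math.sin(a) * math.tan(s) + math.cos(a))
    det = m00 * m11 - m01 * m10
    i00, i01, i10, i11 = m11 / det, -m01 / det, -m10 / det, m00 / det  # M^-1
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: find the source pixel that lands on (x, y)
            dx, dy = x - cx - tx, y - cy - ty
            sx = round(i00 * dx + i01 * dy + cx)
            sy = round(i10 * dx + i11 * dy + cy)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out


def random_affine(img: Grid, rng: random.Random) -> Grid:
    """One random warp; the parameter ranges are illustrative assumptions."""
    h, w = len(img), len(img[0])
    return affine_warp(img,
                       angle_deg=rng.uniform(-15, 15),
                       scale=rng.uniform(0.9, 1.1),
                       shear_deg=rng.uniform(-10, 10),
                       tx=rng.uniform(-0.1, 0.1) * w,
                       ty=rng.uniform(-0.1, 0.1) * h)


def balance_with_warps(by_class: Dict[str, List[Grid]], rng: random.Random) -> Dict[str, List[Grid]]:
    """Add warped copies of randomly chosen crops until every class matches the largest one."""
    target = max(len(crops) for crops in by_class.values())
    return {label: list(crops) + [random_affine(rng.choice(crops), rng)
                                  for _ in range(target - len(crops))]
            for label, crops in by_class.items()}
```

Warping only the generated copies leaves the original crops untouched, so the majority class is never altered and every class ends up with the same count.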

V Conclusion

In this work, a DL-based approach was proposed to classify bark beetle-infested trees. Based on the RetinaNet architecture, the proposed method trains a shallow subnetwork on top of a deep network initialized with weights trained to detect tree crowns in UAV images. To overcome the data imbalance problem, different data augmentation strategies were investigated, and affine warping was found to be the most effective. Despite inter-class overlap and intra-class non-homogeneity in the dataset, the proposed method achieves an average accuracy of 98.95%, significantly outperforming the baseline method. Future work involves accurately detecting tree crowns in aerial orthomosaic images and discriminating non-beetle factors that may cause similar foliage discoloration (e.g., drought).

Acknowledgments

This work was supported in part by the Federal-Provincial Mountain Pine Beetle Research Partnership program.

References

- [1] N. Erbilgin, L. Zanganeh, J. G. Klutsch, S.-h. Chen, S. Zhao, G. Ishangulyyeva, S. J. Burr, M. Gaylord, R. Hofstetter, K. Keefover-Ring, K. F. Raffa, and T. Kolb, “Combined drought and bark beetle attacks deplete non-structural carbohydrates and promote death of mature pine trees,” Plant, Cell & Environment, vol. 44, no. 12, pp. 3866–3881, 2021.

- [2] O. F. Niemann and F. Visintini, “Assessment of potential for remote sensing detection of bark beetle-infested areas during green attack: A literature review,” Natural Resources Canada, Canadian Forest Service, Pacific Forestry Centre, 2005.

- [3] L. Safranyik and A. L. Carroll, “The biology and epidemiology of the mountain pine beetle in lodgepole pine forests,” The Mountain Pine Beetle: A Synthesis of Its Biology, Management and Impacts on Lodgepole Pine, pp. 3–66, 2006.

- [4] L. Safranyik, D. A. Linton, R. Silversides, and L. H. McMullen, “Dispersal of released mountain pine beetles under the canopy of a mature lodgepole pine stand,” Journal of Applied Entomology, vol. 113, no. 1-5, pp. 441–450, 1992.

- [5] R. J. Hall, G. Castilla, J. C. White, B. J. Cooke, and R. S. Skakun, “Remote sensing of forest pest damage: A review and lessons learned from a Canadian perspective,” The Canadian Entomologist, vol. 148, no. S1, pp. S296–S356, 2016.

- [6] B. G. Weinstein, S. Marconi, M. Aubry-Kientz, G. Vincent, H. Senyondo, and E. P. White, “DeepForest: A Python package for RGB deep learning tree crown delineation,” Methods in Ecology and Evolution, vol. 11, no. 12, pp. 1743–1751, 2020.

- [7] A. Safonova, S. Tabik, D. Alcaraz-Segura, A. Rubtsov, Y. Maglinets, and F. Herrera, “Detection of fir trees (Abies sibirica) damaged by the bark beetle in unmanned aerial vehicle images with deep learning,” Remote Sensing, vol. 11, no. 6, 2019.

- [8] R. Minařík, J. Langhammer, and T. Lendzioch, “Detection of bark beetle disturbance at tree level using UAS multispectral imagery and deep learning,” Remote Sensing, vol. 13, no. 23, 2021.

- [9] H. T. Nguyen, M. L. Lopez Caceres, K. Moritake, S. Kentsch, H. Shu, and Y. Diez, “Individual sick fir tree (Abies mariesii) identification in insect infested forests by means of UAV images and deep learning,” Remote Sensing, vol. 13, no. 2, 2021.

- [10] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei, “ImageNet: A large-scale hierarchical image database,” in Proc. CVPR, 2009, pp. 248–255.

- [11] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in Proc. ICLR, 2014, pp. 1–14.

- [12] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. CVPR, 2016, pp. 770–778.

- [13] G. Huang, Z. Liu, L. van der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” in Proc. CVPR, 2017, pp. 2261–2269.

- [14] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” in Proc. NIPS, vol. 2, 2012, pp. 1097–1105.

- [15] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” in Proc. IEEE CVPR, 2018, pp. 7132–7141.

- [16] T.-Y. Lin, P. Goyal, R. Girshick, K. He, and P. Dollár, “Focal loss for dense object detection,” IEEE Trans. PAMI, vol. 42, no. 2, pp. 318–327, 2020.

- [17] W. Yu, T. Yang, and C. Chen, “Towards resolving the challenge of long-tail distribution in UAV images for object detection,” in Proc. WACV, 2021, pp. 3258–3267.

- [18] S. Schaeffer, M. Jimenez-Lizarraga, S. Rodriguez-Sanchez, G. Cuéllar-Rodríguez, O. Aguirre-Calderón, A. Reyna-González, and A. Escobar, “Detection of bark beetle infestation in drone imagery via thresholding cellular automata,” Journal of Applied Remote Sensing, vol. 15, no. 1, pp. 1–20, 2021.