icml

ClassContrast: Bridging the Spatial and Contextual Gaps for Node Representations

Abstract

Graph Neural Networks (GNNs) have revolutionized the domain of graph representation learning by utilizing neighborhood aggregation schemes in many popular architectures, such as message passing graph neural networks (MPGNNs). This scheme involves iteratively calculating a node’s representation vector by aggregating and transforming the representation vectors of its adjacent nodes. Despite their effectiveness, MPGNNs face significant issues, such as oversquashing, oversmoothing, and underreaching, which hamper their effectiveness. Additionally, the reliance of MPGNNs on the homophily assumption, where edges typically connect nodes with similar labels and features, limits their performance in heterophilic contexts, where connected nodes often have significant differences. This necessitates the development of models that can operate effectively in both homophilic and heterophilic settings.

In this paper, we propose a novel approach, ClassContrast, grounded in Energy Landscape Theory from Chemical Physics, to overcome these limitations. ClassContrast combines spatial and contextual information, leveraging a physics-inspired energy landscape to model node embeddings that are both discriminative and robust across homophilic and heterophilic settings. Our approach introduces contrast-based homophily matrices to enhance the understanding of class interactions and tendencies. Through extensive experiments, we demonstrate that ClassContrast outperforms traditional GNNs in node classification and link prediction tasks, proving its effectiveness and versatility in diverse real-world scenarios.

Code — https://anonymous.4open.science/r/CC-77A6/

1 Introduction

Over the past decade, GNNs have made a breakthrough in graph machine learning by adapting several deep learning models, originally developed for domains such as computer vision and natural language processing, to the graph setting (Section 2) . By using different approaches and architectures, GNNs produce powerful node embeddings by integrating neighborhood information with domain features. While recent GNN models consistently outperform the state-of-the-art (SOTA) results, in the seminal papers (Xu et al. 2019; Morris et al. 2019), the authors showed that the expressive power of message passing GNNs (MP-GNNs) is about the same as a well-known former method in graph representation learning, i.e. the Weisfeiler-Lehman algorithm (Shervashidze et al. 2011). Furthermore, recent studies show that GNNs suffer from significant challenges like oversquashing (Topping et al. 2021), oversmoothing (Oono and Suzuki 2020), and underreaching (Barceló et al. 2020).

Another major issue with MPGNNs is their reliance on the (useful) homophily assumption, where edges typically connect nodes sharing similar labels and node features networks (Sen et al. 2008). However, many real-world scenarios exhibit heterophilic behavior, such as in protein and web networks (Pei et al. 2019) where conventional GNNs (Hamilton et al. 2017; Veličković et al. 2018a) may experience significant performance degradation, sometimes even underperforming compared to a multilayer perceptron (MLP) (Zhu et al. 2020; Luan et al. 2022). Therefore, there is a pressing need to develop models that function effectively in both homophilic and heterophilic settings.

We hypothesize that the limitations in current methods arise from the ineffective utilization of two key types of information in graphs: spatial information, which captures the local neighborhood’s class distribution for each node, and contextual information, which assesses each node’s similarity or dissimilarity to class representatives within a latent space, incorporating information from relevant but distant nodes. To address this issue, we leverage Energy Landscape Theory (Wales 2002; Wales et al. 1998) from Chemical Physics, a framework used to describe and analyze the behavior of complex systems, such as protein folding (Plotkin and Onuchic 2002). This theory visualizes the potential energy of a system as a multidimensional landscape, with valleys and peaks representing states of different energies.

We apply this theory to model a node’s position within a network, aiming to understand how nodes form connections based on specific contextual influences encoded as node attributes. In ClassContrast, integrating spatial and contextual information is similar to navigating an energy landscape where each node’s embedding seeks a low-energy state that is both highly discriminative and informative. In a homophilic setting, this state ideally balances similarity to nodes within the same class (lowering energy) and dissimilarity to nodes in different classes (avoiding overly general local minima). In a heterophilic setting, the model uses both types of information to address the complexities of non-conforming node relationships, where nodes that are dissimilar may still be connected. The energy landscape model helps identify and strengthen valuable connections that cross traditional boundaries, enhancing the model’s classification accuracy in environments with non-standard “like-likes-like” patterns. This dual capability allows ClassContrast to generate robust embeddings that perform well across varied graph structures, regardless of network homophily or heterophily.

Overall, ClassContrast effectively integrates spatial and contextual information to produce robust node embeddings, demonstrating impressive performance in node classification and link prediction tasks.

Our contributions can be summarized as follows:

-

We propose a physics-inspired approach, leveraging Energy Landscape Theory, to construct class-aware node representations.

-

We introduce contrast-based homophily matrices that provide detailed insights into interactions and homophily tendencies among classes.

-

Our ClassContrast framework combines spatial and contextual information, achieving highly competitive results with state-of-the-art GNNs in both node classification and link prediction tasks.

-

Our experiments show that ClassContrast can be smoothly integrated with GNN models, boosting performance across both homophilic and heterophilic settings, proving its versatility across various real-world applications.

2 Related Work

Over the past decade, GNNs have dominated graph representation learning, especially excelling in node classification tasks (Xiao et al. 2022). After the success of Graph Convolutional Networks (GCN) (Kipf and Welling 2017), using a neighborhood aggregation strategy to perform convolution operations on graph, subsequent efforts focused on modifying, approximating, and extending the GCN approach, e.g., involving attention mechanisms (GAT) (Veličković et al. 2018a), sampling and aggregating information from a node’s local neighborhood (GraphSAGE) (Hamilton et al. 2017). However, a notable scalability challenge arose due to its dependence on knowing the full graph Laplacian during training. To address this limitation, numerous works emerged to enhance node classification based on GCN, such as methods , importance sampling node (FastGCN) (Chen, Ma, and Xiao 2018), adaptive layer-wise sampling with skip connections (ASGCN) (Huang et al. 2018), adapting deep layers to GCN architectures (deepGCN) (Li et al. 2019), incorporating node-feature convolutional layers (NFC-GCN) (Zhang et al. 2022).

Two fundamental shortcomings of MPGNNs are the loss of structural information within node neighborhoods and the difficulty in capturing long-range dependencies. To address these inherent issues, numerous studies conducted in the past few years have introduced various enhancements. These improvements encompass diverse aggregation schemes, such as skip connections(Chen et al. 2020), geometric methods(Pei et al. 2019), aiming to mitigate the risk of losing crucial information. Additionally, advancements like implicit hidden layers (Geng et al. 2021) and multiscale modeling (Liu et al. 2022) have been explored to augment the GNN’s capabilities.

Another major issue with MPGNNs is that they operate under the homophily assumption and exhibit poor performance in heterophilic networks (Zhu et al. 2020; Luan et al. 2023). Hence, in the past few years, various efforts have been undertaken to develop GNNs that function effectively in heterophilic settings (Pei et al. 2019; Lim et al. 2021a; Luan et al. 2022; Zheng et al. 2022; Xu et al. 2023).

However, to the best of our knowledge, no previous work has developed a systematic approach to analyze class-wise relations.

3 Energy Landscape Theory and Its Application to Node Representation

Energy Landscape Theory originates from the field of chemical physics (Wales 2002), where it is utilized to describe the potential energy of a molecular system as a multidimensional landscape. This landscape is characterized by a series of valleys and peaks, each representing different states of energy. Valleys correspond to stable states (or low-energy configurations) where a system tends to reside under normal conditions, while peaks represent high-energy states that are less likely to be occupied.

In the context of graph neural networks, we apply the principles of Energy Landscape Theory to the problem of node representation learning. Here, we envisage nodes as entities navigating through an ’energy landscape’ constructed from the network structure and node attributes (see Appendix E for connections to the Structural Balance Theory (Heider 1946) on social networks). This landscape is shaped by the distribution of node features and the topological arrangement of the graph, wherein each node seeks a position corresponding to a state of minimal energy. For example, a fundamental result in social networks states that nodes aim to close friendship triangles (Granovetter 1973) to achieve a lower state (and foster trust). The absence of closed triangles indicates higher energy states, which may signify weaker relationships or even potential animosity. Such relationships are studied in signed network analysis using balance and structural theories (Leskovec, Huttenlocher, and Kleinberg 2010). We analogously define energy states by the optimal embedding that reflects accurate class membership and distinctiveness from nodes of different classes, which we measure with a class-aware definition of homophily (see Section 4.4). This approach allows us to conceptualize the embedding process as a dynamic system where nodes move towards lower-energy configurations that represent stable, informative, and discriminative embeddings in the context of the given graph.

Why is Energy Landscape Approach Useful?

We posit that a graph exhibits not merely a singular energy peak or valley, but multiple such features shaped by group formation dynamics. Consequently, nodes may display either homophilous or heterophilous behaviors depending on their group affiliations within a single graph. This variability implies that node representation should not uniformly assume proximity of neighbors as a reliable descriptor of a node’s characteristics. For example, Zhu et al. report that “in the Chameleon dataset, intra-class similarity is generally higher than inter-class similarity so MLP works better than GCN” (Zhu et al. 2020). Therefore, message-passing-based schemes should be employed selectively, based primarily on the presence of demonstrable homophily within groups. In the absence of such homophily, the system should learn to model heterophilic behaviors effectively. To this end, we claim that our framework, ClassContrast, is capable of simultaneously modeling both homophilous and heterophilous behaviors, adapting its approach as necessary for different groups.

We begin by detailing ClassContrast, focusing on two primary objectives: the discovery of spatial and contextual information. The first objective is to extract information from the node’s neighborhood (spatial), while the second is to derive insights from the node’s domain attributes (contextual). Our framework is visualized in Figure 1.

4 ClassContrast Embeddings

In the following discussion, we adhere to the notation where represents the vertices (nodes), represents edges, represents edge weights, and represents node features. If no node features are provided, . Consider a graph where nodes are categorized into classes. For each node , we aim to construct an embedding of dimension , where is determined by specifics of the graph such as its directedness, weighted nature, and the format of domain features.

Specifically, we generate spatial embeddings , derived from local neighborhood information, and contextual embeddings , derived from node properties specific to the domain. Each embedding, and , is -dimensional, with one entry corresponding to each class (ClassContrast coordinates). These embeddings are then concatenated to form the final embedding of size . For simplicity, we focus on applications in an inductive setting. In Section C.2, we discuss adaptations of our methods to accommodate transductive settings.

4.1 Spatial Node Embedding

Spatial node embedding leverages the structure of a graph to measure the proximity of a node to various known classes within that graph. For each node in the graph with vertices , the -hop neighborhood of , denoted as , includes all vertices such that the shortest path distance between and is at most hops: . Here, represents the shortest path length between and . If no path exists, then . In the case of a directed graph , edge directions are disregarded when calculating distances.

Assume that there are classes of nodes, represented as . Let be the set of nodes with known labels in the training dataset.

The feature vector for node is initialized as the distribution of class occurrences: where counts how many neighbors of belong to class within the k-hop neighborhood intersecting with : . Consider the toy example of Figure 2(a), where the (k=1)-hop neighborhood of node contains node from class and node from class . As a result, . We extend the spatial embeddings to directed and weighted graph cases by incorporating edge directions and weights in additional dimensions (see Appendix Section C.3) for the definitions.

4.2 Contextual Node Embedding

Inspired by Energy Landscapes, contextual node embeddings utilize the attribute space to measure the distance of a node to known classes. In social networks, node attributes might include user-specific details (Mislove et al. 2007), while in citation networks, they often involve the presence or absence of certain keywords (Tang et al. 2008). These attributes are crucial for predicting node behavior as they provide intrinsic information about the nodes themselves. Hence, we develop a general and versatile approach to effectively utilize this information in node classification task.

We use the term contextual node embeddings to highlight the importance of context within the graph structure, beyond isolated node attributes. Unlike attribute embeddings, which embed node characteristics directly, contextual node embeddings integrate the node’s attributes and its relational and structural position in the graph. This is crucial in scenarios where class behavior and node features interact, such as an article’s topic influencing its citation links.

A real-life context example.

Consider the CORA dataset where publications that are frequently cited by other papers, especially by those within the same class (topic), may be considered authoritative in their field. An embedding that captures this citation context can differentiate between a highly influential paper and one that is similarly cited (by papers from other classes) but less authoritative.

In contextual embeddings we identify two cases on attribute availability and quality. First, attributes may already be available in high quality vectors, such as word embeddings (e.g., word2vec (Mikolov et al. 2013a, b)). We directly integrate these vectors without additional processing, as detailed in Appendix D. When node attributes are available but unprocessed, we define an attribute vector for each node , which maps nodes to an -dimensional real vector space. For each class , we identify a cluster of points and establish a representative landmark in for this cluster.

Definition 4.1 (Class Landmark).

Given a node class and its corresponding cluster of node embeddings , the landmark is computed as the centroid of the points in , normalized by the number of nodes in :

Definition 4.2 (Distances to Class Landmarks).

Let be a metric that measures the distance in . For a node with an embedding , the distance to the class landmark is defined as: for each class . These distances help in assessing how similar or distinct the node is from each class represented by the landmarks.

Definition 4.3 (Contextual Embedding).

Given a node and a set of class landmarks , one for each class , the contextual embedding of node is defined by a vector of distances from the node’s embedding to each class landmark: where for each class (see Figure 2(b)). This vector encapsulates the node’s position relative to each class within the attribute space.

Landmark Sets.

To obtain information about the domain attributes of nodes for each class, multiple landmarks can be defined. For instance, while the first landmark represents the center of each cluster , the second landmark may capture the most frequent attributes of (see Appendix D). For each type of landmark, a corresponding distance or similarity measure is defined, such as Euclidean or cosine distance for real-valued attribute vectors, or Jaccard similarity for categorical attributes (Niwattanakul et al. 2013). Subsequently, another -dimensional vector is obtained, where for each class . Landmark set extensions are explained in Appendix D.

4.3 ClassContrast Embedding

We may expand spatial neighborhoods, and extend landmark sets arbitrarily. However, for exposition purposes, we will define ClassContrast embeddings over a directed graph where each node’s neighborhood is considered up to two hops and each class has two landmarks. We define the ClassContrast Embedding of a node by concatenating spatial and contextual embeddings. Specifically, we consider:

Spatial embeddings from incoming and outgoing 1-hop neighborhoods ( and ) and the 2-hop neighborhood ().

Contextual embeddings based on distances to two class landmarks ( and ), representing domain-specific characteristics.

Definition 4.4 (ClassContrast Embedding).

The final embedding for node is a concatenated vector of these embeddings, resulting in a -dimensional vector where is the number of classes. For clarity, we represent in a 2D format () where each column corresponds to one class, and each row represents one type of "contrast" vector:

4.4 Homophily and ClassContrast

In this section, we develop the final component of the energy landscape theory for ClassContrast that will enable us to quantify the energy landscapes between classes.

In recent years, several metrics have been introduced to study the effect of homophily on graph representation learning (Lim et al. 2021b; Jin et al. 2022; Luan et al. 2022; Platonov et al. 2023) (see overview in Section B.1). A widely used metric, the node homophily ratio, is defined as , where represents the number of adjacent nodes to sharing the same class. A graph is termed homophilic if , and heterophilic otherwise.

Although homophily measures similarity across an entire graph, individual groups within a graph may display different homophily behaviors. Our ClassContrast approach leverages class interactions and introduces a class-aware homophily score through non-symmetric measures:

Definition 4.5 (ClassContrast (CC) Homophily Matrices).

Let be graph with node classes . Let be a spatial or contextual embedding. We define the homophily rate between classes and as where is the entry of . Considering pairwise homophily rates of all classes, we create the matrix as the Homophily Matrix of .

In Appendix Table 9, we provide examples of CC homophily matrices. This matrix provides detailed insights into both intra-class (homophily) and inter-class (heterophily) interactions, with diagonal elements indicating the propensity for nodes to connect within their own class.

Definition 4.6 ( Homophily Ratio).

For a given Homophily Matrix , Homophily is defined as the average of the diagonal elements. i.e, .

For example, the Spatial-1 Homophily ratio reveals a node’s likelihood to connect with nodes of the same class within its immediate neighborhood. Homophily matrices not only introduce new ways to measure homophily but also relates to various existing homophily metrics:

Theorem 4.7.

For a given , let be CC spatial-1 vector. Let be the vector where the entry corresponding to the class of is set to . Then, .

The proof of this theorem and further discussions on how our ClassContrast homophily relates to other forms of homophily are detailed in the Appendix B.

5 Experiments

We evaluate ClassContrast in two tasks: node classification and link prediction. We share our Python implementation at https://anonymous.4open.science/r/CC-77A6/

Datasets.

We use three widely-used homophilic datasets which are citation networks, CORA, CITESEER, and PUBMED (Sen et al. 2008), two Open Graph Benchmark (OGB) datasets: OGBN-ARXIV and OGBN-MAG (Hu et al. 2020), and five heterophilic datasets, TEXAS, CORNELL, WISCONSIN, CHAMELEON and SQUIRREL (Pei et al. 2019). The datasets are described in Appendix D and their statistics are given in Table 1.

Model Setup and Metrics.

For the three citation networks and five heterophilic datasets, the same setup is used as outlined by Bodnar et al. (Bodnar et al. 2022): we split nodes of each class into 48%, 32%, and 20% for training, validation and testing and report averages of 10 runs. We follow the train/validation/test split set by the OGB to report the accuracy score for OGBN-ARXIV and OGBN-MAG (Hu et al. 2020).

Embeddings.

We give the details of ClassContrast embeddings for each dataset in appendix D. The dimensions of the embeddings used for each dataset are given in Table 11.

Model Hyperparameters.

ClassContrast uses an MLP classifier (Haykin 1998). We configure the architecture with neurons for the hidden layers, a learning rate of , and employed the Adam optimization method for training for 500 epochs. We utilized the ReLU activation function for the hidden layers and applied a L2 regularization value of to mitigate overfitting.

Hardware.

We ran non-OGB experiments on a machine with Apple m2 chip Processor (8-core CPU, 10-core GPU, and, 6-core Neural Engine), and 16Gb of RAM. For the OGB data sets, we ran experiments on Google Colab with Intel(R) processor, 2.20GHz CPU, V100 GPUs, and 25.5Gb of RAM.

Runtime.

ClassContrast is computationally efficient. ClassContrast requires approximately hours for OGBN-ARXIV and hours for OGB-MAG to create all embeddings, and about minutes to train for all OGB datasets. End-to-end, our model processes three citation network datasets and three WebKb datasets, including the CHAMELEON dataset, in under a minute. Moreover, PUBMED takes about minutes and SQUIRREL approximately minutes. For comparison, RevGNN-Deep requires days and RevGNN-Wide takes days to train for 2000 epochs on a single NVIDIA V100 for the OGB datasets.

5.1 Node Classification Results

Baselines.

We compare ClassContrast with 12 state-of-the-art models (Table 2) and three classical models: GCN (Kipf and Welling 2017), GraphSage (Hamilton et al. 2017) and GAT (Veličković et al. 2018a). We choose ALT-APPNP (Liang et al. 2023) and LRGNN (Liang et al. 2023) SOTA models as they are recent and outperform the competitors in their respective papers. All baselines in Tables 2 and 3 use the transductive setting except for GraphSAGE, which uses the inductive setting.

| Datasets | Nodes | Edges | Classes | Features | Tr/Val/Test () | Hom. |

| CORA | 2,708 | 5,429 | 7 | 1,433 | 48/32/20 | 0.83 |

| CITESEER | 3,312 | 4,732 | 6 | 3,703 | 48/32/20 | 0.72 |

| PUBMED | 19,717 | 44,338 | 3 | 500 | 48/32/20 | 0.79 |

| OGBN-ARXIV | 169,343 | 1,166,243 | 40 | 128 | OGB | 0.65 |

| OGBN-MAG | 1,939,743 | 21,111,007 | 349 | 128 | OGB | 0.30 |

| TEXAS | 183 | 309 | 5 | 1,703 | 48/32/20 | 0.10 |

| CORNELL | 183 | 295 | 5 | 1,703 | 48/32/20 | 0.39 |

| WISCONSIN | 251 | 499 | 5 | 1,703 | 48/32/20 | 0.15 |

| CHAMELEON | 2,277 | 36,101 | 5 | 2,325 | 48/32/20 | 0.25 |

| SQUIRREL | 5,201 | 2,17,073 | 5 | 2,089 | 48/32/20 | 0.22 |

| Dataset | CORA | CITESEER | PUBMED | TEXAS | CORNELL | WISC. | CHAM. | SQUIR. |

|---|---|---|---|---|---|---|---|---|

| Node Homophily | 0.83 | 0.72 | 0.79 | 0.10 | 0.39 | 0.15 | 0.25 | 0.22 |

| GCN (Kipf and Welling 2017) | 86.141.10 | 75.511.28 | 87.220.37 | 56.225.81 | 60.545.30 | 51.96 5.17 | 65.943.23 | 49.632.43 |

| GraphSAGE (Hamilton et al. 2017) | 86.261.54 | 76.041.30 | 88.450.50 | 75.955.01 | 75.955.01 | 81.185.56 | 58.731.68 | 41.610.74 |

| GAT (Veličković et al. 2018a) | 85.031.61 | 76.551.23 | 87.301.10 | 54.326.30 | 61.895.05 | 49.414.09 | 60.262.50 | 40.721.55 |

| Geom-GCN (Pei et al. 2019) | 85.351.57 | 78.021.15 | 89.950.47 | 66.762.72 | 60.543.67 | 64.513.66 | 60.002.81 | 38.150.92 |

| H2GCN (Zhu et al. 2020) | 87.871.20 | 77.111.57 | 89.490.38 | 84.867.23 | 82.705.28 | 87.654.98 | 60.112.15 | 36.481.86 |

| GPRGCN (Chien et al. 2020) | 87.951.18 | 77.131.67 | 87.540.38 | 81.355.32 | 78.116.55 | 82.556.23 | 46.581.71 | 31.611.24 |

| GCNII (Chen et al. 2020) | 88.371.25 | 77.331.48 | 90.150.43 | 77.573.83 | 77.863.79 | 80.393.40 | 63.863.04 | 38.471.58 |

| WRGAT (Suresh et al. 2021) | 88.202.26 | 76.811.89 | 88.520.92 | 83.625.50 | 81.623.90 | 86.983.78 | 65.240.87 | 48.850.78 |

| LINKX (Lim et al. 2021a) | 84.641.13 | 73.190.99 | 87.860.77 | 74.608.37 | 77.845.81 | 75.495.72 | 68.421.38 | 61.811.80 |

| NLGAT (Liu, Wang, and Ji 2021) | 88.501.80 | 76.201.60 | 88.200.30 | 62.607.10 | 54.707.60 | 56.907.30 | 65.701.40 | 56.802.50 |

| GloGNN++(Li et al. 2022) | 88.331.09 | 77.221.78 | 89.240.39 | 84.054.90 | 85.955.10 | 88.043.22 | 71.211.84 | 57.881.76 |

| GGCN (Yan et al. 2022) | 87.951.05 | 77.141.45 | 89.150.37 | 84.864.55 | 85.686.63 | 86.863.29 | 71.141.84 | 55.171.58 |

| Gen-NSD (Bodnar et al. 2022) | 87.301.15 | 76.321.65 | 89.330.35 | 82.975.13 | 85.686.51 | 89.213.84 | 67.931.58 | 53.171.31 |

| LRGNN (Liang et al. 2023) | 88.300.90 | 77.501.30 | 90.200.60 | 90.304.50 | 86.505.70 | 88.203.50 | 79.162.05 | 74.381.96 |

| ALT-APPNP (Xu et al. 2023) | 89.601.30 | 79.901.20 | 90.300.50 | 89.502.20 | 90.404.50 | 88.603.30 | 77.001.90 | 69.401.50 |

| ClassContrast | 88.651.25 | 86.861.91 | 91.000.45 | 90.544.80 | 90.812.90 | 93.332.95 | 84.081.55 | 54.651.27 |

| Model | ARXIV | MAG |

|---|---|---|

| GCN (Kipf and Welling 2017) | 71.74 | 34.87 |

| GraphSAGE (Hamilton et al. 2017) | 71.49 | 37.04 |

| GAT (Veličković et al. 2018a) | 73.91 | 37.67 |

| DeepGCN (Li et al. 2019) | 72.32 | – |

| DAGNN (Liu, Gao, and Ji 2020) | 72.09 | – |

| UniMP-v2 (Shi et al. 2020) | 73.92 | – |

| RevGAT (Li et al. 2021) | 74.26 | – |

| RGCN (Yu et al. 2022a) | – | 47.96 |

| HGT (Yu et al. 2022a) | – | 49.21 |

| R-HGNN (Yu et al. 2022a) | – | 52.04 |

| LEGNN (Yu et al. 2022b) | 73.71 | 53.78 |

| ClassContrast | 71.56 | 50.03 |

Performance.

We present the classification results in Table 2 and Table 3. OGB results are shown in Table 3. ClassContrast performance is within 3% of the SOTA accuracy for the OGB datasets. Table 2 shows that ClassContrast outperforms SOTA models in 6 out of 8 datasets. In particular, ClassContrast achieves a median accuracy of compared to of the best baseline, ALT-APPNP (Xu et al. 2023). The improvements are most notable in the CITESEER () and Chameleon () datasets, underlining the contrasive power of our approach in both homophilic and heterophilic datasets.

SQUIRREL performance.

An exception to the SOTA values in Table 2 is the SQUIRREL dataset, which allows us to showcase the use of energy landscape theory through homophily, as follows.

SQUIRREL homophily matrices in Table 6 reveal significant diversity in class interactions, particularly evident in the "Spatial-1" and "Spatial-2" matrices. These matrices show substantial inter-class interactions, sometimes exceeding intra-class interactions. Such a pattern can be interpreted using energy landscape theory, where the energy barriers between different classes are low, suggesting a disordered energy landscape. This scenario indicates that the energy required for a node to transition from one class to another, in terms of classification, is reduced. Consequently, there isn’t a well-defined ’valley’ or ’well’ that confines nodes strictly within one class, complicating the classification model’s ability to accurately define class boundaries and leading to lower accuracy. Please see Table 10 where the inter-calls interactions are more moderate for the Wisconsin dataset. Furthermore, we can contrast the SQUIRREL and WISCONSIN matrices with those of the highly homophilous CORA dataset in Table 9, where diagonal values are more pronounced.

Domain homophily matrix in Table 6 shows some improvement in intra-class interactions, yet the highest homophily ratios remain relatively modest (around ). This implies that while domain features do provide some level of class-specific clustering, the low energy states are shallow, allowing nodes to exhibit characteristics similar to multiple classes. Such shallow states suggest that during the learning process, nodes are not distinctly categorized into deep, well-separated classes, which can influence the training dynamics and lead to blurred class separations.

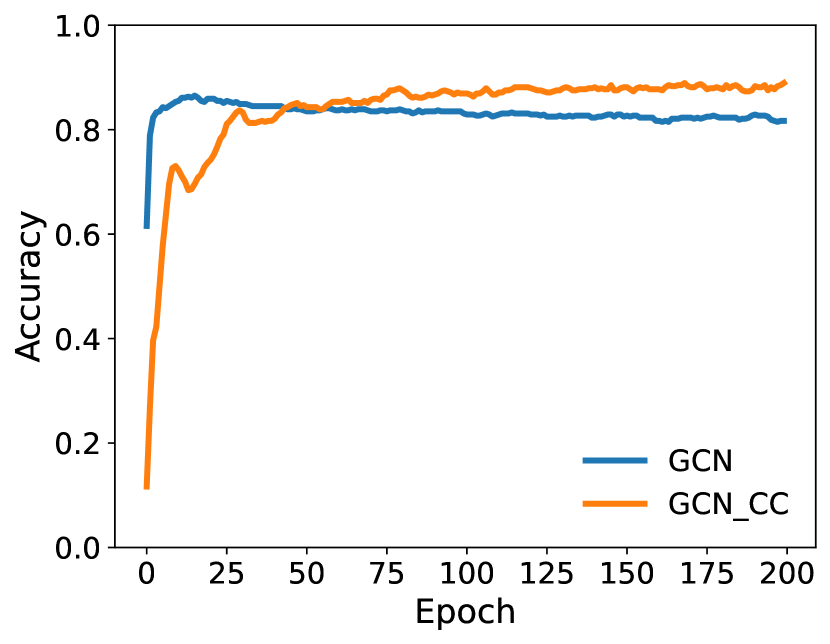

GNNs with ClassContrast Embeddings.

To evaluate the integration of ClassContrast (CC) with GNNs, we replace the initial node embeddings in GNNs with CC embeddings and assess their effectiveness. Accuracy results are presented in Table 4 and Figures 3 and 4 with additional details given in Appendix A. The results indicate that CC embeddings accelerate the convergence of GNN performance while maintaining a consistent accuracy gap over the vanilla model. Furthermore, combining CC with the recent LINKX model yields the best results across all baselines for both datasets, highlighting a promising avenue for enhancing GNN performance.

| Dataset | Model | GNN | CC-GNN | Imp.() |

|---|---|---|---|---|

| CORA | GCN | 86.141.10 | 88.430.92 | 2.29 |

| SAGE | 86.261.54 | 90.081.37 | 3.82 | |

| GAT | 85.031.61 | 87.182.12 | 2.15 | |

| LINKX | 84.641.13 | 90.061.28 | 5.42 | |

| TEXAS | GCN | 56.225.81 | 70.816.43 | 14.59 |

| SAGE | 75.955.01 | 87.846.65 | 11.89 | |

| GAT | 54.326.30 | 62.165.70 | 7.84 | |

| LINKX | 74.608.37 | 93.784.04 | 19.18 |

| Dataset | CORA | CITESEER | PUBMED | WISC. | CORNELL | TEXAS | Overall |

|---|---|---|---|---|---|---|---|

| Node2vec (Grover et al. 2016) | 0.8560.015 | 0.8940.014 | 0.9190.004 | – | – | – | – |

| GAE (Kipf and Welling 2016) | 0.8950.165 | 0.8870.084 | 0.9570.012 | 0.6890.384 | 0.7361.090 | 0.7531.297 | 0.820 |

| VGAE (Kipf and Welling 2016) | 0.8520.493 | 0.8100.339 | 0.9290.134 | 0.6690.866 | 0.7830.401 | 0.7670.557 | 0.802 |

| ARVGE (Pan et al. 2018) | 0.9130.079 | 0.8780.177 | 0.9650.015 | 0.7110.377 | 0.7890.501 | 0.7650.468 | 0.837 |

| DGI (Veličković et al. 2018b) | 0.8980.080 | 0.9550.100 | 0.9120.060 | – | – | – | – |

| G-VAE (Grover et al. 2019) | 0.9470.011 | 0.9730.006 | 0.9740.004 | – | – | – | – |

| GNAE (Ahn and Kim 2021) | 0.9410.063 | 0.9690.022 | 0.9540.019 | 0.7820.829 | 0.7291.083 | 0.7511.067 | 0.854 |

| VGNAE (Ahn and Kim 2021) | 0.8920.067 | 0.9550.055 | 0.8970.040 | 0.7030.120 | 0.7330.573 | 0.7890.302 | 0.828 |

| GIC (Mavromatis et al. 2021) | 0.9350.060 | 0.9700.050 | 0.9370.030 | – | – | – | – |

| LINKX (Lim et al. 2021a) | 0.9340.030 | 0.9350.050 | – | 0.8010.380 | – | 0.7580.470 | 0.857 |

| DisenLink (Zhou et al. 2022) | 0.9710.040 | 0.9830.030 | – | 0.8440.190 | – | 0.8070.400 | 0.901 |

| DGAE (Fu et al. 2023) | 0.9580.044 | 0.9720.034 | 0.9780.012 | 0.7570.586 | 0.6811.207 | 0.6831.279 | 0.838 |

| VDGAE (Fu et al. 2023) | 0.9590.042 | 0.9780.030 | 0.9700.012 | 0.8500.478 | 0.7610.475 | 0.8130.849 | 0.889 |

| ClassContrast | 0.9670.047 | 0.9520.059 | 0.9800.012 | 0.7960.338 | 0.8140.413 | 0.8310.393 | 0.905 |

| ClassContrast Spatial-1 Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.147 | 0.146 | 0.154 | 0.213 | 0.340 | |

| 0.132 | 0.162 | 0.173 | 0.221 | 0.312 | |

| 0.104 | 0.156 | 0.194 | 0.233 | 0.314 | |

| 0.106 | 0.146 | 0.176 | 0.252 | 0.320 | |

| 0.106 | 0.139 | 0.178 | 0.236 | 0.341 | |

| ClassContrast Spatial-2 Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.167 | 0.170 | 0.197 | 0.229 | 0.236 | |

| 0.133 | 0.192 | 0.200 | 0.229 | 0.247 | |

| 0.121 | 0.168 | 0.236 | 0.226 | 0.250 | |

| 0.119 | 0.158 | 0.197 | 0.261 | 0.266 | |

| 0.118 | 0.152 | 0.193 | 0.230 | 0.307 | |

| ClassContrast Domain Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.174 | 0.187 | 0.203 | 0.212 | 0.223 | |

| 0.164 | 0.194 | 0.205 | 0.214 | 0.224 | |

| 0.165 | 0.188 | 0.210 | 0.214 | 0.223 | |

| 0.162 | 0.187 | 0.205 | 0.220 | 0.226 | |

| 0.161 | 0.186 | 0.205 | 0.216 | 0.231 | |

| Features | CORA | CITESEER | PUBMED | TEXAS | CORNELL | WISC. | CHAM. | SQUIR. |

|---|---|---|---|---|---|---|---|---|

| Spatial Only | 80.911.64 | 60.634.35 | 85.860.32 | 67.296.29 | 49.454.23 | 57.846.14 | 63.703.05 | 44.672.55 |

| Context Only | 83.131.94 | 90.441.27 | 89.700.50 | 91.354.37 | 92.714.23 | 94.712.62 | 78.811.33 | 44.081.58 |

| Both (CC) | 88.651.25 | 86.861.91 | 91.000.45 | 90.544.80 | 90.812.90 | 93.332.95 | 84.081.55 | 54.651.27 |

Ablation Study.

To evaluate the efficacy of our feature vectors, we conducted ablation studies for the node classification task. We employed three submodels: utilizing (1) spatial embeddings, (2) domain embeddings, and (3) both (ClassContrast embeddings). As given in Table 7, we observe that in the CORA, CHAMELEON, SQUIRREL, and PUBMED datasets, using only spatial or domain feature embeddings individually yields satisfactory performance. However, their combination significantly enhances performance in most cases. SQUIRREL () and CHAMELEON () experience a significant increase in accuracy in the combined setting. We note that when the ablation study has a large accuracy gap between the spatial and domain only models for a dataset (e.g., in TEXAS), the accuracies of the SOTA models in Table 2 show huge accuracy deviations for the dataset as well (e.g., TEXAS accuracies range from to ). A possible explanation is that models might individually capture either spatial or contextual information. Consequently, they may be unable to combine these two sets of features to counterbalance the insufficient information present in one of them, leading to diminished accuracy scores. In contrast, the ablation study offers evidence that the ClassContrast approach is resilient to this limitation and, experiences smaller accuracy losses (e.g., for TEXAS in Table 7).

5.2 Link Prediction Results

In this part, we show the utilization of ClassContrast Embeddings in the link prediction task. We utilize the common setting described in (Zhou et al. 2022) where the datasets were partitioned into training, validation, and test sets with a ratio of and respectively.

The model architecture for the link prediction problem consists of three MLP layers. In this framework, for each considered node pairs , the normalized CC encodings are term-wise multiplied, , and feed into MLP. We configure the model with a hidden neuron dimension of 16, a learning rate of 0.001, and train it over 100 epochs with a batch size of 128.

Table 5 reports the prediction results. We report the baselines from (Fu et al. 2023) and (Zhou et al. 2022), which use our experiment settings. Out of three homophilic and three heterophilic datasets, ClassContrast outperforms existing models in three datasets, and gets the second best result in one. On the six datasets, ClassContrast reaches the highest mean AUC of 0.905.

Limitations. The effectiveness of ClassContrast heavily depends on the quality and relevance of the landmarks used to define the contextual embeddings. If these are not representative of the actual data distributions, the model’s performance could be adversely affected. Moreover, the computational overhead associated with generating and processing these enhanced embeddings might be prohibitive for extremely large graphs or datasets with high-dimensional features.

6 Conclusion

In this work, we introduced ClassContrast, a novel method that integrates domain and spatial information from graphs. Our results show that ClassContrast effectively addresses the predictive limitations of using either spatial or domain information alone, yielding significant improvements when both feature sets are informative. Our computationally efficient model has outperformed or achieved highly competitive results compared to state-of-the-art GNNs across multiple graph tasks, including small/large and homophilic/heterophilic settings. For future work, we plan to extend the ClassContrast approach to effectively integrate with GNNs and to apply it to temporal graphs, incorporating both temporal information and node characteristics.

References

- Abu-El-Haija et al. (2019) Abu-El-Haija, S.; Perozzi, B.; Kapoor, A.; Alipourfard, N.; Lerman, K.; Harutyunyan, H.; Ver Steeg, G.; and Galstyan, A. 2019. Mixhop: Higher-order graph convolutional architectures via sparsified neighborhood mixing. In international conference on machine learning, 21–29. PMLR.

- Ahn and Kim (2021) Ahn, S. J.; and Kim, M. 2021. Variational graph normalized autoencoders. In Proceedings of the 30th ACM international conference on information & knowledge management, 2827–2831.

- Arnold, Nallapati, and Cohen (2007) Arnold, A.; Nallapati, R.; and Cohen, W. W. 2007. A comparative study of methods for transductive transfer learning. In Seventh IEEE international conference on data mining workshops (ICDMW 2007), 77–82. IEEE.

- Barceló et al. (2020) Barceló, P.; Kostylev, E. V.; Monet, M.; Pérez, J.; Reutter, J.; and Silva, J.-P. 2020. The logical expressiveness of graph neural networks. In 8th International Conference on Learning Representations (ICLR 2020).

- Bodnar et al. (2022) Bodnar, C.; Di Giovanni, F.; Chamberlain, B.; Lio, P.; and Bronstein, M. 2022. Neural sheaf diffusion: A topological perspective on heterophily and oversmoothing in gnns. Advances in Neural Information Processing Systems, 35: 18527–18541.

- Cartwright and Harary (1956) Cartwright, D.; and Harary, F. 1956. Structural balance: a generalization of Heider’s theory. Psychological review, 63(5): 277.

- Chen, Ma, and Xiao (2018) Chen, J.; Ma, T.; and Xiao, C. 2018. FastGCN: Fast Learning with Graph Convolutional Networks via Importance Sampling. In International Conference on Learning Representations.

- Chen et al. (2020) Chen, M.; Wei, Z.; Huang, Z.; Ding, B.; and Li, Y. 2020. Simple and deep graph convolutional networks. In International conference on machine learning, 1725–1735. PMLR.

- Chien et al. (2020) Chien, E.; Peng, J.; Li, P.; and Milenkovic, O. 2020. Adaptive Universal Generalized PageRank Graph Neural Network. In International Conference on Learning Representations.

- Ciano et al. (2021) Ciano, G.; Rossi, A.; Bianchini, M.; and Scarselli, F. 2021. On inductive–transductive learning with graph neural networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 44(2): 758–769.

- Fu et al. (2023) Fu, J.; Zhang, X.; Li, S.; and Chen, D. 2023. Variational Disentangled Graph Auto-Encoders for Link Prediction. arXiv preprint arXiv:2306.11315.

- Geng et al. (2021) Geng, Z.; Zhang, X.-Y.; Bai, S.; Wang, Y.; and Lin, Z. 2021. On training implicit models. NeurIPS, 34: 24247–24260.

- Granovetter (1973) Granovetter, M. S. 1973. The strength of weak ties. American journal of sociology, 78(6): 1360–1380.

- Grover et al. (2016) Grover, A.; et al. 2016. node2vec: Scalable feature learning for networks. In Proceedings of the 22nd ACM SIGKDD international conference on Knowledge discovery and data mining, 855–864.

- Grover et al. (2019) Grover, A.; et al. 2019. Graphite: Iterative generative modeling of graphs. In International conference on machine learning, 2434–2444. PMLR.

- Hamilton et al. (2017) Hamilton, W.; et al. 2017. Inductive representation learning on large graphs. NeurIPS, 30.

- Haykin (1998) Haykin, S. 1998. Neural networks: a comprehensive foundation. Prentice Hall PTR.

- Heider (1946) Heider, F. 1946. Attitudes and cognitive organization. The Journal of psychology, 21(1): 107–112.

- Hu et al. (2020) Hu, W.; Fey, M.; Zitnik, M.; Dong, Y.; Ren, H.; Liu, B.; Catasta, M.; and Leskovec, J. 2020. Open graph benchmark: Datasets for machine learning on graphs. NeurIPS, 33: 22118–22133.

- Huang et al. (2018) Huang, W.; Zhang, T.; Rong, Y.; and Huang, J. 2018. Adaptive sampling towards fast graph representation learning. NeurIPS, 31.

- Jin et al. (2022) Jin, D.; Wang, R.; Ge, M.; He, D.; Li, X.; Lin, W.; and Zhang, W. 2022. RAW-GNN: RAndom Walk Aggregation based Graph Neural Network. In 31st International Joint Conference on Artificial Intelligence, IJCAI 2022, 2108–2114. International Joint Conferences on Artificial Intelligence.

- Kipf and Welling (2016) Kipf, T. N.; and Welling, M. 2016. Variational graph auto-encoders. arXiv preprint arXiv:1611.07308.

- Kipf and Welling (2017) Kipf, T. N.; and Welling, M. 2017. Semi-supervised classification with graph convolutional networks. ICLR.

- Leskovec, Huttenlocher, and Kleinberg (2010) Leskovec, J.; Huttenlocher, D.; and Kleinberg, J. 2010. Signed networks in social media. In Proceedings of the SIGCHI conference on human factors in computing systems, 1361–1370.

- Li et al. (2021) Li, G.; Müller, M.; Ghanem, B.; and Koltun, V. 2021. Training graph neural networks with 1000 layers. In International conference on machine learning, 6437–6449. PMLR.

- Li et al. (2019) Li, G.; Muller, M.; Thabet, A.; and Ghanem, B. 2019. Deepgcns: Can gcns go as deep as cnns? In Proceedings of the IEEE/CVF international conference on computer vision, 9267–9276.

- Li et al. (2022) Li, X.; Zhu, R.; Cheng, Y.; Shan, C.; Luo, S.; Li, D.; and Qian, W. 2022. Finding global homophily in graph neural networks when meeting heterophily. In International Conference on Machine Learning, 13242–13256. PMLR.

- Liang et al. (2023) Liang, L.; Hu, X.; Xu, Z.; Song, Z.; and King, I. 2023. Predicting global label relationship matrix for graph neural networks under heterophily. Advances in Neural Information Processing Systems, 36.

- Lim et al. (2021a) Lim, D.; Hohne, F.; Li, X.; Huang, S. L.; Gupta, V.; Bhalerao, O.; and Lim, S. N. 2021a. Large scale learning on non-homophilous graphs: New benchmarks and strong simple methods. NeurIPS, 34: 20887–20902.

- Lim et al. (2021b) Lim, D.; Li, X.; Hohne, F.; and Lim, S.-N. 2021b. New benchmarks for learning on non-homophilous graphs. arXiv preprint arXiv:2104.01404.

- Liu et al. (2022) Liu, J.; Hooi, B.; Kawaguchi, K.; and Xiao, X. 2022. Mgnni: Multiscale graph neural networks with implicit layers. NeurIPS, 35: 21358–21370.

- Liu, Gao, and Ji (2020) Liu, M.; Gao, H.; and Ji, S. 2020. Towards deeper graph neural networks. In KDD, 338–348.

- Liu, Wang, and Ji (2021) Liu, M.; Wang, Z.; and Ji, S. 2021. Non-local graph neural networks. IEEE transactions on pattern analysis and machine intelligence, 44(12): 10270–10276.

- Luan et al. (2022) Luan, S.; Hua, C.; Lu, Q.; Zhu, J.; Zhao, M.; Zhang, S.; Chang, X.-W.; and Precup, D. 2022. Revisiting heterophily for graph neural networks. NeurIPS, 35: 1362–1375.

- Luan et al. (2023) Luan, S.; Hua, C.; Xu, M.; Lu, Q.; Zhu, J.; Chang, X.-W.; Fu, J.; Leskovec, J.; and Precup, D. 2023. When do graph neural networks help with node classification: Investigating the homophily principle on node distinguishability. NeurIPS.

- Mavromatis et al. (2021) Mavromatis, C.; et al. 2021. Graph infoclust: Maximizing coarse-grain mutual information in graphs. In Pacific-Asia Conference on Knowledge Discovery and Data Mining, 541–553. Springer.

- Mikolov et al. (2013a) Mikolov, T.; Chen, K.; Corrado, G.; and Dean, J. 2013a. Efficient estimation of word representations in vector space. arXiv preprint arXiv:1301.3781.

- Mikolov et al. (2013b) Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G. S.; and Dean, J. 2013b. Distributed representations of words and phrases and their compositionality. NeurIPS, 26.

- Mislove et al. (2007) Mislove, A.; Marcon, M.; Gummadi, K. P.; Druschel, P.; and Bhattacharjee, B. 2007. Measurement and analysis of online social networks. In Proceedings of the 7th ACM SIGCOMM conference on Internet measurement, 29–42.

- Morris et al. (2019) Morris, C.; Ritzert, M.; Fey, M.; Hamilton, W. L.; Lenssen, J. E.; Rattan, G.; and Grohe, M. 2019. Weisfeiler and leman go neural: Higher-order graph neural networks. In Proceedings of the AAAI conference on artificial intelligence, volume 33-01, 4602–4609.

- Niwattanakul et al. (2013) Niwattanakul, S.; Singthongchai, J.; Naenudorn, E.; and Wanapu, S. 2013. Using of Jaccard coefficient for keywords similarity. In Proceedings of the international multiconference of engineers and computer scientists, volume 1, 380–384.

- Oono and Suzuki (2020) Oono, K.; and Suzuki, T. 2020. Graph Neural Networks Exponentially Lose Expressive Power for Node Classification. In International Conference on Learning Representations.

- Pan et al. (2018) Pan, S.; Hu, R.; Long, G.; Jiang, J.; Yao, L.; and Zhang, C. 2018. Adversarially regularized graph autoencoder for graph embedding. arXiv preprint arXiv:1802.04407.

- Pei et al. (2019) Pei, H.; Wei, B.; Chang, K. C.-C.; Lei, Y.; and Yang, B. 2019. Geom-GCN: Geometric Graph Convolutional Networks. In International Conference on Learning Representations.

- Platonov et al. (2023) Platonov, O.; Kuznedelev, D.; Babenko, A.; and Prokhorenkova, L. 2023. Characterizing graph datasets for node classification: Homophily-heterophily dichotomy and beyond. Advances in Neural Information Processing Systems, 36.

- Plotkin and Onuchic (2002) Plotkin, S. S.; and Onuchic, J. N. 2002. Understanding protein folding with energy landscape theory part I: basic concepts. Quarterly reviews of biophysics, 35(2): 111–167.

- Sen et al. (2008) Sen, P.; Namata, G.; Bilgic, M.; Getoor, L.; Galligher, B.; and Eliassi-Rad, T. 2008. Collective classification in network data. AI Magazine, 29(3): 93–93.

- Shervashidze et al. (2011) Shervashidze, N.; Schweitzer, P.; Van Leeuwen, E. J.; Mehlhorn, K.; and Borgwardt, K. M. 2011. Weisfeiler-lehman graph kernels. JMLR, 12(9).

- Shi et al. (2020) Shi, Y.; Huang, Z.; Feng, S.; Zhong, H.; Wang, W.; and Sun, Y. 2020. Masked label prediction: Unified message passing model for semi-supervised classification. arXiv preprint arXiv:2009.03509.

- Suresh et al. (2021) Suresh, S.; Budde, V.; Neville, J.; Li, P.; and Ma, J. 2021. Breaking the limit of graph neural networks by improving the assortativity of graphs with local mixing patterns. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, 1541–1551.

- Tang et al. (2008) Tang, J.; Zhang, J.; Yao, L.; Li, J.; Zhang, L.; and Su, Z. 2008. Arnetminer: extraction and mining of academic social networks. In Proceedings of the 14th ACM SIGKDD international conference on Knowledge discovery and data mining, 990–998.

- Topping et al. (2021) Topping, J.; Di Giovanni, F.; Chamberlain, B. P.; Dong, X.; and Bronstein, M. M. 2021. Understanding over-squashing and bottlenecks on graphs via curvature. arXiv preprint arXiv:2111.14522.

- Veličković et al. (2018a) Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; and Bengio, Y. 2018a. Graph attention networks. ICLR.

- Veličković et al. (2018b) Veličković, P.; Fedus, W.; Hamilton, W. L.; Liò, P.; Bengio, Y.; and Hjelm, R. D. 2018b. Deep graph infomax. arXiv preprint arXiv:1809.10341.

- Wales (2002) Wales, D. J. 2002. Energy landscapes. In Atomic clusters and nanoparticles. Agregats atomiques et nanoparticules: Les Houches Session LXXIII 2–28 July 2000, 437–507. Springer.

- Wales et al. (1998) Wales, D. J.; et al. 1998. Archetypal energy landscapes. Nature, 394(6695): 758–760.

- Wang et al. (2020) Wang, K.; Shen, Z.; Huang, C.; Wu, C.-H.; Dong, Y.; and Kanakia, A. 2020. Microsoft academic graph: When experts are not enough. Quantitative Science Studies, 1(1): 396–413.

- Xiao et al. (2022) Xiao, S.; Wang, S.; Dai, Y.; and Guo, W. 2022. Graph neural networks in node classification: survey and evaluation. Machine Vision and Applications, 33: 1–19.

- Xu et al. (2019) Xu, K.; Hu, W.; Leskovec, J.; and Jegelka, S. 2019. How Powerful are Graph Neural Networks? In International Conference on Learning Representations.

- Xu et al. (2023) Xu, Z.; Chen, Y.; Zhou, Q.; Wu, Y.; Pan, M.; Yang, H.; and Tong, H. 2023. Node classification beyond homophily: Towards a general solution. In Proceedings of the 29th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, 2862–2873.

- Yan et al. (2022) Yan, Y.; Hashemi, M.; Swersky, K.; Yang, Y.; and Koutra, D. 2022. Two sides of the same coin: Heterophily and oversmoothing in graph convolutional neural networks. In 2022 IEEE International Conference on Data Mining (ICDM), 1287–1292. IEEE.

- Yu et al. (2022a) Yu, L.; Sun, L.; Du, B.; Liu, C.; Lv, W.; and Xiong, H. 2022a. Heterogeneous graph representation learning with relation awareness. IEEE Transactions on Knowledge and Data Engineering.

- Yu et al. (2022b) Yu, L.; Sun, L.; Du, B.; Zhu, T.; and Lv, W. 2022b. Label-Enhanced Graph Neural Network for Semi-supervised Node Classification. IEEE Transactions on Knowledge and Data Engineering.

- Zhang et al. (2022) Zhang, L.; Song, H.; Aletras, N.; and Lu, H. 2022. Node-feature convolution for graph convolutional networks. Pattern Recognition, 128: 108661.

- Zheng et al. (2022) Zheng, X.; Liu, Y.; Pan, S.; Zhang, M.; Jin, D.; and Yu, P. S. 2022. Graph neural networks for graphs with heterophily: A survey. arXiv preprint arXiv:2202.07082.

- Zhou et al. (2022) Zhou, S.; Guo, Z.; Aggarwal, C.; Zhang, X.; and Wang, S. 2022. Link prediction on heterophilic graphs via disentangled representation learning. arXiv preprint arXiv:2208.01820.

- Zhu et al. (2020) Zhu, J.; Yan, Y.; Zhao, L.; Heimann, M.; Akoglu, L.; and Koutra, D. 2020. Beyond homophily in graph neural networks: Current limitations and effective designs. NeurIPS, 33: 7793–7804.

Appendix

Appendix A Integrating ClassContrast with GNNs

While our primary goal is to develop an approach that synergistically combines spatial and contextual information, we also conducted additional experiments to evaluate how well the ClassContrast features integrate with existing GNN models. In particular, we tested the three classical GNN models, namely GCN, GraphSAGE and GAT, as well as the recent model LINKX. We replaced the node features as initial node embeddings in original GNN models with our ClassContrast features. We implemented a two-layer GNN framework using the Adam optimizer with an initial learning rate of 0.05. The hyperparameters include a dropout rate of 0.5, a weight decay of 5e-4, and 32 hidden channels. The results presented in Figure 3 and Table 4 indicate a noteworthy improvement in GNN performance when utilizing ClassContrast vectors. This enhancement is attributed to the effective embeddings provided by ClassContrast vectors. Furthermore, the observed accuracy gap remains stable. In our future endeavors, we plan to delve deeper into this direction for a more comprehensive exploration.

Appendix B Heterophily and ClassContrast

B.1 Recent Homophily Metrics

Until recently, the prevailing homophily metrics were node homophily (Pei et al. 2019) and edge homophily (Abu-El-Haija et al. 2019; Zhu et al. 2020). Node homophily simply computes, for each node, the proportion of its neighbors that belong to the same class, and averages across all nodes, while edge homophily measures the proportion of edges connecting nodes of the same class compared to all edges in the network. In the past few years, to study heterophily phenomena in graph representation learning, several new homophily metrics were introduced, e.g., class homophily (Lim et al. 2021b), generalized edge homophily (Jin et al. 2022) and aggregation homophily (Luan et al. 2022), adjusted homophily (Platonov et al. 2023) and label informativeness (Platonov et al. 2023). In Table 8, we give these metrics for our datasets. The details of these metrics can be found in (Luan et al. 2023).

| Metric | CORA | CITES. | PUBMED | TEXAS | CORNELL | WISC. | CHAM. | SQUIR. |

|---|---|---|---|---|---|---|---|---|

| -spat-1 | 0.8129 | 0.6861 | 0.7766 | 0.1079 | 0.1844 | 0.2125 | 0.2549 | 0.2564 |

| -context | 0.1702 | 0.1949 | 0.3245 | 0.2352 | 0.2409 | 0.2449 | 0.2564 | 0.2057 |

| 0.8252 | 0.7175 | 0.7924 | 0.3855 | 0.1498 | 0.0968 | 0.2470 | 0.2156 | |

| 0.8100 | 0.7362 | 0.8024 | 0.5669 | 0.4480 | 0.4106 | 0.2795 | 0.2416 | |

| 0.7657 | 0.6270 | 0.6641 | 0.0468 | 0.0941 | 0.0013 | 0.0620 | 0.0254 | |

| 0.9904 | 0.9826 | 0.9432 | 0.8032 | 0.7768 | 0.6940 | 0.6100 | 0.3566 | |

| 0.1700 | 0.1900 | 0.2700 | 0.3100 | 0.3400 | 0.3500 | 0.0152 | 0.0157 | |

| 0.8178 | 0.7588 | 0.7431 | 0.1889 | 0.0826 | 0.0258 | 0.0663 | 0.0196 | |

| LI | 0.5904 | 0.4508 | 0.4093 | 0.0169 | 0.1311 | 0.1923 | 0.0480 | 0.0015 |

| ClassContrast Spatial-1 Homophily Matrix | |||||||

|---|---|---|---|---|---|---|---|

| 0.743 | 0.029 | 0.014 | 0.083 | 0.050 | 0.037 | 0.043 | |

| 0.040 | 0.769 | 0.062 | 0.080 | 0.020 | 0.028 | 0.002 | |

| 0.010 | 0.025 | 0.917 | 0.032 | 0.001 | 0.014 | 0.001 | |

| 0.055 | 0.020 | 0.016 | 0.839 | 0.051 | 0.015 | 0.004 | |

| 0.058 | 0.014 | 0.002 | 0.064 | 0.849 | 0.011 | 0.003 | |

| 0.058 | 0.017 | 0.030 | 0.051 | 0.018 | 0.786 | 0.040 | |

| 0.113 | 0.001 | 0.003 | 0.022 | 0.006 | 0.067 | 0.788 | |

| ClassContrast Context-S Homophily Matrix | |||||||

|---|---|---|---|---|---|---|---|

| 0.206 | 0.143 | 0.126 | 0.111 | 0.124 | 0.143 | 0.147 | |

| 0.123 | 0.236 | 0.146 | 0.121 | 0.101 | 0.147 | 0.126 | |

| 0.109 | 0.171 | 0.253 | 0.107 | 0.088 | 0.136 | 0.137 | |

| 0.122 | 0.170 | 0.168 | 0.159 | 0.130 | 0.134 | 0.118 | |

| 0.130 | 0.154 | 0.118 | 0.110 | 0.240 | 0.137 | 0.111 | |

| 0.112 | 0.148 | 0.135 | 0.103 | 0.078 | 0.283 | 0.142 | |

| 0.150 | 0.146 | 0.123 | 0.093 | 0.097 | 0.154 | 0.236 | |

| ClassContrast Context-I Homophily Matrix | |||||||

|---|---|---|---|---|---|---|---|

| 0.159 | 0.134 | 0.142 | 0.154 | 0.143 | 0.136 | 0.132 | |

| 0.142 | 0.159 | 0.146 | 0.154 | 0.139 | 0.138 | 0.122 | |

| 0.136 | 0.140 | 0.162 | 0.156 | 0.140 | 0.138 | 0.127 | |

| 0.145 | 0.136 | 0.146 | 0.168 | 0.151 | 0.133 | 0.122 | |

| 0.144 | 0.131 | 0.144 | 0.159 | 0.167 | 0.133 | 0.124 | |

| 0.142 | 0.132 | 0.145 | 0.149 | 0.145 | 0.158 | 0.129 | |

| 0.142 | 0.126 | 0.142 | 0.152 | 0.141 | 0.138 | 0.159 | |

| ClassContrast Spatial-1 Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.323 | 0.000 | 0.000 | 0.323 | 0.323 | |

| 0.001 | 0.219 | 0.595 | 0.185 | 0.001 | |

| 0.000 | 0.547 | 0.225 | 0.145 | 0.084 | |

| 0.044 | 0.268 | 0.468 | 0.071 | 0.150 | |

| 0.149 | 0.039 | 0.397 | 0.200 | 0.215 | |

| ClassContrast Spatial-2 Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.444 | 0.278 | 0.111 | 0.111 | 0.056 | |

| 0.001 | 0.439 | 0.438 | 0.106 | 0.016 | |

| 0.011 | 0.351 | 0.481 | 0.111 | 0.046 | |

| 0.029 | 0.238 | 0.485 | 0.110 | 0.138 | |

| 0.149 | 0.084 | 0.374 | 0.136 | 0.258 | |

| ClassContrast Domain Homophily Matrix | |||||

|---|---|---|---|---|---|

| 0.221 | 0.198 | 0.207 | 0.192 | 0.181 | |

| 0.148 | 0.247 | 0.223 | 0.201 | 0.181 | |

| 0.174 | 0.205 | 0.228 | 0.204 | 0.189 | |

| 0.145 | 0.203 | 0.217 | 0.237 | 0.199 | |

| 0.149 | 0.204 | 0.209 | 0.209 | 0.230 | |

where is the local homophily value for node ; ; is the class-wise homophily metric (Lim et al. 2021b); takes the average over of a given multiset of values or variables and is the post-aggregation node similarity matrix; , .

In Table 9 below, we give ClassContrast Homophily matrices which provides detailed insights on the class interactions in CORA dataset. In particular, Spatial-1 matrix interprets as for any class, the nodes likely to form a link with same class node in their one neighborhood. In the following Contextual-Selective and Contextual-Inclusive Homophily matrices, independent of graph distance, we see the positions of attribute vectors similarity to the chosen class landmarks.

B.2 ClassContrast Homophily Matrices

We provide further homophily matrices for one homophilic and one heterophilic graphs. In Table 9, for CORA dataset, we observe in CC Spatial-1, Context-S and Context-I Homophily matrices show the strong homophily behavior in both spatial and contextual aspects. In Spatial-1 matrix the entry represents how likely the nodes in to connect to nodes in among their -neighborhood. The very high numbers in the diagonal shows that most classes likely to connect with their own class, as CORA’s node homophily ratio (0.83) suggest. From the matrix, we have finer information that the class is very highly homophilic. The domain matrices represents the average similarity/closeness to class landmarks. We observe that in both domain matrices, the node feature vectors likely to land close to their own class landmark, and ClassContrast vectors captures this crucial information.

In Table 10, for WISCONSIN dataset, spatial-1 and Spatial-2 matrices show the irregular behavior as the WISCONSIN node homophily ratio (0.09) suggest. The nodes are unlikely to connect their adjacent nodes in the same class. Even in their two neighborhoods, they don’t have many of their fellow classmate for and . However, while the other three classes are not connecting their own classmate, they have several common neighbors. Finally, the domain homophily matrix again shows that while they are not very close in the graph, they all share common interests, as every node’s feature vector lands close to their classmates’.

While different homophily ratios are summarizing crucial information about node tendencies, we observe that our matrices are giving much finer and easily intepretable information no class behaviors.

B.3 Proof of the Theorem and New Homophily Metrics

In Section 4.4, we have defined homophily and discussed the relation between ClassContrast vectors and the homophily notion. Here we give details how to generalize this idea to give finer homophily notions for graphs. First, we give the proof of Theorem 4.7.

Theorem B.1.

For an undirected graph , let be the spatial vector defined in Appendix B. Let be the vector where the entry corresponding to class of is set to . Then,

Proof.

For an undirected graph with node class assignment function , where . Let . Then, by setting , we get a new vector

-norm of is . Similarly, . Hence, we have . Notice that by definition, , the number of neighbors in the same class with . Similarly, . Hence,

| (1) |

As , we have as

where the last equality follows by Equation 1. The proof follows. ∎

This perspective inspires different ways to generalize the homophily concept by using ClassContrast vectors. Notice that in ClassContrast matrices above, we employed a classwise grouping to measure class interactions. If we do not use any grouping for the nodes, we get natural generalizations of existing homophily ratios. First, we define higher homophily by using the ratio of the number of nodes in the -neighborhood with the same class to .

Definition B.2 (Higher Homophily).

Given with representing node classes. Let be the number of nodes in in the same class with . Then, homophily ratio of is defined as

By applying similar ideas to the proof of Theorem 4.7, we obtain the following result.

Theorem B.3.

For an undirected graph , let be the spatial vector defined above. Let be the vector where the entry corresponding to the class of is set to . Then,

While the above notions represent structural homophily, we introduce another homophily by comparing the contextual vectors of neighboring nodes with the central node’s.

Definition B.4 (Contextual Homophily).

Given with representing node classes. Let be the contextual vector as defined in Section 4.2. Let be the vector where the entry corresponding to the class of is set to .Then, define the contextual homophily of as

Similar homophily metrics, as introduced in (Luan et al. 2023; Lim et al. 2021b; Zhu et al. 2020; Jin et al. 2022), were employed to investigate the concept of heterophily in graph representation learning. In particular, our ClassContrast features inherently encompass this information, enabling the ML classifier to adapt accordingly. This explains the outstanding performance of ClassContrast in both homophilic and heterophilic settings for node classification and link prediction.

Appendix C Generalizations of ClassContrast

C.1 Inductive and Transductive Settings

Inductive Setting. In the inductive learning framework, a model is trained by using the training data while the test data, is completely hidden during the training time. This means that no information about test nodes (e.g., edges between training and test nodes) is provided during the training stage. The learning procedure aims to minimize a suitable loss function to capture the statistical distribution of the training data. Once an inductive model has undergone training, it can be utilized to make predictions on new (unseen) data, thereby determining the labels for unlabeled nodes.

Transductive Setting. In the transductive learning framework, which closely aligns with semi-supervised learning, both the training data and the test data can be simultaneously leveraged to capitalize on their interconnectedness. This interrelationship can be employed either during the training phase, the prediction phase, or both. Specifically, in the training stage, the information about and its position in the graph is known, while its label remains concealed. Consequently, the model is trained with explicit awareness of the nodes it will be evaluated on after the training process. This can serve as a valuable asset for the model, enabling it to establish a sound decision function by exploiting the characteristics observed in .

To clarify the differences between the inductive and transductive settings in graph-based learning, consider a given graph , with datasets and . In the inductive setting, all test nodes and their connected edges are removed to create the training subgraph . The model is trained exclusively on and only gains access to the complete graph during the testing stage. Conversely, in the transductive learning approach, the model has access to the entire graph during training; however, the labels of the test nodes remain hidden throughout this process. It is noteworthy that any dataset configured for transductive learning can be adapted to the inductive setting by excluding test nodes and their connecting edges during the training phase. However, converting from the inductive to the transductive setting is not generally feasible. For further details, see the references (Ciano et al. 2021; Arnold, Nallapati, and Cohen 2007).

C.2 ClassContrast for Transductive Setting and Iterated Predictions

So far, we outlined our ClassContrast vectors for simplicity in inductive setting. To adapt to the transductive setting, we make adjustments without altering contextual vectors. In transductive setting, test node labels are hidden during training, but connection information to training nodes is available. We introduce a new "unknown" class for test nodes with unknown labels, considering them as neighbors to training nodes. Each node is represented by a dimensional vector , where is the count of neighboring test nodes (unknown labels) of node , and is the count of neighboring training nodes in class for . Similar representations are defined for , , and . The superscript indicates no iterations have occurred yet.

Iterated Predictions: Recall that the ultimate goal in the node classification problem is to predict the labels of new (test) nodes. After we obtain all ClassContrast vectors for training and test nodes above, we let our ML model make a prediction for each test node . Let be our predictions. Hence, we have a label for each node in our graph .

While the original spatial vector cannot use any class information for the test nodes, in the next step, we remedy this by using our class predictions for test nodes. In particular, by using the predictions , we define a new (improved) spatial vector where we use predictions for neighboring test nodes. Notice that this is no longer a -dimensional vector as there is no unknown class anymore. Similarly, we update all spatial vectors, train our ML model with these new node labels, and make a new prediction for test nodes. Then, we get new label predictions . By using predictions , we define the next iteration and train our model with these updated vectors. Again, we get new predictions . In our experiments, we observe that or iterations ( or ) improve the performance significantly, but further iterations do not, in the transductive setting.

C.3 Spatial Embeddings on Directed, Weighted Graphs

Directed Graphs. When is directed, to obtain finer information about node neighborhoods, we produce two different embeddings of size , and for the k-hop neighborhood:

where is the count of k-hop neighbors incoming to belonging to class while is the count of neighbors outgoing from belonging to class (See Figure 2(a)).

Weighted Graphs: In weighted graphs, the counts incorporate edge weight information. Specifically, we define the weighted feature vector for node as: where is the sum of the weights of the edges connecting to the -hop neighbors belonging to class . If the weight of an edge is inversely proportional to the similarity between nodes, one can use the sum of the reciprocals of the weights instead: . This approach allows for adjustments in how edge weights influence the calculation of proximity and class association. Additionally, in the context of directed graphs within a weighted graph setting, different vectors can be defined for incoming and outgoing neighborhood connections, such as for incoming edges and for outgoing edges, providing a more detailed representation of node relationships based on directionality and weight.

| CORA | CITESEER | PUBMED | OGBN-ARXIV | OGBN-MAG | TEXAS | CORNELL | WISCONSIN | CHAMELEON | SQUIRREL | |

|---|---|---|---|---|---|---|---|---|---|---|

| Dimension | 42 | 36 | 112 | 120 | 1,524 | 30 | 30 | 30 | 20 | 20 |

Appendix D Details of ClassContrast Embeddings

In all datasets, we basically used the same method to obtain our vectors, however, when the graph type (directed, undirected), or node attribute vector format varies, our methodology naturally adapts the corresponding setting as detailed below. Note that details of OGBN datasets can be found at (Hu et al. 2020) and at Stanford’s Open Graph Benchmark site 111https://ogb.stanford.edu/docs/nodeprop/.

CORA: The CORA dataset is a directed graph of scientific publications classified into one of the classes. Each node represents a paper and comes with a binary () vector of length 1433 indicating the presence/absence of the corresponding word in the paper from a dictionary of 1433 unique words. The task is to predict the subject area of the paper, e.g. Neural Networks, Theory, Case-Based. The directed graph approach is used to extract the attribute vector, , from the CORA dataset. Recall that this is transductive setting, and the first row is an dimensional spatial vector (Section 4.1) where the first entries represent the count of citing (incoming) papers from the corresponding class and the entry is the count of unknown citing paper (Section C.2). The second row is defined similarly using the count of cited (outgoing) papers of the corresponding class. The third and fourth row of the spatial feature vector is obtained similarly using the second neighborhood information of each node for citing and cited paper respectively. For the first iteration , the same setup is followed ignoring the entry of each row because there is no unknown class now.

For the contextual vector , we follow the landmark approach described in Section 4.2. We define the first landmark vector which is a binary word vector of length 1433 such that the entry is if the corresponding word is present in any of binary vectors belonging to class , and the entry is otherwise. Then, each entry of is the count of common words between and for each class, which produces a dimensional contextual vector. Similarly, for the contextual vector , we use a more selective landmark vector is defined as a binary word vector of length 1433 indicating the presence/absence of the corresponding word in at least nodes in the class . Hence, the initial vector is dimensional ( spatial, contextual), in the next iterations, are both -dimensional ( spatial, contextual).

CITESEER: The CITESEER is also a directed graph of scientific publication classified into one of the classes. Each node represents a paper and comes with a binary vector like CORA from a dictionary of 3703 unique words. Here, the aim of the node classification task is to make a prediction about the subject area of the paper. Since the graph properties/structure and node representing word vector is similar to the CORA dataset, the same feature-extracting techniques for both spatial and contextual vectors is followed here. Hence, the initial vector is dimensional ( spatial, contextual), in the next iterations, are both -dimensional ( spatial, contextual).

PUBMED: The PUBMED dataset is a directed graph of scientific publications from the PubMed database pertaining to diabetes classified into one of three classes. Each node represents a publication and is described by a TF/IDF weighted word vector from a dictionary which consists of unique words. Since the graph structure is quite similar to CORA and CITESEER, a similar method is followed to extract the spatial features. So the initial spatial vector is dimensional and it is dimensional for the second iteration. For the contextual vector , Principal component analysis (PCA) is used to reduce the dimension of the given weighted word vector from to . Hence, the initial vector, is dimensional (spatial only) and the vector is dimensional ( spatial, contextual) in the next iteration.

OGBN-ARXIV: The OGBN-ARXIV dataset is a directed graph, representing the citation network between all Computer Science (CS) arXiv papers indexed by MAG (Wang et al. 2020). Each node is an arXiv paper and each directed edge indicates that one paper cites another one. Each paper comes with a 128-dimensional vector obtained by averaging the embeddings of words in its title and abstract. The embeddings of individual words are computed by running the skip-gram model (Mikolov et al. 2013b) over the MAG corpus. We also provide the mapping from MAG paper IDs into the raw texts of titles and abstracts here. In addition, all papers are also associated with the year that the corresponding paper was published. The task is to predict the 40 subject areas of arXiv CS papers, e.g., cs.AI, cs.LG, and cs.OS.

The vector for OGBN-ARXIV is obtained by using our directed graph approach (Section 4.1). The first row of spatial vector is -dimensional, and each entry is the count of citing (incoming) papers from the corresponding class. The second row is and is defined similarly, where each entry is the count of cited (outgoing) papers from the corresponding class. For contextual vector , follow the landmark approach described in Section 4.2, employing just one landmark. Since the vectors are weighted vector, we use Euclidean distance to determine the distance between the landmark and a given node. Hence, for each node has -dimensional spatial, and -dimensional contextual vector, which totals -dimensional ClassContrast vector.

OGBN-MAG: The OGBN-MAG dataset is a heterogeneous network composed of a subset of the Microsoft Academic Graph (MAG) (Wang et al. 2020). It contains four types of entities—papers (736,389 nodes), authors (1,134,649 nodes), institutions (8,740 nodes), and fields of study (59,965 nodes)—as well as four types of directed relations connecting two types of entities—an author is “affiliated with” an institution, an author “writes” a paper, a paper “cites” a paper, and a paper “has a topic of” a field of study. Similar to OGBN-ARXIV, each paper is associated with a 128-dimensional word2vec vector, and all the other types of entities are not associated with input node features. Given the heterogeneous OGBN-MAG data, the task is to predict one of 349 venues (conference or journal) of each paper.

The vector for OGBN-MAG is a bit different than OGBN-ARXIV, as OGBN-MAG is a heterogeneous network. We first collapse the network to a homogeneous network for papers. Similar to OGBN-ARXIV, we obtain -dimensional spatial vectors, i.e., (citing papers), and (cited papers). As another spatial vector from a different level of the heterogeneous network, we use author information as follows. Each author has a natural -dimensional vector where each entry is the number of papers the author published in the corresponding venue. For each paper, we consider the author with the most publications and assign their attribute vector to the paper’s attribute vector. We call it author vector . We construct a similar set of vectors for field of study - another type of node information. Each paper belongs to 1 or more fields of studies (or topics), and for each unique topic, we construct a attribute vector such that denotes the number of papers assigned to venue for the given topic. We then aggregate these topic attribute vectors for each paper as follows: for a given paper with assigned topics , let and append this final aggregate vector to the paper’s attribute vector. For contextual vector , we directly use a -dimensional vector for each node as it is. Hence, is concatenation of spatial vectors , , , , and which totals dimensional vector.

WebKB (TEXAS, CORNELL and WISCONSIN): Carnegie Mellon University collected the WebKB dataset from computer science departments of various universities. Three subsets of this dataset are TEXAS, CORNELL and WISCONSIN. The dataset contains links between web pages, indicating relationships like “is located in” or “is a student of” forming a directed graph structure. Node features are represented as bag-of-words, creating a binary vector for each web page. The classification task involves categorizing nodes into five types: student, project, course, staff, and faculty. Similar to the CORA dataset, these datasets share a directed graph structure and binary vector representation for node features, leading to the use of comparable feature extraction methods for spatial and contextual vectors. Therefore, the initial attribute vector is dimensional, comprising spatial and contextual dimensions. In subsequent iterations, it is -dimensional, with spatial and contextual dimensions each.

Wikipedia Network (CHAMELEON and SQUIRREL): The datasets depict page-page networks focused on specific topics such as chameleons and squirrels. In these networks, nodes represent articles, and edges denote mutual links between them. Node features are derived from several informative nouns found in the corresponding Wikipedia pages. If a feature is present in the feature list, it signifies the occurrence of an informative noun in the text of the Wikipedia article. The objective is to classify the nodes into five categories based on the average monthly traffic of the respective web pages. In the context of the Wikipedia network, each link between articles does not imply a one-way relationship; instead, it signifies a mutual connection between the two articles, making it an undirected graph. Therefore, undirected feature extraction approaches are employed for .

Regarding spatial features, the first row consists of a 6-dimensional vector. The first five entries represent the count of five classes, while the sixth entry represents the count of the unknown class in the 1-hop neighborhood. In the second row, the same procedure is applied for the 2-hop neighborhood. Subsequent iterations follow a similar process, but the entries are ignored because there is no unknown class at this point in the analysis. For contextual features in the Wikipedia network, a similar approach as used for the CORA dataset is employed due to their analogous nature. This approach results in a 10-dimensional vector. Consequently, the initial vector is 22-dimensional, and subsequent iterations reduce it to 20-dimensional.

Appendix E ClassContrast & Structural Balance Theory

In the ClassContrast framework, the concepts of valleys and peaks serve as a metaphor for the dynamic process by which homophilous graph nodes adjust their positions within an embedding space. These adjustments reflect the nodes’ tendency to connect more strongly with others from the same class, thereby descending into the valleys of their community and distancing themselves from the peaks representing other classes. This behavior can be understood through the lens of an energy landscape, where each node’s position represents a state of potential energy: valleys correspond to low-energy, stable states (nodes strongly connected within their community), and peaks correspond to high-energy, unstable states (nodes weakly connected or misaligned with their community).