CircleGAN: Generative Adversarial Learning

across Spherical Circles

Abstract

We present a novel discriminator for GANs that improves realness and diversity of generated samples by learning a structured hypersphere embedding space using spherical circles. The proposed discriminator learns to populate realistic samples around the longest spherical circle, i.e., a great circle, while pushing unrealistic samples toward the poles perpendicular to the great circle. Since longer circles occupy larger area on the hypersphere, they encourage more diversity in representation learning, and vice versa. Discriminating samples based on their corresponding spherical circles can thus naturally induce diversity to generated samples. We also extend the proposed method for conditional settings with class labels by creating a hypersphere for each category and performing class-wise discrimination and update. In experiments, we validate the effectiveness for both unconditional and conditional generation on standard benchmarks, achieving the state of the art.

1 Introduction

Generative Adversarial Networks (GANs) [10] aim at learning to produce high-quality data samples, as observed from a target dataset. To this end, it trains a generator, which synthesizes samples, adversarially against a discriminator, which classifies the samples into real or fake ones; the discriminator’s gradient in backpropagation guides the generator to improve the quality of the generated samples. As the gradient is difficult to stabilize, recent methods [2, 11, 20, 35] have suggested using the Lipschitz continuous space for the discriminator so that its gradient is bounded under some constant with respect to input. In a similar spirit, recent work [24] introduces a hypersphere as an embedding space, which enjoys its boundedness of distances between samples and also their gradients, showing its superior performance against the precedent models.

The most important qualities of the generated samples are realness and diversity. In conventional GAN frameworks including [24], the discriminator can be viewed as evaluating realness based on a prototype representation, i.e., the closer a generated sample is to the prototype of real samples, the more realistic it is evaluated as. The single prototype, however, may not be sufficient for capturing all modes of real samples, and thus recent work [1, 8, 21, 30] attempts to tackle this issue by employing multiple discriminators, i.e., multiple prototypes. But, training with multiple discriminators requires higher memory footprints and computation costs, and also introduces another hyperparameter, i.e., the number of discriminators. Conditional GANs [19, 23] use additional information of class labels to increase the coverage of modes across different categories. However, the class label does not ensure the intra-class diversity [23] while being beneficial to learn representative features of the class. Hence, the problem of mode collapse remains even in conditional models.

In this paper, we address the sample diversity issue by learning a structured hypersphere embedding space for the discriminator in GANs. Our discriminator learns to populate realistic samples around a great circle, which is the largest spherical circle, while pushing unrealistic samples toward the poles perpendicular to the great circle. In doing so, since the longer circles occupy the larger area on the hypersphere, they encourage more diversity in representation learning of realistic samples. As the result, multiple modes of real data distribution are effectively represented to guide the generator in GAN training. We also extend the proposed method for conditional settings with class labels by creating a hypersphere for each category and performing class-wise sample discrimination and update. In experimental evaluation, we validate the effectiveness of our approach for both unconditional and conditional generation tasks on the standard benchmarks, including STL10, CIFAR10, CIFAR100, and TinyImagenet, achieving the state of the art.

2 Related Work

2.1 Generative Adversarial Networks

Previous work for improving GANs concentrates on addressing the difficulty of training. These studies have been conducted in different aspects such as network architectures [11, 14, 15, 25], objective functions [13, 22] and regularization techniques [2, 11, 20, 35], which impose the Lipschitz constraint to the discriminator. SphereGAN [24] has shown that using hypersphere as an embedding space affords the stability in GAN training by the boundedness of distances between samples and their gradients. Our work also adopts a hypersphere embedding space, but proposes a different strategy in structuring and learning the hypersphere, which will be discussed in details.

The most relevant line of GAN research to ours is on the lack of sample diversity. In many cases, GAN objectives can be satisfied with samples of a limited diversity, and no guarantee exists that a model in training reaches to generate diverse samples. To tackle the lack of diversity, a.k.a. mode collapse, several approaches are proposed from different perspectives. Chen et al. [5] and Karras et al. [15] modulate normalization layers using a noise vector that is transformed through a sequence of layers. Yang et al. [32] penalize the mode collapsing behavior directly to the generator by maximizing the gradient norm with respect to the noise vector. Yamaguchi and Koyama [31] regularize the discriminator to have local concavity on the support of the generator function to increase its entropy monotonically at every stage of the training. Liu et al. [18] propose a spectral regularization to prevent spectral collapse when training with the spectral normalization, which is shown to be closely linked to the mode collapse. Our approach is very different from these in combating mode collapse.

2.2 Conditional GANs

The most straightforward way to improve the performance of GANs is to incorporate side information of the samples, typically class labels. Depending on how the class information is used, the models are categorized into two types: projection-based models and classifier-based models.

Projection-based models [19] discriminate samples by projecting them onto two embeddings, meaning ‘real’ and ‘fake’, for the corresponding class and measuring the discrepancy of the projection values. As the network architectures are combined with attention modules [33] and increased to high-capacity [4], these models improve synthesizing large-scale and high-fidelity images, achieving the state-of-the-art performance. However, since they do not learn class embeddings through an explicit classifier, it may not be easy for the methods to learn the class-specific features.

In contrast, classifier-based models [23, 26] use an auxiliary classifier with a discriminator to explicitly learn class-specific features for generation. However, when incorporating the auxiliary classifier, the diversity of generated samples often degrades severely because the generator focuses on generating easily-classifiable samples [9, 34]. To prevent the generator from being over-confident to some samples, Zhou et al. [34] introduces an adversarial loss on the classifier. But, the loss often deteriorates the classifier and hinders the models from scaling up to large datasets (e.g., ImageNet). While Gong et al. [9] propose a scalable model using twin auxiliary classifiers, they require one more classifier to prevent the other from degeneration.

Unlike these methods, we address the mode-collapse issue based on the hypersphere-based discriminators by integrating only a single auxiliary classifier without any adversarial loss.

3 Proposed Approach

In the framework of GANs, our method, CircleGAN, learns a discriminator that projects samples on a hypersphere and then scores them using their corresponding spherical circles of the hypersphere. The overall architecture is illustrated in Fig. 1. The discriminator of CircleGAN is composed of feature extractor and score function : . The key idea is to leverage the geometric characteristics of the hypersphere in scoring the quality of samples.

In the following, we first introduce CircleGAN for unconditional settings and then extend it to conditional settings. We also discuss our method in comparison to recent hypersphere-based method [24].

3.1 CircleGAN

Let samples be drawn from both data distribution and the generator distribution . The discriminator first embeds the samples to and then projects them on a unit hypersphere with a learnable center : . We denote the sets of sample embeddings on the hypersphere by . Given a unit pivotal vector , which is learnable, we can define the set of spherical circles that is perpendicular to . Then, each point (i.e., sample embedding) on the hypersphere is assigned to a corresponding circle , where the function represents an injective mapping from to . As shown in the right of Fig. 1, using the sample embedding and the pivotal vector , we define its projected vector and its rejected vector :

| (1) |

where indicates the inner product between two vectors. In a nutshell, each corresponds to a spherical circle that is identified with . Note that both center and pivotal vectors are learned in training to adapt the hypersphere to the sample embeddings.

Our strategy in learning the discriminator is to populate real samples around the longest circle in , i.e., the great circle, while pushing fake samples toward the shortest circles in , i.e., the top or bottom pole. Since longer circles occupy the larger area on the hypersphere, they may allow more diversity in representation learning, and vice versa. In this sense, discriminating real and fake samples based on their corresponding circles can naturally induce diversity to generated samples.

We propose to measure the realness score for sample embedding based on the proximity of its corresponding circle to the great circle, which is computed by

| (2) | ||||

where the score is normalized with its standard deviation to fix the scale consistently through the course of training and is computed as . On the other hand, we define the diversifiability score for by the radius of corresponding circle which can be quantified by

| (3) | ||||

where is computed as .

Note that the diversifiability score increases along with the realness score. Thus we use the realness score function as the discriminator output so that it guides the generator in CircleGAN training. The discrimination based on the spherical circles increases the diversity of realistic samples while suppressing the diversity of unrealistic samples, which improves training the generator. This is supported by the experimental results in Sec. 4.2 and 4.3.

We design two other variants for scoring that explicitly combine both the realness score and the diversifiability score:

| (4a) | |||

| (4b) | |||

The former performs a simple addition of realness and diversifiability scores, and the latter measures an angle between the pivotal vector and sample embedding. The effects of these two variants will be demonstrated in our experiment.

To train the model based on the proposed score functions, we adopt the relativistic averaged loss [13] considering its robustness and simplicity:

| (5) | ||||

where adjusts the range of score difference for the sigmoid functions and is set to 5 for and and 10 for . For the adversarial loss of generator , we set it as the inverse of the discriminator loss by changing the source of the samples.

In the following, we introduce two additional losses to improve the training dynamics. The center estimation loss improves adapting hypersphere to the sample embeddings () and the radius equalization loss increases discriminative power of the hypersphere by enforcing one-to-one mappings from the centered embeddings () to spherical embeddings ().

Center estimation loss.

The center is defined as a point that minimizes the sum of square of the distances to all the sample points. Thus, we optimize this directly by measuring the norms of the centered embeddings but proceed separately from the original objectives not to disrupt the adversarial learning. Here, we use Huber loss rather than -loss.

| (6) |

where is defined as if , otherwise .

Radius equalization loss.

We equalize the radius of centered embeddings through the discriminator. First, we compute the target radius by taking square root of averaged squared norm of the centered embeddings. Then, we penalize the difference in radiuses using Huber loss.

| (7) |

where is computed by .

The total losses for the unconditional settings are and .

3.2 Extension to Conditional GANs

We extend our method for the conditional settings and improve the sample diversity of conditional GANs within each class. The key idea is to create multiple hyperspheres for target categories and perform adversarial learning in a class-wise manner. To be concrete, we create a center and a pivotal vector for each class: and , where is the number of categories. The embeddings are translated with their corresponding center vectors, and the scores for adversarial learning are measured based on their corresponding pivotal vectors. For the rest, in computing non-trainable parameters (e.g., standard deviations in Eqs. 2, 3, 4 and target radius in Eq. 7) and comparing scores in the loss in Eq. 5, we take all samples and associate them together irrespective of class labels due to the limited batch sizes.

We incorporate an auxiliary classifier to learn the class-specific features. The auxiliary classifier is a simple linear layer attached to the discriminator and is trained without any adversarial loss to predict the class of a sample . With this classifier, the generator makes the sample class-specific by maximizing the probability of its corresponding label :

| (8) |

The total losses for the conditional settings are and . The overall algorithm with the proposed components is presented in Algorithm 1.

3.3 Comparison to SphereGAN

As ours, SphereGAN [24] also employs a hypersphere as the embedding space for the discriminator, but constructs it with a different projection function and learns with a different objective, as shown in Fig. 2. For hypersphere projection, CircleGAN uses translation and -normalization while SphereGAN uses inverse stereographic projection (ISP). The translation and -normalization tends to distribute samples evenly onto the hypersphere, but ISP concentrates a large portion of samples around the north pole and maps only a small portion around the south pole. This biased projection may prevent the discriminator from fully exploiting the space in learning. Furthermore, while CircleGAN learns the center and the pivotal point adaptively to sample embeddings, SphereGAN uses a fixed coordinate system fixed with the north pole and the origin . As demonstrated in Sec. 4.2, our projection method shows better performance than ISP in practice. As for the objective in training, the score function is analogous to the SphereGAN objective [24]; both maximize angles between a reference vector and sample embeddings. However, while CircleGAN maximizes the angles to the great circle, SphereGAN does it to the opposite point of the reference vector. The use of circles turns out to make a significant difference in sample diversity and quality, as shown in our experiments. Finally, CircleGAN easily extends to conditional settings by creating multiple hyperspheres, whereas SphereGAN is less flexible due to its specialized projection with the fixed reference vector on the hypersphere.

4 Experiments

We conduct experiments in both unconditional and conditional settings of GANs to demonstrate the effectiveness of the proposed methods. In all the experiments, we use the ResNet-based architecture for both the discriminator and the generator with some modifications from the original model [11]. The modifications and the training details are presented in the supplementary A. In Section 4.1, we describe the metrics to evaluate the realness and diversity of the generated samples. Then, in Section 4.2 and 4.3, we validate our approach by performing unconditional and conditional generation tasks on standard benchmark datasets. To further investigate the scalability of our model, we provide more results on the large-scale dataset, ImageNet, in the supplementary B.

4.1 Evaluation Metrics

The common evaluation metrics for image generation are Inception Score (IS) [26] and Frechet Incéption Distance (FID) [12]. IS measures how distinctively each sample is classified as a certain class and how similar the class distribution of generated samples is to that of the target dataset. FID measures a distance between the distribution of real data and that of generated samples in an embedding space, where the embeddings are assumed to be Gaussian distributed. While these are easy to calculate and correlate well with human assessment of generated samples, there have been some concerns about them. First, IS is computed based on class probabilities over Imagenet classes, and thus the evaluation on other datasets cannot be accurate since their class distributions are different from that of Imagenet [34]. Second, IS is highly sensitive to small changes in weights of classifiers [3] and image samples, as shown in our STL10 experiments of Sec. 4.2. Third, FID does not penalize a sample with a similar but different identity, e.g., a clear cat image generated by a dog label, which would be problematic particularly for conditional generations. Hence, in addition to IS and FID, we use two other metrics [28], GAN-train and GAN-test, for evaluation on conditional settings.

GAN-train and GAN-test evaluate diversity and quality of images, respectively. They can easily adapt to each target dataset and also consider mislabeled images in evaluation. GAN-train trains a classifier using generated images and then measures its accuracy on a validation set of the target dataset. This score is analogous to recall (the diversity of images) since the score would increase if the generated samples cover different modes of the target dataset. In contrast, GAN-test trains a classifier using a training set of the target dataset and then measures its accuracy on the generated images. This measure is not related to diversity, but to precision since high-quality samples even from a single mode can achieve a high score. To sum up, we evaluate the models of unconditional GANs with IS and FID, and along with these scores, we use GAN-train and GAN-test for conditional GANs. To measure IS and FID, we use 50K images for all experiments following original implementations.111IS: https://github.com/openai/improved-gan, FID: https://github.com/bioinf-jku/TTUR

4.2 Unconditional GANs

For unconditional generation task, we evaluate our methods on CIFAR10 and STL10 [6]. CIFAR10 and STL10 consists of 50K and 100K images of 10 classes, respectively. We resize STL images to before training, following the experimental protocol of [20, 24]. We compare our methods with two Lipschitz-based models [11, 20] and one hypersphere-based model [24] (Table 1(a)). The best and second-best results are highlighted with red and blue colors, respectively.

CircleGAN models achieve the best and second-best performance in terms of all metrics on the datasets, except for IS on STL10 where SphereGAN performs the best. We suspect that the exception is due to the sensitivity of IS, as also reported in [3]; IS on the test set of STL10 is 14.8, which is significantly lower than 26.1 on the training set, but for other datasets such as CIFAR10 and CIFAR100, IS values are similar between train and test sets (CIFAR10: 11.2 vs. 11.3, CIFAR100: 14.8 vs. 14.7).

| Model | CIFAR10 | STL10 | ||

|---|---|---|---|---|

| IS() | FID() | IS() | FID() | |

| real images | 11.2 | 3.43 | 26.1 | 17.9 |

| WGAN-GP [11] | 7.76 | 22.2 | 9.06 | 42.6 |

| SNGAN [20] | 8.22 | 21.7 | 9.10 | 40.1 |

| SphereGAN [24] | 8.39 | 17.1 | 9.55 | 31.4 |

| CircleGAN () | 8.54 | 12.2 | 9.18 | 27.0 |

| CircleGAN () | 8.55 | 12.3 | 9.24 | 27.5 |

| CircleGAN () | 8.47 | 12.9 | 8.82 | 30.1 |

| Methods | FID() |

|---|---|

| CircleGAN () | 12.9 |

| - radius equalization | 14.6 |

| - center estimation | 15.2 |

| - circle learning | 15.8 |

| - score normalization | 16.8 |

| - -projection | 20.4 |

To further compare CircleGAN to SphereGAN, we conduct ablation studies on CIFAR10 with the model using angle for score function (Table 1(b)). Each component in the table is subsequently removed from the full CircleGAN model to see its effect. Here we use the FID metric, which is more stable. First and second, we remove radius equalization and center estimation losses, respectively. Third, we replace the CircleGAN objective with that of SphereGAN, which maximizes the angle to the opposite of pivotal point. Forth, we remove the score normalization. Fifth, we replace -normalization with ISP of SphereGAN. The results show that the proposed components consistently improve FIDs. In particular, replacing -normalization with ISP significantly deteriorates FID, which implies that ISP of SphereGAN may be problematic due to the embedding bias of samples. CircleGAN not only outperforms SphereGAN with a large margin, but also easily extends to conditional settings as demonstrated in the next experiment.

4.3 Conditional GANs

We conduct conditional generation experiments on CIFAR10, CIFAR100 [17] and TinyImagenet.222https://tiny-imagenet.herokuapp.com/ CIFAR100 consists of 50K images of 100 classes and TinyImageNet consists of 100K images of 200 classes. We compare our models with a projection-based model [19] and also with two classifier-based models [34, 23], one with adversarial losses on class probability [34] and the other without the losses [23]. We present the results in Table 2, 3, 4 for CIFAR10, CIFAR100, and TinyImagenet, respectively. The numbers inside the parentheses indicate the results of our models without the auxiliary classifier . All the CircleGAN models outperform the other models in terms of all metrics across all the datasets. On the datasets with more classes and more diverse samples with higher resolution, the performance gains over other models become greater. It demonstrates the advantage of class-wise hypersphere placing realistic samples around the great circle in a class-wise manner.

| Model | IS() | FID() | GAN-train() | GAN-test() |

| real images | 11.2 | 3.43 | 92.8 | 100 |

| AC+WGAN-GP [11] | 8.27 | 13.7 | 79.5 | 85.0 |

| Proj. SNGAN [19] | 8.47 | 10.4 | 82.2 | 87.3 |

| AMGAN [34] | 8.79 | 7.62 | 81.0 | 94.5 |

| CircleGAN () | 9.08 (8.91) | 5.72 (7.47) | 87.0 (84.0) | 96.1 (84.5) |

| CircleGAN () | 9.01 (8.80) | 5.90 (8.09) | 86.8 (82.6) | 96.6 (82.9) |

| CircleGAN () | 9.22 (8.83) | 5.83 (12.2) | 86.3 (83.5) | 96.8 (83.5) |

| Model | IS() | FID() | GAN-train() | GAN-test() |

|---|---|---|---|---|

| real images | 14.8 | 3.92 | 69.4 | 100.0 |

| AC+WGAN-GP [11] | 9.10 | 15.6 | 26.7 | 40.4 |

| Proj. SNGAN [19] | 9.30 | 15.6 | 45.0 | 59.4 |

| AMGAN [34] | 10.2 | 16.5 | 23.2 | 70.8 |

| CircleGAN () | 11.8 (9.93) | 7.43 (9.45) | 54.7 (48.6) | 93.9 (58.5) |

| CircleGAN () | 11.9 (10.13) | 7.35 (8.99) | 55.6 (49.9) | 92.5 (57.7) |

| CircleGAN () | 11.9 (9.98) | 8.62 (9.10) | 54.0 (47.4) | 91.0 (58.0) |

The GAN-train and GAN-test results show that CircleGAN produces higher quality and more diverse samples than other models. In particular for the GAN-test, it remains almost the same at the highest level regardless of the datasets, which implies that every sample captures important features of its corresponding class to be classified correctly by the pre-trained classifier. We attribute this to the auxiliary classifier since all the scores drop significantly on all datasets when the classifier is detached. The overall scores without auxiliary classifier are similar to the projection-based models, which have no classifier. Hence, this supports the use of auxiliary classifier for learning the class-specific features in discriminator and correspondingly to update samples in generator.

However, the auxiliary classifier does not benefit all the other classifier-based models [11, 34], instead they perform significantly worse as the number of classes or the resolution increases. Specifically, AMGAN [34], whose discriminator is trained with an adversarial loss on class probability, shows decent performance on CIFAR10, but dramatically degrades on CIFAR100 and fails to train on TinyImagenet. The other classifier-based model having no adversarial loss also [11, 23] shows competitive results on CIFAR10 and CIFAR100, but poor GAN-train and GAN-test scores on TinyImagenet. These results suggest that utilizing spherical circles is highly effective and flexible to integrate the auxiliary classifier in conditional GANs.

| Model | IS() | FID() | GAN-train() | GAN-test() |

|---|---|---|---|---|

| real images | 33.3 | 4.59 | 59.2 | 100.0 |

| AC+WGAN-GP [11] | 10.3 | 32.5 | 0.4 | 0.7 |

| Proj. SNGAN [19] | 9.38 | 42.0 | 22.6 | 19.3 |

| CircleGAN () | 21.6 | 15.5 | 30.4 | 94.6 |

| CircleGAN () | 20.8 | 15.6 | 28.8 | 94.9 |

| CircleGAN () | 20.8 | 17.5 | 30.4 | 93.4 |

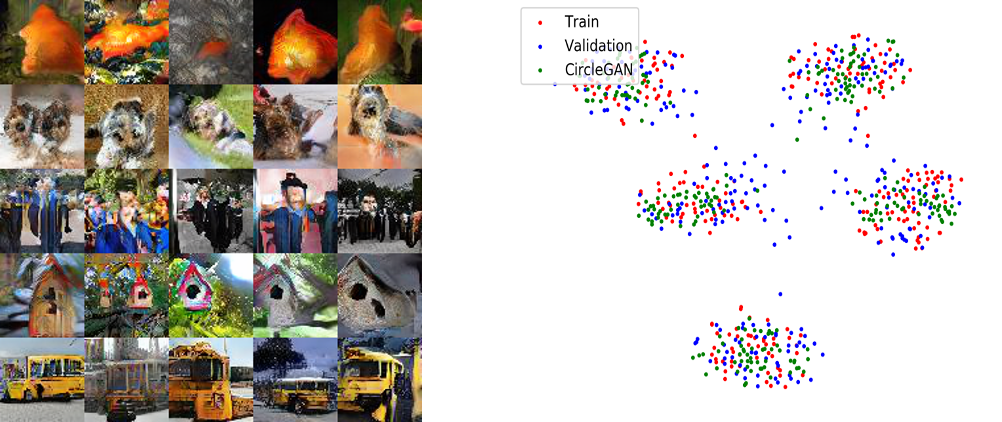

For qualitative evaluation, we sample images and obtain t-SNE using pre-trained classifier for CircleGAN and Proj. SNGAN [19], which is the most competitive algorithm on TinyImagenet. We use 5 classes of images where the classes are selected by the author not to be overlapped in nature: goldfish, yorkshire-terrier, academic gown, birdhouse, schoolbus (Fig. 3). The results demonstrate that the images synthesized from CircleGAN correspond to their classes and almost overlapped to the train and the validation sets of the dataset at the 2D t-SNE space, but the images from Proj. SNGAN are vague in perceiving them as instances of their corresponding classes. Also, the distribution of t-SNE itself certifies that the samples from Proj. SNGAN are far apart from the support of the dataset. The more qualitative results can be found in supplementary material C.

5 Conclusion

In this paper, we have demonstrated that learning and discriminating sample embeddings using their corresponding spherical circles on a hypersphere is highly effective in generating diverse samples of high quality. The proposed method provides the state-of-the-art performance in unconditional generation and also extends to conditional setups with class labels by creating hyperspheres for the classes. The impressive performance gain over the recent methods on standard benchmarks demonstrates the effectiveness of the proposed approach.

Broader Impact

This work addresses the problem of generative modeling and adversarial learning, which is a crucial topic in machine learning and artificial intelligence; b) the proposed technique is generic and does not have any direct negative impact on society; c) the proposed model improves sample diversity, thus contributing to reducing biases in generated data samples.

Acknowledgments

This research was supported by Basic Science Research Program (NRF-2017R1E1A1A01077999) and Next-Generation Information Computing Development Program (NRF-2017M3C4A7069369), through the National Research Foundation of Korea (NRF) funded by the Ministry of Science and ICT (MSIT), and also by Institute for Information & communications Technology Promotion (IITP) grant funded by the Korea government (MSIP) (No. 2019-0-01906, Artificial Intelligence Graduate School Program (POSTECH)).

Supplementary Material for CircleGAN

S.1 Implementation Details

We provide hyperparameter settings and architectural details used in our work.

Hyperparameter.

All the experiments use the same hyperparameters. We train both the discriminator and the generator using ADAM optimizer [16] with , , initial learning rate = 0.0001, minibatch size = 64, and total of 300K iterations each with 1:1 balanced schedule. We set the total iterations to be 3 times of the iterations of SN- and GP- based models (100K), because these models update the network with 1:5 schedule.

Network architecture.

All the models of unconditional and conditional CircleGANs use Resnet-based architectures [4, 11, 20]. Here, we change some regularizers and tricks to the techniques adopted in DCGANs and other classifier-based cGANs [25, 34]. The major differences are as follows: 1) we use dropout and BN with weight normalization (WN) as a regularizer instead of the existing techniques such as spectral normalization (SN) and gradient penalties (GP). The BN is proven to function as a regularizer imposing the Lipschitz constraint [27], which has been achieved by SN and GP [11, 20]. Plus, the dropout and WN have been successfully adopted in the classifier-based model [34]. 2) we feed the fake and real samples together into the discriminator to mimic the target distribution directly, not the whitened one normalized by the BN layers.

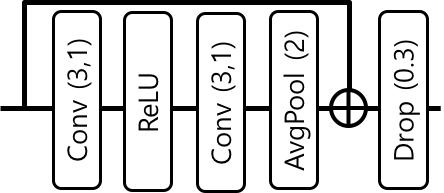

We provide the architectural details for the unconditional and the conditional CircleGANs, where we borrow some expressions from [29]. We denote the ResNet blocks (see Fig. s.1) with 1) , and for the blocks which produce the same resolution, downsampled and upsampled outputs by a factor of 2, respectively, 2) for the first block in the discriminator, where denotes the channel dimensions. Also, we denote with 1) a linear layer with dimensions, 2) a global average pooling layer, 3) a layer that transposes the input feature map to have the target resolution and 4) a block that outputs the image of the same resolution as the input, which consists of BN, Relu, Conv(3), and tanh layers. The architecture configuration on both the unconditional and the conditional settings are shown for each dataset in Table s.1, s.2.

| Dataset | Generator - Discriminator |

|---|---|

| CIFAR10 | ----- |

| ---- | |

| STL10 | ----- |

| ---- |

| Dataset | Generator - Discriminator |

|---|---|

| CIFAR10 | ----- |

| ----- | |

| CIFAR100 | ----- |

| ----- | |

| TinyImageNet | ------ |

| ------ | |

| ImageNet | ------- |

| ------- |

S.2 ImageNet Experiments

We present additional results of high-resolution image generation using ImageNet [7] with resolution, which consists of 1.3M images of 1000 classes. We use the same hyperparameter settings and the network configuration used in other datasets, except the learning rates and the number of layers and filters in the networks. The learning rates of the discriminator and the generator are set according to two-timescale learning rate (TTUR) [12], which is adopted in Proj. SNGAN [19]. Proj. SNGAN sets the learning rates of the discriminator and the generator as 0.0004 and 0.0001, respectively, and they are fixed over the course of the training. Using this as our basic settings, we run an additional experiment where the learning rate of the discriminator is set to of the learning rate of the generator. The architectural details for ImageNet are presented in Table s.2.

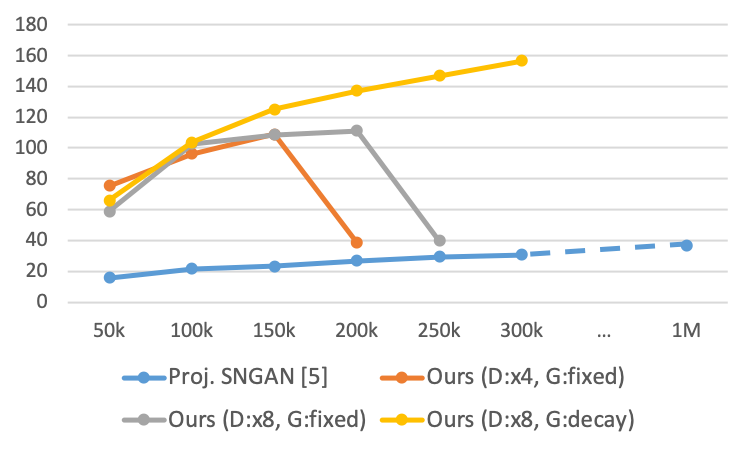

The quantitative results are shown in Fig. s.2. We use CircleGAN - as our basic model. Starting from the fairly good performances in terms of both IS and FID, CircleGAN outperforms the best performance of Proj. SNGAN (Fig. s.2, blue line) with significantly fewer iterations by a large margin (Fig. s.2, orange and gray lines). However, our model undergoes complete training collapse as BigGAN does at a performance similar to ours [4]. To combat the collapse, we simply decay the learning rate of the generator linearly to 0 during 300K iterations (Fig. s.2, yellow line), reaching another significant gain in performance. We provide a comparison of our algorithm with other state-of-the-art algorithms in Table s.3.

| Model | IS | FID |

|---|---|---|

| Proj. SNGAN [19] | 36.80 | 27.62 |

| SAGAN [33] | 52.52 | 18.65 |

| BigGAN [4] | 98.76 | 8.73 |

| CircleGAN - | 156.57 | 22.34 |

For qualitative results, we visualize the images sampled from the same categories in the TinyImagenet (goldfish, yorkshire-terrier, academic-gown, birdhouse, schoolbus) in Fig. s.3. Despite the remarkable performance of CircleGAN, there are still opportunities for further enhancements; class-specific features are well-preserved to each image, but monotonous are the images and look similar to each other. Training with larger batch size (256 2048) and its relevant techniques [4] can help to address this issue, but we leave it to the future work.

S.3 Additional Results

In this section, we show qualitative results on CIFAR10 and STL10 for unconditional settings and CIFAR10, CIFAR100 and TinyImagenet for conditional settings (Fig. s.4, s.5).

References

- Albuquerque et al. [2019] I. Albuquerque, J. Monteiro, T. Doan, B. Considine, T. Falk, and I. Mitliagkas. Multi-objective training of generative adversarial networks with multiple discriminators. In Proceedings of the 36th International Conference on Machine Learning (ICML), 2019.

- Arjovsky et al. [2017] M. Arjovsky, S. Chintala, and L. Bottou. Wasserstein gan. In Proceedings of the 34th International Conference on Machine Learning (ICML), 2017.

- Barratt and Sharma [2018] S. Barratt and R. Sharma. A note on the inception score. arXiv preprint arXiv:1801.01973, 2018.

- Brock et al. [2019] A. Brock, J. Donahue, and K. Simonyan. Large scale gan training for high fidelity natural image synthesis. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Chen et al. [2019] T. Chen, M. Lucic, N. Houlsby, and S. Gelly. On self modulation for generative adversarial networks. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Coates et al. [2011] A. Coates, A. Ng, and H. Lee. An analysis of single-layer networks in unsupervised feature learning. In Proceedings of the Fourteenth International Conference on Artificial Intelligence and Statistics (AISTATS), pages 215–223, 2011.

- Deng et al. [2009] J. Deng, W. Dong, R. Socher, L.-J. Li, K. Li, and L. Fei-Fei. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 248–255, 2009.

- Doan et al. [2019] T. Doan, J. Monteiro, I. Albuquerque, B. Mazoure, A. Durand, J. Pineau, and R. D. Hjelm. On-line adaptative curriculum learning for gans. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 33, pages 3470–3477, 2019.

- Gong et al. [2019] M. Gong, Y. Xu, C. Li, K. Zhang, and K. Batmanghelich. Twin auxiliary classifiers gan. In Advances in Neural Information Processing Systems (NeurIPS), 2019.

- Goodfellow et al. [2014] I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio. Generative adversarial nets. In Advances in Neural Information Processing Systems (NeurIPS), pages 2672–2680, 2014.

- Gulrajani et al. [2017] I. Gulrajani, F. Ahmed, M. Arjovsky, V. Dumoulin, and A. C. Courville. Improved training of wasserstein gans. In Advances in Neural Information Processing Systems (NeurIPS), pages 5767–5777, 2017.

- Heusel et al. [2017] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter. Gans trained by a two time-scale update rule converge to a local nash equilibrium. In Advances in Neural Information Processing Systems (NeurIPS), pages 6626–6637, 2017.

- Jolicoeur-Martineau [2019] A. Jolicoeur-Martineau. The relativistic discriminator: a key element missing from standard gan. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Karras et al. [2018] T. Karras, T. Aila, S. Laine, and J. Lehtinen. Progressive growing of gans for improved quality, stability, and variation. In Proceedings of International Conference on Learning Representations (ICLR), 2018.

- Karras et al. [2019] T. Karras, S. Laine, and T. Aila. A style-based generator architecture for generative adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 4401–4410, 2019.

- Kingma and Ba [2014] D. P. Kingma and J. Ba. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980, 2014.

- Krizhevsky and Hinton [2009] A. Krizhevsky and G. Hinton. Learning multiple layers of features from tiny images. Technical report, Citeseer, 2009.

- Liu et al. [2019] K. Liu, W. Tang, F. Zhou, and G. Qiu. Spectral regularization for combating mode collapse in gans. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), pages 6382–6390, 2019.

- Miyato and Koyama [2018] T. Miyato and M. Koyama. cgans with projection discriminator. In Proceedings of International Conference on Learning Representations (ICLR), 2018.

- Miyato et al. [2018] T. Miyato, T. Kataoka, M. Koyama, and Y. Yoshida. Spectral normalization for generative adversarial networks. In Proceedings of International Conference on Learning Representations (ICLR), 2018.

- Neyshabur et al. [2017] B. Neyshabur, S. Bhojanapalli, and A. Chakrabarti. Stabilizing gan training with multiple random projections. arXiv preprint arXiv:1705.07831, 2017.

- Nowozin et al. [2016] S. Nowozin, B. Cseke, and R. Tomioka. f-gan: Training generative neural samplers using variational divergence minimization. In Advances in Neural Information Processing Systems (NeurIPS), pages 271–279, 2016.

- Odena et al. [2017] A. Odena, C. Olah, and J. Shlens. Conditional image synthesis with auxiliary classifier gans. In Proceedings of the 34th International Conference on Machine Learning (ICML), pages 2642–2651, 2017.

- Park and Kwon [2019] S. W. Park and J. Kwon. Sphere generative adversarial network based on geometric moment matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 4292–4301, 2019.

- Radford et al. [2016] A. Radford, L. Metz, and S. Chintala. Unsupervised representation learning with deep convolutional generative adversarial networks. In Proceedings of International Conference on Learning Representations (ICLR), 2016.

- Salimans et al. [2016] T. Salimans, I. Goodfellow, W. Zaremba, V. Cheung, A. Radford, and X. Chen. Improved techniques for training gans. In Advances in Neural Information Processing Systems (NeurIPS), pages 2234–2242, 2016.

- Santurkar et al. [2018] S. Santurkar, D. Tsipras, A. Ilyas, and A. Madry. How does batch normalization help optimization? In Advances in Neural Information Processing Systems (NeurIPS), pages 2483–2493, 2018.

- Shmelkov et al. [2018] K. Shmelkov, C. Schmid, and K. Alahari. How good is my gan? In Proceedings of the European Conference on Computer Vision (ECCV), pages 213–229, 2018.

- Siarohin et al. [2019] A. Siarohin, E. Sangineto, and N. Sebe. Whitening and coloring batch transform for gans. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Wu et al. [2019] J. Wu, Z. Huang, D. Acharya, W. Li, J. Thoma, D. P. Paudel, and L. V. Gool. Sliced wasserstein generative models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 3713–3722, 2019.

- Yamaguchi and Koyama [2019] S. Yamaguchi and M. Koyama. Distributional concavity regularization for gans. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Yang et al. [2019] D. Yang, S. Hong, Y. Jang, T. Zhao, and H. Lee. Diversity-sensitive conditional generative adversarial networks. In Proceedings of International Conference on Learning Representations (ICLR), 2019.

- Zhang et al. [2019] H. Zhang, I. Goodfellow, D. Metaxas, and A. Odena. Self-attention generative adversarial networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), 2019.

- Zhou et al. [2018] Z. Zhou, H. Cai, S. Rong, Y. Song, K. Ren, W. Zhang, Y. Yu, and J. Wang. Activation maximization generative adversarial nets. In Proceedings of International Conference on Learning Representations (ICLR), 2018.

- Zhou et al. [2019] Z. Zhou, J. Liang, Y. Song, L. Yu, H. Wang, W. Zhang, Y. Yu, and Z. Zhang. Lipschitz generative adversarial nets. In Proceedings of the 36th International Conference on Machine Learning (ICML), 2019.