
Chebyshev Inertial Landweber Algorithm for Linear Inverse Problems

Abstract

The Landweber algorithm defined on complex/real Hilbert spaces is a gradient descent algorithm for linear inverse problems. Our contribution is a novel method for accelerating the convergence of the Landweber algorithm. In this paper, we first extend the theory of the Chebyshev inertial iteration to the Landweber algorithm on Hilbert spaces. An upper bound on the convergence rate clarifies the speed of global convergence of the proposed method. The Chebyshev inertial Landweber algorithm can be applied to a wide class of signal recovery problems on a Hilbert space, including deconvolution for continuous signals. The theoretical discussion developed in this paper naturally leads to a novel practical signal recovery algorithm. As a demonstration, a MIMO detection algorithm based on the projected Landweber algorithm is derived. The proposed MIMO detection algorithm achieves a much smaller symbol error rate than the MMSE detector.

I Introduction

A number of signal detection problems in wireless communications and signal processing can be classified as linear inverse problems. In a linear inverse problem, a source signal $x \in \mathbb{C}^n$ (or $\mathbb{R}^n$) is inferred from the noisy linear observation $y = Ax + w$, where $A \in \mathbb{C}^{m \times n}$ (or $\mathbb{R}^{m \times n}$) and $w$ is the additive noise.

One of the simplest approaches for the above task is to rely on the least-squares principle, i.e., one can minimize $\|y - Ax\|^2$, which corresponds to the maximum likelihood estimation rule for estimating the source signal under the Gaussian noise assumption. The Landweber algorithm [1], [5] is defined by the fixed-point iteration

$$x^{(k+1)} = x^{(k)} + \omega A^{*}\left(y - A x^{(k)}\right), \quad k = 0, 1, 2, \ldots, \tag{1}$$

where $A^{*} = A^{H}$ if $A$ is a complex matrix, otherwise $A^{*} = A^{T}$. The notation $X^{H}$ indicates the Hermitian transpose of $X$, and the parameter $\omega$ is a real constant. Note that the Landweber algorithm can be defined not only on a finite-dimensional Euclidean space but also on an infinite-dimensional complex/real Hilbert space. The Landweber algorithm defined on a Hilbert space is especially important for linear inverse problems involving convolutions of continuous signals.
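As a minimal illustration, the update $x \leftarrow x + \omega A^{H}(y - Ax)$ can be sketched in NumPy for the finite-dimensional case. The function name `landweber`, the zero initial point, the small test matrix, and the step size $1/\lambda_{\max}(A^{H}A)$ are assumptions made for this sketch and are not taken from the text.

```python
import numpy as np

def landweber(A, y, omega, num_iters):
    """Run the Landweber update x <- x + omega * A^H (y - A x) from x = 0."""
    x = np.zeros(A.shape[1], dtype=np.result_type(A, y))
    for _ in range(num_iters):
        x = x + omega * (A.conj().T @ (y - A @ x))
    return x

# Hypothetical toy problem: a real 3x2 matrix with full column rank, no noise.
A = np.array([[2.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
x_true = np.array([1.0, -2.0])
y = A @ x_true

# Step size assumed here: inverse of the largest eigenvalue of A^H A.
omega = 1.0 / np.linalg.eigvalsh(A.conj().T @ A).max()
x_hat = landweber(A, y, omega, num_iters=500)
print(np.linalg.norm(x_hat - x_true))
```

In this noiseless, full-column-rank setting the iterate approaches the least-squares solution, which coincides with `x_true`.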

The Landweber algorithm can be regarded as a gradient descent method for minimizing the objective function $\|y - Ax\|^2$ because $A^{*}(y - Ax^{(k)})$ is, up to a positive constant factor, the negative gradient of the objective function at $x^{(k)}$. The Landweber algorithm has been widely employed as a signal reconstruction algorithm for image deconvolution [2], inverse problems regarding diffusion partial differential equations [4], MIMO detectors [3], and so on. An advantage of the Landweber algorithm is that we can easily include, after the gradient descent step (1), a proximal or projection operation that utilizes prior knowledge on the source signal; the resulting method is often called the projected Landweber algorithm [5].

One evident drawback of the Landweber algorithm is that the convergence speed is often too slow and we need to exploit an appropriate acceleration method. Recently, Takabe and Wadayama [8] found that a step-size sequence determined from Chebyshev polynomials can accelerate the convergence of gradient descent algorithms. Wadayama and Takabe [9] generalized the central idea of [8] for general fixed-point iterations. The method is called the Chebyshev inertial iteration. It would be natural to apply the Chebyshev inertial iteration for improving the Landweber algorithm in terms of the convergence speed.

The successive over-relaxation (SOR) is a common method for accelerating a fixed-point iteration $x^{(k+1)} = f(x^{(k)})$ for solving linear equations, such as the Jacobi method. The SOR iteration corresponding to this fixed-point iteration is given by $x^{(k+1)} = x^{(k)} + \omega_k\left(f(x^{(k)}) - x^{(k)}\right)$, where $\omega_k$ is the SOR factor at iteration $k$. A fixed SOR factor is commonly used in practice. The Chebyshev inertial iteration [9] is a natural generalization of the SOR method, which employs factors $\omega_k$ defined on the basis of Chebyshev polynomials. Its fundamental properties, including the convergence rate, are analyzed in [8, 9].

The goal of this paper is twofold. The first goal is to extend the theory of the Chebyshev inertial iteration to the Landweber algorithm on Hilbert spaces. The arguments in [8, 9] are restricted to the case where the underlying space is a finite-dimensional Euclidean space. By extending the argument to a Hilbert space, the essential idea of the Chebyshev inertial iteration becomes applicable to iterative algorithms for infinite-dimensional linear inverse problems, and we can obtain accelerated convergence of these algorithms. The Chebyshev inertial Landweber algorithm presented in this paper can be applied to a wide class of signal reconstruction algorithms on complex/real Hilbert spaces, such as deconvolution for continuous signals.

The second goal is to present a novel MIMO detection algorithm based on the projected Landweber algorithm with the Chebyshev inertial iteration for demonstrating that the theoretical discussion developed in this paper naturally leads to a novel practical signal detection algorithm.

II Preliminaries

In this section, several basic facts on functional analysis required for the subsequent argument are briefly reviewed. Precise definitions regarding functional analysis presented in this section can be found in [6].

II-A Hilbert space

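Let $p$ be a real number satisfying $p \ge 1$. The set of infinite sequences $(x_1, x_2, \ldots)$ in $\mathbb{K}$ satisfying $\sum_{i=1}^{\infty} |x_i|^p < \infty$ is denoted by $\ell^p$, where $\mathbb{K} = \mathbb{C}$ or $\mathbb{R}$. If the length of the sequences is finite, i.e., the sequences form a complex or real vector space of finite dimension $n$, we can define a finite-dimensional normed space $\ell^p_n$. The set of measurable functions $f$ defined on the closed interval $[a, b]$ and satisfying $\int_a^b |f(x)|^p \, dx < \infty$ is denoted by $L^p[a, b]$.

Let $\mathcal{H}$ be a complex or real vector space. For any $x, y \in \mathcal{H}$, an inner product $\langle x, y \rangle$ is assumed to be given. The norm (inner product norm) associated with the inner product is defined by $\|x\| = \sqrt{\langle x, x \rangle}$. If the normed space $\mathcal{H}$ is complete, the space is said to be a Hilbert space. The normed spaces $\ell^2$ and $L^2[a, b]$ are Hilbert spaces. In the case of $\ell^2$, the inner product is defined by $\langle x, y \rangle = \sum_{i} x_i \overline{y_i}$. On the other hand, the inner product for $L^2[a, b]$ is defined by $\langle f, g \rangle = \int_a^b f(x) \overline{g(x)} \, dx$.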

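Let $A$ be a bounded linear operator on $\mathcal{H}$. The operator norm of $A$ is defined by $\|A\| = \sup_{\|x\| = 1} \|Ax\|$, where $x$ is an element of $\mathcal{H}$. For any $x \in \mathcal{H}$ and an operator $A$, we have the sub-multiplicative inequality

$$\|Ax\| \le \|A\| \, \|x\|. \tag{2}$$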

II-B Compact operator and spectral mapping theorem

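Let $A$ be a linear operator on $\mathcal{H}$. A complex number $\lambda$ is an eigenvalue of $A$ if and only if a nonzero vector $x$ in $\mathcal{H}$ satisfies $Ax = \lambda x$, where $x$ is said to be an eigenvector corresponding to $\lambda$. In the following, the set of all eigenvalues of $A$ is denoted by $\sigma(A)$. The spectral radius of $A$ is given as

$$\rho(A) = \sup \{ |\lambda| : \lambda \in \sigma(A) \}. \tag{3}$$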

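Suppose that the kernel function $K(x, y)$ on $[a, b] \times [a, b]$ satisfies $\int_a^b \int_a^b |K(x, y)|^2 \, dx \, dy < \infty$. For any $f \in L^2[a, b]$, let $(Af)(x) = \int_a^b K(x, y) f(y) \, dy$, which is a linear operator on $L^2[a, b]$, and the operator $A$ is a compact operator [6]. If the kernel function satisfies $K(x, y) = \overline{K(y, x)}$, then the operator $A$ is a compact self-adjoint operator. In the case of the finite-dimensional space $\ell^2_n$, a linear operator defined by a Hermitian matrix corresponds to a compact self-adjoint operator.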

The next theorem plays an important role in our analysis described below.

Theorem 1 (Spectral mapping theorem [6])

Let $A$ be a compact operator defined on $\mathcal{H}$. If a complex-valued function $f$ is analytic around $\sigma(A)$, then the set of eigenvalues of $f(A)$ is given by $\sigma(f(A)) = \{ f(\lambda) : \lambda \in \sigma(A) \}$.

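Let $A$ be a compact self-adjoint operator on $\mathcal{H}$. It is known that the set of eigenvalues of a compact self-adjoint operator is a countable set of real numbers [6]. Let $a_0, a_1, \ldots, a_d \in \mathbb{C}$. A polynomial $p(z) = a_0 + a_1 z + \cdots + a_d z^d$ defined on $\mathbb{C}$ is analytic over the whole of $\mathbb{C}$. As a consequence of the spectral mapping theorem, the set of eigenvalues of $p(A)$ is given as $\sigma(p(A)) = \{ p(\lambda) : \lambda \in \sigma(A) \}$. For a compact self-adjoint operator $A$, we also have

$$\rho(A) = \|A\|. \tag{4}$$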
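In the finite-dimensional case, both facts used here can be checked numerically for a Hermitian matrix: the eigenvalues of a polynomial of the matrix are the polynomial applied to its eigenvalues, and the spectral radius equals the operator (spectral) norm. The random test matrix and the particular polynomial below are hypothetical choices made only for this sketch.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.standard_normal((5, 5)) + 1j * rng.standard_normal((5, 5))
A = (M + M.conj().T) / 2          # Hermitian matrix (self-adjoint operator)

eigs = np.linalg.eigvalsh(A)      # real eigenvalues, sorted ascending

# Hypothetical polynomial p(z) = 1 - 0.3 z + 0.1 z^2 applied to the matrix.
P = np.eye(5) - 0.3 * A + 0.1 * (A @ A)
eigs_P = np.linalg.eigvalsh(P)    # P is Hermitian since p has real coefficients

# Spectral mapping: eigenvalues of p(A) are p(lambda) for eigenvalues lambda of A.
mapped = np.sort(1 - 0.3 * eigs + 0.1 * eigs**2)

# Spectral radius versus operator norm (largest singular value).
spectral_radius = np.abs(eigs).max()
operator_norm = np.linalg.norm(A, 2)
print(np.max(np.abs(eigs_P - mapped)), spectral_radius - operator_norm)
```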

II-C Landweber iteration

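Assume that $x$ is an element in a Hilbert space $\mathcal{H}$. The aim of the Landweber iteration is to recover the original signal $x$ from a measurement $y = Ax$ or a noisy measurement $y = Ax + w$, where $A$ is a linear operator and $w$ is a noise term. The Landweber iteration is a gradient descent algorithm in a Hilbert space that minimizes the error functional $\|y - Ax\|^2$. The Landweber iteration is defined by the fixed-point iteration

$$x^{(k+1)} = x^{(k)} + \omega A^{*}\left(y - A x^{(k)}\right), \tag{5}$$

where $A^{*}$ denotes the adjoint operator of $A$ and $\omega$ is a real constant.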

III Chebyshev Inertial Iteration

III-A Fixed-point iteration

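Suppose that we have a fixed-point iteration defined on a Hilbert space $\mathcal{H}$:

$$x^{(k+1)} = A x^{(k)} + b, \quad k = 0, 1, 2, \ldots, \tag{6}$$

where $x^{(k)}, b \in \mathcal{H}$. The operator $A$ is a compact self-adjoint operator on $\mathcal{H}$.

Assume also that $f(x) := Ax + b$ is a contraction mapping which has a fixed point $x^{*}$ satisfying $x^{*} = A x^{*} + b$, where the existence of the fixed point is guaranteed by the Banach fixed-point theorem [6]. The inertial iteration [9] corresponding to the original iteration (6) is defined by

$$x^{(k+1)} = x^{(k)} + \omega_k \left( A x^{(k)} + b - x^{(k)} \right), \tag{7}$$

where the real numbers $\omega_k$ $(k = 0, 1, 2, \ldots)$ are called the inertial factors. It should be remarked that the fixed point of the inertial iteration exactly coincides with the fixed point of the original iteration (6).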
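The remark on the fixed point can be illustrated with a short finite-dimensional sketch. It assumes the inertial update has the form $x + \omega_k (f(x) - x)$ with an affine map $f(x) = Ax + b$, as in [9]; the helper name `inertial_step` and the small symmetric matrix are hypothetical.

```python
import numpy as np

def inertial_step(x, A, b, omega_k):
    """One inertial update: x + omega_k * (f(x) - x) with f(x) = A x + b."""
    return x + omega_k * (A @ x + b - x)

# Hypothetical contraction: symmetric A with spectral radius below 1.
A = np.array([[0.5, 0.2], [0.2, 0.3]])
b = np.array([1.0, -1.0])
x_star = np.linalg.solve(np.eye(2) - A, b)   # fixed point of x = A x + b

# The fixed point is left unchanged whatever inertial factor is used,
# because f(x_star) - x_star vanishes.
moved = [np.linalg.norm(inertial_step(x_star, A, b, w) - x_star)
         for w in (0.5, 1.0, 1.7)]
print(moved)
```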

III-B Analysis on spectral radius

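From the inertial iteration (7), we have the equivalent update equation

$$x^{(k+1)} = (I - \omega_k B) x^{(k)} + \omega_k b, \tag{8}$$

where $B := I - A$. From the assumption that $A$ is a compact self-adjoint operator, we can show $B$ is also compact self-adjoint. Since the fixed point $x^{*}$ satisfies

$$x^{*} = (I - \omega_k B) x^{*} + \omega_k b \tag{9}$$

for any nonnegative integer $k$, subtracting (9) from (8) immediately yields a recursive formula on the residual errors:

$$x^{(k+1)} - x^{*} = (I - \omega_k B) \left( x^{(k)} - x^{*} \right). \tag{10}$$

In the following discussion, we will assume inertial factors satisfying the periodic condition:

$$\omega_{\ell T + j} = \omega_j \quad (\ell = 0, 1, 2, \ldots, \ j = 0, 1, \ldots, T - 1), \tag{11}$$

where a positive integer $T$ represents the period.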

III-C Chebyshev inertial factors

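As we discussed, the operator $B$ is a compact self-adjoint operator and it has countably many real eigenvalues, which are denoted by $\lambda_1, \lambda_2, \ldots$. Hereafter, the minimum and the maximum eigenvalues of $B$ are denoted by $\lambda_{\min}$ and $\lambda_{\max}$, respectively.

In the following discussion, we will use the inertial factors defined by

$$\omega_k = \left[ \lambda_{+} + \lambda_{-} \cos\left( \frac{2k + 1}{2T} \pi \right) \right]^{-1}, \quad k = 0, 1, \ldots, T - 1, \tag{16}$$

where $\lambda_{+} := (\lambda_{\max} + \lambda_{\min})/2$ and $\lambda_{-} := (\lambda_{\max} - \lambda_{\min})/2$. These inertial factors are called the Chebyshev inertial factors. A Chebyshev inertial factor is the inverse of a root of a Chebyshev polynomial [8, 9].

Let $\beta_T(\lambda) := \prod_{k=0}^{T-1} (1 - \omega_k \lambda)$. If the Chebyshev inertial factors are employed in the inertial iteration, we can use Lemma 2 proved in [9] to bound $|\beta_T(\lambda)|$ as

$$|\beta_T(\lambda)| \le \mathrm{sech}\left( T \cosh^{-1}\left( \frac{\lambda_{\max} + \lambda_{\min}}{\lambda_{\max} - \lambda_{\min}} \right) \right) \tag{17}$$

for $\lambda_{\min} \le \lambda \le \lambda_{\max}$. Combining the spectral mapping theorem and the above inequality, we immediately obtain

$$\rho\left( \prod_{k=0}^{T-1} (I - \omega_k B) \right) \le \mathrm{sech}\left( T \cosh^{-1}\left( \frac{\lambda_{\max} + \lambda_{\min}}{\lambda_{\max} - \lambda_{\min}} \right) \right). \tag{18}$$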

The above argument can be summarized in the following theorem.

Theorem 2

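Let $T$ be a positive integer. For $\ell = 1, 2, \ldots$, the Landweber iteration with the Chebyshev inertial factors satisfies the residual error bound:

$$\left\| x^{(\ell T)} - x^{*} \right\| \le \left[ \mathrm{sech}\left( T \cosh^{-1}\left( \frac{\lambda_{\max} + \lambda_{\min}}{\lambda_{\max} - \lambda_{\min}} \right) \right) \right]^{\ell} \left\| x^{(0)} - x^{*} \right\|, \tag{19}$$

where $x^{*}$ is the fixed point of the iteration.

This theorem implies that the error norm is tightly upper bounded if the iteration index is a multiple of $T$.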
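The following sketch computes Chebyshev inertial factors and checks the bound on the product $\prod_{k=0}^{T-1}(1 - \omega_k \lambda)$ numerically. It assumes the form of the factors given in [9], namely $\omega_k = [\lambda_{+} + \lambda_{-}\cos((2k+1)\pi/(2T))]^{-1}$ with $\lambda_{\pm} = (\lambda_{\max} \pm \lambda_{\min})/2$, and the bound $\mathrm{sech}(T\cosh^{-1}((\lambda_{\max}+\lambda_{\min})/(\lambda_{\max}-\lambda_{\min})))$. The function names and the eigenvalue range are hypothetical.

```python
import numpy as np

def chebyshev_inertial_factors(lam_min, lam_max, T):
    """Inverses of the roots of a degree-T Chebyshev polynomial shifted to [lam_min, lam_max]."""
    lam_plus = (lam_max + lam_min) / 2
    lam_minus = (lam_max - lam_min) / 2
    k = np.arange(T)
    return 1.0 / (lam_plus + lam_minus * np.cos((2 * k + 1) * np.pi / (2 * T)))

def chebyshev_bound(lam_min, lam_max, T):
    """sech(T * arccosh((lam_max + lam_min) / (lam_max - lam_min)))."""
    return 1.0 / np.cosh(T * np.arccosh((lam_max + lam_min) / (lam_max - lam_min)))

# Hypothetical eigenvalue range (requires 0 < lam_min < lam_max) and period.
lam_min, lam_max, T = 0.1, 1.0, 8
omegas = chebyshev_inertial_factors(lam_min, lam_max, T)

# Evaluate the product polynomial on a grid covering [lam_min, lam_max].
lams = np.linspace(lam_min, lam_max, 2001)
beta = np.prod(1 - omegas[:, None] * lams[None, :], axis=0)
max_abs_beta = np.abs(beta).max()
bound = chebyshev_bound(lam_min, lam_max, T)
print(max_abs_beta, bound)
```

On this grid the maximum of the absolute value of the product is attained at the endpoints of the interval, where it matches the bound up to rounding.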

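Combining the Landweber iteration with the Chebyshev inertial iteration, we have the Chebyshev inertial Landweber algorithm:

$$x^{(k+1)} = x^{(k)} + \omega_k \, \omega A^{*}\left( y - A x^{(k)} \right). \tag{20}$$

The iteration is almost the same as the original Landweber iteration except for the use of the Chebyshev inertial factors $\{\omega_k\}$. In this case, the operator $B$ in (8) is $\omega A^{*} A$, whose minimum and maximum eigenvalues determine the Chebyshev inertial factors. It is common to set the fixed factor $\omega$ to the inverse of the maximum eigenvalue of $A^{*} A$.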
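A finite-dimensional sketch of the accelerated iteration is given below, under the assumption that the inertial update applied to the Landweber step takes the form $x \leftarrow x + \omega_k\,\omega A^{H}(y - Ax)$ with periodic Chebyshev factors computed from the extreme eigenvalues of $\omega A^{H}A$. The function names, the synthetic matrix with prescribed singular values, and the noiseless measurement are hypothetical choices for illustration.

```python
import numpy as np

def chebyshev_factors(lam_min, lam_max, T):
    k = np.arange(T)
    lam_plus, lam_minus = (lam_max + lam_min) / 2, (lam_max - lam_min) / 2
    return 1.0 / (lam_plus + lam_minus * np.cos((2 * k + 1) * np.pi / (2 * T)))

def inertial_landweber(A, y, omega, factors, num_iters):
    """x <- x + w_k * omega * A^H (y - A x), cycling through `factors`."""
    x = np.zeros(A.shape[1], dtype=np.result_type(A, y))
    for k in range(num_iters):
        x = x + factors[k % len(factors)] * omega * (A.conj().T @ (y - A @ x))
    return x

# Hypothetical test problem: 8x6 real matrix with singular values in [0.2, 1].
rng = np.random.default_rng(0)
U, _ = np.linalg.qr(rng.standard_normal((8, 6)))
V, _ = np.linalg.qr(rng.standard_normal((6, 6)))
A = U @ np.diag(np.linspace(0.2, 1.0, 6)) @ V.T
x_true = rng.standard_normal(6)
y = A @ x_true                                   # noiseless measurement

eigs = np.linalg.eigvalsh(A.T @ A)
omega = 1.0 / eigs[-1]                           # inverse of the largest eigenvalue
lam_min, lam_max = omega * eigs[0], omega * eigs[-1]  # spectrum of omega * A^H A

T, num_iters = 8, 64
plain = inertial_landweber(A, y, omega, np.ones(1), num_iters)  # factor 1: plain Landweber
cheb = inertial_landweber(A, y, omega, chebyshev_factors(lam_min, lam_max, T), num_iters)
err_plain = np.linalg.norm(plain - x_true)
err_cheb = np.linalg.norm(cheb - x_true)

# Residual bound after num_iters / T full periods, starting from x = 0.
ratio = (lam_max + lam_min) / (lam_max - lam_min)
bound = (1.0 / np.cosh(T * np.arccosh(ratio))) ** (num_iters // T)
print(err_plain, err_cheb, bound * np.linalg.norm(x_true))
```

Setting all inertial factors to 1 recovers the plain Landweber iteration, which makes the two runs directly comparable.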

IV Experiments

IV-A Deconvolution by Landweber iteration

In this subsection, deconvolution by the Landweber iteration over the Hilbert space over is studied. Suppose that are measurable functions on . The convolution of and are defined as

| (21) |

which is a compact operator on [6]. We thus can write where is a compact operator defined by (21) and . In the context of an inverse problem regarding a linear system involving a convolution, the function can be considered as a source signal and represents a point spread function (PSF) or impulse response. In the context of a partial differential equation, represents the Green’s function or the integral kernel corresponding to a given partial differential equation.

We further assume that is an even function satisfying . This implies that the operator is a compact self-adjoint operator. In the following, we try to recover the source signal from the blurred signal by using the Landweber iteration on given by where the initial condition is Note that holds due to the assumption on .

In the experiments below, the underlying closed interval is discretized into 16384 bins, and the convolution integral (21) is approximated by a cyclic convolution computed with 16384-point FFTs and frequency-domain products. Figure 1 presents the source signal , the convolutional kernel , and the convolved signal .
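The discretization described above can be sketched as follows. This is only an illustration under stated assumptions: the interval $[-1, 1)$, the Gaussian kernel, the two-bump source signal, the smaller grid of 1024 bins, and the step size are hypothetical and are not the settings of the reported experiment. It runs the plain Landweber iteration with an even, real kernel, for which the convolution operator is self-adjoint.

```python
import numpy as np

N = 1024                                        # bins (the text uses 16384)
x = np.linspace(-1.0, 1.0, N, endpoint=False)   # hypothetical interval [-1, 1)
dx = x[1] - x[0]

# Hypothetical even kernel (Gaussian PSF), normalized to unit integral.
g = np.exp(-x**2 / (2 * 0.05**2))
g /= g.sum() * dx
# Hypothetical smooth source signal.
f = np.exp(-(x - 0.3)**2 / (2 * 0.1**2)) + 0.5 * np.exp(-(x + 0.4)**2 / (2 * 0.15**2))

# Transfer function of the cyclic convolution; it is real because g is even.
G = (np.fft.fft(np.fft.ifftshift(g)) * dx).real

def apply_A(h):
    """Approximate the convolution integral by a cyclic convolution via FFT."""
    return np.fft.ifft(G * np.fft.fft(h)).real

y = apply_A(f)                                  # blurred signal
omega = 1.0 / np.max(G**2)                      # inverse of the largest eigenvalue of A*A
est = np.zeros(N)
for _ in range(200):
    # The adjoint equals A itself here because the transfer function is real.
    est = est + omega * apply_A(y - apply_A(est))

rel_err = np.linalg.norm(est - f) / np.linalg.norm(f)
print(rel_err)
```

Frequency components where the transfer function is close to zero converge very slowly, which is the ill-conditioning that motivates acceleration.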

Figure 2 presents a deconvolution process by the original Landweber algorithm (upper) and the Chebyshev inertial Landweber algorithm (lower). The tentative results for every 30 iterations are shown. In both cases, we can observe that gradually approaches the source signal . This is because is a contractive mapping under the setting . Comparing the two figures, we can see that the Chebyshev inertial Landweber algorithm shows much faster convergence to the source signal . This observation is also supported by the error curves depicted in Fig. 3. The Chebyshev inertial Landweber algorithm shows faster convergence compared with the original Landweber iteration. For example, the error is achieved with with the original Landweber iteration but the Chebyshev iteration requires only iterations.

IV-B Finite-dimensional least square problem

In this subsection, we will discuss a least-squares problem closely related to MIMO detection problems. We here assume the finite-dimensional Hilbert space $\mathbb{C}^n$.

Let $A \in \mathbb{C}^{n \times n}$ be a complex matrix whose elements follow the standard complex Gaussian distribution $\mathcal{CN}(0, 1)$. Our task is to recover a source signal $x \in \mathbb{C}^n$ from a noisy linear measurement $y = Ax + w$, where $w \in \mathbb{C}^n$ is a complex Gaussian noise vector. The problem can be seen as a MIMO detection problem where the numbers of transmit and receive antennas are both $n$. The least-squares problem is equivalent to the maximum likelihood estimation rule for the above setting.

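In this case, the Landweber iteration is exactly the same as a gradient descent process

$$x^{(k+1)} = x^{(k)} + \omega A^{H}\left( y - A x^{(k)} \right) \tag{22}$$

for minimizing the objective function. It is known that the asymptotic convergence rate is optimal if $\omega = \omega_{\mathrm{opt}} := 2 / \left( \lambda_{\min}(A^{H}A) + \lambda_{\max}(A^{H}A) \right)$ holds. In this case, the matrix $B = \omega_{\mathrm{opt}} A^{H} A$ becomes a Hermitian matrix and thus $B$ is a compact self-adjoint operator on $\mathbb{C}^n$. This means that we can apply the Chebyshev inertial iteration to the Landweber iteration.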

The details of the experiment are summarized as follows. The dimension is set to . Each element of is chosen from 8-PSK constellation uniformly at random. The optimal factor is employed in the Landweber iteration. Each element of the noise vector follows where . The minimum and maximum eigenvalues of are used as and for determining the Chebyshev inertial factors.

Figure 4 summarizes the comparison of the squared error norms between the original Landweber iteration and the accelerated iterations. From Fig. 4 (left), we can immediately recognize the zigzag-shaped error curves of the Chebyshev inertial Landweber algorithm. This is because the error norm is exponentially upper bounded only when the iteration index is a multiple of $T$, as shown in Theorem 2. This means that the lower envelope of the zigzag curves can be seen as the guaranteed performance of the Chebyshev inertial Landweber algorithm. Figure 4 (right) indicates the squared errors up to . The proposed schemes (Chebyshev ) show much faster convergence compared with the original Landweber iteration.

The speed of convergence of the Landweber iteration is dominated by the spectral radius On the other hand, indicates an upper bound of the normalized asymptotic convergence rate depending on the period $T$. Figure 5 (left) presents both and as functions of $T$. As $T$ becomes larger, the value of becomes strictly smaller than . This means that the Chebyshev inertial iteration certainly accelerates the asymptotic convergence rate. Figure 5 (right) shows approximate error norms derived from and which are given by . Although these values do not include the initial errors , these curves qualitatively explain the behavior of the actual error curves in Fig. 4 (right). These results support the usefulness of the theoretical analysis discussed in the previous section.
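The comparison of rates as a function of the period can be sketched numerically. The sketch assumes that the plain Landweber iteration with the optimal factor has spectral radius $(\lambda_{\max} - \lambda_{\min})/(\lambda_{\max} + \lambda_{\min})$ and that the normalized rate of the Chebyshev inertial iteration is the $T$-th root of $\mathrm{sech}(T\cosh^{-1}((\lambda_{\max}+\lambda_{\min})/(\lambda_{\max}-\lambda_{\min})))$. This reading of "normalized", the function names, and the eigenvalue range are assumptions made for this illustration.

```python
import numpy as np

def plain_rate(lam_min, lam_max):
    """Spectral radius of I - omega_opt * G with omega_opt = 2 / (lam_min + lam_max)."""
    return (lam_max - lam_min) / (lam_max + lam_min)

def chebyshev_rate(lam_min, lam_max, T):
    """Per-iteration rate: T-th root of the bound over one period of length T."""
    c = (lam_max + lam_min) / (lam_max - lam_min)
    return (1.0 / np.cosh(T * np.arccosh(c))) ** (1.0 / T)

lam_min, lam_max = 0.05, 1.95      # hypothetical eigenvalue range
periods = np.arange(1, 17)
rates = np.array([chebyshev_rate(lam_min, lam_max, T) for T in periods])
rho = plain_rate(lam_min, lam_max)

for T, r in zip(periods, rates):
    print(T, round(float(r), 4), round(float(rho), 4))
```

Under these assumptions the two rates coincide at $T = 1$, and the Chebyshev rate decreases as $T$ grows.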

IV-C Projected Landweber-based MIMO detection

It is natural to consider the projected Landweber algorithm [5] for making use of the prior information of the source signal to improve the reconstruction performance. We here examine the detection performance of a simple projected Landweber-based MIMO detection algorithm.

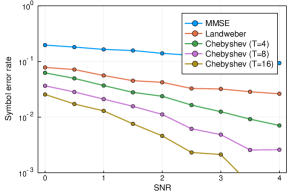

The soft projection operator used here is defined by

| (23) |

where is a signal constellation [7]. The iteration of the projected Landweber algorithm is fairly simple: the soft projection operator is applied element-wise to the output in (22), and the result is then passed to the inertial iteration process. The details of the experiments are as follows. The channel model is exactly the same as that used in the previous subsection ( MIMO channel with 8-PSK input). The minimum and maximum eigenvalues and are determined according to the Marchenko-Pastur law. Figure 6 presents the symbol error rate (SER) of the proposed scheme. The projected Landweber detectors achieve an SER one to two orders of magnitude smaller than that of the MMSE detector. The accelerated schemes (Chebyshev ) provide steeper error curves than that of the projected Landweber detector without Chebyshev inertial iteration (denoted by “Landweber”). This observation supports the potential of the Chebyshev inertial iteration.

Acknowledgement

This work was supported by JSPS Grant-in-Aid for Scientific Research (B) Grant Number 19H02138.

References

- [1] L. Landweber, “An iteration formula for Fredholm integral equations of the first kind,” American Journal of Mathematics, vol. 73, no. 3, pp. 615–624, 1951.

- [2] F. Vankawala and A. Ganatra, “Modified Landweber algorithm for deblur and denoise images,” International Journal of Soft Computing and Engineering (IJSCE), vol. 5, no. 6, pp. 79–82, 2016.

- [3] W. Zhang, X. Bao, and J. Dai, “Low-complexity detection based on Landweber method in the uplink of massive MIMO systems,” in Yang et al. Boundary Value Problems, 2017.

- [4] F. Yang, Y.-P. Ren, X.-X. Li, and D. G. Li, “Landweber iterative method for identifying a space-dependent source for the time-fractional diffusion equation,” Boundary Value Problems, Springer, 2017.

- [5] P. L. Combettes and J. C. Pesquet, “Proximal splitting methods in signal processing,” in Fixed-Point Algorithms for Inverse Problems in Science and Engineering, Springer-Verlag, pp. 185–212, 2011.

- [6] J. Muscat, “Functional analysis,” Springer, 2014.

- [7] S. Takabe, T. Wadayama, and Y. C. Eldar, “Complex trainable ISTA for linear and nonlinear inverse problems,” arXiv:1904.07409v2, 2019.

- [8] S. Takabe and T. Wadayama, “Theoretical interpretation of learned step size in deep-unfolded gradient descent,” arXiv:2001.05142, 2020.

- [9] T. Wadayama and S. Takabe, “Chebyshev inertial iteration for accelerating fixed-point iterations,” arXiv:2001.03280, 2020.