Chat2Layout: Interactive 3D Furniture Layout with a Multimodal LLM

Abstract

Automatic furniture layout is long desired for convenient interior design. Leveraging the remarkable visual reasoning capabilities of multimodal large language models (MLLMs), recent methods address layout generation in a static manner, lacking the feedback-driven refinement essential for interactive user engagement. We introduce Chat2Layout, a novel interactive furniture layout generation system that extends the functionality of MLLMs into the realm of interactive layout design. To achieve this, we establish a unified vision-question paradigm for in-context learning, enabling seamless communication with MLLMs to steer their behavior without altering model weights. Within this framework, we present a novel training-free visual prompting mechanism. This involves a visual-text prompting technique that assist MLLMs in reasoning about plausible layout plans, followed by an Offline-to-Online search (O2O-Search) method, which automatically identifies the minimal set of informative references to provide exemplars for visual-text prompting. By employing an agent system with MLLMs as the core controller, we enable bidirectional interaction. The agent not only comprehends the 3D environment and user requirements through linguistic and visual perception but also plans tasks and reasons about actions to generate and arrange furniture within the virtual space. Furthermore, the agent iteratively updates based on visual feedback from execution results. Experimental results demonstrate that our approach facilitates language-interactive generation and arrangement for diverse and complex 3D furniture.

Introduction

3D furniture layout generation plays a crucial role in various applications such as interior design, game development, and virtual reality. Traditional methods for furniture layout planning often formulate the task as a constrained optimization problem (Qi et al. 2018; Ma et al. 2016; Chang et al. 2015; Chang, Savva, and Manning 2014; Yeh et al. 2012; Fisher et al. 2012; Luo et al. 2020; Wang et al. 2019; Ma et al. 2018; Fu et al. 2017). However, these approaches typically require rules for scene formation and graph annotations, necessitating the expertise of professional artists. This makes these methods less accessible to non-professionals and inflexible to dynamic environments where user preferences and spatial constraints may vary.

Recent learning-based approaches address these limitations by employing neural networks (Zhang et al. 2020; Wang et al. 2018; Ritchie, Wang, and Lin 2019; Zhou, While, and Kalogerakis 2019; Li et al. 2019; Dhamo et al. 2021; Paschalidou et al. 2021; Wang, Yeshwanth, and Nießner 2021; Zhai et al. 2024; Tang et al. 2023a; Lin and Yadong 2023), to automate the object selection and placement. Learning from large datasets, these generative methods can produce diverse plausible layouts (Deitke et al. 2023; Fu et al. 2021b, a). However, despite the promise, they face difficulties in accommodating to objects that are not presented in the training set, limiting their adaptability and versatility.

The rapid advancement of large language models (LLMs) has opened up new possibilities for enhancing user interactions in furniture layout generation. Researchers leverage LLMs to reason about layout plans based on text descriptions of furniture items, including their positions and dimensions (Wen et al. 2023; Feng et al. 2024). These approaches enable users to express their desired living spaces in natural language, eliminating the need for extensive training on large datasets. However, these methods suffer from notable limitations. While some of these methods (Wen et al. 2023) utilize visual information to refine placement, they still primarily rely on textual input without fully incorporating visual perception. As a result, they often produce plausible yet impractical layouts that fail to align with user expectations or spatial constraints. Furthermore, the absence of agent memory and feedback mechanisms prevents multi-turn conversations, hindering users’ ability to interact with the process to iteratively refine the generated layout plan.

Recent developments have highlighted two significant trends in the field: First, Multimodal LLMs (MLLMs) (OpenAI 2023; Zhu et al. 2023; Li et al. 2024a; Chu et al. 2024; Zhan et al. 2024; Li et al. 2024b; Dong et al. 2024; Chen et al. 2024) have attracted huge attention for their impressive capabilities in various vision-language tasks by introducing visual perceptions into LLMs. Second, LLM agents (Belzner, Gabor, and Wirsing 2023; Zhao et al. 2024; Wu et al. 2023; Chan et al. 2023; Deng et al. 2024) emerge as powerful problem-solvers for handling complex tasks through feedback mechanisms and iterative refinement. Inspired by these advancements, we aim to develop a MLLM agent specifically designed for generating furniture layouts.

In this paper, we introduce Chat2Layout, a language-interactive method for generating furniture layouts that employs a MLLM as its agent. To begin, we establish a unified vision-question paradigm for in-context learning, which standardizes communication with the MLLM to guide its reasoning utilizing both textual and visual information without the need to update model weights. Within this framework, we present a novel training-free visual prompting mechanism composed of two key components: 1) We develop a visual-text prompting technique that assists the MLLM in tackling specific layout tasks; 2) We propose an Offline-to-Online search (O2O-Search) method to automatically identify the minimal support set from an example database, facilitating efficient in-context learning from a limited number of references.

Building upon these techniques, we develop a MLLM agent system that automatically perceives 3D indoor environments through both linguistic and visual modalities. The agent is capable of understanding user requirements, planning tasks, reasoning about the necessary actions to generate and arrange furniture within a virtual environment, and learning from the visual feedback of its executed results. By leveraging our sophisticated unified vision-question paradigm, which incorporates both a visual-text prompting technique and an O2O-Search method, in conjunction with the MLLM agent system, our Chat2Layout enables the execution of diverse, complex, and language-interactive 3D furniture generation and placement. This enables new user experiences that are previously unsupported, setting a new milestone in the field of furniture layout generation.

Our main contributions can be summarized as follows:

-

•

A MLLM agent system that enables language-interactive 3D furniture generation and layout. This system supports a multi-turn conversations, allowing users to interact dynamically with the 3D environment and iteratively refine the layouts.

-

•

A unified vision-question paradigm for MLLM to effectively respond to a wide range of tasks. This paradigm incorporates a visual-text prompting technique that facilitates grid-based placement for layout generation, and a O2O-Search method that significantly boosts MLLM’s reasoning capabilities in generating furniture plans.

-

•

Comprehensive support for various furniture layout applications, including layout completion, rearrangement, open-set placement, and multi-conventional interaction, as shown in Figure 1.

Related Work

Furniture Layout Generation. Traditional optimization-based layout generation (Qi et al. 2018; Ma et al. 2016; Chang et al. 2015; Chang, Savva, and Manning 2014; Yeh et al. 2012; Fisher et al. 2012; Luo et al. 2020; Wang et al. 2019; Ma et al. 2018; Fu et al. 2017) relies on prior knowledge of reasonable configurations, such as procedural modeling or pre-defined scene graphs. Such priors require professional expertise, and are less flexible in dynamic environments.

Generative methods address these challenges with CNN (Zhang et al. 2020; Wang et al. 2018; Ritchie, Wang, and Lin 2019; Zhou, While, and Kalogerakis 2019), VAE (Li et al. 2019), GCN (Dhamo et al. 2021), transformer (Paschalidou et al. 2021; Wang, Yeshwanth, and Nießner 2021), or diffusion (Zhai et al. 2024; Tang et al. 2023a; Lin and Yadong 2023) architectures trained on large-scale datasets (Deitke et al. 2023; Fu et al. 2021b, a). InstructScene (Lin and Yadong 2023) handles concrete layout instructions by training a generative model on a scene-instruction dataset, incorporating a semantic graph prior and a diffusion decoder. However, these methods struggle with placing objects not included in the training data. In contrast, Chat2Layout supports open-set furniture placement with required furniture pieces automatically generated.

LLMs offer a new sight of text-based layout reasoning, bypassing the dataset limitations. LayoutGPT (Feng et al. 2024) enables LLMs to deliberate over layout plans with bounding boxes and orientation of furniture items. AnyHome (Wen et al. 2023) enhances LayoutGPT with manually defined placement rules via text instructions. While AnyHome utilizes visual information, it primarily relies on textual input for placement and only uses visual cues for minor adjustments. Without proper visual understanding, these methods can produce plausible but impractical results. Additionally, the absence of agent memory and feedback mechanisms prevents multi-turn conversations, hindering users from interacting with the process to iteratively refine the generated layout plan.

LLMs as Agents. With their potential to be powerful general problem solvers, LLMs have been used as the core controller for agents (Belzner, Gabor, and Wirsing 2023; Zhao et al. 2024; Wu et al. 2023; Chan et al. 2023; Deng et al. 2024; Gravitas 2023; Osika 2024; Nakajima 2024). Recently, MLLMs (OpenAI 2023; Zhu et al. 2023; Li et al. 2024a; Chu et al. 2024; Zhan et al. 2024; Li et al. 2024b; Dong et al. 2024; Chen et al. 2024) demonstrate even more impressive performance by incorporating visual perception. Yet, MLLMs as agents has not been fully explored. We are the first to design such an agent system for furniture layout generation, facilitating continuous layout arrangements.

In-context Learning. ICL integrates task demonstrations into prompts to enhance the reasoning ability of LLMs, such as Chain-of-Thought (CoT) (Wang et al. 2022b) and automatic prompt design (Zhang et al. 2022; Shin et al. 2020; Zhou et al. 2022; Lester, Al-Rfou, and Constant 2021). Recently, visual prompting has been used in vision tasks, such as overlaying masks with a red circle (Shtedritski, Rupprecht, and Vedaldi 2023), highlighting regions (Yang et al. 2024), using multiple circles with arrows (Yang et al. 2023b), and labeling objects with alphabet numbers (Yang et al. 2023a). Other techniques select representative exemplars from a candidate set for prompting. These reference exemplars are defined as support set. Offline methods calculate pairwise similarities among the dataset for selecting the top- exemplars (Su et al. 2022), while online methods calculate the similarity between the test prompt and candidates to select exemplars (Alayrac et al. 2022; Yang et al. 2022; Feng et al. 2024). These methods have not been fully explored for visual tasks with MLLMs like ours.

Text-to-3D Generation. For layout visualization, current methods often pre-select or retrieve furniture objects from a 3D dataset, limiting the ability to meet diverse user expectations. Instead, our method uses text-to-3D generation to create furniture items. Text-to-3D generations (MeshyAI 2023; LumaAI 2023; SudoAI 2023) create 3D content like mesh (Ma et al. 2023; Mohammad Khalid et al. 2022), neural radiance field (Wang et al. 2023; Lin et al. 2023; Raj et al. 2023; Wang et al. 2024; Hong et al. 2024), or gaussian splatting (Tang et al. 2024; Yi et al. 2023; Tang et al. 2023b) from text prompts in an optimization (Poole et al. 2022; Haque et al. 2023) or generative manner (Wang et al. 2022a; Metzer et al. 2023). Compared to generative methods, recent optimization-based approaches offer broader diversity with fast-optimization processes (Tang et al. 2024; Li et al. 2023). In this work, we adopt Tripo3D (TripoAI 2024), which is capable of producing high-quality 3D furniture swiftly.

Overview of Our Agent

Classical Agent System

An agent system typically comprises four main components (Xi et al. 2023): Environment: The context within which the agent operates, such as web engines or mobile apps, defining the state space of the agent. The agent’s actions can modify the environment, thereby influencing its own decision-making processes. Perception: The agent’s sensory component, collecting information from the environment through various modalities like vision and audio. The perceived input is transformed into neural signals and transmitted to the brain for further processing. Brain: The central processing unit of the agent, responsible for storing knowledge and memories, as well as performing essential functions such as information processing and decision-making. It enables the agent to reason and plan, handle unforeseen tasks, and exhibit intelligent behaviors. Action: The component that receives and executes action sequences from the brain, once the decisions are made, allowing the agent to interact with the environment.

MLLM-Based Layout Agent

Chat2Layout draws inspiration from existing research on LLMs as agents, but it distinguishes itself by uniquely integrating an additional visual modality. To create such an agent system specialized for interactive layout generation, we implement specific modifications to the agent framework (Figure 2): Environment: Our environment includes a user and an observer. The user provides text-based requirements, while the observer monitors the 3D user interface to summarize visual elements and furniture attributes like positions and dimensions. The observer also engages in self-reflection, prompting the agent to review initial layouts and request corrections. Perception: The agent perceives both textual and visual information from the environment, encompassing user requirements, furniture attributes, and visual room scenes. Brain: Based on the perceived information, the agent reasons about the necessary 3D actions for layout plan generation. Action: Once the 3D action list is determined, these actions are executed within the 3D visualization engine, bringing the layout plan to life.

Brain

Our core techniques are centered around the Brain module, the central decision-making component of our agent. Its input includes a specific user task and scene information perceived by the Perception module, while its output is a sequence of 3D actions that can be executed by the Action module. Every interaction with the MLLM adheres to our vision-question paradigm. The Brain first decomposes each task into a series of atomic tasks. Then, for each atomic task, it performs visual-text prompting to formulate prompts incorporating references generated by O2O Search for engaging with the MLLM. Subsequently, it receives 3D actions, which are then passed on to the Action module for execution.

We give definitions of the most important terms of Brain below, and will describe its key technical components in following sections.

Task. A task is defined by user requirements described in text. Chat2Layout supports open-vocabulary and unrestricted user descriptions, whether abstract or concrete, complex or simple, mixed or focused. This flexibility leverages the commonsense knowledge inherent in LLMs to guide and control the design generation process.

Atomic Task. An atomic task is the most basic unit of a task that cannot be further decomposed. We identify three specific types of atomic tasks: Add<text>, Remove<text>, and Placement(translate, scale, rotate)<text>.

3D Action. We define five fundamental actions within the 3D engine to manipulate objects:

-

•

‘Add<objkey, text>’: Invokes the text-to-3D API ‘Text-2-3D<text>’ to generate a textured mesh from a text description, then assigns an object key ‘objkey’ to this mesh.

-

•

Remove<objkey>: Removes the object identified by ‘objkey’ from the scene.

-

•

Translate<objkey, (x,y,z)>: Moves an object to a new position specified by the coordinates .

-

•

Scale<objkey, (x,y,z)> - Adjusts the size of an object according to the scale factors.

-

•

Rotate<objkey, angle> - Rotates an object around its center by a specified angle.

Vision-Question Paradigm

We observe a similar prompt pattern in each interaction with the MLLM. Therefore, we adopt a unified vision-question paradigm as the template for every interaction, as depicted in Figure 2.

In this paradigm, we first assign the MLLM a Role such as “You are an expert in 3D interior design”, which has proven crucial in enhancing the LLMs’s performance. For specific tasks involving mathematical reasoning, such as object scale prediction and 3D placement, we add addition constraints as “You are an expert in 3D interior design with a strong math background”.

We incorporate Environment information, includes textual descriptions like object names (represented by ‘objkey’), positions, and dimensions of visualized objects, along with multi-view visual captures of the user interface.

We introduce a visual-text prompting method that formulating each specific Question with visual and textual information to aid the MLLM in reasoning about plausible layout plans.

Additionally, to improve MLLM’s accuracy through in-context learning (ICL) from a few contextual examples, we propose an O2O-Search method for selecting the support set as Reference. This approach automatically identifies the minimal informative support set required for effective in-context learning.

By combining visual and textual information, our unified vision-question paradigm enables more accurate interpretations and responses for each Task, as visual cues help disambiguate textual input, leading to improved decision-making.

Exemplar prompts following our vision-question paradigm can be found in our supplementary material.

Task Decomposition

Given a user task, before applying visual-text prompting, we need to first decompose it into a sequence of atomic tasks. Figure 3 illustrates the vision-question paradigm for task decomposition, including the assigned role and the support set provided by O2O-Search. The support set is presented in JSON format, suitable for representing structured data. Note that, as a special case of vision-question paradigm, environment and visual-text prompting are not included in task decomposition as it is a text-only problem. For other interactions discussed in Section Visual-Text Prompting, we adhere to the complete vision-question paradigm.

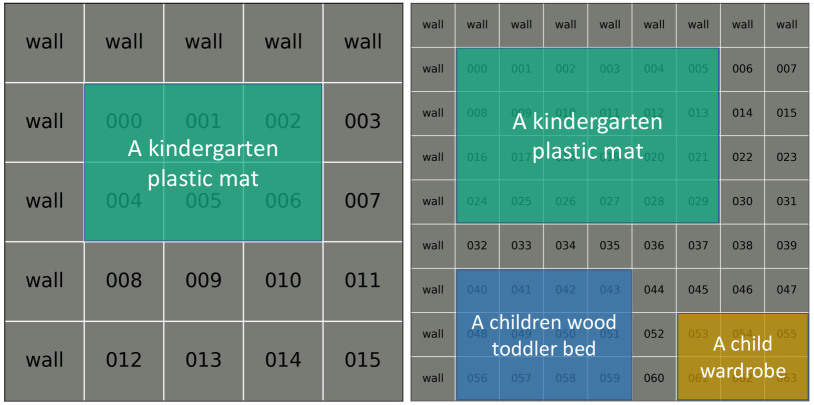

| (a) Local by local placement |

| (b) Scale-adaptive grid placement |

Visual-Text Prompting

Object Initialization

We generate furniture items using ‘Text-2-3D<text>’, which come with arbitrary poses and sizes, which are not ideal for placement. Therefore, before incorporating them into a layout, we initialize them with properly aligned poses and scales using visual-text prompting. In these paradigms, we provide environment information, including visual captures of the scene and object bounding boxes, along with references from our O2O-Search.

Pose Alignment. First, we calculate the Axis-Aligned Bounding Box (AABB) and the Optimal/Oriented Bounding Box (OBB) (Fabri and Pion 2009). Then, we align each object’s pose by rotating it from its OBB to match its AABB.

Scale Prediction. We formulate a prompt to predict the appropriate scaling factor for each object. After adjusting the furniture items to their correct sizes, we compile the set of furniture items as .

Object Placement

The generation of a layout can be defined as follows: given a set of furniture items , where each item is characterized by its identifier and bounding box , the goal is to determine a placement for all items. Here, and denote the position and orientation of the item , respectively. Unlike previous works that primarily focus on placing objects on the floor, our approach allows for arbitrary placement on or under other objects, or on the wall. We categorize into three placement types, , , and , through interaction with the MLLM agent, following the vision-question paradigm. Instead of directly predicting the layout plan in a single interaction like LayoutGPT (Feng et al. 2024) and AnyHome (Wen et al. 2023), we employ Chain-of-Thought (CoT) prompting to systematically reasons through the plan step by step, which leads to a more precise process.

Floor Placement. We first arrange items in using the Grid Coverage Algorithm in Figure 4. We overlay a grid on the floor, where each cell is identified by a unique alphanumeric ID. Assuming that walls are along the top and left edges of the floor, we label wall-adjacent cells as ‘wall’. We then ask the agent to specify the grid cells that each furniture item in should cover. Since directly assigning cells for a large set of items can be challenging, we propose a local by local placement method (Figure 4-(a)), which stages the larger items before smaller ones, leading to simplified tasks and improved performance. For very large objects that can cause inaccurate coverage predictions, we propose a scale-adaptive grid placement method, which initially place them on a sparse grid to minimize the number of covered cells (Figure 4-(b)-left) and then project them onto a denser grid for further placement of additional items (Figure 4-(b)-right).

3D Placement. After placing , we apply the same grid coverage strategy to by similarly overlaying a grid on the wall. In addition, for all items in that are adjacent to the wall, we project them to the wall grid before placing to avoid collision between these two types of items. We then apply the same strategy to place , such as putting a laptop on top of a table. In this way, our layout generation method is highly versatile and adaptable to irregular floor plans, with or without walls.

Orientation Prediction. After placing all furniture items, we further correct their orientations. The aforementioned pose alignment technique ensures that the frontal face of each item aligns with one of four views: left, right, front, or back. The agent only needs to identify the frontal face from the four candidate views (Figure 5). After that, we mark the orientation of the frontal face on the grid with a red arrow (Figure 4). Finally, another chat session is then used to determine the rotation angle for the final face orientation.

Self-Reflection

Self-reflection empowers agents to iteratively improve by refining decisions and correcting past errors (Yao et al. 2023; Shinn et al. 2024). To this end, we incorporate a visual self-reflection mechanism into our agent system. After all furniture items are placed, we start a continuous chat by asking whether the result meets user requirements, and is reasonable and realistic, allowing the agent to visually inspect the arrangement within the 3D interface. If the placement does not satisfy user requirements or violates realistic and rational constraints, the agent is prompted to adjust the layout accordingly. Furthermore, we integrate surface collision detection during object placement. This is particularly important for irregularly shaped objects, as it helps prevent collisions or floating objects.

Offline-to-Online Reference Search

To provide references for the visual-question paradigm, we propose an Offline-to-Online Search method (O2O-Search) to identify the minimal informative support set.

Given a database containing examples , where each input set consists of a user text prompt , an indoor scene text description , and visual captures of the scene , and represents the corresponding output result, all in JSON format. Our O2O-Search method aims to automatically identify the minimal informative support set within this dataset.

Offline Grouping. We observe that each example can be categorized based on its characteristics. To achieve this, we assign a category identifier to each example in , effectively dividing it into distinct groups. Within each group, we measure the similarity between any two examples using the following criteria:

| (1) |

where is the CLIP cosine similarity (Radford et al. 2021) and is the DINOv2 cosine similarity (Oquab et al. 2023). The information entropy of an example is defined as:

| (2) |

We calculate the information entropy for each group and select the top- demonstrations with the highest information entropy values within each group.

Online Retrieval. Given a test input during user interaction, we ask the agent to assign a category identifier. We then select the group corresponding to this identifier as the final supporting set, providing the most relevant reference examples for the current task.

Experiments

In this section, we detail the evaluation setup, present ablation studies and comparisons to baselines, and showcase diverse results generated by out method. For further implementation details and additional evaluations, please refer to our supplementary materials.

Evaluation Setup

To evaluate the quality of our layout generation, we first collected a set of various real scenes online and wrote corresponding text descriptions for each. We then generated the dataset by producing 10 layout plans for each scene, similar to cases 1, 2, and 7 in Figure 7. We then conduct a comparative analysis using the following metrics:

Out of Bound Rate (OOB). This measures the percentage of layout plans where objects extend beyond the room’s boundaries or intersect with other objects, indicating the quality of spatial arrangement. We report OOB at the layout plan level, checking for the presence of these issues with each plan, following LayoutGPT and AnyHome.

Orientation Correctness (ORI). This evaluates the correctness of object orientations within the layout context. Correct orientation is defined as the object being easily accessible and usable by users in the room. For example, a wardrobe adjacent to but facing a wall is considered incorrectly oriented. We manually check each layout plan and report the percentage of layout plans where furniture has no unreasonable face orientations.

CLIP Similarity (CLIP-Sim). This assesses the alignment between the user’s text description and the scene content by calculating the text-to-image CLIP similarity, using multi-view renderings of the 3D layout scene. Random viewpoint perturbations are incorporated to enhance the robustness of our evaluations.

Ablation Studies

| OOB | ORI | CLIP-Sim | |

| InstructScene | 38.8 | 73.3 | 13.1 |

| LayoutGPT | 52.8 | 61.3 | 18.4 |

| AnyHome | 32.8 | 72.3 | 23.2 |

| Ours-Visual | 36.8 | 63.8 | 20.1 |

| Ours-w/o VTP | 37.5 | 62.0 | 19.6 |

| Ours-w/o O2O-Search | 31.8 | 75.3 | 21.9 |

| Ours-w/o SR | 24.8 | 81.0 | 24.7 |

| Ours | 21.0 | 84.8 | 27.1 |

| Ours | AnyHome | LayoutGPT | InstructScene |

Visual-Text Prompting. In Table 1, we evaluate the effectiveness of visual-text prompting. Results demonstrate that visual-text prompting significantly enhances layout generation compared to the baseline of Ours-w/o-VTP, which relies solely on text descriptions. This is evidenced by a lower OBB, better ORI, and higher CLIP-Sim scores.

We also observe a marginal improvement in the baseline of Ours-Visual, which directly integrates visual captures of the environment without visual-text prompting, compared to Ours-w/o-VTP. This suggests that while visual information is beneficial, its inclusion alone without structural integration does not lead to substantial performance gains. In contrast, our visual-text prompting technique enables more effective utilization of visual information, contributing to better overall performance.

Furthermore, our full method incorporating the self-reflection mechanism further enhances layout generation performance compared to the baseline of Ours-w/o-SR by providing feedback to verify and refine results.

O2O-Search. We also conduct an ablation study to assess the effectiveness of our O2O-Search method. We randomly select of the questions from our example dataset to form the test set. Our method is compared against two baselines: 1) An offline-only method that calculates pairwise similarity across the entire dataset and selects the top- exemplars without grouping (Su et al. 2022); and 2) An online-only method that computes similarity between the test prompt and all candidates in the dataset to select supporting references (Alayrac et al. 2022; Yang et al. 2022; Feng et al. 2024).

We present the accuracy across various sizes of the support set in Figure 6. When , meaning no support set, the accuracy remains significantly low, underscoring the important role that support references play. As we increase the value of , there is a general trend of improved accuracy for all baselines;. However, this improvement begin to consistently plateau around , suggesting diminishing returns on accuracy gains for larger . This indicates that there is an optimal range of that maximizes accuracy without incurring too much computational cost due to excessive size of references. Thus, we use in our method. Importantly, our O2O-Search method consistently outperforms both the offline and online baselines in accuracy across all values of , demonstrating its superior scalability and effectiveness in utilizing larger support sets to boost performance.

We also observe a substantial degradation in OBB, ORI, and CLIP-Sim metrics when O2O-Search is removed, as shown in Table 1. This further highlights the curcial role that our O2O-Search plays in enhancing the overall performance of our system.

Comparisons

We compare Chat2Layout with three existing methods: InstructScene (Lin and Yadong 2023), LayoutGPT (Feng et al. 2024), and AnyHome (Feng et al. 2024), with AnyHome being a concurrent work. For a fair comparison, we use the same furniture set generated by our model, with identical scale values and initial orientations, for layoutGPT and AnyHome. Moreover, for InstructScene, we provide a detailed description of our generated layout plan to obtain their results. It should be noted that in our method, the furniture is generated using a text-to-3D API, while layoutGPT and AnyHome require searching a large dataset. But here We use the same set generated by ours for a fair layout quality comparison.

Quantitative Comparison. Table 1 provides the quantitative comparison. InstructScene demonstrates weaker performance in terms of ORI and CLIP-Sim compared to all LLM-based methods. LayoutGPT, while solely leveraging an online-only support set without visual input, struggles to produce satisfactory results. AnyHome attempts to address the issues of LayoutGPT by incorporating a set of manually defined placement rules in texts. However, it only uses visual information for furniture position and pose refinement through SDS optimization, limiting its overall effectiveness. In contrast, Chat2Layout achieve the best results on all metrics, thanks to the integration of visual input and a more advanced support set search method.

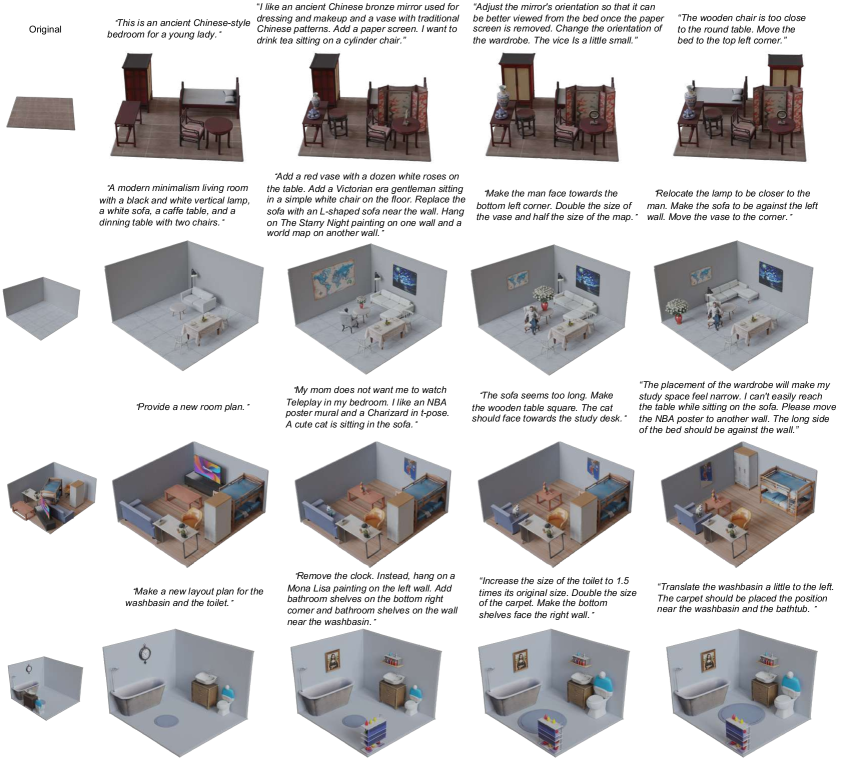

Qualitative Comparison. Figure 10 presents the qualitative comparison. Compared to our method, other approaches are more susceptible to issues like out-of-bound placements, incorrect orientations, and poor content alignments. Specifically, InstructScene is limited to concrete text instructions detailing spatial relations and may generate non-existent objects due to text-to-graph conversion errors. Both InstructScene and LayoutGPT lack support for wall placements such as paintings. AnyHome, while outperforming the other two, relies on pre-defined human-knowledge rules, leading to illogical placements, such as neglecting common-sense spacing between objects. In addition, all three methods are prone to boundary and orientation issues, impacting usability and aesthetics. In contrast, Chat2Layout demonstrates superior performance by fully exploiting visual information. Moreover, Chat2Layout supports multi-turn conventional interactions, as demonstrated in Figure 7, a feature absent in other methods.

More Visual Results

We present our interactive layout generation results in Figure 7, a feature unsupported by other methods. Chat2Layout empowers users to create layouts from scratch, transforming an empty space into diverse designs such as an ancient Chinese-style bedroom or a modern minimalist living room. Users can also perform partial rearrangements, as illustrated in the washing room scenario. Chat2Layout supports precise 3D placements, such as positioning a vase on a table in case one or hanging a painting on a wall in case two. Additionally, users can modify layouts through instructions, including inserting new items, removing objects, and adjusting placements in terms of scale, rotation, and translation. Notably, Chat2Layout excels at handling open-vocabulary instructions, accommodating both abstract and concrete descriptions, whether mixed or focused.

Chat2Layout supports diverse layout generation by prompting the agent to provide multiple layouts for the same set of furniture items. As illustrated in Figure 9, we show three cases, each with four distinct layout plans. Chat2Layout enables the creation of varied layout plans, accommodating objects on both floors and walls, while ensuring that the orientations of objects are not only diverse but also logical within the context of the room and its functionality.

Figure 8 illustrates the versatility and adaptability of our method in handling a variety of layout configurations, including irregular floor shapes and multi-room setups. For instance, we employ an irregular grid to generate a layout for an L-shaped game room. In multi-room scenarios, we sequentially generate each individual room, demonstrating the system’s capability in managing complex spatial arrangements across multiple interconnected spaces.

Conclusion

In this paper, we introduce Chat2Layout, a novel method to indoor layout generation that expands the capabilities of MLLMs beyond vision interpretation and text generation. Our method establishes a unified vision-question paradigm for in-context learning, enabling communication with the MLLM to guide its reasoning with both textual and visual information. Within this framework, we introduce a training-free visual prompting technique that facilitates the creation of realistic and contextually appropriate layouts. In addition, we employ an O2O-Search method to identify the minimal support set as references for effective prompting. Overall, our method interprets and handles user requirements and 3D environments both linguistically and visually and execute challenging furniture arrangement tasks. This enables diverse and complex 3D layout generation, providing interactive user experiences that surpass previous methods.

References

- Alayrac et al. (2022) Alayrac, J.-B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. 2022. Flamingo: a visual language model for few-shot learning. Advances in neural information processing systems, 35: 23716–23736.

- Belzner, Gabor, and Wirsing (2023) Belzner, L.; Gabor, T.; and Wirsing, M. 2023. Large language model assisted software engineering: prospects, challenges, and a case study. In International Conference on Bridging the Gap between AI and Reality, 355–374. Springer.

- Chan et al. (2023) Chan, C.-M.; Chen, W.; Su, Y.; Yu, J.; Xue, W.; Zhang, S.; Fu, J.; and Liu, Z. 2023. Chateval: Towards better llm-based evaluators through multi-agent debate. arXiv preprint arXiv:2308.07201.

- Chang et al. (2015) Chang, A.; Monroe, W.; Savva, M.; Potts, C.; and Manning, C. D. 2015. Text to 3D Scene Generation with Rich Lexical Grounding. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), 53–62.

- Chang, Savva, and Manning (2014) Chang, A.; Savva, M.; and Manning, C. D. 2014. Learning spatial knowledge for text to 3D scene generation. In Proceedings of the 2014 conference on empirical methods in natural language processing (EMNLP), 2028–2038.

- Chen et al. (2024) Chen, Z.; Wang, W.; Tian, H.; Ye, S.; Gao, Z.; Cui, E.; Tong, W.; Hu, K.; Luo, J.; Ma, Z.; et al. 2024. How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites. arXiv preprint arXiv:2404.16821.

- Chu et al. (2024) Chu, X.; Qiao, L.; Zhang, X.; Xu, S.; Wei, F.; Yang, Y.; Sun, X.; Hu, Y.; Lin, X.; Zhang, B.; et al. 2024. MobileVLM V2: Faster and Stronger Baseline for Vision Language Model. arXiv preprint arXiv:2402.03766.

- Deitke et al. (2023) Deitke, M.; Schwenk, D.; Salvador, J.; Weihs, L.; Michel, O.; VanderBilt, E.; Schmidt, L.; Ehsani, K.; Kembhavi, A.; and Farhadi, A. 2023. Objaverse: A universe of annotated 3d objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 13142–13153.

- Deng et al. (2024) Deng, X.; Gu, Y.; Zheng, B.; Chen, S.; Stevens, S.; Wang, B.; Sun, H.; and Su, Y. 2024. Mind2web: Towards a generalist agent for the web. Advances in Neural Information Processing Systems, 36.

- Dhamo et al. (2021) Dhamo, H.; Manhardt, F.; Navab, N.; and Tombari, F. 2021. Graph-to-3d: End-to-end generation and manipulation of 3d scenes using scene graphs. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 16352–16361.

- Dong et al. (2024) Dong, X.; Zhang, P.; Zang, Y.; Cao, Y.; Wang, B.; Ouyang, L.; Zhang, S.; Duan, H.; Zhang, W.; Li, Y.; et al. 2024. InternLM-XComposer2-4KHD: A Pioneering Large Vision-Language Model Handling Resolutions from 336 Pixels to 4K HD. arXiv preprint arXiv:2404.06512.

- Fabri and Pion (2009) Fabri, A.; and Pion, S. 2009. CGAL: The computational geometry algorithms library. In Proceedings of the 17th ACM SIGSPATIAL international conference on advances in geographic information systems, 538–539.

- Feng et al. (2024) Feng, W.; Zhu, W.; Fu, T.-j.; Jampani, V.; Akula, A.; He, X.; Basu, S.; Wang, X. E.; and Wang, W. Y. 2024. Layoutgpt: Compositional visual planning and generation with large language models. Advances in Neural Information Processing Systems, 36.

- Fisher et al. (2012) Fisher, M.; Ritchie, D.; Savva, M.; Funkhouser, T.; and Hanrahan, P. 2012. Example-based synthesis of 3D object arrangements. ACM Transactions on Graphics (TOG), 31(6): 1–11.

- Fu et al. (2021a) Fu, H.; Cai, B.; Gao, L.; Zhang, L.-X.; Wang, J.; Li, C.; Zeng, Q.; Sun, C.; Jia, R.; Zhao, B.; et al. 2021a. 3d-front: 3d furnished rooms with layouts and semantics. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 10933–10942.

- Fu et al. (2021b) Fu, H.; Jia, R.; Gao, L.; Gong, M.; Zhao, B.; Maybank, S.; and Tao, D. 2021b. 3d-future: 3d furniture shape with texture. International Journal of Computer Vision, 129: 3313–3337.

- Fu et al. (2017) Fu, Q.; Chen, X.; Wang, X.; Wen, S.; Zhou, B.; and Fu, H. 2017. Adaptive synthesis of indoor scenes via activity-associated object relation graphs. ACM Transactions on Graphics (TOG), 36(6): 1–13.

- Gravitas (2023) Gravitas, S. 2023. AutoGPT. https://agpt.co.

- Haque et al. (2023) Haque, A.; Tancik, M.; Efros, A. A.; Holynski, A.; and Kanazawa, A. 2023. Instruct-nerf2nerf: Editing 3d scenes with instructions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 19740–19750.

- Hart (2006) Hart, S. G. 2006. NASA-task load index (NASA-TLX); 20 years later. In Proceedings of the human factors and ergonomics society annual meeting, volume 50, 904–908. Sage publications Sage CA: Los Angeles, CA.

- Hong et al. (2024) Hong, F.; Tang, J.; Cao, Z.; Shi, M.; Wu, T.; Chen, Z.; Wang, T.; Pan, L.; Lin, D.; and Liu, Z. 2024. 3DTopia: Large Text-to-3D Generation Model with Hybrid Diffusion Priors. arXiv preprint arXiv:2403.02234.

- Lester, Al-Rfou, and Constant (2021) Lester, B.; Al-Rfou, R.; and Constant, N. 2021. The Power of Scale for Parameter-Efficient Prompt Tuning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 3045–3059.

- Li et al. (2024a) Li, C.; Wong, C.; Zhang, S.; Usuyama, N.; Liu, H.; Yang, J.; Naumann, T.; Poon, H.; and Gao, J. 2024a. Llava-med: Training a large language-and-vision assistant for biomedicine in one day. Advances in Neural Information Processing Systems, 36.

- Li et al. (2023) Li, J.; Tan, H.; Zhang, K.; Xu, Z.; Luan, F.; Xu, Y.; Hong, Y.; Sunkavalli, K.; Shakhnarovich, G.; and Bi, S. 2023. Instant3D: Fast Text-to-3D with Sparse-view Generation and Large Reconstruction Model. In The Twelfth International Conference on Learning Representations.

- Li et al. (2019) Li, M.; Patil, A. G.; Xu, K.; Chaudhuri, S.; Khan, O.; Shamir, A.; Tu, C.; Chen, B.; Cohen-Or, D.; and Zhang, H. 2019. Grains: Generative recursive autoencoders for indoor scenes. ACM Transactions on Graphics (TOG), 38(2): 1–16.

- Li et al. (2024b) Li, Y.; Zhang, Y.; Wang, C.; Zhong, Z.; Chen, Y.; Chu, R.; Liu, S.; and Jia, J. 2024b. Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models. arXiv preprint arXiv:2403.18814.

- Lin and Yadong (2023) Lin, C.; and Yadong, M. 2023. InstructScene: Instruction-Driven 3D Indoor Scene Synthesis with Semantic Graph Prior. In The Twelfth International Conference on Learning Representations.

- Lin et al. (2023) Lin, C.-H.; Gao, J.; Tang, L.; Takikawa, T.; Zeng, X.; Huang, X.; Kreis, K.; Fidler, S.; Liu, M.-Y.; and Lin, T.-Y. 2023. Magic3d: High-resolution text-to-3d content creation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 300–309.

- LumaAI (2023) LumaAI. 2023. Luma AI. https://lumalabs.ai/.

- Luo et al. (2020) Luo, A.; Zhang, Z.; Wu, J.; and Tenenbaum, J. B. 2020. End-to-end optimization of scene layout. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3754–3763.

- Ma et al. (2016) Ma, R.; Li, H.; Zou, C.; Liao, Z.; Tong, X.; and Zhang, H. 2016. Action-driven 3D indoor scene evolution. ACM Transactions on Graphics (TOG), 35(6): 1–13.

- Ma et al. (2018) Ma, R.; Patil, A. G.; Fisher, M.; Li, M.; Pirk, S.; Hua, B.-S.; Yeung, S.-K.; Tong, X.; Guibas, L.; and Zhang, H. 2018. Language-driven synthesis of 3D scenes from scene databases. ACM Transactions on Graphics (TOG), 37(6): 1–16.

- Ma et al. (2023) Ma, Y.; Zhang, X.; Sun, X.; Ji, J.; Wang, H.; Jiang, G.; Zhuang, W.; and Ji, R. 2023. X-mesh: Towards fast and accurate text-driven 3d stylization via dynamic textual guidance. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2749–2760.

- MeshyAI (2023) MeshyAI. 2023. Meshy. https://www.meshy.ai/.

- Metzer et al. (2023) Metzer, G.; Richardson, E.; Patashnik, O.; Giryes, R.; and Cohen-Or, D. 2023. Latent-nerf for shape-guided generation of 3d shapes and textures. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 12663–12673.

- Mohammad Khalid et al. (2022) Mohammad Khalid, N.; Xie, T.; Belilovsky, E.; and Popa, T. 2022. Clip-mesh: Generating textured meshes from text using pretrained image-text models. In SIGGRAPH Asia 2022 conference papers, 1–8.

- Nakajima (2024) Nakajima, Y. 2024. BabyAGI. https://github.com/yoheinakajima/babyagi.

- OpenAI (2023) OpenAI. 2023. GPT-4 Vision. https://platform.openai.com/docs/guides/vision.

- Oquab et al. (2023) Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H. V.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; HAZIZA, D.; Massa, F.; El-Nouby, A.; et al. 2023. DINOv2: Learning Robust Visual Features without Supervision. Transactions on Machine Learning Research.

- Osika (2024) Osika, A. 2024. GPT-Engineer. https://github.com/gpt-engineer-org/gpt-engineer.

- Paschalidou et al. (2021) Paschalidou, D.; Kar, A.; Shugrina, M.; Kreis, K.; Geiger, A.; and Fidler, S. 2021. Atiss: Autoregressive transformers for indoor scene synthesis. Advances in Neural Information Processing Systems, 34: 12013–12026.

- Poole et al. (2022) Poole, B.; Jain, A.; Barron, J. T.; and Mildenhall, B. 2022. DreamFusion: Text-to-3D using 2D Diffusion. In The Eleventh International Conference on Learning Representations.

- Qi et al. (2018) Qi, S.; Zhu, Y.; Huang, S.; Jiang, C.; and Zhu, S.-C. 2018. Human-centric indoor scene synthesis using stochastic grammar. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 5899–5908.

- Radford et al. (2021) Radford, A.; Kim, J. W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning, 8748–8763. PMLR.

- Raj et al. (2023) Raj, A.; Kaza, S.; Poole, B.; Niemeyer, M.; Ruiz, N.; Mildenhall, B.; Zada, S.; Aberman, K.; Rubinstein, M.; Barron, J.; et al. 2023. Dreambooth3d: Subject-driven text-to-3d generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 2349–2359.

- Ritchie, Wang, and Lin (2019) Ritchie, D.; Wang, K.; and Lin, Y.-a. 2019. Fast and flexible indoor scene synthesis via deep convolutional generative models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 6182–6190.

- Shin et al. (2020) Shin, T.; Razeghi, Y.; Logan IV, R. L.; Wallace, E.; and Singh, S. 2020. AutoPrompt: Eliciting Knowledge from Language Models with Automatically Generated Prompts. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), 4222–4235.

- Shinn et al. (2024) Shinn, N.; Cassano, F.; Gopinath, A.; Narasimhan, K.; and Yao, S. 2024. Reflexion: Language agents with verbal reinforcement learning. Advances in Neural Information Processing Systems, 36.

- Shtedritski, Rupprecht, and Vedaldi (2023) Shtedritski, A.; Rupprecht, C.; and Vedaldi, A. 2023. What does clip know about a red circle? visual prompt engineering for vlms. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 11987–11997.

- Su et al. (2022) Su, H.; Kasai, J.; Wu, C. H.; Shi, W.; Wang, T.; Xin, J.; Zhang, R.; Ostendorf, M.; Zettlemoyer, L.; Smith, N. A.; et al. 2022. Selective annotation makes language models better few-shot learners. arXiv preprint arXiv:2209.01975.

- SudoAI (2023) SudoAI. 2023. Audo AI. https://www.sudo.ai/.

- Tang et al. (2024) Tang, J.; Chen, Z.; Chen, X.; Wang, T.; Zeng, G.; and Liu, Z. 2024. LGM: Large Multi-View Gaussian Model for High-Resolution 3D Content Creation. arXiv preprint arXiv:2402.05054.

- Tang et al. (2023a) Tang, J.; Nie, Y.; Markhasin, L.; Dai, A.; Thies, J.; and Nießner, M. 2023a. Diffuscene: Scene graph denoising diffusion probabilistic model for generative indoor scene synthesis. arXiv preprint arXiv:2303.14207.

- Tang et al. (2023b) Tang, J.; Ren, J.; Zhou, H.; Liu, Z.; and Zeng, G. 2023b. Dreamgaussian: Generative gaussian splatting for efficient 3d content creation. arXiv preprint arXiv:2309.16653.

- TripoAI (2024) TripoAI. 2024. Tripo3d. https://www.tripo3d.ai/.

- Wang et al. (2022a) Wang, C.; Chai, M.; He, M.; Chen, D.; and Liao, J. 2022a. Clip-nerf: Text-and-image driven manipulation of neural radiance fields. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3835–3844.

- Wang et al. (2023) Wang, C.; Jiang, R.; Chai, M.; He, M.; Chen, D.; and Liao, J. 2023. Nerf-art: Text-driven neural radiance fields stylization. IEEE Transactions on Visualization and Computer Graphics.

- Wang et al. (2019) Wang, K.; Lin, Y.-A.; Weissmann, B.; Savva, M.; Chang, A. X.; and Ritchie, D. 2019. Planit: Planning and instantiating indoor scenes with relation graph and spatial prior networks. ACM Transactions on Graphics (TOG), 38(4): 1–15.

- Wang et al. (2018) Wang, K.; Savva, M.; Chang, A. X.; and Ritchie, D. 2018. Deep convolutional priors for indoor scene synthesis. ACM Transactions on Graphics (TOG), 37(4): 1–14.

- Wang et al. (2022b) Wang, X.; Wei, J.; Schuurmans, D.; Le, Q. V.; Chi, E. H.; Narang, S.; Chowdhery, A.; and Zhou, D. 2022b. Self-Consistency Improves Chain of Thought Reasoning in Language Models. In The Eleventh International Conference on Learning Representations.

- Wang, Yeshwanth, and Nießner (2021) Wang, X.; Yeshwanth, C.; and Nießner, M. 2021. Sceneformer: Indoor scene generation with transformers. In 2021 International Conference on 3D Vision (3DV), 106–115. IEEE.

- Wang et al. (2024) Wang, Z.; Lu, C.; Wang, Y.; Bao, F.; Li, C.; Su, H.; and Zhu, J. 2024. Prolificdreamer: High-fidelity and diverse text-to-3d generation with variational score distillation. Advances in Neural Information Processing Systems, 36.

- Wen et al. (2023) Wen, Z.; Liu, Z.; Sridhar, S.; and Fu, R. 2023. AnyHome: Open-Vocabulary Generation of Structured and Textured 3D Homes. arXiv preprint arXiv:2312.06644.

- Wu et al. (2023) Wu, Q.; Bansal, G.; Zhang, J.; Wu, Y.; Zhang, S.; Zhu, E.; Li, B.; Jiang, L.; Zhang, X.; and Wang, C. 2023. Autogen: Enabling next-gen llm applications via multi-agent conversation framework. arXiv preprint arXiv:2308.08155.

- Xi et al. (2023) Xi, Z.; Chen, W.; Guo, X.; He, W.; Ding, Y.; Hong, B.; Zhang, M.; Wang, J.; Jin, S.; Zhou, E.; et al. 2023. The rise and potential of large language model based agents: A survey. arXiv preprint arXiv:2309.07864.

- Yang et al. (2023a) Yang, J.; Zhang, H.; Li, F.; Zou, X.; Li, C.; and Gao, J. 2023a. Set-of-mark prompting unleashes extraordinary visual grounding in gpt-4v. arXiv preprint arXiv:2310.11441.

- Yang et al. (2024) Yang, L.; Wang, Y.; Li, X.; Wang, X.; and Yang, J. 2024. Fine-grained visual prompting. Advances in Neural Information Processing Systems, 36.

- Yang et al. (2022) Yang, Z.; Gan, Z.; Wang, J.; Hu, X.; Lu, Y.; Liu, Z.; and Wang, L. 2022. An empirical study of gpt-3 for few-shot knowledge-based vqa. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 36, 3081–3089.

- Yang et al. (2023b) Yang, Z.; Li, L.; Lin, K.; Wang, J.; Lin, C.-C.; Liu, Z.; and Wang, L. 2023b. The dawn of lmms: Preliminary explorations with gpt-4v (ision). arXiv preprint arXiv:2309.17421, 9(1): 1.

- Yao et al. (2023) Yao, S.; Zhao, J.; Yu, D.; Du, N.; Shafran, I.; Narasimhan, K.; and Cao, Y. 2023. ReAct: Synergizing Reasoning and Acting in Language Models. In International Conference on Learning Representations (ICLR).

- Yeh et al. (2012) Yeh, Y.-T.; Yang, L.; Watson, M.; Goodman, N. D.; and Hanrahan, P. 2012. Synthesizing open worlds with constraints using locally annealed reversible jump mcmc. ACM Transactions on Graphics (TOG), 31(4): 1–11.

- Yi et al. (2023) Yi, T.; Fang, J.; Wu, G.; Xie, L.; Zhang, X.; Liu, W.; Tian, Q.; and Wang, X. 2023. Gaussiandreamer: Fast generation from text to 3d gaussian splatting with point cloud priors. arXiv preprint arXiv:2310.08529.

- Zhai et al. (2024) Zhai, G.; Örnek, E. P.; Wu, S.-C.; Di, Y.; Tombari, F.; Navab, N.; and Busam, B. 2024. Commonscenes: Generating commonsense 3d indoor scenes with scene graphs. Advances in Neural Information Processing Systems, 36.

- Zhan et al. (2024) Zhan, J.; Dai, J.; Ye, J.; Zhou, Y.; Zhang, D.; Liu, Z.; Zhang, X.; Yuan, R.; Zhang, G.; Li, L.; et al. 2024. AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling. arXiv preprint arXiv:2402.12226.

- Zhang et al. (2020) Zhang, Z.; Yang, Z.; Ma, C.; Luo, L.; Huth, A.; Vouga, E.; and Huang, Q. 2020. Deep generative modeling for scene synthesis via hybrid representations. ACM Transactions on Graphics (TOG), 39(2): 1–21.

- Zhang et al. (2022) Zhang, Z.; Zhang, A.; Li, M.; and Smola, A. 2022. Automatic Chain of Thought Prompting in Large Language Models. In The Eleventh International Conference on Learning Representations.

- Zhao et al. (2024) Zhao, A.; Huang, D.; Xu, Q.; Lin, M.; Liu, Y.-J.; and Huang, G. 2024. Expel: Llm agents are experiential learners. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 38, 19632–19642.

- Zhou et al. (2022) Zhou, Y.; Muresanu, A. I.; Han, Z.; Paster, K.; Pitis, S.; Chan, H.; and Ba, J. 2022. Large Language Models are Human-Level Prompt Engineers. In The Eleventh International Conference on Learning Representations.

- Zhou, While, and Kalogerakis (2019) Zhou, Y.; While, Z.; and Kalogerakis, E. 2019. Scenegraphnet: Neural message passing for 3d indoor scene augmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 7384–7392.

- Zhu et al. (2023) Zhu, D.; Chen, J.; Shen, X.; Li, X.; and Elhoseiny, M. 2023. MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. In The Twelfth International Conference on Learning Representations.

We provide supplementary material for Chat2Layout, covering a summary of related furniture layout generation works, implementation details, prompts for floor placement, and pose alignment. Additionally, we provide a user study and discuss our user interface. Lastly, we discuss the limitations of our method.

Appendix A Summary of Layout Generation Works

| Layout Completion |

|

Text Instruction | 3D Placement |

|

|||||

|

|||||||||

|

✓ | ||||||||

|

✓ | ✓ | |||||||

| SceneFormer (Wang, Yeshwanth, and Nießner 2021), (Ma et al. 2016), (Ma et al. 2018) | ✓ | ✓ | |||||||

| (Chang, Savva, and Manning 2014), (Chang et al. 2015) | ✓ | ||||||||

|

✓ | ✓ | ✓ | ||||||

| AnyHome (Wen et al. 2023) | ✓ | ✓ | ✓ | ✓ | |||||

| Chat2Layout | ✓ | ✓ | ✓ | ✓ | ✓ |

Table 2 provides a summary of optimized-based methods (Qi et al. 2018; Ma et al. 2016; Chang et al. 2015; Chang, Savva, and Manning 2014; Yeh et al. 2012; Fisher et al. 2012; Luo et al. 2020; Wang et al. 2019; Ma et al. 2018; Fu et al. 2017), learning-based methods (Zhang et al. 2020; Wang et al. 2018; Ritchie, Wang, and Lin 2019; Zhou, While, and Kalogerakis 2019; Li et al. 2019; Dhamo et al. 2021; Paschalidou et al. 2021; Wang, Yeshwanth, and Nießner 2021; Zhai et al. 2024; Tang et al. 2023a; Lin and Yadong 2023), and LLM-based methods (Feng et al. 2024; Wen et al. 2023) on furniture layout generation. In comparison, Chat2Layout supports a wider range of applications than these baselines. Specifically, Chat2Layout supports layout completion, layout rearrangement, text instruction, 3D placement (onto or under others, or on walls), open-set placement, and multi-conventional interaction. Notably, supporting open-set placement means that Chat2Layout is not restricted to predefined furniture objects nor reliant on retrieving items from a 3D furniture dataset. Moreover, our agent system facilitates continuous user interaction through multi-run conversations, allowing users to iteratively refine the layout plan until desired outcome is reached. It should be noted that AnyHome supports only single-turn conversations for layout adjustments without memorization, whereas our method supports multi-turn interactions through the agent, favoring long-term and complex interactions.

Appendix B Implementation Details

We employ GPT-4 vision (OpenAI 2023) as the MLLM to implement our agent. We develop the pipeline in Python and implement user-interface/visualization in Open3D. The system is deployed on Windows/Linux workstations without GPUs. To ensure the creation of satisfactory and style-consistent 3D meshes from a text prompts, we generate candidates using ‘Text-2-3D<text>’ and have the agent select the most suitable one. We also incorporate style descriptions (if applicable) into ‘Text-2-3D<text>’ to maintain stylistic coherence. For instance, in a Gothic-style living room, if a user requests adding a chair, ‘<text>’ in ‘Text-2-3D<text>’ would specify ‘a Gothic-style chair’ rather than simply ‘a chair’. For support set searching, we compile databases containing to candidate examples per question. In Equation 1, we set and to prioritize textual similarity while integrating textual and visual environmental information. In addition, we utilize the ‘clip-vit-base-patch16’ model to calculate CLIP similarity. In our O2O-Search algorithm, the example is categorized by characteristics. For instance, ‘0’ indicates a removal task and ‘1’ an abstract addition task, and we have 8 categories in total for task decomposition.

Appendix C Pose Alignment

| Before | After |

Before arranging the layout, we generate a set of required furniture items using ‘Text-2-3D<text>’. However, the initial poses of these objects may be arbitrary. To address this, we perform pose alignment, as illustrated in Figure 11. We begin by calculating both the Axis-Aligned Bounding Box (AABB) and the Optimal/Oriented Bounding Box (OBB) (Fabri and Pion 2009) for each object. By comparing the AABB and OBB, we determine the pose difference between them. Then, we rotate the object based on the calculated pose difference, effectively aligning the AABB and OBB to achieve a more suitable initial pose for the object within the scene.

Appendix D Prompts for Floor Placement

Figure 12 illustrates the exemplar prompts used for object placement on the floor. This vision-text prompting approach, employed by our floor placement algorithm, adheres to the vision-question paradigm, consisting of a role, environment information, visual-text prompts, and a reference set provided by our O2O-Search. All other prompts in our system follow a similar structure, but with variations in text descriptions and visual captures specific to their respective tasks. Leveraging the memory capabilities of LLMs, our agent enables users to ask follow-up questions such as “Provide more results” or request layout completion, as demonstrated in the bottom part of Figure 12.

Appendix E User Interface

We design a user-friendly interface using Open3D, as shown in Figure 13. Users can easily input text instructions and initiate an interaction with our agent system by clicking the button. The generated visual results are displayed in the left widget, providing a feedback on the layout design. Our interface also offers controls for adjusting the viewpoint and lighting, allowing users to optimize the visualization for better understanding of the 3D layout. For a more comprehensive demonstration of our interface, please refer to our accompanying video demo.

Appendix F User Study

| Metric |

|

|

|

Performance↑ | Frustration↓ | ||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Mean Score | 1.73 | 1.91 | 3.64 | 4.09 | 2.18 |

We conduct a user study, illustrated in Figure 14, comparing AnyHome, LayoutGPT, and InstructScene with Chat2Layout. Participants are presented with questions, each accompanied by text instructions and four layout options. They are asked to select the option that best: 1) matches the given instructions; and 2) presents a reasonable and realistic layout plan, free from unreasonable collisions or boundary exceedances. After collecting valid responses, we analyze the user preference rates for both instruction matching and layout quality. According to the results, our approach demonstrate superior performance, surpassing the other methods in user preference across both criteria.

We have conducted another user study to evaluate the interaction in Table 3. We invited six non-professional participants to create scenes using our system. Initially, we provided a 10-minute tutorial. Participants were then asked to create 5 predefined scenes and 5 scenes of their choice. Each participant then completed a questionnaire using the five-point NASA Task Load Index (NASA-TLX, 1=very low to 5=very high) (Hart 2006) to evaluate usability and perceived workload. In Table 2, the mental demand was low, indicating that users need little effort when using our system. While our system is not perfect in terms of efficiency due to the unstable GPT-4 API, it performs satisfactorily with a high performance value and demonstrates satisfying user satisfaction with a low frustration value.

Appendix G Limitations

While our agent system facilitates a wider range of user interactions and generates more reasonable layout plans than existing methods, it still faces several challenges, as shown in Figure 15.

Firstly, when tasked with arranging a large number of furniture pieces within a severely limited space, collision issue becomes evident. This red rectangle in the left figure highlights how spatial constraints can lead to suboptimal furniture placement and overlapping elements. This occurs because when the available space is insufficient for the size and quantity of objects, it poses a challenge for our agent system to devise a reasonable layout plan. In future iterations, implementing a checking mechanism and user reminders will be crucial to effectively address this issue.

Secondly, our system’s reliance on existing text-to-3D generation methods presents another challenge. Currently, these methods struggle to produce a harmonized set of furniture items that adhere to a consistent style when given a series of text instructions. While we have partially mitigated this issue by generating multiple candidate items and allowing the agent to select the most suitable one, the problem is not entirely resolved, as occasionally one or more items may not fit seamlessly within the scene. For example, as shown in Figure 15-right, the generated wardrobe lacks stylistic consistency with the other items. It would be a valuable advancement to develop a text-to-3D method capable of producing a cohesive set of style-conscious assets, which we intend to explore in the future.

Finally, efficiency remains a limitation of our system. Generating a scene from scratch currently takes around one minute due to the interaction time with the GPT-4 vision API and the generation of a set of candidate 3D furniture—the exact time depends on the complexity of the generated scene. The instability of the GPT-4 vision API sometimes induces errors due to unstable network and negatively impacts user experience. We believe that efficiency can be significantly improved in the future with the development of more efficient large language models (LLMs).